Abstract

Introduction

Radiology reports communicate imaging findings to ordering physicians. Because these reports contain substantial information, physicians often focus on the summarized “impression” section. This study evaluated how often a critical finding is documented in the report’s “impression” section and described how an automated application could improve documentation.

Methods

A retrospective review of all chest CT scan reports finalized between October 2009 and September 2010 at an academic institution was performed. A natural language processing application was used to compare how often a pulmonary nodule was reported in the “impression” section versus the “findings” section of a report.

Results

A total of 3,401 reports documented a pulmonary nodule in the “findings” section, but only 2,162 of these also documented it in the “impression” section. The nodule was therefore omitted from the “impression” in 36.4% (n=1,239) of these reports.

Conclusion

The study revealed substantial discrepancies in documentation between the “findings” and “impression” sections. Automated systems could improve the documentation of such critical findings and communication between radiologists and ordering physicians.

Introduction

Communication is fundamental to ensuring the delivery of high-quality, safe health care.1 For that reason, in 2011, the Joint Commission listed communication of critical results among the top goals in its National Patient Safety Goals.2,3 Before the Joint Commission established this goal, several studies described how physicians often failed to address critical results promptly because of poor communication.3,4,5,6 Missed critical results compromised patient care and led to several malpractice lawsuits.6

The primary purpose of the radiology report is for radiologists to clearly communicate imaging results to ordering physicians.7,8 The American College of Radiology (ACR) practice guideline for communication of diagnostic imaging findings outlines a structured format for the radiology report. In its guideline, the ACR states that imaging findings belong in the body of the report, while a specific diagnosis should be given in a separate “impression” section.8

Despite these guidelines, there is extensive variation in (1) the terms used to describe imaging findings and (2) the section of the report in which findings are documented.9 This variability confuses referring physicians, who often read only the “impression” section of the report. As a result, critical imaging findings can be missed in this universal form of communication between radiologists and referring physicians.10

Throughout its early history, imaging informatics focused on technological advances in imaging modalities and made great strides in transmitting and processing imaging data. However, the communication of imaging results by radiologists to referring physicians received less attention.11,12 In recent years, the clear and concise communication of imaging and textual data has become a greater focus.

Hripcsak et al have focused on new, fixed structures for radiology reports and on the use of natural language processing (NLP) applications to understand the contents of these narrative reports.12,13 Singh et al have focused on alerting systems for communicating critical results to referring physicians.5 Khorasani and colleagues have created an automated notification system to communicate urgency levels and critical results to ordering physicians.14 Despite these recent advances, many critical findings are still not communicated adequately to the referring physician.4,5,6 More importantly, the most pervasive means of communication between radiologists and referring physicians, the radiology report itself, still needs to be addressed.

This study’s goal was to evaluate how often a critical imaging finding, a pulmonary nodule, is documented in the “impression” section of the radiology report compared with the “findings” section. In addition, we analyzed reasons for discrepant documentation of this critical finding across sections of the report and describe how an automated application could improve documentation.

Methods

We conducted a retrospective review of all reports of chest CT scans performed at a 750-bed tertiary referral academic medical center from October 1, 2009 to September 30, 2010. Reports of CT scans from affiliated sites, including a cancer center, a community hospital, and six outpatient imaging facilities, were also included. We obtained institutional review board approval for this HIPAA-compliant study, as well as a waiver of informed consent for retrospective medical record review.

To evaluate documentation of pulmonary nodules in radiology reports, we used a natural language processing (NLP) application to perform document retrieval (DR). The study comprised two phases: (1) validation of the NLP application for retrieving radiology reports that document pulmonary nodules, and (2) use of this application to evaluate how often this critical imaging finding is documented in the “impression” section of the radiology report versus the “findings” section.

NLP Validation

We used a publicly available toolkit, Information from Searching Content with an Ontology-Utilizing Toolkit (iSCOUT), to perform DR of radiology reports. iSCOUT components were originally developed at our institution, are written in the Java programming language, and are distributed as jar files upon request. The toolkit supports querying and retrieval of relevant documents, particularly radiology reports, and includes a Terminology Interface component, which allows query expansion based on related terms provided by an expert or derived from a standard terminology. Other components include the Data Loader, Header Extractor, Reviewer, and Analyzer. The Header Extractor component enables selective retrieval of reports by restricting the search to specific sections or headers of a report (e.g., impression, findings); this component was used specifically in this study.
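iSCOUT’s Java interfaces are not described here, so the following is only a minimal Python sketch of the idea behind section-restricted search, not the Header Extractor’s actual implementation. It assumes that each top-level section starts on its own line with a header from a fixed, hypothetical list (`SECTION_HEADERS`) followed by a colon; `split_sections` and `search_section` are illustrative names.

```python
import re

# Hypothetical list of top-level section headers; real report templates vary by site.
SECTION_HEADERS = ("history", "indication", "technique", "comparison", "findings", "impression")

# A header is one of the assumed names at the start of a line, followed by a colon.
HEADER_RE = re.compile(
    r"^[ \t]*(" + "|".join(SECTION_HEADERS) + r")[ \t]*:[ \t]*",
    re.IGNORECASE | re.MULTILINE,
)


def split_sections(report: str) -> dict[str, str]:
    """Map each lower-cased header name to the text that follows it, up to the next header."""
    sections: dict[str, str] = {}
    matches = list(HEADER_RE.finditer(report))
    for i, match in enumerate(matches):
        end = matches[i + 1].start() if i + 1 < len(matches) else len(report)
        sections[match.group(1).lower()] = report[match.end():end].strip()
    return sections


def search_section(report: str, section: str, term: str) -> bool:
    """Case-insensitive term search restricted to a single section of the report."""
    text = split_sections(report).get(section.lower(), "")
    return term.lower() in text.lower()
```

Limiting headers to a known list keeps in-section labels such as “Lungs:” from being mistaken for new sections; a site with different report templates would need its own header list.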

An expert-derived list of terms was developed to retrieve radiology reports that documented the presence of a pulmonary nodule. Two radiologists, including a thoracic radiologist, guided the development of the list. Table 1 lists the terms used in this study. Variants that differed only in capitalization or plurality were also included.

Table 1:

Term list for pulmonary nodule query expansion

| Terms |
|---|
| pulmonary nodule |
| lung nodule |
| spn |
| sub pleural nodule |
| subpleural nodule |
| nodular opacity |
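One way to turn the Table 1 terms into a query that tolerates capitalization, plural forms, and extra whitespace is a single case-insensitive regular expression. This Python sketch is an assumption about how such expansion could work, not iSCOUT’s Terminology Interface; the helpers `term_pattern` and `mentions_nodule` are hypothetical, and the pluralization rule is deliberately naive.

```python
import re

# Terms from Table 1.
TERMS = [
    "pulmonary nodule",
    "lung nodule",
    "spn",
    "sub pleural nodule",
    "subpleural nodule",
    "nodular opacity",
]


def term_pattern(term: str) -> str:
    """Regex for one term that also accepts its plural and extra whitespace between words."""
    words = term.split()
    last = words[-1]
    # Naive English pluralization: "opacity" -> "opacities", otherwise append "s".
    if last.endswith("y"):
        last_re = re.escape(last[:-1]) + "(?:y|ies)"
    else:
        last_re = re.escape(last) + "s?"
    parts = [re.escape(w) for w in words[:-1]] + [last_re]
    return r"\b" + r"\s+".join(parts) + r"\b"


# One case-insensitive pattern that matches any expanded term.
NODULE_RE = re.compile("|".join(term_pattern(t) for t in TERMS), re.IGNORECASE)


def mentions_nodule(text: str) -> bool:
    """True if the text contains any expanded pulmonary nodule term."""
    return NODULE_RE.search(text) is not None
```

This sketch does not handle negation, so a phrase such as “no pulmonary nodule” would still count as a mention.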

Precision and recall were measured to evaluate DR. Precision is defined as the proportion of retrieved reports that are true positives. Recall is defined as the proportion of all reports that should have been retrieved (true positives plus false negatives) that were actually retrieved. For this first phase, two physicians independently reviewed 200 randomly selected chest CT scan reports. When both agreed that a report indicated a finding consistent with a pulmonary nodule, the report was included in the gold standard list. When the two reviewers disagreed, they discussed the report until they agreed on a final adjudication.
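The reference-standard construction and the two metrics can be written down directly. In this minimal sketch, reports are identified by hypothetical string IDs, each reviewer’s judgment is a boolean, and `consensus` holds the adjudicated label for reports on which the reviewers disagreed; all names are illustrative.

```python
def reference_standard(
    reviewer1: dict[str, bool],
    reviewer2: dict[str, bool],
    consensus: dict[str, bool],
) -> set[str]:
    """IDs of reports labeled nodule-positive in the reference standard.

    Where the two independent reviewers agree, their shared label is used;
    otherwise the label reached by consensus discussion is used.
    """
    positives: set[str] = set()
    for report_id, label1 in reviewer1.items():
        label = label1 if label1 == reviewer2[report_id] else consensus[report_id]
        if label:
            positives.add(report_id)
    return positives


def precision_recall(retrieved: set[str], relevant: set[str]) -> tuple[float, float]:
    """Precision = TP / |retrieved|; recall = TP / |relevant|, where TP = retrieved and relevant."""
    true_positives = len(retrieved & relevant)
    precision = true_positives / len(retrieved) if retrieved else 0.0
    recall = true_positives / len(relevant) if relevant else 0.0
    return precision, recall
```

For example, if the reviewers agree that report “a” is positive, disagree on “b” and “d” (adjudicated positive and negative, respectively), and the tool retrieves {“a”, “c”}, precision and recall are both 0.5.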

“Findings” versus “Impression”

In the second phase, we assessed how often a pulmonary nodule documented in the “findings” section was also documented in the “impression” section. iSCOUT was first used to retrieve radiology reports, restricting the search to the “findings” section. Among the reports retrieved, iSCOUT was used again, this time restricting the search to the “impression” section. As noted above, the Header Extractor component enables restricting the search to selected sections of a report.

After establishing that documentation of pulmonary nodules differed between the “findings” and “impression” sections of radiology reports, we performed a manual chart review of 100 radiology reports. These reports were randomly selected from those that documented a pulmonary nodule in the “findings” section but not in the “impression” section, and the review focused on identifying factors that may have contributed to the discrepancy.
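Viewed as set operations over report IDs, the second phase and the sampling step reduce to a set difference and a seeded random sample. The function names, the fixed seed, and the use of Python’s `random.Random` are assumptions for illustration; the original does not state how the 100 reports were drawn.

```python
import random


def discrepant_reports(findings_hits: set[str], impression_hits: set[str]) -> set[str]:
    """IDs of reports with a nodule term in "findings" but not in "impression"."""
    return findings_hits - impression_hits


def omission_rate(findings_hits: set[str], impression_hits: set[str]) -> float:
    """Fraction of "findings"-positive reports whose "impression" omits the nodule."""
    if not findings_hits:
        return 0.0
    return len(discrepant_reports(findings_hits, impression_hits)) / len(findings_hits)


def sample_for_review(report_ids: set[str], k: int = 100, seed: int = 0) -> list[str]:
    """Reproducible simple random sample of k report IDs for manual review."""
    # Sort first, because iteration order of a set of strings can change between runs.
    return random.Random(seed).sample(sorted(report_ids), k)
```

Because the second search was run only on reports retrieved in the first, any “impression” hit outside the “findings” set has no effect on the difference.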

Results

There were 3,905 chest CT scan reports obtained from our study site during the study period. For the NLP validation, 200 chest CT scan reports were reviewed manually, and 60 were identified as documenting a pulmonary nodule. Using these as the gold standard, the precision and recall of iSCOUT for identifying pulmonary nodules were 0.96 and 0.80, respectively.
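The reported metrics also pin down the underlying counts. Assuming precision and recall were computed against the 60 gold-standard positive reports and rounded to two decimals, the only consistent counts are 48 true positives (TP), 12 false negatives (FN), and 2 false positives (FP); these counts are derived here, not reported in the original:

$$
\begin{aligned}
\text{Recall} &= \frac{TP}{TP + FN} = \frac{TP}{60} = 0.80 &&\Rightarrow TP = 48,\; FN = 12\\
\text{Precision} &= \frac{TP}{TP + FP} = \frac{48}{48 + FP} = 0.96 &&\Rightarrow FP = 2
\end{aligned}
$$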

“Findings” versus “Impression”

Of the 3,905 radiology reports identified, 3,401 documented a pulmonary nodule in the “findings” section. Among these 3,401 reports, 2,162 also documented the nodule in the “impression” section. Therefore, in 36.4% (n=1,239) of reports in which NLP detected a nodule in the “findings” section, the nodule was not documented in the “impression” section.
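The percentage uses the 3,401 reports with a nodule in the “findings” section as its denominator, not all 3,905 reports:

$$
\frac{3{,}401 - 2{,}162}{3{,}401} = \frac{1{,}239}{3{,}401} \approx 0.364 = 36.4\%
$$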

Further review of reports that documented pulmonary nodules in the “findings” section but not in the “impression” section identified reasons for the discrepancy. Table 2 lists these reasons, based on a manual review of 100 of these 1,239 reports.

Table 2:

List of reasons for discrepancy in documentation

| Reason identified on manual review | Reports (n=100) |
|---|---|
| Presence of malignancy | 53 |
| More urgent/acute finding (e.g., pulmonary embolism) | 20 |
| Non-suspicious pulmonary nodule (e.g., unchanged from previous study) | 4 |
| No apparent reason (e.g., missed) | 5 |
| Miscellaneous (e.g., granulomatous disease) | 5 |
| iSCOUT precision error | 13 |

The manual review found that the most common reason for the discrepancy was the complexity of other findings in the report. Several reports described multiple malignancies in addition to the pulmonary nodule. Furthermore, in many instances, the pulmonary nodule was a less urgent finding than another significant disease process. In this scenario, the radiologist used the “impression” section to describe the other, more urgent findings in detail and omitted any mention of the pulmonary nodule. Other reports that documented pulmonary nodules in the “findings” section described the nodule as not appearing malignant or suspicious, which may explain why the finding was not repeated in the “impression” section.

Discussion

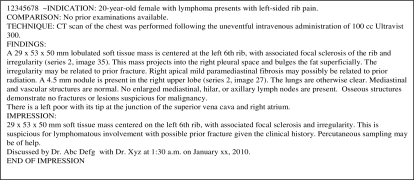

Our study revealed discrepant documentation in the “findings” versus “impression” sections of radiology reports: over a third of reports that mentioned a pulmonary nodule in the “findings” section omitted it from the “impression.” Figure 1 illustrates a radiology report in which a pulmonary nodule is documented in the “findings” section but not in the “impression.”

Figure 1:

Radiology report with a pulmonary nodule in the “findings” section

In this de-identified report, the patient had lymphoma (a malignant condition) and a soft tissue mass in the lungs associated with the malignancy. Thus, the 4.5 mm lung nodule mentioned in the “findings” section was never documented in the “impression.” In our manual review, the factors responsible for discrepant documentation of this critical finding in the two sections ranged from prioritizing other, more significant or urgent findings to disregarding a non-suspicious finding. Despite these reasons, a pulmonary nodule is a potentially critical imaging finding and needs to be communicated appropriately to the referring physician. It is therefore important that it be documented in the “impression” section of the radiology report, especially because this is often the primary section read by ordering physicians.10

In “Toward Best Practices in Radiology Reporting,” Kahn et al note that information can be more easily retrieved and analyzed to support medical research and quality improvement if radiology reports use consistent formats and terminology.7 Other work has shown that itemized reporting could facilitate complete documentation of information in the radiology report and is preferred by referring physicians and radiologists.15

This study illustrates a first step in ensuring that potentially critical imaging findings are not missed in the “impression” section of a report. In particular, iSCOUT enables automatic retrieval of reports that contain a critical finding. Critical findings retrieved automatically from the “findings” section could therefore be used to generate an automated summary that augments the “impression” section, similar to an automated text summarization task.16 The high precision (0.96) helps ensure that nearly all findings included in this summarized portion of the report are true positives.
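A deliberately simple extractive version of this idea is sketched below: if the “impression” omits the nodule, sentences from the “findings” that mention it are appended as an addendum. This is a hypothetical illustration, not a system described in this study; the abbreviated term pattern, the sentence splitter, the addendum wording, and the example report text (loosely modeled on Figure 1) are all assumptions.

```python
import re

# Hypothetical, abbreviated term pattern; a fuller version would expand all Table 1 terms.
NODULE_RE = re.compile(r"\b(?:pulmonary|lung)\s+nodules?\b|\bspn\b", re.IGNORECASE)

# Split after sentence-ending punctuation followed by whitespace ("4.5 mm" is not split).
SENTENCE_SPLIT_RE = re.compile(r"(?<=[.!?])\s+")


def augment_impression(findings: str, impression: str) -> str:
    """Append nodule sentences from "findings" when "impression" does not mention a nodule."""
    if NODULE_RE.search(impression):
        return impression  # Nodule already documented; leave the impression unchanged.
    extracted = [s for s in SENTENCE_SPLIT_RE.split(findings.strip()) if NODULE_RE.search(s)]
    if not extracted:
        return impression  # Nothing to carry over.
    addendum = "Additional finding(s) from the report body: " + " ".join(extracted)
    return impression.rstrip() + "\n" + addendum
```

For an invented report whose findings read “Soft tissue mass in the left lower lobe. A 4.5 mm lung nodule in the right upper lobe. No effusion.” and whose impression reads “Lymphoma with left lower lobe mass.”, the function appends the nodule sentence on a new line after the impression.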

Finally, consistent terminology for reporting findings in the “impression” section could further increase the value of radiology reports for physician communication. Similar to “problem lists” in electronic health records, the “impression” in radiology reports can be used for quality improvement initiatives, as well as to track patients’ progress and ensure appropriate follow-up. Furthermore, diagnostic imaging data could be indexed more efficiently, and large volumes of information could be retrieved and analyzed for clinical outcomes and translational research.14

Conclusion

The study revealed discrepant documentation in the “findings” versus “impression” sections of radiology reports: over a third of reports that mentioned a pulmonary nodule, a potentially critical finding, in the “findings” section omitted it from the “impression.” Although critical results should be mentioned in the “impression” section, the section most frequently reviewed by referring physicians, radiologists often fail to document them there, especially when other imaging findings deemed more significant are also present. An automated system for retrieval and summarization could improve documentation of critical findings and promote more effective communication between radiologists and ordering physicians.

Acknowledgments

This work was supported in part by the National Library of Medicine grant LM007092 and Grant R18HS019635 from the Agency for Healthcare Research and Quality.

References

- 1. Berlin L. Standards for radiology interpretation and reporting in the emergency setting. Pediatric Radiology. 2008;38(Suppl 4):S639–S644. doi: 10.1007/s00247-008-0989-4.

- 2. The Joint Commission. National Patient Safety Goals 2011. http://www.jointcommission.org/standards_information/npsgs.aspx.

- 3. Lucey LL, Kushner DC. The ACR guideline on communication: to be or not to be, that is the question. J Am Coll Radiol. 2010;7:109–114. doi: 10.1016/j.jacr.2009.11.007.

- 4. Gandhi TK. Fumbled handoffs: one dropped ball after another. Annals of Internal Medicine. 2005;142(5):352–358. doi: 10.7326/0003-4819-142-5-200503010-00010.

- 5. Singh H, Arora HS, Vij MS, et al. Communication outcomes of critical imaging results in a computerized notification system. J Am Med Inform Assoc. 2007;14:459–466. doi: 10.1197/jamia.M2280.

- 6. Berlin L. Malpractice issues in radiology. American Journal of Roentgenology. 1997;169:943–946. doi: 10.2214/ajr.169.1.9207493.

- 7. Kahn CE, Langlotz CP, Burnside ES, Carrino JA, Channin DS, Hovsepian DM, Rubin DL. Toward Best Practices in Radiology Reporting. Radiology. 2009;252(3):852–856. doi: 10.1148/radiol.2523081992.

- 8. ACR practice guideline for communication of diagnostic imaging findings. Revised 2010. www.acr.org/.../quality_safety/guidelines/dx/comm_diag_rad.aspx. Accessed January 10, 2011.

- 9. Sobel J, Pearson M, Gross K, et al. Information content and clarity of radiologists’ reports for chest radiography. Academic Radiology. 1996;3:709–717. doi: 10.1016/s1076-6332(96)80407-7.

- 10. Clinger NJ, Hunter TB, Hillman BJ. Radiology reporting: attitudes of referring physicians. Radiology. 1988;169:825–826. doi: 10.1148/radiology.169.3.3187005.

- 11. Sinha U, Bui A, Taira R, Dionisio J, Morioka C, Johnson D, Kangarloo H. A review of medical imaging informatics. Annals of the New York Academy of Sciences. 2002;980:168–197. doi: 10.1111/j.1749-6632.2002.tb04896.x.

- 12. Langlotz CP. Automatic structuring of radiology reports: harbinger of a second information revolution in radiology. Radiology. 2002;224:5–7. doi: 10.1148/radiol.2241020415.

- 13. Hripcsak G, Austin JHM, Alderson PO, Friedman C. Use of natural language processing to translate clinical information from a database of 889,921 chest radiographic reports. Radiology. 2002;224:157–163. doi: 10.1148/radiol.2241011118.

- 14. Khorasani R. Optimizing communication of critical test results. Journal of the American College of Radiology. 2009;6(10):721–723. doi: 10.1016/j.jacr.2009.07.011.

- 15. Naik S, Hanbidge A, Wilson S. Radiology reports: examining radiologist and clinician preferences regarding style and content. American Journal of Roentgenology. 2001;176:591–598. doi: 10.2214/ajr.176.3.1760591.

- 16. Lacson R, Barzilay R, Long W. Automatic analysis of medical dialogue in the home hemodialysis domain: structure induction and summarization. J Biomed Inform. 2006;39(5):541–555. doi: 10.1016/j.jbi.2005.12.009.