Abstract

Electronic Medical Records (EMRs) are increasingly used in modern health care. As a result, systematically applying usability principles becomes increasingly vital in creating systems that provide health care professionals with satisfying, efficient, and effective user experiences, as opposed to frustrating interfaces that are difficult to learn, hard to use, and error prone. This study demonstrates how the TURF framework [1] can be used to evaluate the usability of an EMR module and subsequently redesign its interface with dramatically improved usability in a unified, systematic, and principled way. This study also shows how heuristic evaluations can be utilized to complement the TURF framework.

Keywords: UFuRT, TURF, Interface Design, Usability, Meaningful Use, Electronic Medical Record (EMR)

1. Introduction

Continually rising health care costs without concomitant benefits are a major public concern that affects everyone, especially those with limited resources [2] [3]. In an effort to advance the health care system, the US government’s Health Information Technology for Economic and Clinical Health Act (HITECH) rewards providers and hospitals that implement electronic medical records (EMRs) that achieve certification by satisfying the Meaningful Use (MU) criteria [4]. While the national HITECH program offers tremendous promise to drive change throughout the health care industry, the usability of EMRs is not currently addressed with sufficient attention and resources. However, usability is being widely discussed for potential inclusion in later stages of Meaningful Use [5].

Usability is described under TURF as how useful, usable, and satisfying a system is for the intended users to accomplish goals in the work domain by performing certain sequences of tasks [1] [6]. As such, usability is a key aspect of creating a successful EMR. Poor usability can lead to reduced user acceptance, unnecessary user errors, and reduced efficiency that takes time away from patient care; ultimately, it may affect patient safety [7].

Human-centered design methodologies like TURF have been applied successfully in fields such as aviation, for designing cockpits, and the automotive industry, for improving human interfaces [8]. Software interface design has lagged behind in the use of methods to improve efficiency, effectiveness, and productivity [9]. With the impetus provided by the national focus on improving healthcare, users of EMRs stand to benefit from the application of usability principles. As of March 2011, the list of government-certified EMR products and modules contained over 470 products and was growing [10]. Despite the large number of products, what is missing is a framework that enables EMR developers to improve the usability of their products and enables EMR users to evaluate the usability of products under consideration.

2. Background

This paper will demonstrate that the TURF analysis framework enables developers to design systems with improved usability and allows users to effectively evaluate the usability of existing systems. TURF stands for Task Analysis, User Analysis, Representational Analysis, and Functional Analysis. (TURF was previously known by the acronym UFuRT.) It is based on the principles of work-centered design which espouse the development of a work ontology to provide a high-level view of the work of specific users and extend the studies to representation and task interaction. By applying the principles of TURF, the designer gains a thorough understanding of the users of the systems and the functionality that is required to meet the work requirement. From this information TURF then identifies the necessary information and considers how the information can be best represented to the users. Lastly, the task analysis provides the designer with a process for evaluating the complexity and performance of the interface design so usability bottlenecks can be identified and remediated [1].

It is against the backdrop of Meaningful Use and EMRs that TURF will be used to improve the design of an existing interface. In particular, of the 45 test procedures developed by NIST to evaluate Meaningful Use, our research looks specifically at the test procedure §170.302(e) Maintain active medication allergy list [11]. Medication allergies are an important patient safety issue because they can result in life-threatening situations. Ensuring that this information is presented in the most effective way is a design challenge that TURF will address.

Usability heuristics were employed as a complementary method to the TURF findings. This technique involves comparing the interface against a set of heuristic principles, or rules-of-thumb, to identify aspects of the interface that violate the heuristic principles. Usability heuristics are valued as a low-cost, low time-commitment method for usability evaluation [12].

The usability heuristics used for this study are based on the 14 usability heuristics described in Zhang et al. [13]. These 14 heuristics evolved from research based on Nielsen’s 10 heuristics [12] and Shneiderman’s eight golden rules [14]. The heuristics [and their shorthand versions] are: consistency and standards [consistency]; visibility of system state [visibility]; match between system and world [match]; minimalist design [minimalist]; memory load [memory]; informative feedback [feedback]; flexibility and efficiency [flexibility]; good error messages [messages]; prevent errors [errors]; clear closure [closure]; reversible actions [undo]; use users’ language [language]; users in control [control]; and help and documentation [help] [13].

3. Methods

3.1. System Description

The reference system, OpenVista, utilizes a client-server architecture. The server is Linux-based and versions of the client are available for Microsoft Windows or MONO on Linux. This architecture makes the client application responsible for the user interface [15]. OpenVista utilizes a tab-based user interface to control how the user accesses different functionality. For each tab, the display is divided into panels to present related information for the functional area. The patient banner, a common panel at the top of the screen, provides key patient information.

The prototype was developed using GUI Design Studio Professional, a tool for creating prototypes [16].

3.2. Study Design

The NIST test procedure Maintain an Active Medication Allergy List is central to the design of this study. This test procedure requires the user of an EMR to be able to record a new medication allergy, modify an existing medication allergy, and retrieve a list of medication allergies [17].

Using the NIST test procedure as the foundation, the first step was to evaluate the interface design of the medication allergy component of OpenVista using TURF and usability heuristics. While the results from the TURF evaluation of the OpenVista interface served as the primary inputs, the usability heuristics provided good supporting information for the new design. The next step for the design team was to create a prototype. The prototype design was reviewed by a second design team of six students with similar backgrounds and by two experts in the TURF methodology.

The design team consisted of six students from varying backgrounds in a graduate-level interface design course. The team included a physician, a nurse, a social worker, and three students with technology backgrounds but no design expertise.

3.3. TURF Analysis

TURF is an integrative conceptual framework that encompasses user-centered design (UCD) in the evaluation, analysis, and design of interfaces. TURF brings together user analysis, functional analysis, representational analysis, and task analysis to create a cohesive method for examining the needs of the users, determining the functionality that is necessary, taking into consideration how the information is represented to the users, and constructing an ontology of the tasks that are necessary to achieve the desired outcome [6] [18] [19].

3.3.1. User Analysis

The starting point with TURF is the user analysis. If the system does not meet the needs of its end users, it cannot achieve maximum usefulness. Identifying the key users of the system is essential to understanding the functionality required by each type of user. In addition, it is important to understand the user environment and user attributes such as education level, age, computer expertise, and work experience, to ensure that the interface design provides the best possible match to the characteristics of its users.

3.3.2. Functional Analysis

The functional analysis identifies the functionality that is required to perform the work tasks. The functional analysis is defined independently of the technology required to implement a solution. In this study, the functionality is determined by the NIST §170.302(e) Maintain active medication allergy list test procedure. This test procedure describes the three previously described operations that the EMR must support: record, modify, and retrieve medication allergies. The test case also provides example data to reduce ambiguity [17]. For each of the operations, the necessary process steps are identified.
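The three operations can be pictured as a small, technology-independent interface. The following is only a minimal sketch: the class and method names are hypothetical, the sample values are invented, and the three fields (drug name, reaction, status) are the ones this paper reports as required by the test procedure.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class MedicationAllergy:
    # Fields reported in this paper as required by the test procedure.
    drug_name: str
    reaction: str
    status: str = "active"  # "active" or "inactive"


@dataclass
class AllergyList:
    """Hypothetical container supporting record, modify, and retrieve."""
    entries: List[MedicationAllergy] = field(default_factory=list)

    def record(self, drug_name: str, reaction: str) -> MedicationAllergy:
        """Record a new medication allergy (active by default)."""
        allergy = MedicationAllergy(drug_name, reaction)
        self.entries.append(allergy)
        return allergy

    def modify(self, drug_name: str, *, reaction: Optional[str] = None,
               status: Optional[str] = None) -> MedicationAllergy:
        """Modify an existing entry in place; raise KeyError if absent."""
        for allergy in self.entries:
            if allergy.drug_name == drug_name:
                if reaction is not None:
                    allergy.reaction = reaction
                if status is not None:
                    allergy.status = status
                return allergy
        raise KeyError(drug_name)

    def retrieve(self, active_only: bool = False) -> List[MedicationAllergy]:
        """Return the allergy list, optionally restricted to active entries."""
        return [a for a in self.entries
                if not active_only or a.status == "active"]


# Invented sample data, for illustration only.
allergies = AllergyList()
allergies.record("Penicillin", "Hives")
allergies.record("Codeine", "Nausea")
allergies.modify("Codeine", status="inactive")
print([a.drug_name for a in allergies.retrieve(active_only=True)])
```

Keeping the sketch free of any user interface detail mirrors the point that the functional analysis is defined independently of the implementing technology.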

The functionality of the interface is analyzed by categorizing user interface widgets as objects or operations. An object is an interface widget that cannot cause another action or trigger an event. A common example is the label that is usually found next to a text entry box, like “Address”. Any widget that can cause another action to occur is classified as an operation. For example, the “Save” button that causes work to be saved to a disk is an operation. Operations are further classified as either overhead or domain operations. Overhead operations are those which are not associated with the work domain, such as a “Next” button to move to a subsequent screen or window. Domain operations are those which are necessary for the users to complete their work, like checkboxes to select symptoms [20].
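The classification rule described above can be sketched in a few lines. This is an illustrative sketch only: the `Widget` type and its two boolean flags are hypothetical, and the sample widgets are taken from the examples in the preceding paragraph rather than from the OpenVista analysis.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Widget:
    label: str
    triggers_action: bool  # can it cause an action or trigger an event?
    domain_related: bool   # is it needed for the user's work itself?


def classify(widget: Widget) -> str:
    """Apply the object / overhead operation / domain operation rule."""
    if not widget.triggers_action:
        return "object"
    return "domain operation" if widget.domain_related else "overhead operation"


def summarize(widgets):
    """Count widgets per category and compute the domain share of operations."""
    counts = Counter(classify(w) for w in widgets)
    operations = counts["domain operation"] + counts["overhead operation"]
    domain_share = counts["domain operation"] / operations if operations else 0.0
    return counts, domain_share


# Examples mirroring the text: a label, a "Next" button, a symptom checkbox.
screen = [
    Widget("Address (label)", triggers_action=False, domain_related=True),
    Widget("Next", triggers_action=True, domain_related=False),
    Widget("Symptom checkbox", triggers_action=True, domain_related=True),
]
counts, domain_share = summarize(screen)
print(dict(counts), f"domain share of operations: {domain_share:.0%}")
```

The domain share of operations is the kind of ratio reported later in the functional analysis results.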

The redesign process drew on data collected from the functional analysis of OpenVista. Following the TURF methodology, the goal was to identify unwanted and unused processes that are considered overhead and remove them from the system. The method was to decompose the existing OpenVista system and build the prototype, using the functional analysis data to guide the addition of functions that maximize user efficiency.

3.3.3. Representational Analysis

In the representational analysis the goal is to ensure that data is presented in the most effective fashion. Internal representations, which can be thought of as rules that a user has to memorize in order to use a system, are targeted to be replaced by external representations, which provide visual cues that users find more intuitive [21]. The dimensions of the data are identified and classified according to their scale. To maximize usability, the scale of the widgets used to represent the data should match the scale of the patient’s medication allergy data. The term scale refers to a way to categorize data according to its mathematical properties. These categories are nominal (data can be assigned to a group), ordinal (the data has order and can be ranked), interval (data takes on values but they are not absolute), and ratio (zero-based data values allow reliable statistical comparisons). More detailed information can be found in Zhang’s article [21].
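The idea of matching widget scale to data scale can be expressed as a simple check. The sketch below is an interpretation, not the procedure used in this study: the `Scale` ordering, the wording of the mismatch messages, and the assignment of a drop-down list to the nominal scale and a slider to the ordinal scale are all assumptions made for illustration.

```python
from enum import IntEnum
from typing import Optional


class Scale(IntEnum):
    """Measurement scales, ordered from least to most structure."""
    NOMINAL = 1
    ORDINAL = 2
    INTERVAL = 3
    RATIO = 4


def scale_mismatch(data: Scale, widget: Scale) -> Optional[str]:
    """Return None when the scales match, otherwise a short description."""
    if widget == data:
        return None
    if widget < data:
        return "widget cannot show all the structure in the data"
    return "widget suggests structure the data does not have"


# Hypothetical pairings: allergy status (active/inactive) treated as nominal.
checks = {
    "status shown in a drop-down list": (Scale.NOMINAL, Scale.NOMINAL),
    "status shown on a slider": (Scale.NOMINAL, Scale.ORDINAL),
}
for label, (data, widget) in checks.items():
    print(label, "->", scale_mismatch(data, widget) or "match")
```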

Another key design goal with respect to representations is to create an interface that provides as much direct manipulation as possible by using continuous representations, physical actions and reversible operations with system feedback. Direct manipulation describes widgets that mimic their counterparts in the physical world, such as using the mouse to drag the icon of a file to the icon of a trash can to delete it. Direct manipulation improves the user experience because it allows users to work symbolically with representations that are familiar [14]. Consistent with direct manipulation, an emphasis is placed on external representations to lessen the cognitive load on the user.

3.3.4. Task Analysis

In TURF, the task analysis step ensures that a comprehensive understanding of the tasks is achieved. This involves understanding how work flows, what constraints exist, what inputs and outputs are necessary, and how the information is organized. The task analysis was based on information from the functional analysis and from interviews with our medical experts. Once the design team was able to show a prototype of the redesigned interface, the medical experts were asked to evaluate how well the interface addressed the issues that had been identified. Based on feedback from the medical experts and from an expert in interface design, the initial redesign was revised to reflect new, improved design ideas.

During the task analysis phase, the design team also conducted a structured analysis of the OpenVista interface using Keystroke Level Modeling (KLM), which allowed the team to model and quantify user activity. KLM accounts for the mental operations necessary to complete a task, but the focus is on the physical actions required of the user, like typing on the keyboard or using the mouse [22]. This process provides quantifiable data about the steps that a user follows to complete a task. With this information, trouble spots can be identified. KLM measurements also provide a viable way to compare the original design against the redesigned interface with regard to task performance.
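A KLM estimate is essentially a sum of per-operator times over the sequence of actions in a task. The sketch below illustrates that calculation under stated assumptions: the operator times are commonly cited values from the KLM literature, not values reported by this study, and the example sequence is invented.

```python
# Commonly cited KLM operator times in seconds. The values actually used
# in this study are not stated, so these are assumptions.
OPERATOR_SECONDS = {
    "K": 0.28,  # press a key (average typist)
    "P": 1.10,  # point with the mouse to a target
    "B": 0.10,  # press or release the mouse button
    "H": 0.40,  # move hands between keyboard and mouse
    "M": 1.35,  # mental preparation
}


def klm_estimate(sequence: str):
    """Return (step count, estimated seconds) for a string of operators."""
    steps = [op for op in sequence if not op.isspace()]
    unknown = set(steps) - set(OPERATOR_SECONDS)
    if unknown:
        raise ValueError(f"unknown operators: {sorted(unknown)}")
    seconds = sum(OPERATOR_SECONDS[op] for op in steps)
    return len(steps), round(seconds, 2)


# Invented example: think, point at a field, click, think, type four characters.
steps, seconds = klm_estimate("M P B B M K K K K")
print(steps, seconds)
```

Running the same function over the operator sequences for two designs gives comparable step counts and time estimates, which is how KLM supports the before-and-after comparison described above.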

3.4. Heuristic Evaluation

The TURF evaluation was the main method for evaluating the OpenVista interface. To provide an additional crosscheck of the TURF evaluation, a heuristic evaluation of OpenVista was also performed. The results of the heuristic evaluation serve as the baseline for measuring the improvements in the redesigned interface.

The medication allergy entry form in OpenVista was reviewed using the usability heuristic principles by the six team members. Each individual performed an independent review to look for usability violations. The evaluators were provided with a tabular form for entering the violations as they were found. After the prototype design was created, a heuristic evaluation was performed on the prototype, thus enabling the comparison between the two interface designs.

3.5. Design Standards

As part of our design effort, our team considered several design standards and selected the Microsoft standards [23] [24] [25]. The standards provided guidance on the use and location of standard control buttons, as well as more specific recommendations on what information should be displayed for adverse drug reactions. Our design complies with many of the principles detailed in the guidelines. For example, we display the fields that Microsoft recommends; we handle the option to remove a record in a way that meets their recommendation; we provide save and cancel buttons; we allow multi-line entries; and we preserve history and comments.

We also made some design choices that deviated from the Microsoft standard because the experiences of our medical experts steered us towards different design choices. The prototype displays medication allergies with the most recent ones first; Microsoft recommends keeping the list in alphabetical order. Microsoft also recommends that deleted records not be visible to users. Here too, our medical experts felt that this information should be visible, both to reduce legal risk and because the historical information could be useful to a clinician.

4. Results

4.1. User Analysis Results

The user analysis is based on hypothetical data that was generated by the domain experts on the team. Based on their clinical experiences, a matrix of users and their attributes was constructed that details a conceivable user community. The resulting matrix demonstrates possible, not actual, user attributes.

Table 1 summarizes the key attributes of the most common users of the medication allergy application. Abilities and skills are broken down into four levels: low, moderate, high, and expert. Users are divided into two functional groups. The first group, “Assessors”, has clinical responsibility to assess, record, or modify a patient’s allergies. The second group, “Viewers”, has clinical responsibility to view and use knowledge of patient allergies during patient care, but only reads the allergy assessment; these users should not have the capability in the system to record or modify records.

Table 1.

User Analysis Results

| Attribute | Physician | Phys. Asst/Adv. Practice Nurse | Pharmacist | Nurse | Nurse Aid/Tech | Ancillary Technicians | Unit Secretary | Dietician |
|---|---|---|---|---|---|---|---|---|
| User Group | Allergy Assessor | Allergy Assessor | Allergy Assessor | Allergy Assessor | Allergy Viewer | Allergy Viewer | Allergy Viewer | Allergy Viewer |
| Allergy Application Skill Level | Expert | Expert | Expert | Expert | Low | Low | Low | Low |
| Expertise & Skills | High | High | High | High | Moderate | Moderate | Low | Moderate |
| Domain Knowledge | High | High | High | High | Moderate | Moderate | Low | Moderate |
| Education Background | High | High | High | Moderate | Moderate | Moderate | Low | Moderate |
| Computer Knowledge | Moderate | Moderate | High | Moderate | Moderate | Moderate | High | Moderate |
| Frequency of System Use | High | High | High | High | Moderate | Moderate | Low | Moderate |
| Available Time to Learn | Low | Low | Low | Low | Low | Low | Low | Low |
| Motivation for Change | Low | Low | Moderate | Low | Low | Low | Low | Low |
| Work Environment: Distraction | High | High | Moderate | High | High | Moderate | Moderate | Moderate |

- Allergy Assessors: Clinical staff who assess patient allergies, with credentials, permissions, and responsibilities to record or modify patient allergies

- Allergy Viewers: Clinical staff who use allergy assessments (read-only), without credentials or permissions to record or modify patient allergies

All other user criteria are assumed to be at a moderate or higher level of skill and knowledge for all users, on the assumption that every user has the skills and training needed to competently perform their job function with regard to the medical ramifications of allergies in an in-patient hospital environment.
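The distinction between the two user groups amounts to a simple permission rule. The sketch below is hypothetical: the function name and action labels are invented, and the assignment of individual roles to the two groups is read off the column order of Table 1, which is an assumption rather than something the study states role by role.

```python
# Assumed grouping, inferred from the column order of Table 1.
ASSESSORS = {"Physician", "Phys. Asst/Adv. Practice Nurse", "Pharmacist", "Nurse"}
VIEWERS = {"Nurse Aid/Tech", "Ancillary Technicians", "Unit Secretary", "Dietician"}


def allowed_actions(role: str) -> set:
    """Return the allergy-list actions a role may perform."""
    if role in ASSESSORS:
        return {"record", "modify", "retrieve"}
    if role in VIEWERS:
        return {"retrieve"}  # read-only access to the allergy assessment
    raise ValueError(f"unknown role: {role}")


print(sorted(allowed_actions("Pharmacist")))
print(sorted(allowed_actions("Dietician")))
```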

4.2. Functional Analysis Results

The functional analysis provided information illustrating improvements in the prototype. As seen in Figure 1, there are 18% fewer interface objects on the screens. Overhead operations, which are not related to the work domain, diminished by 80%, and domain-related operations showed an increase of 89%. Overall the number of operations decreased from 127 to 72, while the number of domain operations increased from less than 22% to 74% of the operations. The reduction in overhead operations and the increase in domain operations suggest that the interface will be more efficient and less complex.

Figure 1.

Functional analysis results, counts of objects and operations

4.3. Representational Analysis Results

As part of the representational analysis, nine essential data elements and their attributes were identified. These variables were then categorized based on their scale type and their distributed representation model. Aspects of the existing interface that required training or memorization were treated as opportunities for improvement. Because external representations serve to cue user actions, they were used to improve the user experience. Consequently, by reducing the use of confusing internal representations, the design demands less cognitive processing by the user. One example is the elimination of OpenVista’s “Lookup Allergy / ADR” (Adverse Reactions) display. In the redesign, the allergy lookup is done in the assessment display using a drop-down menu that pulls from one source instead of many. This creates a consistent display of user information across different views.

The representational analysis showed benefits in the reduction of cognitive processing required by the users. Direct manipulation was achieved by collapsing the OpenVista’s “Lookup Allergy / ADR” functionality into one process in the redesigned Record Allergy display. In addition, the user can select and directly edit medication allergy information in the Record Allergy display. Where choices are constrained, interface widgets are used to show the user what choices are available. Where lists are used, choices are filtered as text is entered. And existing allergy records are visible to provide examples for users.
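The behavior of filtering list choices as text is entered can be sketched as follows. This is an assumption-laden illustration: the function name is hypothetical, the drug names are invented sample data, and case-insensitive substring matching is one plausible rule; the prototype's actual matching rule is not described.

```python
def filter_choices(choices, typed):
    """Return the choices that contain the typed text, ignoring case."""
    needle = typed.strip().casefold()
    if not needle:
        return list(choices)  # nothing typed yet: show every choice
    return [c for c in choices if needle in c.casefold()]


# Invented sample list, for illustration only.
drugs = ["Penicillin", "Amoxicillin", "Codeine", "Aspirin"]
print(filter_choices(drugs, "cill"))
```

Narrowing the visible choices in this way acts as an external representation: the user recognizes the intended entry rather than recalling and typing it in full.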

The redesign process also highlighted other improvement opportunities in the representational analysis. OpenVista’s design utilizes five discrete display screens or dialogs each with attendant system overhead. The redesigned system utilizes only three screens or dialogs to represent the same information. These are a snapshot display (Patient Summary view) for basic allergy information; a comprehensive assessment display (Record, Modify or View) for detailed information and a Record allergy display which is a derivative of the previous display.

Overall, the representational analysis resulted in numerous changes to the prototype design that reduced the cognitive burden on users: confusing dialog boxes with complex rules were replaced by fewer screens on which the important data fields are visible, unimportant data fields are left off, and widgets provide visual guidance for users.

4.4. Task Analysis Results

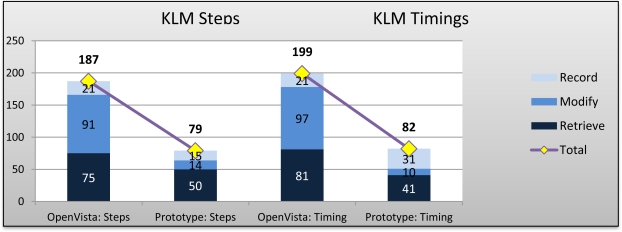

Keystroke Level Modeling (KLM) was used to assess both the original design and the redesign [22]. Figure 2 has the individual and the total KLM results for the three test procedures. Looking first at the results for the Record test procedure, we see a modest improvement in steps and times for the prototype. KLM steps were reduced from 75 to 50, a reduction of 33%. And KLM times were reduced from 81 seconds to 41 seconds, a reduction of 49%.

Figure 2.

Comparison of steps to modify an allergy entry

The Modify test procedure shows the largest differences, for two reasons. First, OpenVista does not provide the capability to modify a medication allergy; the user must delete the existing medication allergy and then record a new one. Second, the use of direct manipulation to modify fields in the prototype contributes greatly to the improvement. Consequently, as we see in Figures 2 & 3, the step count decreased from 91 for OpenVista to 14 for the prototype, an 85% decrease. The KLM timings decreased from 97 seconds for OpenVista to 10 seconds for the prototype, a 90% decrease.

Figure 3.

Summary of KLM results

For the Retrieve test procedure, the numbers also show improvements. The step counts went from 75 for OpenVista to 50 for the prototype, a 33% reduction. The timings went from 81 seconds for OpenVista to 41 seconds for the prototype, a 49% reduction.

Overall, the TURF task analysis shows clear improvements in both the number of KLM steps and the KLM timings. The total KLM step count decreased from 187 in OpenVista to 79 in the prototype, and the total KLM time for task completion decreased from 199 seconds in OpenVista to 64 seconds in the prototype, a 68% reduction in KLM time.
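The percentage reductions quoted for the Modify procedure and for the totals follow directly from the before and after values. The short sketch below reproduces that arithmetic using only figures stated in the text; the helper name `percent_reduction` is hypothetical.

```python
def percent_reduction(before: float, after: float) -> int:
    """Percentage decrease from before to after, rounded to a whole percent."""
    return round(100 * (before - after) / before)


# (OpenVista, prototype) pairs as reported in the text.
klm_results = {
    "Modify steps": (91, 14),
    "Modify seconds": (97, 10),
    "Total steps": (187, 79),
    "Total seconds": (199, 64),
}
for label, (openvista, prototype) in klm_results.items():
    print(f"{label}: {percent_reduction(openvista, prototype)}% reduction")
```

By the same calculation, the total step count falls by about 58%, a figure the text does not state explicitly.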

Another design change worth noting is the additional external representation in the header panel. OpenVista includes allergies in the header for access from any clinical tab, but relies on the user’s recall to click the “A” symbol (presumably for “Allergy”) to review them. The prototype adds an “Allergy” field to the same section of the header, with the allergies clearly listed. If the user wishes to view the detailed allergy information, a single click on the allergy field in the header displays it.

Changes on the “Patient Summary” tab show how improving the representation of information can benefit task performance. OpenVista lists only the allergy name, whereas the prototype uses the available field space to list all NIST specified data for review. In most cases access to the more specific details will not be required. This change presents the commonly accessed information on the main screen which has the additional benefit of improving performance times by reducing the need to view a second screen.

The NIST Test Procedure requires three data fields: drug name, reaction, and (active or inactive) status. Both systems support a detailed view of the required allergy information, but the prototype has the required information on the Patient Summary screen and allows ready access to additional details from either location on the Patient Summary screen. OpenVista displays only the drug or adverse reaction and requires complicated internal mental processes to remember how to access the allergy details.

The TURF task analysis resulted in a valuable decrease in the complexity of tasks in the prototype as compared to OpenVista. External representation improvements, fewer task steps, and decreased times to complete tasks all strongly suggest a significant decrease in complexity and a corresponding improvement in usability. Lastly, the improvements help users keep their focus on the patient care interaction, which should reduce the risk of errors associated with cognitive burden.

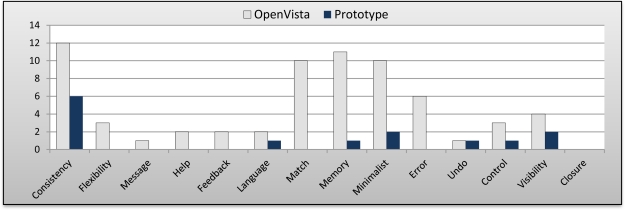

4.5. Heuristic Evaluation Results

Figure 4 shows the results of the heuristic evaluation for OpenVista and the prototype. In OpenVista’s medication allergy application, 67 heuristic violations were found. The categories with the largest number of violations are Consistency (12), Memory (11), Match (10), and Minimalist (10). Together these account for 64% of the violations found. Notably, the only two violations rated as catastrophic by the reviewers were in the Message and Undo categories. For the prototype there were 14 heuristic violations, a 79% decrease versus OpenVista. The residual violations were due to limitations in the prototype and to the potential of the “Entry Error” feature of the new design to confuse a user.

Figure 4.

Heuristic violations by category
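The two percentages reported for the heuristic evaluation can be checked from the counts given in the text. The sketch below uses only those counts; the variable names are hypothetical, and per-category counts for the remaining heuristics are not listed in the text, so they are not included.

```python
# Counts as reported in the text.
openvista_total = 67
prototype_total = 14
top_categories = {"Consistency": 12, "Memory": 11, "Match": 10, "Minimalist": 10}

# Share of OpenVista violations that fall in the four largest categories.
top_share = round(100 * sum(top_categories.values()) / openvista_total)

# Decrease in total violations from OpenVista to the prototype.
reduction = round(100 * (openvista_total - prototype_total) / openvista_total)

print(f"top four categories: {top_share}% of violations")
print(f"prototype reduction: {reduction}%")
```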

5. Discussion

Although this study was conducted as a course project with constraints that limit its reliability and validity, the study has determined that the TURF framework enabled the design team to make measurable improvements in interface design and user performance. The results of this research are consistent with the findings of other projects using TURF that demonstrate that the TURF framework is an effective method for conducting usability evaluations that can directly lead to improvements in the user interface of systems [6] [19] [20] [26] [27].

TURF is a viable methodology for evaluating the design of an interface and isolating areas that can prove problematic for users. TURF provides a comprehensive framework that encourages incorporating usability concepts during the planning stages of interface design. In cases where an application has already been developed, TURF serves as a structure for identifying usability issues and developing alternatives based on the analysis of the problems. In this study, the use of the TURF framework resulted in significant improvements in the tasks required to manage allergies. The number of steps needed to perform tasks was reduced and the amount of external representation was increased. Our heuristic evaluations also indicate substantial improvements in the interface design.

The diversity of the design team also proved beneficial. The healthcare backgrounds of design team members provided guidance and leadership on domain-related requirements of the design. The technical members consolidated the domain concepts into the prototype which was further validated by the medical domain experts. The team approach reinforced the value of user-centered design.

This study could be extended or improved in several ways.

This is a preliminary study that leverages theoretical estimation of improvement. In lieu of hypothetical users, user data should be gathered using the spectrum of methods that TURF encourages, such as interviews, focus groups, surveys, and observations. Future work needs to utilize user testing to validate the findings of this study.

Utilizing the TURF framework with additional NIST Test Procedures or with different EMRs would help demonstrate the generalizability of the findings of this study.

User analysis can be implemented using a sampling of users of the target system. The impact of the TURF framework on improving system performance through the development of the work ontology and user requirements was not demonstrated by this study.

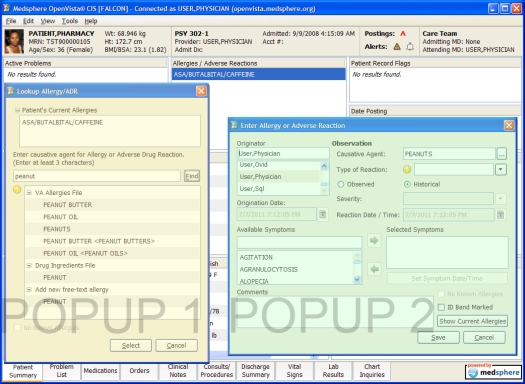

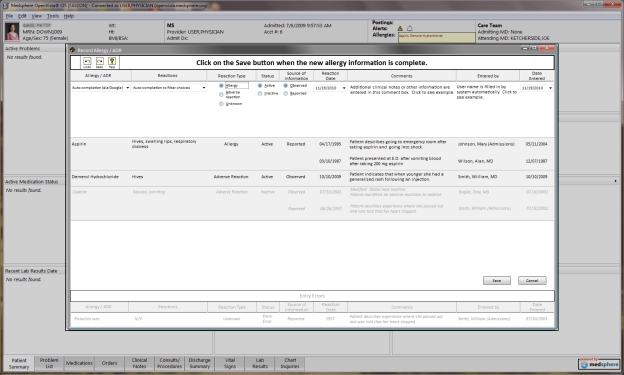

Screen shots of the key screens used during the Add Allergy operation are located in the appendix. Note that in Figure 5, two screen shots of OpenVista have been combined into a single screen shot; this construct was made to enable the presentation of both OpenVista screens at the highest possible resolution in the available space. Figure 6 shows how a prototype user would perform the same operation using a single screen.

6. Conclusion

This study demonstrates that the TURF framework can be used to evaluate an existing user interface and create a new EMR interface design that is measurably more satisfying, less complex and easier to use. With the increased impetus to implement and utilize EMRs, TURF provides a method for software developers to maximize the usability of their user interface. Similarly, prospective purchasers of EMRs can leverage TURF to compare the usability of EMR systems being considered.

7. Acknowledgments

We would like to acknowledge the contributions of Dr. Rui Zeng, who served as our expert guide in SecondLife, our virtual classroom.

This project was supported in part by Grant No. 10510592 for Patient-Centered Cognitive Support under the Strategic Health IT Advanced Research Projects Program (SHARP) from the Office of the National Coordinator for Health Information Technology.

Appendix: Key Add Allergy Screen Shots

Figure 5.

OpenVista composite screen shot showing 2 Add Allergy popup screens

Figure 6.

Prototype screen shot displaying Add Allergy operation

References

- 1. Zhang J, Walji M. TURF: A unified framework of EHR Usability. [Manuscript under review]

- 2. Congressional Budget Office. Technological change and the growth of health care spending. 2008.

- 3. Docteur E, Berenson R. How does the quality of US health care compare internationally? Robt Wood Johnson Found. 2009.

- 4. Dept of HHS. 45 CFR Part 170: Health information technology: initial set of standards, implementation, specifications, and certification criteria for electronic health record technology. Federal Register; 2010. Final rule.

- 5. Friedman CP. The strategic importance of usability in Meaningful Use. HIMSS Conference 11, 2011; 2011. pp. 1–12.

- 6. Zhang J, Butler K. UFuRT: a work-centered framework and process for design and evaluation of information systems. HCI Int Proc. 2007:1–5.

- 7. Horsky J, Zhang J, Patel VL. To err is not entirely human: complex technology and user cognition. J Biomed Inform. 2005;38:264–266. doi: 10.1016/j.jbi.2005.05.002.

- 8. Hooey BL, Foyle DC, Andre AD. Integration of cockpit displays for surface operations: The final stage of human-centered design approach. SAE Transactions: Journal of Aerospace. 2000;109:1053–1065.

- 9. Bevan N. Design for usability. Proc of HCI Intl 1999; Aug 1999. pp. 1–6.

- 10. Office of the National Coordinator for Health Information Technology. Certified health IT list. [Internet] 2011. Available from: http://onc-chpl.force.com/ehrcert.

- 11. NIST. Approved test procedures version 1.1. [Internet] 2010. Available from: http://healthcare.nist.gov/use_testing/effective_requirements.html.

- 12. Nielsen J. Usability Engineering. Academic Press; 1994.

- 13. Tang Z, Johnson T, Tindall R, Zhang J. Applying heuristic evaluation to improve the usability of a tele-medicine system. Telemed and eHealth. 2006;12(1):24–34. doi: 10.1089/tmj.2006.12.24.

- 14. Shneiderman B, Plaisant C. Designing the user interface. Addison-Wesley; 2010.

- 15. Medsphere Systems. System Architecture. [Internet] 2011. [cited 2011 Mar 14]. Available from: http://www.medsphere.com/solutions/system-architecture.

- 16. Caretta Software. User interface design and software prototyping tools. [Internet] 2010. Available from: http://www.carettasoftware.com.

- 17. NIST. Test procedure for §170.302(d), Maintain active medication list. [Internet] 2010. Available from: http://healthcare.nist.gov/docs/170.302.d_medicationlist_v1.1.pdf.

- 18. Butler K, Zhang J, Esposito C, Bahrami A, Hebron R, Kieras D. Work-centered design: a case study of a mixed-initiative scheduler. CHI 07. 2007. pp. 747–756.

- 19. Zhang J, Patel V, Johnson K, Smith J, Malin J. Designing human-centered distributed information systems. IEEE Intell Syst. 2002;17(5):42–47.

- 20. Zhang Z, Walji M, Patel V, Gimbel R, Zhang J. Functional analysis of interfaces in the US military electronic health record system using UFuRT framework. AMIA 2009 Symp Proc; 2009. pp. 730–734.

- 21. Zhang J. A representational analysis of relational information displays. Intl J Hum-Comp Studies. 1996;45(1):59–74.

- 22. Wikipedia contributors. GOMS. [Internet] 2010. Available from: http://en.wikipedia.org/wiki/GOMS.

- 23. Microsoft. User experience interaction guidelines for Windows 7 and Windows Vista. Microsoft; 2010.

- 24. Microsoft. Design guidance: displaying adverse drug reaction risks. Microsoft; 2009.

- 25. Microsoft. Design guidance: recording adverse drug reaction risks. Microsoft; 2009.

- 26. Zhang J. Usability issues with EMRs [Internet] 2010.

- 27. Zhang J, Johnson T, Patel V, Paige D, Kubose T. Using usability heuristics to evaluate patient safety of medical devices. J of Biomed Inform. 2003;36(1/2):23–30. doi: 10.1016/s1532-0464(03)00060-1.

- 28. Schumacher R. NIST guide to the processes approach for improving the usability of electronic health records. National Institute of Standards and Technology; 2010. NISTIR 7741.