Abstract

Biomarker discovery from high-dimensional data is a crucial problem with enormous applications in biology and medicine. It is also extremely challenging from a statistical viewpoint, but surprisingly few studies have investigated the relative strengths and weaknesses of the plethora of existing feature selection methods. In this study we compare  feature selection methods on

feature selection methods on  public gene expression datasets for breast cancer prognosis, in terms of predictive performance, stability and functional interpretability of the signatures they produce. We observe that the feature selection method has a significant influence on the accuracy, stability and interpretability of signatures. Surprisingly, complex wrapper and embedded methods generally do not outperform simple univariate feature selection methods, and ensemble feature selection has generally no positive effect. Overall a simple Student's t-test seems to provide the best results.

public gene expression datasets for breast cancer prognosis, in terms of predictive performance, stability and functional interpretability of the signatures they produce. We observe that the feature selection method has a significant influence on the accuracy, stability and interpretability of signatures. Surprisingly, complex wrapper and embedded methods generally do not outperform simple univariate feature selection methods, and ensemble feature selection has generally no positive effect. Overall a simple Student's t-test seems to provide the best results.

Introduction

Biomarker discovery from high-dimensional data, such as transcriptomic or SNP profiles, is a crucial problem with enormous applications in biology and medicine, such as diagnosis, prognosis, patient stratification in clinical trials or prediction of the response to a given treatment. Numerous studies have for example investigated so-called molecular signatures, i.e., predictive models based on the expression of a small number of genes, for the stratification of early breast cancer patients into low-risk or high-risk of relapse, in order to guide the need for adjuvant therapy [1].

While predictive models could be based on the expression of more than a few tens of genes, several reasons motivate the search for short lists of predictive genes. First, from a statistical and machine learning perspective, restricting the number of variables is often a way to reduce over-fitting when we learn in high dimension from few samples and can thus lead to better predictions on new samples. Second, from a biological viewpoint, inspecting the genes selected in the signature may shed light on biological processes involved in the disease and suggest novel targets. Third, and to a lesser extent, a small list of predictive genes allows the design of cheap dedicated prognostic chips.

Published signatures share, however, very few genes in common, raising questions about their biological significance [2]. Independently of differences in cohorts or technologies, [3] and [4] demonstrate that a major cause for the lack of overlap between signatures is that many different signatures lead to similar predictive accuracies, and that the process of estimating a signature is very sensitive to the samples used in the phase of gene selection. Specifically [5], suggest that many more samples than currently available would be required to reach a descent level of signature stability, meaning in particular that no biological insight should be expected from the analysis of current signatures. On the positive side, some authors noticed that the biological functions captured by different signatures are similar, in spite of the little overlap between them at the gene level [6]–[8].

From a machine learning point of view, estimating a signature from a set of expression data is a problem of feature selection, an active field of research in particular in the high-dimensional setting [9]. While the limits of some basic methods for feature selection have been highlighted in the context of molecular signatures, such as gene selection by Pearson correlation with the output [5], there are surprisingly very few and only partial investigations that focus on the influence of the feature selection method on the performance and stability of the signature [10]. compared various feature selection methods in terms of predictive performance only, and [11] suggest that ensemble feature selection improves both stability and accuracy of SVM recursive feature elimination (RFE), without comparing it with other methods. However, it remains largely unclear how “modern” feature selection methods such as the elastic net [12], SVM RFE or stability selection [13] behave in these regards and how they compare to more basic univariate techniques.

Here we propose an empirical comparison of a panel of feature selection techniques in terms of accuracy and stability, both at the gene and at the functional level. Using four breast cancer datasets, we observe significant differences between the methods. Surprisingly, we find that ensemble feature selection, i.e., combining multiple signatures estimated on random subsamples, has generally no positive impact, and that simple filters can outperform more complex wrapper or embedded methods.

Materials and Methods

Feature selection methods

We compare eight common feature selection methods to estimate molecular signatures. All methods take as input a matrix of gene expression data for a set of samples from two categories (good and bad prognosis in our case), and return a set of genes of a user-defined size  . These genes can then be used to estimate a classifier to predict the class of any sample from the expression values of these genes only. Feature selection methods are usually classified into three categories [9], [14]: filter methods select subsets of variables as a pre-processing step, independently of the chosen predictor; wrapper methods utilize the learning machine of interest as a black box to score subsets of variable according to their predictive power; finally, embedded methods perform variable selection in the process of training and are usually specific to given learning machines. We have selected popular methods representing these three classes, as described below.

. These genes can then be used to estimate a classifier to predict the class of any sample from the expression values of these genes only. Feature selection methods are usually classified into three categories [9], [14]: filter methods select subsets of variables as a pre-processing step, independently of the chosen predictor; wrapper methods utilize the learning machine of interest as a black box to score subsets of variable according to their predictive power; finally, embedded methods perform variable selection in the process of training and are usually specific to given learning machines. We have selected popular methods representing these three classes, as described below.

Filter methods

Univariate filter methods rank all variables in terms of relevance, as measured by a score which depends on the method. They are simple to implement and fast to run. To obtain a signature of size  , one simply takes the top

, one simply takes the top  genes according to the score. We consider the following four scoring functions to rank the genes: the Student's t-test and Wilcoxon sum-rank test, which evaluate if each feature is differentially expressed between the two classes; and the Bhattacharyya distance and relative entropy to calculate a distance between the distributions of the two groups. We used the MATLAB Bioinformatics toolbox to compute these scoring functions.

genes according to the score. We consider the following four scoring functions to rank the genes: the Student's t-test and Wilcoxon sum-rank test, which evaluate if each feature is differentially expressed between the two classes; and the Bhattacharyya distance and relative entropy to calculate a distance between the distributions of the two groups. We used the MATLAB Bioinformatics toolbox to compute these scoring functions.

Wrapper methods

Wrapper methods attempt to select jointly sets of variables with good predictive power for a predictor. Since testing all combinations or variables is computationally impossible, wrapper methods usually perform a greedy search in the space of sets of features. We test SVM recursive feature elimination (RFE)

[15], which starts with all variables and iteratively removes the variables which contribute least to a linear SVM classifier trained on the current set of variables. We remove  of features at each iteration until

of features at each iteration until  remain, and then remove them one by one in order to rigourously rank the first

remain, and then remove them one by one in order to rigourously rank the first  . Following [11], we set the SVM parameter

. Following [11], we set the SVM parameter  to

to  , and checked afterwards that other values of

, and checked afterwards that other values of  did not have a significant influence on the results. Alternatively, we test a Greedy Forward Selection (GFS) strategy for least squares regression also termed Orthogonal Matching Pursuit, where we start from no variable and add them one by one by selecting each time the one which minimizes the sum of squares, in a

did not have a significant influence on the results. Alternatively, we test a Greedy Forward Selection (GFS) strategy for least squares regression also termed Orthogonal Matching Pursuit, where we start from no variable and add them one by one by selecting each time the one which minimizes the sum of squares, in a  -fold internal cross-validation setting. This algorithm was implemented in the SPAMS toolbox for Matlab initially published along with [16].

-fold internal cross-validation setting. This algorithm was implemented in the SPAMS toolbox for Matlab initially published along with [16].

Embedded methods

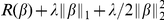

Embedded methods are learning algorithms which perform feature selection in the process of training. We test the popular Lasso regression [17], where a sparse linear predictor  is estimated by minimizing the objective function

is estimated by minimizing the objective function  , where

, where  is the mean square error on the training set (considering the two categories as

is the mean square error on the training set (considering the two categories as  values) and

values) and  .

.  controls the degree of sparsity of the solution, i.e., the number of features selected. We fix

controls the degree of sparsity of the solution, i.e., the number of features selected. We fix  as the smallest value which gives a signature of the desired size

as the smallest value which gives a signature of the desired size  . Alternatively, we tested the elastic net [12], which is similar to the Lasso but where we replace the

. Alternatively, we tested the elastic net [12], which is similar to the Lasso but where we replace the  norm of

norm of  by a combination of the

by a combination of the  and

and  norms, i.e., we minimize

norms, i.e., we minimize  and

and  . By allowing the selection of correlated predictive variables, the elastic net is supposed to be more robust than the Lasso while still selecting predictive variables. Again, we tune

. By allowing the selection of correlated predictive variables, the elastic net is supposed to be more robust than the Lasso while still selecting predictive variables. Again, we tune  to achieve a user-defined level of sparsity. For both algorithms, we used the code implemented in the SPAMS toolbox.

to achieve a user-defined level of sparsity. For both algorithms, we used the code implemented in the SPAMS toolbox.

Ensemble feature selection

Many feature selection methods are known to be sensitive to small perturbations of the training data, resulting in unstable signatures. In order to “stabilize” variable selection, several authors have proposed to use ensemble feature selection on bootstrap samples: the variable selection method is run on several random subsamples of the training data, and the different lists of variables selected are merged into a hopefully more stable subset [11], [13], [18].

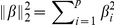

For each feature selection method described above, we tested in addition the following three aggregation strategies for ensemble feature selection. We first bootstrap the training samples  times (i.e., draw a sample of size

times (i.e., draw a sample of size  from the data with replacement

from the data with replacement  times) to get

times) to get  rankings

rankings  of all features by applying the feature selection method on each sample. For filter methods, the ranking of features is naturally obtained by decreasing score. For RFE and GFS, the ranking is the order in which the features are added or removed in the iterative process. For Lasso and elastic net, the ranking is the order in which the variables become selected when

of all features by applying the feature selection method on each sample. For filter methods, the ranking of features is naturally obtained by decreasing score. For RFE and GFS, the ranking is the order in which the features are added or removed in the iterative process. For Lasso and elastic net, the ranking is the order in which the variables become selected when  decreases. We then aggregate the

decreases. We then aggregate the  lists by computing a score

lists by computing a score  for each gene

for each gene  as an average function of its rank

as an average function of its rank  in the

in the  -th bootstrap experiment. We test the following functions of the rank for aggregation:

-th bootstrap experiment. We test the following functions of the rank for aggregation:

Ensemble-mean [11]: we simply average the rank of a gene over the bootstrap experiments, i.e., we take

.

.Ensemble-stability selection [13]: we measure the percentage of bootstrap samples for which the gene ranks in the top

, i.e.,

, i.e.,  if

if  ,

,  otherwise.

otherwise.Ensemble-exponential: we propose a soft version of stability selection, where we average an exponentially decreasing function of the rank, namely

.

.

Finally, for each rank aggregation strategy, the aggregated list is the set of  genes with the largest score.

genes with the largest score.

Accuracy of a signature

In order to measure the predictive accuracy of a feature selection method, we assess the performance of various supervised classification algorithms trained on the data restricted to the selected signature. More precisely, we test  classification algorithms: nearest centroids (NC), k-nearest neighbors (KNN) with

classification algorithms: nearest centroids (NC), k-nearest neighbors (KNN) with  , linear SVM with

, linear SVM with  , linear discriminant analysis (LDA) and naive Bayes (BAYES). The parameters of the KNN and SVM methods are fixed to arbitrary default values, and we have checked that no significantly better results could be obtained with other parameters by testing a few other parameters. We assess the performance of a classifier by the area under the ROC curve (AUC), in two different settings. First, on each dataset, we perform a 10-fold cross-validation (CV) experiment, where both feature selection and training of the classifier are performed on

, linear discriminant analysis (LDA) and naive Bayes (BAYES). The parameters of the KNN and SVM methods are fixed to arbitrary default values, and we have checked that no significantly better results could be obtained with other parameters by testing a few other parameters. We assess the performance of a classifier by the area under the ROC curve (AUC), in two different settings. First, on each dataset, we perform a 10-fold cross-validation (CV) experiment, where both feature selection and training of the classifier are performed on  of the data, and the AUC is computed on the remaining

of the data, and the AUC is computed on the remaining  of the data. This is a classical way to assess the relevance of feature selection of a given dataset. Second, to assess the performance of the signature across datasets, we estimate a signature on one dataset, and assess its accuracy on other datasets by again running a 10-fold CV experiment where only the classifier (restricted to the genes in the signature) is retrained on each training set. In both cases, we report the mean AUC across the folds and datasets, and assess the significance of differences between methods with a paired ANOVA test.

of the data. This is a classical way to assess the relevance of feature selection of a given dataset. Second, to assess the performance of the signature across datasets, we estimate a signature on one dataset, and assess its accuracy on other datasets by again running a 10-fold CV experiment where only the classifier (restricted to the genes in the signature) is retrained on each training set. In both cases, we report the mean AUC across the folds and datasets, and assess the significance of differences between methods with a paired ANOVA test.

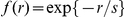

Stability of a signature

To assess the stability of feature selection methods, we compare signatures estimated on different samples in various settings. First, to evaluate stability with respect to small perturbation of the training set, we randomly subsample each dataset into pairs of subsets with  of sample overlap, estimate a signature on each subset, and compute the overlap between two signatures in a pair as the fraction of shared genes, i.e.,

of sample overlap, estimate a signature on each subset, and compute the overlap between two signatures in a pair as the fraction of shared genes, i.e.,  . Note that this corresponds to the figure of merit defined by [5]. The random sampling of subsets is repeated

. Note that this corresponds to the figure of merit defined by [5]. The random sampling of subsets is repeated  times on each dataset, and the stability values are averaged over all samples. We will refer to this procedure the soft-perturbation setting in the remaining. Second, to assess stability with respect to strong perturbation within a dataset, we repeat the same procedure but this time with no overlap between two subsets of samples. In practice, we can only sample subsets of size

times on each dataset, and the stability values are averaged over all samples. We will refer to this procedure the soft-perturbation setting in the remaining. Second, to assess stability with respect to strong perturbation within a dataset, we repeat the same procedure but this time with no overlap between two subsets of samples. In practice, we can only sample subsets of size  , where

, where  is the number of samples in a dataset, to ensure that they have no overlap. Again, we measure the overlap between the signatures estimated on training sets with no sample in common. We call this procedure the hard-perturbation setting. Finally, to assess the stability across datasets, we estimate signatures on each dataset independently, using all samples on each dataset, and measure their overlap. We call this procedure the between-datasets setting below.

is the number of samples in a dataset, to ensure that they have no overlap. Again, we measure the overlap between the signatures estimated on training sets with no sample in common. We call this procedure the hard-perturbation setting. Finally, to assess the stability across datasets, we estimate signatures on each dataset independently, using all samples on each dataset, and measure their overlap. We call this procedure the between-datasets setting below.

Functional interpretability and stability of a signature

To interpret a signature in terms of biological functions, we perform functional enrichment analysis by inspecting the signature for over-represented Gene Ontology (GO) terms. This may hint at biological hypothesis underlying the classification [6], [7]. We perform a hypergeometrical test on each of the  GO biological process (BP) terms that are associated to at least one gene in our dataset, and correct the resulting p-values for multiple testing through the procedure of [19]. To assess the interpretability of a signature, i.e., how easily one can extract a biological interpretation, we compute the number of GO terms over-represented at

GO biological process (BP) terms that are associated to at least one gene in our dataset, and correct the resulting p-values for multiple testing through the procedure of [19]. To assess the interpretability of a signature, i.e., how easily one can extract a biological interpretation, we compute the number of GO terms over-represented at  FDR. To compare two signatures in functional terms, we first extract from each signature the list of 10 GO terms with the smallest p-values, and compare the two lists of GO terms by the similarity measure of [20] which takes into account not only the overlap between the lists but also the relationships between GO BP. Finally, to assess the functional stability of a selection method, we follow a procedure similar to the one presented in the previous section and measure the mean functional similarity of signatures in the soft-perturbation, hard-perturbation and between-datasets settings.

FDR. To compare two signatures in functional terms, we first extract from each signature the list of 10 GO terms with the smallest p-values, and compare the two lists of GO terms by the similarity measure of [20] which takes into account not only the overlap between the lists but also the relationships between GO BP. Finally, to assess the functional stability of a selection method, we follow a procedure similar to the one presented in the previous section and measure the mean functional similarity of signatures in the soft-perturbation, hard-perturbation and between-datasets settings.

Data

We collected  breast cancer datasets from Gene Expression Omnibus [21], as described in Table 1. The four datasets address the same problem of predicting metastatic relapse in breast cancer on different cohorts, and were obtained with the Affymetrix HG-U133A technology. We used a custom CDF file with EntrezGene ids as identifiers [22] to estimate expression levels for

breast cancer datasets from Gene Expression Omnibus [21], as described in Table 1. The four datasets address the same problem of predicting metastatic relapse in breast cancer on different cohorts, and were obtained with the Affymetrix HG-U133A technology. We used a custom CDF file with EntrezGene ids as identifiers [22] to estimate expression levels for  genes on each array, and normalized all arrays with the Robust Multi-array Average procedure [23].

genes on each array, and normalized all arrays with the Robust Multi-array Average procedure [23].

Table 1. Data.

The four breast cancer datasets used in this study.

Results

Accuracy

We first assess the accuracy of signatures obtained by different feature selection methods. Intuitively, the accuracy refers to the performance that a classifier trained on the genes in the signature can reach in prediction. Although some feature selection methods (wrapper and embedded) jointly estimate a predictor, we dissociate here the process of selecting a set of genes and training a predictor on these genes, in order to perform a fair comparison common to all feature selection methods. We test the accuracy of 100-gene signatures obtained by each feature selection method, combined with 5 classifiers to build a predictor as explained in the Methods section. Table 2 shows the mean accuracies (in AUC) over the datasets as reached by the different combinations in 10-fold cross-validation.

Table 2. AUC (10-fold cross-validation).

| Class. | Type | Random | t-test | Entropy | Bhatt. | Wilcoxon | SVM RFE | GFS | Lasso | Elastic Net |

| NC | S | 0.62(0.17) | 0.66(0.14) | 0.58(0.15) | 0.60(0.15) | 0.62(0.15) | 0.62(0.15) | 0.58(0.15) | 0.63(0.15) | 0.63(0.15) |

| E-M | 0.62(0.15) | 0.65(0.14) | 0.59(0.15) | 0.63(0.15) | 0.62(0.15) | 0.63(0.14) | 0.62(0.13) | 0.61(0.16) | 0.63(0.15) | |

| E-E | 0.61(0.15) | 0.65(0.14) | 0.59(0.15) | 0.61(0.16) | 0.62(0.15) | 0.61(0.15) | 0.58(0.13) | 0.63(0.13) | 0.63(0.14) | |

| E-S | 0.63(0.14) | 0.65(0.14) | 0.58(0.15) | 0.61(0.15) | 0.62(0.15) | 0.63(0.15) | 0.59(0.12) | 0.63(0.13) | 0.63(0.14) | |

| KNN | S | 0.59(0.16) | 0.61(0.15) | 0.52(0.11) | 0.57(0.13) | 0.63(0.15) | 0.60(0.15) | 0.59(0.13) | 0.60(0.17) | 0.60(0.17) |

| E-M | 0.61(0.14) | 0.62(0.15) | 0.57(0.15) | 0.60(0.15) | 0.64(0.16) | 0.62(0.15) | 0.61(0.12) | 0.61(0.15) | 0.60(0.12) | |

| E-E | 0.55(0.13) | 0.63(0.15) | 0.53(0.10) | 0.54(0.10) | 0.63(0.16) | 0.60(0.17) | 0.54(0.16) | 0.61(0.14) | 0.60(0.17) | |

| E-S | 0.60(0.13) | 0.63(0.15) | 0.54(0.11) | 0.54(0.12) | 0.62(0.16) | 0.58(0.14) | 0.55(0.14) | 0.62(0.14) | 0.60(0.14) | |

| LDA | S | 0.54(0.12) | 0.56(0.12) | 0.51(0.14) | 0.55(0.13) | 0.52(0.12) | 0.56(0.12) | 0.50(0.13) | 0.58(0.14) | 0.57(0.14) |

| E-M | 0.53(0.10) | 0.55(0.13) | 0.55(0.13) | 0.58(0.12) | 0.56(0.13) | 0.60(0.15) | 0.52(0.14) | 0.59(0.14) | 0.60(0.13) | |

| E-E | 0.54(0.13) | 0.53(0.15) | 0.52(0.15) | 0.53(0.11) | 0.53(0.14) | 0.57(0.13) | 0.53(0.15) | 0.59(0.12) | 0.58(0.13) | |

| E-S | 0.54(0.13) | 0.52(0.13) | 0.54(0.13) | 0.55(0.12) | 0.52(0.14) | 0.57(0.16) | 0.54(0.15) | 0.59(0.15) | 0.60(0.13) | |

| NB | S | 0.57(0.14) | 0.60(0.13) | 0.58(0.11) | 0.58(0.14) | 0.57(0.13) | 0.56(0.14) | 0.54(0.11) | 0.59(0.15) | 0.59(0.15) |

| E-M | 0.59(0.13) | 0.59(0.14) | 0.57(0.14) | 0.59(0.13) | 0.57(0.13) | 0.56(0.13) | 0.59(0.12) | 0.57(0.15) | 0.57(0.14) | |

| E-E | 0.55(0.15) | 0.60(0.14) | 0.58(0.12) | 0.57(0.13) | 0.58(0.13) | 0.57(0.14) | 0.58(0.11) | 0.58(0.12) | 0.58(0.13) | |

| E-S | 0.58(0.14) | 0.60(0.14) | 0.57(0.13) | 0.57(0.13) | 0.58(0.13) | 0.56(0.14) | 0.58(0.10) | 0.58(0.11) | 0.58(0.13) | |

| SVM | S | 0.56(0.18) | 0.56(0.15) | 0.55(0.11) | 0.55(0.12) | 0.54(0.15) | 0.62(0.14) | 0.51(0.16) | 0.62(0.15) | 0.62(0.15) |

| E-M | 0.51(0.15) | 0.55(0.14) | 0.59(0.16) | 0.60(0.13) | 0.56(0.13) | 0.62(0.15) | 0.55(0.16) | 0.61(0.16) | 0.61(0.16) | |

| E-E | 0.54(0.16) | 0.54(0.15) | 0.54(0.13) | 0.54(0.12) | 0.55(0.15) | 0.61(0.17) | 0.56(0.17) | 0.63(0.13) | 0.62(0.16) | |

| E-S | 0.54(0.17) | 0.55(0.18) | 0.56(0.12) | 0.56(0.12) | 0.54(0.14) | 0.61(0.16) | 0.55(0.17) | 0.63(0.14) | 0.62(0.16) |

AUC obtained for each combination of feature selection and classification method, in 10-fold cross validation and averaged over the datasets. Standard error is shown within parentheses. For each selection algorithm, we highlighted the setting in which it obtained the best performance. The Type column refers to the use of feature selection run a single time (S) or through ensemble feature selection, either with the mean (E-M), exponential (E-E) or stability selection (E-S) procedure to aggregate lists.

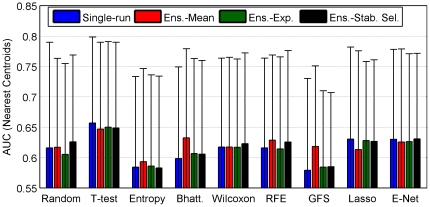

Globally, we observe only limited differences between the feature selection methods, for a given classification method. In particular the selection of a random signature reaches a baseline AUC comparable to that of other methods, confirming results already observed by [3]. Second, we observe that, among all classification algorithms, the simple NC classifier consistently gives good results compared to other classifiers. We therefore choose it as a default classification algorithm for further assessment of the performance of the signatures below. Figure 1 depicts graphically the AUC reached by each feature selection method with NC as a classifier, reproducing the first three lines of Table 2. Although the t-test has the best average AUC, the results vary widely across datasets explaining the large error bars. In fact, a paired ANOVA test detects no method significantly better than the random selection strategy; the only significant differences are observed between t-test, on the one hand, and Entropy and GFS, on the other hand, which have the lowest performances without aggregation. In particular, we observe that ensemble methods for feature selection do not bring any improvement in accuracy in a significant way.

Figure 1. Area under the ROC curve.

Signature of size  in a

in a  -fold CV setting and averaged over the four datasets.

-fold CV setting and averaged over the four datasets.

In order to assess how a signature estimated on one dataset behave in another dataset, we report the results for between-datasets experiments in Table 3. For each training dataset, we highlight the method with the best results, and report the average results (over the  folds) in the last row. In this setting, we barely notice any difference with the cross-validation setting (Table 2) and essentially reach the same conclusions, namely that no significant result stands out, except for the t-test to perform overall better than entropy.

folds) in the last row. In this setting, we barely notice any difference with the cross-validation setting (Table 2) and essentially reach the same conclusions, namely that no significant result stands out, except for the t-test to perform overall better than entropy.

Table 3. AUC (between-datasets setting).

| Training data | Type | Random | t-test | Entropy | Bhatt. | Wilcoxon | SVM RFE | GFS | Lasso | Elastic Net |

| GSE1456 | S | 0.59(0.10) | 0.63(0.13) | 0.60(0.10) | 0.63(0.13) | 0.61(0.14) | 0.61(0.13) | 0.61(0.11) | 0.62(0.11) | 0.62(0.11) |

| E-M | 0.60(0.12) | 0.63(0.14) | 0.60(0.12) | 0.61(0.14) | 0.61(0.14) | 0.61(0.11) | 0.60(0.12) | 0.63(0.11) | 0.60(0.12) | |

| E-E | 0.60(0.13) | 0.63(0.13) | 0.58(0.10) | 0.63(0.12) | 0.61(0.13) | 0.61(0.11) | 0.62(0.12) | 0.63(0.11) | 0.62(0.11) | |

| E-S | 0.60(0.14) | 0.63(0.14) | 0.59(0.10) | 0.63(0.11) | 0.61(0.13) | 0.61(0.13) | 0.62(0.13) | 0.63(0.12) | 0.63(0.09) | |

| GSE2034 | S | 0.62(0.15) | 0.62(0.15) | 0.57(0.20) | 0.59(0.19) | 0.58(0.19) | 0.60(0.18) | 0.62(0.15) | 0.63(0.16) | 0.63(0.16) |

| E-M | 0.63(0.17) | 0.63(0.15) | 0.60(0.15) | 0.64(0.16) | 0.58(0.19) | 0.63(0.17) | 0.62(0.16) | 0.62(0.16) | 0.62(0.16) | |

| E-E | 0.64(0.14) | 0.63(0.15) | 0.56(0.19) | 0.58(0.19) | 0.59(0.19) | 0.63(0.16) | 0.60(0.18) | 0.61(0.16) | 0.61(0.16) | |

| E-S | 0.61(0.17) | 0.63(0.16) | 0.56(0.17) | 0.57(0.19) | 0.59(0.19) | 0.63(0.15) | 0.62(0.17) | 0.62(0.16) | 0.63(0.16) | |

| GSE2990 | S | 0.64(0.14) | 0.64(0.15) | 0.56(0.14) | 0.60(0.16) | 0.60(0.16) | 0.62(0.16) | 0.64(0.15) | 0.66(0.13) | 0.65(0.13) |

| E-M | 0.61(0.15) | 0.66(0.16) | 0.59(0.17) | 0.65(0.13) | 0.58(0.16) | 0.65(0.15) | 0.62(0.14) | 0.64(0.15) | 0.64(0.15) | |

| E-E | 0.61(0.14) | 0.66(0.15) | 0.54(0.14) | 0.57(0.19) | 0.59(0.15) | 0.62(0.15) | 0.63(0.15) | 0.65(0.14) | 0.66(0.14) | |

| E-S | 0.62(0.15) | 0.66(0.14) | 0.55(0.14) | 0.57(0.18) | 0.60(0.16) | 0.64(0.15) | 0.63(0.14) | 0.65(0.14) | 0.65(0.14) | |

| GSE4922 | S | 0.65(0.15) | 0.66(0.15) | 0.59(0.16) | 0.63(0.14) | 0.64(0.16) | 0.64(0.14) | 0.62(0.12) | 0.65(0.14) | 0.65(0.14) |

| E-M | 0.65(0.12) | 0.67(0.15) | 0.64(0.13) | 0.66(0.16) | 0.65(0.15) | 0.64(0.13) | 0.65(0.15) | 0.66(0.14) | 0.64(0.13) | |

| E-E | 0.65(0.15) | 0.66(0.15) | 0.57(0.16) | 0.63(0.15) | 0.66(0.15) | 0.64(0.12) | 0.65(0.13) | 0.67(0.13) | 0.66(0.14) | |

| E-S | 0.65(0.15) | 0.65(0.15) | 0.60(0.16) | 0.62(0.16) | 0.66(0.16) | 0.63(0.12) | 0.63(0.10) | 0.66(0.13) | 0.65(0.13) | |

| Average | S | 0.62(0.14) | 0.64(0.15) | 0.58(0.15) | 0.61(0.15) | 0.61(0.16) | 0.62(0.15) | 0.62(0.13) | 0.64(0.13) | 0.64(0.14) |

| E-M | 0.62(0.14) | 0.65(0.15) | 0.61(0.15) | 0.64(0.15) | 0.61(0.16) | 0.63(0.14) | 0.62(0.14) | 0.64(0.14) | 0.62(0.14) | |

| E-E | 0.62(0.14) | 0.64(0.15) | 0.56(0.15) | 0.60(0.17) | 0.61(0.16) | 0.63(0.13) | 0.62(0.14) | 0.64(0.14) | 0.64(0.14) | |

| E-S | 0.62(0.15) | 0.64(0.15) | 0.58(0.15) | 0.60(0.16) | 0.61(0.16) | 0.63(0.14) | 0.62(0.14) | 0.64(0.14) | 0.64(0.13) |

AUC obtained with Nearest Centroids when a signature is learnt from one dataset and tested by 10-fold cross-validation on the three remaining datasets. Standard error is shown within parentheses. For each training dataset, we highlighted the best performance. The Type column refers to the use of feature selection run a single time (S) or through ensemble feature selection, either with the mean (E-M), exponential (E-E) or stability selection (E-S) procedure to aggregate lists.

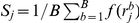

In order to check how these results depend on the size of the signature, we plot in Figure 2 the AUC of the  feature selection methods, with or without ensemble averaging, combined with a NC classifier, as a function of the size of the signature. Interestingly, we observe that in some cases the AUC seems to increase early, implying that fewer than

feature selection methods, with or without ensemble averaging, combined with a NC classifier, as a function of the size of the signature. Interestingly, we observe that in some cases the AUC seems to increase early, implying that fewer than  genes may be sufficient to obtain the maximal performance. Indeed, while it is significant that

genes may be sufficient to obtain the maximal performance. Indeed, while it is significant that  -gene signatures perform better than a list of fewer than

-gene signatures perform better than a list of fewer than  features (

features ( regardless of the method or the setting), signatures of size

regardless of the method or the setting), signatures of size  do not lead to significantly worse performances in general. It is worth noting that some algorithms have an increasing AUC curve in this range of sizes, and we observe no overfitting that may lead to a decreasing AUC when the number of features increases. Random selection was previously shown to give an AUC equivalent to other methods for a large signature, but as we observe on this picture, the fewer genes the larger the gap in AUC.

do not lead to significantly worse performances in general. It is worth noting that some algorithms have an increasing AUC curve in this range of sizes, and we observe no overfitting that may lead to a decreasing AUC when the number of features increases. Random selection was previously shown to give an AUC equivalent to other methods for a large signature, but as we observe on this picture, the fewer genes the larger the gap in AUC.

Figure 2. Area under the ROC Curve.

NC classifier trained as a function of the size of the signature, for different feature selection methods, in a  -fold CV setting averaged over the four datasets.

-fold CV setting averaged over the four datasets.

In order to assess the influence of the number of samples used to estimate the signature, we computed the 10-fold cross-validation AUC (repeated 50 times) reached with a NC classifier as a function of the number of samples in the training set. Figure 3 shows the AUC averaged over the four datasets, for each feature selection method, while Figure 4 shows the same AUC on each dataset separately. With no surprise, we observe that the average accuracy clearly increases with the number of samples in the training set, for all methods, and that the relative order of the different methods does not strongly depend on the number of samples. While it is impossible to extrapolate the curve, it is not hard to imagine that it would continue to increase to a certain point. On this plot, t-test clearly outperforms the rest of the methods. However, looking at the behavior of the methods with respect to the size of the training set on each set separately, we note that not only the level of performance but also the relative order between methods strongly depend on the dataset. For example, while t-test outperforms all methods in the GSE4922 dataset, Lasso and Elastic Net seem to be the best choices in GSE2034. On the other hand, we observe that the best methods on each datasets have not reached their asymptote yet, suggesting by extrapolation that better accuracies could be reached with larger cohorts.

Figure 3. Area under the ROC Curve.

NC classifier trained as a function of the number of samples in a  -fold CV setting. We show here the accuracy for 100-gene signatures as averaged over the

-fold CV setting. We show here the accuracy for 100-gene signatures as averaged over the  datasets. Note that the maximum value of the x axis is constrained by the smallest dataset, namely GSE2990.

datasets. Note that the maximum value of the x axis is constrained by the smallest dataset, namely GSE2990.

Figure 4. Area under the ROC Curve.

NC classifier trained as a function of the number of samples in a  -fold CV setting for each of the four datasets. We show here the accuracy for 100-gene signatures.

-fold CV setting for each of the four datasets. We show here the accuracy for 100-gene signatures.

Stability of gene lists

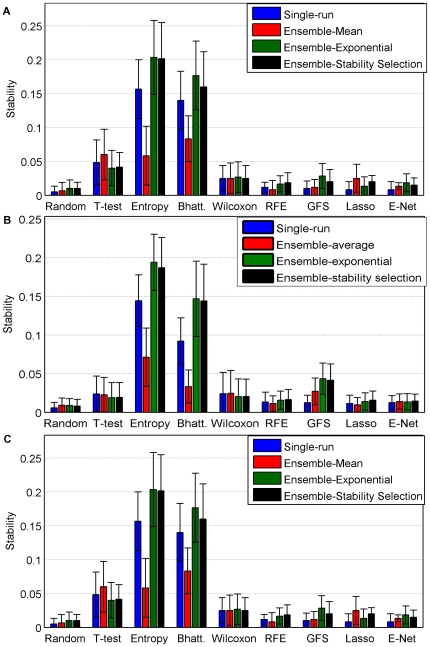

We now assess the stability of signatures created by different feature selection methods at the gene level. Figure 5 compares the stability of  -gene signatures estimated by all feature selection methods tested in this benchmark, in the three experimental settings: soft-perturbation, hard-perturbation and between-datasets settings. The results are averaged over the bootstrap replicates and the four datasets. It appears very clearly and significantly that filter methods provide more stable lists than wrappers and embedded methods. It also seems that ensemble-exponential and ensemble-stability selection yield much more stable signatures than ensemble-average. It is worth noting that a significant gain in robustness through bootstrap is only observable for relative entropy and Bhattacharyya distance. Interestingly, SVM-RFE seems to benefit from ensemble aggregation in the soft-perturbation setting, as observed by [11], but this effect seems to vanish in the more relevant hard-perturbation and between-dataset settings.

-gene signatures estimated by all feature selection methods tested in this benchmark, in the three experimental settings: soft-perturbation, hard-perturbation and between-datasets settings. The results are averaged over the bootstrap replicates and the four datasets. It appears very clearly and significantly that filter methods provide more stable lists than wrappers and embedded methods. It also seems that ensemble-exponential and ensemble-stability selection yield much more stable signatures than ensemble-average. It is worth noting that a significant gain in robustness through bootstrap is only observable for relative entropy and Bhattacharyya distance. Interestingly, SVM-RFE seems to benefit from ensemble aggregation in the soft-perturbation setting, as observed by [11], but this effect seems to vanish in the more relevant hard-perturbation and between-dataset settings.

Figure 5. Stability for a signature of size 100.

Average and standard errors are obtained over the four datasets. a) Soft-perturbation setting. b) Hard-perturbation setting. c) Between-datasets setting.

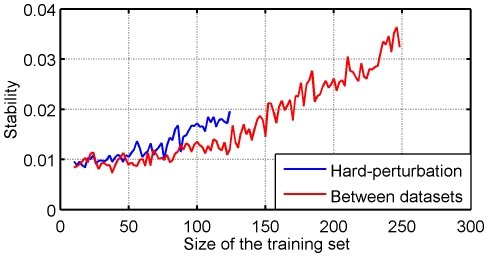

Obviously, subfigures 5B) and 5C) are very much alike while Figure 5A) stands aside. They confirm that the hard-perturbation setting is the best way to estimate the behavior of the algorithms between different studies. The larger stability observed in the between-datasets setting compared to the hard perturbation setting for some methods (e.g., t-test) is essentially due to the fact that signatures are trained on more samples in the between-dataset setting, since no split is required within a dataset. Figure 6 illustrates this difference for one feature selection method. It shows the stability of the t-test in both settings with respect to the number of samples used to estimate signatures. While both curves remain low, we observe like [5] a very strong effect of the number of samples. Interestingly, we observe that for very small sample sizes the stability in the hard-perturbation setting is a good proxy for the stability in the between-dataset setting. However, the slope of the hard-perturbation setting stability seems sharper, suggesting that the gap would stretch for larger sample sizes, should the blue curve be extrapolated. These results suggest that i) the main reason for the low stability values is really the sample size and ii) the uniformity of the cohort still plays a role for larger sizes of training sets.

Figure 6. Evolution of stability of t-test signatures with respect to the size of the training set in the hard-perturbation and the between datasets settings from GSE2034 and GSE4922.

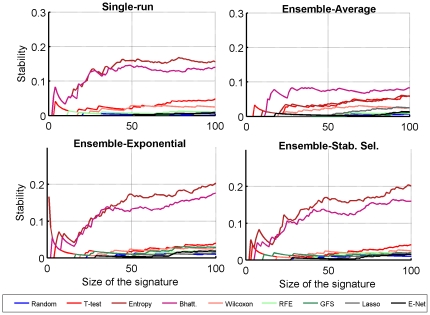

We also observe in Figure 7 that the relative stability of the different methods does not depend on the size of the signature over a wide range of values, confirming that the differences observed for signatures of size 100 reveal robust differences between the methods.

Figure 7. Stability of different methods in the between-dataset setting, as a function of the size of the signature.

Interpretability and functional stability

Even when different signatures share no or little overlap in terms of genes, it is possible that they encode the same biological processes and be useful if we can extract information about these processes from the gene lists in a robust manner. In the case of breast cancer prognostic signatures, for example, several recent studies have shown that functional analysis of the signatures can highlight coherent biological processes [6], [7], [24]–[26]. Just like stability at the gene level, it is therefore important to assess the stability of biological interpretation that one can extract from signatures.

First, we evaluate the interpretability of signatures of size  , i.e., the ability of functional analysis to bring out a biological interpretation for a signature.

, i.e., the ability of functional analysis to bring out a biological interpretation for a signature.

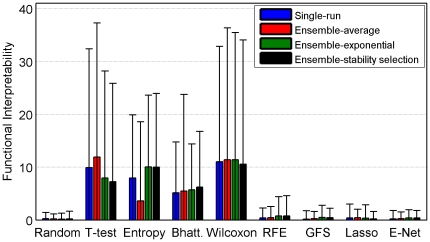

As shown on Figure 8, the four filter methods appear to be much more interpretable than wrappers/embedded methods. However, it should be pointed out that the number of significant GO terms is often zero regardless of the algorithm, leading to large error bars. Ensemble methods do not seem to enhance the interpretability of signatures.

Figure 8. GO interpretability for a signature of size 100.

Average number of GO BP terms significantly over-represented.

Second, we assess on Figure 9 the functional stability for all methods in the three settings. While the baseline stability, as obtained by random signatures, is approximatively the same regardless of the setting, we observe that, like stability at the gene level, soft- and hard-perturbation can lead to very different interpretations. This suggests again that the high functional stability obtained by several methods in the soft-perturbation setting is mainly due to the overlap in samples. Hence the hard-perturbation setting seems to be a much better proxy for the between-datasets framework.

Figure 9. GO stability for a signature of size 100 in the soft-perturbation setting.

Average and standard errors are obtained over the four datasets. A) Soft-perturbation setting. B) Hard-perturbation setting. C) Between-datasets setting.

Stability results at the functional level are overall very similar to the results at the gene level, namely, we observe that univariate filters are overall the most stable methods, and that the hard-perturbation setting returns a trustworthy estimate of the inter-datasets stability. In particular, an ANOVA procedure reveals that in the single-run settings, only signatures obtained from filters are significantly more stable than random. We also note that Ensemble-mean never improves the functional stability and that Ensemble-exponential/Ensemble stability selection return more stable signatures than single-run for Entropy and Bhattacharyya as well as for GFS and Lasso although less significantly.

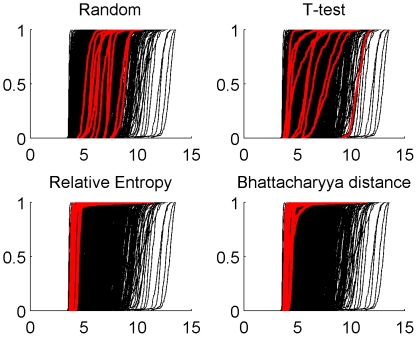

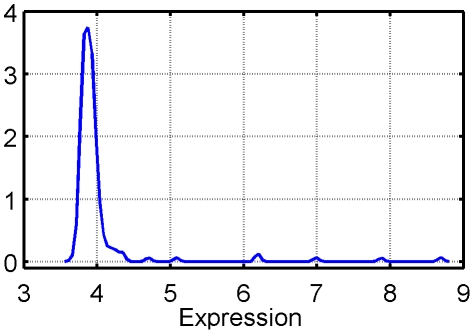

Bias issues in selection with Entropy and Bhattacharrya distance

Gene selection by relative entropy and Bhattacharyya distance is more stable but less accurate than random selection, which suggests a bias in the method which may preferably and consistently select particular genes, not necessarily very predictive. To elucidate this behavior, we investigated the genes selected by these two methods. We noticed that they tend to be systematically expressed at low levels, as shown in Figure 10, and that they barely depend on the labels, which explains the high stability but small accuracy. In fact the frequently selected genes systematically show a multimodal yet imbalanced distribution due to the presence of outliers, as illustrated on Figure 11. As soon as, by chance, one class of samples contains one or more outliers when the other class doesn't, this type of distribution is responsible for a very high variance ratio between the two classes, thus leading to a very high value of the entropy and Bhattacharyya statistics. It is therefore likely that, although stable and interpretable, the molecular signatures generated by these two methods lead to erroneous interpretation.

Figure 10. Bias in the selection through entropy and Bhattacharyya distance.

Estimated cumulative distribution functions (ECDF) of the first ten genes selected by four methods on GSE1456. They are compared to the ECDF of  randomly chosen background genes.

randomly chosen background genes.

Figure 11. Estimated distribution of the first gene selected by entropy and Bhattacharyya distance.

Discussion

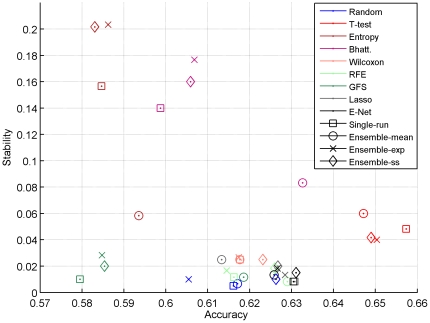

We compared a panel of 32 feature selection methods in light of two important criteria: accuracy and stability, both at the gene and at the functional level. Figure 12 summarizes the relative performance of all methods, and deserves several comments.

Figure 12. Accuracy/stability trade-off.

Accuracy versus stability for each method in the between-datasets setting. We show here the average results over the four datasets.

Taking random feature selection as a baseline, we first notice the strange behavior of gene selection by Batthacharyya distance and relative entropy: they are both more stable but less accurate than random selection. A careful investigation of the genes they select allowed us to identify that they tend to select genes with low expression levels, independently of the sample labels. This unwanted behavior can easily be fixed by pre-filtering genes with small variations, but it highlights the danger of blindly trusting a feature selection method, which in this case gives very stable and interpretable signatures.

Second, we observe that among the other methods, only elastic net, Lasso and t-test clearly seem to outperform random in terms of accuracy, and only t-test outperforms it in terms of stability. Overall, t-test gives both the best performance and the best stability. The fact that the Lasso is not stable is not surprising since, like most multivariate methods, it tries to avoid redundant genes in a signature and should therefore not be stable in data where typically many genes encode for functionally related proteins. What was less expected is that neither the elastic net, which was designed exactly to fight this detrimental property of Lasso by allowing the selection of groups of correlated genes, nor stability selection, which is supposed to stabilize the features selected by Lasso, were significantly more stable than the Lasso. In addition, we also found very unstable behaviors at the functional level. This raises questions about the relevance of these methods for gene expression data. Similarly, the behavior of wrapper methods was overall disappointing. SVM RFE and Greedy Forward Selection are neither more accurate, nor more stable or interpretable than other methods, while their computational cost is much higher. Although we observed like [11] that SVM RFE can benefit from ensemble feature selection, it remains below the t-test both in accuracy and stability.

Overall we observed that ensemble method which select features by aggregating signatures estimated on different bootstrap samples increased the stability of some methods in some cases, but did not clearly improve the best methods. Regarding the aggregation step itself, we advise against the use of ensemble-average, i.e. averaging the ranks of each gene over the bootstrapped lists, regardless of the selection method. Ensemble-stability selection or ensemble-exponential gave consistently better results. The superiority of the latter two can be explained by the high instability of the rankings, as discussed in [27].

Regarding the choice of method to train a classifier once features are selected, we observed that the best accuracy was achieved by the simplest one, namely the nearest centroids classifier, used e.g. by [10], [25]. An advantage of this classifier is that it does not require any parameter tuning, making the computations fast and less prone to overfitting.

The performance evaluation of gene selection methods must be done carefully to prevent any selection bias, which could lead to underestimated error rates as discussed in [28], [29]. This happens when, for example, a set of genes is selected on a set of samples, and its performance as a signature is then estimated by cross-validation on the same set. In our experimental protocol, we overcome this issue by ensuring that gene selection is never influenced by the test samples on which the accuracy is measured. In the 10-fold cross-validation setting, this means that genes are selected and the classification model is trained 10 times, on the 10 training sets. Alternatively, we also tested the performance of prognostic signature across datasets, where selection bias is clearly absent. We barely observed any difference between the 10-fold cross-validation setting and the setting across dataset, in terms of average accuracy, confirming that cross-validation without selection bias is a good way to estimate the generalization performance.

We noticed that evaluating the stability and the interpretability in a soft-perturbation setting may lead to untrustworthy results. The best estimation seems to be obtained in the hard-perturbation setting experiments. The lack of stability between datasets has been explained by four arguments. First data may come from different technological platforms, which is not the case here. Second and third, there are differences in experimental protocols and in patient cohorts, which is indeed the case between datasets; fourth, the small number of sample leads statistical instability. We however obtained very similar stability in the hard-perturbation setting (within each dataset) and in the inter-datasets results. This suggests that the main source of instability is not the difference in cohorts or experimental protocols, but really the statistical issue of working in high dimension with few samples.

Acknowledgments

We thank Simon Fourquet, Alexandre Hamburger, Laurent Jacob and Fabien Reyal for fruitful discussions.

Footnotes

Competing Interests: The authors have declared that no competing interests exist.

Funding: This work was supported by the French National Research Agency under grant agreement ANR-09-BLAN-0051-04 and by the European Community's Seventh Framework Programme under grant agreement FP7-246513. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1.Sotiriou C, Pusztai L. Gene-expression signatures in breast cancer. N Engl J Med. 2009;360:790–800. doi: 10.1056/NEJMra0801289. [DOI] [PubMed] [Google Scholar]

- 2.Ioannidis JPA. Microarrays and molecular research: noise discovery? Lancet. 2005;365:454. doi: 10.1016/S0140-6736(05)17878-7. [DOI] [PubMed] [Google Scholar]

- 3.Ein-Dor L, Kela I, Getz G, Givol D, Domany E. Outcome signature genes in breast cancer: is there a unique set? Bioinformatics. 2005;21:171–178. doi: 10.1093/bioinformatics/bth469. [DOI] [PubMed] [Google Scholar]

- 4.Michiels S, Koscielny S, Hill C. Prediction of cancer outcome with microarrays: a multiple random validation strategy. Lancet. 2005;365:488–492. doi: 10.1016/S0140-6736(05)17866-0. [DOI] [PubMed] [Google Scholar]

- 5.Ein-Dor L, Zuk O, Domany E. Thousands of samples are needed to generate a robust gene list for predicting outcome in cancer. Proc Natl Acad Sci USA. 2006;103:5923–5928. doi: 10.1073/pnas.0601231103. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Shen R, Chinnaiyan A, Ghosh D. Pathway analysis reveals functional convergence of gene expression profiles in breast cancer. BMC Med Genomics. 2008;1:28. doi: 10.1186/1755-8794-1-28. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Reyal F, Van Vliet M, Armstrong N, Horlings H, De Visser K, et al. A comprehensive analysis of prognostic signatures reveals the high predictive capacity of the proliferation, immune response and RNA splicing modules in breast cancer. Breast Cancer Res. 2008;10:R93. doi: 10.1186/bcr2192. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Wirapati P, Sotiriou C, Kunkel S, Farmer P, Pradervand S, et al. Meta-analysis of gene expression profiles in breast cancer: toward a uni_ed understanding of breast cancer subtyping and prognosis signatures. Breast Cancer Res. 2008;10:R65. doi: 10.1186/bcr2124. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Guyon I, Elisseeff A. An introduction to variable and feature selection. J Mach Learn Res. 2003;3:1157–1182. [Google Scholar]

- 10.Lai C, Reinders M, Van't Veer L, Wessels L, et al. A comparison of univariate and multivariate gene selection techniques for classification of cancer datasets. BMC Bioinformatics. 2006;7:235. doi: 10.1186/1471-2105-7-235. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Abeel T, Helleputte T, Van de Peer Y, Dupont P, Saeys Y. Robust biomarker identification for cancer diagnosis with ensemble feature selection methods. Bioinformatics. 2010;26:392–398. doi: 10.1093/bioinformatics/btp630. [DOI] [PubMed] [Google Scholar]

- 12.Zou H, Hastie T. Regularization and variable selection via the Elastic Net. J R Stat Soc Ser B. 2005;67:301–320. [Google Scholar]

- 13.Meinshausen N, Bühlmann P. Stability selection. J R Stat Soc Ser B. 2010;72:417–473. [Google Scholar]

- 14.Kohavi R, John G. Wrappers for feature selection. Artif Intell. 1997;97:273–324. [Google Scholar]

- 15.Guyon I, Weston J, Barnhill S, Vapnik V. Gene selection for cancer classification using support vector machines. Mach Learn. 2002;46:389–422. [Google Scholar]

- 16.Mairal J, Bach F, Ponce J, Sapiro G. Online learning for matrix factorization and sparse coding. J Mach Learn Res. 2010;11:19–60. [Google Scholar]

- 17.Tibshirani R. Regression shrinkage and selection via the lasso. J R Stat Soc Ser B. 1996;58:267–288. [Google Scholar]

- 18.Bi J, Bennett K, Embrechts M, Breneman C, Song M. Dimensionality reduction via sparse support vector machines. J Mach Learn Res. 2003;3:1229–1243. [Google Scholar]

- 19.Benjamini Y, Hochberg Y. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J R Stat Soc Ser B. 1995;57:289–300. [Google Scholar]

- 20.Wang J, Du Z, Payattakool R, Yu P, Chen C. A new method to measure the semantic similarity of GO terms. Bioinformatics. 2007;23:1274. doi: 10.1093/bioinformatics/btm087. [DOI] [PubMed] [Google Scholar]

- 21.Barrett T, Troup D, Wilhite S, Ledoux P, Rudnev D, et al. NCBI GEO: archive for highthroughput functional genomic data. Nucleic Acids Res. 2009;37:D885. doi: 10.1093/nar/gkn764. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Dai M, Wang P, Boyd A, Kostov G, Athey B, et al. Evolving gene/transcript definitions significantly alter the interpretation of GeneChip data. Nucleic Acids Res. 2005;33:e175. doi: 10.1093/nar/gni179. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Irizarry RA, Hobbs B, Collin F, Beazer-Barclay YD, Antonellis KJ, et al. Exploration, normalization, and summaries of high density oligonucleotide array probe level datas. Biostatistics. 2003;4:249–264. doi: 10.1093/biostatistics/4.2.249. [DOI] [PubMed] [Google Scholar]

- 24.Fan C, Oh D, Wessels L, Weigelt B, Nuyten D, et al. Concordance among gene-expressionbased predictors for breast cancer. N Engl J Med. 2006;355:560. doi: 10.1056/NEJMoa052933. [DOI] [PubMed] [Google Scholar]

- 25.Abraham G, Kowalczyk A, Loi S, Haviv I, Zobel J. Prediction of breast cancer prognosis using gene set statistics provides signature stability and biological context. BMC Bioinformatics. 2010;11:277. doi: 10.1186/1471-2105-11-277. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Shi W, Bessarabova M, Dosymbekov D, Dezso Z, Nikolskaya T, et al. Functional analysis of multiple genomic signatures demonstrates that classification algorithms choose phenotype-related genes. Pharmacogenomics J. 2010;10:310–23. doi: 10.1038/tpj.2010.35. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Iwamoto T, Pusztai L. Predicting prognosis of breast cancer with gene signatures: are we lost in a sea of data? Genome Med. 2010;2:81. doi: 10.1186/gm202. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Ambroise C, McLachlan G. Selection bias in gene extraction on the basis of microarray gene-expression data. Proc Natl Acad Sci USA. 2002;99:6562–6566. doi: 10.1073/pnas.102102699. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Simon R, Radmacher M, Dobbin K, McShane L. Pitfalls in the use of dna microarray data for diagnostic and prognostic classification. J Natl Cancer Inst. 2003;95:14. doi: 10.1093/jnci/95.1.14. [DOI] [PubMed] [Google Scholar]

- 30.Pawitan Y, Bjoehle J, Amler L, Borg A, Egyhazi S, et al. Gene expression profiling spares early breast cancer patients from adjuvant therapy: derived and validated in two population-based cohorts. Breast Cancer Res. 2005;7:R953–R964. doi: 10.1186/bcr1325. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Wang Y, Klijn J, Zhang Y, Sieuwerts A, Look M, et al. Gene-expression profiles to predict distant metastasis of lymph-node-negative primary breast cancers. Lancet. 2005;365:671–679. doi: 10.1016/S0140-6736(05)17947-1. [DOI] [PubMed] [Google Scholar]

- 32.Sotiriou C, Wirapati P, Loi S, Harris A, Fox S, et al. Gene expression profiling in breast cancer: understanding the molecular basis of histologic grade to improve prognosis. J Natl Cancer Inst. 2006;98:262. doi: 10.1093/jnci/djj052. [DOI] [PubMed] [Google Scholar]

- 33.Ivshina A, George J, Senko O, Mow B, Putti T, et al. Genetic reclassification of histologic grade delineates new clinical subtypes of breast cancer. Cancer Res. 2006;66:10292. doi: 10.1158/0008-5472.CAN-05-4414. [DOI] [PubMed] [Google Scholar]