Short abstract

Is no evidence better than any evidence when controlled studies are unethical?

Rigorous evidence on the health effects of social interventions is scarce1,2 despite calls for more evidence from randomised studies.3 One reason for the lack of such experimental research on social interventions may be the perception among researchers, policymakers, and others that randomised designs belong to the biomedical world and that their application to social interventions is both unethical and simplistic.4 Applying experimental designs to social interventions may be problematic but is not always impossible and is a desirable alternative to uncontrolled experimentation.3 However, even when randomised designs have been used to evaluate social interventions, opportunities to incorporate health measures have often been missed.5 For example, income supplementation is thought to be a key part of reducing health inequalities,6 but rigorous evidence to support this is lacking because most randomised controlled trials of income supplementation have not included health measures.5 Current moves to increase uptake of benefits offer new opportunities to establish the effects of income supplements on health. In attempting to design such a study, however, we found that randomised or other controlled trials were difficult to justify ethically, and our eventual design was rejected by funders.

Box 1 Attendance allowance

Attendance allowance is payable to people aged 65 or older who need frequent help or supervision and whose need has existed for at least six months

The rate payable depends on whether they need help at home or only when going out and whether they need help during the day or the evening, or both

Aims of study

A pilot study carried out by one of us (RH) showed substantial health gains among elderly people after receipt of attendance allowance. We therefore decided to pursue a full scale study of the health effects of income supplementation. The research team comprised a multidisciplinary group of academics and a representative from the Benefits Agency (TQ). Our aim was to construct a robust experimental or quasi-experimental design (in which a control group is included but not randomly allocated) that would be sensitive enough to measure the health and social effects of an attendance allowance award on frail, elderly recipients.

The intervention

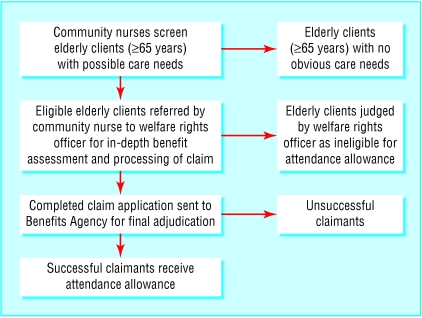

The intervention involved a primary care based programme that aimed to increase uptake of benefits. In 2001, community nurses, attached to a general practice serving the unhealthiest parliamentary constituency in the United Kingdom,7 screened their frail elderly clients for unclaimed attendance allowance (box 1). Potential underclaimants were then visited by a welfare rights officer, who carried out a benefit assessment, and the claim was then forwarded to the Benefits Agency (part of the Department of Social Security) for the final adjudication of applications (figure). This resulted in 41 clients receiving additional benefit totalling £112 892 (€160 307; $200 302), with monthly incomes increasing by £163-£243.8

Figure 1. Process of screening to promote uptake of attendance allowance. The groups represented by the boxes on the right would be unsuitable as controls because they would be systematically different from benefit recipients in terms of care needs and health status.

Figure 2. Health effects of social intervention can be hard to study. Credit: ALIX/PHANIE/REX

Outcomes

We chose change in health status measured by the SF-36 questionnaire as the main outcome variable. Explanatory variables, which recipients had linked to increased income in pilot interviews, were also incorporated. These included diet, stress levels, levels of social participation, and access to services. We intended to assess health status before receipt of the benefit and at six and 12 months afterwards. An economic evaluation was also planned.

Study design

We initially considered a randomised controlled trial. However, we encountered problems with the key elements of this design. The study designs considered and the issues raised are outlined below.

Design 1: randomisation of the intervention

Under a randomised controlled design, successful claimants would be randomised immediately after the adjudication decision by the Benefits Agency. Those in the control group would have their benefit delayed by one year, and those in the intervention group would receive the benefit immediately. This design would ensure that the health status and benefit eligibility of both groups were comparable at baseline. However, the research group considered this design unethical because of the deliberate withholding of an economic benefit, which would also be unacceptable to participants. This design was therefore abandoned.

Design 2: randomising to waiting list

The introduction of a three month waiting list between initial assessment by a nurse and assessment by the welfare rights officer provided an opportunity for random allocation to the control and intervention groups. We obtained approval from the Benefits Agency, which provides the welfare rights officer, to randomise the clients to a waiting list of a maximum of three months. Thus, elderly clients referred by the nurse to the welfare rights officer could have been randomised to receive the visit either immediately (the intervention group) or after three months (the control group).

This design would have allowed us to compare the groups at the desired time points and provided a directly comparable control group in terms of health status and benefit eligibility. However, it randomises the benefit assessment and not the intervention of interest (receipt of the benefit), and a delay of three months would probably not be long enough to detect important health differences between the two groups. More importantly, it is unlikely to be ethically acceptable to request that study participants, already assessed to be in need of an economic benefit, accept a 50% chance of delaying the application process for three months in the interests of research. We therefore rejected this design.
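The allocation step in design 2 can be illustrated with a minimal sketch. It is not a procedure the study team used (the design was rejected); it only shows what 1:1 random allocation of referred clients to an immediate or a delayed visit might look like. The function name, the group labels, the client identifiers, and the seed are all hypothetical.

```python
import random


def allocate_waiting_list(client_ids, seed=None):
    """Randomly split referred clients 1:1 into two groups.

    Hypothetical helper: 'immediate' stands for the intervention group
    (welfare rights visit straight away) and 'delayed_3_months' for the
    control group (visit after the three month waiting list).
    """
    rng = random.Random(seed)      # a fixed seed makes the list reproducible
    shuffled = list(client_ids)
    rng.shuffle(shuffled)          # random order, then cut in half
    half = (len(shuffled) + 1) // 2
    return {
        "immediate": sorted(shuffled[:half]),
        "delayed_3_months": sorted(shuffled[half:]),
    }


# Illustrative identifiers only; these are not study data.
allocation = allocate_waiting_list(range(1, 11), seed=2004)
```

Shuffling and splitting keeps the two groups equal in size, which simple coin tossing would not guarantee in a small sample. Note that what is randomised here is the timing of the assessment visit, not receipt of the benefit itself, which is the limitation described above.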

Design 3: non-randomised controlled trial

A third potential design entailed identifying a non-randomised control group from a nearby area with a similar sociodemographic composition but with no welfare rights officers. In this design, community nurses would have screened potential underclaimants in the control area, who would then have been offered a standard leaflet on how to apply for attendance allowance (a nominal intervention corresponding to “usual care”). This design would have eliminated some of the ethical concerns associated with randomisation and delaying the receipt of benefit, and would have achieved an intermediate level of internal validity by retaining a comparison with a control group. However, recruitment and retention of this control group raise problems.

The success of this design depends on participants in the control group delaying their claim for the duration of the study. Although the effectiveness of the “usual care” intervention, the leaflet, is normally poor, we considered it unlikely that this would remain the case after assessment for the study, because participants would have been made aware of their potential eligibility for the benefit. We thought it unacceptable to ask participants to delay claiming the additional benefit after drawing their attention to their eligibility.

Design 4: uncontrolled study

A before and after study of a group of benefit recipients would be more ethically acceptable, but it would be more difficult to attribute any observed change in health status to the intervention alone. We applied for research funding for a study based on this design, citing the practical and ethical difficulties in designing a randomised controlled trial, but the application was rejected mainly because of the lack of a control group. We presume that the underlying assumption was that such an uncontrolled study would be so biased as to provide no useful information.
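As a rough illustration of what a before and after design can and cannot deliver, the sketch below computes the mean within-person change in a health score and its standard error. It is an assumption about how such data might be summarised, not the analysis plan of the proposed study; the function name is hypothetical and the scores are invented toy values, not SF-36 results from any participant.

```python
from math import sqrt
from statistics import mean, stdev


def paired_change(before, after):
    """Mean within-person change (after minus before) and its standard error."""
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need at least two paired measurements")
    diffs = [a - b for b, a in zip(before, after)]
    return mean(diffs), stdev(diffs) / sqrt(len(diffs))


# Toy values for illustration only; not data from the pilot or any study.
toy_before = [40, 35, 50, 45]
toy_after = [44, 41, 52, 51]
mean_change, standard_error = paired_change(toy_before, toy_after)
```

The calculation describes how much scores changed, but with no control group it cannot separate the effect of the benefit from changes that would have happened anyway, such as the natural course of ill health in frail elderly people or regression to the mean.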

Discussion

Our initial aim was to design a randomised or controlled study to detect the health effects of income supplementation. Our failure to design such a study and to get funding for a less rigorous study poses the question of what sort of evidence is acceptable in such situations. Social interventions differ from clinical and most complex public health interventions in that changes in health are often an indirect effect rather than a primary aim of the intervention. Investigation of indirect health effects often requires choices to be made between competing values, usually health and social justice, creating a moral problem. When, as in our study, the tangible social and economic gains generated by the social interventions outweigh the theoretical possibility of marginal health effects, the moral issues are clear.

Summary points

The health effects of social interventions have rarely been assessed and are poorly understood

Studies are required to identify the possible positive or negative health impacts and the mechanisms for these health impacts

The assessment of indirect health effects of social interventions draws attention to competing values of health and social justice

Randomisation of a social intervention may be possible using natural delays, but adding delays for the sole purpose of health research is often unethical

When randomised or other controlled studies are not ethically possible, uncontrolled studies may have to be regarded as good enough

Randomisation

Although judgments about equipoise have recently been challenged,9 equipoise around the primary clinical outcome has been the ethical justification for randomising clinical interventions.10,11 Equipoise implies uncertainty around the distribution of costs and benefits between two interventions. Designing a randomised study may be simple in theory, but in cases where the equipoise is around uncertain indirect health impacts, and the primary economic or social impacts seem certain, true equipoise is unlikely and randomisation may be unethical.

Randomising a control group need not always present ethical hurdles. There may be inherent delays in rolling out a new or reformed programme across an area, or an intervention may require rationing or be subject to long waiting lists. These delays may provide ethical and pragmatic opportunities for randomisation12; indeed, randomisation may be the fairest means of rationing an intervention.13 However, delaying access to a tangible benefit for individuals who are assessed as “in need” may not be justifiable on research grounds.

Generating evidence for healthy public policy

An urgent need remains for studies of the indirect health effects of social interventions to improve our understanding of the mechanisms by which health effects can be achieved.14 Attention has already been drawn to the need for careful design of evaluations of complex public health interventions,15-17 but guidance for evaluating the indirect health impacts of social interventions may require further consideration in light of the issues outlined above. For example, when the direct effects are obvious, randomised controlled trials may be unnecessary and inappropriate.18 In health technology assessment, other study designs have an important role in development15,19 and in helping to detect secondary effects.18 For example, new drugs with established pharmacological mechanisms are investigated at increasing levels of internal and external validity before being tested in a population level randomised controlled trial. Phase I and II studies are often small and uncontrolled, but they help to establish positive and negative effects, clarify the dose-response relations, and provide the background for larger trials.15,19 In addition, once approved, drugs are closely monitored at a population level to detect previously unidentified secondary adverse effects that may outweigh the primary positive effects.18 Our pilot study was similar to a phase II study.

This matching of study designs to the level of development and knowledge of the effects of an intervention could be usefully applied to the study of social interventions. Non-randomised and uncontrolled studies could be used to shed light on the nature and possible size of health effects in practice, to illustrate mechanisms, and to establish plausible outcomes.18 Such studies may serve as a precursor to experimental studies when these are ethically justifiable and appropriate. However, when randomised studies are not possible, we may have to accept data from uncontrolled studies as good enough, given the huge gaps in our knowledge.14 We need to reconsider what sort of evidence is required, how this should be assembled and for what purpose, and the trade offs between bias and utility so that study designs that are acceptable to research participants, users, and funders can be agreed.

We thank Sally Macintyre and Graham Hart for comments on a previous draft of this article.

Contributors and sources: The initial idea for this study was developed by RH. All authors regularly attended and contributed to meetings of the study team, development of the research proposal, and writing the paper. HT, MP, and DO work on a research programme that evaluates the health impacts of social interventions. RH, GL, and TQ carried out the initial pilot work investigating attendance allowance uptake and its health impacts. NC is a lecturer in health economics and has an active research interest in designing economic evaluations of social welfare interventions.

Funding: HT and MP are employed by the Medical Research Council and funded by the Chief Scientist Office at the Scottish Executive Health Department. DO is funded by the Chief Scientist Office at the Scottish Executive Health Department.

Competing interests: None declared.

References

- 1. Macintyre S, Chalmers I, Horton R, Smith R. Using evidence to inform health policy: case study. BMJ 2001;322:222-5.

- 2. Exworthy M, Stuart M, Blane D, Marmot M. Tackling health inequalities since the Acheson inquiry. Bristol: Policy Press, 2003.

- 3. Oakley A. Experimentation and social interventions: a forgotten but important history. BMJ 1998;317:1239-42.

- 4. Macintyre S, Petticrew M. Good intentions and received wisdom are not enough. J Epidemiol Community Health 2000;54:802-3.

- 5. Connor J, Rodgers A, Priest P. Randomised studies of income supplementation: a lost opportunity to assess health outcomes. J Epidemiol Community Health 1999;53:725-30.

- 6. Acheson D. Independent inquiry into inequalities in health report. London: HMSO, 1998.

- 7. Shaw M, Dorling D, Gordon D, Davey Smith G. The widening gap: health inequalities and policy in Britain. Bristol: Policy Press, 1999.

- 8. Hoskins R, Smith L. Nurse-led welfare benefits screening in a general practice located in a deprived area. Public Health 2002;116:214-20.

- 9. Lilford RJ. Ethics of clinical trials from a bayesian and decision analytic perspective: whose equipoise is it anyway? BMJ 2003;326:980-1.

- 10. Freedman B. Equipoise and the ethics of clinical research. N Engl J Med 1987;317:141-5.

- 11. Edwards SJ, Lilford RJ, Braunholtz DA, Jackson JC, Hewison J, Thornton J. Ethical issues in the design and conduct of randomised controlled trials. Health Technol Assess 1998;2:1-132.

- 12. Campbell DT. Reforms as experiments. Am Psychol 1969;24:409-29.

- 13. Silverman WA, Chalmers I. Casting and drawing lots: a time honoured way of dealing with uncertainty and ensuring fairness. BMJ 2001;323:1467-8.

- 14. Millward L, Kelly M, Nutbeam D. Public health intervention research: the evidence. London: Health Development Agency, 2001.

- 15. Campbell M, Fitzpatrick R, Haines A, Kinmonth AL, Sandercock P, Spiegelhalter D, et al. Framework for design and evaluation of complex interventions to improve health. BMJ 2000;321:694-6.

- 16. Wolff N. Randomised trials of socially complex interventions: promise or peril? J Health Serv Res Policy 2001;6:123-6.

- 17. Rychetnik L, Frommer M, Hawe P, Shiell A. Criteria for evaluating evidence on public health interventions. J Epidemiol Community Health 2002;56:119-27.

- 18. Black N. Why we need observational studies to evaluate the effectiveness of health care. BMJ 1996;312:1215-8.

- 19. Freemantle N, Wood J, Crawford F. Evidence into practice, experimentation and quasi-experimentation: are the methods up to the task? J Epidemiol Community Health 1998;52:75-81.