Diagnostic mammography experience, more immediate feedback generated by the diagnostic process, or both may be beneficial and should be encouraged and shared among radiologists.

Abstract

Purpose:

To investigate the association between radiologist interpretive volume and diagnostic mammography performance in community-based settings.

Materials and Methods:

This study received institutional review board approval and was HIPAA compliant. A total of 117 136 diagnostic mammograms interpreted by 107 radiologists between 2002 and 2006 in the Breast Cancer Surveillance Consortium were included. Logistic regression analysis was used to estimate the adjusted effects of four volume measures (annual diagnostic volume, screening volume, total volume, and diagnostic focus [the percentage of total volume that is diagnostic]) on sensitivity, false-positive rate, and cancer detection rate. Analyses were stratified by the indication for imaging: additional imaging after screening mammography or evaluation of a breast concern or problem.

Results:

Diagnostic volume was associated with sensitivity; the odds of a true-positive finding rose until a diagnostic volume of 1000 mammograms was reached; thereafter, they either leveled off (P < .001 for additional imaging) or decreased (P = .049 for breast concerns or problems) with further volume increases. Diagnostic focus was associated with false-positive rate; the odds of a false-positive finding increased until a diagnostic focus of 20% was reached and decreased thereafter (P = .024 for additional imaging and P < .001 for breast concerns or problems with no self-reported lump). Neither total volume nor screening volume was consistently associated with diagnostic performance.

Conclusion:

Interpretive volume and diagnostic performance have complex multifaceted relationships. Our results suggest that diagnostic interpretive volume is a key determinant in the development of thresholds for considering a diagnostic mammogram to be abnormal. Current volume regulations do not distinguish between screening and diagnostic mammography, and doing so would likely be challenging.

© RSNA, 2011

Supplemental material: http://radiology.rsna.org/lookup/suppl/doi:10.1148/radiol.11111026/-/DC1

Introduction

Diagnostic mammography is a critical tool in the evaluation of abnormal screening mammograms and in the examination of women who have signs or symptoms of breast cancer. Unlike screening mammography, diagnostic mammography usually involves acquisition of additional mammographic images, is typically performed to evaluate a specific area of concern, and frequently involves a population in which cancer is approximately 10 times more prevalent than in the general population (1).

The technical quality of mammography has improved since the 1992 Mammography Quality Standards Act (2); however, relative to screening mammography, little research has been devoted to understanding variability in the interpretive performance of diagnostic mammography in community-based settings. Despite studies in which researchers considered characteristics at the level of the woman and mammogram (1,3–8), radiologist (9,10), and facility (11–13), broad unexplained variation remains in the performance of diagnostic mammography (1,9).

One possible source of variation in performance is the interpreting radiologist’s volume of work; an Institute of Medicine report called for more research in this area (2). To our knowledge, in only two studies have researchers examined interpretive volume as it relates to diagnostic mammography. Jensen et al (13) noted improved diagnostic performance in Danish clinics with at least one radiologist with a high volume of work. However, they did not examine radiologist-specific volume measures. Miglioretti et al (9) found no association between work volume and diagnostic performance, but the evaluation was limited by the use of a self-reported three-level categorical measure of total volume. Given these limitations, we undertook this study to investigate the association between radiologist interpretive volume and diagnostic mammography performance in community-based settings.

Materials and Methods

The collection of cancer incidence data used in this study was supported in part by several state public health departments and cancer registries throughout the United States. For a full description of these sources, please see http://breastscreening.cancer.gov/work/acknowledgement.html. The authors had full responsibility for designing the study; collecting, analyzing, and interpreting the data; writing the manuscript; and deciding to submit it for publication.

The Breast Cancer Surveillance Consortium (BCSC) is a population-based collaborative network of mammography registries (14,15). Each registry collects demographic and clinical data at each mammography visit at participating facilities. Cancer outcomes are ascertained via linkage to a regional or Surveillance, Epidemiology, and End Results cancer registry and to pathology databases. Analyses presented here are based on pooled data from six registries (San Francisco, Calif; North Carolina; New Hampshire; Vermont; western Washington; and New Mexico). Sites received institutional review board approval for study activities and for protection of the identities of women, physicians, and facilities. Radiologists provided informed consent. Active consent, passive permission, and/or waivers were obtained at each BCSC site from women who underwent mammography. Procedures were compliant with the Health Insurance Portability and Accountability Act (16).

Interpretive Volume

Between 2005 and 2006, 214 BCSC radiologists who interpreted mammograms at a BCSC facility completed a self-administered mailed survey (17). The survey asked radiologists to indicate all facilities where they had interpreted mammograms between 2001 and 2005. For each radiologist who interpreted mammograms at non-BCSC facilities, registry staff collected additional volume information at the non-BCSC facilities. Only radiologists with complete volume information from all facilities were included. Our final study sample included 107 radiologists; demographic characteristics and experience of these radiologists resembled those of the original survey respondents (Table E1 [online]).

We measured diagnostic volume, screening volume, and total volume when the radiologist served as the primary reader from each facility for each year between 2001 and 2005. Diagnostic volume included examinations performed for additional evaluation of a prior mammogram, short-interval follow-up, or evaluation of a breast concern or problem. Total volume included all diagnostic and screening examinations; diagnostic and screening mammograms obtained in the same woman and interpreted by the same radiologist on the same day counted as one study (18). This differs from Breast Imaging Reporting and Data System audits (19), where screening and diagnostic mammography performed on the same day independently contribute to total volume. Mammograms with missing indication (2.5%) were attributed to diagnostic or screening volume based on the observed proportion for that reader (18).

For each radiologist and year, each volume measure was summed across all facilities to obtain annual totals; diagnostic focus is the percentage of total mammograms that were considered diagnostic.
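The volume definitions above can be sketched in Python. This is a minimal illustration, not the BCSC code: the `Exam` record layout and the function name `annual_volumes` are hypothetical; a same-day screening and diagnostic pair is collapsed only in the total (the text does not say whether the type-specific counts were also collapsed); and missing indications are apportioned by each reader's observed diagnostic share across all of that reader's years, which is an assumption about the reference period.

```python
from collections import defaultdict
from typing import NamedTuple, Optional


class Exam(NamedTuple):
    """One mammogram read by a radiologist (hypothetical record layout)."""
    radiologist: str
    facility: str
    woman_id: str
    exam_date: str             # ISO date, e.g. "2004-03-15"
    indication: Optional[str]  # "screening", "diagnostic", or None if missing


def annual_volumes(exams):
    """Return {(radiologist, year): volume measures}, summed over all facilities."""
    # Observed diagnostic share per reader, used to apportion missing indications.
    known = defaultdict(lambda: [0, 0])  # reader -> [n_diagnostic, n_known]
    for e in exams:
        if e.indication is not None:
            known[e.radiologist][0] += int(e.indication == "diagnostic")
            known[e.radiologist][1] += 1

    diag = defaultdict(float)
    screen = defaultdict(float)
    studies = defaultdict(set)  # unique (woman, day) per reader-year -> total volume
    for e in exams:
        key = (e.radiologist, int(e.exam_date[:4]))  # facility is ignored: summed over
        if e.indication == "diagnostic":
            diag[key] += 1
        elif e.indication == "screening":
            screen[key] += 1
        else:
            n_diag, n_known = known[e.radiologist]
            share = n_diag / n_known if n_known else 0.0  # assumption if no known exams
            diag[key] += share
            screen[key] += 1 - share
        # Same woman, same reader, same day -> one study in the total.
        studies[key].add((e.woman_id, e.exam_date))

    out = {}
    for key, s in studies.items():
        total = len(s)
        out[key] = {
            "diagnostic": diag[key],
            "screening": screen[key],
            "total": total,
            "diagnostic_focus_pct": 100 * diag[key] / total,
        }
    return out
```

With this literal reading, diagnostic plus screening volume can exceed total volume in a year that contains same-day pairs.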

Interpretive Performance

We included diagnostic mammograms that had indications for additional imaging of an abnormality that was detected at screening or that were obtained to evaluate a breast concern or problem (1). Mammograms obtained in women with a history of breast cancer were excluded.

Examination results were considered positive or negative on the basis of the final Breast Imaging Reporting and Data System assessment at the end of imaging work-up (up to 90 days after acquisition of the index diagnostic mammogram and before biopsy) with use of standard BCSC definitions (20). Women were considered to have breast cancer if a diagnosis of invasive carcinoma or ductal carcinoma in situ was made within 1 year after mammography.

Performance measures included sensitivity, false-positive rate (FPR) per 100 mammograms, and cancer detection rate (CDR) per 1000 mammograms (15).
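As a hedged sketch of how the three measures relate to one another, the function below computes them from (final assessment, cancer status) pairs. It assumes each mammogram has already been classified as positive or negative under the BCSC definitions and linked to a cancer diagnosis within 1 year; the function name and record format are hypothetical.

```python
from typing import Iterable, Tuple


def performance(records: Iterable[Tuple[bool, bool]]):
    """Sensitivity, FPR per 100, and CDR per 1000 from (positive, cancer) pairs.

    positive: final assessment at the end of work-up was positive.
    cancer: invasive carcinoma or DCIS diagnosed within 1 year.
    """
    tp = fp = n_cancer = n_no_cancer = n = 0
    for positive, cancer in records:
        n += 1
        if cancer:
            n_cancer += 1
            if positive:
                tp += 1  # true-positive assessment
        else:
            n_no_cancer += 1
            if positive:
                fp += 1  # false-positive assessment
    nan = float("nan")
    return {
        "sensitivity": tp / n_cancer if n_cancer else nan,
        "fpr_per_100": 100 * fp / n_no_cancer if n_no_cancer else nan,
        "cdr_per_1000": 1000 * tp / n if n else nan,  # detected cancers / all exams
    }
```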

Statistical Analyses

All analyses were stratified by indication. Volume measures were analyzed in relation to performance in the subsequent year. For instance, 2005 volume was linked to 2006 performance (18).
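The one-year lag can be expressed as a simple join. In this sketch (hypothetical names), `volumes` is keyed by (radiologist, year), and mammograms whose reader has no volume record for the preceding year are dropped; that exclusion rule is an assumption, not a documented BCSC procedure.

```python
def link_prior_year_volume(volumes, exams):
    """Attach each reader's year t-1 volume measures to a year t mammogram.

    volumes: {(radiologist, year): dict of measures}
    exams: iterable of dicts with at least "radiologist" and "year" keys.
    """
    linked = []
    for exam in exams:
        prior = volumes.get((exam["radiologist"], exam["year"] - 1))
        if prior is not None:  # assumption: drop exams without prior-year volume
            linked.append({**exam, **prior})
    return linked
```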

We examined the distributions of radiologist- and mammogram-level characteristics with categorized volume measures. To describe overall performance, we calculated performance measures for each radiologist collapsing across all examinations and years. We examined unadjusted associations by estimating performance by using all mammograms within each categorized volume stratum and calculating 95% confidence intervals (CIs) (21).

The observed volume distributions were heavily skewed, with sparse information at very high volumes. To ensure stable estimation, we restricted our modeling to mammograms with annual average volume measures in the following ranges: total volume, 480–7000 mammograms; screening volume, 480–6000 mammograms; diagnostic volume, 0–2500 mammograms; and diagnostic focus, 1%–40%.

To estimate the adjusted effect of volume on diagnostic performance, we fit separate logistic regression models for each performance measure. Models for sensitivity included mammograms obtained in patients in whom cancer was diagnosed within 1 year after mammography, with final assessment (positive or negative) as the outcome. Models for FPR also used the final assessment as the outcome but were based on mammograms obtained in patients in whom no cancer was diagnosed within 1 year after mammography. Models for CDR included all mammograms and used as the outcome whether a positive final assessment was followed by a cancer diagnosis within 1 year. All models were adjusted for BCSC registry, for patient-level characteristics (age, family history, self-reported presence of a lump, and time since last mammographic examination), and for radiologist-level characteristics (years of experience with mammogram interpretation and percentage of time spent in breast imaging). Breast density was not included because of the high rate of missing data.
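The three model populations and outcomes described above can be sketched as follows. The field names (`positive`, `cancer`) and the covariate names in `ADJUSTMENT_COVARIATES` are hypothetical labels for the variables in the text; the logistic regression fit itself is omitted.

```python
# Hypothetical column names for the adjustment variables listed in the text.
ADJUSTMENT_COVARIATES = [
    "registry", "age", "family_history", "self_reported_lump",
    "time_since_last_mammogram", "years_interpreting", "pct_time_breast_imaging",
]


def model_datasets(mammograms):
    """Split mammograms into the analysis sets for each performance measure.

    Each mammogram is a dict with booleans "positive" (final assessment) and
    "cancer" (diagnosis within 1 year), plus covariates.
    Returns {measure: list of (outcome, mammogram)}.
    """
    # Sensitivity: cancer cases only; outcome = positive final assessment.
    sens = [(m["positive"], m) for m in mammograms if m["cancer"]]
    # FPR: non-cancer cases only; outcome = positive final assessment.
    fpr = [(m["positive"], m) for m in mammograms if not m["cancer"]]
    # CDR: all mammograms; outcome = positive assessment followed by cancer.
    cdr = [(m["positive"] and m["cancer"], m) for m in mammograms]
    return {"sensitivity": sens, "fpr": fpr, "cdr": cdr}
```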

To avoid making restrictive assumptions about the shape of the volume-performance relationships, we used natural cubic splines with one knot at the midpoint of the prespecified volume ranges (22). To standardize interpretations, we set the following reference levels: 2000 mammograms for total volume, 1500 mammograms for screening volume, 1000 mammograms for diagnostic volume, and 20% for diagnostic focus. The tables show estimated adjusted odds ratios and 95% CIs; Appendix E1 (online) provides additional detail.
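One way to build a natural cubic spline with a single interior knot is the truncated power basis described in The Elements of Statistical Learning (Hastie, Tibshirani, and Friedman). The sketch below assumes boundary knots at the ends of the prespecified range and the interior knot at its midpoint. It spans the same function space as R's `splines::ns()` with those knots but uses a different parametrization, so individual coefficients are not comparable; the adjusted odds ratio for volume x relative to the reference level is exp(β · [B(x) - B(ref)]). Function names and coefficients are hypothetical.

```python
import math


def ns_basis(x, lo, hi):
    """Natural cubic spline basis (no intercept) with boundary knots lo, hi
    and one interior knot at their midpoint, in truncated-power form."""
    k1, k2, k3 = lo, (lo + hi) / 2, hi

    def pos3(u):
        return max(u, 0.0) ** 3

    def d(k):
        return (pos3(x - k) - pos3(x - k3)) / (k3 - k)

    # Linear term plus one nonlinear term; the fit is linear beyond lo and hi.
    return [x, d(k1) - d(k2)]


def odds_ratio(x, ref, beta, lo, hi):
    """Adjusted odds ratio comparing volume x with the reference level ref."""
    bx, br = ns_basis(x, lo, hi), ns_basis(ref, lo, hi)
    return math.exp(sum(b * (u - v) for b, u, v in zip(beta, bx, br)))
```

For diagnostic volume measured in thousands of mammograms (range 0 to 2.5, reference 1.0), `odds_ratio(1.5, 1.0, (0.4, -0.9), 0.0, 2.5)` would give the odds ratio comparing 1500 with 1000 mammograms under those made-up coefficients. Rescaling volume to thousands keeps the cubed terms small.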

We hypothesized that a self-reported lump could modify the effect of volume on interpretive performance for mammograms that showed a breast concern or problem. Thus, we tested for an interaction between self-reported lump and each volume measure. For volume and performance combinations with significant interactions, we present lump stratum–specific results; otherwise, we present results from the main effects–only model (with adjustment for self-reported lump).
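A model with a lump-by-volume interaction can be written as below. This is a sketch of a standard specification, not an equation given by the authors: Y is the binary outcome, v the volume measure, L an indicator of self-reported lump, z the remaining adjustment covariates, and B_1, B_2 the two basis functions of a natural cubic spline with one interior knot (assuming boundary knots at the ends of the prespecified range).

$$
\operatorname{logit} \Pr(Y = 1 \mid v, L, \mathbf{z})
  = \alpha + \sum_{j=1}^{2} \beta_j B_j(v) + \delta L
  + \sum_{j=1}^{2} \gamma_j \, L \, B_j(v) + \boldsymbol{\theta}^{\top} \mathbf{z}
$$

$$
\mathrm{OR}_L(v) = \exp\Big\{ \sum_{j=1}^{2} (\beta_j + \gamma_j L)\,\big[B_j(v) - B_j(v_{\mathrm{ref}})\big] \Big\},
\qquad H_0\colon \gamma_1 = \gamma_2 = 0 .
$$

Under this formulation, the interaction test corresponds to H0; when it is not rejected, the γ terms are dropped and the main effects–only model, still adjusted for L, gives a single odds ratio curve for both lump strata.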

Generalized estimating equations were used to estimate model parameters (23,24). To account for within-radiologist correlation, we adopted a working exchangeable correlation structure and calculated standard errors, two-sided P values, and 95% CIs with the robust sandwich estimator (23). Sensitivity analyses included individually excluding each registry to ensure none overly influenced results, not adjusting for radiologist-level characteristics, and adjusting for breast density. Statistical significance was based on two-sided P values at the .05 level. All analyses were performed by using R software (version 2.12.0; R Foundation for Statistical Computing, Vienna, Austria) (25).
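The table footnotes report a two-sided omnibus P value for the overall association from the spline model. Assuming this is a Wald test that both spline coefficients equal zero, computed with the robust sandwich covariance from the GEE fit, it can be sketched as below. Two coefficients correspond to a natural cubic spline with one interior knot and two boundary knots (the boundary placement is an assumption). With 2 degrees of freedom the chi-square survival function is exactly exp(-W/2), so no statistics library is needed; the function name and inputs are hypothetical.

```python
import math


def wald_omnibus_2df(beta, cov):
    """Wald test that both spline coefficients are zero.

    beta: (b1, b2) spline coefficients.
    cov: 2x2 robust (sandwich) covariance matrix ((a, b), (c, d)).
    Returns (W, P), where P = exp(-W / 2) for a chi-square with 2 df.
    """
    (a, b), (c, d) = cov
    det = a * d - b * c
    if a <= 0 or det <= 0:  # positive-definiteness check for a symmetric 2x2
        raise ValueError("covariance matrix must be positive definite")
    inv = ((d / det, -b / det), (-c / det, a / det))  # 2x2 inverse
    b1, b2 = beta
    w = (b1 * (inv[0][0] * b1 + inv[0][1] * b2)
         + b2 * (inv[1][0] * b1 + inv[1][1] * b2))  # beta' V^-1 beta
    return w, math.exp(-w / 2)
```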

Results

Our study included 107 radiologists who interpreted 117 136 diagnostic mammograms (46 369 obtained because additional imaging was required, 70 767 obtained because of a breast concern or problem) obtained in 98 667 women. Most radiologists (86%) contributed at least 3 years of volume data, performance data, or both (50% contributed 5 years of volume data), for a total of 426 reader-years.

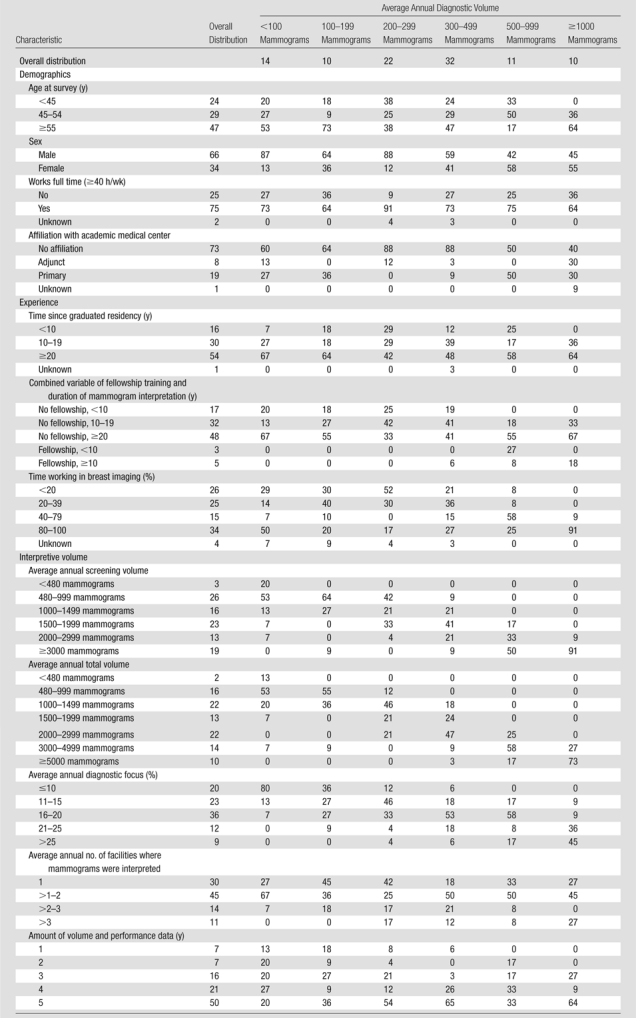

The median age of radiologists was 54 years (age range, 37–72 years); most radiologists were men (66%), worked full time (75%), and had interpreted mammograms for at least 10 years (84%) (Table 1). Approximately 34% of the radiologists spent most (80%–100%) of their time working in breast imaging. The median average annual diagnostic volume was 315 mammograms; 15 (14%) of the 107 radiologists interpreted fewer than 100 diagnostic mammograms per year on average, and 11 (10%) interpreted at least 1000. Readers with the highest average annual diagnostic volume (≥1000 mammograms) were more likely to be women (55%, compared with 34% overall), to spend more time in breast imaging (91% spent 80%–100% of their time in breast imaging, compared with 34% overall), and to have higher total volume, screening volume, and diagnostic focus (Table 1).

Table 1.

Distribution of Radiologist Characteristics across 107 Radiologists by Average Annual Diagnostic Volume

Note.—All data are percentages. Unknown percentages are based on all 107 radiologists; remaining percentages are based on the number with the characteristic known.

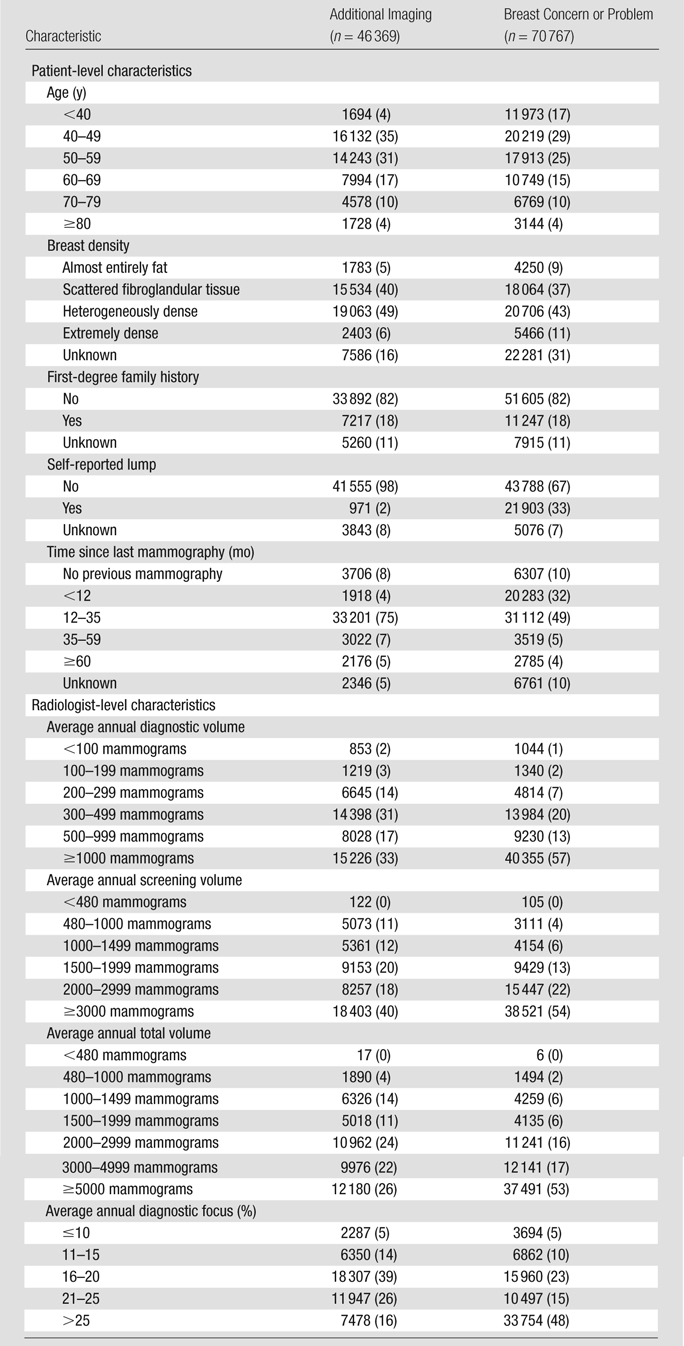

Table 2 presents characteristics of women who underwent mammography for additional imaging or to evaluate a breast concern or problem; Tables E2 and E3 (online) present this information stratified by diagnostic volume. A greater proportion of mammograms obtained to evaluate a breast concern or problem were in women younger than 40 years (17%, compared with 4% for additional imaging mammography). Of the 42 526 additional imaging mammograms with nonmissing symptom information, only 2% were obtained in women who reported a lump, compared with 33% of the 65 691 breast concern or problem mammograms with nonmissing symptom information. Among mammograms with nonmissing self-reported family history, 18% were obtained in women who reported a positive family history.

Table 2.

Distribution of Patient and Radiologist Characteristics for Mammograms Obtained for Additional Imaging and for Breast Concern or Problem

Note.—Data are numbers of mammograms, and data in parentheses are percentages. Unknown percentages are based on all mammograms; remaining percentages are based on mammograms with the characteristic known.

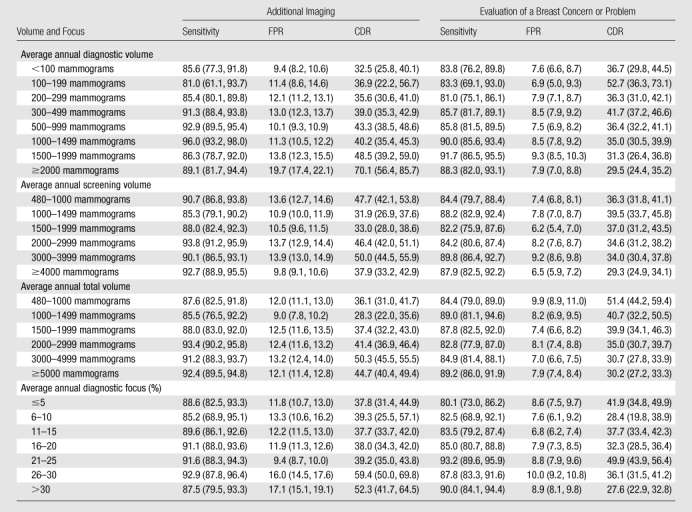

Table 3 presents unadjusted performance estimates across categorized volume measures. No consistent patterns emerged for additional imaging mammography. For mammography performed because of a breast concern or problem, sensitivity increased as average annual diagnostic volume and diagnostic focus increased. Further, CDR decreased with increased average annual total volume, screening volume, and diagnostic volume.

Table 3.

Unadjusted Diagnostic Performance Measure Estimates across Categorized Volume Measures, Stratified by Indication

Note.—Data are estimates, and data in parentheses are 95% CIs. Estimates were obtained by collapsing across all mammograms within the volume stratum, and 95% CIs were calculated by inverting a likelihood ratio test. FPR is rate per 100 mammograms, and CDR is rate per 1000 mammograms.

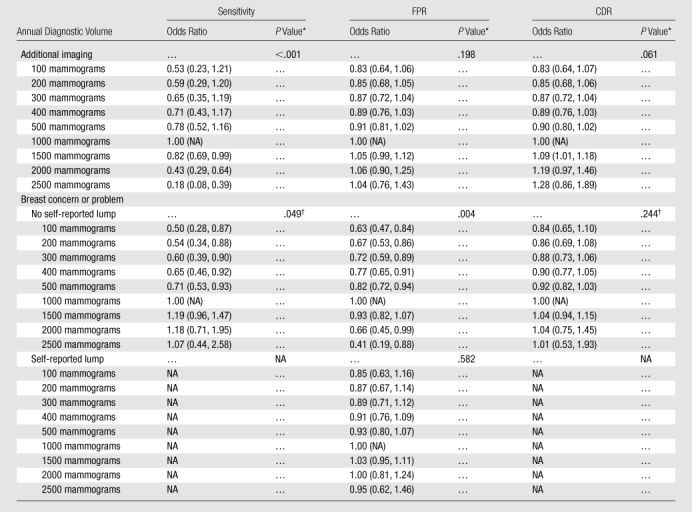

In adjusted analyses, diagnostic volume was significantly (P < .001) associated with sensitivity for additional imaging mammography (Table 4, Fig E4 [online]); the estimated odds ratios were bell shaped, with an apex at 1000 mammograms. When we compared diagnostic volumes of 1500 and 1000 mammograms, the estimated odds ratio was 0.82 (95% CI: 0.69, 0.99); that is, the odds of a true-positive assessment for an additional imaging mammogram interpreted by a radiologist with a diagnostic volume of 1500 mammograms are approximately 18% lower than those for a radiologist with a diagnostic volume of 1000 mammograms. When we compared a diagnostic volume of 2000 mammograms with a diagnostic volume of 1000 mammograms, the estimated odds ratio for sensitivity decreased to 0.43 (95% CI: 0.29, 0.64). When we decreased diagnostic volume to 300 mammograms, the estimated odds ratio for sensitivity compared with 1000 mammograms decreased to 0.65 (95% CI: 0.35, 1.19). There was insufficient evidence of an association between diagnostic volume and FPR (P = .20) or CDR (P = .061).
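An odds ratio is not a ratio of probabilities, which matters when sensitivity is high. The helpers below (hypothetical names) convert an odds ratio into a percentage change in odds, as in the "approximately 18% lower" statement, and into the probability implied at a reference level. The reference sensitivity of 0.85 used in the usage note is made up for illustration; it is not a value reported in this study.

```python
def pct_change_in_odds(odds_ratio):
    """Percentage change in odds implied by an odds ratio (negative = lower)."""
    return 100 * (odds_ratio - 1)


def prob_from_odds_ratio(p_ref, odds_ratio):
    """Probability obtained by applying an odds ratio to a reference probability."""
    odds = odds_ratio * p_ref / (1 - p_ref)  # convert to odds, then scale
    return odds / (1 + odds)                 # back to the probability scale
```

With the hypothetical baseline, `prob_from_odds_ratio(0.85, 0.82)` is about 0.823, so 18% lower odds corresponds to a sensitivity roughly 2.7 percentage points lower.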

Table 4.

Estimated Adjusted Odds Ratio Associations between Annual Diagnostic Volume and Diagnostic Performance

Note.—Unless otherwise indicated, data are odds ratios, and data in parentheses are 95% CIs. FPR is rate per 100 mammograms, and CDR is rate per 1000 mammograms. For mammography performed to evaluate a breast concern or problem, an interaction with self-reported presence of a lump was evaluated. When the interaction was not significant, a main effects–only model was fit, and lump stratum–specific estimates were the same. When the interaction was significant, lump stratum–specific estimates differed. Results correspond to Figure E4 (online). Sensitivity and CDR estimates are not shown separately for women with a self-reported lump because the interaction with lump was not significant; they equal those shown for women with no self-reported lump. NA = not applicable.

Two-sided omnibus P value for overall association based on natural cubic spline model.

P value for main effects–only model when an interaction between volume and presence of self-reported lump was not significant.

For mammography performed to evaluate a breast concern or problem, we found no interaction between diagnostic volume and self-reported lump for sensitivity (P = .27) or CDR (P = .20); for FPR, the interaction was significant (P = .011). On the basis of a main effects–only model, the estimated odds of a true-positive assessment increased with diagnostic volume until approximately 1500 mammograms and then reached a plateau (P = .049) (Table 4, Figure E4 [online]). Given the significant interaction, results for FPR were stratified according to self-reported lump. For women who did not report a lump, diagnostic volume was associated with FPR (P = .004); the estimated association was bell shaped, increasing to an apex at approximately 1100 mammograms and then decreasing. For women who reported a lump, we detected no association (P = .58). CDR showed no evidence of an association with diagnostic volume for mammography performed because of a breast concern or problem (P = .24).

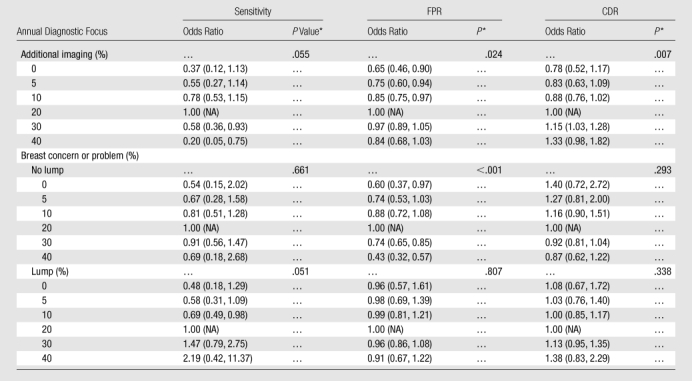

For additional imaging mammography, diagnostic focus exhibited a marginal association with sensitivity (P = .055) and significant associations with FPR (P = .024) and CDR (P = .007) (Table 5, Fig E5 [online]). For both sensitivity and FPR, the estimated odds ratio curve was bell shaped, increasing to apexes at 18% and 23%, respectively, and then decreasing. When we compared diagnostic foci of 10% and 20%, the estimated adjusted odds ratio for FPR was 0.85 (95% CI: 0.75, 0.97); that is, the odds of a false-positive assessment for an additional imaging mammogram interpreted by a radiologist with a diagnostic focus of 10% were approximately 15% lower than those for a radiologist with a diagnostic focus of 20%. When we compared diagnostic foci of 5% and 20%, the odds ratio for FPR decreased to 0.75 (95% CI: 0.60, 0.94). For CDR, the estimated odds ratio increased linearly, from 0.83 for a diagnostic focus of 5% to 1.33 for a focus of 40%, relative to a focus of 20%.

Table 5.

Estimated Adjusted Odds Ratio Associations between Annual Diagnostic Focus and Diagnostic Performance

Note.—Unless otherwise indicated, data are odds ratios, and data in parentheses are 95% CIs. FPR is rate per 100 mammograms, and CDR is rate per 1000 mammograms. For breast concern or problem mammography, an interaction with self-reported presence of a lump was evaluated and found to be significant; hence, lump stratum–specific results are presented. Results correspond to Figure E5 (online).

Two-sided omnibus P value for overall association based on natural cubic spline model.

For all three performance measures, the interaction between diagnostic focus and self-reported lump in mammography performed to address a breast concern or problem was significant (P = .020, P < .001, and P = .027 for sensitivity, FPR, and CDR, respectively). Among women who did not report a lump, the associations between diagnostic focus and both sensitivity and FPR were bell shaped (Table 5, Fig E5 [online]); only the association with FPR was significant (P < .001). For CDR, the estimated odds ratio decreased linearly, from 1.27 for a diagnostic focus of 5% to 0.87 for a focus of 40%, relative to a focus of 20%; however, this association was not significant (P = .293). Among women who reported a lump, diagnostic focus was marginally associated with sensitivity (P = .051); relative to a focus of 20%, the estimated odds ratio increased from 0.58 (95% CI: 0.31, 1.09) at a focus of 5% to 1.47 (95% CI: 0.79, 2.75) at a focus of 30%.

Although not significant, there was borderline evidence of an increasing association between screening volume and FPR for both additional imaging mammography (P = .098) and breast concern or problem mammography (P = .076) (Table E5, Fig E6 [online]). There were nonlinear associations between screening volume and sensitivity among women who underwent mammography to address a breast concern or problem. In women who did not report a lump, the estimated association was bell shaped, with an apex at approximately 3000 mammograms (P = .003); in women who did report a lump, the estimated association increased until approximately 4000 mammograms and then reached a plateau (P = .148). For CDR, we found no evidence of an association with screening volume. Neither indication showed consistent evidence of a relationship between total volume and diagnostic performance (Table E6 [online]).

The clinical interpretation of results did not change after we completed the sensitivity analyses described previously.

Discussion

We found that diagnostic volume and diagnostic focus were most consistently associated with performance; neither screening volume nor total volume was consistently associated with performance. For diagnostic volume and diagnostic focus, the associations were approximately quadratic: Radiologists with either low volume/focus or high volume/focus had the lowest FPR; radiologists with an annual diagnostic volume of approximately 1000 mammograms or a diagnostic focus of approximately 20% had the highest FPR. In parallel, radiologists with either low volume/focus or high volume/focus had the lowest sensitivity, indicating an important tradeoff between the two performance measures. These results generally held for both additional imaging and breast concern or problem diagnostic mammography. In contrast, CDR for additional imaging mammography increased linearly as both diagnostic volume and diagnostic focus increased; no relationship between volume and CDR was found for breast concern or problem mammography.

The mechanisms by which radiologists acquire experience and translate it into improved diagnostic mammography performance are likely complex. Particularly challenging is the fact that both accrual of experience, for which we view radiologist interpretive volume as a measurable surrogate, and diagnostic performance are multifaceted. That both annual diagnostic volume and diagnostic focus exhibited the strongest evidence of an association suggests that a key determinant in developing thresholds for considering diagnostic examination findings abnormal is the accrual of diagnostic mammography experience, as opposed to experience in the interpretation of screening mammograms.

Both diagnostic volume and diagnostic focus had strong nonlinear relationships with performance: radiologists at the extremes of the volume range had worse performance for sensitivity and better performance for FPR. This may reflect changing mechanisms by which increasing diagnostic experience may influence thresholds for recommending biopsy of a suspicious lesion; as diagnostic volume or diagnostic focus increases, radiologists are exposed to more mammograms obtained in women who ultimately receive a breast cancer diagnosis. One hypothesis is that radiologists benefit from this because they learn to recognize different abnormalities as benign or malignant and then, because they have experience interpreting a broader range of lesions, sensitivity increases and FPR decreases. However, the incremental learning benefit may diminish with volume, leading to a plateau effect, or it may ultimately be detrimental as workload increases to very high levels.

We found evidence of significant interaction between the presence of a lump and both diagnostic volume and diagnostic focus for FPR in mammography performed because of a breast concern or problem. Before the study, we hypothesized that the skill required to evaluate a lump may differ from that required for other concerns and that the accrual of experience may be more beneficial for interpreting mammograms obtained in women without a self-reported lump, because these examinations are generally more ambiguous.

Buist et al (18) recently reported on a comprehensive study of the association between interpretive volume and screening performance. Radiologists with higher annual volume had clinically and statistically significantly lower FPR with similar sensitivity when compared with radiologists with lower volume. In addition, radiologists with a greater screening focus had lower sensitivity, FPR, and CDR. Food and Drug Administration regulations state that U.S. physicians who interpret mammograms must have interpreted 960 mammograms within the previous 24 months, and the Food and Drug Administration does not distinguish between screening and diagnostic mammography for these volume requirements. As we have found, the relationships between continuing experience and diagnostic performance are complex, which complicates regulations based simply on a lower limit for continuing experience. Further, only 33% of diagnostic mammograms for which the radiologist-given indication was a breast concern or problem were obtained in women who had reported a breast lump. This suggests that some examinations performed in asymptomatic or quasisymptomatic women are routinely being classified as diagnostic rather than screening examinations. Regulatory thresholds for the number of diagnostic mammograms could therefore be easily circumvented by lack of adherence to strict definitions of what constitutes a screening examination, rendering such thresholds ineffective.

This study had some limitations. These are observational data, and we cannot rule out the possibility of residual confounding or differences in case mix across radiologists and time. Further, because of sparse data, we restricted our analyses to prespecified volume ranges. Although the observed associations may be validly interpreted within these ranges, the results may not generalize to radiologists with very high volumes.

This study also had a number of important strengths. Detailed information on a large sample of mammograms from the BCSC permitted comprehensive characterization of volume and its association with diagnostic interpretive performance in community-based settings. Our volume data were verified by radiology facility records; therefore, we did not need to rely on self-reported estimates, and we were able to control for a number of well-known correlates of diagnostic performance. The prospective linkage of radiologist-specific volume measures with performance in the subsequent year strengthens the interpretation of our results. Finally, the coverage of the BCSC across the United States ensures that our results are representative of the associations in community-based settings (15).

This study suggests that diagnostic mammography experience, more immediate feedback generated during the diagnostic process, or both may be beneficial and should be encouraged and shared among radiologists. Because of the multifaceted nature of interpretive volume and diagnostic performance, their relationship is challenging to characterize, and this difficulty limits the practicality of setting regulatory thresholds for diagnostic mammographic interpretation.

Advances in Knowledge.

Neither annual total volume nor screening volume was consistently associated with diagnostic performance.

Annual diagnostic volume exhibited a strong association with sensitivity of diagnostic mammography performed for additional imaging or for evaluation of breast concerns or problems (P < .001 and P = .049, respectively).

For diagnostic mammography performed to evaluate breast concerns or problems, significant interactions between diagnostic focus and the presence of a self-reported lump were found for each performance measure (P = .020, P < .001, and P = .027 for sensitivity, false-positive rate, and cancer detection rate, respectively).

False-positive rate exhibited an increasing association with diagnostic focus up to a focus of 20%; beyond this apex, false-positive rate decreased with increasing diagnostic focus.

No consistent association was found between any volume measure and cancer detection rate.

Implication for Patient Care.

Our results suggest that diagnostic interpretive volume is a key determinant in developing thresholds for considering a diagnostic mammogram to be abnormal; however, because of the complex and multifaceted nature of interpretive volume and diagnostic performance, it is difficult to characterize their relationship, which limits the practicality of setting regulatory thresholds for diagnostic mammographic interpretation.

Disclosures of Potential Conflicts of Interest: S.H. No potential conflicts of interest to disclose. D.S.M.B. No potential conflicts of interest to disclose. D.L.M. No potential conflicts of interest to disclose. M.L.A. No potential conflicts of interest to disclose. P.A.C. No potential conflicts of interest to disclose. T.O. No potential conflicts of interest to disclose. B.M.G. No potential conflicts of interest to disclose. K.K. No potential conflicts of interest to disclose. R.D.R. No potential conflicts of interest to disclose. B.C.Y. No potential conflicts of interest to disclose. J.G.E. No potential conflicts of interest to disclose. S.H.T. No potential conflicts of interest to disclose. R.A.S. No potential conflicts of interest to disclose. E.A.S. No potential conflicts of interest to disclose.

Supplementary Material

Acknowledgments

We thank Rebecca Hughes, BA, for editorial assistance and the participating radiologists, women, and mammography facilities for the data they have provided for this study. A list of the BCSC investigators and procedures for requesting BCSC data for research are provided at http://breastscreening.cancer.gov/.

Received June 7, 2011; revision requested July 24; revision received July 27; accepted August 3; final version accepted August 15.

Funding: This research was supported by the National Cancer Institute (grants 1R01 CA107623, 1K05 CA104699, U01CA63740, U01CA86076, U01CA86082, U01CA63736, U01CA70013, U01CA69976, U01CA63731, and U01CA70040).

Supported by the American Cancer Society, the Longaberger Company’s Horizon of Hope Campaign (SIRGS-07-271-01, SIRGS-07-272-01, SIRGS-07-274-01, SIRGS-07-275-01, SIRGS-06-281-01, ACS A1-07-362), the Agency for Healthcare Research and Quality (HS-10591), and the Breast Cancer Stamp Fund.

Abbreviations:

- BCSC = Breast Cancer Surveillance Consortium

- CDR = cancer detection rate

- CI = confidence interval

- FPR = false-positive rate

References

1. Sickles EA, Miglioretti DL, Ballard-Barbash R, et al. Performance benchmarks for diagnostic mammography. Radiology 2005;235(3):775–790.

2. Nass S, Ball J; Committee on Improving Mammography Quality Standards. Improving breast imaging quality standards. Washington, DC: National Academies Press, 2005.

3. Barlow WE, Lehman CD, Zheng Y, et al. Performance of diagnostic mammography for women with signs or symptoms of breast cancer. J Natl Cancer Inst 2002;94(15):1151–1159.

4. Burnside ES, Sickles EA, Sohlich RE, Dee KE. Differential value of comparison with previous examinations in diagnostic versus screening mammography. AJR Am J Roentgenol 2002;179(5):1173–1177.

5. Flobbe K, van der Linden ES, Kessels AG, van Engelshoven JM. Diagnostic value of radiological breast imaging in a non-screening population. Int J Cancer 2001;92(4):616–618.

6. Houssami N, Irwig L, Simpson JM, McKessar M, Blome S, Noakes J. The contribution of work-up or additional views to the accuracy of diagnostic mammography. Breast 2003;12(4):270–275.

7. Houssami N, Irwig L, Simpson JM, McKessar M, Blome S, Noakes J. The influence of clinical information on the accuracy of diagnostic mammography. Breast Cancer Res Treat 2004;85(3):223–228.

8. Yankaskas BC, Haneuse S, Kapp JM, et al. Performance of first mammography examination in women younger than 40 years. J Natl Cancer Inst 2010;102(10):692–701.

9. Miglioretti DL, Smith-Bindman R, Abraham L, et al. Radiologist characteristics associated with interpretive performance of diagnostic mammography. J Natl Cancer Inst 2007;99(24):1854–1863.

10. Sickles EA, Wolverton DE, Dee KE. Performance parameters for screening and diagnostic mammography: specialist and general radiologists. Radiology 2002;224(3):861–869.

11. Goldman LE, Walker R, Miglioretti DL, Smith-Bindman R, Kerlikowske K; National Cancer Institute Breast Cancer Surveillance Consortium. Accuracy of diagnostic mammography at facilities serving vulnerable women. Med Care 2011;49(1):67–75.

12. Jackson SL, Taplin SH, Sickles EA, et al. Variability of interpretive accuracy among diagnostic mammography facilities. J Natl Cancer Inst 2009;101(11):814–827.

13. Jensen A, Vejborg I, Severinsen N, et al. Performance of clinical mammography: a nationwide study from Denmark. Int J Cancer 2006;119(1):183–191.

14. Ballard-Barbash R, Taplin SH, Yankaskas BC, et al. Breast Cancer Surveillance Consortium: a national mammography screening and outcomes database. AJR Am J Roentgenol 1997;169(4):1001–1008.

15. National Cancer Institute Breast Cancer Surveillance Consortium. U.S. National Institutes of Health Web site. http://breastscreening.cancer.gov/. Updated February 24, 2011. Accessed April 29, 2011.

16. Carney PA, Goodrich ME, Mackenzie T, et al. Utilization of screening mammography in New Hampshire: a population-based assessment. Cancer 2005;104(8):1726–1732.

17. Elmore JG, Jackson SL, Abraham L, et al. Variability in interpretive performance at screening mammography and radiologists’ characteristics associated with accuracy. Radiology 2009;253(3):641–651.

18. Buist DS, Anderson ML, Haneuse SJ, et al. Influence of annual interpretive volume on screening mammography performance in the United States. Radiology 2011;259(1):72–84.

19. American College of Radiology. ACR BI-RADS–Mammography. In: ACR Breast Imaging Reporting and Data System, Breast Imaging Atlas. 4th ed. Reston, Va: American College of Radiology, 2003.

20. Breast Cancer Surveillance Consortium. BCSC glossary of terms. National Cancer Institute Web site. http://breastscreening.cancer.gov/data/bcsc_data_definitions.pdf. Updated September 16, 2009. Accessed May 4, 2011.

21. Lehmann EL, Romano JP. Testing statistical hypotheses. New York, NY: Springer, 2005.

22. Hastie T, Tibshirani R, Friedman J. The elements of statistical learning: data mining, inference, and prediction. New York, NY: Springer, 2009.

23. Liang KY, Zeger S. Longitudinal data analysis using generalized linear models. Biometrika 1986;73(1):13–22.

24. Miglioretti DL, Haneuse SJ, Anderson ML. Statistical approaches for modeling radiologists’ interpretive performance. Acad Radiol 2009;16(2):227–238.

25. R Development Core Team. R: a language and environment for statistical computing. R Foundation for Statistical Computing Web site. http://cran.r-project.org/. Accessed July 21, 2011.
