The senses serve as portals through which the brain samples the environment. Each transduces a different form of energy and thus provides an independent sample of the same event. The senses can compensate for one another when necessary and complement one another when reporting about the same event. Most impressive, however, is that the brain can integrate the information it gathers from these different sensory channels to provide real-time benefits in event detection and scene analysis that would otherwise be impossible. The survival value of such a system is substantial.

Biologic systems devised this strategy of “multisensory integration” very early in evolution, even before a brain was invented. They have extended and refined this process during evolution under the most challenging circumstances (see Stein and Meredith, 1993). Nature is harsh in its assessment of innovation, and selection has yielded a striking diversity in the functional capabilities of sensory systems, matched to the different ecological niches of their host organisms. The same is true of the brains that process this information. Yet, despite this diversity, there are species-independent similarities in how multiple sensory inputs are integrated (e.g., King and Palmer, 1985; Meredith and Stein, 1983; Frens and Van Opstal, 1998; Wallace et al., 1996; Angelaki et al., 2010; Ernst and Banks, 2002). For example, in detecting salient environmental events, one common strategy is to pool information across the senses according to the spatial and temporal relationships between the observed cross-modal stimuli, regardless of the types of sensors providing this information. The commonality of these principles across species may reflect the fact that the constancies of space and time supersede variations in biology and ecological niche.

Presumably, the naïve brain cannot determine that stimuli accessing different sensory channels are linked to the same event, and yet such information is essential to construct a neural circuit that uses the senses synergistically to detect and disambiguate environmental events optimally. This capacity is likely acquired through early-life experience: simple learning rules based on the spatial and temporal congruence of cross-modal cues could guide the formation of the structural and functional architecture that makes such determinations. Indeed, early life appears to be a time during which the brain uses its own experience to determine the integrative principles that will enhance the detection and identification of biologically significant events.

This discussion has several parallel objectives: first, to describe multisensory integration at the level of the single neuron and its implications for overt behavior; second, to detail the development and maturation of this process and its early plasticity; and finally, to discuss the plasticity of multisensory neurons in adulthood.

The Mature Superior Colliculus (SC)

A good deal of information has been obtained about the principles that govern multisensory integration at the level of the single neuron. Most of it has been derived from multisensory neurons in the cat superior colliculus (SC), although other species have also been studied (see, e.g., Hartline et al., 1978; Gaither and Stein, 1979; Stein and Gaither, 1981; Zahar et al., 2009). The SC has approximately the same location and function (orientation and localization) in all mammals (see Fig. 1).

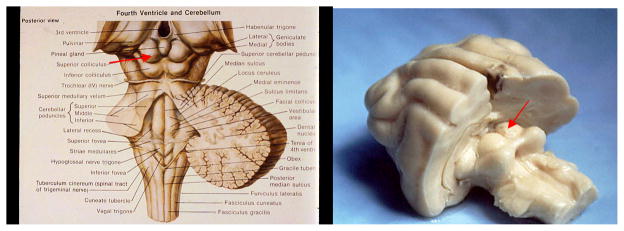

Fig. 1. The Superior Colliculus (SC).

Left: a schematic of the human brain. Right: photograph of a cat brain. In both cases cortex was removed to provide an unobstructed view of the midbrain. The SC appears as a pair of hillocks rostral/superior to the inferior colliculus and caudal/medial to the lateral geniculate nucleus.

One of the many attractive features of the cat SC as a model for studying this process is that it is a site at which unisensory visual, auditory and somatosensory projections converge onto individual neurons (Wallace et al., 1993; Stein and Meredith, 1993; Huerta and Harting, 1984; Edwards et al., 1979; Fuentes-Santamaria et al., 2009). As a result, its different constituent neurons become unisensory, bisensory or trisensory, and the multisensory responses of these neurons reflect an operation that is taking place on-site, not one that happened elsewhere and was referred to the SC.

The majority of neurons in the multisensory (i.e., deep) layers are visually responsive. The most common type is the bisensory visual-auditory neuron, followed by the visual-somatosensory neuron. These two types have generally served as the exemplars for understanding the circuitry, principles, and underlying computations of multisensory integration, with the former studied far more often than the latter. This is most likely because visual-auditory neurons are easy to find and appropriate stimuli are easy to deliver. However, the principles of multisensory integration appear to apply equally well to all modality convergence patterns.

Another attractive feature of this model is that SC activity is directly coupled to specific behaviors: detection, orientation, and localization (Stein and Meredith, 1993; Lomber et al., 2001). This is achieved through its descending projections to the motor regions of the brainstem and spinal cord (Moschovakis and Karabelas, 1985; Stein and Meredith, 1993; Peck and Baro, 1997). This affords one the opportunity to compare neurophysiological observations from individual SC neurons to SC-mediated overt behaviors. This is not a simple task in higher-order centers, where the link between a simple behavioral response and a neural response is not as evident. As one would predict, the same principles that govern the responses of individual SC neurons to cross-modal stimuli also govern SC-mediated responses to them (Stein et al., 1988; Jiang et al., 2002; Jiang et al., 2007; Burnett et al., 2000; Burnett et al., 2004; Gingras et al., 2009; Bell et al., 2005; Frens and Van Opstal, 1998).

Another benefit of this model is that the cat is born at an early maturational stage (see below) and requires substantial postnatal development before achieving adult-like status. As a result, one can observe functional changes as they appear and are elaborated over time. The large body of information already accumulated about the maturation of its unisensory (largely visual) neuronal properties (e.g., Stein, 1984) has also been extremely helpful as a benchmark for sensory maturation. Thus, except where other species are noted, the discussion below refers to this animal.

Semantic Issues in Multisensory Integration

Multisensory integration refers to the process by which a combination of stimuli from different senses (i.e., a “cross-modal” stimulus) produces a neural response that differs significantly from those evoked by the individual component stimuli, indicating a fusion of information. At the level of the individual neuron, multisensory integration has been defined as a statistically significant difference between the number of impulses evoked by a cross-modal combination of stimuli and the number evoked by the most effective of these stimuli individually (see Stein and Meredith, 1993). This definition incorporates both multisensory response enhancement and response depression, but enhancement is more often used as an index of this process. This is because enhancement is found in all neurons exhibiting multisensory integration, whereas depression is found only in a subset of the neurons showing enhancement (Kadunce et al., 1997).
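The single-neuron definition above is a statistical comparison between two sets of trial-by-trial impulse counts. As a minimal sketch, the comparison can be written as a two-sided permutation test on the mean counts. The choice of a permutation test is an assumption made here only because it needs nothing beyond the Python standard library; it is not necessarily the test used in the cited studies, and the function name `multisensory_integration_test` is hypothetical.

```python
import random


def multisensory_integration_test(cross_modal, best_unisensory,
                                  n_permutations=10_000, seed=0):
    """Two-sided permutation test on mean impulses per trial (hypothetical helper).

    cross_modal: impulse counts, one per trial, for the cross-modal stimulus.
    best_unisensory: impulse counts for the most effective single stimulus.
    Returns (observed mean difference, estimated p-value). A positive
    difference suggests enhancement, a negative one suggests depression.
    """
    def mean(values):
        return sum(values) / len(values)

    observed = mean(cross_modal) - mean(best_unisensory)
    pooled = list(cross_modal) + list(best_unisensory)
    n_cm = len(cross_modal)
    rng = random.Random(seed)  # fixed seed for reproducibility
    extreme = 0
    for _ in range(n_permutations):
        # Under the null hypothesis the condition labels are exchangeable.
        rng.shuffle(pooled)
        diff = mean(pooled[:n_cm]) - mean(pooled[n_cm:])
        if abs(diff) >= abs(observed):  # two-sided: enhancement or depression
            extreme += 1
    # Add-one correction avoids reporting an impossible p-value of exactly 0.
    p_value = (extreme + 1) / (n_permutations + 1)
    return observed, p_value
```

A neuron would be counted as integrating only if the p-value falls below a chosen criterion (for example 0.05); the sign of the difference then distinguishes enhancement from depression.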

It does not refer to other multisensory processes such as those involved in cross-modal matching, where the individual sensory components must retain their independence so that they can be compared, as, for example, when one compares the sight and the feel of a given object. Nor does it refer to “amodal” processes, such as those engaged in comparing equivalencies in size, intensity, or number across senses (see Stein et al., 2010).

The Underlying Computation

It is important to note that multisensory integration, as indicated by response enhancement, can reflect a variety of underlying computations that expose how a given neuron has integrated two or more different sensory inputs: the multisensory response may be subadditive, additive, or superadditive, i.e., less than, equal to, or greater than the arithmetic sum of the component unisensory responses. Although superadditive responses illustrate this phenomenon most dramatically, superadditivity is not a prerequisite for multisensory integration. This important distinction is sometimes misunderstood. All cases of multisensory enhancement increase the physiological salience of the signal and thereby the probability that the organism will respond appropriately to an event. Multisensory depression does the opposite (Spence et al., 2004; Calvert et al., 2004; Gillmeister and Eimer, 2007; Stein and Stanford, 2008) and, in the case of the SC, may reflect a competition among stimuli for access to the motor circuitry (Stanford and Stein, 2007).
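The distinction between *whether* a neuron integrates (a comparison against the best unisensory response) and *how* it integrates (a comparison against the sum of the unisensory responses) can be sketched in a few lines. The enhancement index follows the definition given in the Fig. 4 caption (percent difference between the multisensory and best unisensory response). The function names and the fixed `tolerance` band that stands in for "additive" are hypothetical simplifications; real analyses decide these categories statistically.

```python
def enhancement_index(cross_modal_mean, best_unisensory_mean):
    """Percent difference between the multisensory response and the best
    unisensory response. Negative values indicate response depression."""
    if best_unisensory_mean <= 0:
        raise ValueError("best unisensory response must be positive")
    return 100.0 * (cross_modal_mean - best_unisensory_mean) / best_unisensory_mean


def classify_computation(visual_mean, auditory_mean, cross_modal_mean, tolerance=0.1):
    """Label a multisensory response relative to the sum of its components.

    `tolerance` is a hypothetical fractional band around the sum that is
    treated as "additive"; it replaces a proper statistical comparison.
    """
    predicted_sum = visual_mean + auditory_mean
    if cross_modal_mean > predicted_sum * (1 + tolerance):
        return "superadditive"
    if cross_modal_mean < predicted_sum * (1 - tolerance):
        return "subadditive"
    return "additive"
```

With hypothetical mean responses of 20 (visual), 10 (auditory), and 24 (visual-auditory), `classify_computation` labels the response subadditive, yet `enhancement_index(24, 20)` is still a positive 20 percent: enhancement without superadditivity.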

The Principles of Multisensory Integration

Multisensory SC neurons and SC-mediated behavior appear to follow a common set of operational principles (Stein and Meredith, 1993). Generally, cross-modal stimuli presented at the same time and place within their respective receptive fields enhance response magnitude, whereas cross-modal stimuli presented at different locations or times degrade responses or do not affect them (Fig. 2). These regularities are captured by the “spatial” and “temporal” principles of integration. The degree to which a response is enhanced or depressed is inversely related to the effectiveness of the individual component stimuli (the “principle of inverse effectiveness”). This makes intuitive sense, as potent responses have less “room” for enhancement than do weak responses. The challenges and successes in defining and using these principles have recently been discussed in detail (Stein and Stanford, 2008; Stein et al., 2010; Stein et al., 2009), and the operational definitions of “same place” and “same time”, as well as the interactions between spatial and temporal factors, have been detailed empirically (Kadunce et al., 1997; Kadunce et al., 2001; Meredith et al., 1987; Meredith and Stein, 1986; Meredith and Stein, 1983; Meredith and Stein, 1996; Royal et al., 2009).

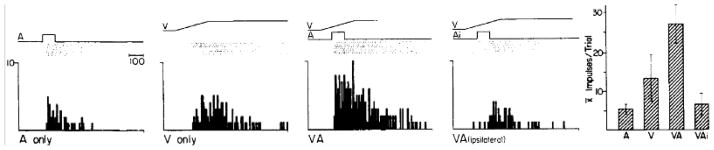

Fig. 2. Multisensory enhancement and depression: The spatial principle.

Left: A square-wave broadband auditory stimulus (A, 1st panel) and a moving visual stimulus (ramp labeled V, 2nd panel) evoked unisensory responses from this neuron (illustrated below the stimulus by rasters and peri-stimulus time histograms). Each dot in the raster represents a single impulse and each row a single trial. The 3rd panel shows the response to their presentation at the same time and location, which is much more robust than either unisensory response. But, when the auditory stimulus was moved into ipsilateral auditory space (Ai) and out of the receptive field, its combination with the visual stimulus elicited fewer impulses than did the visual stimulus individually. This ‘response depression’ is illustrated within the 4th panel. Right: The mean number of impulses/trial elicited by each of the 4 stimulus configurations. Note the difference between multisensory enhancement (VA) and multisensory depression (VAi).

Simple Heuristics

A “rule of thumb” in remembering the principles of multisensory integration is that cross-modal stimuli likely to be linked to a common event enhance activity and behavioral performance, whereas those likely to be linked to different events degrade activity and performance. Furthermore, the proportionate multisensory enhancement benefits that accrue are greatest when the integrated stimuli are least effective.

The term ‘proportionate’ is of key importance when considering the impact of multisensory integration. Multisensory enhancement is measured relative to the magnitude of the component unisensory responses, not in absolute terms. Thus, even a superadditive multisensory response may, in a given circumstance, be less robust than the response evoked by a highly effective modality-specific stimulus (see Stein and Stanford, 2008; Stein et al., 2009).
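The point about proportionate enhancement can be made concrete with the enhancement index used in Fig. 4 (the percent difference between the multisensory and the best unisensory response). In the sketch below, $CM$ denotes the mean response to the cross-modal stimulus and $SM_{\max}$ the mean response to the most effective unisensory component; these symbols, and the impulse counts in the two worked cases, are hypothetical and chosen only to illustrate the arithmetic, not taken from the cited studies.

$$
\mathrm{ME} = \frac{CM - SM_{\max}}{SM_{\max}} \times 100\%
$$

Weak components ($V = 2$, $A = 1$, $VA = 6$ impulses):

$$
\mathrm{ME}_{\text{weak}} = \frac{6 - 2}{2} \times 100\% = 200\%, \qquad 6 > 2 + 1 \quad (\text{superadditive})
$$

Strong components ($V = 20$, $A = 10$, $VA = 24$ impulses):

$$
\mathrm{ME}_{\text{strong}} = \frac{24 - 20}{20} \times 100\% = 20\%, \qquad 24 < 20 + 10 \quad (\text{subadditive})
$$

The weak pair yields the larger proportionate gain, as inverse effectiveness predicts, yet its superadditive multisensory response (6 impulses) remains far smaller than the response to the strong visual stimulus alone (20 impulses).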

The Generality of the SC Model

These fundamental principles of multisensory integration, though derived from studies of the SC, appear to be general ones. They apply to multisensory neurons in other structures (e.g., Wallace et al., 1992) and are helpful in understanding behaviors other than those mediated by the SC. But it is important to remember that the principles discussed here are basic: they take into account neither higher-order cognitive factors, such as semantic congruence and expectation, nor factors relevant to the state of the organism, such as hunger, thirst, and fear. The higher-order influences over this process are likely to differ across brain circuits to best suit their functions. The impact of higher-order factors on the cellular processes underlying multisensory integration is poorly understood at present but is being examined in a number of laboratories. It is also important to remember that the impact of each of these principles may not always be obvious or relevant, especially for behaviors or perceptions that do not involve the detection and localization of events, the context from which the principles were derived. For example, tasks with no spatial component are unlikely to reflect the spatial principle. Although this may seem self-evident, it is sometimes overlooked.

The Essential Circuit for SC Multisensory Integration

Implementing multisensory integration may seem as simple as connecting different sensory inputs to a given neuron, but it is not. This common assumption reflects a failure to appreciate the nature of the circuit that implements SC multisensory integration, and it has hindered efforts to extract the circuit's features for non-biologic uses. Although cross-modal convergence is necessary for this process, it is not sufficient. The essential circuit includes converging descending inputs from two or more unisensory regions of association cortex (e.g., the anterior ectosylvian sulcus, AES), as shown in Fig. 3 (see also Fuentes-Santamaria et al., 2009; Alvarado et al., 2009; Fuentes-Santamaria et al., 2008). In the absence of this cortical input, SC neurons still respond to multiple sensory inputs (because they receive such inputs from many non-cortical sources) but cannot integrate them to produce signal enhancement (Wallace and Stein, 1994; Jiang et al., 2001; Alvarado et al., 2007; Alvarado et al., 2008; Alvarado et al., 2009). Instead, they respond no better to the combined input than to the most effective of its components individually (see Fig. 4). This physiological result is paralleled behaviorally by a loss of the performance benefit of multisensory integration in detection and localization (Jiang et al., 2002; Jiang et al., 2006; Jiang et al., 2007; Wilkinson et al., 1996). In short, the combination of modality-specific cues no longer provides significant operational benefits.

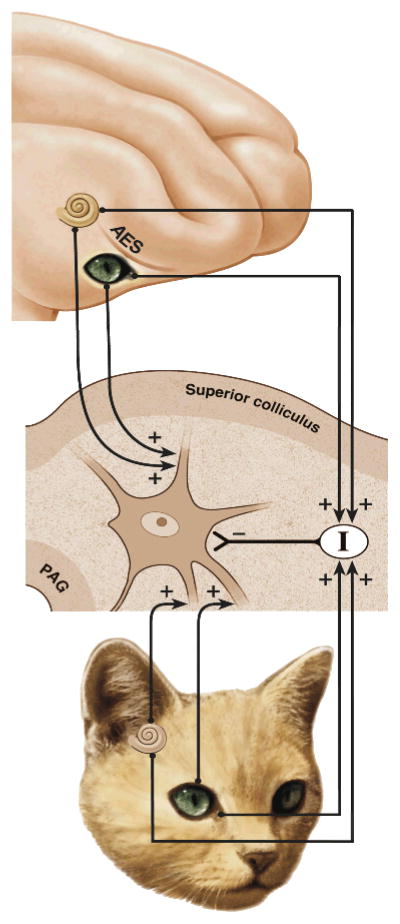

Fig. 3. Schematic model of the SC multisensory circuit.

Here the auditory-visual neuron is the exemplar multisensory neuron. Its inputs are derived from numerous unisensory sources. Of particular importance are the inputs that descend from an area of association cortex, the anterior ectosylvian sulcus or AES (top). The host of other inputs it receives (bottom), such as those ascending from sensory organs, relayed from other subcortical structures, or projecting from non-AES cortical areas, have been collapsed here for illustrative and computational purposes; they form the ascending component of the input pathways illustrated. Both the AES and non-AES inputs have a dual projection: they also project to a population of inhibitory interneurons. Together, these afferents and target neurons constitute a circuit in which SC responses result from an excitatory (+)/inhibitory (−) balance of inputs. From Rowland et al. (2007).

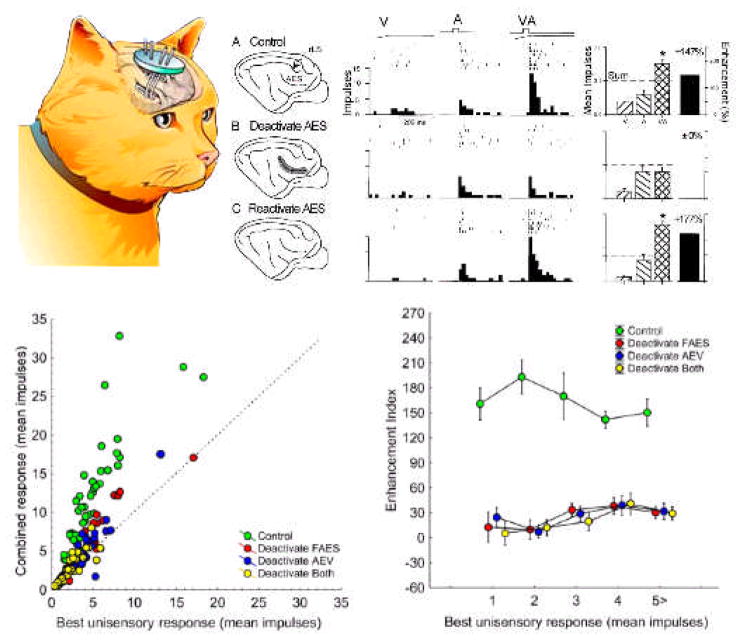

Fig. 4. The dependence of SC multisensory integration on cortex.

The top-left figure shows the placement of cryogenic coils in the relevant cortical areas. Cold fluid circulated through the coils reduces the temperature of the surrounding cortex and inhibits activity. The top-right figure shows the area deactivated and then reactivated in this procedure (shaded region) and sample responses from a visual-auditory neuron to visual (V), auditory (A), and spatiotemporally concordant visual-auditory stimuli. Prior to cooling (“Control”), the neuron shows an enhanced response to the visual-auditory stimulus complex. However, when cortex is cooled (“Deactivate AES”), the multisensory response is no longer statistically greater than the best unisensory response. Reactivating cortex (“Reactivate AES”) returns the neuron’s integrative capabilities. The bottom-left figure plots the multisensory response versus the best unisensory response for a population of similar visual-auditory neurons before deactivation (green), when only one subregion of AES is deactivated (red=FAES, blue=AEV), or when both are deactivated (yellow). The bottom right plots the enhancement index (percent difference between the multisensory and best unisensory response) for these four conditions against the best unisensory response. The results of this study indicate that there is a true “synergy” between the subregions of AES cortex in producing multisensory integration in the SC: deactivating one or the other subregion often yields results equivalent to deactivating both.

The Development of Multisensory Integration

The significance of multisensory integration discussed above, as well as its seeming ubiquity across species, might lead one to assume that it is an inherent brain process that is present or prescribed at birth, especially given the newborn’s heightened vulnerability. However, the data from experiments detailing the maturation of multisensory neurons in SC or cortex of animals indicated that the capability to engage in multisensory integration was not innate, but was acquired gradually during postnatal life as a consequence of experience with cross-modal stimuli (Wallace and Stein, 1997; Wallace and Stein, 2001; Wallace and Stein, 2007; Wallace et al., 1993; Wallace et al., 2006; Carriere et al., 2007). This is in keeping with the finding that many sensory systems are poorly developed at birth and require substantial postnatal refinement for optimum function. Indeed, integrating information across them is even more complex than using them independently and thus may require longer periods of postnatal maturation.

The cat is an altricial species, which makes it advantageous as a model because functional changes can be observed over its protracted postnatal development. The unisensory (particularly visual) response properties of its SC neurons are well studied and provide a benchmark for sensory development (Stein, 1984). The newborn cat has poor motor control and is both blind and deaf, and its SC contains only tactile-responsive neurons (Stein et al., 1973), which presumably aid the neonate in suckling (Larson and Stein, 1984). SC responses to auditory stimuli are first apparent at 5 dpn (days postnatal) (Stein, 1984), and visual responses in the multisensory (deep) layers appear only after several additional weeks (Kao et al., 1994). Obviously, prior to this time, these neurons cannot engage in multisensory integration.

It is necessary to be specific about the appearance of visual sensitivity in the multisensory layers of the SC, because the overlying superficial layers, which are purely visual, develop their visual sensitivity considerably earlier (Stein et al., 1973; Stein, 1984). Although superficial layer neurons are not directly involved in multisensory processes (their function is believed to more closely approximate that of neurons in the primary projection pathway), this superficial-deep developmental lag is still somewhat surprising because superficial layer neurons provide some of the visual input to the multisensory layers (Grantyn and Grantyn, 1984; Moschovakis and Karabelas, 1985; Behan and Appell, 1992). Apparently, the functional coupling of superficial neurons with their deep layer target neurons has not yet developed. The maturational distinction between visually-responsive neurons within the same structure underscores a key difference between unisensory neurons and those that will be involved in integrating inputs from different senses.

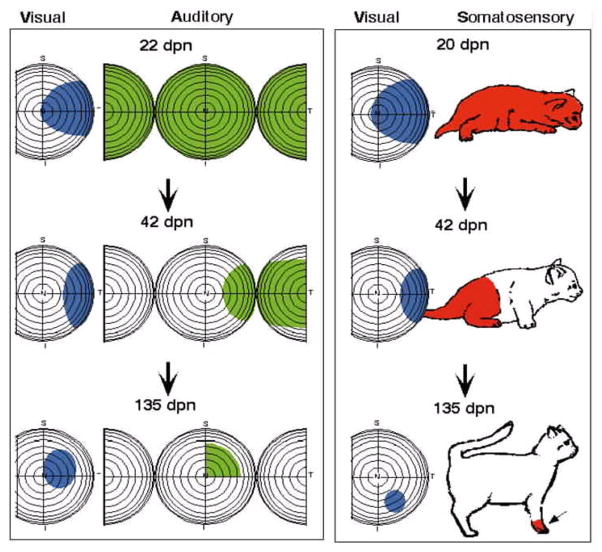

The chronology of multisensory development parallels, but lags behind, that of unisensory development. The earliest multisensory neurons are somatosensory-auditory, appearing approximately 10–12 days after birth. The first visual-nonvisual neurons take 3 weeks to appear (Stein et al., 1973; Kao et al., 1994; Wallace and Stein, 1997). However, the incidence of these multisensory neurons does not reach adult-like proportions until many weeks later. Visual, auditory and somatosensory receptive fields are all initially very large and contract significantly over months of development, thereby enhancing the resolution of the individual maps, the concordance among the maps, and, of special importance in this context, the spatial concordance of the multiple receptive fields of individual neurons (Fig. 5). These changes are accompanied by increases in the vigor of neuronal responses to sensory stimuli, increases in response reliability, decreases in response latency, and an increase in the ability to respond to successive stimuli (Stein et al., 1973; Kao et al., 1994; Wallace and Stein, 1997). These functional changes reflect the maturation of the intrinsic circuitry of the structure, as well as the maturation and selection of its afferents through selective strengthening and pruning of synapses.

Fig. 5. Receptive fields of SC neurons shrink during development.

Illustrated are the receptive fields of two types of neurons (left: visual-auditory, right: visual-somatosensory) at different developmental ages (increasing from top to bottom). As depicted, the receptive fields shrink in size as the animal ages, increasing their specificity and also their spatial concordance across the senses. Adapted from Wallace and Stein 1997.

However, these neonatal multisensory neurons are incapable of integrating their multiple sensory inputs. SC neurons do not show multisensory integration until at least a month of age, long after they have developed the capacity to respond to more than one sensory modality (Wallace and Stein, 1997). In other words, they respond to cross-modal stimuli as if only one (typically the more effective) stimulus were present. Once multisensory integration begins to appear, only a few neurons show it at first. Gradually, more and more multisensory neurons show integration, but it takes many weeks before the normal complement of neurons capable of multisensory integration is achieved.

The inability of neonatal multisensory neurons to integrate their different sensory inputs is not limited to the kitten, nor is it restricted to altricial species. The rhesus monkey is much more mature at birth than the cat and already has many multisensory SC neurons. Apparently, the appearance of multisensory neurons during development depends not on postnatal experience but on developmental stage, an observation we will revisit below. However, the multisensory neurons in the newborn primate, just like those in the cat, are incapable of integrating their different sensory inputs and, in this regard, are distinctly different from their adult counterparts (Wallace and Stein, 2001; Wallace et al., 1996). Presumably, this is because they have not yet had the requisite experience with cross-modal events.

Recent observations in human subjects (Neil et al., 2006; Gori et al., 2008; Putzar et al., 2007) also suggest a gradual postnatal acquisition of this capability, but there is no unequivocal information regarding the newborn. This does not mean that newborns have no multisensory processing capabilities, only that they cannot use cross-modal information synergistically (i.e., they do not engage in “multisensory integration” as defined above). Those studying human development sometimes include other multisensory processes under this umbrella. The best example is cross-modal matching, a capacity that appears to be present early in life. However, as noted earlier, this process does not yield an integrated product; while it is clearly a multisensory process, it is not an example of multisensory integration (see Stein et al., 2010, for more discussion). Some caution should still be exercised here, as the brains and/or behaviors of only a limited number of species have been studied thus far. It may yet turn out that some examples of multisensory integration, such as those involving the chemical senses and/or species rarely examined in the laboratory, develop prenatally and independently of experience. After all, multisensory integration is probably an inherent characteristic of single-celled organisms that have multiple receptors embedded in the same membrane.

How Experience Changes the Circuit for Multisensory Integration

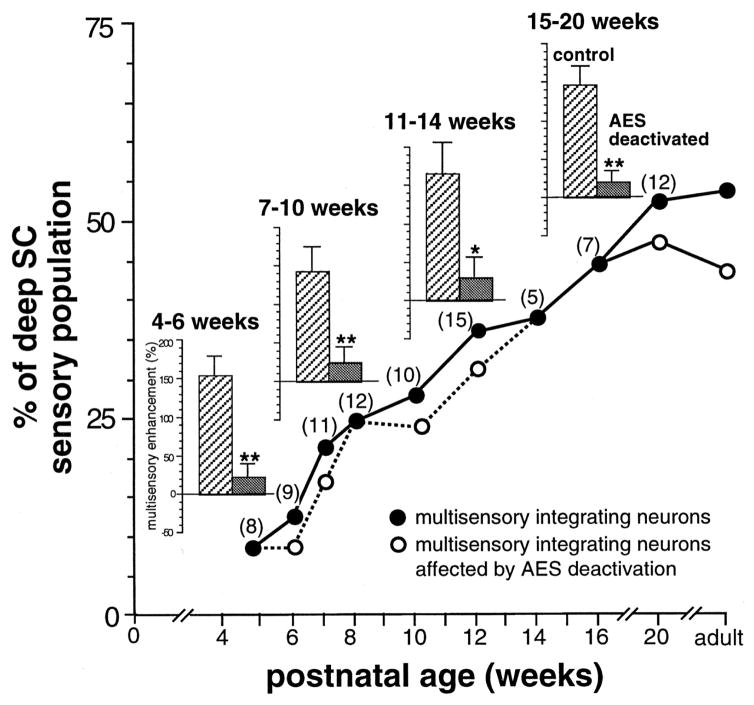

Inputs from AES have already reached the multisensory SC at birth, even before its constituent neurons become multisensory (McHaffie et al., 1988). Presumably, neonatal SC multisensory neurons cannot integrate their cross-modal inputs because the AES-SC synaptic coupling is not yet properly functional (just as the coupling with inputs from the superficial layers is not). This is only a supposition, for at this point little is known about how this projection changes over time. Some of the AES inputs to the SC certainly become functional at about one month of age, for as soon as individual SC neurons exhibit multisensory integration, this capability can be blocked by deactivating AES (see Fig. 6 and Wallace and Stein, 2000). These relationships strengthen over the next few months.

Fig. 6. The developmental appearance of multisensory integration coincides with the development of AES-SC influences.

There is a progressive increase in the percent of SC neurons exhibiting multisensory integration capabilities as revealed by the graph. Note that whenever a neuron with integrating capabilities was located, the effect of AES deactivation was examined. Regardless of age, nearly all neurons lost this capability during cryogenic block of AES activity (numbers in parentheses show the number of neurons examined). Presumably, those SC neurons that were not affected by AES blockade were dependent on adjacent areas (e.g., rostral lateral suprasylvian cortex, see Jiang et al., 2001). From Wallace and Stein (2000).

This is also a period during which the brain is exposed to a variety of sensory stimuli, some of which are linked to the same event and some of which are not. Cross-modal cues that are derived from the same event often occur in spatiotemporal concordance, while unrelated events are far less tightly linked in space and time. Presumably, after sufficient experience, the brain has learned the statistics of those sensory events, which, via Hebbian learning rules, have been incorporated into the neural architecture underlying the capacity to integrate different sensory inputs. Such experience provides the foundation for a principled way of perceiving and interacting with the world so that only some stimulus configurations will be integrated and yield response enhancement or response depression.
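The idea that a Hebbian rule can absorb the spatial statistics of early cross-modal experience can be illustrated with a deliberately simplified toy model. The sketch below is a hypothetical caricature, not the circuit of Fig. 3 or any published model: a single unit has a fixed Gaussian visual receptive field on a one-dimensional spatial axis, and it receives initially unselective auditory synapses, one per position. Each rearing "event" activates the visual input at position `x` and the auditory input at `x + auditory_offset`; an offset of 0 stands for normal rearing, a nonzero offset for rearing with spatially displaced cues. All names and parameter values are assumptions for illustration.

```python
import math


def hebbian_auditory_weights(n_positions=21, visual_rf_center=10,
                             auditory_offset=0, visual_rf_width=2.0,
                             learning_rate=0.1, n_epochs=5):
    """Toy Hebbian learning of auditory weights onto one visually driven unit.

    Postsynaptic activity is taken to be the visual drive alone, ignoring
    the (initially unselective) auditory contribution. Returns the learned
    auditory weights, normalized to sum to 1.
    """
    weights = [1.0 / n_positions] * n_positions  # uniform, unlearned start
    for _ in range(n_epochs):
        for x in range(n_positions):  # one event at every location per epoch
            a = x + auditory_offset
            if not 0 <= a < n_positions:
                continue  # auditory cue falls outside the modeled space
            post = math.exp(-((x - visual_rf_center) ** 2)
                            / (2 * visual_rf_width ** 2))
            # Hebb's rule: strengthen the active auditory synapse (pre = 1)
            # in proportion to the coincident postsynaptic activity.
            weights[a] += learning_rate * post * 1.0
    total = sum(weights)
    return [w / total for w in weights]


def preferred_auditory_position(weights):
    """Position of the strongest learned auditory input."""
    return max(range(len(weights)), key=weights.__getitem__)
```

With `auditory_offset=0` the learned auditory preference coincides with the visual receptive-field center (position 10); with `auditory_offset=6` it shifts to position 16, loosely analogous to the misaligned receptive fields reported after rearing with spatially disparate cues (Wallace and Stein, 2007).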

Put another way, the experience leads the animal to expect that certain cross-modal physical properties covary (e.g. the timing and/or spatial location of visual and auditory stimuli) and this “knowledge” is used to craft the principles for discriminating between those stimuli derived from the same event and those derived from different events.

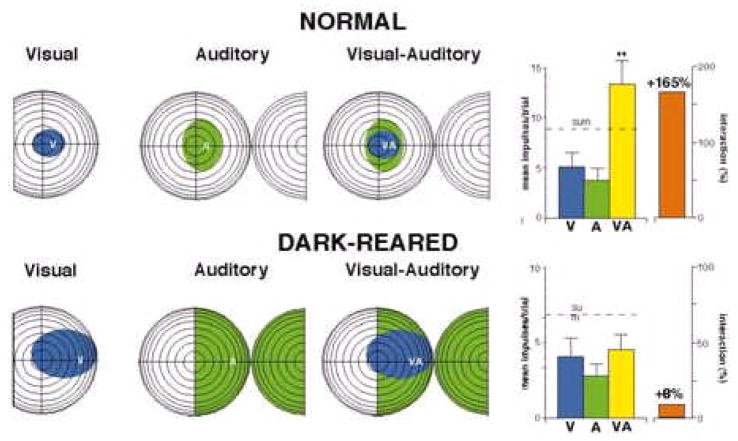

The first test of this hypothesis asked whether experience is essential for the maturation of this process. Visual-nonvisual experience was precluded by rearing animals in darkness from birth until well after multisensory integration normally matures (i.e., 6 months or more). Interestingly, this rearing condition did not prevent the development of visually responsive neurons. In fact, in addition to unisensory neurons, each of the cross-modal convergence and response patterns characteristic of normal animals was evident in neurons within the SC of dark-reared animals, though their incidence differed slightly (Wallace et al., 2001; Wallace et al., 2004). This parallels the observations in the monkey, which is born at a more advanced developmental stage than the cat but already has visual-nonvisual SC neurons. Visual experience is obviously not essential for the appearance of such neurons.

The receptive fields of these neurons in dark-reared cats were very large, more like neonatal SC neurons than those in the adult. Like neonatal neurons, they could not integrate their cross-modal inputs and their responses to cross-modal pairs of visual-nonvisual stimuli were no more vigorous than were their responses to the best of the modality-specific component stimuli (Fig. 7). As postulated, experience with visual-nonvisual stimuli proved to be necessary to develop the capacity to engage in multisensory integration. This is also consistent with observations in human subjects who had congenital cataracts removed during early life. Their vision seemed reasonably normal, but they were compromised in their ability to integrate visual and nonvisual cues, despite having years of experience after surgical correction (Putzar et al., 2007).

Fig. 7. Comparison between normal and dark-reared animals.

The sample neuron illustrated on top was recorded from the SC of a normally reared animal. Its visual and auditory receptive fields are relatively small and in good spatial register with one another. The summary figure on the right shows its responses to visual, auditory, and spatiotemporally concordant visual-auditory stimuli; the last yields typical multisensory response enhancement. The sample neuron on the bottom was recorded from the SC of an animal reared in the dark. Its receptive fields are much larger, and while it responds to both visual and auditory stimuli, its response to a spatiotemporally concordant visual-auditory stimulus complex is statistically no greater than its response to the visual stimulus alone. Adapted from Wallace et al., 2004.

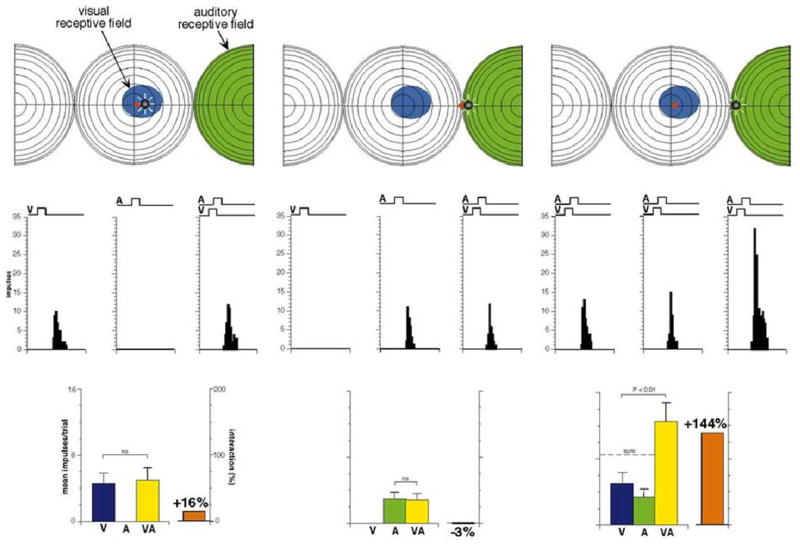

The next test of this hypothesis was to rear animals in conditions in which the spatiotemporal relationships of cross-modal stimuli were altered from “normal” experience, in which cross-modal stimuli derived from the same event are presumably in spatiotemporal concordance. If cross-modal experience determines the governing principles of multisensory integration, then changing it should change the principles. This possibility was examined by rearing animals in special dark environments in which their only experience with simultaneous visual and auditory stimuli was with spatially displaced pairs (Wallace and Stein, 2007). They were raised to 6 months or more in this condition, and then the multisensory integration characteristics of their SC neurons were examined.

As in animals reared simply in the dark, these animals possessed the full range of multisensory convergence patterns, and there were many visual-nonvisual neurons. However, the properties of visually responsive neurons were atypical: their receptive fields were very large, and many were unable to integrate visual-nonvisual cues. Nevertheless, a sizable minority of visual-auditory neurons were fully capable of multisensory integration, but the stimulus configurations eliciting response enhancement or no integration differed significantly from those in normally reared animals (Fig. 8). The receptive fields of these neurons, unlike those of many of their neighbors, had contracted partially but were in poor spatial alignment with one another. Some were totally out of register, a feature that is exceedingly rare in normal animals but one that clearly reflects these animals' early experience with visual-auditory cues. Most important in the current context is that these neurons integrated spatially disparate stimuli, not spatially concordant stimuli, to produce response enhancement. This is because their receptive fields were misaligned, so only spatially disparate stimuli could fall simultaneously within them.

Fig. 8. Rearing animals in environments with spatially disparate visual-auditory stimulus configurations yields abnormal multisensory integration.

Illustrated is a sample neuron recorded from the SC of an animal reared in an environment in which simultaneous visual and auditory stimuli were always spatially displaced. This neuron developed spatially misaligned receptive fields (i.e., the visual receptive field is central, while the auditory receptive field is in the periphery). When spatiotemporally concordant visual-auditory stimuli are presented in the visual (left plots) or auditory (center plots) receptive field, the multisensory response is no larger than the largest unisensory response (the identity of which is determined by which receptive field served as the stimulus location). However, if temporally concordant but spatially disparate visual and auditory stimuli are placed within their respective receptive fields, the multisensory response shows significant enhancement. In other words, this neuron appears to integrate spatially disparate cross-modal cues as a normal animal integrates spatially concordant cues, and it fails to integrate spatially concordant cues just as a normal animal might fail to integrate spatially discordant cues: an apparent “reversal” of the spatial principle. Adapted from Wallace and Stein (2007).

Taken together, the dark-rearing and disparity-rearing experiments demonstrate not only that experience is critical for the maturation of multisensory integration, but also that the nature of that experience directs the formation of the neural circuits that engage in this process. In both normal and disparity-reared animals, the basis for multisensory response enhancement is defined by early experience. Whether this reflects a simple adaptation to specific cross-modal stimulus configurations or to the general statistics of multisensory experience is a subject of ongoing experimentation.

Parallel experiments in AES cortex revealed that multisensory integration develops more slowly in cortex than in the SC. This is perhaps not surprising, as the development of the cortex is generally thought to be more protracted than that of the midbrain. The multisensory neurons in AES populate the border regions between its visual (AEV), auditory (FAES), and somatosensory (SIV) subregions. They are part of a circuit independent of the SC, as they do not project into the cortico-SC pathway (Wallace et al., 1992). Despite this, their properties are very similar to those of SC neurons. They too lack multisensory integration capabilities during early neonatal stages and develop this capacity gradually, and only after SC neurons have done so (Wallace et al., 2006). As is the case for SC neurons, these AES neurons also require sensory experience and fail to develop multisensory integration capabilities when animals are raised in the dark (Carriere et al., 2007).

Although the above observations suggest that the development of multisensory integration in the SC and cortex depends on exposure to cross-modal stimuli, and that its principles adapt to their configurations, they provide no insight into the circuitry that governs its development and adaptation. For multisensory SC neurons, however, the cortical deactivation studies described above, coupled with the maturational time course of the AES-SC projection, suggest that AES cortex is likely to play a critical role.

Evaluating this idea began with experiments in which association cortex (both AES and the adjacent area rLS) on one side of the brain was chronically deactivated for 8 weeks (from 4 to 12 weeks postnatal), the period during which multisensory integration normally develops (Wallace and Stein, 1997; Stein and Stanford, 2008). This rendered these areas unresponsive to sensory (in particular, cross-modal) experience. Deactivation was induced with muscimol, a GABA-A receptor agonist. The muscimol was embedded in a polymer placed over association cortex, from which it was slowly released over this period. Once the polymer had released its stores of muscimol or had been physically removed, the cortex became active and responsive to environmental stimuli. The animals were then tested behaviorally and physiologically as adults (1 year of age), long after cortex had reactivated. These experiments are still ongoing, but the preliminary results are quite clear.

The ability of these animals to detect and locate visual stimuli was indistinguishable from that of normal animals and was equally good in both hemifields. Furthermore, their behavioral performance indicated that they benefited significantly from the presentation of spatiotemporally concordant but task-irrelevant auditory stimuli in the ipsilateral hemifield (as do normal animals). In the contralateral hemifield, however, they were abnormal: responses to spatiotemporally concordant visual-auditory stimuli were no better than responses to the visual stimulus alone. Apparently, deactivating association cortex on one side during early life disrupted the maturation of multisensory integration capabilities in the contralateral hemifield. SC neurons in these animals also appeared incapable of synthesizing spatiotemporally concordant cross-modal stimuli to produce multisensory response enhancement. These data strongly support the hypothesis that the AES-SC projection is principally engaged in instantiating multisensory integration in the SC. The fact that deficits in multisensory integration were observed long after the deprivation period, whether induced by dark rearing or by chronic cortical deactivation, suggests that there is a ‘critical’ or ‘sensitive’ period for acquiring this capacity. Such a period would mark the developmental window during which the capacity could be acquired.

Multisensory Plasticity in Adulthood

Despite these observations, multisensory integration and its principles may remain plastic in adulthood, though this plasticity may operate on different time scales or be sensitive to different types of experience. The animals that had undergone chronic cortical deactivation during early life in the studies described above were retained and were available for experimentation several years later. The results were striking: whereas they had previously shown deficits, they now appeared to show normal multisensory integration capabilities, both behaviorally and physiologically, on both sides of space. It is possible that experience was gradually incorporated into the AES-SC projection over this long period. Another possibility is that entirely new circuits, not involving AES, formed to support the instantiation of multisensory integration in the SC, although this seems less likely, as such circuits do not form in response to neonatal ablation of cortex (Jiang et al., 2006). Ongoing experiments are investigating this issue.

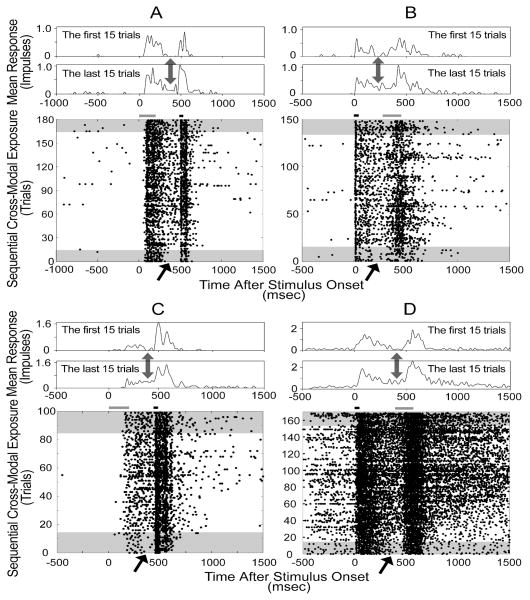

Multisensory integration might also be plastic on shorter time scales in the adult, given the proper conditions. Yu et al. (2009) examined whether multisensory SC neurons in anesthetized animals would alter their responses if repeatedly presented with temporally proximal, sequential cross-modal stimuli. Because the stimuli were separated by hundreds of milliseconds, they initially generated what would generally be regarded as two distinct unisensory responses (separated by a “silent” period) rather than a single integrated response. SC neurons rapidly adapted to the repeated presentation of these stimulus configurations. After only a few minutes of exposure to the repeated sequence, the initially silent period between the two responses began to be populated by impulses, and soon the two responses appeared to merge (see Fig. 9). This resulted from an increase in the magnitude and duration of the first response and a shortening of the latency of the second response when the stimuli were presented in sequence. Each sequence consisted of one visual and one auditory stimulus, and it did not seem to matter which came first. Interestingly, comparable sequences of two stimuli from the same modality did not consistently produce this effect.

Fig. 9. A repeated sequence of auditory and visual stimuli in either order led to a merging of their responses.

Shown are the responses of 4 neurons. In each display the responses are ordered from bottom to top. The stimuli are represented by bars above the rasters: the short one refers to the auditory stimulus and the long one to the visual stimulus. The first and last series of trials (n=15 in each) are shaded in the rasters and displayed at the top as peristimulus time histograms (20 ms bin width). Arrows indicate the time period between the responses to the stimuli. Note that the period of relative quiescence between the two distinct unisensory responses is lost after a number of trials and the responses begin to merge. This is most obvious when comparing the activity in the first and last 15 trials. From Yu et al. (2009).
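The comparison of the first and last 15 trials in Fig. 9 amounts to building peristimulus time histograms (20 ms bins) and asking how much activity fills the window between the two responses. The sketch below is only an illustration of that bookkeeping, not the analysis used by Yu et al. (2009). It assumes spike times in milliseconds relative to the onset of the first stimulus; the function names, the gap window, and the toy spike trains are all hypothetical.

```python
import numpy as np

BIN_MS = 20.0  # bin width of the Fig. 9 histograms


def psth(trials, t_start, t_stop, bin_ms=BIN_MS):
    """Peristimulus time histogram: mean firing rate (spikes/s) per bin.

    trials: list of spike-time sequences (ms, relative to onset of the first stimulus).
    """
    n_bins = int(round((t_stop - t_start) / bin_ms))
    edges = t_start + bin_ms * np.arange(n_bins + 1)
    counts = np.zeros(n_bins)
    for spikes in trials:
        counts += np.histogram(spikes, bins=edges)[0]
    rate = counts / len(trials) / (bin_ms / 1000.0)
    return edges, rate


def gap_rate(trials, gap_start, gap_stop):
    """Mean firing rate (spikes/s) in the window between the two responses."""
    n_spikes = sum(
        np.count_nonzero((np.asarray(s) >= gap_start) & (np.asarray(s) < gap_stop))
        for s in trials
    )
    return n_spikes / len(trials) / ((gap_stop - gap_start) / 1000.0)


# Toy spike trains (not recorded data): a quiet gap early, a filled-in gap late.
first_15 = [[50, 60, 70, 400, 410]] * 15
last_15 = [[50, 60, 70, 150, 250, 400, 410]] * 15

print(gap_rate(first_15, 100, 350))  # 0.0
print(gap_rate(last_15, 100, 350))   # 8.0
```

A rise in the gap-window rate from the first to the last block of trials is one simple way to express the “merging” described above; a real analysis would also test whether the change is statistically reliable.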

These observations confirmed the presence of plasticity in adult multisensory SC neurons and revealed that this plasticity could be induced even when the animal was anesthetized. Presumably, similar changes could be induced by temporally proximate cross-modal cues like those used in studies of multisensory integration. The results also raise the question of whether the SC neurons of a dark-reared animal could still acquire multisensory integration capabilities after the animal has been raised to adulthood in the dark.

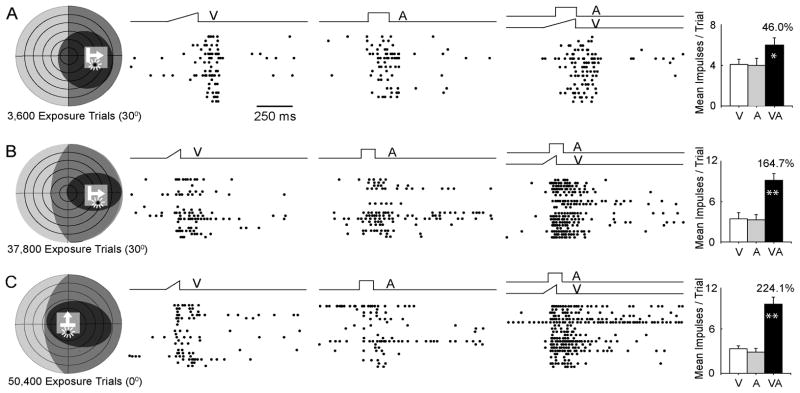

To test this possibility, Yu et al. (2010) raised animals from birth to maturity in darkness and then provided them with spatiotemporally concordant visual-auditory stimuli during daily exposure periods (Fig. 10). Once again, the animals were anesthetized during these exposure periods. Relatively quickly, SC neurons began to show multisensory integration capabilities, and the magnitude of these integrated responses increased over time until it reached the level seen in normal adults (Yu et al., 2010). Of particular interest was the speed of acquisition. It was far more rapid than in normally reared animals, suggesting that much of the delay in normal maturation reflects the development of the neural architecture that encodes these experiences. Interestingly, with only a few exceptions, only those neurons whose receptive fields both encroached on the exposure site acquired this capability. This finding indicates that the neuron’s cross-modal inputs had to be activated together for the experience to have an influence; that is, the influence of experience did not generalize across the population of neurons. Within a given neuron, however, the experience did generalize to other overlapping areas of the receptive fields, even those that did not exactly correspond to the exposure site. It is not clear from these observations whether this is a general finding or one specific to the exact stimuli and stimulus configurations used to initiate the acquisition of multisensory integration (e.g., the precise spatiotemporal relationships of the exposure stimuli). If acquisition does depend on such specific conditions, this may explain why cats given chronic cortical deactivation do not develop multisensory integration capabilities even as young adults, and why humans with congenital cataracts who have undergone corrective surgery do not immediately develop this capacity (Putzar et al., 2007). Though seemingly reasonable, this supposition requires empirical validation.

Fig. 10. Exposure to spatiotemporally concordant visual-auditory stimuli leads to the maturation of multisensory integration capabilities in dark reared animals.

Shown are the receptive fields, exposure sites, and responses of 3 SC neurons (A–C). Left: receptive fields (visual = black, auditory = gray) are shown on schematics of visual-auditory space. The numbers below refer to the number of cross-modal exposure trials provided before the neuron’s multisensory integration capability was tested. The exposure site (0° or 30°) is also marked on the schematic by a light gray square. Both receptive fields of each neuron overlapped the exposure site. Middle: each neuron responded to the cross-modal stimuli with an integrated response that exceeded the most robust unisensory response and, in two of the three cases, exceeded their sum. Right: the summary bar graphs compare the average unisensory and multisensory responses. From Yu et al. (2010).
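The two criteria in the Fig. 10 caption (exceeding the most robust unisensory response, and exceeding the sum of the unisensory responses) can be expressed as a small calculation. The sketch below assumes mean impulses per trial as the response measure and uses the percentage enhancement index common in this literature, (CM − SMmax) / SMmax × 100, where CM is the multisensory response and SMmax the largest unisensory response. The function name and the toy numbers are hypothetical, and the sketch omits the trial-by-trial statistical test that a claim of significant enhancement would require.

```python
def classify_multisensory(visual: float, auditory: float, multisensory: float) -> dict:
    """Compare a mean multisensory response with its unisensory components.

    Inputs are mean impulses per trial. The enhancement index is the percentage
    change relative to the most robust unisensory response. No statistical test
    is applied here; a real analysis would test significance across trials.
    """
    best = max(visual, auditory)
    if best <= 0:
        raise ValueError("the most robust unisensory response must be positive")
    return {
        "enhancement_pct": 100.0 * (multisensory - best) / best,
        "exceeds_best_unisensory": multisensory > best,
        "superadditive": multisensory > visual + auditory,
    }


# Toy numbers (not data from Yu et al., 2010).
print(classify_multisensory(4.0, 3.0, 9.0))
# {'enhancement_pct': 125.0, 'exceeds_best_unisensory': True, 'superadditive': True}
print(classify_multisensory(4.0, 3.0, 6.0))
# {'enhancement_pct': 50.0, 'exceeds_best_unisensory': True, 'superadditive': False}
```

The second call corresponds to the case in which a response is enhanced relative to the best unisensory response but does not exceed the unisensory sum.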

The continued plasticity of multisensory integration into adulthood also suggests that its characteristics may adapt to changes in environmental circumstances, specifically, to changes in cross-modal statistics. This is a promising issue for future exploration. If it is not too late to acquire this fundamental capacity in late childhood or adulthood, it may be possible to ameliorate the dysfunctions in this capacity induced by early deprivation resulting from congenital visual and/or early hearing impairments. A better understanding of the requirements for its acquisition may lead to better rehabilitative strategies. It may also be possible to significantly enhance the performance of people with normal developmental histories, especially in circumstances in which the detection and localization of events is of critical importance.

Acknowledgments

The research described here was supported in part by NIH grants NS36916 and EY016716.

Reference List

- Alvarado JC, Rowland BA, Stanford TR, Stein BE. A neural network model of multisensory integration also accounts for unisensory integration in superior colliculus. Brain Res. 2008. doi: 10.1016/j.brainres.2008.03.074.

- Alvarado JC, Stanford TR, Rowland BA, Vaughan JW, Stein BE. Multisensory integration in the superior colliculus requires synergy among corticocollicular inputs. J Neurosci. 2009;29:6580–6592. doi: 10.1523/JNEUROSCI.0525-09.2009.

- Alvarado JC, Stanford TR, Vaughan JW, Stein BE. Cortex mediates multisensory but not unisensory integration in superior colliculus. J Neurosci. 2007;27:12775–12786. doi: 10.1523/JNEUROSCI.3524-07.2007.

- Angelaki D, Gu Y, Deangelis G. Visual and vestibular cue integration for heading perception in extrastriate visual cortex. J Physiol. 2010. doi: 10.1113/jphysiol.2010.194720.

- Behan M, Appell PP. Intrinsic circuitry in the cat superior colliculus: projections from the superficial layers. J Comp Neurol. 1992;315:230–243. doi: 10.1002/cne.903150209.

- Bell AH, Meredith MA, Van Opstal AJ, Munoz DP. Crossmodal integration in the primate superior colliculus underlying the preparation and initiation of saccadic eye movements. J Neurophysiol. 2005;93:3659–3673. doi: 10.1152/jn.01214.2004.

- Burnett LR, Stein BE, Chaponis D, Wallace MT. Superior colliculus lesions preferentially disrupt multisensory orientation. Neuroscience. 2004;124:535–547. doi: 10.1016/j.neuroscience.2003.12.026.

- Burnett L, Stein BE, Wallace MT. Ibotenic acid lesions of the superior colliculus disrupt multisensory orientation behaviors. 2000. p. 1220.

- Calvert GA, Spence C, Stein BE. The Handbook of Multisensory Processes. Cambridge, MA: MIT Press; 2004.

- Carriere BN, Royal DW, Perrault TJ, Morrison SP, Vaughan JW, Stein BE, Wallace MT. Visual deprivation alters the development of cortical multisensory integration. J Neurophysiol. 2007;98:2858–2867. doi: 10.1152/jn.00587.2007.

- Edwards SB, Ginsburgh CL, Henkel CK, Stein BE. Sources of subcortical projections to the superior colliculus in the cat. J Comp Neurol. 1979;184:309–329. doi: 10.1002/cne.901840207.

- Ernst MO, Banks MS. Humans integrate visual and haptic information in a statistically optimal fashion. Nature. 2002;415:429–433. doi: 10.1038/415429a.

- Frens MA, Van Opstal AJ. Visual-auditory interactions modulate saccade-related activity in monkey superior colliculus. Brain Res Bull. 1998;46:211–224. doi: 10.1016/s0361-9230(98)00007-0.

- Fuentes-Santamaria V, Alvarado JC, McHaffie JG, Stein BE. Axon morphologies and convergence patterns of projections from different sensory-specific cortices of the anterior ectosylvian sulcus onto multisensory neurons in the cat superior colliculus. Cereb Cortex. 2009;19:2902–2915. doi: 10.1093/cercor/bhp060.

- Fuentes-Santamaria V, Alvarado JC, Stein BE, McHaffie JG. Cortex contacts both output neurons and nitrergic interneurons in the superior colliculus: direct and indirect routes for multisensory integration. Cereb Cortex. 2008;18:1640–1652. doi: 10.1093/cercor/bhm192.

- Gaither NS, Stein BE. Reptiles and mammals use similar sensory organizations in the midbrain. Science. 1979;205:595–597. doi: 10.1126/science.451623.

- Gillmeister H, Eimer M. Tactile enhancement of auditory detection and perceived loudness. Brain Res. 2007;1160:58–68. doi: 10.1016/j.brainres.2007.03.041.

- Gingras G, Rowland BA, Stein BE. The differing impact of multisensory and unisensory integration on behavior. J Neurosci. 2009;29:4897–4902. doi: 10.1523/JNEUROSCI.4120-08.2009.

- Gori M, Del Viva M, Sandini G, Burr DC. Young children do not integrate visual and haptic form information. Curr Biol. 2008;18:694–698. doi: 10.1016/j.cub.2008.04.036.

- Grantyn A, Grantyn R. Generation of grouped discharges by tectal projection cells. Arch Ital Biol. 1984;122:59–71.

- Hartline PH, Kass L, Loop MS. Merging of modalities in the optic tectum: infrared and visual integration in rattlesnakes. Science. 1978;199:1225–1229. doi: 10.1126/science.628839.

- Huerta MF, Harting JK. The Mammalian Superior Colliculus: Studies of Its Morphology and Connections. In: Vanegas H, editor. Comparative Neurology of the Optic Tectum. Plenum Publishing Corporation; 1984. pp. 687–773.

- Jiang W, Jiang H, Rowland BA, Stein BE. Multisensory orientation behavior is disrupted by neonatal cortical ablation. J Neurophysiol. 2007;97:557–562. doi: 10.1152/jn.00591.2006.

- Jiang W, Jiang H, Stein BE. Two corticotectal areas facilitate multisensory orientation behavior. J Cogn Neurosci. 2002;14:1240–1255. doi: 10.1162/089892902760807230.

- Jiang W, Jiang H, Stein BE. Neonatal cortical ablation disrupts multisensory development in superior colliculus. J Neurophysiol. 2006;95:1380–1396. doi: 10.1152/jn.00880.2005.

- Jiang W, Wallace MT, Jiang H, Vaughan JW, Stein BE. Two cortical areas mediate multisensory integration in superior colliculus neurons. J Neurophysiol. 2001;85:506–522. doi: 10.1152/jn.2001.85.2.506.

- Kadunce DC, Vaughan JW, Wallace MT, Benedek G, Stein BE. Mechanisms of within- and cross-modality suppression in the superior colliculus. J Neurophysiol. 1997;78:2834–2847. doi: 10.1152/jn.1997.78.6.2834.

- Kadunce DC, Vaughan JW, Wallace MT, Stein BE. The influence of visual and auditory receptive field organization on multisensory integration in the superior colliculus. Exp Brain Res. 2001;139:303–310. doi: 10.1007/s002210100772.

- Kao CQ, Stein BE, Coulter DA. Postnatal Development of Excitatory Synaptic Function in Deep Layers of SC. 1994.

- King AJ, Palmer AR. Integration of visual and auditory information in bimodal neurones in the guinea-pig superior colliculus. Exp Brain Res. 1985;60:492–500. doi: 10.1007/BF00236934.

- Larson MA, Stein BE. The use of tactile and olfactory cues in neonatal orientation and localization of the nipple. Dev Psychobiol. 1984;17:423–436. doi: 10.1002/dev.420170408.

- Lomber SG, Payne BR, Cornwell P. Role of the superior colliculus in analyses of space: superficial and intermediate layer contributions to visual orienting, auditory orienting, and visuospatial discriminations during unilateral and bilateral deactivations. J Comp Neurol. 2001;441:44–57. doi: 10.1002/cne.1396.

- McHaffie JG, Kruger L, Clemo HR, Stein BE. Corticothalamic and corticotectal somatosensory projections from the anterior ectosylvian sulcus (SIV cortex) in neonatal cats: an anatomical demonstration with HRP and 3H-leucine. J Comp Neurol. 1988;274:115–126. doi: 10.1002/cne.902740111.

- Meredith MA, Nemitz JW, Stein BE. Determinants of multisensory integration in superior colliculus neurons. I. Temporal factors. J Neurosci. 1987;7:3215–3229. doi: 10.1523/JNEUROSCI.07-10-03215.1987.

- Meredith MA, Stein BE. Interactions among converging sensory inputs in the superior colliculus. Science. 1983;221:389–391. doi: 10.1126/science.6867718.

- Meredith MA, Stein BE. Spatial factors determine the activity of multisensory neurons in cat superior colliculus. Brain Res. 1986;365:350–354. doi: 10.1016/0006-8993(86)91648-3.

- Meredith MA, Stein BE. Spatial determinants of multisensory integration in cat superior colliculus neurons. J Neurophysiol. 1996;75:1843–1857. doi: 10.1152/jn.1996.75.5.1843.

- Moschovakis AK, Karabelas AB. Observations on the somatodendritic morphology and axonal trajectory of intracellularly HRP-labeled efferent neurons located in the deeper layers of the superior colliculus of the cat. J Comp Neurol. 1985;239:276–308. doi: 10.1002/cne.902390304.

- Neil PA, Chee-Ruiter C, Scheier C, Lewkowicz DJ, Shimojo S. Development of multisensory spatial integration and perception in humans. Dev Sci. 2006;9:454–464. doi: 10.1111/j.1467-7687.2006.00512.x.

- Peck CK, Baro JA. Discharge patterns of neurons in the rostral superior colliculus of cat: activity related to fixation of visual and auditory targets. Exp Brain Res. 1997;113:291–302. doi: 10.1007/BF02450327.

- Putzar L, Goerendt I, Lange K, Rosler F, Roder B. Early visual deprivation impairs multisensory interactions in humans. Nat Neurosci. 2007;10:1243–1245. doi: 10.1038/nn1978.

- Royal DW, Carriere BN, Wallace MT. Spatiotemporal architecture of cortical receptive fields and its impact on multisensory interactions. Exp Brain Res. 2009;198:127–136. doi: 10.1007/s00221-009-1772-y.

- Spence C, Pavani F, Driver J. Spatial constraints on visual-tactile cross-modal distractor congruency effects. Cogn Affect Behav Neurosci. 2004;4:148–169. doi: 10.3758/cabn.4.2.148.

- Stanford TR, Stein BE. Superadditivity in multisensory integration: putting the computation in context. Neuroreport. 2007;18:787–792. doi: 10.1097/WNR.0b013e3280c1e315.

- Stein BE. Development of the superior colliculus. In: Annual Review of Neuroscience. Palo Alto, CA: Annual Reviews, Inc; 1984. pp. 95–125.

- Stein BE, Burr D, Constantinidis C, Laurienti PJ, Alex MM, Perrault TJ, Jr, Ramachandran R, Roder B, Rowland BA, Sathian K, Schroeder CE, Shams L, Stanford TR, Wallace MT, Yu L, Lewkowicz DJ. Semantic confusion regarding the development of multisensory integration: a practical solution. Eur J Neurosci. 2010;31:1713–1720. doi: 10.1111/j.1460-9568.2010.07206.x.

- Stein BE, Gaither NS. Sensory representation in reptilian optic tectum: some comparisons with mammals. J Comp Neurol. 1981;202:69–87. doi: 10.1002/cne.902020107.

- Stein BE, Huneycutt WS, Meredith MA. Neurons and behavior: the same rules of multisensory integration apply. Brain Res. 1988;448:355–358. doi: 10.1016/0006-8993(88)91276-0.

- Stein BE, Labos E, Kruger L. Sequence of changes in properties of neurons of superior colliculus of the kitten during maturation. J Neurophysiol. 1973;36:667–679. doi: 10.1152/jn.1973.36.4.667.

- Stein BE, Meredith MA. The merging of the senses. Cambridge, Mass: MIT Press; 1993.

- Stein BE, Stanford TR. Multisensory integration: current issues from the perspective of the single neuron. Nat Rev Neurosci. 2008;9:255–266. doi: 10.1038/nrn2331.

- Stein BE, Stanford TR, Ramachandran R, Perrault TJ, Jr, Rowland BA. Challenges in quantifying multisensory integration: alternative criteria, models, and inverse effectiveness. Exp Brain Res. 2009;198:113–126. doi: 10.1007/s00221-009-1880-8.

- Wallace MT, Carriere BN, Perrault TJ, Jr, Vaughan JW, Stein BE. The development of cortical multisensory integration. J Neurosci. 2006;26:11844–11849. doi: 10.1523/JNEUROSCI.3295-06.2006.

- Wallace MT, Hairston WD, Stein BE. Long-term effects of dark-rearing on multisensory processing. Program No. 511.6. 2001.

- Wallace MT, Meredith MA, Stein BE. Integration of multiple sensory modalities in cat cortex. Exp Brain Res. 1992;91:484–488. doi: 10.1007/BF00227844.

- Wallace MT, Meredith MA, Stein BE. Converging influences from visual, auditory, and somatosensory cortices onto output neurons of the superior colliculus. J Neurophysiol. 1993;69:1797–1809. doi: 10.1152/jn.1993.69.6.1797.

- Wallace MT, Perrault TJ, Jr, Hairston WD, Stein BE. Visual experience is necessary for the development of multisensory integration. J Neurosci. 2004;24:9580–9584. doi: 10.1523/JNEUROSCI.2535-04.2004.

- Wallace MT, Stein BE. Early experience determines how the senses will interact. J Neurophysiol. 2007;97:921–926. doi: 10.1152/jn.00497.2006.

- Wallace MT, Stein BE. Onset of cross-modal synthesis in the neonatal superior colliculus is gated by the development of cortical influences. J Neurophysiol. 2000;83:3578–3582. doi: 10.1152/jn.2000.83.6.3578.

- Wallace MT, Stein BE. Cross-modal synthesis in the midbrain depends on input from cortex. J Neurophysiol. 1994;71:429–432. doi: 10.1152/jn.1994.71.1.429.

- Wallace MT, Stein BE. Development of multisensory neurons and multisensory integration in cat superior colliculus. J Neurosci. 1997;17:2429–2444. doi: 10.1523/JNEUROSCI.17-07-02429.1997.

- Wallace MT, Stein BE. Sensory and multisensory responses in the newborn monkey superior colliculus. J Neurosci. 2001;21:8886–8894. doi: 10.1523/JNEUROSCI.21-22-08886.2001.

- Wallace MT, Wilkinson LK, Stein BE. Representation and integration of multiple sensory inputs in primate superior colliculus. J Neurophysiol. 1996;76:1246–1266. doi: 10.1152/jn.1996.76.2.1246.

- Wilkinson LK, Meredith MA, Stein BE. The role of anterior ectosylvian cortex in cross-modality orientation and approach behavior. Exp Brain Res. 1996;112:1–10. doi: 10.1007/BF00227172.

- Yu L, Rowland BA, Stein BE. Initiating the development of multisensory integration by manipulating sensory experience. J Neurosci. 2010;30:4904–4913. doi: 10.1523/JNEUROSCI.5575-09.2010.

- Yu L, Stein BE, Rowland BA. Adult plasticity in multisensory neurons: short-term experience-dependent changes in the superior colliculus. J Neurosci. 2009;29:15910–15922. doi: 10.1523/JNEUROSCI.4041-09.2009.

- Zahar Y, Reches A, Gutfreund Y. Multisensory enhancement in the optic tectum of the barn owl: spike count and spike timing. J Neurophysiol. 2009;101:2380–2394. doi: 10.1152/jn.91193.2008.