Abstract

In recent years, optimal control theory (OCT) has emerged as the leading approach for investigating neural control of movement and motor cognition in two complementary research lines: behavioral neuroscience and humanoid robotics. In both cases, there are general problems that need to be addressed, such as the “degrees of freedom (DoFs) problem,” the common core of production, observation, reasoning, and learning of “actions.” OCT, directly derived from engineering design techniques for control systems, quantifies task goals as “cost functions” and uses the sophisticated formal tools of optimal control to obtain desired behavior (and predictions). We propose an alternative, “softer” approach, the passive motion paradigm (PMP), that we believe is closer to the biomechanics and cybernetics of action. The basic idea is that actions (overt as well as covert) are the consequences of an internal simulation process that “animates” the body schema with the attractor dynamics of force fields induced by the goal and task-specific constraints. This internal simulation offers the brain a way to dynamically link motor redundancy with task-oriented constraints “at runtime,” hence solving the “DoFs problem” without explicit kinematic inversion and cost function computation. We argue that the function of such computational machinery is not restricted to shaping motor output during action execution but also extends to providing the self with information on the feasibility, consequence, understanding, and meaning of “potential actions.” In this sense, taking into account recent developments in neuroscience (motor imagery, simulation theory of covert actions, mirror neuron system) and in embodied robotics, PMP offers a novel framework for understanding motor cognition that goes beyond the engineering control paradigm provided by OCT. Therefore, the paper is at the same time a review of the PMP rationale, as a computational theory, and a perspective presentation of how to develop it for designing better cognitive architectures.

Keywords: optimal control theory, passive motion paradigm, synergy formation, covert actions, iCub, humanoid robots, cognitive architecture

“Nina: I want to be perfect.

Thomas: Perfection is not just about control. It’s also about letting go.”

A conversation between Nina Sayers and Thomas Leroy, the student and the dance teacher in the movie “Black Swan,” directed by Aronofsky (2010).

Putting the Issue into Context

Since the time of Nicholas Bernstein (1967) it has become clear that one of the central issues in neural control of movement is the “Degrees of Freedom (DoFs) Problem,” that is, the computational process by which the brain coordinates the action of a high-dimensional set of motor variables for carrying out the tasks of everyday life, which are typically described and learnt in a “task-space” of much lower dimensionality. Such dimensionality imbalance is usually expressed by the term “motor redundancy.” This means that the same movement goal can be achieved by an infinite number of combinations of the control variables which are equivalent as far as the task is concerned. But in spite of so much freedom, experimental evidence suggests that the motor system consistently uses a narrow set of solutions. Consider, for example, the task of reaching a point B in space, starting from a point A, in a given time T. In principle, the task could be carried out in an infinite number of ways, with regard to spatial aspects (hand path), timing aspects (speed profile of the hand), and recruitment patterns of the available DoFs. In contrast, it was found that the spatio-temporal structure of this class of movements is strongly stereotypical, whatever their amplitude, direction, and duration: the path is nearly straight (in the extrinsic, Cartesian space, not the intrinsic, articulatory space) and the speed profile is nearly bell-shaped, with symmetric acceleration and deceleration phases (Morasso, 1981; Abend et al., 1982). That this stereotypicity should be attributed to internal control mechanisms, not to biomechanical effects, is suggested by the observation of reaching movements in subjects with different types of neuromotor impairment. For example, in the case of ataxic patients, although they can still reach the target, spatio-temporal invariance is grossly violated: paths are strongly curved, with distortion patterns that change with the direction of movement, and the speed profile is asymmetric (Sanguineti et al., 2003).

Cybernetics of purposive actions

A movement, per se, is nothing unless it is associated with a goal, and this usually requires recruitment of a number of joints, in the context of an action. Recognizing the crucial importance of multi-joint coordination was really a paradigm shift from the classical Sherringtonian viewpoint (typically focused on single-joint movements) to the Bernsteinian quest for principles of coordination or synergy formation. A coordinated action is a class of movements plus a goal. Redundancy is a side-effect of this connection and thus redundancy is necessarily task-oriented, something to be managed “on-line” and “rapidly” updated as the action unfolds. As descriptive concepts, coordination and synergy are equivalent: both refer to the fact that, in the context of a given set of behaviors, systematic correlations between different effectors can be observed. However, such correlations are just an epiphenomenon, determined by a deeper structure, namely the underlying control mechanisms in the motor system that activate groups of effectors as single units at different moments of an action. In short, we suggest calling it the “cybernetics of purposive actions.” Generally speaking, we consider actions as operational modules in which descending motor patterns are produced together with the expectation of the (multimodal) sensory consequences. Mounting evidence accumulated in the last 30 years from different directions and points of view, such as the equilibrium point hypothesis (Asatryan and Feldman, 1965; Feldman, 1966; Bizzi et al., 1976, 1992; Feldman and Levin, 1995), the mirror neuron system (Di Pellegrino et al., 1992), motor imagery (Decety, 1996; Crammond, 1997; Grafton, 2009; Kranczioch et al., 2009; Munzert et al., 2009), motor resonance (Borroni et al., 2011), embodied cognition (Wilson, 2002; Gallese and Lakoff, 2005; Gallese and Sinigaglia, 2011; Sevdalis and Keller, 2011), etc., suggests that in order to understand the neural control of movement, the observation and analysis of overt movements is just the tip of the iceberg, because what really matters is the large computational basis shared by action production, action observation, action reasoning, and action learning.

Equilibrium point hypothesis – an extended view

Let us go back to the issue of stereotypicity of reaching movements: where is it coming from? A general concept that was in the background of many studies during the mid-1960s to mid-1980s was the equilibrium point hypothesis (EPH: Asatryan and Feldman, 1965; Feldman, 1966; Bizzi et al., 1976, 1992; Feldman and Levin, 1995). Its power comes from its ability to solve the “DoFs problem” by positing that posture is not directly controlled by the brain in a detailed way but is a “biomechanical consequence” of equilibrium among a large set of muscular and environmental forces. In this view, “movement” is a symmetry-breaking phenomenon, i.e., the transition from one equilibrium state to another. In the quest for motor modules, studies were carried out with intact and spinalized animals (Bizzi et al., 1991; Mussa Ivaldi and Bizzi, 2000; d’Avella and Bizzi, 2005; Roh et al., 2011) showing that motor behaviors may be constructed from muscle synergies, with the associated force fields organized within the brain stem and spinal cord and activated by descending commands from supraspinal areas. Muscle synergies were also shown to be correlated with the control of task-related variables, e.g., end-point kinematics or kinetics, displacement of the center of pressure (Ivanenko et al., 2003; Torres-Oviedo et al., 2006). Using techniques from control theory, Berniker et al. (2009) proposed a design of a low-dimensional controller for a frog hind limb model that balances the advantages of exploiting a system’s natural dynamics with the need to accurately represent the variables relevant for task-specific control. They demonstrated that the low-dimensional controller is capable of producing movements without substantial loss of either efficacy or efficiency, hence providing support for the viability of the muscle synergy hypothesis and the view that the CNS might use such a strategy to produce movement “simply and effectively.”

We emphasize that the additivity of the muscle synergies is ultimately made possible by the additivity of the underlying force fields. In the classical view of EPH, the attractor dynamics that underlies reaching movements is based on the elastic properties of the skeletal neuromuscular system and its ability to store/release mechanical energy. However, this may not be the only possibility. The discovery of motor imagery and the strong similarity of the recorded neural patterns in overt and covert movements suggest that attractor dynamics and the associated force fields may not be uniquely determined by physical properties of the neuromuscular system but may arise as well from “similar” neural dynamics due to interaction among brain areas that are active in both situations. In this sense, the original EPH viewpoint can be extended by positing that cortico-cortical, cortico-subcortical, and cortico-cerebellar circuits associated with synergy formation may also be characterized by similar attractor mechanisms that cooperate in shaping flexible behaviors of the body schema in the context of ever-changing environmental interactions. The proposed PMP framework goes in this direction.

On the other hand, it is still an open question whether or not the motor system represents equilibrium trajectories (Karniel, 2011). Many motor adaptation studies, starting with the seminal paper by Shadmehr and Mussa-Ivaldi (1994), demonstrate that equilibrium points or equilibrium trajectories per se are not sufficient to account for adaptive motor behavior, but this does not rule out the existence of neural mechanisms or internal models capable of generating equilibrium trajectories. Rather, as suggested by Karniel (2011), such findings should prompt research to shift from the lower level analysis of reflex loops and muscle properties to the level of internal representations and the structure of internal models. This is indeed the motivation and the purpose of our proposal: to model the posited internal models in terms of an extension of the EPH.

Optimal control theory

The first attempt to describe in mathematical terms the process by which the brain singles out a unique spatio-temporal pattern for a reaching task among infinite possible solutions was made by Flash and Hogan (1985), in the framework of a classical engineering design technique: optimal control theory (OCT). The general idea is that in order to design the best possible controller of a (robotic/human) system, capable of carrying out a prescribed task, one should first define a “cost function,” i.e., a mathematical combination of the control variables that yields a single number (the “cost”). This function is generally composed of two parts: a part that measures the “distance” of the system from the goal and a part (regularization term) that encodes the required “effort.” The design is then reduced to the computation of the control variables that minimize the cost function, thus finding the best possible trade-off between accuracy and effort. In the case of Flash and Hogan (1985), the regularization term was the “integrated jerk” and they showed that the solution of such a minimization task was indeed consistent with the spatio-temporal invariances found by Morasso (1981). Other simulation studies found similar results by choosing different types of cost functions, such as “integrated torque change” (Uno et al., 1989), “minimum end-point variance” (Harris and Wolpert, 1998), “minimum object crackle” (Dingwell et al., 2004), and “minimum acceleration criterion” (Ben-Itzhak and Karniel, 2008). In this line of research, optimal control concepts were used for deriving off-line control patterns, to be employed in feed-forward control schemes. A later development (Todorov and Jordan, 2002; Todorov, 2004) suggested using an extension of OCT that incorporates sensory feedback in the computational architecture.
In this closed-loop control technique, a block named “Control Policy” generates a stream of motor commands that optimize the pre-defined cost function on the basis of a current estimate of the “state variables”; this estimate integrates in an optimal way (by means of a “Kalman filter”) feedback information (coming from delayed and noise-corrupted sensory signals) with a prediction of the state provided by a “forward model” of the system’s dynamics, driven by an “efference copy” of the motor commands. One of the most attractive features of this formulation, in addition to its elegance and apparent simplicity, is that it blurs the difference between feed-forward and feedback control because the control policy governs both. Recent developments show that OCT has gradually emerged as a powerful theory for interpreting a range of motor behaviors (Scott, 2004; Chhabra and Jacobs, 2006; Li, 2006; Shadmehr et al., 2010), online movement corrections (Saunders and Knill, 2004; Liu and Todorov, 2007), structure of motor variability (Guigon et al., 2008a; Kutch et al., 2008), Fitts’ law and control of precision (Guigon et al., 2008b) among others. At the same time, the framework has also been applied for controlling anthropomorphic robots (Nori et al., 2008; Ivaldi et al., 2010; Mitrovic et al., 2010; Simpkins et al., 2011).
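As an illustration of the cost-function approach described above, the following minimal Python sketch evaluates the closed-form fifth-order polynomial that minimizes integrated jerk for a rest-to-rest, point-to-point movement (Flash and Hogan, 1985). The function name `minimum_jerk`, the start and end points, and the sampling are illustrative assumptions, not taken from the original studies.

```python
import numpy as np

def minimum_jerk(x_a, x_b, duration, n_samples=101):
    """Closed-form minimum-jerk trajectory from x_a to x_b (rest to rest)."""
    t = np.linspace(0.0, duration, n_samples)
    s = t / duration                                   # normalized time in [0, 1]
    shape = 10 * s**3 - 15 * s**4 + 6 * s**5           # smooth ramp from 0 to 1
    shape_dot = (30 * s**2 - 60 * s**3 + 30 * s**4) / duration
    x_a = np.asarray(x_a, dtype=float)
    x_b = np.asarray(x_b, dtype=float)
    position = x_a + np.outer(shape, x_b - x_a)        # straight path in task space
    velocity = np.outer(shape_dot, x_b - x_a)
    return t, position, velocity

# Illustrative reach of 0.5 m in 1 s (values chosen for the example only)
t, pos, vel = minimum_jerk([0.0, 0.0], [0.3, 0.4], duration=1.0)
speed = np.linalg.norm(vel, axis=1)                    # bell-shaped speed profile
```

In this sketch the path is a straight segment and the speed profile is symmetric, peaking at mid-movement at 1.875 times the mean speed, which matches the qualitative invariances of reaching movements mentioned in the text.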

Open challenges in OCT

A basic challenge within this approach is to derive the optimal control signal for non-linear time-varying systems, given a specific cost function and assumptions as to the structure of the noise. It is well known that this process comes with heavy computational costs and requires challenging mathematical contortions to solve even the simplest of the linear control problems (Bryson, 1999; Scott, 2004). Recent reformulations (Todorov, 2009) attempt to specifically address this topic by using concepts from statistical inference, thereby reducing the computation of the optimal “cost to go” function to a linear problem. At the same time, how these formal methods can be implemented through distributed neural networks has been questioned by numerous authors (Scott, 2004; Todorov, 2006; Guigon, 2011). A seemingly unrelated issue that is also worth mentioning here concerns the relationship between posture and movement. OCT-based approaches generally speak about “goal directed” movements and say very little about the integration (and interference) between posture and movement (Ostry and Feldman, 2003; Guigon et al., 2007) in an acting organism. We believe all these issues are in fact related to the lack of consideration of the characteristics of the underlying neuromuscular system that ultimately generates movement.

Optimal control theory is a sophisticated motor control model directly derived from engineering “servo” theory, extended by integrating internal models and predictors. The “fact” is that such engineering paradigms were designed for high bandwidth, inflexible, consistent systems with precision sensors. The “difficulty” lies in adapting these models to the typical biological situation, characterized by low bandwidth, high transmission delays, variable/flexible behavior, and noisy sensors and actuators. In contrast, evolution has led biological systems to establish “soft” mechanisms that “counteract” these factors and yet produce robust, flexible behaviors. Motor control arises from the interplay between processes at both the neural and musculoskeletal levels. Although it is generally believed that the neural level has a dominant role in the control of movements, there is evidence that the mechanics of moving limbs in interaction with the environment can also contribute to control (Chiel and Beer, 1997; Nishikawa et al., 2007). We believe OCT-based approaches that begin with the basic assumption that behavior can be understood by minimization of a cost function are too general and do very little to exploit specific properties of the system they intend to control. That such techniques can be applied to a wide range of problems, from “animal foraging” to “national policy” making, speaks rather to the power of formal mathematical methods. However, when applied to specific problems like coordination of movement in humans or humanoids, it may be possible to simplify the computational machinery by taking into account the properties and constraints of the physical system that is being coordinated (such as stiffness, reflexes, and local distributed processing/learning). This may in turn endow the computational model with greater flexibility, scalability, and robustness.

Optimality entails the choice of a cost function, which indicates a quantity to minimize. The nature of the cost function is a highly debated issue. Part of the confusion arises from the fact that all the proposed cost functions (jerk, energy, torque change, among several others) make similar predictions on basic qualitative characteristics of movement, e.g., trajectories, velocity profiles (Flash and Hogan, 1985; Uno et al., 1989; Harris and Wolpert, 1998; Todorov and Jordan, 2002; Guigon et al., 2007). Yet a thorough quantitative analysis that could discriminate among them is in general lacking. In the standard formulation of OCT, the cost for being in a state and the probability of state transition depending on the action are explicitly given (Doya, 2009). However, in many realistic problems, such costs and transitions are not known a priori. Thus, we have to identify them before applying OCT or learn to act based on past experiences of costs and transitions (e.g., using reinforcement learning techniques). Similar difficulties also occur in the robotic version of the “DoFs problem” because, for robots interacting with unstructured environments, it is difficult to identify and carefully craft a cost function that may promote the emergence/maturation of purposive, intelligent behavior. This is relevant if we want to go “beyond” reach/grasp movements to more complex manipulation tasks like tool use, which in fact “begins” once an object of interest is reached and grasped. It has recently been demonstrated that it may be possible to learn the desirability function without explicit knowledge of the costs and transitions using “Z-learning” (Todorov, 2009), which has also been shown to converge considerably faster than the popular “Q-learning” (Watkins and Dayan, 1992). But as Doya (2009) suggests, such learning may be trivial for examples like walking on grid-like streets, but may turn out to be very complicated for cases like shifting the body posture by activating several DoFs.

Coming to the topic of redundancy, optimal control can be considered a solution for such problems: by minimizing the norm of the control signal, a pseudo-inverse can be used to replace the inverse model block in a non-invertible redundant system. However, a central issue that still remains to be understood is how the brain uses different solutions under different circumstances (Karniel, 2011). Multiple internal models as proposed by different authors (Wolpert and Kawato, 1998; Haruno et al., 2001; Demiris and Khadhouri, 2006) might be the key to representing multiple solutions to the same goal. Nevertheless, the criterion for selecting one of the multiple solutions under various cases is open for future research. This goes to the contentious issue of “sub-optimality.” The issue of sub-optimality in motor planning and the role of “motor memory” in consolidating the choice of a suboptimal strategy has been recently addressed by Ganesh et al. (2010), by showing the role of motor memory in the local minimization of task-specific variables. Zenzeri et al. (2011) have addressed this issue in relation to bimanual stabilization of an unstable task. The ability of expert users to switch between control strategies with strongly different cost functions was explored recently by Kodl et al. (2011), who showed that in suitable behavioral conditions subjects may randomly select from several available motor plans to perform a task. Generally speaking, the investigation of tasks that attempt to address activities of daily life, rather than artificial lab experiments, shows that the traditional approach to motor control, in the framework of a single plan, characterized by regular patterns related to the minimum of a cost function, can only offer a narrow view of the issue. In contrast, what is needed is a mechanism to hierarchically structure and modulate motion plans “on-line,” in a multi-referential framework, so that goals and constraints can be mixed in a variety of task-related reference systems.
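The pseudo-inverse resolution of redundancy mentioned above can be sketched as follows. This is a minimal illustration of the minimum-norm idea, not a reconstruction of any cited model; the planar three-link arm, its link lengths, and all numerical values are hypothetical.

```python
import numpy as np

LINK_LENGTHS = np.array([0.3, 0.25, 0.15])   # hypothetical planar 3-link arm (m)

def jacobian(q):
    """2x3 Jacobian of the planar end-effector position w.r.t. the joint angles."""
    c = np.cumsum(q)                          # absolute orientation of each link
    J = np.zeros((2, len(q)))
    for j in range(len(q)):
        J[0, j] = -np.sum(LINK_LENGTHS[j:] * np.sin(c[j:]))
        J[1, j] = np.sum(LINK_LENGTHS[j:] * np.cos(c[j:]))
    return J

q = np.array([0.4, 0.6, 0.5])                 # current joint angles (rad)
x_dot = np.array([0.05, -0.02])               # desired end-effector velocity (m/s)

J = jacobian(q)
J_pinv = np.linalg.pinv(J)                    # Moore-Penrose pseudo-inverse
q_dot_min = J_pinv @ x_dot                    # minimum-norm joint velocity

# Any null-space motion can be added without changing the end-effector velocity
null_projector = np.eye(3) - J_pinv @ J
q_dot_alt = q_dot_min + null_projector @ np.array([0.2, -0.1, 0.3])
```

Both `q_dot_min` and `q_dot_alt` produce the same end-effector velocity, but the first has the smaller norm. The sketch makes the open question concrete: the pseudo-inverse singles out one solution, while the choice among the remaining ones has to come from somewhere else.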

All this is not to say that optimal control concepts are not relevant for addressing motor control and synergy formation in humans and humanoid robots, not to mention the successful application of optimization techniques and Bayesian modeling to multisensory and sensorimotor integration (Ernst and Banks, 2002; Kording and Wolpert, 2004; Stevenson et al., 2009). The point is that most studies on the application of OCT to motor control were aimed at global optimization, where subjects were supposed to search for the unique optimal solution for the given task, and the issue of sub-optimality, if considered at all, was limited to addressing incomplete convergence to the unique optimum (Izawa et al., 2008). In contrast, real life tasks that require skilled control of tools in a variable, partially unknown environment are likely to require the ability to switch from one strategy to another in the course of an action, accepting suboptimal criteria in each phase of the action, provided that the overall performance satisfies the task requirements. In this sense, the existence of multiple optima and the ability of the subjects to access them is a key element of skilled behavior. At the same time, taking into account the properties and constraints of the physical (and musculoskeletal) system that is being coordinated can alleviate issues related to “computational cost,” posture–movement integration, local computing principles realized using distributed neural networks, and motor skill learning. The PMP framework, analyzed in the following sections, goes in this direction.

Passive motion paradigm: The general idea

An alternative to OCT (in both its feed-forward and feedback versions) as a general theory of synergy formation is the passive motion paradigm (PMP: Mussa Ivaldi et al., 1988). The focus of attention is shifted from cost functions to force fields. The basic idea can be formulated in qualitative terms as follows: the process by which the brain determines the distribution of work across a redundant set of joints, when the end-effector is assigned the task of reaching a target point in space, can be represented as an “internal simulation process” that calculates how much each joint would move if an externally induced force (i.e., the goal) pulled the end-effector by a small amount toward the target. This internal simulation in turn causes the incremental elastic reconfiguration of the internal body schema involved in generating the action, by disseminating the force field across the kinematic chain (more generally, the task-specific kinematic graph) which characterizes the articulated structure of the human or robot. The mechanism is labeled “passive,” in line with the EPH, because the equilibrium point is not explicitly specified by the brain. Instead, the brain just contributes to the activation of “task-related” force fields. When motor commands obtained by this process of internal simulation are actively transmitted to the actuators, the robot will reproduce the same motion.

Considering the mounting evidence from neuroscience in support of common neural substrates being activated during both “real and imagined” movements (Jeannerod, 2001; Kranczioch et al., 2009; Munzert et al., 2009; Thirioux et al., 2010), it is not unreasonable to posit that also real, overt actions are the results of an “internal simulation” as in PMP. We further posit that this internal simulation is a result of the interactions between the “internal body model” and the attractor dynamics of force fields induced by the goal and task-specific constraints involved during the performance of an “Action.” If the mental simulation converges (i.e., goal is realized), then the movement can be executed. Otherwise, convergence failure may play the role of a crucial internal event, namely the starting point to break the action plan into a sequence of sub-actions, by recruiting additional DoFs, affordances of tools that may allow the realization of the goal etc. In this sense, PMP can be considered a generalization of EPH from action execution (“overt actions”) to action planning and reasoning about actions (“covert actions”).

Passive motion paradigm: The computational formulation

Let q be the set of all the DoFs that characterize the body of a human or humanoid, possibly extended by including the DoFs of a manipulated object (like a tool). Any given task identifies one or more “end-effectors” and is defined by the motion x(t) of one end-effector with respect to some reference point. The natural reference frame for x(t) is linked to the environmental (extrinsic) space and not the joint (intrinsic) space. Moreover, the dimensionality of q is generally much greater than the dimensionality of x.

The basic idea of the PMP is to express the goal of an action (e.g., “reach a target point P”) by means of an attractive force field, centered in the target position (the target is the “source” of the field) and apply it to the body schema, in particular to the task-related end-effector. The whole body schema will be displaced from the initial equilibrium configuration to a final configuration where the force is null (when the end-effector reaches the target). This relaxation process, from one equilibrium state x_A = f(q_A) to another one x_B = f(q_B), is analogous to the mechanism of coordinating the motion of a wooden marionette by means of strings attached to the terminal parts of the body: the distribution of the motion among the joints is the “passive” consequence of the virtual forces applied to the end-effectors and the “compliance” of different joints.

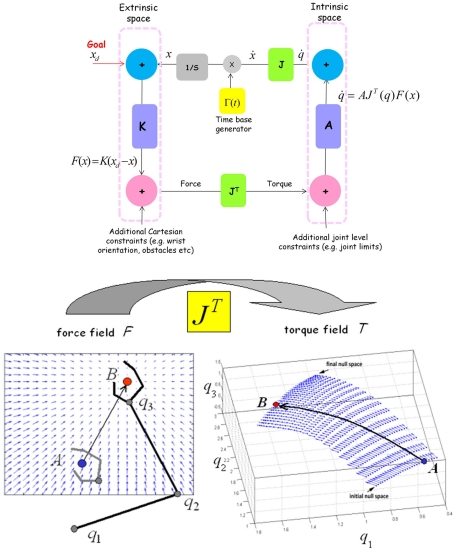

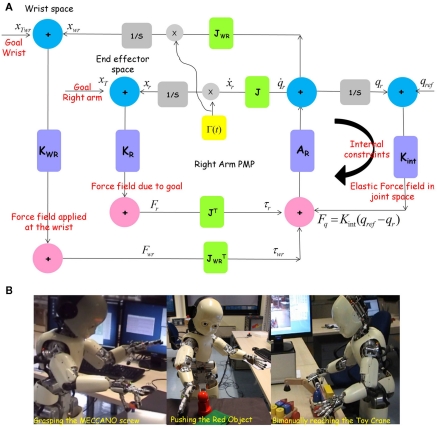

It is possible to express the dynamics of PMP by means of a graph as in Figure 1 (top panel). In mathematical terms the PMP can be expressed by the following equations:

Figure 1.

Top panel. Basic kinematic network that implements the passive motion paradigm for a simple kinematic chain (as the arm). In this simple case, the network is grouped into two motor spaces (extrinsic or end effector space and intrinsic or arm joint space). Each motor space consists of a generalized displacement node (blue) and a generalized force node (pink). Vertical connections (purple) denote impedances (K: Stiffness, A: Admittance) in the respective motor spaces and horizontal connections denote the geometric relation between the two motor spaces represented by the Jacobian (Green). The goal induces a force field that causes incremental elastic configurations in the network analogous to the coordination of a marionette with attached strings. The network also includes a time base generator which endows the system with terminal attractor dynamics: this means that equilibrium is not achieved asymptotically but in finite time. External and internal constraints (represented as other task-dependent force/torque fields) bias the path to equilibrium in order to take into account suitable “penalty functions.” This is a multi-referential system of action representation and synergy formation, which integrates a Forward and an Inverse Internal Model. Bottom panel. The figure illustrates the key element of the architecture of Figure 1 for solving the degrees of freedom problem, namely the mapping of the “force field,” defined in the extrinsic space and applied to the end-effector, into the corresponding “torque field,” defined in the intrinsic space and applied to the joints. The mapping is implemented by means of the transpose Jacobian matrix of the kinematic transformation. Dimensionality reduction is obtained implicitly by letting the internal model “slide” in the torque field. Each point of the trajectory in the extrinsic space corresponds to a whole manifold in the intrinsic space (the “null space” of the kinematic transformation). 
The equilibrium point in the force field corresponds to an equilibrium manifold in the torque field. The selection among the infinite number of possible targets is carried out implicitly by the combination of different force/torque fields.

| $F = K\,(x_T - x), \qquad T = J^{\top} F, \qquad \dot{q} = \Gamma(t)\, A\, T$ | (1) |

F is the force field, with intensity and shape determined by the matrix K. In the simplest case, K is proportional to an identity matrix and this corresponds to an isotropic field, converging to the target along straight flow lines. J is the Jacobian matrix of the kinematic mapping from q to x. This matrix is always well defined, whatever the degree of redundancy of the system. For humanoid robots, it can be easily computed analytically. In biological organisms, in which x and q are likely represented in a distributed manner, J can be learnt through “babbling” movements and represented by means of neural networks (Mohan and Morasso, 2007). An important property of kinematic chains is that while the Jacobian matrix maps elementary motions (or speed vectors) from the intrinsic to the extrinsic space, the transpose Jacobian maps forces (or force fields) from the extrinsic to the intrinsic space.
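The duality between the Jacobian (which maps joint speeds to end-effector speeds) and its transpose (which maps end-effector forces to joint torques) can be checked numerically. The sketch below is only an illustration under stated assumptions: the planar three-link arm, the link lengths, the stiffness value, and the helper names `forward_kinematics` and `jacobian` are hypothetical and not part of the original model description.

```python
import numpy as np

LINK_LENGTHS = np.array([0.3, 0.25, 0.15])   # hypothetical planar 3-link arm (m)

def forward_kinematics(q):
    """End-effector position x = f(q) of the planar arm."""
    c = np.cumsum(q)
    return np.array([np.sum(LINK_LENGTHS * np.cos(c)),
                     np.sum(LINK_LENGTHS * np.sin(c))])

def jacobian(q):
    """2x3 Jacobian of f(q), computed analytically."""
    c = np.cumsum(q)
    J = np.zeros((2, len(q)))
    for j in range(len(q)):
        J[0, j] = -np.sum(LINK_LENGTHS[j:] * np.sin(c[j:]))
        J[1, j] = np.sum(LINK_LENGTHS[j:] * np.cos(c[j:]))
    return J

q = np.array([0.2, 1.2, 1.0])                 # joint angles (rad)
x_target = np.array([0.05, 0.5])              # target position (m)
K = 10.0 * np.eye(2)                          # isotropic stiffness (illustrative value)

J = jacobian(q)
F = K @ (x_target - forward_kinematics(q))    # 2-D force field at the end-effector
torque = J.T @ F                              # 3-D torque field on the joints

q_dot = np.array([0.1, -0.2, 0.3])            # arbitrary joint velocity (rad/s)
x_dot = J @ q_dot                             # corresponding end-effector velocity
```

The power computed in the two spaces is the same, `F @ x_dot == torque @ q_dot` up to rounding, which is the virtual-work identity behind the transpose mapping. Note that no matrix inversion is involved in going from the two-dimensional force to the three-dimensional torque.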

The bottom panel of Figure 1 illustrates the process of mapping the task-oriented “force field” defined in the extrinsic space into a “torque field” in the intrinsic joint space: this is the crucial step in solving the DoFs problem because the former field generally has a much smaller dimensionality than the latter and still they are causally related in a flexible way. The dimensionality imbalance implies that each point in the extrinsic space (a given position of the task-selected end-effector) corresponds to a whole manifold in the intrinsic space, which is also known as the “null space” of the kinematic function x = f(q). In the example of Figure 1, this manifold is a curved line that contains all the possible joint configurations compatible with a given position of the end-effector. The shape of the torque field implicitly determines which configuration is chosen. A is a virtual admittance matrix that transforms the torque field into the degree of participation of each individual joint in the collective relaxation process. The fact that trajectories generated according to this mechanism tend to be straight is implicit in the shape of the force field and is not explicitly “programmed.”

Γ(t) is a time-varying gain, or time base generator, that implements “terminal attractor dynamics” (Zak, 1988). A terminal attractor is an equilibrium point which is reached in a specified, finite time, in contrast with the asymptotic behavior of standard attractor systems. Informally stated, the idea behind terminal attractor dynamics is similar to the temporal pressure posed by the deadline for a grant proposal submission. A month before the deadline, the temporal pressure has low intensity and thus the rate of document preparation is low. But the pressure builds up as the deadline approaches, in a markedly non-linear way up to a very sharp peak the night before the deadline, and settles down afterward. The technique was originally developed by Zak (1988) for associative memories and later adopted for the PMP both with humans and robots (Morasso et al., 1994, 1997, 2010; Tsuji et al., 2002; Tanaka et al., 2005; Mohan et al., 2009, 2011a). It should be remarked that the mechanism, in spite of its simplicity, is computationally very effective and can be applied to systems with attractor dynamics of any complexity. From the conceptual point of view, Γ(t) has the role of the GO-signal advocated by Bullock and Grossberg (1988) for explaining the dynamics of planned arm movements.
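The difference between terminal and asymptotic attractors can be illustrated with a scalar toy system. The sketch assumes one particular form of the time base generator, Γ(t) = ξ̇/(1 − ξ), where ξ is a minimum-jerk ramp from 0 to 1 over the movement duration; this form, the function names, and the cap on the integration step are assumptions made for illustration. For a scalar error e obeying ė = −Γ(t) e, this choice gives e(t) = e(0)(1 − ξ(t)), which vanishes exactly at the end of the movement.

```python
import numpy as np

def time_base_generator(t, duration, eps=1e-9):
    """Assumed form Gamma(t) = xi_dot / (1 - xi), with xi a minimum-jerk ramp."""
    s = np.clip(t / duration, 0.0, 1.0)
    xi = 10 * s**3 - 15 * s**4 + 6 * s**5
    xi_dot = 30 * s**2 * (1 - s)**2 / duration
    return xi_dot / np.maximum(1.0 - xi, eps)       # gain grows sharply near the deadline

def simulate(gain_fn, x0, x_target, duration, dt=1e-3):
    """Explicit Euler integration of x_dot = gain(t) * (x_target - x)."""
    x = x0
    for i in range(int(round(duration / dt))):
        # Capping the step at 1 is a numerical safeguard, not part of the model
        step = min(gain_fn(i * dt) * dt, 1.0)
        x += step * (x_target - x)
    return x

duration = 1.0
x_terminal = simulate(lambda t: time_base_generator(t, duration), 0.0, 1.0, duration)
x_asymptotic = simulate(lambda t: 5.0, 0.0, 1.0, duration)   # constant gain for comparison
```

With the time-varying gain the state reaches the target by the end of the prescribed duration, whereas with the constant gain a residual error of the order of exp(−5) remains at the same instant.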

Equation 1 expresses the “Inverse Internal Model” of the computational architecture that generates synergetic activations of all the joints q(t), to be sent to the motor controller. But this is only part of the machinery which is necessary for carrying out mental simulations of virtual and real actions. The missing part is a “Forward Internal Model,” driven by an efference copy of the flow of motor commands. This model generates a prediction of the trajectory of the end-effector which can be compared with the (fixed or moving) target in order to update the driving force field applied to the end-effector:

$$\dot{x} = J(q)\,\dot{q} \qquad (2)$$

With this prediction, the loop is closed, defining the PMP as an integrated, multi-referential system of action representation and synergy formation, with a Forward and an Inverse Internal Model.
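
The closed loop between the inverse and forward internal models can be illustrated on a toy problem. The sketch below is a hedged illustration, not the authors' code: it assumes a planar three-joint arm (redundant for a 2-D reaching task), an isotropic virtual stiffness, a diagonal admittance, and a time base generator of the assumed form Γ(t) = ξ̇/(1 − ξ). Link lengths, gains, and function names are hypothetical.

```python
import math

LINKS = (0.30, 0.25, 0.15)  # link lengths in metres (illustrative)

def forward(q):
    """Forward model: hand position x = f(q) of a planar 3-joint arm."""
    x = y = angle = 0.0
    for length, qi in zip(LINKS, q):
        angle += qi
        x += length * math.cos(angle)
        y += length * math.sin(angle)
    return x, y

def jacobian(q):
    """Rows of the 2 x 3 Jacobian dx/dq, returned as (row_x, row_y)."""
    angles, angle = [], 0.0
    for qi in q:
        angle += qi
        angles.append(angle)
    n = len(q)
    row_x = [-sum(LINKS[i] * math.sin(angles[i]) for i in range(j, n)) for j in range(n)]
    row_y = [sum(LINKS[i] * math.cos(angles[i]) for i in range(j, n)) for j in range(n)]
    return row_x, row_y

def gamma(t, tau, eps=1e-4):
    """Assumed time base generator: xi_dot / (1 - xi), regularized by eps."""
    s = min(max(t / tau, 0.0), 1.0)
    xi = 6 * s**5 - 15 * s**4 + 10 * s**3
    return (30 * s**2 * (1 - s)**2 / tau) / (1.0 - xi + eps)

def pmp_reach(q0, target, stiffness=200.0, admittance=(1.0, 1.0, 1.0), tau=1.0, dt=5e-5):
    """Relax the arm toward `target` without ever inverting the Jacobian."""
    q = list(q0)
    for k in range(int(round(tau / dt))):
        x, y = forward(q)                     # forward model: predicted hand position
        fx = stiffness * (target[0] - x)      # force field F = K (x_T - x)
        fy = stiffness * (target[1] - y)
        row_x, row_y = jacobian(q)
        g = gamma(k * dt, tau)
        for j in range(len(q)):
            torque = row_x[j] * fx + row_y[j] * fy    # torque field T = J^T F
            q[j] += dt * g * admittance[j] * torque   # dq = Gamma(t) * A * T * dt
    return q

q_start = (0.2, 1.2, 0.8)
goal = (0.35, 0.35)
q_end = pmp_reach(q_start, goal)
hand = forward(q_end)
```

With these illustrative values the hand should end close to `goal`, although no joint-space target is ever specified: the final joint configuration emerges from the relaxation itself.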

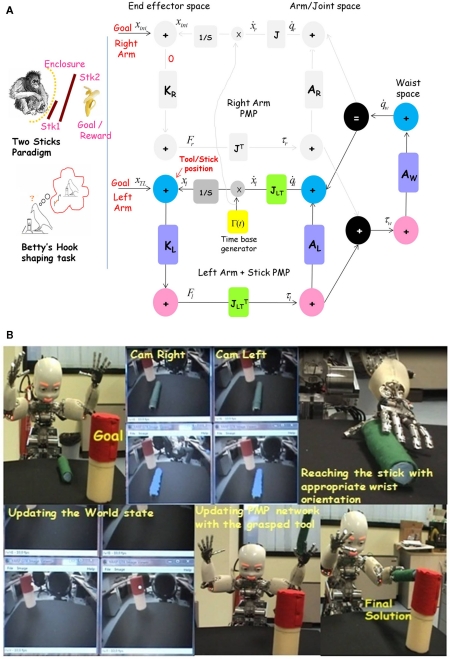

Task-specific PMP networks: Extracting general principles

The passive motion paradigm is a task-specific model. PMP networks have to be assembled on the fly based on the nature of the motor task and the body segment (and tool) chosen for its execution. We believe that runtime creation/modification of such networks is a fundamental operation in motor planning and action synthesis. In this section, we outline some general principles underlying the creation of task-specific PMP networks, in order to coordinate body/body + tool chains of arbitrary redundancy. At the same time, we also discuss how such a formulation can alleviate some of the open issues with the OCT approach mentioned in “Open Challenges in OCT.” We illustrate the central ideas using two examples: (1) a common day-to-day bimanual coordination task, namely controlling the steering wheel of a car (Figure 2), which captures both the modularity and the computational organization of the framework, and (2) whole upper body coordination in the baby humanoid iCub (Sandini et al., 2004), which captures implementation aspects of such a network (Figure 3) while coordinating a highly redundant body.

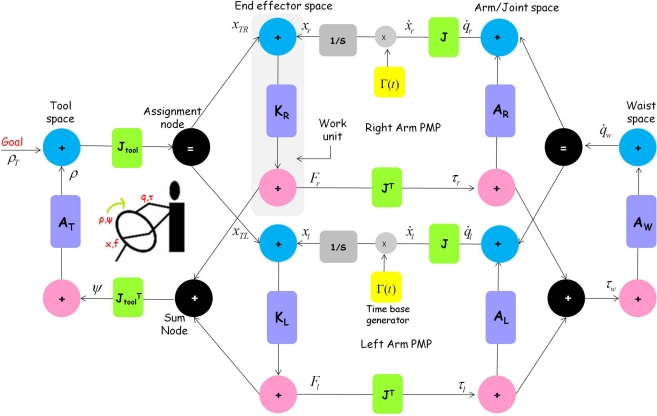

Figure 2.

Passive motion paradigm network for a common day-to-day bimanual task such as controlling the steering wheel of a car. Note that the basic PMP sub network (of Figure 1) is repeated for the right and the left arm. Since the goal is to coordinate bimanually a steering wheel, the network is grouped into the different motor spaces involved in this action, i.e., tool, hand, arm joint, and waist space. Each motor space consists of a displacement (blue) and force node (pink) grouped as a work unit. For example, the blue node in right hand PMP transmits the instantaneous position of the right hand, while the pink node transmits the force exerted by it. Vertical connections (purple) within each work unit denote the impedance, while horizontal connections (green) between two work units denote the geometric transformation between them (Jacobian: J). In this complex PMP network, there are two additional nodes, “sum” and “assignment,” that add or assign (forces or displacements) between different motor spaces. Also note that the resulting network is fully connected, with the connectivity arranged so that all transformations are “well posed.” Intuitively, as the goal pulls the tool tip, the end-effectors are being simultaneously pulled to respective positions so as to allow the tool to reach the goal. At the same time, the joints (in the two arms and waist) are being pulled to values that allow the two hands to reach positions that allow the tool to reach the goal. This process of incremental updating of every node in the network continues until the tool tip reaches the goal (and equivalently the force field in the network is 0). Also note that all computations are local in the sense that every element responds to the pull of the goal based on its own impedance and all these local contributions sum up to create the global synergy achieved by the network.

Figure 3.

Bimanual coordination task of reaching two objects at the same time. (A) PMP network for the upper body with two target goals and a single time base generator. The network includes three modules: (1) Right arm, (2) Left arm, (3) Waist. The dimensionality of JR and JL is 3 × 10 (this includes the seven DoFs of the arms and the three DoFs of the waist). The dimensionality of Aj is 7 × 7 and of AT is 3 × 3. The three sub-networks interact through a pair of nodes (“assignment” and “sum”) that allow the spread of the goal-related activation patterns. (B,C) Show the initial and the final posture of the robot and the two target objects. (D,E) Show the trajectories of the two end-effectors and the corresponding speed profiles (together with the output Γ(t) of the time base generator). (F) Clarifies the intrinsic degrees of freedom in the right arm-torso chain. (G) Shows the time course of the right-arm joint rotation patterns: J0–J2: joint angles of the Waist (yaw, roll, pitch); J3–J9: joint angles of the Right Arm (shoulder pitch/yaw/roll; elbow flexion/extension; wrist prono-supination/pitch/yaw).

Motor spaces

Consider the common task of bimanually controlling a steering wheel. One of the first things to observe is the diversity of descriptions that are plausible for any motor event. For example, we can describe the same task using a mono-dimensional steering wheel pattern or a 6-dimensional limb space pattern or a 7-dimensional joint rotation pattern or multi-dimensional muscle contraction patterns. Figure 2 gives an explicit PMP network to incrementally derive the 7-D joint rotation patterns for each arm from the 1-D steering wheel plan. Since any motor action can be described simultaneously in multiple motor spaces (tool, end-effector, joint, actuator), PMP networks are “multi-referential.” The type of motor spaces involved in any PMP relaxation depends on the task and body chain responsible for its execution. By default, for action generation using the upper body of a humanoid robot, there are three motor spaces: end effector, arm joints, and waist (see Figure 3A).

Work units

All motor spaces have a pair of generalized force and displacement vectors grouped together as a work unit (in all PMP networks, position nodes are shown in blue, force nodes are shown in pink). For example, x and q denote displacement vectors, i.e., position of the hand and rotation at the arm and waist space respectively; f and τ denote force vectors, i.e., force at the hand space and torques at the joint space, respectively. If a task involves use of a tool, the tool space is also represented similarly with a generalized force and displacement node. Hence, in Figure 2, ρ denotes a generalized displacement (rotation of the steering wheel) and ψ denotes a generalized force (i.e., the steering wheel torque). The scalar work (force × displacement) is the structural invariant across different motor spaces (thus the name work unit: WU). Hence, in PMP the invariance of energy under coordinate transformations (principle of virtual work) is used to relate entities in different motor spaces. The relaxation process achieved by PMP incrementally derives trajectories in all the nodes (force and displacement) of the participating WU’s. For example, in a PMP relaxation for a simple reaching task (like in Figure 3), we get four sets of trajectories (as a function of time): (1) trajectory of joint angles given by the position node in the joint space (arm and waist, see Figure 3G); (2) the resulting consequence, i.e., the trajectory of end-effectors given by the position node in end effector space (Figure 3D); (3) the trajectory of torques at the different joints (arm and waist), given by the force node in the joint space; (4) the resulting consequence, i.e., the trajectory of forces applied by the end effector given by the force node in the end effector space.

Connectivity and circularity

The next thing to observe is that all PMP networks (Figures 2 and 3A) are fully connected in the sense that any node can be reached from any other node. In other words, PMP networks are “circular.” The “goal” can be applied at any node in the network, based on the task. The connectivity allows the force fields induced by a goal to ripple across the whole network. As a simple example, if we deactivate the left arm and the waist space in Figure 3A and enter the network at the right arm end effector ($dx_r$) and exit at the right arm joint space ($dq_r$), we get the following rule for computing incremental joint angles: $dq_r = A_R J_R^T K_R\,dx_r$. The rules become more complex as additional motor spaces participate in the PMP relaxation.

Analogous to electrical circuits, connections in any PMP network are of two types: serial and parallel. In a serial connection, position vectors are added. For example, links are serially connected to form a limb. In a parallel connection, force vectors are added. For example, when we push an external object with both arms, the forces applied by the individual arms are added. In the steering task, the two hands are connected in parallel to the device (wheel), links are connected serially to form the two limbs, and muscles are connected in parallel to a link. The task device, tool or effector organ to which the “motor goal” is coupled is always the starting point to build the PMP network. From there we may enter different motor spaces in the body model of the actor, hence branching the PMP network into serial or parallel configurations down to directly controlled elements relevant for a particular task.

Branching nodes (+/=)

In complex kinematic structures, where there are several serial and parallel connections, two additional nodes, i.e., Sum (+) and Assignment (=) are used to “add or assign” displacements and forces from one motor space to another. For example, in Figure 3A the assignment node assigns the contribution of the waist (to the overall upper body movement toward a goal), to the right and left arm networks. On the other hand, the net torque seen at the waist is the “sum” of torques coming from the right and left arm PMP sub-networks (because of the individual force fields experienced by the right and left arms respectively). Sum and assignment nodes are dual in nature. If an assignment node appears in the displacement transformation between two WU, then a sum node appears in the force transformation between the same WU’s. This can be understood as a consequence of conservation of energy between two WU’s. Further, sum and assignment nodes can also appear at the interface between the body and a tool, in order to assign/sum forces and displacements from the external object to the end-effectors and vice versa (like in Figure 2).

Geometric causality

This is expressed by the Jacobian matrices that form the horizontal links in the PMP network. They connect two WU’s or motor spaces together. Whether it is a serial or parallel connection, the mapping from one motor space to another is generally “non-linear” and “irreversible.” This mapping can be linearized by considering small displacements (or velocities), whose representations in any two motor spaces are related by the Jacobian matrix: for example, $dx_r = J_R(q)\,dq_r$. Further, while the Jacobian determines the mapping of small displacements in one direction, the transpose Jacobian determines the dual relation among forces in the opposite direction (principle of virtual work). For example, in Figure 3A, the space Jacobians JR and JL map joint rotation patterns of the two arms and waist into displacements of the two hands, while the corresponding transpose Jacobians project disturbance forces F applied on the hands into corresponding joint torques. The tool Jacobian JT forms the interface between the body and the tool and represents the geometrical relationship between the tool and the concerned end-effector. While learning to use different tools, it is the tool Jacobians at the interface that are learnt. Based on the tool being coordinated, it is necessary to load the appropriate device Jacobian associated with it.

Elastic causality

This is expressed by the vertical links in the PMP network and is implemented by stiffness and admittance matrices. These links connect generalized force nodes to displacement nodes (or vice versa) in each WU. Hooke’s law of linear elasticity can be generalized to non-linear cases by considering differential variations: dF = K·dX and dX = A·dF, where K is the virtual stiffness and A is the virtual admittance. In the former case, effort is derived from position; whereas in the latter, position is derived from effort. For example, in Figure 3A, the virtual stiffness Ke determines the intensity and shape of the force field applied in the right and left hand networks. In the simplest case, K is proportional to the identity matrix and this corresponds to an isotropic field, converging to the goal target along straight flow lines (see Figure 1, bottom panel, and Figure 3D for the case of bimanual reaching). Curved trajectories (like in hand-written characters of different scripts) can be obtained by actively modulating (or learning) the appropriate values of the virtual stiffness (Mohan et al., 2011b).

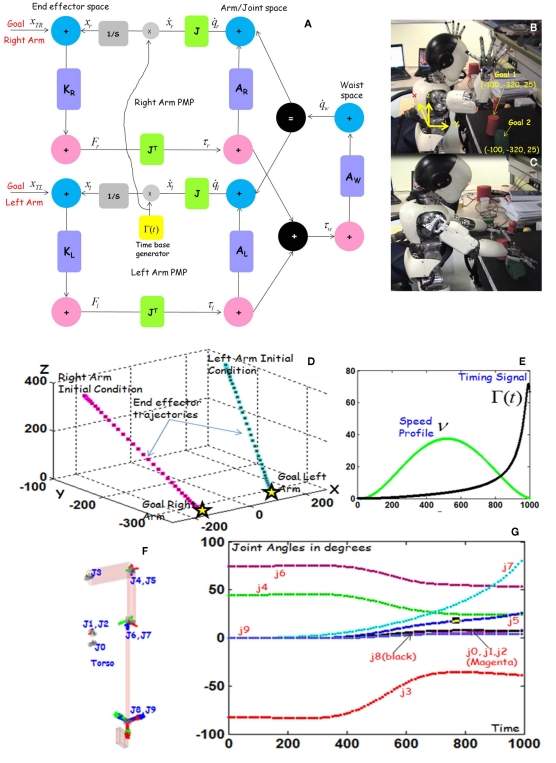

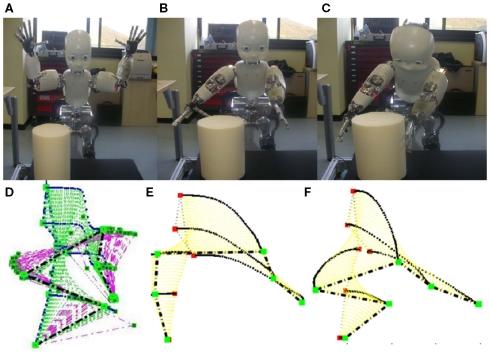

Role of admittance in the intrinsic space. In PMP networks, the effect of admittance is “local.” Every intrinsic element (for example, a joint in the arm) responds to the goal induced “force field” based on its own “local” admittance. Hence, it is not the precise values of the admittance of every joint, but the balance between them that affects the final solution achieved. This balance can be altered in a local and “task–specific” fashion. In normal conditions, we consider that all the participating joints are equally compliant. In this case, the admittance is an identity matrix (for a seven DoF arm, it is a 7 × 7 identity matrix). On the other hand, by locally modulating individual values, it is possible to alter the degree of participation of each joint in the coordinated movement while not affecting the solution at the end effector space (see Figure 1 bottom panel). For example, Figure 4A shows the initial condition with the goal being issued to reach the large cylinder (placed far away and asymmetrically with respect to the robot’s body) using both arms. Figure 4B shows the final solution when the admittance of the three DoF of the waist is reduced 10 times as compared to the two arms. Without the contributions of the additional DoF of the torso, it is not possible to bimanually reach the target. Figure 4C shows the solution when the waist admittance is made equal to the arms. In this case, note the contributions from all three DoFs of the torso (Figure 4B), hence enabling iCub to bimanually reach the cylinder successfully in this case. An alternative way to interpret this behavior is that, in the former case (Figure 4B), the force field induced by the goal did not propagate through the waist network. In other words, the propagation of the goal induced force field across different intrinsic elements of the body can be modified by altering their local admittance.
This relates to the issue of “grounding.” Since there are many possible kinematic chains that can be coordinated simultaneously in a complex human/humanoid body, based on the nature of the motor task it is necessary to identify the start and end points in the body schema between which the force fields generated by the goal will propagate, and beyond which the force fields generated by the goal will not propagate. Such grounding can be easily achieved by modulating the local admittance of intrinsic elements in a task-specific fashion. For example, if the waist admittance is very low, this is equivalent to grounding the network at the shoulders. In the steering wheel task the body is grounded at the waist. At the same time, additional DoFs can be “incrementally” recruited in the relaxation process based on the success/failure of the task.
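
The local effect of admittance can be made concrete with a single incremental update, dq = A·Jᵀ·F. The sketch below is illustrative only: the Jacobian entries, the force, and the helper names `pmp_step` and `participation` are hypothetical, and the admittance is assumed diagonal. It shows one step of the relaxation, not a complete movement, so it says nothing about whether a given target stays reachable.

```python
def pmp_step(jacobian_rows, admittance, force):
    """One incremental update dq = A * J^T * F, with a diagonal admittance A."""
    dq = []
    for j in range(len(admittance)):
        # Torque seen by joint j: j-th component of J^T F.
        torque = sum(row[j] * f for row, f in zip(jacobian_rows, force))
        # Each joint responds according to its own local admittance.
        dq.append(admittance[j] * torque)
    return dq

def participation(dq):
    """Share of each joint in the total absolute rotation of this step."""
    total = sum(abs(v) for v in dq)
    return [abs(v) / total for v in dq]

# Hypothetical 2 x 4 Jacobian: column 0 is a "waist" joint, columns 1-3 an "arm".
J = [[-0.40, -0.35, -0.20, -0.05],
     [0.50, 0.30, 0.10, 0.02]]
F = [1.0, 0.5]  # goal-induced force at the end-effector (arbitrary units)

equal = participation(pmp_step(J, [1.0, 1.0, 1.0, 1.0], F))
stiff_waist = participation(pmp_step(J, [0.1, 1.0, 1.0, 1.0], F))
```

Reducing the waist admittance tenfold shrinks the waist's share of the step, and the arm joints take up a correspondingly larger share. In the limit of a very small value, this amounts to grounding the chain at the next joint.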

Figure 4.

Effects of modulating the admittance in the intrinsic space on the final posture achieved through PMP relaxation. (A) Shows the initial condition with the goal being issued to reach the large cylinder (placed far away and asymmetrically with respect to the robot’s body) using both arms. (B) Shows the final solution when the admittance of the three DoF of the waist is reduced 10 times as compared to the two arms. Without the contributions of the additional DoF of the torso, it is not possible to bimanually reach the target. An alternative way to interpret this behavior is that the force field induced by the goal did not propagate through the waist network because of its lower admittance (in comparison with the arm networks). In other words, the propagation of the goal induced force field across different intrinsic elements of the body can be modified by altering their “local” admittance. (C) Shows the solution when the waist admittance is made equal to the arms. In this case, note the contributions from all three degrees of freedom of the torso (B), hence enabling iCub to bimanually reach the cylinder successfully in this case. (D–F) Show a simple scenario where the goal is to reach a target using the whole body but also attain a specific posture as demonstrated by the teacher [(D): nearby target, (E,F): far away target]. When the admittance of the hip is reduced from 2.5 to 0.1 (rad/s/Nm) in (F) (keeping the admittance of the other joints constant), we see two different postures: one that uses the hip more (E) and the other in which the knees compensate for the low admittance of the hip (F). This local and modular nature of motion generation is also evident during injury, when other degrees of freedom compensate for the temporarily “inactive” element, in reaction to the pull of a goal. This is a natural property of the PMP mechanism.

The issue of generating different solutions by actively modulating the admittance of different joints has been demonstrated for whole body reaching (WBR) tasks using the PMP (Morasso et al., 2010). Figures 4D–F show a simple scenario where the goal is to reach a target using the whole body but also attain a specific posture as demonstrated by the teacher (Figure 4D: nearby target, Figures 4E,F: far away target). In such cases, it may be “perceptually” possible to determine approximately the contribution of different body parts to the observed movement. Such perceptual information can “locally” modulate the participation of different DoFs, hence influencing the nature of the solution obtained. For example, when the admittance of the hip is reduced from 2.5 to 0.1 (rad/s/Nm) in Figure 4F (keeping the admittance of the other joints constant), we see two different postures: one that uses the hip more (Figure 4E) and the other in which the knees compensate for the low admittance of the hip (Figure 4F). This local and modular nature of motion generation is also evident during injury (for example, a fracture of the elbow), when other DoFs compensate for the temporarily “inactive” element, in reaction to the pull of a goal. This is a natural property of the PMP mechanism (and does not require any additional computation).

Finally, we must note that even though an elastic element is reversible in nature, in articulated elastic systems like in PMP, a coherence of representation dictates the “direction” in which causality is directed.

Directionality

The issue that needs to be understood now is the “direction” in which information should flow in a fully connected network like PMP. This is a critical issue not only while controlling highly redundant bodies, but also when tools with controllable DoFs are coordinated. The short answer to the question is that the direction in which information flows is constrained by the fact that PMP networks always operate through “well posed” computations/transformations. In which direction a transformation is “well posed” depends on the motor spaces involved and the type of connectivity (i.e., serial or parallel) between them.

Serial connections. Consider, for example, a serial kinematic chain like the right arm of iCub, which involves two motor spaces, namely the end effector and the arm joint space (Figures 2 and 3A). In serial connections, vectors of higher dimensionality are transformed into vectors of lower dimensionality (joint angles transform to hand coordinates). Thus the Jacobian matrix has more columns than rows (for example, considering that the end effector position is represented in 3D Cartesian space coordinates and the arm has seven joints, the resulting Jacobian matrix has three rows and seven columns). What transformations are well posed in a serial connection? We can observe that given the joint angles of the arm, it is possible to uniquely compute the position of the end effector. So the transformation from position node in joint space to position node in end effector space is well posed. In contrast, the transformation in the opposite direction is not well posed, in the sense that given an end effector position it is not possible to uniquely compute the value of the joint angles. The reason is that there are more unknowns (joint angles) than equations, thus resulting in infinitely many solutions. Similarly, coming to the transformation between force nodes, note that the transformation from end effector force to joint torques via the transpose Jacobian is well posed ($T = J^T F$: there are seven equations and seven unknowns if the arm has seven joints). However, the transformation in the opposite direction is ill posed, i.e., given a set of joint torques it is not possible to compute the hand force since there are more constraint equations than unknowns. This is the reason that in the PMP networks of Figures 2 and 3A we move from the position node in arm space to the position node in end effector space and from the force node in end effector space to the force node in joint space. Further, this also preserves the circularity in the network.
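
The asymmetry between the two directions can be seen on a small planar example. The sketch below is illustrative and not part of the PMP itself, which never computes such inverses: it assumes a planar three-joint arm with hypothetical link lengths, and the helper `one_inverse_solution` uses the absolute orientation of the last link as a free parameter to enumerate a few of the infinitely many joint configurations that place the hand at the same point.

```python
import math

LINKS = (0.30, 0.25, 0.15)  # illustrative link lengths (metres)

def forward(q):
    """Well-posed direction: joint angles -> unique hand position."""
    x = y = angle = 0.0
    for length, qi in zip(LINKS, q):
        angle += qi
        x += length * math.cos(angle)
        y += length * math.sin(angle)
    return x, y

def one_inverse_solution(hand, last_link_angle):
    """Pick one of infinitely many joint vectors that reach `hand`.

    The absolute orientation of the last link is a free parameter; the first
    two joints then follow from the closed-form two-link solution.
    """
    l1, l2, l3 = LINKS
    wx = hand[0] - l3 * math.cos(last_link_angle)  # wrist position implied by the choice
    wy = hand[1] - l3 * math.sin(last_link_angle)
    cos_q2 = (wx * wx + wy * wy - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    q2 = math.acos(cos_q2)  # raises ValueError if this wrist position is unreachable
    q1 = math.atan2(wy, wx) - math.atan2(l2 * math.sin(q2), l1 + l2 * math.cos(q2))
    q3 = last_link_angle - q1 - q2
    return (q1, q2, q3)

hand = (0.35, 0.35)
solutions = [one_inverse_solution(hand, phi) for phi in (0.0, 0.5, 1.0, 1.5)]
reached = [forward(q) for q in solutions]  # every entry reproduces `hand`
```

Each value of the free parameter gives a different joint vector, yet `forward` maps all of them to the same hand position, which is why only the joint-to-hand direction is used for positions in the network.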

Parallel connections. The parallel connection is a dual version of the serial connection. A biological example of parallel connection is the relationship between muscle and skeleton. The problem of finding the joint torque given the muscle forces is well posed, but the inverse problem results in infinite solutions (because there are more unknowns than equations). The connection between the two arms and the steering wheel (Figure 2) is also an example of a parallel connection. There can be infinite possible combinations of forces exerted by the two hands “in parallel” to generate a given steering wheel torque, but the transformation in the opposite direction is well posed. Similarly, given a steering wheel rotation it is possible to uniquely compute the position of the two hands. Hence in Figure 2, there is a position to position transformation from the steering wheel space to hand space, and force to force transformation from the hand space to steering wheel space.
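
The steering-wheel case can be sketched in a few lines. This is a simplified planar illustration under stated assumptions: the hands grip a rigid rim at fixed, diametrically opposite points, and the wheel radius, grip angles, forces, and function names are all hypothetical. It shows the two well-posed directions (wheel angle to hand positions, hand forces to wheel torque) and checks that virtual work is preserved across the two spaces.

```python
import math

RADIUS = 0.19                  # wheel radius in metres (illustrative)
HAND_OFFSETS = (0.0, math.pi)  # grip angles of the right and left hand on the rim

def hand_positions(rho):
    """Well posed: one wheel angle -> unique positions of both hands."""
    return [(RADIUS * math.cos(rho + a), RADIUS * math.sin(rho + a)) for a in HAND_OFFSETS]

def tool_jacobians(rho):
    """d(hand position)/d(rho) for each hand (one 2 x 1 column per hand)."""
    return [(-RADIUS * math.sin(rho + a), RADIUS * math.cos(rho + a)) for a in HAND_OFFSETS]

def wheel_torque(rho, hand_forces):
    """Well posed: hand forces -> unique wheel torque ("sum" of J_T^T F over hands)."""
    return sum(jx * fx + jy * fy
               for (jx, jy), (fx, fy) in zip(tool_jacobians(rho), hand_forces))

rho, d_rho = 0.3, 1e-3
forces_a = [(0.0, 10.0), (0.0, 0.0)]  # the right hand does all the work
forces_b = [(0.0, 5.0), (0.0, -5.0)]  # both hands share it
torque_a = wheel_torque(rho, forces_a)
torque_b = wheel_torque(rho, forces_b)  # same torque from a different force pair

# "Assignment": the same d_rho is assigned to both hands as dx = J_T * d_rho.
# Virtual work: torque * d_rho equals the summed hand work F . dx.
hand_work = sum(fx * jx * d_rho + fy * jy * d_rho
                for (jx, jy), (fx, fy) in zip(tool_jacobians(rho), forces_b))
```

Two different force pairs produce the same wheel torque, so the torque-to-forces direction has no unique answer, while the forces-to-torque direction always does.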

In sum, the direction in which causality is directed in a PMP network is constrained by the fact that all computations in the network should be “well posed.” Operating through well posed computations (and avoiding inversion of a generally non-invertible redundant system) significantly reduces the computational overhead. Further, since computations are always “well posed and linearized,” PMP mechanisms do not suffer from the curse of dimensionality and can be easily scaled up to any number of DoFs. This is not the case with OCT where it is well known that non-linearity and high dimensionality can significantly affect the computational overhead and numerical stability of the solution (Bryson, 1999; Scott, 2004).

A more general question can be asked as to “How and Why” computations turn out to be well posed in PMP. The answer is that they are “constrained” by the physical properties of the system they intend to model. For example, the natural direction of causality for a muscle is to receive flow and yield force, and the natural direction of causality for the joint is to receive force and yield flow (which is the reason the joint space receives the force field as input and yields joint rotations as output, which in turn uniquely determine the end effector displacement). In fact, a detailed analysis of issues related to modularity and causality in physical system modeling goes back to a seminal paper by Hogan (1987), with contributions from Henry Paynter (of the Bond graph approach), that we merely revisit with the PMP model. We think that techniques that start with the assumption that behavior can be understood by minimization of a cost function, even though very general and powerful in explaining observed systematic correlations in a wide range of behaviors, often neglect the specific physical properties of the system they intend to model (Guigon, 2011), and that in turn results in unnecessary “costs.”

Local to global, distributed computing

From the perspective of local to global computing, note that every element in every “work unit” involved in any PMP network always makes a local decision regarding its contribution to the externally induced pull, based on its own impedance. All such local decisions synergistically drive the overall network to a configuration that minimizes its global potential energy. This is analogous to the behavior of well-known connectionist models in the field of artificial neural networks like Hopfield networks (Hopfield, 1982). Different implementations of the PMP using back propagation networks (Mohan and Morasso, 2006, 2007) and self organizing maps (Morasso et al., 1997) have already been conceived and implemented on the iCub humanoid. Thus, the local, distributed nature of information processing makes it possible to explain how computations necessary for PMP relaxation can actually be realized using neural networks, whereas this is still an open question for the formal methods employed by OCT (Scott, 2004; Todorov, 2006).

Timing

There are always temporal deadlines associated with any goal. Control over “time and timing” is crucial for successful action synthesis, be it simply reaching a target in a finite time or complex scenarios like synchronization of PMP relaxations with multiple kinematic chains (bimanual coordination), trajectory formation, multitasking, etc. A way to explicitly control time, without using a clock, is to insert in the non-linear dynamics of the PMP a time-varying gain Γ(t), according to the technique originally proposed by Zak (1988) for speeding up the access to content addressable memories and then applied to a number of problems in neural networks. In this way, the dynamics of the PMP network is characterized by terminal attractor properties (Figure 3E shows the timing signal). This mechanism can be applied to any dynamics where a state vector $x$ is attracted to a target $x_T$ by a potential function, such as $V(x) = \frac{1}{2}(x - x_T)^T K (x - x_T)$, according to a gradient descent behavior: $\dot{x} = -\Gamma(t)\,\nabla V(x)$, where $\nabla V(x)$ is the gradient of the potential function, i.e., the attracting force field. Based on the nature of the task, there can either be single or multiple timing signals, hence allowing action sequencing, synchronization, mixing of force fields generated by multiple spatial goals, and generation of a diverse range of spatio-temporal trajectories.
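
To see why a time-varying gain yields arrival in finite time, consider the following worked sketch. It rests on two assumptions that are not stated in the text above: the stiffness is isotropic, $K = kI$ with $k > 0$, and the gain has the form $\Gamma(t) = \dot{\xi}(t)/(1-\xi(t))$, where $\xi$ is any smooth ramp with $\xi(0)=0$ and $\xi(\tau)=1$.

```latex
\begin{align}
\dot{x} &= -\Gamma(t)\,\nabla V(x) = -k\,\Gamma(t)\,(x - x_T) \\
e(t) &:= x(t) - x_T \;\Rightarrow\; \dot{e} = -k\,\Gamma(t)\,e
  \;\Rightarrow\; e(t) = e(0)\,\exp\!\Bigl(-k\int_0^t \Gamma(s)\,ds\Bigr) \\
\int_0^t \Gamma(s)\,ds &= \int_0^t \frac{\dot{\xi}(s)}{1-\xi(s)}\,ds = -\ln\bigl(1-\xi(t)\bigr) \\
e(t) &= e(0)\,\bigl(1-\xi(t)\bigr)^{k} \;\Rightarrow\; e(\tau) = 0
\end{align}
```

Under these assumptions the error vanishes exactly at $t = \tau$, whatever the initial distance. With a constant gain the same system would give $e(t) = e(0)\,e^{-kt}$, which reaches zero only asymptotically.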

PMP and bond graphs

PMP networks have some similarity with bond graphs (Paynter, 1961). Both are graphical representations of dynamical systems which are port-based, emphasizing the flow of energy rather than the flow of information as it happens in the network diagrams. However, bond graphs represent bi-directional exchange of physical energy among interconnected devices in a given application domain (mechanical, electrical, thermal, hydraulic, etc.), with the purpose of simulating the dynamics of the interconnected system. In contrast, PMP-networks are conceived at a more abstract level, which is concerned with the internal representation of the body schema, not as a static map but as a multi-referential dynamical system. Moreover, PMP-graphs are intrinsically unidirectional, in such a way to restrict the overall dynamics to well-formed transformations between motor spaces of different dimensionality (as described under the “Directionality” subheading).

To sum up, in the example of the steering wheel rotation task, a small wheel rotation incrementally assigns (through the assignment node) motion to the two hands connected in parallel, according to the “weight” JT (this transformation is well posed). The force disturbance is computed for the imposed displacement in the end effector space “dx” using the stiffness matrix “K.” The resultant force vector determines a torque vector which yields a joint rotation dq via the transpose Jacobian and compliance matrix A, respectively (this transformation is also well posed). Finally, the steering wheel torque is the summed contribution coming from the two arms (through the sum node) and weighted by transpose of the device Jacobian (this transformation is also well posed). The timing signal allows smooth synchronized motion of the two hands, converging to equilibrium in finite time. In sum, the relaxation process of the PMP network allows us to effectively characterize this highly redundant task of bimanual coordination and incrementally derive the multi-dimensional actuator patterns from a mono-dimensional steering wheel rotation plan.

Incorporating task-specific “internal and external” constraints

Equation 1 can also be seen as the on-line optimization of a cost function, the distance of the end-effector from the target, compatible with the kinematic constraint given by the kinematic structure and represented by the admittance matrix A. However, this is just the simplest situation, which can be expanded easily to include an arbitrary number of constraints or penalty functions, in the form of force fields defined either in the extrinsic space or intrinsic space:

| (3) |

A constraint in the extrinsic space could be an obstacle to avoid, an appropriate hand pose with which to reach an object so as to allow further manipulation actions to be performed (like grasp or push). In the intrinsic space a constraint could take into account the limited range of motion of a joint, the saturation power or torque of an actuator etc. Figure 5A shows a composite PMP network for the right arm kinematic chain, for reaching an object (Goal) with an appropriate wrist orientation/hand pose to support further manipulations (constraint 1) and generating a solution such that the joint angles are well within the permitted range of motion (constraint 2). Hence, in the PMP network of Figure 5A there are three weighted, superimposed force fields that modulate the spatio-temporal behavior of the system: (1) the end-effector field (to reach the target); (2) the wrist field (to achieve the specified hand pose); (3) the force field in joint space for joint limit avoidance. Note that the same timing signal Γ(t) synchronizes all the three relaxation processes. Figure 5B shows results of iCub performing different manipulation tasks driven by such a network.
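
As a hedged sketch of how the three fields of this example might be superimposed in joint space, one can write the following. The weights $w_i$, the stiffness symbols $K_r$, $K_{wr}$, $K_q$, the wrist target $x_{wr,T}$, and the reference posture $q_{ref}$ are notational assumptions introduced here for illustration; $F_r$, $F_{wr}$, $F_q$, and $J_{wr}$ follow the labels used for Figure 5A.

```latex
\begin{align}
F_r &= K_r\,(x_T - x), &
F_{wr} &= K_{wr}\,(x_{wr,T} - x_{wr}), &
F_q &= K_q\,(q_{ref} - q) \\
\dot{q} &= \Gamma(t)\,A\,\bigl[\,w_1\,J^T F_r + w_2\,J_{wr}^T F_{wr} + w_3\,F_q\,\bigr]
\end{align}
```

Each extrinsic field is mapped into joint torques by the transpose Jacobian of the point it acts on, the intrinsic field is already a torque, and a single $\Gamma(t)$ multiplies the sum so that all three relaxations end at the same time.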

Figure 5.

(A) Composite PMP network with three force fields applied to the right arm of iCub: a field Fr that identifies the desired position of the hand/fingertip (Goal); a field Fwr that helps achieve a desired pose of the hand via an attractor applied to the wrist (constraint 1), where Jwr is the Jacobian matrix of the subset of the kinematic chain up to the wrist; and an elastic force field Fq in the joint space for generating a solution such that the joint angles are well within the permitted range of motion (constraint 2). Note that the same timing signal synchronizes all three relaxation processes, hence allowing the hand to reach the target with a specific pose and posture. (B) Shows three examples of iCub performing manipulation tasks driven by the composite PMP net of (A). In the case of bimanually reaching the crane toy, a similar network also applies to the left arm PMP chain. Note that in all these cases, reaching the goal object with the specified hand pose is obligatory for successful realization of the goal.

Recently, this modeling framework was further pursued for explaining the formation of WBR synergies, i.e., coordinated movement of lower and upper limbs, characterized by a focal component (the hand must reach a target) and a postural component (the center of mass, or CoM, must remain inside the support base; Morasso et al., 2010). By simulating the network in various conditions it was possible to show that it exhibits several spatio-temporal features found in experimental data of WBR in humans (Stapley et al., 1999; Pozzo et al., 2002; Kaminski, 2007). In particular, it was possible to demonstrate that: (1) during WBR, legs and trunk play a dual role: not only are they responsible for maintaining postural stability, but they also contribute to transporting the hand to the target. As target distance increases, the reach and postural synergies become coupled, resulting in the arms, legs, and trunk working together as one functional unit to move the whole body forward (see Figures 4D–F); (2) analysis of the CoM showed that it is progressively shifted forward as the reached distance increases, and that it is synchronized with the finger’s movement. Posture and movement are indeed like Siamese twins: inseparable but, to a certain extent, independent. The article on whole body synergy formation showed how postural and focal synergies can be integrated during goal directed coordination through the PMP framework. Generally, we can see the PMP as a mechanism of multiple constraint satisfaction, which implicitly solves the “DoFs problem” without any fixed hierarchy between the extrinsic and intrinsic spaces. The constraints integrated in the system are task-oriented and can be modified at runtime as a function of performance and success.

Motor skill learning and PMP

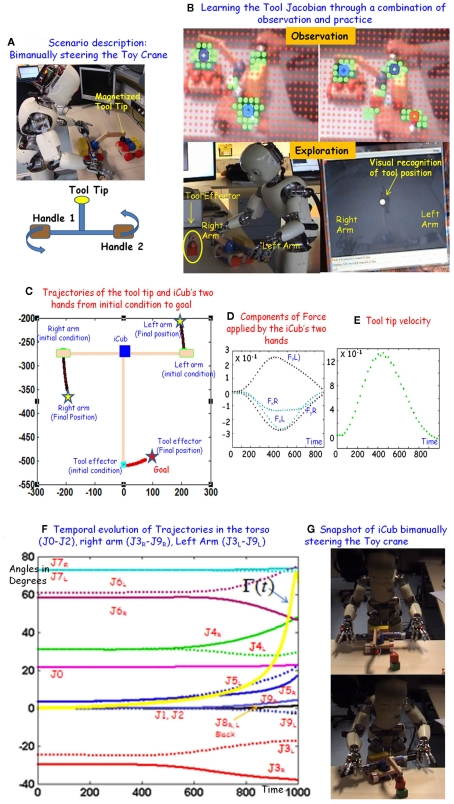

In the context of PMP, when we learn a motor skill, we basically learn the connecting links in the PMP network associated with the task (i.e., vertical links or impedances, horizontal links or Jacobians, and the timing of the time base generators). We will describe the central ideas using a new scenario where iCub learns to bimanually steer a toy crane in order to position its magnetized tip at a goal target (Figure 7A). We chose this example because the task is similar to the bimanual control of the steering wheel, with the steering wheel replaced by the two handles of the toy crane. The structure of the PMP network is therefore the same as shown in Figure 2. In general, while learning to control the toy crane, iCub has to learn: (a) the appropriate stiffness and timing to execute the required “spatio-temporal” trajectories using the body + tool chain (for example, performing synchronized quasi-circular trajectories with both hands while turning the toy crane) and (b) while performing such coordinated movements with the tool, the Jacobians that map the relationship between the movements of the body effectors and the corresponding consequence on the tool effector (the magnetized tip). A third issue is of course related to using this learnt knowledge to generate “goal directed” body + tool movements (given a goal to reach/pick up an otherwise “unreachable” environmental object using the toy crane).
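As a rough illustration of point (b), a tool Jacobian can be estimated from exploratory movements by fitting a linear map between small hand displacements and the resulting tool-tip displacements. The sketch below uses ordinary least squares as a stand-in; the text does not state which learning method is used on iCub, and the function name, array shapes, and synthetic data are all hypothetical.

```python
import numpy as np

def estimate_tool_jacobian(d_hands, d_tool):
    """Least-squares fit of J in d_tool ~= J @ d_hands from exploration samples.

    d_hands: (n, m) array of small hand displacements (one sample per row)
    d_tool:  (n, k) array of the observed tool-tip displacements
    Returns J with shape (k, m). Only valid locally, around the explored posture.
    """
    J_transposed, *_ = np.linalg.lstsq(d_hands, d_tool, rcond=None)
    return J_transposed.T

# Synthetic check: recover a known linear map from noise-free "exploration" data.
rng = np.random.default_rng(0)
J_true = rng.normal(size=(2, 4))             # hypothetical: 4 hand DoFs -> 2-D tool tip
d_hands = 0.01 * rng.normal(size=(50, 4))    # 50 small exploratory hand movements
d_tool = d_hands @ J_true.T                  # consequences observed on the tool tip
J_est = estimate_tool_jacobian(d_hands, d_tool)
print(np.allclose(J_est, J_true))
```

A single linear fit is only meaningful near the posture that was explored; a configuration-dependent Jacobian would require repeating the fit locally or learning a forward model first.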

Figure 7.

(A) Describes the task. Analogous to controlling a steering wheel, iCub has to bimanually maneuver the toy crane so that the magnetized tool tip reaches the goal. (B) Shows snapshots of the dual processes of observing the teacher to imitate similar spatio-temporal movements with the toy and then interacting directly with the tool in order to learn the tool Jacobian. (C) Shows the trajectories in the tool and end effector space, when iCub steers the crane toy from the initial position to the goal. (D) Shows temporal evolution of the x and y components of force exerted by right and left hand, to steer the toy crane toward the goal. (E) Shows the tool tip velocity. Note that the tool velocity is symmetric and bell-shaped. (F) Shows the temporal evolution of motor commands/joint angles in the 17 joints of the iCub upper body as iCub steers the crane toy from the initial condition [(G): top panel] to the Goal [(G): Bottom panel]. Observe that based on the motion of the two hands (C), the evolution of joint angles in the right and the left arm are approximately mirror symmetric (F).

Until now we were dealing with point-to-point reaching actions using the PMP network (for example Figure 3). But using a toy crane is a task that requires iCub not only to reach (and grasp) the tool but also to perform coordinated spatio-temporal movements with the tool (both during exploration and while performing goal directed movements using the tool). Part of the information as to what kind of movements can be performed with the tool can be acquired by observing a teacher’s demonstration. The teacher’s demonstration basically constrains the space of explorative actions when iCub practices with the new toy to learn the consequences of its actions. The basic PMP system on the iCub is presently being extended to incorporate these capabilities. With the help of Figure 6, we outline the central features of the extended skill learning architecture.

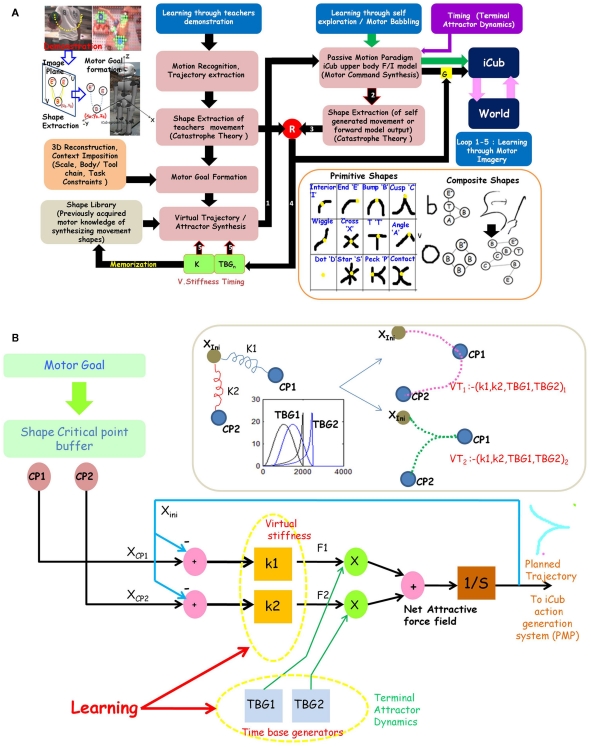

Figure 6.

(A) Motor skill learning and action generation architecture of iCub: building blocks and information flows. (B) Scheme of the virtual trajectory synthesis system (modeled by Eq. (4)), which transforms a discrete set of critical points (shape “type” and its “spatial location”) in the motor goal into a continuous sequence of equilibrium points that act as a moving point attractor to the task relevant PMP network. An elastic force field is associated with each spatial location (in the motor goal), with a strength given by the stiffness matrices (K1 and K2). The two force fields are activated in sequence, with a degree of time overlap, as dictated by two time base generators (TBG1 and TBG2). Simulating the dynamics with different values of K and γ results in different trajectories through the critical points. Inversely, the problem of learning is to acquire the correct values for K and γ (virtual stiffness and temporal overlap) such that the shape of the resulting trajectory correlates with the shape description in the motor goal.

Learning through imitation, exploration, and motor imagery

Three streams of learning, i.e., learning through the teacher’s demonstration (information flow in black arrow), learning through physical interaction (blue arrow), and learning through motor imagery (loop 1–5), are integrated into the architecture. The imitation loop starts with the teacher’s demonstration and ends with iCub reproducing the observed action. The motor imagery loop is a sub-part of the imitation loop, the only difference being that the motor commands synthesized by the PMP are not transmitted to the actuators; instead, the forward model output is used to close the learning loop. This loop hence allows iCub to internally simulate a range of motor actions and execute only the ones that have a high performance score “R.”

From trajectory to shape, toward “context independent” motor knowledge

Most skilled actions involve synthesis of spatio-temporal trajectories of varying complexity. A central feature in our architecture is the introduction of the notion of “Shape” in the motor domain. The main purpose is to conduct motor learning at an abstract level and thus speed up learning by exploiting the power of “compositionality” and motor knowledge “reuse.” In general, a trajectory may be thought of as a sequence of points in space, from a starting position to an ending position. “Shape” is a more abstract description of a trajectory, which captures only the critical events in it. By extracting the “shape” of a trajectory, it is possible to liberate the trajectory from task-specific details like scale, location, coordinate frames, and body effectors that underlie its creation and make it “context independent.” Using catastrophe theory (Thom, 1975), Chakravarthy and Kompella (2003) derived a set of 12 primitive shape features (Figure 6, bottom right panel) sufficient to describe the shape of any trajectory in general. As an example, the critical event in a trajectory like “U” is the presence of a minimum (or Bump “B” critical point) in between two end points (“E”). Thus, the shape is represented as a graph “E–B–E” (see Figure 6). Whether the “U” is drawn on paper or someone runs a “U” in a playground, the shape representation is “invariant” (there is always a minimum in between two end points). More complex shapes can be described as “combinations” of the basic primitives; for example, a circular trajectory is a composition of four bumps. In short, using the shape extraction system it is possible to move from the visual observation of the end effector trajectory of the teacher to its more abstract “shape” representation.
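The invariance of the “E–B–E” description can be made concrete with a deliberately simplified sketch. It is not the catastrophe-theory feature extractor with 12 primitives referred to above: as an assumption for illustration, it recognizes only end points and bumps, treats a bump as an interior extremum of the vertical coordinate of a 2-D trajectory, and is therefore invariant to translation and uniform scaling but not to rotation. The function name is hypothetical.

```python
import numpy as np

def shape_graph(trajectory):
    """Return a shape string such as 'E-B-E' for a 2-D trajectory of shape (n, 2).

    Simplification: a 'bump' (B) is an interior local extremum of the vertical
    coordinate; the two end points (E) are always present.
    """
    y = np.asarray(trajectory, dtype=float)[:, 1]
    slope = np.sign(np.diff(y))
    slope = slope[slope != 0]                      # ignore flat segments
    bumps = int(np.sum(slope[1:] != slope[:-1]))   # each sign flip is one extremum
    return "-".join(["E"] + ["B"] * bumps + ["E"])

x = np.linspace(-1.0, 1.0, 41)
u_on_paper = np.stack([x, x**2], axis=1)                       # a small "U"
u_in_playground = 30.0 * u_on_paper + np.array([100.0, -40.0])  # scaled and moved
straight_line = np.stack([x, x], axis=1)

print(shape_graph(u_on_paper), shape_graph(u_in_playground), shape_graph(straight_line))
```

The two “U” trajectories differ in scale and location but map to the same graph, which is the property that lets motor knowledge learnt for one context be reused in another.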

Imposing “context” while creating the motor goal

The extracted “shape” representation may be thought of as an “abstract” visual goal created by iCub after perceiving the teacher’s demonstration. Before any action generation/learning can begin, this “visual” goal must be transformed into an appropriate “motor” goal in iCub’s egocentric space. To achieve this, we have to transform the location of each shape critical point computed in the image planes of the two cameras (Uleft, Vleft, Uright, Vright) into the corresponding point in iCub’s egocentric space (x, y, z) through a process of 3D reconstruction (see Figure 6, top left box). Of course the “shape” is conserved by this transformation, i.e., a bump still remains a bump and a cross is still a cross in any coordinate frame. Reconstruction is achieved using a stereo camera calibration and 3D reconstruction system based on the Direct Linear Transform (Shapiro, 1978), which is already functional in iCub (implementation details of this technique are summarized in the appendix of Mohan et al., 2011b). At this point, other task-related constraints, like the scale of the shape and the end effector/body chain performing the action, can be added to the goal description. So the motor goal for iCub is an abstract shape representation of the teacher’s movement (transformed into the egocentric space) together with other task-related parameters that need to be considered while generating the motor action. An example of a motor goal description is: “use” the left arm-torso chain coupled to the toy crane, generate a trajectory that starts from point 1, ends at point 2, and has a “bump” at point 3 (and observe the consequence through visual and proprioceptive information).
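For readers unfamiliar with the reconstruction step, the following is a minimal sketch of linear (DLT-style) triangulation of one critical point from its two image locations. It assumes that calibration has already produced a 3×4 projection matrix for each camera; the matrices, intrinsics, baseline, and test point used here are hypothetical and do not describe the iCub cameras or the implementation cited above.

```python
import numpy as np

def triangulate_dlt(P_left, P_right, uv_left, uv_right):
    """Recover (x, y, z) from pixel coordinates in two calibrated cameras.

    Each view contributes two linear equations, u * P[2] - P[0] and
    v * P[2] - P[1]; the homogeneous solution is the right singular vector
    associated with the smallest singular value.
    """
    rows = []
    for P, (u, v) in ((P_left, uv_left), (P_right, uv_right)):
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    _, _, Vt = np.linalg.svd(np.stack(rows))
    X = Vt[-1]
    return X[:3] / X[3]

def project(P, xyz):
    """Pinhole projection of a 3-D point, used here only to create test data."""
    h = P @ np.append(xyz, 1.0)
    return h[:2] / h[2]

# Hypothetical stereo rig: identical intrinsics, 7 cm horizontal baseline.
K = np.array([[500.0, 0.0, 160.0], [0.0, 500.0, 120.0], [0.0, 0.0, 1.0]])
P_left = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P_right = K @ np.hstack([np.eye(3), np.array([[-0.07], [0.0], [0.0]])])

bump_xyz = np.array([0.05, -0.02, 0.60])   # a critical point in the left-camera frame
recovered = triangulate_dlt(P_left, P_right,
                            project(P_left, bump_xyz), project(P_right, bump_xyz))
print(np.round(recovered, 6))
```

With noisy pixel measurements the same linear system is solved in the least-squares sense, so the recovered point is an estimate and no longer an exact intersection of the two viewing rays.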

“Virtual trajectories” – motor equivalent action representation

The motor goal basically consists of a discrete set of shape critical points (their type and their spatial location in iCub’s egocentric space) that describe in abstract terms the “shape” of the spatio-temporal trajectory that iCub must now generate (with the task relevant body chain). Given a set of points in space, an infinite number of trajectories can pass through them. How can iCub learn to synthesize a continuous trajectory similar to the teacher’s demonstration using a discrete set of shape descriptors in the motor goal? The virtual trajectory generation system (VTGS) performs this inverse operation. It transforms the discrete shape representation (in the motor goal) into a continuous set of equilibrium points that act as a moving point attractor to the PMP system.

The VTGS preserves the same “force field” based structure as the PMP (Figure 6B). Let Xini ∈ (x, y, z) be the initial condition, i.e., the point in space from where the creation of the shape is expected to commence (usually the initial condition will be one of the end points). If there are N shape points in the motor goal, the spatio-temporal evolution of the virtual trajectory (x, y, z, t) is equivalent to integrating a differential equation that takes the following form:

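$$\dot{x}(t) = \sum_{i=1}^{N} \Gamma_i(t)\, K_i \left( x_{CP_i} - x(t) \right), \qquad x(0) = X_{ini} \qquad (4)$$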

Intuitively, as seen in Figure 6B, we may visualize Xini as connected to the spatial locations of all shape critical points (CPs) by means of virtual springs, and hence as being attracted by the force fields they generate, FCP = KCP(xCP − xini). The strength of these attractive force fields depends on: (1) the virtual stiffness “Ki” of the spring and (2) the time-varying modulatory signals Γi(t) generated by the respective time base generators, which determine the degree of temporal overlap between different force fields. The virtual trajectory is then the set of points created during the evolution of Xini through time, under the influence of the net attractive field generated by the different CPs. Further, by simulating the dynamics of Eq. (4) with different values of K and γ, a wide range of trajectories can be obtained passing through the discrete set of points described in the motor goal. Inversely, learning to “shape” translates into the problem of learning the right set of virtual stiffness and timing values such that the “Shape” of the trajectory created by iCub correlates with the shape description in the motor goal.
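The spring picture can be turned into a small simulation. The sketch assumes that Eq. (4) has the spring-like form suggested by the paragraph above, i.e., each critical point pulls the current point with a force Ki(xCPi − x) gated by Γi(t). Scalar stiffness values, the minimum-jerk form of the time base generator, the floor that keeps its gain finite, and all numerical values are assumptions for illustration.

```python
import numpy as np

def tbg(t, t_start, duration, floor=1e-3):
    """Assumed time base generator: zero outside its window, xi_dot / (1 - xi) inside."""
    tau = min(max((t - t_start) / duration, 0.0), 1.0)
    xi = 10 * tau**3 - 15 * tau**4 + 6 * tau**5
    xi_dot = 30 * tau**2 * (1 - tau)**2 / duration
    return xi_dot / max(1.0 - xi, floor)   # floor avoids division by zero at tau = 1

def virtual_trajectory(x_ini, critical_points, stiffness, windows, t_end, dt=1e-3):
    """Euler integration of x_dot = sum_i Gamma_i(t) * K_i * (x_CPi - x)."""
    x = np.array(x_ini, dtype=float)
    path = [x.copy()]
    for step in range(round(t_end / dt)):
        t = step * dt
        x_dot = np.zeros_like(x)
        for cp, k, (t_start, duration) in zip(critical_points, stiffness, windows):
            x_dot += tbg(t, t_start, duration) * k * (cp - x)
        x = x + dt * x_dot
        path.append(x.copy())
    return np.array(path)

cp1 = np.array([0.1, 0.2, 0.0])        # hypothetical intermediate critical point
cp2 = np.array([0.3, 0.0, 0.0])        # hypothetical final end point
path = virtual_trajectory(
    x_ini=[0.0, 0.0, 0.0],
    critical_points=[cp1, cp2],
    stiffness=[5.0, 5.0],              # values inside the 1-10 range
    windows=[(0.0, 1.0), (0.5, 1.0)],  # (start, duration): the two fields overlap in time
    t_end=1.5,
)
closest_to_cp1 = np.min(np.linalg.norm(path - cp1, axis=1))
final_error = np.linalg.norm(path[-1] - cp2)
print(f"closest approach to CP1: {closest_to_cp1:.4f}, final error: {final_error:.2e}")
```

Lowering the first stiffness or starting the second window earlier makes the path cut the corner near the first critical point, which is the kind of variation in shape that the choice of K and of the temporal overlap controls.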

So how difficult is it, and how long does it take, to learn these parameters given the demonstration of a specific movement by the teacher? It is here that we reap the advantage of moving from “trajectory” to “shape,” since compositionality in the domain of shapes can be exploited to speed up learning. In other words, the amount of exploration in the space of “K” and γ is constrained by the fact that once iCub learns to generate the 12 movement shape primitives, any motion trajectory can be expressed as a composition of these primitive features. The main idea is that since more complex trajectories can be “decomposed” into combinations of these primitive shapes, inversely the actions needed to synthesize them can be “composed” using combinations of the corresponding “learnt” primitive actions. Regarding learning the primitives, it has been demonstrated (Mohan et al., 2011c) that they can be learnt very quickly by just exploring the space of the virtual stiffness “K” in a finite range of 1–10, followed by an evaluation of how closely the shape of the synthesized trajectory (using Eq. (4)) matches the shape described in the goal. Thus, effort in terms of motor exploration is required during the initial phases to learn the basics (i.e., primitives). During the synthesis of more complex spatio-temporal trajectories, composition and recycling of previous knowledge take center stage (considering that the correct parameters to generate the primitives already exist in the shape library).