Abstract

We examined the effects of college students' participation in a game activity for studying course material on their subsequent quiz performance. Game conditions were alternated with another activity, counterbalanced across two groups of students, in a multielement design. Overall, the mean percentage correct on quizzes was higher during the game condition than during the no-game condition.

Keywords: college instruction, games, study habits, higher education

College students often do not study effectively or devote sufficient time to studying (Emanuel et al., 2008; Houston, 1987; Thomas, Bol, & Warkentin, 1991). A potential means of motivating studying is through the use of games as a study aid. Although games as study aids for college students have been described favorably in the educational literature (Gibson, 1991; Walters, 1993), few experimental investigations have been reported. Neef et al. (2007) compared optional study sessions conducted in a game format (e.g., Jeopardy, Who Wants to Be a Millionaire) to a student-directed question-and-answer format in a research methods course. The results of an alternating treatments design counterbalanced across two course sections indicated that review sessions conducted in either format were well attended. Despite improvements relative to baseline, however, there was little difference between the two formats on participants' subsequent quiz scores. Furthermore, mean quiz scores following the two review formats were improved only slightly relative to those of students who elected not to participate in review sessions. It may be that combining features of each of the review formats would be more effective. We therefore systematically extended the Neef et al. study by examining the effects of game activities that involved student- (rather than instructor-) generated questions and answers over the material on college students' subsequent quiz performance.

METHOD

Participants and Setting

The participants were 11 practitioners (e.g., teachers, behavior therapists) enrolled in a graduate-level course in applied behavior analysis. Data are reported for eight participants who gave consent for their data to be included in the study. During the first class session, all students in the course were assigned randomly to one of two groups, and within each group to one of two teams (composed of two to four members). Group and team assignments remained the same throughout the study. Classes met for 2 hr 18 min once per week during the 10-week quarter. All study-related activities took place in separate areas (opposite corners) of the same classroom.

Procedure

During 20 min of each class period, two teams in one group participated in a review game with the instructor, while the two teams in the other group met with a graduate teaching associate (GTA) to discuss their behavior-change project assignment (attention control condition).

Games

Each student who was scheduled to participate in the game activity for a given class session was responsible for generating at least four questions and corresponding answers pertaining to key points of the reading assignment (consisting of one or two chapters from the textbook) for that week. As a condition of participating in the game for the upcoming week, students posted their questions and answers electronically in the dropbox of the course Web site (visible only to the instructor and GTA) before class. The instructor examined the questions and provided feedback if warranted (e.g., if a question focused on a detail unrelated to the objectives). Students then could reexamine and alter the questions or answers before class, if necessary.

The game rules and procedures were explained to the students during the first class session and were reiterated immediately before each game:

1. Students were required to post at least four questions prior to class to be eligible to participate (none of the students ever failed to meet this criterion).

2. Each team took turns posing questions to the other team.

3. Questions could be answered individually or as a team, with the condition that each team member had to answer at least one question without consultation during the game in order for his or her team to be eligible to win.

4. Teams received one point for each question answered correctly. The student who asked the question was to determine if the answer by the opposing team was correct or incorrect. If that student misidentified an incorrect answer as correct or vice versa, or if he or she failed to provide accurate feedback, a point would be awarded to the opposing team by default (this occurred on only two occasions). For questions answered incorrectly, no point was awarded, and the student who had asked the question was to state the correct answer.

5. The team with the most points at the end of the game activity would be declared the winner, and each member would be awarded 4 bonus points toward his or her course grade. In the case of a tie, members of both teams would be awarded the bonus points.
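The scoring rules above can be summarized in a short Python sketch. It is our illustration of rules 3 through 5, not the procedure the instructor actually used: the `Question` record, the function names, and the team and student names are hypothetical. We also assume that a team failing the eligibility requirement simply cannot be declared the winner, so the bonus goes to the highest-scoring eligible team (or to all tied eligible teams).

```python
from dataclasses import dataclass

BONUS_POINTS = 4  # course bonus points per member of the winning team (rule 5)


@dataclass
class Question:
    """One question posed during the game (hypothetical record format)."""
    answering_team: str           # team that attempted the answer
    answered_correctly: bool      # whether the answer was actually correct
    asker_judged_correctly: bool  # whether the asking student judged it accurately


def team_points(teams, questions):
    """Tally game points per team under rule 4."""
    points = {team: 0 for team in teams}
    for q in questions:
        # A correct answer earns a point; if the asker misjudged the answer,
        # the answering (opposing) team receives the point by default.
        if q.answered_correctly or not q.asker_judged_correctly:
            points[q.answering_team] += 1
    return points


def award_bonus(points, members, answered_solo):
    """Return bonus points per student under rules 3 and 5.

    members: team -> list of students on that team.
    answered_solo: students who answered at least one question without consultation.
    Assumption: only teams whose members all answered solo can win; ties share the bonus.
    """
    eligible = [t for t, ms in members.items() if all(m in answered_solo for m in ms)]
    if not eligible:
        return {m: 0 for ms in members.values() for m in ms}
    top = max(points.get(t, 0) for t in eligible)
    winners = {t for t in eligible if points.get(t, 0) == top}
    return {m: (BONUS_POINTS if t in winners else 0)
            for t, ms in members.items() for m in ms}


if __name__ == "__main__":
    log = [
        Question("A", True, True),    # correct, judged correctly: A +1
        Question("B", False, True),   # incorrect, judged correctly: no point
        Question("B", False, False),  # incorrect but judged correct: B +1 by default
    ]
    pts = team_points(["A", "B"], log)
    roster = {"A": ["a1", "a2"], "B": ["b1", "b2"]}
    print(pts, award_bonus(pts, roster, answered_solo={"a1", "a2", "b1", "b2"}))
    # prints {'A': 1, 'B': 1} {'a1': 4, 'a2': 4, 'b1': 4, 'b2': 4}
```

The example ends in a tie, which under rule 5 gives every student the bonus, the outcome the leading teams reportedly arranged on purpose.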

No-game attention control

Students who were not scheduled to participate in the game activity for a given class session met as a group with a GTA during the 20-min period to review their progress and plans on a behavior-change project that applied concepts covered in class. Students received feedback from the GTA and their peers, and the completed project was turned in at the end of the course for a grade.

Quizzes

During the first 20 min of each class period, all students took a quiz. Quizzes were cumulative and consisted of questions worth 6 to 7 points on the previous week's material (the subject of the previous week's review game) and up to 8 points on earlier review material. Performance on questions from the earlier review material was not included in the dependent measure. All quiz questions were short answer, were based on unit objectives, and were generated independently and in advance of the review game questions.

Procedural Integrity

During each game session, the instructor recorded on a data sheet the extent to which each of the specified rules and procedures was followed. The data sheet addressed four summary questions for each game session, namely whether (a) only eligible students participated, (b) all eligible team members answered at least one question, (c) a point was awarded for each correct answer or default, and (d) 4 bonus points were awarded to the members of the winning team on the grade spreadsheet. A GTA observed 37.5% of the game sessions, grade spreadsheet entries, and dropbox entries and, using the same data sheet as the course instructor, independently recorded the events and answers to the summary questions. There was 100% agreement in all cases.

Dependent Measure

The dependent measure was the mean percentage correct on each weekly quiz. These data are reported as weekly means for each group (the sum of the number correct for all participants in the group, divided by the product of the number of points possible on the quiz and the number of participants in the group) and as overall means for each participant (the sum of the number correct for all quizzes in a condition, divided by the number of points possible for all quizzes in that condition). Data for review questions were not included in the data analysis.
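Both means pool points before dividing. A minimal Python sketch of the two calculations follows; the function names are hypothetical, the ratios are multiplied by 100 to give percentages, and the inputs are assumed to contain only the new-material items (earlier review items already excluded). Any example values are illustrative, not study data.

```python
def weekly_group_mean(points_correct, points_possible):
    """Weekly group mean percentage correct on the new-material items of one quiz.

    points_correct: points earned on those items, one entry per group member.
    points_possible: points possible on those items for this quiz.
    """
    if not points_correct or points_possible <= 0:
        raise ValueError("need at least one participant and a positive point total")
    # Sum of correct over (points possible x number of participants), as a percentage.
    return 100 * sum(points_correct) / (points_possible * len(points_correct))


def participant_condition_mean(quizzes):
    """Overall mean percentage correct for one participant in one condition.

    quizzes: (points_correct, points_possible) pairs, one per quiz in the condition.
    """
    total_possible = sum(possible for _, possible in quizzes)
    if total_possible <= 0:
        raise ValueError("need at least one quiz with points possible")
    total_correct = sum(correct for correct, _ in quizzes)
    return 100 * total_correct / total_possible
```

For example, `participant_condition_mean([(6, 7), (5, 6), (7, 7)])` pools 18 of 20 points to give 90.0. Because points are pooled, each quiz is weighted by its points possible, which can differ slightly from averaging per-quiz percentages when quizzes carry 6 versus 7 points.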

Interobserver Agreement

Interobserver agreement was assessed on 31% of the quizzes across all sessions. A random sample of quizzes was selected each session and then copied for independent scoring by the instructor and a GTA. Scoring was compared on an item-by-item basis, and an agreement was counted if the instructor and GTA both scored an item as correct or both scored it as incorrect. Agreement scores were calculated by dividing agreements by agreements plus disagreements and multiplying by 100. Mean agreement was 97% (range, 77% to 100%).
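The item-by-item agreement calculation can be sketched in Python as follows. The function names are hypothetical, and we assume that an agreement score was computed for each sampled quiz and that the reported mean and range summarize those per-quiz scores.

```python
from statistics import mean


def item_agreement(instructor, gta):
    """Percentage agreement for one quiz.

    instructor, gta: per-item scores (True = correct, False = incorrect),
    listed in the same item order for both scorers.
    """
    if len(instructor) != len(gta) or not instructor:
        raise ValueError("both scorers must score the same, non-empty set of items")
    agreements = sum(a == b for a, b in zip(instructor, gta))
    disagreements = len(instructor) - agreements
    # Agreements / (agreements + disagreements) x 100
    return 100 * agreements / (agreements + disagreements)


def summarize_agreement(per_quiz):
    """Mean, minimum, and maximum of the per-quiz agreement scores."""
    return mean(per_quiz), min(per_quiz), max(per_quiz)
```

For instance, scorers who match on three of four items yield `item_agreement([True, True, False, True], [True, False, False, True])`, which is 75.0.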

Design and Data Analysis

A counterbalanced multielement design was used in which the two groups alternated between game and no-game (attention control) conditions every other week throughout the academic quarter. Thus, each week two teams participated in the review game while two teams participated in the no-game attention control. Because it was not possible to equate the difficulty of the material across sessions, analysis focused on within-unit (session) comparisons of team quiz performance for game and no-game (attention control) conditions. Data were analyzed graphically, and a paired-sample t test was performed to assess whether differences between conditions were statistically significant.
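A paired-sample t test works on the within-pair differences: t is the mean difference divided by its standard error, with n − 1 degrees of freedom. The Python sketch below computes only the statistic; the function name is hypothetical, and treating each participant's pair of overall condition means as one observation is our assumption (it is consistent with the t(7) reported for eight participants). An established implementation such as SciPy's `scipy.stats.ttest_rel` also returns the two-tailed p value.

```python
import math
from statistics import mean, stdev


def paired_t(game, no_game):
    """Paired-sample t statistic and degrees of freedom.

    game, no_game: one value per participant, in the same participant order.
    """
    if len(game) != len(no_game) or len(game) < 2:
        raise ValueError("need at least two paired observations")
    diffs = [g - n for g, n in zip(game, no_game)]
    sd = stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd == 0:
        raise ValueError("differences have zero variance; t is undefined")
    n = len(diffs)
    t = mean(diffs) / (sd / math.sqrt(n))  # mean difference / standard error
    return t, n - 1
```

With hypothetical scores `paired_t([3, 5, 7], [1, 2, 3])`, the differences are 2, 3, and 4, giving t = 3 / (1 / sqrt(3)), about 5.20, with 2 degrees of freedom.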

RESULTS AND DISCUSSION

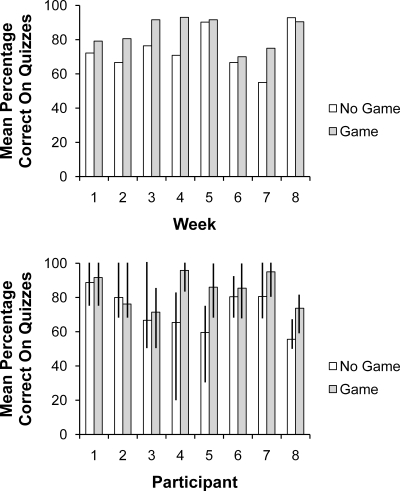

Figure 1 (top) shows the mean percentage correct on quizzes across review game and no-game (attention control) conditions. With the exception of Week 8, students who participated in the review game outperformed students who did not (although the differences were minimal during Weeks 5 and 6). Differences may have been less pronounced during the latter half of the course as opportunities to make up points toward the desired grade diminished, and perhaps because students learned effective strategies for studying from exposure to the review game condition.

Figure 1.

Mean percentage correct on quizzes for students who played the review game and participated in the attention control. Weekly group performance is displayed in the top panel. Individual performance in each condition is displayed in the bottom panel. Error bars represent the range of quiz scores.

Figure 1 (bottom) shows each participant's mean percentage correct on quizzes across review game and no-game conditions. With the exception of Participant 2, all participants performed better on quizzes during the game condition. During the game condition, the mean quiz score across participants was 84.4% (range, 68.3% to 96.1%), whereas during the no-game condition the mean quiz score was 72.1% (range, 53.1% to 88.6%). This difference was educationally significant (being the equivalent of a letter grade) as well as statistically significant when mean quiz scores were compared using a paired-sample t test, t(7) = 2.87, p = .02.

Combining the features of the game and student-directed question-and-answer formats used by Neef et al. (2007) may have resulted in a more robust review format than either alone. For example, participants in the current study were required to develop their own game questions covering the main points of the chapter (and to judge the subsequent answers by opposing team members as correct or incorrect). This student-directed feature of the game may have increased the extent to which students contacted the material before class. The interdependent group-oriented contingency (see Litow & Pumroy, 1975) may have brought both positive and negative reinforcement to bear in supporting preparation. All members of the winning team received bonus points. The eligibility requirement that each member of the team correctly answer at least one question created individual accountability and most likely social contingencies favoring behaviors that would help teammates obtain the extra points rather than prevent them from doing so. Coyne (1978) found a similar interdependent group contingency applied to in-class peer tutoring to be effective in improving the test scores of college students in an educational psychology course. In future research, a component analysis may reveal the extent to which student-directed features and interdependent group-oriented contingencies contribute to improved quiz performance. A control condition in which the instructor generates the questions may help to determine the specific factors that are most instrumental. In addition, the supervision provided by the instructor and GTAs might be counterbalanced across game and no-game activities, thereby correcting a limitation of the current study.

Interestingly, performance did not appear to be motivated by the competitive aspect of games. Often, members of the leading team would answer the questions in a manner that ended the game with a tie, with both teams thereby receiving the bonus points. The leading team members reported that they “felt bad” for the opposing team and that they wanted both teams to earn the bonus points. Perhaps for this reason, student ratings of games versus discussion of group projects on an anonymously completed course evaluation yielded mixed results. Only 28.6% of the respondents preferred games to discussion of group projects, and 42.9% reported having no preference. On the other hand, the indifferent ratings may have occurred because each of the two activities aided performance related to a different part of the course grade (i.e., quizzes and projects).

Further research is needed to determine systematically the features of games or other interventions that enhance both engagement and fluency with the material. The current study provides some possible leads in that direction.

Acknowledgments

The game used in this study was a variation of that observed in a course taught by Philip Ward, and we thank him for that idea. This research was supported in part by a grant from USDE, OSEP (H325DO60032).

REFERENCES

- Coyne P.D. The effects of peer tutoring with group contingencies on the academic performance of college students. Journal of Applied Behavior Analysis. 1978;11:305–307.

- Emanuel R, Adams J, Baker K, Daufin E.K, Ellington C, Fitts E, et al. How college students spend their time communicating. The International Journal of Listening. 2008;22:13–28.

- Gibson B. Research methods jeopardy: A tool for involving students and organizing the study session. Teaching of Psychology. 1991;18:176–177.

- Houston L.N. The predictive validity of a study habits inventory for first semester undergraduates. Educational and Psychological Measurement. 1987;47:1025–1030.

- Litow L, Pumroy D.K. A brief review of classroom group-oriented contingencies. Journal of Applied Behavior Analysis. 1975;8:341–347. doi: 10.1901/jaba.1975.8-341.

- Neef N.A, Cihon T, Kettering T, Guld A, Axe J.B, Itoi M, et al. A comparison of study session formats on attendance and quiz performance in a college course. Journal of Behavioral Education. 2007;16:235–249.

- Thomas J.W, Bol L, Warkentin R.W. Antecedents of college students' study deficiencies: The relationship between course features and students' study activities. Higher Education. 1991;22:275–296.

- Walters A.S. Sexual jeopardy: An activity for human sexuality courses. Sexuality and Disability. 1993;11:319–324.