Abstract

Introduction

The National Cancer Institute’s (NCI) Community Clinical Oncology Program (CCOP) contributes one third of NCI treatment trial enrollment (“accrual”) and most cancer prevention and control (CP/C) trial enrollment. Prior research indicated that the local clinical environment influenced CCOP accrual performance during the 1990s. As the NCI seeks to improve the operations of the clinical trials system following critical reports by the Institute of Medicine and the NCI Operational Efficiency Working Group, the current relevance of the local environmental context on accrual performance is unknown.

Materials and methods

This longitudinal quasi-experimental study used panel data on 45 CCOPs nationally for years 2000–2007. Multivariable models examined organizational, research network, and environmental factors associated with accrual to treatment trials, CP/C trials, and trials overall.

Results

For total trial accrual and treatment trial accrual, the number of active CCOP physicians and the number of trials were associated with CCOP performance. Factors differ for CP/C trials. CCOPs in areas with fewer medical school-affiliated hospitals had greater treatment trial accrual.

Conclusions

Findings suggest a shift in the relevance of the clinical environment since the 1990s, as well as changes in CCOP structure associated with accrual performance. Rather than a limited number of physicians being responsible for the preponderance of trial accrual, there is a trend toward accrual among a larger number of physicians each accruing relatively fewer patients to trial. Understanding this dynamic in the context of CCOP efficiency may inform and strengthen CCOP organization and physician practice.

Keywords: Clinical research, Practice-based research networks, Environment and public health, Cancer, Community Clinical Oncology Program

1. Introduction

We stand on the edge of a new era in cancer clinical research. Rapid advances in proteomics and genomics are reshaping not only the practice of clinical cancer care, but also clinical research itself [1]. Simultaneously, there is a new imperative to improve the efficiency and productivity of the national cancer clinical trials system. The Institute of Medicine (IOM) and the National Cancer Institute’s (NCI’s) Operational Efficiency Working Group (OEWG) have recently issued stark reports advocating for substantial change in the cancer clinical trials system, which has reached “a state of crisis” [2–4]. Cancer research leaders recently reiterated this urgency at the NCI-American Society of Clinical Oncology (ASCO) symposium, and specifically emphasized better understanding of the drivers of research efficiency and productivity [5].

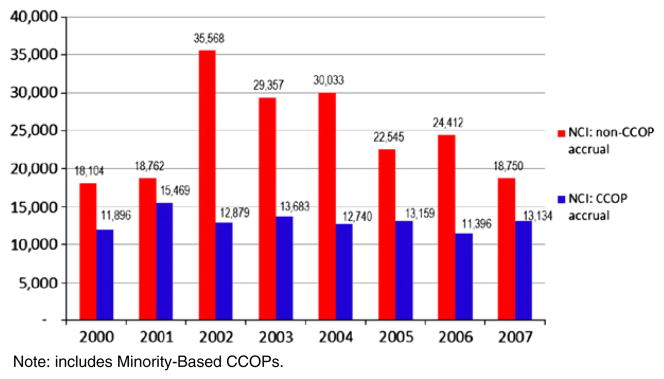

To date, the NCI Cooperative groups that develop and manage NCI clinical trials have been a focal point of emphasis, and the IOM and OEWG reports have catalyzed their massive reorganization, consolidating from 9 groups to 4 with substantial corresponding system changes [6]. However, 30% of NCI treatment trial accrual and nearly all of its cancer prevention and control (CP/C) trial accrual (over 28,000 accruals in 2007) come from the NCI Community Clinical Oncology Program (CCOP). The CCOP is tightly connected to the Cooperative groups but remains independent of them. While efforts to restructure the cooperative groups will affect CCOPs, research shows that organizational characteristics and local market conditions significantly influence CCOP productivity. For example, studies of CCOP performance in the 1990s demonstrated that managed care penetration and hospital competition were relevant factors affecting trial accrual, and that accrual to treatment trials and CP/C trials were differently affected by not only these factors, but also CCOP organizational characteristics [7–9] (Fig. 1).

Fig. 1.

Overall new accruals to NCI trials: NCI CCOP vs. NCI non-CCOP accrual, 2000–2007.

Much has changed since the 1990s. The clinical practice dynamic surrounding managed care is no longer what it was, and other factors have arisen and changed the clinical environment in which CCOPs operate. Among them, the Medicare Modernization Act of 2003 changed the reimbursement landscape substantially for cancer care, especially for chemotherapy, which is a dominant focus of investigation in NCI clinical trials [10,11]. These changes have spawned concerns regarding consequent changes in patient access and referral, which may also affect clinical trials participation [12]. As the nation engages in historic health care reform accompanied by substantial uncertainty [13,14], the current effect of the healthcare environment on NCI clinical trials is unknown.

This study reexamines the NCI CCOPs to inform and update our understanding of factors associated with trial enrollment and program sustainability. It employs a multilevel approach to examine factors associated with the CCOP, NCI research network, and local clinical care environment to understand factors associated with treatment trial accrual, CP/C trial accrual, and total trial accrual in the past 10 years. In doing so, this study addresses the calls from the IOM and NCI/ASCO to develop a better understanding of the drivers of clinical trial productivity and efficiency in the context of the clinical provider community in which cancer care is delivered.

2. Materials and methods

2.1. The NCI CCOP Program

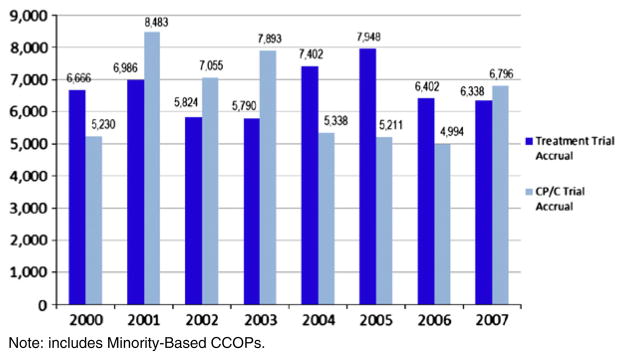

This study focuses on the NCI CCOP program, which has been described extensively elsewhere [1,15]. Briefly, the CCOP is a nationwide provider-based research network (PBRN) enabling community physicians to participate in NCI clinical research not only by enrolling patients in trials, but also by engaging them with academic researchers to contribute practice-based practical insight to collaboratively develop practical studies. The CCOP goals include not only enrolling patients onto trials, but also accelerating the use of evidence-based medicine in the community. Through first-hand participation in the clinical trials, community physicians practice the state-of-the-art science being investigated through the trial, and develop a sense of ownership and acceptance of study findings. This strengthens their likelihood of acting on the research findings and incorporating the evidence-based medicine into practice. Recent studies have validated this NCI goal, by showing that organizational participation in NCI clinical research is positively associated with adoption of state-of-the-art care [16–18] (Fig. 2).

Fig. 2.

Treatment and CP/C trial accrual in the NCI CCOP Program.

2.2. Data and sample

This single-group, longitudinal quasi-experimental study used yearly panel data from 2000 to 2007 collected from CCOP progress reports and the NCI’s CCOP, MBCCOP, and Research Base Management System. The unit of analysis was the CCOP. Because healthcare environmental data were measured at the metropolitan statistical area (MSA), we included all CCOPs that served at least one MSA and were active in the year 2000. This resulted in 45 CCOPs in the year 2000 decreasing to 41 CCOPs in 2007, for a total of 355 CCOP-year observations in the analytic sample.

Three dependent variables were separately examined as markers of CCOP performance: treatment trial accrual, CP/C trial accrual, and total trial accrual. Independent variables included CCOP characteristics, CCOP-Research Base (RB) network characteristics, and environmental characteristics. Among CCOP characteristics, we examined the number of active and enrolling CCOP physicians, the number of CCOP components, the number of active institutional review boards (IRBs), and the number of CCOP or RB meetings attended by CCOP physicians or staff. A Gini index was developed to characterize each CCOP site’s accrual as being concentrated among a few CCOP physicians, or more broadly and evenly distributed among multiple physicians [19]. This index was used to create a categorical variable indicating low, medium, and high physician accrual equity. The CCOP-RB network characteristics included the number of affiliated RBs and the number of treatment trials, CP/C trials, and total trials for which the CCOP had at least one accrual during the year.
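As an illustration of the accrual equity measure, the following minimal Python sketch computes a Gini coefficient from per-physician accrual counts and maps it to an equity category. The function names and the cut points (0.4 and 0.6) are hypothetical and chosen only for illustration; the study does not report the thresholds it used.

```python
def gini(accruals):
    """Gini coefficient of non-negative per-physician accrual counts.

    0 means every physician accrued the same number of patients;
    values closer to 1 mean accrual is concentrated among few physicians.
    """
    values = sorted(accruals)
    n = len(values)
    total = sum(values)
    if n == 0 or total == 0:
        return 0.0
    # Standard formula on sorted data: G = sum_i (2i - n - 1) * x_i / (n * sum x)
    weighted = sum((2 * i - n - 1) * x for i, x in enumerate(values, start=1))
    return weighted / (n * total)


def equity_category(g, low_cut=0.4, high_cut=0.6):
    """Map a Gini value to an equity label (cut points are hypothetical)."""
    if g >= high_cut:
        return "low equity"     # accrual concentrated among few physicians
    if g >= low_cut:
        return "medium equity"
    return "high equity"        # accrual spread evenly across physicians


# One physician accrues every patient vs. perfectly even accrual
concentrated = gini([0, 0, 0, 20])
even = gini([5, 5, 5, 5])
```

Note that a high Gini value corresponds to low accrual equity, so the category labels run in the opposite direction from the index itself.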

To measure environmental characteristics, we used managed care penetration, hospital competition, and clinical trials competition. Using the InterStudy Competitive Edge Regional Market Analysis dataset, managed care penetration was measured as health maintenance organization (HMO) penetration for each CCOP’s MSA [20]. HMO penetration was calculated by dividing the number of HMO enrollees in the MSA by its total population. For hospital competition, we used the American Hospital Association (AHA) Survey Database to calculate the Herfindahl–Hirschman Index (HHI) for each MSA, based on each short-term acute care hospital’s total number of admissions divided by the total of all admissions in the MSA, then squared and summed [21]. The final measure was calculated as one minus the HHI. Data from the Area Resource File were used to develop a measure of clinical trials availability or competition in the MSA, measured as the proportion of hospitals in the MSA that were unaffiliated with medical schools. Additional environmental control variables included the proportion of the MSA that was uninsured, and whether or not the state had a legislative mandate that regular care be covered by insurance for those enrolled in clinical trials [22].
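The two market measures described above can be sketched in a few lines of Python. This is a minimal illustration under the definitions given in the text; the function names and the example figures are hypothetical and are not drawn from the study data.

```python
def hmo_penetration(hmo_enrollees, population):
    """HMO enrollees in the MSA divided by the MSA's total population."""
    if population <= 0:
        raise ValueError("population must be positive")
    return hmo_enrollees / population


def hospital_competition(admissions):
    """One minus the Herfindahl-Hirschman Index of hospital admission shares.

    `admissions` holds total admissions for each short-term acute care
    hospital in one MSA. 0 indicates a single-hospital market; values
    approaching 1 indicate many hospitals with similar shares.
    """
    total = sum(admissions)
    if total <= 0:
        raise ValueError("total admissions must be positive")
    hhi = sum((a / total) ** 2 for a in admissions)
    return 1.0 - hhi


# Hypothetical MSAs: a monopoly market and four equally sized hospitals
monopoly = hospital_competition([12000])
four_equal = hospital_competition([3000, 3000, 3000, 3000])
penetration = hmo_penetration(220000, 1000000)
```

With four equally sized hospitals, each share is 0.25, the HHI is 0.25, and the competition measure is 0.75.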

Although the study captured data on nearly all CCOPs, the total sample size called for using a limited set of variables to conserve degrees of freedom in the multivariable analysis. Accordingly, informed by annual accrual trends and a review of preliminary regression analysis, categorical variables indicating the year were collapsed from eight variables to four reflecting the years 2000–2001, 2002–2003, 2004–2005, and 2006–2007. CCOP-level data were linked to MSA-level data based on the MSA in which the CCOP headquarters was located, as done previously [7].

The dependent variable was available for all 355 observations, as was the case for nearly all observations on independent variables. In 2006, HHI was missing for 7 observations at the MSA level. Because of the high correlation between MSA-level and county-level measures of HHI (0.829, p<0.001), county-level HHI replaced missing MSA-level values for this year. For 2007, because of the very high inertia in this measure, observations for HHI were imputed by linear interpolation/extrapolation based on immediately prior years.
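The 2007 imputation can be illustrated with a simple two-point linear extrapolation. This Python sketch assumes the two immediately preceding years are used and that the result is kept within the valid 0 to 1 range of the HHI; both choices are assumptions made for illustration, since the text does not specify how many prior years entered the calculation.

```python
def extrapolate_next(two_years_back, one_year_back):
    """Linearly extrapolate the next yearly value from the two prior years.

    The year-over-year change is carried forward one step, and the result
    is clamped to [0, 1] because the HHI cannot fall outside that range
    (the clamping is an assumption of this sketch).
    """
    projected = one_year_back + (one_year_back - two_years_back)
    return min(1.0, max(0.0, projected))


# Hypothetical HHI values for 2005 and 2006 in one MSA
hhi_2007 = extrapolate_next(0.20, 0.22)
```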

To select the functional form for the dependent variable, we used the Wooldridge test examining if logged annual accrual was superior to un-logged annual accrual in terms of overall model fit [23]. To select the functional forms of the independent variables in the model, we used adjusted R-squared values [23]. We determined that the non-logged annual accrual model and non-quadratic forms of HMO penetration and hospital competition best fit the data. Additionally, in order to allow the effect of the number of IRBs on trial accrual to vary for CCOPs with a higher or lower number of CCOP components, we examined an interaction term of number of IRBs × number of components.
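To show how an interaction term lets the effect of one variable depend on another, the sketch below computes the marginal effect of one additional IRB at a given number of components. The function name and the coefficient values are hypothetical and serve only to illustrate the arithmetic.

```python
def marginal_effect_of_irbs(beta_irb, beta_interaction, n_components):
    """Change in predicted accrual per additional IRB.

    In a model with terms beta_irb * IRBs and
    beta_interaction * (IRBs * components), the effect of one more IRB
    is beta_irb + beta_interaction * components.
    """
    return beta_irb + beta_interaction * n_components


# Hypothetical coefficients: the IRB effect is negative for a CCOP with
# few components and positive for a CCOP with many.
effect_small_ccop = marginal_effect_of_irbs(-6.0, 1.0, 4)
effect_large_ccop = marginal_effect_of_irbs(-6.0, 1.0, 10)
```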

2.3. Analytic model

Using a single-group, longitudinal panel design, we analyzed the three dependent variables separately in three models. We compared three different types of analytic methods: pooled-OLS with dummy variables for time, fixed effects, and random effects estimation. A Hausman test and a Breusch–Pagan Lagrange Multiplier test suggested that fixed effects estimation for total accrual and treatment accrual, and random effects estimation for the CP/C accrual model were most appropriate [23,24]. T-tests were used to test the significance of single variables and F-tests to examine the joint significance of categorical constructs in each of the three models described above. All analyses were performed using Stata 11, and tests were conducted using a minimum significance level of 0.05 (StataCorp. 2009. Stata Statistical Software: Release 11. College Station, TX: StataCorp LP).
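For readers less familiar with fixed effects estimation, the following Python sketch shows the within transformation for a single regressor: each CCOP's own mean is subtracted from its observations, so that stable, unobserved CCOP-level differences drop out before the slope is estimated. This is a simplified illustration, not the study's Stata code; the function name and data are hypothetical, and the actual models included many regressors.

```python
from collections import defaultdict


def within_slope(groups, x, y):
    """Fixed-effects (within) slope of y on a single regressor x.

    `groups` identifies the panel unit (for example, a CCOP) for each
    observation. Both x and y are demeaned within each unit before the
    ordinary least squares slope is computed.
    """
    sums = defaultdict(lambda: [0.0, 0.0, 0])  # unit -> [sum x, sum y, count]
    for g, xi, yi in zip(groups, x, y):
        sums[g][0] += xi
        sums[g][1] += yi
        sums[g][2] += 1

    numerator = 0.0
    denominator = 0.0
    for g, xi, yi in zip(groups, x, y):
        sum_x, sum_y, n = sums[g]
        x_dev = xi - sum_x / n
        y_dev = yi - sum_y / n
        numerator += x_dev * y_dev
        denominator += x_dev * x_dev
    if denominator == 0:
        raise ValueError("x does not vary within any group")
    return numerator / denominator


# Two hypothetical CCOPs with very different baseline accrual but the
# same within-CCOP relationship: two more accruals per added physician.
ccop = ["A", "A", "A", "B", "B", "B"]
physicians = [10, 12, 14, 30, 31, 35]
accrual = [120, 124, 128, 10, 12, 20]
slope = within_slope(ccop, physicians, accrual)
```

A pooled regression on the same data would be dominated by the difference in baseline accrual between the two CCOPs, whereas the within estimator recovers the common slope of 2.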

3. Results

During the period of 2000–2007, the average accrual per CCOP for total trials, treatment trials and CP/C trials was 240.3, 123.2, and 117.1 patients per year, respectively (Table 1). The average CCOP had approximately 21 physicians accruing patients to a trial, was affiliated with slightly less than five cooperative groups, and had accrual to 31 treatment trials and 13 CP/C trials. Accrual was evenly distributed among the time periods. The majority of CCOPs (52%) were located in the North Central region of the United States. Bivariate correlations are presented in Table 2.

Table 1.

Descriptive characteristics of the CCOP analytic sample.

| Variable | Mean | (Std. dev.) | [Range] |

|---|---|---|---|

| Performance variables | |||

| Total accrual | 240.28 | (160.12) | [4–1027] |

| CP/C accrual | 123.22 | (76.55) | [0–733] |

| Treatment accrual | 117.06 | (102.11) | [0–508] |

| CCOP characteristics | |||

| Num. of active CCOP MDs | 21.35 | (14.96) | [0–96] |

| Num. of CCOP components | 6.60 | (5.25) | [0–29] |

| Num. of active IRBs | 2.93 | (3.07) | [1–19] |

| Num. of CCOP or RB meetings attended | 29.22 | (39.30) | [0–281] |

| Low physician accrual equity | 0.36 | (0.48) | [0–1] |

| Medium physician accrual equity | 0.38 | (0.49) | [0–1] |

| High physician accrual equity | 0.26 | (0.44) | [0–1] |

| CCOP-RB network characteristics | |||

| Num. of affiliated RBs | 4.93 | (2.54) | [0–10] |

| Num. of total trials | 43.95 | (19.78) | [5–120] |

| Num. of treatment trials | 30.97 | (14.69) | [1–85] |

| Num. of CP/C trials | 12.98 | (6.37) | [1–40] |

| Environmental characteristics | |||

| Hospital competition (1-HHI) | 0.79 | (0.15) | [0.45–0.98] |

| HMO penetration | 0.22 | (0.12) | [0.01–0.64] |

| Proportion of MSA uninsured | 0.13 | (0.04) | [0.07–0.33] |

| High proportion of hospitals in MSA with medical school affiliation | 0.34 | (0.48) | [0–1] |

| Medium proportion of hospitals in MSA with medical school affiliation | 0.34 | (0.47) | [0–1] |

| Low proportion of hospitals in MSA with medical school affiliation | 0.32 | (0.47) | [0–1] |

| State insurance mandate | 0.26 | (0.44) | [0–1] |

| Time variables (mean n, % of sample in each year) | |||

| 2000–2001 | 45.0 | (25.4%) | |

| 2002–2003 | 45.0 | (25.4%) | |

| 2004–2005 | 44.5 | (25.1%) | |

| 2006–2007 | 43.0 | (24.2%) | |

| Region (mean n, % of sample in each region) | |||

| Northeast | 0.0 | (0.0%) | |

| Mid-Atlantic Region | 4.9 | (11.0%) | |

| East North Central Region | 13.0 | (29.3%) | |

| West North Central Region | 9.9 | (22.3%) | |

| South Atlantic Region | 5.0 | (11.3%) | |

| East South Central Region | 0.0 | (0.0%) | |

| West South Central Region | 3.0 | (6.8%) | |

| Mountain Region | 3.6 | (8.2%) | |

| Pacific Region | 5.0 | (11.3%) | |

Table 2.

Correlation matrix of CCOP characteristics.

| | (1) | (2) | (3) | (4) | (5) | (6) | (7) | (8) | (9) | (10) | (11) | (12) | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| (1) Total accrual | 1.000 | ||||||||||||

| (2) Treatment accrual | 0.860*** | 1.000 | |||||||||||

| (3) CP/C accrual | 0.924*** | 0.598*** | 1.000 | ||||||||||

| (4) Number of total trials | 0.783*** | 0.806*** | 0.623*** | 1.000 | |||||||||

| (5) Number of active physicians | 0.585*** | 0.643*** | 0.436*** | 0.493*** | 1.000 | ||||||||

| (6) Number of components | 0.467*** | 0.565*** | 0.308*** | 0.404*** | 0.439*** | 1.000 | |||||||

| (7) Number of affiliated IRBs | 0.401*** | 0.465*** | 0.280*** | 0.266*** | 0.464*** | 0.268*** | 1.000 | ||||||

| (8) Number of affiliated IRBs per component | 0.557*** | 0.626*** | 0.404*** | 0.386*** | 0.644*** | 0.494*** | 0.890*** | 1.000 | |||||

| (9) Number of meetings attended | 0.404*** | 0.386*** | 0.345*** | 0.345*** | 0.301*** | 0.089* | 0.450*** | 0.422*** | 1.000 | ||||

| (10) Number of affiliated RBs | 0.274*** | 0.257*** | 0.238*** | 0.297*** | 0.228*** | 0.074 | 0.047 | 0.088 | 0.107** | 1.000 | |||

| (11) Low physician accrual equity (uneven distribution) | 0.124*** | 0.150*** | 0.083 | 0.043 | 0.060 | −0.002 | 0.173** | 0.164*** | 0.009 | 0.010 | 1.000 | ||

| (12) Medium physician accrual equity | −0.027 | −0.002 | −0.041 | 0.063 | 0.040 | 0.068 | −0.106*** | −0.066 | −0.091* | 0.101* | −0.592*** | 1.000 | |

| (13) High physician accrual equity (even distribution) | −0.107** | −0.163*** | −0.046 | −0.118** | −0.110** | −0.074 | −0.072 | −0.107** | 0.092* | −0.123** | −0.441 | −0.463*** | |

| (14) Number of treatment trials | 0.759*** | 0.802*** | 0.588*** | 0.975*** | 0.487*** | 0.416*** | 0.287** | 0.403*** | 0.352*** | 0.291*** | 0.074 | 0.044 | |

| (15) Number of CP/C trials | 0.682*** | 0.654*** | 0.579*** | 0.858*** | 0.406*** | 0.297*** | 0.164** | 0.271*** | 0.262*** | 0.249*** | −0.036 | 0.094* | |

| (16) Hospital competition (1-HHI) | −0.258*** | −0.203*** | −0.253*** | −0.381** | 0.106** | −0.034 | 0.053 | 0.033 | −0.121** | −0.025 | −0.049 | 0.085 | |

| (17) HMO penetration | −0.187*** | −0.159*** | −0.174*** | −0.213** | 0.017 | −0.057 | −0.061 | −0.053 | −0.108** | −0.039 | 0.077 | 0.010 | |

| (18) Uninsured penetration | −0.272*** | −0.228*** | −0.255*** | −0.358** | −0.106** | −0.108** | 0.135** | 0.048 | −0.112** | −0.116** | 0.182*** | −0.146 | |

| (19) High proportion of hospitals in MSA with medical school affiliation | −0.023 | 0.009 | −0.042 | 0.046 | −0.063 | 0.151*** | −0.076 | −0.075 | −0.147*** | −0.050 | −0.058 | −0.004 | |

| (20) Medium proportion of MSA hospitals with medical school affiliation | 0.113** | 0.098* | 0.104* | 0.082 | 0.098* | −0.048 | 0.064 | 0.069 | 0.252*** | 0.093* | −0.094* | 0.057 | |

| (21) Low proportion of MSA hospitals with medical school affiliation | −0.092* | −0.109** | −0.063 | −0.131** | −0.036 | −0.105** | 0.012 | 0.006 | −0.107** | −0.044 | 0.033 | 0.001 | |

| (22) State Insurance Mandate | −0.103* | −0.066 | −0.112** | −0.053 | 0.132** | −0.056 | 0.012 | 0.089* | −0.029 | 0.056 | −0.012 | 0.013 | |

| (23) 2002–2003 | −0.006 | −0.127** | 0.086 | −0.061 | 0.001 | −0.010 | −0.026 | −0.013 | 0.045 | 0.073 | −0.060 | −0.006 | |

| (24) 2004–2005 | −0.014 | 0.132** | −0.120** | 0.075 | 0.012 | −0.014 | −0.026 | −0.019 | 0.010 | 0.189*** | −0.028 | 0.079 | |

| (25) 2006–2007 | −0.070 | −0.067 | −0.060 | 0.024 | −0.005 | 0.012 | −0.029 | −0.008 | −0.084 | −0.151*** | −0.096 | 0.041 | |

| | (13) | (14) | (15) | (16) | (17) | (18) | (19) | (20) | (21) | (22) | (23) | (24) | (25) |

| (13) High physician accrual equity (even distribution) | 1.000 | ||||||||||||

| (14) Number of treatment trials | −0.130** | 1.000 | |||||||||||

| (15) Number of CP/C trials | −0.065 | 0.721*** | 1.000 | ||||||||||

| (16) Hospital competition (1-HHI) | −0.041 | −0.373*** | −0.320*** | 1.000 | |||||||||

| (17) HMO penetration | −0.095* | −0.171*** | −0.265*** | 0.323*** | 1.000 | ||||||||

| (18) Uninsured penetration | −0.038 | −0.328*** | −0.356*** | 0.268*** | 0.222*** | 1.000 | |||||||

| (19) High proportion of hospitals in MSA with medical school affiliation | 0.062 | 0.052 | 0.024 | −0.324*** | −0.134 | −0.129 | 1.000 | ||||||

| (20) Medium proportion of MSA hospitals with medical school affiliation | 0.041 | 0.066 | 0.102* | 0.240 | 0.071 | 0.012 | −0.520*** | 1.000 | |||||

| (21) Low proportion of MSA hospitals with medical school affiliation | −0.038 | −0.120** | −0.129** | 0.087 | 0.064 | 0.120** | −0.491*** | −0.488*** | 1.000 | ||||

| (22) State insurance mandate | −0.001 | −0.056 | −0.035 | 0.098* | 0.192*** | 0.152*** | −0.192*** | 0.053 | 0.142*** | 1.000 | |||

| (23) 2002–2003 | 0.073 | −0.045 | −0.087 | 0.000 | 0.042 | 0.014 | 0.056 | 0.004 | −0.061 | 0.047 | 1.000 | ||

| (24) 2004–2005 | −0.057 | 0.046 | 0.127** | −0.008 | 0.011 | 0.063 | −0.008 | −0.032 | 0.041 | 0.110** | −0.337*** | 1.000 | |

| (25) 2006–2007 | 0.060 | −0.030 | 0.144*** | −0.010 | −0.148*** | −0.120** | −0.077 | 0.010 | 0.069 | 0.093* | −0.330** | −0.327*** | 1.000 |

In the total trial accrual model, only the number of active CCOP physicians (p<.009) and the number of total trials (p<.001) were significant. An increase in either of these factors was associated with an increase in total clinical trial accrual. The categorical variable indicating low, medium, and high physician accrual equity was not jointly significant [F(2, 292)=1.0, Prob>F=0.37]. The marginal effect of Number of IRBs and Number of components (using the interaction) was not significant in this or any of the other models. The only significant environmental factor was the categorical variable for proportion of hospitals in MSA with medical school affiliations [F(2, 292)= 3.3, Prob>F=0.04]; CCOPs in MSAs with fewer medical school affiliated hospitals were associated with higher total trial accrual.

In the treatment accrual model, the number of active CCOP physicians (p<.001) and the number of treatment trials (p<.001) were significantly associated with greater treatment trial accrual. The categorical variable indicating low, medium, and high physician accrual equity was jointly significant [F(2, 292)=3.1, Prob>F=0.047]. In this model, lower accrual equity among physicians (i.e., the majority of accrual concentrated among few physicians) was generally associated with higher treatment trial accrual, though this association was not consistently statistically significant. Similar to the total accrual model, the CCOPs in MSAs with fewer medical school affiliated hospitals were significantly associated with greater treatment trial accrual [F(2, 292)=3.1, Prob>F=0.047].

In the CP/C model, the number of CP/C trials (p<.001) and the number of RB affiliations (p<.028) were significantly associated with greater CP/C trial accrual. Unlike the treatment trial accrual model, higher accrual equity among physicians was generally associated with higher CP/C trial accrual; however, this was not statistically significant. Hospital competition was significantly associated with a decrease in CP/C trial accrual (p<0.039). All other variables and constructs were statistically insignificant (Table 3).

Table 3.

Regression results: CCOP, network, and environmental factors associated with CCOP trial accrual.

| Variable | Total accrual | | Treatment accrual | | CP/C accrual | |

|---|---|---|---|---|---|---|

| | β | (S.E.) | β | (S.E.) | β | (S.E.) |

| CCOP characteristics | ||||||

| Num. of active CCOP MDs | 2.575*** | (0.984) | 1.322*** | (0.344) | 0.862 | (0.514) |

| Num. of CCOP components | −3.063 | (3.468) | −1.151 | (1.231) | 0.369 | (1.392) |

| Num. of active IRBs | −6.388 | (6.708) | 0.639 | (2.393) | −6.292 | (4.038) |

| Num. of CCOP components × num. of active IRBs | 0.951 | (0.697) | 0.096 | (0.249) | 0.695* | (0.305) |

| Num. of CCOP or RB meetings attended | 0.356 | (0.373) | 0.121 | (0.132) | 0.216 | (0.168) |

| Low physician accrual equity | – | – | – | – | – | – |

| Medium physician accrual equity | 0.749 | (10.505) | −6.962* | (3.746) | −3.664 | (9.098) |

| High physician accrual equity | 14.563 | (12.456) | −10.796 | (4.445) | 10.074 | (10.557) |

| CCOP-RB network characteristics | ||||||

| Num. of affiliated RBs | 2.838 | (1.843) | −0.250 | (0.658) | 3.546* | (1.612) |

| Num. of total trials | 2.291*** | (0.480) | – | – | – | – |

| Num. of treatment trials | – | – | 1.388*** | (0.209) | – | – |

| Num. of CP/C trials | – | – | – | – | 5.607*** | (0.893) |

| Environmental characteristics | ||||||

| Hospital competition (1-HHI) | 252.382 | (215.919) | 135.828 | (76.580) | −92.949* | (45.067) |

| HMO penetration | −60.606 | (76.947) | −27.235 | (27.420) | −20.235 | (48.148) |

| Proportion of MSA uninsured | −71.556 | (260.821) | 129.267 | (93.093) | −193.766 | (152.635) |

| Low proportion of hospitals in MSA with medical school affiliation | – | – | – | – | – | – |

| Medium proportion of hospitals in MSA with medical school affiliation | −19.450 | (1.210) | 7.930 | (1.380) | −12.250 | (1.060) |

| High proportion of hospitals in MSA with medical school affiliation | −57.115* | (2.490) | −5.087 | (0.062) | −30.993* | (2.310) |

| State insurance mandate | 1.067 | (17.372) | 3.862 | (6.201) | −15.420 | (11.909) |

| Time variables | ||||||

| 2000–2001 | – | – | – | – | – | – |

| 2002–2003 | −27.571* | (11.459) | −19.986*** | (4.109) | −8.435 | (10.202) |

| 2004–2005 | −44.152*** | (12.110) | 9.280*** | (4.291) | −58.754*** | (10.871) |

| 2006–2007 | −57.296*** | (13.166) | −13.058** | (4.694) | −49.680*** | (11.373) |

| Intercept | −46.030 | (0.260) | −56.170 | (0.890) | 152.000*** | (3.370) |

N=355, G=45.

Test for joint significance of physician accrual equity: F(2, 292)=1.00 (total accrual); F(2, 292)=3.07 (treatment accrual); chi2(2)=2.14 (CP/C accrual).

*p<0.05. **p<0.01. ***p<0.001.

4. Discussion

This study examined the organizational and environmental characteristics associated with accrual in the NCI’s CCOP program. One of the most striking findings in the analysis may be that, compared with analyses of the prior decade [7], very few traditionally-measured CCOP organizational or environmental characteristics remain associated with CCOP accrual performance. A hallmark of the CCOP has been its long history of performance data collection and a philosophy of systematic self-examination to inform continuous improvement [1,7–9,25,26]. As the CCOP continues to embrace this philosophy and engage in strategic planning efforts, also incorporating guidance from the recent IOM and OEWG [3,4], one of its most important priorities may be to examine new measures associated with organizational performance and implement them to augment the set of measures it currently collects.

Interpreting the results of this analysis in the context of prior research, we see CCOPs experiencing higher per-CCOP accrual in recent years, suggesting greater efficiencies in operation than before. The CCOPs had a comparable number of CP/C trials open, though substantially fewer treatment trials open compared to the 1990s. Given this trend, funding previously allocated to administrative staff for opening and processing trials may have been reallocated to staff who enrolled or helped manage patients on trials, which may be a key driver of the apparent accrual efficiencies. CCOPs in the 2000s tended to be larger and have a slightly greater number of accruing physicians than those in the 1990s, further suggesting that achieving a “critical mass” may be associated with CCOP success. This may be through greater programmatic stability (i.e., better able to absorb individual physicians coming and going) or broader access to additional funding from affiliated hospitals and clinical programs. Alternatively, it may offer enhanced access to a greater number of patients or a more diverse patient population. This may also be reflected in CCOPs recently being affiliated with a greater number of Research Bases than before, with associated access to a more diverse set of clinical trials from which to choose to meet the needs of the more diverse population.

The non-significance of HMO penetration and hospital competition suggests that CCOPs able to adapt to the disruptive changes in healthcare financing seen in the 1980s and 1990s were more likely to survive and thrive in the 2000s. It may also indicate that the once-volatile market characteristics are in fact less disruptive than they once were.

For CP/C accrual, surprisingly few factors are strongly predictive of performance compared to those associated with either CP/C accrual in the 1990s or treatment accrual in the 2000s. Another notable contrast is the physician accrual profile, in which treatment trial performance appears to have favored a few physicians who were responsible for most accrual, compared to a broader set of physicians with more equal accrual among CP/C trials. Comparing CP/C and treatment models further, statistical specification tests suggested that a different model (Random Effects estimation) had a better fit for CP/C compared to treatment (Fixed Effects estimation). Together, these points suggest that factors associated with CP/C accrual performance are fundamentally different from those associated with treatment accrual. In practical terms, this observation reflects the reality of CCOP operations and is quite logical, as treatment accrual tends to take place in oncology practices while CP/C accrual commonly takes place in a much broader set of clinical as well as non-clinical settings. Therefore, it makes sense that organizational and environmental factors associated with oncology treatment may differently affect accrual to treatment trials compared to accrual to CP/C trials, for example, in primary care practices, follow-up clinics, or the broader non-clinical community.

Study findings suggest that, more so than clinical competition, there may be academic competition. Specifically, there tends to be less accrual among CCOPs in communities with a stronger academic presence vis-à-vis the proportion of hospitals affiliated with a medical school. It may be that patients who would contemplate enrollment in a clinical trial would be likely to accept referral or self-refer to an academic center, rather than be referred to a CCOP practice. On one hand, this speaks to the CCOP’s strategic priority focus outside of the academic medical centers, and the establishment of local CCOPs in communities that do not have a medical school. Conversely, it may be that CCOPs in communities with a strong academic presence are functionally different from other CCOPs either in terms of their structure, the trials they offer, the strength or consistency of referral relationships, or the different practice focus and priorities of CCOP practitioners in these communities. Again, we look at the trend toward greater treatment trial accrual among CCOPs with low physician accrual equity (i.e., a small proportion of physicians have a preponderance of accrual). There are several possible causes of this phenomenon. CCOP physicians in communities where there is an academic presence may feel greater pressure to more broadly serve the practice organization’s many missions, rather than “specialize” in clinical research. This may contrast with communities without academic centers, where the CCOP may be the only source of clinical trial participation, and thus functionally serve as “the academic center” for that community, in turn promoting cultural norms that encourage specialization in different areas among professionals: clinical care, research, and others. Additionally, this finding may indicate that a dynamic, motivated physician may provide a bolus in trial enrollment. It may also suggest that small practices with a strong focus on trial enrollment may outperform their larger peer practices. Further research should examine these issues, as understanding them may inform CCOP organization and physician practice focus, and strengthen those CCOPs operating in both academic and non-academic communities.

4.1. Limitations

Among study limitations, our study involves a sample of only 45 CCOPs followed for eight years. Though this represents nearly all CCOPs, it is a small sample to examine with fixed effects and random effects estimation, and close attention must be paid to balance sufficient model specification with a need for erring on the side of parsimony in terms of degrees of freedom. A tradeoff of being parsimonious is that this minimally-specified model may suffer from excluding relevant variables. This study examined the variables collected by CCOPs that were most relevant and incorporated additional measures. However, there may be other environmental and organizational factors or patient population, physician, hospital and clinical trial specific characteristics that may have had an effect on clinical trial accrual for which our model did not control. If these factors truly belonged in the model, omitting them will result in biased estimates. As the CCOP goes forward to identify additional relevant variables, understanding the relative strength of relevance for each will be important.

In addition, many of our environmental variables are linked to the CCOPs by the MSA in which the CCOP headquarters is located, which is suboptimal because some CCOPs are active in more than one MSA. In these instances, variables such as hospital competition or HMO penetration might not comprehensively reflect the environment in which the CCOP actually operates. However, most CCOPs operate in a limited region, and for this study sample there was limited variation within these regions, and thus limited risk of bias due to this measurement method. In this study, hospital competition was based on the number of hospital admissions; given the outpatient nature of the majority of cancer care, it may be more relevant to measure hospital competition differently in the future. Finally, physician competition was measured using the number of practicing physicians in the MSA. Given that this study focuses on clinical trials for cancer patients, it may have been more accurate to reflect physician competition in terms of practicing oncologists in the MSA or CCOP service area. These data were not available for this study.
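To illustrate why the choice of volume measure matters, the sketch below computes a Herfindahl-Hirschman-style concentration index from market shares. This is an assumption made for illustration: the text above states only that hospital competition was based on admissions, not which index was used, and the hospital volumes are hypothetical. The same function can be applied to inpatient admissions or to an outpatient measure, and the two can give different pictures of the same market.

```python
def concentration_index(volumes):
    """Sum of squared market shares (Herfindahl-Hirschman style).

    Returns 1.0 for a single-provider market and approaches 0 as volume
    is spread across many equally sized providers.
    """
    total = sum(volumes)
    if total <= 0:
        raise ValueError("total volume must be positive")
    return sum((v / total) ** 2 for v in volumes)


# Hypothetical volumes for the same four hospitals in one MSA.
inpatient_admissions = [12000, 3000, 3000, 2000]
outpatient_oncology_visits = [5000, 5000, 5000, 5000]

print(f"index on admissions:        {concentration_index(inpatient_admissions):.3f}")
print(f"index on outpatient visits: {concentration_index(outpatient_oncology_visits):.3f}")
```

In this hypothetical market, the inpatient measure suggests a concentrated market while the outpatient measure suggests an evenly contested one.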

5. Conclusions

More so than any one organizational or environmental characteristic, the flexibility of CCOPs and the motivation of their research community have been central aspects enabling a great number of CCOPs to succeed [1]; CCOPs that stopped operations may have done so in part because they were unable to adapt on the local level to the changing environments around them. The CCOP’s consistent history of self-examination has fueled both its flexibility and success. Moving forward, as the CCOP continues its strategic planning, it will be important to understand factors that drive performance and to systematically identify measures allowing these factors to be monitored over time, to provide ongoing feedback and inform the performance and success of local CCOPs. The successes of CCOP and PBRNs like it that reach broadly into the community are of increasing importance as development of effective treatments for all populations increasingly demands access to heterogeneous populations for research. Accordingly, understanding factors relevant to PBRN success will continue to be of great importance. This knowledge will thus inform the National Institutes of Health Roadmap and be responsive to the IOM, NCI, and NCI/ASCO as the CCOP pursues its goals of accelerating the pace of scientific discovery, while facilitating the translation of evidence-based research into practice.

Acknowledgments

This analysis was funded in part by contract HHSN-261200800726P: CCOP Sustainability: Assessing the Interplay Between Organizational, environmental, and Network Factors that Predict long-term Performance and Survival; and 5R01CA124402: Implementing Systemic Interventions to Close the Discovery Delivery Gap; and 5R25CA116339: NCI Cancer Care Quality Training Program. We thank Lori Minasian, Cynthia Whitman, and colleagues at the NCI Division of Cancer Prevention for their gracious assistance with access to key information and insight relevant to this examination.

Footnotes

NCI Research Bases include the NCI Cooperative Groups and four other NCI affiliated organizations that develop and manage clinical trials conducted through the NCI research networks.

References

- 1. Minasian LM, Carpenter WR, Weiner BJ, et al. Translating research into evidence-based practice: the National Cancer Institute Community Clinical Oncology Program. Cancer. 2010;116(19):4440–9. doi: 10.1002/cncr.25248.

- 2. National Cancer Institute. Special issue: clinical trials enrollment. NCI Cancer Bull. 2010;7(10).

- 3. Institute of Medicine. A national cancer clinical trials system for the 21st century: reinvigorating the NCI Cooperative Group Program. Prepublication copy. Washington, DC; 2010.

- 4. National Cancer Institute. Report of the Operational Efficiency Working Group Clinical Trials and Translational Research Advisory Committee. Bethesda, MD: DHHS; 2010.

- 5. ASCO. Cancer trial accrual symposium; April 29–30, 2010; Bethesda, MD: http://university.asco.org/CT2010.

- 6. Printz C. NCI, cooperative groups gear up for changes in clinical trials system: new policies initiated in response to institute of medicine report. Cancer. 2011;117(10):2017–9. doi: 10.1002/cncr.26176.

- 7. Carpenter WR, Weiner BJ, Kaluzny AD, Domino ME, Lee SY. The effects of managed care and competition on community-based clinical research. Med Care. 2006 Jul;44(7):671–9. doi: 10.1097/01.mlr.0000220269.65196.72.

- 8. McKinney MM, Weiner BJ, Carpenter WR. Building community capacity to participate in cancer prevention research. Cancer Control. 2006 Oct;13(4):295–302. doi: 10.1177/107327480601300407.

- 9. Weiner BJ, McKinney MM, Carpenter WR. Adapting clinical trials networks to promote cancer prevention and control research. Cancer. 2006;106(1):180–7. doi: 10.1002/cncr.21548.

- 10. Elliott SP, Jarosek SL, Wilt TJ, Virnig BA. Reduction in physician reimbursement and use of hormone therapy in prostate cancer. J Natl Cancer Inst. 2010;102(24):1826–34. doi: 10.1093/jnci/djq417.

- 11. Weight CJ, Klein EA, Jones JS. Androgen deprivation falls as orchiectomy rates rise after changes in reimbursement in the U.S. Medicare population. Cancer. 2008 May 15;112(10):2195–201. doi: 10.1002/cncr.23421.

- 12. Friedman JY, Curtis LH, Hammill BG, et al. The Medicare Modernization Act and reimbursement for outpatient chemotherapy: do patients perceive changes in access to care? Cancer. 2007;110(10):2304–12. doi: 10.1002/cncr.23042.

- 13. Edge SB, Zwelling LA, Hohn DC. The anticipated and unintended consequences of the patient protection and affordable care act on cancer research. Cancer J. 2010 Nov–Dec;16(6):606–13. doi: 10.1097/PPO.0b013e318201fdac.

- 14. Albright HW, Moreno M, Feeley TW, et al. The implications of the 2010 Patient Protection and Affordable Care Act and the Health Care and Education Reconciliation Act on cancer care delivery. Cancer. 2011;117(8):1564–74. doi: 10.1002/cncr.25725.

- 15. National Cancer Institute, Division of Cancer Prevention. Community Clinical Oncology Program (CCOP). http://prevention.cancer.gov/programs-resources/programs/ccop2011. Accessed June 20, 2011.

- 16. Warnecke RB, Johnson TP, Kaluzny AD, Ford LG. The community clinical oncology program: its effect on clinical practice. Jt Comm J Qual Improv. 1995 Jul;21(7):336–9. doi: 10.1016/s1070-3241(16)30158-4.

- 17. Laliberte L, Fennell ML, Papandonatos G. The relationship of membership in research networks to compliance with treatment guidelines for early-stage breast cancer. Med Care. 2005;43(5):471. doi: 10.1097/01.mlr.0000160416.66188.f5.

- 18. Carpenter W, Reeder-Hayes K, Bainbridge J, et al. The role of organizational affiliations and research networks in the diffusion of breast cancer treatment innovation. Med Care. 2011;49(2):172–9. doi: 10.1097/MLR.0b013e3182028ff2.

- 19. Yitzhaki S. On an extension of the Gini inequality index. Int Econ Rev. 1983;24(3):617–28.

- 20. Interstudy. Competitive Edge Database, 2000-2006. Excelsior, MN: Interstudy; 2009.

- 21. Baker L. Measuring competition in health care markets. Health Serv Res. 2001;36(10):223–51.

- 22. Murthy V, Krumholz H, Gross C. Participation in cancer clinical trials: race-, sex-, and age-based disparities. JAMA. 2004;291(22):2720. doi: 10.1001/jama.291.22.2720.

- 23. Wooldridge JM. Introductory econometrics: a modern approach. 4th ed. South-Western College Publishing; 2009.

- 24. Hausman JA. Specification tests in econometrics. Econometrica J Econ Soc. 1978;46(6):1251–71.

- 25. Kaluzny AD, Lacey LM, Warnecke R, et al. Predicting the performance of a strategic alliance: an analysis of the Community Clinical Oncology Program. Health Serv Res. 1993 Jun;28(2):159–82.

- 26. Kaluzny AD, Ricketts T, III, Warnecke R, et al. Evaluating organizational design to assure technology transfer: the case of the Community Clinical Oncology Program. J Natl Cancer Inst. 1989;81(22):1717–25. doi: 10.1093/jnci/81.22.1717.