Abstract

Background

To optimize behavior, organisms evaluate the risks and benefits of available choices. The mesolimbic dopamine (DA) system encodes information about response costs and reward delays that bias choices. However, it remains unclear whether subjective value associated with risk-taking behavior is encoded by DA release.

Methods

Here, rats (n = 11) were trained on a risk-based decision making task in which visual cues predicted the opportunity to respond for smaller certain (safer) or larger uncertain (riskier) rewards. Following training, DA release within the nucleus accumbens (NAc) was monitored on a rapid time scale using fast-scan cyclic voltammetry during the risk-based decision making task.

Results

Individual differences in risk-taking behavior were observed as animals displayed a preference for either safe or risky rewards. When only one response option was available, reward predictive cues evoked increases in DA concentration in the NAc core that scaled with each animal’s preferred reward contingency. However, when both options were presented simultaneously, cue-evoked DA release signaled the animal’s preferred reward contingency, regardless of the future choice. Further, DA signaling in the NAc core also tracked unexpected presentations or omissions of rewards, consistent with prediction error theory.

Conclusions

These results suggest that the dopaminergic projections to the NAc core encode the subjective value of future rewards that may function to influence future decisions to take risks.

Keywords: risk-taking, dopamine, nucleus accumbens, decision making, reward, value

Introduction

Organisms must learn to evaluate the costs and benefits of potential actions and bias choices towards more valuable options for optimal survival (1–4). Value-based decision making engages a specific network of nuclei including the nucleus accumbens (NAc) and midbrain dopamine (DA) neurons (5–8). Mesolimbic DA neurons show increased phasic activity to reward-paired cues and encode violations in prediction of those cues (9), while phasic DA release in the NAc encodes associations between cues and outcomes (10, 11) and is essential for stimulus-outcome learning (12, 13). DA neurons display increased activity for cues predicting higher value rewards based on reward magnitude, delay (7), probability (14), and expected value (15). Further, DA release in the NAc encodes behavioral costs and reward delays during decision making tasks (6). As such, DAergic projections to the NAc provide a neural circuit to encode future reward value that can promote adaptive decision making.

Risky decision making is one type of value-based decision making that appears to be mediated by the mesolimbic DA system and is impaired in several psychiatric disorders including drug addiction (16). Risky decisions have been modeled in humans and animals using gambling paradigms in which organisms are given choices between “playing it safe” for a smaller reward delivered 100% of the time or “taking a risk” for a larger reward delivered with less certainty. Animals display similar discounting to humans in that larger rewards are chosen less often as the probability of receiving the reward decreases (2, 17–21). In animal models, lesions (17) and inactivation (22) of the NAc induce risk-averse behaviors, even when these choices are less advantageous. Further, systemic administration of DAergic drugs differentially alters risk-taking behavior (19, 20). These results suggest that DA release in the NAc may be critical for adaptive risk-based decision making behavior. However, it is presently unclear whether different aspects of risky decision making are encoded by phasic (subsecond) DA release in the NAc.

Here, rapid DA signaling was monitored in ‘real time’ in the NAc using fast-scan cyclic voltammetry during a risky decision making task. Rats were given the option to choose between a smaller certain reward and a larger uncertain reward, and the safe and risky options were predicted by discrete cues. The results indicate that cue-evoked DA signals in the NAc core may contribute to risky decision making by reflecting the subjective value of future rewards.

Methods and Materials

Behavioral Training

Male Sprague Dawley rats aged 90–120d (275–350g) were used (see Supplement 1 for additional information). Sessions were conducted in 43 × 43 × 53cm Plexiglas chambers housed in a sound-attenuated cubicle (Med Associates, St Albans, VT). One side of the chamber had 2 retractable levers (Coulbourn Instruments, Allentown, PA) 17cm apart, with a stimulus light 6cm above each lever. A white noise speaker (80 dB) was located 12cm above the floor on the opposite wall. A houselight (100mA) was mounted 6cm above the speaker. Sucrose pellets (45 mg) were delivered to the food receptacle located equidistantly between the levers.

During training sessions 1–5, both levers were extended and both cue lights above each lever were illuminated. Responding on either lever was reinforced on a fixed ratio (FR1) schedule of reinforcement. A maximum of 100 reinforcers (50 per lever) were available. After stable responding was established (50 responses on each lever/5 sessions), training on the risky decision making task began.

During the first 10 sessions the task involved 3 types of contingencies (30 trials each) intermixed within 90 total trials per session. At this stage, only safe options were available to train rats to press each lever. The first two trial types were classified as forced-choice trials. For one trial type, a single cue light was illuminated for 5s over one lever followed by extension of both levers. Responses on the lever under the illuminated cue light within 15s were reinforced with 1 sucrose pellet. During the other forced-choice trial type, the other cue light over the second lever was illuminated (5s), followed by extension of both levers and responses (15s) on the corresponding lever were reinforced as above. Responses on the unsignaled lever were counted as “errors” and resulted in extinction of the houselight for the remainder of the trial period and no reward delivery.
During the third trial type (termed free-choice trials), both cue lights were illuminated (5s), both levers were extended, and responses on either lever (15s) were reinforced with a single food pellet. Following each lever press during any trial type, the levers were retracted and rewards were immediately delivered. The presentation of identical reward contingencies (FR1, 1 sucrose pellet) allowed animals to fully learn the predictive associations of the cue lights before the reward contingencies were altered. Furthermore, this ensured that there would be no bias in response allocation as a result of differential learning between the two levers.

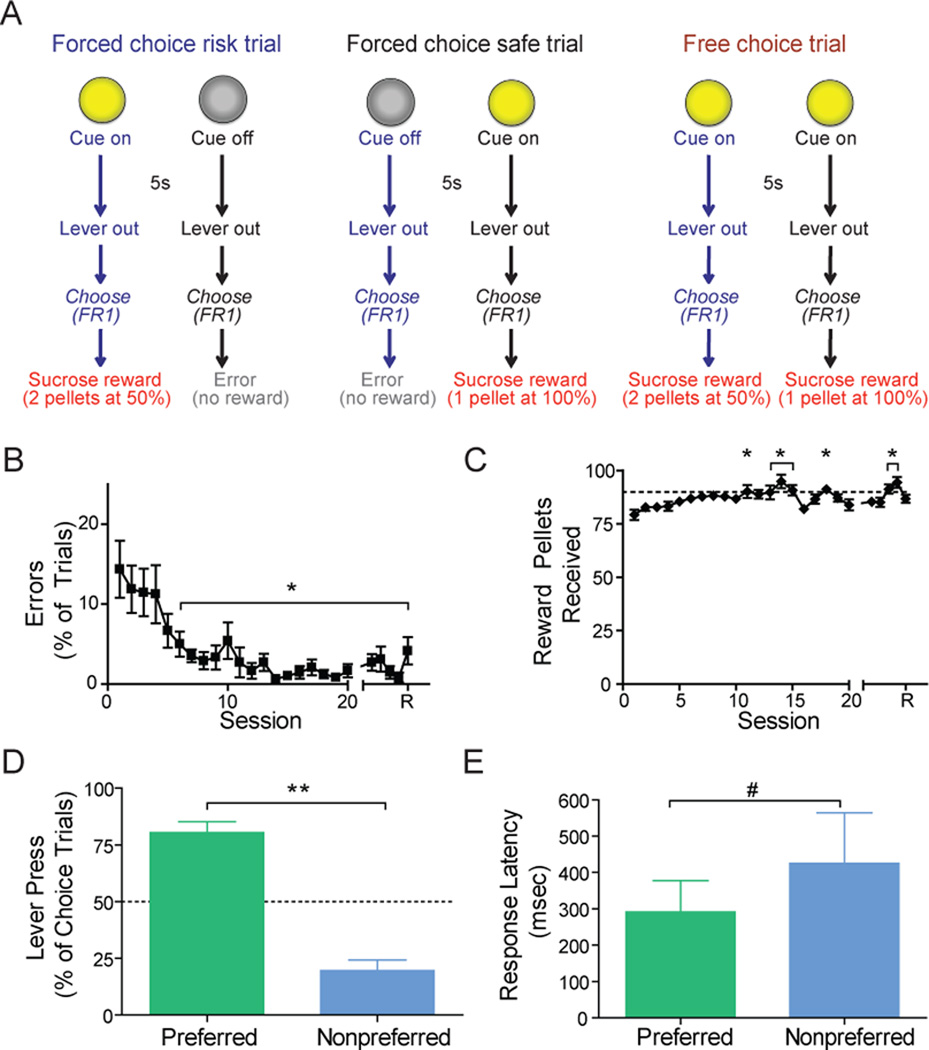

Following session 10, the reward contingency on one of the levers (counterbalanced across animals) was altered to reflect the risky decision making task (Figure 1A). The task remained identical to sessions 1–10, except the reward contingency of one lever (the risk lever) was 2 sucrose pellets 50% of the time while responses on the safe lever remained at 1 sucrose pellet 100% of the time. Animals were trained on the risky decision making task for an additional 10 sessions prior to surgical preparation for electrochemical measurements.
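As an illustrative aside (not an analysis reported here), the two contingencies can be compared numerically. The minimal Python sketch below assumes that each response is an independent draw with the stated probability; the names `CONTINGENCIES`, `expected_pellets`, and `outcome_variance` are hypothetical. Under that assumption the two levers deliver the same long-run average reward and differ only in outcome variability.

```python
# Reward contingencies as described in the text: (pellets, probability).
CONTINGENCIES = {
    "safe": (1, 1.0),  # 1 sucrose pellet on 100% of responses
    "risk": (2, 0.5),  # 2 sucrose pellets on 50% of responses
}


def expected_pellets(pellets: int, probability: float) -> float:
    """Long-run average number of pellets per response."""
    return pellets * probability


def outcome_variance(pellets: int, probability: float) -> float:
    """Variance of pellets per response for an all-or-nothing reward."""
    mean = expected_pellets(pellets, probability)
    rewarded = probability * (pellets - mean) ** 2
    omitted = (1.0 - probability) * (0 - mean) ** 2
    return rewarded + omitted


for name, (pellets, probability) in CONTINGENCIES.items():
    print(name,
          expected_pellets(pellets, probability),
          outcome_variance(pellets, probability))
```

Because the long-run payoff is matched under this assumption, a consistent preference for either lever would reflect the animal's attitude toward uncertainty rather than a difference in average reward.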

Figure 1.

Individual differences in risky decision making behavior. (A) Schematic representing task (see main text for description). (B) A significant reduction in percentage of errors and (C) a significant increase in reward pellets received across sessions compared to session 1. For B and C the behavioral data following surgery consisted of the final 4 days before the recording session (represented by R). (D) Response allocation on free-choice trials averaged across the final two training sessions and recording session. Dashed lines represent indifference point. Each animal showed a significant preference for one (safe or risk) option and (E) a trend toward decreased response latency for the preferred versus nonpreferred lever. All data are mean ± SEM. *P<0.05; **P<0.0001; #P=0.1.

Fast Scan Cyclic Voltammetry

Following training, rats were surgically prepared for voltammetric recordings as previously described (23) (see Supplement for details). One week following surgery, animals were food restricted as before and retrained on the task for 4 sessions or until they reached pre-surgery performance of less than 5% errors (maximum of 9 sessions). DA concentration changes during behavior were assessed using fast-scan cyclic voltammetry as previously described (10). A new carbon-fiber electrode, housed in the micromanipulator, was lowered into the NAc and was used to measure DA changes during task performance. The potential of the carbon-fiber electrode was held at −0.4V versus the Ag/AgCl reference electrode. Voltammetric recordings were made every 100 ms by applying a triangular waveform that drove the potential to +1.3V and back to −0.4V at a scan rate of 400V/s. DA release was electrically evoked by stimulating the ventral tegmental area (VTA) using a range of stimulation parameters (2–24 biphasic pulses, 20–60 Hz, 120µA, 2 ms per phase) to ensure that the carbon-fiber electrode was placed close to DA release sites and to create a training set for principal component regression (PCR) for the detection of DA and pH changes during the behavioral session (26). A second computer and software system (Med Associates Inc) controlled behavioral events and sent digital outputs for each event to the voltammetry recording computer to be timestamped along with the electrochemical data. In some cases (3 animals) a second recording session was completed in which an electrode was lowered to a new location in the NAc core.
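The timing implied by these waveform parameters can be checked with simple arithmetic. The following Python sketch is illustrative only: the constant and function names are assumptions, and it considers just the triangular sweep itself, ignoring any hardware settling time.

```python
# Waveform parameters taken from the text.
HOLD_POTENTIAL_V = -0.4     # holding potential vs Ag/AgCl
PEAK_POTENTIAL_V = 1.3      # switching potential
SCAN_RATE_V_PER_S = 400.0   # scan rate
SCAN_INTERVAL_S = 0.100     # one voltammogram every 100 ms


def sweep_duration_s(hold_v: float, peak_v: float, scan_rate_v_per_s: float) -> float:
    """Time for one triangular sweep: hold -> peak -> hold."""
    return 2.0 * abs(peak_v - hold_v) / scan_rate_v_per_s


def sampling_rate_hz(scan_interval_s: float) -> float:
    """Number of voltammograms (DA samples) collected per second."""
    return 1.0 / scan_interval_s


duration = sweep_duration_s(HOLD_POTENTIAL_V, PEAK_POTENTIAL_V, SCAN_RATE_V_PER_S)
print(f"sweep duration: {duration * 1e3:.1f} ms")
print(f"sampling rate: {sampling_rate_hz(SCAN_INTERVAL_S):.0f} Hz")
print(f"fraction of each interval spent scanning: {duration / SCAN_INTERVAL_S:.3f}")
```

Each sweep covers 3.4 V in total and so lasts about 8.5 ms; the electrode therefore rests at the holding potential for most of every 100 ms interval, and DA concentration is sampled at 10 Hz.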

Data analysis

The numbers of errors and reward pellets received during the task were evaluated using a one-way repeated measures ANOVA with Tukey’s HSD post-hoc test. Response allocation on choice trials was calculated as 3-day averages of lever press behavior on free-choice trials. Animals that displayed at least 60% responses on one lever during choice trials of the recording session were considered to have a preference for one of the reward contingencies. Animals that showed a behavioral preference were included in all subsequent analyses involving response preferences. An unpaired two-tailed t-test was used to compare responses to chance level and paired two-tailed t-tests were used to compare preferred versus nonpreferred responses. Response latencies during the recording session were calculated by taking the average latency to lever press during forced-choice trials on the preferred and nonpreferred lever. Trials in which the response latency was greater than 10 seconds were excluded from analysis. The average response latencies were compared using a paired two-tailed t-test.
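The preference criterion and latency exclusion rule described above can be sketched as follows. This is a minimal Python illustration, not the software used in the study; the function names and the example counts are hypothetical, and only the 60% criterion and the 10 s cutoff come from the text.

```python
from statistics import mean

PREFERENCE_CRITERION = 0.60  # at least 60% of free-choice responses on one lever
MAX_LATENCY_S = 10.0         # latencies above this value are excluded


def classify_preference(risk_presses: int, safe_presses: int,
                        criterion: float = PREFERENCE_CRITERION) -> str:
    """Label an animal 'risk', 'safe', or 'none' from free-choice press counts."""
    total = risk_presses + safe_presses
    if total == 0:
        raise ValueError("no free-choice responses recorded")
    if risk_presses / total >= criterion:
        return "risk"
    if safe_presses / total >= criterion:
        return "safe"
    return "none"


def mean_latency(latencies_s, max_latency_s: float = MAX_LATENCY_S) -> float:
    """Average press latency after dropping trials slower than the cutoff."""
    kept = [t for t in latencies_s if t <= max_latency_s]
    if not kept:
        raise ValueError("all trials exceeded the latency cutoff")
    return mean(kept)


# Hypothetical press counts and latencies, for illustration only.
print(classify_preference(risk_presses=24, safe_presses=6))
print(classify_preference(risk_presses=16, safe_presses=14))
print(mean_latency([1.0, 2.0, 12.0]))
```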

Changes in extracellular DA concentration were assessed by aligning DA concentration traces to cue presentation and lever extension events. Group increases or decreases in NAc DA concentration from baseline in response to cue presentation were evaluated separately for each trial type (preferred, nonpreferred, and choice) using a one-way repeated measures ANOVA with Dunnett’s correction for multiple comparisons. This analysis compared the baseline mean DA concentration (5s prior to cue onset) to each data point (100 msec bin) obtained within 2s following the cue presentation.
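To make the windowing concrete, the sketch below shows one way a cue-aligned trace could be split into the 5 s baseline and the 20 post-cue bins that are compared against it. It is a hedged Python illustration with hypothetical names (`split_trace`, `cue_index`) and a made-up trace; it does not reproduce the ANOVA itself.

```python
from statistics import mean

BIN_S = 0.1        # one DA sample per 100 ms
BASELINE_S = 5.0   # baseline window before cue onset
RESPONSE_S = 2.0   # analysis window after cue onset


def split_trace(trace, cue_index: int):
    """Return (baseline mean, list of post-cue bins) for one cue-aligned trace."""
    n_baseline = round(BASELINE_S / BIN_S)   # 50 bins
    n_response = round(RESPONSE_S / BIN_S)   # 20 bins
    if cue_index < n_baseline or cue_index + n_response > len(trace):
        raise ValueError("trace too short for the requested windows")
    baseline = trace[cue_index - n_baseline:cue_index]
    response = trace[cue_index:cue_index + n_response]
    return mean(baseline), response


# Made-up trace: flat baseline followed by a brief transient after the cue.
example = [0.0] * 50 + [1.0, 3.0, 2.0] + [0.0] * 17
baseline_mean, post_cue = split_trace(example, cue_index=50)
print(baseline_mean, len(post_cue), max(post_cue))
```

The maximum of the post-cue bins corresponds to the peak DA concentration within 2 s of cue presentation that is used in the later comparisons.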

The correlation between DA release in the NAc core and lever press behavior was assessed by comparing the ratio of peak DA concentrations within 2s of cue presentation for the risk and safe cues with the ratio of risk versus safe lever presses on free-choice trials. The ratio of DA signaling was calculated by dividing the peak DA for the risk cue by the summed peak DA for the risk and safe cues. The ratio for lever pressing was calculated by dividing the total number of presses on the risk lever during free-choice trials by the total number of presses during free-choice trials (sum of presses on the risk and safe levers). One animal (NAc core recording) did not develop a behavioral preference and was thus included only in the correlational analysis and excluded from all other data analyses involving response preferences.
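Both ratios share the form Risk/(Risk+Safe), which the following Python sketch makes explicit. The helper names and the numbers in the usage lines are hypothetical and chosen only to illustrate the calculation; the Pearson coefficient is written out by hand as a generic formula and is not claimed to match the statistics package used for the reported analysis.

```python
def risk_ratio(risk_value: float, safe_value: float) -> float:
    """Risk/(Risk+Safe), used for both peak DA and lever-press counts."""
    total = risk_value + safe_value
    if total == 0:
        raise ValueError("risk and safe values sum to zero")
    return risk_value / total


def pearson_r(xs, ys) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


# Hypothetical values for one recording location (illustration only).
press_ratio = risk_ratio(30, 10)   # 30 risk presses, 10 safe presses
da_ratio = risk_ratio(20.0, 20.0)  # equal peak DA to risk and safe cues
print(press_ratio, da_ratio)
```

A ratio above 0.5 indicates more pressing (or more cue-evoked DA) for the risk option, and a ratio below 0.5 indicates more for the safe option; squaring `pearson_r` across recording locations gives an r² value of the kind reported for this comparison.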

To assess the differential effects of the three cue types (preferred, nonpreferred, and choice) on DA release, peak DA concentrations within 2 s following cue presentation were analyzed. The effects of the three cue types were evaluated using repeated measures ANOVA with Tukey’s post-hoc tests. For comparison of DA signal on free-choice trials when animals pressed the preferred versus nonpreferred lever, one animal that never selected the nonpreferred option was removed to allow for proper statistical comparison and to ensure that results were not biased. Peak DA release during choice trials when animals chose the preferred versus nonpreferred option was evaluated using a paired t-test. To confirm that the effect was not a result of an uneven number of trials, peak DA release during free-choice trials was also evaluated using a paired t-test in which preferred lever press trials were randomly selected to allow for equal numbers of preferred and nonpreferred trials. The number of random trials selected from each animal was equal to that animal’s number of nonpreferred presses. DA concentration during reward delivery was evaluated by examining the peak DA concentration within 2 seconds following lever extension for rewarded risk and safe trials and the lowest point within 2 seconds following lever extension for unrewarded risk trials. All analyses were considered significant at α=0.05. Statistical and graphical analyses were performed using GraphPad Prism 4.0 for Windows (GraphPad Software, La Jolla, CA), STATISTICA for Windows (StatSoft Inc., Tulsa, OK) and Neuroexplorer for Windows version 4.034 (Plexon Inc., Dallas, TX).
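The trial-matching control described above amounts to drawing, without replacement, as many preferred-choice trials as there are nonpreferred-choice trials for each animal. A minimal Python sketch of that step is given below; the function name, the optional `seed` argument, and the example values are assumptions made for illustration.

```python
import random


def subsample_preferred(preferred_peaks, n_nonpreferred: int, seed=None):
    """Randomly pick preferred-choice trials to match the nonpreferred trial count."""
    if n_nonpreferred > len(preferred_peaks):
        raise ValueError("more nonpreferred than preferred trials")
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible
    return rng.sample(list(preferred_peaks), n_nonpreferred)


# Hypothetical peak DA values from one animal's free-choice trials.
preferred = [41.0, 38.5, 44.2, 39.9, 40.3, 42.7]
n_nonpreferred_trials = 2
matched = subsample_preferred(preferred, n_nonpreferred_trials, seed=0)
print(len(matched))
```

Sampling without replacement keeps every selected trial distinct, so the matched set is a genuine subset of the animal's preferred-choice trials.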

After completion of each experiment, electrode placement was verified in a manner identical to previous investigations (10, 27) (see Supplement for details).

Results

Individual Differences in Risk Taking Behavior

Rats (n=8 rats with 11 recording locations in the NAc core) were able to learn the risky decision making task and discriminate between the cue types as evidenced by a significant reduction in the percentage of errors on forced-choice safe and risk trials compared to session 1 (F(24,168) = 5.985, P < 0.00001; Figure 1B). Further, animals displayed a significant increase in the number of sucrose pellets received across all sessions compared to session 1 (F(24,168) = 3.585, P < 0.00001; Figure 1C).

On free-choice trials, animals displayed a significant preference for one reward contingency (4 rats were safe preferring, 3 rats were risk preferring, and 1 rat showed no preference). Collapsed across contingency type (safe or risky), animals exhibited significantly greater presses on the preferred lever compared to the nonpreferred lever (t(9) = 6.426, P = 0.001) and pressed the preferred lever significantly more than chance (t(9) = 6.426, P = 0.001; Figure 1D). Rats also showed a nonsignificant trend towards faster latency to press the preferred lever on forced-choice trials (t(9) = 1.833, P = 0.1; Figure 1E).

Cue-evoked Dopamine Signaling within the NAc Core Reflects Reward Preferences

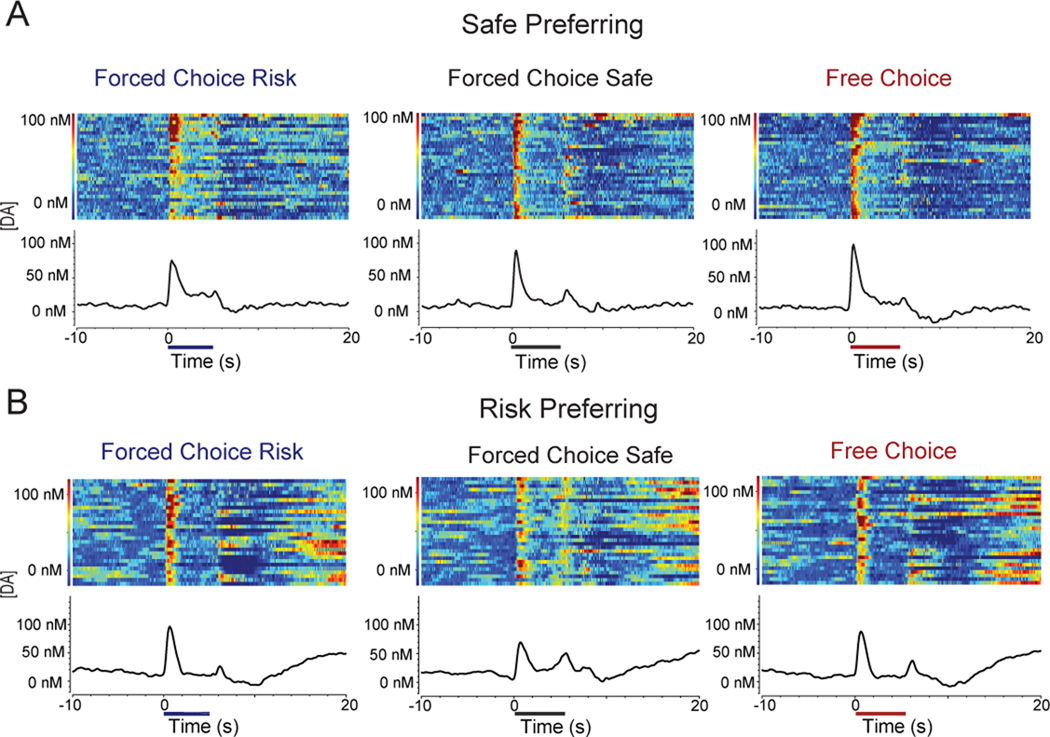

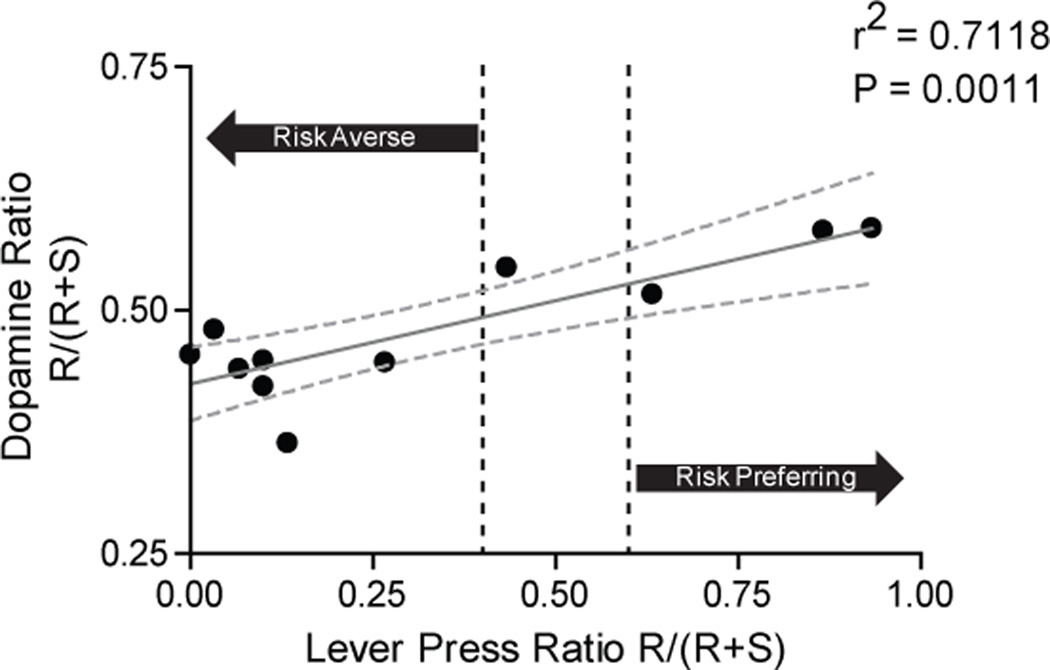

Reward predictive cues in all trial types evoked a significant increase in phasic DA release in the NAc core, consistent with previous reports (6, 10). On forced-choice trials, cue-evoked DA release in the NAc core tracked the subjective value of future rewards as DA signaling scaled with each animal’s individual preference. For example, Figure 2A and Figure S1A in the Supplement show DA release dynamics during the task for a single safe preferring animal. Cue-evoked DA release was higher for cues predicting safe (center) compared to risk (left) trials. Further, DA release during free-choice trials (right) was similar in amplitude to that observed during forced-choice safe trials (center). Figure 2B and Figure S1B in the Supplement show a complementary pattern of DA concentration changes in a risk preferring animal, with larger increases in DA occurring for cues that predicted risky rewards. This pattern was conserved across all animals recorded from the NAc core, as there was a significant correlation between risk taking behavior and differential DA release (r² = 0.7118, P = 0.0011; Figure 3).

Figure 2.

Individual differences in DA release and risky decision making. Representative electrochemical data during training session for a safe preferring (A) and risk preferring (B) animal. Heat plots (top) represent individual trial data ordered with first trial on top; bottom traces represent the average from all trials. Signal is aligned to cue onset (time 0s) and cue offset/lever extension (time 5 s; colored bars along x-axis). Cues evoked differential DA release correlated with the animals’ safe or risk behavioral preference.

Figure 3.

DA signaling correlates with lever press behavior for risk versus safe options. The x-axis is lever press behavior during free-choice trials showing the risk preference of each animal. Dotted lines indicate the criteria for a significant preference (defined as pressing the preferred lever 60% of the time). The ratio of lever pressing was determined by dividing the number of presses on the risk lever during free-choice trials by the total number of presses on free-choice trials (Risk/(Risk+Safe)). A ratio greater than 0.6 indicates that an animal is risk-prone while a ratio of less than 0.4 indicates an animal is risk-averse. The area between the dotted lines indicates no preference. The y-axis is the ratio of DA signaling for forced-choice risk and forced-choice safe trials. This ratio was calculated by taking the peak DA concentration within 2s of the cue presentation for risk and safe trials using the same formula as above (Risk/(Risk+Safe)). A ratio higher than 0.5 means that an animal had greater DA signaling during the risk cue compared to the safe cue while a ratio of less than 0.5 means that an animal had less DA signaling to the risk cue than the safe cue.

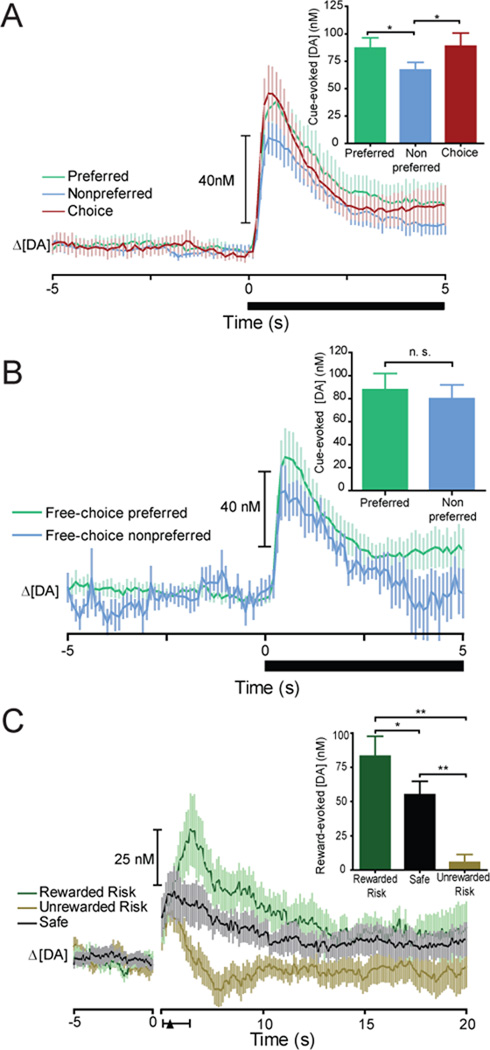

Overall changes in DA concentration recorded at all locations in the NAc core for animals displaying a behavioral preference (n=7, 10 recording locations) are shown in Figure 4A, aligned to cue onset. Repeated measures ANOVA revealed that cue presentation in each trial type significantly increased DA concentration (Preferred: F(20,9) = 16.72, P < 0.0001; Nonpreferred: F(20,9) = 28.39, P < 0.0001; Choice: F(20,9) = 24.69, P < 0.0001). Further, a one-way repeated measures ANOVA comparing the peak DA evoked on each trial type showed that the amplitude of cue-evoked DA release varied depending on the type of cue presented (F(2,9) = 9.479, P = 0.0015; Figure 4A inset). Post hoc analysis revealed a significant difference in DA concentration for forced-choice trials during the presentation of the preferred versus nonpreferred cue (Tukey’s post hoc test, P < 0.01; Figure 4A inset). Further, during free-choice trials cue-evoked DA release scaled to the preferred option. Specifically, peak DA concentration for free-choice cues was similar to that for preferred cues (P > 0.05; Figure 4A inset) but differed from that for nonpreferred cues (P < 0.01; Figure 4A inset). In three animals, a second recording was completed. Since multiple recordings from a single animal could potentially bias results, another repeated measures ANOVA was completed that excluded those sessions. This analysis revealed a significant effect of cue type on the amplitude of dopamine release (F(2,6) = 8.304, P = 0.0054), indicating that multiple recording sessions did not bias the data.

Figure 4.

DA release encodes subjective value and prediction errors during the risky decision making task. (A) DA concentration aligned to cue onset (black bar, time 0s) on forced-choice trials (separated by each animal’s behavioral preference) and free-choice trials. Inset: Peak cue-evoked DA concentration during a 2 s period following cue onset. Cue presentation on preferred and free-choice trials led to significantly larger increases in DA concentration than cue presentation on nonpreferred trials. (B) DA concentration aligned to cue onset (black bar, time 0s) on free-choice trials (separated by the subsequent response on the preferred and nonpreferred lever). Inset: Peak cue-evoked DA concentration during a 2 s period following cue onset. On free-choice trials, cue-evoked DA signals did not reflect the value of the chosen option (P = 0.4015). Further analysis that incorporated a random selection of preferred trials to allow for equal numbers of preferred and nonpreferred trials also showed no significant difference (P = 0.0701). There was also no significant difference between all preferred trials and the randomly selected preferred trials (P = 0.1697). (C) DA release after lever press during forced-choice risk trials separated by reward presentation or omission. Average lever press across animals at black triangle; line around black triangle shows minimum/maximum of average press. Inset: Peak and minimum DA concentration within 2 s following lever presentation. Unexpected presentation of reward during risk trials evoked a significant increase in DA compared to perfectly predicted safe trials while unexpected reward omission caused a significant decrease in DA concentration compared to safe trials. All data are mean ± SEM. *p< 0.05; **p< 0.01.

Cue-evoked DA could be functioning to signal the most valuable option available or the value of the option that is eventually chosen. To distinguish between these, DA release events were also quantified during free-choice trials when the animal chose the preferred versus nonpreferred option. This analysis revealed that DA signaling on free-choice trials encoded the most valuable option available rather than what the animal subsequently chose (Figure 4B). Specifically, there were no significant differences in DA release in the NAc core on free-choice trials when animals chose the preferred versus nonpreferred option (t(8) = 0.8858, P = 0.4015; Figure 4B inset). DA signals on free-choice nonpreferred trials were also not significantly different from cue-evoked DA release on forced-choice preferred trials (t(8) = 0.602, P = 0.5624), suggesting that the DA signal encodes information about the most valuable option available during decision making even when the nonpreferred option was chosen. Finally, preliminary findings reveal that value encoding of DA signaling was not observed in the NAc shell as there were no significant differences in peak DA concentrations between preferred and nonpreferred cues despite a strong behavioral preference for three animals (F(2,2) = 4.931, P = 0.0833; Figure 5; Figure S2 and Figure S3 in the Supplement for histological verification of electrode placements in NAc core and shell).

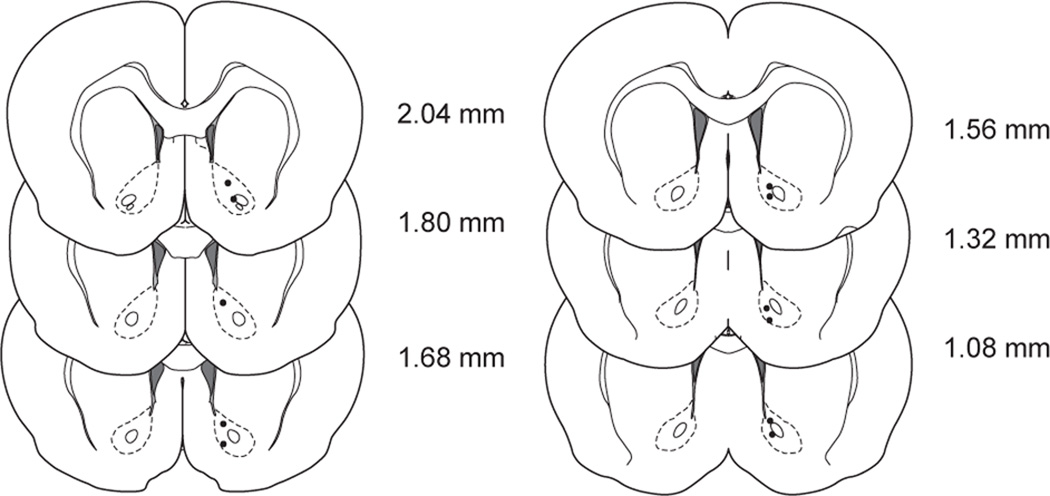

Figure 5.

Anatomical distribution of carbon fiber electrode recording sites in the NAc. Coronal sections show electrode tip locations for 11 recording locations. Numbers to the right indicate anterior posterior coordinates rostral to bregma. Coronal sections and coordinates adapted from stereotaxic atlas (37).

DA Signaling in the NAc Core Tracks Prediction Errors During Risky Decision Making

Schultz and colleagues postulate that midbrain DA neurons encode information about stimulus-reward associations thus functioning as a learning signal (9). Specifically, DA cell firing is believed to provide a ‘prediction error’ signal that compares expected outcomes with actual outcomes (9). Unexpected rewards produce brief synchronous bursts among DA neurons (termed positive prediction error) while fully predicted rewards typically evoke little or no phasic activity. Further, if an expected reward is omitted, DA neurons exhibit a pause in cell firing, termed a negative prediction error (9).

Our behavioral task enabled an examination of whether DA release in the NAc core encodes prediction errors during risky decision making. Here, rewards are perfectly predicted during safe trials while reward is unexpected during risk trials. Figure 4C shows the time course of DA release following lever extension in the NAc core. Unexpected reward delivery or omission during risk trials resulted in increases or decreases, respectively, in phasic DA concentration in the NAc core compared to perfectly predicted safe trials. Specifically, we observed a main effect of reward delivery on DA concentration (F(2,9) = 30.31, P < 0.0001; Figure 4C inset). Post hoc tests revealed that during forced-choice risk trials, reward presentation resulted in a significant increase in DA concentration compared to forced-choice safe trials (Tukey’s post hoc test, P < 0.001; Figure 4C inset). Thus, when reward was presented, an increase in DA release was observed consistent with a positive prediction error (9, 24). Conversely, the omission of reward during forced-choice risk trials resulted in significantly less DA release compared to forced-choice safe trials (P < 0.05; Figure 4C inset), consistent with the signaling of a negative prediction error (9). There were no significant differences (t(8) = 0.9280, P = 0.3805) in prediction error signaling between safe-preferring and risk-preferring animals.
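The direction of these effects follows from the standard definition of a reward prediction error as the received outcome minus the expected outcome. The Python sketch below is a simplified illustration: it assumes that a well-trained animal's expectation equals the long-run average number of pellets for each lever, which is an assumption of this example rather than a quantity measured in the study, and the names used are hypothetical.

```python
def prediction_error(received: float, expected: float) -> float:
    """Reward prediction error: received outcome minus expected outcome."""
    return received - expected


# Assumed expectations: long-run average pellets per response.
SAFE_EXPECTED = 1 * 1.0  # 1 pellet delivered 100% of the time
RISK_EXPECTED = 2 * 0.5  # 2 pellets delivered 50% of the time

errors = {
    "safe, rewarded": prediction_error(1, SAFE_EXPECTED),
    "risk, rewarded": prediction_error(2, RISK_EXPECTED),
    "risk, omitted": prediction_error(0, RISK_EXPECTED),
}
for outcome, error in errors.items():
    print(outcome, error)
```

Under this assumption the safe outcome yields no error, a rewarded risk trial yields a positive error, and an omitted reward yields a negative error, which matches the ordering of the DA responses described above.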

Discussion

Here, we show that when rats were given the option to play it safe for a certain small reward or take a risk for a larger, uncertain reward, individual preferences were tracked by DA release in the NAc core. Thus, DA release does not simply encode extrinsic factors of reward value, but also reflects intrinsic representations that can function to bias future decision making behavior. Further, consistent with its role as a reward prediction error signal (9), we show that unexpected reward deliveries increase phasic DA release in our risky decision making task, and demonstrate for the first time that subsecond DA release in the NAc also encodes unexpected reward omissions. As such, rapid DA encoding in the NAc core may function to provide feedback on the consequences of behaviors linked with uncertain outcomes and influence future decisions to take risks.

The mesolimbic DA system is believed to contribute to decision making and reward learning by encoding information about the expected value of future rewards. However, previous studies have typically focused on explicit value-based decision making where, for example, one option always leads to greater reward and as such is clearly more advantageous than the other (5–8). Other studies examined the role of DA or the NAc in decision making based on subjective reward value where the organism must decide to take risks to get what is perceived as a potentially more valuable reward outcome. In this regard, dopaminergic drugs have been shown to alter risk-taking behavior while lesions of the NAc increase impulsivity and risk-averse behavior even when this behavior is less advantageous (17, 25). While these studies suggest an important role of DA signaling and NAc function in decision making based on subjective value, the present findings extend that work and show how DA signaling in ‘real time’ in the NAc functions to mediate decision making related to risk-taking behavior.

Previous research has shown that DA release in the NAc core and shell signal different aspects of reward value. Specifically, DA transmission in the NAc core is an expression of the motivational value of future rewards while DA transmission in the shell is related to reward novelty and valence (26–29). Selective inactivation of the core (not shell) disrupted appropriate decision making based on reward cost and delay (30, 31). Further, DA release in the NAc core (not shell) encoded response costs and reward delays (6) suggesting divergent roles for rapid DA signaling in these substructures. Previous work has also implicated the NAc core in appropriate decision making based on risk (17). Given these findings, the present study was focused on examining DA release in the NAc core during risky decision making. The results support the important role of DA release in the NAc core for encoding reward value to mediate appropriate decision making related to taking risks.

The processing of risk-based decision making by rapid DA signaling in the NAc core is consistent with mesolimbic DA functioning as a learning signal. Schultz and colleagues (9) proposed that DA neurons encode a prediction error signal where cues that predict rewards evoke phasic increases in firing rate while fully expected rewards do not alter DA activity. Further, cues which predict larger, immediate, or more probable rewards elicit larger spikes in DA neural activity than cues that predict smaller, delayed or less probable rewards (7, 14, 15). This processing by DA neurons is hypothesized to be critical for decision making as it functions to broadcast information about reward value to striatal circuits that enable animals to maximize behaviors (6, 7).

In support, DA neural activity and terminal release in the NAc core encode information about the best available option when animals are given a concurrent choice of alternatives with different explicit value, irrespective of what the animal actually chooses (6, 7). The present data are consistent with this view. Here, DA release on free-choice trials encoded the animals’ preferred or most valuable reward contingency even when the animal chose the less valuable option. Thus, DA release in the NAc core may play a key role in the evaluation of risky behaviors and may thereby function to promote appropriate action selection when faced with risky alternatives.

The NAc core is embedded within a larger neural circuit involved in risky decision making including the prefrontal cortex (PFC) and basolateral amygdala (BLA) (1, 17, 21, 22, 32, 33). These various regions have been implicated in different aspects of reward value encoding that may mediate risky decision making. For example, the PFC appears critical for encoding information about reward uncertainty correlated with individual risk attitudes (34) while the BLA appears critical for maintaining the representation of reward in its absence (35). Further, damage to these regions differentially alters risk taking behavior. For example, inactivation of the medial PFC makes animals risk prone or risk averse, depending on which risk contingency the animal was exposed to initially. This finding suggests that animals are unable to update information about reward uncertainty to mediate appropriate choices (21). Conversely, BLA inactivation biases animals toward safe options during risky decision making (32), suggesting that animals could not maintain the representation of reward in its absence and were thus biased away from risky choices. Collectively, these findings suggest that each neural substrate plays a different role in mediating appropriate decision making and, importantly, that the transfer of information between these structures and to the NAc is critical for risky decision making. As such, signaling from the PFC or BLA may override the value signaling of the mesolimbic DA system to bias responses towards a less valuable option; this may explain why rats sometimes choose the nonpreferred lever or the less valuable option (5, 6).

Mesolimbic DA projections are an integral component of the corticolimbic circuitry which functions to inform the organism of the value of available options to facilitate appropriate decision making. As such, imbalances in the mesolimbic DA system may impair valuation processing and influence aberrant decision making. For example, in rats that display trait impulsivity, there is a significant reduction in NAc DA D2/3 receptor availability which is further correlated with subsequent increases in drug self-administration (36). Thus, dysfunctions in the mesolimbic DA circuitry can affect value-based decision making and provide a neural substrate for aberrant behaviors such as impulsive choices, a hallmark of drug addiction.

Acknowledgments

This research was supported by NIDA (DA 017318 to R.M.C. & DA 10900 to R.M.W.). The authors would like to thank Dr. Michael Saddoris and Dr. Erin Kerfoot for helpful discussions, and Katherine Fuhrmann and Laura Ciompi for technical assistance.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Financial Disclosures: All authors reported no biomedical financial interest or potential conflicts of interest.

References

- 1. Cardinal RN. Neural systems implicated in delayed and probabilistic reinforcement. Neural Netw. 2006;19(8):1277–1301. doi: 10.1016/j.neunet.2006.03.004.

- 2. Green L, Myerson J. A discounting framework for choice with delayed and probabilistic rewards. Psychol Bull. 2004;130(5):769–792. doi: 10.1037/0033-2909.130.5.769.

- 3. Phillips PE, Walton ME, Jhou TC. Calculating utility: preclinical evidence for cost-benefit analysis by mesolimbic dopamine. Psychopharmacology (Berl) 2007;191(3):483–495. doi: 10.1007/s00213-006-0626-6.

- 4. Rangel A, Camerer C, Montague PR. A framework for studying the neurobiology of value-based decision making. Nat Rev Neurosci. 2008;9(7):545–556. doi: 10.1038/nrn2357.

- 5. Day JJ, Jones JL, Carelli RM. Nucleus accumbens neurons encode predicted and ongoing reward costs in rats. European Journal of Neuroscience. 2011;33(2):308–321. doi: 10.1111/j.1460-9568.2010.07531.x.

- 6. Day JJ, et al. Phasic nucleus accumbens dopamine release encodes effort- and delay-related costs. Biol Psychiatry. 2010;68(3):306–309. doi: 10.1016/j.biopsych.2010.03.026.

- 7. Roesch MR, Calu DJ, Schoenbaum G. Dopamine neurons encode the better option in rats deciding between differently delayed or sized rewards. Nat Neurosci. 2007;10(12):1615–1624. doi: 10.1038/nn2013.

- 8. Roesch MR, et al. Ventral Striatal Neurons Encode the Value of the Chosen Action in Rats Deciding between Differently Delayed or Sized Rewards. Journal of Neuroscience. 2009;29(42):13365–13376. doi: 10.1523/JNEUROSCI.2572-09.2009.

- 9. Schultz W, Dayan P, Montague PR. A neural substrate of prediction and reward. Science. 1997;275(5306):1593–1599. doi: 10.1126/science.275.5306.1593.

- 10. Day JJ, et al. Associative learning mediates dynamic shifts in dopamine signaling in the nucleus accumbens. Nat Neurosci. 2007;10(8):1020–1028. doi: 10.1038/nn1923.

- 11. Stuber GD, et al. Reward-predictive cues enhance excitatory synaptic strength onto midbrain dopamine neurons. Science. 2008;321(5896):1690–1692. doi: 10.1126/science.1160873.

- 12. Sombers LA, et al. Synaptic overflow of dopamine in the nucleus accumbens arises from neuronal activity in the ventral tegmental area. J Neurosci. 2009;29(6):1735–1742. doi: 10.1523/JNEUROSCI.5562-08.2009.

- 13. Zellner MR, Kest K, Ranaldi R. NMDA receptor antagonism in the ventral tegmental area impairs acquisition of reward-related learning. Behav Brain Res. 2009;197(2):442–449. doi: 10.1016/j.bbr.2008.10.013.

- 14. Fiorillo CD, Tobler PN, Schultz W. Discrete coding of reward probability and uncertainty by dopamine neurons. Science. 2003;299(5614):1898–1902. doi: 10.1126/science.1077349.

- 15. Tobler PN, Fiorillo CD, Schultz W. Adaptive coding of reward value by dopamine neurons. Science. 2005;307(5715):1642–1645. doi: 10.1126/science.1105370.

- 16. Crews FT, Boettiger CA. Impulsivity, frontal lobes and risk for addiction. Pharmacology Biochemistry and Behavior. 2009;93(3):237–247. doi: 10.1016/j.pbb.2009.04.018.

- 17. Cardinal RN, Howes NJ. Effects of lesions of the nucleus accumbens core on choice between small certain rewards and large uncertain rewards in rats. BMC Neurosci. 2005;6:37. doi: 10.1186/1471-2202-6-37.

- 18. Floresco SB, Whelan JM. Perturbations in different forms of cost/benefit decision making induced by repeated amphetamine exposure. Psychopharmacology (Berl) 2009;205(2):189–201. doi: 10.1007/s00213-009-1529-0.

- 19. St Onge JR, Floresco SB. Dopaminergic Modulation of Risk-Based Decision Making. Neuropsychopharmacology. 2008;34(3):681–697. doi: 10.1038/npp.2008.121.

- 20. St. Onge J, Chiu Y, Floresco S. Differential effects of dopaminergic manipulations on risky choice. Psychopharmacology (Berl) 2010;211(2):209–221. doi: 10.1007/s00213-010-1883-y.

- 21. St. Onge JR, Floresco SB. Prefrontal Cortical Contribution to Risk-Based Decision Making. Cerebral Cortex. 2010;20(8):1816–1828. doi: 10.1093/cercor/bhp250.

- 22. Stopper C, Floresco S. Contributions of the nucleus accumbens and its subregions to different aspects of risk-based decision making. Cognitive, Affective, & Behavioral Neuroscience. 2011;11(1):97–112. doi: 10.3758/s13415-010-0015-9.

- 23. Phillips PE, et al. Real-time measurements of phasic changes in extracellular dopamine concentration in freely moving rats by fast-scan cyclic voltammetry. Methods Mol Med. 2003;79:443–464. doi: 10.1385/1-59259-358-5:443.

- 24. Schultz W. Predictive reward signal of dopamine neurons. J Neurophysiol. 1998;80(1):1–27. doi: 10.1152/jn.1998.80.1.1.

- 25. Cardinal RN, et al. Impulsive choice induced in rats by lesions of the nucleus accumbens core. Science. 2001;292(5526):2499–2501. doi: 10.1126/science.1060818.

- 26. Bassareo V, De Luca MA, Di Chiara G. Differential Expression of Motivational Stimulus Properties by Dopamine in Nucleus Accumbens Shell versus Core and Prefrontal Cortex. The Journal of Neuroscience. 2002;22(11):4709–4719. doi: 10.1523/JNEUROSCI.22-11-04709.2002.

- 27. Corbit LH, Muir JL, Balleine BW. The Role of the Nucleus Accumbens in Instrumental Conditioning: Evidence of a Functional Dissociation between Accumbens Core and Shell. The Journal of Neuroscience. 2001;21(9):3251–3260. doi: 10.1523/JNEUROSCI.21-09-03251.2001.

- 28. Rebec GV, et al. Regional and temporal differences in real-time dopamine efflux in the nucleus accumbens during free-choice novelty. Brain Research. 1997;776(1–2):61–67. doi: 10.1016/s0006-8993(97)01004-4.

- 29. Roitman MF, et al. Real-time chemical responses in the nucleus accumbens differentiate rewarding and aversive stimuli. Nat Neurosci. 2008;11(12):1376–1377. doi: 10.1038/nn.2219.

- 30. Ghods-Sharifi S, Floresco SB. Differential effects on effort discounting induced by inactivations of the nucleus accumbens core or shell. Behavioral Neuroscience. 2010;124(2):179–191. doi: 10.1037/a0018932.

- 31. Pothuizen HHJ, et al. Double dissociation of the effects of selective nucleus accumbens core and shell lesions on impulsive-choice behaviour and salience learning in rats. European Journal of Neuroscience. 2005;22(10):2605–2616. doi: 10.1111/j.1460-9568.2005.04388.x.

- 32. Ghods-Sharifi S, St. Onge JR, Floresco SB. Fundamental Contribution by the Basolateral Amygdala to Different Forms of Decision Making. J. Neurosci. 2009;29(16):5251–5259. doi: 10.1523/JNEUROSCI.0315-09.2009.

- 33. Roitman JD, Roitman MF. Risk-preference differentiates orbitofrontal cortex responses to freely chosen reward outcomes. European Journal of Neuroscience. 2010;31(8):1492–1500. doi: 10.1111/j.1460-9568.2010.07169.x.

- 34. Tobler PN, et al. Reward value coding distinct from risk attitude-related uncertainty coding in human reward systems. J Neurophysiol. 2007;97(2):1621–1632. doi: 10.1152/jn.00745.2006.

- 35. Winstanley CA, et al. Contrasting roles of basolateral amygdala and orbitofrontal cortex in impulsive choice. J Neurosci. 2004;24(20):4718–4722. doi: 10.1523/JNEUROSCI.5606-03.2004.

- 36. Dalley JW, et al. Nucleus accumbens D2/3 receptors predict trait impulsivity and cocaine reinforcement. Science. 2007;315(5816):1267–1270. doi: 10.1126/science.1137073.

- 37. Paxinos G, Watson C. The rat brain in stereotaxic coordinates. 5th ed. New York: Elsevier Academic Press; 2005.
