Abstract

Objective

Recognition of learning curves in medical skill acquisition has enhanced patient safety through improved training techniques. Clinical trials research has not been similarly scrutinized. We retrospectively evaluated VALIANT, a large multinational, pragmatic, randomized, double-blind, multicenter trial, for evidence of research conduct consistent with a performance “learning curve.”

Design

Records provided protocol departure (deviations/violations) and documentation query data. For each site, analysis included patient order (e.g., first, second), recruitment rate, and first enrollment relative to study start date.

Setting

Computerized data from a trial coordinated by an academic research organization collaborating with 10 academic and two commercial research organizations and an industry sponsor.

Interventions

931 sites enrolled 14,703 patients. Analysis of departures was restricted to the first year. Exclusions were patients who died or were lost to follow-up within 12 months and subjects enrolled 80th or later at a site. Departures were assessed for variation with later patient enrollment order, more frequent recruitment, and later site start date.

Methods and Results

12,367 patients at 931 sites were analyzed. Departures were more common for patients enrolled earlier at a site (P<0.0001); for example, compared with the 30th patient, the first had 47% more departures. Departures were also more common with slower enrollment and with site start closer to the trial start date (P<0.0001). Similar patterns existed for queries.

Conclusions

Research performance improved during VALIANT consistent with a “learning curve.” Although effects were not related to a change in outcome (mortality), learning curves in clinical research may have important safety, ethical, research quality, and economic implications for trial conduct.

Keywords: Cardiology, Ethics, Health Services, Medical Informatics, Therapeutics

INTRODUCTION

Mastering complex medical skills requires practice. Early attempts at complex tasks frequently involve error. Learning curves are graphical representations of the reduction in errors with practice. The recognition of learning curves in the acquisition of medical skills was a significant breakthrough[1-7] that enhanced patient safety by highlighting deficiencies of established teaching methods and providing a metric to compare alternative training strategies (e.g., simulation).[8] To date there has been limited scrutiny of clinical trials for performance learning curves,[9] despite practices such as the common inclusion of “practice subjects” in trial design.

Errors in healthcare are a serious public health problem.[10] While clinical trials are key to improving healthcare and reducing error, they may involve protocol departures.[8] A significant portion of the $6 billion spent on clinical trials annually in the United States[11] is used for education to minimize errors. Clinical trial education commonly includes pre-trial investigator meetings using seminars, discussions, and reading materials.

Trial documentation generates a record of research conduct. Recognized departures from the study protocol and recording inconsistencies are reported and reviewed. Inaccurate performance may represent errors or a conscious decision to deviate in the interest of patient care. Departures from prescribed care are categorized as deviations and are recorded in the trial documentation. Extreme departures are categorized as violations and cannot be included in the primary study analysis. Other documentation issues are recorded in case report form queries.

Learning curves are plausibly as likely to exist in clinical trials as in other complex medical tasks, yet this possibility has received little study. We examined data from a large, pragmatic,[12] randomized, double-blind, multicenter clinical trial[13] for patterns of research conduct consistent with learning curves.

MATERIALS AND METHODS

Study population

With Institutional Review Board approval, a limited dataset from the final computerized locked VALsartan In Acute myocardial iNfarcTion (VALIANT) Trial[13] was analyzed. VALIANT, a completed multinational, multicenter, double-blind, randomized, active-controlled, parallel-group trial, compared long-term treatment with valsartan, captopril, or both in high-risk patients after myocardial infarction. VALIANT included 931 centers in 24 countries and enrolled 14,703 patients over 31 months; median follow-up was 24.7 months. Site recruitment and management were coordinated by an academic research organization in collaboration with 10 academic and two commercial research organizations and an industry sponsor.

VALIANT has been described previously.[13] The investigators at each site included a physician principal investigator and at least one research coordinator. Site preparation involved investigator attendance at an educational meeting, protocol approval by a local institutional review board, and documentation of receipt of study drug. In one country, on-site training visits were used instead of the educational meeting. Eligible, consented study candidates were assigned a unique seven-digit number and randomized to one of three therapies. Subsequent care required six scheduled visits during the first year and visits at four-month intervals thereafter, unless the subject died or was lost to follow-up. Sites were encouraged to see patients as needed for clinical reasons. Periodic monitor visits provided quality control, including review of protocol departures and identification and resolution of documentation queries. The primary endpoint was death from any cause.

Site enrollment factors

The unique patient numbers were used to identify site and enrollment order; for example, 0237-011 identifies the 11th subject at site 0237. The site enrollment rate was calculated as the average number of subjects per month, from the dates of the first and last patient and the total number enrolled. Sites with a single subject (n=65) were assigned a rate of one patient per month; a secondary analysis excluding all single-subject sites was performed to support this approach. The time between first site enrollment and the study start date (December 12, 1998) was also calculated. Individuals enrolled 80th or later were excluded because this occurred at fewer than 10 sites, and the scarcity of these patients could produce unreliable estimates.
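The site-level enrollment variables described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the study's SAS code: the function names are hypothetical, the `SSSS-NNN` string format is inferred from the 0237-011 example, and an average month of 365.25/12 days is assumed because the text does not say how months were counted.

```python
from datetime import date

AVG_DAYS_PER_MONTH = 365.25 / 12   # assumption: average calendar month length
STUDY_START = date(1998, 12, 12)   # VALIANT study start date (from the text)
LAST_INCLUDED_ORDER = 79           # subjects enrolled 80th or later are excluded


def parse_patient_number(patient_number: str) -> tuple[str, int]:
    """Split an ID such as '0237-011' into (site, enrollment order at that site)."""
    site, order = patient_number.split("-")
    return site, int(order)


def enrollment_rate(first: date, last: date, n_enrolled: int) -> float:
    """Average subjects per month between a site's first and last enrollment."""
    if n_enrolled == 1:
        return 1.0  # single-subject sites are assigned one patient per month
    months = (last - first).days / AVG_DAYS_PER_MONTH
    return n_enrolled / months  # assumes the first and last dates differ


def days_from_study_start(first: date) -> int:
    """Days between the study start date and a site's first enrollment."""
    return (first - STUDY_START).days


site, order = parse_patient_number("0237-011")
print(site, order, order <= LAST_INCLUDED_ORDER)  # 0237 11 True
```

The single-subject rule is applied before any division, which is why those 65 sites need an assigned value rather than a computed one.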

Performance departures were the set of protocol violations and deviations in the VALIANT dataset. A protocol violation is “any failure to comply with the final study protocol as approved by the Institutional Review Board.” A violation is “a serious non-compliance with the protocol resulting from error, fraud, or misconduct, and results in the exclusion of a patient from the study.”[14] A protocol deviation is “a less serious non-compliance, usually to deal with unforeseen circumstances and can be agreed between the sponsor and investigator either in advance or after the event.”[14] In VALIANT, deviations and violations were grouped as a single set of protocol departures. Documentation inconsistencies were the set of case report form queries generated from patient records, representing the unresolved questions from computer screening and monitor review. Queries whose definitions changed during the study (at 8, 16, and 24 months after study start) were excluded. Only incidents that occurred during study initiation and the six subsequent visits (approximately a 12-month period) were included, since follow-up beyond this point was variable. Patients who died or were lost to follow-up before completing the first year were excluded, because their fewer visits could produce lower event counts for reasons unrelated to a learning effect.
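The patient- and event-level inclusion rules above can be expressed as two small filters. This sketch is illustrative only: the `Patient` record, its field names, and the convention that visit 0 is study initiation and visits 1 to 6 are the first-year visits are all hypothetical, and the exclusion of queries with changed definitions is not modeled.

```python
from dataclasses import dataclass, field

LAST_VISIT = 6  # assumption: visit 0 = study initiation, visits 1-6 = first-year visits


@dataclass
class Patient:
    site: str
    order: int                   # enrollment order at the site (1 = first)
    completed_first_year: bool   # False if the patient died or was lost to follow-up in year one
    event_visits: list[int] = field(default_factory=list)  # visit at which each event was recorded


def eligible(p: Patient) -> bool:
    """Patient-level exclusions: enrolled 80th or later, or did not complete year one."""
    return p.order < 80 and p.completed_first_year


def counted_events(p: Patient) -> int:
    """Count only events recorded from initiation through the sixth visit."""
    return sum(1 for v in p.event_visits if 0 <= v <= LAST_VISIT)


patients = [
    Patient("0237", 1, True, [0, 2, 7]),   # the visit-7 event falls outside the window
    Patient("0237", 2, False, [1]),        # excluded: did not complete the first year
    Patient("0237", 80, True, [3]),        # excluded: enrolled 80th
]
per_patient = {p.order: counted_events(p) for p in patients if eligible(p)}
print(per_patient)  # {1: 2}
```

Patient-level exclusions are applied before events are counted, mirroring the order in which the text describes them.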

Statistical analysis

The primary analysis used the limited dataset of protocol departures from VALIANT after removing ineligible sites, subjects, and departures. A multivariable model predicting the number of protocol departures per patient was developed; predictors were order of patient enrollment, site enrollment rate, and time between first site enrollment and study start. All three site enrollment factors were entered as simple linear terms. A similar secondary analysis was performed for documentation inconsistencies (queries). Events were analyzed using generalized estimating equations to permit analysis of correlated data from repeated measurements. A Poisson distribution was specified for departures and queries to accommodate their relative infrequency. Because patients from the same site could have correlated outcomes, site was included as a repeated effect in the model. For descriptive and graphical purposes, restricted cubic splines[15] were used to investigate non-linear effects of patient enrollment order; restricted cubic spline analysis has been described previously.[16] Five knots were used in all analyses (at quantiles 0.05, 0.275, 0.5, 0.725, and 0.95).
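To make the spline step concrete, the sketch below builds a five-knot restricted cubic spline basis for enrollment order in plain Python. It uses a common unnormalized parameterization (implementations often also divide each nonlinear term by (t_k − t_1)², which only rescales the coefficients). The function names are hypothetical, and placing knots at linear-interpolation quantiles of the observed values is an assumption; the text gives only the quantile levels.

```python
def quantile(sorted_x, q):
    """Linear-interpolation quantile of an already sorted sequence."""
    pos = q * (len(sorted_x) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(sorted_x) - 1)
    return sorted_x[lo] + (pos - lo) * (sorted_x[hi] - sorted_x[lo])


def knots(values, probs=(0.05, 0.275, 0.5, 0.725, 0.95)):
    """Knot locations at the five quantile levels given in the text."""
    xs = sorted(values)
    return [quantile(xs, p) for p in probs]


def rcs_basis(x, t):
    """Return [x, X_2, ..., X_{k-1}]: cubic between knots, linear beyond the outer knots."""
    k = len(t)
    pos3 = lambda u: max(u, 0.0) ** 3  # truncated cubic (u)_+^3
    denom = t[-1] - t[-2]
    row = [x]
    for j in range(k - 2):
        row.append(
            pos3(x - t[j])
            - pos3(x - t[-2]) * (t[-1] - t[j]) / denom
            + pos3(x - t[-1]) * (t[-2] - t[j]) / denom
        )
    return row


t = knots(range(1, 80))        # enrollment orders 1..79 after the 80th-or-later exclusion
print(t)
print(rcs_basis(10.0, t))      # one design-matrix row for the 10th patient
```

The "restricted" constraint is what the extra terms enforce: the cubic and quadratic parts cancel beyond the last knot, so the fitted curve is linear in the sparsely populated tails, where a free cubic would be unstable.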

Because single-patient sites cannot be included in the multivariable analysis (their enrollment rate cannot be computed), analyses were performed both with and without the enrollment-rate variable.

Mortality analysis was conducted using the complete VALIANT dataset. The relation between order of patient enrollment and death was explored using Cox proportional hazards regression. Patient characteristics reported in the VALIANT survival analysis[13] (age, baseline heart rate, history of diabetes, cigarette smoking, weight, history of myocardial infarction, anterior ECG site, Killip class, baseline Q-wave myocardial infarction, history of coronary stenting or bypass surgery, region of country, angina, peripheral vascular disease, alcohol abuse, and previous hospitalization) and site enrollment factors were included to determine whether patient order predicted mortality risk after accounting for all other known factors. Patients were censored at their last follow-up date or, if alive, at the end of the study.

Analyses were conducted using SAS version 9.1 (SAS Institute Inc., Cary, NC).

RESULTS

Parent Study Descriptors

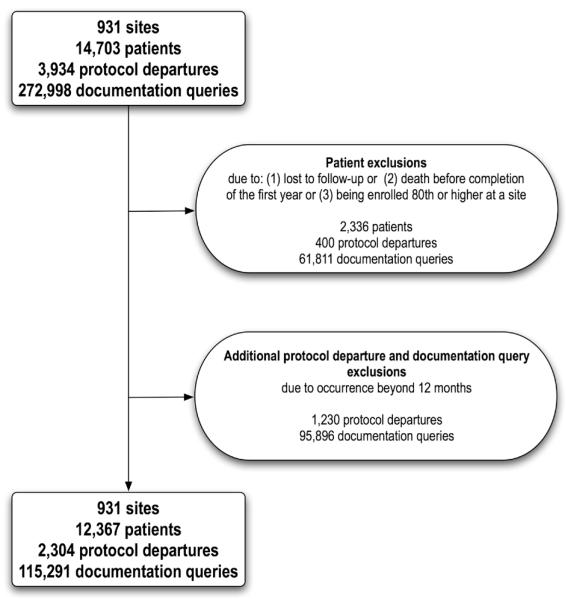

Of the 14,703 patients and 931 sites, 12,367 patients and all sites met the criteria for primary analysis (Table 1).

Table 1.

Descriptive characteristics of the original VALIANT trial[13] and limited VALIANT dataset used in the analysis of protocol departures and documentation queries. Continuous variables are presented as means (standard deviation).

| Characteristic | VALIANT dataset | Study dataset |
|---|---|---|
| Number of study sites | 931 | 931 |
| Number of patients | 14,703 | 12,367 |
| Number of patients per site | 15.8 (19.1) | 13.3 (12.8) |
| Number of protocol departures | 3,934 | 2,304 |
| Number of protocol departures per subject | 0.27 (0.22) | 0.19 (0.13) |
| Number of documentation inconsistencies * | 272,998 | 115,291 |
| Number of documentation inconsistencies * per patient | 18.6 (10.5) | 9.3 (6.7) |
| Rate of subject enrollment (per month) | 1.4 (1.8) | 1.4 (1.8) |
| Time from trial start date to site initiation (days) | 388 (151) | 388 (151) |

Protocol departures are defined as recorded protocol violations or deviations; documentation inconsistencies (*) are defined as case report form queries.

Current Study Data

Of the 3,934 protocol departures and 272,998 queries recorded, 2,304 and 115,291, respectively, met inclusion criteria. Excluding 472 patients enrolled 80th or later and 1,864 patients who died or were lost to follow-up before completing the first year removed 400 departures and 61,811 queries. A further 1,230 departures and 95,896 queries were excluded because they occurred after the first six visits.

Of the 14,703 patients, 2,878 died during the trial. Exclusions from the mortality analysis were 457 patients (78 deaths) enrolled 80th or later and 872 patients (215 deaths) with missing covariate data. Therefore, 13,374 patients, including 2,585 deaths, met the criteria for mortality analysis.
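The exclusion counts reported above can be cross-checked with simple arithmetic. This sketch only re-adds numbers already stated in the text (including the 31% of departures lost to the first-year restriction, cited in the Discussion, and the 10,789 censored patients); it introduces no new data.

```python
total_patients = 14_703
departures, queries = 3_934, 272_998

# Departure/query analysis: 400 departures and 61,811 queries were lost with the two
# patient-level exclusions combined; the remainder were events after the sixth visit.
analyzed = total_patients - 472 - 1_864
kept_departures = departures - 400 - 1_230
kept_queries = queries - 61_811 - 95_896

# Mortality analysis
deaths = 2_878
mortality_n = total_patients - 457 - 872
mortality_deaths = deaths - 78 - 215
censored = mortality_n - mortality_deaths

print(analyzed, kept_departures, kept_queries)  # 12367 2304 115291
print(mortality_n, mortality_deaths, censored, round(100 * censored / mortality_n))  # 13374 2585 10789 81
print(round(100 * 1_230 / departures))  # 31
```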

Excluding the 65 single-patient sites from the mortality analysis did not materially change the findings for patient enrollment order.

Main Study Findings

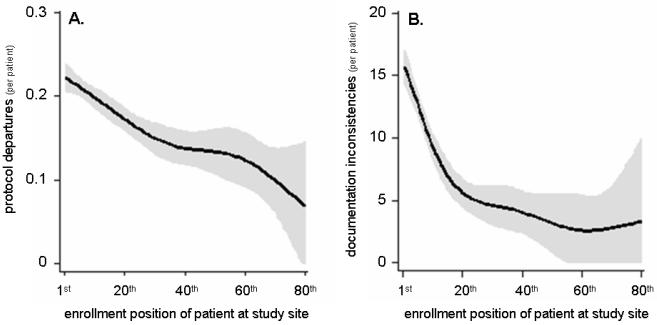

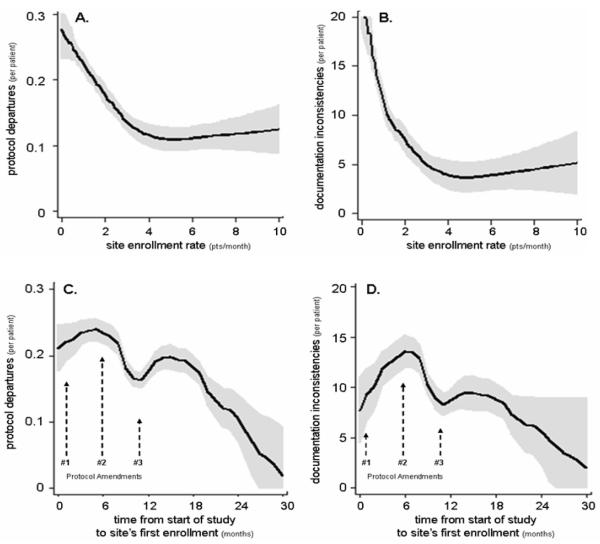

Protocol departures were significantly more common for earlier patients at study sites (P<0.0001) (Table 2A, fig 2A). Lower rates of enrollment and earlier date of first enrollment were associated with more departures (P<0.0001) (Table 2A, figs 3A & C). Predicted values generated from the cubic spline model indicate that departures were 1.47 times more common in the first patient than the 30th enrolled at each site.
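The linear patient-order coefficients in Table 2 can be turned into rate ratios, which helps compare them with the spline-based figures. This sketch assumes the Poisson GEE used a log link (the canonical link; Methods do not state it), so a coefficient β implies a rate ratio of exp(β × Δ) for a difference Δ in the predictor. `rate_ratio` is a hypothetical helper.

```python
import math


def rate_ratio(beta: float, x_a: float, x_b: float) -> float:
    """Expected event-rate ratio at x_a versus x_b for one linear log-rate term."""
    return math.exp(beta * (x_a - x_b))


# Patient-order coefficients (per patient) from Table 2, 1st vs 30th patient
departures_rr = rate_ratio(-0.0073, 1, 30)
queries_rr = rate_ratio(-0.0202, 1, 30)

print(round(departures_rr, 2), round(queries_rr, 2))  # 1.24 1.8
```

The single linear slope gives about 1.24 for departures and 1.8 for queries, smaller than the spline-based 1.47 and 2.15 reported in the text. One reading, offered only as an interpretation, is that improvement is concentrated among the earliest patients, and a single linear slope averages that curvature out.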

Table 2.

Multivariable analyses of factors associated with (A) protocol departures and (B) documentation inconsistencies in the VALIANT trial.[13]

A: Protocol departures^

| Variable | Estimate | Standard error | 95% CI | Chi-square | P value |
|---|---|---|---|---|---|
| Patient order (per patient) | −0.0073 | 0.0019 | [−0.0110, −0.0036] | 15.03 | <0.0001 |
| Rate of enrollment (patients per month) | −0.0826 | 0.0179 | [−0.1179, −0.0475] | 21.30 | <0.0001 |
| Time to 1st enrolled patient (weeks) | −0.0006 | 0.0002 | [−0.0009, −0.0003] | 14.16 | <0.0001 |

B: Documentation inconsistencies*

| Variable | Estimate | Standard error | 95% CI | Chi-square | P value |
|---|---|---|---|---|---|
| Patient order (per patient) | −0.0202 | 0.0003 | [−0.0216, −0.0131] | 5382.15 | <0.0001 |
| Rate of enrollment (patients per month) | −0.0174 | 0.0022 | [−0.0216, −0.0131] | 64.47 | <0.0001 |
| Time to 1st enrolled patient (weeks) | −0.0010 | 0.0000 | [−0.0011, −0.0010] | 2122.6 | <0.0001 |

^ Protocol departures are defined as recorded protocol violations or deviations; documentation inconsistencies (*) are defined as case report form queries.
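Table 2 reports an estimate, a standard error, a 95% CI, and a chi-square for each predictor. Assuming these are Wald statistics (the usual output for GEE parameter estimates, though the text does not say so), the interval and chi-square follow directly from the estimate and SE. `wald` is a hypothetical helper; because the table rounds estimates and SEs to four decimals, recomputed values can differ from the printed ones in the last digit.

```python
def wald(estimate: float, se: float, z: float = 1.959964):
    """Wald 95% confidence bounds and 1-df chi-square for one coefficient."""
    lower = estimate - z * se
    upper = estimate + z * se
    chi_square = (estimate / se) ** 2
    return lower, upper, chi_square


# Table 2A, patient order (per patient)
lo, hi, chi = wald(-0.0073, 0.0019)
print(round(lo, 4), round(hi, 4), round(chi, 2))  # -0.011 -0.0036 14.76
```

The recomputed interval matches the printed one; the chi-square (14.76 versus 15.03) differs only because the SE has been rounded to 0.0019.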

Figure 2.

Modeled data depicting the relationship between enrollment order at a study site (e.g., 1st, 2nd) and the likelihood of protocol departures (A) and documentation inconsistencies (queries) (B) during the VALIANT trial.[13]

Figure 3.

Modeled data depicting the relationship between site enrollment rate and the likelihood of protocol departures (A) and documentation inconsistencies (queries) (B), and the relationship between the time from the overall study start date to the first enrollment at a study site and the likelihood of protocol departures (C) and documentation inconsistencies (D), during the VALIANT trial.[13] The three study protocol amendments were issued 4 weeks (#1), 6 months (#2), and 11 months (#3) after the study start date and involved 14, 12, and 7 pages of text, respectively, identifying protocol changes.

Similarly, queries were significantly more common for patients enrolled earlier (P<0.0001) (Table 2B, fig 2B). Lower rates of enrollment and earlier dates of first enrollment were associated with more queries (P<0.0001) (Table 2B, figs 3B & D). Predicted values generated from the cubic spline model indicate that queries were 2.15 times more common in the first patient than in the 30th.

After adjustment for the other site enrollment factors and patient characteristics, patient order was not a predictor of mortality (P=0.57; hazard ratio=1.001). Median follow-up for surviving patients was 830 days, and 10,789 patients (81%) were censored in the analysis.

DISCUSSION

Our investigation indicates that research performance improved significantly during VALIANT.[13] In the completed trial record, we found fewer protocol departures, deliberate or unplanned, as the trial proceeded, consistent with a learning curve. Three features typical of skill acquisition that we hypothesized would be present were evident. More protocol departures occurred: 1) earlier in enrollment (first vs. 30th patient); 2) with less frequent enrollment (two vs. six patients/month); and 3) with earlier first enrollment relative to trial start. The same patterns were present in our analysis of documentation inconsistencies. Improvements during the study demonstrated curvilinear characteristics with a leveling off typical of a learning curve. Events were more frequent for queries than protocol departures, but a 1.5 to two-fold greater rate in the first versus the 30th patient was common for both (fig 2). We found no relation between mortality and enrollment order. If a learning curve is typical of performance in all clinical trials, this has implications for patient safety, ethics of informed consent, research quality, and cost. These findings suggest researcher education deserves the same attention and development applied to the acquisition of skills in other areas of healthcare.
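The "two vs. six patients/month" contrast can be expressed as model-implied rate ratios from the linear enrollment-rate coefficients in Table 2. This is a sketch under an assumption: a log link in the Poisson GEE, so that a coefficient β per patient/month implies a ratio of exp(β × (2 − 6)). The Discussion's contrast may instead have been read from the spline curves in fig 3, so these values are illustrative rather than a reproduction of the authors' numbers.

```python
import math

# Enrollment-rate coefficients (per patient/month) from Table 2; a log link is assumed
BETA_RATE = {"departures": -0.0826, "queries": -0.0174}

slow, fast = 2.0, 6.0  # patients per month, the contrast used in the Discussion

# Expected event rate at 2 patients/month relative to 6 patients/month
ratios = {outcome: math.exp(beta * (slow - fast)) for outcome, beta in BETA_RATE.items()}

for outcome, ratio in ratios.items():
    print(outcome, round(ratio, 2))
# departures 1.39
# queries 1.07
```

Under this reading, slower-enrolling sites would be expected to record roughly 39% more departures per patient, but only about 7% more queries. Patient order, not enrollment rate, carries most of the query effect in Table 2B.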

Studies of aircraft manufacturing from the 1930s[17] provided some of the first graphic representations of improved performance with practice, known as the learning curve. While learning curves are an accepted part of medical and surgical skill acquisition and are anticipated in clinical trial design,[18, 19] little has been reported on learning curves in the conduct of clinical trials. Macias and colleagues suggested that their findings in a secondary analysis of data from a completed clinical trial[9] could be explained by a research conduct learning curve. They noted a trend towards benefit in an otherwise negative study when patients enrolled before a protocol amendment and patients with at least one protocol violation were excluded, and they described a relation between treatment effect, protocol violations, and sequence of enrollment that may have influenced the trial results. Although we did not observe changes in mortality risk, we did observe a strong relation between increased opportunities for investigator learning and reduction in protocol departures, consistent with a learning curve.

Despite the retrospective nature of our study, we believe the data source is highly accurate. Although limiting our study to data from the first year after enrollment resulted in the loss of many protocol departures (31%), the six visits during this period followed the same schedule for all subjects. Follow-up was less predictable thereafter, so valid comparison required this restriction. Because our question was not anticipated in the design of VALIANT, the record is insufficiently detailed to address some aspects of it. For example, we noted steady improvement in research conduct, but we cannot differentiate deliberate protocol departures from errors, nor can we determine whether improvements were of major or minor importance. This limitation is partly attributable to a design feature of VALIANT in which major (violations) and minor (deviations) protocol departures were pooled. Furthermore, we have limited ability to interrogate the nature of any learning effects. Although we know that many sites had more than one coordinator and/or personnel turnover, we cannot assess the relative importance of learning by individuals, by collective groups at study sites, or by the coordinating centers through site feedback or protocol amendments. Early performance improvements involving the first several subjects at a site may have resulted from increased protocol familiarity and feedback from the local environment. Later sources of feedback, which would prevent operators from simply becoming more efficient at the wrong behavior (i.e., negative training), likely included monitor site visits, documentation queries, and protocol amendments. Our findings cannot be explained simply by individual learning: sites starting later had fewer protocol departures, suggesting that earlier sites, through the coordinating centers, may have shared knowledge with colleagues who enrolled later.

Three protocol amendments were released after the study began. They were issued 4 weeks, 6 months, and 11 months after the start date and included 14, 12, and 7 pages of text, and 14, 6, and 2 lines in the table of contents, identifying protocol changes. In some instances, the study protocol was found to be unspecified, unclear, or ambiguous; such clarification issues were most evident in the first two amendments. In fact, the first amendment cited protocol review by “health authorities and potential investigators” as the source of concern for “practical protocol adherence issues.”

The amendments introduced no changes in diagnosis, treatment, or follow-up procedures. A fourth amendment concerned a sub-study and did not involve the primary project. The possibility of superimposed mini learning curves related to protocol amendments was not explored.

Because of the unique characteristics of clinical trials, these findings should be generalized cautiously to similar studies (e.g., other home-administered oral therapies) and with even less confidence to more complex trials (e.g., critically timed administration of short-acting intravenous therapy), although our study supports observations by Macias and colleagues from a complex trial.[9] Our findings are consistent with human learning theory and with observations from other healthcare fields. Our analysis should be repeated in other completed trials and with prospectively collected data to better characterize study conduct learning curves. Such analyses could generate mathematical models of learning curves (e.g., rate of skill acquisition) that may inform clinical trial design. Although we did not identify a link between protocol departures and outcome, such a relation may plausibly exist in a larger or more complex study. However, studies comparing the outcomes from numerous trials with standard care suggest there is no disadvantage, and possibly an overall advantage, to being enrolled in a clinical trial (the Hawthorne effect).[20]

If learning curves are inherent in the conduct of clinical trials, integrating this concept may improve research education and strategies. Categorizing tasks by the types of challenges they pose (affective, cognitive, or psychomotor) can help predict the most useful educational tools for instruction and evaluation.[21] A method commonly used in clinical trial education is a pre-trial investigator meeting including seminars, discussions, and reading materials. New approaches to education, such as high-fidelity simulation to familiarize researchers with a complex protocol[22, 23] and online case reporting with the potential for screening and feedback, have been tried.[24] Although these innovations have a rationale in learning theory, they have not been evaluated for evidence that they improve performance. Developing a performance taxonomy and metrics will be an important step in describing and comparing clinical trial education methods.

Beyond education, trial organizers must provide clear, easily executable study designs. Case report forms can be organized to minimize documentation errors and inconsistencies. Human factors usability methods have been used in designing clinical trial protocols[25] and may reduce the need for protocol amendments, but these methods have not been evaluated for evidence of improved performance.

Language issues may have influenced training at different VALIANT sites: all investigator meetings were conducted in English, except one that had simultaneous translation into five languages, and all training materials and data collection forms were in English.

The existence of research conduct learning curves has implications that extend beyond improved safety and better science. For example, informed consent to participate in a trial currently requires that a patient receive a clear study description, including risks, but not whether the individual is the first to enroll at a site or a summary of the overall progress of the trial. A second issue is expense. Protocol departures in the early phase of a learning curve would add to cost, both because greater enrollment is needed to replace subjects lost to unacceptable protocol departures and because lower-quality data are less precise. In the worst-case scenario, a useful treatment may go unrecognized.[9] Finally, case report form queries are costly. An intervention that reduced documentation queries during the learning phase of VALIANT (the first 10 patients at each site) would have lowered the costs of data management and of the site personnel needed to resolve queries.

CONCLUSIONS

We observed improvements in investigator research conduct during VALIANT consistent with a learning curve, as documented in the quality assurance record of this large, multicenter, randomized, double-blind clinical trial. However, in this seriously ill patient group, we found no association between study site enrollment order (our marker of the learning curve) and mortality. If learning curves are common in clinical trials, this has broad implications for safety, consent practices, research quality, and cost (replacing lost subjects, resolving queries) that need further investigation. Learning curves are to be expected when acquiring skills for any complex task, and the concept is firmly established in clinical practice. If learning curves exist in the conduct of research as they have been shown to exist in patient care, insight into this phenomenon may be an important tool for advancing the design and implementation of future clinical trials.

To better understand this phenomenon and facilitate evidence-based improvements in clinical trial education and design, a general taxonomy and metrics are needed to better characterize variability in clinical trial conduct.

Figure 1.

Description of the exclusions in the development of the sample for the current study from the original VALIANT trial[13] dataset.

Acknowledgments

Funding: This study was funded in part by National Institute of Neurological Disorders and Stroke grant #1R01NS49548-01 (JMT) and a grant from the Anesthesia Patient Safety Foundation (MCW). Work was also funded in part by the Department of Anesthesiology GPRO/DCRI/Novartis Pharmaceuticals.

REFERENCES

1. Waldman JD, Yourstone SA, Smith HL. Learning curves in health care. Health Care Manage Rev. 2003;28:41–54. doi: 10.1097/00004010-200301000-00006.

2. Caropreso PR. The learning curve. JAMA. 1994;271:824. doi: 10.1001/jama.271.11.824a.

3. Marshall JB. Technical proficiency of trainees performing colonoscopy: A learning curve. Gastrointest Endosc. 1995;42:287–91. doi: 10.1016/s0016-5107(95)70123-0.

4. Mulcaster JT, Mills J, Hung OR, et al. Laryngoscopic intubation: Learning and performance. Anesthesiology. 2003;98:23–7. doi: 10.1097/00000542-200301000-00007.

5. Parry BR, Williams SM. Competency and the colonoscopist: A learning curve. Aust N Z J Surg. 1991;61:419–22. doi: 10.1111/j.1445-2197.1991.tb00254.x.

6. Smith CD, Farrell TM, McNatt SS, et al. Assessing laparoscopic manipulative skills. Am J Surg. 2001;181:547–50. doi: 10.1016/s0002-9610(01)00639-0.

7. Watkins JL, Etzkorn KP, Wiley TE, et al. Assessment of technical competence during ERCP training. Gastrointest Endosc. 1996;44:411–5. doi: 10.1016/s0016-5107(96)70090-1.

8. Seymour NE, Gallagher AG, Roman SA, et al. Virtual reality training improves operating room performance: Results of a randomized, double-blinded study. Ann Surg. 2002;236:458–63. doi: 10.1097/00000658-200210000-00008.

9. Macias WL, Vallet B, Bernard GR, et al. Sources of variability on the estimate of treatment effect in the PROWESS trial: Implications for the design and conduct of future studies in severe sepsis. Crit Care Med. 2004;32:2385–91. doi: 10.1097/01.ccm.0000147440.71142.ac.

10. Kohn LT, Corrigan J, Donaldson MS, et al., editors. To err is human: Building a safer health system. National Academy Press; Washington, D.C.: 1999.

11. Bodenheimer T. Uneasy alliance--clinical investigators and the pharmaceutical industry. N Engl J Med. 2000;342:1539–44. doi: 10.1056/NEJM200005183422024.

12. Tunis SR, Stryer DB, Clancy CM. Practical clinical trials: Increasing the value of clinical research for decision making in clinical and health policy. JAMA. 2003;290:1624–32. doi: 10.1001/jama.290.12.1624.

13. Pfeffer MA, McMurray JJ, Velazquez EJ, et al. Valsartan, captopril, or both in myocardial infarction complicated by heart failure, left ventricular dysfunction, or both. N Engl J Med. 2003;349:1893–906. doi: 10.1056/NEJMoa032292.

14. Sheffield Teaching Hospitals. Standard operating procedure [PDF]. 2005 [updated 2005; cited 2006 November 2]. Available from: http://www.sth-research.group.shef.ac.uk/documents/sops/sop_a105_protocol_violation.pdf.

15. Stone CJ. Generalized additive models. Statistical Science. 1986;1:312–14.

16. Harrell FE. Regression modeling strategies: With applications to linear models, logistic regression, and survival analysis. Springer-Verlag; New York: 2001.

17. Wright TP. Factors affecting the cost of airplanes. Journal of Aeronautical Sciences. 1936;3:122–28.

18. Cook JA, Ramsay CR, Fayers P. Statistical evaluation of learning curve effects in surgical trials. Clin Trials. 2004;1:421–7. doi: 10.1191/1740774504cn042oa.

19. Thoma A. Challenges in creating a good randomized controlled trial in hand surgery. Clin Plast Surg. 2005;32:563–73. doi: 10.1016/j.cps.2005.05.002.

20. Braunholtz DA, Edwards SJ, Lilford RJ. Are randomized clinical trials good for us (in the short term)? Evidence for a “trial effect”. J Clin Epidemiol. 2001;54:217–24. doi: 10.1016/s0895-4356(00)00305-x.

21. Bloom BS, Krathwohl DR. Taxonomy of educational objectives: The classification of educational goals. D. McKay Co. Inc.; New York: 1956.

22. Stafford-Smith M, Lefrak EA, Qazi AG, et al. Efficacy and safety of heparinase I versus protamine in patients undergoing coronary artery bypass grafting with and without cardiopulmonary bypass. Anesthesiology. 2005;103:229–40. doi: 10.1097/00000542-200508000-00005.

23. Taekman JM, Hobbs G, Barber L, et al. Preliminary report on the use of high-fidelity simulation in the training of study coordinators conducting a clinical research protocol. Anesth Analg. 2004;99:521–7. doi: 10.1213/01.ANE.0000132694.77191.BA.

24. Fitzmartin R, Nitahara K. A survey of industry best practice metrics in clinical data management and biostatistics. Drug Information Journal. 2001;35:671–9.

25. Wright MC, Taekman JM, Barber L, et al. The use of high-fidelity human patient simulation as an evaluative tool in the development of clinical research protocols and procedures. Contemp Clin Trials. 2005;26:646–59. doi: 10.1016/j.cct.2005.09.004.