Abstract

Objective

Compare auditory performance of Hybrid and standard cochlear implant users with psychoacoustic measures of spectral and temporal sensitivity and correlate with measures of clinical benefit.

Study design

Cross-sectional study.

Setting

Tertiary academic medical center.

Patients

Hybrid cochlear implant users between 12 and 33 months post implantation. Hybrid recipients all had preservation of low-frequency hearing.

Interventions

Administration of psychoacoustic, music perception, and speech reception in noise tests.

Main outcome measures

Performance on spectral-ripple discrimination, temporal modulation detection, Schroeder-phase discrimination, Clinical Assessment of Music Perception (CAMP), and speech reception in steady-state noise tests.

Results

CAMP pitch performance at 262 Hz was significantly better in Hybrid users compared to standard implant controls. There was a near significant difference on speech reception in steady-state noise. Surprisingly, neither Schroeder-phase discrimination at two frequencies nor temporal modulation detection thresholds across a range of frequencies revealed any advantage in Hybrid users. This contrasts with spectral-ripple measures that were significantly better in the Hybrid group. The spectral-ripple advantage was preserved even when using only residual hearing.

Conclusions

These preliminary data confirm existing data demonstrating that residual low-frequency acoustic hearing is advantageous for pitch perception. Results also suggest that clinical benefits enjoyed by Hybrid recipients are due to improved spectral discrimination provided by the residual hearing. No evidence indicated that residual hearing provided temporal information beyond that provided by electrical stimulation.

Keywords: electroacoustic, Hybrid cochlear implant, spectral, temporal

INTRODUCTION

Despite the impressive advances in cochlear implant (CI) technology over the past 25 years, many individuals with hearing loss fall into a gray area marked by hearing too good for CI candidacy but too poor for hearing aids to provide an adequately functional level of hearing. Hybrid, or electroacoustic, cochlear implants were designed to target this important patient population. Hybrid devices combine electrical hearing from direct stimulation of the basal cochlea with acoustical hearing from surviving apical hair cells. This is achieved through shorter and softer electrode arrays that are inserted into the basal turn of the cochlea with minimally traumatic surgical techniques. The basal cochlea is then stimulated electrically via the implant, whereas the apical cochlea functions via native physiology amplified (if needed) by a hearing aid.1,2

Because electrical stimulation occurs basally and acoustical stimulation occurs apically, appropriate candidates would have poor high-frequency hearing and relatively intact low-frequency hearing. Indeed, this audiometric configuration of a down-sloping sensorineural hearing loss is the most frequently encountered in adults.1 Contributing etiologies are common and include presbycusis, ototoxicity, and noise-induced hearing loss.

The ability to supplement the electrical signal with acoustic hearing confers several unique benefits to Hybrid implant users compared to standard long electrode implant users. This includes improved speech understanding in noise3–7 and music recognition abilities8. Despite the presence of numerous studies citing advantages of electroacoustic hearing, the underlying mechanisms to explain these data have not been thoroughly studied.

Two enhanced hearing abilities could potentially account for the performance improvements in Hybrid implant users. The first is improved spectral resolution. Electrical stimulation of the cochlea is spectrally imprecise. Current spread reduces the spatial selectivity of neural stimulation along the tonotopically oriented cochlea.9 Furthermore, inexact alignment of electrodes representing specific frequency ranges with the appropriate tonotopic place results in spectral mismatch. Thus, while modern long CIs contain 12 or more independent electrodes, the number of functionally independent channels is limited to a maximum of about 8.10 This suggests implant innovations should be focused beyond simply increasing the number of channels. The low frequency acoustic component of Hybrid hearing should theoretically enjoy far greater spectral resolution, which could aid in complex hearing tasks such as speech in noise and music appreciation.

The second ability that may explain Hybrid superiority is improved temporal resolution. Encoding strategies used for electrical stimulation discard the temporal fine structure of sound, transmitting only the temporal envelope. While the envelope is sufficient for understanding speech in quiet, the fine structure contains more subtle cues such as temporal pitch information.9,11,12 Hybrid users may have improved temporal abilities by utilizing their residual low frequency acoustic hearing.13

In the present study, a variety of listening abilities were evaluated in 5 Hybrid implant users and compared to those previously reported for long electrode implant users. Measures included speech and music perception as well as spectral and temporal psychoacoustical abilities. Spectral sensitivity was evaluated using spectral-ripple discrimination.14,15 Sensitivity to rapidly changing spectral cues was evaluated using Schroeder-phase discrimination.11 Temporal envelope sensitivity was evaluated using temporal modulation detection.16 These psychoacoustic tests were previously shown to correlate with CI users’ clinical performance such as speech and music perception.11,15,17

MATERIALS AND METHODS

Two subjects implanted with the Cochlear Nucleus Freedom-based Hybrid S8 device (6 intracochlear electrodes along a 10 mm array) and three subjects implanted with the Cochlear Nucleus Freedom-based Hybrid S12 device (10 intracochlear electrodes along a 10 mm array) were enrolled in the study. All implantations were performed at the University of Washington site of the Hybrid FDA clinical trials. Subject ages ranged from 63 to 75 years.

Map settings and fitting strategies for the Hybrid device were set in Custom Sound 2.1 software (Cochlear Ltd) according to the Hybrid S12 clinical trial protocol. The strategy for the electric component was ACE/ACE(RE) at 900 Hz with the number of maxima equal to the number of channels (i.e., 6 or 10). The acoustic component was fit using the National Acoustics Laboratories’ hearing aid fitting strategy (NAL-RP). The lowest frequency for electric stimulation was assigned to the frequency where acoustic hearing was no longer useful, defined by a threshold of 90 dB HL. Thus, there was no frequency overlap between the electric and acoustic hearing.

The duration between implantation and the first date of testing ranged from 12 to 33 months (mean and SEM 21±5 months). Testing was performed over two to three four-hour sessions. The duration between the first and final testing sessions was no greater than 9 months.

All subjects used the clinical processing program (map) that they most commonly employed for everyday listening. Non-implanted ears were occluded with an insert earplug or appropriately masked using a clinical audiometer.

Psychoacoustic tests were administered using custom graphical software programs written in MATLAB (MathWorks; Natick, MA). Acoustic stimuli were presented using a Macintosh G5 Computer (Apple; Cupertino, CA) via a Crown D45 amplifier (Crown Audio; Elkhart, IN) connected to a single B&W DM303 loudspeaker (Bowers & Wilkins; North Reading, MA). Subjects were positioned 1 m in front of the speaker in a double-walled sound-treated booth. The following tests were employed:

Spectral-Ripple Discrimination

This task used stimuli and procedures as previously described.15 Briefly, stimuli of 500 ms duration were generated by summing 200 pure-tone frequency components. Each 500 ms stimulus was either a standard or inverted ripple. A 3-interval, 3-alternative forced-choice (AFC) paradigm using a two-up and one-down adaptive procedure was used to determine the spectral-ripple resolution threshold, converging on 70.7% correct.18 One stimulus (i.e., inverted ripple sound, test stimulus) was different from two others (i.e., standard ripple sound, reference stimulus). The subject’s task was to discriminate the test stimulus from the reference stimuli. The depth of the ripples was 30 dB. The mean presentation level of the stimuli was 65 dBA and randomly roved within trials (7 dB range, 1 dB steps). The threshold was estimated by averaging the ripple spacing (the number of ripples/octave) for the final 8 of 13 reversals. Subjects completed 6 test runs with each testing condition. Scores represented the spectral-ripple resolution threshold, indicating the threshold for discriminating ripple density in ripples/octave. Thus, higher scores implied better performance.
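The adaptive track described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' MATLAB software: the starting density, the multiplicative step (`step_ratio`), and the simulated listener passed as `is_correct` are hypothetical, since the text does not state them. The rule makes the task harder after two consecutive correct responses and easier after one incorrect response, which settles where the probability of two correct responses in a row is 0.5, i.e., about 70.7% correct per trial.

```python
def run_ripple_staircase(is_correct, start_density=0.5, step_ratio=2 ** 0.5,
                         n_reversals=13, n_averaged=8, max_trials=1000):
    """Track ripple density (ripples/octave) with a transformed up-down rule.

    is_correct(density) -> bool is a hypothetical listener model.
    start_density and step_ratio are assumptions, not values from the text.
    """
    density = start_density
    correct_in_a_row = 0
    last_direction = 0      # +1: density was raised, -1: density was lowered
    reversals = []

    for _ in range(max_trials):
        if len(reversals) >= n_reversals:
            break
        if is_correct(density):
            correct_in_a_row += 1
            if correct_in_a_row < 2:
                continue            # wait for a second correct response
            correct_in_a_row = 0
            direction = +1          # two correct: higher density (harder)
        else:
            correct_in_a_row = 0
            direction = -1          # one incorrect: lower density (easier)

        # A reversal is a change in the direction of the track.
        if last_direction and direction != last_direction:
            reversals.append(density)
        last_direction = direction
        density = density * step_ratio if direction > 0 else density / step_ratio

    if not reversals:
        raise ValueError("no reversals recorded; check the listener model")
    tail = reversals[-n_averaged:]  # final 8 of 13 reversals by default
    return sum(tail) / len(tail)
```

For example, `run_ripple_staircase(lambda d: d <= 3.0)` simulates an idealized listener who always succeeds at or below 3 ripples/octave and returns the mean of the last eight reversal densities.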

Speech Reception Threshold (SRT) in Steady State Noise

This test was administered using methods and stimuli as previously described.15 Subjects were asked to identify one randomly chosen spondee word (two-syllable word with equal emphasis on each syllable) out of a closed set of 12 equally difficult spondees. The spondees were recorded by a female talker. A steady-state, speech-shaped background noise was used and the onset of the spondees was 500 ms after the onset of the background noise. The steady-state noise had a duration of 2 s. The level of the target speech was 65 dBA. The level of the noise was tracked using a 1-down, 1-up procedure and 2 dB steps. The threshold for a single test run was estimated by averaging the signal-to-noise ratio (SNR) for the final 10 of 14 reversals. The primary dependent variable was the mean SRT across six repeated test runs. Scores represented dB SNR, with lower (more negative) scores representing better performance.3,15

University of Washington Clinical Assessment of Music Perception Test (UW-CAMP)

Melody, timbre, and pitch recognition abilities were assessed as previously described.19

The complex-tone pitch direction discrimination test used a synthesized piano tone of 3 different frequencies (262, 330, and 391 Hz). The tones were synthesized to make the envelopes of each harmonic complex. The task was a 2-AFC in which listeners were asked to select the interval with the higher frequency. A one-up and one-down tracking procedure was used to measure the minimum detectable change in semitones that a listener could hear. The step size was one semitone equivalent to a half step on the piano. Three tracking histories were run for each frequency. The threshold for each tracking history was the mean of the last 6 of 8 reversals. The threshold for each frequency was the mean of the 3 thresholds from each tracking history.
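The scoring arithmetic for the pitch test can be illustrated with a short Python sketch. It assumes equal temperament, in which one semitone corresponds to a frequency ratio of 2^(1/12); the function names and the reversal values in the usage note are hypothetical and are not data from the study.

```python
def semitones_to_hz(base_hz, semitones):
    """Frequency reached by moving `semitones` above `base_hz`
    (equal temperament assumed: one semitone = a ratio of 2 ** (1 / 12))."""
    return base_hz * 2 ** (semitones / 12)


def track_threshold(reversals, n_last=6):
    """Mean of the last `n_last` reversal values (in semitones) of one track."""
    tail = reversals[-n_last:]
    return sum(tail) / len(tail)


def pitch_threshold(tracks):
    """Mean of the per-track thresholds for one base frequency."""
    per_track = [track_threshold(r) for r in tracks]
    return sum(per_track) / len(per_track)
```

With a made-up track of eight reversals such as `[5, 4, 3, 2, 3, 2, 3, 2]`, `track_threshold` averages the last six values and returns 2.5 semitones. A one-semitone step above 262 Hz, computed with `semitones_to_hz(262, 1)`, is about 277.6 Hz.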

The melody test was conducted using a 12-AFC task with familiar isochronous melodies such as “Twinkle, Twinkle Little Star” and “Row, Row, Row Your Boat”. Rhythm cues were eliminated by repeating long tones in an eight-note pattern. The level of each successive note in the sequence was roved by ±4 dB to reduce loudness cues. Each melody was presented 3 times.

The timbre test was an 8-AFC task. A simple note pattern was played with each musical instrument recorded in a live studio. The notes were separated in time and played in the same octave at the same tempo. Recordings were matched for note lengths and adjusted to match levels. The performers were instructed to avoid vibrato. Instruments include piano, guitar, clarinet, saxophone, flute, trumpet, violin, and cello.

Schroeder-Phase Discrimination

This test was administered as previously described.11 Positive and negative Schroeder-phase stimuli pairs were created for two different fundamental frequencies (F0s) of 50 Hz and 200 Hz. For each F0, equal-amplitude cosine harmonics from the F0 up to 5 kHz were summed. The Schroeder-phase stimuli were presented at 65 dBA without roving the level. A 4-interval, 2-AFC procedure was used. One stimulus (i.e. positive Schroeder-phase, test stimulus) in either the second or third interval was different from three others (i.e. negative Schroeder-phase, reference stimulus). The subject’s task was to discriminate the test stimulus from the reference stimuli. To determine a total percent correct for each F0, the method of constant stimuli was used. In a single test block, each F0 was presented 24 times in random order and a total percent correct for each F0 was calculated as the percent of stimuli correctly identified. The dependent variable for this test was the mean percent correct of six test blocks for each F0. The total number of presentations for each F0 was 144. Scores represented a percentage correct, where 50% was the chance level.
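A Schroeder-phase harmonic complex of the kind described above can be sketched in Python. The text does not give the phase formula, so this sketch assumes the standard Schroeder construction, in which harmonic n of N has phase ±πn(n+1)/N. The sign convention, the stimulus duration, and the sampling rate are likewise assumptions, and the function name is hypothetical.

```python
import math


def schroeder_complex(f0, sign, duration_s=0.5, fs=22050, f_max=5000.0):
    """Sum of equal-amplitude cosine harmonics of f0 up to f_max.

    sign=+1 gives the positive Schroeder-phase stimulus, sign=-1 the negative.
    Phase of harmonic n (assumed): sign * pi * n * (n + 1) / N.
    duration_s and fs are assumptions; they are not stated in the text.
    """
    n_harmonics = int(f_max // f0)        # 100 for 50 Hz, 25 for 200 Hz
    n_samples = int(round(duration_s * fs))
    phases = [sign * math.pi * n * (n + 1) / n_harmonics
              for n in range(1, n_harmonics + 1)]
    samples = []
    for k in range(n_samples):
        t = k / fs
        samples.append(sum(math.cos(2 * math.pi * n * f0 * t + phase)
                           for n, phase in enumerate(phases, start=1)))
    return samples
```

Under this construction the positive and negative stimuli have identical magnitude spectra and are time-reversed versions of each other within each period, so a listener can tell them apart only by using temporal cues.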

Temporal Modulation Detection

This test was adapted from previously employed methods.16,20 A 2-interval, 2-AFC procedure was used to measure the modulation detection threshold (MDT). One of the two 1 s observation intervals consisted of sinusoidally amplitude modulated wide-band noise, and the other 1 s observation interval consisted of continuous wide-band noise. The subjects were instructed to choose the interval which contained the modulated noise. For the modulated stimuli, sinusoidal amplitude modulation was applied to the wide-band noise carrier using the following equation: $f(t)\,[1 + m_i \sin(2\pi f_m t)]$, where $f(t)$ is the wide-band noise carrier, $m_i$ is the modulation index (i.e., modulation depth), and $f_m$ is the modulation frequency. To compensate for the intensity increment of the modulated stimuli, the modulated waveform was divided by a factor of $1 + (m_i^2/2)$. Stimuli were ramped with 10 ms rise/fall times. Modulation frequencies of 10, 50, 100, 150, 200, and 300 Hz were used. Stimuli were presented at 65 dBA. A 2-down, 1-up adaptive procedure was used to measure the modulation depth ($m_i$) threshold, converging on 70.7%,18 starting with a modulation depth of 100% and decreasing in steps of 4 dB from the first to fourth reversal, and 2 dB for the next 10 reversals. The final 10 reversals for each run were averaged to obtain the MDT for each test run. Six test blocks were conducted. We reported MDTs in dB relative to 100% modulation ($20\log_{10}(m_i)$).
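The modulated stimulus and the dB conversion can be sketched in Python. This is an illustration only: the Gaussian noise carrier, the sampling rate, and the function names are assumptions, and the 10 ms onset and offset ramps are omitted. The compensation step follows the factor exactly as written in the text.

```python
import math
import random


def sam_noise(m_i, f_m, duration_s=1.0, fs=22050, seed=None):
    """Sinusoidally amplitude-modulated wide-band noise.

    m_i: modulation index (0 to 1); f_m: modulation frequency in Hz.
    The Gaussian carrier and fs are assumptions, not details from the text.
    """
    rng = random.Random(seed)
    n_samples = int(round(duration_s * fs))
    # Compensation factor as written in the text. Note that matching RMS
    # level exactly would call for the square root of this factor.
    factor = 1.0 + (m_i ** 2) / 2.0
    return [rng.gauss(0.0, 1.0)
            * (1.0 + m_i * math.sin(2 * math.pi * f_m * k / fs))
            / factor
            for k in range(n_samples)]


def mdt_db(m_i):
    """Modulation depth in dB relative to 100% modulation."""
    return 20 * math.log10(m_i)
```

On this scale, 100% modulation (`m_i = 1`) is 0 dB and 10% modulation (`m_i = 0.1`) is -20 dB, which is why more negative MDTs indicate better sensitivity.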

The spectral-ripple discrimination, Schroeder-phase harmonic discrimination, and temporal modulation detection tests were administered both with acoustic-only (A) and electroacoustic (EA) hearing. The remaining tests were administered with only EA hearing. In order to assess A hearing, subjects detached the speech processor magnetic coil, thereby disabling E hearing.

Data from forty-two standard cochlear implant subjects who underwent testing at our center with the SRT and CAMP, spectral-ripple, and Schroeder-phase discrimination tests were used for control comparison. Subject characteristics are detailed in a prior publication.17 Briefly, the subject age range was 25–78 years (mean 50). The duration of deafness ranged between 0–57 years (mean 9.4) and the range of implant use duration was 0.3–16 years (mean 4.1). Data from twenty-four standard cochlear implant subjects who completed the temporal modulation detection test were also used for control comparison. Subject characteristics are likewise detailed in a prior publication.16 Briefly, the subject age range was 25–78 (mean 59). The duration of deafness ranged between 0–57 years (mean of 9.7) and the range of implant use duration was 0.5–11 years (mean 3.2).

Published performance data from nonimplanted hearing impaired and normal subjects on the Schroeder-phase discrimination21 and temporal modulation detection20 tests were employed for comparison purposes. Details appear in the referenced publications.

Statistics were performed in SPSS 19 (IBM; Armonk, NY). An independent-samples two-tailed t test was utilized to compare means between user groups. A paired-samples two-tailed t test was employed to compare means between acoustic and electroacoustic hearing performance among the same subjects. Significance was considered at the p<0.05 level. Mean values are presented with standard errors of the mean (SEM).
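For readers who want to see how the summary statistics are formed, the following Python sketch computes a mean with its SEM and the paired-samples t statistic. It is a worked illustration using only the standard library, not the SPSS analysis itself; the function names are hypothetical, and converting t to a p value (which requires the t distribution with n - 1 degrees of freedom) is left out.

```python
import math
import statistics


def mean_sem(values):
    """Return (mean, standard error of the mean) using the sample SD (n - 1)."""
    mean = statistics.mean(values)
    sem = statistics.stdev(values) / math.sqrt(len(values))
    return mean, sem


def paired_t(condition_a, condition_b):
    """Paired-samples t statistic: mean difference divided by its SEM."""
    diffs = [a - b for a, b in zip(condition_a, condition_b)]
    mean_diff, sem_diff = mean_sem(diffs)
    return mean_diff / sem_diff
```

The paired form applies to the within-subject comparison of acoustic and electroacoustic conditions, where each subject contributes one score per condition.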

The study protocol was reviewed and approved by the University of Washington Institutional Review Board.

RESULTS

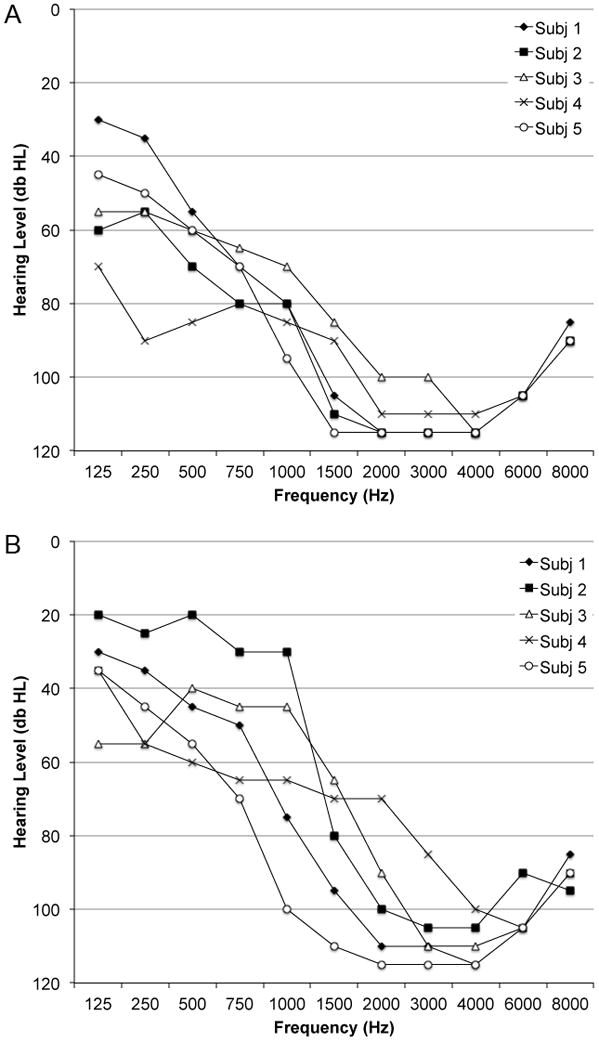

Hybrid subjects’ most recent audiograms prior to testing appear in Figure 1.

FIG. 1.

A, Individual pure tone audiograms for the subjects’ implanted ear. B, Individual pure tone audiograms for the subjects’ nonimplanted ear.

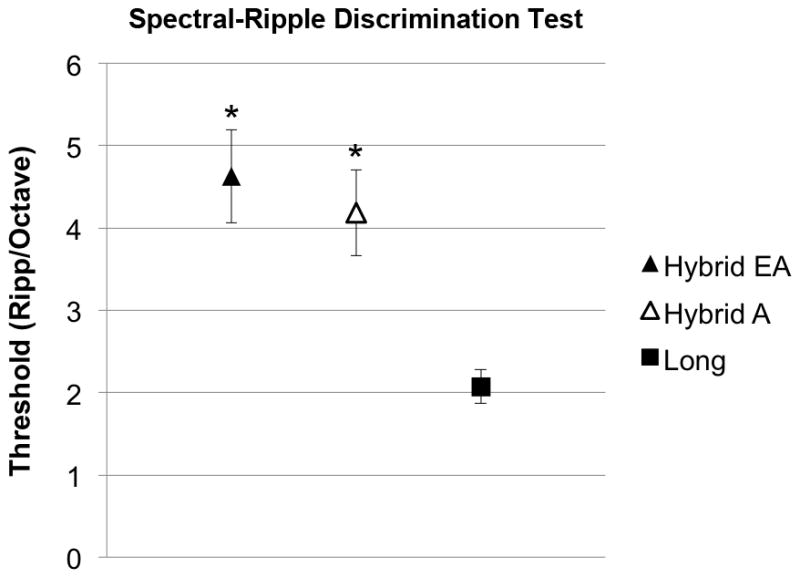

On the spectral-ripple discrimination test, Hybrid users (mean score 4.6±0.6 ripples/octave) performed significantly better than standard implant users (mean score 2.1±0.2 ripples/octave) (p<0.001). Four Hybrid users were then administered the test again using only their acoustic hearing. A pairwise comparison revealed no significantly different performance between the electroacoustic and acoustic alone conditions (p=0.47). (Figure 2)

FIG. 2.

Spectral-ripple discrimination test performance. Higher scores indicate better performance. *Significant difference between Hybrid users and standard cochlear implant users. (EA, electroacoustic; A, acoustic; error bars are SEM)

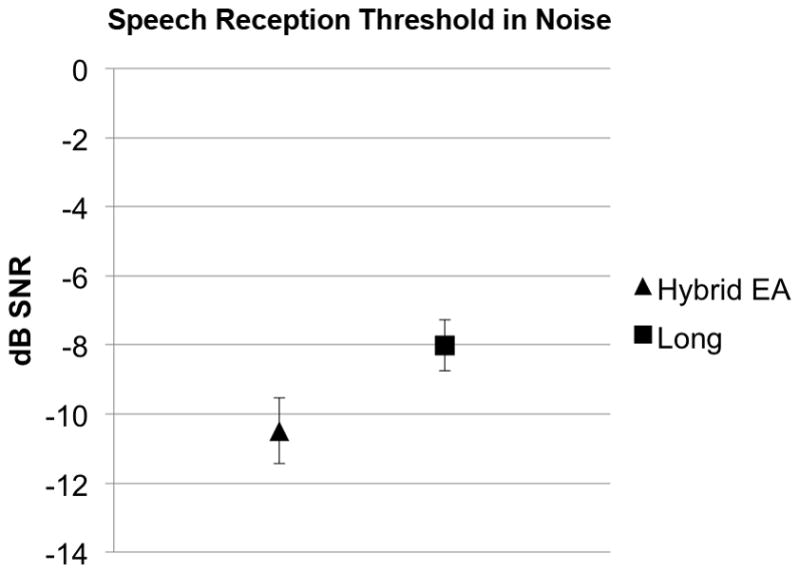

There was a nearly significant difference between Hybrid users and standard implant users on the speech reception threshold (SRT) in steady state noise test (p=0.066). Adding one more Hybrid subject performing at the group mean would have yielded a significant Hybrid advantage at the p<0.05 level. (Figure 3)

FIG. 3.

Speech reception threshold (SRT) performance. Lower (more negative) scores indicate better performance. (EA, electroacoustic; SNR, signal-to-noise ratio; error bars are SEM)

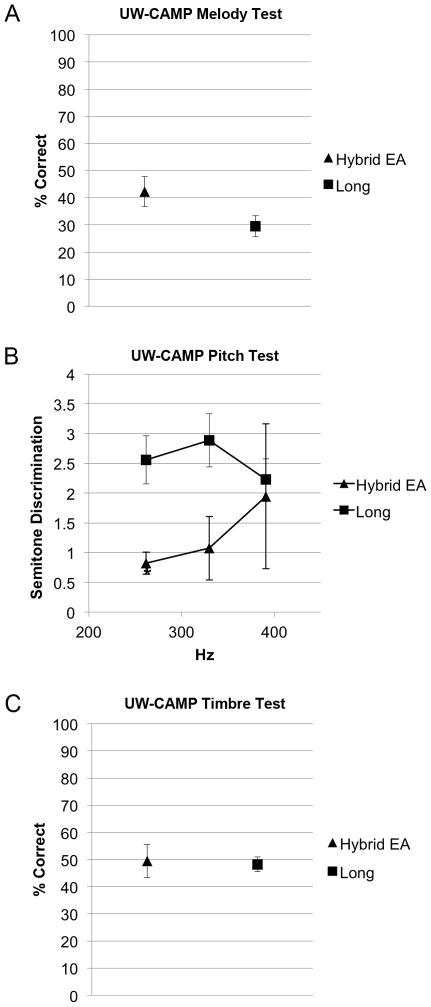

Hybrid user performance was significantly better on the pitch-direction discrimination portion of the UW-CAMP than standard implant users at 262 Hz (p<0.001). Performance was not statistically different at 330 Hz (p=0.15) or 391 Hz (p=0.6). (Figure 4B)

FIG. 4.

University of Washington Clinical Assessment of Music Perception (UW-CAMP) performance. A, Melody test. B, Pitch test. Lower scores indicate better performance. C, Timbre test. *Significant difference between Hybrid users and standard cochlear implant users. (p<0.05) (EA, electroacoustic; error bars are SEM)

Hybrid user performance was not statistically different on the melody (p=0.27) or timbre (p=0.87) portions of the UW-CAMP from standard implant users. (Figure 4A, C)

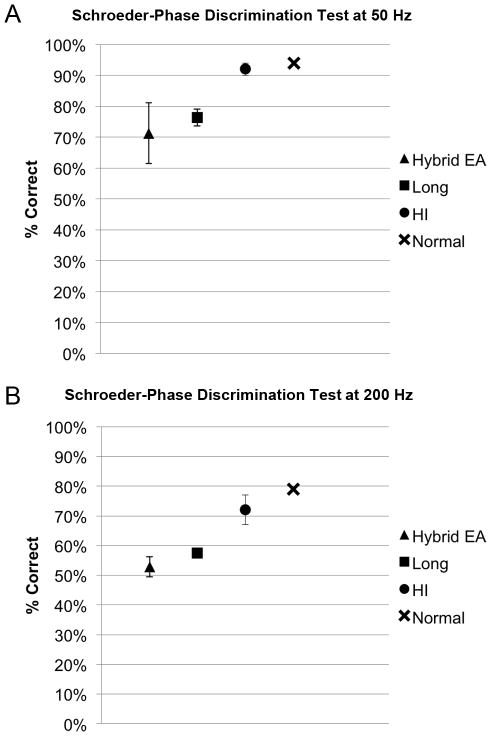

There was no statistically significant difference between Hybrid user performance and standard cochlear implant user performance on Schroeder-phase discrimination at 50 Hz (p=0.53) or 200 Hz (p=0.30). Three Hybrid users were then administered the test again using only their acoustic hearing. A pairwise comparison revealed no significantly different performance between the electroacoustic and acoustic conditions at either 50 Hz (p=0.31) or 200 Hz (p=0.71). (Figure 5)

FIG. 5.

Schroeder-phase discrimination performance. A, 50 Hz. B, 200 Hz. Chance level is 50%. Higher scores indicate better performance. Previously published hearing impaired and normal data are displayed for reference.21 (EA, electroacoustic, HI, hearing impaired; error bars are SEM)

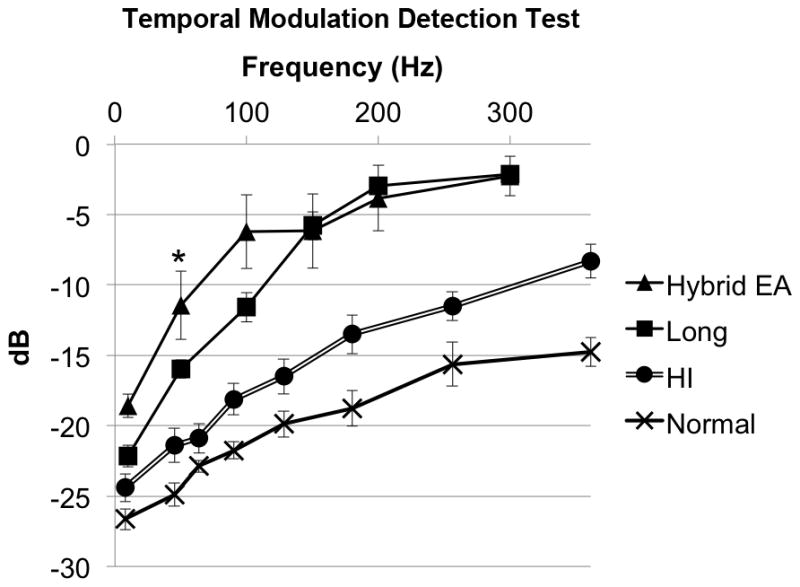

On the temporal modulation detection test, there was no statistically significant difference between Hybrid user performance and standard cochlear implant user performance at 10 Hz (p=0.39), 100 Hz (p=0.11), 150 Hz (p=0.75), 200 Hz (p=0.57), or 300 Hz (p=0.88). Of note, at 50 Hz, Hybrid users scored significantly worse than standard implant users (p<0.05). (Figure 6) Three Hybrid users were then administered the test again using only their acoustic hearing. A pairwise comparison revealed no significantly different performance between the electroacoustic and acoustic conditions at any frequency (p=0.10 to 1.0).

FIG. 6.

Temporal modulation detection test performance. *Significant difference between Hybrid users and standard cochlear implant users. Lower (more negative) scores indicate better performance. Previously published hearing impaired and normal data are displayed for reference.20 (EA, electroacoustic, HI, hearing impaired; error bars are SEM)

DISCUSSION

Numerous studies have cited clinical performance advantages to Hybrid (electroacoustic) cochlear implant users compared to standard long cochlear implant users. This includes improved speech recognition in noise as well as music recognition.3,4,6,8 Yet, the mechanism that underlies these improved abilities is poorly understood. In this report, performance of Hybrid cochlear implant subjects was compared to standard long cochlear implant subjects on a battery of psychoacoustic tests designed to specifically assess spectral and temporal discrimination. Knowing which basic abilities are improved in Hybrid users might lead to improved understanding of electroacoustic hearing and also suggest avenues for further implant refinement.

One of the fundamental challenges of Hybrid implant research is matching control subjects to draw comparisons. For example, the majority of Hybrid subjects are not standard cochlear implant candidates and vice versa. Subjects in the Hybrid group were older and also had less experience with the implant than subjects in the standard cochlear implant group.

While our subject numbers were too low to reveal a statistically significant advantage with speech recognition in steady state noise, prior studies have demonstrated an advantage in understanding speech with competing talker noise.3–7

The data revealed that Hybrid subjects outperformed standard implant subjects on the spectral-ripple discrimination test as well as on pitch discrimination at 262 Hz on the UW-CAMP.

In order to investigate whether the improved spectral performance may be explained by the residual acoustic hearing, subjects repeated the spectral-ripple discrimination test with acoustic hearing only. There was no significant difference in performance between the electroacoustic and acoustic-only conditions. Likewise, the acoustic condition alone yielded significantly superior performance compared to standard cochlear implant users. This suggests that better spectral resolution abilities of the residual acoustic hearing are, at least in part, responsible for Hybrid performance benefits.

In contrast to the superior spectral sensitivity, Hybrid subjects performed equivalently to, or possibly slightly worse than, standard implant subjects on temporal tasks. On the Schroeder-phase discrimination test, Hybrid and standard implant subjects scored equivalently at the two tested frequencies. On the temporal modulation detection test, Hybrid subjects scored equivalently at five frequencies, but worse at one frequency (50 Hz, the second lowest tested).

Because acoustical hearing has far better temporal processing abilities than electrical hearing22, this was a surprising finding. Indeed others have suggested that even a single narrowband channel of low-frequency temporal information can greatly enhance electrical hearing.13 Distortion of the test signals due to saturation of the acoustic device was unlikely since loudness balancing was performed as part of the device fitting. Furthermore, the loudness of all test signals was at conversational volume. One possible explanation is that Hybrid users could not fully integrate electrical spectral with acoustical temporal information.

Hybrid subjects have been shown to require up to 10–12 months before achieving maximal levels of speech understanding, longer than required for standard implant users.1 All of our subjects were beyond this learning period, however, having a mean time from implantation to testing of 21 months. It is conceivable that learning to integrate temporal information is more challenging and requires even greater amounts of time. Age has also been shown to have a negative association with performance, perhaps due to the high cognitive abilities required to integrate electrical and acoustical information. It is possible that integrating disparate temporal information is more challenging, and that subjects tested in this study, whose mean age was 70, lacked the requisite plastic or cognitive abilities.

Even more concerning, however, is the possibility that the dual modality of sound presentation could be antagonistic. It is possible that spectral discrepancies introduced by Hybrid implant programming could affect accurate encoding of the sound. Whereas the tonotopic encoding of the sound in the apical turn of the cochlea is correct, the basal turn where the implant array is inserted follows the implant frequency allocation to individual electrodes. More specifically, the frequency range processed by the Hybrid implant generally covers 1500 to 8000 Hz, but the physical location of the electrodes in the cochlea does not match to this range. It also appears that some frequency components might simultaneously be represented acoustically as well as electrically.

In order to test whether these spectral discrepancies affected the Schroeder-phase and temporal modulation detection tests (in which Hybrid subjects showed no advantage over standard implant subjects), we re-administered these tests to three subjects in both the acoustic and electroacoustic conditions. To eliminate performance fluctuations over different testing days, both tests were administered the same day and the order of the tests was randomized. There was no significant difference in performance.

A final explanation, supported by our data from the acoustic condition alone, is that the degree of residual hearing alone was insufficient to gain any temporal advantages. This would stand in contrast with the spectral advantages observed with residual hearing. Thus, better preservation of residual hearing could potentially offer an advantage in temporal processing. Of note, the degree of residual hearing lost post implantation has been shown to hold no correlation to speech recognition in quiet.6 However, one of the hallmark improvements of Hybrid hearing is better speech recognition in background noise. Improved temporal processing may help further realize this goal.

In conclusion, our data suggest that improved performance in Hybrid cochlear implant users is due to spectral, rather than temporal advantages of the residual acoustic hearing. Better recognition of temporal cues may represent an avenue for improvement for both Hybrid and standard cochlear implants.

Acknowledgments

The authors thank Elyse Jameyson for her assistance. This work was supported by NIH grants R01-DC007525, P30-DC04661, F31-DC009755, L30-DC008490.

Footnotes

Financial Disclosures: Jay Rubinstein is a paid consultant for Cochlear Ltd and receives research funding from Advanced Bionics Corporation, two manufacturers of cochlear implants. Neither company played any role in data acquisition, analysis, or composition of this paper.

References

- 1. Gantz BJ, Turner CW. Combining acoustic and electrical hearing. The Laryngoscope. 2003 Oct;113(10):1726–1730. doi: 10.1097/00005537-200310000-00012.

- 2. Gantz BJ, Turner C, Gfeller KE, Lowder MW. Preservation of hearing in cochlear implant surgery: advantages of combined electrical and acoustical speech processing. Laryngoscope. 2005 May;115(5):796–802. doi: 10.1097/01.MLG.0000157695.07536.D2.

- 3. Turner CW, Gantz BJ, Vidal C, Behrens A, Henry BA. Speech recognition in noise for cochlear implant listeners: benefits of residual acoustic hearing. J Acoust Soc Am. 2004 Apr;115(4):1729–1735. doi: 10.1121/1.1687425.

- 4. Turner C, Gantz BJ, Reiss L. Integration of acoustic and electrical hearing. J Rehabil Res Dev. 2008;45(5):769–778. doi: 10.1682/jrrd.2007.05.0065.

- 5. Gantz BJ, Turner C, Gfeller KE. Acoustic plus electric speech processing: preliminary results of a multicenter clinical trial of the Iowa/Nucleus Hybrid implant. Audiol Neurootol. 2006;11(Suppl 1):63–68. doi: 10.1159/000095616.

- 6. Gantz BJ, Hansen MR, Turner CW, Oleson JJ, Reiss LA, Parkinson AJ. Hybrid 10 clinical trial: preliminary results. Audiol Neurootol. 2009;14(Suppl 1):32–38. doi: 10.1159/000206493.

- 7. Turner CW, Gantz BJ, Karsten S, Fowler J, Reiss LA. Impact of hair cell preservation in cochlear implantation: combined electric and acoustic hearing. Otology & Neurotology. 2010 Oct;31(8):1227–1232. doi: 10.1097/MAO.0b013e3181f24005.

- 8. Gfeller KE, Olszewski C, Turner C, Gantz B, Oleson J. Music perception with cochlear implants and residual hearing. Audiol Neurootol. 2006;11(Suppl 1):12–15. doi: 10.1159/000095608.

- 9. Rubinstein JT. How cochlear implants encode speech. Curr Opin Otolaryngol Head Neck Surg. 2004 Oct;12(5):444–448. doi: 10.1097/01.moo.0000134452.24819.c0.

- 10. Wilson BS. Cochlear implants: Current designs and future possibilities. The Journal of Rehabilitation Research and Development. 2008;45(5):695–730. doi: 10.1682/jrrd.2007.10.0173.

- 11. Drennan WR, Longnion JK, Ruffin C, Rubinstein JT. Discrimination of Schroeder-phase harmonic complexes by normal-hearing and cochlear-implant listeners. J Assoc Res Otolaryngol. 2008 Mar;9(1):138–149. doi: 10.1007/s10162-007-0107-6.

- 12. Smith ZM, Delgutte B, Oxenham AJ. Chimaeric sounds reveal dichotomies in auditory perception. Nature. 2002 Mar 7;416(6876):87–90. doi: 10.1038/416087a.

- 13. Zhang T, Dorman MF, Spahr AJ. Information from the voice fundamental frequency (F0) region accounts for the majority of the benefit when acoustic stimulation is added to electric stimulation. Ear Hear. 2010 Feb;31(1):63–69. doi: 10.1097/aud.0b013e3181b7190c.

- 14. Henry BA, Turner CW, Behrens A. Spectral peak resolution and speech recognition in quiet: normal hearing, hearing impaired, and cochlear implant listeners. J Acoust Soc Am. 2005 Aug;118(2):1111–1121. doi: 10.1121/1.1944567.

- 15. Won JH, Drennan WR, Rubinstein JT. Spectral-ripple resolution correlates with speech reception in noise in cochlear implant users. J Assoc Res Otolaryngol. 2007 Sep;8(3):384–392. doi: 10.1007/s10162-007-0085-8.

- 16. Won JH, Drennan WR, Nie K, Jameyson EM, Rubinstein JT. Acoustic temporal modulation detection and speech perception in cochlear implant listeners. The Journal of the Acoustical Society of America. 2011 Jul;130(1):376. doi: 10.1121/1.3592521.

- 17. Won JH, Drennan WR, Kang RS, Rubinstein JT. Psychoacoustic abilities associated with music perception in cochlear implant users. Ear Hear. 2010 Dec;31(6):796–805. doi: 10.1097/AUD.0b013e3181e8b7bd.

- 18. Levitt H. Transformed up-down methods in psychoacoustics. The Journal of the Acoustical Society of America. 1971 Feb;49(2 Suppl 2):467.

- 19. Kang R, Nimmons GL, Drennan W, et al. Development and validation of the University of Washington Clinical Assessment of Music Perception test. Ear Hear. 2009 Aug;30(4):411–418. doi: 10.1097/AUD.0b013e3181a61bc0.

- 20. Bacon SP, Viemeister NF. Temporal modulation transfer functions in normal-hearing and hearing-impaired listeners. Audiology: official organ of the International Society of Audiology. 1985;24(2):117–134. doi: 10.3109/00206098509081545.

- 21. Lauer AM, Molis M, Leek MR. Discrimination of time-reversed harmonic complexes by normal-hearing and hearing-impaired listeners. J Assoc Res Otolaryngol. 2009 Dec;10(4):609–619. doi: 10.1007/s10162-009-0182-y.

- 22. Kong Y-Y, Stickney GS, Zeng F-G. Speech and melody recognition in binaurally combined acoustic and electric hearing. The Journal of the Acoustical Society of America. 2005;117(3):1351. doi: 10.1121/1.1857526.