Abstract

The aim of the present study was to dissociate the neural correlates of semantic and phonological processes during word reading and picture naming. Previous studies have addressed this issue by contrasting tasks involving semantic and phonological decisions. However, these tasks engage verbal short‐term memory and executive functions that are not required for reading and naming. Here, 20 subjects were instructed to overtly name written words and pictures of objects while their neuronal responses were measured using functional magnetic resonance imaging (fMRI). Each trial consisted of a pair of successive stimuli that were either semantically related (e.g., “ROBIN‐nest”), phonologically related (e.g., “BELL‐belt”), unrelated (e.g., “KITE‐lobster”), or semantically and phonologically identical (e.g., “FRIDGE‐fridge”). In addition, a pair of stimuli could be presented in either the same modality (word‐word or picture‐picture) or a different modality (word‐picture or picture‐word). We report that semantically related pairs modulate neuronal responses in a left‐lateralized network, including the pars orbitalis of the inferior frontal gyrus, the middle temporal gyrus, the angular gyrus, and the superior frontal gyrus. We propose that these areas are involved in stimulus‐driven semantic processes. In contrast, phonologically related pairs modulate neuronal responses in bilateral insula. This region is therefore implicated in the discrimination of similar, competing phonological and articulatory codes. The above effects were detected with both words and pictures and did not differ between the two modalities even with a less conservative statistical threshold. In conclusion, this study dissociates the effects of semantic and phonological relatedness between successive items during reading and naming aloud. Hum Brain Mapp, 2007. © 2006 Wiley‐Liss, Inc.

Keywords: fMRI, language, phonology, semantics, reading, naming

INTRODUCTION

The name of a word or picture is intrinsically associated with its meaning. One of the challenges in the investigation of how language is implemented in the human brain is therefore to segregate semantic from phonological processes.

Most functional imaging studies have attempted to identify the brain areas that are selectively involved in phonological and semantic processes by manipulating the experimental task [Demonet et al., 1992; Price et al., 1997; Poldrack et al., 1999; Devlin et al., 2003; McDermott et al., 2003]. For example, McDermott et al. [2003] increased semantic demands by instructing participants to decide which two of three words were most meaningfully related (e.g., “tiger,” “circus,” and “jungle”) and increased phonological demands by instructing participants to decide which two of three words sounded most similar (e.g., “skill,” “fill,” and “hill”). These studies have typically reported increased activation during semantic relative to phonological tasks in anterior/ventral left inferior frontal cortex (pars orbitalis and pars triangularis), the angular gyrus, the middle temporal cortex, and the anterior fusiform gyrus. Conversely, increased activation during phonological relative to semantic tasks has been detected in posterior/dorsal left inferior frontal cortex (pars opercularis and premotor cortex), the insula, the supramarginal gyrus, and the posterior fusiform gyrus.

The interpretation of these findings, however, is constrained by two methodological limitations. First, while the studies have employed a variety of experimental tasks to manipulate semantic and phonological demands, they tend to share one common feature: the use of orthographic stimuli. One recent study has compared semantic and phonological processing using picture stimuli [Price et al., 2005], but no study has directly contrasted semantic and phonological processes using both orthographic and pictorial stimuli. Thus, it is currently unclear whether the reported double dissociation between phonological and semantic activations differs for orthographic and pictorial stimuli. Second, task manipulation may be affected by strategy confounds [Demonet et al., 1994; Noppeney and Price, 2003]. For instance, semantic tasks typically involve memory search, decision‐making, response selection, working memory processes, and mental imagery. Phonological tasks, on the other hand, tend to involve subvocal articulatory monitoring as well as verbal short‐term memory. Thus, semantic and phonological tasks are likely to be associated with differential executive processes that are not required for reading and naming per se. It is therefore currently unclear to what extent the reported double dissociation for phonological and semantic tasks reflects stimulus‐driven processes rather than task‐related strategies.

The aim of the present study was to investigate the neural correlates of phonological and semantic processes for orthographic as well as pictorial stimuli, while minimizing task‐related strategy confounds. In contrast with previous studies, this was achieved by manipulating the presentation of the stimuli while keeping the task constant throughout the experiment. Each trial involved the presentation of two successive stimuli that could be semantically related (e.g., “ROBIN‐nest”), phonologically related (e.g., “BELL‐belt”), unrelated (e.g., “KITE‐lobster”), or semantically and phonologically identical (e.g., “FRIDGE‐fridge”). In addition, each stimulus could be either a word or a picture. This allowed the identification of effects that were common to the two modalities as well as effects that were specific to either reading or naming. The experimental task simply required subjects to read all words and name all pictures overtly as soon as they appeared on the screen. The present paradigm can also be understood in terms of semantic and phonological priming [Henson, 2003], with the first stimulus or “prime” modulating the neuronal response to the second stimulus or “target” within each pair.

We predicted that semantically related and phonologically related pairs would modulate neuronal activation in distinct language areas. Specifically, semantically related pairs were expected to modulate activation in areas that are sensitive to meaningful associations. On the basis of previous functional imaging and neuropsychological studies, we expected these areas to include left inferior frontal [Kotz et al., 2002; Copland et al., 2003], anterior temporal [Hodges et al., 1992, 2000; Bozeat et al., 2000; Kensinger et al., 2003], middle temporal [Chertkow et al., 1997; Mummery et al., 1998; Copland et al., 2003], and parietal [Demonet et al., 1992; Mummery et al., 1998] regions. Likewise, phonologically related pairs were expected to modulate activation in areas that are sensitive to phonological and articulatory demands. These may include the left inferior parietal cortex, posterior fusiform, and prefrontal regions including pars opercularis, dorsal premotor cortex, and insula [Demonet et al., 1992; Dronkers, 1996; Price et al., 1997; Poldrack et al., 1999; Devlin et al., 2003; McDermott et al., 2003; Nestor et al., 2003]. We also predicted that most semantic and phonological effects would be similar for words and pictures, consistent with the idea that reading is a relatively recent skill from an evolutionary point of view and is therefore likely to be mediated by the same phonological and semantic processes that are involved in naming [Price et al., 2006]. However, given the almost exclusive reliance of previous studies on orthographic stimuli, the possibility of modality‐specific semantic and phonological effects could not be ruled out.

MATERIALS AND METHODS

Subjects

Informed consent was obtained from 20 right‐handed volunteers (11/9 M/F), aged between 2 and 36 years (with a mean age of 26), with English as their first language. None reported a history of neurological or psychiatric illness, or disturbances in speech comprehension, speech production, reading, or writing. The study was approved by the National Hospital for Neurology and Institute of Neurology Medical Ethics Committee.

Experimental Paradigm

Each trial consisted of a pair of successive stimuli. Each stimulus was either a black‐and‐white picture of an object or its written name, resulting in four types of pairs: word‐word, picture‐picture, word‐picture, and picture‐word. In addition, the two stimuli could be semantically related (e.g., “ROBIN‐nest”; “COW‐bull”), phonologically related (e.g., “BELL‐belt”), unrelated (e.g., “KITE‐lobster”), or semantically and phonologically identical (e.g., “FRIDGE‐fridge”). This resulted in a total of 16 experimental conditions (i.e., 4 word‐picture combinations × 4 prime‐target relationships). The trials were presented in an event‐related design in order to minimize the cognitive confounds typically associated with block designs [Josephs and Henson, 1999].
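The 4 × 4 factorial structure can be made explicit by enumerating every combination of modality pair and prime‐target relationship. The sketch below is illustrative only; the label strings are assumptions, not the study's own condition names.

```python
from itertools import product

# Factor levels taken from the design described above; the label strings
# themselves are illustrative, not the study's own variable names.
MODALITY_PAIRS = ["word-word", "picture-picture", "word-picture", "picture-word"]
RELATIONS = ["semantic", "phonological", "unrelated", "identical"]


def build_conditions():
    """Return every (modality pair, prime-target relation) combination."""
    return list(product(MODALITY_PAIRS, RELATIONS))


conditions = build_conditions()
for index, (modality, relation) in enumerate(conditions, start=1):
    print(f"{index:2d}: {modality:16s} {relation}")
```

Fully crossing the two factors is what allows modality‐general effects (pooled over all four modality pairs) to be separated from modality‐specific ones.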

Two stimuli were considered semantically related if they were meaningfully related based on semantic association (e.g., “ROBIN‐nest”) or category membership (e.g., “COW‐bull”). In contrast, two stimuli were considered phonologically related if they shared at least the first phoneme. In most cases, phonologically related items shared the first two or three phonemes and in some cases they shared the first four or five phonemes. Two stimuli were considered unrelated if they were not phonologically or semantically related and referred to different objects. Finally, semantically and phonologically identical stimuli referred to the same object but were not perceptually identical. For instance, in the case of pairs of pictures, different pictures of the same object or different exemplars were used; similarly, in the case of pairs of words, the same words printed in different fonts, letter cases, and letter sizes were used. The appendix provides the full list of phonological, semantic, unrelated, and identical pairs.
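The relatedness criteria above can be expressed as a small decision rule, which is also the kind of rule needed when a trial is reclassified from the names a subject actually produced. The following is a minimal sketch under stated assumptions: the phoneme transcriptions and the set of semantically related pairs are a hypothetical toy lexicon (not the study's materials), and checking semantic relatedness before phonological overlap is an assumed ordering that the text does not specify.

```python
# Hypothetical toy lexicon: ARPAbet-style phoneme transcriptions and a set of
# semantically related pairs. Neither comes from the study's stimulus list.
PHONEMES = {
    "bell": ["B", "EH", "L"],
    "belt": ["B", "EH", "L", "T"],
    "robin": ["R", "AA", "B", "IH", "N"],
    "nest": ["N", "EH", "S", "T"],
    "kite": ["K", "AY", "T"],
    "lobster": ["L", "AA", "B", "S", "T", "ER"],
    "crab": ["K", "R", "AE", "B"],
    "crane": ["K", "R", "EY", "N"],
}
SEMANTIC_PAIRS = {frozenset({"robin", "nest"}), frozenset({"crab", "lobster"})}


def shared_onset(a, b):
    """Number of initial phonemes shared by two words."""
    n = 0
    for x, y in zip(PHONEMES[a], PHONEMES[b]):
        if x != y:
            break
        n += 1
    return n


def classify_pair(prime, target):
    """Apply the identical / semantic / phonological / unrelated criteria."""
    if prime == target:
        return "identical"
    if frozenset({prime, target}) in SEMANTIC_PAIRS:  # assumed precedence
        return "semantic"
    if shared_onset(prime, target) >= 1:  # at least the first phoneme shared
        return "phonological"
    return "unrelated"


print(classify_pair("bell", "belt"))     # phonological
print(classify_pair("kite", "lobster"))  # unrelated
```

Operating on phonemes rather than letters matters: a letter‐based check would, for example, miss onsets shared by words with different spellings of the same sound.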

In order to avoid item‐specific effects, the same prime and target stimuli were used to create semantic, phonological, unrelated, and identical pairs across subjects. For instance, the target crab (1) followed the prime crane, thereby forming a phonological pair in a first subset of subjects; (2) followed the prime lobster, thereby forming a semantic pair in a second subset of subjects; (3) followed the prime crab, thereby forming an identical pair in a third subset of subjects; and (4) followed the prime slide, thereby forming an unrelated pair in a fourth subset of subjects. This ensured that semantic, phonological, unrelated, and identical pairs were matched for variables of no interest across subjects. The black‐and‐white pictures were taken from the Hemera Photo‐Objects Data Base photographic library; the words were created using Corel Draw software. In order to minimize error trials in the scanner, pictures that were named incorrectly by at least a third of the subjects in a pilot behavioral study were excluded from the stimulus set.
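The counterbalancing scheme can be sketched as a Latin‐square rotation in which each target is paired with a different prime in each subject subset. The crab example is taken from the text; the rotation rule `(i + subset) % 4` is an assumption used for illustration, since the exact assignment procedure is not described.

```python
RELATIONS = ["phonological", "semantic", "identical", "unrelated"]

# Prime options per target, keyed by relation (crab example from the text).
PRIMES = {
    "crab": {
        "phonological": "crane",
        "semantic": "lobster",
        "identical": "crab",
        "unrelated": "slide",
    },
}


def assign_pairs(targets, subset):
    """Rotate relations across subject subsets (assumed Latin-square rule).

    Target i in subject subset s is paired with the prime for relation
    RELATIONS[(i + s) % 4], so across the four subsets every target
    appears once in every relation.
    """
    pairs = []
    for i, target in enumerate(targets):
        relation = RELATIONS[(i + subset) % len(RELATIONS)]
        pairs.append((PRIMES[target][relation], target, relation))
    return pairs


for s in range(4):
    print(s, assign_pairs(["crab"], s))
```

Because each target contributes equally to all four relations, item properties such as frequency or visual complexity cancel out when relations are compared at the group level.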

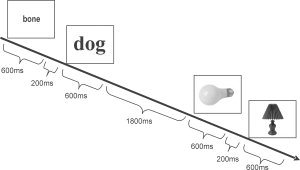

The data were acquired in two separate sessions, each including 200 trials (either 12 or 13 for each of the 16 experimental conditions) plus 100 null events, which consisted of a fixation cross. The exact number of trials within each condition (i.e., 12 or 13) was counterbalanced across subjects. The same prime‐target relationships were used in the two sessions; however, objects presented as words in the first session were presented as pictures in the second session, whereas objects presented as pictures in the first session were presented as words in the second session. The first stimulus was presented for 600 ms, followed by a fixation cross for 200 ms; the second stimulus was then presented for 600 ms, followed by a fixation cross for 1,800 ms. This resulted in an intertrial interval of 3,200 ms (Fig. 1). Perceptual priming for words was minimized by using different fonts (i.e., Arial, Comic Sans, Times New Roman, Verdana), different letter cases, and different letter sizes. Perceptual priming for pictures was minimized by presenting pictures of objects with different sizes and in different views. The task required subjects to read/name all words/pictures overtly as soon as they appeared on the screen. Subjects were instructed to whisper to minimize jaw and head movements in the scanner. The subjects' verbal responses were recorded by means of an air tube whose open end was placed close to the mouth. The tube was led out of the scanner room and attached to a low‐noise wide‐dynamic‐range microphone. The microphone signal was digitized and the repetitive scanner sound subtracted in real time, allowing for online monitoring. The dynamic range of the microphone and digitization was sufficient that, after subtraction of the large scanner component, the relatively small voice signal remained adequately intelligible.
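The timing and trial counts can be checked with simple arithmetic: the four event durations sum to the 3,200 ms intertrial interval, and spreading 200 trials as evenly as possible over 16 conditions gives 8 conditions with 12 trials and 8 with 13. The function names below are illustrative.

```python
# Event durations in ms; the 1,800 ms final fixation follows Figure 1.
PRIME_MS = 600
FIX1_MS = 200
TARGET_MS = 600
FIX2_MS = 1800


def intertrial_interval_ms():
    """Onset-to-onset interval between successive trials."""
    return PRIME_MS + FIX1_MS + TARGET_MS + FIX2_MS


def prime_target_soa_ms():
    """Stimulus onset asynchrony between prime and target within a trial."""
    return PRIME_MS + FIX1_MS


def split_trials(total_trials, n_conditions):
    """Spread trials as evenly as possible: {trials per condition: n conditions}."""
    base, extra = divmod(total_trials, n_conditions)
    return {base: n_conditions - extra, base + 1: extra}


print(intertrial_interval_ms())  # 3200
print(prime_target_soa_ms())     # 800
print(split_trials(200, 16))     # {12: 8, 13: 8}
```

The 800 ms prime‐target onset asynchrony is short relative to the hemodynamic response, which is one reason only the target onsets are modeled in the analysis.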

Figure 1.

Temporal parameters of stimulus presentation. The first stimulus was presented for 600 ms, followed by a fixation cross for 200 ms; the second stimulus was then presented for 600 ms, followed by a fixation cross for 1,800 ms. This resulted in an intertrial interval of 3,200 ms.

Scanning Technique

For each subject, a Siemens 3T scanner was used to acquire T2*‐weighted echoplanar images with BOLD contrast and an effective repetition time (TR) of 2.275 s. Each echoplanar image comprised 35 axial slices of 2 mm thickness with 1‐mm slice interval and 3 × 3 mm in‐plane resolution. A total of 836 volumes were acquired in two separate runs and the first six (dummy) images of each run were discarded to allow for T1 equilibration effects. After the two functional runs, a T1‐weighted anatomical volume (1 × 1 × 1.5 mm voxels) was also acquired.
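A quick check of the acquisition parameters follows. It assumes the 836 volumes were split equally between the two runs and include the dummy scans, and it reads the "1‐mm slice interval" as an interslice gap; the text does not state either point explicitly.

```python
TR_S = 2.275          # effective repetition time (s)
TOTAL_VOLUMES = 836   # across both runs
N_RUNS = 2
N_DUMMIES = 6         # discarded at the start of each run
N_SLICES = 35
SLICE_MM = 2.0
GAP_MM = 1.0          # assumption: "1-mm slice interval" read as an interslice gap


def run_summary():
    """Volumes acquired and analysed per run, and run duration in seconds."""
    acquired = TOTAL_VOLUMES // N_RUNS  # assumes equal-length runs
    analysed = acquired - N_DUMMIES
    duration_s = acquired * TR_S
    return acquired, analysed, duration_s


def slab_coverage_mm():
    """Superior-inferior extent covered by the slice stack."""
    return N_SLICES * SLICE_MM + (N_SLICES - 1) * GAP_MM


acquired, analysed, duration_s = run_summary()
print(acquired, analysed, f"{duration_s:.2f}")  # 418 412 950.95
print(slab_coverage_mm())                        # 104.0
```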

Statistical Parametric Mapping

Behavioral measures were quantified and compared between groups using factorial analyses of variance. Functional imaging data were analyzed using statistical parametric mapping as implemented in SPM2 software (Wellcome Department of Imaging Neuroscience, London, United Kingdom). All volumes from each subject were realigned using the first as reference and resliced with sinc interpolation. The functional images were spatially normalized [Friston et al., 1995a] to a standard MNI‐305 template using a total of 1,323 nonlinear‐basis functions. Functional data were spatially smoothed with a 6 mm full width at half maximum isotropic Gaussian kernel to compensate for residual variability in functional anatomy after spatial normalization and to permit application of Gaussian random field theory for adjusted statistical inference.
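Smoothing kernels are specified by their full width at half maximum (FWHM), which relates to the Gaussian standard deviation by FWHM = 2√(2 ln 2)·σ ≈ 2.355σ, so the 6 mm kernel has σ ≈ 2.55 mm. The sketch below is a generic separable Gaussian, not SPM's own smoothing routine, and the 2 mm voxel size in the usage line is a hypothetical value.

```python
import math


def fwhm_to_sigma(fwhm_mm):
    """Convert a Gaussian full width at half maximum to its standard deviation."""
    return fwhm_mm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def gaussian_kernel_1d(fwhm_mm, voxel_mm, radius_sd=3.0):
    """Normalized 1-D Gaussian weights on a voxel grid, truncated at radius_sd SDs.

    An isotropic 3-D kernel is the outer product of this kernel along x, y,
    and z, so smoothing can be applied one axis at a time.
    """
    sigma_vox = fwhm_to_sigma(fwhm_mm) / voxel_mm
    half_width = int(math.ceil(radius_sd * sigma_vox))
    weights = [
        math.exp(-0.5 * (i / sigma_vox) ** 2)
        for i in range(-half_width, half_width + 1)
    ]
    total = sum(weights)
    return [w / total for w in weights]


print(round(fwhm_to_sigma(6.0), 3))       # 2.548
print(len(gaussian_kernel_1d(6.0, 2.0)))  # 9 taps for hypothetical 2 mm voxels
```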

First, the statistical analysis was performed for each subject independently. To remove low‐frequency drifts, the data were high‐pass‐filtered using a set of discrete cosine basis functions with a cutoff period of 128 s. Each trial was assigned to a specific experimental condition in a subject‐specific fashion, after listening to the vocal responses recorded during the acquisition of the data. For instance, when the subject produced a vocal response (e.g., “tiger‐lemon”) that did not match the predicted response (e.g., “leopard‐lemon”), the trial was reassigned accordingly (e.g., from the phonologically related to the unrelated condition). Trials in which the subject did not produce any vocal response for either one or both of the stimuli within a pair were modeled as errors and excluded from the statistical comparisons. Each experimental condition was then modeled independently by convolving the onset times of the target stimuli with a synthetic hemodynamic response function (HRF) without dispersion or temporal derivatives. The choice to model the target but not the prime was motivated by our hypothesis that neuronal responses to the target stimuli would differ as a function of the prime‐target relationship. The parameter estimates were calculated for all brain voxels using the general linear model, and contrast images comparing each condition against fixation (i.e., the baseline) were computed [Friston et al., 1995b]. Second, the subject‐specific contrast images were entered into an ANOVA to permit inferences at the population level [Holmes and Friston, 1998]. This allowed us to identify the brain areas that responded during task performance relative to the baseline. In addition, it allowed us to test for the differential effects of semantically related, phonologically related, and unrelated pairs, and for the dependency of these effects on the orthographic or pictorial nature of the stimuli.
The t‐images for each contrast at the second level were subsequently transformed into statistical parametric maps of the Z‐statistic. Unless otherwise indicated, we report and discuss regions that showed significant effects at P < 0.05 (corrected for multiple comparisons across the whole brain using either a height or an extent threshold).
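The first‐level model described above can be sketched for a single voxel time series. This is a simplified illustration, not the SPM2 implementation: the HRF is a double‐gamma function with gamma shapes 6 and 16 and a 1/6 undershoot ratio (values commonly used to approximate SPM's canonical HRF), onsets are rounded to the nearest scan rather than sampled at finer resolution, and the drift basis follows the usual SPM‐style count of discrete cosine terms for a 128 s cutoff. Including the cosine terms as nuisance regressors in an ordinary least‐squares fit gives the same condition estimates as filtering both data and design first. Error trials could be passed in as one extra onset list so that they are modeled but not contrasted.

```python
from math import gamma as gamma_fn

import numpy as np


def double_gamma_hrf(tr, duration_s=32.0):
    """Double-gamma HRF sampled every TR (shapes 6 and 16, undershoot ratio 1/6)."""
    t = np.arange(0.0, duration_s, tr)

    def gamma_pdf(x, shape):
        # Gamma density with unit scale.
        return x ** (shape - 1) * np.exp(-x) / gamma_fn(shape)

    hrf = gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0
    return hrf / hrf.sum()


def condition_regressor(onsets_s, n_scans, tr):
    """Convolve target onsets (stick functions at scan resolution) with the HRF."""
    sticks = np.zeros(n_scans)
    for onset in onsets_s:
        idx = int(round(onset / tr))
        if 0 <= idx < n_scans:
            sticks[idx] += 1.0
    return np.convolve(sticks, double_gamma_hrf(tr))[:n_scans]


def dct_basis(n_scans, tr, cutoff_s=128.0):
    """Discrete cosine drift regressors with periods roughly longer than cutoff_s."""
    order = int(np.floor(2.0 * n_scans * tr / cutoff_s + 1.0))
    t = np.arange(n_scans)
    cols = [np.cos(np.pi * k * (2 * t + 1) / (2 * n_scans)) for k in range(1, order)]
    return np.column_stack(cols) if cols else np.empty((n_scans, 0))


def fit_glm(y, onsets_by_condition, tr, cutoff_s=128.0):
    """OLS fit of one regressor per condition plus drift terms and a constant.

    Returns the condition betas (effects relative to the implicit fixation
    baseline) and the full design matrix.
    """
    n_scans = len(y)
    task = np.column_stack(
        [condition_regressor(onsets, n_scans, tr) for onsets in onsets_by_condition]
    )
    design = np.column_stack(
        [task, dct_basis(n_scans, tr, cutoff_s), np.ones(n_scans)]
    )
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    return beta[: task.shape[1]], design
```

Because fixation (including null events) is not modeled explicitly, each condition beta already expresses that condition relative to the fixation baseline, which is what the subject‐specific contrast images carry to the second level.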

RESULTS

Behavioral Data

Vocal responses for both word reading and picture naming were recorded during fMRI scanning. Trials that elicited unpredicted vocal responses (e.g., “tiger‐lemon” instead of “leopard‐lemon”) were reclassified accordingly (e.g., from the phonologically related to the unrelated condition). For pairs composed of two words, no trials were reassigned based on the vocal responses of the subjects. For pairs composed of either a picture and a word or two pictures, a limited number of trials were reassigned from the phonological to the unrelated condition (29%), from the semantic to the identical condition (21%), or from the semantic to the unrelated condition (4%).

A trial was classified as an error if the subject did not produce any vocal response for either one or both of the stimuli within a pair. For trials composed of words only, errors were negligible (i.e., 0.2%). For trials that also included pictures, there was a greater proportion of errors (i.e., 5.15%). The difference between the number of errors during reading and naming was significant, as revealed by a two‐sample t‐test (P < 0.001). Finally, error rates did not differ significantly between semantically related, phonologically related, and unrelated pairs (ANOVA, P = 0.714).

Functional Imaging Data

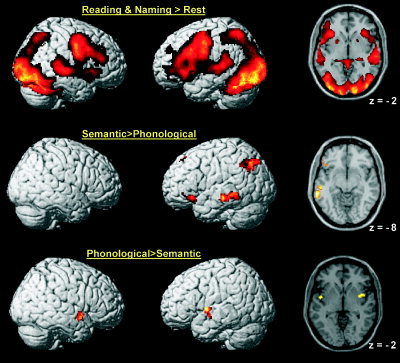

First, we report the areas that were activated by reading words and naming pictures relative to the baseline. This revealed increased neuronal responses in a distributed bilateral network that included striate and extrastriate occipital cortex, superior parietal cortex, superior temporal cortex, and ventral and dorsal inferior frontal cortex (see top row of Fig. 2). From this comparison alone, we were unable to dissociate sensorimotor effects (visual input and motor response) from higher‐order language areas. Nevertheless, the distributed pattern of activation we observed for reading and picture naming relative to fixation was broadly consistent with previous studies of word reading and picture naming [Turkeltaub et al., 2002; Price and Mechelli, 2005].

Figure 2.

Brain areas that expressed significant effects at P < 0.05 (corrected). Top row: brain areas activated by reading and naming relative to fixation. Middle row: brain areas activated by semantically related more than phonologically related pairs. Bottom row: brain areas activated by phonologically related more than semantically related pairs. [Color figure can be viewed in the online issue, which is available at www.interscience.wiley.com.]

We now report the areas that were modulated by the relationship between prime and target. The effects of semantic and phonological relatedness were identified by directly contrasting semantically related against phonologically related pairs. In addition, in order to better characterize neuronal responses in the regions identified by this comparison, we contrasted semantic and phonological conditions against the unrelated condition. Greater activation for semantically relative to phonologically related pairs was found in a left‐lateralized network, including the pars orbitalis of the inferior frontal gyrus, the middle temporal gyrus, the angular gyrus, and the superior frontal gyrus (Figs. 2 and 3, Table I). These effects were associated with increased activity for semantically related than unrelated pairs rather than decreased activity for phonologically related than unrelated pairs. Thus, they can be explained in terms of enhancement for semantically related pairs as opposed to suppression for phonologically related pairs. These effects were replicated for word‐word, picture‐picture, word‐picture, and picture‐word combinations and were therefore independent of stimulus modality. Effects specific to either orthographic (i.e., word‐word) or pictorial (i.e., picture‐picture) pairs were not detected even when lowering the statistical threshold to P < 0.001 (uncorrected).
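At the second level, the comparisons described above correspond to weight vectors over the 16 condition images. The sketch below builds a pooled "semantic > phonological" contrast and a word‐word versus picture‐picture interaction that would capture modality‐specific effects. The column ordering is an assumption for illustration; the labels follow the Figure 3 abbreviations.

```python
from itertools import product

import numpy as np

# Condition labels follow the Figure 3 abbreviations.
MODALITIES = ["WW", "PP", "WP", "PW"]
RELATIONS = ["s", "p", "i", "u"]
CONDITIONS = list(product(MODALITIES, RELATIONS))  # 16 columns, modality-major


def relation_contrast(plus, minus):
    """Weights for 'plus > minus' pooled over all four modality combinations."""
    return np.array(
        [(r == plus) - (r == minus) for _, r in CONDITIONS], dtype=float
    )


def modality_interaction(plus, minus, mod_a="WW", mod_b="PP"):
    """Weights testing whether 'plus > minus' differs between two modality pairs."""
    weights = [
        ((r == plus) - (r == minus)) * ((m == mod_a) - (m == mod_b))
        for m, r in CONDITIONS
    ]
    return np.array(weights, dtype=float)


sem_vs_phon = relation_contrast("s", "p")
sem_vs_phon_by_modality = modality_interaction("s", "p")
print(sem_vs_phon.reshape(4, 4))  # rows: WW, PP, WP, PW; columns: s, p, i, u
```

Both vectors sum to zero and are mutually orthogonal, so the pooled effect and its modality dependence are tested independently.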

Figure 3.

Parameter estimates (averaged across subjects) for each experimental condition in those regions identified by the comparison between semantic and phonological pairs (Table I). Vertical bars indicate standard errors. WW, word‐word; PP, picture‐picture; WP, word‐picture; PW, picture‐word; s, semantically related; p, phonologically related; i, conceptually identical; u, unrelated. [Color figure can be viewed in the online issue, which is available at www.interscience.wiley.com.]

Table I.

Areas that expressed differential activation for semantically and phonologically related pairs

| Region | Coordinates x, y, z | Semantic > phonological | Semantic > unrelated | Semantic > identical | Phonological > unrelated | Phonological > identical |
|---|---|---|---|---|---|---|
| Semantic effects | | | | | | |
| Left middle temporal | −66, −38, −8 | 5.9 | 5.4 | 1.7 | NS | NS |
| | −56, −24, −10 | 5.2 | 3.6 | 1.8 | NS | NS |
| Left angular gyrus | −32, −72, 44 | 4.7 | 4.5 | 3.0 | NS | NS |
| | −58, −52, 40 | 4.4 | 3.7 | NS | NS | NS |
| Superior frontal gyrus | 2, 30, 40 | 4.6 | 2.8 | 3.3 | NS | NS |
| | −6, 18, 44 | 4.4 | NS | 2.5 | NS | NS |
| Left inferior frontal (pars orbitalis) | −46, 24, −14 | 4.4 | 2.7 | 2.6 | NS | NS |
| | −52, 38, −6 | 3.4 | 1.4 | 3.1 | NS | NS |
| Phonological effects | Coordinates x, y, z | Phonological > semantic | Phonological > unrelated | Phonological > identical | Semantic > unrelated | Semantic > identical |
| Left insula | −44, 0, 2 | 4.8 | 3.5 | 2.5 | NS | NS |
| Right insula | 38, 2, −4 | 4.8 | 3.1 | 3.6 | NS | NS |
| | 46, 4, −12 | 4.6 | 3.4 | 3.2 | NS | NS |

P < 0.05 (corrected). Semantic > phonological: regions with greater activation for semantically than phonologically related pairs. Phonological > semantic: regions with greater activation for phonologically than semantically related pairs. Z‐scores for comparisons with unrelated and identical pairs are also reported. NS, not significant at P < 0.1 (uncorrected).

Greater activation for phonologically relative to semantically related pairs was found in left and right insula (Figs. 2 and 3, Table I). These effects were associated with increased activity for phonologically related compared to unrelated pairs rather than decreased activity for semantically related compared to unrelated pairs. Thus, they can be explained in terms of enhancement for phonologically related pairs rather than suppression for semantically related pairs. In addition, the left superior occipital gyrus expressed greater activation for phonologically than semantically related pairs, but this effect was associated with decreased activity for semantically related relative to unrelated pairs rather than increased activity for phonologically related relative to unrelated pairs. Thus, it can be explained in terms of suppression for semantically related pairs as opposed to enhancement for phonologically related pairs. These effects were detected irrespective of the orthographic or pictorial nature of the stimuli. Effects specific to the orthographic or pictorial modality were not found even when lowering the statistical threshold to P < 0.001 (uncorrected).

For completeness, we report the comparisons between related and identical pairs in regions that expressed a significant modulation by semantic or phonological relatedness (Table I). However, these comparisons are not straightforward to interpret: identical pairs are diametrically opposed to unrelated pairs, yet they cannot simply be considered both phonologically and semantically related. In fact, identity and relatedness are likely to elicit distinct neuronal and cognitive processes.

DISCUSSION

The aim of the present study was to dissociate the neural correlates of semantic and phonological processes during word reading and picture naming. Previous studies have addressed this issue by contrasting tasks involving semantic and phonological decisions. In order to avoid the potential confounds associated with task manipulation, we identified semantic and phonological areas by manipulating the semantic and phonological relationship between successive stimuli. We report that semantically related pairs modulate neuronal responses in a left‐lateralized network, including the pars orbitalis of the inferior frontal gyrus, the middle temporal gyrus, the angular gyrus, and the superior frontal gyrus. These regions expressed strong increases for semantic relative to phonological pairs but also positive trends for semantic relative to unrelated pairs (Table I). In contrast, phonologically related pairs modulate neuronal responses in the left and right insula. These regions expressed increases for phonological relative to semantic pairs but also positive trends for phonological relative to unrelated pairs (Table I). Critically, these effects were consistently detected with words and pictures and there were no modality‐specific changes.

The areas modulated by the semantic relationship between stimuli have been implicated in semantic processing by previous studies using task manipulation. For instance, the pars orbitalis of the inferior frontal gyrus responds to tasks that require decisions about the meaning of written words [Fiez, 1997; Dapretto and Bookheimer, 1999; Poldrack et al., 1999; Devlin et al., 2003; McDermott et al., 2003]. The middle temporal gyrus is activated by semantic decisions on both auditory [Noppeney and Price, 2002] and written [McDermott et al., 2003] words. The angular gyrus responds to semantic relative to phonological tasks [Demonet et al., 1992; Mummery et al., 1998] and has been implicated in both written and spoken word comprehension by neuropsychological studies [Dejerine, 1892; Geschwind, 1965; Hart and Gordon, 1990]. Finally, the superior frontal gyrus is activated in tasks that require semantic decisions on words relative to tasks that require the perceptual analysis of nonlinguistic stimuli [Binder et al., 1997]. On the basis of our findings, we propose that these areas are involved in stimulus‐driven semantic processes.

In contrast with our prediction, we did not find semantic effects in the anterior temporal pole, which has been associated with semantic processing by several neuropsychological studies [Hodges et al., 1992, 2000; Bozeat et al., 2000; Kensinger et al., 2003]. This null result can be explained by either limited sensitivity in the anterior temporal pole due to susceptibility artifacts [Devlin et al., 2000] or, alternatively, the involvement of this region in task‐related retrieval strategies or other aspects of semantic processing that were not affected by our manipulation. We also note that activation in the anterior temporal pole was not detected for reading and naming relative to fixation, even when we lowered the statistical threshold to P < 0.001 (uncorrected). This is consistent with a recent report that this region activates during picture naming when a high‐level baseline is used rather than fixation [Price et al., 2005].

The left and right insula were modulated by the phonological relationship between stimuli. The left insula is typically damaged in patients with apraxia of speech, a disorder in programming the speech musculature to produce the correct sounds of words [Dronkers, 1996]. Furthermore, this region shows hypometabolism [Nestor et al., 2003] and atrophy [Gorno‐Tempini et al., 2004] in patients with nonfluent aphasia, a syndrome in which the ability to communicate fluently is lost in the context of preserved comprehension. Several other studies have implicated the left insula in articulatory planning of speech [Wise et al., 1999; Blank et al., 2002] and speech motor control [Riecker et al., 2000; Ackermann and Riecker, 2004]. In contrast, the right insula has been associated with the control of prosodic aspects of speech [Ackermann and Riecker, 2004]. Furthermore, this region is thought to be involved in the temporospatial control of vocal tract musculature during overt singing [Riecker et al., 2000]. It is likely that both the left and right insula include distinct focal regions that differentially contribute to different aspects of speech production, such as planning and coordination, as well as other linguistic and nonlinguistic responses [e.g., see Singer et al., 2004]. However, it is unclear whether the above studies examined the same or distinct anatomical regions, because findings were typically localized only coarsely and stereotactic coordinates were seldom reported. In the present study, we identify a region in the middle of the insula that is sensitive to the phonological relationship between stimuli. We interpret this modulation in terms of increased demands on the discrimination between similar phonological or articulatory codes. For example, when the pair “BELL‐belt” is presented, the second item will evoke phonological and articulatory codes that are similar to those evoked by the first item.
Successful naming of the second item will therefore require the discrimination between similar competing codes. In contrast, when a pair such as “TABLE‐chair” is presented, the second item is likely to evoke phonological and articulatory codes that are different from those evoked by the first item. As a result, successful naming of the second item will be less dependent on the discrimination between similar competing codes. The results in the bilateral insula may also be affected by the presence of identical pairs in our experimental paradigm. These may have engaged a tendency to repeat, which had to be counteracted for phonologically related pairs. The presence of identical pairs may have had a smaller effect on semantically related pairs that evoked clearly distinct phonological and articulatory codes.

An important feature of the present investigation is that we used both orthographic and pictorial stimuli. Previous studies compared word reading and picture naming directly in order to identify areas that respond more to orthographic than pictorial stimuli [Bookheimer et al., 1995; Moore and Price, 1999; Price et al., 2006]. These investigations were motivated by cognitive models that typically include reading‐specific functions such as graphemic, orthographic, sublexical, and visual word form processing [Marshall and Newcombe, 1973; Patterson and Shewell, 1987; Coltheart et al., 1993]. Here we did not examine reading‐ or naming‐specific functions by directly comparing the two tasks. Rather, we investigated whether semantic and phonological processes respectively engage the same sets of areas during reading and naming by manipulating the semantic and phonological relationships between items. Reading‐ or naming‐specific effects were not detected even when lowering the statistical threshold to P < 0.001 (uncorrected). Therefore, our results suggest that the same sets of areas are modulated by semantic and phonological demands during word reading and picture naming. In other words, reading and naming rely on “shared” semantic and phonological systems, as previously concluded on the basis of neuropsychological studies [Lambon Ralph et al., 1999].

Finally, we note that our experimental paradigm can also be understood in terms of semantic and phonological priming [Schacter and Buckner, 1998; Henson, 2003]. For instance, in the case of semantically related pairs, the first stimulus is expected to modulate the response to the second stimulus in semantic areas. Likewise, in the case of phonologically related pairs, the first stimulus is expected to modulate the response to the second stimulus in phonological areas. However, semantic priming studies typically report decreases in activation as the presentation of an item or some feature is repeated over time [e.g., Wagner et al., 1997, 2000; Buckner et al., 1998; Mummery et al., 1999; Koutstaal et al., 2001; Kotz et al., 2002; Copland et al., 2003; Rissman et al., 2003; Rossell et al., 2003]. The effects we find, on the other hand, are driven by increases relative to the baseline condition, which consisted of unrelated pairs. How can this apparent inconsistency be explained? There are potentially important differences between our study and previous investigations, which may have contributed to the discrepancy between the increases found here and the decreases reported elsewhere. First, we identified semantic areas by manipulating the semantic relationship between stimuli, whereas previous investigations characterized semantic priming in terms of repeated relative to initial semantic processing of exactly the same stimuli [e.g., Wagner et al., 1997, 2000; Buckner et al., 1998; Koutstaal et al., 2001]. Second, in our experiment, subjects were asked to read/name both first and second stimuli; this allowed us to establish whether phonological or semantic priming had occurred on a trial‐by‐trial basis from the vocal responses of the subjects.
The few studies that manipulated the semantic relationship between words, on the other hand, required the subjects to ignore the prime and used a lexical decision task [Kotz et al., 2002; Copland et al., 2003; Rissman et al., 2003]. Thus, ours is the only study that manipulated the semantic relationship between stimuli and required subjects to read/name both primes and targets. It has also been proposed that regions that show repetition suppression are those that subserve a process that occurs for both primed and unprimed stimuli, whereas regions that show repetition enhancement are likely to be involved in a process that occurs on primed but not unprimed stimuli [Henson, 2003]. In our experiment, the additional process evoked by semantically related pairs was the meaningful association between the first and second stimulus. Likewise, the additional process evoked by phonologically related pairs was the discrimination between similar phonological or articulatory codes.

In the present study, we assumed that semantic and phonological relatedness would modulate neuronal responses in areas implicated in semantic and phonological processes, respectively. This approach has advantages and disadvantages that need to be taken into account when interpreting our findings. As discussed above, semantic and phonological decision tasks are associated with differential executive processes that are not required for reading and naming per se. By manipulating the semantic and phonological relatedness of the items while keeping the task constant, we were able to minimize the strategy and working memory confounds associated with task manipulation. However, semantic and phonological effects could still reflect differences in strategic and executive processes generated by the stimuli. In other words, our findings must be explained in terms of processes that depend on the relationship between successive stimuli, rather than in terms of differences in the task performed on these stimuli. Another important aspect of our paradigm is the use of both orthographic and pictorial stimuli. This allowed us to test for effects that were common to the two modalities as well as effects that were specific to either word reading or picture naming. In contrast, as discussed above, previous studies comparing semantic and phonological decision tasks have typically used only orthographic stimuli.

We now turn to the limitations of our approach. First, the regions that we reported for phonological and semantic priming are only a subset of the regions activated by reading and picture naming relative to fixation (Fig. 2). It is important to acknowledge that our manipulations did not identify all areas that contribute to semantic and phonological processing, but only those that are sensitive to the relationship between successive items during reading and naming. For instance, the anterior temporal pole was not modulated by the semantic relationship between items despite the well‐documented involvement of this region in conceptual knowledge [Hodges et al., 1992; Kensinger et al., 2003]. Second, the phonological similarity between prime and target was sometimes limited, particularly for items that shared only the first phoneme. Likewise, the strength of the semantic association varied across trials, with some items more obviously associated than others. The limited phonological or semantic relatedness of prime and target in some trials may have reduced the sensitivity of our experimental paradigm. A third limitation relates to the specificity of the phonological effects that we report in the bilateral insula. The present study cannot establish whether these effects are specific to phonological and articulatory demands or, rather, reflect a more general mechanism. For instance, activations in bilateral insula might be related to the avoidance of repeating the same word twice, which is more prominent for phonological than for semantic pairs. A fourth limitation is that reaction times of vocal responses could not be measured during scanning because of technical constraints. Behavioral studies indicate that reaction times were most likely longer for pictures than for words [Glaser and Glaser, 1989]. In particular, an interval of 800 ms between words was likely to allow the subject enough time to read the first word before the second word was presented. In contrast, with an interval of 800 ms between pictures, the vocal response to the first picture was likely to be produced after the second picture had been presented. This possible discrepancy did not appear to affect our results, which were highly consistent for words and pictures. A recent behavioral study conducted outside the scanner has confirmed that, consistent with the neural effects reported here, semantic and phonological primes also interfere at the behavioral level: response times to picture targets after semantic and phonological primes were longer than after unrelated primes (unpublished data).

In conclusion, the present study has identified a left‐lateralized network (including the pars orbitalis of the inferior frontal gyrus, the middle temporal gyrus, the angular gyrus, and the superior frontal gyrus) that is sensitive to stimulus‐driven semantic processing irrespective of the orthographic or pictorial nature of the stimuli. Conversely, a medial region within the insular complex is implicated in the discrimination between similar, competing phonological and articulatory codes for both words and pictures. This modality‐independent double dissociation supports the idea that reading and naming rely on “shared” semantic and phonological systems.

Acknowledgements

The authors thank Karalyn Patterson and Melanie Vitkovitch for helpful comments on previous drafts of the manuscript.

Table.

| Target | Phonological prime | Semantic prime | Identical prime | Unrelated prime |
|---|---|---|---|---|
| Accordion | Axe | Harmonica | Accordion | Butterfly |
| Ambulance | Amplifier | Fire engine | Ambulance | Bagel |
| Ant | Anchor | Wasp | Ant | Bagpipe |
| Apple | Apricot | Pear | Apple | Bath |
| Ashtray | Asparagus | Cigarette | Ashtray | Ruler |
| Baboon | Balloon | Gorilla | Baboon | Tent |
| Bag | Bagpipe | Rucksack | Bag | Lantern |
| Badger | Banana | Mole | Badger | Tea pot |
| Baby | Bagel | Cot | Baby | Suitcase |
| Bamboo | Ballet shoe | Panda | Bamboo | Lizard |
| Basin | Bacon | Shower | Basin | Spider |
| Boar | Ball | Pig | Boar | Stapler |
| Barbecue | Barrel | Sausages | Barbecue | Tie |
| Basket | Basketball | Barrel | Basket | Kangaroo |
| Battery | Bicycle | Torch | Battery | Table |
| Bra | Brick | Pants | Bra | Watch |
| Bed | Bottle | Pillow | Bed | Canoe |
| Bell | Belt | Whistle | Bell | Leopard |
| Bin | Binoculars | Dustpan | Bin | Cockerel |
| Bikini | Bib | Swim‐suit | Bikini | Mug |
| Buggy | Bath | Pram | Buggy | Fox |
| Boat | Boot | Canoe | Boat | Mole |
| Bolt | Bomb | Screw | Bolt | Rucksack |
| Bowl | Bone | Dish | Bowl | Cot |
| Broccoli | Brain | Cauliflower | Broccoli | Spanner |
| Broom | Bracelet | Mop | Broom | Harmonica |
| Bread | Bench | Cheese | Bread | Pillow |
| Bucket | Buckle | Spade | Bucket | Whistle |
| Bull | Bullet | Cow | Bull | Swim‐suit |
| Bus | Bulb | Coach | Bus | Sausages |
| Button | Butterfly | Zip | Button | Fire engine |
| Briefcase | Bottle opener | Suitcase | Briefcase | Glass |
| Kettle | Ketchup | Tea pot | Kettle | Dish |
| Keyboard | Kiwi | Computer | Keyboard | Snake |
| Kilt | Key | Bagpipe | Kilt | Brain |
| Cake | Cane | Bagel | Cake | Beaver |
| Calculator | Canary | Ruler | Calculator | Pear |
| Camera | Camel | Tripod | Camera | Pram |
| Cannon | Canoe | Bomb | Cannon | Mop |
| Candle | Kangaroo | Lantern | Candle | Gorilla |
| Caravan | Carrot | Tent | Caravan | Palm tree |
| Caterpillar | Cat | Butterfly | Caterpillar | Screw |
| Sellotape | Celery | Stapler | Sellotape | Whale |
| Chair | Chain | Table | Chair | Ball |
| Chips | Church | Ketchup | Chips | Lion |
| Clamp | Clarinet | Spanner | Clamp | Aubergine |
| Chicken | Cheese | Cockerel | Chicken | Dustpan |
| Clock | Clown | Watch | Clock | Zip |
| Coconut | Coat | Palm tree | Coconut | Skirt |
| Climbing frame | Clothes peg | Slide | Climbing frame | Goose |
| Coffee maker | Cockerel | Mug | Coffee maker | Hoover |
| Coffin | Coins | Skull | Coffin | Pie |
| Kite | Cow | Ball | Kite | Lobster |
| Cup | Curtains | Glass | Cup | Letter opener |
| Collar | Computer | Tie | Collar | Barrel |
| Cookie | Cushion | Pie | Cookie | Torch |
| Courgette | Cot | Aubergine | Courgette | Pants |
| Cork | Corn | Bottle | Cork | Teddy bear |
| Corkscrew | Cauliflower | Bottle opener | Corkscrew | CD |
| Crab | Crane | Lobster | Crab | Slide |
| Cradle | Crayon | Teddy bear | Cradle | Sword |
| Crisps | Crocodile | Peanuts | Crisps | Mailbox |
| Diskette | Dish | CD | Diskette | Peanuts |
| Dagger | Dragon | Sword | Dagger | Garlic |
| Dice | Diamond | Playing cards | Dice | Bottle |
| Dolphin | Doll | Whale | Dolphin | Playing cards |
| Dog | Donut | Bone | Dog | Mitten |
| Donkey | Door | Horse | Donkey | Knife |
| Dress | Drainer | Skirt | Dress | Oven |
| Drill | Drums | Screwdriver | Drill | Fence |
| Duck | Dustpan | Goose | Duck | Glider |
| Duster | Dummy | Hoover | Duster | Frog |
| Earring | Eagle | Diamond | Earring | Shell |
| Egg | Elephant | Bacon | Egg | Bottle opener |
| Envelope | Extinguisher | Letter opener | Envelope | Radiator |
| Easel | Ear | Palette | Easel | Trumpet |
| Feather | Fence | Ostrich | Feather | Drums |
| Fire | Foot | Extinguisher | Fire | Ostrich |
| Frying pan | Fire engine | Wooden spoon | Frying pan | Giraffe |
| Flamingo | Flag | Swan | Flamingo | Screwdriver |
| Flute | Flake | Trombone | Flute | Extinguisher |
| Fly | Flower | Mosquito | Fly | Wooden spoon |
| Fork | Fox | Knife | Fork | Vase |
| Fridge | Frog | Oven | Fridge | Bulb |
| Gate | Garlic | Fence | Gate | Swan |
| Ginger | Giraffe | Garlic | Ginger | Bone |
| Glove | Glass | Mitten | Glove | Horse |
| Glasses | Glider | Eyes | Glasses | Flake |
| Guitar | Goose | Drums | Guitar | Starfish |
| Goggles | Gorilla | Glider | Goggles | Cow |
| Grater | Grapes | Drainer | Grater | Diamond |
| Gun | Goat | Bullet | Gun | Bacon |
| Hanger | Hammer | Clothes peg | Hanger | Trombone |
| Handle | Hoover | Door | Handle | Mosquito |
| Hat | Handbag | Coat | Hat | Palette |
| Hair brush | Harmonica | Toothbrush | Hair brush | Flower |
| Hedgehog | Hedge | Beaver | Hedgehog | Orange |
| Helicopter | Helmet | Plane | Helicopter | Cucumber |
| Hook | Hoof | Rope | Hook | Mascara |
| House | Hair dryer | Radiator | House | Pipe |
| Horn | Horse | Trumpet | Horn | Spade |
| Ice‐cream | Eyes | Flake | Ice‐cream | Bullet |
| Jellyfish | Jacket | Starfish | Jellyfish | Clothes peg |
| Jug | Juicer | Vase | Jug | Door |
| Lamp | Lamb | Bulb | Lamp | Chain |
| Leaf | Leek | Flower | Leaf | Sticks |
| Lemon | Leopard | Orange | Lemon | Tambourine |
| Ladybird | Ladle | Frog | Ladybird | Coat |
| Lettuce | Letter opener | Cucumber | Lettuce | Toothbrush |
| Lighter | Lion | Pipe | Lighter | Pig |
| Lipstick | Lizard | Mascara | Lipstick | Church |
| Lock | Lantern | Chain | Lock | Ketchup |
| Log | Lobster | Sticks | Log | Helmet |
| Medal | Mailbox | Trophy | Medal | Rocket |
| Maracas | Mascara | Tambourine | Maracas | Rat |
| Microphone | Microwave | Amplifier | Microphone | Plane |
| Mouse | Mouth | Rat | Mouse | Rope |
| Mosque | Mosquito | Church | Mosque | Shaver |
| Motorbike | Mole | Helmet | Motorbike | Crayon |
| Moustache | Mug | Shaver | Moustache | Coins |
| Muffin | Mushroom | Donut | Muffin | Clarinet |
| Moon | Mop | Rocket | Moon | Kiwi |
| Money | Monkey | Coins | Money | Banana |
| Melon | Mitten | Kiwi | Melon | Bench |
| Mango | Magazine | Banana | Mango | Ear |
| Nose | Knife | Ear | Nose | Magazine |
| Necklace | Nest | Bracelet | Necklace | Bib |
| Onion | Oven | Leek | Onion | Eagle |
| Orangutan | Orange | Monkey | Orangutan | Bicycle |
| Owl | Ostrich | Eagle | Owl | Boot |
| Pushchair | Pear | Bib | Pushchair | Canary |
| Pasta | Pants | Pizza | Pasta | Balloon |
| Parrot | Parachute | Canary | Parrot | Key |
| Partyhat | Palm tree | Balloon | Partyhat | Coach |
| Padlock | Panda | Key | Padlock | Buckle |
| Peacock | Peanuts | Bird | Peacock | Carrot |
| Potato | Pie | Carrot | Potato | Harp |
| Pencil | Pepper grinder | Crayon | Pencil | Lamb |
| Piano | Pizza | Harp | Piano | Camel |
| Pyramid | Pram | Camel | Pyramid | Blackberry |
| Python | Pliers | Snake | Python | Leek |
| Peach | Pillow | Blackberry | Peach | Television |
| Peas | Pig | Mushroom | Peas | Syringe |
| Plate | Plane | Corn | Plate | Shower |
| Plant | Plum | Raspberry | Plant | Sandals |
| Paintbrush | Palette | Roller | Paintbrush | Mushroom |
| Purse | Pumpkin | Handbag | Purse | Corn |
| Rabbit | Razor | Cat | Rabbit | Jacket |
| Radio | Radiator | Television | Radio | Cigarette |
| Rattle | Rat | Dummy | Rattle | Cat |
| Ram | Rake | Lamb | Ram | See saw |
| Rhino | Raspberry | Elephant | Rhino | Doll |
| Robin | Ruler | Nest | Robin | Gloves |
| Rolling pin | Roller skate | Apron | Rolling pin | Razor |
| Rocking horse | Rope | Doll | Rocking horse | Roller skate |
| Saxophone | Sandals | Clarinet | Saxophone | Penguin |
| Scissors | Cigarette | Razor | Scissors | Nest |
| Scooter | Screwdriver | Roller skate | Scooter | Cheese |
| Scorpion | Skull | Spider | Scorpion | Handbag |
| Seagull | CD | Shell | Seagull | Amplifier |
| Seal | See‐saw | Penguin | Seal | Trophy |
| Shark | Shaver | Crocodile | Shark | Raspberry |
| Ship | Sheep | Anchor | Ship | Cauliflower |
| Shoe | Shell | Ballet shoe | Shoe | Pepper grinder |
| Shovel | Shower | Rake | Shovel | Crocodile |
| Slipper | Slide | Foot | Slipper | Axe |
| Salt cellar | Sausages | Pepper grinder | Salt cellar | Roller |
| Sock | Sword | Sandals | Sock | Whisk |
| Saw | Snake | Axe | Saw | Celery |
| Sofa | Sewing machine | Cushion | Sofa | Ladle |
| Spatula | Spanner | Whisk | Spatula | Dummy |
| Sponge | Spade | Bath | Sponge | Grapes |
| Spoon | Screw | Ladle | Spoon | Elephant |
| Spinach | Spider | Asparagus | Spinach | Hammer |
| Spindle | Cymbals | Wool | Spindle | Apron |
| Stethoscope | Stapler | Syringe | Stethoscope | Donut |
| Stool | Stork | Bench | Stool | Anchor |
| Strawberry | Starfish | Grapes | Strawberry | Ballet shoe |
| Sweater | Swan | Jacket | Sweater | Rake |
| Swing | Swim‐suit | See saw | Swing | Foot |
| Tape measure | Table | Sewing machine | Tape measure | Wasp |
| Tap | Tank | Sink | Tap | Crane |
| Teeth | Tea pot | Mouth | Teeth | Bracelet |
| Telescope | Television | Binoculars | Telescope | Dragon |
| Telephone | Teddy bear | Mobile phone | Telephone | Brick |
| Tennis racquet | Tent | Basketball | Tennis racquet | Avocado |
| Tiger | Tie | Lion | Tiger | Belt |
| Tomato | Tambourine | Celery | Tomato | Curtains |
| Trousers | Trophy | Belt | Trousers | Eyes |
| Toucan | Toothbrush | Stork | Toucan | Pliers |
| Tortoise | Torch | Kangaroo | Tortoise | Drainer |
| Triangle | Tripod | Cymbals | Triangle | Bomb |
| Tree | Train | Hedge | Tree | Basketball |
| Truck | Trumpet | Crane | Truck | Asparagus |
| Tweezers | T‐shirt | Pliers | Tweezers | Wool |
| Wall | Wasp | Brick | Wall | Mouth |
| Watermelon | Watch | Avocado | Watermelon | Binoculars |
| Wheel | Whale | Bicycle | Wheel | Snail |
| Whip | Whisk | Cane | Whip | Train |
| Wolf | Wool | Fox | Wolf | Cymbals |
| Window | Whistle | Curtains | Window | Hedge |
| Worm | Wooden spoon | Snail | Worm | Sewing machine |
| Zebra | Zip | Giraffe | Zebra | Sink |
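The table above can be read as a lookup from each target to its four primes. As a minimal illustrative sketch (not the authors' presentation software), the Python below crosses three rows copied from the table with the four prime/target modality combinations described in the abstract (word‐word, picture‐picture, word‐picture, picture‐word), assuming the prime is shown first. Whether every target actually appeared with every prime type in every modality combination is not stated in this section; the full crossing, the `build_trials` helper and the dictionary field names are hypothetical.

```python
from itertools import product

# Three rows copied from the stimulus table: target -> prime for each relation.
STIMULI = {
    "Bell": {"phonological": "Belt", "semantic": "Whistle",
             "identical": "Bell", "unrelated": "Leopard"},
    "Robin": {"phonological": "Ruler", "semantic": "Nest",
              "identical": "Robin", "unrelated": "Gloves"},
    "Fridge": {"phonological": "Frog", "semantic": "Oven",
               "identical": "Fridge", "unrelated": "Bulb"},
}

# Prime and target can each be a written word or a picture, giving four
# combinations: two same-modality and two cross-modality.
MODALITIES = ("word", "picture")


def build_trials(stimuli):
    """Hypothetical helper: fully cross relation type with prime/target modality.

    The full crossing is an illustrative assumption, not the study's
    documented counterbalancing scheme.
    """
    trials = []
    for target, primes in stimuli.items():
        for relation, prime in primes.items():
            for prime_mod, target_mod in product(MODALITIES, repeat=2):
                trials.append({
                    "prime": prime,
                    "prime_modality": prime_mod,
                    "target": target,
                    "target_modality": target_mod,
                    "relation": relation,
                    "same_modality": prime_mod == target_mod,
                })
    return trials


trials = build_trials(STIMULI)
semantic_cross_modal = [t for t in trials
                        if t["relation"] == "semantic" and not t["same_modality"]]
print(len(trials), len(semantic_cross_modal))  # 48 6
```

Grouping trials by `relation` and `same_modality` mirrors the two factors manipulated in the study; in the analysis, the unrelated pairs served as the baseline against which semantic and phonological effects were assessed.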

Re‐use of this article is permitted in accordance with the Terms and Conditions set out at http://wileyonlinelibrary.com/onlineopen#OnlineOpen_Terms

REFERENCES

- Ackermann H, Riecker A (2004): The contribution of the insula to motor aspects of speech production: a review and a hypothesis. Brain Lang 89: 320–328.

- Binder JR, Frost JA, Hammeke TA, Cox RW, Rao SM, Prieto T (1997): Human brain language areas identified by functional magnetic resonance imaging. J Neurosci 17: 353–362.

- Blank SC, Scott SK, Murphy K, Warburton E, Wise RJ (2002): Speech production: Wernicke, Broca and beyond. Brain 125: 1829–1838.

- Bookheimer SY, Zeffiro TA, Blaxton T, Gaillard W, Theodore W (1995): Regional cerebral blood flow during object naming and word reading. Hum Brain Mapp 3: 93–106.

- Bozeat S, Lambon Ralph MA, Patterson K, Garrard P, Hodges JR (2000): Non‐verbal semantic impairment in semantic dementia. Neuropsychologia 38: 1207–1215.

- Buckner RL, Goodman J, Burock M, Rotte M, Koutstaal W, Schacter D, Rosen B, Dale AM (1998): Functional‐anatomic correlates of object priming in humans revealed by rapid presentation event‐related fMRI. Neuron 20: 285–296.

- Chertkow H, Bub D, Deaudon C, Whitehead V (1997): On the status of object concepts in aphasia. Brain Lang 58: 203–232.

- Coltheart M, Curtis B, Atkins P, Haller M (1993): Models of reading aloud: dual‐route and parallel‐distributed‐processing approaches. Psychol Rev 100: 589–608.

- Copland DA, de Zubicaray GI, McMahon K, Wilson SJ, Eastburn M, Chenery HJ (2003): Brain activity during automatic semantic priming revealed by event‐related functional magnetic resonance imaging. NeuroImage 20: 302–310.

- Dapretto M, Bookheimer SY (1999): Form and content: dissociating syntax and semantics in sentence comprehension. Neuron 24: 427–432.

- Dejerine J (1892): Contribution à l'étude anatomo‐pathologique et clinique de différentes variétés de cécité verbale. Memoires‐Soc Biol 4: 61–90.

- Demonet JF, Chollet F, Ramsay S, Cardebat D, Nespoulous JL, Wise R, Rascol A, Frackowiak R (1992): The anatomy of phonological and semantic processing in normal subjects. Brain 115: 1753–1768.

- Demonet JF, Price C, Wise R, Frackowiak RS (1994): A PET study of cognitive strategies in normal subjects during language tasks: influence of phonetic ambiguity and sequence processing on phoneme monitoring. Brain 117: 671–682.

- Devlin JT, Russell RP, Davis MH, Price CJ, Wilson J, Moss HE, Matthews PM, Tyler LK (2000): Susceptibility‐induced loss of signal: comparing PET and fMRI on a semantic task. NeuroImage 11: 589–600.

- Devlin JT, Matthews PM, Rushworth MF (2003): Semantic processing in the left inferior prefrontal cortex: a combined functional magnetic resonance imaging and transcranial magnetic stimulation study. J Cogn Neurosci 15: 71–84.

- Dronkers NF (1996): A new brain region for coordinating speech articulation. Nature 384: 159–161.

- Fiez JA (1997): Phonology, semantics, and the role of the left inferior prefrontal cortex. Hum Brain Mapp 5: 79–83.

- Friston KJ, Ashburner J, Frith CD, Poline J‐B, Heather JD, Frackowiak RSJ (1995a): Spatial registration and normalization of images. Hum Brain Mapp 2: 1–25.

- Friston KJ, Holmes A, Worsley KJ, Poline J‐B, Frith CD, Frackowiak RSJ (1995b): Statistical parametric maps in functional imaging: a general linear approach. Hum Brain Mapp 2: 189–210.

- Geschwind N (1965): Disconnexion syndromes in animals and man. Brain 88: 237–294.

- Glaser WR, Glaser MO (1989): Context effects in Stroop‐like word and picture processing. J Exp Psychol Gen 118: 13–42.

- Gorno‐Tempini ML, Murray RC, Rankin KP, Weiner MW, Miller BL (2004): Clinical, cognitive and anatomical evolution from nonfluent progressive aphasia to corticobasal syndrome: a case report. Neurocase 10: 426–436.

- Hart J, Gordon B (1990): Delineation of single word semantic comprehension deficits in aphasia. Ann Neurol 27: 227–231.

- Henson RNA (2003): Neuroimaging studies of priming. Prog Neurobiol 70: 53–81.

- Hodges JR, Patterson K, Oxbury S, Funnell E (1992): Semantic dementia: progressive fluent aphasia with temporal lobe atrophy. Brain 115: 1783–1806.

- Hodges JR, Bozeat S, Patterson K, Spatt J (2000): The role of conceptual knowledge in object use: evidence from semantic dementia. Brain 123: 1913–1925.

- Holmes AP, Friston KJ (1998): Generalisability, random effects and population inference. NeuroImage 7: S754.

- Josephs O, Henson RNA (1999): Event‐related functional magnetic resonance imaging: modelling, inference and optimization. Philos Trans R Soc Lond B Biol Sci 354: 1215–1228.

- Kensinger EA, Siri S, Cappa SF, Corkin S (2003): Role of the anterior temporal lobe in repetition and semantic priming: evidence from a patient with a category‐specific deficit. Neuropsychologia 41: 71–84.

- Kotz SA, Cappa SF, von Cramon DY, Friederici AD (2002): Modulation of the lexical‐semantic network by auditory semantic priming: an event‐related functional MRI study. NeuroImage 17: 1761–1772.

- Koutstaal W, Wagner AD, Rotte M, Maril A, Buckner RL, Schacter DL (2001): Perceptual specificity in visual object priming: functional magnetic resonance imaging evidence for a laterality difference in fusiform cortex. Neuropsychologia 39: 184–199.

- Lambon Ralph MA, Cipolotti L, Patterson K (1999): Oral naming and oral reading: do they speak the same language? Cogn Neuropsychol 16: 157–169.

- Marshall JC, Newcombe F (1973): Patterns of paralexia: a psycholinguistic approach. J Psycholing Res 2: 175–198.

- McDermott KB, Petersen SE, Watson JM, Ojemann JG (2003): A procedure for identifying regions preferentially activated by attention to semantic and phonological relations using functional magnetic resonance imaging. Neuropsychologia 41: 293–303.

- Moore CJ, Price CJ (1999): Three distinct ventral occipitotemporal regions for reading and object naming. NeuroImage 10: 181–192.

- Mummery CJ, Patterson K, Hodges J, Price CJ (1998): Organization of the semantic system: dissociable by what? J Cogn Neurosci 10: 766–777.

- Mummery CJ, Shallice T, Price CJ (1999): Dual‐process model in semantic priming: a functional imaging perspective. NeuroImage 9: 516–525.

- Nestor PJ, Graham NL, Fryer TD, Williams GB, Patterson K, Hodges JR (2003): Progressive non‐fluent aphasia is associated with hypometabolism centred on the left anterior insula. Brain 126: 2406–2418.

- Noppeney U, Price CJ (2002): A PET study of stimulus‐and task‐induced semantic processing. NeuroImage 15: 927–935.

- Noppeney U, Price CJ (2003): Functional imaging of the semantic system: retrieval of sensory‐experienced and verbally learned knowledge. Brain Lang 84: 120–133.

- Patterson K, Shewell C (1987): Speak and spell: Dissociations and word class effects. In: Coltheart M, Sartori G, Job R, editors. The cognitive neuropsychology of language. Hove, U.K.: Lawrence Erlbaum Associates; p 273–295.

- Poldrack RA, Wagner AD, Prull MW, Desmond JE, Glover GH, Gabrieli JD (1999): Functional specialization for semantic and phonological processing in the left inferior prefrontal cortex. NeuroImage 10: 15–35.

- Price CJ, Moore CJ, Humphreys GW, Wise RJ (1997): Segregating semantic from phonological processes during reading. J Cogn Neurosci 9: 727–733.

- Price CJ, Mechelli A (2005): Reading and reading disturbance. Curr Opin Neurobiol 15: 231–238.

- Price CJ, Devlin JT, Moore CJ, Morton C, Laird AR (2005): Meta‐analyses of object naming: effect of baseline. Hum Brain Mapp 25: 70–82.

- Price CJ, McCrory E, Moore CJ, Noppeney U, Mechelli A, Biggio N, Devlin JT (2006): How reading differs from object naming at the neuronal level. NeuroImage 29: 643–648.

- Riecker A, Ackermann H, Wildgruber D, Dogil G, Grodd W (2000): Opposite hemispheric lateralization effects during speaking and singing at motor cortex, insula and cerebellum. Neuroreport 11: 1997–2000.

- Rissman J, Eliassen JC, Blumstein SE (2003): An event‐related FMRI investigation of implicit semantic priming. J Cogn Neurosci 15: 1160–1175.

- Rossell SL, Price CJ, Nobre AC (2003): The anatomy and time course of semantic priming investigated by fMRI and ERPs. Neuropsychologia 41: 550–564.

- Schacter DL, Buckner RL (1998): Priming and the brain. Neuron 20: 185–195.

- Singer T, Seymour B, O'Doherty J, Kaube H, Dolan RJ, Frith CD (2004): Empathy for pain involves the affective but not sensory components of pain. Science 303: 1157–1162.

- Turkeltaub PE, Eden GF, Jones KM, Zeffiro TA (2002): Meta‐analysis of the functional neuroanatomy of single‐word reading: method and validation. NeuroImage 16: 765–780.

- Wagner AD, Desmond JE, Demb JB, Glover GH, Gabrieli JDE (1997): Semantic repetition priming for verbal and pictorial knowledge: a functional MRI study of left inferior prefrontal cortex. J Cogn Neurosci 9: 714–726.

- Wagner AD, Koutstaal W, Maril A, Schacter DL, Buckner RL (2000): Task‐specific repetition priming in left inferior prefrontal cortex. Cereb Cortex 10: 1176–1184.

- Wise RJ, Greene J, Buchel C, Scott SK (1999): Brain regions involved in articulation. Lancet 353: 1057–1061.