Abstract

Sensorimotor theories of semantic memory require overlap between conceptual and perceptual representations. One source of evidence for such overlap comes from neuroimaging reports of co-activation during memory retrieval and perception; for example, regions involved in color perception (i.e., regions that respond more to colored than grayscale stimuli) are activated by retrieval of object color. One unanswered question from these studies is whether distinctions that are observed during perception are likewise observed during memory retrieval. That is, are regions defined by a chromaticity effect in perception similarly modulated by the chromaticity of remembered objects (e.g., lemons more than coal)? Subjects performed color perception and color retrieval tasks while undergoing fMRI. We observed increased activation during both perception and memory retrieval of chromatic compared to achromatic stimuli in overlapping areas of the left lingual gyrus, but not in dorsal or anterior regions activated during color perception. These results support sensorimotor theories but suggest important distinctions within the conceptual system.

Keywords: fMRI, semantic memory, perception, color

Introduction

According to sensorimotor theories of semantic memory, object knowledge is organized in a modality-specific fashion, and it is distributed in or near the brain regions responsible for perceiving and acting on objects (Allport, 1985; Barsalou, 1999; Warrington & McCarthy, 1987). Numerous behavioral, neuroimaging, and neuropsychological studies have supported these theories, and neuroimaging studies in particular have demonstrated that retrieval of knowledge about object features recruits brain regions involved in perceiving those features (see Martin, 2007 or Thompson-Schill, 2003 for review). For this study, we investigated color in the visual modality, which has certain advantages. Not only is color especially important for recognition, but also, unlike other features of object appearance (e.g., shape, size), color is a feature that is perceived solely through the visual modality, which affords clear predictions.

Cortical regions involved in color perception are typically defined as those responding more to viewing of colored than grayscale stimuli. Previously, subjects have passively viewed colored and grayscale Mondrians (Chao & Martin, 1999; Howard et al., 1998), actively viewed these stimuli by detecting characters in the displays (Beauchamp, Haxby, Jennings, & DeYoe, 1999), or actively made luminance judgments on the Farnsworth-Munsell 100 Hue stimuli (Beauchamp et al., 1999; Simmons, Ramjee, Beauchamp, McRae, Martin, & Barsalou, 2007). Although there is some variability with respect to the brain regions activated during these tasks, they tend to include lateralized or bilateral fusiform and lingual gyri. Some studies have also investigated brain regions involved in color knowledge retrieval, using different tasks including color similarity judgments (Howard et al., 1998), object color naming (Chao & Martin, 1999; Martin, Haxby, Lalonde, Wiggs, & Ungerleider, 1995), and property verification (Simmons et al., 2007). In particular, two studies have found activation of the left fusiform gyrus during both a color perception task and color knowledge retrieval task, suggesting that areas involved in perceiving color are also involved when retrieving object color (Simmons et al., 2007; Hsu, Kraemer, Oliver, Schlichting, & Thompson-Schill, 2011).

However, several open questions remain. First, color perception tasks tend to activate several brain areas, but overlap was only found in one anterior region. Why were posterior regions not activated during color knowledge retrieval? Second, previous studies have not used a color knowledge retrieval task that is analogous to the color perception tasks. That is, if brain regions involved in color perception are identified as those responding more to colored than grayscale stimuli, we should expect a similar chromaticity effect in color knowledge (i.e., thinking about the colors of lemons versus coal). Specifically, we would expect differential recruitment of color perception regions when retrieving knowledge about chromatic versus achromatic object colors.

To address these issues, we conducted the current investigation, in which subjects retrieved color knowledge by comparing the luminance of named object pairs while undergoing functional magnetic resonance imaging (fMRI). For the conditions of interest, each pair named either two chromatic objects or two achromatic objects. Subjects also performed a color perception task, in which they judged the luminance of colored or grayscale visual displays. We found overlapping brain regions involved in both perception and knowledge retrieval in a posterior region, the left lingual gyrus. Further, we found that in ventral but not dorsal regions involved in the color perception task, there was more activity when retrieving chromatic versus achromatic color knowledge. Our findings are the first to demonstrate that chromaticity distinctions in color perception extend to color knowledge, lending further support to sensorimotor theories of semantic memory.

1. Method

1.1. Participants

Eighteen right-handed, native English speakers with no history of neurological disorders participated in this study (8 males; average age: 23.3). All subjects provided informed consent and practiced both tasks prior to scanning. The University of Pennsylvania IRB approved all experimental procedures. Participants received monetary compensation for their participation.

1.2. Task – color knowledge retrieval

Subjects made a luminance judgment on a named pair of objects, indicating which object was lighter or darker. The conditions of interest named chromatic (e.g., LEMON and BASKETBALL) or achromatic (e.g., COAL and SNOW) objects. The 206 objects (102 achromatic, 104 chromatic) used in the experiment were rated for color agreement (> 66%) by an independent group of 24 subjects, drawn from the same population as the study sample. The conditions did not differ from one another in terms of frequency, word length, concreteness, familiarity, imageability, or percent color/chromatic agreement (see Table 1 for characteristics of word stimuli). There were 98 trials for each condition. As a baseline task, subjects judged which of a pair of abstract concepts (e.g., GREED and DELIGHT) was better or worse. For a list of stimuli used in the color knowledge task, see Supplementary Table 1.

Table 1.

Means (with standard deviations in parentheses) of stimulus characteristics for the color knowledge task. All values derived from entries in the UWA MRC Psycholinguistic Database.

| Condition | N | Concreteness | Familiarity | Imageability | Number of Letters | Number of Phonemes | Kucera-Francis Frequency |
|---|---|---|---|---|---|---|---|
| Abstract | 101 | 302 (41) | 471 (89) | 404 (65) | 6.69 (1.8) | 5.84 (2.0) | 30.9 (44.1) |
| Achromatic | 102 | 596 (32) | 522 (61) | 581 (37) | 5.95 (1.9) | 4.62 (1.7) | 22.8 (31.5) |
| Chromatic | 104 | 601 (22) | 513 (66) | 595 (24) | 6.13 (2.0) | 4.99 (1.7) | 18.3 (34.4) |

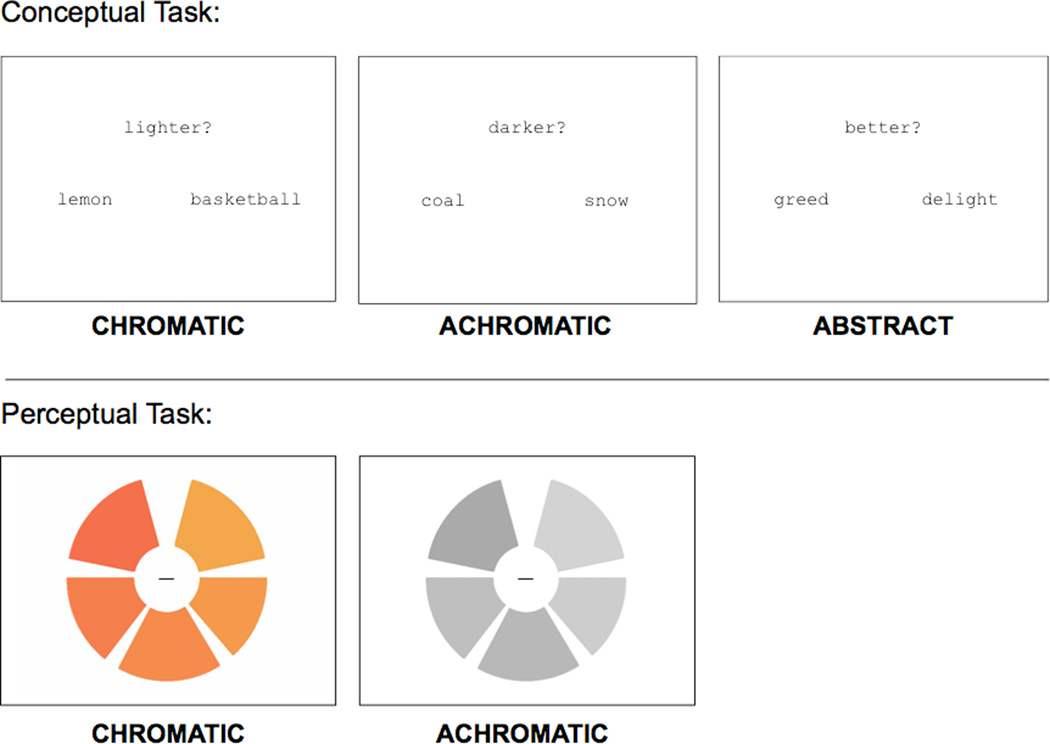

At the beginning of each trial, a pair of words and a prompt, randomly assigned to display “lighter?” or “darker?” (“better?” or “worse?” for the abstract condition) simultaneously appeared on the screen for 2700 ms (see Figure 1). During this time window, the subjects’ task was to decide which named object was lighter or darker, indicating their response via button press. At the end of the trial, a central fixation cross appeared for 300 ms, for a total trial duration of 3000 ms.

Figure 1. Examples of stimuli from each task.

(top) For the color knowledge task, subjects made a luminance judgment on named pairs of chromatic or achromatic objects. As a baseline, subjects made goodness judgments on pairs of abstract concepts. (bottom) For the color perception task, subjects judged whether the wedges making up a chromatic or achromatic wheel were sequentially ordered from lightest to darkest.

We blocked conditions as follows: 7 trials of one condition (21 seconds) followed by 12 seconds of passive fixation, 7 trials of the second condition followed by 12 seconds of passive fixation, then 7 trials of the third condition, and so on. We permuted condition order across subjects, but there was always a fixed stimulus onset asynchrony (SOA) for the 7 trials in each 21 second block. We used E-Prime software (Psychology Software Tools, Inc) to present stimuli and to collect response data.
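As an illustration only, the block timing described above can be laid out as an onset schedule. This is a minimal sketch, not the E-Prime script used in the study: the function name `block_schedule`, the choice of time zero, and the example condition order are hypothetical, and the number of blocks per run is not stated in the text.

```python
def block_schedule(conditions, trials_per_block=7, trial_s=3.0, fixation_s=12.0):
    """Return (condition, block_onset_s, trial_onsets_s) for consecutive blocks."""
    schedule = []
    t = 0.0
    for cond in conditions:
        # Fixed SOA: one trial every 3 s within the 21 s block.
        trial_onsets = [t + i * trial_s for i in range(trials_per_block)]
        schedule.append((cond, t, trial_onsets))
        # Each block is followed by 12 s of passive fixation.
        t += trials_per_block * trial_s + fixation_s
    return schedule


# One hypothetical permutation of the three conditions.
for cond, onset, trials in block_schedule(["chromatic", "achromatic", "abstract"]):
    print(cond, onset, trials[-1])
```

Under these assumptions, successive blocks begin 33 seconds apart (21 seconds of trials plus 12 seconds of fixation).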

1.3. Task – color perception

After subjects completed the color retrieval task, we administered a functional localizer to identify brain regions involved in color perception. Participants saw blocks of the Farnsworth-Munsell 100 hue stimuli, in which they judged whether the wedges making up colored or grayscale wheels were sequentially ordered from lightest to darkest. The methods and stimuli for this task have been used previously to identify brain regions involved in color perception (e.g., Beauchamp et al., 1999, Simmons et al., 2007, Hsu et al., 2011).

1.4. Scanning Procedure

We acquired imaging data using a 3T Siemens Trio system with a standard 8-channel head coil and foam padding to secure the head position. After we acquired T1-weighted anatomical images (TR = 1620 msec, TE = 3 msec, TI = 950 msec, voxel size = 0.9766 mm × 0.9766 mm × 1.0 mm), each subject performed four runs of the color knowledge retrieval task, followed by two runs of the color perception task, while undergoing blood oxygen level dependent (BOLD) imaging (Ogawa et al., 1993). We collected 774 sets of 42 slices using interleaved, gradient echo, echoplanar imaging (TR = 3000 msec, TE = 30 msec, FOV = 19.2 cm × 19.2 cm, voxel size = 3.0 mm × 3.0 mm × 3.0 mm). Nine seconds of “dummy” gradient and radio frequency pulses preceded each functional scan to allow for steady-state magnetization; during this initial time period, we did not present stimuli or collect fMRI data.

1.5. Image Processing

We analyzed the data using VoxBo (www.voxbo.org) and SPM2 (http://www.fil.ion.ucl.ac.uk). Anatomical data for each subject were processed using the FMRIB Software Library (FSL) toolkit (http://www.fmrib.ox.ac.uk/fsl) to correct for spatial inhomogeneities and to perform non-linear noise reduction. Functional data were sinc interpolated in time to correct for the slice acquisition sequence, motion corrected with a six-parameter, least squares, rigid body realignment routine using the first functional image as a reference, and normalized in SPM2 to a standard template in Montreal Neurological Institute (MNI) space. Data were smoothed using a 9 mm full-width half-maximum Gaussian smoothing kernel. Following preprocessing for each subject, a power spectrum for one functional run was fit with a 1/frequency function; we used this model to estimate the intrinsic temporal autocorrelation of the functional data (Zarahn, Aguirre, & D'Esposito, 1997).
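For readers unfamiliar with how a smoothing kernel is specified, the relation between full-width half-maximum (FWHM) and the Gaussian standard deviation can be sketched as follows. The helper name `fwhm_to_sigma` is hypothetical, and the conversion to voxel units assumes 3 mm isotropic voxels, which may not hold if the data were resampled during normalization.

```python
import math


def fwhm_to_sigma(fwhm):
    """Convert a Gaussian kernel's FWHM to its standard deviation."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


sigma_mm = fwhm_to_sigma(9.0)  # 9 mm kernel, from the text
sigma_vox = sigma_mm / 3.0     # assumption: 3 mm isotropic voxels
print(round(sigma_mm, 2), round(sigma_vox, 2))
```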

We fit a modified general linear model (Worsley & Friston, 1995) to each subject’s data from the four runs of the color retrieval task, in which the conditions of interest (chromatic, achromatic, abstract) were each modeled as a 21-second block and convolved with a standard hemodynamic response function. Several covariates of no interest (global signal, scan effects, movement, spikes) were also included in the model. An adjusted response latency for each trial for all conditions (i.e., a mean-centered log transformation of each subject’s RT) was also entered as a continuous covariate of no interest, to address any difficulty or “time on task” confounds. From this model, we computed parameter estimates for each condition (compared to fixation baseline) at each voxel, and these estimates were included in the group-level random effects analyses described below. Independently, we fit a second modified GLM to each subject’s data from the two runs of the color perception task, in which the conditions of interest (colored versus grayscale stimuli) were modeled as blocks in the same manner as described above. Aside from this difference in the conditions of interest, the two models were constructed identically.
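The block model described above can be illustrated with a small simulation. This is a sketch under stated assumptions rather than the VoxBo model itself: the double-gamma response shape, the run length of 44 volumes, the block order, and the effect sizes are all made up for illustration, and ordinary least squares stands in for the modified GLM, so the 1/frequency autocorrelation model and the nuisance covariates are omitted. All function names are hypothetical.

```python
import numpy as np
from math import gamma

TR = 3.0  # seconds per volume, from the scanning procedure


def hrf(t, peak=6.0, undershoot=16.0, ratio=1.0 / 6.0):
    """Double-gamma response sampled at times t (an assumed, generic shape)."""
    t = np.asarray(t, dtype=float)
    pos = t ** (peak - 1) * np.exp(-t) / gamma(peak)
    neg = t ** (undershoot - 1) * np.exp(-t) / gamma(undershoot)
    h = pos - ratio * neg
    return h / h.sum()


def block_regressor(n_scans, onsets_s, block_s=21.0):
    """Boxcar for 21 s blocks, convolved with the HRF and cut to run length."""
    box = np.zeros(n_scans)
    for onset in onsets_s:
        box[int(round(onset / TR)):int(round((onset + block_s) / TR))] = 1.0
    return np.convolve(box, hrf(np.arange(0.0, 30.0, TR)))[:n_scans]


def rt_covariate(rts_ms):
    """Mean-centered log RT, the transformation described for the covariate."""
    log_rt = np.log(np.asarray(rts_ms, dtype=float))
    return log_rt - log_rt.mean()


# Hypothetical run: 44 volumes, blocks every 33 s (21 s task + 12 s fixation).
n_scans = 44
X = np.column_stack([
    block_regressor(n_scans, [0.0, 99.0]),  # chromatic
    block_regressor(n_scans, [33.0]),       # achromatic
    block_regressor(n_scans, [66.0]),       # abstract
    np.ones(n_scans),                       # constant term
])
true_beta = np.array([2.0, 1.0, 0.5, 100.0])  # made-up effect sizes
y = X @ true_beta                             # noiseless synthetic voxel
beta_hat = np.linalg.lstsq(X, y, rcond=None)[0]
chromaticity_effect = beta_hat[0] - beta_hat[1]  # chromatic minus achromatic
```

The difference between the chromatic and achromatic parameter estimates is the per-subject quantity that a chromaticity contrast would carry forward to a group-level test.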

2. Results

2.1. Behavioral Results

Color knowledge retrieval task

There was a significant RT difference across conditions, F(2,51) = 5.78, p = .005 (chromatic: M = 1873 ms, SD = 173 ms; achromatic: M = 1782 ms, SD = 145 ms; abstract: M = 1694 ms, SD = 155 ms). We found this RT difference substantial enough to warrant entering the RT for each trial as a continuous covariate of no interest in the GLM, such that any differences reported below describe condition differences, rather than RT differences. Note that the inclusion of this covariate has the effect of underestimating the chromaticity effect on the BOLD response.

Color perception task

There were no RT differences between chromatic and achromatic perceptual judgments [chromatic: M = 1473 ms, SD = 204 ms; achromatic: M = 1439 ms, SD = 230 ms; t(17) = 1.43, p = 0.17], though participants were significantly less accurate on chromatic judgments [chromatic: M = 73%, SD = 5.6%; achromatic: M = 79%, SD = 5.9%; t(17) = 3.00, p = 0.008].

2.2. Functional Region of Interest Analyses: Left lingual gyrus

To establish functionally defined regions of interest (fROIs) in which to assess the effects of chromaticity on color knowledge retrieval, we first performed a group-level random effects analysis on the color perception data, comparing brain activity for colored stimuli to that for grayscale stimuli as in prior studies. No regions responded more to grayscale than colored stimuli. We corrected for multiple comparisons (at α = 0.05) by performing 1000 Monte Carlo permutations of the data, deriving a critical threshold of t = 6.16 (Nichols & Holmes, 2002). When examining those regions that responded more to colored than grayscale stimuli, only one fROI (17 voxels) in the left lingual gyrus (Talairach coordinates: −9, −87, 4) surpassed the corrected threshold. Within this region, we calculated parameter estimates for each subject, for each condition of the color retrieval task, on the spatially-averaged time series. We assessed the effect of concrete versus abstract concepts by comparing both types of concrete knowledge (chromatic and achromatic) to abstract knowledge. The chromaticity effect was assessed using a paired t-test of the difference between the chromatic and achromatic parameters. In the left lingual gyrus, there was significantly greater activity when retrieving concrete versus abstract knowledge ([Chromatic + Achromatic] – Abstract; t(17) = 2.85, p = 0.01). Critically, there was significantly greater activity when retrieving chromatic versus achromatic knowledge ([Chromatic – Achromatic]; t(17) = 2.29, p = 0.04).
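The logic of a permutation-derived threshold can be sketched as follows. The text does not describe the exact permutation scheme, so this example assumes a common choice for one-sample group maps: randomly flipping the sign of each subject's contrast map and recording the maximum t value across voxels. The function names and the synthetic null data are hypothetical. The same `one_sample_t` function, applied to per-subject differences between chromatic and achromatic parameter estimates, is equivalent to a paired t-test.

```python
import numpy as np


def one_sample_t(data):
    """t statistic per voxel; data is a subjects x voxels array of contrasts."""
    n = data.shape[0]
    return data.mean(axis=0) / (data.std(axis=0, ddof=1) / np.sqrt(n))


def max_t_threshold(data, n_perm=1000, alpha=0.05, seed=0):
    """Critical t from the permutation distribution of the maximum statistic."""
    rng = np.random.default_rng(seed)
    max_t = np.empty(n_perm)
    for i in range(n_perm):
        # Assumed scheme: flip the sign of each subject's whole map at random.
        signs = rng.choice([-1.0, 1.0], size=(data.shape[0], 1))
        max_t[i] = one_sample_t(data * signs).max()
    return np.quantile(max_t, 1.0 - alpha)


# Synthetic null data: 18 subjects, 500 voxels (sizes chosen for illustration).
null_maps = np.random.default_rng(1).standard_normal((18, 500))
threshold = max_t_threshold(null_maps)
```

Because the threshold is taken from the distribution of the maximum over all voxels, it is considerably higher than the uncorrected critical value for a single test.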

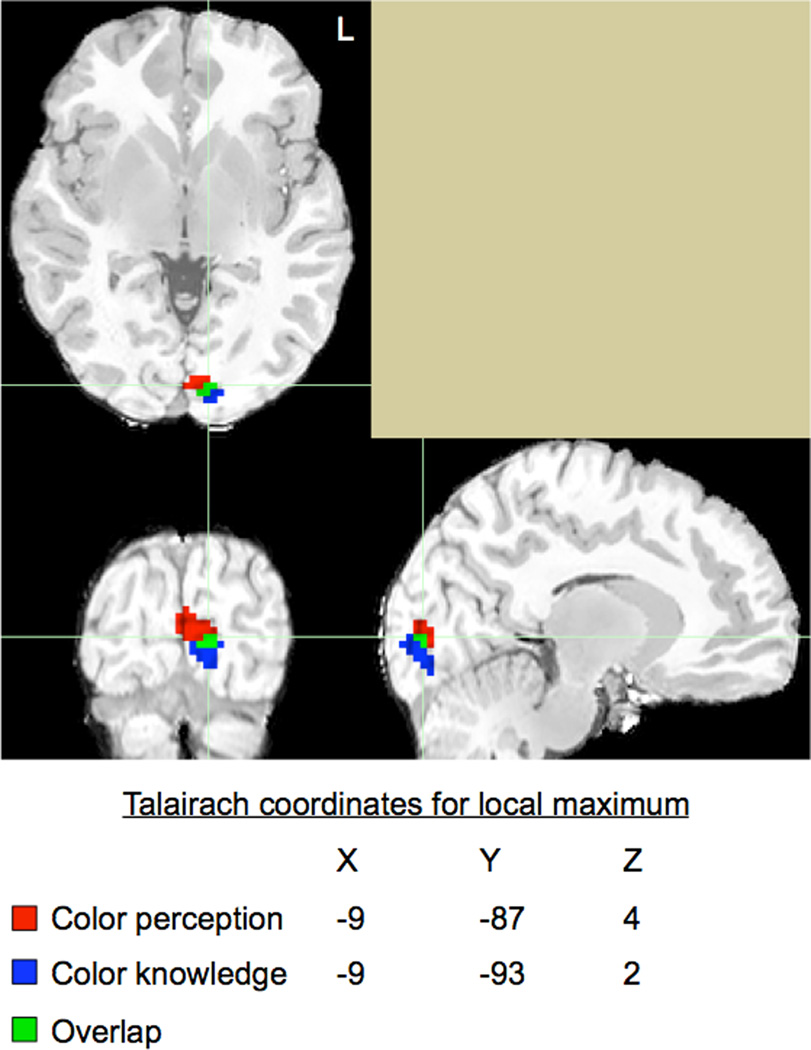

The preceding analyses establish that a chromaticity effect during color retrieval is observed in a region that exhibits a chromaticity effect during color perception. This effect, while a direct test of the hypothesis of interest, is a narrow way to address the extent and location of overlap during perception and memory processing. To examine that overlap more broadly, we visually assessed co-localization of regions involved in both tasks. We performed a group-level random effects analysis on the color knowledge retrieval data, analogous to the one performed on the color perception data and again including all 18 subjects. For this dataset, we compared brain activity in the chromatic condition to that in the achromatic condition. Next, within occipital brain regions, we identified the 50 voxels that were most active during each task, irrespective of threshold. For the color perception task, Talairach coordinates for the peak voxel were −9, −87, 4. For the color knowledge task, Talairach coordinates for the peak voxel were −9, −93, 2. As seen in Figure 2, we found co-localization of seven voxels in the left lingual gyrus. This result suggests not only that the lingual gyrus is involved in both color perception and knowledge retrieval, but also that it is recruited more for the chromatic condition than for the achromatic condition in both processes.
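The top-50 overlap procedure can be illustrated on toy data. In this sketch the function name `top_k_mask`, the 10 × 10 × 10 volume, and the two statistic maps are hypothetical; only the rule itself (take the 50 highest voxels per task within a region, then intersect) follows the text.

```python
import numpy as np


def top_k_mask(stat_map, region_mask, k=50):
    """Boolean mask of the k highest-valued voxels inside region_mask."""
    values = np.where(region_mask, stat_map, -np.inf)
    flat_idx = np.argsort(values, axis=None)[-k:]
    mask = np.zeros(stat_map.shape, dtype=bool)
    mask[np.unravel_index(flat_idx, stat_map.shape)] = True
    return mask


# Toy maps standing in for the two group contrasts (not real data).
shape = (10, 10, 10)
idx = np.arange(1000, dtype=float).reshape(shape)
perception_map = idx                      # peaks at the last voxels
knowledge_map = -np.abs(idx - 960.25)     # peaks near voxel 960
region = np.ones(shape, dtype=bool)       # stand-in for an occipital mask

perception_top = top_k_mask(perception_map, region)
knowledge_top = top_k_mask(knowledge_map, region)
overlap = perception_top & knowledge_top
print(int(overlap.sum()))
```

Because the selection ignores any statistical threshold, the overlap count describes spatial correspondence between the two maps rather than significance.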

Figure 2. Co-localization of color perception and color knowledge.

We independently identified the 50 most active voxels in posterior occipital regions for color perception (red) and color knowledge (blue). Voxels active for both tasks are shown in green. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of the article.)

2.3. Exploratory Whole Brain Analyses: Ventral and Dorsal Distinctions

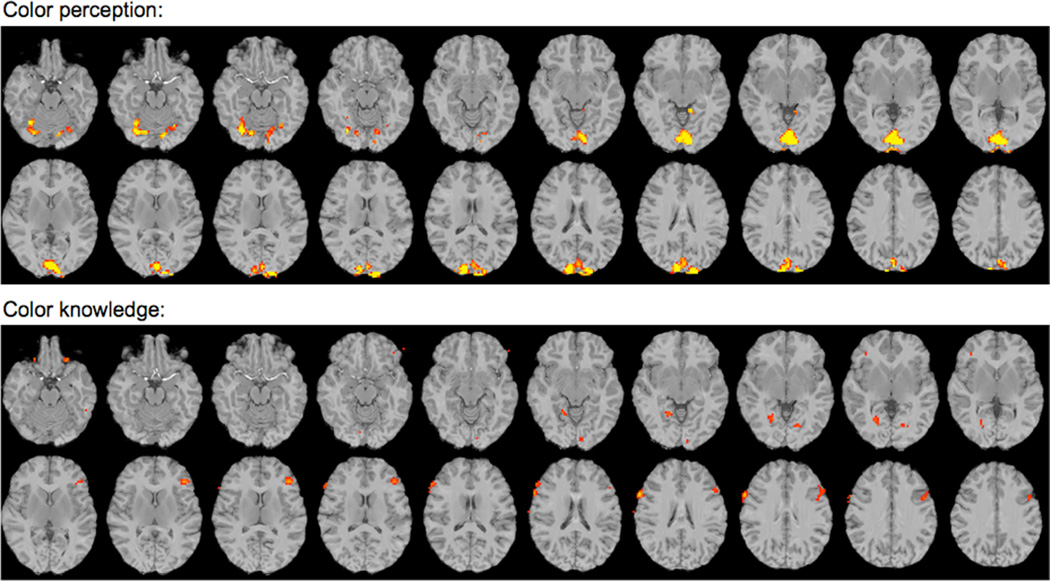

In order to ascertain the specificity of this effect, we examined brain activity at a less stringent threshold to determine if other brain regions were active for either task. For this exploratory analysis, we examined the group color perception data at an uncorrected threshold of p < 0.001 (t = 3.97), which yielded 27 distinct clusters, as shown in Figure 3. We then created 27 fROIs of comparable size by identifying each individual local maximum and any of the 26 surrounding voxels that also surpassed the uncorrected threshold (see Supplementary Table 2 for the coordinates of these local maxima). In order to assess the chromaticity effect during memory retrieval within these regions, we calculated parameter estimates for each subject for each condition (compared to baseline) on the spatially-averaged time series across voxels within each fROI.
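The fROI construction rule (a local maximum plus whichever of its 26 neighbors exceed the threshold) can be sketched as below. The function name `froi_around_peak` and the toy t map are hypothetical; the threshold of 3.97 is the one reported in the text.

```python
import numpy as np


def froi_around_peak(t_map, peak, threshold):
    """Mask of the peak voxel plus its 26 neighbours that exceed threshold."""
    mask = np.zeros(t_map.shape, dtype=bool)
    lo = [max(c - 1, 0) for c in peak]
    hi = [min(c + 2, n) for c, n in zip(peak, t_map.shape)]
    cube = tuple(slice(a, b) for a, b in zip(lo, hi))
    # Only voxels inside the 3 x 3 x 3 cube around the peak are eligible.
    mask[cube] = t_map[cube] > threshold
    return mask


# Toy t map (not real data): one peak, one strong and one weak neighbour.
t_map = np.zeros((5, 5, 5))
t_map[2, 2, 2] = 5.0   # local maximum
t_map[2, 2, 3] = 4.2   # neighbour above threshold, included
t_map[1, 2, 2] = 3.0   # neighbour below threshold, excluded
t_map[4, 4, 4] = 9.0   # above threshold but outside the cube, excluded
froi = froi_around_peak(t_map, (2, 2, 2), threshold=3.97)
print(int(froi.sum()))
```

Capping each fROI at 27 voxels is what keeps the regions of comparable size regardless of how large the surrounding cluster is.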

Figure 3. Exploratory analyses.

(top) Group random-effects whole-brain analysis shows voxels responding more to chromatic than achromatic visual stimuli at p < 0.001 (uncorrected) threshold. (bottom) Group random-effects whole-brain analysis shows voxels responding more to chromatic than achromatic conceptual knowledge at p < 0.01 (uncorrected) threshold. The 27 fROIs were identified within these active regions. Slices are oriented according to radiological convention.

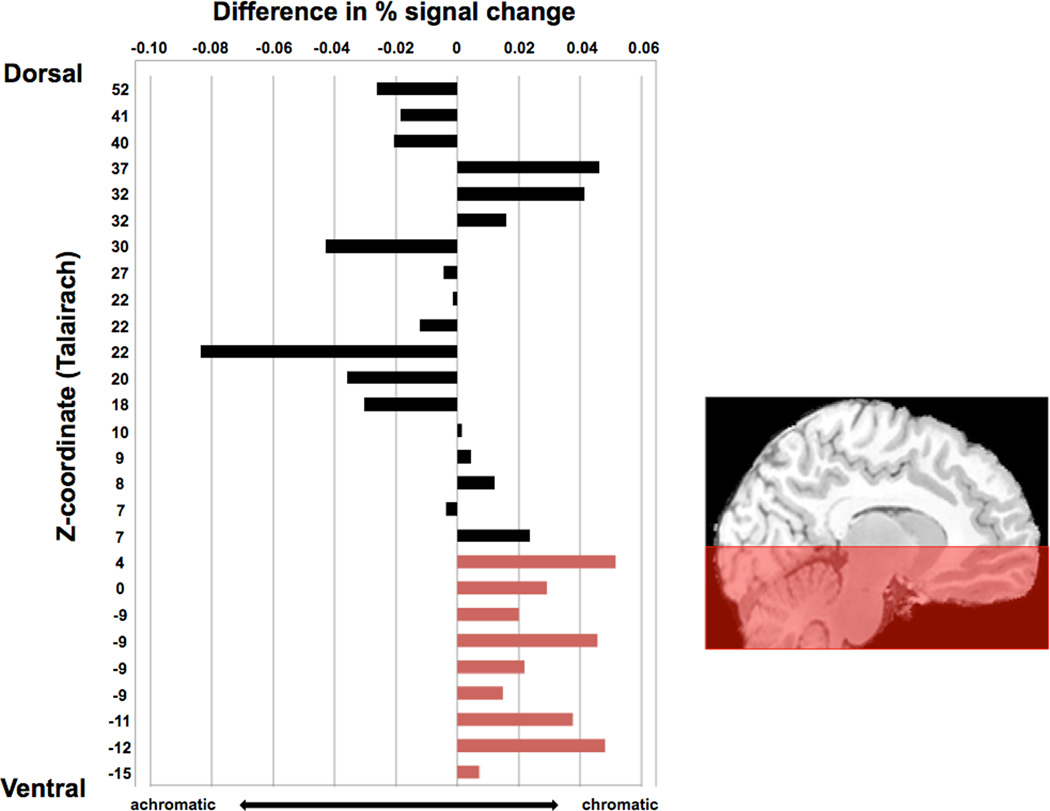

Upon doing this, we observed an unanticipated pattern, which is evident in the order we have arranged the clusters in Figure 4: The difference in response to chromatic versus achromatic memory retrieval was larger and more consistent in the ventral fROIs than in the dorsal fROIs. Although the chromaticity effects did not reach significance in individual fROIs, the reliability of the pattern can be established with a post-hoc binomial test. In the nine most ventral fROIs (z < 5), all nine showed numerically greater activation for chromatic compared to achromatic stimuli (p = 0.004). In contrast, the pattern was not consistent across the 18 dorsal fROIs (p = 0.48). Although these results should be interpreted with caution, they suggest that the effect reported above in the left lingual gyrus may be a more widespread pattern in ventral but not dorsal regions of the visual system. In the following section, motivated by previous work, we take a closer look at one such region, the left fusiform gyrus.
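The binomial test for the ventral fROIs can be reproduced by hand. The sketch below assumes a two-sided test against a chance probability of 0.5; the function name is hypothetical. The text does not report how many of the 18 dorsal fROIs showed the effect, so the second call uses a hypothetical count purely to show how a value near the reported p could arise.

```python
from math import comb


def binomial_two_sided(k, n):
    """Two-sided sign-test p-value for k of n successes under p = 0.5."""
    # The null distribution is symmetric, so double the larger-tail probability.
    tail = sum(comb(n, i) for i in range(max(k, n - k), n + 1)) / 2 ** n
    return min(1.0, 2 * tail)


print(round(binomial_two_sided(9, 9), 4))    # nine of nine ventral fROIs: 0.0039
print(round(binomial_two_sided(11, 18), 2))  # hypothetical dorsal count: 0.48
```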

Figure 4. Signal change trends in ventral but not dorsal regions.

For each of the 27 fROIs identified in the exploratory whole-brain analysis, we assessed percent signal change by comparing each condition of the color knowledge task to baseline. We then subtracted the values from achromatic from chromatic, and plotted the difference. All ventral color perception regions showed greater response to chromatic than achromatic knowledge retrieval, and this trend was not consistently observed in dorsal regions that were involved in the color perception task. Note that these values correspond to activity during the conceptual task, and coordinates are plotted in order from dorsal to ventral according to Talairach z-coordinates. For full Talairach coordinate listings, corresponding regions, and t-values of these maxima to the perceptual task, see Supplementary Table 2.

2.4. Secondary Region of Interest Analyses: Fusiform Gyrus

Previous research has shown that the fusiform gyrus is involved in both color knowledge retrieval and color imagery and perception (Howard et al., 1998; Simmons et al., 2007). In all analyses thus far, ROIs were functionally defined, and the fusiform gyrus did not emerge as an active region when contrasting the chromatic to achromatic conditions in either task (i.e., activity did not surpass either the uncorrected or permuted threshold). However, for our color knowledge task, the left fusiform gyrus was robustly active when comparing both chromatic and achromatic pairs (combined) to the abstract pairs (t[17] = 4.63, p < .001). The Talairach coordinates for the peak active voxel for this contrast were −33, −36, −13; by comparison, the peak voxel in left fusiform gyrus as reported by Simmons and colleagues for their color knowledge task was nearly identical (−33, −36, −16). The proximity of these peak voxel coordinates across studies suggests that our memory task and other tasks used previously both tap into color knowledge retrieval processes, though again, there was no main effect of chromaticity (t[17] = 0.38, p = 0.71).

Several prior studies have reported that this region of the fusiform gyrus is active during color perception (that is, more active during perception of chromatic compared to achromatic stimuli) in addition to during retrieval of color (e.g., Simmons et al., 2007). We did not observe this effect in the analyses we reported above; although we used the same procedure and stimuli as Simmons et al. (2007) to manipulate color perception, there were some differences in our analyses. We thus repeated the analysis of the perception data without including global signal as a covariate in the model (following Simmons et al., 2007), and in this new analysis, we did observe a chromaticity effect in color perception in the fusiform gyrus. In this functionally-defined fusiform region, there was a trend towards a chromaticity effect on the knowledge retrieval task, though it did not reach statistical significance [t(17) = 1.82, p = 0.09]. Finally, we applied an approach similar to the one described earlier, identifying the top voxels in ventral temporal regions that were involved in both tasks, irrespective of threshold, in analyses without global signal in the models. We observed near (but not direct) voxel overlap between task activation (in memory) and chromaticity (in perception). In sum, this series of additional analyses suggests a role for the left fusiform gyrus in color perception and memory, but the pattern of activation in this region is different in a number of ways from that observed in the left lingual region (i.e., correlated with global signal and less sensitive to chromaticity in memory). We will return to these differences and their possible implications below.

3. General Discussion

The results of this study demonstrate that a chromaticity effect (namely, greater activation to colored than grayscale stimuli), already documented in color perception, is paralleled in memory retrieval. This tests an important prediction of sensorimotor models of memory, which require that the same processes invoked during perception of a sensory property contribute to memory of that property. Therefore, under these models, if there are different neural patterns associated with the perception of chromatic versus achromatic stimuli, this difference should emerge during memory retrieval. Our data confirm this prediction. This experimental strategy could be applied to many other sensorimotor properties, in order to test for similarities between perception (or action) and memory processes.

Somewhat to our surprise, the chromaticity effect on memory was strongest in a very posterior region, the left lingual gyrus. This was the one region where we found direct overlap of voxels activated by both perception and memory tasks. Ventral temporal regions, in particular the left fusiform gyrus, showed robust task activation during the knowledge retrieval task (with a peak voxel coordinate that was only 3 mm from the peak voxel reported by Simmons et al., 2007), but in the left fusiform the chromaticity effect in memory was weak at best (evident only as a marginally significant difference in an analysis without global signal in the model). Based on these findings, one can speculate about possible differences between posterior (i.e., lingual) and more anterior (i.e., fusiform) regions of the visual system that could be tested in future research.

To begin, we find it noteworthy that the decision to include global signal in the model did not impact the detection of the perceptual chromaticity effect in the left lingual region but did so in the left fusiform region. Often, considerations about inclusion of the global signal in a model are based on the correlation between the global signal covariate and the task covariate (i.e., when the two are highly correlated, teasing apart the effects of each becomes nearly impossible – see Aguirre, Zarahn, & D’Esposito (1998) for further discussion). The correlation between global signal and the chromaticity covariate in the perceptual task ranged from 0.11–0.31 across subjects; but, of course, the co-linearity across predictors in the model is the same for the two regions in question here. What could differ, however, is the correlation between the global signal and the regionally-specific signal. In other words, if activation in the fusiform region is more strongly correlated with the global signal than is activation in the lingual region, then the inclusion of global signal in the model could differentially impact the fusiform findings. This was indeed the pattern we observed in these two regions (p < 0.01). The fact that global signal explains more variance in the fusiform (average t = 10.8) than in the lingual region (average t = 7.88) could explain why the inclusion of global signal has differential effects in these two regions.
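The idea that a region's signal can be more or less tied to the global signal can be illustrated with synthetic data. This is only a sketch: the text reports t values for the variance explained by global signal, whereas the example below uses a plain correlation, and the voxel counts, loadings, and function names are all hypothetical.

```python
import numpy as np


def global_signal(data):
    """Mean across voxels at each time point; data is voxels x timepoints."""
    return data.mean(axis=0)


def roi_global_correlation(data, roi_idx):
    """Correlation between an ROI's mean time series and the global signal."""
    roi = data[roi_idx].mean(axis=0)
    return np.corrcoef(roi, global_signal(data))[0, 1]


# Synthetic brain: 200 voxels, 100 time points, one shared fluctuation.
rng = np.random.default_rng(0)
shared = rng.standard_normal(100)
data = 0.5 * shared + rng.standard_normal((200, 100))
data[:10] = shared + 0.1 * rng.standard_normal((10, 100))  # strongly coupled ROI
data[10:20] = rng.standard_normal((10, 100))               # weakly coupled ROI

r_strong = roi_global_correlation(data, np.arange(0, 10))
r_weak = roi_global_correlation(data, np.arange(10, 20))
print(round(r_strong, 2), round(r_weak, 2))
```

In a region like the strongly coupled one here, a global signal covariate would absorb much of the variance that a task regressor might otherwise explain, which is the kind of differential impact the paragraph above describes.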

We find this idea interesting in light of an argument made in Beauchamp et al. (1999), namely that fusiform activation depends on attentional demands of the task. For example, previous work has demonstrated that the fusiform gyrus is activated during an attentionally demanding color perception task but not during passive viewing tasks (see Beauchamp et al., 1999), and we have shown that activation in this region during color retrieval is modulated by specificity of the color information required in the task (Hsu et al., 2011). If activation in the fusiform reflects a more general attentional process, it is conceivable that activity there would be correlated with a spatially-distributed attentional network, which could in turn lead to a higher correlation with global signal (because the global signal is the average of every voxel’s response at a given time point). More generally, this discussion highlights the potential value of comparing models with and without covariates of so-called “no interest”; in this case, global signal might in fact be of interest.

Turning to the memory data, we found a strong effect of chromaticity in the lingual gyrus (co-localized with the perceptual chromaticity effect) but a weak effect, at best, in the fusiform gyrus. We find the absence of a chromaticity effect in the fusiform particularly interesting in light of the robust activation in this region during the memory task. How might this area be contributing to the process of memory retrieval (particularly in light of its association with color perception as reviewed above)? We offer two possibilities, with a cautionary note that the interaction between chromaticity and region did not reach significance (p = 0.19), so we do not have evidence that the patterns we observed in fusiform and lingual regions are reliably different; as such, these ideas are presented as suggestions for future research aimed at distinguishing response properties of these regions.

Firstly, echoing a suggestion in Simmons et al. (2007), the posterior lingual area might respond to any colored stimuli, regardless of task (i.e., a purely sensory response) whereas the more anterior fusiform area may be involved in the process of color categorization. The luminance judgment for our color knowledge task did not explicitly require subjects to categorize color stimuli, which may explain the lack of a robust chromaticity effect in fusiform gyrus. Under this account, the two areas support different processes that are invoked to varying degrees during different perceptual and memory tasks, which leads to the testable prediction that chromaticity effects would be observed during a memory retrieval task that required more attention to color categories.

A second possibility concerns the nature of the representations of different colors in these two regions. An area could be said to represent color if the response in that area varies as a function of variation in color. One such variation would be that chromatic stimuli, as a group, elicit a different pattern of activity (in this case, a higher magnitude response) than do achromatic stimuli. Observation of a chromaticity effect requires that within-class differences (that is, variation between different colors, among the chromatic stimuli, or between different shades of gray, among the achromatic stimuli) are smaller than the between-class differences. However, there are many possible representations of color that would not lead to a chromaticity effect. The fusiform region could be a “color area”, in that it codes the color of stimuli in both perception and memory, but one in which the difference in the representation of, say, purple and green stimuli is just as big as the difference between purple and gray stimuli. There are now methods for analyzing fMRI data that are well-suited for characterizing pattern similarity that could fruitfully be applied to the study of color representation (e.g., Weber, Thompson-Schill, Osherson, Haxby, & Parson, 2009; O’Toole, Jiang, Abdi, & Haxby, 2005; Haushofer, Livingstone, & Kanwisher, 2008). For example, we would predict that when discriminating different categories of chromatic stimuli such as purple from green (in perception or memory), classification performance should be worse in early visual areas (e.g., lingual gyrus) than it is in downstream visual areas (e.g., fusiform gyrus).
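The contrast between within-class and between-class differences can be made concrete with toy response patterns. Everything in this sketch is hypothetical: the two "regions", the pattern values, and the function names are invented to illustrate the argument, not taken from the data.

```python
import numpy as np


def within_class(patterns):
    """Mean Euclidean distance between distinct patterns of one class."""
    d = np.linalg.norm(patterns[:, None, :] - patterns[None, :, :], axis=2)
    n = len(patterns)
    return d.sum() / (n * (n - 1))  # diagonal zeros are excluded from the mean


def between_class(a, b):
    """Mean Euclidean distance between every pattern in a and every one in b."""
    return np.linalg.norm(a[:, None, :] - b[None, :, :], axis=2).mean()


# Toy region A: purple and green are close to each other, far from gray.
chromatic_a = np.array([[1.0, 0.1], [1.0, -0.1]])
gray_a = np.array([[0.0, 0.0]])

# Toy region B: purple, green, and gray are all equally far apart.
chromatic_b = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
gray_b = np.array([[0.0, 0.0, 1.0]])

print(within_class(chromatic_a), between_class(chromatic_a, gray_a))
print(within_class(chromatic_b), between_class(chromatic_b, gray_b))
```

Region A would show a chromaticity effect because chromatic patterns cluster apart from gray, whereas region B codes color just as distinctly but gives no advantage to the chromatic versus achromatic split.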

Finally, two aspects of our stimulus set and task choice warrant some further discussion. First, because we used a luminance judgment task, the observed chromaticity effect might be attributed to additional processing in the chromatic condition. That is, to judge luminance, subjects may have needed to “convert” chromatic items to grayscale prior to responding. By contrast, this step is unnecessary for achromatic items. Thus, greater activity in the chromatic condition may be the result of a task-dependent process that contributed to differential activation between chromatic and achromatic knowledge retrieval. Second, chromatic items might sample a larger area of color space than achromatic items. That is, the colors of achromatic items were always either white, gray, or black. Chromatic items, by contrast, were sampled from any colors except for white, gray, or black. Further, to better match conditions in terms of RT, we intentionally constructed some achromatic pairs to be closer in terms of perceived luminance (e.g., a luminance judgment for CHALK – KNIFE is more difficult than for CHALK – PIANO), and we constructed some chromatic pairs to be further apart in terms of perceived luminance (e.g., a luminance judgment for SCHOOL BUS – FUDGE is easier than for SCHOOL BUS – BASKETBALL). As a result, no trials in either condition used two items from the same color family (e.g., two red items). Thus, our reported effects may be the result of a larger color space sampling in the chromatic condition, relative to the achromatic condition. A future study could control for color space variance in the two conditions by asking for luminance judgments on two red items (e.g., STRAWBERRY – BRICK) versus two gray items (e.g., CONCRETE – QUARTER).

In summary, our study is the first to examine whether a well-established difference in the perception of chromatic and achromatic stimuli is paralleled during memory retrieval, in the absence of any visual information. These results support sensorimotor models of semantic memory by demonstrating commonalities between color perception and color knowledge in early areas of the cortical visual system. More importantly, our findings show that there may be multiple areas of overlap between perception and memory, but the functions of those areas may be distinct. The chromaticity effect described here may tap into some of these functional differences, and future research may elucidate some of these distinctions further.

Highlights

- We use luminance judgments to tap color perception and color memory processes.

- Using fMRI, we examined and compared brain activity during both color tasks.

- Chromaticity effects for both perception and memory are found in the lingual gyrus.

- Ventral visual areas are modulated by stimulus chromaticity in perception and memory.

- Our results support sensorimotor theories but suggest important distinctions.

Acknowledgements

This research was supported by the National Institutes of Health (R01-MH070850 to S. T.-S.). Further support came from the Center for Functional Neuroimaging at the University of Pennsylvania (P30 NS045839, R01 DA14418, and R24 HD050836). The authors wish to thank members of the Thompson-Schill lab, members of the Cognitive Tea group, David Brainard for helpful discussion, W. Kyle Simmons for use of the perceptual stimuli, and two anonymous reviewers for their helpful comments on an earlier version of this manuscript.

References

- Aguirre GK, Zarahn E, D’Esposito M. The inferential impact of global signal covariates in functional neuroimaging analyses. NeuroImage. 1998;8:302–306. doi: 10.1006/nimg.1998.0367.

- Allport D. Distributed memory, modular subsystems and dysphasia. In: Current Perspectives in Dysphasia. Edinburgh: Churchill Livingstone; 1985. pp. 207–244.

- Barsalou LW. Perceptual symbol systems. The Behavioral and Brain Sciences. 1999;22(4):577–609; discussion 610–660. doi: 10.1017/s0140525x99002149.

- Beauchamp MS, Haxby JV, Jennings JE, DeYoe EA. An fMRI version of the Farnsworth-Munsell 100-Hue test reveals multiple color-selective areas in human ventral occipitotemporal cortex. Cerebral Cortex. 1999;9(3):257–263. doi: 10.1093/cercor/9.3.257.

- Chao LL, Martin A. Cortical regions associated with perceiving, naming, and knowing about colors. Journal of Cognitive Neuroscience. 1999;11(1):25–35. doi: 10.1162/089892999563229.

- Haushofer J, Livingstone MS, Kanwisher N. Multivariate patterns in object-selective cortex dissociate perceptual and physical shape similarity. PLoS Biology. 2008;6(7):1459–1467. doi: 10.1371/journal.pbio.0060187.

- Howard RJ, ffytche DH, Barnes J, McKeefry D, Ha Y, Woodruff PW, Bullmore ET, et al. The functional anatomy of imagining and perceiving colour. Neuroreport. 1998;9(6):1019–1023. doi: 10.1097/00001756-199804200-00012.

- Hsu NS, Kraemer DJM, Oliver RT, Schlichting ML, Thompson-Schill SL. Color, context, and cognitive style: Variations in color knowledge retrieval as a function of task and subject variables. Journal of Cognitive Neuroscience. 2011;23(9):2544–2557. doi: 10.1162/jocn.2011.21619.

- Martin A, Haxby JV, Lalonde FM, Wiggs CL, Ungerleider LG. Discrete cortical regions associated with knowledge of color and knowledge of action. Science. 1995;270(5233):102–105. doi: 10.1126/science.270.5233.102.

- Martin A. The representation of object concepts in the brain. Annual Review of Psychology. 2007;58:25–45. doi: 10.1146/annurev.psych.57.102904.190143.

- Nichols TE, Holmes AP. Nonparametric permutation tests for functional neuroimaging: a primer with examples. Human Brain Mapping. 2002;15(1):1–25. doi: 10.1002/hbm.1058.

- Ogawa S, Menon RS, Tank DW, Kim SG, Merkle H, Ellermann JM, Ugurbil K. Functional brain mapping by blood oxygenation level-dependent contrast magnetic resonance imaging. A comparison of signal characteristics with a biophysical model. Biophysical Journal. 1993;64(3):803–812. doi: 10.1016/S0006-3495(93)81441-3.

- O’Toole AJ, Jiang F, Abdi H, Haxby JV. Partially distributed representations of objects and faces in ventral temporal cortex. Journal of Cognitive Neuroscience. 2005;17(4):580–590. doi: 10.1162/0898929053467550.

- Simmons W, Ramjee V, Beauchamp M, McRae K, Martin A, Barsalou L. A common neural substrate for perceiving and knowing about color. Neuropsychologia. 2007;45(12):2802–2810. doi: 10.1016/j.neuropsychologia.2007.05.002.

- Thompson-Schill SL. Neuroimaging studies of semantic memory: inferring "how" from "where". Neuropsychologia. 2003;41(3):280–292. doi: 10.1016/s0028-3932(02)00161-6.

- Warrington EK, McCarthy RA. Categories of knowledge. Further fractionations and an attempted integration. Brain: A Journal of Neurology. 1987;110(Pt 5):1273–1296. doi: 10.1093/brain/110.5.1273.

- Weber MJ, Thompson-Schill SL, Osherson D, Haxby J, Parsons L. Predicting judged similarity of natural categories from their neural representations. Neuropsychologia. 2009;47:859–868. doi: 10.1016/j.neuropsychologia.2008.12.029.

- Worsley KJ, Friston KJ. Analysis of fMRI time-series revisited--again. NeuroImage. 1995;2(3):173–181. doi: 10.1006/nimg.1995.1023.

- Zarahn E, Aguirre GK, D’Esposito M. Empirical analyses of BOLD fMRI statistics. I. Spatially unsmoothed data collected under null-hypothesis conditions. NeuroImage. 1997;5(3):179–197. doi: 10.1006/nimg.1997.0263.
