Abstract

PURPOSE

This study was a systematic review with a quantitative synthesis of the literature examining the overall effect size of practice facilitation and possible moderating factors. The primary outcome was the change in evidence-based practice behavior calculated as a standardized mean difference.

METHODS

In this systematic review, we searched 4 electronic databases and the reference lists of published literature reviews to find practice facilitation studies that identified evidence-based guideline implementation within primary care practices as the outcome. We included randomized and nonrandomized controlled trials and prospective cohort studies published in English-language peer-reviewed journals from 1966 to December 2010. Each study was reviewed and assessed for quality; data were abstracted, and standardized mean difference estimates and 95% confidence intervals (CIs) were calculated using a random-effects model. Publication bias, influence, subgroup, and meta-regression analyses were also conducted.

RESULTS

Twenty-three studies contributed to the analysis for a total of 1,398 participating practices: 697 practice facilitation intervention and 701 control group practices. The degree of variability between studies was consistent with what would be expected to occur by chance alone (I² = 20%). An overall effect size of 0.56 (95% CI, 0.43–0.68) favored practice facilitation (z = 8.76; P <.001), and publication bias was evident. Primary care practices are 2.76 (95% CI, 2.18–3.43) times more likely to adopt evidence-based guidelines through practice facilitation. Meta-regression analysis indicated that tailoring (P = .05), the intensity of the intervention (P = .03), and the number of intervention practices per facilitator (P = .004) modified evidence-based guideline adoption.

CONCLUSION

Practice facilitation has a moderately robust effect on evidence-based guideline adoption within primary care. Implementation fidelity factors, such as tailoring, the number of practices per facilitator, and the intensity of the intervention, have important resource implications.

Keywords: Practice facilitation, outreach facilitation, primary care, implementation research, evidence-based guidelines, meta-analysis, systematic review, knowledge translation, behavior change

INTRODUCTION

There are many challenges to the adoption of evidence-based guidelines into the clinical practice of primary care physicians,1–7 and a consensus has emerged from the literature that having knowledge is rarely sufficient to change practice behavior.8,9 Didactic education or passive dissemination strategies are ineffective, whereas interactive education, reminder systems, and multifaceted interventions have a greater effect.10–14 Outreach or practice facilitation is a multifaceted approach that involves skilled individuals who enable others, through a range of intervention components and approaches, to address the challenges in implementing evidence-based care guidelines within the primary care setting.15–21 Nagykaldi et al22 in 2005 conducted a systematic review of practice facilitation and found through a narrative summary of effects that practice facilitation increased preventive service delivery rates, assisted with chronic disease management, and implemented system-level improvements within practice settings.

In this meta-analysis, we examined the overall effect size of practice facilitation using a quantitative synthesis of the literature. We included in the analysis studies that described the intervention as outreach or practice facilitation for the implementation of evidence-based practice guidelines within primary care practice settings. The quantitative analyses were undertaken to describe the range and distribution of effects across studies, to explore probable explanations of the variation, and to demonstrate results quantitatively compared with the descriptive systematic reviews that have been done to date on practice facilitation.16,22

METHODS

Study Design and Primary Outcome

This study was a systematic review with a quantitative synthesis of the literature examining the overall effect size of practice facilitation and possible moderating factors. The primary outcome was the change in evidence-based practice behavior calculated as a standardized mean difference. We used the guidelines outlined in the PRISMA statement for reporting systematic reviews and meta-analyses23 and applied the methods of the Cochrane Collaboration.24

Inclusion Criteria and Selection of Studies

The literature review focused solely on controlled trials or evaluations of facilitation within health care, where an explicit facilitator role was adopted to promote changes in clinical practice. The definition provided by Kitson and colleagues was used to determine study eligibility—a facilitator is an individual carrying out a specific role, either internal or external to the practice, aimed at helping to get evidence-based guidelines into practice.16,21,25 We built on the review of 25 studies conducted by Nagykaldi et al from 1966 to 2004 by adding the following inclusion criteria for study selection: English language only peer-reviewed journals from December 2004 to December 2010, an intervention study using practice facilitation to improve the adoption of evidence-based practice, and a controlled trial (randomized or not) or a pre- and postintervention cohort study.

One author (N.B.B.) conducted a systematic literature search on February 1, 2011, using MEDLINE and the Thomson Scientific Web of Science database, which contains the Science Citation Index, the Social Sciences Citation Index, and the Arts and Humanities Citation Index. The following key word search was used:

(primary care or family medicine or general practice or family physician or practice-based research or audit or prevent* or quality improvement or practice enhancement or practice-based education or evidence based or office system) and (facilitator or facilitation) and (controlled trial or clinical trial or evaluation)

The references from the published systematic reviews of practice facilitation, the references from retrieved articles, and other secondary sources that met the inclusion criteria were also consulted to supplement articles found through the initial literature search.

Initial screening of the identified articles was based on their titles and abstracts and conducted by one author (N.B.B.). Two authors (N.B.B., C.L.) and an assistant reviewed in more detail studies that could not be excluded based on the abstract alone to determine whether they met the inclusion criteria.

Quality Assessment

Given that no critical appraisal reference standard tool exists,26,27 we used a modified version of the Physiotherapy Evidence-Based Database (PEDro) method, which consisted of 12 criteria, each receiving either a yes (reported) or no (not reported) score, for assessing the risk of bias of practice facilitation studies. Compared with the Jadad assessment criteria,28 PEDro has been shown to provide a more comprehensive picture of methodological quality for studies in which double-blinding is not possible. We added 2 criteria to the scale, an adequate intervention description and adjustment for intraclass correlation (ICC), because unit of analysis errors have been identified as a methodological problem in the implementation research literature.29 The protocol covered the study characteristics considered key by the Cochrane Collaboration: methods, participants, interventions, outcome measures, and results.24

Two authors (N.B.B., C.L.) and an assistant independently rated all included studies (n = 44) using the same protocol, and discrepancies were resolved by consensus with the inclusion of a fourth rater (W.H.). Interrater reliability among the 3 raters was assessed to be very good, Fleiss’ κ = 0.78 (95% CI, 0.73–0.84). With a maximum possible score of 12, we considered those of the 44 studies that had a total quality score of 6 (the average score) or greater to be of high quality (mean = 5.57; 95% CI, 4.79–6.35).30
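The interrater statistic reported above can be illustrated with a minimal sketch of Fleiss' κ for several raters assigning yes/no scores. The data layout (one list of rater labels per rated item) and the function name are assumptions made for illustration; the article does not describe how the ratings were tabulated or which software computed κ.

```python
from collections import Counter

def fleiss_kappa(ratings, categories=("yes", "no")):
    """Fleiss' kappa for a list of items, each a list with one label per rater.

    Assumes every item was scored by the same number of raters and that
    the raters used more than one category overall (otherwise the chance
    agreement equals 1 and kappa is undefined).
    """
    n_items = len(ratings)
    n_raters = len(ratings[0])
    # counts[i][j] = number of raters who assigned item i to category j
    counts = [[Counter(item)[c] for c in categories] for item in ratings]
    # Observed agreement for each item, then averaged over items
    p_items = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    p_bar = sum(p_items) / n_items
    # Chance agreement from the overall proportion of each category
    totals = [sum(row[j] for row in counts) for j in range(len(categories))]
    p_e = sum((t / (n_items * n_raters)) ** 2 for t in totals)
    return (p_bar - p_e) / (1 - p_e)

# Hypothetical scores from 3 raters on 4 quality criteria
example = [
    ["yes", "yes", "yes"],
    ["no", "no", "no"],
    ["yes", "yes", "no"],
    ["no", "no", "no"],
]
print(round(fleiss_kappa(example), 2))
```

The example ratings are invented; they only show the expected input shape.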

Data Analysis and Effect Size Determination

Selected study measures, such as participation rates and attributes of participating practices, were summarized across all studies descriptively using measures of central tendency for continuous data and frequencies for categorical and binomial data. For each study, the change in the primary outcome measure was ascertained as the postintervention difference between the practice facilitation and comparison groups or, for prospective cohort studies, as the difference from baseline. All statistics were computed using SPSS 18.0 and Comprehensive Meta-Analysis software.31,32

Effect Sizes

The standardized mean difference (SMD) for the primary outcome (as identified by the authors of the study) of selected high methodological performance studies was computed using Hedges’ (adjusted) g.24 Cohen’s categories were used to evaluate the magnitude of the effect size, calculated by the standardized mean difference, with g <0.5 as a small effect size; g ≥0.5 and ≤0.8, medium effect size; and g >0.8, large effect size. When the primary outcome was unspecified or there was more than 1, the median outcome was selected to calculate the standardized mean difference.33 Methods for determining standard deviations from confidence intervals and P values were used when standard deviations were not provided.24 For studies in which the unit of analysis and the unit of randomization did not agree,34–36 we verified that the intraclass correlation had been taken into account, to avoid including potentially false-positive results.37–39 For studies that provided results for primary outcomes only as odds ratios, the formula proposed by Chinn40 was used to convert the odds ratio to a standardized mean difference and determine the standard error.
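The effect size steps described above can be sketched as follows. This is a minimal illustration using the standard textbook formulas for Hedges' g, the large-sample recovery of a standard deviation from a 95% confidence interval, and Chinn's odds ratio conversion; the function names and example inputs are hypothetical, and the authors' software may differ in detail.

```python
import math

def hedges_g(mean_t, mean_c, sd_t, sd_c, n_t, n_c):
    """Return (g, SE of g) for two independent groups."""
    df = n_t + n_c - 2
    sd_pooled = math.sqrt(((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2) / df)
    d = (mean_t - mean_c) / sd_pooled
    # Small-sample correction factor that turns Cohen's d into Hedges' g
    j = 1.0 - 3.0 / (4.0 * df - 1.0)
    se_d = math.sqrt((n_t + n_c) / (n_t * n_c) + d ** 2 / (2.0 * (n_t + n_c)))
    return j * d, j * se_d

def sd_from_ci(lower, upper, n):
    """Approximate SD of a group mean from its 95% CI (large-sample case)."""
    return math.sqrt(n) * (upper - lower) / 3.92

def or_to_smd(odds_ratio, se_log_or):
    """Chinn's conversion: ln(OR) and its SE are scaled by sqrt(3)/pi."""
    factor = math.sqrt(3.0) / math.pi
    return math.log(odds_ratio) * factor, se_log_or * factor

# Hypothetical inputs, for illustration only
g, se_g = hedges_g(10.0, 8.0, 2.0, 2.0, 20, 20)
smd, se_smd = or_to_smd(2.0, 0.30)
print(round(g, 3), round(se_g, 3), round(smd, 3), round(se_smd, 3))
```

The confidence interval shortcut assumes a large sample; for small groups the 3.92 divisor would be replaced by a value based on the t distribution.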

The DerSimonian and Laird41 random effects meta-analysis was conducted to determine the overall effect size of practice facilitation interventions and the presence of statistical heterogeneity. Ninety-five percent confidence intervals were calculated for effect sizes based on a generic inverse variance outcome model. The z statistic was used to test for significance of the overall effect.
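A minimal sketch of the DerSimonian and Laird procedure with inverse variance weights is given below. It assumes each study contributes an effect size and a standard error; the function name and the returned dictionary keys are illustrative and are not taken from the software the authors used.

```python
import math

def dersimonian_laird(effects, std_errors):
    """Random-effects pooling with the DerSimonian-Laird tau^2 estimator."""
    w = [1.0 / se ** 2 for se in std_errors]           # fixed-effect weights
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sw
    # Cochran's Q: weighted squared deviations from the fixed-effect mean
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = len(effects) - 1
    c = sw - sum(wi ** 2 for wi in w) / sw
    # Between-study variance, truncated at zero
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    w_star = [1.0 / (se ** 2 + tau2) for se in std_errors]
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se_pooled = math.sqrt(1.0 / sum(w_star))
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0
    return {
        "pooled": pooled,
        "se": se_pooled,
        "ci_low": pooled - 1.96 * se_pooled,
        "ci_high": pooled + 1.96 * se_pooled,
        "z": pooled / se_pooled,
        "q": q,
        "df": df,
        "tau2": tau2,
        "i2": i2,
    }

# Two hypothetical studies with equal precision
result = dersimonian_laird([0.2, 0.6], [0.1, 0.1])
print(result["pooled"], result["tau2"], result["i2"])
```

Applying the same function to the SMD and SE columns of Table 1 is one way to check the pooled estimate, although rounding in the published values means the result may not match the reported figures exactly.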

Publication Bias and Heterogeneity

The Cochran’s Q statistic and the Higgins’ I² statistic24 were used to determine statistical heterogeneity between studies. A low P value (≤.10) for the Q statistic was considered evidence of heterogeneity of treatment effects. Forest plots were generated to display graphically both the study-specific effect sizes (along with associated 95% confidence intervals) and the pooled effect estimate. A funnel plot was generated to show any evidence of publication bias42,43 along with the 2-tailed Begg-Mazumdar rank correlation test44 and the Egger regression asymmetry test.45 We also assessed the presence of significant heterogeneity between studies that undertook blinding, allocation concealment, and intention-to-treat and those that did not.46
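The idea behind the Egger regression asymmetry test can be sketched as an ordinary least squares regression of each study's standardized effect (effect divided by its standard error) on its precision (1 divided by the standard error). An intercept far from zero suggests funnel plot asymmetry. The sketch below returns only the intercept and slope; the significance test against a t distribution is omitted, and the function name and example data are hypothetical.

```python
def egger_regression(effects, std_errors):
    """Return (intercept, slope) of standardized effect regressed on precision."""
    precision = [1.0 / se for se in std_errors]
    standardized = [y / se for y, se in zip(effects, std_errors)]
    n = len(effects)
    mean_x = sum(precision) / n
    mean_y = sum(standardized) / n
    sxx = sum((x - mean_x) ** 2 for x in precision)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(precision, standardized))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope

# Hypothetical data in which the smaller (less precise) studies show larger effects
intercept, slope = egger_regression([0.2, 0.5, 0.9], [0.1, 0.2, 0.4])
print(intercept > 0)
```

When every study estimates the same effect, the points fall on a line through the origin and the intercept is zero, which is the symmetric case the test compares against.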

Influence, Subgroup and Meta-Regression Analysis

To investigate the influence of each individual study on the overall effect size estimate, we conducted an influence analysis by recomputing the estimate with 1 study omitted in turn. Finally, we conducted a subgroup analysis using a random-effects model, generated scatter plots, and tested the significance of regression equations, using the maximum likelihood method for mixed effects and the calculation of the Q statistic, to determine whether there were any potential effect size modifiers from year of the study, the number of practices per facilitator, duration of the intervention, tailoring of the intervention, and intensity of the intervention.
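The leave-one-out influence analysis can be sketched as a loop that drops each study in turn and pools the rest. The pooling helper below is a compact DerSimonian-Laird estimator written for this illustration; the function names and the example studies are hypothetical.

```python
import math

def pool_random_effects(effects, std_errors):
    """DerSimonian-Laird pooled estimate and its standard error."""
    w = [1.0 / se ** 2 for se in std_errors]
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sw
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - (len(effects) - 1)) / c) if c > 0 else 0.0
    w_star = [1.0 / (se ** 2 + tau2) for se in std_errors]
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    return pooled, math.sqrt(1.0 / sum(w_star))

def leave_one_out(labels, effects, std_errors):
    """Re-pool the remaining studies after omitting each study in turn."""
    results = []
    for i, label in enumerate(labels):
        rest_effects = effects[:i] + effects[i + 1:]
        rest_ses = std_errors[:i] + std_errors[i + 1:]
        pooled, se = pool_random_effects(rest_effects, rest_ses)
        results.append((label, pooled, pooled - 1.96 * se, pooled + 1.96 * se))
    return results

# Hypothetical studies, for illustration only
for label, est, low, high in leave_one_out(
    ["Study A", "Study B", "Study C"], [0.3, 0.6, 0.9], [0.2, 0.2, 0.3]
):
    print(f"omit {label}: {est:.2f} ({low:.2f} to {high:.2f})")
```

A study is influential when omitting it moves the pooled estimate or its interval noticeably away from the full-sample result.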

RESULTS

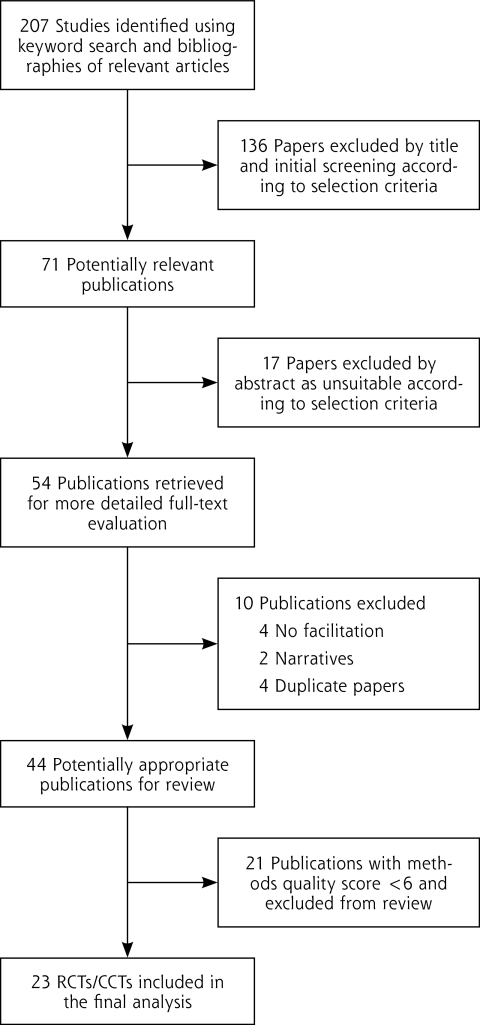

Figure 1 is a flow diagram of the selection of relevant studies. The initial literature search (February 1, 2011) identified 207 articles published from 1980 to 2010, of which 46 articles were determined to be relevant and were added to the 25 outcome studies identified by Nagykaldi et al,22 for a total of 71 articles; 54 were retrieved for closer inspection. Of these, 44 articles were judged to meet the inclusion criteria and were included in the review.17,20,34–36,47–85 The other 10 articles were excluded because the intervention under study did not include an individual with the explicit role of facilitator (n = 4);86–89 the study had already been captured in the facilitation literature with no new information, had a shorter follow-up period, or measured a different outcome on the same cohort (n = 4);90–93 or the article was an editorial or written in the narrative (n = 2).94,95 Where research teams produced several outcome-based studies from the same intervention, all published studies were included when the populations and outcomes being measured were different between studies.35,36,76 Finally, 21 studies were excluded because of limited validity and a quality rating of less than 6, leaving 23 studies with greater validity for the final analysis (Table 1). Supplemental Table 1, available at http://www.annfammed.org/content/10/1/63/suppl/DC1, provides the reasons for exclusion. Seventy-six percent of the 21 studies excluded were nonrandomized trials, case studies, or before-after designs with no control group. Further, 91% did not report conducting an intent-to-treat analysis, 95% did not report blinding outcome assessments, and 100% did not report allocation concealment. Because of unmatched groups at baseline, 7 of the 9 controlled clinical trials and randomized controlled trials were excluded.

Figure 1.

Flowchart of identification of relevant studies.

Table 1.

Research Design Characteristics of Studies with High Methodological Performance Scores (N = 23)

| Author, Year | Score^a | Trial Characteristics | Outcome Measure | Months Follow-up (% Retention) | Effect Size: SMD (SE), 95% CI |
|---|---|---|---|---|---|
| Kottke et al,63 1992 | 6 | Design: CCT; Allocation concealed: N; Blinded^b: Y; Intent to treat: Y | Mean percentage of patients advised to quit | 19 (83) | 1.01 (0.52), 0.00 to 2.02^c |
| McBride et al,67 2000 | 6 | Design: RCT; Allocation concealed: N; Blinded^b: N; Intent to treat: N | Percentage of records with CVD screening | 18 (100) | 0.82 (0.46), –0.08 to 1.72 |
| Stange et al,48 2003 | 6 | Design: RCT; Allocation concealed: N; Blinded^b: N; Intent to treat: N | Mean rate of preventive service | 24 (NR) | 0.59 (0.23), 0.13 to 1.05^c |
| Lobo et al,57 2004 | 6 | Design: RCT; Allocation concealed: Y; Blinded^b: N; Intent to treat: N | Mean health-related quality of life | 21 (57) | 0.44 (0.18), 0.09 to 0.79^c |
| Roetzhiem et al,34 2005 | 6 | Design: C-RCT; Allocation concealed: N; Blinded^b: Y; Intent to treat: N | Mean number of CA-screening tests | 24 (100) | 0.84 (0.29), 0.27 to 1.41^c |
| Hogg et al,78 2008 | 6 | Design: CCT; Allocation concealed: N; Blinded^b: N; Intent to treat: Y | Mean preventive performance index | 6 (87) | 0.73 (0.29), 0.16 to 1.30^c |
| Aspy et al,82 2008 | 6 | Design: CCT; Allocation concealed: N; Blinded^b: Y; Intent to treat: N | Percent given physical inactivity brief intervention | 18 (89) | 1.12 (0.36), 0.42 to 1.82^c |
| Jaén et al,85 2010 | 6 | Design: RCT; Allocation concealed: N; Blinded^b: Y; Intent to treat: N | Mean prevention service score | 26 (86) | 0.04 (0.37), –0.69 to 0.77 |
| Cockburn et al,70 1992 | 7 | Design: RCT; Allocation concealed: N; Blinded^b: N; Intent to treat: N | Mean number of cessation cards used | 3 (79) | 0.24 (0.15), –0.06 to 0.54 |
| Modell et al,55 1998 | 7 | Design: RCT; Allocation concealed: N; Blinded^b: N; Intent to treat: N | Median number of hemoglobin tests | 12 (100) | 0.32 (0.40), –0.45 to 1.09 |
| Engels et al,80 2006 | 7 | Design: RCT; Allocation concealed: Y; Blinded^b: N; Intent to treat: Y | Mean number of projects initiated | 12 (92) | 1.04 (0.32), 0.41 to 1.67^c |
| Aspy et al,83 2008 | 7 | Design: RCT; Allocation concealed: N; Blinded^b: Y; Intent to treat: N | Mean percent with MMG | 9 (100) | 1.31 (0.57), 0.20 to 2.42^c |
| Deitrich et al,59 1992 | 8 | Design: RCT; Allocation concealed: N; Blinded^b: Y; Intent to treat: N | Mean rate of prevention service | 12 (96) | 0.59 (0.29), 0.02 to 1.16^c |
| Lobo et al,57 2002 | 8 | Design: RCT; Allocation concealed: Y; Blinded^b: N; Intent to treat: Y | Mean number of adherence items | 21 (100) | 0.66 (0.19), 0.30 to 1.02^c |
| Bryce et al,47 1995 | 9 | Design: RCT; Allocation concealed: Y; Blinded^b: Y; Intent to treat: Y | Percentage of consults initiated for asthma | 12 (93.3) | 0.62 (0.31), 0.02 to 1.22^c |
| Kinsinger et al,56 1998 | 9 | Design: RCT; Allocation concealed: Y; Blinded^b: Y; Intent to treat: N | Percentage of patients with CBE and MMG | 18 (94) | 0.47 (0.27), –0.05 to 0.99 |
| Solberg et al,69 1998 | 9 | Design: RCT; Allocation concealed: Y; Blinded^b: N; Intent to treat: Y | Mean number of preventive systems processes | 22 (100) | 1.08 (0.32), 0.45 to 1.71^c |
| Lemelin et al,17 2001 | 9 | Design: RCT; Allocation concealed: Y; Blinded^b: Y; Intent to treat: N | Mean preventive performance index | 18 (98) | 0.98 (0.32), 0.36 to 1.60^c |
| Frijling et al,35 2002 | 9 | Design: C-RCT; Allocation concealed: Y; Blinded^b: Y; Intent to treat: Y | Percentage giving eye examination | 21 (95) | 0.26 (0.18), –0.09 to 0.61 |
| Frijling et al,65 2003 | 9 | Design: C-RCT; Allocation concealed: Y; Blinded^b: Y; Intent to treat: Y | Percentage assessing hypertension risk | 21 (95) | 0.39 (0.18), 0.04 to 0.74^c |
| Margolis et al,58 2004 | 10 | Design: RCT; Allocation concealed: Y; Blinded^b: Y; Intent to treat: Y | Mean percentage given preventive service | 30 (100) | 0.60 (0.31), 0.00 to 1.20^c |
| Mold et al,81 2008 | 10 | Design: RCT; Allocation concealed: Y; Blinded^b: Y; Intent to treat: Y | Percentage implementing processes | 6 (100) | 0.94 (0.53), –0.10 to 1.98 |
| Hogg et al,79 2008 | 12 | Design: RCT; Allocation concealed: Y; Blinded^b: Y; Intent to treat: Y | Mean preventive performance index | 13 (100) | 0.11 (0.27), –0.42 to 0.64 |

CA = cancer; CBE = clinical breast examination; CCT = controlled clinical trial; C-RCT = cluster randomized-controlled trial; CVD = cardiovascular disease; MMG = mammography; N = no (not reported); NR = not reported; RCT = randomized controlled trial; SE = standard error; SMD = standardized mean difference; Y = yes (reported).

^a Scored on a scale from 0 to 12, in which the higher the score, the higher the quality of the study methods.

^b Single- or double-blind study.

^c P <.05; z statistic.

Characteristics of Selected Studies

The 23 controlled clinical trials and randomized controlled trials included a total of 1,398 participating practices: 697 randomized or allocated to the practice facilitation intervention, and 701 to a control group. The mean number of primary care practices participating per study was 59.5 (95% CI, 42.1–77.0). Table 1 displays the research design characteristics of the 23 trials included in the analysis, along with the effect size for each study rank-ordered by methodological quality. The selected trials were reported from 1992 through 2010, spanning 18 years. Of the 20 studies that were randomized controlled trials, 3 were cluster randomized-controlled trials in which clusters of patients were randomized rather than the practices. Eleven studies reported having adhered to the intention-to-treat principle, 12 reported allocation concealment, and 14 reported blinding of assessment. Eighty-three percent of studies had a form of preventive service as the primary outcome measure (Table 1), and of those studies, 13 used the mean performance and 6 used a percentage performance.

Supplemental Table 2, available at http://www.annfammed.org/content/10/1/63/suppl/DC1, provides an overview of intervention characteristics for the 23 high-quality studies, including targeted behavior, facilitator qualifications, intervention components, and the tools used. Forty-four percent of studies described the qualifications of the facilitator as a registered nurse or master’s-educated person with training. The tools used varied; however, audit with feedback was a component of each intervention study, 91% used interactive consensus building and goal setting, and 39% used a reminder system. Seventy-four percent of the studies reported that the practice facilitator tailored the intervention to the needs of the practice.

Intervention Effects

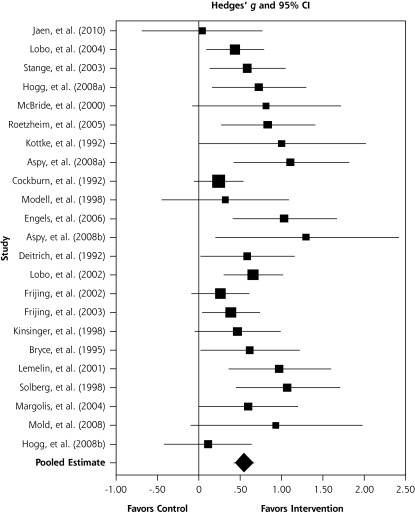

Figure 2 is a forest plot showing that most of the studies have effect size point estimates that favor the intervention condition; the test for an overall effect across the 23 included studies is significant (z = 8.76; P <.001), with an overall moderate effect size point estimate of 0.56 (95% CI, 0.43–0.68) based on a random-effects model. Converting the SMD of 0.56 to an odds ratio (OR)24 results in an OR = 2.76 (95% CI, 2.18–3.43). Although some statistical heterogeneity is expected given practice facilitation studies with differing intervention components, outcomes, and measures, the final random-effects model was homogeneous, with the test for heterogeneity being nonsignificant, χ² (1, n = 22) = 27.55; P = .19. To further understand the percentage of variability in effects caused by the heterogeneity, we computed an I² statistic,24 which showed that 20% of the variation among the studies could not be explained by chance.

Figure 2.

Effect of practice facilitation vs control in random-effects meta-analysis sorted from low to high methodological performance and effect size (N = 23).
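The reported odds ratio and I² value can be checked from the numbers given in the text. The sketch below assumes the usual logistic conversion (ln OR = SMD × π/√3) and the Higgins formula I² = (Q − df)/Q, with Q = 27.55 on 22 degrees of freedom; the function names are illustrative.

```python
import math

def smd_to_or(smd):
    """Convert a standardized mean difference to an odds ratio."""
    return math.exp(smd * math.pi / math.sqrt(3.0))

def i_squared(q, df):
    """Percentage of variability beyond chance, truncated at zero."""
    return max(0.0, (q - df) / q) * 100.0

# Values reported in the Results
pooled_or = smd_to_or(0.56)
or_low, or_high = smd_to_or(0.43), smd_to_or(0.68)
print(f"OR = {pooled_or:.2f} ({or_low:.2f} to {or_high:.2f})")
print(f"I2 = {i_squared(27.55, 22):.0f}%")
```

Under these assumptions the conversion reproduces the reported OR of 2.76 (2.18 to 3.43) and the I² of 20%.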

We then conducted an influence analysis to test the sensitivity of the overall 0.56 effect size to any 1 of the 23 studies. The observed impact of any single study on the overall point estimate was negligible; the effect varied from as high as 0.58 (95% CI, 0.46–0.71) with the Cockburn et al70 study removed to as low as 0.53 (95% CI, 0.41–0.65) with the study by Solberg et al69 removed.

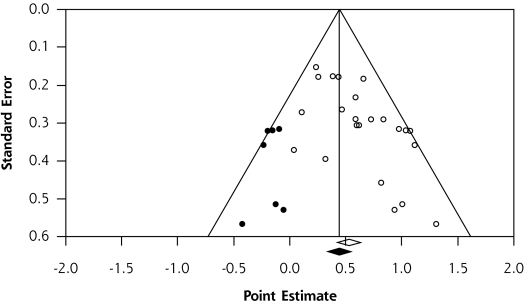

Figure 3 is a publication bias funnel plot of practice facilitation effect size as represented by the standardized mean difference (x-axis) and the standard error (y-axis) for each of the 23 practice facilitation studies. The funnel plot provides evidence of publication bias, in that there were fewer small studies with small effects included in the meta-analysis, as displayed by the imputed results. Publication bias was confirmed by the Begg and Mazumdar44 test (P = .003) and the Egger et al45 test (P = .003).

Figure 3.

Publication bias funnel plot with observed (N = 23) and imputed studies.

There was no association between the methodological characteristics of studies as determined by the methodologic performance score and effect size (β = –0.04; P = .28). Further, Jüni et al46 have shown that 3 key domains have been associated with biased effect size estimates in meta-analysis. Effect sizes for the 23 included studies did not differ significantly in terms of allocation concealment (P = .77), blinding of outcome assessments (P = .80), and the handling of attrition through intent to treat (P = .85).

Practice Facilitation Effect Size Moderators

There was no significant difference between studies published in or after 2001 when compared with studies published before 2001 (P = .69), and the relationship between duration of the intervention and effect size was not significant (P = .94). Those practice facilitation studies that reported an intervention tailored to the context and needs of the practice had a significantly larger overall effect size of 0.62 (95% CI, 0.48–0.75; P = .05) compared with studies34,35,47,55,70 that did not report tailoring (SMD = 0.37; 95% CI, 0.16–0.58).

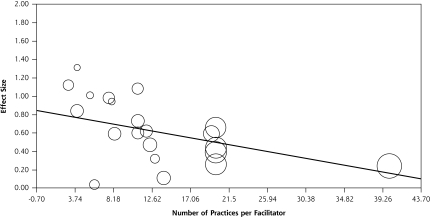

The scatter plot in Figure 4 depicts the relationship between the ratio of practices per facilitator (Supplemental Table 2) and effect size for each study. It shows the fitted regression line and a significant negative association between the number of practices per facilitator and effect size (β = –0.02; P = .004). Each selected study is shown on the graph as a bubble, and the size of the bubble represents the amount of weight associated with the results of that study. Data were not available for 2 of the studies.67,80

Figure 4.

Number of practices per facilitator and effect size (n = 21).

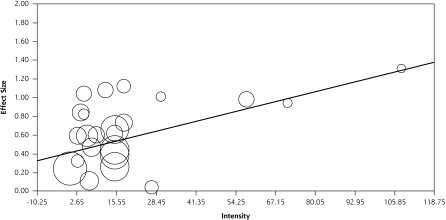

Intensity of practice facilitation was calculated by multiplying the average number of contacts with a practice by the average meeting time in hours (Supplemental Table 2). Figure 5 depicts a significant trend between the intensity of the intervention and the effect size (β = 0.008; P = .03).

Figure 5.

Intensity of intervention and effect size (N = 23).

DISCUSSION

The translation of evidence-based guidelines into practice is complex, and research continues to find major gaps between research evidence and practice.96,97 Alternative intervention models are being advanced to address the numerous challenges that face traditional primary care practices in providing high-quality care.96,98 This systematic review and meta-analysis has shown the potential for practice facilitation to address the challenges of translating evidence into practice. Primary care practices are 2.76 (95% CI, 2.18–3.43) times more likely to adopt evidence-based guidelines through practice facilitation.

These findings should prove important to health policy makers and those involved in practice-based research networks99 when designing quality improvement programs. We know that practice facilitation improves adoption of guidelines in multiple clinical practice areas that focus on prevention. Prevention activities in health care organizations are well suited to a practice facilitation approach, because much of the uptake can be improved by a focus on the organization of care, such as using simple reminder systems, recalls, and team care that need not involve the physician. We do not know whether facilitation can be translated to other areas that will require more direct physician uptake, such as clinical management requiring medication optimization or chronic illness care.

All the studies included audit with feedback, practice consensus building, and goal setting as key components, as well as basing the change approach on the system level and the organization change on common quality improvement tools, such as plan-do-study-act.100 Many also incorporated collaborative meetings, whether face to face or virtually. Such collaborative meetings can add costs to the programs, and it is not known whether these resource-intensive meetings increase effectiveness. There was variation in the process of implementation among the studies related to the facilitator qualifications, training, number of practices, intensity, and duration of intervention.

We found that as the number of practices per facilitator increases, the overall effect of facilitation diminishes but does not plateau. Greater intensity of the intervention is also associated with larger effects. In addition, whether the intervention was tailored for the practice affected effectiveness: a larger effect is associated with tailored interventions. Previous research has shown that tailored interventions are key to improving performance,48,92,101 and this study has confirmed this finding.

Implementing practice facilitation into routine quality improvement programs for organizations can be challenging. These findings support the need to tailor to context, to incorporate audit and feedback with goal setting, and to consider intensity of the intervention. The key components appear to be processes of care and organization of care with less focus on content knowledge. These findings, in addition to the qualifications of the facilitator, hold important resource implications that will complicate adequately funding facilitator interventions.102

There are several important limitations to this study. First, in an effort to focus and limit the scope of work involved, only published journal literature was included. We did not search for unpublished studies or original data. Second, although the variability between studies was consistent with what would be expected to occur by chance alone, the differing outcome measures, settings, and the diversity of guidelines being implemented and the potential modifying effect of such factors warrant caution.103 Third, not all of the study characteristics were analyzed in terms of the relationship to effects, and further research and meta-regression analysis are recommended.24 Finally, there is evidence of publication bias for practice facilitation research. Researchers should publish good-quality studies with null effects to better understand the limits of practice facilitation, as it is unlikely to be able to change successfully every type of targeted evidence-based behavior in all contexts.

In conclusion, despite the professional, organizational, and broader environmental challenges of getting evidence into practice, this study has found that practice facilitation can work. An understanding of the conceptual model for practice facilitation exists,21 and more randomized controlled trials to test the model are not required. Instead, large-scale collaborative, practice-based evaluation research is needed to understand the impact of facilitation on the adoption of guidelines, the relationship between context and the components of facilitation, sustainability, and the costs to the health system.104,105 This study has provided information on the empirical effects of practice facilitation that can be used to adjust expectation for what is realistic based on the current evidence and to move forward.

Acknowledgments

Roy Cameron, PhD; Walter Rosser, MD, CCFP, FCFP, MRCGP (UK); Steve Brown, PhD; Paul W. McDonald, PhD, FRIPH; and Ian McKillop, PhD, contributed to the review of the article. Dianne Zakaria contributed to the review and methodological assessment of the practice facilitation literature.

Footnotes

Conflicts of interest: authors report none.

References

- 1.Hutchison BG, Abelson J, Woodward CA, Norman G. Preventive care and barriers to effective prevention. How do family physicians see it? Can Fam Physician. 96;42:1693–1700 [PMC free article] [PubMed] [Google Scholar]

- 2.Stange KC. Primary care research: barriers and opportunities. J Fam Pract. 1996;42(2):192–198 [PubMed] [Google Scholar]

- 3.Hulscher MEJL, van Drenth BB, Mokkink HGA, van der Wouden JC, Grol RPTM. Barriers to preventive care in general practice: the role of organizational and attitudinal factors. Br J Gen Pract. 1997;47 (424):711–714 [PMC free article] [PubMed] [Google Scholar]

- 4.Cabana MD, Rand CS, Powe NR, et al. Why don’t physicians follow clinical practice guidelines? A framework for improvement. JAMA. 1999;282(15):1458–1465 [DOI] [PubMed] [Google Scholar]

- 5.McKenna HP, Ashton S, Keeney S. Barriers to evidence-based practice in primary care. J Adv Nurs. 2004;45(2):178–189 [DOI] [PubMed] [Google Scholar]

- 6.Grimshaw JM, Thomas RE, MacLennan G, et al. Effectiveness and efficiency of guideline dissemination and implementation strategies. Int J Technol Assess Health Care. 2005;21(1):149. [DOI] [PubMed] [Google Scholar]

- 7.Eccles MP, Grimshaw JM. Selecting, presenting and delivering clinical guidelines: are there any “magic bullets”? Med J Aust. 2004;180 (6)(Suppl):S52–S54 [DOI] [PubMed] [Google Scholar]

- 8.Oxman AD, Thomson MA, Davis DA, Haynes RB. No magic bullets: a systematic review of 102 trials of interventions to improve professional practice. CMAJ. 1995;153(10):1423–1431 [PMC free article] [PubMed] [Google Scholar]

- 9.Grimshaw JM, Russell IT. Effect of clinical guidelines on medical practice: a systematic review of rigorous evaluations. Lancet. 1993; 342(8883):1317–1322 [DOI] [PubMed] [Google Scholar]

- 10.Lomas J, Haynes RB. A taxonomy and critical review of tested strategies for the application of clinical practice recommendations: from “official” to “individual” clinical policy. Am J Prev Med. 1988;4(4) (Suppl):77–94, discussion 95–97 [PubMed] [Google Scholar]

- 11.Wensing M, Grol R. Single and combined strategies for implementing changes in primary care: a literature review. Int J Qual Health Care. 1994;6(2):115–132 [DOI] [PubMed] [Google Scholar]

- 12.Wensing M, van der Weijden T, Grol R. Implementing guidelines and innovations in general practice: which interventions are effective? Br J Gen Pract. 1998;48(427):991–997 [PMC free article] [PubMed] [Google Scholar]

- 13.Prior M, Guerin M, Grimmer-Somers K. The effectiveness of clinical guideline implementation strategies—a synthesis of systematic review findings. J Eval Clin Pract. 2008;14(5):888–897 [DOI] [PubMed] [Google Scholar]

- 14.Grimshaw JM, Shirran L, Thomas R, et al. Changing provider behavior: an overview of systematic reviews of interventions. Med Care. 2001;39(8)(Suppl 2):II2–II45 [PubMed] [Google Scholar]

- 15.Harvey G, Loftus-Hills A, Rycroft-Malone J, et al. Getting evidence into practice: the role and function of facilitation. J Adv Nurs. 2002;37(6):577–588 [DOI] [PubMed] [Google Scholar]

- 16.Kitson A, Harvey G, McCormack B. Enabling the implementation of evidence based practice: a conceptual framework. Qual Health Care. 1998;7(3):149–158 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Lemelin J, Hogg W, Baskerville N. Evidence to action: a tailored multifaceted approach to changing family physician practice patterns and improving preventive care. CMAJ. 2001;164(6):757–763 [PMC free article] [PubMed] [Google Scholar]

- 18.Dietrich AJ, O’Connor GT, Keller A, Carney PA, Levy D, Whaley FS. Cancer: improving early detection and prevention. A community practice randomised trial. BMJ. 1992;304(6828):687–691 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Baskerville NB, Hogg W, Lemelin J. Process evaluation of a tailored multi-faceted approach to changing family physician practice patterns and improving preventive care. J Fam Pract. 2001;50(3):W242–W249 [PubMed] [Google Scholar]

- 20.Hulscher MEJL, van Drenth BB, van der Wouden JC, Mokkink HGA, van Weel C, Grol RPTM. Changing preventive practice: a controlled trial on the effects of outreach visits to organise prevention of cardiovascular disease. Qual Health Care. 1997;6(1):19–24 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Dogherty EJ, Harrison MB, Graham ID. Facilitation as a role and process in achieving evidence-based practice in nursing: a focused review of concept and meaning. Worldviews Evid Based Nurs. 2010;7(2):76–89 [DOI] [PubMed] [Google Scholar]

- 22.Nagykaldi Z, Mold JW, Aspy CB. Practice facilitators: a review of the literature. Fam Med. 2005;37(8):581–588 [PubMed] [Google Scholar]

- 23.Liberati A, Altman DG, Tetzlaff J, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. PLoS Med. 2009;6(7):e1000100. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Higgins J, Green S. Cochrane Handbook for Systematic Reviews of Interventions Version 5.0.0. Chichester, United Kingdom: The Cochrane Collaboration, 2008 [Google Scholar]

- 25.Rycroft-Malone J, Harvey G, Seers K, Kitson A, McCormack B, Titchen A. An exploration of the factors that influence the implementation of evidence into practice. J Clin Nurs. 2004;13(8):913–924 [DOI] [PubMed] [Google Scholar]

- 26.Katrak P, Bialocerkowski AE, Massy-Westropp N, Kumar S, Grimmer K. A systematic review of the content of critical appraisal tools. BMC Med Res Methodol. 2004;4:22 http://www.biomedcentral.com/1471-2288/4/22 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Sanderson S, Tatt ID, Higgins JP. Tools for assessing quality and susceptibility to bias in observational studies in epidemiology: a systematic review and annotated bibliography. Int J Epidemiol. 2007;36(3):666–676 [DOI] [PubMed] [Google Scholar]

- 28.Bhogal SK, Teasell RW, Foley NC, Speechley MR. The PEDro scale provides a more comprehensive measure of methodological quality than the Jadad scale in stroke rehabilitation literature. J Clin Epidemiol. 2005;58(7):668–673 [DOI] [PubMed] [Google Scholar]

- 29.Grimshaw J, McAuley LM, Bero LA, et al. Systematic reviews of the effectiveness of quality improvement strategies and programmes. Qual Saf Health Care. 2003;12(4):298–303 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Cook DJ, Sackett DL, Spitzer WO. Methodologic guidelines for systematic reviews of randomized control trials in health care from the Potsdam Consultation on Meta-Analysis. J Clin Epidemiol. 1995;48(1):167–171 [DOI] [PubMed] [Google Scholar]

- 31.SPSS Inc. SPSS (Version 18.0.0) [Computer software]. Chicago, IL: SPSS Inc; 2009 [Google Scholar]

- 32.Comprehensive Meta-Analysis. [Computer software]. Englewood, NJ: Biostat; 2006 [Google Scholar]

- 33.Grimshaw JM, Thomas RE, MacLennan G, et al. Effectiveness and efficiency of guideline dissemination and implementation strategies. Health Technol Assess. 2004;8(6):iii–iv, 1–72 [DOI] [PubMed] [Google Scholar]

- 34.Roetzheim RG, Christman LK, Jacobsen PB, Schroeder J, Abdulla R, Hunter S. Long-term results from a randomized controlled trial to increase cancer screening among attendees of community health centers. Ann Fam Med. 2005;3(2):109–114 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Frijling BD, Lobo CM, Hulscher MEJL, et al. Multifaceted support to improve clinical decision making in diabetes care: a randomized controlled trial in general practice. Diabet Med. 2002;19(10):836–842 [DOI] [PubMed] [Google Scholar]

- 36.Frijling BD, Hulscher MEJL, van Leest LATM, et al. Multifaceted support to improve preventive cardiovascular care: a nationwide, controlled trial in general practice. Br J Gen Pract. 2003;53(497):934–941 [PMC free article] [PubMed] [Google Scholar]

- 37.Donner A, Birkett N, Buck C. Randomization by cluster. Sample size requirements and analysis. Am J Epidemiol. 1981;114(6):906–914 [DOI] [PubMed] [Google Scholar]

- 38.Campbell MK, Mollison J, Grimshaw JM. Cluster trials in implementation research: estimation of intracluster correlation coefficients and sample size. Stat Med. 2001;20(3):391–399 [DOI] [PubMed] [Google Scholar]

- 39.Donner A, Klar N. Issues in the meta-analysis of cluster randomized trials. Stat Med. 2002;21(19):2971–2980 [DOI] [PubMed] [Google Scholar]

- 40.Chinn S. A simple method for converting an odds ratio to effect size for use in meta-analysis. Stat Med. 2000;19(22):3127–3131 [DOI] [PubMed] [Google Scholar]

- 41.DerSimonian R, Laird N. Meta-analysis in clinical trials. Control Clin Trials. 1986;7(3):177–188 [DOI] [PubMed] [Google Scholar]

- 42.Mulrow C, Cook D. Systematic Reviews: Synthesis of Best Evidence for Health Care Decisions. Philadelphia, PA: American College of Physicians; 1998 [Google Scholar]

- 43.Lau J, Ioannidis JP, Terrin N, Schmid CH, Olkin I. The case of the misleading funnel plot. BMJ. 2006;333(7568):597–600 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Begg CB, Mazumdar M. Operating characteristics of a rank correlation test for publication bias. Biometrics. 1994;50(4):1088–1101 [PubMed] [Google Scholar]

- 45.Egger M, Davey Smith G, Schneider M, Minder C. Bias in meta-analysis detected by a simple, graphical test. BMJ. 1997;315(7109):629–634 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Jüni P, Witschi A, Bloch R, Egger M. The hazards of scoring the quality of clinical trials for meta-analysis. JAMA. 1999;282(11):1054–1060 [DOI] [PubMed] [Google Scholar]

- 47.Bryce FP, Neville RG, Crombie IK, Clark RA, McKenzie P. Controlled trial of an audit facilitator in diagnosis and treatment of childhood asthma in general practice. BMJ. 1995;310(6983):838–842 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Stange KC, Goodwin MA, Zyzanski SJ, Dietrich AJ. Sustainability of a practice-individualized preventive service delivery intervention. Am J Prev Med. 2003;25(4):296–300 [DOI] [PubMed] [Google Scholar]

- 49.Lawrence M, Packwood T. Adapting total quality management for general practice: evaluation of a programme. Qual Health Care. 1996;5(3):151–158 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Hearnshaw H, Reddish S, Carlyle D, Baker R, Robertson N. Introducing a quality improvement programme to primary healthcare teams. Qual Health Care. 1998;7(4):200–208 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Crotty M, Litt JC, Ramsay AT, Jacobs S, Weller DP. Will facilitators be acceptable in Australian general practice? A before and after feasibility study. Aust Fam Physician. 1993;22(9):1643–1647 [PubMed] [Google Scholar]

- 52.Geboers H, van der Horst M, Mokkink H, et al. Setting up improvement projects in small scale primary care practices: feasibility of a model for continuous quality improvement. Qual Health Care. 1999;8(1):36–42 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Cox S, Wilcock P, Young J. Improving the repeat prescribing process in a busy general practice. A study using continuous quality improvement methodology. Qual Health Care. 1999;8(2):119–125 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54.Nagykaldi Z, Mold JW. Diabetes Patient Tracker, a personal digital assistant-based diabetes management system for primary care practices in Oklahoma. Diabetes Technol Ther. 2003;5(6):997–1001 [DOI] [PubMed] [Google Scholar]

- 55.Modell M, Wonke B, Anionwu E, et al. A multidisciplinary approach for improving services in primary care: randomised controlled trial of screening for haemoglobin disorders. BMJ. 1998;317(7161):788–791 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.Kinsinger LS, Harris R, Qaqish B, Strecher V, Kaluzny A. Using an office system intervention to increase breast cancer screening. J Gen Intern Med. 1998;13(8):507–514 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 57.Lobo CM, Frijling BD, Hulscher ME, et al. Improving quality of organizing cardiovascular preventive care in general practice by outreach visitors: a randomized controlled trial. Prev Med. 2002;35(5):422–429 [DOI] [PubMed] [Google Scholar]

- 58.Margolis PA, Lannon CM, Stuart JM, Fried BJ, Keyes-Elstein L, Moore DE Jr. Practice based education to improve delivery systems for prevention in primary care: randomised trial. BMJ. 2004;328(7436):388–392 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 59.Dietrich AJ, O’Connor GT, Keller A, Carney PA, Levy D, Whaley FS. Cancer: improving early detection and prevention. A community practice randomised trial. BMJ. 1992;304(6828):687–691 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 60.Rebelsky MS, Sox CH, Dietrich AJ, Schwab BR, Labaree CE, Brown-McKinney N. Physician preventive care philosophy and the five year durability of a preventive services office system. Soc Sci Med. 1996;43(7):1073–1081 [DOI] [PubMed] [Google Scholar]

- 61.Horowitz CR, Goldberg HI, Martin DP, et al. Conducting a randomized controlled trial of CQI and academic detailing to implement clinical guidelines. Jt Comm J Qual Improv. 1996;22(11):734–750 [DOI] [PubMed] [Google Scholar]

- 62.Carney PA, Dietrich AJ, Keller AA, Landgraf J, O’Connor GT. Tools, teamwork, and tenacity: an office system for cancer prevention. J Fam Pract. 1992;35(4):388–394 [PubMed] [Google Scholar]

- 63.Kottke TE, Solberg LI, Brekke ML, Conn SA, Maxwell P, Brekke MJ. A controlled trial to integrate smoking cessation advice into primary care practice: Doctors Helping Smokers, Round III. J Fam Pract. 1992;34(6):701–708 [PubMed] [Google Scholar]

- 64.McKenzie A, Grylls J. Diabetic retinal photographic screening: a model for introducing audit and improving general practitioner care of diabetic patients in a rural setting. Aust J Rural Health. 1999;7(4):237–239 [DOI] [PubMed] [Google Scholar]

- 65.Frijling BD, Lobo CM, Hulscher MEJL, et al. Intensive support to improve clinical decision making in cardiovascular care: a randomised controlled trial in general practice. Qual Saf Health Care. 2003;12(3):181–187 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 66.Jones JN, Marsden P. Improved diabetes care in a UK health district. Diabet Med. 1992;9(2):176–180 [DOI] [PubMed] [Google Scholar]

- 67.McBride P, Underbakke G, Plane MB, et al. Improving prevention systems in primary care practices: the Health Education and Research Trial (HEART). J Fam Pract. 2000;49(2):115–125 [PubMed] [Google Scholar]

- 68.Bordley WC, Margolis PA, Stuart J, Lannon C, Keyes L. Improving preventive service delivery through office systems. Pediatrics. 2001;108(3):E41. [DOI] [PubMed] [Google Scholar]

- 69.Solberg LI, Kottke TE, Brekke ML. Will primary care clinics organize themselves to improve the delivery of preventive services? A randomized controlled trial. Prev Med. 1998;27(4):623–631 [DOI] [PubMed] [Google Scholar]

- 70.Cockburn J, Ruth D, Silagy C, et al. Randomised trial of three approaches for marketing smoking cessation programmes to Australian general practitioners. BMJ. 1992;304(6828):691–694 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 71.Fullard E, Fowler G, Gray M. Facilitating prevention in primary care. Br Med J (Clin Res Ed). 1984;289(6458):1585–1587 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 72.Fullard E, Fowler G, Gray M. Promoting prevention in primary care: controlled trial of low technology, low cost approach. Br Med J (Clin Res Ed). 1987;294(6579):1080–1082 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 73.Szczepura A, Wilmot J, Davies C, Fletcher J. Effectiveness and cost of different strategies for information feedback in general practice. Br J Gen Pract. 1994;44(378):19–24 [PMC free article] [PubMed] [Google Scholar]

- 74.Bashir K, Blizard B, Bosanquet A, Bosanquet N, Mann A, Jenkins R. The evaluation of a mental health facilitator in general practice: effects on recognition, management, and outcome of mental illness. Br J Gen Pract. 2000;50(457):626–629 [PMC free article] [PubMed] [Google Scholar]

- 75.McCowan C, Neville RG, Crombie IK, Clark RA, Warner FC. The facilitator effect: results from a four-year follow-up of children with asthma. Br J Gen Pract. 1997;47(416):156–160 [PMC free article] [PubMed] [Google Scholar]

- 76.Lobo CM, Frijling BD, Hulscher MEJL, et al. Effect of a comprehensive intervention program targeting general practice staff on quality of life in patients at high cardiovascular risk: a randomized controlled trial. Qual Life Res. 2004;13(1):73–80 [DOI] [PubMed] [Google Scholar]

- 77.Hearnshaw H, Baker R, Robertson N. Multidisciplinary audit in primary healthcare teams: facilitation by audit support staff. Qual Health Care. 1994;3(3):169–172 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 78.Hogg W, Lemelin J, Moroz I, Soto E, Russell G. Improving prevention in primary care: Evaluating the sustainability of outreach facilitation. Can Fam Physician. 2008;54(5):712–720 [PMC free article] [PubMed] [Google Scholar]

- 79.Hogg W, Lemelin J, Graham ID, et al. Improving prevention in primary care: evaluating the effectiveness of outreach facilitation. Fam Pract. 2008;25(1):40–48 [DOI] [PubMed] [Google Scholar]

- 80.Engels Y, van den Hombergh P, Mokkink H, van den Hoogen H, van den Bosch Grol WR. The effects of a team-based continuous quality improvement intervention on the management of primary care: a randomised controlled trial. Br J Gen Pract. 2006;56(531):781–787 [PMC free article] [PubMed] [Google Scholar]

- 81.Mold JW, Aspy CA, Nagykaldi Z; Oklahoma Physicians Resource/Research Network. Implementation of evidence-based preventive services delivery processes in primary care: an Oklahoma Physicians Resource/Research Network (OKPRN) study. J Am Board Fam Med. 2008;21(4):334–344 [DOI] [PubMed] [Google Scholar]

- 82.Aspy CB, Mold JW, Thompson DM, et al. Integrating screening and interventions for unhealthy behaviors into primary care practices. Am J Prev Med. 2008;35(5)(Suppl):S373–S380 [DOI] [PubMed] [Google Scholar]

- 83.Aspy CB, Enright M, Halstead L, Mold JW; Oklahoma Physicians Resource/Research Network. Improving mammography screening using best practices and practice enhancement assistants: an Oklahoma Physicians Resource/Research Network (OKPRN) study. J Am Board Fam Med. 2008;21(4):326–333 [DOI] [PubMed] [Google Scholar]

- 84.Kauth MR, Sullivan G, Blevins D, et al. Employing external facilitation to implement cognitive behavioral therapy in VA clinics: a pilot study. Implement Sci. 2010;5(75):75. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 85.Jaén CR, Ferrer RL, Miller WL, et al. Patient outcomes at 26 months in the patient-centered medical home National Demonstration Project. Ann Fam Med. 2010;8(Suppl 1):S57–S67, S92 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 86.Palmer RH, Louis TA, Peterson HF, Rothrock JK, Strain R, Wright EA. What makes quality assurance effective? Results from a randomized, controlled trial in 16 primary care group practices. Med Care. 1996;34(9)(Suppl):SS29–SS39 [DOI] [PubMed] [Google Scholar]

- 87.Manfredi C, Czaja R, Freels S, Trubitt M, Warnecke R, Lacey L. Prescribe for health. Improving cancer screening in physician practices serving low-income and minority populations. Arch Fam Med. 1998;7(4):329–337 [DOI] [PubMed] [Google Scholar]

- 88.Gottlieb NH, Huang PP, Blozis SA, Guo JL, Murphy Smith M. The impact of Put Prevention into Practice on selected clinical preventive services in five Texas sites. Am J Prev Med. 2001;21(1):35–40 [DOI] [PubMed] [Google Scholar]

- 89.O’Connor PJ, Desai J, Solberg LI, et al. Randomized trial of quality improvement intervention to improve diabetes care in primary care settings. Diabetes Care. 2005;28(8):1890–1897 [DOI] [PubMed] [Google Scholar]

- 90.Ruhe MC, Weyer SM, Zronek S, Wilkinson A, Wilkinson PS, Stange KC. Facilitating practice change: lessons from the STEP-UP clinical trial. Prev Med. 2005;40(6):729–734 [DOI] [PubMed] [Google Scholar]

- 91.Fullard E. Extending the roles of practice nurses and facilitators in preventing heart disease. Practitioner. 1987;231(1436):1283–1286 [PubMed] [Google Scholar]

- 92.Goodwin MA, Zyzanski SJ, Zronek S, et al. The Study to Enhance Prevention by Understanding Practice (STEP-UP). A clinical trial of tailored office systems for preventive service delivery. Am J Prev Med. 2001;21(1):20–28 [DOI] [PubMed] [Google Scholar]

- 93.Nutting PA, Crabtree BF, Stewart EE, et al. Effect of facilitation on practice outcomes in the National Demonstration Project model of the patient-centered medical home. Ann Fam Med. 2010;8(Suppl 1):S33–S44, S92 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 94.Solberg LI. Guideline implementation: what the literature doesn’t tell us. Jt Comm J Qual Improv. 2000;26(9):525–537 [DOI] [PubMed] [Google Scholar]

- 95.Solberg LI, Kottke TE, Brekke ML. Will primary care clinics organize themselves to improve the delivery of preventive services? A randomized controlled trial. Prev Med. 1998;27(4):623–631 [DOI] [PubMed] [Google Scholar]

- 96.Crabtree BF, Nutting PA, Miller WL, et al. Primary care practice transformation is hard work: insights from a 15 year developmental program of research. Med Care. 2010. Sep 17 [Epub ahead of print] [DOI] [PMC free article] [PubMed] [Google Scholar]

- 97.Lenfant C. Shattuck lecture—clinical research to clinical practice—lost in translation? N Engl J Med. 2003;349(9):868–874 [DOI] [PubMed] [Google Scholar]

- 98.Bodenheimer T, Wagner EH, Grumbach K. Improving primary care for patients with chronic illness. JAMA. 2002;288(14):1775–1779 [DOI] [PubMed] [Google Scholar]

- 99.Practice-Based Research Networks. 2010. http://pbrn.ahrq.gov/portal/server.pt/community/practice_based_research_networks_%28pbrn%29__about/852.

- 100.Deming WE. Out of the Crisis. Cambridge, MA: Cambridge University Press; 1986 [Google Scholar]

- 101.Cohen DJ, Crabtree BF, Etz RS, et al. Fidelity versus flexibility: translating evidence-based research into practice. Am J Prev Med. 2008;35(5)(Suppl):S381–S389 [DOI] [PubMed] [Google Scholar]

- 102.Hogg W, Baskerville N, Lemelin J. Cost savings associated with improving appropriate and reducing inappropriate preventive care: cost-consequences analysis. BMC Health Serv Res. 2005;5:20 http://www.biomedcentral.com/1472-6963/5/20 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 103.Gerber S, Tallon D, Trelle S, Schneider M, Jüni P, Egger M. Bibliographic study showed improving methodology of meta-analyses published in leading journals 1993–2002. J Clin Epidemiol. 2007;60(8):773–780 [DOI] [PubMed] [Google Scholar]

- 104.Glasgow RE, Emmons KM. How can we increase translation of research into practice? Types of evidence needed. Annu Rev Public Health. 2007;28:413–433 [DOI] [PubMed] [Google Scholar]

- 105.Green LW. Making research relevant: if it is an evidence-based practice, where’s the practice-based evidence? Fam Pract. 2008;25(Suppl 1):i20–i24 [DOI] [PubMed] [Google Scholar]