Abstract

Perceptual stability requires the integration of information across eye movements. We first tested the hypothesis that motion signals are integrated by neurons whose receptive fields (RFs) do not move with the eye but stay fixed in the world. Specifically, we measured the RF properties of neurons in the middle temporal area (MT) of macaques (Macaca mulatta) during the slow phase of optokinetic nystagmus. Using a novel method to estimate RF locations for both spikes and local field potentials, we found that the location on the retina that changed spike rates or local field potentials did not change with eye position; RFs moved with the eye. Second, we tested the hypothesis that neurons link information across eye positions by remapping the retinal location of their RFs to future locations. To test this, we compared RF locations during leftward and rightward slow phases of optokinetic nystagmus. We found no evidence for remapping during slow eye movements; the RF location was not affected by eye-movement direction. Together, our results show that RFs of MT neurons and the aggregate activity reflected in local field potentials are yoked to the eye during slow eye movements. This implies that individual MT neurons do not integrate sensory information from a single position in the world across eye movements. Future research will have to determine whether such integration, and the construction of perceptual stability, takes place in the form of a distributed population code in eye-centered visual cortex or is deferred to downstream areas.

Introduction

Humans perform ∼100,000 eye movements per day, and with each eye movement, the physical image of the outside world lands on a different location on the retina. In early vision, receptive fields (RFs) are eye centered; if the eye moves 10° to the left, so does the RF. This implies that the neural representation of the world in early visual cortex changes with every eye movement. Despite these changes in incoming information, however, the visually perceived world is relatively stable.

One way to create this perceptual stability is to construct spatial RFs that are independent of eye position. There is evidence for such head-centered RFs in the parietal cortex of the macaque (Duhamel et al., 1997; Galletti et al., 1999) (for review, see Snyder, 2000). These RFs, however, are typically large (often covering more than one-quarter of the visual field), and they represent only a small subset of the features known to be represented in earlier visual areas. It is difficult to see how these parietal neurons could underlie perceptual stability of elementary visual features at a fine spatial scale; hence, a search for head-centered mechanisms in earlier visual areas is warranted.

The existence of head-centered mechanisms for the analysis and integration of motion signals has recently been a topic of debate. Some human behavioral studies have argued that motion is integrated by head-centered detectors (Melcher and Morrone, 2003; Ong et al., 2009), whereas others have shown that these phenomena can be understood using only eye-centered motion detectors (Morris et al., 2009). Functional imaging of the main motion processing area in the human brain has also led to conflicting results, with one study showing head-centered (d'Avossa et al., 2007) and another only eye-centered (Gardner et al., 2008) responses. Our study tests whether RFs in the macaque middle temporal area (MT) are eye or head centered.

An alternative mechanism for the integration of spatial information across eye movements is to transfer information from neurons that respond to a given location in space before an eye movement to the neurons that respond to that same location after the eye movement. For a brief period of time, such neurons do not code in eye-centered coordinates. This mechanism, called remapping, was first observed in the lateral intraparietal area (LIP) (Duhamel et al., 1992), but others have reported similar temporary changes in the neural response profile around saccades in various regions of the brain, including the frontal eye field (Umeno and Goldberg, 1997), the superior colliculus (Walker et al., 1995), area V3 (Nakamura and Colby, 2002), and area V4 (Tolias et al., 2001). Some of the functional reasons to integrate information across eye movements are the same whether the eye movements are fast or slow. Moreover, perceptual effects, such as mislocalization of briefly flashed stimuli, occur not only during fast but also during slow eye movements (Kaminiarz et al., 2007) and are often presumed to be related to remapping. For these reasons, we investigated whether a form of remapping could also be found during slow eye movements.

One previous study has shown indirectly that neurons in area MT likely encode spatial information in an eye-centered frame of reference (Krekelberg et al., 2003), but there has been little quantitative research that directly addresses this question. Given the controversy in the literature, we considered it important to map MT RFs quantitatively and investigate their properties during eye movements. We determined the RFs of cells in area MT during fixation and optokinetic nystagmus (OKN): slow pursuit phases interspersed with fast saccade-like movements in the opposite direction. To strengthen the link with functional imaging studies, we not only determined the RFs based on extracellular action potentials but also the RFs based on local field potentials (LFPs). Our data clearly show that both RFs, spike based and LFP based, in area MT are firmly yoked to the eye during the slow phases of OKN.

Materials and Methods

Subjects

The electrophysiological experiments involved two adult male rhesus monkeys (Macaca mulatta). Experimental and surgical protocols were approved for monkey S by The Salk Institute Animal Care and Use Committee and for monkey N by the Rutgers University Animal Care and Use Committee. The protocols were in agreement with National Institutes of Health guidelines for the humane care and use of laboratory animals.

Animal preparation.

The surgical procedures and behavioral training have been described in detail previously (Dobkins and Albright, 1994). In short, a head post and a recording cylinder were affixed to the skull using CILUX screws and dental acrylic (monkey S) or titanium screws (monkey N). Recording chambers were placed vertically above the anatomical location of area MT (typically 4 mm posterior to the interaural plane and 17 mm lateral to the midsagittal plane). This allowed access via a dorsoventral electrode trajectory. All surgical procedures were conducted under sterile conditions using isoflurane anesthesia.

Recording

Electrophysiology.

During a recording session, we penetrated the dura mater with a guide tube to allow access to the brain. An electric microdrive then lowered glass or parylene-C-coated tungsten electrodes (0.7–3 MΩ; FHC or Alpha Omega Engineering) into area MT through the guide tube. Single cells were isolated while they were visually stimulated using a circular motion stimulus (Schoppmann and Hoffmann, 1976; Krekelberg, 2008). We recorded the spiking activity either continuously at 25 kHz using Alpha Lab [for monkey N (Alpha Omega Engineering)] or in 800 μs windows triggered by threshold crossings with a Plexon system [monkey S (Plexon)]. After detecting action potentials, we first used an automated superparamagnetic clustering algorithm (Quiroga et al., 2004) to coarsely assign spike wave forms to single units and then fine-tuned clustering by hand. The Alpha Omega and the Plexon systems sampled the LFP data continuously at 782 and 1000 Hz, respectively. We filtered all LFP data with a bandpass filter (low, 1 Hz; high, 120 Hz).

Eye position.

We measured the eye-position signal with infrared eye trackers [120 Hz (ISCAN) or up to 2000 Hz (EyeLink2000; SR Research)]. We identified the fast phases of OKN offline by first detecting speeds of the eye that crossed a threshold set to 2–3 SDs (depending on the noise) above the mean speed. To estimate fast phase onset, we then searched for the first time point before this threshold crossing at which the speed was higher than the mean speed. We confirmed the accurate detection of the fast phase by visually inspecting a subset of trials. For each slow phase, we determined the linear fit that best described the eye position as a function of time. The slope of this line, expressed relative to the speed of the OKN-inducing stimulus, is the gain of the slow phase.
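The detection steps above can be sketched as follows. This is a minimal illustration, not the authors' code: the function names are hypothetical, the onset search is interpreted as walking backward from the threshold crossing while the speed stays above the mean, and the gain is assumed to be the fitted slope divided by the stimulus speed (10°/s in this study).

```python
import statistics

def detect_fast_phase_onsets(speed, n_sd=2.5):
    """Return sample indices of fast-phase onsets in an eye-speed trace.

    Assumption: the onset is the start of the run of above-mean speeds
    that contains the threshold crossing.
    """
    mean_speed = statistics.fmean(speed)
    threshold = mean_speed + n_sd * statistics.pstdev(speed)
    onsets, i = [], 0
    while i < len(speed):
        if speed[i] > threshold:
            onset = i
            # Walk back while the preceding sample is still above the mean.
            while onset > 0 and speed[onset - 1] > mean_speed:
                onset -= 1
            onsets.append(onset)
            # Skip the remainder of this fast phase.
            while i < len(speed) and speed[i] > mean_speed:
                i += 1
        else:
            i += 1
    return onsets

def slow_phase_gain(times_s, positions_deg, stimulus_speed_deg_s):
    """Least-squares slope of eye position over time, relative to stimulus speed."""
    t_mean = statistics.fmean(times_s)
    p_mean = statistics.fmean(positions_deg)
    sxx = sum((t - t_mean) ** 2 for t in times_s)
    sxy = sum((t - t_mean) * (p - p_mean) for t, p in zip(times_s, positions_deg))
    return (sxy / sxx) / stimulus_speed_deg_s
```

In practice the multiplier `n_sd` would be chosen per session (2 to 3 in the text) and the result checked by eye on a subset of trials.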

Visual stimuli.

The in-house software, Neurostim (http://neurostim.sourceforge.net), computed and displayed all stimuli on a 20-inch CRT monitor (Sony GDM-520) 57 cm in front of the eyes. The display covered 40° × 30° of the visual field. Except for a red fixation point, all stimuli were presented in equal energy white (x = 0.33, y = 0.33, Commission Internationale de l'Eclairage color space) with intensities ranging from 0.2 to 85 cd/m².

Random noise stimulus.

The main visual stimulus was a grating composed of randomly positioned, flickering bars (0.5–1° wide) (for three example frames, see Fig. 1A). The bars were always oriented vertically on the screen, thus allowing the mapping of the horizontal extent of the RF. The luminance value of each bar was independent of all other bars, and a new bar pattern was shown with a temporal frequency of 60 Hz (monkey S) or 50 Hz (monkey N). In the case of monkey S, each frame was spatially low-pass filtered by means of a Gaussian function (average full-width at half-maximum was 1.0°). Hence, the resulting luminance distribution was approximately Gaussian for monkey S, whereas it was approximately uniform for monkey N. For the offline analysis, we reproduced this random noise stimulus and centered the luminance distribution on 0, such that numbers above 0 represent luminance above the mean and numbers below represent luminance below the mean.

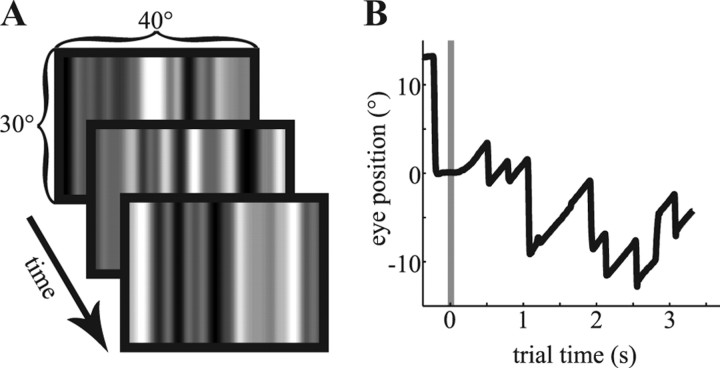

Figure 1.

The random noise stimulus and a typical optokinetic eye movement. A, Three random noise patterns, as presented to monkey S. Each monitor frame consisted of one pattern similar to the ones shown here. B, A typical eye movement during the rightward OKN condition. Once the animal fixated a central dot, the flickering noise stimulus appeared on the screen. A random-dot pattern filling the entire screen replaced the central fixation dot at the same time, overlaying the flickering stimulus. This pattern moved at 10°/s and induced the OKN. The graph shows the initial saccade to the fixation point (0°), the onset of the moving dots and the random noise pattern (gray line), and the start of the slow phase of OKN followed by the typical saw-tooth pattern of the nystagmus.

Procedure

A trial started with the presentation of a central red fixation target. Once the monkey fixated, the random noise stimulus appeared on the screen for 2.5–3 s. The fixation target stayed on for this period (fixation condition) or disappeared (OKN conditions). In the OKN conditions, a black random dot pattern (dot diameter, 0.3°; dot density, 0.43 dots/deg²) was superimposed on the noise pattern. The random dot pattern induced OKN (Fig. 1B) by moving to the left or the right at 10°/s (leftward and rightward OKN conditions, respectively). All three conditions were randomly interleaved and occurred equally often. The animals received a drop of juice at the end of the trial for maintaining fixation in a 4° × 3° window (fixation condition) or for looking at the screen (36° × 28° fixation window, OKN conditions) for the duration of the trial.

Data analysis

Estimation of the linear RF.

We determined the best linear approximation of the complex relationship between the neural response and the stimulus pattern. Formally, we expressed the stimulus pattern (Y) as a linear combination of the neural response (X) plus noise ε: Y = Xβ + ε. In this form, the problem is equivalent to a general linear model (GLM), with Y being the regressand, X the design matrix, and β a matrix of regression coefficients. In our case, these coefficients corresponded to the space–time RF map. Standard GLM methods not only provide the estimate of β that minimizes the error term but also provide significance values for each of the coefficients based on F tests.

Specifically, the regressand Y is an N × T matrix representing the stimulus. N is the number of pixels on the screen (1024). T is the number of stimulus frames (typically 25,000–75,000). We used the eye position, as measured by the eye tracker, to align the monitor pixel at the fovea with the center of the matrix. After this alignment, the value Y (512,100), for instance, is the luminance of the pixel that was directly at the fovea in the 100th stimulus frame. In other words, the first dimension of the Y matrix represents stimulus location (in monitor pixels) in eye-centered coordinates. Stimulus locations beyond the edge of the screen were assigned a value of 0.
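The alignment step can be illustrated with a short sketch. The function name is hypothetical, indices are 0-based, and the center index is assumed to be `len(frame) // 2`; the conversion from eye position in degrees to a monitor pixel is taken as already done.

```python
def to_eye_centered(frame, eye_pixel):
    """Shift one stimulus frame so that the pixel under the fovea lands on
    the center index; locations beyond the screen edge are set to 0.

    frame: luminance values (mean-centered), one per monitor pixel.
    eye_pixel: index of the monitor pixel at the fovea for this frame.
    """
    n = len(frame)
    center = n // 2
    aligned = [0.0] * n
    for i in range(n):
        src = i - center + eye_pixel  # monitor pixel seen at retinal slot i
        if 0 <= src < n:
            aligned[i] = frame[src]
    return aligned
```

For an 8-pixel frame `[1, 2, 3, 4, 5, 6, 7, 8]` with the eye on pixel 6, the aligned frame is `[3, 4, 5, 6, 7, 8, 0, 0]`: the foveated value 7 sits at the center index 4, and the two slots that fall off the screen are zero.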

The design matrix X is a T × M matrix representing the neural data; each column is a regressor. The first regressor represents the neural data at 0 delay compared with the stimulus. Each entry in this vector contains either the number of spikes per stimulus frame (to determine the spike RF) or the LFP averaged over a stimulus time frame (LFP RF). In the OKN conditions, we excluded neural signals recorded from 75 ms before to 150 ms after the onset of the fast phase. The other columns of the design matrix also contain the neural data vector, but each is shifted by a temporal offset. For each regressor, this offset is increased by the duration of one frame. For example, the fourth regressor contains the neural activity that occurred three frames, i.e., 50 ms (monkey S) or 60 ms (monkey N), after the stimulus frame appeared on the screen. The last regressor is filled with ones; it is the constant term of the linear model.

Solving the regression model results in a coefficient matrix β with N columns and M rows. The last row of the coefficient matrix, the intercept, contains the average of all stimulus frames for each pixel. For an infinite dataset, this would be 0 (corresponding to the mean of the stimulus values). This intercept is required for the statistical evaluation of the data using F tests and plays a role analogous to the whitening procedure sometimes used in spike-triggered averaging. The first row in β represents the coefficients that predict the stimulus frames for all 1024 pixels from neural data recorded at the same time. Each following row contains the coefficients that establish the linear relationship between the stimulus and the neural data with a larger temporal offset. Thus, the coefficient matrix establishes the linear model that relates the space–time stimulus to the neural data; we interpret it as the space–time RF map.
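A toy version of this regression may help make the matrix layout concrete. The sketch below is an illustration under stated assumptions, not the analysis code used in the study: all names are hypothetical, the stimulus is stored as frames × pixels so that Y = Xβ is conformable, the simulated "neuron" is an LFP-like signal driven by pixel 2 with a one-frame delay, and the least-squares solution is obtained from the normal equations by Gauss-Jordan elimination. Significance testing with F tests is omitted.

```python
import random

def lagged_design_matrix(response, n_lags):
    """Row t holds the response 0..n_lags-1 frames after stimulus frame t,
    followed by a constant 1 (the intercept regressor)."""
    n_rows = len(response) - n_lags + 1
    return [[response[t + k] for k in range(n_lags)] + [1.0] for t in range(n_rows)]

def solve_normal_equations(X, Y):
    """Least-squares beta (M x N) for Y = X beta + noise."""
    M, N = len(X[0]), len(Y[0])
    # Augmented matrix [X'X | X'Y].
    A = [[sum(row[i] * row[j] for row in X) for j in range(M)]
         + [sum(x[i] * y[n] for x, y in zip(X, Y)) for n in range(N)]
         for i in range(M)]
    for col in range(M):
        pivot = max(range(col, M), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(M):
            if r != col:
                f = A[r][col] / A[col][col]
                A[r] = [a - f * b for a, b in zip(A[r], A[col])]
    return [[A[i][M + n] / A[i][i] for n in range(N)] for i in range(M)]

rng = random.Random(0)
n_frames, n_pixels, n_lags = 2000, 5, 3
stimulus = [[rng.gauss(0, 1) for _ in range(n_pixels)] for _ in range(n_frames)]
# Simulated signal: follows pixel 2 one frame later, plus noise.
response = [0.5 * rng.gauss(0, 1)] + [
    stimulus[t - 1][2] + 0.5 * rng.gauss(0, 1) for t in range(1, n_frames)]

X = lagged_design_matrix(response, n_lags)
Y = stimulus[:len(X)]
beta = solve_normal_equations(X, Y)  # rows: lag 0, 1, 2, intercept
peak = max(((lag, px) for lag in range(n_lags) for px in range(n_pixels)),
           key=lambda lp: abs(beta[lp[0]][lp[1]]))
```

Here `peak` identifies the (lag, pixel) entry with the largest coefficient, which for this simulation is the driven pixel at a lag of one frame; the last row of `beta` is the intercept and stays close to 0.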

For the spike RF—the neural data X contained spike counts per stimulus frame—a high value in the RF map (e.g., at 5° and −50 ms) indicates that a bright bar 5° to the right of the fovea was typically followed by spikes 50 ms later (for example, see Fig. 2). The interpretation of the LFP RF map is similar but with one difference that arises from the fact that—unlike the spike count—the LFP can be negative. As a result, a high LFP RF value can be explained two ways. Either a bright bar was often followed by a high positive LFP, or a dark bar was often followed by a low negative LFP. We used the raw LFP signal and not the LFP power (squared LFP) because the RF maps obtained with the raw LFP yielded higher significance. This implies that locations on the screen at which a bright or dark bar consistently changed the LFP power but with a random phase each time were not included in our definition of the LFP RF.

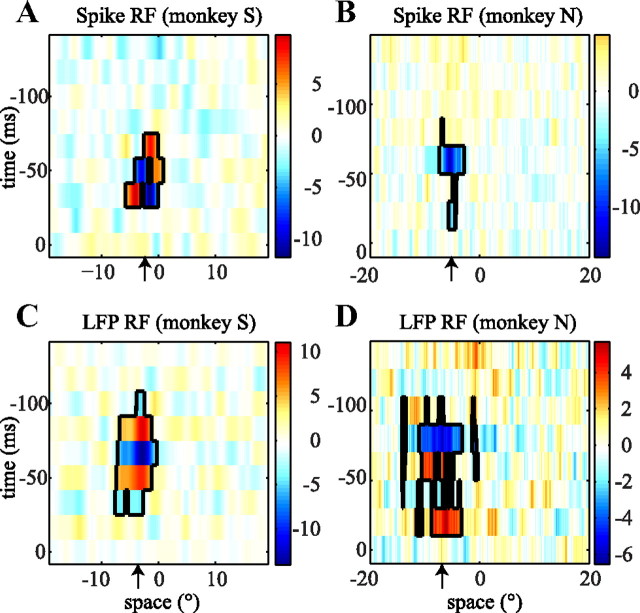

Figure 2.

Example RF maps. Each of the four images shows a spatiotemporal RF map. The axes are space (degrees), where 0 indicates the position of the fovea, and time (ms), where 0 is the time at which the neural data were recorded. The color represents luminance (z scored) and indicates the strength and sign of the RF. The black borders show the clusters in the space–time RF map that were significant (bootstrap test; see Materials and Methods). A, The spike RF of a single cell recorded in monkey S. B, The spike RF of a single cell from monkey N. C, D, The RF of the LFPs recorded at the same sites as the single cells in A and B, respectively. The black arrows indicate the estimated positions of the RFs. For a full description, see Results.

The GLM analysis provides a significance value per space–time pixel in the RF map. As a consequence, a simple uncorrected threshold of p < 0.01 would lead to RF maps with, on average, ∼80 significant pixels even for neural data consisting entirely of noise (0.01 × 8 × 1024 ≈ 82, where 8 is the number of time points, and 1024 is the horizontal number of pixels in the monitor). We used a bootstrap permutation test to exclude such type I errors without applying an overly conservative Bonferroni correction. For each neuron and LFP site, we generated a random stimulus sequence and then calculated the size of the largest cluster of contiguous space–time pixels in the map. This process was repeated 100 times to create a null distribution of space–time cluster sizes. We then compared the cluster size obtained with the actual stimulus to this distribution and discarded all clusters whose size was less than the 95th percentile of the null distribution. The remaining pixels are significant at the p < 0.05 level, corrected for multiple comparisons. We excluded cells and LFP sites if none of the pixels of the fixation RF map passed this threshold; this removed one LFP site from monkey N and 19 neurons and six LFP sites for monkey S from our analysis.
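The cluster-size correction can be sketched as below. Two simplifications are assumed and should be read as such: clusters are defined by 4-connectivity in the space–time map, and the null maps are drawn as independent pixels that are "significant" with probability 0.01, whereas the procedure described above recomputes the RF map from a random stimulus sequence. All function names are hypothetical.

```python
import random

def clusters(mask):
    """Return the 4-connected clusters of True pixels as lists of (row, col)."""
    n_rows, n_cols = len(mask), len(mask[0])
    seen, found = set(), []
    for r in range(n_rows):
        for c in range(n_cols):
            if mask[r][c] and (r, c) not in seen:
                stack, cluster = [(r, c)], []
                seen.add((r, c))
                while stack:
                    y, x = stack.pop()
                    cluster.append((y, x))
                    for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                        if (0 <= ny < n_rows and 0 <= nx < n_cols
                                and mask[ny][nx] and (ny, nx) not in seen):
                            seen.add((ny, nx))
                            stack.append((ny, nx))
                found.append(cluster)
    return found

def percentile_95(values):
    """Smallest value that is >= 95% of the sample (integer index arithmetic)."""
    ordered = sorted(values)
    return ordered[(95 * len(ordered) + 99) // 100 - 1]

def null_cluster_sizes(n_time, n_space, p_pixel, n_repeats, rng):
    """Largest cluster size in each of n_repeats pure-noise maps."""
    sizes = []
    for _ in range(n_repeats):
        noise = [[rng.random() < p_pixel for _ in range(n_space)]
                 for _ in range(n_time)]
        sizes.append(max((len(c) for c in clusters(noise)), default=0))
    return sizes

def surviving_clusters(mask, null_sizes):
    """Keep clusters at least as large as the 95th percentile of the null."""
    threshold = percentile_95(null_sizes)
    return [c for c in clusters(mask) if len(c) >= threshold]

# Expected number of false-positive pixels at an uncorrected p < 0.01.
expected_false_positives = 0.01 * 8 * 1024
```

The last line reproduces the arithmetic in the text: roughly 82 of the 8 × 1024 space–time pixels would pass an uncorrected threshold by chance alone.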

We defined the location of the RF as the spatial coordinate of the centroid of the significant pixels and the size of the RF as the spatial extent spanned by the significant pixels.
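As a small illustration of these two definitions, the sketch below computes the horizontal centroid and extent from a boolean map of significant pixels. It assumes an unweighted centroid, a size equal to the distance between the outermost significant columns, and a linear pixel-to-degree conversion (for example 40°/1024 pixels for the display described above); the function name and arguments are hypothetical.

```python
def rf_location_and_size(significant, deg_per_pixel, center_pixel):
    """Horizontal RF centroid and extent (degrees) from a significance map.

    significant: rows are time lags, columns are eye-centered pixels (bools).
    Returns None when no pixel is significant.
    """
    cols = [c for row in significant for c, flag in enumerate(row) if flag]
    if not cols:
        return None
    centroid_deg = (sum(cols) / len(cols) - center_pixel) * deg_per_pixel
    size_deg = (max(cols) - min(cols)) * deg_per_pixel
    return centroid_deg, size_deg
```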

RF reference frames.

To determine the reference frame of an RF, we estimated the RFs at different eye positions. We split the recorded eye positions during OKN into three blocks with an equal number of time points: the third of the data where the eyes were directed most leftward, the third where the eyes were directed most rightward, and the third of the data in between. The mean of the eye positions in these three blocks could be different depending on the condition (leftward vs rightward OKN), or recording day.

We used the corresponding subsets of neural data to fill the design matrix only with the data recorded at these different eye positions. This resulted in seven independent RF maps (three for each OKN condition, one for fixation) and seven corresponding average eye positions.
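The three-way split can be written compactly. This sketch assumes that negative eye positions are leftward and that any remainder from an uneven division goes to the rightmost block; the function name is hypothetical.

```python
def split_by_eye_position(eye_positions):
    """Split sample indices into leftmost, middle, and rightmost thirds.

    Returns a list of (indices, mean_eye_position) tuples, ordered from
    most leftward to most rightward gaze.
    """
    order = sorted(range(len(eye_positions)), key=lambda i: eye_positions[i])
    third = len(order) // 3
    blocks = [order[:third], order[third:2 * third], order[2 * third:]]
    return [(block, sum(eye_positions[i] for i in block) / len(block))
            for block in blocks]
```

The index lists select the rows of neural data that enter each design matrix, and the block means provide the eye positions against which the RF locations are later regressed.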

To determine the relative location of the RFs without making assumptions about RF shape, we cross-correlated each of the six independent RF maps recorded during OKN with the RF map estimated using the fixation data (Duhamel et al., 1997). The spatial shift at which the cross-correlation peaked was considered the shift of the RF relative to the fixation condition. For each of the six RF maps recorded during OKN, we then calculated the RF location on the screen as the sum of the mean eye position, the location of the fixation RF, plus the shift of the RF determined by cross-correlation.
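A minimal sketch of the cross-correlation step follows. It assumes the maps are stored as rows of time lags by columns of eye-centered pixels, that only spatial shifts are searched, and that the shift is converted to degrees before being added to the other two terms; names are hypothetical.

```python
def spatial_shift(fix_map, okn_map, max_shift):
    """Spatial shift (in pixels) of okn_map relative to fix_map at which
    their cross-correlation, summed over time lags, is largest."""
    n_cols = len(fix_map[0])

    def score(shift):
        total = 0.0
        for fix_row, okn_row in zip(fix_map, okn_map):
            for x in range(n_cols):
                if 0 <= x + shift < n_cols:
                    total += fix_row[x] * okn_row[x + shift]
        return total

    return max(range(-max_shift, max_shift + 1), key=score)

def rf_screen_location(mean_eye_deg, fixation_rf_deg, shift_deg):
    """RF location on the screen: eye position + fixation RF + estimated shift."""
    return mean_eye_deg + fixation_rf_deg + shift_deg
```

For an eye-centered RF the estimated shift is near 0, so the screen location tracks the eye position one-to-one; for a head-centered RF the shift cancels the change in eye position.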

The head-centered RF hypothesis predicts that RF location is independent of eye position, whereas the eye-centered hypothesis predicts that RF location is linearly related to eye position. To test these hypotheses, we assumed a linear relationship between RF location and mean eye position: RF = m * eye + b. We determined the parameters of this equation by robust linear regression with a bisquare weighting method. The slope m is a measure of the reference frame; we refer to it as the reference frame index (RFI). Eye-centered cells have an RFI of 1; head-centered cells have an RFI of 0. The constant term b is the position of the RF while looking straight ahead (when eye and screen coordinates are equal). We used the standard error of the regression (σ) as a measure of the goodness of fit.
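The robust fit can be illustrated with iteratively reweighted least squares. The sketch below is one common formulation, not necessarily the routine used in the study: the tuning constant 4.685 and the scale estimate MAD/0.6745 are widely used defaults that are assumed here, leverage corrections are omitted, and all names are hypothetical.

```python
import statistics

def weighted_line_fit(x, y, w):
    """Weighted least-squares slope and intercept."""
    sw = sum(w)
    xm = sum(wi * xi for wi, xi in zip(w, x)) / sw
    ym = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxx = sum(wi * (xi - xm) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - xm) * (yi - ym) for wi, xi, yi in zip(w, x, y))
    m = sxy / sxx
    return m, ym - m * xm

def bisquare_line_fit(x, y, tuning=4.685, n_iter=50):
    """Fit y = m * x + b with bisquare weights; m is the RFI when x is the
    mean eye position and y is the RF location on the screen."""
    m, b = weighted_line_fit(x, y, [1.0] * len(x))
    for _ in range(n_iter):
        resid = [yi - (m * xi + b) for xi, yi in zip(x, y)]
        scale = statistics.median(abs(r) for r in resid) / 0.6745
        if scale == 0:  # at least half the points are fit exactly
            break
        cutoff = tuning * scale
        w = [(1 - (r / cutoff) ** 2) ** 2 if abs(r) < cutoff else 0.0
             for r in resid]
        m, b = weighted_line_fit(x, y, w)
    return m, b
```

With seven eye positions from -6° to 6°, an RF that sits 3° left of the fovea, and one grossly mislocalized point, the bisquare weights remove the outlier and the fit returns a slope near 1 (eye centered) and an offset near -3°, whereas ordinary least squares would report a slope of about 1.5.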

RF remapping.

Receptive fields have been shown to shift their location around fast eye movements (Duhamel et al., 1992). To investigate whether RFs shift similarly during the slow phase of OKN, we fixed the slope m of the linear fit to the value obtained while determining the reference frame but allowed the offset b to vary independently for the leftward and rightward slow phase. The offset b is the retinal location of the RF while the eye is looking straight ahead. If RFs shifted in the direction of the eye movement, one would expect b to be greater in the rightward than in the leftward OKN condition. We defined the remapping shift as half the difference between the offsets in the leftward and rightward OKN conditions. Statistical significance of the remapping shift was assessed at the population level by performing a sign test. Although it would be possible to investigate a model in which both b and m could vary depending on the direction of the eye movement, such a model is underconstrained given that we have only three data points (i.e., eye positions) per condition.
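The remapping analysis reduces to three small computations, sketched here under explicit assumptions: the offset for a fixed slope is estimated by ordinary least squares (the mean of RF − m × eye), the shift is signed as rightward minus leftward so that positive values indicate a shift in the direction of the eye movement, and the sign test is the exact two-sided binomial test with ties dropped. Names are hypothetical.

```python
from math import comb

def offset_with_fixed_slope(eye, rf, slope):
    """Least-squares intercept b of rf = slope * eye + b for a fixed slope."""
    return sum(r - slope * e for e, r in zip(eye, rf)) / len(eye)

def remapping_shift(b_left, b_right):
    """Half the difference between rightward and leftward offsets."""
    return 0.5 * (b_right - b_left)

def sign_test_p(values):
    """Exact two-sided sign test against a median of 0 (zeros are dropped)."""
    n_pos = sum(v > 0 for v in values)
    n_neg = sum(v < 0 for v in values)
    n = n_pos + n_neg
    if n == 0:
        return 1.0
    k = min(n_pos, n_neg)
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)
```

At the population level, `sign_test_p` would be applied to the list of remapping shifts, one per recording.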

We used a Monte Carlo simulation to determine the minimal size of the remapping shift at which our statistical analysis would reject the null hypothesis that no shift occurred. We simulated datasets in which a simulated offset (ε) was assumed for both the leftward and rightward OKN conditions. This ε was drawn, separately for each recording, from a Gaussian distribution with a mean that was systematically varied from −2° to 2° and an SD equal to the SD of the estimated offset parameter b for that recording. We repeated this procedure 1000 times for each simulated dataset. We defined the upper bound of the remapping shift as the smallest mean simulated offset for which the sign test rejected the null hypothesis at the 0.05 significance level in >80% of cases. In other words, this is the remapping shift at which both type I (false-positive) and type II (false-negative) statistical errors were controlled at conventional levels (α = 0.05, power 1 − β = 0.8).
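The power simulation can be sketched as follows. This is a simplified illustration: each recording contributes one simulated shift drawn from a Gaussian with the candidate mean and that recording's SD, only the candidate means supplied by the caller are scanned (in ascending order), and the function names are hypothetical.

```python
import random
from math import comb

def sign_test_p(values):
    """Exact two-sided sign test against a median of 0 (zeros are dropped)."""
    n_pos = sum(v > 0 for v in values)
    n_neg = sum(v < 0 for v in values)
    n = n_pos + n_neg
    if n == 0:
        return 1.0
    k = min(n_pos, n_neg)
    return min(1.0, 2 * sum(comb(n, i) for i in range(k + 1)) / 2 ** n)

def detection_rate(mean_shift, sds, n_sim=1000, alpha=0.05, rng=random):
    """Fraction of simulated populations in which the sign test rejects."""
    hits = 0
    for _ in range(n_sim):
        sample = [rng.gauss(mean_shift, sd) for sd in sds]
        hits += sign_test_p(sample) < alpha
    return hits / n_sim

def smallest_detectable_shift(sds, candidate_means, power=0.8,
                              n_sim=1000, rng=random):
    """Smallest candidate mean shift detected in more than `power` of runs."""
    for mean_shift in sorted(candidate_means):
        if detection_rate(mean_shift, sds, n_sim=n_sim, rng=rng) > power:
            return mean_shift
    return None
```

Here `sds` holds one SD per recording, corresponding to the uncertainty of the fitted offset b; passing a seeded `random.Random` instance as `rng` makes a run reproducible.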

Results

We present data from 76 single neurons and 53 LFP recording sites in area MT in two right hemispheres of two macaque monkeys (monkey N, neurons = 31, LFPs = 23; monkey S, neurons = 45, LFPs = 30). Each trial started with the monkey fixating a target in the center; this triggered the start of dynamic noise on the computer screen, which elicited neural responses that we used to estimate the RF. The monkeys either fixated throughout the trial, or they performed OKN induced by a random-dot pattern moving leftward or rightward.

The average ± SD fast phase frequencies were 2.5 ± 0.2 Hz (monkey S) and 3.4 ± 0.2 Hz (monkey N). In humans, such high beating frequencies are typically associated with stare nystagmus (Konen et al., 2005; Knapp et al., 2008). The mean and SD of the gain of the slow phase was 0.82 ± 0.05 (monkey S) and 0.95 ± 0.05 (monkey N). A separate behavioral experiment in which OKN was induced in the presence or absence of the flickering bar stimulus showed that presence of the full-field flicker did not affect the slow-phase gain of the OKN significantly (p > 0.15).

First, we present examples of RF maps based on spikes and LFPs recorded during steady fixation and show how these RF measures are related. We then turn to the main question: how eye position and eye movement affect RF locations.

Spike and LFP receptive field estimates

We estimated the RFs with a GLM (see Materials and Methods). Figure 2 shows two pairs of RF maps for single units and LFPs recorded simultaneously with the same electrode.

In the spike RF maps, a bright red patch at a specific (x, t) location indicates that, t ms before a high firing rate occurs, a higher than average luminance is typically seen at retinal position x. The interpretation of the LFP RF is analogous, with the exception that, unlike spike rates, LFPs can be positive or negative; hence, a bright red patch can correspond to a higher than average luminance followed by a high positive LFP, or a lower than average luminance followed by a very negative LFP. The color maps are centered on the same value, such that white represents the mean of the luminance distribution (i.e., no relation between neural activity and luminance), red represents luminance above the mean, and blue represents luminance below the mean.

To provide a scale that can be compared across recordings, we scaled the RF maps to the SD of the luminance distribution outside the RF (z-score). At the same time, however, we wanted to visualize RF structure independently of the degree of statistical confidence (which depends on the consistency of the neural response, as well as the somewhat arbitrary duration of the recording). To achieve this, we allowed the color scale (Fig. 2) to vary across RF maps. With this convention, numerical (z-score) values can be compared across RF maps to compare consistency of the RFs, but colors are defined per map only.

Note that the RF maps for monkey S (Fig. 2, left) appear smoother than those on the right (monkey N) because the stimulus presented to monkey S was spatially low-pass filtered (see Materials and Methods).

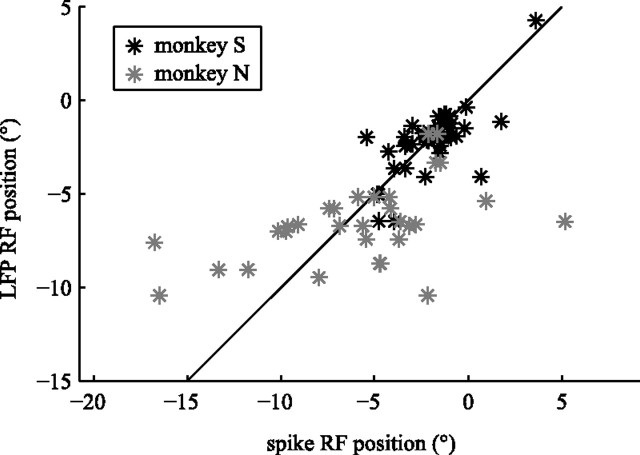

We quantified the location of the RF as the centroid of all significant clusters in the RF map. In Figure 2, the spatial components of the centroids for the spike RF and the LFP RF were −2.34° and −3.69° (A, C) and −5.11° and −6.87° (B, D). The horizontal locations of the spike RF and LFP RF were highly correlated (Fig. 3). The Pearson's correlation was r = 0.70 (p < 10⁻⁷) for monkey S and r = 0.49 (p < 0.005) for monkey N. Similarly, we quantified RF size as the horizontal spatial extent of all significant clusters. For monkey S, the average ± SD RF size was 4.6 ± 2.6° for spike RFs and 5.9 ± 2.6° for LFP RFs, and the mean RF sizes for monkey N were 4.7 ± 4.1° for spike RFs and 8.1 ± 7.4° for LFP RFs. The sizes of spike RFs and LFP RFs were not significantly correlated (p > 0.3), and LFP RFs were significantly larger than spike RFs (rank-sum test, p < 0.001). Note that our one-dimensional stimulus pattern does not allow us to estimate the vertical extent or retinal eccentricity of the RFs, and thus they are not reported here.

Figure 3.

RF position correlation. The LFP RF position (vertical axis) is shown as a function of the spike RF positions (horizontal axis). Gray elements represent RFs from monkey N and black elements from monkey S. For both monkeys, LFP and spike RF position were significantly correlated.

RF reference frames

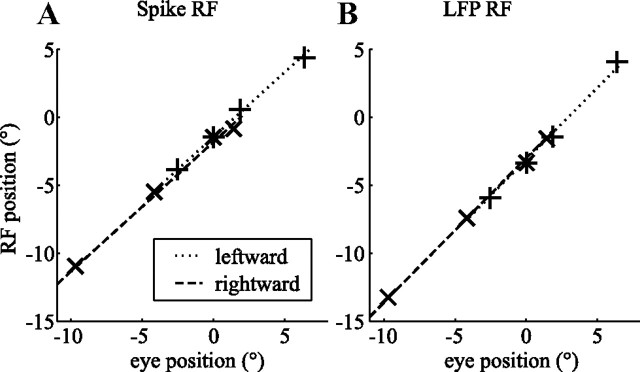

Figure 4 shows the RF locations of one example neuron (A) and the simultaneously recorded LFP (B) for seven eye positions (see Materials and Methods). The relationship between RF location and eye position was well described by a line with a slope of 0.95 for the spike RF (A). We refer to this slope as the RFI (see Materials and Methods). The LFP RF had an RFI of 1.08. A perfectly eye-centered RF has an RFI of 1 (black dash-dotted line); a head-centered RF has an RFI of 0 (black dotted line).

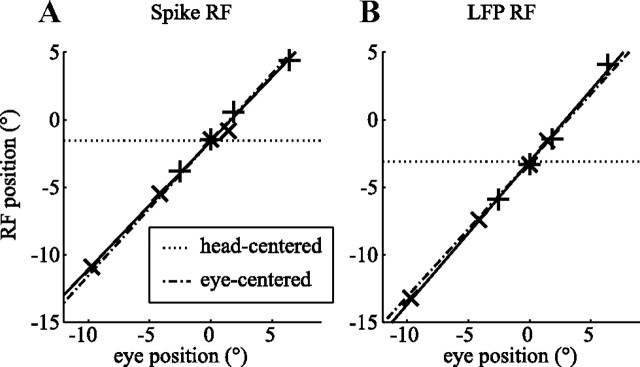

Figure 4.

RF reference frame estimation. The seven elements correspond to the seven independent pairs of eye position and RF position in screen coordinates (+, leftward slow phase; ×, rightward slow phase; *, fixation). The dash-dotted lines show the prediction of a pure eye-centered RF (RFI = 1). The dotted lines show the prediction of a head-centered RF (RFI = 0). The solid lines show the linear fit. A, RF location of a spike RF. B, RF location of the LFP RF from the same electrode (monkey N). These example recordings show receptive fields that clearly move with the eye.

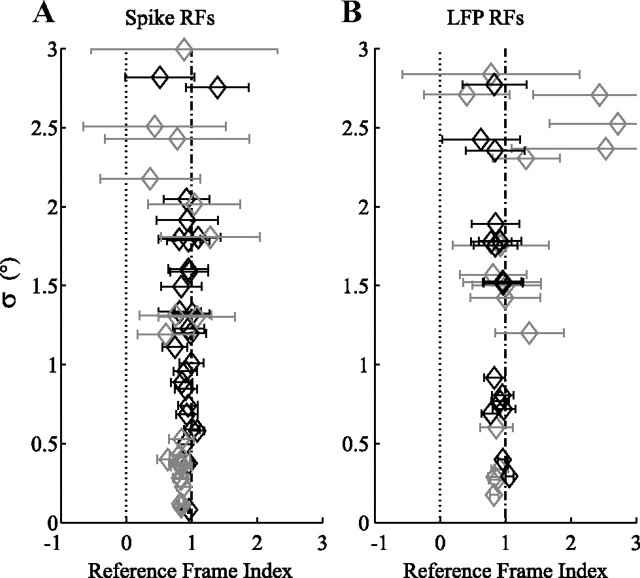

Figure 5 shows the RFIs for the population. To give an indication of the noise in these RFI estimates, we plot σ as a function of the RFI (with confidence intervals). σ is the SE of the regression, which is a measure of the goodness of fit. It is 0.38° for the cell in Figure 4A, and 0.29° for the LFP RF in Figure 4B. For both spike and LFP RFs, the RFIs are near 1. The mean and SD of all RFIs was 0.92 ± 0.50 for spike RFs and 0.97 ± 0.50 for LFP RFs. High RFIs (i.e., eye-centered reference frames) were almost always found when we had enough data to get a reliable estimate of the RF (small σ and small error bar). For instance, for σ < 3°, 79% of spike RFIs and 71% of LFP RFIs lay between 0.8 and 1.2.

Figure 5.

Population overview of reference frames. A, RFI for all spike RFs (gray, monkey N; black, monkey S) with a goodness of fit (σ) better than 3° (for RFI and σ, see Materials and Methods). The horizontal bars on the data points show the confidence intervals for the estimates of the RFIs. B, RFIs for LFP RFs, same conventions as A. These data show that both spike and LFP RFs in MT moved with the eye.

RF remapping

Next, we investigated whether there were shifts in RF position that depended not on eye position but on eye-movement direction. For instance, one might expect RFs to run ahead of their retinal location during fixation; this would be analogous to the predictive remapping that is found before saccades in LIP (Duhamel et al., 1992). We fit the RF positions during the two slow phases independently while keeping the slope of the lines fixed (see Materials and Methods). The constant term in these linear fits corresponds to the retinal location of the RF when the eye is pointing straight ahead. If RFs remapped their location during slow eye movements, one would expect this retinal RF location to differ between rightward and leftward slow eye movements.

For the example shown in Figure 6A, the retinal location was −1.42 ± 0.67° during the leftward slow phase, whereas it was −1.80 ± 1.92° for the rightward slow phase (estimate ± 95% confidence interval). The two positions were not significantly different. Figure 6B shows the same analysis for the simultaneously recorded LFP RFs; again, the eye-movement direction did not significantly affect the RF location (−3.13 ± 1.01° leftward slow phase, −3.01 ± 0.31° rightward slow phase). Figure 6 shows data from the same recording site as in Figure 4.

Figure 6.

Assessment of RF remapping during the slow phase of OKN. The seven elements correspond to the seven independent eye position/RF position pairs (+, leftward slow phase; ×, rightward slow phase; *, fixation). The lines in each plot correspond to two fits with the same slope to the rightward slow phase (dashed line) and the leftward slow phase (dotted line). The offsets of the fitted lines represent the retinal location of the RF when looking straight ahead. A, RF location based on spikes. B, RF location based on the LFP recorded from the same electrode (monkey N). The retinal locations of the RFs were not significantly different during leftward and rightward slow eye movements; there was no evidence for remapping in these example recordings.

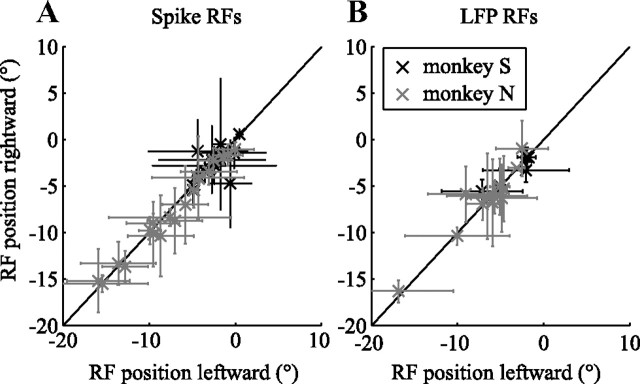

To provide a population overview, Figure 7 plots the retinal RF location while looking straight ahead during rightward OKN as a function of the retinal RF location while looking straight ahead during leftward OKN. For this overview, we excluded RFs whose location could only be determined with great uncertainty (error bars larger than 10°). The average retinal RF location was shifted 0.04° (Fig. 7A, spike RFs) and 0.38° (Fig. 7B, LFP RFs) in the direction opposite to the slow phase. None of the distributions of differences showed a significant shift (all sign tests p > 0.1). Hence, our data do not support the hypothesis that RFs shift during OKN slow phase. We also used Monte Carlo simulations to estimate an upper bound to the remapping shift (see Materials and Methods) and found that our methods would have been sensitive enough to detect remapping shifts as small as 0.14° for the spike RFs and 1.00° for the LFP RFs.

Figure 7.

Population overview of the (absence of) remapping. Error bars represent confidence intervals. Gray elements represent monkey N and black elements monkey S. A, The retinal RF position during rightward eye movements (vertical axis) compared with RF position during leftward eye movements (horizontal axis) for spike RFs. B, LFP RFs in the same format as A. For clarity, we excluded data in which the confidence intervals of the RF position estimates were bigger than 10°. These data show that the RF positions did not depend on the direction of the slow eye movement. Hence, MT RFs are not remapped during the following phase of OKN.

Discussion

We mapped receptive fields in macaque MT using well-isolated single units, as well as aggregate neural activity reflected in the local field potentials. During slow eye movements induced by a whole-field moving pattern, these RFs moved with the eye. We found no evidence for either head-centered RFs or eye-movement direction-dependent remapping of RFs.

We first discuss our mapping method, its advantages and limitations, and then the implications of our findings for theories of perceptual stability.

Mapping method

Random noise RF mapping has been used extensively [e.g., on retinal ganglion cells (Chichilnisky, 2001) or V1 neurons (Horwitz et al., 2005; Rust et al., 2005)], and the data are usually analyzed with spike-triggered averaging (STA). For an infinite spike dataset, GLM-based analysis and STA result in the same linear kernel estimates. For finite datasets, however, STA requires whitening of the stimulus sample distribution, which the GLM method does implicitly. Moreover, although significance testing in STA is usually done with bootstrap methods, the GLM analysis allows the application of standard F tests. Finally, the main advantage of the GLM approach arises in the context of the analysis of LFPs in which unique trigger events (the equivalent of spikes in spike-triggered averaging) cannot easily be defined. The GLM-based analysis determines the best linear model that relates (any) neural activity to the visual stimulus.
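The difference between a raw response-weighted average and a regression-based estimate for finite datasets can be illustrated with a small sketch. Everything here is an assumption made for illustration: the Gaussian noise stimulus, the noise-free linear response, the four-bar kernel, and ordinary least squares as a stand-in for the GLM. It is not the authors' implementation.

```python
import random


def solve(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [v - f * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def response_weighted_average(stim, resp):
    """STA-like estimate X^T y / N, without whitening."""
    n, d = len(stim), len(stim[0])
    return [sum(stim[t][j] * resp[t] for t in range(n)) / n for j in range(d)]


def least_squares_kernel(stim, resp):
    """Regression estimate: solve (X^T X / N) k = X^T y / N.

    Multiplying by the inverse sample covariance is the implicit whitening step.
    """
    n, d = len(stim), len(stim[0])
    xtx = [[sum(s[i] * s[j] for s in stim) / n for j in range(d)] for i in range(d)]
    return solve(xtx, response_weighted_average(stim, resp))


rng = random.Random(1)
true_kernel = [0.0, 1.0, 0.5, 0.0]  # hypothetical 4-bar linear RF
stim = [[rng.gauss(0.0, 1.0) for _ in true_kernel] for _ in range(200)]
resp = [sum(k * s for k, s in zip(true_kernel, frame)) for frame in stim]

sta = response_weighted_average(stim, resp)
ols = least_squares_kernel(stim, resp)
sta_error = max(abs(a - b) for a, b in zip(sta, true_kernel))
ols_error = max(abs(a - b) for a, b in zip(ols, true_kernel))
```

With a finite sample, chance correlations between bars leak into the unwhitened average, so `sta_error` is nonzero even without response noise, whereas the regression estimate recovers the kernel up to rounding error.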

Our method was able to quantify either a spike or an LFP RF at 92% of recording sites. Of course, the method fails in the absence of a response to flickering bars. This, however, is quite rare in MT (Krekelberg and Albright, 2005). Mapping could also fail during the OKN conditions because (retinal) motion of the dot pattern could suppress the response. However, because the nystagmus, whose gain was near 1, cancels the retinal motion rapidly (within 200–300 ms), only slow residual retinal motion (<2°/s for monkey S, <0.5°/s for monkey N) remains. Given that most MT neurons prefer higher speeds (Rodman and Albright, 1987), this retinal slip is expected to have only a minimal influence on the neural response. Additionally, mapping could fail if the eye movement strongly suppressed the neural response (Chukoskie and Movshon, 2009); this implies that a negative result (absence of a significant RF) should be interpreted with caution.

Given that we were interested in the relationship between RF location and horizontal eye movements, we used a one-dimensional stimulus and only mapped the horizontal position and extent of the RF. Although there is no principled reason against using a 2D checkerboard to map 2D RFs, we found in practice that the dimensionality of a 2D full-field stimulus was too high to allow RF estimation within reasonable experimental time. Such a 2D method may still be successful on a coarser spatial scale.

Spike and LFP RFs

We found that the locations of spike and LFP RFs were highly correlated. This is presumably a consequence of the retinotopic organization of MT; nearby neurons have similar retinal RF locations, and hence the aggregate neural activity of these neurons reflected in the LFP should have a similar RF, too.

Our results, however, also showed that the size of the LFP RF was a much more variable quantity (no correlation with the spike RF and a larger SD in monkey N but not in monkey S). In part, this may be attributable to the fact that the receptive fields of monkey N were closer to the boundary of the monitor, which may have affected our ability to map their full extent (Fig. 3). In addition, however, these differences may be attributed to a number of neural and experimental factors that are known to influence the range of spatial integration in the LFP. For instance, although an action potential—once it is detected—is independent of the impedance of the recording electrode, low-impedance electrodes accumulate more lower-frequency LFPs, and these are expected to integrate electrical activity over a larger radius (Bédard et al., 2004). This problem is exacerbated by the dependence of the spatial spread of the LFP on the cortical layer (Murthy and Fetz, 1996). Hence, properties of neural populations based on LFP recordings can be obscured by the unknown range of spatial integration. This uncertainty, however, did not impact our particular question, because even the larger LFP RFs in monkey N were eye and not head centered (Fig. 5).

Mixed reference frames

Previous work has shown that mixed reference frames—RFs that partially move with the eye—are prevalent in the ventral intraparietal (VIP) area (Duhamel et al., 1997; Avillac et al., 2005; Schlack et al., 2005). Moreover, theoretical studies have shown that mixed reference frames are useful as part of a neural network for the optimal combination of multiple sources of sensory information (Deneve et al., 2001).

In our analysis, an RFI that is neither 0 (head-centered) nor 1 (eye-centered) corresponds to a mixed reference frame. The mean RFI for spike RFs (0.95) was not significantly different from 1, but the 0.81 mean RFI for LFP RFs was. Moreover, some individual RFIs were significantly below 1 (see confidence intervals in Fig. 5A,B). Taken at face value, this implies that MT coded in a mixed reference frame, albeit one that was strongly biased toward eye centered. We note, however, that these apparent mixed reference frames could be artifactual. For instance, a bias in the position signal of the video eye tracker could lead to RFIs < 1. Such a bias was indeed observed in the data obtained with the lower-resolution video tracker used in monkey S. The oblique angle of the camera led to a presumably artifactual change in pupil size with eye position, which caused a minor (∼10%) overestimation of eye position. This is consistent with an underestimation of the RFI. Similarly, the inability to map responses beyond the physical boundaries of the screen introduces a bias toward central screen positions, which our analysis would interpret as an RF that does not quite follow the eye. This effect increases for peripheral RFs and thus mainly affects the RFIs for the more eccentric receptive fields recorded in monkey N (Fig. 3).
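The effect of an eye-tracker gain error on the RFI can be made concrete with a small worked example. It rests on a simplifying assumption that is not taken from the Methods: the RFI is treated here as the regression slope of the RF position on the screen against the measured eye position. The ∼10% gain comes from the text; the RF location and eye positions are hypothetical.

```python
def slope(xs, ys):
    """Ordinary least-squares slope of ys regressed on xs."""
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx


tracker_gain = 1.10                        # ~10% overestimation of eye position
true_eye = [-10.0, -5.0, 0.0, 5.0, 10.0]   # hypothetical eye positions (deg)
retinal_rf = -3.0                          # hypothetical eye-centered RF location (deg)

# A perfectly eye-centered RF moves across the screen one-to-one with the eye.
rf_on_screen = [retinal_rf + e for e in true_eye]
measured_eye = [tracker_gain * e for e in true_eye]

rfi_true = slope(true_eye, rf_on_screen)          # 1.0: eye centered
rfi_measured = slope(measured_eye, rf_on_screen)  # 1 / 1.10, about 0.91
```

Under this assumption, a fully eye-centered RF would yield an apparent RFI of about 0.91, that is, an artifactual bias toward a mixed reference frame.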

Perceptual stability

There is a clear discrepancy between our finding of eye-centered RFs in macaque MT and the head-centered RFs in human MT reported by d'Avossa et al. (2007). Given the significant functional and anatomical similarities between macaque MT and human MT (Orban et al., 2004), this is not likely to be a species difference. Moreover, the large discrepancy is also unlikely to be explained by methodological differences, especially because we not only mapped the RFs of single units but also those of the LFPs, which are known to be more closely related to the BOLD signal (Logothetis et al., 2001; Viswanathan and Freeman, 2007) (but see Nir et al., 2008).

In recent work, Burr and Morrone (2011) have argued that head-centered representations in MT arise only under conditions of spread attention, and not while the subject is performing a demanding task at fixation. This could explain the discrepancy between d'Avossa et al. (2007) and Gardner et al. (2008). In our study, we did not explicitly control the monkeys' allocation of attention, but the typically high fast-phase frequency is consistent with stare nystagmus, which is a reflexive eye movement that does not involve the tracking of an individual dot. Under these conditions, attention is likely spread across the screen and unlikely to be highly focused at the fovea. Nevertheless, receptive fields moved with the eye. Moreover, the recent study by Ong and Bisley (2011) mimicked the fixation paradigm of d'Avossa et al. (2007) and also reported eye-centered RFs in macaque MT. Although we believe this suggests that spread attention may not be enough to explain head-centered representations in area MT, a study in which attention is carefully controlled is needed to fully address this possibility.

RF remapping and mislocalization

An inability to properly match the old image on the retina to the current one during or after an eye movement may explain the temporary cracks in perceptual stability that occur for briefly presented targets around the time of a saccade (Honda, 1989; Ross et al., 1997; Lappe et al., 2000). Many researchers have connected these localization errors to perisaccadic remapping in visual areas (for review, see Wurtz, 2008). Similar kinds of behavioral mislocalization, however, also occur during smooth eye movements [smooth pursuit (van Beers et al., 2001; Konigs and Bremmer, 2010), OKN (Kaminiarz et al., 2007), and optokinetic afternystagmus (Kaminiarz et al., 2008)]. This led us to investigate whether remapping also occurs during smooth eye movements. When the eyes move at 10°/s, a neuron that anticipates the stimulus location by 50 ms would shift its RF by 0.5° (10°/s × 0.05 s). Our data, however, failed to find support for a shift of the RF location during the slow phase of the OKN. Moreover, our Monte Carlo simulations show that our methods were sensitive enough to detect shifts as small as 0.14°.

Of course, this could simply mean that the behavioral phenomenon of mislocalization, which is larger than 1° during the slow phase of OKN (Kaminiarz et al., 2007), is not based on neural activity in MT. However, this finding also led us to reconsider the hypothesized relationship between RF shifts and mislocalization. Let us assume that, just before a rightward saccade, a neuron that normally responds to foveal stimulation responds to stimuli 10° to the right of the fovea. If the message of this neuron to the rest of the brain stays constant (i.e., assuming a labeled line code), then it signals the presence of a foveal stimulus when a flash is presented 10° to the right of the fovea just before a rightward saccade. In other words, this predicts that mislocalization would be against the direction of the saccade, which is contrary to most of the behavioral evidence (Honda, 1989). This contradiction can be resolved by assuming a more complex code for retinal position (Krekelberg et al., 2003) that does not rely on labeled line codes, which may not be useful beyond the peripheral sensory system (Krekelberg et al., 2006). An alternative or possibly complementary source of the behavioral mislocalization is an error in eye-position signals. In this view, perisaccadic stimuli are mislocalized not because the retinal position is incorrectly represented but because the subjects use incorrect information on the position of the eye (Honda, 1991; Dassonville et al., 1992). Recent evidence shows that perisaccadic errors in eye-position signals in macaque MT, the medial superior temporal area (MST), VIP, and LIP are consistent with this hypothesis (Morris et al., 2010). Whether similar errors in eye-position signals could also explain mislocalization during smooth eye movements is a target for future studies.

Footnotes

This work was supported by The Pew Charitable Trusts, National Institutes of Health Grant R01EY017605, and Deutsche Forschungsgemeinschaft Grant DFG-FOR-560. We thank Anna Chavez, Jessica Wright, Wei Song Ong, and James Bisley for comments on this manuscript and Jennifer Costanza, Dinh Diep, and Eli Wolkoff for technical assistance.

The authors declare no competing financial interests.

References

- Avillac M, Denève S, Olivier E, Pouget A, Duhamel JR. Reference frames for representing visual and tactile locations in parietal cortex. Nat Neurosci. 2005;8:941–949. doi: 10.1038/nn1480.

- Bédard C, Kröger H, Destexhe A. Modeling extracellular field potentials and the frequency-filtering properties of extracellular space. Biophys J. 2004;86:1829–1842. doi: 10.1016/S0006-3495(04)74250-2.

- Burr DC, Morrone MC. Spatiotopic coding and remapping in humans. Philos Trans R Soc Lond B Biol Sci. 2011;366:504–515. doi: 10.1098/rstb.2010.0244.

- Chichilnisky EJ. A simple white noise analysis of neuronal light responses. Network. 2001;12:199–213.

- Chukoskie L, Movshon JA. Modulation of visual signals in macaque MT and MST neurons during pursuit eye movement. J Neurophysiol. 2009;102:3225–3233. doi: 10.1152/jn.90692.2008.

- Dassonville P, Schlag J, Schlag-Rey M. Oculomotor localization relies on a damped representation of saccadic eye displacement in human and nonhuman primates. Vis Neurosci. 1992;9:261–269. doi: 10.1017/s0952523800010671.

- d'Avossa G, Tosetti M, Crespi S, Biagi L, Burr DC, Morrone MC. Spatiotopic selectivity of BOLD responses to visual motion in human area MT. Nat Neurosci. 2007;10:249–255. doi: 10.1038/nn1824.

- Deneve S, Latham PE, Pouget A. Efficient computation and cue integration with noisy population codes. Nat Neurosci. 2001;4:826–831. doi: 10.1038/90541.

- Dobkins KR, Albright TD. What happens if it changes color when it moves?: the nature of chromatic input to macaque visual area MT. J Neurosci. 1994;14:4854–4870. doi: 10.1523/JNEUROSCI.14-08-04854.1994.

- Duhamel JR, Colby CL, Goldberg ME. The updating of the representation of visual space in parietal cortex by intended eye movements. Science. 1992;255:90–92. doi: 10.1126/science.1553535.

- Duhamel JR, Bremmer F, BenHamed S, Graf W. Spatial invariance of visual receptive fields in parietal cortex neurons. Nature. 1997;389:845–848. doi: 10.1038/39865.

- Galletti C, Fattori P, Gamberini M, Kutz DF. The cortical visual area V6: brain location and visual topography. Eur J Neurosci. 1999;11:3922–3936. doi: 10.1046/j.1460-9568.1999.00817.x.

- Gardner JL, Merriam EP, Movshon JA, Heeger DJ. Maps of visual space in human occipital cortex are retinotopic, not spatiotopic. J Neurosci. 2008;28:3988–3999. doi: 10.1523/JNEUROSCI.5476-07.2008.

- Honda H. Perceptual localization of visual stimuli flashed during saccades. Percept Psychophys. 1989;45:162–174. doi: 10.3758/bf03208051.

- Honda H. The time courses of visual mislocalization and of extraretinal eye position signals at the time of vertical saccades. Vision Res. 1991;31:1915–1921. doi: 10.1016/0042-6989(91)90186-9.

- Horwitz GD, Chichilnisky EJ, Albright TD. Blue-yellow signals are enhanced by spatiotemporal luminance contrast in macaque V1. J Neurophysiol. 2005;93:2263–2278. doi: 10.1152/jn.00743.2004.

- Kaminiarz A, Krekelberg B, Bremmer F. Localization of visual targets during optokinetic eye movements. Vision Res. 2007;47:869–878. doi: 10.1016/j.visres.2006.10.015.

- Kaminiarz A, Krekelberg B, Bremmer F. Expansion of visual space during optokinetic afternystagmus (OKAN). J Neurophysiol. 2008;99:2470–2478. doi: 10.1152/jn.00017.2008.

- Knapp CM, Gottlob I, McLean RJ, Proudlock FA. Horizontal and vertical look and stare optokinetic nystagmus symmetry in healthy adult volunteers. Invest Ophthalmol Vis Sci. 2008;49:581–588. doi: 10.1167/iovs.07-0773.

- Konen CS, Kleiser R, Seitz RJ, Bremmer F. An fMRI study of optokinetic nystagmus and smooth-pursuit eye movements in humans. Exp Brain Res. 2005;165:203–216. doi: 10.1007/s00221-005-2289-7.

- Konigs K, Bremmer F. Localization of visual and auditory stimuli during smooth pursuit eye movements. J Vis. 2010;10:8. doi: 10.1167/10.8.8.

- Krekelberg B. Perception of direction is not compensated for neural latency. Behav Brain Sci. 2008;31:208–209.

- Krekelberg B, Albright TD. Motion mechanisms in macaque MT. J Neurophysiol. 2005;93:2908–2921. doi: 10.1152/jn.00473.2004.

- Krekelberg B, Kubischik M, Hoffmann KP, Bremmer F. Neural correlates of visual localization and perisaccadic mislocalization. Neuron. 2003;37:537–545. doi: 10.1016/s0896-6273(03)00003-5.

- Krekelberg B, van Wezel RJ, Albright TD. Interactions between speed and contrast tuning in the middle temporal area: implications for the neural code for speed. J Neurosci. 2006;26:8988–8998. doi: 10.1523/JNEUROSCI.1983-06.2006.

- Lappe M, Awater H, Krekelberg B. Postsaccadic visual references generate presaccadic compression of space. Nature. 2000;403:892–895. doi: 10.1038/35002588.

- Logothetis NK, Pauls J, Augath M, Trinath T, Oeltermann A. Neurophysiological investigation of the basis of the fMRI signal. Nature. 2001;412:150–157. doi: 10.1038/35084005.

- Melcher D, Morrone MC. Spatiotopic temporal integration of visual motion across saccadic eye movements. Nat Neurosci. 2003;6:877–881. doi: 10.1038/nn1098.

- Morris AP, Kubischik M, Hoffmann KP, Krekelberg B, Bremmer F. Dynamics of eye position signals in macaque dorsal areas explain peri-saccadic mislocalization. J Vis. 2010;10:556.

- Murthy VN, Fetz EE. Oscillatory activity in sensorimotor cortex of awake monkeys: synchronization of local field potentials and relation to behavior. J Neurophysiol. 1996;76:3949–3967. doi: 10.1152/jn.1996.76.6.3949.

- Nakamura K, Colby CL. Updating of the visual representation in monkey striate and extrastriate cortex during saccades. Proc Natl Acad Sci U S A. 2002;99:4026–4031. doi: 10.1073/pnas.052379899.

- Nir Y, Dinstein I, Malach R, Heeger DJ. BOLD and spiking activity. Nat Neurosci. 2008;11:523–524. doi: 10.1038/nn0508-523. author reply 524.

- Ong WS, Bisley JW. A lack of anticipatory remapping of retinotopic receptive fields in area MT. J Neurosci. 2011;31:10432–10436. doi: 10.1523/JNEUROSCI.5589-10.2011.

- Ong WS, Hooshvar N, Zhang M, Bisley JW. Psychophysical evidence for spatiotopic processing in area MT in a short-term memory for motion task. J Neurophysiol. 2009;102:2435–2440. doi: 10.1152/jn.00684.2009.

- Orban GA, Van Essen D, Vanduffel W. Comparative mapping of higher visual areas in monkeys and humans. Trends Cogn Sci. 2004;8:315–324. doi: 10.1016/j.tics.2004.05.009.

- Quiroga RQ, Nadasdy Z, Ben-Shaul Y. Unsupervised spike detection and sorting with wavelets and superparamagnetic clustering. Neural Comput. 2004;16:1661–1687. doi: 10.1162/089976604774201631.

- Rodman HR, Albright TD. Coding of visual stimulus velocity in area MT of the macaque. Vision Res. 1987;27:2035–2048. doi: 10.1016/0042-6989(87)90118-0.

- Ross J, Morrone MC, Burr DC. Compression of visual space before saccades. Nature. 1997;386:598–601. doi: 10.1038/386598a0.

- Rust NC, Schwartz O, Movshon JA, Simoncelli EP. Spatiotemporal elements of macaque V1 receptive fields. Neuron. 2005;46:945–956. doi: 10.1016/j.neuron.2005.05.021.

- Schlack A, Sterbing-D'Angelo SJ, Hartung K, Hoffmann KP, Bremmer F. Multisensory space representations in the macaque ventral intraparietal area. J Neurosci. 2005;25:4616–4625. doi: 10.1523/JNEUROSCI.0455-05.2005.

- Schoppmann A, Hoffmann KP. Continuous mapping of direction selectivity in the cat's visual cortex. Neurosci Lett. 1976;2:177–181. doi: 10.1016/0304-3940(76)90011-2.

- Snyder LH. Coordinate transformations for eye and arm movements in the brain. Curr Opin Neurobiol. 2000;10:747–754. doi: 10.1016/s0959-4388(00)00152-5.

- Tolias AS, Moore T, Smirnakis SM, Tehovnik EJ, Siapas AG, Schiller PH. Eye movements modulate visual receptive fields of V4 neurons. Neuron. 2001;29:757–767. doi: 10.1016/s0896-6273(01)00250-1.

- Umeno MM, Goldberg ME. Spatial processing in the monkey frontal eye field. I. Predictive visual responses. J Neurophysiol. 1997;78:1373–1383. doi: 10.1152/jn.1997.78.3.1373.

- van Beers RJ, Wolpert DM, Haggard P. Sensorimotor integration compensates for visual localization errors during smooth pursuit eye movements. J Neurophysiol. 2001;85:1914–1922. doi: 10.1152/jn.2001.85.5.1914.

- Viswanathan A, Freeman RD. Neurometabolic coupling in cerebral cortex reflects synaptic more than spiking activity. Nat Neurosci. 2007;10:1308–1312. doi: 10.1038/nn1977.

- Walker MF, Fitzgibbon EJ, Goldberg ME. Neurons in the monkey superior colliculus predict the visual result of impending saccadic eye movements. J Neurophysiol. 1995;73:1988–2003. doi: 10.1152/jn.1995.73.5.1988.

- Wurtz RH. Neuronal mechanisms of visual stability. Vision Res. 2008;48:2070–2089. doi: 10.1016/j.visres.2008.03.021.