Abstract

Purpose:

This research evaluated an individualized Internet training designed to teach nurse aides (NAs) strategies to prevent or, if necessary, react to resident aggression in ways that are safe for the resident as well as the caregiver.

Design and Methods:

A randomized treatment and control design was implemented, with baseline, 1-, and 2-month assessments for 159 NAs. The training involved 2 weekly visits. The Internet intervention was a behaviorally focused and video-based training that included content on skills for safely dealing with physical aggression. Measures included video situation testing and assessment of psychosocial constructs associated with behavior change.

Results:

Analysis of covariance showed positive results for knowledge, attitudes, self-efficacy, and empathy, with medium–large effect sizes maintained after 2 months. The training was well received by participants.

Implications:

Internet training is a viable approach to shape appropriate NA reactions to aggressive resident behaviors. This format has future potential because it offers fidelity of presentation and automated documentation, with minimal supervision.

Keywords: Resident aggression, Training, Internet, Nurse aides, Long-term care

In long-term care facilities (LTCs) and other health venues, workers are at high risk to be victims of violence by residents or patients (Gates, Fitzwater, & Meyer, 1999; Gates, Ross, & McQueen, 2006; Gillespie, Gates, Miller, & Howard, 2007; Livingston, Verdun-Jones, Brink, Lussier, & Nicholls, 2010; Shaw, 2004; Snyder, Chen, & Vacha-Haase, 2007). Nurse aides (NAs) in LTCs have reported daily verbal or physical assaults (Manderson & Schofield, 2005), but literature reports are inconsistent, if only because definitions of violence may be subjective. Physical injuries from resident aggression were reported to have occurred among 35% of NAs in the previous year by Tak, Sweeney, Alterman, Baron, and Calvert (2010). In the National Nursing Assistant Survey, more than half of NAs incurred a reportable injury in the previous year, including scratches and cuts, bruises, and human bites (Squillace et al., 2009). In one study, 138 NAs reported an average of 4.69 assaults during 80 work hours, with a range of 0–67 assaults (Gates, Fitzwater, & Deets, 2003). Another study of 76 NAs at six LTCs reported a median of 26 aggressive incidents over a two-week period, with approximately 95% of incidents probably undocumented (Snyder et al., 2007).

Violence in health care settings affects both the workers and the quality of care for the residents. Regardless of whether an injury was sustained or not, after an assault, NAs (Gates et al., 2003) and emergency department (ED) hospital workers (Gates et al., 2006) experience increased stress, anger, job dissatisfaction, decreased feelings of safety, and fear of future assaults. Although research into the psychological impact of workplace violence is limited, 17% of health care workers who were assaulted in hospital EDs had diagnosable symptoms of post-traumatic stress disorder and 15% had scores possibly associated with suppressed immune system functioning (Gates, Gillespie, & Succop, 2011). Some evidence suggests that after violent experiences, health care providers avoid patients who have been or might be violent (Gates et al., 1999; Gillespie, Gates, Miller, & Howard, 2010).

Despite the high incidence of violence in LTCs, NAs receive little or no training to work with resident behaviors (Glaister & Blair, 2008; Molinari et al., 2008; Squillace et al., 2009), which undermines their capabilities to react appropriately if assaulted or threatened. Negative stereotypes increase the likelihood that staff will fear or avoid residents with mental illness, which in turn erodes the quality of resident care (Molinari et al., 2008). As a result, problematic behaviors are too often treated with psychotropic medications or restraint, and behavioral methods are underutilized by caregiving staff (Brooker, 2007; Donat & McKeegan, 2003).

A prototype Internet training program to help NAs deal with aggressive resident behaviors has shown promising results in a randomized pre- and post-study (Irvine, Bourgeois, Billow, & Seeley, 2007). Tests of other prototypic computerized or Internet training programs for NAs also have shown positive effects (Breen et al., 2007; Hobday, Savik, Smith, & Gaugler, 2010; Irvine, Ary, & Bourgeois, 2003; Irvine, Billow, Bourgeois, & Seeley, in press; Irvine et al., 2007; MacDonald, Stodel, & Casimiro, 2006; Rosen et al., 2002), but follow-up assessments to measure maintenance of gains generally have been lacking.

The research described here redesigned the training prototype of Irvine and colleagues (2007) and added supplementary content to help NAs prevent or, if necessary, deal with aggressive resident behaviors. This study addressed the following research questions:

As demonstrated by responses to questions following the viewing of video simulations of aggressive resident behaviors, to what extent will users of an Internet training program demonstrate that they have learned appropriate behavioral and communication techniques for working with residents who display aggressive behaviors?

To what extent will users’ knowledge of how to deal with aggressive behaviors be improved by the training?

To what extent will users’ attitudes, self-efficacy, and behavioral intentions regarding aggressive resident behaviors positively change as a result of program use?

Will effects shown at posttest be maintained at a one-month follow-up assessment?

How satisfied will users be with an Internet training approach?

Design and Methods

Research Design

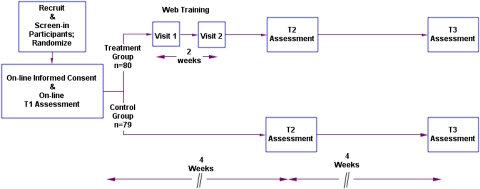

As described below, participants were recruited and randomized into treatment and control groups (see Figure 1). After consenting and submitting a baseline assessment (T1), treatment subjects were asked to visit the intervention program twice, approximately one week apart. Approximately two weeks after completion of Visit 2, subjects in both groups completed a second assessment (T2), and one month later, they completed a third assessment (T3).

Figure 1. Research design for Internet program evaluation.

The Intervention: Caring Skills: Dealing With Aggression

The NA training program was developed as a series of courses on the Internet in a Learning Management System format, which is similar to that often used for continuing education courses. A video narrator provided continuity and information. Video modeling by a diverse cast of paid actors was used for demonstrations and right-way, wrong-way exemplars. On-screen text, in the form of short titles, bulleted phrases, questions, and explanations, was written at 2nd–6th grade reading level. Users moved between web pages by clicking “next” buttons and answered text questions by clicking on radio buttons. Except for log-in, keyboarding was not required.

The program emphasized safety for the NA dealing with an aggressive situation, with emphasis on techniques that would minimize potential harm to the resident. Person-centered care philosophy (e.g., Brooker, 2007) was emphasized in right-way modeling videos. That is, NA models were always calm and respectful with aggressive residents, attempting to use knowledge of the resident as part of a positively oriented nonpunitive redirection strategy.

Visit 1 courses were designed to provide fundamental skills (About Aggression; The A.I.D. Care Strategy). These courses provided education about skills to safely de-escalate a situation with a resident exhibiting aggressive behavior. Video vignettes (e.g., NA reactions to a resident banging on a door with a water bottle) were supplemented by narration and by supportive testimonials. The NA video models demonstrated fundamental techniques of an intervention strategy called the A.I.D., which has been described elsewhere (Irvine et al., 2007, in press). In brief, “A” stands for Assess the situation from out of arm’s reach. “I” stands for Investigate, which includes approaching calmly, interrupting the behavior with a friendly greeting, and watching and listening to gather clues about the cause of the behavior. “D” stands for Do something, which includes validating the resident’s emotion and finding a suitable redirection.

Visit 2 offered advanced skills in four courses (About Hits, Hits With Fists or Arms, Hair Grabs, and Wrist Grabs) that applied the A.I.D. principles to more extreme situations. The Visit 2 courses taught situation-specific skills (e.g., hair grabs: pin the resident’s hand to your head, move closer, talk calmly, call for help if necessary). Modeling of key skills was performed by a psychiatric social worker who holds a black belt in karate and has extensive experience as an in-service trainer.

Procedures

After the protocol was approved by an Institutional Review Board, participants were recruited via e-mail announcements, e-newsletters, online message boards, and Internet advertising. Potential participants visited an informational Web site, and if still interested, they were linked to an online screening instrument that determined eligibility and gathered contact information. Eligibility criteria included (a) identification of employer for work as a direct caregiver (e.g., NA, Certified Nursing Assistants [CNA]) of residents over 50 years of age, (b) a self-rating of 0–3 on a 5-point scale rating confidence to handle aggressive situations with residents (i.e., 0 = not at all confident to 5 = extremely confident), and (c) a self-reported level of aggression-specific training of 0–3 on a 5-point scale (i.e., 0 = none to 5 = a lot). They were also required to enroll in the study from an Internet-video capable computer with broadband connection and to have a valid e-mail address. Once qualified, they were asked to provide full contact information to permit e-mail communication and compensation by mail.

In our previous Internet research, a few applicants have attempted to screen-in by providing false information to qualify for a study. Consequently, in this study, the data provided by each potential participant were checked against our database of 8,500 records of previous Internet study applicants to cross check for fraudulent information (e.g., same name, inconsistent age, gender, or ethnicity). Screened-in participants providing suspicious data were telephoned, and if the inconsistencies were not resolved, the individual was excluded from the study.

Approved participants were randomized into treatment (Tx) or control (Ctrl) conditions. They were e-mailed a link to an online informed consent form, and if they agreed, they were then linked to the T1 assessment. After submitting the T1 assessment, treatment participants were e-mailed log-in information to the Internet training program for Visit 1. One week after logging on to the Visit 1 courses, each participant was sent a second e-mail with log-in information for Visit 2. No effort was made to require participants to complete the training coursework at either visit. Research staff encouraged completion of the training by sending two e-mail reminders, at three and five days after participants were sent the program link. If, after seven days, the coursework was still not completed, research staff would also call the participant to ensure they had received the link and did not have any questions or difficulties accessing the training.

Four weeks after the T1 assessment and about two weeks after Visit 2 to the training program (see Figure 1), Tx and Ctrl participants were e-mailed log-in information to complete the T2. The Tx group assessments also included additional items to measure viewer acceptance of the program. After submission of the T2 assessment, the Tx group did not have access to the training program. Four weeks later (i.e., eight weeks after T1), all participants were e-mailed log-in information for T3. After T3 assessments were submitted, all participants were provided access to the program for three months.

All participants were paid $50 by check after submitting each of the three assessments. Tx group participants were not reimbursed for program visits. Both Tx and Ctrl participants who completed the training program were mailed a certificate of completion indicating their time spent viewing the courses. They were encouraged to show the certificate to their supervisor for possible training credit.

Measures

The first item set was designed around video situation testing (VST), which assessed participant reactions to video vignettes of resident behaviors (e.g., agitated resident swings a cane, resident grabs another resident forcefully). VSTs have been used previously to test NA reactions (Irvine et al., 2003, 2007). VSTs approximate real-life behavior when in vivo observations are not practical. In this research, a total of seven VSTs were presented to NAs. To minimize testing reactivity effects, the VSTs were presented only at T2 and T3.

Another set of items measured changes in constructs associated with behavior change from social cognitive theory (Bandura, 1969, 1977) and the expanded theory of reasoned action (Fishbein, 2000), including attitudes, self-efficacy, and behavioral intentions. Positive training effects should improve user knowledge, as well as empathy toward and attitudes about resident behaviors, and self-efficacy to deal with challenging situations.

At posttest only, treatment group participants also responded to items assessing their satisfaction with the program and its design.

VST Self-efficacy.—

Fourteen items assessed participant confidence to react appropriately if faced with the depicted situation. Response options were recorded on a 7-point Likert scale (1 = not at all confident to 7 = extremely confident). A mean composite score was computed across the items and showed excellent internal reliability (α = .97) and good test–retest reliability in the control condition from T2 to T3 (r = .63).

VST Knowledge.—

Ten items were used to assess a participant’s knowledge of seven separate video situations. Three additional items assessed knowledge of photos showing correct and incorrect ways to respond to physical aggression from a resident (e.g., resident hair grab: one correct and two incorrect photos). The number of correct items was summed and divided by 13 to indicate total percent of knowledge items correct.

Self-efficacy.—

Eleven items assessed participant confidence in their ability to apply the concepts taught in the program (e.g., How confident are you in your ability to redirect a resident who is acting aggressively?). Response options were recorded on a 7-point Likert scale (1 = not at all confident to 7 = extremely confident). A mean composite score was computed across the 11 items, and it showed excellent internal reliability (α = .93) and test–retest reliability in the control condition from T1 to T2 (r = .76).

Attitudes.—

Five items were used to assess participants’ attitudes toward resident actions (e.g., I believe that residents act aggressively because they have unmet needs). Response options were recorded on a 7-point Likert scale (1 = completely disagree to 7 = completely agree). A mean composite score across the five items was computed and showed adequate internal reliability (α = .67) and good test–retest reliability in the control condition from T1 to T2 (r = .70).

Empathy.—

Four items were used to assess participants’ empathy toward a resident (Ray & Miller, 1994). Response options were recorded on a 7-point Likert scale (1 = completely disagree to 7 = completely agree). A mean composite score across the four items was computed and showed adequate internal reliability (α = .62) and good test–retest reliability in the control condition from T1 to T2 (r = .70).

User Acceptance.—

At T2, the treatment group assessment included 14 items to assess user program acceptance. Four items asked users to rate the training program on a 7-point scale (1 = not at all to 7 = extremely positive) in terms of helpfulness, enjoyability, recommendability, and satisfaction. Nine items adapted from Internet evaluation instruments (Chambers, Connor, Diver, & McGonigle, 2002; Vandelanotte, De Bourdeaudhuij, Sallis, Spittaels, & Brug, 2005) were included to elicit responses about the program’s functionality, credibility, and usability. On these items, users were asked to agree or disagree with statements by responding on a 5-point scale (1 = strongly disagree to 5 = strongly agree).

Data Analysis

The three-panel randomized design allowed evaluation of both the immediate between-condition effects and their maintenance. Immediate effects were assessed from T1 to T2 for survey measures and at T2 for the post-intervention–only VST measures. Maintenance effects were assessed from T1 to T3 for survey measures and at T3 for the post-intervention–only VST measures. For survey measures, the immediate and maintenance effects were evaluated with the T2 and T3 scores, respectively, using analysis of covariance (ANCOVA) models that adjusted for the pre-intervention (T1) score. For the VST post-intervention–only measures, the immediate and maintenance effects were evaluated with independent t tests at T2 and T3, respectively.
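As an illustration of what "adjusted for the pre-intervention (T1) score" means, the following minimal sketch computes adjusted posttest means for a two-group, one-covariate ANCOVA. It assumes a common within-group slope; the function name and the small data set are hypothetical and are not the study's data or analysis code.

```python
from statistics import mean


def adjusted_means(pre_tx, post_tx, pre_ctrl, post_ctrl):
    """Return (treatment, control) posttest means adjusted for pretest scores.

    Each group's posttest mean is shifted along the pooled within-group
    regression slope to the grand mean of the pretest covariate.
    """
    groups = [(pre_tx, post_tx), (pre_ctrl, post_ctrl)]
    sxy = 0.0  # pooled within-group cross-products of pretest and posttest
    sxx = 0.0  # pooled within-group sum of squares of pretest
    for pre, post in groups:
        mx, my = mean(pre), mean(post)
        sxy += sum((x - mx) * (y - my) for x, y in zip(pre, post))
        sxx += sum((x - mx) ** 2 for x in pre)
    slope = sxy / sxx
    grand_pre = mean(pre_tx + pre_ctrl)
    return tuple(mean(post) - slope * (mean(pre) - grand_pre) for pre, post in groups)


# Hypothetical 7-point composite scores, for illustration only.
pre_tx, post_tx = [4.0, 5.0, 6.0], [5.0, 6.0, 7.0]
pre_ctrl, post_ctrl = [5.0, 6.0, 7.0], [5.0, 6.0, 7.0]
tx_adj, ctrl_adj = adjusted_means(pre_tx, post_tx, pre_ctrl, post_ctrl)
print(tx_adj, ctrl_adj)
```

In this toy example the raw posttest means are equal, but the treatment group started lower at pretest, so its adjusted mean is higher than the control group's. This is the kind of baseline correction the ANCOVA models provide.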

Despite the low rates of missing data (0%–5%), we employed an intent-to-treat analysis, using maximum likelihood estimates to impute missing data because this approach produces more accurate and efficient parameter estimates than list-wise deletion or last-observation-carried-forward (Schafer & Graham, 2002). Effect size computations complement inferential statistics (i.e., p values) by estimating the strength of the relationship of variables in a statistical population. Effect sizes are reported as Cohen’s (1988) d-statistic (0.20 a small effect, 0.50 medium, and 0.80 large). For ANCOVA models, d was calculated by dividing the difference between the treatment and control adjusted posttest means by the pooled standard deviation; for independent t test models, d was calculated by dividing the difference between the treatment and control post-intervention–only means by the pooled standard deviation.
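The effect size calculation for the independent t test models can be sketched as follows. This is a minimal illustration rather than the analysis code used in the study; the function names are assumptions, and the inputs are the rounded T2 VST knowledge means and standard deviations from Table 2.

```python
from math import sqrt


def pooled_sd(sd1, n1, sd2, n2):
    """Pooled standard deviation of two independent groups."""
    return sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))


def cohens_d(mean1, sd1, n1, mean2, sd2, n2):
    """Standardized mean difference (group 1 minus group 2)."""
    return (mean1 - mean2) / pooled_sd(sd1, n1, sd2, n2)


# T2 VST knowledge: treatment (n = 80) versus control (n = 79), rounded values from Table 2.
d_knowledge = cohens_d(0.68, 0.19, 80, 0.42, 0.15, 79)
print(round(d_knowledge, 2))
```

With these rounded inputs the result is roughly 1.5, a large effect by Cohen's convention. It falls slightly below the reported value of 1.55, which is the sort of difference that rounding of the published means and standard deviations could produce.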

Ancillary analysis for the treatment participants included dose–response (i.e., did greater program usage result in greater improvement in study outcomes) and descriptive summaries of program usability, impact, and user satisfaction. To evaluate effects of dose–response, change scores (defined as the posttest measure minus the pretest measure) on survey measures were correlated with total time of program use (in minutes). Effect sizes are reported as Pearson product–moment correlation coefficients and interpreted with Cohen’s (1988) convention of small (r = .10), medium (r = .30), and large (r = .50). For this within-subject analysis of the 80 treatment participants, the study had adequate power (80%) to detect significant correlations (at p < .05) of .31, that is, medium effect sizes. Meta-analytic studies have shown effect sizes as low as r = .12 to be educationally meaningful (Wolf, 1986). Thus, for the within-subject ancillary analysis, correlations greater than .12 were interpreted as meaningful.
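The stated power can be checked approximately with the Fisher z transformation. The sketch below is an approximation under the assumption of a two-sided test at α = .05; it is not necessarily the procedure the authors used, and the function name is hypothetical.

```python
from math import atanh, sqrt
from statistics import NormalDist


def correlation_power(r, n, alpha=0.05):
    """Approximate two-sided power to detect a population correlation r with n cases."""
    z = NormalDist()
    # Fisher z of r divided by its standard error, 1 / sqrt(n - 3).
    effect = atanh(r) * sqrt(n - 3)
    z_crit = z.inv_cdf(1 - alpha / 2)
    # Probability of rejecting the null hypothesis in either tail.
    return (1 - z.cdf(z_crit - effect)) + z.cdf(-z_crit - effect)


power = correlation_power(0.31, 80)
print(round(power, 2))
```

Under this approximation, a sample of 80 gives power of about .80 for r = .31, which agrees with the figure reported above.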

Results

Participants

The informational Web site was visited by a total of 2,067 potential participants, 1,569 of whom accessed the screening instrument and 471 of whom finished it. Two hundred and thirty-three individuals were screened in, but 21 were subsequently eliminated as being fraudulent (e.g., providing conflicting demographic information) and 31 were disqualified for other reasons (e.g., not responding to the “opt-in e-mail” or having participated in other similar ORCAS research studies), leaving a total of 181 qualified potential participants. A total of 159 of these individuals consented and completed the T1 assessment.

As shown in Table 1, the sample of 159 participants was predominantly female and had at least graduated from high school. A majority were Caucasian, 21–45 years of age, and had household incomes of less than $40,000.

Table 1. Part A Study Demographic Characteristics by Study Condition

| Characteristic | Treatment (n = 80): N | Treatment (n = 80): % | Control (n = 79): N | Control (n = 79): % |
| --- | --- | --- | --- | --- |
| Sex (% female) | 70 | 87.5 | 68 | 86.1 |
| Age | | | | |
| 18–20 years old | 9 | 11.3 | 1 | 1.3 |
| 21–35 years old | 30 | 37.5 | 36 | 45.6 |
| 36–45 years old | 23 | 28.7 | 16 | 20.3 |
| 46–55 years old | 11 | 13.8 | 19 | 24.1 |
| 55 or older | 7 | 8.8 | 7 | 8.9 |
| Race | | | | |
| Black or African American | 18 | 22.5 | 20 | 25.3 |
| Asian | 5 | 6.3 | 0 | 0.0 |
| American Indian/Native American | 1 | 1.3 | 0 | 0.0 |
| Native Hawaiian/Pacific Islander | 1 | 1.3 | 0 | 0.0 |
| White or Caucasian | 48 | 60.0 | 53 | 67.1 |
| Mixed race/other | 7 | 8.8 | 6 | 7.6 |
| Annual household income | | | | |
| Less than $20,000 | 22 | 27.5 | 12 | 15.2 |
| $20,000–$39,999 | 32 | 40.0 | 43 | 54.4 |
| $40,000–$59,999 | 13 | 16.3 | 12 | 15.2 |
| $60,000–$79,999 | 6 | 7.5 | 3 | 3.8 |
| $80,000–$99,999 | 5 | 6.3 | 7 | 8.9 |
| More than $100,000 | 2 | 2.5 | 2 | 2.5 |
| Highest level of education | | | | |
| Less than high school diploma | 0 | 0.0 | 1 | 1.3 |
| High school diploma or GED | 15 | 18.8 | 15 | 19.0 |
| Some college | 54 | 67.5 | 47 | 59.5 |
| BA/BS | 10 | 12.5 | 13 | 16.5 |
| Graduate degree | 1 | 1.3 | 3 | 3.8 |

Baseline Equivalency and Attrition Analyses

Study treatment condition was compared with the demographic characteristics shown in Table 1 and the baseline assessment of all study outcome measures (see Table 2). No significant differences were found (at p < .05), suggesting that randomization produced initially equivalent groups. Of the 159 study participants, 151 (95%) completed all three assessment surveys, 6 (4%) completed two surveys, and 2 (1%) completed one survey. Participants who completed all three surveys were compared with those who completed one or two surveys on study condition, demographic characteristics, and all T1 outcome measures. Attrition was not significantly related to any of the measures, suggesting that dropping out of the study did not bias results.

Table 2. Part A Descriptive Statistics for Study Outcomes

| Measure | Treatment T1: M | Treatment T1: SD | Treatment T2: M | Treatment T2: SD | Treatment T3: M | Treatment T3: SD | Control T1: M | Control T1: SD | Control T2: M | Control T2: SD | Control T3: M | Control T3: SD |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Self-efficacy | 5.04 | 0.92 | 5.72 | 0.74 | 5.99 | 0.66 | 5.20 | 1.00 | 5.35 | 1.00 | 5.51 | 0.99 |
| Empathy | 5.55 | 0.98 | 5.79 | 0.87 | 5.70 | 0.93 | 5.50 | 1.05 | 5.52 | 1.07 | 5.44 | 1.17 |
| Attitudes | 5.62 | 0.73 | 6.15 | 0.66 | 6.11 | 0.72 | 5.47 | 0.91 | 5.64 | 0.81 | 5.72 | 0.76 |
| VST self-efficacy | NA | NA | 5.44 | 0.94 | 5.59 | 0.87 | NA | NA | 4.91 | 1.05 | 5.19 | 1.06 |
| VST knowledge | NA | NA | 0.68 | 0.19 | 0.71 | 0.17 | NA | NA | 0.42 | 0.15 | 0.46 | 0.16 |

Note: Treatment n = 80; control n = 79. NA = not applicable; VST = video situation test.

Immediate Effects

The top panel in Table 3 shows the results of the immediate effects of the online program. Results from the T1 to T2 ANCOVA models show significant group differences at T2 in self-efficacy, empathy, and attitudes. Comparison of the post-intervention means (T2) adjusted for pre-intervention scores (T1) shows greater gains by the treatment condition for every measure compared with the control condition. Differences correspond to medium to large effect sizes for self-efficacy and attitudes (d = 0.78 and 0.71, respectively) and small effects for empathy (d = 0.33). Results from the independent t test for the post-intervention–only VST measures show the treatment condition, compared with the control condition, had significantly greater means on self-efficacy (5.44 and 4.91, respectively) and knowledge (0.68 and 0.42, respectively). Associated effect sizes were medium (d = 0.54) and large (d = 1.55) for self-efficacy and knowledge, respectively.

Table 3. Part A Results of Immediate and Maintenance Effects From Analysis of Covariance (ANCOVA) and Independent t Tests

Immediate study effects

| Survey measure (T1–T2 ANCOVA models) | Adjusted meanᵃ: TX | Adjusted meanᵃ: CNT | F value | p value | d | VST measure (T2 independent t tests) | t value | p value | d |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Self-efficacy | 5.78 | 5.30 | 20.73 | <.001 | 0.78 | Self-efficacy | 3.39 | .001 | 0.54 |
| Empathy | 5.78 | 5.54 | 4.09 | .045 | 0.33 | Knowledge | 9.80 | <.001 | 1.55 |
| Attitudes | 6.12 | 5.67 | 19.44 | <.001 | 0.71 | | | | |

Maintenance of study effects

| Survey measure (T1–T3 ANCOVA models) | Adjusted meanᵃ: TX | Adjusted meanᵃ: CNT | F value | p value | d | VST measure (T3 independent t tests) | t value | p value | d |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Self-efficacy | 6.03 | 5.47 | 24.13 | <.001 | 0.79 | Self-efficacy | 3.53 | .001 | 0.42 |
| Empathy | 5.68 | 5.45 | 3.32 | .071 | 0.29 | Knowledge | 9.31 | <.001 | 1.53 |
| Attitudes | 6.09 | 5.74 | 9.81 | .002 | 0.50 | | | | |

Notes: CNT = control; d = Cohen’s (1988) d-statistic; TX = treatment; VST = video situation test.

ᵃPost-intervention means adjusted for pre-intervention scores. All F statistics evaluated with 1 and 158 df. All t statistics evaluated with 157 df.

Maintenance Effects

The bottom panel in Table 3 shows the results for the maintenance of the significant program effects demonstrated in the immediate phase. The T1–T3 ANCOVA models show significant group differences at T3 in self-efficacy and attitudes, whereas the group difference in empathy was no longer significant (p = .071). Comparison of the post-intervention means (T3) adjusted for pre-intervention scores (T1) shows greater gains by the treatment condition compared with the control condition for each of the measures. Associated effect sizes were large (d = 0.79), medium (d = 0.50), and small (d = 0.29) for self-efficacy, attitudes, and empathy, respectively. Results from the independent t test for the post-intervention–only VST measures show that the treatment condition, compared with the control condition, had significantly greater means on self-efficacy (5.59 and 5.19, respectively) and knowledge (0.71 and 0.46, respectively). Associated effect sizes were small to medium (d = 0.42) and large (d = 1.53) for self-efficacy and knowledge, respectively. Taken together, results indicate that, with the exception of empathy, the significant group differences demonstrated in the immediate phase of the study were maintained at the two-month follow-up. Observed effect sizes between study phases suggest stability in the magnitude of the effects for self-efficacy (both survey and VST), empathy, and VST knowledge, but slight degradation in magnitude of effects for attitudes.

Program Usage and Dose–Response Analysis

At Visit 1, most treatment participants (n = 79, 98%) viewed both courses, and all participants saw at least one course. The average amount of time spent at Visit 1 was 65.8 min (SD = 32.1). At Visit 2, most treatment participants (n = 68, 85%) viewed all four courses, one (1%) viewed three courses, two (3%) viewed one course, and eight (10%) did not view any course. The average amount of time spent at Visit 2 was 31.5 min (SD = 21.0). The average amount of time spent on the program, across Visits 1 and 2, was 97.3 min (SD = 46.9 min).

To assess dose–response, change scores (defined as the posttest measure minus the pretest measure) on survey measures were correlated with total time of program use. Effect sizes in the small to medium range were found between time of program usage and improvement in self-efficacy (r = .22, p = .052) and empathy (r = .22, p = .055), and a small effect size was found for greater improvements in attitudes (r = .22, p = .055). Time spent using the program was also correlated with the post-intervention scores for VST self-efficacy (r = .18, p = .138) and VST knowledge (r = .49, p < .001), representing small and large effect sizes, respectively. Taken together, results suggest that treatment participants who invested more time using the program showed modest increases in study outcomes compared with those who used the program less.
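The dose–response computation can be sketched as a Pearson correlation between change scores and minutes of program use. The example below is illustrative only: the function name and all values are hypothetical and do not come from the study data.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


# Hypothetical participants: total minutes of program use and 7-point composite scores.
minutes = [40.0, 75.0, 90.0, 120.0, 150.0]
pretest = [5.0, 4.5, 5.5, 5.0, 4.0]
posttest = [5.2, 5.0, 6.0, 6.0, 5.5]

# Change score = posttest measure minus pretest measure.
change = [post - pre for pre, post in zip(pretest, posttest)]
r = pearson_r(minutes, change)
print(round(r, 2))
```

A positive r in such an analysis indicates that participants who spent more time in the program tended to show larger pre-to-post gains.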

Program Acceptance

At the end of the T2 assessment, treatment participants were asked about the program usability and about the impact the program would have on them if the program were made available to all company employees (see Table 4). Participants were asked to respond on a 5-point scale (1 = strongly disagree to 5 = strongly agree) to how much they agreed with each of the statements. An overall usability score was computed across the eight items, and a mean score of 4.50 (SD = 0.50) indicated the participants agreed to a high degree that the program was useful. Likewise, an overall impact score was computed across the seven impact items, and a mean score of 4.20 (SD = 0.63) indicated the participants agreed that the program would be beneficial to them if made available to their entire company. Finally, two user satisfaction items were asked and rated on a 7-point scale (1 = not at all to 7 = extremely). Responses averaged 5.4 (SD = 0.8) for the first item (Overall, how satisfied were you with the training website?) and 6.4 (SD = 0.9) for the second item (How likely is it that you would recommend the training site to a co-worker?), indicating high levels of overall satisfaction.

Table 4. Program Usability and Impact of the Web Site

| Item | Strongly disagree: N | Strongly disagree: % | Disagree: N | Disagree: % | Neither agree nor disagree: N | Neither agree nor disagree: % | Agree: N | Agree: % | Strongly agree: N | Strongly agree: % | M | SD |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Program usability | | | | | | | | | | | | |
| Everything is easy to understand | 1 | 1.3 | 1 | 1.3 | 0 | 0.0 | 27 | 35.5 | 47 | 61.8 | 4.6 | 0.7 |
| The training program has much that is of interest to me | 1 | 1.3 | 0 | 0.0 | 1 | 1.3 | 25 | 33.3 | 48 | 64.0 | 4.6 | 0.7 |
| I can apply the training content to my job | 1 | 1.3 | 0 | 0.0 | 0 | 0.0 | 22 | 29.3 | 52 | 69.3 | 4.7 | 0.6 |
| I learned a lot from the training | 1 | 1.3 | 0 | 0.0 | 1 | 1.3 | 23 | 30.3 | 51 | 67.1 | 4.6 | 0.7 |
| The video scenes in the training helped me learn | 1 | 1.3 | 0 | 0.0 | 2 | 2.6 | 27 | 35.5 | 46 | 60.5 | 4.5 | 0.7 |
| Using the training website was a good use of my time | 1 | 1.3 | 0 | 0.0 | 1 | 1.3 | 27 | 35.5 | 47 | 61.8 | 4.6 | 0.7 |
| The training website was easy to use | 0 | 0.0 | 1 | 1.3 | 3 | 3.9 | 22 | 28.9 | 50 | 65.8 | 4.6 | 0.6 |
| Compared to an in-service on a similar topic, I learn more using a training website like this | 0 | 0.0 | 0 | 0.0 | 16 | 21.3 | 25 | 33.3 | 34 | 45.3 | 4.2 | 0.8 |
| Impact: if the program were made available by employer | | | | | | | | | | | | |
| I would feel safer on the job | 0 | 0.0 | 0 | 0.0 | 9 | 12.0 | 32 | 42.7 | 34 | 45.3 | 4.3 | 0.7 |
| I would feel more satisfied with my job | 1 | 1.3 | 0 | 0.0 | 13 | 17.3 | 32 | 42.7 | 29 | 38.7 | 4.2 | 0.8 |
| I would feel like my company cares about me | 1 | 1.3 | 0 | 0.0 | 9 | 12.0 | 34 | 45.3 | 31 | 41.3 | 4.3 | 0.8 |
| I would feel more positive about my company | 1 | 1.4 | 1 | 1.4 | 8 | 10.8 | 29 | 39.2 | 35 | 47.3 | 4.3 | 0.8 |
| I would feel a greater commitment to my company | 1 | 1.4 | 1 | 1.4 | 21 | 28.4 | 30 | 40.5 | 21 | 28.4 | 3.9 | 0.9 |
| I would be able to provide better care for the residents | 0 | 0.0 | 0 | 0.0 | 3 | 4.0 | 24 | 32.0 | 48 | 64.0 | 4.6 | 0.6 |
| I would be more productive at my job | 0 | 0.0 | 0 | 0.0 | 18 | 24.0 | 32 | 42.7 | 25 | 33.3 | 4.1 | 0.8 |

Discussion

This project successfully developed and evaluated an Internet training program to teach NAs to work with residents exhibiting aggressive behaviors. Gains with medium to large effect sizes were achieved in mediators theoretically linked to behavior (Bandura, 1969, 1977; Malotte et al., 2000). Preliminary studies have shown immediate effects of Internet training for this population (e.g., Hobday et al., 2010; Irvine et al., 2003, 2007, in press; MacDonald & Walton, 2007). Results presented here demonstrate that training effects can be maintained for 2 months. The results are especially strong considering that 10% of the treatment group did not view any of the Visit 2 courses.

Empathy for residents also improved among trainees but was somewhat diminished at the two-month follow-up. Although preliminary, these results suggest that the training helped the NAs have more sympathy for the aggressive residents. Gains in empathy from training might help offset negative stereotypes (Hayman-White & Happell, 2005) and the other deleterious effects of resident aggression on care workers (Gates et al., 2011; Gillespie et al., 2010). The fact that the gains in empathy diminished over time may argue for periodic booster training sessions to help NAs maintain a positive perspective.

Taken together, these results suggest that the intervention had a substantial and clinically meaningful impact on the participants. Thus, this research extends previous reports about the effective use of Internet training for NAs and has implications for aggression training delivery in the LTC industry, which to date has paid no attention to Web training (Farrell & Cubit, 2005; Livingston et al., 2010).

Although research on Internet professional training is still preliminary, a recent meta-analysis indicates that Internet interactivity and feedback can enhance learning outcomes and have the potential to improve instructional efficacy (Cook, Levinson, & Garside, 2010). Computerized training such as that tested in this research offers presentation fidelity of theoretically sound instructional designs, video behavior modeling, and interactive testing to enhance learning. We believe that individualized, self-paced education and skills practice are more likely to hold the user’s attention than in-service training, where the learner’s attention may wander. Studies show high program acceptance by NAs (Irvine et al., 2007, in press), by a small sample of licensed health professionals (Irvine et al., in press), and by nurses and non-caregiving staff of a nursing home (Irvine, Beatty, & Seeley, 2011). Because Internet training requires minimal supervision, is available 24/7, and provides automated record keeping, this technology should have much to offer to the LTC industry.

Potential barriers to LTC adoption of Internet training are, however, not insignificant. LTCs typically rely on in-service instruction by nurse trainers or experts. Integration of Internet-based instruction would cast nurse trainers into a different and more technological role. They would spend time scheduling individual users, assisting as needed with logins, and troubleshooting user errors or computer problems. This might strain the comfort level of some nurse trainers, even if they are frequent computer and Internet users. Additionally, LTC buildings may not be designed to accommodate computers. Older LTCs are not wired for computers, and they may have limited space for training stations or for securing computer equipment. Even if management supports the equipment expenses, costs of individual training programs might be a constraint. Interactive programs with video behavior modeling may be effective, but they are expensive to produce.

Although these are significant challenges, pilot research has shown positive training effects in real-world LTC environments. In one study, a nursing home owner purchased two computers for $500 each, connected them to the Internet via the cable TV provider, and scheduled nurses and all non-caregiving staff for online dementia training within a two-week period (Irvine, Beatty, et al., 2011). In a study now being prepared for publication, two $810 computers were purchased for each of six LTCs (Irvine, Billow, et al., 2011). The computers were connected to the Internet via the cable TV provider, and 89 participants used the same Internet aggression training program tested in this research, with positive results. These experiences lead us to believe that the Internet can be an integral part of future training strategies. Pricing of Internet training programs will probably depend on the volume of users, the number and duration of courses licensed, and who hosts the program on the Internet. We believe, however, that acceptance of this technology by the LTC industry will require psychological as well as logistical readjustment to move beyond traditional in-service training. And change is often not easy.

Another issue of potential concern involves NAs as adult learners. Although continuing education via the Internet for nurses and licensed staff is readily available, NAs tend to be less well educated (Glaister & Blair, 2008; Molinari et al., 2008) and might be perceived to be less well suited for computerized training. Will NAs even know how to use a computer mouse or navigate through a training program? We found no research on this topic, but our experience indicates that the answer is a qualified “yes.” In a 2003 unpublished study, 21% of the CNAs requested assistance using a computer when arriving at the computer laboratory. In another study, 15% of NAs requested assistance (Irvine, Billow, et al., 2011). In both cases, the NAs expressed apprehension and needed initial instruction with mouse use, but required only temporary help with navigation (e.g., clicking the Next button to advance screens). Because Internet use by high school students is increasingly common, we believe that it will help prepare future NAs for computerized training formats. Given the decreasing costs of computer technology and increasing access to the Web, Internet-based professional training appears to be part of the solution for much-needed improvements in training NAs in the LTC industry.

Although the research presented here is promising, it has limitations. First, the research design did not permit analysis of whether the training effects were maintained for more than two months. Longer-term follow-up and in vivo observation would provide more rigorous evidence of training effects. Second, although participants were screened to qualify and then randomized, they participated on the Internet without direct contact with the research team, so we cannot verify that they met the screening criteria. Third, we speculate that the participants may have included more sophisticated computer users than is representative of the NA population as a whole. NAs tend to be less educated and work for relatively low wages compared with the U.S. population, and thus, they might be less likely to own computers or be facile on the Internet. Finally, the participants roughly fit the age and gender characteristics of NAs nationally, but the proportion of people of color in the sample was low (National Nursing Home Survey, 2004). It is not known if the training would have similar effects on a more diverse population of NAs.

Implications for Practice

This research demonstrates the potential of Internet-based professional training to provide substantive preparation that can help care staff prevent aggressive resident behavior or, if necessary, react to resident aggression in ways that are safe for the resident as well as the caregiver. The Internet has great potential to provide effective individualized training. The logistics of providing computers for the training are not substantial, but integrating an Internet training strategy into the daily work life of an LTC is a challenge still to be addressed.

Funding

This study was funded by a grant from the National Institute on Aging to the Oregon Center for Applied Science (R44AG024675).

Acknowledgments

This project required the efforts of a multidisciplinary team of developers, programmers, and consultants. Tammy Salyer and Bess Day led on evaluation logistics. Program development was supported by Gretchen Boutin, Vicky Gelatt, Elizabeth Greene, Teri Gutierrez, Rob Hudson, Beth Machamer, Dave Merwin, Percy Perez-Pinedo, and Diana Robson. Christine Osterberg, RN, Joanne Rader, RN, and John Booker, CNA, consulted on behavioral issues and presentation integrity. Peter Sternberg was the expert on, and primary video model for, behavioral responses to resident aggression. Dennis Ary made helpful comments on earlier versions of the manuscript.

References

- Bandura A. Principles of behavior modification. New York: Holt, Rinehart and Winston; 1969.

- Bandura A. Self-efficacy: Toward a unifying theory of behavioral change. Psychological Review. 1977;84:191–215. doi:10.1037/0033-295X.84.2.191.

- Breen A, Swartz L, Flisher AJ, Joska JA, Corrigall J, Plaatjies L, et al. Experience of mental disorder in the context of basic service reforms: The impact on caregiving environments in South Africa. International Journal of Environmental Health Research. 2007;17:327–334. doi:10.1080/09603120701628388.

- Brooker D. Person-centred dementia care: Making services better. London: Jessica Kingsley; 2007.

- Chambers M, Connor S, Diver M, McGonigle M. Usability of multimedia technology to help caregivers prepare for a crisis. Telemedicine Journal and E-Health. 2002;8:343–347. doi:10.1089/15305620260353234.

- Cohen J. Statistical power analysis for the behavioral sciences. 2nd ed. Hillsdale, NJ: Erlbaum & Associates; 1988.

- Cook DA, Levinson AJ, Garside S. Time and learning efficiency in Internet-based learning: A systematic review and meta-analysis. Advances in Health Sciences Education: Theory and Practice. 2010;15:755–770. doi:10.1007/s10459-010-9231-x.

- Donat DC, McKeegan C. Employing behavioral methods to improve the context of care in a public psychiatric hospital: Some applications to health care management. Psychiatric Rehabilitation Journal. 2003;27:177–181. doi:10.2975/27.2003.178.181.

- Farrell G, Cubit K. Nurses under threat: A comparison of content of 28 aggression management programs. International Journal of Mental Health Nursing. 2005;14:44–53. doi:10.1111/j.1440-0979.2005.00354.x.

- Fishbein M. The role of theory in HIV prevention. AIDS Care. 2000;12:273–278. doi:10.1080/09540120050042918.

- Gates DM, Fitzwater E, Deets C. Testing the reliability and validity of the assault log and violence prevention checklist. Journal of Gerontological Nursing. 2003;29:18–23. doi:10.3928/0098-9134-20030801-09. Retrieved from http://www.slackjournals.com/jgn

- Gates DM, Fitzwater E, Meyer U. Violence against caregivers in nursing homes. Expected, tolerated, and accepted. Journal of Gerontological Nursing. 1999;25:12–22. doi:10.3928/0098-9134-19990401-05. Retrieved from http://www.slackjournals.com/jgn

- Gates DM, Gillespie G, Succop P. Violence against nurses and its impact on stress and productivity. Nursing Economic$. 2011;29:59–65.

- Gates DM, Ross CS, McQueen L. Violence against emergency department workers. Journal of Emergency Medicine. 2006;31:331–337. doi:10.1016/j.jemermed.2005.12.028.

- Gillespie GL, Gates D, Miller M, Howard L. Violence against ED workers in a pediatric hospital. Cincinnati, OH: National Institute of Occupational Safety and Health and the Health Pilot Research Project Training Program of the University of Cincinnati Education and Research Center; 2007.

- Gillespie GL, Gates DM, Miller M, Howard PK. Violence against healthcare workers in a pediatric emergency department. Advanced Emergency Nursing Journal. 2010;32:68–82. doi:10.1097/TME.0b013e3181c8b0b4.

- Glaister JA, Blair C. Improved education and training for nursing assistants: Keys to promoting the mental health of nursing home residents. Issues in Mental Health Nursing. 2008;29:863–872. doi:10.1080/01612840802182912.

- Hayman-White K, Happell B. Nursing students’ attitudes toward mental health nursing and consumers: Psychometric properties of a self-report scale. Archives of Psychiatric Nursing. 2005;19:184–193. doi:10.1016/j.apnu.2005.05.004.

- Hobday JV, Savik K, Smith S, Gaugler JE. Feasibility of Internet training for care staff of residents with dementia: The CARES program. Journal of Gerontological Nursing. 2010;36:13–21. doi:10.3928/00989134-20100302-01.

- Irvine AB, Ary DV, Bourgeois MS. An interactive multimedia program to train professional caregivers. Journal of Applied Gerontology. 2003;22:269–288. doi:10.1177/0733464803022002006.

- Irvine AB, Beatty JA, Seeley JR. Non-direct care staff training to work with residents with dementia. Health Care Management Review. 2011. Manuscript submitted for publication.

- Irvine AB, Billow MB, Bourgeois M, Seeley JR. Mental illness training for long term care staff. Journal of the American Medical Directors Association. In press. doi:10.1016/j.jamda.2011.01.015.

- Irvine AB, Billow M, Gates D, Fitzwater E, Seeley JR, Bourgeois M. Web training in long term care for aggressive resident behaviors. 2011. Manuscript in preparation. doi:10.1093/geront/gnr069.

- Irvine AB, Bourgeois M, Billow M, Seeley JR. Internet training for nurse aides to prevent resident aggression. Journal of the American Medical Directors Association. 2007;8:519–526. doi:10.1016/j.jamda.2007.05.002.

- Livingston JD, Verdun-Jones S, Brink J, Lussier P, Nicholls T. A narrative review of the effectiveness of aggression management training programs for psychiatric hospital staff. Journal of Forensic Nursing. 2010;6:15–28. doi:10.1111/j.1939-3938.2009.01061.x.

- MacDonald C, Stodel E, Casimiro L. Online dementia care training for healthcare teams in continuing and long-term care homes: A viable solution for improving quality of care and quality of life for residents. International Journal on E-Learning. 2006;5:27. Retrieved from http://www.aace.org/pubs/ijel/

- MacDonald CJ, Walton R. E-Learning Education Solutions for Caregivers in Long-Term Care (LTC) Facilities: New Possibilities. Education for Health. 2007;7:85 (online). Retrieved from http://www.educationforhealth.net/articles/showarticlenew.asp?ArticleID=85

- Malotte CK, Jarvis B, Fishbein M, Kamb M, Iatesta M, Hoxworth T, et al. Stage of change versus an integrated psychosocial theory as a basis for developing effective behaviour change interventions. The Project RESPECT Study Group. AIDS Care. 2000;12:357–364. doi:10.1080/09540120050043016.

- Manderson D, Schofield V. How caregivers respond to aged-care residents’ aggressive behaviour. Nursing New Zealand. 2005;11:18–19. Retrieved from http://www.kaitiakiads.co.nz/

- Molinari VA, Merritt SS, Mills WL, Chiriboga DA, Conboy A, Hyer K, et al. Serious mental illness in Florida nursing homes: Need for training. Gerontology & Geriatrics Education. 2008;29:66–83. doi:10.1080/02701960802074321.

- National Nursing Home Survey. National Nursing Home Survey, 2004–2005 Nursing assistant tables—Estimates. Table 1. 2004. Retrieved April 1, 2011, from http://www.cdc.gov/nchs/nnhs/nursing_assistant_tables_estimates.htm#DemoCareer

- Ray EB, Miller KI. Social support, home/work stress, and burnout: Who can help? Journal of Applied Psychology. 1994;30:357–373.

- Rosen J, Mulsant BH, Kollar M, Kastango KB, Mazumdar S, Fox D. Mental health training for nursing home staff using computer-based interactive video: A 6-month randomized trial. Journal of the American Medical Directors Association. 2002;3:291–296. doi:10.1097/01.JAM.0000027201.90817.21.

- Schafer J, Graham J. Missing data: Our view of the state of the art. Psychological Methods. 2002;7:147–177. doi:10.1037/1082-989X.7.2.147.

- Shaw MM. Aggression toward staff by nursing home residents: Findings from a grounded theory study. Journal of Gerontological Nursing. 2004;30:43–54. doi:10.3928/0098-9134-20041001-11. Retrieved from http://www.slackjournals.com/jgn

- Snyder LA, Chen PY, Vacha-Haase T. The underreporting gap in aggressive incidents from geriatric patients against certified nursing assistants. Violence and Victims. 2007;22:367–379. doi:10.1891/088667007780842784.

- Squillace MR, Remsburg RE, Harris-Kojetin LD, Bercovitz A, Rosenoff E, Han B. The National Nursing Assistant Survey: Improving the evidence base for policy initiatives to strengthen the certified nursing assistant workforce. The Gerontologist. 2009;49:185–197. doi:10.1093/geront/gnp024.

- Tak S, Sweeney MH, Alterman T, Baron S, Calvert GM. Workplace assaults on nursing assistants in US nursing homes: A multilevel analysis. American Journal of Public Health. 2010;100:1938–1945. doi:10.2105/AJPH.2009.185421.

- Vandelanotte C, De Bourdeaudhuij I, Sallis J, Spittaels H, Brug J. Efficacy of sequential or simultaneous interactive computer-tailored interventions for increasing physical activity and decreasing fat intake. Annals of Behavioral Medicine. 2005;29:138–146. doi:10.1207/s15324796abm2902_8.

- Wolf FM. Meta-analysis: Quantitative methods for research synthesis. Beverly Hills, CA: Sage; 1986.