Abstract

The concept of reinforcement is at least incomplete and almost certainly incorrect. An alternative way of organizing our understanding of behavior may be built around three concepts: allocation, induction, and correlation. Allocation is the measure of behavior and captures the centrality of choice: All behavior entails choice and consists of choice. Allocation changes as a result of induction and correlation. The term induction covers phenomena such as adjunctive, interim, and terminal behavior—behavior induced in a situation by occurrence of food or another Phylogenetically Important Event (PIE) in that situation. Induction resembles stimulus control in that no one-to-one relation exists between induced behavior and the inducing event. If one allowed that some stimulus control were the result of phylogeny, then induction and stimulus control would be identical, and a PIE would resemble a discriminative stimulus. Much evidence supports the idea that a PIE induces all PIE-related activities. Research also supports the idea that stimuli correlated with PIEs become PIE-related conditional inducers. Contingencies create correlations between “operant” activity (e.g., lever pressing) and PIEs (e.g., food). Once an activity has become PIE-related, the PIE induces it along with other PIE-related activities. Contingencies also constrain possible performances. These constraints specify feedback functions, which explain phenomena such as the higher response rates on ratio schedules in comparison with interval schedules. Allocations that include a lot of operant activity are “selected” only in the sense that they generate more frequent occurrence of the PIE within the constraints of the situation; contingency and induction do the “selecting.”

Keywords: reinforcement, contingency, correlation, induction, inducing stimulus, inducer, allocation, Phylogenetically Important Event, reinstatement

This article aims to lay out a conceptual framework for understanding behavior in relation to environment. I will not attempt to explain every phenomenon known to behavior analysts; that would be impossible. Instead, I will offer this framework as a way to think about those phenomena. It is meant to replace the traditional framework, over 100 years old, in which reinforcers are supposed to strengthen responses or stimulus–response connections, and in which classical conditioning and operant conditioning are considered two distinct processes. Hopefully, knowledgeable readers will find nothing new herein, because the pieces of this conceptual framework were all extant, and I had only to assemble them into a whole. It draws on three concepts: (1) allocation, which is the measure of behavior; (2) induction, which is the process that drives behavior; and (3) contingency, which is the relation that constrains and connects behavioral and environmental events. Since none of these exists at a moment of time, they necessarily imply a molar view of behavior.

As I explained in earlier papers (Baum, 2001; 2002; 2004), the molar and molecular views are not competing theories; they are different paradigms (Kuhn, 1970). The decision between them is not made on the basis of data, but on the basis of plausibility and elegance. No experimental test can decide between them, because they are incommensurable; that is, they differ ontologically. The molecular view is about discrete responses, discrete stimuli, and contiguity between those events. It offers those concepts for theory construction. It was designed to explain short-term, abrupt changes in behavior, such as occur in cumulative records (Ferster & Skinner, 1957; Skinner, 1938). It does poorly when applied to temporally extended phenomena, such as choice, because its theories and explanations almost always resort to hypothetical constructs to deal with spans of time, which makes them implausible and inelegant (Baum, 1989). The molar view is about extended activities, extended contexts, and extended relations. It treats short-term effects as less extended, local phenomena (Baum, 2002; 2010; Baum & Davison, 2004). Since behavior, by its very nature, is necessarily extended in time (Baum, 2004), the theories and explanations constructed in the molar view tend to be simple and straightforward. Any specific molar or molecular theory may be invalidated by experimental test, but no one should think that the paradigm is thereby invalidated; a new theory may always be invented within the paradigm. The molar paradigm surpasses the molecular paradigm by producing theories and explanations that are more plausible and elegant.

The Law of Effect

E. L. Thorndike (2000/1911), when proposing the law of effect, wrote: “Of several responses made to the same situation, those which are accompanied or closely followed by satisfaction to the animal will, other things being equal, be more firmly connected with the situation, so that, when it recurs, they will be more likely to recur…” (p. 244).

Early on, it was subject to criticism. J. B. Watson (1930), ridiculing Thorndike's theory, wrote: “Most of the psychologists… believe habit formation is implanted by kind fairies. For example, Thorndike speaks of pleasure stamping in the successful movement and displeasure stamping out the unsuccessful movements” (p. 206).

Thus, as early as 1930, Watson was skeptical about the idea that satisfying consequences could strengthen responses. Still, the theory has persisted despite occasional criticism (e.g., Baum, 1973; Staddon, 1973).

Thorndike's theory became the basis for B. F. Skinner's theory of reinforcement. Skinner drew a distinction, however, between observation and theory. In his paper “Are theories of learning necessary?” Skinner (1950) wrote: “… the Law of Effect is no theory. It simply specifies a procedure for altering the probability of a chosen response.”

Thus, Skinner maintained that the Law of Effect referred to the observation that when, for example, food is made contingent on pressing a lever, lever pressing increases in frequency. Yet, like Thorndike, he had a theory as to how this came about. In his 1948 paper, “‘Superstition’ in the pigeon,” Skinner restated Thorndike's theory in a new vocabulary: “To say that a reinforcement is contingent upon a response may mean nothing more than that it follows the response … conditioning takes place presumably because of the temporal relation only, expressed in terms of the order and proximity of response and reinforcement.”

If we substituted “satisfaction” for “reinforcement” and “accompanied or closely followed” for “order and proximity,” we would be back to Thorndike's idea above.

Skinner persisted in his view that order and proximity between response and reinforcer were the basis for reinforcement; it reappeared in Science and Human Behavior (Skinner, 1953): “So far as the organism is concerned, the only important property of the contingency is temporal. The reinforcer simply follows the response… We must assume that the presentation of a reinforcer always reinforces something, since it necessarily coincides with some behavior” (p. 85).

From the perspective of the present, we know that Skinner's observation about contingency was correct. His theory of order and proximity, however, was incorrect, because a “reinforcer” doesn't “reinforce” whatever it coincides with. That doesn't happen in everyday life; if I happen to be watching television when a pizza delivery arrives, will I be inclined to watch television more? (Although, as we shall see, the pizza delivery matters, in that it adds value to, say, watching football on television.) It doesn't happen in the laboratory, either, as Staddon (1977), among others, has shown.

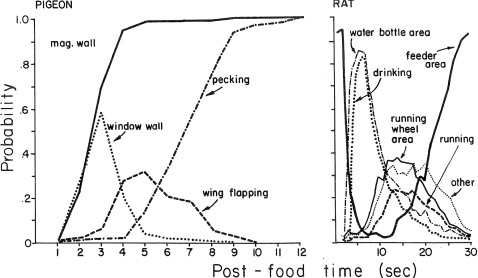

When Staddon and Simmelhag (1971) repeated Skinner's “superstition” experiment, they observed that many different activities occurred during the interval between food presentations. Significantly, the activities often were not closely followed by food; some emerged after the food and were gone by the time the food occurred. Figure 1 shows some data reported by Staddon (1977). The graph on the left shows the effects of presenting food to a hungry pigeon every 12 s. The activities of approaching the window wall of the chamber and wing flapping rose and then disappeared, undermining any notion that they were accidentally reinforced. The graph on the right shows the effects of presenting food to a hungry rat every 30 s. Early in the interval, the rat drank and ran, but these activities disappeared before they could be closely followed by food. Subsequent research has confirmed these observations many times over, contradicting the notion of strengthening by accidental contiguity (e.g., Palya & Zacny, 1980; Reid, Bacha, & Morán, 1993; Roper, 1978).

Fig 1.

Behavior induced by periodic food. Left: Activities of a pigeon presented with food every 12 s. Right: Activities of a rat presented with food every 30 s. Activities that disappeared before food delivery could not be reinforced. Reprinted from Staddon (1977).

Faced with results like those in Figure 1, someone theorizing within the molecular paradigm has at least two options. Firstly, contiguity between responses and food might be stretched to suggest that the responses are reinforced weakly at a delay. Secondly, food–response contiguity might be brought to bear by relying on the idea that food might elicit responses. For example, Killeen (1994) theorized that each food delivery elicited arousal, a hypothetical construct. He proposed that arousal jumped following food and then dissipated as time passed without food. Build-up of arousal was then supposed to cause the responses shown in Figure 1. As we shall see below, the molar paradigm avoids the hypothetical entity by relying instead on the extended relation of induction.

To some researchers, the inadequacy of contiguity-based reinforcement might seem like old news (Baum, 1973). One response to its inadequacy has been to broaden the concept of reinforcement to make it synonymous with optimization or adaptation (e.g., Rachlin, Battalio, Kagel, & Green, 1981; Rachlin & Burkhard, 1978). This redefinition, however, begs the question of mechanism. As Herrnstein (1970) wrote, “The temptation to fall back on common sense and conclude that animals are adaptive, i.e., doing what profits them most, had best be resisted, for adaptation is at best a question, not an answer” (p. 243). Incorrect though it was, the contiguity-based definition at least attempted to answer the question of how approximations to optimal or adaptive behavior might come about. This article offers an answer that avoids the narrowness of the concepts of order, proximity, and strengthening and allows plausible accounts of behavior both in the laboratory and in everyday life. Because it omits the idea of strengthening, one might doubt that this mechanism should be called “reinforcement” at all.

Allocation of Time among Activities

We see in Figure 1 that periodic food changes the allocation of time among activities like wing flapping and pecking or drinking and running. Some activities increase while some activities decrease. No notion of strengthening need enter the picture. Instead, we need only notice the changing allocation.

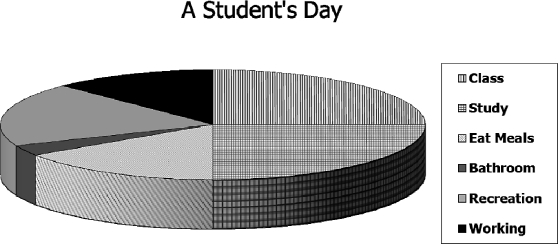

To be alive is to behave, and we may assume that in an hour's observation time one observes an hour's worth of behavior or that in a month of observation one observes a month's worth of behavior. Thus, we may liken allocation of behavior to cutting up a pie. Figure 2 illustrates the idea with a hypothetical example. The chart shows allocation of time in the day of a typical student. About 4 hr are spent attending classes, 4 hr studying, 2.5 hr eating meals, 0.5 hr in bathroom activities, 3 hr in recreation, and 2 hr working. The total amounts to 16 hr; the other 8 hr are spent in sleep. Since a day has only 24 hr, if any of these activities increases, others must decrease, and if any decreases, others must increase. As we shall see later, the finiteness of time is important to understanding the effects of contingencies.

Fig 2.

A hypothetical illustration of the concept of allocation. The times spent in various activities in a typical day of a typical student add up to 16 hr, the other 8 hr being spent in sleep. If more time is spent in one activity, less time must be spent in others, and vice versa.
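The finiteness of the time budget can be made concrete with a small sketch. The hours below are the hypothetical values from the student example; the function name `reallocate` and the proportional rescaling rule are assumptions made only for illustration, since the text says that other activities must change but not how the change is distributed.

```python
# Hypothetical time budget from the student example (hours per day).
allocation = {
    "classes": 4.0,
    "studying": 4.0,
    "meals": 2.5,
    "bathroom": 0.5,
    "recreation": 3.0,
    "working": 2.0,
    "sleep": 8.0,
}

DAY_HOURS = 24.0


def reallocate(budget, activity, new_hours):
    """Set one activity to new_hours and rescale the rest to fill the day.

    Proportional rescaling is an illustrative assumption, not a claim
    about how real allocations shift.
    """
    if not 0.0 <= new_hours <= DAY_HOURS:
        raise ValueError("new_hours must lie between 0 and 24")
    others_old = sum(h for a, h in budget.items() if a != activity)
    if others_old == 0:
        raise ValueError("no other activities to rescale")
    scale = (DAY_HOURS - new_hours) / others_old
    return {
        a: (new_hours if a == activity else h * scale)
        for a, h in budget.items()
    }


# Studying rises from 4 hr to 6 hr; every other activity must shrink.
after = reallocate(allocation, "studying", 6.0)
```

Whatever rule distributes the change, the total stays at 24 hr, which is the only property the argument relies on.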

Measuring the time spent in an activity may present challenges. For example, when exactly is a pigeon pecking a key or a rat pressing a lever? Attaching a switch to the key or lever allows one to gauge the time spent by the number of operations of the switch. Luckily, this method is generally reliable; counting switch operations and running a clock whenever the switch is operated give equivalent data (Baum, 1976). Although in the past researchers often thought they were counting discrete responses (pecks and presses), from the present viewpoint they were using switch operations to measure the time spent in continuous activities (pecking and pressing).

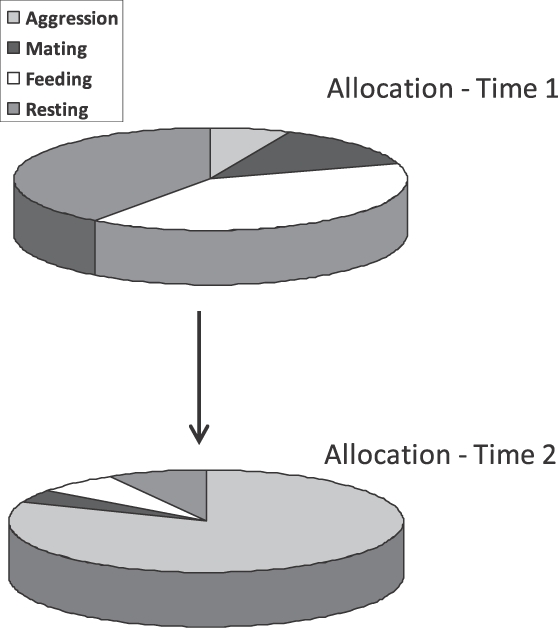

Apart from unsystematic variation that might occur across samples, allocation of behavior changes systematically as a result of two sources: induction and contingency. As we see in Figure 1, an inducing environmental event like food increases some activities and, because time is finite, necessarily decreases others. For example, food induces pecking in pigeons, electric shock induces aggression in rats and monkeys, and removal of food induces aggression in pigeons. Figure 3 shows a hypothetical example. Above (at Time 1) is depicted a pigeon's allocation of time among activities in an experimental situation that includes a mirror and frequent periodic food. The pigeon spends a lot of time eating and resting and might direct some aggressive or reproductive activity toward the mirror. Below (at Time 2) is depicted the result of increasing the interval between food deliveries. Now the pigeon spends a lot of time aggressing toward the mirror, less time eating, less time resting, and little time in reproductive activity.

Fig 3.

A hypothetical example illustrating change of behavior due to induction as change of allocation. At Time 1, a pigeon's activities in an experimental space exhibit Allocation 1 in response to periodic food deliveries. At Time 2, the interval between food deliveries has been lengthened, resulting in an increase in aggression toward a mirror. Other activities decrease necessarily.

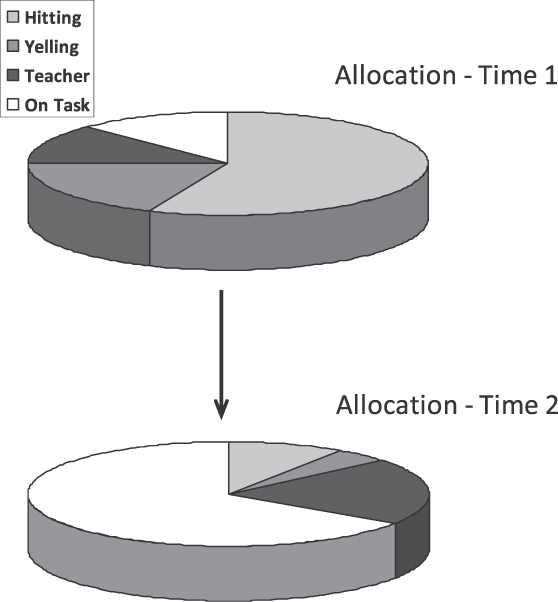

A contingency links an environmental event to an activity and results in an increase or decrease in the activity. For example, linking food to lever pressing increases the time spent pressing; linking electric shock to lever pressing usually decreases the time spent pressing. Figure 4 illustrates with a hypothetical example. The diagram above shows a child's possible allocation of behavior in a classroom. A lot of time is spent in hitting other children and yelling, but little time on task. Time is spent interacting with the teacher, but with no relation to being on task. The diagram below shows the result after the teacher's attention is made contingent on being on task. Now the child spends a lot of time on task, less time hitting and yelling, and a little more time interacting with the teacher—presumably now in a more friendly way. Because increase in one activity necessitates decrease in other activities, a positive contingency between payoff and one activity implies a negative contingency between payoff and other activities—the more yelling and hitting, the less teacher attention. A way to capture both of these aspects of contingency is to consider the whole allocation to enter into the contingency with payoff (Baum, 2002).

Fig 4.

A hypothetical example illustrating change of allocation due to correlation. At Time 1, a child's classroom activities exhibit Allocation 1, including high frequencies of disruptive activities. At Time 2, after the teacher's attention has been made contingent upon being on task, Allocation 2 includes more time spent on task and, necessarily, less time spent in disruptive activities.

Induction

The concept of induction was introduced by Evalyn Segal in 1972. To define it, she relied on dictionary definitions, “stimulating the occurrence of” and “bringing about,” and commented, “It implies a certain indirection in causing something to happen, and so seems apt for talking about operations that may be effective only in conjunction with other factors” (p. 2). In the molar view, that “indirection” is crucial, because the concept of induction means that, in a given context, the mere occurrence of certain events, such as food—i.e., inducers—results in occurrence of or increased time spent in certain activities. All the various activities that have at one time or another been called “interim,” “terminal,” “facultative,” or “adjunctive,” like those shown in Figure 1, may be grouped together as induced activities (Hineline, 1984). They are induced in any situation that includes events like food or electric shock, the same events that have previously been called “reinforcers,” “punishers,” “unconditional stimuli,” or “releasers.” Induction is distinct from the narrower idea of elicitation, which assumes a close temporal relation between stimulus and response. One reason for introducing a new, broader term is that inducers need have no close temporal relation to the activities they induce (Figure 1). Their occurrence in a particular environment suffices to induce activities, most of which are clearly related to the inducing event in the species' interactions with its environment—food induces food-related activity (e.g., pecking in pigeons), electric shock induces pain-related activity (e.g., aggression and running), and a potential mate induces courtship and copulation.

Induction and Stimulus Control

Induction resembles Skinner's concept of stimulus control. A discriminative stimulus has no one-to-one temporal relation with responding, but rather increases the rate or time spent in the activity in its presence. In present terms, a discriminative stimulus modulates the allocation of activities in its presence; it induces a certain allocation of activities. One may say that a discriminative stimulus sets the occasion or the context for an operant activity. Similarly, an inducer may be said to occasion or create the context for the induced activity. Stimulus control may seem sometimes to be more complicated, say, in a conditional discrimination that requires a certain combination of events, but, no matter how complex the discrimination, the relation to behavior is the same. Indeed, although we usually conceive of stimulus control as the outcome of an individual's life history (ontogeny), if we accept the idea that some instances of stimulus control might exist as a result of phylogeny, then stimulus control and induction would be two terms for the same phenomenon: the effect of context on behavioral allocation. In what follows, I will assume that induction or stimulus control may arise as a result of either phylogeny or life history.

Although Pavlov thought in terms of reflexes and measured only stomach secretion or salivation, with hindsight we may apply the present terms and say that he was studying induction (Hineline, 1984). Zener (1937), repeating Pavlov's procedure with dogs that were unrestrained, observed that during a tone preceding food the dogs would approach the food bowl, wag their tails, and so on—all induced behavior. Pavlov's experiments, in which conditional stimuli such as tones and visual displays were correlated with inducers like food, electric shock, and acid in the mouth, showed that correlating a neutral stimulus with an inducer changes the function of the stimulus. In present terms, it becomes a conditional inducing stimulus or a conditional inducer, equivalent to a discriminative stimulus. Similarly, activities linked by contingency to inducers become inducer-related activities. For example, Shapiro (1961) found that when a dog's lever pressing produces food, the dog salivates whenever it presses. We will develop this idea further below, when we discuss the effects of contingencies.

Phylogenetically Important Events

Since inducers such as food and electric shock gain their power to induce as a result of many generations of natural selection (that is, from phylogeny), I call them Phylogenetically Important Events (PIEs; Baum, 2005). A PIE is an event that directly affects survival and reproduction. Some PIEs increase the chances of reproductive success by their presence; others decrease the chances of reproductive success by their presence. On the increase side, examples are food, shelter, and mating opportunities; on the decrease side, examples are predators, parasites, and severe weather. Those individuals for whom these events were unimportant produced fewer surviving offspring than their competitors, for whom they were important, and are no longer represented in the population.

PIEs are many and varied, depending on the environment in which a species evolved. In humans, they include social events like smiles, frowns, and eye contact, all of which affect fitness because of our long history of living in groups and the importance of group membership for survival and reproduction. PIEs include also dangerous and fearsome events like injuries, heights, snakes, and rapidly approaching large objects (“looming”).

In accord with their evolutionary origin, PIEs and their effects depend on species. Breland and Breland (1961) reported the intrusion of induced activities into their attempts to train various species using contingent food. Pigs would root wooden coins as they would root for food; raccoons would clean coins as if they were cleaning food; chickens would scratch at the ground in the space where they were to be fed. When the relevance of phylogeny was widely acknowledged, papers and books began to appear pointing to “biological constraints” (Hinde & Stevenson-Hinde, 1973; Seligman, 1970). In humans, to the reactions to events like food and injury, we may add induction of special responses to known or unknown conspecifics, such as smiling, eyebrow raising, and tongue showing (Eibl-Eibesfeldt, 1975).

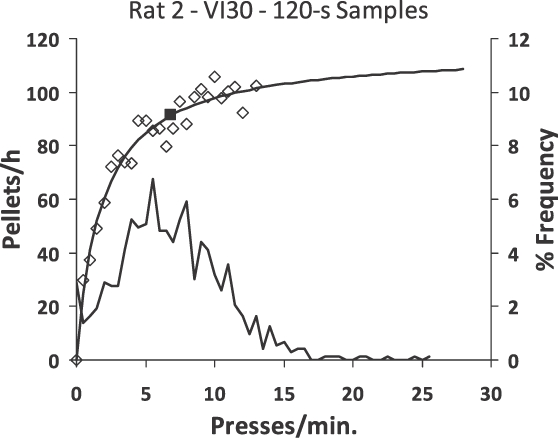

Accounts of reinforcers and punishers that make no reference to evolutionary history fail to explain why these events are effective as they are. For example, Premack (1963; 1965) proposed that reinforcement could be thought of as a contingency in which the availability of a “high-probability” activity depends on the occurrence of a “low-probability” activity. Timberlake and Allison (1974) elaborated this idea by adding that any activity constrained to occur below its level in baseline, when it is freely available, is in “response deprivation” and, made contingent on any activity with a lower level of deprivation, will reinforce that activity. Whatever the validity of these generalizations, they beg the question, “Why are the baseline levels of activities like eating and drinking higher than the baseline levels of running and lever pressing?” Deprivation and physiology are no answers; the question remains, “Why are organisms so constituted that when food (or water, shelter, sex, etc.) is deprived, it becomes a potent inducer?” Linking the greater importance of eating and drinking (food and water) to phylogeny explains why these events are important in an ultimate sense, and the concept of induction explains how they are important to the flexibility of behavior.

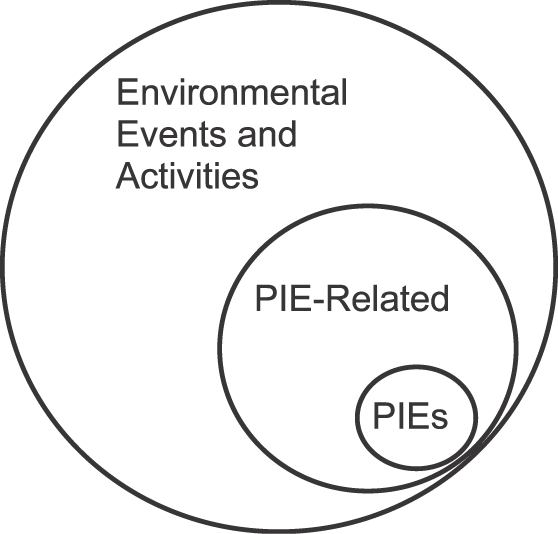

Figure 5 illustrates that, among all possible events—environmental stimuli and activities—symbolized by the large circle, PIEs are a small subset, and PIE-related stimuli and activities are a larger subset. That the subset of PIE-related stimuli and activities includes the subset of PIEs indicates that PIEs usually are themselves PIE-related, because natural environments and laboratory situations arrange that the occurrence of a PIE like food predicts how and when more food might be available. Food usually predicts more food, and the presence of a predator usually predicts continuing danger.

Fig 5.

Diagram illustrating that Phylogenetically Important Events (PIEs) constitute a subset of PIE-related events, which in turn are a subset of all events.

A key concept about induction is that a PIE induces any PIE-related activity. If a PIE like food is made contingent on an activity like lever pressing, the activity (lever pressing) becomes PIE-related (food-related). The experimental basis for this concept may be found in experiments that show PIEs to function as discriminative stimuli. Many such experiments exist, and I will illustrate with three examples showing that food contingent on an activity induces that activity.

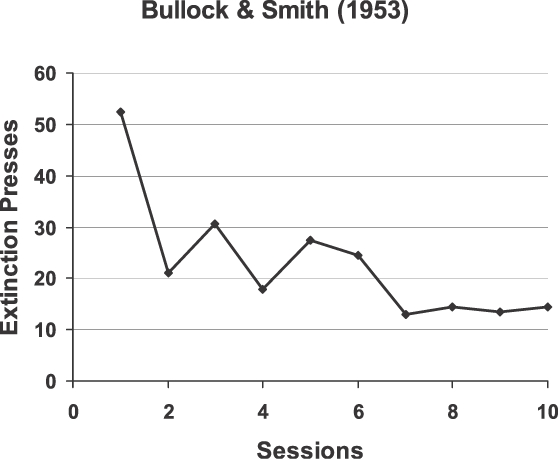

Bullock and Smith (1953) trained rats for 10 sessions. Each session consisted of 40 food pellets, each produced by a lever press (i.e., Fixed Ratio 1), followed by 1 hr of extinction (lever presses ineffective). Figure 6 shows the average number of presses during the hour of extinction across the 10 sessions. The decrease resembles the decrease in “errors” that occurs during the formation of a discrimination. That is how Bullock and Smith interpreted their results: The positive stimulus (S^D) was press-plus-pellet, and the negative stimulus (S^Δ) was press-plus-no-pellet. The food was the critical element controlling responding. Thus, the results support the idea that food functions as a discriminative stimulus.

Fig 6.

Results of an experiment in which food itself served as a discriminative stimulus. The number of responses made during an hour of extinction following 40 response-produced food deliveries decreased across the 10 sessions of the experiment. These responses would be analogous to “errors.” The data were published in a paper by Bullock and Smith (1953).

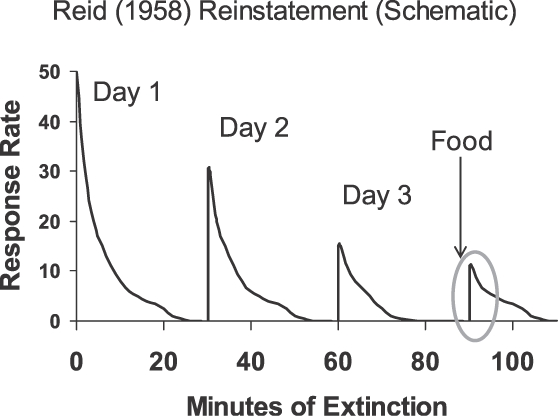

The second illustrative finding, reported by Reid (1958), is referred to as “reinstatement,” and has been studied extensively (e.g., Ostlund & Balleine, 2007). Figure 7 shows the result in a schematic form. Reid studied rats, pigeons, and students. First he trained responding by following each response with food (rats and pigeons) or a token (students). Once the responding was well-established, he subjected it on Day 1 to 30 min of extinction. This was repeated on Day 2 and Day 3. Then, on Day 3, when responding had disappeared, he delivered a bit of food (rats and pigeons) or a token (students). The free delivery was immediately followed by a burst of responding (circled in Figure 7). Thus, the freely delivered food or token reinstated the responding, functioning just like a discriminative stimulus.

Fig 7.

Cartoon of Reid's (1958) results with reinstatement of responding following extinction upon a single response-independent presentation of the originally contingent event (food for rats and pigeons; token for students). A burst of responding (circled) followed the presentation, suggesting that the contingent event functioned as a discriminative stimulus or an inducer.

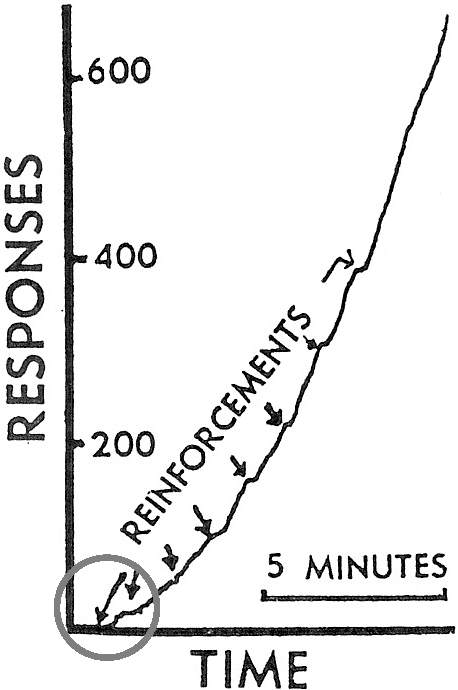

As a third illustration, we have the data that Skinner himself presented in his 1948 “superstition” paper. Figure 8 reproduces the cumulative record that Skinner claimed to show “reconditioning” of a response of hopping from side to side by adventitious reinforcement. Looking closely at the first two occurrences of food (circled arrows), however, we see that no response occurred just prior to the first occurrence, and possibly none occurred just prior to the second. The hopping followed the food; it did not precede it. Skinner's result shows reinstatement, not reinforcement. In other words, the record shows induction by periodic food similar to that shown in Figure 1.

Fig 8.

Cumulative record that Skinner (1948) presented as evidence of “reconditioning” of a response of hopping from side to side. Food was delivered once a minute independently of the pigeon's behavior. Food deliveries (labeled “reinforcements”) are indicated by arrows. No response immediately preceded the first and possibly the second food deliveries (circled), indicating that the result is actually an example of reinstatement. Copyright ©1948 by the American Psychological Association. Reprinted by permission.

All three examples show that food induces the “operant” or “instrumental” activity that is correlated with it. The PIE induces PIE-related activity, and these examples show that PIE-related activity includes operant activity that has been related to the PIE as a result of contingency. Contrary to the common view of reinforcers—that their primary function is to strengthen the response on which they are contingent, but that they have a secondary role as discriminative stimuli—the present view asserts that the stimulus function is all there is. No notion of “strengthening” or “reinforcement” enters the account.

One way to understand the inducing power of a PIE like food is to recognize that in nature and in the laboratory food is usually a signal that more food is forthcoming in that environment for that activity. A pigeon foraging for grass seeds, on finding a seed, is likely to find more seeds if it continues searching in that area (“focal search;” Timberlake & Lucas, 1989). A person searching on the internet for a date, on finding a hopeful prospect on a web site, is likely to find more such prospects if he or she continues searching that site. For this reason, Michael Davison and I suggested that the metaphor of strengthening might be replaced by the metaphor of guidance (Davison & Baum, 2006).

An article by Gardner and Gardner (1988) argued vigorously in favor of a larger role for induction in explaining the origins of behavior, even suggesting that induction is the principal determinant of behavior. They drew this conclusion from studies of infant chimpanzees raised by humans. Induction seemed to account for a lot of the chimpanzees' behavior. But induction alone cannot account for selection among activities, because we must also account for the occurrence of the PIEs that induce the various activities. That requires us to consider the role of contingency, because contingencies govern the occurrence of PIEs. The Gardners' account provided no explanation of the flexibility of individuals' behavior, because they overlooked the importance of contingency within behavioral evolution (Baum, 1988).

Analogy to Natural Selection

As mentioned earlier, optimality by itself is not an explanation, but rather requires explanation by mechanism. The evolution of a population by natural selection offers an example. Darwinian evolution requires three ingredients: (1) variation; (2) recurrence; and (3) selection (e.g., Dawkins, 1989; Mayr, 1970). When these are all present, evolution occurs; they are necessary and sufficient. Phenotypes within a population vary in their reproductive success—i.e., in the number of surviving offspring they produce. Phenotypes tend to recur from generation to generation—i.e., offspring tend to resemble their parents—because parents transmit genetic material to their offspring. Selection occurs because the carrying capacity of the environment sets a limit to the size of the population, with the result that many offspring fail to survive, and the phenotype with more surviving offspring increases in the population.

The evolution of behavior within a lifetime (i.e., shaping), seen as a Darwinian process, also requires variation, recurrence, and selection (Staddon & Simmelhag, 1971). Variation occurs within and between activities. Since every activity is composed of parts that are themselves activities, variation within an activity is still variation across activities, but on a smaller time scale (Baum, 2002; 2004). PIEs and PIE-related stimuli induce various activities within any situation. Thus, induction is the mechanism of recurrence, causing activities to persist through time analogously to reproduction causing phenotypes to persist in a population through time. Selection occurs because of the finiteness of time, which is the analog to the limit on population size set by the carrying capacity of the environment. When PIEs induce various activities, those activities compete, because any increase in one necessitates a decrease in others. As an example, when a pigeon is trained to peck at keys that occasionally produce food, the pigeon's key pecking is induced by food, but pecking competes for time with background activities such as grooming and resting (Baum, 2002). If the left key produces food five times more often than the right key, then the combination left-peck-plus-food induces more left-key pecking than right-key pecking, which is the basis of the matching relation (Herrnstein, 1961; Davison & McCarthy, 1988). Due to the upper limit on pecking set by its competition with background activities, pecking at the left key competes for time with pecking at the right key (Baum, 2002). If we calculate the ratio of pecks left to pecks right and compare it with the ratio of food left to food right, the result may deviate from strict matching between peck ratio and food ratio across the two keys (Baum, 1974; 1979), but the results generally approximate matching, and a simple mathematical proof shows matching to be optimal (Baum, 1981b).
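Written as equations, the matching relation in the two-key example might look like the sketch below. The symbols are assumptions introduced here, not notation from the text: B_L and B_R stand for pecks (or time) at the left and right keys, and r_L and r_R for the food rates they produce. The power-function form in the last line is the generalized description of deviations from strict matching usually credited to Baum (1974); the text cites that paper but does not spell out the formula.

```latex
% Strict matching (Herrnstein, 1961): behavior ratio equals food ratio.
\frac{B_L}{B_R} = \frac{r_L}{r_R}

% Worked case from the text: the left key yields food five times as often.
\frac{r_L}{r_R} = 5 \;\Rightarrow\; \frac{B_L}{B_R} = 5,
\qquad \frac{B_L}{B_L + B_R} = \frac{5}{6} \approx 0.83

% Generalized form used to describe deviations (Baum, 1974):
% s is sensitivity, b is bias; strict matching is the case s = b = 1.
\frac{B_L}{B_R} = b \left( \frac{r_L}{r_R} \right)^{s}
```

Under strict matching, then, about five sixths of the pecking in this example would go to the left key.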

Thus, evolution in populations of organisms and behavioral evolution, though both may be seen as Darwinian optimizing processes, proceed by definite mechanisms. Approximation to optimality is the result, but the mechanisms explain that result.

To understand behavioral evolution fully, we need not only to understand the competing effects of PIEs but also to account for the occurrence of the PIEs—how they are produced by behavior. We need to include the effects of contingencies.

Contingency

The notion of reinforcement was always known to be incomplete. By itself, it included no explanation of the provenance of the behavior to be strengthened; behavior has to occur before it can be reinforced. Segal (1972) thought that perhaps induction might explain the provenance of operant behavior. Perhaps contingency selects behavior originally induced. Before we pursue this idea, we need to be clear about the meaning of “contingency.”

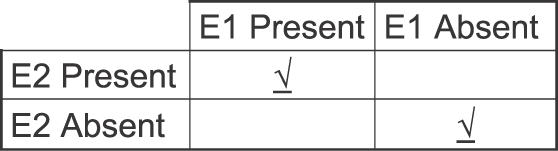

In the quotes we saw earlier, Skinner defined contingency as contiguity—a reinforcer needed only to follow a response. Contrary to this view based on “order and proximity,” a contingency is not a temporal relation. Rescorla (1968; 1988) may have been the first to point out that contiguity alone cannot suffice to specify a contingency, because contingency requires a comparison between at least two different occasions. Figure 9 illustrates the point with a 2-by-2 table, in which the columns show the presence and absence of Event 1, and the rows show the presence and absence of Event 2. Event 1 could be a tone or key pecking; Event 2 could be food or electric shock. The upper left cell represents the conjunction of the two events (i.e., contiguity); the lower right cell represents the conjunction of the absence of the two events. These two conjunctions (checkmarks) must both have high probability for a contingency to exist. If, for example, the probability of Event 2 were the same, regardless of the presence or absence of Event 1 (top left and right cells), then no contingency would exist. Thus, the presence of a contingency requires a comparison across two temporally separated occasions (Event 1 present and Event 1 absent). A correlation between rate of an activity and food rate would entail more than two such comparisons—for example, noting various food rates across various peck rates (the basis of a feedback function; Baum, 1973, 1989). Contrary to the idea that contingency requires only temporal conjunction, Figure 9 shows that accidental contingencies should be rare, because an accidental contingency would require at least two accidental conjunctions.

Fig 9.

Why a contingency or correlation is not simply a temporal relation. The 2-by-2 table shows the conjunctions possible of the presence and absence of two events, E1 and E2. A positive contingency holds between E1 and E2 only if two conjunctions occur with high probability at different times: the presence of both and the absence of both (indicated by checks). The conjunction of the two alone (contiguity) cannot suffice.
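The point of the 2-by-2 table can be made concrete with a small calculation. The sketch below is an illustration under stated assumptions: the cell counts are hypothetical, and the two indices used (the difference between the conditional probabilities of E2 given E1 present and E1 absent, and the phi coefficient) are standard measures of association chosen here for convenience, not measures prescribed by the text.

```python
import math


def delta_p(both: int, e1_only: int, e2_only: int, neither: int) -> float:
    """P(E2 | E1 present) - P(E2 | E1 absent) from the four cell counts."""
    p_e2_given_e1 = both / (both + e1_only)
    p_e2_given_not_e1 = e2_only / (e2_only + neither)
    return p_e2_given_e1 - p_e2_given_not_e1


def phi(both: int, e1_only: int, e2_only: int, neither: int) -> float:
    """Phi coefficient (correlation between two binary events)."""
    numerator = both * neither - e1_only * e2_only
    denominator = math.sqrt(
        (both + e1_only) * (e2_only + neither)
        * (both + e2_only) * (e1_only + neither)
    )
    return numerator / denominator


# Hypothetical counts of occasions, in the order:
# (E1 & E2, E1 without E2, E2 without E1, neither).
contingent = (40, 10, 10, 40)       # both checked cells are frequent
contiguity_only = (40, 10, 40, 10)  # E2 is just as likely without E1

for label, cells in [("contingent", contingent),
                     ("contiguity only", contiguity_only)]:
    print(f"{label}: delta P = {delta_p(*cells):.2f}, phi = {phi(*cells):.2f}")
```

In the second table the conjunction of E1 and E2 is exactly as frequent as in the first, yet both indices are zero, because E2 is equally probable when E1 is absent.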

It is not that temporal relations are entirely irrelevant, but just that they are relevant in a different way from what traditional reinforcement would require. Whereas Skinner's formulation assigned to contiguity a direct role, the conception of contingency illustrated in Figure 9 suggests instead that the effect of contiguity is indirect. For example, inserting unsignalled delays into a contingency usually reduces the rate of the activity (e.g., key pecking) producing the PIE (e.g., food). Such delays must affect the tightness of the correlation. A measure of correlation such as the correlation coefficient (r) would decrease as average delay increased. Delays affect the clarity of a contingency like that in Figure 9 and affect the variance in a correlation (for further discussion, see Baum, 1973).

Avoidance

No phenomenon better illustrates the inadequacy of the molecular view of behavior than avoidance, because the whole point of avoidance is that when the response occurs, nothing follows. For example, Sidman (1953) trained rats in a procedure in which lever pressing postponed electric shocks that, in the absence of pressing, occurred at a regular rate. The higher the rate of pressing, the lower the rate of shock, and if pressing occurred at a high enough rate, the rate of shock was reduced close to zero. To try to explain the lever pressing, molecular theories resort to unseen “fear” elicited by unseen stimuli and to “fear” reduction resulting from each lever press—so-called “two-factor” theory (Anger, 1963; Dinsmoor, 2001). In contrast, a molar theory of avoidance makes no reference to hidden variables, but relies on the measurable reduction in shock frequency resulting from the lever pressing (Herrnstein, 1969; Sidman, 1953; 1966). Herrnstein and Hineline (1966) tested the molar theory directly by training rats in a procedure in which pressing reduced the frequency of shock but could not reduce it to zero. A molecular explanation requires positing unseen fear reduction that depends only on the reduction in shock rate, implicitly conceding the molar explanation (Herrnstein, 1969).

In our present terms, the negative contingency between shock rate and rate of pressing results in pressing becoming a shock-related activity. Shocks then induce lever pressing. In the Herrnstein–Hineline procedure, this induction is clear, because the shock never disappears altogether, but in Sidman's procedure, we may guess that the experimental context—chamber, lever, etc.—also induces lever pressing, as discriminative stimuli or conditional inducers. Much evidence supports these ideas. Extra response-independent shocks increase pressing (Sidman, 1966; cf. reinstatement discussed earlier); extinction of Sidman avoidance is prolonged when shock rate is reduced to zero, but becomes faster on repetition (Sidman, 1966; cf. the Bullock & Smith study discussed earlier); extinction of Herrnstein–Hineline avoidance in which pressing no longer reduces shock rate depends on the amount of shock-rate reduction during training (Herrnstein & Hineline, 1966); sessions of Sidman avoidance typically begin with a “warm-up” period, during which shocks are delivered until lever pressing starts—i.e., until the shock induces lever pressing (Hineline, 1977).

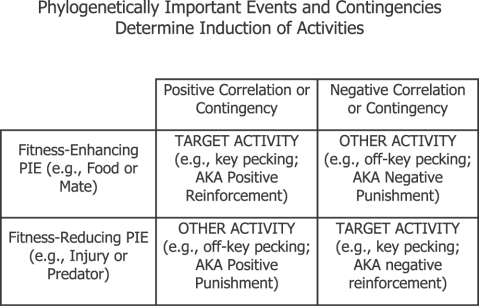

Figure 10 illustrates why shock becomes a discriminative stimulus or a conditional inducer in avoidance procedures. This two-by-two table shows the effects of positive and negative contingencies (columns) paired with fitness-enhancing and fitness-reducing PIEs (rows). Natural selection ensures that dangerous (fitness-reducing) PIEs (e.g., injury, illness, or predators) induce defensive activities (e.g., hiding, freezing, or fleeing) that remove or mitigate the danger. (Those individuals in the population that failed to behave so reliably produced fewer surviving offspring.) In avoidance, since shock or injury is fitness-reducing, any activity that would avoid it will be induced by it. After avoidance training, the operant activity (e.g., lever pressing) becomes a (conditional) defensive, fitness-maintaining activity, and is induced along with other shock-related activities; the shock itself and the (dangerous) operant chamber do the inducing. This lower-right cell in the table corresponds to relations typically called “negative reinforcement.”

Fig 10.

Different correlations or contingencies induce either the target activity or other-than-target activities, depending on whether the Phylogenetically Important Event (PIE) involved usually enhances or reduces fitness (reproductive success) by its presence.

Natural selection ensures also that fitness-enhancing PIEs (e.g., prey, shelter, or a mate) induce fitness-enhancing activities (e.g., feeding, sheltering, or courtship). (Individuals in the population that behaved so reliably left more surviving offspring.) In the upper left cell in Figure 10, a fitness-enhancing PIE (e.g., food) stands in a positive relation to a target (operant) activity, and training results in the target activity's induction along with other fitness-enhancing, PIE-related activities. This cell corresponds to relations typically called “positive reinforcement.”

When lever pressing is food-related or when lever pressing is shock-related, the food or shock functions as a discriminative stimulus, inducing lever pressing. The food predicts more food; the shock predicts more shock. A positive correlation between food rate and press rate creates the condition for pressing to become a food-related activity. A negative correlation between shock rate and press rate creates the condition for pressing to become a shock-related activity. More precisely, the correlations create the conditions for food and shock to induce allocations including substantial amounts of time spent pressing.

In the other two cells of Figure 10, the target activity would either produce a fitness-reducing PIE—the cell typically called “positive punishment”—or prevent a fitness-enhancing PIE—the cell typically called “negative punishment.” Either way, the target activity would reduce fitness and would be blocked from joining the other PIE-related activities. Instead, other activities, incompatible with the target activity, that would maintain fitness, are induced. Experiments with positive punishment set up a conflict, because the target activity usually produces both a fitness-enhancing PIE and a fitness-reducing PIE. For example, when operant activity (e.g., pigeons' key pecking) produces both food and electric shock, the activity usually decreases below its level in the absence of shock (Azrin & Holz, 1966; Rachlin & Herrnstein, 1969). The food induces pecking, but other activities (e.g., pecking off the key) are negatively correlated with shock rate and are induced by the shock. The result is a compromise allocation including less key pecking. We will come to a similar conclusion about negative punishment, in which target activity (e.g., pecking) cancels a fitness-enhancing PIE (food delivery), when we discuss negative automaintenance. The idea that punishment induces alternative activities to the punished activity is further supported by the observation that if the situation includes another activity that is positively correlated with food and produces no shock, that activity dominates (Azrin & Holz, 1966).
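The four cells of Figure 10 can be summarized as a small lookup, which may help keep the combinations straight. This is a sketch only: the enum and function names are hypothetical, and the rule encoded (the target activity is induced when the sign of the contingency agrees with the fitness effect of the PIE) is simply a restatement of the four cases described in the text.

```python
from enum import Enum


class Contingency(Enum):
    POSITIVE = "positive"   # target activity produces the PIE
    NEGATIVE = "negative"   # target activity prevents or reduces the PIE


class PieEffect(Enum):
    ENHANCING = "fitness-enhancing"
    REDUCING = "fitness-reducing"


# Traditional labels for the four cells, as named in the text.
TRADITIONAL_LABEL = {
    (Contingency.POSITIVE, PieEffect.ENHANCING): "positive reinforcement",
    (Contingency.NEGATIVE, PieEffect.REDUCING): "negative reinforcement",
    (Contingency.POSITIVE, PieEffect.REDUCING): "positive punishment",
    (Contingency.NEGATIVE, PieEffect.ENHANCING): "negative punishment",
}


def induces_target(contingency: Contingency, effect: PieEffect) -> bool:
    """True when the target activity joins the PIE-related activities.

    Otherwise, activities other than the target are induced.
    """
    positive = contingency is Contingency.POSITIVE
    enhancing = effect is PieEffect.ENHANCING
    return positive == enhancing


for (contingency, effect), label in TRADITIONAL_LABEL.items():
    induced = "target" if induces_target(contingency, effect) else "other-than-target"
    print(f"{contingency.value} contingency, {effect.value} PIE: "
          f"{induced} activity induced ({label})")
```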

Effects of Contingency

Contingency links an activity to an inducing event and changes the time allocation among activities by increasing time spent in the linked activity. The increase in time spent in an activity—say, lever pressing—when food is made contingent on it was in the past attributed to reinforcement, but our examples suggest instead that it is due to induction. The increase in pressing results from the combination of contingency and induction, because the contingency turns the pressing into a food-related (PIE-related) activity, as shown in the experiments by Bullock and Smith (1953) (Figure 6) and by Reid (1958) (Figure 7). We may summarize these ideas as follows:

Phylogenetically Important Events (PIEs) are unconditional inducers.

A stimulus correlated with a PIE becomes a conditional inducer.

An activity positively correlated with a fitness-enhancing PIE becomes a PIE-related conditional induced activity—usually called “operant” or “instrumental” activity.

An activity negatively correlated with a fitness-reducing PIE becomes a PIE-related conditional induced activity—often called “operant avoidance.”

A PIE induces operant activity related to it.

A conditional inducer induces operant activity related to the PIE to which the conditional inducer is related.

The effects of contingency need include no notion of strengthening or reinforcement. Consider the example of lever pressing maintained by electric shock. Figure 11 shows a sample cumulative record of a squirrel monkey's lever pressing from Malagodi, Gardner, Ward, and Magyar (1981). The scallops resemble those produced by a fixed-interval schedule of food, starting with a pause and then accelerating to a higher response rate up to the end of the interval. Yet, in this record the last press in the interval produces an electric shock. The result requires, however, that the monkey be previously trained to press this same lever to avoid the same electric shock. Although in the avoidance training shock acted as an aversive stimulus or punisher, in Figure 11 it acts, paradoxically, to maintain the lever pressing, as if it were a reinforcer. The paradox is resolved when we recognize that the shock, as a PIE, induces the lever pressing because the prior avoidance training made lever pressing a shock-related activity. No notion of reinforcement enters in—only the effect of the contingency. The shock induces the lever pressing, the pressing produces the shock, the shock induces the pressing, and so on, in a loop.

Fig 11.

Cumulative record of a squirrel monkey pressing a lever that produced electric shock at the end of the fixed interval. Even though the monkey had previously been trained to avoid the very same shock, it now continues to press when pressing produces the shock. This seemingly paradoxical result is explained by the molar view of behavior as an example of induction. Reprinted from Malagodi, Gardner, Ward, and Magyar (1981).

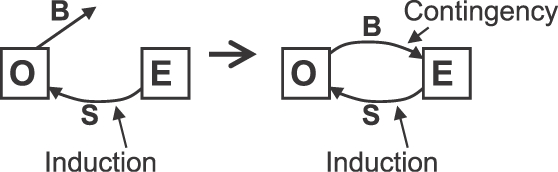

The diagrams in Figure 12 show how the contingency closes a loop. The diagram on the left illustrates induction alone. The environment E produces a stimulus S (shock), and the organism O produces the induced activities B. The diagram on the right illustrates the effect of contingency. Now the environment E links the behavior B to the stimulus S, resulting in an induction-contingency loop.

Fig 12.

How contingency completes a loop in which an operant activity (B) produces an inducing event (S), which in turn induces more of the activity. O stands for organism. E stands for environment. Left: induction alone. Right: the contingency closes the loop. Induction occurs because the operant activity is or becomes related to the inducer (S; a PIE).

The reader may already have realized that the same situation and the same diagram apply to experiments with other PIEs, such as food. For example, Figure 1 illustrates that, among other activities, food induces pecking in pigeons. When a contingency arranges also that pecking produces food, we have the same sort of loop as shown in Figure 12, except that the stimulus S is food and the behavior B is pecking. Food induces the activity, and the (operant) activity produces the food, which induces the activity, and so on, as shown by the results of Bullock and Smith (1953) (Figure 6) and Reid's (1958) reinstatement effect (Figure 7).

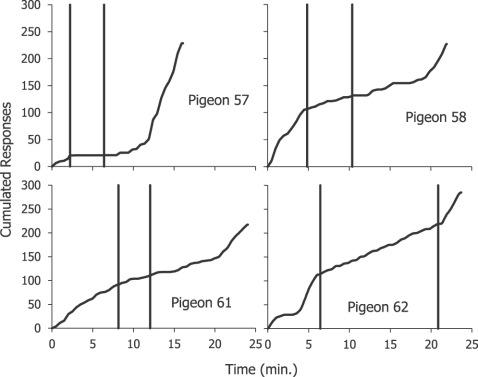

Figure 13 shows results from an experiment that illustrates the effects of positive and negative contingencies (Baum, 1981a). Pigeons, trained to peck a response key that occasionally produced food, were exposed to daily sessions in which the payoff schedule began with a positive correlation between pecking and food rate. It was a sort of variable-interval schedule, but required only that a peck occur anywhere in a programmed interval for food to be delivered at the end of that interval (technically, a conjunctive variable-time 10-s fixed-ratio 1 schedule). At an unpredictable point in the session, the correlation switched to negative; now, a peck during a programmed interval canceled the food delivery at the end of the interval. Finally, at an unpredictable point, the correlation reverted to positive. Figure 13 shows four representative cumulative records of 4 pigeons from this experiment. Initially, pecking occurred at a moderate rate, then decreased when the correlation switched to negative (first vertical line), and then increased again when the correlation reverted to positive (second vertical line). The effectiveness of the negative contingency varied across the pigeons; it suppressed key pecking completely in Pigeon 57, and relatively little in Pigeon 61. Following our present line, these results suggest that when the correlation was positive, the food induced key pecking at a higher rate than when the correlation was negative. In accord with the results shown in Figure 1, however, we expect that the food continues to induce pecking. As we expect from the upper-right cell of Figure 10, in the face of a negative contingency, pigeons typically peck off the key, sometimes right next to it. The negative correlation between food and pecking on the key constitutes a positive correlation between food and pecking off the key; the allocation between the two activities shifts in the three phases shown in Figure 13.

Fig 13.

Results from an experiment showing discrimination of correlation. Four cumulative records of complete sessions from 4 pigeons are shown. At the beginning of the session, the correlation between pecking and feeding is positive; if a peck occurred anywhere in the scheduled time interval, food was delivered at the end of the interval. Following the first vertical line, the correlation switched to negative; a peck during the interval canceled food at the end of the interval. Peck rate fell. Following the second vertical line, the correlation reverted to positive, and peck rate rose again. Adapted from Baum (1981a).
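The way the two phases of this schedule tie food rate to peck rate can be sketched numerically. The sketch rests on simplifying assumptions that are not part of the experiment: every programmed interval is exactly 10 s long (the actual intervals varied), and pecks occur at random at a constant average rate, so that the chance of at least one peck in an interval is 1 - exp(-rate × interval). The function names are hypothetical.

```python
import math


def p_any_peck(peck_rate_per_s: float, interval_s: float) -> float:
    """Probability of at least one peck in an interval (random pecking assumed)."""
    return 1.0 - math.exp(-peck_rate_per_s * interval_s)


def food_rate_per_min(peck_rate_per_s: float,
                      interval_s: float = 10.0,
                      positive: bool = True) -> float:
    """Expected food deliveries per minute under either correlation.

    positive=True:  food at the end of an interval only if a peck occurred.
    positive=False: a peck during the interval cancels the food.
    """
    p_peck = p_any_peck(peck_rate_per_s, interval_s)
    p_food = p_peck if positive else 1.0 - p_peck
    return (60.0 / interval_s) * p_food


for rate in (0.0, 0.05, 0.2, 1.0):  # pecks per second
    pos = food_rate_per_min(rate, positive=True)
    neg = food_rate_per_min(rate, positive=False)
    print(f"{rate:4.2f} pecks/s: positive {pos:5.2f} food/min, "
          f"negative {neg:5.2f} food/min")
```

Under these assumptions food rate rises with peck rate in the positive phase and falls with peck rate in the negative phase, which is the sense in which the correlation switches sign.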

The results in the middle phase of the records in Figure 13 resemble negative automaintenance (Williams & Williams, 1969). In autoshaping, a pigeon is repeatedly presented with a brief light on a key, which is followed by food. Sooner or later, the light comes to induce key pecking, and pecking at the lit key becomes persistent; autoshaping becomes automaintenance. In negative automaintenance, pecks at the key cancel the delivery of food. This negative contingency causes a reduction in key pecking, as in Figure 13, even if it doesn't eliminate it altogether (Sanabria, Sitomer, & Killeen, 2006). Presumably, a compromise occurs between pecking on the key and pecking off the key—the allocation of time between pecking on the key and pecking off the key shifts back and forth. Possibly, the key light paired with food induces pecking on the key, whereas the food induces pecking off the key.

A Test

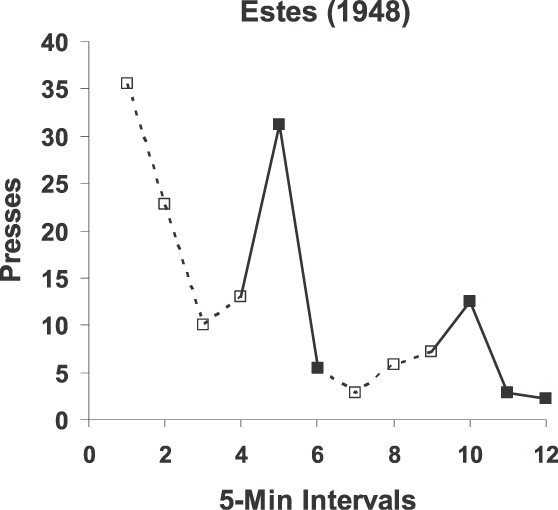

To test the molar view of contingency or correlation, we may apply it to explaining a puzzle. W. K. Estes (1943; 1948) reported two experiments in which he pretrained rats in two conditions: (a) a tone was paired with food with no lever present; and (b) lever pressing was trained by making food contingent on pressing. The order of the two conditions made no difference to the results. The key point was that the tone and lever never occurred together. Estes tested the effects of the tone by presenting it while the lever pressing was undergoing extinction (no food). Figure 14 shows a typical result. Pressing decreased across 5-min intervals, but each time the tone occurred, pressing increased.

Fig 14.

Results from an experiment in which lever pressing undergoing extinction was enhanced by presentation of a tone that had previously been paired with the food that had been used in training the lever pressing. Because the tone and lever had never occurred together before, the molecular view had difficulty explaining the effect of the tone on the pressing, but the molar view explains it as induction of pressing as a food-related activity. The data were published by Estes (1948).

This finding presented a problem for Estes's molecular view—that is, for a theory relying on contiguity between discrete events. The difficulty was that, because the tone and the lever had never occurred together, no associative mechanism based on contiguity could explain the effect of the tone on the pressing. Moreover, the food pellet couldn't mediate between the tone and the lever, because the food had never preceded the pressing, only followed it. Estes's “explanation” was to make up what he called a “conditioned anticipatory state” (CAS). He proposed that pairing the tone with food resulted in the tone's eliciting the CAS and that the CAS then affected lever pressing during the extinction test. This idea, however, raises the question, “Why would the CAS increase pressing?” It too would never have preceded pressing. The “explanation” only makes matters worse, because, whereas the puzzle started with accounting for the effect of the tone, now it has shifted to an unobserved hypothetical entity, the CAS. The muddle illustrates well how the molecular view of behavior leads to hypothetical entities and magical thinking. The molecular view can explain Estes's result, but only by positing unseen mediators (Trapold & Overmier, 1972). (See Baum, 2002, for a fuller discussion.)

The molar paradigm (e.g., Baum, 2002; 2004) offers a straightforward explanation of Estes's result. First, pairing the tone with the food makes the tone an inducing stimulus (a conditional inducer). This means that the tone will induce any food-related activity. Second, making the food contingent on lever pressing—that is, correlating pressing with food—makes pressing a food-related activity. Thus, when the tone is played in the presence of the lever, it induces lever pressing. When we escape from a focus on contiguity, the result seems obvious.

Constraint and Connection

The effects of contingency go beyond just “closing the loop.” A contingency has two effects: (a) it creates or causes a correlation between the operant activity and the contingent event—the equivalent of what an economist would call a constraint; (b) as it occurs repeatedly, it soon causes the operant activity to become related to the contingent (inducing) event—it serves to connect the two.

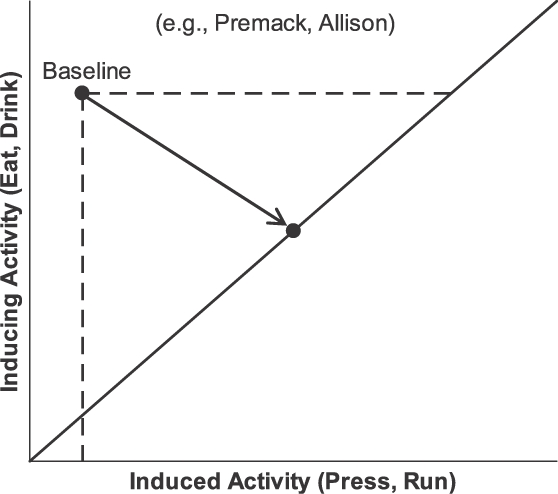

Contingency ensures the increase in the operant activity, but it also constrains the increase by constraining the possible outcomes (allocations). Figure 15 illustrates these effects. The vertical axis shows frequency of an inducing event represented as time spent in a fitness-enhancing activity, such as eating or drinking. The horizontal axis shows time spent in an induced or operant activity, such as lever pressing or wheel running. Figure 15 diagrams situations studied by Premack (1971) and by Allison, Miller, and Wozny (1979); it resembles also a diagram by Staddon (1983; Figure 7.2). In a baseline condition with no contingency, a lot of time is spent in the inducing activity and relatively little time is spent in the to-be-operant activity. The point labeled “baseline” indicates this allocation. When a contingency is introduced, represented by the solid diagonal line, which indicates a contingency such as in a ratio schedule, a given duration of the operant activity allows a given duration of the inducing activity (or a certain amount of the PIE). The contingency constrains the possible allocations between time in the contingent activity (eating or drinking) and time in the operant activity (pressing or running). Whatever allocation occurs must lie on the line; allocations off the line are no longer possible. The point on the line illustrates the usual result: an allocation that includes more operant activity than in baseline and less of the PIE than in baseline. The increase in operant activity would have been called a reinforcement effect in the past, but here it appears as an outcome of the combination of induction with the constraint of the contingency line (also known as the feedback function; Baum, 1973; 1989; 1992). The decrease in the PIE might have been called punishment of eating or drinking, but here it too is an outcome of the constraint imposed by the contingency (Premack, 1965).

Fig 15.

Effects of contingency. In a baseline condition with no contingency, little of the to-be-operant activity (e.g., lever pressing or wheel running; the to-be-induced activity) occurs, while a lot of the to-be-contingent PIE-related activity (e.g., eating or drinking; the inducing activity) occurs. This is the baseline allocation (upper left point and broken lines). After the PIE (food or water) is made contingent on the operant activity, whatever allocation occurs must lie on the solid line. The arrow points to the new allocation. Typically, the new allocation includes a large increase in the operant activity over baseline. Thus, contingency has two effects: a) constraining possible allocations; and b) making the operant behavior PIE-related so it is induced in a large quantity.

Another way to express the effects in Figure 15 might be to say that, by constraining the allocations possible, the contingency “adds value” to the induced operant activity. Once the operant activity becomes PIE-related, the operant activity and the PIE become parts of a “package.” For example, pecking and eating become parts of an allocation or package that might be called “feeding,” and the food or eating may be said to lend value to the package of feeding. If lever pressing is required for a rat to run in a wheel, pressing becomes part of running (Belke, 1997; Belke & Belliveau, 2001). If lever pressing is required for a mouse to obtain nest material, pressing becomes part of nest building (Roper, 1973). Figure 15 indicates that this doesn't occur without some cost, too, because the operant activity doesn't increase enough to bring the level of eating to its level in baseline; some eating is sacrificed in favor of a lower level of the operant activity. In an everyday example, suppose Tom and his friends watch football every Sunday and usually eat pizza while watching. Referring to Figure 9, we may say that watching football (E1) is correlated with eating pizza (E2), provided that the highest frequencies are the conjunctions in the checked boxes, and the conjunctions in the empty boxes are relatively rare. Rather than appeal to reinforcement or strengthening, we may say that watching football induces eating pizza or that the two activities form a package with higher value than either by itself. We don't need to bring in the concept of “value” at all, because all it means is that the PIE induces PIE-related activities, but this interpretation of “adding value” serves to connect the present discussion with behavioral economics. Another possible connection would be to look at demand as induction of activity (e.g., work or shopping) by the good demanded and elasticity as change in induction with change in the payoff schedule or feedback function. 
However, a full quantitative account of the allocations that actually occur is beyond the scope of this article (for examples, see Allison, 1983; Baum, 1981b; Rachlin, Battalio, Kagel, & Green, 1981; and Rachlin, Green, Kagel, & Battalio, 1976). Part of a theory may be had by quantifying contingency with the concept of a feedback function, because feedback functions allow more precise specification of the varieties of contingency.

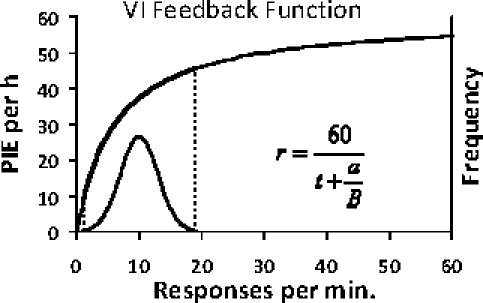

Correlations and Feedback Functions

Every contingency or correlation specifies a feedback function (Baum, 1973; 1989; 1992). A feedback function describes the dependence of outcome rate (say, rate of food delivery) on operant activity (say, rate of pecking). The constraint line in Figure 15 specifies a feedback function for a ratio schedule, in which the rate of the contingent inducer is directly proportional to the rate of the induced activity. The feedback function for a variable-interval schedule is more complicated, because it must approach the programmed outcome rate as an asymptote. Figure 16 shows an example. The upper curve has three properties: (a) it passes through the origin, because if no operant activity occurs, no food can be delivered; (b) it approaches an asymptote; and (c) as operant activity approaches zero, the slope of the curve approaches the reciprocal of the parameter a in the equation shown (1/a; Baum, 1992). It constrains possible performance, because whatever the rate of operant activity, the outcome (inducer) rate must lie on the curve. The lower curve suggests a frequency distribution of operant activity on various occasions. Although average operant rate and average inducer rate would correspond to a point on the feedback curve, the distribution indicates that operant activity should vary around a mode and would not necessarily approach the maximum activity rate possible.

Fig 16.

Example of a feedback function for a variable-interval schedule. The upper curve, the feedback function, passes through the origin and approaches an asymptote (60 PIEs per h; a VI 60s). Its equation appears at the lower right. The average interval t equals 1.0. The parameter a equals 6.0. Response rate is expected to vary from time to time, as shown by the distribution below the feedback function (frequency is represented on the right-hand vertical axis).
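The two kinds of feedback function can be compared in a short sketch. The equation for the variable-interval curve appears only in the figure, so the form used here, r = 1/(t + a/B), with B the rate of operant activity and r the rate of the PIE, is an assumption: it was chosen because it has the three properties listed above (it passes through the origin, approaches the asymptote 1/t, and has slope 1/a near the origin). The parameter values t = 1.0 and a = 6.0 come from the caption and are taken to be in minutes; the ratio requirement of 20 and the function names are hypothetical.

```python
def ratio_feedback(activity_rate: float, ratio: float) -> float:
    """Ratio schedule: PIE rate is directly proportional to activity rate."""
    return activity_rate / ratio


def vi_feedback(activity_rate: float, t: float = 1.0, a: float = 6.0) -> float:
    """Assumed variable-interval feedback function r = 1 / (t + a / B).

    activity_rate (B) and the returned PIE rate (r) are per minute;
    t is the average programmed interval in minutes.
    """
    if activity_rate == 0:
        return 0.0  # no operant activity, no PIE
    return 1.0 / (t + a / activity_rate)


for b in (0.0, 1.0, 5.0, 20.0, 100.0):  # responses per minute
    vi_per_hour = 60.0 * vi_feedback(b)
    fr_per_hour = 60.0 * ratio_feedback(b, ratio=20.0)
    print(f"B = {b:6.1f}/min: VI {vi_per_hour:6.2f} PIEs/h, "
          f"ratio 20 {fr_per_hour:7.2f} PIEs/h")
```

The ratio function grows without limit as activity increases, whereas the interval function levels off near 60 PIEs per hour, which is one way to see why the two schedules constrain performance so differently.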

Figure 17 shows some unpublished data, gathered by John Van Syckel (1983), a student at the University of New Hampshire. Several sessions of variable-interval responding by a rat were analyzed by examining successive 2-min time samples and counting the number of lever presses and food deliveries in each one. All time samples were combined according to the number of presses, and the press rate and food rate were calculated for each number of presses. These are represented by the plotted points. The same equation as in Figure 16 is fitted to the points; the parameter a was close to 1.0. The frequency distribution of press rates is shown by the curve without symbols (right-hand vertical axis). Some 2-min samples contained no presses, a few contained only one (0.5 press/min), and a mode appears at about 5 press/min. Press rates above about 15 press/min (30 presses in 2 min) were rare. Thus, the combination of induction of lever pressing by occasional food together with the feedback function results in the stable performance shown.

Fig 17.

Example of an empirical feedback function for a variable-interval schedule. Successive 120-s time windows were evaluated for number of lever presses and number of food deliveries. The food rate and press rate were calculated for each number of presses per 120 s. The unfilled diamonds show the food rates. The feedback equation from Figure 16 was fitted to these points (t = 0.52; a = 0.945). The frequency distribution (right-hand vertical axis) below shows the percent frequencies of the various response rates. The filled square shows the average response rate.
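The analysis behind an empirical feedback function of this kind can be sketched as follows. The sample counts are hypothetical, not Van Syckel's data, and the function name `empirical_feedback` is introduced only for illustration. Each 2-min window contributes a pair (number of presses, number of food deliveries); windows with the same number of presses are pooled, and a press rate, a mean food rate, and a percent frequency are computed for each group.

```python
from collections import defaultdict


def empirical_feedback(samples, window_min=2.0):
    """Pool time windows by press count.

    samples: iterable of (presses, food_deliveries), one pair per window.
    Returns a list of (press_rate, food_rate, percent_of_windows),
    sorted by press rate; rates are per minute.
    """
    food_by_presses = defaultdict(list)
    for presses, foods in samples:
        food_by_presses[presses].append(foods)

    total_windows = sum(len(v) for v in food_by_presses.values())
    points = []
    for presses in sorted(food_by_presses):
        foods = food_by_presses[presses]
        press_rate = presses / window_min
        food_rate = sum(foods) / (len(foods) * window_min)
        percent = 100.0 * len(foods) / total_windows
        points.append((press_rate, food_rate, percent))
    return points


# Hypothetical windows: (presses, food deliveries) in each 2-min sample.
windows = [(0, 0), (0, 0), (1, 0), (1, 1), (10, 2), (10, 1), (10, 3), (30, 3)]

for press_rate, food_rate, percent in empirical_feedback(windows):
    print(f"{press_rate:5.1f} press/min -> {food_rate:4.2f} food/min "
          f"({percent:4.1f}% of windows)")
```

The resulting points are what a feedback equation would then be fitted to; the fitting step itself is omitted here.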

Although the parameter a was close to 1.0 in Figure 17, it usually exceeds 1.0, ranging as high as 10 or more when other such data sets are fitted to the same equation (Baum, 1992). This presents a puzzle, because one might suppose that as response rate dropped to extremely low levels, a food delivery would have set up prior to each response, and each response would deliver food, with the result that the feedback function would approximate that for a fixed-ratio 1 schedule near the origin. A possible explanation as to why the slope (1/a) continues to fall short of 1.0 near the origin might be that when food deliveries become rare, a sort of reinstatement effect occurs, and each delivery induces a burst of pressing. Since no delivery has had a chance to set up, the burst usually fails to produce any food and thus ensures several presses for each food delivery.

The relevance of feedback functions has been challenged by two sorts of experiment. In one type, a short-term contingency is pitted against a long-term contingency, and the short-term contingency is shown to govern performance to a greater extent than the long-term contingency (e.g., Thomas, 1981; Vaughan & Miller, 1984). In these experiments, the key pecking or lever pressing produces food in the short-term contingency, but cancels food in the long-term contingency. Responding is suboptimal, because the food rate would be higher if no responding occurred. That responding occurs in these experiments supports the present view. The positively correlated food induces the food-related activity (pressing or pecking); the other, negatively correlated food, when it occurs, would simply contribute to inducing the operant activity.

These experiments may be compared to observations such as negative automaintenance (Williams & Williams, 1969) or what Herrnstein and Loveland (1972) called “food avoidance.” Even though key pecking cancels the food delivery, still the pigeon pecks, because the food, when it occurs, induces pecking (unconditionally; Figure 1). Sanabria, Sitomer, and Killeen (2006) showed, however, that under some conditions the negative contingency is highly effective, reducing the rate of pecking to low levels despite the continued occurrence of food. As discussed earlier in connection with Figure 13, the negative contingency between key pecking and food implies also a positive contingency between other activities and food, particularly pecking off the key. Similar considerations explain contrafreeloading—the observation that a pigeon or rat will peck at a key or press a lever even if a supply of the same food produced by the key or lever is freely available (Neuringer, 1969; 1970). The pecking or pressing apparently is induced by the food produced, even though other food is available. Even with no pecking key available, Palya and Zacny (1980) found that untrained pigeons fed at a certain time of day would peck just about anywhere (any “spot”) around that time of day.

The other challenge to the relevance of feedback functions is presented by experiments in which the feedback function is changed with no concomitant change in behavior. For example, Ettinger, Reid, and Staddon (1987) studied rats' lever pressing that produced food on interlocking schedules—schedules in which both time and pressing interchangeably advance the schedule toward the availability of food. At low response rates, the schedule resembles an interval schedule, because the schedule advances mainly with the passage of time, whereas at high response rates, the schedule resembles a ratio schedule, because the schedule advances mainly due to responding. Ettinger et al. varied the interlocking schedule and found that average response rate decreased in a linear fashion with increasing food rate, but that variation in the schedule had no effect on this relation. They concluded that the feedback function was irrelevant. The schedules they chose, however, were all functionally equivalent to fixed-ratio schedules, and the rats responded exactly as they would on fixed-ratio schedules. In fixed-ratio performance, induced behavior other than lever-pressing tends to be confined to a period immediately following food, as in Figure 1; once pressing begins, it tends to proceed uninterrupted until food again occurs. The postfood period increases as the ratio increases, thus tending to conserve the relative proportions of pressing and other induced activities. The slope of the linear feedback function determines the postfood period, but response rate on ratio schedules is otherwise insensitive to it (Baum, 1993; Felton & Lyon, 1966). The decrease in average response rate observed by Ettinger et al. occurred for the same reason it occurs with ratio schedules: because the postfood period, even though smaller for smaller ratios, was an increasing proportion of the interfood interval as the effective ratio decreased. 
Thus, the conclusion that feedback functions are irrelevant was unwarranted.

When we try to understand basic phenomena, such as the difference between ratio and interval schedules, feedback functions prove indispensable. The two different feedback functions, linear for ratio schedules (Figure 15) and curvilinear for interval schedules (Figures 16 and 17), explain the difference in response rate on the two types of schedule (Baum, 1981b). On ratio schedules, the contingency loop shown in Figure 12 includes only positive feedback and drives response rate toward the maximum possible under the constraints of the situation. This maximal response rate is necessarily insensitive to variation in ratio or food rate. On interval schedules, where food rate levels off, food induces a more moderate response rate (Figure 17). The difference in feedback function is important for understanding both these laboratory situations and everyday situations in which people deal with contingencies like ratio schedules, where their own behavior alone matters to production, as opposed to contingencies like interval schedules, where other factors, out of their control, partially determine production.
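The contrast between the two feedback functions can be made concrete with a small sketch. It assumes a linear ratio function r = B/n and, for the interval schedule, a hyperbolic function r = 1/(t + a/B). Both forms, the parameter values, and the function names are illustrative assumptions rather than the fitted functions from the figures.

```python
def ratio_feedback(press_rate, n=20):
    """ASSUMED linear feedback for a ratio schedule: one food per n presses."""
    return press_rate / n


def interval_feedback(press_rate, t=0.5, a=1.0):
    """ASSUMED hyperbolic feedback for an interval schedule; levels off at 1/t."""
    if press_rate <= 0:
        return 0.0
    return 1.0 / (t + a / press_rate)


def gain_from_one_more_press(feedback, press_rate):
    """Extra food rate obtained by raising the press rate by one unit."""
    return feedback(press_rate + 1) - feedback(press_rate)


if __name__ == "__main__":
    for rate in (1, 10, 100):
        print(
            f"press rate {rate:>3}: "
            f"ratio gain = {gain_from_one_more_press(ratio_feedback, rate):.4f}, "
            f"interval gain = {gain_from_one_more_press(interval_feedback, rate):.4f}"
        )
```

In this sketch the gain from additional pressing stays constant at every press rate on the ratio schedule, whereas on the interval schedule it shrinks toward zero as food rate levels off.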

Explanatory Power

The conceptual power of this framework (allocation, induction, and contingency) far exceeds that of the contiguity-based concept of reinforcement. Whether we redefine the term and call the process “reinforcement,” or call it something else (“inducement”?), it explains a wide range of phenomena. We have seen that it explains standard results such as operant and respondent conditioning, operant–respondent interactions, and adjunctive behavior. We saw that it explains avoidance and shock-maintained responding. It can explain the effects of noncontingent events (e.g., “noncontingent reinforcement,” an oxymoron). Let us conclude with a few examples from the study of choice, the study of stimulus control, and observations of everyday life.

Dynamics of choice

In a typical choice experiment, a pigeon pecks at two response keys, each of which occasionally and unpredictably operates a food dispenser when pecked, based on the passage of time (variable-interval schedules). Choice or preference is measured as the logarithm of the ratio of pecks at one key to pecks at the other. To look at putative reinforcement, we examine the log ratio of pecks at the just-productive key (left or right) to pecks at the other key (right or left). With the right procedures, analyzing preference following food allows us to separate any strengthening effect from the inducing effect of food (e.g., Cowie, Davison, & Elliffe, 2011). For example, a striking result shows that food and stimuli predicting food induce activity other than the just-productive activity (Boutros, Davison, & Elliffe, 2011; Davison & Baum, 2006; 2010; Krägeloh, Davison, & Elliffe, 2005).
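As a minimal sketch of this preference measure, the log ratio can be computed from peck counts. The base-10 logarithm, the function name `log_preference`, and the example counts are assumptions for illustration, not data or procedures from the studies cited.

```python
import math


def log_preference(pecks_just_productive, pecks_other):
    """Log (base 10, an assumption) ratio of pecks at the just-productive key
    to pecks at the other key. Zero means indifference; positive values mean
    preference for the just-productive key."""
    if pecks_just_productive <= 0 or pecks_other <= 0:
        raise ValueError("both peck counts must be positive to take a log ratio")
    return math.log10(pecks_just_productive / pecks_other)


if __name__ == "__main__":
    # Hypothetical counts, invented for illustration only.
    print(log_preference(100, 10))  # tenfold preference for the just-productive key
    print(log_preference(30, 30))   # equal pecking at both keys
```

A preference pulse would then appear as a high positive value of this measure immediately after food that declines toward zero as the interfood interval proceeds.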

A full understanding of choice requires analysis at various time scales, from extremely small (focusing on local relations) to extremely large (focusing on extended relations) (Baum, 2010). Much research on choice has concentrated on extended relations by aggregating data across many sessions (Baum, 1979; Herrnstein, 1961). Some research has examined choice in a more local time frame by aggregating data from one food delivery to another (Aparicio & Baum, 2006; 2009; Baum & Davison, 2004; Davison & Baum, 2000; Rodewald, Hughes, & Pitts, 2010). A still more local analysis examines changes in choice within interfood intervals, from immediately following food delivery until the next food delivery. A common result is a pulse of preference immediately following food in favor of the just-productive alternative (e.g., Aparicio & Baum, 2009; Davison & Baum, 2006; 2010). After a high initial preference, choice typically falls toward indifference, although it may never actually reach indifference. These preference pulses seem to reflect local induction of responding at the just-productive alternative. An even more local analysis looks at the switches between alternatives or the alternating visits at the two alternatives (Aparicio & Baum, 2006; 2009; Baum & Davison, 2004). A long visit to the just-productive alternative follows food produced by responding at that alternative, and this too appears to result from local induction, assuming, for example, that pecking left plus food induces pecking at the left key whereas pecking right plus food induces pecking at the right key. Since a previous paper showed that the preference pulses are derivable from the visits, the long visit may reflect induction more directly (Baum, 2010).

Differential-outcomes effect

In the differential-outcomes effect, a discrimination is enhanced by arranging that the response alternatives produce different outcomes (Urcuioli, 2005). In a discrete-trials procedure, for example, rats are presented on some trials with one auditory stimulus (S1) and on other trials with another auditory stimulus (S2). In the presence of S1, a press on the left lever (A1) produces food (X1), whereas in the presence of S2, a press on the right lever (A2) produces liquid sucrose (X2). The discrimination that forms—pressing the left lever in the presence of S1 and pressing the right lever in the presence of S2—is greater than when both actions A1 and A2 produce the same outcome. The result is a challenge for the molecular paradigm because, focusing on contiguity, it sees the effect of the different outcomes as an effect of a future event on present behavior. The “solution” to this problem has been to bring the future into the present by positing unseen “representations” or “expectancies” generated by S1 and S2 and supposing that those invisible events determine A1 and A2. In the present molar view, we may see the result as an effect of differential induction by the different outcomes (Ostlund & Balleine, 2007). The combination of S1 and X1 makes S1 a conditional inducer of X1-related activities. The contingency between A1 and X1 makes A1 an X1-related activity. As a result, S1 induces A1. Similarly, as a result of the contingency between S2 and X2 and the contingency between A2 and X2, S2 induces A2, as an X2-related activity. In contrast, when both A1 and A2 produce the same outcome X, the only basis for discrimination is the relation of S1, A1, and X in contrast with the relation of S2, A2, and X. A1 is induced by the combination S1-plus-X, and A2 is induced by the combination S2-plus-X. 
Since S1 and S2 are both conditional inducers related to X, S1 would tend to induce A2 to some extent, and S2 would tend to induce A1 to some extent, thereby reducing discrimination. These relations occur, not just on any one trial, but as a pattern across many trials. Since no need arises to posit any invisible events, the explanation exceeds the molecular explanation on the grounds of plausibility and elegance.

Cultural transmission

The present view offers a good account of cultural transmission. Looking on cultural evolution as a Darwinian process, we see that the recurrence of a practice from generation to generation occurs partly by imitation and partly by rules (Baum, 1995b; 2000; 2005; Boyd & Richerson, 1985; Richerson & Boyd, 2005). A pigeon in a chamber where food occasionally occurs sees another pigeon pecking at a ping-pong ball and subsequently pecks at a similar ball (Epstein, 1984). The model pigeon's pecking induces similar behavior in the observer pigeon. When a child imitates a parent, the parent's behavior induces behavior in the child, and if the child's behavior resembles the parent's, we say the child imitated the parent. As we grow up, other adults serve as models, particularly those with the trappings of success—teachers, coaches, celebrities, and so forth—and their behavior induces similar behavior in their imitators (Boyd & Richerson, 1985; Richerson & Boyd, 2005). Crucial to transmission, however, are the contingencies into which the induced behavior enters following imitation. When it occurs, is it correlated with resources, approval, or opportunities for mating? If so, it will now be induced by those PIEs, no longer requiring a model. Rules, which are discriminative stimuli generated by a speaker, induce behavior in a listener, and the induced behavior, if it persists, enters into correlations with both short-term PIEs offered by the speaker and, sometimes, long-term PIEs in long-term relations with the (now no longer) rule-governed behavior (Baum, 1995b; 2000; 2005).

Working for wages

Finally, let us consider the everyday example of working for wages. Zack has a job as a dishwasher in a restaurant and works every week from Tuesday to Saturday for 8 hr a day. At the end of his shift on Saturday, he receives an envelope full of money. Explaining his persistence in this activity presents a problem for the molecular paradigm, because the money on Saturday occurs at such a long delay after the work on Tuesday or even the work on Saturday. No one gives Zack money on any other occasion—say, after each dish washed. The molecular paradigm's “solution” to this has been to posit hidden reinforcers that strengthen the behavior frequently during his work. Rather than having to resort to such invisible causes, we may turn to the molar view and assert, with common sense, that the money itself maintains the dishwashing. We may look upon the situation as similar to a rat pressing a lever, but scaled up, so to speak. The money induces dishwashing, not just in one week, but as a pattern of work across many weeks. We explain Zack's first week on the job a bit differently, because he brings to the situation a pattern of showing up daily from having attended school, which is induced by the promises of his boss. The pattern of work must also be seen in the larger context of Zack's life activities, because the money is a means to ends, not an end in itself; it permits other activities, such as eating and socializing (Baum, 1995a; 2002).

Omissions and Inadequacies

As I said at the outset, to cover all the phenomena known to behavior analysts in this one article would be impossible. The article aims to set out a conceptual framework that draws those phenomena together in a way not achieved by the traditional framework.