Summary

Species-specific vocalizations fall into two broad categories: those that emerge during maturation, independent of experience, and those that depend on early life interactions with conspecifics. Human language and the communication systems of a small number of other species, including songbirds, fall into this latter class of vocal learning. Self-monitoring has been assumed to play an important role in the vocal learning of speech [1–3], and studies demonstrate that perception of one’s own voice is crucial for both the development and lifelong maintenance of vocalizations in humans and songbirds [4–8]. Experimental modifications of auditory feedback can also change vocalizations in both humans and songbirds [9–13]. However, with the exception of large manipulations of timing [14,15], no study to date has directly examined the use of auditory feedback in speech production under the age of four. Here we use a real-time formant perturbation task [16] to compare the response of toddlers, children and adults to altered feedback. Children and adults reacted to this manipulation by changing their vowels in a direction opposite to the perturbation. Surprisingly, toddlers’ speech did not change in response to altered feedback, suggesting that long-held assumptions regarding the role of self-perception in articulatory development need to be reconsidered.

Results

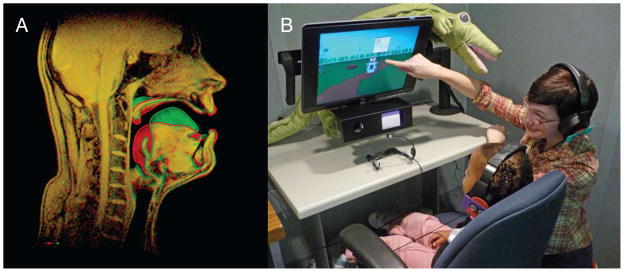

In humans, there is a clearly defined linkage between vocal tract configuration and the acoustic structure of speech. The two vocal tract configurations shown in Figure 1a have different resonant frequencies, leading to the amplification of different harmonics in the speech signal. Speech researchers call these amplified harmonics formants, and listeners rely heavily on formants to determine what consonant or vowel a speaker intended to produce. As speakers shift the configuration of their vocal tract, the formant structure of their utterances shifts accordingly. By attending to the linkage between their own unique vocal tract configurations and the resulting speech acoustics, young children could fine-tune the mapping between the motor commands sent from their brains to the vocal organs and the resulting acoustic output.

Figure 1.

A. Midsagittal adult vocal tract showing the positioning of articulators when producing two different vowels that differ in height and frontness of the vocal tract constriction. The different tongue positions result in different resonances in the vocal tract and perception of different vowels. B. Author JF explaining the computer game to a toddler.

In the current study, we look at real-time compensatory behavior in vowel production when auditory feedback is modified. We use a rapid signal processing system to change the formant frequencies of vowels produced by children and adults. Previous work with adults has demonstrated that when talkers receive auditory feedback in which their own vowel formants are shifted to new locations in the vowel space, they rapidly compensate for the perturbations, altering the formant frequencies of the vowels they produce in a direction opposite to the perturbation [16–19]. This response pattern has been interpreted as evidence for the existence of a predictive mechanism in speech motor control [17]. This phenomenon also demonstrates that even adult speakers remain reliant on auditory feedback to fine-tune the accuracy of their vocal productions.

We tested three different age groups of native-English talkers: Adults (26 adult females with a mean age of 18.9 years), Young Children (26 children with a mean age of 51.5 months) and Toddlers (20 children with a mean age of 29.8 months). Each talker produced 50 utterances of the word “bed”. To elicit these utterances from the young children and toddlers, a video game was developed in which the children would help a robot cross a virtual playground by saying the robot’s ‘magic’ word “bed” (Figure 1b). During the first 20 utterances, talkers received normal acoustic feedback through a pair of headphones. During the last 30 utterances, talkers received feedback in which the frequencies of their first and second formants (F1 and F2 respectively) were perturbed using a real-time formant shifting system. F1 was increased by 200 Hz and F2 was decreased by 250 Hz. This manipulation changed talkers’ productions of the word “bed” into their own voice saying the word “bad”.

For each utterance, the ‘steady-state’ F1 and F2 frequencies were determined by averaging estimates of each formant from 40% to 80% of the way through the vowel. These results were then normalized for each individual by subtracting the average of that individual’s baseline utterances, defined as the last 15 utterances before feedback was altered (i.e., utterances 6–20). For statistical analyses, individual measures of compensation in F1 and F2 were computed with the magnitude based on the difference in average frequency between the last 20 utterances (i.e., utterances 31–50) and the baseline used in normalization. The sign was determined based on whether the change in production opposed (positive) or followed (negative) the direction of the perturbation.
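The measures described above can be sketched in a few lines of Python. This is a minimal illustration, not the analysis code used in the study: the function names are hypothetical, and the way the 40% and 80% points are rounded to sample indices is an assumption.

```python
from statistics import mean

def steady_state(track):
    """Average the formant estimates from 40% to 80% of the way through a vowel.

    track: formant estimates (Hz) for one vowel, in time order.
    Truncating the window edges to integer indices is an assumption.
    """
    n = len(track)
    start, stop = int(0.4 * n), int(0.8 * n)
    return mean(track[start:stop])

def compensation(per_utterance_hz, shift_hz):
    """Signed compensation for one talker and one formant.

    per_utterance_hz: 50 steady-state values, utterance 1 first.
    shift_hz: applied perturbation (+200 Hz for F1, -250 Hz for F2).
    """
    baseline = mean(per_utterance_hz[5:20])   # utterances 6-20
    final = mean(per_utterance_hz[30:50])     # utterances 31-50
    change = final - baseline
    # Positive when production moved against the shift, negative when it followed it.
    return -change if shift_hz > 0 else change
```

For example, a talker whose F1 falls from 600 Hz at baseline to 550 Hz under the +200 Hz shift would receive a compensation score of +50 Hz.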

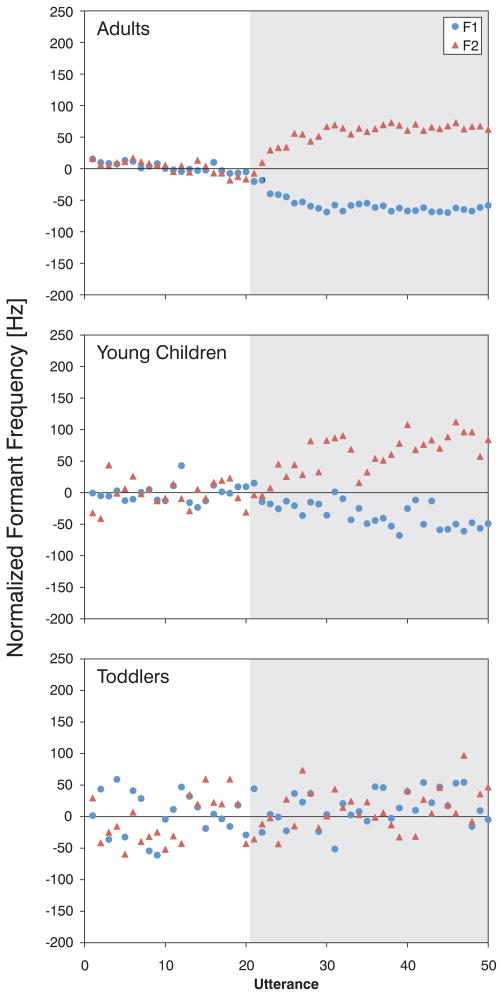

The normalized results, averaged across individuals in each group, are plotted in Figure 2. As in previous formant perturbation experiments [16, 19], the adults spontaneously compensated by altering the frequency of F1 and F2 in a direction opposite to that of the perturbation (top panel). The young children also compensated in a manner similar to the adults (middle panel). However, the toddlers did not alter production of F1 or F2 in response to the perturbation (bottom panel).

Figure 2.

Normalized F1 (circles) and F2 (triangles) frequency estimates across time for Adults (Top panel), Young Children (Middle panel), and Toddlers (Bottom panel). The shaded region indicates utterances during which talkers received altered auditory feedback.

To verify these observations, individual measures of compensation in F1 and F2 were computed. For both F1 and F2, an Analysis of Variance (ANOVA) revealed a significant effect between groups [F1: F(2,69) = 7.23, p < 0.01; F2: F(2,69) = 6.38, p < 0.01]. Multiple comparisons with Bonferroni correction confirmed that the compensation by the adults and young children was significantly different from that of the toddlers (p < 0.01 for both F1 and F2) but no significant differences between the adults and young children were observed (p > 0.99 for both F1 and F2).
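As a reading aid, the sketch below shows how a one-way ANOVA F statistic and its degrees of freedom are obtained, and how a Bonferroni correction scales a p value. It is an illustration only: the function names are hypothetical, no data from the study are used, and the pairwise test to which the correction was applied is not specified in the text. With three groups of 26, 26 and 20 talkers, the degrees of freedom are 3 − 1 = 2 and 72 − 3 = 69, matching the F(2,69) reported above.

```python
from statistics import mean

def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of samples, one per group."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand = mean([x for g in groups for x in g])
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    # Variation of the observations around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

def bonferroni(p_value, n_comparisons):
    """Bonferroni-adjusted p value, capped at 1."""
    return min(1.0, p_value * n_comparisons)
```

Because the adjusted value is capped at 1, a pairwise comparison with a large uncorrected p value is reported as p > 0.99 after correction for three comparisons.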

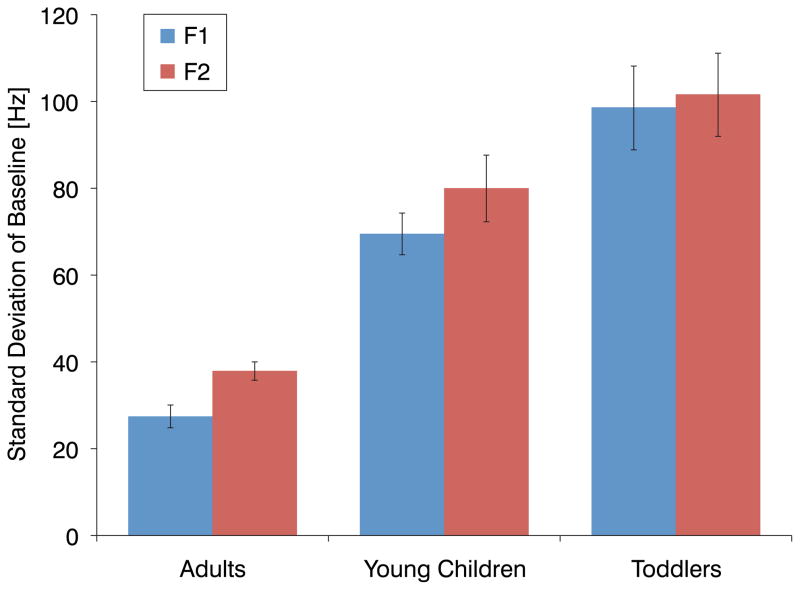

An examination of individuals’ baseline utterances revealed that variability in production decreased with age. The average individual’s standard deviation in F1 and F2 during production of baseline utterances is plotted in Figure 3. For both F1 and F2, an ANOVA revealed a significant effect between groups [F1: F(2,69) = 37.23, p < 0.001; F2: F(2,69) = 22.32, p < 0.001]. Multiple comparisons with Bonferroni correction confirmed that for both F1 and F2, the differences between all groups were significant (p < 0.05).

Figure 3.

Standard deviation in F1 and F2 of an average individual’s production of baseline utterances for each of the three groups. Standard error bars are shown.

Discussion

Our data suggest that by the age of 4, children are monitoring their speech productions in an adult-like manner. Toddlers, in contrast, do not appear to self-regulate their vowel acoustics like adults or young children do. Feedback discrepancies with their own speech simply do not produce compensatory behaviors. At first blush, these results seem paradoxical. Perceptual attunement to the vowel space of the native language is in evidence by 6 months of age [20]. Infants readily detect small deviations in others’ pronunciation of familiar words [21] and begin babbling in prosodic patterns characteristic of the language they have been exposed to [22]. By the age of 24 months, American children have an average vocabulary size of about 300 words [23]. Thus, by two years of age, toddlers appear to be well on the way to acquiring the sound structure of their native language. If toddlers do not automatically monitor their own speech productions for accuracy as adults and young children do, then how do they learn to produce the speech sounds used in their language community? We see two kinds of possible answers to this question: 1) explanations that are consistent with the idea that feedback error correction is important at all ages but that its role is context-dependent in young children, and 2) hypotheses that suggest that error correction based on feedback of the child’s own speech develops only after the internal representation of a sound category is robust.

One context-dependent explanation for our data is that children may require different cognitive and/or social conditions to learn language at different ages. For example, Baldwin [24] showed that by 18 months, the social cue of a speaker’s gaze direction is more important for infant lexical acquisition than cues that had previously been important, such as the salience of an object or the temporal contiguity of object and name. Similarly, the speech processing behavior of very young children during word learning varies with different cognitive demands. For some online speech testing procedures, young children do not attach labels to objects as readily as they do when given more naturalistic contextual support or simpler tasks [25].

Social context might also modulate when auditory feedback can influence the sound representation. As has been shown with songbirds, social or public use of vocalizations and vocal practice in early learning can be differentiated, and feedback plays a different role in each type of vocalization [26]. For our 2-year-olds, the minimal speech produced by the adults during the task may have resulted in a situation where fine-tuning of production was minimized. In addition, the words produced by the children were reinforced by the video game independent of their accuracy in producing the vowel: the robot progressed through the playground regardless of whether the child did or did not compensate. Note that this was true for both toddlers and young children, so by itself it does not explain the age-related changes.

Alternatively, more in line with our second class of explanation, feedback error correction may not be adaptive during the earliest stages of word production, perhaps because of the magnitude of variability observed in the motor activities of toddlers. If production variance alone were the issue, compensations should only be observed when variability is reduced to a tolerable amount. To explore this hypothesis, two types of analyses were conducted. In the first, regressions were computed between an individual’s compensation magnitude and production variability in the baseline values of F1 and F2. When the regressions were carried out within age groups and when the compensation results of the toddler and young children groups were pooled together, no significant relationship (p > 0.3) was found. In the second analysis, we tested whether the perturbation was influencing articulation even if the youngest children did not compensate. It is conceivable that the altered feedback might induce instability even if mature compensatory behavior was not developed. To test this, the standard deviations of an individual’s last 15 utterances of the baseline phase and of the shift phase were compared. For both the toddlers and young children, no significant difference in standard deviation was observed for either F1 or F2. These results suggest that variability per se is not the issue.
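The two analyses can be illustrated with a short Python sketch. The choice of the Pearson correlation as the summary of the regression, the use of the sample (n − 1) standard deviation, and the function names are all assumptions made for illustration; the text does not specify these details.

```python
from math import sqrt
from statistics import mean, stdev

def pearson_r(x, y):
    """Correlation between, e.g., baseline variability (x) and compensation (y)."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def phase_sds(per_utterance_hz):
    """Standard deviation of the last 15 utterances of each phase.

    per_utterance_hz: 50 steady-state values, utterance 1 first.
    """
    baseline_sd = stdev(per_utterance_hz[5:20])   # utterances 6-20
    shift_sd = stdev(per_utterance_hz[35:50])     # utterances 36-50
    return baseline_sd, shift_sd
```

Each talker contributes one (variability, compensation) pair to the first analysis and one (baseline, shift) pair of standard deviations to the second.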

An additional possibility in line with our second class of explanation is that the rapid growth of the vocal tract during the first two years of life may combine with motor variability to make feedback-based control sub-optimal. The first couple of years of life is one of the periods associated with rapid changes in vocal tract size and configuration, primarily due to descent of the larynx [27]. A consequence of this rapid growth is abrupt change in vowel formant values between ages one and four [28]. One solution to this early phase of vocal learning is for learners to regulate their productions to the vocalizations of their communication partners rather than to use feedback from their own ill-defined targets for error correction. This suggestion is consistent with growing evidence that contingent adult behaviors shape the course of vocal learning in both birdsong acquisition and speech development [29, 30].

The most remarkable evidence for socially-guided vocal learning comes from the study of the brown-headed cowbird [31]. Juvenile males, raised in isolation with females who do not sing, nevertheless acquired mature, species-specific songs. Video analyses revealed that the immature male vocalizations were shaped by visual feedback from females (small wing strokes). Thus, without hearing mature male models, these young males were able to learn songs that contained the markers of regional dialects and songs that could strongly elicit female mating responses. As this example demonstrates, adult input to the vocal learning process can vary over a wide spectrum ranging from an acoustic template for assessing articulation error [32, 33] to non-verbal reinforcements for correct articulation [31, 34].

The period between ages one and four is marked by many other cognitive and linguistic developments associated with speech processing. For example, there are questions concerning the immaturity of the receptive phonology of children in this age group when engaged in word learning [35], despite evidence that even younger children are able to make fine-grained speech discriminations. While the auditory speech perception system and the auditory control of speech articulation clearly overlap and share resources, each system appears to have unique requirements and neural architectures tuned to meet those requirements. Single cell populations in the auditory cortex of non-human primates have been shown to be selectively activated or inhibited during the animal’s vocalizations as compared to listening to others [36, 37]. Unique fMRI activity in feedback compared to listening conditions has also been shown in humans [38]. The auditory feedback system, itself, also has different functional components including a mapping between articulator movements and acoustics, an error detection system, and a computational model that learns from the errors and computes new trajectories for speech movements. All of these components must undergo development because the vocal tract changes in size and shape and articulatory precision changes over time. Only through the use of real-time perturbation experiments of the kind performed here will we be able to begin to tease apart the components of this complex network of processing and understand the passage to mature communication.

In summary, an age-related difference in the use of auditory feedback to control speech production was observed. When exposed to altered feedback in which formant frequencies were perturbed, both 4-year-olds and adults compensated but 2-year-olds did not. These results suggest that the auditory feedback component of the speech motor control system either may be suppressed in infants and toddlers or may develop between 2 and 4 years of age. While it is not possible to distinguish between these two classes of hypotheses using the present data, the finding that toddlers do not monitor their own auditory feedback in a manner similar to adults has broad implications for models of speech learning.

Experimental Procedures

Participants

The Adult group consisted of 26 female undergraduate students at Queen’s University (mean age of 18.9 years, range 17–22).

For the Young Children group, a total of 31 children between the ages of 3 and 4 years old were recruited in Kingston and Mississauga, Ontario. However, five of these children were excluded, four due to problems in tracking their formants and one due to equipment malfunction, leaving a group of 26 children with a mean age of 51.5 months and a range of 43–59 months.

For the Toddler group, a total of 50 two-year-old talkers were recruited in Mississauga, Ontario. Twenty-three of the toddlers did not complete the experiment: ten refused to talk and 13 refused to wear the headphones. Seven of the remaining 27 toddlers who did complete the experiment were also excluded. Of these seven, six did not produce their utterances with consistent timing (and thus did not receive altered feedback) and one did not produce utterances of the target vowel during the baseline phase. This left 20 toddlers with a mean age of 29.8 months (range of 23–35 months). When considering all the toddlers recruited for this study, one may be concerned about the high rate of attrition and the potential for selection bias. We note, however, that no compensation was observed from the toddlers included in this study. Thus, even if these toddlers were in some sense more advanced than the average 2-year-old, we can still be confident that less mature toddlers would also not compensate for the altered formant feedback.

All talkers in the experiment spoke English as their first language and reported no speech or language impairments. The protocol for this study was approved by the institutional ethics review board at both Queen’s University and the University of Toronto Mississauga. All of the adult talkers provided informed consent. All of the young children and toddlers provided verbal assent and their guardians provided informed consent.

Equipment

All of the adults and 14 of the young children included in the study were tested at Queen’s University. The remaining 12 young children and the 20 toddlers included in the study were tested at University of Toronto Mississauga. The same equipment was used in both locations and was identical to that reported in MacDonald et al. [19].

Talkers were seated in front of a computer monitor in a sound-insulated booth (Industrial Acoustics Company, Bronx, NY). Adult talkers were instructed to say the word “bed” at a natural rate and level when it appeared on a monitor in front of them. The young children and toddlers were instructed that they would be playing a computer game where they would help a forgetful robot move across a playground. At the beginning of each level in the game, the playground would appear with the robot at one end and a billboard with a picture of a bed at the other. The children were instructed that they could help the robot move by saying the word “bed”. When a child produced an utterance of “bed”, an operator pressed a button and the robot advanced forward through the playground. The children were familiarized with the game using a training level that required five utterances for the robot to traverse the playground. The game was started after the training level. For each of the five levels in the game, ten utterances were required for the robot to completely traverse the playground.

The speech was recorded using a headset microphone (Shure WH20), amplified (Tucker-Davis Technologies MA3 microphone amplifier), low-pass filtered with a cutoff frequency of 4500 Hz (Krohn-Hite 3384 filter), and digitized at 10 kHz (National Instruments PXI-8106 embedded controller). The National Instruments system generated formant estimates every nine speech samples. Based on these estimates, filter coefficients were calculated to produce formant shifts and the filtering was conducted by the National Instruments system. To mask bone-conducted feedback, the manipulated voice signal was amplified and mixed with speech noise (Madsen Midimate 622 audiometer), and presented over headphones (Sennheiser HD 265) such that the speech and noise were presented at approximately 80 and 50 dBA SPL respectively.

Online formant shifting and detection of voicing

Detection of voicing and formant shifting were performed as previously described by MacDonald et al. [19]. Voicing was detected using a statistical amplitude-threshold technique. The formant shifting was achieved in real time using an infinite impulse response (IIR) filter. Formants were estimated every 900 μs using an iterative Burg algorithm [39]. Filter coefficients were computed based on these estimates such that a pair of spectral zeroes was placed at the location of the existing formant frequency and a pair of spectral poles was placed at the desired frequency of the new formant.
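The pole-zero placement described above can be illustrated with a second-order IIR section. The sketch below is not the authors' implementation: the 100 Hz bandwidth used to set the pole and zero radius, the example F1 of 600 Hz, and all function names are assumptions, and the overall gain is left unnormalized. It also confirms that one estimate every nine samples at 10 kHz corresponds to the 900 μs update interval.

```python
import cmath
import math

FS = 10_000                              # sampling rate in Hz (from the text)
UPDATE_INTERVAL_US = 9 * 1_000_000 / FS  # nine samples per estimate -> 900 microseconds

def resonance_coeffs(freq_hz, bandwidth_hz, fs=FS):
    """Polynomial [1, c1, c2] in z^-1 with a conjugate root pair at freq_hz."""
    r = math.exp(-math.pi * bandwidth_hz / fs)   # root radius (bandwidth is assumed)
    theta = 2 * math.pi * freq_hz / fs           # root angle
    return [1.0, -2.0 * r * math.cos(theta), r * r]

def shift_filter(old_hz, new_hz, bandwidth_hz=100.0, fs=FS):
    """Return (b, a): zeros at the existing formant, poles at the desired one."""
    b = resonance_coeffs(old_hz, bandwidth_hz, fs)
    a = resonance_coeffs(new_hz, bandwidth_hz, fs)
    return b, a

def gain(b, a, freq_hz, fs=FS):
    """Magnitude response of the section at freq_hz."""
    z_inv = cmath.exp(-2j * math.pi * freq_hz / fs)
    num = b[0] + b[1] * z_inv + b[2] * z_inv ** 2
    den = a[0] + a[1] * z_inv + a[2] * z_inv ** 2
    return abs(num / den)

def apply_filter(b, a, x):
    """Filter the samples x with the direct-form difference equation."""
    y = []
    for n, xn in enumerate(x):
        x1 = x[n - 1] if n >= 1 else 0.0
        x2 = x[n - 2] if n >= 2 else 0.0
        y1 = y[n - 1] if n >= 1 else 0.0
        y2 = y[n - 2] if n >= 2 else 0.0
        y.append(b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2)
    return y

# Hypothetical F1 of 600 Hz raised by the 200 Hz shift used in this study.
b, a = shift_filter(600.0, 800.0)
```

With these assumed values, the section attenuates energy near 600 Hz and boosts energy near 800 Hz, which is the intended effect of moving a formant. In a real-time system the coefficients would be recomputed at every formant estimate.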

Offline formant analysis

The recorded data were analyzed using the same procedure as MacDonald et al. [19]. The boundaries of the vowel segment in each utterance were estimated using an automated process based on the harmonicity of the power spectrum. These boundaries were then inspected by hand and corrected if required.

The first three formant frequencies were estimated offline from the first 25 ms of a vowel segment using the same algorithm as that used in online shifting. The window was then shifted by 1 ms and the formants were estimated again, and this was repeated until the end of the vowel segment was reached. For each vowel segment, a single “steady-state” value for each formant was calculated by averaging the estimates for that formant from 40% to 80% of the way through the vowel. The “steady-state” results for F1, F2, and F3 for each individual were plotted and inspected. Any estimate that was incorrectly categorized as another formant (e.g., F2 being mislabeled as F1) was corrected by hand.
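The sliding-window scheme can be sketched as follows. The sketch assumes that the offline analysis runs at the 10 kHz recording rate and that the final window must fit entirely within the vowel segment; neither detail is stated in the text, and the function name is hypothetical.

```python
def window_starts(n_samples, fs=10_000, win_ms=25, hop_ms=1):
    """Start indices of successive analysis windows within one vowel segment.

    n_samples: length of the vowel segment in samples.
    Returns an empty list if the segment is shorter than one window.
    """
    win = fs * win_ms // 1000   # 250 samples at 10 kHz
    hop = fs * hop_ms // 1000   # 10 samples at 10 kHz
    return list(range(0, n_samples - win + 1, hop))
```

Under these assumptions, a 100 ms vowel (1000 samples) yields 76 windows, and the 40% to 80% portion of the resulting formant track is then averaged to give the steady-state value.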

Highlights

Role of auditory feedback in control of speech production changes across lifespan

Adults and young children (45–60 months) use auditory speech feedback similarly

Toddlers (23–35 months) do not compensate for altered feedback

Acknowledgments

This research was supported by the National Institute on Deafness and Other Communication Disorders Grant DC-08092 and the Natural Sciences and Engineering Research Council of Canada.

References

- 1. Callan DE, Kent RD, Guenther FH, Vorperian HK. An auditory-feedback-based neural network model of speech production that is robust to developmental changes in the size and shape of the articulatory system. J Speech Lang Hear Res. 2000;43:721–736. doi: 10.1044/jslhr.4303.721.

- 2. Howard IS, Messum P. Modeling the development of pronunciation in infant speech acquisition. Motor Control. 2011;15:85–117. doi: 10.1123/mcj.15.1.85.

- 3. Bailly G. Learning to speak. Sensorimotor control of speech movements. Speech Communication. 1997;22:251–267.

- 4. Oller DK, Eilers RE. The role of audition in infant babbling. Child Dev. 1988;59:441–449.

- 5. Osberger MJ, McGarr N. Speech production characteristics of the hearing impaired. In: Lass N, editor. Speech and Language: Advances in Basic Research and Practice. Vol. 8. New York: Academic Press; 1982. pp. 221–283.

- 6. Konishi M. Effects of deafening on song development in American robins and black-headed grosbeaks. Z Tierpsychol. 1965;22:584–599.

- 7. Waldstein RS. Effects of postlingual deafness on speech production: Implications for the role of auditory feedback. J Acoust Soc Am. 1990;88:2099–2114. doi: 10.1121/1.400107.

- 8. Nordeen KW, Nordeen EJ. Auditory feedback is necessary for the maintenance of stereotyped song in adult zebra finches. Behav Neural Biol. 1992;57:58–66. doi: 10.1016/0163-1047(92)90757-u.

- 9. Manabe K, Sadr EI, Dooling RJ. Control of vocal intensity in budgerigars (Melopsittacus undulatus): Differential reinforcement of vocal intensity and the Lombard effect. J Acoust Soc Am. 1998;103:1190–1198. doi: 10.1121/1.421227.

- 10. Lombard E. Le signe de l’élévation de la voix (The sign of the rise in the voice). Ann Malad l’Oreille Larynx Nez Pharynx (Annals of diseases of the ear, larynx, nose and pharynx). 1911;37:101–119.

- 11. Osmanski MS, Dooling RJ. The effect of altered auditory feedback on control of vocal production in budgerigars (Melopsittacus undulatus). J Acoust Soc Am. 2009;126:911–919. doi: 10.1121/1.3158928.

- 12. Lee BS. Effects of delayed speech feedback. J Acoust Soc Am. 1950;22:824–826.

- 13. Burnett TA, Freedland MB, Larson CR, Hain TC. Voice F0 responses to manipulations in pitch feedback. J Acoust Soc Am. 1998;103:3153–3161. doi: 10.1121/1.423073.

- 14. Yeni-Komshian G, Chase RA, Mobley RL. The development of auditory feedback monitoring. II. Delayed auditory feedback studies on the speech of children between two and three years of age. J Speech Hear Res. 1968;11:307–315. doi: 10.1044/jshr.1102.307.

- 15. Belmore NF, Kewley-Port D, Mobley RL, Goodman VE. The development of auditory feedback monitoring: Delayed auditory feedback studies on the vocalizations of children aged six to 19 months. J Speech Hear Res. 1973;16:709–720. doi: 10.1044/jshr.1604.709.

- 16. Houde JF, Jordan MI. Sensorimotor adaptation in speech production. Science. 1998;279:1213–1216. doi: 10.1126/science.279.5354.1213.

- 17. Purcell DW, Munhall KG. Adaptive control of vowel formant frequency: Evidence from real-time formant manipulation. J Acoust Soc Am. 2006;120:966–977. doi: 10.1121/1.2217714.

- 18. Villacorta VM, Perkell JS, Guenther FH. Sensorimotor adaptation to feedback perturbations of vowel acoustics and its relation to perception. J Acoust Soc Am. 2007;122:2306–2319. doi: 10.1121/1.2773966.

- 19. MacDonald EN, Goldberg R, Munhall KG. Compensations in response to real-time formant perturbations of different magnitudes. J Acoust Soc Am. 2010;127:1059–1068. doi: 10.1121/1.3278606.

- 20. Doupe AJ, Kuhl PK. Birdsong and human speech: Common themes and mechanisms. Annual Rev Neurosci. 1999;22:567–631. doi: 10.1146/annurev.neuro.22.1.567.

- 21. Swingley D. 11 month-olds’ knowledge of how familiar words sound. Developmental Science. 2005;8:432–443. doi: 10.1111/j.1467-7687.2005.00432.x.

- 22. de Boysson-Bardies B. How Language Comes to Children. Cambridge: MIT Press; 1999.

- 23. Stoel-Gammon C. Relationships between lexical and phonological development in young children. J Child Lang. 2011;38:1–34. doi: 10.1017/S0305000910000425.

- 24. Baldwin DA. Infant’s ability to consult the speaker for clues to word reference. J Child Lang. 1993;20:395–418. doi: 10.1017/s0305000900008345.

- 25. Yoshida KA, Fennell CT, Swingley D, Werker JF. Fourteen-month-old infants learn similar-sounding words. Developmental Science. 2009;12:412–418. doi: 10.1111/j.1467-7687.2008.00789.x.

- 26. Sakata JT, Brainard MS. Social context rapidly modulates the influence of auditory feedback on avian vocal motor control. J Neurophysiol. 2009;102:2485–2497. doi: 10.1152/jn.00340.2009.

- 27. Fitch WT, Giedd J. Morphology and development of the human vocal tract: A study using magnetic resonance imaging. J Acoust Soc Am. 1999;106:1511–1522. doi: 10.1121/1.427148.

- 28. Vorperian HK, Kent RD. Vowel acoustic space development in children: a synthesis of acoustic and anatomic data. J Speech Lang Hear Res. 2007;50:1510–1545. doi: 10.1044/1092-4388(2007/104).

- 29. Goldstein MH, Schwade JA. From birds to words: Perception of structure in social interaction guides vocal development and language learning. In: Blumberg MS, Freeman JH, Robinson SR, editors. The Oxford Handbook of Developmental and Comparative Neuroscience. Oxford: Oxford University Press; 2010. pp. 708–729.

- 30. Kuhl PK, Tsao FM, Liu HM. Foreign-language experience in infancy: Effects of short-term exposure and social interaction on phonetic learning. PNAS. 2003;100:9096–9101. doi: 10.1073/pnas.1532872100.

- 31. West MJ, King AP. Female visual displays affect the development of male song in the cowbird. Nature. 1988;334:244–246. doi: 10.1038/334244a0.

- 32. Kuhl PK, Meltzoff AN. Infant vocalizations in response to speech: Vocal imitation and developmental change. J Acoust Soc Am. 1996;100:2425–2438. doi: 10.1121/1.417951.

- 33. Papousek M, Papousek H. Forms and functions of vocal matching in interactions between mothers and their precanonical infants. First Language. 1989;9:137–158.

- 34. Goldstein MH, King AP, West MJ. Social interaction shapes babbling: Testing parallels between birdsong and speech. PNAS. 2003;100:8030–8035. doi: 10.1073/pnas.1332441100.

- 35. Stager CS, Werker JF. Infants listen for more phonetic detail in speech perception than in word-learning tasks. Nature. 1997;388:381–382. doi: 10.1038/41102.

- 36. Eliades SJ, Wang X. Sensory-motor interaction in the primate auditory cortex during self-initiated vocalizations. J Neurophysiol. 2003;89:2194–2207. doi: 10.1152/jn.00627.2002.

- 37. Eliades SJ, Wang X. Neural substrates of vocalization feedback monitoring in primate auditory cortex. Nature. 2008;453:1102–1106. doi: 10.1038/nature06910.

- 38. Zheng ZZ, Munhall KG, Johnsrude IS. Functional overlap between regions involved in speech perception and in monitoring one’s own voice during speech production. J Cogn Neurosci. 2010;22:1770–1781. doi: 10.1162/jocn.2009.21324.

- 39. Orfanidis SJ. Optimum Signal Processing, An Introduction. New York: MacMillan; 1988. p. 590.