Abstract

In this study, confirmatory factor analyses were used to examine the interrelationships among latent factors of the Simple View of reading comprehension (word recognition and language comprehension) and hypothesized additional factors (vocabulary and reading fluency) in a sample of 476 adult learners with low literacy levels. The results provided evidence for reliable distinctions between word recognition, fluency, language comprehension, and vocabulary skills as components of reading. Even so, the data did not support the hypothesis that the Simple View needs to be expanded to include vocabulary or fluency factors, as has been posited in a few prior studies of younger and more able readers. Rather, word recognition and language comprehension alone were found to account adequately for variation in reading comprehension in adults with low literacy.

Recent reports from the National Assessment of Adult Literacy (NAAL) and the Adult Literacy and Life Skills Survey (ALL) converge in indicating that approximately 11% of adults in the United States are non-literate or lack sufficient English language proficiency to be assessed, and that an additional 13–20% lack fundamental reading skills (Kutner, Greenberg, Jin, Boyle, Hsu, & Dunleavy, 2007; Statistics Canada & OECD, 2005). The latter group of adults, who may be termed “low-literate,” are able to read only simple texts and to draw only low-level inferences from them, and thus cannot handle more challenging reading material at a level sufficient for attaining most educational and occupational goals. Although the past few decades have seen a great deal of empirical and theoretical investigation of skilled reading and of children’s reading abilities and disabilities, research on adults with low literacy has been sparse. At present, therefore, it is not clear whether our current understanding of the bases for skill differences among children provides an appropriate framework for understanding adults with low literacy, whose reading levels fall roughly below those of seventh-grade students.

The starting point for most contemporary models of reading is the “Simple View” (Gough, 1972, 1996; Gough & Hillinger, 1980; Gough & Tunmer, 1986; Hoover & Gough, 1990; Tunmer & Hoover, 1992, 1993), which “makes two claims: first, that reading consists of word recognition and linguistic comprehension; and second, that each of these components is necessary for reading, neither being sufficient in itself” (Hoover & Tunmer, 1993, p. 3). Evidence that word recognition and language comprehension together account for large proportions of variance in reading comprehension in skilled readers as well as younger students, and that the relative contributions of these two components shift over development, has subsequently been obtained in numerous studies by other investigators (e.g., Carver, 1997, 2003; Catts, Adlof & Weismer, 2006; Curtis, 1980; Cutting & Scarborough, 2006; Francis, Fletcher, Catts & Tomblin, 2005; Goff, Pratt, & Ong, 2005; Joshi, Williams & Wood, 1998; Shankweiler, Lundquist, Katz, Stuebing, & Fletcher, 1999; Vellutino, Tunmer, Jaccard, & Chen, 2007).

Despite the accumulated evidence in support of the Simple View, concerns have been expressed that the model may be too simple (e.g., Joshi, 2005; Joshi & Aaron, 2000; Vellutino et al., 2007). In seeking to elaborate on the Simple View, researchers have most often questioned whether the model needs to be expanded to include a separate vocabulary component distinct from other language comprehension skills, or a separate processing speed/fluency component. In our sample, therefore, we investigated whether the addition of these hypothesized factors would provide a better account of reading comprehension differences among adults with low literacy.

Language comprehension: A unique role for vocabulary?

In investigations of the Simple View, the language comprehension component has usually been represented either by a listening comprehension task, which assesses how well the reader understands sentences and longer passages when they are presented orally rather than in written form (as on reading comprehension tests), or by a combination of oral language measures. In either case, vocabulary knowledge, as well as sentence- and text-processing capabilities, is required to do well on these tests. Vocabulary is well recognized to be associated with reading proficiency (Beck & McKeown, 1991; Cunningham & Stanovich, 1997; Daneman, 1988; Hirsch, 2003; Perfetti, 2007), with correlations between vocabulary and reading comprehension tests typically in the range of .6 to .7 (Anderson & Freebody, 1981). According to Perfetti’s lexical quality hypothesis (Perfetti, 2007; Perfetti & Hart, 2002) and related accounts (Braze, Tabor, Shankweiler, & Mencl, 2007; Joshi, 2005), vocabulary plays a unique role in processing text for meaning.

When a distinction has been made between vocabulary and non-lexical facets of language comprehension in some recent studies, evidence has been found that this strengthens the prediction of reading comprehension in young adults (Braze et al., 2007) and for some reading comprehension tests in children (Cutting & Scarborough, 2006). Although the samples in those studies included mainly normally achieving readers, we found their results to be interesting, and sought to examine their applicability to adults with low literacy.

Fluency: A necessary additional component?

The speed with which one can read words and connected text may, on the one hand, be another indication (besides accuracy) of one’s proficiency in decoding and word recognition. On the other hand, it has been hypothesized that text reading fluency may be a third key component in models of the reading process, one that includes not only automaticity and efficiency at the lexical level, but also a coordination, synchronization, and integration function across lexical, sentential, and even comprehension levels (Berninger, Abbott, Billingsley & Nagy, 2001; Breznitz, 2006; Kame’enui & Simmons, 2001; Fuchs, Fuchs, Hosp, & Jenkins, 2001; Samuels, 2006). Evidence on this issue has been mixed, with results depending on how word and text fluency were measured, on the analytic methods, and on the ages and reading abilities of the samples, which have included elementary school children (Adlof, Catts, & Little, 2006; Buly & Valencia, 2002; Carver & David, 2001; Fuchs et al., 2001; Jenkins, Fuchs, van den Broek, Espin, & Deno, 2003; Joshi & Aaron, 2000; Tannenbaum, Torgesen, & Wagner, 2006; Torgesen, Rashotte & Alexander, 2001; Wayman, Wallace, Wiley, Ticha, & Espin, 2007; Wolf & Katzir-Cohen, 2001), skilled adult readers (Carver, 2003), and adults with low literacy (Sabatini, 2002, 2003).

Goals of the Study

In this study, we examined the interrelationships among the Simple View components and hypothesized additional factors, and their relationship to reading comprehension. In doing so, we aimed to address the following questions with regard to adults with low literacy.

How well does the Simple View model account for variation in reading comprehension?

Can oral vocabulary be subsumed within the language comprehension component, or is it a distinct component on its own? If it is distinct, is it significantly related to reading comprehension independently from word recognition and language comprehension?

Do text-reading fluency and word-reading speed form a unitary processing speed factor or separate latent factors? Alone or in combination, are they significantly related to reading comprehension independently from word recognition and language comprehension?

Method

Participants

Adults who sought assistance from literacy programs in the mid-Atlantic and southern regions of the U.S. were recruited for participation in the study. All who scored below the seventh grade level on a screening test of sight word recognition (see below and Table 1) were included in the analyses for this study unless they lacked intermediate level English proficiency or had severe sensory, cognitive, medical, or psychiatric problems that would markedly interfere with assessment. From the 515 adults who met these criteria, 39 were excluded because they were multivariate outliers based on the critical Mahalanobis distance value of 34.53 (df = 13, p < .001), univariate outliers on one or more of the measures (more than 3.0 standard deviations from the sample mean), or both.
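The exclusion rule described above can be sketched in a few lines. The example below derives the critical value from the chi-square distribution (df = 13, p = .001) and flags multivariate and univariate outliers. The data are synthetic, and the function and variable names are hypothetical. It is an illustration of the rule, not the authors' SPSS procedure.

```python
import numpy as np
from scipy.stats import chi2


def mahalanobis_sq(X):
    """Squared Mahalanobis distance of each row from the sample centroid."""
    X = np.asarray(X, dtype=float)
    centered = X - X.mean(axis=0)
    cov_inv = np.linalg.inv(np.cov(X, rowvar=False))
    return np.einsum("ij,jk,ik->i", centered, cov_inv, centered)


# Critical value for 13 measures at p < .001 (reported as 34.53 in the text).
critical = chi2.ppf(1 - 0.001, df=13)

# Synthetic stand-in for the 515 x 13 score matrix (assumption: not real data).
rng = np.random.default_rng(0)
scores = rng.normal(size=(515, 13))
scores[0] += 6.0  # plant one extreme case so the rule has something to flag

d2 = mahalanobis_sq(scores)
multivariate_outlier = d2 > critical

# Univariate rule: more than 3.0 SDs from the sample mean on any measure.
z = (scores - scores.mean(axis=0)) / scores.std(axis=0, ddof=1)
univariate_outlier = (np.abs(z) > 3.0).any(axis=1)

excluded = multivariate_outlier | univariate_outlier
print(f"critical value = {critical:.2f}, cases flagged = {excluded.sum()}")
```

Cases flagged by either rule are dropped, mirroring the "or both" wording of the exclusion criterion.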

Table 1.

Performance by the Sample (N = 476) on Measures of Reading and Language Skills. Descriptive statistics are for raw scores or, if available, Rasch-scaled W scores. For standardized tests, grade equivalents (GE) corresponding to the means are also shown.

| Abbreviation | Measure [# items] | Score | M (SD) | GE |
|---|---|---|---|---|
| Reading Comprehension | | | | |
| PCMP | WJ Passage Comprehension [47] | W | 487 (13) | 2.9 |
| Word Recognition/Decoding Accuracy (Untimed List Reading) | | | | |
| Decoding of Pseudowords | | | | |
| WJWA | WJ Word Attack [32] | W | 466 (26) | 1.8 |
| Recognition of Real Words | | | | |
| WJWID | WJ Letter Word Identification [76] | W | 483 (23) | 3.4 |
| WRAT | WRAT Reading* [57] | raw | 31 (4) | 3.0 |
| Reading Speed/Fluency | | | | |
| Lists of Words/Pseudowords (# correct within 45 sec) | | | | |
| TWSW | TOWRE Sight Word Efficiency [104] | raw | 58 (14) | 3.8 |
| TWPD | TOWRE Phonemic Decoding Eff. [63] | raw | 11 (9) | 1.8 |
| Rate for Reading of Connected Text for Meaning | | | | |
| WJFLU | WJ Reading Fluency (sentences) [98] | W | 490 (25) | 2.5 |
| NBRS | NAAL BRS Oral Reading (texts) [n/a] | wcpm | 95 (38) | n/a |
| Oral Language Skills | | | | |
| Language Comprehension | | | | |
| WJOC | WJ Oral Comprehension (text) [34] | W | 501 (16) | 4.5 |
| WJUD | WJ Understanding Directions [57] | W | 489 (10) | 3.7 |
| WJSR | WJ Story Recall [79] | W | 499 (5) | 4.2 |
| Vocabulary (Picture Naming) | | | | |
| WJPV | WJ Picture Vocabulary [44] | W | 499 (14) | 4.3 |
| BNT | Boston Naming Test [60] | raw | 37 (9) | n/a |

*This measure was used to screen participants for eligibility.

Note: WJ = Woodcock-Johnson; WRAT = Wide Range Achievement Test; TOWRE = Test of Word Reading Efficiency; NBRS = Basic Reading Skills oral passage reading measure from the National Assessment of Adult Literacy; wcpm = words correct per minute.

The remaining sample of 476 adults was 66% female and 83% African American, with ages ranging from 16 to 76 years (M = 36.9; SD = 13.7). According to their responses in a structured interview regarding their educational backgrounds, adults in the sample had completed 1 to 12 years of schooling (M = 9.4, SD = 2.0); 8% were non-native speakers; and 29% had received special help with reading during their school years. The large proportion of African American participants is consistent with populations served in literacy programs in many urban centers, in which there is a larger representation of this group among adults with low literacy. Generalizability to other groups and settings is consequently uncertain.

Measures

The 13 measures that were used to assess reading and language skills are listed and briefly described in Table 1. To determine initial word recognition levels and to screen for eligibility, the Reading subtest of the Wide Range Achievement Test – 3 (WRAT; Wilkinson, 1993) was used. Adults with word reading skills at the seventh grade level or below were eligible for the study. From the Woodcock-Johnson III Tests of Achievement (WJ; Woodcock, McGrew, & Mather, 2001), we administered the Reading cluster (Letter-Word Identification [WJWID], Word Attack [WJWA], Passage Comprehension [PCMP], and Reading Fluency [WJFLU] subtests) and the Oral Language cluster (Story Recall [WJSR], Understanding Directions [WJUD], Picture Vocabulary [WJPV], and Oral Comprehension [WJOC] subtests). The Boston Naming Test (BNT; Goodglass & Kaplan, 1983), an expressive vocabulary measure, was also administered. From the Test of Word Reading Efficiency (TOWRE; Torgesen, Wagner, & Rashotte, 1999), subtests requiring the speeded reading of real English words (Sight Word Efficiency: TWSW) and of pseudowords (Phonemic Decoding Efficiency: TWPD) were administered.

Participants also read four short passages from the National Assessment of Adult Literacy (NAAL) Basic Reading Skills survey. The NAAL Basic Reading Skills (NBRS) score was computed by averaging the number of words read correctly per minute across the four passages. This test was administered nationally as part of the NAAL, and permission to use it was granted by the National Center for Education Statistics.

With regard to the other measures in the battery, the difficulty of the items increased gradually on all tests except for Woodcock-Johnson Reading Fluency, on which the difficulty of the sentences remains similar throughout the test. Published manuals for all tests indicate that they have adequate reliability and validity for children with reading levels that are similar to those of adults with low literacy. It bears noting, however, that the standardization samples, though including a representative sample of adults, included very few unskilled adult readers. The validity of computing standardized scores for our population is thus highly questionable, and the use of age- or grade-adjusted scores is not essential for addressing the questions of interest. In our analyses, therefore, we used the publisher’s Rasch-scaled W-scores for Woodcock-Johnson subtests, and raw scores for the other tests.

Results

Preliminary Analyses

SPSS Version 15.0 and EQS Version 6.1 (Bentler, 1985–2006) were employed for all analyses. We first examined score distributions for the 13 measures for outliers, univariate and multivariate normality, and bivariate linearity. No curvilinearity was seen for any pairwise relationships between variables. However, distributions for all but four of the measures (WRAT, WJWID, TWSW, and NBRS) were significantly skewed. Likewise, the normalized Mardia’s multivariate kurtosis value of 7.45 suggested that the multivariate score distribution was non-normal. The absence of curvilinear relationships among the variables satisfied the requirement for employing linear factor analysis models, while the non-normality of the data suggested the need to employ appropriate adjustments to the evaluation of model fit, as described below.
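For readers unfamiliar with the normalized Mardia statistic mentioned above, the following is a minimal sketch of one standard way to compute it. It assumes the usual large-sample form, in which the multivariate kurtosis is compared with its expected value p(p + 2) under normality. EQS may apply a slightly different finite-sample formula, and the data here are synthetic.

```python
import numpy as np


def mardia_kurtosis(X):
    """Return Mardia's multivariate kurtosis b2p and its normalized estimate."""
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    centered = X - X.mean(axis=0)
    S = centered.T @ centered / n  # biased (maximum likelihood) covariance
    d2 = np.einsum("ij,jk,ik->i", centered, np.linalg.inv(S), centered)
    b2p = np.mean(d2 ** 2)
    # Under multivariate normality b2p is approximately
    # Normal(p(p + 2), 8p(p + 2) / n), so z is roughly standard normal.
    z = (b2p - p * (p + 2)) / np.sqrt(8.0 * p * (p + 2) / n)
    return b2p, z


rng = np.random.default_rng(0)
normal_scores = rng.normal(size=(476, 13))         # synthetic, normal
heavy_scores = rng.standard_t(3, size=(476, 13))   # synthetic, heavy-tailed

_, z_normal = mardia_kurtosis(normal_scores)
_, z_heavy = mardia_kurtosis(heavy_scores)
print(f"normal data: z = {z_normal:.2f}; heavy-tailed data: z = {z_heavy:.2f}")
```

Large positive values of the normalized estimate, as with the heavy-tailed sample here, are the kind of result that motivates a robust correction such as the Satorra-Bentler scaled statistic.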

Reading and Language Skills in the Sample

Table 1 provides a summary of test performance by the sample of adults with low literacy. Basic word-reading skills ranged from about the first through seventh grade levels, and most other reading scores were commensurate with their word-reading levels. However, it bears noting that their phonemic decoding skill levels on pseudoword items (WJWA and TWPD) were somewhat lower than when the task required recognition of real words (WJWID, TWSW), as has been observed previously in the literature on adult learners (e.g., Davidson & Strucker, 2002; Greenberg, Ehri, & Perin, 1997, 2002; Pratt & Brady, 1988; Read & Ruyter, 1985).

Slightly higher grade levels were shown for language comprehension, as measured by tests of Oral Comprehension (WJOC), Story Recall (WJSR), and Understanding Directions (WJUD). The sample’s vocabulary knowledge, as demonstrated on the WJ Picture Vocabulary test (WJPV), was similar in level to language comprehension.

Relationships Among Skills

Correlations among the measures are shown in Table 2. As expected, Passage Comprehension (PCMP) was significantly associated with all other measures, although somewhat less strongly (r = .26 – .36) with decoding scores (WJWA and TWPD) and story recall (WJSR) than with word recognition (WRAT, WJWID, TWSW), reading fluency (WJFLU, NBRS), language comprehension (WJUD, WJOC), and vocabulary (WJPV, BNT) (.43 – .57). There were also fairly strong relations among the word recognition and decoding measures (.62–.84). The two text-reading fluency measures (WJFLU, NBRS) were highly correlated with each other (.79) and with the speeded TOWRE Sight Word scores (TWSW) (.76 and .87, respectively), and moderately with speeded TOWRE Phonemic decoding scores (TWPD) (.41 and .58 respectively).

Table 2.

Correlation of Test Scores with Each Other

| Measure | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1. PCMP | -- | | | | | | | | | | | | |
| 2. WJWA | .35 | -- | | | | | | | | | | | |
| 3. WJWID | .57 | .62 | -- | | | | | | | | | | |
| 4. WRAT | .47 | .63 | .84 | -- | | | | | | | | | |
| 5. TWSW | .49 | .56 | .80 | .71 | -- | | | | | | | | |
| 6. TWPD | .26 | .67 | .65 | .63 | .60 | -- | | | | | | | |
| 7. WJFLU | .54 | .45 | .65 | .56 | .76 | .41 | -- | | | | | | |
| 8. NBRS | .47 | .51 | .77 | .68 | .87 | .58 | .79 | -- | | | | | |
| 9. WJOC | .52 | .00 | .10 | .07 | .07 | −.15 | .25 | .09 | -- | | | | |
| 10. WJUD | .46 | .09 | .12 | .09 | .15 | −.05 | .32 | .14 | .59 | -- | | | |
| 11. WJSR | .36 | .00 | .05 | .02 | .06 | −.10 | .20 | .07 | .58 | .58 | -- | | |
| 12. WJPV | .43 | .04 | .15 | .11 | .12 | −.04 | .26 | .13 | .65 | .46 | .44 | -- | |
| 13. BNT | .50 | .13 | .18 | .15 | .13 | .01 | .28 | .14 | .69 | .48 | .47 | .80 | -- |

N = 476

Note. For all coefficients except those in italics, p < .001, two-tailed. PCMP = WJ Passage Comprehension; WJWA = WJ Word Attack; WJWID = WJ Letter Word Identification; WRAT = Wide Range Achievement Test – Reading; TWSW = TOWRE Sight Word Efficiency; TWPD = TOWRE Phonemic Decoding Efficiency; WJFLU = WJ Reading Fluency; NBRS = NAAL Basic Reading Skills oral passage reading fluency; WJOC = WJ Oral Comprehension; WJUD = WJ Understanding Directions; WJSR = WJ Story Recall; WJPV = WJ Picture Vocabulary; BNT = Boston Naming Test.

The three language comprehension scores (WJOC, WJUD, WJSR) correlated moderately well with one another (.58–.59), and with the two vocabulary measures (WJPV, BNT) (.44–.69), which were closely associated (.80). However, these five spoken language measures correlated weakly with NBRS text reading rate (.07–.14) and were unrelated to word recognition and decoding skills.
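The ranges quoted in this paragraph can be checked directly against Table 2. The sketch below enters the lower triangle of the correlation matrix as reported and recomputes the ranges. The helper names (`r`, `corr_range`) are hypothetical conveniences, not part of the original analysis.

```python
NAMES = ["PCMP", "WJWA", "WJWID", "WRAT", "TWSW", "TWPD", "WJFLU",
         "NBRS", "WJOC", "WJUD", "WJSR", "WJPV", "BNT"]

# Lower triangle of Table 2; row i holds correlations with measures 0..i-1.
LOWER = [
    [],
    [0.35],
    [0.57, 0.62],
    [0.47, 0.63, 0.84],
    [0.49, 0.56, 0.80, 0.71],
    [0.26, 0.67, 0.65, 0.63, 0.60],
    [0.54, 0.45, 0.65, 0.56, 0.76, 0.41],
    [0.47, 0.51, 0.77, 0.68, 0.87, 0.58, 0.79],
    [0.52, 0.00, 0.10, 0.07, 0.07, -0.15, 0.25, 0.09],
    [0.46, 0.09, 0.12, 0.09, 0.15, -0.05, 0.32, 0.14, 0.59],
    [0.36, 0.00, 0.05, 0.02, 0.06, -0.10, 0.20, 0.07, 0.58, 0.58],
    [0.43, 0.04, 0.15, 0.11, 0.12, -0.04, 0.26, 0.13, 0.65, 0.46, 0.44],
    [0.50, 0.13, 0.18, 0.15, 0.13, 0.01, 0.28, 0.14, 0.69, 0.48, 0.47, 0.80],
]


def r(a, b):
    """Look up the correlation between two measures by abbreviation."""
    i, j = NAMES.index(a), NAMES.index(b)
    if i == j:
        return 1.0
    if i < j:
        i, j = j, i
    return LOWER[i][j]


def corr_range(group_a, group_b):
    """Smallest and largest correlation between distinct measures of two groups."""
    values = [r(a, b) for a in group_a for b in group_b if a != b]
    return min(values), max(values)


language = ["WJOC", "WJUD", "WJSR"]
vocabulary = ["WJPV", "BNT"]
untimed_and_list = ["WJWA", "WJWID", "WRAT", "TWSW", "TWPD"]

print("language x language:", corr_range(language, language))
print("language x vocabulary:", corr_range(language, vocabulary))
print("spoken x NBRS:", corr_range(language + vocabulary, ["NBRS"]))
print("spoken x word reading:", corr_range(language + vocabulary, untimed_and_list))
```

The last line shows that correlations between the spoken language measures and the word recognition and decoding measures fall between −.15 and .18 in Table 2.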

Hypothesis Testing: Confirmatory Factor Analyses

To address the questions of interest, a series of confirmatory factor analyses (CFA) was conducted. We chose CFA, in part, because this method enabled us to estimate the interrelationships among latent constructs defined by multiple indicators within a single analysis. The use of multiple indicators is an improvement over representing a construct by a single variable. First, latent factors can provide better representation of the construct (Pedhazur, 1997). Second, the use of latent factors allows estimation of model parameters adjusted for measurement error (Byrne, 1995). Maruyama (1998) recommends structural equation models when researchers want to know not only how well predictors explain a criterion variable, but also which specific latent constructs are most important in predicting it.

Each CFA model was designed both (1) to test the fit of models that represent different relationships between observed variables and latent factors, and (2) to see how well those latent factors predicted reading comprehension. The general strategy was to compare the relative goodness of fit of competing CFA models that specified alternative underlying factor structures. The total proportion of variance in reading comprehension that was accounted for by a model, and the intercorrelations of its factors, were also examined. These procedures allowed us to evaluate the merits of our theoretical contrasts, and to examine what was gained or lost in parsimony. Finally, we examined which factors predicted reading comprehension. All CFA models tested in this study were based on the covariance matrix for the 13 measures, including WJ Passage Comprehension (PCMP), the single criterion measure for reading comprehension in the analysis. For all CFAs, maximum likelihood was used for model parameter estimation. The factor variances were set to 1.0 for scaling the factors, while all the path coefficients, which indicate the regression of the variables on the associated latent factors (factor loadings), were estimated freely. Due to the multivariate non-normality of the data, the Satorra-Bentler scaled chi-square statistic (Satorra, 1990), which offers an adjustment for non-normality, was used for evaluation of model fit. The appropriateness of each CFA model was evaluated by using multiple criteria: substantive interpretability of the patterns observed in the model parameter estimates, model parsimony, and overall goodness of fit to data. 
For evaluating the adequacy of the overall goodness of model fit, the following indices were obtained from the EQS output for each tested model: (1) the ratio of the Satorra-Bentler chi-square to the model degrees of freedom (χ2 S-B/df) for which a value of 3.0 or lower is considered to represent an adequate fit (Kline, 1998); (2) the consistent version of Akaike Information Criterion (CAIC), for which lower values indicate better-fitting models taking complexity of the model into account (Byrne, 1995); (3) the Non-Normed Fit Index (NNFI), for which values of .90 or higher indicate an adequate improvement over a model that specifies no latent factor, taking account of model complexity (Raykov & Marcoulides, 2000); (4) the Comparative Fit Index (CFI), for which values of .90 or higher indicate adequate overall improvement of model fit over an independent model that hypothesizes that all observed variables are uncorrelated; and (5) the Root Mean Square Error of Approximation (RMSEA), for which values of .08 or lower indicate an adequate fit (Browne & Cudeck, 1993).

In addition, the magnitudes of inter-factor correlations were examined to determine the extent to which latent factors were psychometrically distinct from one another (Bagozzi & Yi, 1992). For that purpose, a value of .90 or higher was considered to be an indication of lack of distinctness. Finally, for evaluating the relative goodness of fit of a pair of nested models, Satorra and Bentler’s (2001) procedure for conducting chi-square difference tests based on the Satorra-Bentler scaled chi-square statistic was followed. When different CFA models are based on the same set of variables, a model that contains a larger number of freely estimated model parameters is a less restrictive model, improving the likelihood of observing better model fit. Chi-square difference tests were thus used to test whether the more restrictive models differed significantly from those with more free parameters.
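The scaled difference test can be illustrated with a short sketch of the Satorra and Bentler (2001) formula. The article does not report the uncorrected maximum likelihood chi-squares or the scaling correction factors, so every numeric input below is hypothetical and the function name is an assumption made for illustration.

```python
def sb_scaled_difference(t0_ml, df0, c0, t1_ml, df1, c1):
    """Satorra-Bentler (2001) scaled chi-square difference.

    Model 0 is the more restrictive (nested) model; model 1 is the less
    restrictive one. t*_ml are uncorrected ML chi-squares and c* are the
    scaling correction factors (ML chi-square / S-B scaled chi-square).
    Returns the scaled difference statistic and its degrees of freedom.
    """
    df_diff = df0 - df1
    # Scaling factor for the difference test.
    cd = (df0 * c0 - df1 * c1) / df_diff
    return (t0_ml - t1_ml) / cd, df_diff


# Hypothetical inputs, chosen only to show the mechanics.
stat, df_diff = sb_scaled_difference(
    t0_ml=200.0, df0=25, c0=1.10,
    t1_ml=80.0, df1=22, c1=1.08,
)
print(f"scaled difference = {stat:.2f} on {df_diff} df")
```

The resulting statistic is referred to a chi-square distribution with `df_diff` degrees of freedom. When both correction factors equal 1.0, the procedure reduces to the ordinary chi-square difference test.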

Models Tested With CFA Analyses

Seven CFA models were compared, each with reading comprehension as the criterion measure. Table 3 provides a comparison of the factor structures of the models and the values of key goodness-of-fit indices for each model. Standardized model parameter estimates, total R2 values, and intercorrelations among factors are shown in the Appendix.

Table 3.

Factor Structures and Key Fit Indices for Tested Models, All with Passage Comprehension (PCMP) as the Dependent Measure

| Model | Factor 1 | Factor 2 | Factor 3 | Factor 4 | Factor 5 | df | χ2 | χ2/df | CAIC | NNFI | CFI | RMSEA |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Simple View: Word Recognition + Language Comprehension | | | | | | | | | | | | |
| 1 | WRAT, WJWID, WJWA | WJOC, WJUD, WJSR | | | | 12 | 37.28 | 3.1 | -48.7 | .966 | .980 | .067 |
| Vocabulary Added: (a) to Language Comprehension; (b) as Separate Factor | | | | | | | | | | | | |
| 2(a) | WRAT, WJWID, WJWA | WJOC, WJUD, WJSR, WJPV, BNT | | | | 25 | 184.24 | 7.4 | 5.2 | .871 | .910 | .116 |
| 2(b) | WRAT, WJWID, WJWA | WJOC, WJUD, WJSR | WJPV, BNT | | | 22 | 72.58 | 3.3 | -85.0 | .953 | .971 | .070 |
| Fluency Added: (a) to Word Recognition; (b) as Factor; (c) Text Fluency only; (d) as 2 Factors | | | | | | | | | | | | |
| 3(a) | WRAT, WJWID, WJWA, WJFLU, NBRS, TWSW, TWPD | WJOC, WJUD, WJSR | WJPV, BNT | | | 60 | 556.81 | 9.3 | 126.9 | .831 | .870 | .132 |
| 3(b) | WRAT, WJWID, WJWA | WJOC, WJUD, WJSR | WJPV, BNT | WJFLU, NBRS, TWSW, TWPD | | 56 | 366.82 | 6.6 | -34.2 | .887 | .919 | .108 |
| 3(c) | WRAT, WJWID, WJWA, TWSW, TWPD | WJOC, WJUD, WJSR | WJPV, BNT | WJFLU, NBRS | | 56 | 462.98 | 8.3 | 62.0 | .852 | .894 | .124 |
| 3(d) | WRAT, WJWID, WJWA | WJOC, WJUD, WJSR | WJPV, BNT | WJFLU, NBRS | TWSW, TWPD | 51 | 313.17 | 6.1 | -52.3 | .895 | .931 | .104 |

WRAT = WRAT Reading; WJWID = WJ Letter Word Identification; WJWA = WJ Word Attack; WJOC = WJ Oral Comprehension; WJUD = WJ Understanding Directions; WJSR = WJ Story Recall; WJPV = WJ Picture Vocabulary; BNT = Boston Naming Test; WJFLU = WJ Reading Fluency; NBRS = NAAL Basic Reading Skills; TWSW = TOWRE Sight Word Efficiency; TWPD = TOWRE Phonemic Decoding Efficiency; df = model degrees of freedom; χ2 = Satorra-Bentler scaled chi-square; χ2/df = Satorra-Bentler scaled chi-square/model degrees of freedom; CAIC = the consistent version of Akaike Information Criterion; NNFI = Non-Normed Fit Index; CFI = Comparative Fit Index; RMSEA = Root Mean Square Error of Approximation.
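Two of the fit indices in Table 3 can be recomputed from the reported chi-square, degrees of freedom, and sample size. The sketch below assumes the common RMSEA formula that uses N − 1 in the denominator. That formula is an assumption about how the values were produced, although it does reproduce the tabled values to three decimals.

```python
import math

N = 476  # sample size

# (df, Satorra-Bentler scaled chi-square) as reported in Table 3.
MODELS = {
    "1": (12, 37.28),
    "2(a)": (25, 184.24),
    "2(b)": (22, 72.58),
    "3(a)": (60, 556.81),
    "3(b)": (56, 366.82),
    "3(c)": (56, 462.98),
    "3(d)": (51, 313.17),
}


def chi2_df_ratio(chi2, df):
    """Ratio of chi-square to degrees of freedom (3.0 or lower = adequate)."""
    return chi2 / df


def rmsea(chi2, df, n):
    """Root Mean Square Error of Approximation (.08 or lower = adequate)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


for name, (df, chi2) in MODELS.items():
    ratio = chi2_df_ratio(chi2, df)
    value = rmsea(chi2, df, N)
    verdict = "adequate" if value <= 0.08 else "not adequate"
    print(f"Model {name}: chi2/df = {ratio:.1f}, RMSEA = {value:.3f} ({verdict})")
```

Only Models 1 and 2(b) fall at or below the .08 RMSEA criterion, which matches the pattern in the table.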

Model 1

To address the first research question, we tested a model that included just two latent factors: Word Recognition (based on the three untimed word recognition and decoding scores) and Language Comprehension (based on WJ Oral Comprehension, Understanding Directions, and Story Recall scores). This model represents the Simple View, in which these two factors are each necessary but not sufficient to account for variation in reading comprehension.

The fit of Model 1 to the data was supported by the NNFI, CFI and RMSEA indices, all of which met the pre-determined criteria for an acceptable model fit (Table 3). The loadings for all variables associated with the Word Recognition and Language Comprehension factors were substantial (> .50). Reading Comprehension loaded on both factors at about the same substantial level (.52 and .54 respectively), and together the two factors accounted for 64.2% of the variance in Passage Comprehension scores. The correlation between the Word Recognition and Language Comprehension factors was statistically significant but very low (r = .12).
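The link between the two standardized coefficients, the factor correlation, and the explained variance can be made explicit. For a variable predicted by two correlated standardized factors, the explained variance is b1² + b2² + 2·b1·b2·r. The sketch below applies this to the rounded values quoted above. The function name is hypothetical, and the inputs are the two-decimal values from the text rather than the exact estimates in the Appendix.

```python
def explained_variance(b1, b2, factor_corr):
    """R-squared for a variable predicted by two correlated standardized factors."""
    return b1 ** 2 + b2 ** 2 + 2.0 * b1 * b2 * factor_corr


# Rounded values quoted in the text for Model 1.
b_word_recognition = 0.52
b_language_comprehension = 0.54
factor_correlation = 0.12

r_squared = explained_variance(
    b_word_recognition, b_language_comprehension, factor_correlation
)
print(f"R-squared from rounded coefficients = {r_squared:.3f}")
```

The result is close to, but slightly below, the reported 64.2%. A gap of this size is what one would expect when coefficients are rounded to two decimals before squaring, although the exact estimates would be needed to confirm this.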

Models 2(a) and 2(b)

To address Question 2, we tested two models that expand the Simple View by adding vocabulary scores in different ways. Like Model 1, Model 2(a) specified two correlated latent factors, but the Language Comprehension factor was based on vocabulary scores in addition to the other language measures, rather than just the latter. This model is consistent with the notion that vocabulary skills are not distinct from other language skills. In contrast, Model 2(b) represents the alternative hypothesis that vocabulary may be better viewed as a separate factor in relation to reading comprehension. This model thus specifies three correlated latent factors: Word Recognition, Language Comprehension, and Vocabulary. As noted above, Model 2(b) contains a larger number of freely estimated model parameters, so it is a less restrictive model, improving the likelihood of observing better model fit. However, Model 2(a) is a more parsimonious model, so if it were to fit almost as well as 2(b), it would be preferred.

Which of the two models provided a better fit to the data? In terms of the overall goodness of fit (Table 3), Model 2(b) was supported by the NNFI, CFI and RMSEA indices, all of which met the pre-determined criteria for adequacy. The overall fit of Model 2(a) based on these indices was considerably poorer, in that CFI was the only index that met the criteria. The CAIC values were considerably lower for Model 2(b) than for Model 2(a). Moreover, a chi-square difference test indicated a significant difference between these two models (χ2 S-B difference = 100.6; df = 3, p < .001). As shown in the Appendix, path coefficients were appropriate for both models. The correlation between the Language and Vocabulary factors in Model 2(b) was high (.82), although still below the rule-of-thumb value of .90 used for evaluating the distinctiveness of a pair of factors.

Therefore, Model 2(b) appears to be the better representation of the factor structure on the basis of the better overall fit indices, the significantly better fit than for Model 2(a), and the high but still acceptable inter-factor correlation between the Language and Vocabulary factors. The results thus indicate that vocabulary knowledge is psychometrically distinct from both word recognition and language comprehension skills, even though quite highly correlated with the latter. In designing additional models, therefore, we retained the distinct Vocabulary factor in each.

Models 3(a), 3(b), 3(c), and 3(d)

To address Question 3, we expanded on Model 2(b) by constructing four models to explore how reading speed/fluency might fit into the picture. Model 3(a) included the same three correlated latent factors as Model 2(b), but the Word Recognition factor was based on the four speeded measures in addition to the untimed measures of word recognition and pseudoword decoding. This model represents the hypothesis that reading speed (both the efficiency of list reading and the fluency of text reading) is just another demonstration of the individual’s skill in word recognition and decoding.

Model 3(b), in contrast, added Fluency as a fourth factor, derived from the four speeded measures. This model reflects the view that processing speed is a distinct component of reading skill. Model 3(c) is similar to 3(b), but the Fluency factor is based only on the text-reading fluency measures (WJFLU and NBRS) whereas word/pseudoword-reading speed measures are instead included in the Word Recognition factor. Finally, Model 3(d) also resembles 3(b), but splits Fluency into two separate factors: Fluency (of text reading) and Efficiency (of word/pseudoword reading).
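The degrees of freedom for each model follow from counting unique variances and covariances against free parameters. The sketch below assumes that factor variances are fixed at 1.0 (as stated in the text), that each of the 12 predictor measures loads on exactly one factor, that Passage Comprehension has a free path from every factor, and that there are no correlated residuals. These specification details are assumptions, although the resulting counts match the df column of Table 3.

```python
def cfa_df(n_observed, n_indicator_loadings, n_factors):
    """Degrees of freedom for a CFA with factor variances fixed at 1.0."""
    # Unique variances and covariances among the observed variables.
    moments = n_observed * (n_observed + 1) // 2
    # Assumption: the criterion (PCMP) has one free path per factor.
    criterion_paths = n_factors
    unique_variances = n_observed
    factor_correlations = n_factors * (n_factors - 1) // 2
    free_parameters = (n_indicator_loadings + criterion_paths
                       + unique_variances + factor_correlations)
    return moments - free_parameters


# (observed variables incl. PCMP, indicator loadings, latent factors)
SPECS = {
    "1": (7, 6, 2),
    "2(a)": (9, 8, 2),
    "2(b)": (9, 8, 3),
    "3(a)": (13, 12, 3),
    "3(b)": (13, 12, 4),
    "3(c)": (13, 12, 4),
    "3(d)": (13, 12, 5),
}

model_df = {name: cfa_df(*spec) for name, spec in SPECS.items()}
for name, df in model_df.items():
    print(f"Model {name}: df = {df}")
```

Each added factor costs one extra path to the criterion plus one correlation with every existing factor, which is why Model 3(d) has the fewest degrees of freedom and is the least restrictive of the fluency models.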

The χ2 S-B/df and RMSEA indices of model fit were unsatisfactory for Model 3(d), although the values of CFI and NNFI were at about the level expected for a good-fitting model (Table 3). These results suggest that the fit of this model was only marginally acceptable. Furthermore, there were high correlations among factors (see Appendix), with Efficiency strongly related to both Word Recognition (.95) and Fluency (.998) in this model. Because this suggests that these pairs of factors are not psychometrically distinguishable from each other in Model 3(d), this model was deemed not to be a parsimonious representation of the underlying factor structure among the 13 variables. Model 3(d), being the least restrictive of the models, has a bias towards a better fit than the more restrictive prior models. However, with a goal of parsimony, an alternative model that still showed a reasonable fit to the data would still be judged to better represent the underlying factor structure.

Although all inter-factor correlations were below .90 in the other three models (see Appendix), the Word Recognition and Fluency factors in Models 3(b) and 3(c) were quite strongly associated with each other (.88 and .89, respectively). Fit indices (Table 3) for Model 3(b) were fairly comparable to those for Model 3(d). For Models 3(a) and 3(c), however, χ2 S-B/df and RMSEA were considerably higher, NNFI and CFI were lower, and CAIC values were higher, suggesting a poorer fit than for Models 3(d) and 3(b).

The evidence for the adequacy of these models was thus somewhat mixed, and none proved to be fully satisfactory with regard to the fit indices. Relatively speaking, however, Model 3(b) appears to provide the best representation of the underlying factor structure. The fit of this model is only marginally acceptable and is significantly poorer than that of the less restrictive Model 3(d). Nevertheless, because Model 3(b) lacks the extremely high inter-factor correlations seen in Model 3(d), it is preferred on grounds of parsimony. For the foregoing reasons, Model 3(b) was deemed to be the best model among those tested, indicating that speed and fluency are most appropriately represented by a single distinct factor. In this model, Vocabulary is a stand-alone factor that is highly correlated with the Language Comprehension factor (.82) but not with the Word Recognition (.19) or Fluency (.17) factors. Word/pseudoword-reading efficiency and text-reading fluency are not separate factors in the model, but instead operate together as a single Fluency factor. This Fluency factor is distinct from, but highly correlated with (.88), the Word Recognition factor, and is only weakly related to the Language Comprehension factor (.14).
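The screening of inter-factor correlations described above can be illustrated with a short sketch. It uses the correlations reported in the Appendix for Models 3(d) and 3(b). The .90 cutoff is an assumption taken from the text's remark that correlations in the other models were "below .90"; the function and variable names are hypothetical and are not part of the original analysis, which was run in EQS.

```python
# Inter-factor correlations copied from the Appendix tables.
MODEL_3D = {
    ("Language", "Word Recognition"): 0.114,
    ("Vocabulary", "Word Recognition"): 0.189,
    ("Vocabulary", "Language"): 0.823,
    ("Fluency", "Word Recognition"): 0.834,
    ("Fluency", "Language"): 0.171,
    ("Fluency", "Vocabulary"): 0.199,
    ("Efficiency", "Word Recognition"): 0.945,
    ("Efficiency", "Language"): 0.065,
    ("Efficiency", "Vocabulary"): 0.133,
    ("Efficiency", "Fluency"): 0.998,
}
MODEL_3B = {
    ("Language", "Word Recognition"): 0.116,
    ("Vocabulary", "Word Recognition"): 0.190,
    ("Vocabulary", "Language"): 0.823,
    ("Fluency", "Word Recognition"): 0.875,
    ("Fluency", "Language"): 0.135,
    ("Fluency", "Vocabulary"): 0.174,
}

# Assumed cutoff for "not psychometrically distinguishable" (illustrative only).
CUTOFF = 0.90


def poorly_separated_pairs(correlations, cutoff=CUTOFF):
    """Return the factor pairs whose absolute correlation reaches the cutoff."""
    return sorted(pair for pair, r in correlations.items() if abs(r) >= cutoff)


flagged_3d = poorly_separated_pairs(MODEL_3D)
flagged_3b = poorly_separated_pairs(MODEL_3B)

print("Model 3(d):", flagged_3d)
print("Model 3(b):", flagged_3b)
```

Under this assumed cutoff, only the two Efficiency pairs in Model 3(d) are flagged, and no pair in Model 3(b) is, which mirrors the reasoning given for preferring Model 3(b).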

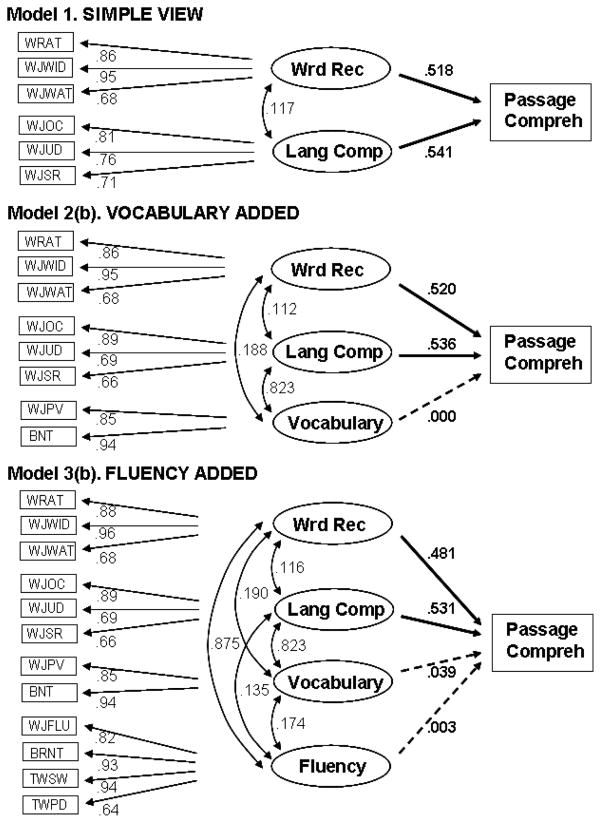

Figure 1 provides the path diagrams for Models 1, 2(b), and 3(b). Of the standardized path coefficients between Reading Comprehension and the model components, only two – from Word Recognition and from Language Comprehension – were significant in each of these models. These results suggest that, consistent with the Simple View model, these two factors are significantly related to reading comprehension in our sample of adults with low literacy, while Vocabulary or Speed/Fluency, as independent factors, are not.

Figure 1. Path Models for the Best-fitting One-, Two-, and Three-Factor Models. Solid lines indicate significant paths (|z|>1.96).

WRAT = WRAT Reading; WJWID = Woodcock-Johnson (WJ) Letter Word Identification; WJWA = WJ Word Attack; WJOC = WJ Oral Comprehension; WJUD = WJ Understanding Directions; WJSR = WJ Story Recall; WJPV = WJ Picture Vocabulary; BNT = Boston Naming Test; WJFLU = WJ Reading Fluency; NBRS = NAAL Basic Reading Skills; TWSW = TOWRE Sight Word Efficiency; TWPD = TOWRE Phonological Decoding Efficiency.

Discussion

The results provided a broad and unprecedented description of the reading skills of adults with low literacy, as will be discussed first. We will then turn to the answers provided by the CFA analyses to the three questions that we set out to address concerning the structural relations among reading skills.

Adults with Low Literacy Reading and Language Skills

Prior assessments of literacy in this population, such as in NAAL reports, have been largely confined to measures of reading comprehension and functional literacy. Our test battery provided a more extensive picture of adults’ skill profiles and the relationships between reading and oral language proficiency. The sample’s reading levels were quite similar across most aspects of reading, including word recognition, reading comprehension, and fluency. Although norms are not available for the NBRS, the sample’s mean reading rate of 95 words correct per minute is about what might be expected of a third- or fourth-grader (e.g., Daane, Campbell, Grigg, Goodman, & Oranje, 2005). Furthermore, the correlations among skills in the sample were similar to what one might expect for typical children at these grade levels. The exception to this overall pattern, however, was that lower performance was seen on the two measures of pseudoword decoding (WJ Word Attack and TOWRE Phonological Decoding Efficiency). Thus, there was apparently less alignment between decoding and word recognition than would ordinarily be seen (as represented by test norms). This suggests that many adults with low literacy may substitute sight vocabulary for poorly acquired decoding skills.

Oral language and vocabulary levels were only slightly higher than reading skills levels for these adults with low literacy, despite their having had 16 to 76 years of oral language experience to draw upon. Their reading experience was almost certainly less extensive than for the typical adult, and this may be largely responsible for their limited language skills.

In this context, the relative disadvantage of phonological decoding versus sight word identification found in this study is particularly telling, and it is consistent with prior research in adults with poor reading ability (Davidson & Strucker, 2002; Greenberg, Ehri, & Perin, 1997, 2002; Pratt & Brady, 1988; Read & Ruyter, 1985). Greater proficiency in decoding novel words presumably supports the accumulation of memory for real words, which can, with practice, lead to greater reading efficiency and fluency; i.e., more words and texts can be read per unit time. Whether adults who start out with very low skill levels can still acquire adequate decoding skills en route to improved reading fluency is a question that can be answered only in intervention studies that focus on enhancing word recognition skills (and fluency).

Furthermore, a markedly slow reading rate is likely to limit the amount of text that the adults can read, to exhaust mental energy and attention, and consequently to constrain further growth of language skills through the channel of reading. With the attainment of higher proficiency in word recognition skill, including higher order knowledge of the spelling system and English morphology (Berninger, 1995; Venezky, 1999), one would expect to observe consolidation of word recognition skill with fluency and vocabulary knowledge, especially given the central role of morphological structure in multi-syllabic, complex words (Assink & Sandra, 2003; Verhoeven & Perfetti, 2003). These hypothesized patterns await further study. Regardless, these patterns of relationships in adults with low literacy are quite different from those of typically developing children and differ to varying degrees from patterns found in learners with reading disabilities/difficulties.

Question 1

How well does the Simple View model account for variation in reading comprehension? To answer our first question, we represented the Simple View model with three measures for the Word Recognition factor (WJ Letter Word Identification, WJ Word Attack, and WRAT Reading) and three measures for the Language Comprehension factor (WJ Oral Comprehension, WJ Understanding Directions, and WJ Story Recall). In our sample of low-literate adults, this model fit the data well. The respective measures loaded appropriately onto a two-factor model, and the two factors each predicted about the same amount of variance in WJ Passage Comprehension scores. In all, 62.5% of the variance in this measure of reading comprehension was accounted for by the two factors derived from six variables.
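The 62.5% figure can be recovered from the standardized estimates for Model 1 in the Appendix. The sketch below is illustrative only. It assumes the usual convention for a standardized solution, in which the error path e for an outcome satisfies R2 = 1 - e^2, and it uses the standard identity for two correlated standardized predictors, R2 = b1^2 + b2^2 + 2*b1*b2*r. The function names are hypothetical.

```python
def r2_from_error_path(error_path):
    """Explained variance implied by a standardized error path coefficient."""
    return 1.0 - error_path ** 2


def r2_from_two_paths(b1, b2, r):
    """Explained variance from two standardized paths whose factors correlate r."""
    return b1 ** 2 + b2 ** 2 + 2.0 * b1 * b2 * r


# Model 1 values for PCMP (WJ Passage Comprehension), from the Appendix:
# paths from Word Recognition (.518) and Language (.541), error path (.612),
# and the inter-factor correlation (.117).
r2_error = r2_from_error_path(0.612)
r2_paths = r2_from_two_paths(0.518, 0.541, 0.117)

print(f"R2 from error path:      {r2_error:.3f}")
print(f"R2 from paths and r:     {r2_paths:.3f}")
```

The two routes agree to within rounding of the published three-decimal estimates, and both land at roughly 62.5% of variance explained.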

Question 2

Our second question focused on oral vocabulary and reading comprehension: Can oral vocabulary be subsumed within the language comprehension component, or is it a distinct component on its own? If so, does it play an important role in accounting for reading comprehension? When the Language Comprehension factor was based on two vocabulary measures in addition to other language scores, the overall model fit was only marginally acceptable. However, a significantly better fit was obtained when the two vocabulary measures were specified to define a separate Vocabulary factor. These results indicate that Vocabulary was distinct from the Word Recognition factor and from the Language Comprehension factor, although highly correlated with the latter.

Nonetheless, the Vocabulary factor was not significantly related to Passage Comprehension scores, although the other two factors were. Hence, adding the Vocabulary factor resulted in little change in the percentage of variance in Reading Comprehension accounted for by the latent factors (62.5% vs. 62.2%). Although some prior studies have obtained results similar to ours, independent contributions of vocabulary to reading comprehension were found in recent studies of both children (Cutting & Scarborough, 2006) and young adults (Braze et al., 2007). The samples in those studies, however, included primarily nondisabled readers and different methods were applied. It is possible that these differences in sample composition and analytic methods may be responsible to some degree for the lack of agreement in results. Our results cannot be interpreted to mean that vocabulary is not related to reading comprehension; on the contrary, it is substantially correlated with the Language factor and reading comprehension. However, as an addition to the Simple View factors, vocabulary does not stand out as a substantial, independent, additional explanatory factor of reading comprehension scores of adults with low literacy.

Question 3

Finally, our third question focused on fluency and speed: Do text reading fluency and word reading speed form a unitary processing speed/fluency factor or separate latent factors? Alone or in combination, do they contribute independently from word recognition and language comprehension as predictors of reading comprehension? We constructed and tested models that reflected four different conceptualizations of how reading speed (i.e., the fluency of text reading, and the speed of word recognition and decoding in isolation) might best be represented alongside other factors in relation to reading comprehension. Of these models, the one in which a single distinct fluency factor was added, based on timed measures of both text-reading and list-reading, was judged to provide the best and most parsimonious fit to the data in comparison with models in which: the four speeded measures were instead included in the word recognition factor; a separate fluency factor was based only on the text-reading fluency measures; and separate text fluency and word/pseudoword-reading efficiency factors were included, for a total of five factors. Although the five-factor model was least restrictive and hence by definition produced the best fitting values on key indices, the two added factors were correlated to an unacceptably high degree (r = .998), making them virtually indistinguishable psychometrically. Hence, the four-factor model with a single fluency factor was deemed more acceptable.

Although superior to the others, the best model that incorporated the reading speed measures fell short of conventional criteria for overall model fit adequacy, and the strength of the relationship to reading comprehension was no better than the models discussed earlier in relation to our other research questions. Furthermore, even though a separate latent factor could be identified for fluency (as well as for vocabulary, word recognition, and language comprehension), it was not independently related to reading comprehension scores in this model (nor in other models that included one or two speed-related factors). Again, these results cannot be interpreted to mean that reading speed/fluency is not related to reading comprehension. Rather, as an addition to the Simple View factors, a speed/fluency factor does not appear to make a substantial, independent, additional contribution to explaining reading comprehension scores.

Is The Simple View Sufficient For Explaining Reading Comprehension Differences?

On the one hand, support was found for the hypothesized distinctions between vocabulary and other language skills, and between reading speed and word recognition accuracy. On the other hand, as illustrated in Figure 1, the most successful three- and four-factor models that incorporated these additions to the Simple View indicated that these additional factors were not significantly related to the reading comprehension scores with Simple View factors also in the model. The results strongly indicate that, as posited by the Simple View, word recognition and language comprehension are primary factors related to reading comprehension in low-literate adults.

There are several potential reasons why these results were not entirely consistent with prior findings of unique contributions of vocabulary or fluency to reading comprehension, sometimes reported with younger and nondisabled readers. First, because this was a sample selected to have a somewhat narrow range of reading levels, the concern might be raised that restricted ranges on our variables may have limited the sensitivity of the analyses. However, there was considerable variability on all measures in the sample, and range restriction thus could not be the source of disagreements among other studies, which included samples ranging from young children to adults (e.g., Adlof et al., 2006; Braze et al., 2007; Buly & Valencia, 2002; Tannenbaum et al., 2006; Vellutino et al., 2007).

Second, we administered only one reading comprehension measure and therefore could not model it as a latent factor, but only as an observed variable. There is reason for concern that the aspects of reading comprehension that are captured by the Woodcock-Johnson Passage Comprehension test may be restricted. This test requires reading of short passages only, and longer passages would make stronger demands on a reader’s skills in constructing memory and situational models of meaning (Kintsch, 1998; Keenan, Betjemann & Olson, 2008), a hallmark of skilled text comprehension. Indeed, several recent studies have demonstrated that various widely used tests of reading comprehension are not always strongly related to each other (Cutting & Scarborough, 2006; Francis et al., 2005; Keenan et al., 2008). Studies that have obtained evidence that vocabulary and fluency factors were each related to reading comprehension have mainly used other measures than the WJ Passage Comprehension test (e.g., Buly & Valencia, 2002; Jenkins et al., 2003; Tannenbaum et al., 2006), but some have not. Hence, it is possible that different results would have been obtained if alternative measures had been given to our sample so as to afford a broader assessment of the reading comprehension construct.

Third, most prior investigations of extensions of the Simple View have used traditional multiple regression methods with observed variables or composites rather than CFA modeling with latent variables. The choice of analytic approach could conceivably lead to differences in results.

Last, it is possible that the structural relationships among skills may be somewhat atypical for adults with low literacy. That is, regardless of the adequacy of comprehension measurement or the chosen analytic approach, models that apply successfully to other populations might not be expected to fit this one as well. One indication of such atypicality of the sample is that, as noted earlier, their decoding skills were generally lower than other reading skills, and their oral language was slightly superior to reading proficiency, with respect to national norms.

Implications for Assessment and Instruction of Adult Learners

The results suggest that, to strengthen reading comprehension in adults with low literacy, multiple facets of reading and language skill will need to be addressed. With respect to assessment, a single test of overall reading skill will not suffice for identifying the learner’s specific area(s) of weakness. Rather, at a minimum, both oral language abilities and basic word recognition skills should be assessed for screening, diagnostic, and progress-monitoring purposes. In addition, as resources permit, supplementary tests of reading fluency or vocabulary are likely to be helpful for making decisions about learner gains and program effectiveness. A well-chosen test battery can serve to identify adults’ instructional needs accurately and efficiently, permitting appropriate decisions to be made about designing instruction that aligns with a learner’s strengths and weaknesses.

With respect to the foci of instruction for adults who lack proficiency in reading comprehension, the results converge with prior research in indicating that many of these individuals have not fully mastered fundamental skills in word recognition and decoding. Teaching a repertoire of skills and strategies for quickly and accurately recognizing printed words and decoding novel words has rarely been a major focus in traditional adult education programs. However, when difficulties in these basic skills are revealed by assessment, instruction to strengthen them is clearly warranted. The findings also confirm that many adult learners need instruction to enhance their oral language comprehension, which in this study was represented by a composite score (a latent variable) based on tests that required the adults to recall stories, to answer questions about orally-presented passages, and to follow oral directions. Furthermore, it is important to remember that there was not a strong correlation between the two main factors, word recognition and language comprehension. Thus, difficulties in reading comprehension apparently arose for different reasons in the sample – stemming more heavily from language comprehension weaknesses in some individuals, from limitations in basic word-level processing in others, and in some cases from both factors.

Although a two-factor Simple View model was best fit by our data, the results also underscore the close associations of vocabulary to other oral language skills, and of fluency/speed to the accuracy of word recognition. Thus, it would be unwise to conclude that fluency and vocabulary are unimportant to successful reading comprehension or should be ignored when instructing adults with low literacy. A challenge for educators will be to design more comprehensive instructional programs that integrate the components that are beneficial for individual learners and to monitor students’ progress in reaching each educational goal. Research is urgently needed to examine the effectiveness of various approaches to addressing the variety of instructional needs of adults with low literacy.

Limitations of the Study

The population of adults with low literacy has not been the focus of much prior research, and the results of this first exploration of the Simple View in such a sample cannot be considered definitive. The structural relations among reading components that we obtained may have been dependent upon the particular analytic techniques, the measures of comprehension and other skills, and the composition of the sample. Future studies that tease apart such influences, and that take into account reader characteristics (such as reading level and educational history), will be needed for a fuller understanding of adult learners’ reading skills and how best to help them to comprehend text more successfully.

Conclusions

In a sample of adults with low literacy, CFA models provided evidence for reliable distinctions among four hypothesized components of reading skill: word recognition; fluency; language comprehension; and vocabulary. Nonetheless, the data were most consistent with the longstanding 2-factor Simple View account of reading, in which just word recognition and oral language comprehension are necessary and sufficient for accounting for differences in reading comprehension. That is, the results did not provide support for the hypothesis that the Simple View needed to be expanded to include fluency or vocabulary as distinct additional factors, as has been posited in some prior studies of younger and more able readers. The heterogeneity of reading skills and relationships among them that were observed in the sample highlight the need to assess an array of reading proficiencies in adults with low literacy, and to provide instruction for this population that addresses all areas of weakness.

Acknowledgments

This work was supported in part by grant HD 043774 from the National Institute for Literacy, Office of Vocational Education, and National Institute of Child Health and Human Development. We are grateful to the National Center for Educational Statistics and the National Assessment of Adult Literacy for granting permission to use the Basic Reading Skills portion of that instrument, and to Kelly Bruce and Jen Lentini for assistance with data collection and management. The authors thank Larry Stricker, Tom Quinlan, Dan Eignor, and anonymous reviewers for their helpful comments. Preliminary structural analyses of data from this project were previously reported in presentations to the American Educational Research Association and the Society for the Scientific Study of Reading. Any opinions expressed in this article are those of the authors and not necessarily of Educational Testing Service.

Appendix. Standardized Parameter Estimates for All Models Tested

Model 1. Total R2 = .642

| Variable | Path Coefficient: Wrd Recog | Path Coefficient: Language | Error |
|---|---|---|---|
| WRAT | .885* | | .466* |
| WJWID | .954* | | .301* |
| WJWA | .680* | | .734* |
| WJOC | | .813* | .582* |
| WJUD | | .756* | .654* |
| WJSR | | .708* | .706* |
| PCMP | .518* | .541* | .612* |

Inter-factor Correlations

| Factor | Wrd Recog | Language |
|---|---|---|
| Wrd Recog | -- | |
| Language | .117* | -- |

*p < .01

Models 2(a) and 2(b)

Model 2(a). Total R2 = .629

| Variable | Path Coefficient: Wrd Recog | Path Coefficient: Language | Error |
|---|---|---|---|
| WRAT | .885* | | .465* |
| WJWID | .953* | | .303* |
| WJWA | .680* | | .733* |
| WJOC | | .839* | .545* |
| WJUD | | .642* | .767* |
| WJSR | | .612* | .791* |
| WJPV | | .818* | .575* |
| BNT | | .867* | .498* |
| PCMP | .496* | .515* | .636* |

Inter-factor Correlations, Model 2(a)

| Factor | Wrd Recog | Language |
|---|---|---|
| Wrd Recog | -- | |
| Language | .164* | -- |

Model 2(b). Total R2 = .671

| Variable | Path Coefficient: Wrd Recog | Path Coefficient: Language | Path Coefficient: Vocab | Error |
|---|---|---|---|---|
| WRAT | .885* | | | .465* |
| WJWID | .953* | | | .302* |
| WJWA | .680* | | | .733* |
| WJOC | | .887* | | .461* |
| WJUD | | .691* | | .723* |
| WJSR | | .662* | | .750* |
| WJPV | | | .853* | .522* |
| BNT | | | .936* | .353* |
| PCMP | .520* | .536* | .000 | .615* |

Inter-factor Correlations, Model 2(b)

| Factor | Wrd Recog | Language | Vocab |
|---|---|---|---|
| Wrd Recog | -- | | |
| Language | .112* | -- | |
| Vocab | .188* | .823* | -- |

*p < .01

Models 3(a) and 3(b)

Model 3(a). Total R2 = .648

| Variable | Path Coefficient: Wrd Recog | Path Coefficient: Language | Path Coefficient: Vocab | Error |
|---|---|---|---|---|
| WRAT | .830* | | | .557* |
| WJWID | .904* | | | .427* |
| WJWA | .664* | | | .748* |
| WJOC | | .892* | | .452* |
| WJUD | | .687* | | .727* |
| WJSR | | .659* | | .753* |
| WJPV | | | .853* | .522* |
| BNT | | | .936* | .353* |
| WJFLU | .781* | | | .625* |
| NBRS | .892* | | | .451* |
| TWSW | .913* | | | .408* |
| TWPD | .687* | | | .726* |
| PCMP | .505* | .507* | .029 | .628* |

Inter-factor Correlations, Model 3(a)

| Factor | Wrd Recog | Language | Vocab |
|---|---|---|---|
| Wrd Recog | -- | | |
| Language | .124* | -- | |
| Vocabulary | .184* | .823* | -- |

Model 3(b). Total R2 = .672

| Variable | Path Coefficient: Wrd Recog | Path Coefficient: Language | Path Coefficient: Vocab | Path Coefficient: Fluency | Error |
|---|---|---|---|---|---|
| WRAT | .875* | | | | .485* |
| WJWID | .964* | | | | .267* |
| WJWA | .675* | | | | .738* |
| WJOC | | .886* | | | .463* |
| WJUD | | .692* | | | .722* |
| WJSR | | .662* | | | .749* |
| WJPV | | | .853* | | .523* |
| BNT | | | .936* | | .351* |
| WJFLU | | | | .816* | .578* |
| NBRS | | | | .929* | .370* |
| TWSW | | | | .939* | .345* |
| TWPD | | | | .642* | .767* |
| PCMP | .486* | .531* | .039 | .003 | .615* |

Inter-factor Correlations, Model 3(b)

| Factor | Wrd Recog | Language | Vocab | Fluency |
|---|---|---|---|---|
| Wrd Recog | -- | | | |
| Language | .116* | -- | | |
| Vocabulary | .190* | .823* | -- | |
| Fluency | .875* | .135* | .174* | -- |

*p < .01

Models 3(c) and 3(d)

Model 3(c). Total R2 = .663

| Variable | Path Coefficient: Wrd Recog | Path Coefficient: Lang | Path Coefficient: Vocab | Path Coefficient: Flu | Error |
|---|---|---|---|---|---|
| WRAT | .861* | | | | .508* |
| WJWID | .930* | | | | .368* |
| WJWA | .687* | | | | .727* |
| WJOC | | .889* | | | .459* |
| WJUD | | .689* | | | .724* |
| WJSR | | .661* | | | .750* |
| WJPV | | | .853* | | .523* |
| BNT | | | .936* | | .351* |
| WJFLU | | | | .828* | .561* |
| NBRS | | | | .957* | .291* |
| TWSW | .883* | | | | .469* |
| TWPD | .710* | | | | .704* |
| PCMP | .628* | .561* | -.123* | -.005 | .614* |

Inter-factor Correlations, Model 3(c)

| Factor | Wrd Recog | Lang | Vocab | Flu |
|---|---|---|---|---|
| Wrd Recog | -- | | | |
| Language | .091* | -- | | |
| Vocabulary | .169* | .823* | -- | |
| Fluency | .890* | .161* | .190* | -- |

Model 3(d). Total R2 = .690

| Variable | Path Coefficient: Wrd Recog | Path Coefficient: Lang | Path Coefficient: Vocab | Path Coefficient: Flu | Path Coefficient: Effic | Error |
|---|---|---|---|---|---|---|
| WRAT | .878* | | | | | .479* |
| WJWID | .958* | | | | | .288* |
| WJWA | .684* | | | | | .730* |
| WJOC | | .890* | | | | .456* |
| WJUD | | .689* | | | | .725* |
| WJSR | | .660* | | | | .751* |
| WJPV | | | .853* | | | .522* |
| BNT | | | .936* | | | .352* |
| WJFLU | | | | .835* | | .550* |
| NBRS | | | | .948* | | .317* |
| TWSW | | | | | .900* | .436* |
| TWPD | | | | | .666* | .746* |
| PCMP | .467 | .538 | -.030 | .082 | .003 | .616* |

Inter-factor Correlations, Model 3(d)

| Factor | Wrd Recog | Lang | Vocab | Flu | Effic |
|---|---|---|---|---|---|
| Wrd Recog | -- | | | | |
| Language | .114* | -- | | | |
| Vocabulary | .189* | .823* | -- | | |
| Fluency | .834* | .171* | .199* | -- | |
| Efficiency | .945* | .065 | .133* | .998* | -- |

*p < .01

Wrd Recog = Word Recognition; Lang = Language Comprehension; Vocab = Vocabulary; Flu = Fluency of text reading; Effic = Efficiency of word/pseudoword list reading; PCMP = WJ Passage Comprehension; WJWA = WJ Word Attack; WJWID = WJ Letter Word Identification; WRAT = Wide Range Achievement Test Reading; TWSW = TOWRE Sight Word Efficiency; TWPD = TOWRE Phonological Decoding Efficiency; WJFLU = WJ Reading Fluency; NBRS = NAAL Basic Reading Skills oral passage reading fluency; WJOC = WJ Oral Comprehension; WJUD = WJ Understanding Directions; WJSR = WJ Story Recall; WJPV = WJ Picture Vocabulary; BNT = Boston Naming Test.

Contributor Information

John P. Sabatini, Educational Testing Service, Princeton, NJ

Jane R. Shore, Educational Testing Service, Princeton, NJ

Yasuyo Sawaki, Waseda University, Tokyo, Japan.

Hollis S. Scarborough, Haskins Laboratories, New Haven, CT

References

- Adlof SM, Catts HW, Little TD. Should the simple view of reading include a fluency component? Reading and Writing. 2006;19(9):933–958.

- Anderson RC, Freebody P. Vocabulary knowledge. In: Guthrie JT, editor. Comprehension and teaching. Newark, DE: International Reading Association; 1981. pp. 77–117.

- Assink EM, Sandra D, editors. Reading complex words: Cross-language studies. New York: Springer; 2003.

- Bagozzi RP, Yi Y. On the evaluation of structural equation models. Journal of the Academy of Marketing Science. 1992;16:74–94.

- Beck IL, McKeown MG. Conditions of vocabulary acquisition. In: Barr R, Kamil ML, Mosenthal PB, Pearson PD, editors. Handbook of reading research. II. New York: Longman Publishing Group; 1991. pp. 749–814.

- Bentler PM. EQS for Windows 6.1. [Computer software] Encino, CA: Multivariate Software, Inc; 1985–2006.

- Berninger VW, editor. The varieties of orthographic knowledge II: Relationships to phonology, reading, and writing. Dordrecht, The Netherlands: Kluwer; 1995.

- Berninger VW, Abbott RD, Billingsley F, Nagy W. Processes underlying timing and fluency of reading: Efficiency, automaticity, coordination, and morphological awareness. In: Wolf M, editor. Dyslexia, fluency, and the brain. Timonium, MD: York Press; 2001. pp. 383–414.

- Braze D, Tabor W, Shankweiler DP, Mencl WE. Speaking up for vocabulary: Reading skill differences in young adults. Journal of Learning Disabilities. 2007;40(3):226–243. doi: 10.1177/00222194070400030401.

- Breznitz Z. Fluency in reading: Synchronization of processes. Mahwah, NJ: Lawrence Erlbaum; 2006.

- Browne MW, Cudeck R. Alternative ways of assessing model fit. In: Bollen KA, Long JS, editors. Testing structural equation models. Newbury Park, CA: Sage Publications; 1993. pp. 136–162.

- Buly MR, Valencia SW. Below the bar: Profiles of students who fail state reading assessments. Educational Evaluation and Policy Analysis. 2002;24(3):219–239.

- Byrne BM. One application of structural equation modeling from two perspectives: Exploring EQS and LISREL strategies. In: Hoyle RH, editor. Structural equation modeling: Concepts, issues, and applications. Thousand Oaks, CA: Sage Publications; 1995. pp. 138–157.

- Carver RP. Reading for one second, one minute, or one year from the perspective of rauding theory. Scientific Studies of Reading. 1997;1(1):3–43.

- Carver RP. The highly lawful relationships among pseudoword decoding, word identification, spelling, listening, and reading. Scientific Studies of Reading. 2003;7(2):127–154.

- Carver RP, David AH. Investigating reading achievement using a causal model. Scientific Studies of Reading. 2001;5(2):107–140.

- Catts HW, Adlof SM, Weismer SE. Language deficits in poor comprehenders: A case for the simple view of reading. Journal of Speech, Language & Hearing Research. 2006;49(2):278–293. doi: 10.1044/1092-4388(2006/023).

- Cunningham AE, Stanovich KE. Early reading acquisition and its relation to reading experience and ability 10 years later. Developmental Psychology. 1997;33(6):934–945. doi: 10.1037//0012-1649.33.6.934.

- Curtis ME. Development of the components of reading. Journal of Educational Psychology. 1980;72(5):656–669.

- Cutting LE, Scarborough HS. Prediction of reading comprehension: Relative contributions of word recognition, language proficiency, and other cognitive skills can depend on how comprehension is measured. Scientific Studies of Reading. 2006;10(3):277–299.

- Daane MC, Campbell JR, Grigg WS, Goodman MJ, Oranje A. Fourth-grade students reading aloud: NAEP 2002 special study of oral reading (No. NCES 2006–469) Washington, DC: U. S. Department of Education, Institute of Education Sciences, National Center for Education Statistics; 2005.

- Daneman M. Word knowledge and reading skill. In: Daneman M, Mackinnon GE, Waller TG, editors. Reading research: Advances in theory and practice. Vol. 6. San Diego: Academic Press; 1988. pp. 145–175. [Google Scholar]

- Davidson RK, Strucker J. Patterns of word-recognition errors among adult basic education native and nonnative speakers of English. Scientific Studies of Reading. 2002;6(3):299–316. [Google Scholar]

- Francis DJ, Fletcher JM, Catts HW, Tomblin JB. Dimensions affecting the assessment of reading comprehension. In: Paris SG, Stahl SA, editors. Children’s reading comprehension and assessment. Mahwah, NJ: Lawrence Erlbaum; 2005. pp. 369–394. [Google Scholar]

- Fuchs LS, Fuchs D, Hosp MK, Jenkins JR. Oral reading fluency as an indicator of reading competence: A theoretical, empirical, and historical analysis. Scientific Studies of Reading. 2001;5(3):239–256. [Google Scholar]

- Goff DA, Pratt C, Ong B. The relations between children’s reading comprehension, working memory, language skills and components of reading decoding in a normal sample. Reading and Writing: An Interdisciplinary Journal. 2005;18(7–9):583–616. [Google Scholar]

- Goodglass H, Kaplan E. Boston Diagnostic Aphasia Examination (BDAE) Philadelphia: Lea and Febiger; 1983. [Google Scholar]

- Gough PB. One second of reading. In: Kavanagh J, Mattingly I, editors. Language by ear and by eye. Cambridge, MA: MIT Press; 1972. pp. 331–358. [Google Scholar]

- Gough PB. How children learn to read and why they fail. Annals of Dyslexia. 1996;46:3–20. doi: 10.1007/BF02648168. [DOI] [PubMed] [Google Scholar]

- Gough PB, Hillinger ML. Learning to read: An unnatural act. Bulletin of The Orton Society. 1980;30:179–196. [Google Scholar]

- Gough PB, Tunmer WE. Decoding, reading, and reading disability. Remedial and Special Education. 1986;7:6–10. [Google Scholar]

- Greenberg D, Ehri LC, Perin D. Are word-reading processes the same or different in adult literacy students and third-fifth graders matched for reading level? Journal of Educational Psychology. 1997;89:262–275. [Google Scholar]

- Greenberg D, Ehri LC, Perin D. Do adult literacy students make the same word-reading and spelling errors as children matched for word-reading age? Scientific Studies of Reading. 2002;6(3):221–243. [Google Scholar]

- Hirsch ED Jr. Reading comprehension requires knowledge--of words and the world. American Educator. 2003;27:10–31.

- Hoover WA, Gough PB. The simple view of reading. Reading and Writing: An Interdisciplinary Journal. 1990;2:127–160.

- Hoover WA, Tunmer WE. The components of reading. In: Thompson GB, Tunmer WE, Nicholson T, editors. Reading acquisition processes. Philadelphia: Multilingual Matters; 1993. pp. 1–19.

- Jenkins JR, Fuchs LS, van den Broek P, Espin C, Deno SL. Accuracy and fluency in list and context reading of skilled and RD groups: Absolute and relative performance levels. Learning Disabilities: Research & Practice. 2003;18(4):237–245.

- Joshi RM. Vocabulary: A critical component of comprehension. Reading & Writing Quarterly. 2005;21(3):209–219.

- Joshi RM, Aaron PG. The component model of reading: Simple view of reading made a little more complex. Reading Psychology. 2000;21(2):85–97.

- Joshi RM, Williams KA, Wood JR. Predicting reading comprehension from listening comprehension: Is this the answer to the IQ debate? In: Hulme C, Joshi RM, editors. Reading and spelling: Development and disorders. Mahwah, NJ: Lawrence Erlbaum; 1998. pp. 319–327.

- Kame’enui EJ, Simmons DC. Introduction to this special issue: The DNA of reading fluency. Scientific Studies of Reading. 2001;5(3):203–210.

- Keenan JM, Betjemann RS, Olson RK. Reading comprehension tests vary in the skills they assess: Differential dependence on decoding and oral comprehension. Scientific Studies of Reading. 2008;12(3):281–300.

- Kintsch W. Comprehension: A paradigm for cognition. Cambridge, UK: Cambridge University Press; 1998.

- Kline RB. Principles and practice of structural equation modeling. New York: Guilford Press; 1998.

- Kutner M, Greenberg E, Jin Y, Boyle B, Hsu Y, Dunleavy E. Literacy in everyday life: Results from the 2003 National Assessment of Adult Literacy (NCES 2007–480). Washington, DC: U.S. Department of Education, National Center for Education Statistics; 2007.

- Maruyama GM. Basics of structural equation modeling. Thousand Oaks, CA: Sage Publications; 1998.

- Pedhazur E. Multiple regression in behavioral research: Explanation and prediction. 3rd ed. Orlando, FL: Harcourt; 1997.

- Perfetti C. Reading ability: Lexical quality to comprehension. Scientific Studies of Reading. 2007;11(4):357–383.

- Perfetti CA, Hart L. The lexical quality hypothesis. In: Verhoeven L, Elbro C, Reitsma P, editors. Precursors of functional literacy. Amsterdam, Philadelphia: John Benjamins; 2002. pp. 189–213.

- Pratt AC, Brady S. Relation of phonological awareness to reading disability in children and adults. Journal of Educational Psychology. 1988;80(3):319–323.

- Raykov T, Marcoulides GA. A first course in structural equation modeling. Mahwah, NJ: Lawrence Erlbaum; 2000.

- Read C, Ruyter L. Reading and spelling skills in adults of low literacy. Remedial and Special Education. 1985;6:43–51.

- Sabatini JP. Efficiency in word reading of adults: Ability group comparisons. Scientific Studies of Reading. 2002;6(3):267–298.

- Sabatini JP. Word reading processes in adult learners. In: Assink E, Sandra D, editors. Reading complex words: Cross-language studies. London: Kluwer Academic; 2003. pp. 265–294.

- Samuels SJ. Toward a model of reading fluency. In: Samuels SJ, Farstrup AE, editors. What research has to say about fluency instruction. Newark, DE: International Reading Association; 2006. pp. 24–46.

- Satorra A. Robustness issues in structural equation modeling: A review of recent developments. Quality and Quantity. 1990;24:367–386.

- Satorra A, Bentler P. A scaled difference chi-square test statistic for moment structure analysis. Psychometrika. 2001;66:507–514. doi: 10.1007/s11336-009-9135-y.

- Scarborough HS, Brady SA. Toward a common terminology for talking about speech and reading: A glossary of the “phon” words and some related terms. Journal of Literacy Research. 2002;34(2):299–334.

- Shankweiler D, Lundquist E, Katz L, Stuebing KK, Fletcher JM. Comprehension and decoding: Patterns of association in children with reading difficulties. Scientific Studies of Reading. 1999;3(1):69–94.

- Statistics Canada and Organisation for Economic Cooperation and Development (OECD). Learning a living: First results of the Adult Literacy and Life Skills Survey. Ottawa and Paris: Author; 2005.

- Tannenbaum KR, Torgesen JK, Wagner RK. Relationships between word knowledge and reading comprehension in third-grade children. Scientific Studies of Reading. 2006;10(4):381–398.

- Torgesen J, Rashotte CA, Alexander AW. Principles of fluency instruction in reading: Relationships with established empirical outcomes. In: Wolf M, editor. Dyslexia, fluency, and the brain. Parkton, MD: York Press; 2001. pp. 333–355.

- Torgesen JK, Wagner RK, Rashotte CA. Test of Word Reading Efficiency (TOWRE). Austin, TX: Pro-Ed; 1999.

- Tunmer WE, Hoover WA. Cognitive and linguistic factors in learning to read. In: Gough PB, Ehri LC, Treiman R, editors. Reading acquisition. Hillsdale, NJ: Lawrence Erlbaum; 1992. pp. 175–224.

- Tunmer WE, Hoover WA. Components of variance models of language-related factors of reading disability: A conceptual overview. In: Joshi RM, Leong CK, editors. Reading diagnosis and component processes. Dordrecht, Netherlands: Kluwer; 1993. pp. 135–173.

- Vellutino FR, Tunmer WE, Jaccard JJ, Chen R. Components of reading ability: Multivariate evidence for a convergent skills model of reading development. Scientific Studies of Reading. 2007;11(1):3–32.

- Venezky RL. The American way of spelling: The structure and origins of American English orthography. New York: Guilford Press; 1999.

- Verhoeven L, Perfetti C. Introduction to this special issue: The role of morphology in learning to read. Scientific Studies of Reading. 2003;7(3):209–217.

- Wayman MM, Wallace T, Wiley HI, Ticha R, Espin CA. Literature synthesis on curriculum-based measurement in reading. The Journal of Special Education. 2007;41(2):85–120.

- Wilkinson G. Wide Range Achievement Test (WRAT3). Wilmington, DE: Wide Range, Inc; 1993.

- Wolf M, Katzir-Cohen T. Reading fluency and its intervention. Scientific Studies of Reading. 2001;5(3):211–239.

- Woodcock RW, McGrew KS, Mather N. Woodcock-Johnson III Tests of Achievement. Itasca, IL: Riverside Publishing; 2001.