Abstract

Self-administration of a multimedia health literacy measure in clinic settings is a novel concept. Demonstrated ease of use and acceptability will help establish the future value of this strategy. We previously demonstrated the acceptability of a “Talking Touchscreen” for health status assessment. For this study, we adapted the touchscreen for self-administration of a new health literacy measure. Primary care patients (n=610) in clinics serving underserved populations completed health status and health literacy questions on the Talking Touchscreen and participated in an interview. Of the participants, 51% were female, 10% were aged 60 or older, 67% were African American, 18% had not completed high school, and 14% had never used a computer. The majority (93%) had no difficulty using the touchscreen, including those who were computer-naïve (87%). Most rated the screen design as very good or excellent (72%), including computer-naïve patients (71%) and older patients (75%). Acceptability of the touchscreen did not differ by health literacy level. The Talking Touchscreen was easy to use and acceptable for self-administration of a new health literacy measure. Self-administration should reduce staff burden and costs, interview bias, and feelings of embarrassment among those with lower literacy. Tools like the Talking Touchscreen may increase exposure of underserved populations to new technologies.

Keywords: Computer Literacy, Health Literacy, User-Computer Interface, Multimedia, Vulnerable Populations

In its recent report on health literacy, the Institute of Medicine outlined the literacy, technology and navigation skills that patients need to function optimally across a variety of health contexts (Committee on Health Literacy, Nielsen-Bohlman, Panzer, & Kindig, 2004). To better match health care materials and services to patients' literacy skills, accurate and clinically feasible assessment methods are needed. Numerous reading assessment tools exist, including some composed of medical terms and content. The two most widely used measures are the Rapid Estimate of Adult Literacy in Medicine (REALM), a word recognition and pronunciation test (Davis et al., 1993), and the Test of Functional Health Literacy in Adults (TOFHLA), which uses actual materials patients may encounter in health care settings to determine how well they can perform basic reading comprehension and numeracy tasks (Parker, Baker, Williams, & Nurss, 1995). These measures are fairly easy to administer and score; however, both require a trained interviewer.

Modern health care systems increasingly rely on a variety of audiovisual, graphical and electronic media to present health information, assist in decision-making and collect self-report data (Institute of Medicine, 2002). Computer kiosks have been used to provide health information and education in community settings (Kreuter et al., 2006), and increasing numbers of health care consumers are using the internet for tasks such as accessing their personal health information, communicating with their providers, scheduling appointments, and requesting referrals or prescription refills (Blumenthal & Glaser, 2007). Despite recent advances in health information technology and in computer and multimedia tools in health care settings, these tools remain inaccessible to many patients, particularly those who are older, belong to racial or ethnic minority groups, have lower incomes, or have poor literacy skills (Cashen, Dykes, & Gerber, 2004; Suggs, 2006). For these tools to be widely used and equitably accessed, they must be easy to use, intuitive and acceptable to their users, the patients.

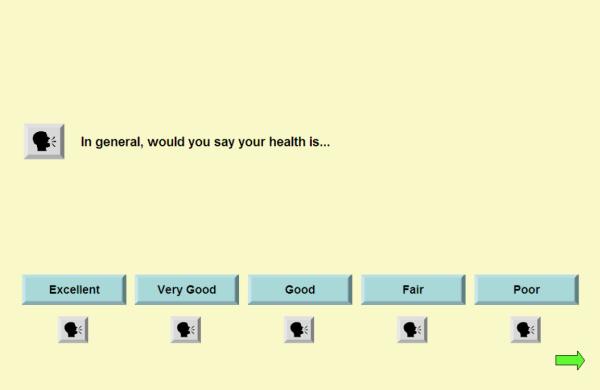

Hahn et al. developed a bilingual, multimedia, computer-based tool for health status assessment that meets these criteria of usability and acceptability (Hahn et al., 2007; Hahn et al., In press; Hahn et al., 2003). The tool, called the “Talking Touchscreen” (TT), is a multimedia program (text, graphics and audio) installed on a touchscreen tablet computer. One question at a time is displayed on the screen (e.g., “In general, would you say your health is…”), accompanied by an audio recording of the text (Figure 1). For privacy, participants listen to the audio recordings through headphones. The respondent may touch a sound icon on the screen to hear the audio as many times as needed. The individual response buttons (e.g., “Excellent” to “Poor”) also have audio recordings. A response is selected by touching one of the response buttons; the selected button then changes color, providing visual confirmation of the chosen response. The respondent then advances to a new screen for the next question. The TT for self-administration of health status questions was well received by 420 English-speaking and 414 Spanish-speaking cancer patients with diverse literacy and computer skills (Hahn, et al., 2007; Hahn, et al., In press).

Figure 1.

Standard assessment question showing item and responses

We adapted the TT for self-administration of a new measure of health literacy. A detailed description of the development and pilot testing of the health literacy measure has been previously reported (Yost et al., 2009). For the purpose of this measurement tool, health literacy is defined as “the degree to which individuals have the capacity to read and comprehend health-related print material, identify and interpret information presented in graphical format (charts, graphs and tables), and perform arithmetic operations in order to make appropriate health and care decisions” (Yost, et al., 2009, p. 5). Briefly, this new measure assesses three types of literacy skills (prose, document and quantitative) defined by Educational Testing Service staff and adopted by the National Adult Literacy Survey (NALS) (Kirsch, Jungeblut, Jenkins, & Kolstad, 1993) and the National Assessment of Adult Literacy (NAAL) (Kutner, Greenberg, Jin, & Paulsen, 2006). Prose literacy focuses on understanding and using information from texts; document literacy requires the ability to locate and use information in forms, tables, graphs, etc.; and quantitative literacy requires the ability to apply arithmetic operations to numbers embedded in printed materials.

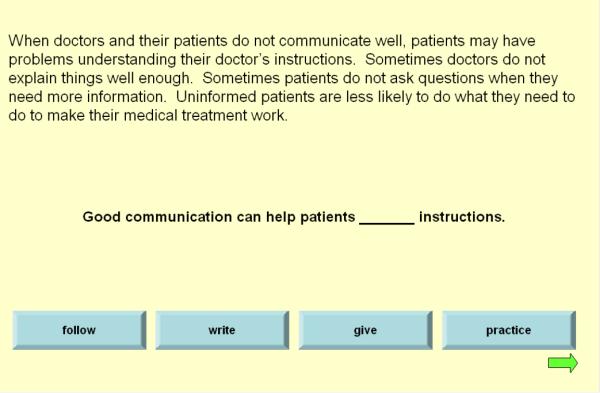

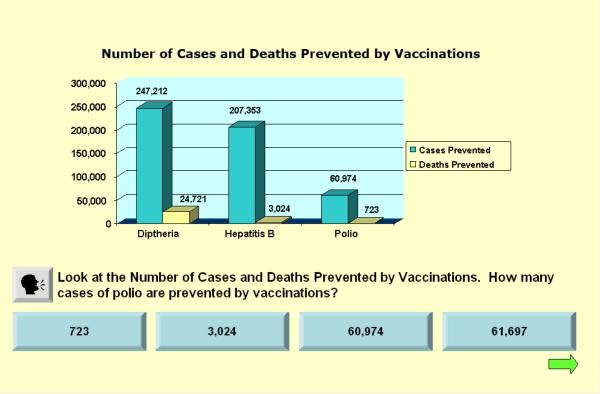

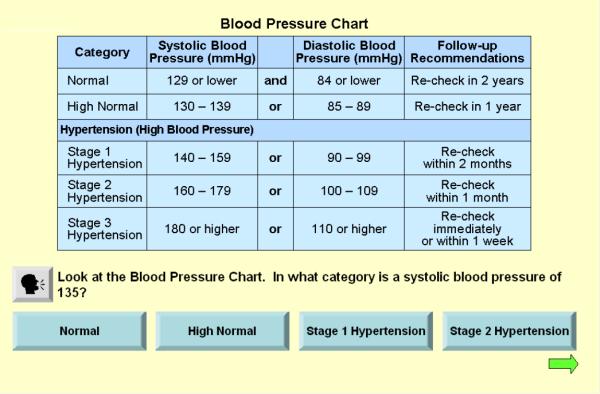

The most significant adaptation of the TT was in the item format. Items used in the TT for health status assessment (Hahn et al., 2004; Hahn, et al., 2003) are subjective evaluations of health, consisting of a single statement and up to five response options (see Figure 1). In contrast, items for the TT for health literacy assessment are objective and fact-based, and use three standardized formats for item administration. Prose items consist of a brief prose passage and a statement summarizing the content of the passage with one word missing. Respondents choose a word to complete the summary statement from four multiple-choice options (Figure 2). In addition to the question itself and four response options, all document items (Figure 3) and most quantitative items (Figure 4) include a prompt with health-related information (e.g., a table, chart or appointment card).

The primary goal of this study was to explore the ease of use and acceptability of the TT for self-administration of a new measure of health literacy. A secondary goal was to determine whether ease of use or acceptability differed by participants' sociodemographic characteristics, prior computer experience or level of health literacy.

Figure 2.

Prose health literacy item

Figure 3.

Document health literacy item

Figure 4.

Quantitative health literacy item

Methods

Participants

Participants were recruited using convenience sampling in the waiting areas of four primary care clinics in the Chicago area, including safety net facilities that provide care to underserved patients. Patients were eligible to participate if they were at least 21 years of age, spoke English, and had sufficient vision, hearing, cognitive function and manual dexterity to interact with the touchscreen. All participants provided informed consent in accordance with institutional review board requirements and received a $20 incentive for their time and effort.

Procedures

The TT program was installed on Toshiba touchscreen tablet computers. A research assistant (RA) introduced each participant to the touchscreen computer and the pen-sized stylus, and provided headphones. The RA explained how to advance to the next screen and that touching the sound icon with the stylus would play an audio recording of the on-screen text. The participant answered two simple practice questions while the RA observed. If the participant had no questions and did not need further assistance, the RA left the participant to complete the assessment in private but remained nearby. Participants completed 10 health status questions and a preselected subset of approximately 30 health literacy items drawn from a pool of 90 items (Yost, et al., 2009). The TT program recorded the time each respondent spent on each item. A sparse matrix sampling design was used to create six overlapping subsets of health literacy items. The six subsets were administered sequentially (i.e., in order of patient enrollment) to obtain equal numbers of completions for each subset. The number of items per participant (30) was chosen to ensure that each of the 90 health literacy items would be administered to at least 200 patients. For each item subset, we estimated internal consistency reliability with the Kuder-Richardson formula, the form of Cronbach's coefficient alpha for dichotomously scored items (Nunnally & Bernstein, 1994).
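The paragraph above does not spell out how the six overlapping subsets were built. As a minimal sketch, assuming a balanced design in which every item appears in exactly two subsets (each pair of subsets shares its own block of items), the arithmetic works out neatly: six subsets form 15 pairs, 90 / 15 = 6 items per pair, and each subset belongs to 5 pairs, giving 30 items per subset. The pairing scheme and the function names `build_overlapping_subsets` and `assign_subset` are hypothetical illustrations, not the study's actual item allocation.

```python
from collections import Counter
from itertools import combinations


def build_overlapping_subsets(n_items=90, n_subsets=6):
    """Hypothetical balanced design: each pair of subsets shares one block of
    items, so every item appears in exactly two subsets."""
    pairs = list(combinations(range(n_subsets), 2))  # 15 pairs when n_subsets=6
    if n_items % len(pairs) != 0:
        raise ValueError("n_items must be divisible by the number of subset pairs")
    block_size = n_items // len(pairs)  # 6 items per pair when n_items=90
    subsets = [[] for _ in range(n_subsets)]
    for block, (a, b) in enumerate(pairs):
        items = range(block * block_size, (block + 1) * block_size)
        subsets[a].extend(items)
        subsets[b].extend(items)
    return subsets


def assign_subset(enrollment_index, n_subsets=6):
    """Rotate through the subsets in order of enrollment (0-based index)."""
    return enrollment_index % n_subsets


subsets = build_overlapping_subsets()
n_completers = 610  # participants who completed the TT assessment
patients_per_subset = Counter(assign_subset(i) for i in range(n_completers))

# Patients who saw an item = sum of patients over the subsets containing it.
item_exposure = Counter()
for s, items in enumerate(subsets):
    for item in items:
        item_exposure[item] += patients_per_subset[s]

print([len(s) for s in subsets])    # [30, 30, 30, 30, 30, 30]
print(min(item_exposure.values()))  # 202
```

Under these assumptions, rotating by enrollment order gives each subset 101 or 102 of the 610 completers, so every item is answered by at least 202 patients, consistent with the target of 200 stated above.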

After the health literacy assessment, the RA conducted a debriefing interview asking participants to evaluate their experience with the TT, including any difficulty using the touchscreen, the quality of the audio recordings, and the appearance of the text and graphics. Participants were also asked about their current computer use and familiarity with other touchscreen devices (e.g., ATMs, airport check-in kiosks). We summarized patients' perceptions of their experience with the TT and evaluated the association between these perceptions and patient characteristics (e.g., age, prior computer use) using chi-square tests with a significance level of p<0.05. For each participant, we calculated the simple proportion of health literacy items answered correctly within the assigned subset. Data from the six item subsets were then pooled, and we evaluated the associations of health literacy scores with patient characteristics and with responses to the TT evaluation questions using t-tests and analysis of variance.
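The scoring and group comparison described above can be sketched in standard-library Python. This is a minimal illustration under stated assumptions, not the study's analysis code: each participant's score is the share of administered items answered correctly, and groups are compared with a classical one-way ANOVA F statistic. P-values, which require the F distribution, are omitted; a statistics package (for example SciPy's `scipy.stats.f_oneway`) would normally supply them. The function names and the toy responses are hypothetical.

```python
from statistics import mean


def proportion_correct(item_scores):
    """Share of administered items answered correctly (item_scores are 0/1)."""
    return sum(item_scores) / len(item_scores)


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of groups of scores."""
    all_scores = [x for g in groups for x in g]
    grand_mean = mean(all_scores)
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: spread of scores around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical 0/1 item responses for two small groups of participants.
group_a = [[1, 1, 0, 1], [1, 0, 0, 1], [1, 1, 1, 1]]
group_b = [[0, 1, 0, 0], [1, 0, 0, 0], [0, 1, 1, 0]]
scores_a = [proportion_correct(r) for r in group_a]
scores_b = [proportion_correct(r) for r in group_b]
print(one_way_anova([scores_a, scores_b]))
```

With two groups, F equals the square of the pooled-variance t statistic, so a t-test and a one-way ANOVA reach the same conclusion for two-category comparisons such as gender; ANOVA generalizes the comparison to characteristics with more categories, such as education.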

Results

We approached 748 patients in the clinic waiting rooms. Some patients declined participation (n=68) or were ineligible (n=17). We enrolled 663 (88.6%) of the patients approached, but 53 of these patients were unable to complete the TT assessment, primarily because of time constraints. Thus, 610 patients were enrolled and completed the assessment. About half of the participants were female, two-thirds were African-American, 10% were 60 years of age or older, 18% had less than a high school education, and 40% reported a household income of less than $10,000 (see Table 1). Most patients (70%) reported that they had used a computer within the past 12 months, and most (71%) had used some type of touchscreen, such as an ATM or an airport check-in kiosk.
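The participant flow reported above reconciles as follows:

$$
\begin{aligned}
748 - 68 - 17 &= 663 \text{ enrolled}, \qquad \frac{663}{748} \approx 0.886 = 88.6\%,\\
663 - 53 &= 610 \text{ completed the TT assessment}.
\end{aligned}
$$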

Table 1.

Characteristics of Study Participants (N=610)

| Characteristic | n (%) |
|---|---|
| Female | 313 (51.3%) |
| Race and ethnicity | |
| African-American, Non-Hispanic | 406 (66.6%) |
| White, Non-Hispanic | 95 (15.6%) |
| Other, Non-Hispanic | 24 (3.9%) |
| Hispanic, any race | 76 (12.4%) |
| Missing | 9 (1.5%) |
| Age (years) | |
| 21–39 | 169 (27.7%) |
| 40–49 | 162 (26.6%) |
| 50–59 | 212 (34.8%) |
| 60–77 | 64 (10.5%) |
| Missing | 3 (0.4%) |
| Highest grade completed | |
| <High School | 108 (17.7%) |
| High school graduate/GED | 237 (38.9%) |
| Some college | 187 (30.7%) |
| College graduate or advanced degree | 78 (12.8%) |
| Household Income | |
| <$10,000 | 244 (40.0%) |
| $10,000 – $19,999 | 159 (26.1%) |
| $20,000 – $49,999 | 103 (16.9%) |
| $50,000+ | 20 (3.3%) |
| Missing | 84 (13.8%) |
| Computer use | |
| Never | 86 (14.1%) |
| Not in the past 12 months | 55 (9.0%) |
| If used in the past 12 months: | |
| 1–3 times per monthᵃ | 77 (12.6%) |
| 1–4 days per weekᵃ | 132 (21.6%) |
| 5–7 days per weekᵃ | 219 (35.9%) |
| Missing | 41 (6.7%) |
| Ever used a touchscreen (ATM, etc.)? | |
| No | 139 (22.8%) |
| Yes | 432 (70.8%) |
| Missing | 39 (6.4%) |

ᵃ Frequency of use during the most active month.

Table 2 presents responses to the evaluation questions asked in the debriefing interview after completion of the TT assessment. Approximately 40 patients did not complete the debriefing interview, primarily because of time constraints. Nearly all patients (93%) reported no difficulty using the touchscreen. Older patients and those with less education were more likely never to have used a computer before (p<0.001; results not shown). These patients were also more likely to report some difficulty using the touchscreen (p<0.001). Specifically, 17% of those without a high school diploma reported some difficulty, compared with 4% of high school graduates, 7% of those with some college, and 6% of college graduates. By age, 4% of those aged 21–39 and 40–49 reported some difficulty with the touchscreen, compared with 11% and 12% of those aged 50–59 and 60–77, respectively. Some patients (14%) felt uncomfortable, anxious or nervous using the touchscreen, primarily older patients and those with less education. Patients rated the design of the touchscreen favorably, and these ratings did not vary across sociodemographic characteristics. Most patients were not overly burdened by completing about 30 health literacy questions; however, older patients and those with less education were more likely to report that there were too many questions. Completing the 30 health literacy items took approximately 18 minutes on average (median, approximately 15 minutes). The majority (75%) rated their study participation experience “better than expected,” and this did not vary across sociodemographic characteristics.
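Comparisons such as "some difficulty" by education level are chi-square tests of independence on a contingency table. A minimal standard-library sketch follows. The counts are invented placeholders, not the study's data; the function names are hypothetical; no continuity correction is applied; and the closed-form tail probability used here is valid only for integer degrees of freedom. In practice a statistics package (for example SciPy's `scipy.stats.chi2_contingency`) would typically be used.

```python
import math


def chi_square_test(table):
    """Pearson chi-square test of independence for an r x c table of counts.
    Returns (statistic, degrees_of_freedom, p_value)."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if the row and column variables were independent.
            expected = row_totals[i] * col_totals[j] / grand_total
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df, chi2_sf(stat, df)


def chi2_sf(x, df):
    """Upper-tail probability P(X >= x) for a chi-square variable with integer df."""
    if x <= 0:
        return 1.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum over i < df/2 of (x/2)^i / i!
        term = math.exp(-x / 2)
        total = term
        for i in range(1, df // 2):
            term *= (x / 2) / i
            total += term
        return total
    # Odd df: erfc(sqrt(x/2)) plus a finite series in sqrt(x).
    total = math.erfc(math.sqrt(x / 2))
    term = math.sqrt(2 * x / math.pi) * math.exp(-x / 2)
    for r in range(1, (df - 1) // 2 + 1):
        total += term
        term *= x / (2 * r + 1)
    return total


# Hypothetical counts: rows = education groups, columns = (no difficulty, some difficulty).
counts = [
    [85, 15],
    [220, 10],
    [170, 12],
    [72, 5],
]
stat, df, p = chi_square_test(counts)
print(f"chi-square = {stat:.2f}, df = {df}, p = {p:.4f}")
```

When some expected cell counts are small (a common rule of thumb is below 5), the chi-square approximation becomes unreliable and an exact test is usually preferred.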

Table 2.

Evaluation of the Talking Touchscreen for Health Literacy Assessment (N=610)ᵃ

| Topic | Question and response options | n (%) |
|---|---|---|
| Touchscreen Evaluation | Any difficulty using the touchscreen? | |
| | Not at all | 528 (92.5%) |
| | A little bit | 36 (6.3%) |
| | Somewhat or Quite a bit | 7 (1.2%) |
| | Ever feel uncomfortable, anxious or nervous while using the touchscreen? | |
| | Not at all | 495 (86.5%) |
| | A little bit | 61 (10.7%) |
| | Somewhat | 11 (1.9%) |
| | Quite a bit | 5 (0.9%) |
| | Overall rating of the design of the screens, including the colors and the layout | |
| | Poor | 3 (0.5%) |
| | Fair | 22 (3.8%) |
| | Good | 137 (24.0%) |
| | Very Good | 189 (33.1%) |
| | Excellent | 220 (38.5%) |
| | Overall rating of the buttons on the screens, including their size and shape | |
| | Poor | 2 (0.4%) |
| | Fair | 20 (3.5%) |
| | Good | 135 (23.6%) |
| | Very Good | 196 (34.3%) |
| | Excellent | 218 (38.2%) |
| Overall Assessment | Length of assessment (about 30 items) | |
| | Too many | 81 (14.2%) |
| | About right | 328 (57.5%) |
| | Could have answered more | 161 (28.3%) |
| | Compared to what you expected, how would you rate your experience participating in this research study? | |
| | A lot worse than expected | 1 (0.2%) |
| | A little worse than expected | 10 (1.8%) |
| | About the same as expected | 132 (23.2%) |
| | A little better than expected | 160 (28.1%) |
| | A lot better than expected | 266 (46.7%) |
| | Would you recommend this research study to other people? | |
| | No | 6 (1.0%) |
| | Maybe | 18 (3.2%) |
| | Yes | 546 (95.8%) |
| Assistance Needs | Amount of help provided by the study interviewer | |
| | None | 488 (86.1%) |
| | A little bit | 60 (10.6%) |
| | Some | 15 (2.6%) |
| | A lot | 1 (0.2%) |
| | Continuous help | 3 (0.5%) |

ᵃ Sample size varies by question because of missing data (see text).

Internal consistency reliability coefficients were high for all six health literacy item subsets (range: 0.82 to 0.91). The mean proportion of health literacy items answered correctly on the six subsets ranged from 0.62 to 0.72. When data for all six subsets were pooled, the proportion of health literacy items answered correctly ranged from 0.13 to 1.00, with a mean of 0.67 and standard deviation of 0.20. The proportion correct did not differ by gender, nor did it differ across responses to most of the TT evaluation questions (see Table 3). There were statistically significant differences by some sociodemographic characteristics and in the rating of the screen design. However, with the exception of education, there were no apparent trends in the proportion correct across categories.
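The reliability coefficients reported above come from the Kuder-Richardson formula. Assuming the KR-20 form (Cronbach's alpha for items scored 0/1), the coefficient is KR-20 = k/(k − 1) × (1 − Σ p_j q_j / σ²_X), where k is the number of items, p_j the proportion answering item j correctly, q_j = 1 − p_j, and σ²_X the variance of respondents' total scores. A minimal sketch follows; the function name `kr20` and the toy score matrix are hypothetical.

```python
from statistics import pvariance


def kr20(responses):
    """KR-20 reliability for a respondents x items matrix of 0/1 scores.
    Uses population variances throughout so item and total variances match."""
    n_items = len(responses[0])
    n_people = len(responses)
    item_var_sum = 0.0
    for j in range(n_items):
        p = sum(person[j] for person in responses) / n_people
        item_var_sum += p * (1 - p)  # variance of a 0/1 item
    total_var = pvariance([sum(person) for person in responses])
    return (n_items / (n_items - 1)) * (1 - item_var_sum / total_var)


# Hypothetical scores: 4 respondents x 3 items.
scores = [
    [1, 1, 1],
    [1, 1, 0],
    [1, 0, 0],
    [0, 0, 0],
]
print(kr20(scores))  # 0.75
```

The coefficient is undefined when every respondent has the same total score (zero total variance); a production version would guard against that case.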

Table 3.

Proportion of Health Literacy Items Answered Correctly, by Patient Characteristics and Talking Touchscreen Evaluation Questions

| Characteristic or question | Mean Proportion Correct (SD) | p-value |
|---|---|---|
| Patient Characteristic | | |
| Gender | | |
| Female (n=313) | 0.67 (0.19) | 0.487 |
| Male (n=297) | 0.66 (0.21) | |
| Race and ethnicity | | |
| African-American, Non-Hispanic (n=406) | 0.62 (0.20) | <0.001 |
| White, Non-Hispanic (n=95) | 0.79 (0.16) | |
| Other, Non-Hispanic (n=24) | 0.73 (0.14) | |
| Hispanic, any race (n=76) | 0.74 (0.15) | |
| Age | | |
| 21–39 (n=169) | 0.69 (0.21) | 0.006 |
| 40–49 (n=162) | 0.62 (0.22) | |
| 50–59 (n=212) | 0.67 (0.18) | |
| 60–77 (n=64) | 0.70 (0.17) | |
| Highest grade completed | | |
| <High School (n=108) | 0.62 (0.18) | <0.001 |
| High school graduate/GED (n=237) | 0.62 (0.20) | |
| Some college (n=187) | 0.69 (0.19) | |
| College graduate or advanced degree (n=78) | 0.81 (0.17) | |
| Computer use | | |
| Never (n=86) | 0.68 (0.19) | <0.001 |
| Not in the past 12 months (n=55) | 0.59 (0.17) | |
| If used in the past 12 months: | | |
| 1–3 times per monthᵃ (n=77) | 0.64 (0.20) | |
| 1–4 days per weekᵃ (n=132) | 0.66 (0.20) | |
| 5–7 days per weekᵃ (n=219) | 0.72 (0.20) | |
| Touchscreen Evaluation | | |
| Any difficulty using the touchscreen? | | |
| Not at all (n=528) | 0.66 (0.20) | 0.208 |
| A little bit, somewhat, quite a bit (n=43) | 0.70 (0.16) | |
| Ever feel uncomfortable, anxious or nervous while using the touchscreen? | | |
| Not at all (n=495) | 0.67 (0.20) | 0.242 |
| A little bit, somewhat, quite a bit (n=77) | 0.64 (0.20) | |
| Overall rating of the design of the screens, including the colors and the layout | | |
| Poor, Fair (n=25) | 0.63 (0.22) | <0.001 |
| Good (n=137) | 0.66 (0.20) | |
| Very Good (n=189) | 0.72 (0.19) | |
| Excellent (n=220) | 0.62 (0.20) | |
| Overall Assessment | | |
| Length of assessment (about 30 items) | | |
| Too many (n=81) | 0.67 (0.19) | 0.463 |
| About right (n=328) | 0.67 (0.20) | |
| Could have answered more (n=161) | 0.65 (0.21) | |
| Compared to what you expected, how would you rate your experience participating in this research study? | | |
| A lot or a little worse than expected (n=11) | 0.69 (0.24) | 0.141 |
| About the same as expected (n=132) | 0.69 (0.20) | |
| A little better than expected (n=160) | 0.67 (0.21) | |
| A lot better than expected (n=266) | 0.64 (0.20) | |

SD: standard deviation

ᵃ Frequency of use during the most active month.

A few open-ended questions were asked as part of the evaluation. One was “Would you please tell me what it was like to use the touchscreen?” Responses were overwhelmingly positive.

“It was complicated at first because I never used one before. Then I became a pro.” (58-year-old African-American male)

“It was nice. I especially liked the questions that talked to me.” (54-year-old African-American female)

“It was easy; you can answer the questions at your own pace.” (41-year-old African-American male)

“It was interesting and challenging to me because I have never used a computer before. But it was easy.” (63-year-old Hispanic male)

“It was exciting to use because I felt that I learned something new. Being my first time using the computer, it was enjoyable.” (59-year-old African-American female)

However, there were some negative comments in response to this overall question as well.

“Weird. Not into computers. Scary. Intimidating.” (61-year-old White female)

“Scary. Didn't want to break it.” (56-year-old African-American male)

“A little tough - vision is not too good.” (47-year-old African-American male)

Participants who reported that they felt uncomfortable, anxious or nervous using the touchscreen were probed to provide more information about why they felt that way. Below are some comments indicating that the discomfort was typically due to the user-computer interface, clinic waiting room conditions, or the tasks required when answering the health literacy questions.

“Computer was reading too slow.” (23-year-old Hispanic male)

“Because I have never used a computer before.” (48-year-old African-American female)

“Too many people around.” (44-year-old Hispanic female)

“It's kind of hard to concentrate with the sound of the TV in the background, so sometimes I had to read a question twice.” (46-year-old African-American male)

“I felt anxious because I think I was getting some wrong. I was trying to get them all right.” (52-year-old African-American male)

“Some of the reading questions were hard for me. I wished all the questions were read [aloud] to me.” (34-year-old African-American female)

“Some questions were talking about things I know nothing about. The graphs were confusing.” (64-year-old African-American male)

Conclusions

To our knowledge, this is the first study to develop and test a health literacy measure that can be self-administered. Our results suggest that the self-administered literacy test was easy to use, even for the vulnerable patient population that participated in this study. Our sample was diverse with respect to levels of health literacy, with some participants answering only a few health literacy questions correctly and others answering all of them correctly. Most of the TT evaluation questions were not associated with the level of health literacy, suggesting that this new measure of health literacy is acceptable to patients across the health literacy continuum.

These results are encouraging given that our participants were a vulnerable population recruited from safety net settings. One might have predicted that this disadvantaged population, with potentially limited computer literacy, would have difficulty using the TT. Although older patients and those with less education were more likely to report difficulty, these patients were also less likely to have used a computer before this study. The vast majority of participants found the TT easy to use. These findings demonstrate the potential utility of TT technology for patients who are likely to have low reading literacy and low computer literacy. The high acceptability of the TT might partly reflect the relatively small proportion of computer-naïve participants in this sample (14%). However, we found comparable acceptability in our previous studies, which included much higher proportions of computer-naïve participants (42% to 64%) (Hahn, et al., 2007; Hahn, et al., In press). Therefore, adapting the TT to this more complex assessment did not detract from its general acceptability. This bodes well for further adapting the TT for other types of assessment.

Although we found high acceptability of the TT for health literacy, we acknowledge that usability is not equivalent to validity. Additional analyses are needed to assess construct validity and to evaluate whether any potential measurement bias exists. Comprehensive psychometric analyses are now being conducted for these purposes and to calibrate the difficulty of the health literacy items so that we can develop a computer-adaptive test. Depending on the desired level of measurement precision, an adaptive test might allow participants to complete even fewer than the 30 items administered in this study. Future analyses should examine the trade-off between potential additional accuracy gained with completing more questions versus the response burden and acceptability.
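The paragraph above anticipates a computer-adaptive test built on calibrated item difficulties, but the study does not specify the item response model or selection rule. As a minimal sketch, assuming a Rasch (one-parameter logistic) model, a standard normal prior, expected a posteriori (EAP) ability estimation on a grid, and maximum-information item selection, one adaptive step could look like this. All function names and difficulty values are hypothetical.

```python
import math


def p_correct(theta, b):
    """Rasch model: probability of a correct answer at ability theta, difficulty b."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def eap_theta(responses, difficulties, grid=None):
    """EAP ability estimate under a standard normal prior.
    responses maps item index -> 0/1 score."""
    grid = grid or [g / 10 for g in range(-40, 41)]  # theta from -4 to 4
    weights = []
    for t in grid:
        w = math.exp(-t * t / 2)  # unnormalized N(0, 1) prior
        for item, score in responses.items():
            p = p_correct(t, difficulties[item])
            w *= p if score else 1.0 - p  # likelihood of the observed answer
        weights.append(w)
    return sum(t * w for t, w in zip(grid, weights)) / sum(weights)


def next_item(theta, difficulties, administered):
    """Pick the unadministered item with maximal Rasch information p * (1 - p)."""
    def information(i):
        p = p_correct(theta, difficulties[i])
        return p * (1.0 - p)
    remaining = [i for i in range(len(difficulties)) if i not in administered]
    return max(remaining, key=information)


# Hypothetical calibrated difficulties for a small item bank.
difficulties = [-2.0, -1.0, -0.3, 0.2, 0.9, 1.8]
responses = {}
theta = eap_theta(responses, difficulties)  # about 0.0 before any answers
item = next_item(theta, difficulties, responses.keys())
responses[item] = 1  # suppose the respondent answers correctly
theta = eap_theta(responses, difficulties)  # estimate moves upward
print(item, round(theta, 2))
```

A full adaptive test would repeat the select, answer and re-estimate steps until a precision target (for example, a maximum posterior standard deviation) or an item cap is reached; tuning that stopping rule is exactly the trade-off between accuracy and response burden raised above.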

The TT is easy to use and acceptable for self-administration of a novel health literacy measure. It is currently being programmed into a web-based research management application that will make it widely available to interested users. This will greatly facilitate collecting literacy information for research and, if appropriate, in clinical practice. Moreover, it will provide a more standardized way of measuring literacy that requires little to no staff training, thus reducing research staff burden and costs and the potential for interview bias. It may also reduce feelings of embarrassment among those with lower literacy. Use of the TT can also increase exposure of underserved populations to new technologies and contribute information about the experiences of diverse populations with these technologies. Future potential applications of the TT include comparative studies of health literacy among different patient populations and individually targeted interventions to improve health literacy.

Acknowledgements

The authors thank Michael Bass and Irina Kontsevaia for programming the Talking Touchscreen; Rachel Hanrahan for informatics management; Katy Wortman for data management; Seung Choi and James Griffith for analysis support; and Yvette Garcia, Beatriz Menendez, Veronica Valenzuela and Priscilla Vasquez for recruiting and interviewing patients. We also thank all of the patients who participated in this study.

This study was supported by grant number R01-HL081485 from the National Heart, Lung, and Blood Institute.

References

- Blumenthal D, Glaser JP. Information technology comes to medicine. N Engl J Med. 2007;356(24):2527–2534. doi: 10.1056/NEJMhpr066212.

- Cashen MS, Dykes P, Gerber B. eHealth technology and Internet resources: barriers for vulnerable populations. J Cardiovasc Nurs. 2004;19(3):209–214; quiz 215–216. doi: 10.1097/00005082-200405000-00010.

- Committee on Health Literacy. Nielsen-Bohlman L, Panzer AM, Kindig DA. Health Literacy: A Prescription to End Confusion. The National Academies Press; Washington, D.C.: 2004.

- Davis TC, Long SW, Jackson RH, Mayeaux EJ, George RB, Murphy PW, et al. Rapid estimate of adult literacy in medicine: A shortened screening instrument. Family Medicine. 1993;25(6):391–395.

- Hahn E, Cella D, Dobrez D, Shiomoto G, Marcus E, Taylor SG, et al. The Talking Touchscreen: a new approach to outcomes assessment in low literacy. Psycho-Oncology. 2004;13(2):86–95. doi: 10.1002/pon.719.

- Hahn E, Cella D, Dobrez D, Weiss B, Du H, Lai JS, et al. The impact of literacy on health-related quality of life measurement and outcomes in cancer outpatients. Quality of Life Research. 2007;16(3):495–507. doi: 10.1007/s11136-006-9128-6.

- Hahn E, Du H, Garcia S, Choi S, Lai J-S, Victorson D, et al. Literacy-fair measurement of health-related quality of life will facilitate comparative effectiveness research in Spanish-speaking cancer outpatients. Medical Care. In press. doi: 10.1097/MLR.0b013e3181d6f81b.

- Hahn EA, Cella D, Dobrez DG, Shiomoto G, Taylor SG, Galvez AG, et al. Quality of life assessment for low literacy Latinos: A new multimedia program for self-administration. Journal of Oncology Management. 2003;12(5):9–12.

- Institute of Medicine. Speaking of Health: Assessing Health Communication Strategies for Diverse Populations. The National Academies Press; Washington, DC: 2002.

- Kirsch I, Jungeblut A, Jenkins L, Kolstad A. Adult literacy in America: A first look at the results of the National Adult Literacy Survey. National Center for Education Statistics, US Department of Education; Washington, DC: 1993.

- Kreuter MW, Black WJ, Friend L, Booker AC, Klump P, Bobra S, et al. Use of computer kiosks for breast cancer education in five community settings. Health Educ Behav. 2006;33(5):625–642. doi: 10.1177/1090198106290795.

- Kutner M, Greenberg E, Jin Y, Paulsen C. The Health Literacy of America's Adults (No. NCES 2006-483). National Center for Education Statistics, U.S. Department of Education; Washington, DC: 2006.

- Nunnally JC, Bernstein IH. Psychometric Theory. McGraw-Hill, Inc.; New York: 1994.

- Parker RM, Baker DW, Williams MV, Nurss JR. The test of functional health literacy in adults: A new instrument for measuring patients' literacy skills. Journal of General Internal Medicine. 1995;10(10):537–541. doi: 10.1007/BF02640361.

- Suggs LS. A 10-year retrospective of research in new technologies for health communication. J Health Commun. 2006;11(1):61–74. doi: 10.1080/10810730500461083.

- Yost KJ, Webster K, Baker DW, Choi SW, Bode RK, Hahn EA. Bilingual health literacy assessment using the Talking Touchscreen/la Pantalla Parlanchina: Development and pilot testing. Patient Education and Counseling. 2009;75(3):295–301. doi: 10.1016/j.pec.2009.02.020.