Abstract

Recognition of the importance of health literacy has grown considerably among researchers, clinicians, patients and policymakers. Better instruments and measurement strategies are needed. Our objective was to develop a new health literacy instrument using novel health information technology and modern psychometrics. We designed Health LiTT as a self-administered multimedia touchscreen test based on item response theory (IRT) principles. We enrolled a diverse group of 619 English-speaking primary care patients in clinics for underserved patients. We tested three item types (prose, document, quantitative) that worked well together to reliably measure a single dimension of health literacy. The Health LiTT score meets psychometric standards (reliability of 0.90 or higher) for measurement of individual respondents in the low to middle range. Mean Health LiTT scores were associated with age, race/ethnicity, education, income and prior computer use (p<0.05). We created an IRT-calibrated item bank of 82 items. Standard setting needs to be performed to classify and map items onto the construct and to identify measurement gaps. We are incorporating Health LiTT into an existing online research management tool. This will enable administration of Health LiTT on the same touchscreen used for other patient-reported outcomes, as well as real-time scoring and reporting of health literacy scores.

Recognition of the importance of health literacy has grown considerably among researchers, clinicians, patients and policymakers (Ad Hoc Committee on Health Literacy for the Council on Scientific Affairs & American Medical Association, 1999; Berkman et al., 2004; Committee on Health Literacy, Nielsen-Bohlman, Panzer, & Kindig, 2004; Institute of Medicine Forum on the Science of Health Care Quality Improvement Implementation, Chao, Anderson, & Hernandez, 2009; U.S. Department of Health and Human Services Office of Disease Prevention and Health Promotion, 2010). Two recent editorials presented recommendations for better integration of health literacy, health disparities/health equity and patient-centered care initiatives (Hasnain-Wynia & Wolf, 2010; Paasche-Orlow & Wolf, 2010). To facilitate such initiatives, psychometrically sound measurement instruments are needed to reliably estimate health literacy. Jordan and colleagues conducted a critical review of existing health literacy instruments and found inconsistencies in the definition and measurement of health literacy, limited empirical evidence of construct validity, and weaknesses in their psychometric properties (Jordan, Osborne, & Buchbinder, 2010).

More precise measurement of health literacy will help to determine the level at which low literacy begins to adversely affect health outcomes. Wolf and colleagues recently evaluated seven categories of health literacy scores (Wolf, Feinglass, Thompson, & Baker, 2010). They identified various thresholds at which health literacy was associated with self-reported health status and all-cause mortality. Continued research is needed. Better measurement precision will enhance the ability to estimate the size of the population at risk from low health literacy, and to identify vulnerable patients in clinical settings. Measures should also be brief and easily scored in real-time to enable communication tailored to the patient’s literacy level, and to provide reliable and valid scores for use in testing interventions.

Our objective was to develop a new health literacy measurement system using novel health information technology and modern psychometric principles. Specifically, we designed the system as a self-administered multimedia test, and sought to develop an item bank using item response theory (IRT). IRT describes the association between an underlying level of a trait (in this case, health literacy) and the probability of a particular item response. An item bank comprises carefully calibrated questions that develop, define and quantify a common theme, which can provide an operational definition of a trait (Choppin, 1968, 1981). Using a calibrated item bank, one can easily create full-length test instruments, short-forms and computerized adaptive testing (CAT).

Our new health literacy measurement system (“Health Literacy Assessment Using Talking Touchscreen Technology;” Health LiTT) was designed to address important attributes recommended by the Medical Outcomes Trust for multi-item measures of latent traits (Lohr, 2002). This manuscript reports on the psychometric properties of the English-language version of Health LiTT.

METHODS

Development of items and multimedia computer-based assessment

We previously reported on the development of the Health LiTT items and administration methods (Yost et al., 2010; Yost et al., 2009); a brief summary is provided here. We assembled an expert advisory panel to clearly define the construct we wanted to measure, considering previous definitions offered by others (Committee on Health Literacy, et al., 2004) and then tailoring it to specifically fit what could reasonably be measured using a multimedia assessment tool. Our resulting definition of health literacy is as follows: “Health Literacy is the degree to which individuals have the capacity to read and comprehend health-related print material, identify and interpret information presented in graphical format (charts, graphs, tables), and perform arithmetic operations in order to make appropriate health and care decisions” (Yost, et al., 2009) (p.298). This definition encompasses an individual’s capacity to process and understand health-related information, and the ability to apply that information in the management of her/his own health. We propose that the capacity to obtain information, which is part of previous definitions (Committee on Health Literacy, et al., 2004), is a navigation skill that requires a different measurement tool.

We implemented a rigorous stepwise methodology for item format, topic and concept development with a goal of deriving pools of three different types of items commonly used to measure literacy: 1) prose, 2) document, and 3) quantitative (Yost, et al., 2009). A modified native cloze technique was used for the prose items, which consist of a brief text passage of health-related information followed by a single-sentence comprehension item with a missing word. A respondent must choose the correct missing word from four multiple-choice options. Document items are administered with a related image (e.g., table, graph, prescription label). The item asks a question about information that can be located in the image. A respondent must choose the correct response from four multiple-choice options. Quantitative items are often coupled with an image, but can also be in text format only. All quantitative items present the respondent with a question that requires some type of arithmetic computation to determine the correct answer, which is chosen from four multiple-choice options. Items were developed to cover a range of reading levels, primarily targeted to the low to middle range. In order to inform item difficulty level and range, we used a combination of the Flesch-Kincaid index (Kincaid, Fishburne, Rogers, & Chissom, 1975), the Lexile Framework® for Reading (Stenner & Wright, 2004), qualitative assessments of item difficulty levels by the advisory panel, and pilot testing data. We administered a final set of 90 items for IRT calibration testing.
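For readers unfamiliar with the Flesch-Kincaid index, its grade-level version is a simple published formula: 0.39 × (words per sentence) + 11.8 × (syllables per word) − 15.59. The Python sketch below computes it. The vowel-group syllable counter and the regular-expression tokenization are our own simplifying assumptions (published readability tools use dictionaries and their own rules, so their scores will differ somewhat), and the Lexile Framework, which is proprietary, is not reproduced.

```python
import re

def count_syllables(word):
    """Crude heuristic: count vowel groups, dropping a silent final 'e'."""
    word = word.lower()
    n = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and n > 1:
        n -= 1
    return max(n, 1)

def flesch_kincaid_grade(text):
    """Flesch-Kincaid grade level:
    0.39 * words/sentences + 11.8 * syllables/words - 15.59."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text needs at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return (0.39 * len(words) / len(sentences)
            + 11.8 * syllables / len(words)
            - 15.59)

print(round(flesch_kincaid_grade("Take one pill each day. Drink water with it."), 2))  # -0.72
```

Formula scores reflect only sentence and word length, so they say nothing about the graph-reading or arithmetic demands of document and quantitative items.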

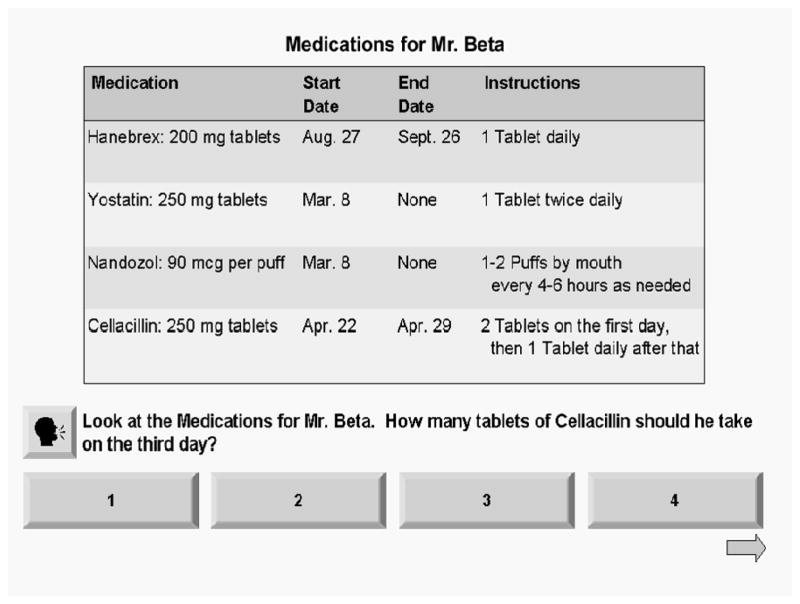

We adapted our multimedia “Talking Touchscreen” to enable respondents to self-administer health literacy items (Hahn et al., 2004; Hahn et al., 2003; Yost, et al., 2010; Yost, et al., 2009). Screens and audio files were created to mimic the current combination of self- and interviewer-administration methods used for the Test of Functional Health Literacy in Adults (Parker, Baker, Williams, & Nurss, 1995). For example, respondents read the Health LiTT prose passages on their own. For document and quantitative items, the image or text appears on the screen along with a question and a set of response buttons. The respondent touches the screen if s/he would like to hear the question read out loud (see sample document image in Figure 1).

Figure 1.

Sample Health LiTT Item (Document)

Participant recruitment

Participants were recruited from two urban and two suburban Chicago metropolitan area primary care clinics that provide care to underserved patients, many of whom do not have health insurance. Two recruitment methods were used at all four clinics: flyers posted near the reception desk and direct invitation by a research assistant (RA) in the waiting area. Eligibility criteria were broad to maximize generalizability: age 21 or older, English-speaking, and sufficient vision, hearing, cognitive function and manual dexterity as judged by the RA to interact with the touchscreen laptop. Each RA completed comprehensive training based on our standard operating procedures developed from 20 years of patient-oriented research with diverse populations. All participants provided informed consent in accordance with institutional review board requirements, and received $20 for completing the assessment.

Measures and assessment procedures

Study activities occurred in a private room at each clinic, whenever possible. The RA used interviewer-administered questionnaires to obtain standard sociodemographic and clinical information as well as participants’ self-assessment of their reading abilities. The RA introduced each participant to the touchscreen tablet laptop and the stylus, which is approximately the size of a pen, and provided headphones. The participant answered two practice questions while the RA observed. If the participant had no questions and did not need further assistance, the RA left the participant to self-administer the assessment in private, but remained nearby. Each participant completed one of six pre-constructed subsets of 30 Health LiTT items (Yost, et al., 2010; Yost, et al., 2009). They also completed a 10-item health status instrument (Hays, Bjorner, Revicki, Spritzer, & Cella, 2009). No time limit was enforced for completion of the assessment, and the program tracked the time each respondent spent on each item. The RA conducted a debriefing interview asking participants to evaluate their experience with the touchscreen, and to provide information about their current use of, and familiarity with, other computer and touchscreen devices.

Psychometric and statistical analyses

The Health LiTT items use a multiple-choice format with one correct answer. To meet minimum sample size requirements for the analytic models, we administered each item to approximately 200 patients. To reduce respondent burden, we developed a sparse matrix sampling design to create six overlapping subsets of Health LiTT items. Each subset included 12–13 prose, 8–10 document and 8–10 quantitative items. Each pair of adjacent subsets shared 15 items. The six subsets were administered sequentially (i.e., in order of patient enrollment) to obtain equal numbers of completions for each subset.
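The sparse matrix design can be illustrated with a small Python sketch. It assumes, for illustration only, that the 90 items are divided into six blocks of 15 and that subset k consists of blocks k and k+1 (wrapping from the sixth block back to the first), which yields six 30-item subsets in which adjacent subsets share 15 items. The actual Health LiTT subsets also balanced item types (12–13 prose, 8–10 document, 8–10 quantitative), which this sketch does not attempt. Patients are assigned in enrollment order, cycling through the subsets.

```python
from collections import Counter

N_ITEMS, N_SUBSETS, BLOCK_SIZE = 90, 6, 15

def build_subsets(n_items=N_ITEMS, n_subsets=N_SUBSETS, block_size=BLOCK_SIZE):
    """Hypothetical circular design: subset k = block k + block (k + 1) mod n."""
    if n_items != n_subsets * block_size:
        raise ValueError("items must split evenly into blocks")
    blocks = [list(range(b * block_size, (b + 1) * block_size))
              for b in range(n_subsets)]
    return [blocks[k] + blocks[(k + 1) % n_subsets] for k in range(n_subsets)]

def assign_in_enrollment_order(n_patients, n_subsets=N_SUBSETS):
    """Patient i (0-based enrollment order) receives subset i mod n_subsets."""
    return [i % n_subsets for i in range(n_patients)]

subsets = build_subsets()
assignment = assign_in_enrollment_order(608)

exposure = Counter()  # number of respondents who saw each item
for s in assignment:
    exposure.update(subsets[s])

print(len(subsets[0]), len(set(subsets[0]) & set(subsets[1])))  # 30 15
print(min(exposure.values()), max(exposure.values()))           # 202 204
```

Under this layout each item appears in two of the six subsets, so with 608 completers every item is seen by about one-third of the sample (202 to 204 respondents here), consistent with the target of approximately 200 responses per item. The overlapping items are what allow all 90 items to be calibrated on one scale even though no respondent saw more than 30 of them.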

To examine the dimensionality of the new measure, we conducted confirmatory factor analysis (CFA) with one factor for each item type (prose, document, quantitative). We used Mplus to solve for CFA model parameters (Muthen & Muthen, 1998–2010). We then evaluated a two-parameter logistic (2-PL) IRT model for dichotomous data. All unidimensional IRT models require that the items in the scale measure only one trait. The 2-PL model includes two parameters for each item: a difficulty (location) parameter and a discrimination (slope) parameter, which estimates how well the item differentiates among respondents with different levels of the trait (Panter, Swygert, Dahlstrom, & Tanaka, 1997; Reise & Henson, 2003; Steinberg & Thissen, 1995). The IRT calibrations were obtained using PARSCALE version 4.1 (Muraki & Bock, 2003). Poorly performing items were identified by an adjusted point-biserial correlation less than 0.20 (the correlation between the item and the total score computed with that item excluded) or by poor model fit (chi-square statistic, p<0.05). The model was then recalibrated after these items were removed.
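The 2-PL model can be written as P(correct | θ) = 1 / (1 + exp(−a(θ − b))), where θ is the respondent's trait level, b is the item difficulty and a is the discrimination. The Python sketch below evaluates this item characteristic curve and the corresponding item information, a²P(1 − P). Some calibration programs multiply the exponent by a scaling constant D ≈ 1.7; the sketch omits it, and the item parameters are hypothetical values, not Health LiTT calibrations.

```python
import math

def p_correct(theta, a, b):
    """2-PL item characteristic curve; a = discrimination, b = difficulty.
    No scaling constant D is applied (an assumption of this sketch)."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))

def item_information(theta, a, b):
    """Fisher information for a 2-PL item: a^2 * P * (1 - P)."""
    p = p_correct(theta, a, b)
    return a * a * p * (1.0 - p)

# Hypothetical parameters, not Health LiTT calibrations.
items = {"easy, sharp": (2.0, -1.0), "hard, flat": (0.7, 1.5)}
for name, (a, b) in items.items():
    probs = [round(p_correct(t, a, b), 2) for t in (-2, -1, 0, 1, 2)]
    print(name, probs, "max information:", round(a * a / 4, 3))
```

Information peaks at θ = b with height a²/4, so highly discriminating items contribute the most precision near their own difficulty level.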

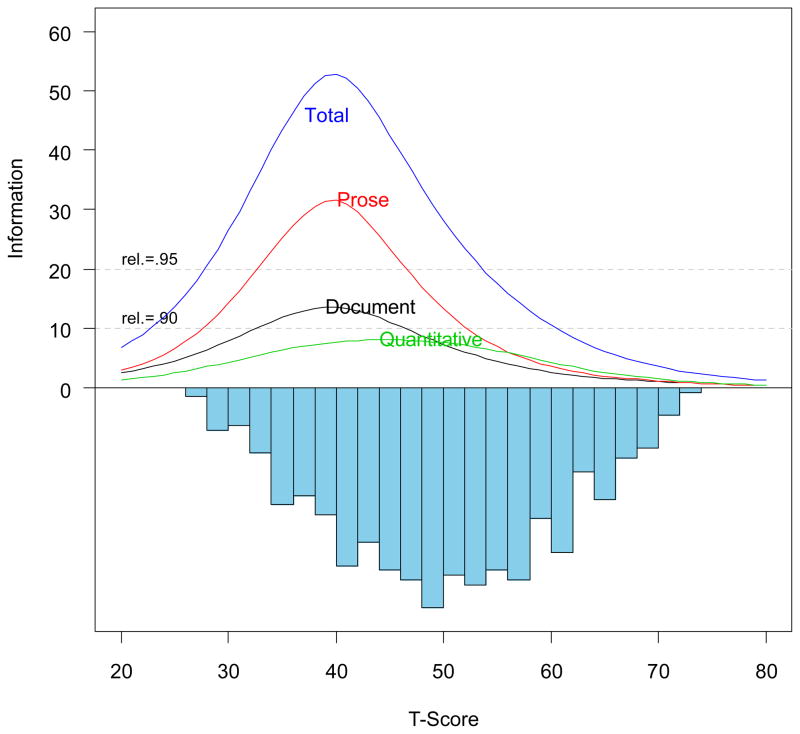

The Health LiTT patient scores were transformed to a T-score scale, with a mean of 50 and a standard deviation of 10 in this sample. IRT calibration results were summarized graphically using the test information curve, which is a measure of precision. Classical reliability estimates can be approximated from the information curve: the standard error of measurement at a given score level is the inverse square root of the information at that level, so on a standardized trait scale reliability is approximately 1 minus the inverse of the information. We also estimated classical reliability (Cronbach’s alpha) for each subset of 30 items, and applied the Spearman-Brown prophecy formula to predict alpha for 15-item subsets (Stanley, 1971; Wainer, 2000).
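The two rescalings in this paragraph are simple formulas. The sketch below shows a linear T-score transformation (mean 50, SD 10 in the sample; using the sample standard deviation is our assumption) and the Spearman-Brown prophecy formula, ρ* = kρ / (1 + (k − 1)ρ), where k is the ratio of new to old test length (here 15/30 = 0.5).

```python
import statistics

def to_t_scores(thetas):
    """Linear rescaling so that this sample has mean 50 and SD 10."""
    m = statistics.mean(thetas)
    s = statistics.stdev(thetas)  # sample SD (an assumption)
    return [50 + 10 * (t - m) / s for t in thetas]

def spearman_brown(reliability, length_ratio):
    """Predicted reliability after changing test length by length_ratio."""
    k = length_ratio
    return k * reliability / (1 + (k - 1) * reliability)

print(to_t_scores([-1.0, 0.0, 1.0]))  # [40.0, 50.0, 60.0]
for alpha_30 in (0.83, 0.91):
    print(alpha_30, round(spearman_brown(alpha_30, 15 / 30), 3))  # 0.709, then 0.835
```

Applied to the rounded endpoints of the reported 30-item alphas (0.83 and 0.91), the formula gives about 0.71 and 0.83; small differences from the reported 0.72–0.84 are expected because the inputs here are rounded.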

Categories for sociodemographic and clinical variables were collapsed for purposes of analysis, and mean Health LiTT scores were compared between categories using analysis of variance techniques.
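As an illustration of the analysis of variance used for Table 1, the sketch below computes a one-way ANOVA F statistic directly from group sizes, means and standard deviations. Working from the rounded summary values in Table 1 is our simplification; the published p-values were computed from individual-level data, so results from this sketch will not match them exactly. A p-value can then be obtained from the F distribution with (k − 1, N − k) degrees of freedom, for example with SciPy's `scipy.stats.f.sf`.

```python
def anova_from_summary(groups):
    """One-way ANOVA F from per-group (n, mean, sd) summaries.
    Returns (F, df_between, df_within)."""
    n_total = sum(n for n, _, _ in groups)
    k = len(groups)
    grand_mean = sum(n * m for n, m, _ in groups) / n_total
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m, _ in groups)
    ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Rounded Table 1 summaries for sex: (n, mean, SD)
sex = [(311, 50.4, 9.9), (297, 49.5, 10.1)]
f_stat, df_b, df_w = anova_from_summary(sex)
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")  # F(1, 606) = 1.23
```

For the two sex groups this gives a small F on (1, 606) degrees of freedom, consistent with the non-significant difference reported in Table 1.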

RESULTS

We enrolled a diverse group of 619 primary care patients. A few patients did not complete the Health LiTT assessment (n=11) and we excluded them from our analyses. Approximately one-half of the study participants were female; age ranged from 21 to 77 years; two-thirds were non-Hispanic Black; and the majority had at least a high school education (Table 1). Nearly half had no health insurance and most reported very low income. The average length of time to complete 30 Health LiTT items was 18 minutes (Yost, et al., 2010).

Table 1.

Sociodemographic Characteristics and Associations with Health LiTT Scores (n=608ᵃ)

| Sociodemographic characteristic | n (%) | Mean (SD) Health LiTT score | p-valueᵇ |
|---|---|---|---|
| Sex | | | 0.256 |
| Female | 311 (51) | 50.4 (9.9) | |
| Male | 297 (49) | 49.5 (10.1) | |
| Age, years | | | 0.021 |
| 21–39 | 169 (28) | 51.2 (10.6) | |
| 40–49 | 162 (27) | 48.1 (10.6) | |
| 50–59 | 212 (35) | 50.1 (9.2) | |
| 60–77 | 62 (10) | 51.6 (8.6) | |
| Missing | 3 (<1) | --- | |
| Race/ethnicity | | | <0.001 |
| Black, non-Hispanic | 410 (67) | 47.6 (9.8) | |
| White, non-Hispanic | 95 (16) | 56.7 (8.9) | |
| Other, non-Hispanic | 27 (4) | 52.4 (7.9) | |
| Hispanic, any race | 76 (13) | 53.4 (7.7) | |
| Highest education | | | <0.001 |
| Grade school | 13 (2) | 45.1 (7.5) | |
| Some high school | 94 (15) | 47.4 (8.1) | |
| High school graduate/GED | 236 (39) | 47.7 (9.7) | |
| Some college | 187 (31) | 51.3 (9.8) | |
| College grad./advanced degree | 78 (13) | 57.7 (9.4) | |
| Health insurance | | | 0.907 |
| None | 297 (49) | 50.2 (10.0) | |
| Any kind | 285 (47) | 50.1 (10.0) | |
| Don’t know/missing | 26 (4) | --- | |
| Household income | | | <0.001 |
| < $10,000 | 245 (40) | 48.8 (9.6) | |
| $10,000 – $19,999 | 162 (27) | 48.9 (9.9) | |
| $20,000 – $34,999 | 95 (16) | 53.6 (9.4) | |
| >= $35,000 | 44 (7) | 53.8 (11.9) | |
| Missing | 35 (6) | --- | |
| Income adequacyᶜ | | | 0.685 |
| Not at all | 412 (68) | 49.9 (9.7) | |
| Somewhat | 134 (22) | 50.6 (10.9) | |
| Definitely | 36 (6) | 50.7 (10.7) | |
| Don’t know/missing | 26 (4) | --- | |
| Prior computer use | | | <0.001 |
| Never | 87 (14) | 45.8 (9.2) | |
| Not in past 12 months | 55 (9) | 45.9 (7.5) | |
| In past 12 months | 454 (75) | 51.2 (10.1) | |
| Missing | 12 (2) | --- | |
| Ever have trouble reading printed information from doctors or nurses? | | | 0.171 |
| None of the time | 422 (69) | 50.5 (10.2) | |
| A little of the time | 59 (10) | 50.3 (8.5) | |
| Some of the time | 80 (13) | 48.7 (9.7) | |
| Most or all of the time | 24 (4) | 46.7 (9.9) | |
| Missing | 23 (4) | --- | |
| Ever have trouble reading everyday things like the newspaper? | | | 0.442 |
| None of the time | 503 (83) | 50.4 (10.2) | |
| A little of the time | 34 (6) | 48.7 (7.9) | |
| Some of the time | 34 (6) | 48.1 (8.0) | |
| Most or all of the time | 14 (2) | 48.5 (10.9) | |
| Missing | 23 (4) | --- | |

SD: standard deviation.

ᵃ We enrolled 619 patients, but only 608 completed the Health LiTT assessment (see text).

ᵇ Overall p-value for the difference between mean Health LiTT scores (analysis of variance; see text).

ᶜ “In general, do you feel that your income is adequate to meet your needs?”

When examining CFA results, a three-factor model had slightly better fit than a one-factor model. However, correlations among the three factors (prose, document and quantitative items) were 0.90–0.95, providing good evidence for unidimensionality, i.e., the presence of a strong dominant dimension. The 2-PL IRT model also fit the data well. Table 2 provides descriptive statistics for the proportion of respondents answering each item correctly, and for the adjusted point-biserial correlations, separately for the three item types. In the initial analysis of all 90 items, the proportion answering an item correctly ranged from 15% to 95% (Table 2a). The average proportion correct was lower for quantitative items (55%) than for prose (77%) and document (70%) items. Adjusted point-biserial correlations ranged from 0.06 to 0.62; for dichotomous data, the maximum possible correlation is approximately 0.80 (Nunnally & Bernstein, 1994). Only six items had a correlation below our criterion value of 0.20, and two additional items had poor model fit (p<0.05). We dropped these eight items and recalibrated the 2-PL IRT model.
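The adjusted (corrected) point-biserial correlation used as the screening criterion is the Pearson correlation between a 0/1 item score and the rest score (total score minus that item). The Python sketch below computes it for a small, made-up response matrix and flags items below 0.20. It assumes every respondent answered every item; with the sparse matrix design, the calculation would instead be done within each subset or restricted to respondents who saw the item.

```python
import statistics

def pearson(x, y):
    """Pearson correlation; fails if either variable is constant."""
    mx, my = statistics.mean(x), statistics.mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5

def adjusted_point_biserial(responses):
    """Correlation of each 0/1 item with the rest score (total minus that item)."""
    totals = [sum(row) for row in responses]
    result = []
    for j in range(len(responses[0])):
        item = [row[j] for row in responses]
        rest = [t - x for t, x in zip(totals, item)]
        result.append(pearson(item, rest))
    return result

# Made-up data: 6 respondents x 4 items (1 = correct).
responses = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 1],
    [0, 0, 0, 1],
    [0, 0, 0, 0],
]
r_pb = adjusted_point_biserial(responses)
flagged = [j for j, r in enumerate(r_pb) if r < 0.20]
print([round(r, 3) for r in r_pb], flagged)  # [0.625, 0.522, 0.625, -0.132] [3]
```

In this toy example the fourth item is answered correctly by both high and low scorers, so its corrected correlation is negative and it is flagged. Excluding the item from the total avoids the inflation that occurs when an item is correlated with a total that includes itself.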

Table 2.

Proportion of Items Correct and Adjusted Point-biserial Correlations, by Health LiTT Item Type

| Item type | No. items | Proportion correct, mean | Proportion correct, SD | Proportion correct, min | Proportion correct, max | Adjusted point-biserial, mean | Adjusted point-biserial, SD | Adjusted point-biserial, min | Adjusted point-biserial, max |
|---|---|---|---|---|---|---|---|---|---|
| a. Initial set (90 items) | | | | | | | | | |
| Prose | 37 | 0.773 | 0.156 | 0.288 | 0.950 | 0.435 | 0.094 | 0.289 | 0.621 |
| Document | 27 | 0.703 | 0.136 | 0.365 | 0.886 | 0.364 | 0.110 | 0.160 | 0.537 |
| Quantitative | 26 | 0.553 | 0.202 | 0.150 | 0.887 | 0.351 | 0.147 | 0.061 | 0.561 |
| b. Final set (82 items) | | | | | | | | | |
| Prose | 36 | 0.735 | 0.139 | 0.370 | 0.950 | 0.443 | 0.097 | 0.263 | 0.624 |
| Document | 26 | 0.716 | 0.120 | 0.404 | 0.886 | 0.374 | 0.107 | 0.208 | 0.540 |
| Quantitative | 20 | 0.608 | 0.176 | 0.297 | 0.887 | 0.385 | 0.121 | 0.091 | 0.559 |

The model fit the data well and provided good information (low standard error, high reliability), especially in the low to middle range of health literacy (see top part of Figure 2). Psychometric standards require a reliability of 0.90 or higher for individual measurement (Nunnally & Bernstein, 1994). The horizontal reference lines in Figure 2 indicate that the total Health LiTT score meets this standard across most of the score range; scores are therefore precise enough to measure individual respondents, not just to summarize group scores. Figure 2 also shows information results for measures created using only specific item types; precision is naturally lower with fewer items. Classical reliability (Cronbach’s alpha) was 0.83–0.91 for each 30-item subset, and was estimated to be 0.72–0.84 for 15-item subsets.

Figure 2.

Item Information and Reliability, and Distribution of Person Scores Estimated by the Final 2-PL Calibration Model

Descriptive statistics for the final set of 82 items are shown in Table 2b. The proportion of respondents answering each item correctly ranged from 30% to 95%, and the mean proportion correct remained lower for quantitative items (61%) than for prose (74%) and document (72%) items. Although two of these final items had low adjusted point-biserial correlations, we did not conduct additional iterative analyses. Health LiTT scores (theta) followed a normal (Gaussian) distribution (see bottom part of Figure 2).

The two right columns in Table 1 show the unadjusted mean Health LiTT scores for each category of participants’ sociodemographic characteristics, and the overall p-values. Many of the differences display expected trends, although not all achieved statistical significance. For example, lower Health LiTT scores were associated with lower education and income levels and with no prior computer use; scores were also lower among participants who reported more frequent trouble reading, although these differences were not statistically significant. Health LiTT scores also differed across age and race/ethnicity groups.

CONCLUSIONS

This new health literacy measure (Health LiTT) meets or exceeds psychometric standards. We demonstrated that three item types work well together to reliably measure the single dimension of health literacy. Among 90 items tested in this sample of 608 primary care patients, only eight (9%) performed poorly and were removed. The remaining 82 items yielded a reliable estimate of health literacy, especially in the low to middle range. Health LiTT scores were normally distributed, and initial evidence supported construct validity.

We found strong evidence for our initial assumption that the Health LiTT instrument measures a unidimensional construct. The 2003 National Assessment of Adult Literacy (NAAL) also created an overall health literacy scale combining three item types (Kutner, Greenberg, Jin, & Paulsen, 2006). This suggests that it is appropriate and useful to create one overall composite score, and that separate scores for our three Health LiTT item types (prose, document, quantitative) are not needed to estimate an individual’s health literacy. For population-based studies for which the goal is to understand the level of proficiency for successfully completing different, common health-related reading tasks, it may still be helpful to create and report performance separately for prose, document and quantitative items. However, research studies seeking to measure health literacy as an individual characteristic (e.g., as a predictor of outcomes) can use the Health LiTT items to generate a single health literacy score.

We calibrated the Health LiTT items using an IRT model, which offers unique advantages over classical test theory approaches: near-equal interval measurement, representation of respondents and items on the same scale, and independence of person estimates from the particular set of items used for estimation (Hambleton & Swaminathan, 1985). The use of IRT for developing item banks enables radical changes in health assessment. For example, classical test theory requires that instruments be administered in their entirety in order to obtain a valid score. In contrast, items in a typical IRT-calibrated item bank can be used to construct multiple instruments, using different items, of varying lengths, all of which are on the same scale and represent the same trait (Hambleton & Swaminathan, 1985). When drawn from the same item bank, the scores produced by any set of bank items are expressed on the same metric.
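The claim that any set of bank items yields scores on the same metric can be illustrated with expected a posteriori (EAP) scoring, one common IRT scoring method (the text does not specify which scoring method Health LiTT uses). In the sketch below, θ is estimated from only the items a person actually answered, using a standard normal prior and numerical quadrature; the item bank and response patterns are hypothetical.

```python
import math

def p_correct(theta, a, b):
    """2-PL probability of a correct response."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))

def eap_theta(responses, bank, lo=-4.0, hi=4.0, n_points=81):
    """EAP estimate of theta from whichever bank items were answered.
    responses: {item_id: 0 or 1}; bank: {item_id: (a, b)}."""
    step = (hi - lo) / (n_points - 1)
    num = den = 0.0
    for k in range(n_points):
        theta = lo + k * step
        weight = math.exp(-0.5 * theta * theta)  # unnormalized N(0, 1) prior
        for item_id, x in responses.items():
            p = p_correct(theta, *bank[item_id])
            weight *= p if x == 1 else 1.0 - p   # 2-PL likelihood
        num += theta * weight
        den += weight
    return num / den

# Hypothetical calibrated bank: item_id -> (discrimination a, difficulty b)
bank = {
    "prose_1": (1.2, -1.5), "prose_2": (1.8, -0.5),
    "doc_1": (1.0, -1.0), "doc_2": (1.4, 0.0),
    "quant_1": (1.5, 0.5), "quant_2": (0.9, 1.5),
}
form_a = {"prose_1": 1, "doc_2": 1, "quant_2": 0}  # one short form
form_b = {"prose_2": 1, "doc_1": 1, "quant_1": 0}  # a different short form
print(round(eap_theta(form_a, bank), 2), round(eap_theta(form_b, bank), 2))
```

The two short forms share no items, yet both estimates are on the same θ scale because the item parameters come from one calibration; the forms differ in the precision of the estimate, not in its metric.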

IRT-calibrated item banks also enable computerized adaptive testing (CAT), in which each individual receives a short, tailored test with scores comparable to longer, fixed-length assessments (Wainer, 2000). In a CAT, item selection is guided by an individual’s responses to previously administered questions. This has the potential to yield more precise estimates than static measures of the same length. We will develop a CAT for Health LiTT.
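A minimal CAT loop, under stated assumptions, works as follows: start at θ = 0, give the unused item with maximum information at the current estimate, re-estimate θ by EAP, and stop when a length limit is reached or the posterior standard deviation falls to 0.316 (roughly reliability 0.90 on a unit-variance θ scale). Maximum-information selection, EAP scoring and these stopping rules are common choices but are our assumptions, not a description of the planned Health LiTT CAT; the 82 hypothetical item parameters and the simulated respondent are randomly generated.

```python
import math
import random

def p_correct(theta, a, b):
    """2-PL probability of a correct response."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))

def info(theta, a, b):
    """2-PL item information at theta."""
    p = p_correct(theta, a, b)
    return a * a * p * (1.0 - p)

def posterior(responses, bank, lo=-4.0, hi=4.0, n=81):
    """EAP estimate and posterior SD of theta (N(0,1) prior, grid quadrature)."""
    step = (hi - lo) / (n - 1)
    grid = [lo + k * step for k in range(n)]
    weights = []
    for t in grid:
        w = math.exp(-0.5 * t * t)
        for item_id, x in responses.items():
            p = p_correct(t, *bank[item_id])
            w *= p if x == 1 else 1.0 - p
        weights.append(w)
    total = sum(weights)
    mean = sum(t * w for t, w in zip(grid, weights)) / total
    var = sum((t - mean) ** 2 * w for t, w in zip(grid, weights)) / total
    return mean, math.sqrt(var)

def run_cat(bank, answer, max_items=15, target_se=0.316):
    """Administer items adaptively; answer(item_id) must return 0 or 1."""
    responses = {}
    theta, se = 0.0, 1.0  # start at the prior mean
    while len(responses) < max_items and se > target_se:
        remaining = [i for i in bank if i not in responses]
        if not remaining:
            break
        # Maximum-information item selection at the current estimate.
        next_item = max(remaining, key=lambda i: info(theta, *bank[i]))
        responses[next_item] = answer(next_item)
        theta, se = posterior(responses, bank)
    return theta, se, list(responses)

# Hypothetical bank and simulated respondent (not Health LiTT data).
rng = random.Random(2011)
bank = {f"item{j:02d}": (rng.uniform(0.8, 2.0), rng.uniform(-2.5, 1.5))
        for j in range(82)}
true_theta = -1.0

def simulated_answer(item_id):
    return int(rng.random() < p_correct(true_theta, *bank[item_id]))

theta_hat, se, used = run_cat(bank, simulated_answer)
print(f"estimate={theta_hat:.2f}  SE={se:.3f}  items used={len(used)}")
```

Each respondent sees a different sequence of items, but because every item comes from the same calibrated bank the resulting estimates remain directly comparable.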

We are incorporating Health LiTT into Assessment Center℠, a free, online research management tool (http://www.assessmentcenter.net/). Assessment Center℠ enables customization of items and instruments, real-time scoring of CATs, secure storage of protected health information, automated accrual reports, real-time data export, and many other features. This will enable administration of Health LiTT on the same touchscreen used for other patient-reported outcomes, thus providing a feasible way to assess patients’ literacy in clinical practice and research. Computer administration enables real-time scoring and the presentation of graphic and/or written reports to the clinical researcher, physician and/or patient, so that the information can be used immediately to inform research and/or clinical decision-making (Cella & Chang, 2000; Gershon et al., 2003; Gershon & Bergstrom, 2006; Hahn, Cella, Bode, Gershon, & Lai, 2006; Wolfe & Pincus, 1999).

It is likely that our new Health LiTT measurement system will avoid some of the potential stigma associated with low literacy because it is easily self-administered, even by respondents with poor reading skills and those who have never used a computer (Yost, et al., 2010; Yost, et al., 2009). This approach differs from literacy screening that relies on interviewer-administration. There is an ongoing dialogue about conducting health literacy screening in clinical practice (Garcia, Hahn, & Jacobs, 2010; Hahn, Garcia, & Cella, 2010; Paasche-Orlow & Wolf, 2008). For those who may want to explore clinical screening, the Health LiTT measurement system may provide a useful strategy.

This study and future work will combine to provide evidence of how our Health LiTT measurement system is addressing the eight attributes recommended by the Medical Outcomes Trust for multi-item measures of latent traits: 1) a conceptual and measurement model, 2) reliability, 3) validity, 4) responsiveness, 5) interpretability, 6) low respondent and administrative burden, 7) alternative forms, and 8) cultural and language adaptations (Lohr, 2002). We developed a conceptual model and rigorous methodology for item development (Yost, et al., 2009). The health literacy items have good content validity, covering a variety of topics that should be relevant to primary care patients and their health care providers (Yost, et al., 2009). The items meet high standards for measurement reliability, which means that the Health LiTT system can provide scores precise enough for an individual respondent. This provides an advantage over existing health literacy instruments, which can only be used to summarize scores for groups of respondents. We presented initial evidence to support the construct validity of health literacy as measured by the Health LiTT scores. Respondent and administrative burden have been minimized by implementing novel multimedia assessment for self-administration of items (Yost, et al., 2010). Because the item bank contains 82 calibrated items, customized alternative forms can easily be created.

Future work is being planned to address the remaining attributes outlined above. Items and images have been adapted for Spanish by a certified team of language coordinators and translators with knowledge of medical terminology, using a validated multi-step translation methodology (Eremenco, Cella, & Arnold, 2005; Wild et al., 2005). The Spanish version of Health LiTT has been adapted for self-administration with our “Talking Touchscreen” (“Pantalla Parlanchina”) and is currently being tested (Hahn, et al., 2003; Yost, et al., 2009). We are in the planning stages of designing a longitudinal study to measure responsiveness and a study with a larger and more representative sample to improve the stability of the item parameters, and are developing a standard setting initiative to create meaningful and distinct categories of health literacy scores. Approaches such as those developed by Rudd and colleagues (Rudd, Kirsch, & Yamamoto, 2004) will be especially useful.

There are some limitations to this work. Although we believe this sample is fairly typical of patients who receive care in primary care clinics for underserved patients, it may not be representative of all adults who might take this test in the future. The current version of Health LiTT will not be able to distinguish between those with high and very high health literacy. By design, reliability of the test is better in the low to middle range and poorer in the upper range of measurement, because we were primarily interested in more fully capturing the low to middle range of health literacy. We hope to learn more about the level at which low health literacy begins to adversely affect health and health care use. While our item development process included an expert advisory panel to define the construct and to provide qualitative assessments of item difficulty (Yost, et al., 2010), we have not yet conducted formal standard setting to classify and map items onto the construct and to identify measurement gaps. There are currently unequal numbers of item types (36 prose, 26 document, 20 quantitative). Because information (precision) increases with the number of items, reliability is lower for the quantitative items, which are the fewest. In addition, a 15-item short form may not meet psychometric standards for individual measurement (reliability of 0.90 or higher), although it will meet standards for group comparisons (reliability of 0.70 or higher). Reliability for a CAT should be higher.

The Health LiTT measurement system uses novel health information technology and meets high psychometric standards for measuring health literacy in individual respondents. Self-administration should avoid the potential stigma associated with low literacy. To avoid bias due to different cognitive processing speeds among patients (see Baker, Gazmararian, Sudano, & Patterson, 2000), there is no time limit on the Health LiTT assessment. This is also consistent with information exchange in real-world health care settings where patients are often given materials to take home to review, process and interpret at their own speed. Health LiTT provides a new measurement strategy to estimate the size of the population at risk from low health literacy, to identify vulnerable patients in clinical settings, and to provide reliable and valid scores for use in testing interventions.

Acknowledgments

This study was supported by grant # R01-HL081485 from the National Heart, Lung, and Blood Institute. Portions of this manuscript were presented at the Health Literacy 2nd Annual Research Conference, Bethesda MD, October 18–19, 2010. The authors thank David Cella, Richard Gershon, David Victorson and Kimberly Webster for scientific and creative contributions. We also thank all the patients who participated in this study.

Contributor Information

ELIZABETH A. HAHN, Department of Medical Social Sciences, Feinberg School of Medicine, Northwestern University, Chicago, Illinois, USA

SEUNG W. CHOI, Department of Medical Social Sciences, Feinberg School of Medicine, Northwestern University, Chicago, Illinois, USA

JAMES W. GRIFFITH, Department of Medical Social Sciences, Feinberg School of Medicine, Northwestern University, Chicago, Illinois, USA

KATHLEEN J. YOST, Department of Health Sciences Research, Mayo Clinic, Rochester, Minnesota, USA

DAVID W. BAKER, Department of Medicine, Feinberg School of Medicine, Northwestern University, Chicago, Illinois, USA

References

- Ad Hoc Committee on Health Literacy for the Council on Scientific Affairs, American Medical Association. Health literacy: Report of the Council on Scientific Affairs. JAMA. 1999;281(6):552–557.

- Baker DW, Gazmararian JA, Sudano J, Patterson M. The association between age and literacy among the elderly. Journal of Gerontology: Social Sciences. 2000;55B:S368–S374. doi: 10.1093/geronb/55.6.s368.

- Berkman ND, DeWalt DA, Pignone MP, Sheridan SL, Lohr KN, Lux L, et al. Literacy and health outcomes. Evidence report/technology assessment no. 87 (AHRQ Publication No. 04-E007-2). Rockville, MD: Agency for Healthcare Research and Quality; 2004.

- Cella D, Chang CH. A discussion of Item Response Theory (IRT) and its applications in health status assessment. Medical Care. 2000;38(9 Suppl):II66–II72. doi: 10.1097/00005650-200009002-00010.

- Choppin B. An item bank using sample-free calibration. Nature. 1968;219(5156):870–872. doi: 10.1038/219870a0.

- Choppin B. Educational measurement and the item bank model. In: Lacey C, Lawton D, editors. Issues in Evaluation and Accountability. London, England: Methuen; 1981. pp. 204–221.

- Committee on Health Literacy; Nielsen-Bohlman L, Panzer AM, Kindig DA, editors. Health Literacy: A Prescription to End Confusion. Washington, DC: The National Academies Press; 2004.

- Eremenco SL, Cella D, Arnold BJ. A comprehensive method for the translation and cross-cultural validation of health status questionnaires. Evaluation and the Health Professions. 2005;28(2):212–232. doi: 10.1177/0163278705275342.

- Garcia SF, Hahn E, Jacobs E. Addressing low literacy and health literacy in clinical oncology practice. Journal of Supportive Oncology. 2010;8(2):64–69.

- Gershon R, Cella D, Dineen K, Rosenbloom S, Peterman A, Lai JS. Item response theory and health-related quality of life in cancer. Expert Review of Pharmacoeconomics & Outcomes Research. 2003;3(6):783–791. doi: 10.1586/14737167.3.6.783.

- Gershon RC, Bergstrom BA. Computerized adaptive testing. In: Wood TM, Zhu W, editors. Measurement Theory and Practice in Kinesiology. Champaign, IL: Human Kinetics Europe Ltd; 2006. pp. 127–143.

- Hahn E, Cella D, Dobrez D, Shiomoto G, Marcus E, Taylor SG, et al. The Talking Touchscreen: a new approach to outcomes assessment in low literacy. Psycho-Oncology. 2004;13(2):86–95. doi: 10.1002/pon.719.

- Hahn EA, Cella D, Bode RK, Gershon R, Lai JS. Item Banks and Their Potential Applications to Health Status Assessment in Diverse Populations. Medical Care. 2006;44(11 Suppl 3):S189–S197. doi: 10.1097/01.mlr.0000245145.21869.5b.

- Hahn EA, Cella D, Dobrez DG, Shiomoto G, Taylor SG, Galvez AG, et al. Quality of life assessment for low literacy Latinos: A new multimedia program for self-administration. Journal of Oncology Management. 2003;12(5):9–12.

- Hahn EA, Garcia SF, Cella D. Patient attitudes and preferences regarding literacy screening in ambulatory cancer care clinics. Patient Related Outcome Measures. 2010;1:19–27. doi: 10.2147/prom.s9361.

- Hambleton RK, Swaminathan H. Item response theory: principles and applications. Boston, MA: Kluwer-Nijhoff; 1985.

- Hasnain-Wynia R, Wolf MS. Promoting Health Care Equity: Is Health Literacy a Missing Link? Health Services Research. 2010;45(4):897–903. doi: 10.1111/j.1475-6773.2010.01134.x.

- Hays RD, Bjorner J, Revicki DA, Spritzer K, Cella D. Development of physical and mental health summary scores from the Patient Reported Outcomes Measurement Information System (PROMIS) global items. Quality of Life Research. 2009;18(7):873–880. doi: 10.1007/s11136-009-9496-9.

- Institute of Medicine Forum on the Science of Health Care Quality Improvement Implementation; Chao S, Anderson K, Hernandez LM. Toward health equity and patient-centeredness: integrating health literacy, disparities reduction, and quality improvement: workshop summary. Washington, DC: National Academies Press; 2009.

- Jordan JE, Osborne RH, Buchbinder R. Critical appraisal of health literacy indices revealed variable underlying constructs, narrow content and psychometric weaknesses. Journal of Clinical Epidemiology. 2010;64(4):366–379. doi: 10.1016/j.jclinepi.2010.04.005.

- Kincaid JP, Fishburne RP Jr, Rogers RL, Chissom BS. Derivation of new readability formulas (Automated Readability Index, Fog Count and Flesch Reading Ease Formula) for Navy enlisted personnel (Research Branch Report 8–75). Millington, TN: Naval Technical Training, U.S. Naval Air Station, Memphis, TN; 1975.

- Kutner M, Greenberg E, Jin Y, Paulsen C. The Health Literacy of America’s Adults: Results from the 2003 National Assessment of Adult Literacy (NCES 2006–483). Washington, DC: National Center for Education Statistics, U.S. Department of Education; 2006.

- Lohr KN. Assessing health status and quality-of-life instruments: Attributes and review criteria. Quality of Life Research. 2002;11(3):193–205. doi: 10.1023/a:1015291021312.

- Muraki E, Bock RD. PARSCALE. Lincolnwood, IL: Scientific Software International, Inc; 2003.

- Muthén LK, Muthén BO. Mplus User’s Guide. 6th ed. Los Angeles, CA: Muthén & Muthén; 1998–2010.

- Nunnally JC, Bernstein IH. Psychometric Theory. New York: McGraw-Hill, Inc; 1994.

- Paasche-Orlow M, Wolf M. Evidence Does Not Support Clinical Screening of Literacy. Journal of General Internal Medicine. 2008;23(1):100–102. doi: 10.1007/s11606-007-0447-2.

- Paasche-Orlow MK, Wolf MS. Promoting health literacy research to reduce health disparities. Journal of Health Communication. 2010;15(Suppl 2):34–41. doi: 10.1080/10810730.2010.499994.

- Panter A, Swygert K, Dahlstrom W, Tanaka J. Factor analytic approaches to personality item-level data. Journal of Personality Assessment. 1997;68(3):561–589. doi: 10.1207/s15327752jpa6803_6.

- Parker RM, Baker DW, Williams MV, Nurss JR. The test of functional health literacy in adults: A new instrument for measuring patients’ literacy skills. Journal of General Internal Medicine. 1995;10(10):537–541. doi: 10.1007/BF02640361.

- Reise S, Henson J. A discussion of modern versus traditional psychometrics as applied to personality assessment scales. Journal of Personality Assessment. 2003;81(2):93–103. doi: 10.1207/S15327752JPA8102_01.

- Rudd R, Kirsch I, Yamamoto K. Literacy and health in America. 2004. Available from: http://www.ets.org/Media/Research/pdf/PICHEATH.pdf.

- Stanley J. Reliability. In: Thorndike RL, Angoff WH, Lindquist EF, editors. Educational Measurement. 2nd ed. Washington, DC: American Council on Education; 1971.

- Steinberg L, Thissen D. Item response theory in personality research. In: Fiske S, Shrout P, editors. Personality Research Methods. Hillsdale: Lawrence Erlbaum & Associates; 1995. pp. 161–181.

- Stenner AJ, Wright BD. Uniform Reading and Readability Measures. In: Wright BD, Stone MH, editors. Making Measures. Chicago: The Phaneron Press; 2004. pp. 79–115.

- U.S. Department of Health and Human Services Office of Disease Prevention and Health Promotion. National action plan to improve health literacy. Washington, DC: Author; 2010.

- Wainer H. Computerized Adaptive Testing: A Primer. Mahwah, NJ: Lawrence Erlbaum Associates; 2000.

- Wild D, Grove A, Martin M, Eremenco S, Ford S, Verjee-Lorenz A, et al. Principles of Good Practice for the Translation and Cultural Adaptation Process for Patient-Reported Outcomes (PRO) Measures: Report of the ISPOR Task Force for Translation and Cultural Adaptation. Value in Health. 2005;8(2):94–104. doi: 10.1111/j.1524-4733.2005.04054.x.

- Wolf MS, Feinglass J, Thompson J, Baker DW. In search of ‘low health literacy’: Threshold vs. gradient effect of literacy on health status and mortality. Social Science and Medicine. 2010;70(9):1335–1341. doi: 10.1016/j.socscimed.2009.12.013.

- Wolfe F, Pincus T. Listening to the patient: A practical guide to self-report questionnaires in clinical care. Arthritis and Rheumatism. 1999;42(9):1797–1808. doi: 10.1002/1529-0131(199909)42:9<1797::AID-ANR2>3.0.CO;2-Q.

- Yost KJ, Webster K, Baker DW, Jacobs EA, Anderson A, Hahn EA. Acceptability of the talking touchscreen for health literacy assessment. Journal of Health Communication. 2010;15(Suppl 2):80–92. doi: 10.1080/10810730.2010.500713.

- Yost KJ, Webster K, Baker DW, Choi SW, Bode RK, Hahn EA. Bilingual health literacy assessment using the Talking Touchscreen/la Pantalla Parlanchina: Development and pilot testing. Patient Education and Counseling. 2009;75(3):295–301. doi: 10.1016/j.pec.2009.02.020.