Abstract

The role of body orientation in the orienting and allocation of social attention was examined using an adapted Simon paradigm. Participants categorized the facial expression of forward facing, computer-generated human figures by pressing one of two response keys, each located left or right of the observers' body midline, while the orientation of the stimulus figure's body (trunk, arms, and legs), which was the task-irrelevant feature of interest, was manipulated (oriented toward the left or right visual hemifield) with respect to the spatial location of the required response. We found that when the orientation of the body was compatible with the required response location, responses were slower relative to when body orientation was incompatible with the response location. In line with a model put forward by Hietanen (1999), this reverse compatibility effect suggests that body orientation is automatically processed into a directional spatial code, but that this code is based on an integration of head and body orientation within an allocentric-based frame of reference. Moreover, we argue that this code may be derived from the motion information implied in the image of a figure when head and body orientation are incongruent. Our results have implications for understanding the nature of the information that affects the allocation of attention for social orienting.

Keywords: social attention, spatial attention, Simon task, head-body orientation, implied motion

Introduction

The ability to infer where another person is directing his or her attention is important for social interactions. It not only helps to understand the intentions of others, but also to predict their actions and consequently react adaptively (e.g., Verfaillie and Daems, 2002; Gallese et al., 2004; Manera et al., 2010, 2011). In this sense, the ability to accurately determine another person's direction of attention allows the observer to gain insight into his or her mental state, such as the current desires and goals (Baron-Cohen, 1995), and this constitutes a considerable adaptive advantage (Emery, 2000; Langton et al., 2000). In particular, it has been argued that there is a propensity for observers to orient their attention to the same object to which other people are attending, a process referred to as “joint attention,” and that this process is automatic (Baron-Cohen, 1995; Galantucci and Sebanz, 2009; Sebanz and Knoblich, 2009). Here we investigated the nature of the social information that contributes to this automatic deployment of attention, and specifically tested the role of the relative orientation of head and body in this process.

In many cases, eye-gaze provides a particularly powerful cue to the direction and location of where another person is attending (e.g., Gibson and Pick, 1963; Baron-Cohen, 1995; Langton et al., 2000; for reviews also see Frischen et al., 2007; Becchio et al., 2009; Birmingham and Kingstone, 2009). For example, Driver et al. (1999) and Friesen and Kingstone (1998) used an adapted version of Posner's cueing paradigm (1980) with spatially unpredictive central gaze cues and reported evidence for reflexive attentional shifts in the direction of the eye-gaze. This automaticity suggests that gaze direction is likely to be transformed into a spatial code which subsequently produces a shift in the allocation of attention (Zorzi et al., 2003).

Further evidence in support of the automatic generation of a spatial code was provided by Zorzi et al. (2003; also see Ansorge, 2003) in a study involving a variant of the Simon task (Simon and Craft, 1970; Lu and Proctor, 1995). In this study, participants were shown simple drawings of schematic eyes with colored irises that were either presented looking centrally or oriented to the left or right. The participants' task was to respond to the color of the irises (blue or green) by pressing one of two response keys, each located left or right of the body midline. Even though the stimuli were not lateralised as in the regular Simon paradigm and gaze direction was task-irrelevant, participants were faster to respond to the color of the eyes when the side of the response key corresponded with gaze direction than when it did not. Thus, task-irrelevant gaze direction automatically generated a directional spatial code, which in turn affected response selection (Lu and Proctor, 1995; Zorzi and Umiltà, 1995).

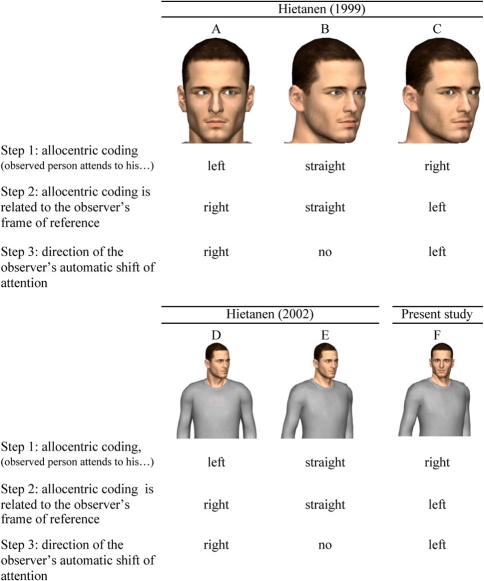

Since eye gaze direction does not necessarily coincide with the direction of the head, it can be predicted that attentional allocation on the basis of gaze cueing is also affected by head orientation. Hietanen (1999) manipulated gaze direction and head orientation independently in a Posner (1980) cueing paradigm and found that reflexive orienting of attention was induced only when eye and head direction did not coincide. First, a central, non-predictive cue with a frontal face and averted gaze facilitated responses to targets appearing at the gazed at location (see e.g., Figure 1A for an adapted version of the stimulus). This suggests that the direction of gaze automatically triggered a shift of attention, activating a motor program which in turn influenced the response. Second, a face directed laterally with gaze oriented in the same direction (Figure 1B) did not result in attentional cueing effects. According to Hietanen, this could be due to the fact that an averted head with a congruently averted gaze is a less powerful social signal in that the observed person is interpreted to be completely unrelated to the observer. Third, and most pertinent to the present study, when presented with a lateral head and a frontal gaze (averted back to the observer as shown in Figure 1C), there was a reverse validity effect: observers were fastest when the head was oriented in a direction which was opposite (egocentric) to the location of the target stimulus. To explain this counterintuitive result, Hietanen suggested that, in the orienting of social attention, the direction of the observed person's gaze or head is not directly encoded relative to an observer-based (or egocentric) frame of reference. Instead, gaze and head direction cues are combined into an allocentric frame of reference centered on the observed person (see step 1 in Figure 1), and only then is the direction of attention related to the observer's own egocentric frame of reference (step 2 in Figure 1). 
More specifically, a head oriented to the right of the observer with the gaze directed toward the observer (e.g., Figure 1C) would be coded as “a person attending to his/her right” in an allocentric reference frame centered on the observed person and this shifts the attention of the observer to the left (just like a head oriented straight with the eyes averted to the left of the observer is coded as “a person looking to his right” allocentrically, inducing an attentional shift to the observer's left). Indeed, with two interacting persons facing each other, a leftward direction in an allocentric reference frame corresponds to a rightward direction in an observer-centered reference frame and vice versa.

Figure 1.

Automatic attention shifts based on referential coding of body parts in an allocentric-based frame of reference. (A) frontal head with gaze averted, (B) lateral head with straight gaze in the same direction, (C) lateral head with gaze toward observer, (D) frontal body with head averted, (E) sideways body with head in the same direction, and (F) sideways body with head facing the observer.

Whilst it is clear that perceived eye and head direction are important cues for determining the direction and location of another person's attention, and have been studied quite extensively (for a review see Frischen et al., 2007), the role of body information (i.e., the direction of the torso, arms, and legs) on reflexive attentional shifts is less well understood. In an experiment similar to the 1999 study, Hietanen (2002) again used a Posner paradigm in which spatially unpredictive cues of a person's head and upper part of the torso were displayed centrally. A cue containing head and upper-body oriented in different directions (i.e., head rotated toward the target but a front-facing upper-body, as shown in Figure 1D) facilitated reaction times (RTs) while a cue with identical head and upper-body direction (i.e., both head and upper-body rotated toward the target, e.g., Figure 1E) did not. These results, together with those mentioned earlier on eye and head direction (Hietanen, 1999), provide support for the suggestion that in the orienting of social attention, different cues are initially used to derive the other person's direction of attention in an allocentric reference frame and only after this, the other person's direction of attention is related to the observer's egocentric frame of reference (Hietanen, 2002).

Based on the “reverse validity effect” (see e.g., Figure 1C), a model in which social attention orienting uses a hierarchy of social attention cues was proposed (Hietanen, 1999, 2002). According to the model, gaze direction is inferred relative to head orientation, and head orientation in turn is inferred relative to body orientation. If the derived relationships between these cues suggest that the other person has a laterally averted attention direction, a spatially defined directional code is then activated which may trigger an automatic shift of the observer's own attention in that direction (step 3 in Figure 1).¹

Present study

The most direct evidence for Hietanen's hypothesis that gaze, head, and body cues in social orienting are combined in an allocentric coordinate system centered on the observed person (rather than in an egocentric frame of reference) comes from the finding that, when the head is averted and the person is gazing at the observer (as shown in Figure 1C), a reverse validity effect is observed in a Posner paradigm. The aim of the present study was to extend this evidence in two ways.

First, we wanted to investigate the role of body orientation (i.e., trunk, arms, and legs) on the orienting of social attention in general and allocentric allocation of attention in particular. As discussed in the Introduction, Hietanen (2002) already manipulated the relationship between torso and head direction and he observed that cues with a body oriented toward the viewer and the head averted produced a facilitation on response time in valid trials (e.g., Figure 1D). Hietanen interpreted this as evidence for allocentric coding. However, while not in contradiction with such an account, this observation can be interpreted as evidence for egocentric coding as well [faster right (left) responses when the figure is looking to the observer's right (left)]. More specifically, we reasoned that the generation of an allocentrically based spatial directional code should not be limited to situations in which an observed person's head (and gaze) is averted to one side, with the body in frontal view (i.e., different head/body orientations, e.g., Figure 1D), but should generalize to situations in which the body is oriented away and the person is facing the observer. For example, in the case of a frontal view of the head (with straight gaze) combined with a body oriented to the right of the observer (as shown in Figure 1F) the predicted spatial directional code should be in the opposite direction of the body orientation. Indeed, such a combination of head/body orientations would, if coded in an allocentrically based frame of reference, activate a spatial code directed to the observed person's right (see Figure 1, step 1; and, therefore, the observer's left in an egocentric frame, step 2), despite the fact that head and eye gaze are oriented directly toward the viewer.

Second, based on the concept of convergent operations (Garner et al., 1956), we intended to extend the evidence collected with a Posner cueing paradigm to data gathered with another experimental paradigm. Specifically, we used an adapted Simon paradigm similar to Zorzi et al. (2003; see also Ansorge, 2003), which required processing of a feature of the stimulus cue, namely the facial expression of a figure (i.e., the task-relevant feature). The figure was presented in full body and the orientation of the body, facing either the left or right hemifield vis-à-vis the head, was manipulated. The face was always presented as facing forward (i.e., toward the participant) and only the face contained information relevant to discriminate the emotional state of the person, rendering the body orientation task-irrelevant. Note that, by focusing the task on the face of the figure, the body was, at least in retinal terms, presented outside of foveal vision although perhaps not outside of attentional focus (because attention may spread to the body in an object-based manner; Scholl, 2001; Mozer and Vecera, 2005). The leftward or rightward body orientation was pitted against the spatial location of the required response (i.e., left or right button), with the orientation of the body being either compatible or incompatible with the required response location.²

We predicted that if body orientation is automatically processed and activates a directional spatial code then it should induce a “Simon effect.” Moreover, if attentional allocation on the basis of social cues is based on direct egocentric coding (centered on the observer), performance to compatible response locations should be facilitated relative to incompatible response locations. For example, a body oriented toward the right visual hemifield should facilitate responses made within that same hemifield in comparison to those made in the other hemifield. In contrast, however, Hietanen's model predicts that the directional spatial code is derived from an integration of head and body orientation coded in an allocentric frame of reference (centered on the observed person). If this is the case, then RTs to facial expressions requiring a right response should be faster when the figure's body is oriented to the left than to the right. In other words, this model predicts a “reverse compatibility effect” (similar to the “reverse validity effect” reported by Hietanen, 1999).
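The two competing predictions can be summarized in a short sketch. The function names and string labels below are illustrative and do not come from the study. The allocentric branch only encodes the claim made above: with a frontal head, the spatial code points opposite to the body orientation as seen by the observer.

```python
def predicted_code(body_side: str, account: str) -> str:
    """Observer-centered side of the spatial code predicted for a figure
    with a frontal head and a body turned toward `body_side`.

    body_side: 'left' or 'right' (side of the observer's visual field
               toward which the body is oriented).
    account:   'egocentric' (direct observer-based coding) or
               'allocentric' (head coded relative to the body).
    """
    opposite = {"left": "right", "right": "left"}
    if body_side not in opposite:
        raise ValueError(f"unknown body side: {body_side!r}")
    if account == "egocentric":
        # The code follows the body direction as the observer sees it.
        return body_side
    if account == "allocentric":
        # The head is turned away from the body direction, so the code
        # points to the opposite side in observer-centered terms.
        return opposite[body_side]
    raise ValueError(f"unknown account: {account!r}")


def is_facilitated(body_side: str, response_side: str, account: str) -> bool:
    """True if the account predicts faster responses for this pairing."""
    return predicted_code(body_side, account) == response_side


# A body oriented to the observer's left, right-hand response required:
egocentric_says_fast = is_facilitated("left", "right", "egocentric")
allocentric_says_fast = is_facilitated("left", "right", "allocentric")
```

Under these assumptions only the allocentric account predicts facilitation for a right response to a left-oriented body, which is the reverse compatibility pattern described above.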

Methods

Participants

Eleven undergraduate students from the University of Leuven (six male and five female, with a mean age of 28.2 years) participated in the experiment. All reported normal or corrected-to-normal vision and were naïve with respect to the purpose of the study. Our study was approved by the Department of Psychology Ethics Committee, University of Leuven and, accordingly, all participants provided informed consent prior to the experiment.

Apparatus and stimuli

Stimuli were displayed on a 19-inch CRT color monitor with a refresh rate of 75 Hz, driven by a Dell XPS 420 PC using E-prime software (version 2.0). Participants sat in a darkened room, with their head stabilized on a chin-rest which was positioned 80 cm from the monitor and aligned with the midpoint of the screen. Responses were collected using a serial response box, model 200A (Psychology Software Tools), which was placed in front of the participant and was aligned with the horizontal midpoint of the screen.

Stimuli consisted of human avatars which were presented against a white background. The images of the avatars subtended a visual angle of 9° in the vertical direction and the image of the head subtended a visual angle of approximately 1.1° × 0.7°. Images of avatars were presented in the center of the screen with the midline of the face aligned with the vertical axis of the screen. A fixation cross, centered on the mouth of the subsequent avatar, was presented prior to each stimulus image.
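For readers who wish to reproduce the stimulus size, the on-screen height implied by a 9° visual angle at an 80 cm viewing distance can be obtained from the standard visual-angle formula. This is a minimal sketch that assumes a flat screen and a stimulus centered on the line of sight; the function name is illustrative and not taken from the study.

```python
import math


def size_from_visual_angle(angle_deg: float, distance_cm: float) -> float:
    """Physical extent (cm) that subtends `angle_deg` at `distance_cm`,
    for a stimulus centered on the line of sight."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


# Avatar height: 9 degrees at the 80 cm viewing distance reported above.
avatar_height_cm = size_from_visual_angle(9.0, 80.0)
print(f"avatar height: {avatar_height_cm:.1f} cm")
```

With these values the avatar is roughly 12.6 cm tall on the screen.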

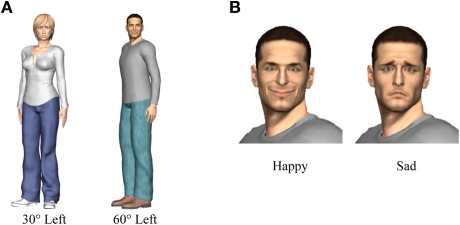

The images of the avatars were generated using the “Poser”™ computer graphics software (version 7.0, E-Frontier Inc.). Four different avatars were used, two male and two female, and each wore different clothing. Each was illuminated by three “infinite” light sources (a frontal light and two lights positioned 30° left and right of the avatar from an angle of 45° above the viewer), which created a smooth shading on the models. Images were rendered in color without external shadows. The models were presented in a standing position with their heads facing toward the participant. Eight different images were created from each avatar by combining two different facial expressions (happy and sad) with four different body orientations (60° left, 30° left, 30° right, and 60° right). The direction of the eye gaze and the head remained fixed for all images. Examples of the stimuli are shown in Figure 2.

Figure 2.

(A) Example of a female avatar with body orientation to the left with an angle of 30° (sad facial expression) and a male avatar with body orientation to the left with an angle of 60° (happy facial expression). (B) Close-up of happy and sad facial expressions of a male avatar.

Design

The protocol of the experiment was based on a “Simon” task. Participants had to discriminate between happy and sad facial expressions of the avatar (the relevant dimension) by manually pressing the left or right response button. Half of the participants pressed the left response button to indicate a happy face and the right button for a sad face, and vice versa for the remaining participants. The location of the response keys for the task was pitted against the hemifield location to which the body of the avatar was facing (the task-irrelevant dimension). The experiment was based on a fully factorial, three-way, within-subjects design in which overall body orientation (left or right), angle of the body orientation (30° or 60°), and the location of the response key (left or right) were manipulated.

Procedure

Each trial began with the presentation of a fixation cross for 760 ms, followed by an 80 ms blank interval, and then the stimulus. Participants were instructed to keep their eyes on the fixation cross and to respond to the facial expression as rapidly and accurately as possible once the avatar appeared. Participants responded with the index finger of their right or left hand (i.e., left index finger on left button for a happy face, right index finger on right button for a sad face or vice versa). The stimulus remained present until a button press was recorded. Feedback was provided following each response: correct responses were followed by a green plus sign (0.5° × 0.5°) and incorrect responses were followed by a red minus sign, and these symbols were presented at the location of the previous fixation cross for 240 ms. The inter-trial interval was set at 1000 ms.
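The reported durations are all whole multiples of the refresh period of a 75 Hz monitor, which the following sketch makes explicit. Whether the durations were actually specified in frames is not stated in the text, so the conversion is an illustration only, and the names used are hypothetical.

```python
REFRESH_HZ = 75  # monitor refresh rate reported in the Apparatus section


def ms_to_frames(duration_ms: float, refresh_hz: float = REFRESH_HZ) -> int:
    """Convert a duration to a whole number of refresh frames.

    Raises ValueError if the duration is not a whole number of frames.
    """
    frames = duration_ms * refresh_hz / 1000
    if abs(frames - round(frames)) > 1e-9:
        raise ValueError(f"{duration_ms} ms is not a whole number of frames")
    return round(frames)


durations_ms = {
    "fixation": 760,
    "blank": 80,
    "feedback": 240,
    "inter_trial": 1000,
}
frames = {name: ms_to_frames(ms) for name, ms in durations_ms.items()}
print(frames)  # {'fixation': 57, 'blank': 6, 'feedback': 18, 'inter_trial': 75}
```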

Participants first completed a set of 32 practice trials, followed by six blocks of 96 experimental trials. Each experimental block included three repetitions of the 32 stimuli (four avatars, two facial expressions, two directions of body orientation, and two angles of the body orientation) which were presented in a random order. After each block, participants were encouraged to take a one-minute rest. The entire experiment lasted approximately 30 minutes after which participants were debriefed.
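The block structure described above can be sketched as follows. The avatar labels, the fixed random seed, and the unconstrained shuffle are assumptions made for illustration; the text only states that the stimuli were presented in a random order.

```python
import itertools
import random

AVATARS = ["female_1", "female_2", "male_1", "male_2"]  # hypothetical labels
EXPRESSIONS = ["happy", "sad"]
DIRECTIONS = ["left", "right"]  # hemifield toward which the body is oriented
ANGLES_DEG = [30, 60]


def build_block(rng: random.Random, repetitions: int = 3) -> list:
    """Return one shuffled block: every stimulus repeated `repetitions` times."""
    stimuli = list(itertools.product(AVATARS, EXPRESSIONS, DIRECTIONS, ANGLES_DEG))
    trials = stimuli * repetitions  # 32 stimuli x 3 repetitions = 96 trials
    rng.shuffle(trials)
    return trials


rng = random.Random(0)  # fixed seed only so the sketch is reproducible
blocks = [build_block(rng) for _ in range(6)]
total_trials = sum(len(block) for block in blocks)  # 6 x 96 = 576
```

Each participant therefore contributes 576 experimental trials, or 72 trials per cell of the 2 × 2 × 2 design before errors are excluded.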

Results

The median RTs for correct responses and associated error rates to each of the eight conditions as a function of response location (left or right), hemifield orientation of the body (left or right), and angle of body orientation (30° or 60°) are presented in Table 1. We conducted separate three-way, within-subject analyses of variance (ANOVA) on these RT and error data.

Table 1.

Average median reaction times (ms) and error rates (%) as a function of response location, body orientation, and angle of body orientation.

| | Left body orientation: 30° | Left body orientation: 60° | Left body orientation: Mean | Right body orientation: 30° | Right body orientation: 60° | Right body orientation: Mean |
|---|---|---|---|---|---|---|
| **Reaction times (ms)** | | | | | | |
| Left response | 608 | 610 | 609 | 597 | 596 | 596 |
| Right response | 597 | 602 | 599 | 604 | 612 | 608 |
| **Errors (%)** | | | | | | |
| Left response | 3.28 | 4.42 | 3.85 | 3.41 | 4.17 | 3.79 |
| Right response | 3.28 | 5.30 | 4.29 | 4.42 | 5.93 | 5.18 |

The analysis of the correct RT data revealed no main effects of response location, direction of body orientation, or angle of body orientation (all F-values < 1). However, the interaction between response location and direction of body orientation was significant, F(1, 10) = 14.756, MSe = 171.432, p < 0.01. None of the other interactions reached significance (all F-values < 1). As shown in Figure 3, participants were faster in deciding which emotional expression was expressed by the avatar when the body of the avatar was oriented toward the hemifield opposite to that of the required response. For example, when a happy face required a right response, participants were faster when the body of the avatar was oriented to the observer's left than when it was oriented to the right. This reverse stimulus-response compatibility effect by a task-irrelevant stimulus attribute, namely body orientation, was 11 ms on average (with a lower 95% confidence limit of 4.4 and an upper limit of 16.9; see Loftus and Masson, 1994). Note that we reanalyzed the data with the gender of the avatar as an additional variable, but never observed a main or interaction effect involving avatar gender that approached significance.
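The size of the reverse compatibility effect can be recovered from the cell means in Table 1. The sketch below uses the rounded "Mean" columns of the table rather than the raw data, so it illustrates the arithmetic only; the variable names are not from the study.

```python
from statistics import mean

# Mean of median RTs (ms) from Table 1,
# keyed by (response location, body orientation).
mean_rt_ms = {
    ("left", "left"): 609,
    ("left", "right"): 596,
    ("right", "left"): 599,
    ("right", "right"): 608,
}

compatible = [rt for (resp, body), rt in mean_rt_ms.items() if resp == body]
incompatible = [rt for (resp, body), rt in mean_rt_ms.items() if resp != body]

# A positive value means compatible trials were slower (reverse effect).
reverse_effect_ms = mean(compatible) - mean(incompatible)
print(reverse_effect_ms)  # 11.0
```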

Figure 3.

Median reaction times (ms) as a function of response location and body orientation. Mean error rates (%) are shown between brackets.

The overall mean percentage error across participants was 4.28%. The within-subjects ANOVA revealed no main effects of response location (p > 0.22) or direction of body orientation (F < 1). However, a significant main effect of angle of body orientation was observed, F(1, 10) = 12.460, MSe = 3.253, p < 0.01: errors were more frequent when the angle of the body was oriented by 60° compared to 30° (M60° = 4.96% vs. M30° = 3.60%). All interactions failed to even approach significance (all F-values < 1). The mean error rates associated with the (non-significant) interaction between response location and direction of body orientation found in the RT data are shown in Figure 3. Importantly, these findings imply that the significant interaction between response location and direction of body orientation in the RT data cannot be explained by a speed-accuracy trade-off (error rates for compatible body-orientation/response-location trials were 4.51% on average, and 4.04% for incompatible trials).
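The error-rate summaries quoted above can be checked against the eight cells of Table 1. Because the table entries are already rounded, the recomputed values may differ from the reported ones in the last digit; the sketch is illustrative and the variable names are not from the study.

```python
from statistics import mean

# Error rates (%) from Table 1, keyed by
# (response location, body orientation, angle in degrees).
error_pct = {
    ("left", "left", 30): 3.28, ("left", "left", 60): 4.42,
    ("left", "right", 30): 3.41, ("left", "right", 60): 4.17,
    ("right", "left", 30): 3.28, ("right", "left", 60): 5.30,
    ("right", "right", 30): 4.42, ("right", "right", 60): 5.93,
}

overall = mean(error_pct.values())
by_angle = {
    a: mean(v for (_, _, angle), v in error_pct.items() if angle == a)
    for a in (30, 60)
}
compatible = mean(v for (resp, body, _), v in error_pct.items() if resp == body)
incompatible = mean(v for (resp, body, _), v in error_pct.items() if resp != body)

# Incompatible trials were the faster ones. A speed-accuracy trade-off
# would require them to be less accurate, which is not the case here.
no_tradeoff = incompatible <= compatible
```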

Discussion

The present study examined whether the orientation of the body (i.e., trunk, arms, and legs) is automatically processed and generates a directional spatial code. To examine this issue, a Simon paradigm was adopted in which the task required processing of a non-spatially oriented feature of the stimulus, namely its facial expression, while at the same time the (task-irrelevant) direction in which the body was oriented was manipulated and this direction was either compatible or incompatible with the location of the response key. We found a systematic reverse compatibility effect: when the categorization of the facial expression required a left (right) response, RTs were faster when the body was oriented to the right (left) compared to when it was oriented to the same side as the response location. Even though the direction of the body was task-irrelevant, and presented in parafoveal/peripheral vision, it nevertheless generated a directional spatial code which subsequently affected response selection (Lu and Proctor, 1995; Zorzi and Umiltà, 1995). This Simon effect suggests that the processing of body direction is automatic.

In line with the “reverse validity effect” observed by Hietanen (1999), the “reverse compatibility effect” observed here suggests that the orientation of the trunk does not generate a spatial code in an observer-based frame of reference but rather in an allocentric frame of reference centered on the observed person. In this sense, the present study provides further evidence in support of Hietanen's model (1999, 2002) on the orienting of social attention in which spatially defined directional codes which automatically trigger attention shifts are activated based on referential coding of body parts (i.e., in an allocentric-based frame of reference). In particular, Hietanen suggested a hierarchical referencing scheme in which gaze direction is referenced to head orientation, and head orientation in turn is referenced to body orientation. Accordingly, in the present experiment, the combined hierarchical coding of head orientation relative to body orientation activated a spatial code in the opposite direction of the body orientation (even though the head of the figure was facing toward the observer), producing the observed reverse compatibility effect.

Furthermore, these findings are in contrast to those predicted by a model in which different social cues (e.g., eye, head, and body direction) are processed in parallel, and have independent and additive effects as suggested by Langton and colleagues (Langton, 2000; Langton et al., 2000). According to their model, a spatial code consistent with the direction of the body from the observer's point of view should have been activated, predicting a compatibility effect (i.e., faster RTs when response location and body orientation are in agreement), which was not what was found.

We speculate that one reason why allocentric coding may be important is that, when body and gaze are not oriented congruently, the perception of implied motion is induced (Freyd, 1983; Kourtzi and Kanwisher, 2000; Jellema and Perrett, 2003a, 2006). For example, a human figure with its trunk and legs oriented to the left (of the viewer) and a head facing front, may imply a rotating action of the head from a resting posture with congruent head and body direction (toward the left of the viewer) to the figure's allocentric left (to the right of the viewer). Thus, the incongruently oriented head and body may have induced some form of implied motion perception which activated a dynamically based directional spatial code as a result of the allocentric coding of body parts.

Neurophysiological and neuroimaging studies have provided evidence that the superior temporal sulcus (STS) region of the brain, which is known to be activated by images of the face and body, is also activated by images which imply body-part motion and more generally by stimuli that signal the actions of another individual (for a discussion of these studies see for example, Allison et al., 2000; Kourtzi et al., 2008). Human fMRI (e.g., Grossman and Blake, 2002; Beauchamp et al., 2003; Peuskens et al., 2005; Thompson et al., 2005) and rTMS studies (Grossman et al., 2005) documented that STS regions show a strong specialization for the perception of actions and body postures. This was confirmed in single-cell recording studies in monkeys (e.g., Jellema et al., 2000; Barraclough et al., 2006; Jellema and Perrett, 2003a, b, 2006; Vangeneugden et al., 2009). Moreover, of the cells that respond to static images of human figures, approximately 60% are sensitive to the degree of articulation shown by the human. Furthermore, about half of the cells sensitive to images of human figures prefer implied motion (Barraclough et al., 2006). Jellema and Perrett (2003a) found cells in the anterior part of the STS coding for a particular articulated³ action both when actually presented and when implied in a still image (see also Vangeneugden et al., 2011, for recent evidence of neurons in STS responding to momentary pose during an action sequence). Furthermore, the cells studied did not respond to the sight of a non-articulated static posture, which formed the starting-point of the action, but responded vigorously to the articulated static posture formed by the end-point of the action. It is worth noting that the stimuli of articulated static postures which formed the end-point of the actions in that study were very similar to the stimuli used in the present study.
The activated directional spatial code causing the reverse compatibility effect in the present study may reflect the influence of processes related to implied motion processing in STS. However, although it is known that reciprocal connections between STS and the inferior parietal lobule exist (Harries and Perrett, 1991; Seltzer and Pandya, 1994; Rizzolatti and Matelli, 2003), a link between implied motion processing in STS and attentional function remains speculative (Jellema and Perrett, 2006) until further investigations are conducted.

Conflict of interest statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This research was funded by a Trinity College Dublin, School of Psychology Research Studentship (awarded to Iwona Pomianowska), a grant (No. 06/IN.1/I96) from Science Foundation Ireland (awarded to Fiona N. Newell), and a grant (No. FWO G.0621.07) from the Research Foundation Flanders (FWO) (awarded to Karl Verfaillie).

Footnotes

1. Note that an alternative to the hierarchical model has been proposed. In contrast to Hietanen's (1999, 2002) studies, a paradigm which required a more explicit discrimination of gaze direction (i.e., an adapted Stroop paradigm in which participants had to respond either to the direction of gaze or to the orientation of the head and gaze and body information were placed into conflict or not) provides evidence for a facilitation of congruent eye gaze and head direction on performance and inhibition of incongruent eye-head directions (e.g., Langton, 2000; Langton et al., 2000; Seyama and Nagayama, 2005). On the basis of these findings, Langton and colleagues proposed a model in which the directional information provided by different social cues is processed in parallel (even if some cues may be completely task-irrelevant) and these cues have independent and additive effects on the discrimination of the perceived direction of social attention. We will come back to this alternative model in the Discussion.

2. To avoid confusion, here and in the remainder of this article, we will use the terms “compatible” and “incompatible” to refer to the relationship between the required response locations (based on the emotional expression displayed) and the task-irrelevant stimulus attribute, body orientation. The terms “congruent” and “incongruent” will be used to refer to the relationship between head (gaze) and body orientation.

3. Following Jellema and Perrett (2003a) we define articulated actions as actions where one body part (e.g., head) moves with respect to the remainder of the body which remains static. Non-articulated actions are actions where the equivalent body parts do not move with respect to each other, but move as a whole. Similarly, articulated static body postures contain a torsion or rotation between parts, while non-articulated postures do not.

References

- Allison T., Puce A., McCarthy G. (2000). Social perception from visual cues: role of the STS region. Trends Cogn. Sci. 4, 267–278 10.1016/S1364-6613(00)01501-1 [DOI] [PubMed] [Google Scholar]

- Ansorge U. (2003). Spatial Simon effects and compatibility effects induced by observed gaze direction. Vis. Cogn. 10, 363–383 [Google Scholar]

- Baron-Cohen S. (1995). Mindblindness: An Essay on Autism and Theory of Mind. Cambridge, MA: MIT Press [Google Scholar]

- Barraclough N. E., Xiao D., Oram M. W., Perrett D. I. (2006). The sensitivity of primate STS neurons to walking sequences and to the degree of articulation in static images. Prog. Brain Res. 154, 135–148 10.1016/S0079-6123(06)54007-5 [DOI] [PubMed] [Google Scholar]

- Beauchamp M. S., Lee K. E., Haxby J. V., Martin A. (2003). FMRI responses to video and point-light displays of moving humans and manipulable objects. J. Cogn. Neurosci. 15, 991–1001 10.1162/089892903770007380 [DOI] [PubMed] [Google Scholar]

- Becchio C., Bertone C., Castiello U. (2009). How the gaze of others influences object processing. Trends Cogn. Sci. 12, 254–258. doi: 10.1016/j.tics.2008.04.005

- Birmingham E., Kingstone A. (2009). Human social attention: a new look at past, present, and future investigations. Ann. N.Y. Acad. Sci. 1156, 118–140. doi: 10.1111/j.1749-6632.2009.04468.x

- Driver J., Davis G., Ricciardelli P., Kidd P., Maxwell E., Baron-Cohen S. (1999). Gaze perception triggers reflexive visuospatial orienting. Vis. Cogn. 6, 509–540.

- Emery N. J. (2000). The eyes have it: the neuroethology, function and evolution of social gaze. Neurosci. Biobehav. Rev. 24, 581–604. doi: 10.1016/S0149-7634(00)00025-7

- Freyd J. J. (1983). The mental representation of movement when static stimuli are viewed. Percept. Psychophys. 33, 575–581.

- Friesen C. K., Kingstone A. (1998). The eyes have it: reflexive orienting is triggered by non-predictive gaze. Psychon. Bull. Rev. 5, 490–495.

- Frischen A., Bayliss A. P., Tipper S. P. (2007). Gaze cueing of attention: visual attention, social cognition, and individual differences. Psychol. Bull. 133, 694–724. doi: 10.1037/0033-2909.133.4.694

- Galantucci B., Sebanz N. (2009). Joint action: current perspectives. Top. Cogn. Sci. 1, 255–259.

- Gallese V., Keysers C., Rizzolatti G. (2004). A unifying view of the basis of social cognition. Trends Cogn. Sci. 8, 396–403. doi: 10.1016/j.tics.2004.07.002

- Garner W. R., Hake H. W., Eriksen C. W. (1956). Operationism and the concept of perception. Psychol. Rev. 63, 149–159.

- Gibson J. J., Pick A. (1963). Perception of another person's looking behavior. Am. J. Psychol. 76, 86–94.

- Grossman E. D., Battelli L., Pascual-Leone A. (2005). Repetitive TMS over posterior STS disrupts perception of biological motion. Vision Res. 45, 2847–2853. doi: 10.1016/j.visres.2005.05.027

- Grossman E. D., Blake R. (2002). Brain areas active during visual perception of biological motion. Neuron 35, 1167–1175. doi: 10.1016/S0896-6273(02)00897-8

- Harries M. H., Perrett D. I. (1991). Visual processing of faces in temporal cortex: physiological evidence for a modular organization and possible anatomical correlates. J. Cogn. Neurosci. 3, 9–24.

- Hietanen J. K. (1999). Does your gaze direction and head orientation shift my visual attention? Neuroreport 10, 3443–3447.

- Hietanen J. K. (2002). Social attention orienting integrates visual information from head and body orientation. Psychol. Res. 66, 174–179. doi: 10.1007/s00426-002-0091-8

- Jellema T., Baker C. I., Wicker B., Perrett D. I. (2000). Neural representation for the perception of the intentionality of actions. Brain Cogn. 44, 280–302. doi: 10.1006/brcg.2000.1231

- Jellema T., Perrett D. I. (2003a). Cells in monkey STS responsive to articulated body motions and consequent static posture: a case of implied motion? Neuropsychologia 41, 1728–1737. doi: 10.1016/S0028-3932(03)00175-1

- Jellema T., Perrett D. I. (2003b). Perceptual history influences neural responses to face and body postures. J. Cogn. Neurosci. 15, 961–971. doi: 10.1162/089892903770007353

- Jellema T., Perrett D. I. (2006). Neural representations of perceived bodily actions using a categorical frame of reference. Neuropsychologia 44, 1535–1546. doi: 10.1016/j.neuropsychologia.2006.01.020

- Kourtzi Z., Kanwisher N. (2000). Activation in human MT/MST by static images with implied motion. J. Cogn. Neurosci. 12, 48–55.

- Kourtzi Z., Krekelberg B., van Wezel R. J. A. (2008). Linking form and motion in the primate brain. Trends Cogn. Sci. 12, 230–236. doi: 10.1016/j.tics.2008.02.013

- Langton S. R. H. (2000). The mutual influence of gaze and head orientation in the analysis of social attention direction. Q. J. Exp. Psychol. 53A, 825–845. doi: 10.1080/713755908

- Langton S. R. H., Watt R. J., Bruce V. (2000). Do the eyes have it? Cues to the direction of social attention. Trends Cogn. Sci. 4, 50–59. doi: 10.1016/S1364-6613(99)01436-9

- Loftus G. R., Masson M. E. J. (1994). Using confidence intervals in within-subject designs. Psychon. Bull. Rev. 1, 476–490.

- Lu C. H., Proctor R. W. (1995). The influence of irrelevant location information on performance: a review of the Simon and spatial Stroop effects. Psychon. Bull. Rev. 2, 174–207.

- Manera V., Becchio C., Schouten B., Bara B. G., Verfaillie K. (2011). Communicative interactions improve visual detection of biological motion. PLoS One 6, e14594. doi: 10.1371/journal.pone.0014594

- Manera V., Schouten B., Becchio C., Bara B. G., Verfaillie K. (2010). Inferring intentions from biological motion: a stimulus set of point-light communicative interactions. Behav. Res. Methods 42, 168–178. doi: 10.3758/BRM.42.1.168

- Mozer M. C., Vecera S. P. (2005). “Object-based and space-based attention,” in Neurobiology of Attention, eds Itti L., Rees G., Tsotsos J. K. (New York, NY: Elsevier), 130–134.

- Peuskens H., Vanrie J., Verfaillie K., Orban G. (2005). Specificity of regions processing biological motion. Eur. J. Neurosci. 21, 2864–2875. doi: 10.1111/j.1460-9568.2005.04106.x

- Posner M. I. (1980). Orienting of attention. Q. J. Exp. Psychol. 32, 3–25.

- Rizzolatti G., Matelli M. (2003). Two different systems form the dorsal visual system: anatomy and functions. Exp. Brain Res. 153, 146–157. doi: 10.1007/s00221-003-1588-0

- Scholl B. J. (2001). Objects and attention: the state of the art. Cognition 80, 1–46. doi: 10.1016/S0010-0277(00)00152-9

- Sebanz N., Knoblich G. (2009). Prediction in joint action: what, when, and where. Top. Cogn. Sci. 1, 353–367.

- Seltzer B., Pandya D. N. (1994). Parietal, temporal, and occipital projections to cortex of the superior temporal sulcus in the rhesus monkey: a retrograde tracer study. J. Comp. Neurol. 343, 445–463. doi: 10.1002/cne.903430308

- Seyama J., Nagayama R. S. (2005). The effect of torso direction on the judgment of eye direction. Vis. Cogn. 12, 103–116.

- Simon J. R., Craft J. L. (1970). Effects of an irrelevant auditory stimulus on visual choice reaction time. J. Exp. Psychol. 86, 272–274.

- Thompson J. C., Clarke M., Stewart T., Puce A. (2005). Configural processing of biological motion in human superior temporal sulcus. J. Neurosci. 25, 9059–9066. doi: 10.1523/JNEUROSCI.2129-05.2005

- Vangeneugden J., Pollick F., Vogels R. (2009). Functional differentiation of macaque visual temporal cortical neurons using a parametric action space. Cereb. Cortex 19, 593–611. doi: 10.1093/cercor/bhn109

- Vangeneugden J., De Mazière P. A., Van Hulle M. M., Jaeggli T., Van Gool L., Vogels R. (2011). Distinct mechanisms for coding of visual actions in macaque temporal cortex. J. Neurosci. 31, 385–401. doi: 10.1523/JNEUROSCI.2703-10.2011

- Verfaillie K., Daems A. (2002). Representing and anticipating human actions in vision. Vis. Cogn. 9, 217–232.

- Zorzi M., Mapelli D., Rusconi E., Umiltà C. (2003). Automatic spatial coding of perceived gaze direction is revealed by the Simon effect. Psychon. Bull. Rev. 10, 423–429.

- Zorzi M., Umiltà C. (1995). A computational model of the Simon effect. Psychol. Res. 58, 193–205.