Sir,

Inferential statistics is used to draw inferences from our data about more general conditions, whereas descriptive statistics simply describes what is in our data. With inferential statistics, we try to reach conclusions that extend beyond the immediate data. For instance, we use inferential statistics to infer from sample data what the population might think. Hypothesis testing (using P-values) and interval estimation (using confidence intervals) are two concepts of inferential statistics that help in making inferences about a population from samples.[1]

Hypothesis testing is a method of inferential statistics. Research begins with a hypothesis about a population parameter, called the null hypothesis. In a comparative trial, the null hypothesis typically states that there is no difference in outcome between the intervention group and the control group. Data are then collected, and the viability of the null hypothesis is assessed in light of the data. Rejecting the null hypothesis provides evidence that the treatment had an effect. The aim of tests of significance is to calculate the probability that a difference at least as large as the one observed would arise by chance alone if the null hypothesis were true. This probability is known as the “P-value”.[2,3] If the P-value is small (by convention, P<0.05), the null hypothesis can be rejected and the findings are described as ‘statistically significant’. Rejecting the null hypothesis means that the findings are unlikely to have arisen by chance alone, so the idea that there is no difference between the two treatments is set aside. When P<0.05, a difference or association at least as large as the one observed would occur by chance fewer than five times in a hundred if there were truly no difference. When P<0.01, it would occur by chance less than once in a hundred.[2–4] Hence, if our goal is to assess whether trial results could plausibly have occurred through chance alone, assuming that there is no real difference between the new intervention and the old, the P-value can be helpful. It should, however, be noted that non-significance does not mean ‘no effect’. Small studies may often report non-significance even when there are important, real effects that a larger study could have detected. Moreover, statistical significance does not necessarily mean a clinically important observation. It is the size of the effect, not the statistical significance, that determines importance. Finally, hypothesis testing cannot tell us how large or small the effect is. If we want an estimate of the actual effect, we need the confidence interval.[2,4]
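The point that a small study may miss a real effect can be illustrated with a short calculation. The sketch below assumes a pooled two-proportion z-test, which is only one of several tests that could be used here, and the trial counts are hypothetical numbers chosen for illustration, not data from any cited study. Both trials observe the same risks (30% in the control group, 20% in the intervention group); only the sample size differs.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(events_a, n_a, events_b, n_b):
    """Two-sided P-value for the null hypothesis that both groups share one risk.

    Uses a pooled two-proportion z-test (normal approximation).
    """
    risk_a = events_a / n_a
    risk_b = events_b / n_b
    pooled = (events_a + events_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (risk_a - risk_b) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical trials: identical observed risks, different sizes.
p_small = two_proportion_p_value(15, 50, 10, 50)      # 50 patients per group
p_large = two_proportion_p_value(150, 500, 100, 500)  # 500 patients per group

print(f"small trial: P = {p_small:.3f}")
print(f"large trial: P = {p_large:.5f}")
```

Under these assumptions the small trial is non-significant and the large trial is significant, although the observed effect is the same in both.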

A confidence interval (CI) is defined as ‘a range of values for a variable of interest constructed so that this range has a specified probability of including the true value of the variable’. The specified probability is called the ‘confidence level’, and the end points of the confidence interval are called the ‘confidence limits’. By convention, the confidence level is usually set at 95%, so the 95% CI is “a range of values for a variable of interest constructed so that this range has a 95% probability of including the true value of the variable”. In simple words, we can be 95% sure that the truth lies somewhere within the 95% confidence interval. Strictly, the 95% refers to the procedure: if the study were repeated many times, about 95% of the intervals so constructed would include the true value. Because we are only 95% confident, there is a 5% probability that we are wrong, i.e. that the true value lies below the lower or above the upper confidence limit. Thus, the 95% CI corresponds to hypothesis testing at the P<0.05 level. Hypothesis testing produces a decision about any observed difference, either that it is ‘statistically significant’ or that it is ‘statistically non-significant’, whereas the confidence interval gives an idea of the range of the observed effect size.[2–4] A major advantage of the confidence interval is that significance can also be assessed from it. If the confidence interval includes the value reflecting ‘no effect’, the difference is statistically non-significant. If it does not include the value reflecting ‘no effect’, the difference is statistically significant. Thus, not only can ‘statistical significance’ (P<0.05) be inferred from confidence intervals, but these intervals also show the largest and smallest effects that are likely.[2,4]
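As a minimal sketch of how significance can be read from an interval, the code below computes a 95% CI for an absolute risk reduction and checks whether it includes zero. It assumes the simple Wald (normal approximation) interval, which is one common choice and not necessarily the method used in the cited sources; the counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def arr_confidence_interval(events_control, n_control,
                            events_treated, n_treated, level=0.95):
    """Absolute risk reduction (control risk minus treated risk) with a Wald CI."""
    risk_c = events_control / n_control
    risk_t = events_treated / n_treated
    arr = risk_c - risk_t
    se = sqrt(risk_c * (1 - risk_c) / n_control
              + risk_t * (1 - risk_t) / n_treated)
    z = NormalDist().inv_cdf(1 - (1 - level) / 2)  # about 1.96 for 95%
    return arr, arr - z * se, arr + z * se


# Hypothetical trial: 150/500 events in control, 100/500 in the intervention group.
arr, lower, upper = arr_confidence_interval(150, 500, 100, 500)

# For a difference, the value of 'no effect' is zero.
significant = not (lower <= 0 <= upper)

print(f"ARR = {arr:.3f}, 95% CI {lower:.3f} to {upper:.3f}")
print("statistically significant at the 5% level:", significant)
```

Here the interval excludes zero, so the difference is significant at the 5% level, and the limits additionally show how small or large the true risk reduction might plausibly be.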

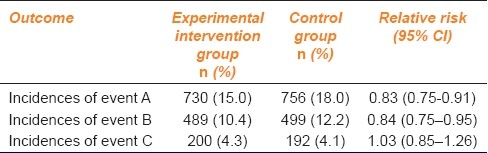

For absolute risk reduction, the value of no effect is zero: if an intervention leads to zero risk reduction (i.e. risk in the control group minus risk in the intervention group = 0), it has no effect compared with the control. For ratios such as relative risk and odds ratio, the value of no effect is one: a ratio equal to one means that the incidence of the outcome in the intervention group equals that in the control group, i.e. there is no difference in outcome between the two groups.[2] Now let us consider the example described in Table 1. For the first outcome measure, the observed difference is statistically significant at the 5% level because the interval does not include a relative risk of one. Similarly, for the second outcome measure, the observed difference is statistically significant at the 5% level because the interval does not include a relative risk of one. But for the third outcome measure, which shows a slight increase in risk in the experimental intervention group, the observed difference is not statistically significant at the 5% level because the interval does include a relative risk of one.[2]

Table 1. Outcome measure and statistical significance[2]
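The same reading can be sketched for a ratio measure. The code below assumes the usual log-scale (Katz) approximation for the confidence interval of a relative risk, and the counts are hypothetical; they are not the values in Table 1. The function names are ours, chosen for illustration.

```python
from math import exp, log, sqrt


def relative_risk_ci(events_treated, n_treated, events_control, n_control, z=1.96):
    """Relative risk (treated / control) with an approximate 95% CI on the log scale."""
    rr = (events_treated / n_treated) / (events_control / n_control)
    se_log = sqrt(1 / events_treated - 1 / n_treated
                  + 1 / events_control - 1 / n_control)
    return rr, exp(log(rr) - z * se_log), exp(log(rr) + z * se_log)


def includes_no_effect(lower, upper, no_effect=1.0):
    """For ratios the value of 'no effect' is one."""
    return lower <= no_effect <= upper


# Hypothetical outcome A: clear risk reduction in the intervention group.
rr_a, lo_a, hi_a = relative_risk_ci(100, 500, 150, 500)
# Hypothetical outcome B: slight increase in risk in the intervention group.
rr_b, lo_b, hi_b = relative_risk_ci(55, 500, 50, 500)

for name, rr, lo, hi in (("A", rr_a, lo_a, hi_a), ("B", rr_b, lo_b, hi_b)):
    verdict = "not significant" if includes_no_effect(lo, hi) else "significant"
    print(f"outcome {name}: RR = {rr:.2f}, 95% CI {lo:.2f} to {hi:.2f} ({verdict})")
```

With these numbers, outcome A has an interval that lies entirely below one and is significant, whereas the interval for outcome B spans one and is not.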

Another advantage of the confidence interval over hypothesis testing is the additional information it provides. The upper and lower limits of the interval tell us how big or small the true effect might plausibly be, and the width of the interval is also informative. As a rule of thumb, the narrower the CI, the better. If the confidence interval is narrow, we can be quite confident that effects far from this range have been ruled out by the study.[2,4] However, by definition, when testing at the 5% level there will always be a 5% risk of declaring a significant difference when actually there is none. Thus, we may be misled by chance into believing in something that is not real (type I error). This is an unavoidable feature of statistical significance, whether it is assessed using confidence intervals or P-values.[4]
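The 5% risk of a type I error can be made concrete with a small simulation. The sketch below is illustrative only: it assumes two groups drawn from the same true risk (so the null hypothesis holds by construction), a pooled two-proportion z-test, and arbitrary values for the true risk, group size, number of simulated trials and random seed.

```python
import random
from math import sqrt
from statistics import NormalDist


def is_significant(events_a, n_a, events_b, n_b, alpha=0.05):
    """Pooled two-proportion z-test; True when the two-sided P-value is below alpha."""
    pooled = (events_a + events_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # no events at all, or events in every patient
        return False
    z = (events_a / n_a - events_b / n_b) / se
    return 2 * (1 - NormalDist().cdf(abs(z))) < alpha


def false_positive_rate(true_risk=0.3, n_per_group=200, n_trials=2000, seed=1):
    """Share of simulated trials declared significant although no real difference exists."""
    rng = random.Random(seed)
    false_positives = 0
    for _ in range(n_trials):
        events_a = sum(rng.random() < true_risk for _ in range(n_per_group))
        events_b = sum(rng.random() < true_risk for _ in range(n_per_group))
        if is_significant(events_a, n_per_group, events_b, n_per_group):
            false_positives += 1
    return false_positives / n_trials


rate = false_positive_rate()
print(f"share of 'significant' results under a true null: {rate:.3f}")
```

The printed share should fall close to 0.05, which is the type I error rate accepted by testing at the 5% level.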

Thus, it can be concluded, as suggested by Akobeng (2008), that the confidence interval is more informative than the P-value. The P-value can be derived from the confidence interval, and on its own it provides no information on clinical importance. Hence, authors of research articles are strongly encouraged to report confidence intervals.[5] However, it is not unusual to find both statistical measures reported in research articles. In fact, du Prel et al. have suggested that the two statistical concepts are complementary and not contradictory, because if the size of the sample and the dispersion or a point estimate are known, confidence intervals can be calculated from P-values, and vice versa.[3]
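The conversion between the two measures can be sketched as follows, assuming a normally distributed estimate of a difference (such as an absolute risk reduction) with a symmetric interval. For ratios such as relative risk, the same steps would be applied to the logarithm of the estimate and of the limits. The function names and the numerical values are hypothetical and serve only as illustration.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()


def p_value_from_ci(estimate, lower, upper, level=0.95):
    """Two-sided P-value (null value 0) recovered from an estimate and its CI."""
    z_crit = STD_NORMAL.inv_cdf(1 - (1 - level) / 2)
    se = (upper - lower) / (2 * z_crit)  # standard error implied by the CI width
    z = estimate / se
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))


def ci_from_p_value(estimate, p_value, level=0.95):
    """CI recovered from an estimate and its two-sided P-value (null value 0)."""
    z = STD_NORMAL.inv_cdf(1 - p_value / 2)
    se = abs(estimate) / z
    z_crit = STD_NORMAL.inv_cdf(1 - (1 - level) / 2)
    return estimate - z_crit * se, estimate + z_crit * se


# Hypothetical risk difference of 0.10 with 95% CI 0.0467 to 0.1533.
p = p_value_from_ci(0.10, 0.0467, 0.1533)
lower, upper = ci_from_p_value(0.10, p)
print(f"P = {p:.5f}; recovered 95% CI {lower:.4f} to {upper:.4f}")

# An interval whose lower limit sits exactly on zero corresponds to P = 0.05.
p_borderline = p_value_from_ci(1.96, 0.0, 3.92)
print(f"borderline P = {p_borderline:.3f}")
```

The round trip returns the original limits, which is the sense in which the two measures carry overlapping information, while only the interval displays the size of the effect directly.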

References

- 1. Montori VM, Kleinbart J, Newman TB, Keitz S, Wyer PC, Moyer V, et al. Tips for learners of evidence-based medicine: 2. Measures of precision (confidence intervals). CMAJ. 2004;171:611–5. doi: 10.1503/cmaj.1031667.

- 2. Davies HT, Crombie IK. What are confidence intervals and P-values? 2009 Apr. [Last cited on 2011 Apr 16]. Available from: http://www.medicine.ox.ac.uk/bandolier/painres/download/whatis/What_are_Conf_Inter.pdf

- 3. Du Prel JB, Hommel G, Röhrig B, Blettner M. Confidence interval or P-value?: Part 4 of a series on evaluation of scientific publications. Dtsch Arztebl Int. 2009;106:335–9. doi: 10.3238/arztebl.2009.0335.

- 4. Attia A. Why should researchers report the confidence interval in modern research? Middle East Fertil Soc J. 2005;10:78–81.

- 5. Akobeng AK. Confidence intervals and P-values in clinical decision making. Acta Paediatr. 2008;97:1004–7. doi: 10.1111/j.1651-2227.2008.00836.x.