Abstract

Background

Effectiveness of clinical information systems to improve nursing and patient outcomes depends on human factors including system usability, organizational workflow, and user satisfaction.

Objective

To examine to what extent residents, family members, and clinicians find a sensor data interface used to monitor elder activity levels usable and useful in an independent living setting.

Methods

Three independent expert reviewers conducted an initial heuristic evaluation. Subsequently, 20 end users (5 residents, 5 family members, 5 registered nurses, and 5 physicians) participated in the evaluation. During the evaluation each participant was asked to complete three scenarios taken from three residents. Morae recorder software was used to capture data during the user interactions.

Results

The heuristic evaluation resulted in 26 recommendations for interface improvement; these were classified under the headings content, aesthetic appeal, navigation, and architecture, which were derived from heuristic results. Total time for elderly residents to complete scenarios was much greater than for other users. Family members spent more time than clinicians, but less time than residents to complete scenarios. Elder residents and family members had difficulty interpreting clinical data and graphs, experienced information overload, and did not understand terminology. All users found the sensor data interface useful for identifying changing resident activities.

Discussion

Older adult users have special needs that should be addressed when designing clinical interfaces for them, especially information as important as health information. Evaluating human factors during user interactions with clinical information systems should be a requirement before implementation.

Keywords: sensor networks, passive monitoring, gerontology, user-centered design, human factors

The use of clinical information systems to gather health information is increasingly important for clinicians, patients, and their families. Clinical information systems are used by healthcare providers to support clinical decision making to deliver appropriate patient care and to alert clinicians to potential adverse events. Patients and their family members are also increasingly savvy in the use of information systems to access vital healthcare information (Cresci, Yarandi, & Morrell, 2010). Effectiveness of clinical information systems to provide usable information for clinicians to appraise and predict nursing and patient outcomes depends on several factors, including usability of the information system, presentation of data, and satisfaction during interactions with the information system (Alexander & Staggers, 2009).

In independent living settings, use of technology such as nonwearable sensors can facilitate earlier detection of changes like reduced activity in an elder’s apartment and alert providers to intervene earlier (Courtney, Demiris, & Hensel, 2007). These systems provide new ways of detecting subtle changes that do not require traditional face-to-face assessment of individual residents. However, these technologies must first be evaluated to understand human interactions with them, how the technology functions, and the environment. This paper includes an evaluation of a clinical information system composed of passive sensors used to track human motion and physiologic parameters of elders residing in an independent living facility called TigerPlace. Residents who are living in independent settings such as TigerPlace are typically frail, vulnerable to decline, and often require some nursing care; therefore, the terms patient and resident are used interchangeably for the purposes of this paper. The specific aim of this research was to examine to what extent residents, family members, and clinicians find the sensor data interface usable and useful in an independent living setting.

Background

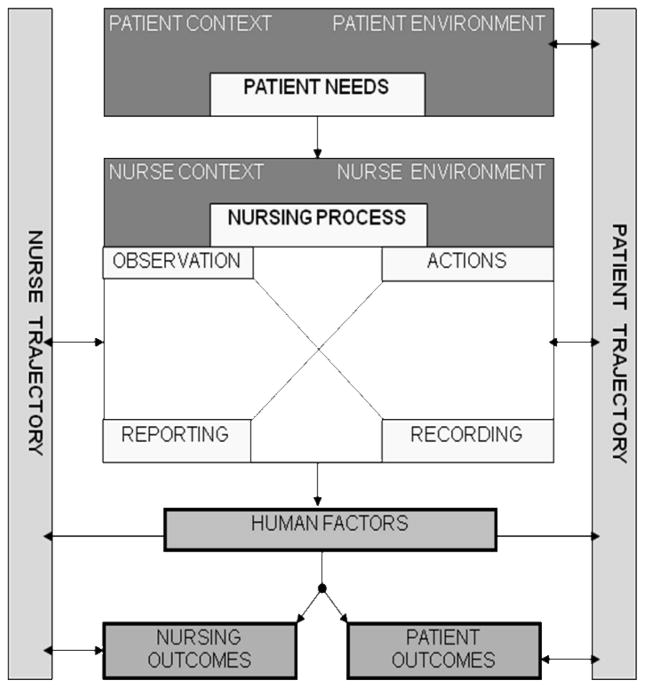

Clinicians and patients interact with computers on many levels. Staff nurses and physicians collect information from their clients, store client information in clinical information systems, and then retrieve that information for making clinical decisions. The information is used by clinicians to keep an ongoing record of events, actions, behaviors, perceptions, and progress made during health care encounters. Effectiveness of clinical information systems that allow nursing staff to predict nursing and patient outcomes depends on the usability of the system, organizational workflow, and satisfaction with technology. Therefore, understanding human interactions with information technology has the potential to improve clinical processes and patient outcomes. The following research was guided by a framework for nursing informatics called the Nurse-Patient Trajectory Framework (Alexander, 2007). The framework utilizes nursing process theory, human factors, nursing and patient trajectories, and outcomes to evaluate clinical information systems.

The Nurse-Patient Trajectory Framework

The Nurse-Patient Trajectory Framework can be used to predict which information structures, processes, and technologies can be used to achieve desired outcomes for patients and providers (Bakken, Stone, & Larson, 2008; Figure 1). Research using this framework was designed to focus specifically on how nurses and patients interact with information systems and how those interactions influence decision making and outcomes. However, it is also important to consider other people who interact with nurses and patients as they make decisions and arrive at outcomes along their trajectories. For example, nurses assess resident conditions, determine from these assessments that resident needs may have changed, take action, and alert physicians or family members that needs have changed. A thorough discussion of the components of the Nurse-Patient Trajectory Framework has been published elsewhere (Alexander, 2007) and will not be repeated here. However, human factors are a central concept for this paper and will be described briefly.

Figure 1.

The Nurse-Patient Trajectory Framework

Human factors

For the purpose of this paper, human factors refers to a discipline focused on optimizing the relationship between technology and humans (Alexander, 2010; Czaja & Nair, 2006). For example, in a study comparing older users and nonusers of Internet health information resources, nonusers were more likely to make health care decisions based on information found offline when compared to Internet users with access to more information (Taha, Sharit, & Czaja, 2009). Nonusers typically obtain a larger percentage of health information from newspapers, popular magazines, or television, but most of the time they turn to family and friends as a source of information (Taha et al., 2009). In health care, human-factors researchers investigate the relationships between patients, their families, and providers; tools they use, such as the Internet; the environments in which they live and work; and the tasks they perform. Human factors concepts guided the selection of methods for this study.

Passive Sensor Systems in TigerPlace

The purpose of this paper is to evaluate whether or not residents in an independent living center (TigerPlace), residents’ family members, and health care providers find an interface designed to monitor resident activity levels useful and usable. During the research, human factors that are important for the design of sensor systems were examined. A variety of passive infrared sensors are available for detecting motion, location, falls, and functional activity and are installed in residents’ apartments in an independent living setting called TigerPlace. A thorough description of all sensor modalities and other references used is provided elsewhere (Skubic, Alexander, Popescu, Rantz, & Keller, 2009). Sensor systems are becoming much more common in research literature, but mostly in disciplines other than nursing, such as engineering and lived environments and aging. For example, remote monitoring technology has been installed in community-living elders’ residences to monitor meal preparation, physical activity, vitamin use, and personal care (Reder, Ambler, Philipose, & Hedrick, 2010). Researchers indicated that elders and their caregivers experienced peace of mind and greater perceived safety, well-being, and independence as a result of new monitoring systems. Another instance includes an Automated Technology for Elder Assessment, Safety and Environment (AT EASE) remote monitoring system for elders in independent living residences (Feeney-Mahoney, Mahoney, & Liss, 2009). User subgroups interviewed (residents, family members, management) all shared concerns about safety and well-being and stated there is a need for enhanced monitoring using sensors.

In this study, sensor information was operationalized as the location in the apartment where a resident in independent living spends most of their time, where certain activities occur in the apartment, and physiological data, including measures of restlessness and vital signs. For example, functional activity data might show that a resident spends some time during the day in the kitchen and uses the stove, is frequently up and out of bed during the night using the bathroom, and while in bed experiences some periods of restlessness. These data provide an assessment of the baseline trajectories experienced by patients in TigerPlace, can be linked to declining activity levels, and can be used as another source of information for the electronic health record (Rantz et al., 2010).

Every data element in the network of sensor data can be visualized on a computer screen through a Web-based interface that can be used to determine baseline activity patterns. During this study, researchers wanted to provide an opportunity for all types of end users, including residents who lived with the sensors and their family members, nurses, and physicians, to interact with the interface using scenario-based think-aloud methods. The aim was to determine if these end users perceived the sensor interface as usable and useful.

Interface development for sensor network

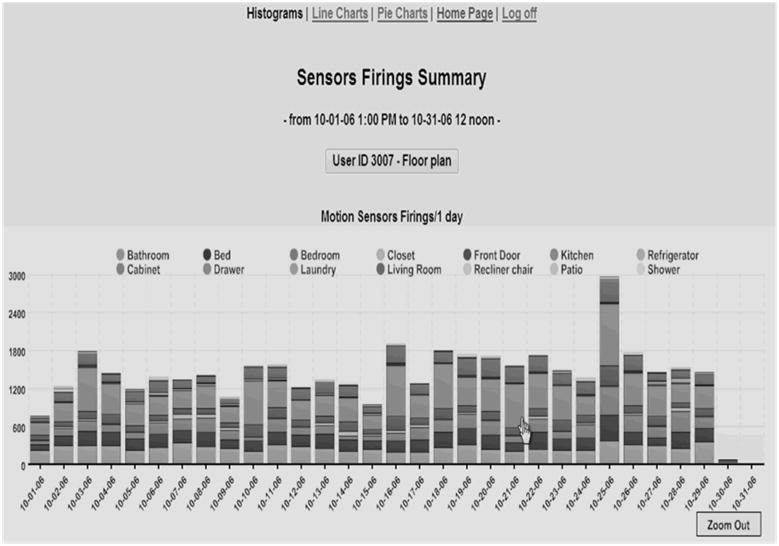

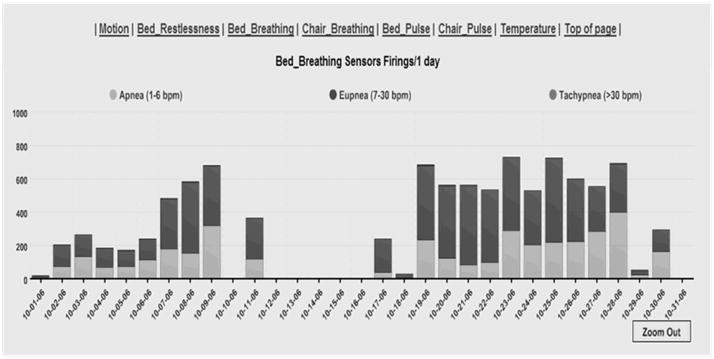

A subgroup of the larger Eldertech research team interested in human-computer interaction began meeting biweekly in April 2007. Members of the interdisciplinary group included nurses with specific expertise in gerontology and others with expertise in health informatics, engineering, clinical medicine, information science, and physical therapy. By August 2007, the interdisciplinary researchers had developed a data sensor interface with the capability to illustrate sensor data in different formats. For example, data could be viewed in histograms, line graphs, and pie charts; users could query sensor data by date and time of day for each sensor location (e.g., bed, bedroom, bathroom, closet, front door, kitchen, living room, shower); and users could drill down into smaller increments of time, ranging across month, week, day, and hour, to observe trends in different sensor data over a length of time. Maps of residents’ apartments indicating sensor placement and range of sensors were integrated into the interface for users. Development occurred through iterative reviews of the sensor interface and discussions between clinicians who had expertise in caring for gerontological populations and designers of the information system. Examples of the interface are shown in Figures 2a and 2b. The interface was then evaluated using sequential approaches, including an expert heuristic review.

Figure 2.

Figure 2a: Activity Motion Sensor Data

Figure 2b: Bed Breathing Sensor Data
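The query and drill-down behavior described for the interface can be illustrated with a minimal sketch. This is not the Eldertech team's implementation; the event records, the `BUCKETS` table, and the `count_firings` function are hypothetical names introduced only to show how sensor firings for one location might be counted at month, week, day, or hour granularity.

```python
from collections import Counter
from datetime import datetime

# Hypothetical sensor firings as (timestamp, location) pairs.
# These are made-up values, not TigerPlace data.
EVENTS = [
    (datetime(2007, 8, 1, 2, 15), "bathroom"),
    (datetime(2007, 8, 1, 2, 40), "bed"),
    (datetime(2007, 8, 1, 12, 5), "kitchen"),
    (datetime(2007, 8, 2, 3, 10), "bathroom"),
    (datetime(2007, 8, 9, 12, 30), "kitchen"),
]

# Each granularity maps a timestamp to the label of the bucket it falls in.
BUCKETS = {
    "month": lambda t: t.strftime("%Y-%m"),
    "week": lambda t: "{}-W{:02d}".format(*t.isocalendar()[:2]),  # ISO year and week
    "day": lambda t: t.strftime("%Y-%m-%d"),
    "hour": lambda t: t.strftime("%Y-%m-%d %H:00"),
}


def count_firings(events, location, start, end, granularity):
    """Count firings for one location in [start, end), grouped by time bucket."""
    bucket = BUCKETS[granularity]
    return Counter(
        bucket(t) for t, loc in events if loc == location and start <= t < end
    )


if __name__ == "__main__":
    august = (datetime(2007, 8, 1), datetime(2007, 9, 1))
    # Drill down from a monthly total to daily and hourly counts.
    for level in ("month", "day", "hour"):
        print(level, dict(count_firings(EVENTS, "bathroom", *august, level)))
```

Moving from a coarser to a finer granularity only changes the bucketing function, which mirrors the month, week, day, and hour views described above.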

Expert review of the sensor interface

Three reviewers who were trained in usability research and independent of the research team were hired to complete an expert review of the sensor data interface (Alexander et al., 2008). This review was completed prior to end user assessments. A heuristic evaluation checklist was created to suit the interface being evaluated, keeping in mind the audience of the Web site--elderly residents, family members, and health care providers. Individual reviews were conducted before the reviewers came together for a meeting to discuss each of the items under the criteria. An overview of this heuristic evaluation is provided (see Table, Supplemental Digital Content 1, which includes a listing of heuristics used, with a brief heuristic description, ratings given by expert reviewers, and selected comments made by the reviewers).

Expert heuristic evaluation of the interface

There were 87 items distributed under the 16 heuristic criteria. Twenty-nine of these items were not applicable to the interface, primarily because the interface was at a very early stage of development. The number of items meeting and not meeting heuristic criteria (or not applicable), percent agreement with applicable criteria, and severity ratings have been described previously (Alexander et al., 2008). Based on this review, the expert team made 26 recommendations for improvement. These recommendations were categorized under four themes derived from the heuristic evaluation: content (n = 4), aesthetic appeal (n = 12), navigation (n = 9), and architecture (n = 1). Interface improvements in each of these categories would make the interface more usable and useful for end users. Interface design issues were addressed by the research team during iterative reviews prior to the usability assessment.
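The counts reported for the heuristic evaluation can be cross-checked with simple arithmetic. The short sketch below uses only the numbers stated in the text; the variable names are illustrative, and the percentage shares are derived here for convenience rather than reported by the reviewers.

```python
# Counts taken from the text of the heuristic evaluation.
total_items = 87
not_applicable = 29
applicable_items = total_items - not_applicable  # items that could be rated

# Recommendations per theme, as reported.
recommendations = {
    "content": 4,
    "aesthetic appeal": 12,
    "navigation": 9,
    "architecture": 1,
}
total_recommendations = sum(recommendations.values())

# Derived share of recommendations per theme (not reported in the source).
shares = {
    theme: round(100 * n / total_recommendations, 1)
    for theme, n in recommendations.items()
}

if __name__ == "__main__":
    print("applicable items:", applicable_items)
    print("total recommendations:", total_recommendations)
    for theme, pct in shares.items():
        print(f"{theme}: {recommendations[theme]} ({pct}%)")
```

The theme counts sum to the 26 recommendations stated in the text, and 58 of the 87 checklist items were applicable to the interface.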

Specific recommendations within the content area included constructing clearer, more descriptive titles (for example, through better labeling methods); adding a description of the interface on the home page, including descriptions of interface utilities; and consistently maintaining the same order of legends and keys throughout.

Aesthetic appeal improves as dialogue on the interface becomes more relevant to the users. The sensor interface could be improved by adding error messages when a user clicks on an item not associated with hyperlinks, such as a graph title. Furthermore, aesthetic appeal would be improved by minimizing the number of new windows that open with every click of the mouse and when using zoom-in features such as date fields. Finally, providing greater distinction between line colors on graphs would improve visibility and readability.

Navigation through the sensor interface could be enhanced by making the hyperlinked texts more visible with conventional hyperlink colors; that is, blue for unvisited sites and purple for visited sites. Another improvement would be to add navigation options that direct users to specific parts of the interface, such as a Home link that would send users back to the home page of the interface where they can choose a new resident. Furthermore, it was recommended to add prompts that need to be approved by the user before the application closes. This feature would prevent users from accidentally exiting a page before they complete their reviews.

Finally, the architecture of the interface could be improved by giving users better options for viewing data; for example, giving the user the ability to decide how they want to look at the data. Architectural options could enhance efficiency of use by enabling users to look at graphs all at one time on one scrollable page or individual graphs one at a time. Anchors could be used after each graph to navigate the user automatically and consistently back to the top of a page to select preferred views of each graph.

Following the expert heuristic review, researchers continued to meet, and the interface was refined using some of the suggestions mentioned. Once the research team had addressed all the recommendations through iterative reviews of the interface, we began conducting usability assessments. Approval for all research was granted through the University’s Institutional Review Board.

Method

Setting

Since 2005, the Eldertech research team has been developing a passive sensor technology system that detects motion activities of residents in an independent living setting, TigerPlace (Rantz et al., 2005). Sensor systems have been installed in the apartments of 34 residents who desire to age in place. The longevity of the sensor data collection enables the accumulation of data and the development of algorithms for identifying declining patient trajectories, such as increased bed restlessness or decline in a resident’s normal daily activity patterns.

Usability Assessments

Both the expert review and end user assessments were completed with the assistance of The Information Experience (IE) Lab located on the campus of the University of Missouri. The IE Lab functions as a testing and evaluation area with network enabled data collection workstations using advanced data collection software such as Morae© usability assessment software (Techsmith, Inc., Okemos, MI).

End user usability assessment

Following the expert review, updates were made to the interface during iterative reviews conducted by the research team to correct some of the findings from the heuristic evaluation before end user assessments were conducted. For example, labels were added to graphs, color schemes were modified, and font sizes were adjusted so that users could find information more easily on the interface. Following the updates, four groups of end users (residents, family members, nurses, and physicians) were recruited to evaluate the interface. The target was 5 members per group. This decision was based on evidence that most usability problems are detected with the first 5 subjects, that running additional subjects is not likely to reveal new information, and that a great return on investment is realized with small groups (Turner, Lewis, & Nielsen, 2006).

Residents who participated in this usability evaluation were frail and had multiple chronic conditions and most had mobility problems, representative of most TigerPlace residents. Therefore, resident usability assessments were conducted at the independent living facility with the assistance of the IE Lab. Family members and health care providers came to the IE Lab for their usability assessment.

Interviews were conducted with each participant individually in a quiet private location. Individual assessments were recorded directly to a computer hard drive for later review and analysis. Participants were informed that they would be participating in a usability study designed to explore their interactions with a sensor data interface. Each testing site had two rooms: one for private usability testing and one for observation of usability participants and capturing interactions using Morae software. Networked computers were used by IE lab staff and other researchers to observe interactions of participants and record observations simultaneously.

Data acquisition

Morae recorder software was used to capture data during each interaction. Observational notes were also recorded by remote observers during the testing. The interaction data included video of the participant using the sensor data interface; audio in the immediate environment; quantitative data such as keystrokes, mouse clicks, and window events; text presented to the user; and webpage changes. Event markers and comments were recorded and are summarized in the heuristic table (see Table, Supplemental Digital Content 1). Event markers were preset to represent situations like user needs help, user frustrated, start task, and end task.
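To make the role of the preset event markers concrete, the following sketch shows one way time on task and marker counts could be derived from a list of timestamped markers. It is an illustration under stated assumptions, not Morae's export format or API: the `Marker` record and the `summarize_task` function are hypothetical, and only the marker labels come from the text.

```python
from dataclasses import dataclass


@dataclass
class Marker:
    seconds: float  # offset from the start of the recording (assumed unit)
    label: str      # one of the preset marker labels named in the text


def summarize_task(markers):
    """Return (duration_seconds, help_count, frustration_count) for one task.

    The task is bounded by the first "start task" marker and the first
    "end task" marker that follows it.
    """
    start = next(m.seconds for m in markers if m.label == "start task")
    end = next(
        m.seconds for m in markers if m.label == "end task" and m.seconds >= start
    )
    inside = [m for m in markers if start <= m.seconds <= end]
    help_count = sum(m.label == "user needs help" for m in inside)
    frustration_count = sum(m.label == "user frustrated" for m in inside)
    return end - start, help_count, frustration_count


# Made-up session for illustration only.
SESSION = [
    Marker(30.0, "start task"),
    Marker(150.0, "user needs help"),
    Marker(320.0, "user frustrated"),
    Marker(400.0, "user needs help"),
    Marker(690.0, "end task"),
]

if __name__ == "__main__":
    duration, helps, frustrations = summarize_task(SESSION)
    print(f"time on task: {duration / 60:.1f} min, help: {helps}, frustrated: {frustrations}")
```

In this sketch the measured duration depends entirely on where the start and end markers are placed, so consistent marker placement across participants matters for any between-group comparison of times.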

Test set-up

Prior to testing, each participant was given instruction on the user interface by one of the research team members (a registered nurse certified in human computer interaction methods and who had experience with the interface) using a training manual designed to provide standardized training about the sensor system for each participant. This instruction provided information on the purpose of the sensor systems, how to navigate through the screens to get through the data, and details about how the data can be used. Each participant also was informed that Morae software was used to capture and record their interactions with the sensor data interface.

Participants were given three scenarios to complete on three distinct residents who had sensors installed in their apartments (see Table, Supplemental Digital Content 2, which provides study training scenarios). Scenarios were developed by expert geriatric registered nurses who were part of the research team investigating the sensor data network. Scenarios were developed around periods when residents experienced known sentinel events (e.g., hospitalization, decline in health, falls). Scenario 1 included sensor data around a period of time when a resident experienced a hospitalization following an acute illness at home. Scenario 2 included a period of time when a resident was not feeling well, had decreased activity levels in the apartment, and was spending more time in bed. Scenario 3 included information on a restless resident who was moving back and forth between a bed and a chair at night in order to get more comfortable during sleep. Actual resident data were de-identified.

Participants were asked to complete each scenario using the sensor data interface; they were asked to think aloud as they progressed through the scenarios, verbally indicating their thought processes as they began to search through the sensor activity data using the scenarios as their guide. The Morae software was activated at the beginning of the instruction for the sensor data interface, and digital recordings were made throughout each scenario for later comparison. Each participant’s interaction was timed using the Morae software.

After the usability test each participant was asked to complete a short survey. The survey asked participants to provide demographic information about their age, job experience, how much web experience they have had, education level, and daily use of web.

Data analysis

Time statistics by activities and tasks were analyzed including total time spent on introduction and tutorials. Timed tests and interface-related issues were compared within the group of residents and clinician users. Results are described using the 16 heuristic categories and summarized qualitative and quantitative data collected during the interviews.

Results

Usability Assessments

Twenty end users--five from each group of residents, family members, nurses, and physicians--participated in this study. Demographics of the users obtained from a short survey collected at the time of assessments are described next followed by results of data collected during user interactions. Total time on activities and scenarios was collected using Morae software.

Residents

Participating residents were all over the age of 70 years. Four females and one male resident completed usability assessments. Two of the users had used computers before; three had never used computers. None of the residents had seen the sensor data interface before.

Generally, the total time to complete activities and tasks on the interface was higher for elderly residents when compared to other users. Every resident took nearly an hour to get through the tutorial. Interviewers halted the interactions at an hour to limit respondent burden during interactions. Most residents only partially completed the scenarios in this amount of time. Residents required assistance by interviewers during their interactions with the sensor data interface. In fact, one resident did not get through the tutorial in order to be able to use scenarios and view sensor data. Residents had difficulty manipulating the mouse, and the interviewer had to assist the residents in entering date ranges into fields, which increased time to complete activities. Residents experienced difficulty manipulating the mouse over text boxes, often repeatedly clicking on parts of the interface just next to text boxes. Other issues were also observed (see Table, Supplemental Digital Content 3, which describes resident interactions).

Family members

Participating family members were between the ages of 35 and 65 years old. Four females and one male were interviewed. Two of these participants had 10 plus years of Internet experience, two had 5–9 years, and one had less than 4 years. Participant relations were son-in-law, daughter, and step-daughter. None of the family members had used the interface before.

Family members’ time spent learning the interface during the tutorial ranged from 7–19 minutes. Similarly, family members individually spent 8–15 minutes reviewing sensor data after each scenario was read and after initial data entry was accomplished to retrieve sensor data. Family members spent more time reviewing sensor data on the third scenario (average 13 minutes) and the least time on scenario two (average 10 minutes).

Nurses

Participating nurses ranged in age from 40 to 60 years old. The nurses were females with 20 or more years of work experience and included one clinical educator, two assistant professors, one nurse clinician, and one nurse care coordinator. All the nurse participants had 9 or more years of Internet experience. None of the nurses had seen the sensor data interface before.

Nurses took nearly 8 minutes to complete the sensor interface tutorial. They consistently spent between 10 and 12 minutes completing each of the scenarios after reading the scenario and providing initial data entry. Nurses spent the most time, nearly 12 minutes, on Scenario 1. They spent the least amount of time, just under 11 minutes, on Scenario 2.

Physicians

Physicians were 40–60 years old and included two females and three males. Practice disciplines were family practice, geriatrics, and general internal medicine. All participants had 10 or more years of Internet experience. Only one of the physicians had seen the interface.

Physicians spent 7–12 minutes learning how to use the interface before moving on to scenarios. Physicians each averaged 9–13 minutes completing scenarios. They spent the most time reviewing sensor data on Scenario 1 (just over 15 minutes). They took the least time (just under 8 minutes) reviewing data related to Scenario 2.

Group observations

Observations from the four user groups were categorized within heuristics (Table 3). Overall, and not surprisingly, a greater number of issues were identified in the resident group, which substantially increased their time to complete activities. For example, residents had more difficulty viewing data; they had some difficulty interpreting graphs, thought there was too much information, and did not understand the terminology. However, all of the users found the information comforting. Family members thought the interface would be very useful for remotely monitoring the health and physical activities of their family; for example, one family member expressed that she would use the sensor data to monitor her mother’s sleep patterns because her mother has Alzheimer’s and tends to forget (see Table, Supplemental Digital Content 4, which provides comments by user group). All residents stated that collecting this information made them feel safer, knowing that someone was watching for changes in activity levels and potential health problems.

Users identified some persistent problems previously identified by the expert review panel using the heuristic criteria (see Table, Supplemental Digital Content 1). For example, under Heuristic #4, the expert reviewers noted color usage in the graphs as problematic. In some instances, colors lacked adequate contrast and were too similar, so locations of sensors within the apartment could be confused. All user groups noted problems with color selections on the interface (Table 3). Another color issue was identified via Heuristic #2, indistinctive color (e.g., it is difficult to discriminate orange and red restlessness). Size of the data points (too small) was identified in Heuristic #7, and font size issues were addressed in Heuristic #12. Most residents had difficulty seeing the information on the screen because the font was too small and there was no way to adjust it on the screen. To overcome this problem, some residents selected contrasting backgrounds of black or white to enhance visualization.

Under Heuristic #10, the experts identified that navigation was difficult and information was not easy to find. Again, the residents had problems because there was too much information displayed or they felt overwhelmed by the charts. All users found some parts of the display confusing; for example, the stove temperature sensor was listed in the same vicinity on the interface with other physiological parameters. The placement and labeling of the stove sensor information confused users because the labels did not represent the users’ terminology consistently (temperature in a health care record usually means body temperature, but in this case it meant stove temperature).

During the test session, the nurses and physicians often used the data to interpret clinical information. For example, health care providers watched for warning signs by paying close attention to resident activities within the apartment. When clinicians noticed changes in activities, they attempted to determine whether the changes preceded a change in patient status, such as increasing bed restlessness prior to hospitalization. Both nurses and physicians found the data useful for interpreting health status. However, residents and family members had more difficulty interpreting the sensor output because they did not know what normal activity was for an individual or age group (see Table, Supplemental Digital Content 4). This could have contributed to their increased time to complete activities. Despite problems noted by users, comments made during interactions illuminate how useful the sensor data could be to assist in monitoring for changing trajectories of frail elderly residents living independently.

Discussion

The results support recent research advocating that older adults have special needs that should be addressed when designing interfaces for them, especially for information as important as health information used to make decisions about their health care (Czaja, Sharit, Nair, & Lee, 2008). Designing health information systems with the needs of older end users in mind is important to maximize their interactions with these devices. Applying user-centric design principles from human factors allows older end users to incorporate wanted technologies into their daily lives more easily and to find the information more useful. A result of enhanced usefulness and usability is greater acceptance and satisfaction with technology and potentially improved patient trajectories (Figure 1). Evaluating human factors during user interactions with clinical information systems should be a requirement before any field implementation. Additionally, end user involvement is critical to identify important problem areas that can be overlooked during iterative reviews by developers who have different requirements and needs.

In this study, although residents, family members, and clinicians found the interface useful for identifying the level of activities for residents who had the sensor systems installed, residents found the data less useful for themselves. Residents indicated they already know their activity levels and therefore they did not find the information helpful. However, residents did report a sense of security knowing that someone was watching out for them when they were alone in their apartment. The sensors and data provided a safer environment for them. All resident users felt the sensor data would be useful for health care providers and family members to have to monitor them for changes in activity levels. All residents interviewed were willing to share their sensor data information with their families and providers.

Clinicians who had experience working with elderly individuals in the community claimed that access to this type of sensor interface data would definitely help them make better decisions in the field about the residents. Usability issues encountered did not deter them from attempting to find and, in most cases, successfully finding altered activity levels within residents’ data. This discovery was completed with a minimum of assistance on the part of the investigator conducting the usability interviews. Clinician users discussed important issues that still need to be addressed with the sensor data and interface, including developing better ways to visualize the data to detect changes from normal baseline activity levels and using terminologies and parameters for physiological measures that match the real world of health care providers. Improving visual acuity and screen readability has the effect of improving clinician interactions with technology by making clinical information more accessible, understandable, and consistent with clinician work. These attributes of clinical information improve information flow along trajectories and can enhance nursing and patient outcomes (Figure 1).

Methodological Issues

Important methodological issues were discovered. First, although a standardized training manual was used, the introduction and tutorial to familiarize users with the interface could have been delivered in a more standardized way. This would ensure that the participants knew all the necessary details about the interface before testing began. Second, to conduct quantitative user analysis, clear instructions should be given to the evaluator for the scenario start and end to compare these times consistently between users and user groups. The users should be asked to read the scenario and their understanding of the scenario should be clarified before starting the usability assessment. Third, evaluators should decide beforehand when tasks or scenarios should begin. Time frames to consider include when the user starts reading the scenario, when the user starts typing in the details to access the data related to the scenario, or when the user starts interpreting the graphs that are illustrated. Finally, the evaluator should decide beforehand what would be the task end measure; for example, when the user says they are done or when the user clicks on the Home button.
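One way to make the start and end decisions described above explicit is to fix the markers before testing and compute every participant's time on task from the same pair of events. The following is a minimal sketch under stated assumptions, not the procedure used in this study: the event labels (such as "scenario_read_start", "first_input", and "home_clicked") and the data layout are hypothetical, and a real analysis would take its timestamps from the session recordings.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Event:
    t: float    # seconds since the start of the recording
    name: str   # hypothetical marker label chosen before testing


def time_on_task(events, start_marker, end_marker):
    """Seconds from the first start_marker to the first end_marker at or after it."""
    start = next((e.t for e in events if e.name == start_marker), None)
    if start is None:
        raise ValueError(f"start marker {start_marker!r} not found")
    end = next(
        (e.t for e in events if e.name == end_marker and e.t >= start), None
    )
    if end is None:
        raise ValueError(f"end marker {end_marker!r} not found after start")
    return end - start


def mean_time_by_group(sessions, start_marker, end_marker):
    """Average time on task per user group.

    sessions is a list of (group_label, events) pairs, one per participant.
    The same start and end markers are applied to every participant so that
    times are comparable between users and user groups.
    """
    by_group = {}
    for group, events in sessions:
        duration = time_on_task(events, start_marker, end_marker)
        by_group.setdefault(group, []).append(duration)
    return {group: mean(times) for group, times in by_group.items()}
```

Choosing "scenario_read_start" rather than "first_input" as the start marker would fold reading time into the task time, which is why the choice needs to be made, and documented, before data collection.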

Conclusion

The effectiveness of clinical information systems in providing useful information for clinical decision making depends on the usability of the system, data presentation, the match between the real world of end users and the system, and the satisfaction of users during interactions. There are important considerations for different types of users based on age, vocation, and experience. This is particularly true in health care settings, where users span a wide range of ages and experience and hold many types of jobs. Evaluation of human-to-computer interactions in health care settings will lead to improvements in the development of clinical information systems and greater understanding of how information can be used, which will positively impact care delivery processes, clinical decision making, and health care outcomes.

Supplementary Material

Supplemental Digital Content 1. Table that includes a listing of heuristics used. Doc.

Supplemental Digital Content 2. Table that provides study training scenarios. Doc.

Supplemental Digital Content 3. Table that describes resident interactions. Doc.

Supplemental Digital Content 4. Table that provides comments by user group. Doc.

Acknowledgments

This project was supported by grant K08HS016862 from the Agency for Healthcare Research and Quality, grant 90AM3013 from the United States Administration on Aging’s Technology to Enhance Aging in Place at TigerPlace, and grant IIS-0428420 from the National Science Foundation’s ITR Technology Interventions for Elders with Mobility and Cognitive Impairments projects. The content is solely the responsibility of the authors and does not necessarily represent the official views of the Agency for Healthcare Research and Quality, the U.S. Administration on Aging, or the National Science Foundation.

Contributor Information

Gregory L. Alexander, Sinclair School of Nursing, University of Missouri, Columbia, Missouri.

Bonnie J. Wakefield, Sinclair School of Nursing, University of Missouri, Columbia, Missouri.

Marilyn Rantz, Sinclair School of Nursing, University of Missouri, Columbia, Missouri.

Marjorie Skubic, Electrical and Computer Engineering, University of Missouri, Columbia, Missouri.

Myra A. Aud, Sinclair School of Nursing, University of Missouri, Columbia, Missouri.

Sanda Erdelez, School of Information Science and Learning Technologies, University of Missouri, Columbia, Missouri.

Said Al Ghenaimi, School of Information Science and Learning Technologies, University of Missouri, Columbia, Missouri.

References

- Alexander GL. The nurse-patient trajectory framework. MEDINFO. 2007:910–914.

- Alexander GL. Human factors. In: Saba V, McCormick K, editors. Essentials of nursing informatics. 5th ed. New York, NY: McGraw-Hill; 2010.

- Alexander GL, Rantz MJ, Skubic M, Aud MA, Wakefield B, Florea E, et al. Sensor systems for monitoring functional status in assisted living facility residents. Research in Gerontological Nursing. 2008;1:238–244. doi: 10.3928/19404921-20081001-01.

- Alexander GL, Staggers N. A systematic review of the designs of clinical technology: Findings and recommendations for future research. ANS: Advances in Nursing Science. 2009;32:252–279. doi: 10.1097/ANS.0b013e3181b0d737.

- Bakken S, Stone PW, Larson EL. A nursing informatics research agenda for 2008–18: Contextual influences and key components. Nursing Outlook. 2008;56:206–214. doi: 10.1016/j.outlook.2008.06.007.

- Courtney KL, Demiris G, Hensel BK. Obtrusiveness of information-based assistive technologies as perceived by older adults in residential care facilities: A secondary analysis. Medical Informatics and the Internet in Medicine. 2007;32:241–249. doi: 10.1080/14639230701447735.

- Cresci MK, Yarandi HN, Morrell RW. The digital divide and urban older adults. Computers, Informatics, Nursing. 2010;28:88–94. doi: 10.1097/NCN.0b013e3181cd8184.

- Czaja SJ, Nair SN. Human Factors Engineering and Systems Design. In: Salvendy G, editor. Handbook of human factors and ergonomics. 3rd ed. Hoboken, NJ: Wiley; 2006. pp. 32–53.

- Czaja SJ, Sharit J, Nair SN, Lee CC. Older adults and the use of e-health information. Gerontechnology. 2008;7:97.

- Feeney-Mahoney D, Mahoney EL, Liss E. AT EASE: Automated Technology for Elder Assessment, Safety, and Environmental monitoring. Gerontechnology. 2009;8:11–25.

- Rantz MJ, Marek KD, Aud M, Tyrer HW, Skubic M, Demiris G, et al. A technology and nursing collaboration to help older adults age in place. Nursing Outlook. 2005;53:40–45. doi: 10.1016/j.outlook.2004.05.004.

- Rantz MJ, Skubic M, Alexander GL, Popescu M, Aud MA, Wakefield BJ, et al. Developing a comprehensive electronic health record to enhance nursing care coordination, use of technology, and research. Journal of Gerontological Nursing. 2010;36:13–17. doi: 10.3928/00989134-20091204-02.

- Reder S, Ambler G, Philipose M, Hedrick S. Technology and Long-term Care (TLC): A pilot evaluation of remote monitoring of elders. Gerontechnology. 2010;9:18–31.

- Skubic M, Alexander GL, Popescu M, Rantz MJ, Keller J. A smart home application to eldercare: Current status and lessons learned. Technology and Health Care. 2009;17:183–201. doi: 10.3233/THC-2009-0551.

- Taha J, Sharit J, Czaja SJ. Use of and satisfaction with sources of health information among older internet users and nonusers. The Gerontologist. 2009;49:663–673. doi: 10.1093/geront/gnp058.

- Turner CW, Lewis JR, Nielsen J. Determining usability test sample size. In: Karwowski W, editor. International encyclopedia of ergonomics and human factors. 2nd ed. Boca Raton, FL: CRC Press; 2006. pp. 3084–3088.
