Summary

We consider the supervised classification setting, in which the data consist of p features measured on n observations, each of which belongs to one of K classes. Linear discriminant analysis (LDA) is a classical method for this problem. However, in the high-dimensional setting where p ≫ n, LDA is not appropriate for two reasons. First, the standard estimate for the within-class covariance matrix is singular, and so the usual discriminant rule cannot be applied. Second, when p is large, it is difficult to interpret the classification rule obtained from LDA, since it involves all p features. We propose penalized LDA, a general approach for penalizing the discriminant vectors in Fisher’s discriminant problem in a way that leads to greater interpretability. The discriminant problem is not convex, so we use a minorization-maximization approach in order to efficiently optimize it when convex penalties are applied to the discriminant vectors. In particular, we consider the use of L1 and fused lasso penalties. Our proposal is equivalent to recasting Fisher’s discriminant problem as a biconvex problem. We evaluate the performances of the resulting methods on a simulation study, and on three gene expression data sets. We also survey past methods for extending LDA to the high-dimensional setting, and explore their relationships with our proposal.

Keywords: classification, feature selection, high dimensional, lasso, linear discriminant analysis, supervised learning

1. Introduction

In this paper, we consider the classification setting. The data consist of an n × p matrix X with p features measured on n observations, each of which belongs to one of K classes. Linear discriminant analysis (LDA) is a well-known method for this problem in the classical setting where n > p. However, in high dimensions (when the number of features is large relative to the number of observations) LDA faces two problems:

The maximum likelihood estimate of the within-class covariance matrix is approximately singular (if p is almost as large as n) or singular (if p > n). Even if the estimate is not singular, the resulting classifier can suffer from high variance, resulting in poor performance.

When p is large, the resulting classifier is difficult to interpret, since the classification rule involves a linear combination of all p features.

The LDA classifier can be derived in three different ways, which we will refer to as the normal model, the optimal scoring problem, and Fisher’s discriminant problem (see e.g. Mardia et al. 1979, Hastie et al. 2009). In recent years, a number of papers have extended LDA to the high-dimensional setting in such a way that the resulting classifier involves a sparse linear combination of the features (see e.g. Tibshirani et al. 2002, 2003, Grosenick et al. 2008, Leng 2008, Clemmensen et al. 2011). These methods involve regularizing or penalizing the log likelihood for the normal model, or the optimal scoring problem, by applying an L1 or lasso penalty (Tibshirani 1996).

In this paper, we instead approach the problem through Fisher’s discriminant framework, which is in our opinion the most natural of the three problems that result in LDA. The resulting problem is nonconvex. We overcome this difficulty using a minorization-maximization approach (see e.g. Lange et al. 2000, Hunter & Lange 2004, Lange 2004), which allows us to solve the problem efficiently when convex penalties are applied to the discriminant vectors. This is equivalent to recasting Fisher’s discriminant problem as a biconvex problem that can be optimized using a simple iterative algorithm, and is closely related to the sparse principal components analysis proposal of Witten et al. (2009).

To our knowledge, our approach to penalized LDA is novel. Clemmensen et al. (2011) state the same criterion that we use, but then go on to solve instead a closely related optimal scoring problem. Trendafilov & Jolliffe (2007) consider a closely related problem, but they propose a specialized algorithm that can be applied only in the case of L1 penalties on the discriminant vectors; moreover, they do not consider the high-dimensional setting. In this paper, we take a more general approach that has a number of attractive features:

It results from a natural criterion for which a simple optimization strategy is provided.

A reduced rank solution can be obtained.

It provides a natural way to enforce a diagonal estimate for the within-class covariance matrix, which has been shown to yield good results in the high-dimensional setting (see e.g. Dudoit et al. 2001, Tibshirani et al. 2003, Bickel & Levina 2004).

It yields interpretable discriminant vectors, where the concept of interpretability can be chosen based on the problem at hand. Interpretability is achieved via application of convex penalties to the discriminant vectors. For instance, if L1 penalties are used, then the resulting discriminant vectors are sparse.

This paper is organized as follows. We review Fisher’s discriminant problem in Section 2, we review the principle behind minorization-maximization algorithms in Section 3, and we propose our approach for penalized classification using Fisher’s linear discriminant in Section 4. A simulation study and applications to gene expression data are presented in Section 5. Since many proposals have been made for sparse LDA, we review past work and discuss the relationships between various approaches in Section 6. In Section 7, we discuss connections between our proposal and past work. Section 8 contains the Discussion.

2. Fisher’s discriminant problem

2.1. Fisher’s discriminant problem with full rank within-class covariance

Let X be an n × p matrix with observations on the rows and features on the columns. We assume that the features are centered to have mean zero, and we let Xj denote feature/column j and xi denote observation/row i. Ck ⊂ {1, …, n} contains the indices of the observations in class k, and nk = |Ck|, with n1 + ⋯ + nK = n. The standard estimate for the within-class covariance matrix Σw is given by

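$$\hat{\Sigma}_w = \frac{1}{n}\sum_{k=1}^{K}\sum_{i \in C_k}(x_i - \hat{\mu}_k)(x_i - \hat{\mu}_k)^T \tag{1}$$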

where μ̂k is the sample mean vector for class k. In this section, we assume that Σ̂w is non-singular. Furthermore, the standard estimate for the between-class covariance matrix Σb is given by

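$$\hat{\Sigma}_b = \frac{1}{n}X^T X - \hat{\Sigma}_w = \frac{1}{n}\sum_{k=1}^{K} n_k \hat{\mu}_k \hat{\mu}_k^T \tag{2}$$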

In later sections, we will make use of the fact that $\hat{\Sigma}_b = \frac{1}{n}X^T Y (Y^T Y)^{-1} Y^T X$, where Y is an n × K matrix with Yik an indicator of whether observation i is in class k.
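As an illustration of the two covariance estimates and of the indicator-matrix identity, the following minimal sketch computes both on simulated data. It assumes the usual maximum likelihood forms (division by n, centered features); the function names `class_indicator` and `within_between` are hypothetical and not taken from any package.

```python
import numpy as np

def class_indicator(labels, n_classes):
    """n x K indicator matrix Y with Y[i, k] = 1 if observation i is in class k."""
    Y = np.zeros((len(labels), n_classes))
    Y[np.arange(len(labels)), labels] = 1.0
    return Y

def within_between(X, labels, n_classes):
    """Within- and between-class covariance estimates for column-centered X."""
    n, p = X.shape
    Sw = np.zeros((p, p))
    Sb = np.zeros((p, p))
    for k in range(n_classes):
        Xk = X[labels == k]
        mu_k = Xk.mean(axis=0)
        R = Xk - mu_k                      # residuals around the class mean
        Sw += R.T @ R / n
        Sb += len(Xk) * np.outer(mu_k, mu_k) / n
    return Sw, Sb

rng = np.random.default_rng(0)
labels = np.repeat(np.arange(3), 10)
X = rng.normal(size=(30, 5)) + labels[:, None]
X = X - X.mean(axis=0)                     # center the features
Sw, Sb = within_between(X, labels, 3)
Y = class_indicator(labels, 3)
# Between-class estimate written through the indicator matrix Y
Sb_alt = X.T @ Y @ np.linalg.inv(Y.T @ Y) @ Y.T @ X / len(X)
```

The two expressions for the between-class estimate agree up to rounding, and the within- and between-class estimates sum to the total covariance of the centered data.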

Fisher’s discriminant problem seeks a low-dimensional projection of the observations such that the between-class variance is large relative to the within-class variance. That is, we sequentially solve

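$$\underset{\beta_k}{\text{maximize}}\ \left\{\beta_k^T \hat{\Sigma}_b \beta_k\right\} \quad \text{subject to } \beta_k^T \hat{\Sigma}_w \beta_k \le 1,\ \ \beta_k^T \hat{\Sigma}_w \beta_i = 0 \ \ \forall i < k. \tag{3}$$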

Note that the problem (3) is generally written with the inequality constraint replaced with an equality constraint, but the two are equivalent if Σ̂w has full rank, as is shown in the Appendix. We will refer to the solution β̂k to (3) as the kth discriminant vector. In general, there are K − 1 nontrivial discriminant vectors.

A classification rule is obtained by computing Xβ̂1, …, Xβ̂K − 1 and assigning each observation to its nearest centroid in this transformed space. Alternatively, one can transform the observations using only the first k < K − 1 discriminant vectors in order to perform reduced rank classification. LDA derives its name from the fact that the classification rule involves a linear combination of the features.

One can solve (3) by substituting $\tilde{\beta}_k = \hat{\Sigma}_w^{1/2}\beta_k$, where $\hat{\Sigma}_w^{1/2}$ is the symmetric matrix square root of Σ̂w. Then, Fisher’s discriminant problem is reduced to a standard eigenproblem. In fact, from (2), it is clear that Fisher’s discriminant problem is closely related to principal components analysis on the class centroid matrix.
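The reduction to an eigenproblem can be sketched as follows, assuming a positive definite within-class estimate. The function name `fisher_directions` and the simulated data are illustrative only.

```python
import numpy as np

def fisher_directions(Sw, Sb, n_vectors):
    """Solve Fisher's problem through the substitution beta_tilde = Sw^{1/2} beta."""
    evals, evecs = np.linalg.eigh(Sw)                 # Sw assumed positive definite
    Sw_inv_half = evecs @ np.diag(evals ** -0.5) @ evecs.T
    M = Sw_inv_half @ Sb @ Sw_inv_half                # symmetric, so eigh applies
    w, V = np.linalg.eigh(M)                          # eigenvalues in ascending order
    order = np.argsort(w)[::-1][:n_vectors]
    return Sw_inv_half @ V[:, order], w[order]        # map back to beta

rng = np.random.default_rng(1)
labels = np.repeat(np.arange(3), 20)
X = rng.normal(size=(60, 4)) + 0.8 * labels[:, None]
X -= X.mean(axis=0)
means = np.vstack([X[labels == k].mean(axis=0) for k in range(3)])
Sb = (means.T * 20) @ means / 60                      # 20 observations per class
Sw = X.T @ X / 60 - Sb
B, w = fisher_directions(Sw, Sb, 2)
```

The returned vectors are orthonormal with respect to the within-class estimate, and the between-class variance along each of them equals the corresponding eigenvalue.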

2.2. Existing methods for extending Fisher’s discriminant problem to the p > n setting

In high dimensions, there are two reasons that problem (3) does not lead to a suitable classifier:

Σ̂w is singular. Any discriminant vector that is in the null space of Σ̂w but not in the null space of Σ̂b can result in an arbitrarily large value of the objective.

The resulting classifier is not interpretable when p is very large, because the discriminant vectors contain p elements that have no particular structure.

A number of modifications to Fisher’s discriminant problem have been proposed to address the singularity problem. Krzanowski et al. (1995) consider modifying (3) by instead seeking a unit vector β that maximizes βTΣ̂bβ subject to βTΣ̂wβ = 0, and Tebbens & Schlesinger (2007) further require that the solution does not lie in the null space of Σ̂b. Others have proposed modifying (3) by using a positive definite estimate of Σw. For instance, Friedman (1989), Dudoit et al. (2001), and Bickel & Levina (2004) consider the use of the diagonal estimate

$$\operatorname{diag}\left(\hat{\sigma}_1^2, \ldots, \hat{\sigma}_p^2\right) \tag{4}$$

where $\hat{\sigma}_j^2$ is the jth diagonal element of Σ̂w (1). Other positive definite estimates for Σw are suggested in Krzanowski et al. (1995) and Xu et al. (2009). The resulting criterion is

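$$\underset{\beta_k}{\text{maximize}}\ \left\{\beta_k^T \hat{\Sigma}_b \beta_k\right\} \quad \text{subject to } \beta_k^T \tilde{\Sigma}_w \beta_k \le 1,\ \ \beta_k^T \tilde{\Sigma}_w \beta_i = 0 \ \ \forall i < k, \tag{5}$$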

where Σ̃w is a positive definite estimate for Σw. The criterion (5) addresses the singularity issue, but not the interpretability issue.

In this paper, we extend (5) so that the resulting discriminant vectors are interpretable. We will make use of the following proposition, which provides a reformulation of (5) that results in the same solution:

Proposition 1

The solution β̂k to (5) also solves the problem

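$$\underset{\beta_k}{\text{maximize}}\ \left\{\beta_k^T \hat{\Sigma}_b^k \beta_k\right\} \quad \text{subject to } \beta_k^T \tilde{\Sigma}_w \beta_k \le 1, \tag{6}$$

where

$$\hat{\Sigma}_b^k = \frac{1}{n} X^T Y (Y^T Y)^{-1/2} P_k^{\perp} (Y^T Y)^{-1/2} Y^T X \tag{7}$$

and $P_k^{\perp}$ is defined as follows: $P_1^{\perp} = I$, and for k > 1, $P_k^{\perp}$ is an orthogonal projection matrix into the space that is orthogonal to $(Y^T Y)^{-1/2} Y^T X \hat{\beta}_i$ for all i < k.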

Throughout this paper, Σ̂w will always refer to the standard maximum likelihood estimate of Σw (1), whereas Σ̃w will refer to some positive definite estimate of Σw for which the specific form will depend on the context.

3. A brief review of minorization algorithms

In this paper, we will make use of a minorization-maximization (or simply minorization) algorithm, as described for instance in Lange et al. (2000), Hunter & Lange (2004), and Lange (2004). Consider the problem

$$\underset{\beta}{\text{maximize}}\ \{f(\beta)\}. \tag{8}$$

If f is a concave function, then standard tools from convex optimization (see e.g. Boyd & Vandenberghe 2004) can be used to solve (8). If not, solving (8) can be difficult. (We note here that minimization of a convex function is a convex problem, as is maximization of a concave function. Hence, (8) is a convex problem if f(β) is concave in β. For non-concave f(β) – for instance if f(β) is convex – (8) is not a convex problem.)

Minorization refers to a general strategy for maximizing non-concave functions. The function g(β|β (m)) is said to minorize the function f(β) at the point β (m) if

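$$f(\beta^{(m)}) = g(\beta^{(m)} \mid \beta^{(m)}), \qquad f(\beta) \ge g(\beta \mid \beta^{(m)}) \ \ \forall \beta. \tag{9}$$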

A minorization algorithm for solving (8) initializes β (0), and then iterates:

$$\beta^{(m+1)} = \underset{\beta}{\arg\max}\ \left\{g(\beta \mid \beta^{(m)})\right\}. \tag{10}$$

Then by (9),

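$$f(\beta^{(m+1)}) \ge g(\beta^{(m+1)} \mid \beta^{(m)}) \ge g(\beta^{(m)} \mid \beta^{(m)}) = f(\beta^{(m)}). \tag{11}$$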

This means that in each iteration the objective is nondecreasing. However, in general we do not expect to arrive at the global optimum of (8) using a minorization approach: global optima for non-convex problems are very hard to obtain, and a local optimum is the best we can hope for except in specific special cases. Different initial values for β (0) can be tried and the solution resulting in the largest objective value can be chosen. A good minorization function is one for which (10) is easily solved. For instance, if g(β|β (m)) is concave in β then standard convex optimization tools can be applied.
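A toy instance may help fix ideas. The sketch below maximizes a convex quadratic over the unit ball, a problem chosen here purely for illustration, by repeatedly maximizing the tangent (linear) minorizer at the current iterate. The function name `minorize_maximize` is hypothetical.

```python
import numpy as np

def minorize_maximize(A, beta0, n_iter=50):
    """Maximize beta^T A beta over the unit ball (A positive semidefinite) by
    repeatedly maximizing the minorizer 2 beta^T A beta_m - beta_m^T A beta_m."""
    beta = beta0 / np.linalg.norm(beta0)
    history = [beta @ A @ beta]
    for _ in range(n_iter):
        grad = A @ beta
        # A linear function on the unit ball is maximized at the normalized gradient.
        beta = grad / np.linalg.norm(grad)
        history.append(beta @ A @ beta)
    return beta, np.array(history)

rng = np.random.default_rng(2)
M = rng.normal(size=(6, 6))
A = M @ M.T                                  # positive semidefinite by construction
beta, history = minorize_maximize(A, rng.normal(size=6))
```

The recorded objective values never decrease and never exceed the largest eigenvalue of A, which is the global maximum for this toy problem. In this special case the update coincides with the power method.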

In the next section, we use a minorization approach to develop an algorithm for our proposal for penalized LDA.

4. The penalized LDA proposal

4.1. The general form of penalized LDA

We would like to modify the problem (5) by imposing penalty functions on the discriminant vectors. We define the first penalized discriminant vector β̂1 to be the solution to the problem

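$$\underset{\beta_1}{\text{maximize}}\ \left\{\beta_1^T \hat{\Sigma}_b \beta_1 - P_1(\beta_1)\right\} \quad \text{subject to } \beta_1^T \tilde{\Sigma}_w \beta_1 \le 1, \tag{12}$$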

where Σ̃w is a positive definite estimate for Σw and where P1 is a convex penalty function. In this paper, we will be most interested in the case where Σ̃w is the diagonal estimate (4), since it has been shown that using a diagonal estimate for Σw can lead to good classification results when p ≫ n (see e.g. Tibshirani et al. 2002, Bickel & Levina 2004). Note that (12) is closely related to penalized principal components analysis, as described for instance in Jolliffe et al. (2003) and Witten et al. (2009) – in fact, it would be exactly penalized principal components analysis if Σ̃w were the identity.

To obtain multiple discriminant vectors, rather than requiring that subsequent discriminant vectors be orthogonal with respect to Σ̃w - a difficult task for a general convex penalty function - we instead make use of Proposition 1. We define the kth penalized discriminant vector β̂k to be the solution to

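$$\underset{\beta_k}{\text{maximize}}\ \left\{\beta_k^T \hat{\Sigma}_b^k \beta_k - P_k(\beta_k)\right\} \quad \text{subject to } \beta_k^T \tilde{\Sigma}_w \beta_k \le 1, \tag{13}$$

where $\hat{\Sigma}_b^k$ is given by (7), with $P_k^{\perp}$ an orthogonal projection matrix into the space that is orthogonal to $(Y^T Y)^{-1/2} Y^T X \hat{\beta}_i$ for all i < k, and $P_1^{\perp} = I$. Here Pk is a convex penalty function on the kth discriminant vector. Note that (12) follows from (13) with k = 1.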

In general, the problem (13) cannot be solved using tools from convex optimization, because it involves maximizing an objective function that is not concave. We apply a minorization algorithm to solve it. For any positive semidefinite matrix A, f(β) = βTAβ is convex in β. Thus, for a fixed value of β (m),

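$$f(\beta) \ge f(\beta^{(m)}) + (\beta - \beta^{(m)})^T \nabla f(\beta^{(m)}) = 2\beta^T A \beta^{(m)} - \beta^{(m)T} A \beta^{(m)} \tag{14}$$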

for any β, and equality holds when β = β (m). Therefore,

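$$g(\beta_k \mid \beta^{(m)}) = 2\beta_k^T \hat{\Sigma}_b^k \beta^{(m)} - \beta^{(m)T} \hat{\Sigma}_b^k \beta^{(m)} - P_k(\beta_k) \tag{15}$$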

minorizes the objective of (13) at β (m). Moreover, since Pk is a convex function, g(βk|β(m)) is concave in βk and hence can be maximized using convex optimization tools. We can use (15) as the basis for a minorization algorithm to find the kth penalized discriminant vector. The algorithm assumes that the first k − 1 penalized discriminant vectors have already been computed.

Algorithm 1: Obtaining the kth penalized discriminant vector

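(a) If k > 1, define an orthogonal projection matrix $P_k^{\perp}$ that projects onto the space that is orthogonal to $(Y^T Y)^{-1/2} Y^T X \hat{\beta}_i$ for all i < k. Let $P_1^{\perp} = I$.

(b) Let $\hat{\Sigma}_b^k = \frac{1}{n} X^T Y (Y^T Y)^{-1/2} P_k^{\perp} (Y^T Y)^{-1/2} Y^T X$. Note that $\hat{\Sigma}_b^1 = \hat{\Sigma}_b$.

(c) Let $\hat{\beta}_k^{(0)}$ be the first eigenvector of $\tilde{\Sigma}_w^{-1} \hat{\Sigma}_b^k$.

(d) For m = 1, 2, … until convergence: let $\hat{\beta}_k^{(m)}$ be the solution to

$$\underset{\beta_k}{\text{maximize}}\ \left\{2\beta_k^T \hat{\Sigma}_b^k \hat{\beta}_k^{(m-1)} - P_k(\beta_k)\right\} \quad \text{subject to } \beta_k^T \tilde{\Sigma}_w \beta_k \le 1. \tag{16}$$

Let β̂k denote the solution at convergence.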

Of course, the solution to (16) will depend on the form of the convex function Pk. In the next section, we will consider two specific forms for Pk.

Once the penalized discriminant vectors have been computed, classification is straightforward: as in the case of classical LDA, we compute Xβ̂1, …, Xβ̂K − 1 and assign each observation to its nearest centroid in this transformed space. To perform reduced rank classification, we transform the observations using only the first k < K − 1 penalized discriminant vectors.
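The nearest-centroid rule in the transformed space can be sketched as below. The sketch assumes Euclidean distance in the projected space; the function name `nearest_centroid_predict` and the toy data are illustrative only.

```python
import numpy as np

def nearest_centroid_predict(X_train, labels, B, X_new):
    """Assign each row of X_new to the nearest class centroid after projecting onto B."""
    Z_train, Z_new = X_train @ B, X_new @ B
    classes = np.unique(labels)
    centroids = np.vstack([Z_train[labels == k].mean(axis=0) for k in classes])
    # Squared Euclidean distances between projected points and centroids, shape (n_new, K)
    d2 = ((Z_new[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return classes[np.argmin(d2, axis=1)]

# Toy data: three well-separated classes and two hand-picked projection vectors.
means = np.array([[0.0, 0.0, 0.0], [10.0, 0.0, 0.0], [0.0, 10.0, 0.0]])
labels = np.repeat(np.arange(3), 5)
rng = np.random.default_rng(0)
X_train = means[labels] + 0.01 * rng.normal(size=(15, 3))
B = np.eye(3)[:, :2]          # stands in for the discriminant vectors
pred = nearest_centroid_predict(X_train, labels, B, X_train)
```

Reduced rank classification corresponds to passing only the leading columns of `B`.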

4.2. Penalized LDA-L1 and penalized LDA-FL

4.2.1. Penalized LDA-L1

We define penalized LDA-L1 to be the solution to (13) with an L1 penalty,

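$$\underset{\beta_k}{\text{maximize}}\ \left\{\beta_k^T \hat{\Sigma}_b^k \beta_k - \lambda_k \sum_{j=1}^{p} |\hat{\sigma}_j \beta_{kj}|\right\} \quad \text{subject to } \beta_k^T \tilde{\Sigma}_w \beta_k \le 1. \tag{17}$$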

When the tuning parameter λk is large, some elements of the solution β̂k will be exactly equal to zero. In (17), σ̂j is the within-class standard deviation for feature j; the inclusion of σ̂j in the penalty has the effect that features that vary more within each class undergo greater penalization. Penalized LDA-L1 is appropriate if we want to obtain a sparse classifier - that is, a classifier for which the decision rule involves only a subset of the features. In particular, the resulting discriminant vectors are sparse, so penalized LDA-L1 amounts to projecting the data onto a low-dimensional subspace that involves only a subset of the features.

To solve (17), we use the minorization approach outlined in Algorithm 1. Step (d) can be written as

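$$\underset{\beta_k}{\text{maximize}}\ \left\{2\beta_k^T \hat{\Sigma}_b^k \hat{\beta}_k^{(m-1)} - \lambda_k \sum_{j=1}^{p} |\hat{\sigma}_j \beta_{kj}|\right\} \quad \text{subject to } \beta_k^T \tilde{\Sigma}_w \beta_k \le 1. \tag{18}$$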

The solution to (18) is given in Proposition 2 in Section 4.2.3.

4.2.2. Penalized LDA-FL

We define penalized LDA-FL to be the solution to the problem (13) with a fused lasso penalty (Tibshirani et al. 2005):

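$$\underset{\beta_k}{\text{maximize}}\ \left\{\beta_k^T \hat{\Sigma}_b^k \beta_k - \lambda_k \sum_{j=1}^{p} |\hat{\sigma}_j \beta_{kj}| - \gamma_k \sum_{j=2}^{p} |\hat{\sigma}_j \beta_{kj} - \hat{\sigma}_{j-1} \beta_{k,j-1}|\right\} \quad \text{subject to } \beta_k^T \tilde{\Sigma}_w \beta_k \le 1. \tag{19}$$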

When the nonnegative tuning parameter λk is large then the resulting discriminant vector will be sparse in the features, and when the nonnegative tuning parameter γk is large then the discriminant vector will be piecewise constant. This classifier is appropriate if the features are ordered on a line, and one believes that the true underlying signal is sparse and piecewise constant.

To solve (13), we again apply Algorithm 1. Step (d) can be written as

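$$\underset{\beta_k}{\text{maximize}}\ \left\{2\beta_k^T \hat{\Sigma}_b^k \hat{\beta}_k^{(m-1)} - \lambda_k \sum_{j=1}^{p} |\hat{\sigma}_j \beta_{kj}| - \gamma_k \sum_{j=2}^{p} |\hat{\sigma}_j \beta_{kj} - \hat{\sigma}_{j-1} \beta_{k,j-1}|\right\} \quad \text{subject to } \beta_k^T \tilde{\Sigma}_w \beta_k \le 1. \tag{20}$$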

Proposition 2 in Section 4.2.3 provides the solution to (20).

4.2.3. The minorization step for penalized LDA-L1 and penalized LDA-FL

Now we present Proposition 2, which provides a solution to (18) and (20). In other words, Proposition 2 provides details for performing Step (d) in Algorithm 1 for penalized LDA-L1 and penalized LDA-FL.

Proposition 2

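(a) To solve (18), we first solve the problem

$$\underset{d}{\text{minimize}}\ \left\{d^T \tilde{\Sigma}_w d - 2 d^T \hat{\Sigma}_b^k \hat{\beta}_k^{(m-1)} + \lambda_k \sum_{j=1}^{p} |\hat{\sigma}_j d_j|\right\}. \tag{21}$$

If d̂ = 0 then $\hat{\beta}_k^{(m)} = 0$. Otherwise, $\hat{\beta}_k^{(m)} = \hat{d} / \sqrt{\hat{d}^T \tilde{\Sigma}_w \hat{d}}$.

(b) To solve (20), we first solve the problem

$$\underset{d}{\text{minimize}}\ \left\{d^T \tilde{\Sigma}_w d - 2 d^T \hat{\Sigma}_b^k \hat{\beta}_k^{(m-1)} + \lambda_k \sum_{j=1}^{p} |\hat{\sigma}_j d_j| + \gamma_k \sum_{j=2}^{p} |\hat{\sigma}_j d_j - \hat{\sigma}_{j-1} d_{j-1}|\right\}. \tag{22}$$

If d̂ = 0 then $\hat{\beta}_k^{(m)} = 0$. Otherwise, $\hat{\beta}_k^{(m)} = \hat{d} / \sqrt{\hat{d}^T \tilde{\Sigma}_w \hat{d}}$.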

The proof is given in the Appendix. Some comments on Proposition 2 are as follows:

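If Σ̃w is the diagonal estimate (4), then the solution to (21) is

$$\hat{d}_j = \frac{1}{\hat{\sigma}_j^2}\, S\!\left(\left(\hat{\Sigma}_b^k \hat{\beta}_k^{(m-1)}\right)_j,\ \frac{\lambda_k \hat{\sigma}_j}{2}\right), \tag{23}$$

where S is the soft-thresholding operator, defined as

$$S(x, a) = \operatorname{sign}(x)\,(|x| - a)_+ \tag{24}$$

and applied componentwise. To see why, note that differentiating (21) with respect to dj indicates that the solution will satisfy

$$2\hat{\sigma}_j^2 d_j - 2\left(\hat{\Sigma}_b^k \hat{\beta}_k^{(m-1)}\right)_j + \lambda_k \hat{\sigma}_j \Gamma_j = 0, \tag{25}$$

where Γj is the subgradient of |dj|, defined as

$$\Gamma_j = \begin{cases} 1 & \text{if } d_j > 0, \\ -1 & \text{if } d_j < 0, \\ a & \text{if } d_j = 0, \end{cases} \tag{26}$$

where a is some number between 1 and −1. Then (23) follows from (25).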

On the other hand, if Σ̃w is a non-diagonal positive definite estimate of Σw, then one can solve (21) by coordinate descent (see e.g. Friedman et al. 2007). (21) is in that case closely related to the lasso, but may involve more demanding computations. This is due to the fact that when p ≫ n the standard lasso can be implemented by storing the n × p matrix X rather than the entire p × p matrix XTX. But if Σ̃w is a p × p matrix without special structure then one must store it in full in order to solve (21).

If Σ̃w is a diagonal estimate for Σw then (22) is a diagonal fused lasso problem, for which fast algorithms have been proposed (see e.g. Hoefling 2009, Johnson 2010).
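Putting Algorithm 1 and the diagonal soft-thresholding update together, the first penalized LDA-L1 discriminant vector can be sketched as below. This is a minimal illustration rather than the implementation in the authors' R package: it assumes the diagonal estimate (4), centered features, the thresholded-and-rescaled update described above, and a relative-change stopping rule. All function names are hypothetical.

```python
import numpy as np

def soft_threshold(x, a):
    """Soft-thresholding operator S(x, a) = sign(x) * max(|x| - a, 0), elementwise."""
    return np.sign(x) * np.maximum(np.abs(x) - a, 0.0)

def lda_pieces(X, labels):
    """Return (sigma2, A): diagonal within-class variances and a K x p matrix with Sb = A^T A."""
    n = X.shape[0]
    classes = np.unique(labels)
    means = np.vstack([X[labels == k].mean(axis=0) for k in classes])
    counts = np.array([np.sum(labels == k) for k in classes])
    resid = X - means[np.searchsorted(classes, labels)]
    sigma2 = (resid ** 2).sum(axis=0) / n
    A = np.sqrt(counts / n)[:, None] * means
    return sigma2, A

def penalized_lda_l1_first(X, labels, lam, max_iter=200, tol=1e-6):
    """First penalized LDA-L1 discriminant vector with a diagonal within-class estimate."""
    sigma2, A = lda_pieces(X, labels)
    sigma = np.sqrt(sigma2)

    def objective(b):
        return np.sum((A @ b) ** 2) - lam * np.sum(sigma * np.abs(b))

    # Start from the leading eigenvector of Sw_tilde^{-1} Sb, scaled so b^T Sw_tilde b = 1.
    _, _, Vt = np.linalg.svd(A / sigma, full_matrices=False)
    beta = Vt[0] / sigma
    history = [objective(beta)]
    for _ in range(max_iter):
        c = A.T @ (A @ beta)                          # Sb @ beta without forming Sb
        d = soft_threshold(c, lam * sigma / 2.0) / sigma2
        if not np.any(d):                             # d = 0 gives the zero vector
            beta = np.zeros_like(beta)
            history.append(0.0)
            break
        beta = d / np.sqrt(np.sum(sigma2 * d ** 2))   # rescale onto the constraint boundary
        history.append(objective(beta))
        if abs(history[-1] - history[-2]) <= tol * abs(history[-1]):
            break
    return beta, np.array(history)

rng = np.random.default_rng(3)
labels = np.repeat(np.arange(3), 20)
X = rng.normal(size=(60, 30))
X[:, :5] += (labels[:, None] - 1.0)                   # only the first five features carry signal
X -= X.mean(axis=0)
beta, history = penalized_lda_l1_first(X, labels, lam=0.3)
beta_big, _ = penalized_lda_l1_first(X, labels, lam=1e6)
```

Because each update maximizes a minorizer of the penalized objective, the values stored in `history` are nondecreasing, and a very large penalty drives the whole vector to zero.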

4.2.4. Comments on tuning parameter selection

We now consider the problem of selecting the tuning parameter λk for the penalized LDA-L1 problem (17). The simplest approach would be to take λk = λ, i.e. the same tuning parameter value for all components. However, this results in effectively penalizing each component more than the previous components, since the unpenalized objective value of (17), which is equal to the largest eigenvalue of $\tilde{\Sigma}_w^{-1/2} \hat{\Sigma}_b^k \tilde{\Sigma}_w^{-1/2}$, is nonincreasing in k. So instead, we take the following approach. We first fix a nonnegative constant λ, and then we take $\lambda_k = \lambda \|\tilde{\Sigma}_w^{-1/2} \hat{\Sigma}_b^k \tilde{\Sigma}_w^{-1/2}\|$, where ||·|| indicates the largest eigenvalue. Note that when p ≫ n, this largest eigenvalue can be quickly computed using the fact that $\hat{\Sigma}_b^k$ has low rank. The value of λ can be chosen by cross-validation.

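In the case of the penalized LDA-FL problem (19), instead of choosing λk and γk directly, we fix nonnegative constants λ and γ. Then, we take $\lambda_k = \lambda \|\tilde{\Sigma}_w^{-1/2} \hat{\Sigma}_b^k \tilde{\Sigma}_w^{-1/2}\|$ and $\gamma_k = \gamma \|\tilde{\Sigma}_w^{-1/2} \hat{\Sigma}_b^k \tilde{\Sigma}_w^{-1/2}\|$. Both λ and γ can be chosen by cross-validation.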
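The low-rank shortcut for the largest eigenvalue can be sketched as follows, assuming a diagonal within-class estimate and a K × p matrix `A` whose cross-product gives the between-class estimate. Both the factorization and the function name `largest_eig_low_rank` are illustrative assumptions.

```python
import numpy as np

def largest_eig_low_rank(A, sigma2):
    """Largest eigenvalue of D^{-1/2} A^T A D^{-1/2}, with D = diag(sigma2),
    computed from a K x K matrix instead of a p x p one."""
    G = A / np.sqrt(sigma2)                  # K x p
    return np.linalg.eigvalsh(G @ G.T)[-1]   # nonzero eigenvalues match those of G^T G

rng = np.random.default_rng(4)
A = rng.normal(size=(4, 200))                # K = 4 classes, p = 200 features
sigma2 = rng.uniform(0.5, 2.0, size=200)
fast = largest_eig_low_rank(A, sigma2)
G = A / np.sqrt(sigma2)
slow = np.linalg.eigvalsh(G.T @ G)[-1]       # direct p x p computation, for comparison
lam_k = 0.005 * fast                         # scaled tuning parameter for a fixed constant 0.005
```

The K × K computation gives the same value as the full p × p eigendecomposition at a fraction of the cost when p is much larger than K.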

4.2.5. Timing results for penalized LDA

We now comment on the computations involved in the algorithms proposed earlier in this section. We used a very simple simulation corresponding to no signal in the data: Xij ~ N(0, 1) and there were four equally-sized classes. Table 1 summarizes the computational times required to perform penalized LDA-L1 and penalized LDA-FL with the diagonal estimate (4) used for Σ̃w. The R package penalizedLDA was used. Timing depends critically on the convergence criterion used; we determine that the algorithm has “converged” when subsequent iterations lead to a relative improvement in the objective of no more than 10⁻⁶; that is, $|r_i - r_{i+1}| / r_{i+1} < 10^{-6}$, where $r_i$ is the objective obtained at the ith iteration. Of course, computational times will be shorter if a less strict convergence threshold is used. All timings were carried out on an AMD Opteron 848 2.20 GHz processor.

Table 1.

Timing results for penalized LDA-L1 (with λ = 0.005) and penalized LDA-FL (with λ = γ = 0.005) for various values of n and p, with 4-class data. Mean (and standard error) of running time (in seconds), over 25 repetitions. The diagonal estimate (4) was used for Σ̃w

| Method | n | p=20 | p=200 | p=2000 | p=20000 |
|---|---|---|---|---|---|
| Penalized LDA-L1 | n=20 | 0.049 (0) | 0.059 (0.002) | 0.199 (0.022) | 5.1 (0.851) |
| | n=200 | 0.062 (0) | 0.147 (0.001) | 1.182 (0.014) | 11.835 (0.417) |
| Penalized LDA-FL | n=20 | 0.064 (0.003) | 0.108 (0.007) | 1.018 (0.102) | 118.61 (9.915) |
| | n=200 | 0.075 (0.001) | 0.219 (0.012) | 1.835 (0.102) | 118.557 (8.895) |

4.3. Recasting penalized LDA as a biconvex problem

Rather than using a minorization approach to solve the nonconvex problem (12), one could instead recast it as a biconvex problem. Consider the problem

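$$\underset{\beta, u}{\text{maximize}}\ \left\{\frac{2}{\sqrt{n}}\, u^T (Y^T Y)^{-1/2} Y^T X \beta - P(\beta) - u^T u\right\} \quad \text{subject to } \beta^T \tilde{\Sigma}_w \beta \le 1. \tag{27}$$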

Partially optimizing (27) with respect to u reveals that the β that solves it also solves (12). Moreover, (27) is a biconvex problem (see e.g. Gorski et al. 2007): that is, with β held fixed, it is convex in u, and with u held fixed, it is convex in β. This suggests a simple iterative approach for solving it.

Algorithm 4: A biconvex formulation for penalized LDA

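(a) Let β (0) be the first eigenvector of $\tilde{\Sigma}_w^{-1} \hat{\Sigma}_b$.

(b) For m = 1, 2, … until convergence:

(i) Let u(m) solve

$$\underset{u}{\text{maximize}}\ \left\{\frac{2}{\sqrt{n}}\, u^T (Y^T Y)^{-1/2} Y^T X \beta^{(m-1)} - u^T u\right\}. \tag{28}$$

(ii) Let β (m) solve

$$\underset{\beta}{\text{maximize}}\ \left\{\frac{2}{\sqrt{n}}\, u^{(m)T} (Y^T Y)^{-1/2} Y^T X \beta - P(\beta)\right\} \quad \text{subject to } \beta^T \tilde{\Sigma}_w \beta \le 1. \tag{29}$$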

Combining Steps (b)(i) and (b)(ii), we see that β (m) solves

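$$\underset{\beta}{\text{maximize}}\ \left\{2\beta^{(m-1)T} \hat{\Sigma}_b \beta - P(\beta)\right\} \quad \text{subject to } \beta^T \tilde{\Sigma}_w \beta \le 1. \tag{30}$$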

Comparing (30) to (16), we see that the biconvex formulation (27) results in the same update step as the minorization approach outlined in Algorithm 1. This biconvex formulation is very closely related to the sparse principal components analysis proposal of Witten et al. (2009), which corresponds to the case where Σ̃w = I and a bound form is used for the penalty P (β). Since $(Y^T Y)^{-1/2} Y^T X$ is a weighted version of the class centroid matrix, our penalized LDA proposal is closely related to performing sparse principal components analysis on the class centroids matrix.
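The partial optimization over u can be written out explicitly. The sketch below assumes that the biconvex criterion (27) has objective $2u^T A\beta - P(\beta) - u^T u$, where the shorthand $A = n^{-1/2}(Y^T Y)^{-1/2} Y^T X$ is introduced here only for illustration, so that $A^T A = \hat{\Sigma}_b$.

```latex
\begin{aligned}
\frac{\partial}{\partial u}\left\{2u^{T}A\beta - u^{T}u\right\} = 2A\beta - 2u = 0
  \quad &\Longrightarrow \quad \hat{u} = A\beta, \\
2\hat{u}^{T}A\beta - \hat{u}^{T}\hat{u} - P(\beta)
  &= \beta^{T}A^{T}A\beta - P(\beta)
   = \beta^{T}\hat{\Sigma}_b\beta - P(\beta).
\end{aligned}
```

Under the same assumption, plugging $u^{(m)} = A\beta^{(m-1)}$ into the β update gives the objective $2\beta^{(m-1)T} A^T A \beta - P(\beta)$, which is the linearized objective of the minorization step for k = 1.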

5. Examples

5.1. Methods included in comparisons

In the examples that follow, penalized LDA-L1 and penalized LDA-FL were performed using the diagonal estimate (4) for Σ̃w, as implemented in the R package penalizedLDA. The nearest shrunken centroids (NSC; Tibshirani et al. 2002, 2003) method was performed using the R package pamr, and the shrunken centroids regularized discriminant analysis (RDA; Guo et al. 2007) method was performed using the rda R package. Briefly, NSC results from using a diagonal estimate of Σw and imposing L1 penalties on the class mean vectors under the normal model, and RDA combines a ridge-type penalty in estimating Σw with soft-thresholding of $\tilde{\Sigma}_w^{-1}\hat{\mu}_k$. These methods are discussed further in Section 6.

The tuning parameters for each of the methods considered were as follows. For penalized LDA-L1, λ described in Section 4.2.4 was a tuning parameter. For penalized LDA-FL, we treated λ = γ (see Section 4.2.4) as a single tuning parameter in order to avoid performing tuning parameter selection on a two-dimensional grid. Moreover, penalized LDA had an additional tuning parameter, the number of discriminant vectors to include in the classifier. NSC has a single tuning parameter, which corresponds to the amount of soft-thresholding performed. RDA has two tuning parameters, one of which controls the number of features used and the other controls the ridge penalty used to regularize the estimate of Σw.

5.2. A simulation study

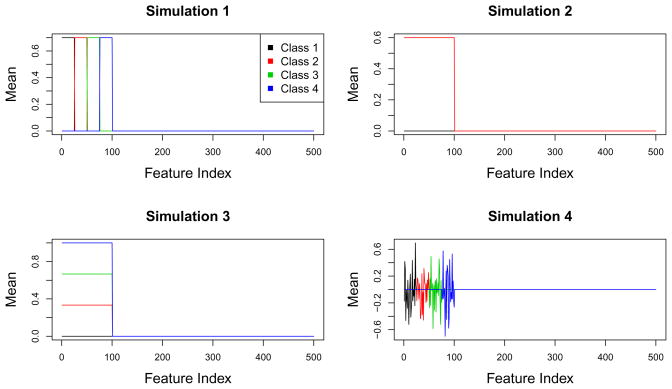

We compare penalized LDA to NSC and RDA in a simulation study. Four simulations were considered. In each simulation, there are 1200 observations, equally split between the classes. Of these 1200 observations, 100 belong to the training set, 100 belong to the validation set, and 1000 are in the test set. Each simulation consists of measurements on 500 features, of which 100 differ between classes.

Simulation 1. Mean shift with independent features

There are four classes. If observation i is in class k, then xi ~ N (μk, I), where μ1j = 0.7 × 1(1≤j≤25), μ2j = 0.7 × 1(26≤j≤50), μ3j = 0.7×1(51≤j≤75), μ4j = 0.7×1(75≤j≤100).

Simulation 2. Mean shift with dependent features

There are two classes. For i ∈ C1, xi ~ N(0, Σ) and for i ∈ C2, xi ~ N(μ, Σ), μj = 0.6 × 1(j≤200). The covariance structure is block diagonal, with 5 blocks each of dimension 100 × 100. The blocks have (j, j′) element $0.6^{|j-j'|}$. This covariance structure is intended to mimic gene expression data, in which genes are positively correlated within a pathway and independent between pathways.

Simulation 3. One-dimensional mean shift with independent features

There are four classes, and the features are independent. For i ∈ Ck, if j ≤ 100, and Xij ~ N(0, 1) otherwise. Note that a one-dimensional projection of the data fully captures the class structure.

Simulation 4. Mean shift with independent features and no linear ordering

There are four classes. If observation i is in class k, then xi ~ N(μk, I). The mean vectors are defined as follows: μ1j ~ N(0, 0.3²) if 1 ≤ j ≤ 25 and μ1j = 0 otherwise, μ2j ~ N(0, 0.3²) if 26 ≤ j ≤ 50 and μ2j = 0 otherwise, μ3j ~ N(0, 0.3²) if 51 ≤ j ≤ 75 and μ3j = 0 otherwise, μ4j ~ N(0, 0.3²) if 75 ≤ j ≤ 100 and μ4j = 0 otherwise.

Figure 1 displays the class mean vectors for each simulation.

Fig. 1.

Class mean vectors for each simulation.
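For concreteness, data of the kind described for Simulation 1 can be generated as in the sketch below. The feature ranges are copied as stated in the text, the random training/validation/test split is an assumption (the text gives only the set sizes), and the function names are hypothetical.

```python
import numpy as np

def simulate_mean_shift(n_per_class=300, p=500, shift=0.7, seed=0):
    """Four classes, x_i ~ N(mu_k, I), with class-specific blocks of shifted features."""
    rng = np.random.default_rng(seed)
    # 1-based inclusive feature ranges, as stated in the text for Simulation 1
    blocks = [(1, 25), (26, 50), (51, 75), (75, 100)]
    mu = np.zeros((4, p))
    for k, (lo, hi) in enumerate(blocks):
        mu[k, lo - 1:hi] = shift
    labels = np.repeat(np.arange(4), n_per_class)
    X = mu[labels] + rng.normal(size=(labels.size, p))
    return X, labels, mu

def split_indices(n, sizes=(100, 100, 1000), seed=0):
    """Random split into training, validation and test indices (assumed unstratified)."""
    perm = np.random.default_rng(seed).permutation(n)
    train, valid, test = np.split(perm, np.cumsum(sizes)[:-1])
    return train, valid, test

X, labels, mu = simulate_mean_shift()
train, valid, test = split_indices(len(labels))
```

Models would then be fit on `X[train]`, tuned on `X[valid]`, and evaluated on `X[test]`.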

For each method, models were fit on the training set using a range of tuning parameter values. Tuning parameter values were then selected to minimize the validation set error. Finally, the training set models with appropriate tuning parameter values were evaluated on the test set. Penalized LDA-FL was performed in Simulations 1–3 but not in Simulation 4, since in Simulation 4 the features do not have a linear ordering as assumed by the fused lasso penalty (see Figure 1).

Test set errors and the numbers of nonzero features used are reported in Table 2. For penalized LDA, the numbers of discriminant vectors used are also reported. Penalized LDA-FL has by far the best performance in the first three simulations, since it exploits the fact that the important features have a linear ordering. Of course, in real data applications, penalized LDA-FL can only be applied if such an ordering is present. Note that penalized LDA tends to use fewer than three components in Simulation 3, in which a one-dimensional projection is sufficient to explain the class structure.

Table 2.

Simulation results. Mean (and standard errors), computed over 25 repetitions, of test set errors, number of nonzero features, and number of discriminant vectors used

| Simulation | Quantity | Pen. LDA-L1 | Pen. LDA-FL | NSC | RDA |
|---|---|---|---|---|---|
| Sim 1 | Errors | 117.48 (3) | 38.4 (2) | 88.96 (2.6) | 96.8 (3.4) |
| | Features | 301.16 (20.1) | 159.28 (15.8) | 290.28 (16.7) | 226.6 (15.7) |
| | Components | 3 (0) | 3 (0) | - | - |
| Sim 2 | Errors | 90.04 (2.8) | 77 (1.9) | 88.44 (2.7) | 112.2 (5.8) |
| | Features | 229.36 (20.4) | 170.16 (18.4) | 341.28 (24.8) | 414.84 (32.6) |
| | Components | 1 (0) | 1 (0) | - | - |
| Sim 3 | Errors | 150.8 (5.4) | 83.44 (2.3) | 276.64 (4) | 291 (4.8) |
| | Features | 147.84 (7.1) | 115.92 (9.1) | 439.6 (10.7) | 349.32 (24.5) |
| | Components | 1 (0) | 1 (0) | - | - |
| Sim 4 | Errors | 60.56 (1.1) | - | 58.28 (1.2) | 57 (0.9) |
| | Features | 311.4 (22.1) | - | 135.4 (22.6) | 98 (7.3) |
| | Components | 3 (0) | - | - | - |

5.3. Application to gene expression data

We compare penalized LDA-L1, NSC, and RDA on three gene expression data sets:

Ramaswamy data

A data set consisting of 16,063 gene expression measurements and 198 samples belonging to 14 distinct cancer subtypes (Ramaswamy et al. 2001). The data set has been studied in a number of papers (see e.g. Zhu & Hastie 2004, Guo et al. 2007, Witten & Tibshirani 2009) and is available from http://www-stat.stanford.edu/~hastie/glmnet/glmnetData/.

Nakayama data

A data set consisting of 105 samples from 10 types of soft tissue tumors, each with 22,283 gene expression measurements (Nakayama et al. 2007). We limited the analysis to five tumor types for which at least 15 samples were present in the data; the resulting subset of the data contained 86 samples. The data are available on Gene Expression Omnibus (Barrett et al. 2005) with accession number GDS2736.

Sun data

A data set consisting of 180 samples and 54,613 expression measurements (Sun et al. 2006). The samples fall into four classes: one non-tumor class and three types of glioma. The data are available on Gene Expression Omnibus with accession number GDS1962.

Each data set was split into a training set containing 75% of the samples and a test set containing 25% of the samples. Cross-validation was performed on the training set and test set error rates were evaluated. The process was repeated ten times, each with a random choice of training set and test set. Results are reported in Table 3. The results suggest that the three methods tend to have roughly comparable performance. A reviewer pointed out that there is substantial variability in the number of features used by each classifier across each training/test set split. Indeed, this instability in the set of genes selected likely reflects the fact that in the analysis of many real data types, sparsity is simply an approximation, rather than a property that we expect to hold exactly.

Table 3.

Results obtained on gene expression data over 10 training/test set splits. Quantities reported are the mean (and standard deviation) of test set errors and nonzero coefficients.

| Data set | Quantity | NSC | Penalized LDA-L1 | RDA |
|---|---|---|---|---|
| Ramaswamy | Errors | 16.3 (4.16) | 18.8 (3.05) | 24 (17.45) |
| | Features | 2336.9 (2292.03) | 14873.5 (720.29) | 5022.5 (2503.35) |
| Nakayama | Errors | 4.2 (2.15) | 4.4 (1.51) | 2.8 (1.23) |
| | Features | 5908 (7131.5) | 10478.7 (2116.27) | 22283 (0) |
| Sun | Errors | 15 (4.29) | 15.2 (3.29) | 15.7 (4.52) |
| | Features | 30004.9 (18557.68) | 21634.8 (7443.21) | 54183.4 (693.23) |

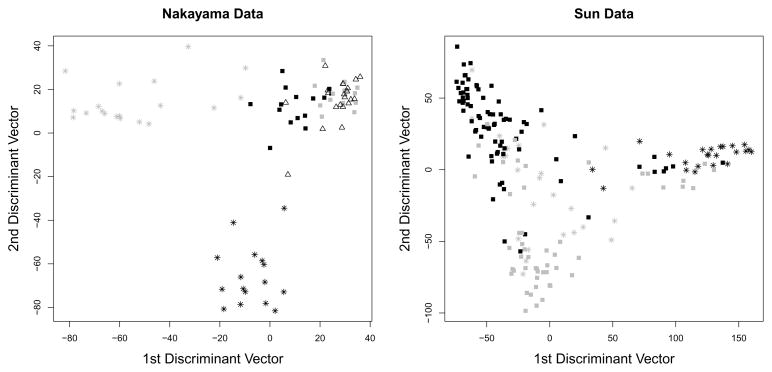

Penalized LDA-L1 has the added advantage over RDA and NSC of yielding penalized discriminant vectors that can be used to visualize the observations, as in Figure 2.

Fig. 2.

For the Nakayama and Sun data, the samples were projected onto the first two penalized discriminant vectors. The samples in each class are shown using a distinct symbol.

6. The normal model, optimal scoring, and extensions to high dimensions

In this section, we review the normal model and the optimal scoring problem, which lead to the same classification rule as Fisher’s discriminant problem. We also review past extensions of LDA to the high-dimensional setting.

6.1. The normal model

Suppose that the observations are independent and normally distributed with a common within-class covariance matrix Σw ∈ ℝp×p and a class-specific mean vector μk ∈ ℝp. The log likelihood under this model is

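$$\sum_{k=1}^{K}\sum_{i \in C_k}\left\{-\frac{1}{2}\log|\Sigma_w| - \frac{1}{2}(x_i - \mu_k)^T \Sigma_w^{-1} (x_i - \mu_k)\right\} + c, \tag{31}$$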

where c is a constant. If the classes have equal prior probabilities, then by Bayes’ theorem, a new observation x is assigned to the class for which the discriminant function

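$$\delta_k(x) = x^T \hat{\Sigma}_w^{-1} \hat{\mu}_k - \frac{1}{2}\hat{\mu}_k^T \hat{\Sigma}_w^{-1} \hat{\mu}_k \tag{32}$$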

is maximal. One can show that this is the same as the classification rule obtained from Fisher’s discriminant problem.
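The discriminant rule under the normal model with equal priors can be sketched as below, assuming the standard linear discriminant function $\delta_k(x) = x^T \Sigma_w^{-1}\mu_k - \tfrac{1}{2}\mu_k^T \Sigma_w^{-1}\mu_k$ with estimates plugged in. The function name and the simulated inputs are illustrative.

```python
import numpy as np

def lda_discriminant_scores(X_new, means, Sw):
    """delta_k(x) = x^T Sw^{-1} mu_k - 0.5 mu_k^T Sw^{-1} mu_k for every class k."""
    W = np.linalg.solve(Sw, means.T)                  # p x K, columns Sw^{-1} mu_k
    return X_new @ W - 0.5 * np.sum(means.T * W, axis=0)

rng = np.random.default_rng(5)
means = rng.normal(size=(3, 4))                       # K = 3 class means in p = 4 dimensions
M = rng.normal(size=(4, 4))
Sw = M @ M.T + np.eye(4)                              # a positive definite covariance
X_new = rng.normal(size=(10, 4))
scores = lda_discriminant_scores(X_new, means, Sw)
pred = scores.argmax(axis=1)                          # class with the largest discriminant value
```

Maximizing this score is the same as assigning each observation to the class mean that is closest in Mahalanobis distance, since the two differ only by a term that does not depend on the class.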

6.2. The optimal scoring problem

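Let Y be an n × K matrix, with Yik = 1(i ∈ Ck). Then, optimal scoring involves sequentially solving

$$\underset{\beta_k, \theta_k}{\text{minimize}}\ \left\{\frac{1}{n}\|Y\theta_k - X\beta_k\|^2\right\} \quad \text{subject to } \theta_k^T Y^T Y \theta_k = 1,\ \ \theta_k^T Y^T Y \theta_i = 0 \ \ \forall i < k \tag{33}$$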

for k = 1, …, K − 1. This amounts to recasting the classification problem as a regression problem, where a quantitative coding θk of the K classes must be chosen along with the regression coefficient vector βk. The solution β̂k to (33) is proportional to the solution to (3). Somewhat involved proofs of this fact are given in Breiman & Ihaka (1984) and Hastie et al. (1995). We present a simpler proof in the Appendix.

6.3. LDA in high dimensions

In recent years, a number of authors have proposed extensions of LDA to the high-dimensional setting in order to achieve sparsity (Tibshirani et al. 2002, 2003, Guo et al. 2007, Trendafilov & Jolliffe 2007, Grosenick et al. 2008, Leng 2008, Fan & Fan 2008, Shao et al. 2011, Clemmensen et al. 2011). In Section 4, we proposed penalizing Fisher’s discriminant problem. Here we briefly review some past proposals that have involved penalizing the log likelihood under the normal model, and the optimal scoring problem.

The nearest shrunken centroids (NSC) proposal (Tibshirani et al. 2002, 2003) assigns an observation x* to the class that minimizes

| (34) |

where , S is the soft-thresholding operator (24), and we have assumed equal prior probabilities for each class. This classification rule approximately follows from estimating the class mean vectors via maximization of an L1-penalized version of the log likelihood (31), and assuming independence of the features (Hastie et al. 2009). The shrunken centroids regularized discriminant analysis (RDA) proposal (Guo et al. 2007) arises instead from applying the normal model approach with covariance matrix Σ̃w = Σ̂w + ρI and performing soft-thresholding in order to obtain a classifier that is sparse in the features.
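The soft-thresholding step behind the shrunken centroids can be sketched as follows, assuming the standard definition S(x, a) = sign(x)(|x| − a)+. The centroid values and the threshold 0.5 are hypothetical and chosen only for illustration.

```python
import numpy as np

def soft_threshold(x, a):
    """S(x, a) = sign(x) * max(|x| - a, 0), applied elementwise."""
    return np.sign(x) * np.maximum(np.abs(x) - a, 0.0)

# Hypothetical class centroid for four features.
centroid = np.array([1.5, -0.2, 0.05, -2.0])
shrunken = soft_threshold(centroid, 0.5)    # entries smaller than 0.5 in magnitude become zero
```

Features whose shrunken centroid component is zero in every class do not affect the resulting classification rule, which is the source of sparsity in NSC.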

Several authors have proposed penalizing the optimal scoring criterion (33) by imposing penalties on βk (see e.g. Grosenick et al. 2008, Leng 2008). For instance, the sparse discriminant analysis (SDA) proposal (Clemmensen et al. 2011) involves sequentially solving

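$$\underset{\beta_k,\ \theta_k}{\text{minimize}} \left\{ \frac{1}{n}\|Y\theta_k - X\beta_k\|^2 + \beta_k^T\Omega\beta_k + \lambda\|\beta_k\|_1 \right\} \quad \text{subject to } \theta_k^TY^TY\theta_k = 1,\ \ \theta_k^TY^TY\theta_i = 0\ \ \forall i < k \tag{35}$$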

where λ is a nonnegative tuning parameter and Ω is a positive definite penalization matrix. If Ω = γI for γ > 0, then this is an elastic net penalty (Zou & Hastie 2005). The resulting discriminant vectors will be sparse if λ is sufficiently large. If λ = 0, then this reduces to the penalized discriminant analysis proposal of Hastie et al. (1995). The criterion (35) can be optimized in a simple iterative fashion: we optimize with respect to βk holding θk fixed, and we optimize with respect to θk holding βk fixed. In fact, if any convex penalties are applied to the discriminant vectors in the optimal scoring criterion (33), then an iterative approach can be developed that decreases the objective at each step. However, the optimal scoring problem is a somewhat indirect formulation for LDA.

Our penalized LDA proposal is instead a direct extension of Fisher’s discriminant problem (3). Trendafilov & Jolliffe (2007) consider a problem very similar to penalized LDA-L1, but they discuss only the p < n case. Their algorithm is more complex than ours, and does not extend to general convex penalty functions.

A summary of proposals that extend LDA to the high-dimensional setting through the use of L1 penalties is given in Table 4. In the next section, we will explore how our penalized LDA-L1 proposal relates to the NSC and SDA methods.

Table 4.

Advantages and disadvantages of using the normal model (NM), optimal scoring (OS), and Fisher’s discriminant analysis (FD) as the basis for penalized LDA with an L1 penalty

| | Advantages | Disadvantages | Citation |
|---|---|---|---|
| NM | Sparse class means if diagonal estimate of Σw used. Computations are fast. | Does not give sparse discriminant vectors. No reduced-rank classification. | Tibshirani et al. (2002) |
| OS | Sparse discriminant vectors. | Difficult to enforce diagonal estimate for Σw, which is useful if p > n. Computations can be slow. | Grosenick et al. (2008), Leng (2008), Clemmensen et al. (2011) |
| FD | Sparse discriminant vectors. Simple to enforce diagonal estimate of Σw. Computations are fast using diagonal estimate of Σw. | Computations can be slow when p is large, unless diagonal estimate of Σw is used. | This work. |

7. Connections with existing methods

7.1. Connection with sparse discriminant analysis

Consider the SDA criterion (35) with k = 1. We drop the subscripts on β1 and θ1 for convenience. Partially optimizing (35) with respect to θ reveals that for any β for which YTXβ ≠ 0, the optimal θ equals $(Y^TY)^{-1}Y^TX\beta \,/\, \sqrt{\beta^TX^TY(Y^TY)^{-1}Y^TX\beta}$. So (35) can be rewritten as

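$$\underset{\beta}{\text{minimize}} \left\{ -\frac{2}{\sqrt{n}}\sqrt{\beta^T\hat\Sigma_b\beta} + \beta^T(\hat\Sigma_b + \hat\Sigma_w + \Omega)\beta + \lambda\|\beta\|_1 \right\} \tag{36}$$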
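The partial optimization over θ can be verified numerically. The sketch below assumes centered features, the constraint θTYTYθ = 1, and Σ̂b = XTY(YTY)−1YTX/n, so that XTX/n = Σ̂b + Σ̂w. The penalty terms involve only β and are therefore omitted, and the data are simulated. Under these assumptions the squared-error term equals 1/n − (2/√n)√(βTΣ̂bβ) + βT(Σ̂b + Σ̂w)β at the optimal θ.

```python
import numpy as np

rng = np.random.default_rng(2)
n, p, K = 60, 4, 3
labels = rng.permutation(np.repeat(np.arange(K), n // K))
Y = np.eye(K)[labels]                       # n x K class indicator matrix
X = rng.normal(size=(n, p)) + labels[:, None] * 0.5
X = X - X.mean(axis=0)                      # centered features

YtY_inv = np.linalg.inv(Y.T @ Y)
sigma_b = X.T @ Y @ YtY_inv @ Y.T @ X / n   # between-class covariance
sigma_t = X.T @ X / n                       # total covariance, sigma_b + sigma_w

beta = rng.normal(size=p)                   # an arbitrary fixed beta
v = YtY_inv @ Y.T @ X @ beta
theta = v / np.sqrt(beta @ X.T @ Y @ v)     # optimal theta for this beta

constraint = theta @ Y.T @ Y @ theta        # should equal 1
lhs = np.sum((Y @ theta - X @ beta) ** 2) / n
rhs = (1.0 / n
       - 2.0 / np.sqrt(n) * np.sqrt(beta @ sigma_b @ beta)
       + beta @ sigma_t @ beta)
```

The two sides agree, and the constant 1/n does not depend on β, so it can be dropped from the criterion.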

Assume that each feature has been standardized to have within-class standard deviation equal to 1. Take Σ̃w = Σ̂w + Ω, where Ω is chosen so that Σ̃w is positive definite. Then, the following proposition holds.

Proposition 3

Consider the penalized LDA-L1 problem (17) where λ1 > 0 and k = 1. Suppose that at the solution β* to (17), the objective is positive. Then, there exists a positive tuning parameter λ2 and a positive scalar c such that cβ* corresponds to a zero of the generalized gradient of the SDA objective (36).

A proof is given in the Appendix. Note that the assumption that the objective is positive at the solution β* is not very taxing: it simply means that β* results in a higher value of the objective than does a vector of zeros. Proposition 3 states that if the same positive definite estimate for Σw is used for both problems, then the solution of the penalized LDA-L1 problem corresponds to a point where the generalized gradient of the SDA problem is zero. But since the SDA problem is not convex, this does not imply that there is a correspondence between the solutions of the two problems. Penalized LDA-L1 has some advantages over SDA. Unlike SDA, penalized LDA-L1 has a clear relationship with Fisher’s discriminant problem. Moreover, unlike SDA, it provides a natural way to enforce a diagonal estimate of Σw.

7.2. Connection with nearest shrunken centroids

The following proposition indicates that in the case of two equally-sized classes, NSC is closely related to the penalized LDA-L1 problem with the diagonal estimate (4) for Σw.

Proposition 4

Suppose that K = 2 and n1 = n2. Let β̂ denote the solution to the problem

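$$\underset{\beta}{\text{maximize}} \left\{ \sqrt{\beta^T\hat\Sigma_b\beta} - \lambda\sum_{j=1}^{p}|\beta_j\hat\sigma_j| \right\} \quad \text{subject to } \beta^T\tilde\Sigma_w\beta \le 1, \tag{37}$$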

where Σ̃w is the diagonal estimate (4). Consider the classification rule obtained by computing Xβ̂ and assigning each observation to its nearest centroid in this transformed space. This is the same as the NSC classification rule (34).

Note that (37) is simply a modified version of the penalized LDA-L1 criterion, in which the between-class variance term has been replaced with its square root. Therefore, penalized LDA-L1 with a diagonal estimate of Σw and NSC are closely connected when K = 2. This connection does not hold for larger values of K, since NSC penalizes the elements of the p × K class centroid matrix, whereas penalized LDA-L1 penalizes the eigenvectors of this matrix. A proof of Proposition 4 is given in the Appendix.

8. Discussion

We have extended Fisher’s discriminant problem to the high-dimensional setting by imposing penalties on the discriminant vectors. The penalty function is chosen based on the problem at hand, and can result in an interpretable classifier. A potentially useful but unexplored area of application for our proposal is fMRI data, for which one could use a penalty that incorporates the spatial structure of the voxels.

There is a strong connection between our penalized LDA proposal and previous work on penalized principal components analysis (PCA). When Pk is an L1 penalty, (12) is closely related to the SCoTLASS proposal for sparse PCA (Jolliffe et al. 2003). The criterion (12) and Algorithm 1 for optimizing it are closely related to the penalized principal components algorithms considered by a number of authors (see e.g. Zou et al. 2006, Shen & Huang 2008, Witten et al. 2009). This connection stems from the fact that Fisher’s discriminant problem is simply a generalized eigenproblem.

The R package penalizedLDA, which implements penalized LDA-L1 and penalized LDA-FL, will be made available on CRAN, http://cran.r-project.org/.

Acknowledgments

We thank two anonymous reviewers for helpful suggestions, and we thank Line Clemmensen for responses to our inquiries. Trevor Hastie provided helpful comments that improved the quality of this manuscript. Robert Tibshirani was partially supported by National Science Foundation Grant DMS-9971405 and National Institutes of Health Contract N01-HV-28183.

Appendix

Equivalence between (3) and standard formulation for LDA

We have stated Fisher’s discriminant problem as (3), but a more standard formulation is

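$$\underset{\beta_k}{\text{maximize}} \left\{ \beta_k^T\hat\Sigma_b\beta_k \right\} \quad \text{subject to } \beta_k^T\hat\Sigma_w\beta_k = 1,\ \ \beta_k^T\hat\Sigma_w\beta_i = 0\ \ \forall i < k. \tag{38}$$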

We now show that (3) and (38) are equivalent, provided that the solution is not in the null space of Σ̂b. It suffices to show that if α solves (3), then αTΣ̂wα = 1.

We proceed with a proof by contradiction. Suppose that α solves (3) and αTΣ̂wα < 1, αTΣ̂bα > 0. Let c = 1/√(αTΣ̂wα). Since c > 1, it follows that (cα)TΣ̂b(cα) > αTΣ̂bα. And cα is in the feasible set for (3). This contradicts the assumption that α solves (3). Hence, any solution to (3) that is not in the null space of Σ̂b also solves (38).
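Written out, the rescaling step reads as follows. This is a sketch under the assumption that c is chosen to place cα on the boundary of the norm constraint, with β_i denoting the earlier discriminant vectors.

```latex
\begin{aligned}
c &= \frac{1}{\sqrt{\alpha^T\hat\Sigma_w\alpha}} > 1
  && \text{because } \alpha^T\hat\Sigma_w\alpha < 1, \\
(c\alpha)^T\hat\Sigma_w(c\alpha) &= c^2\,\alpha^T\hat\Sigma_w\alpha = 1
  && \text{so the norm constraint holds}, \\
(c\alpha)^T\hat\Sigma_w\beta_i &= c\,\alpha^T\hat\Sigma_w\beta_i = 0
  && \text{so orthogonality is preserved for all } i < k, \\
(c\alpha)^T\hat\Sigma_b(c\alpha) &= c^2\,\alpha^T\hat\Sigma_b\alpha > \alpha^T\hat\Sigma_b\alpha
  && \text{because } c^2 > 1 \text{ and } \alpha^T\hat\Sigma_b\alpha > 0.
\end{aligned}
```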

Note that we do not concern ourselves with solutions that are in the null space of Σ̂b, as these are not useful for the purpose of discrimination and will arise only if too many discriminant vectors are used.

Proof of Proposition 1

Proof

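Letting $\tilde\Sigma_w^{1/2}$ denote the symmetric matrix square root of Σ̃w and $\tilde\beta_k = \tilde\Sigma_w^{1/2}\beta_k$, (6) becomes

$$\underset{\tilde\beta_k}{\text{maximize}} \left\{ \tilde\beta_k^T\tilde\Sigma_w^{-1/2}\hat\Sigma_b^k\tilde\Sigma_w^{-1/2}\tilde\beta_k \right\} \quad \text{subject to } \|\tilde\beta_k\|^2 \le 1, \tag{39}$$

which is equivalent to

$$\underset{\tilde\beta_k,\ u_k}{\text{maximize}} \left\{ \tilde\beta_k^T A P_k^{\perp} u_k \right\} \quad \text{subject to } \|\tilde\beta_k\|^2 \le 1,\ \|u_k\|^2 \le 1, \tag{40}$$

where $A = n^{-1/2}\,\tilde\Sigma_w^{-1/2}X^TY(Y^TY)^{-1/2}$. Equivalence of (40) and (39) can be seen from partially optimizing (40) with respect to uk.

We claim that β̃k and uk that solve (40) are the kth left and right singular vectors of A. By inspection, the claim holds when k = 1. Now, suppose that the claim holds for all i < k, where k > 1. Partially optimizing (40) with respect to β̃k yields

$$\underset{u_k}{\text{maximize}} \left\{ u_k^T P_k^{\perp} A^T A P_k^{\perp} u_k \right\} \quad \text{subject to } \|u_k\|^2 \le 1. \tag{41}$$

By definition, $P_k^{\perp}$ is an orthogonal projection matrix into the space orthogonal to

$$(Y^TY)^{-1/2}Y^TX\beta_i = (Y^TY)^{-1/2}Y^TX\tilde\Sigma_w^{-1/2}\tilde\beta_i \propto A^T\tilde\beta_i \propto u_i \tag{42}$$

for all i < k, where proportionality follows from the fact that β̃i and ui are the ith singular vectors of A for all i < k. Hence, $P_k^{\perp} = I - \sum_{i=1}^{k-1}u_iu_i^T$. Therefore, by (41), uk is the kth eigenvector of ATA, or equivalently the kth right singular vector of A. So by (40), β̃k is the kth left singular vector of A, or equivalently the kth eigenvector of $\tilde\Sigma_w^{-1/2}\hat\Sigma_b\tilde\Sigma_w^{-1/2}$. Therefore, the solution to (6) is the kth discriminant vector.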
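The conclusion of the proof can be checked numerically in the unpenalized case. The sketch below assumes A = n^{-1/2} Σ̃w^{-1/2} XTY(YTY)^{-1/2} with a diagonal estimate Σ̃w, centered features, and simulated data. It verifies that the vectors Σ̃w^{-1/2}u, for u a left singular vector of A, satisfy the generalized eigenproblem Σ̂bβ = s²Σ̃wβ and are orthonormal with respect to Σ̃w.

```python
import numpy as np

rng = np.random.default_rng(3)
n, p, K = 90, 6, 3
labels = np.repeat(np.arange(K), n // K)
Y = np.eye(K)[labels]                                   # n x K class indicators
X = rng.normal(size=(n, p)) + rng.normal(size=(K, p))[labels]
X = X - X.mean(axis=0)                                  # centered features

means = np.linalg.solve(Y.T @ Y, Y.T @ X)               # K x p class means
sigma_b = means.T @ (Y.T @ Y) @ means / n               # between-class covariance
# Diagonal within-class covariance estimate (assumption of this sketch).
sigma_w = np.diag(((X - Y @ means) ** 2).sum(axis=0) / n)

w_inv_sqrt = np.diag(1.0 / np.sqrt(np.diag(sigma_w)))
yty_inv_sqrt = np.diag(1.0 / np.sqrt(np.diag(Y.T @ Y))) # Y^T Y is diagonal
A = w_inv_sqrt @ X.T @ Y @ yty_inv_sqrt / np.sqrt(n)

U, s, Vt = np.linalg.svd(A, full_matrices=False)
betas = w_inv_sqrt @ U[:, :K - 1]                       # candidate discriminant vectors

# Generalized eigen-equation residual and sigma_w-Gram matrix.
residual = sigma_b @ betas - sigma_w @ betas * s[:K - 1] ** 2
gram = betas.T @ sigma_w @ betas
```

The residual is zero and the Gram matrix is the identity up to floating point error, consistent with the claim that the singular vectors of A recover the discriminant vectors.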

Proof of Proposition 2

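For (18), the Karush-Kuhn-Tucker (KKT) conditions (Boyd & Vandenberghe 2004) are given by

$$2\hat\Sigma_b\beta^{(m-1)} - \lambda\Gamma - 2\delta\tilde\Sigma_w\beta = 0,\quad \delta \ge 0,\quad \delta(\beta^T\tilde\Sigma_w\beta - 1) = 0,\quad \beta^T\tilde\Sigma_w\beta \le 1, \tag{43}$$

where we have dropped the “k” subscripts and superscripts for ease of notation, and where Γ is a p-vector of which the jth element is the subgradient of $\sum_{j=1}^{p}|\hat\sigma_j\beta_j|$ with respect to βj; i.e. Γj = σ̂j if βj > 0, Γj = −σ̂j if βj < 0, and Γj is in between σ̂j and −σ̂j if βj = 0.

First, suppose that for some j, $|(2\hat\Sigma_b\beta^{(m-1)})_j| > \lambda\hat\sigma_j$. Then it must be the case that 2δΣ̃wβ ≠ 0. So δ > 0 and βTΣ̃wβ = 1. Then the KKT conditions simplify to

$$2\hat\Sigma_b\beta^{(m-1)} - \lambda\Gamma - 2\delta\tilde\Sigma_w\beta = 0,\quad \beta^T\tilde\Sigma_w\beta = 1. \tag{44}$$

Substituting d = δβ, this is equivalent to solving (21) and then dividing the solution d̂ by $\sqrt{\hat d^T\tilde\Sigma_w\hat d}$.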

Now, suppose instead that |(2Σ̂bβ(m−1))j| ≤ λσ̂j for all j. Then, by (43), it follows that β̂ = 0 solves (18). By inspection of the subgradient equation for (21), we see that in this case d̂ = 0 solves (21) as well. Therefore, the solution to (18) is as given in Proposition 2.

The same set of arguments applied to (20) leads to Proposition 2(b).

Proof of Proposition 3

Proof

Consider (17) with tuning parameter λ1 and k = 1. Then by Theorem 6.1.1 of Clarke (1990), if there is a nonzero solution β*, then there exists μ ≥ 0 such that

$$0 \in 2\hat\Sigma_b\beta^* - \lambda_1\Gamma(\beta^*) - 2\mu\tilde\Sigma_w\beta^*, \tag{45}$$

where Γ(β) is the subdifferential of ||β||1. The subdifferential is the set of subgradients of ||β||1; the jth element of a subgradient equals sign(βj) if βj ≠ 0 and is between −1 and 1 if βj = 0. Left-multiplying (45) by β*T yields 0 = 2β*TΣ̂bβ* − λ1||β*||1 − 2μβ*TΣ̃wβ*. Since the sum of the first two terms is positive (since β* is a nonzero solution), it follows that μ > 0.

Now, define a new vector that is proportional to β*:

| (46) |

where . By inspection, a ≠ 0, since otherwise β* would not be a nonzero solution. Also, let . Note that , so λ2 > 0.

The generalized gradient of (36) with tuning parameter λ2 evaluated at β̂ is proportional to

| (47) |

or equivalently,

| (48) |

Comparing (45) to (48), we see that 0 is contained in the generalized gradient of the SDA objective evaluated at β̂.

Proof of Proposition 4

Proof

Since n1 = n2, NSC assigns an observation x ∈ ℝp to the class that maximizes

| (49) |

where X̄kj is the mean of feature j in class k, and the soft-thresholding operator S is given by (24). On the other hand, the classification rule resulting from (37) assigns x to the class that minimizes

| (50) |

This follows from the fact that (37) reduces to

| (51) |

since and .

Since the first term in (50) is positive if k = 1 and negative if k = 2, (37) classifies to class 1 if and classifies to class 2 if . Because X̄1j = −X̄2j, by inspection of (49), the two methods result in the same classification rule.

Proof of equivalence of Fisher’s LDA and optimal scoring

Proof

Consider the following two problems:

| (52) |

and

| (53) |

In Hastie et al. (1995), a somewhat challenging proof is given of the fact that the solutions β̂ to the two problems are proportional to each other. Here, we present a more direct argument. In (52) and (53), Ω is a matrix such that Σ̂w + Ω is positive definite; if Ω = 0 then these two problems reduce to Fisher’s LDA and optimal scoring. Optimizing (53) with respect to θ, we see that the β that solves (53) also solves

| (54) |

For notational convenience, let and . Then, the problems become

| (55) |

and

| (56) |

It is easy to see that the solution to (55) is the first eigenvector of Σ̃b. Let β̂ denote the solution to (56). Consequently, β̂TΣ̃bβ̂ > 0. So β̂ satisfies

| (57) |

and therefore . Now (57) indicates that β̂ is an eigenvector of Σ̃b with eigenvalue ; it remains to show that β̂ is in fact the first eigenvector.

Notice that if we let w = β̂Tβ̂ then , and so . Then the objective of (56) evaluated at β̂ equals

| (58) |

The minimum occurs when λ is large. So the solution to (56) is the largest eigenvector of Σ̃b.

This argument can be extended to show that subsequent solutions to Fisher’s discriminant problem and the optimal scoring problem are proportional to each other.

Contributor Information

Daniela M. Witten, Department of Biostatistics, University of Washington, USA.

Robert Tibshirani, Department of Health Research & Policy, and Statistics, Stanford University, USA.

References

- Barrett T, Suzek T, Troup D, Wilhite S, Ngau W, Ledoux P, Rudnev D, Lash A, Fujibuchi W, Edgar R. NCBI GEO: mining millions of expression profiles–database and tools. Nucleic Acids Research. 2005;33:D562–D566. doi: 10.1093/nar/gki022.

- Bickel P, Levina E. Some theory for Fisher’s linear discriminant function, ‘naive Bayes’, and some alternatives when there are many more variables than observations. Bernoulli. 2004;10(6):989–1010.

- Boyd S, Vandenberghe L. Convex Optimization. Cambridge University Press; 2004.

- Breiman L, Ihaka R. Technical report. Univ. California; Berkeley: 1984. Nonlinear discriminant analysis via scaling and ACE.

- Clarke F. Optimization and nonsmooth analysis. SIAM, Troy; New York: 1990.

- Clemmensen L, Hastie T, Witten D, Ersboll B. Sparse discriminant analysis. 2011.

- Dudoit S, Fridlyand J, Speed T. Comparison of discrimination methods for the classification of tumors using gene expression data. J Amer Statist Assoc. 2001;96:1151–1160.

- Fan J, Fan Y. High-dimensional classification using features annealed independence rules. Annals of Statistics. 2008;36(6):2605–2637. doi: 10.1214/07-AOS504.

- Friedman J. Regularized discriminant analysis. Journal of the American Statistical Association. 1989;84:165–175.

- Friedman J, Hastie T, Hoefling H, Tibshirani R. Pathwise coordinate optimization. Annals of Applied Statistics. 2007;1:302–332.

- Gorski J, Pfeuffer F, Klamroth K. Biconvex sets and optimization with biconvex functions: a survey and extensions. Mathematical Methods of Operations Research. 2007;66:373–407.

- Grosenick L, Greer S, Knutson B. Interpretable classifiers for fMRI improve prediction of purchases. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2008;16(6):539–547. doi: 10.1109/TNSRE.2008.926701.

- Guo Y, Hastie T, Tibshirani R. Regularized linear discriminant analysis and its application in microarrays. Biostatistics. 2007;8:86–100. doi: 10.1093/biostatistics/kxj035.

- Hastie T, Buja A, Tibshirani R. Penalized discriminant analysis. Annals of Statistics. 1995;23:73–102.

- Hastie T, Tibshirani R, Friedman J. The Elements of Statistical Learning; Data Mining, Inference and Prediction. Springer Verlag; New York: 2009.

- Hoefling H. A path algorithm for the fused lasso signal approximator. 2009. arXiv:0910.0526.

- Hunter D, Lange K. A tutorial on MM algorithms. The American Statistician. 2004;58:30–37.

- Johnson N. A dynamic programming algorithm for the fused lasso and l0-segmentation. 2010.

- Jolliffe I, Trendafilov N, Uddin M. A modified principal component technique based on the lasso. Journal of Computational and Graphical Statistics. 2003;12:531–547.

- Krzanowski W, Jonathan P, McCarthy W, Thomas M. Discriminant analysis with singular covariance matrices: methods and applications to spectroscopic data. Journal of the Royal Statistical Society, Series C. 1995;44:101–115.

- Lange K. Optimization. Springer; New York: 2004.

- Lange K, Hunter D, Yang I. Optimization transfer using surrogate objective functions. Journal of Computational and Graphical Statistics. 2000;9:1–20.

- Leng C. Sparse optimal scoring for multiclass cancer diagnosis and biomarker detection using microarray data. Computational Biology and Chemistry. 2008;32:417–425. doi: 10.1016/j.compbiolchem.2008.07.015.

- Mardia K, Kent J, Bibby J. Multivariate Analysis. Academic Press; 1979.

- Nakayama R, Nemoto T, Takahashi H, Ohta T, Kawai A, Yoshida T, Toyama Y, Ichikawa H, Hasegama T. Gene expression analysis of soft tissue sarcomas: characterization and reclassification of malignant fibrous histiocytoma. Modern Pathology. 2007;20(7):749–759. doi: 10.1038/modpathol.3800794.

- Ramaswamy S, Tamayo P, Rifkin R, Mukherjee S, Yeang C, Angelo M, Ladd C, Reich M, Latulippe E, Mesirov J, Poggio T, Gerald W, Loda M, Lander E, Golub T. Multiclass cancer diagnosis using tumor gene expression signature. PNAS. 2001;98:15149–15154. doi: 10.1073/pnas.211566398.

- Shao J, Wang Y, Deng X, Wang S. Sparse linear discriminant analysis by thresholding for high dimensional data. Annals of Statistics. 2011.

- Shen H, Huang JZ. Sparse principal component analysis via regularized low rank matrix approximation. Journal of Multivariate Analysis. 2008;101:1015–1034.

- Sun L, Hui A, Su Q, Vortmeyer A, Kotliarov Y, Pastorino S, Passaniti A, Menon J, Walling J, Bailey R, Rosenblum M, Mikkelsen T, Fine H. Neuronal and glioma-derived stem cell factor induces angiogenesis within the brain. Cancer Cell. 2006;9:287–300. doi: 10.1016/j.ccr.2006.03.003.

- Tebbens J, Schlesinger P. Improving implementation of linear discriminant analysis for the high dimension/small sample size problem. Computational Statistics and Data Analysis. 2007;52:423–437.

- Tibshirani R. Regression shrinkage and selection via the lasso. J Royal Statist Soc B. 1996;58:267–288.

- Tibshirani R, Hastie T, Narasimhan B, Chu G. Diagnosis of multiple cancer types by shrunken centroids of gene expression. Proc Natl Acad Sci. 2002;99:6567–6572. doi: 10.1073/pnas.082099299.

- Tibshirani R, Hastie T, Narasimhan B, Chu G. Class prediction by nearest shrunken centroids, with applications to DNA microarrays. Statistical Science. 2003;18:104–117.

- Tibshirani R, Saunders M, Rosset S, Zhu J, Knight K. Sparsity and smoothness via the fused lasso. J Royal Statist Soc B. 2005;67:91–108.

- Trendafilov N, Jolliffe I. DALASS: Variable selection in discriminant analysis via the LASSO. Computational Statistics and Data Analysis. 2007;51:3718–3736.

- Witten D, Tibshirani R. Covariance-regularized regression and classification for high-dimensional problems. J Royal Stat Soc B. 2009;71(3):615–636. doi: 10.1111/j.1467-9868.2009.00699.x.

- Witten D, Tibshirani R, Hastie T. A penalized matrix decomposition, with applications to sparse principal components and canonical correlation analysis. Biostatistics. 2009;10(3):515–534. doi: 10.1093/biostatistics/kxp008.

- Xu P, Brock G, Parrish R. Modified linear discriminant analysis approaches for classification of high-dimensional microarray data. Computational Statistics and Data Analysis. 2009;53:1674–1687.

- Zhu J, Hastie T. Classification of gene microarrays by penalized logistic regression. Biostatistics. 2004;5(2):427–443. doi: 10.1093/biostatistics/5.3.427.

- Zou H, Hastie T. Regularization and variable selection via the elastic net. J Royal Stat Soc B. 2005;67:301–320.

- Zou H, Hastie T, Tibshirani R. Sparse principal component analysis. Journal of Computational and Graphical Statistics. 2006;15:265–286.