Abstract

Traditionally the object of economic theory and experimental psychology, economic choice has recently become a lively research focus in systems neuroscience. Here I summarize the emerging results and propose a unifying model of how economic choice might function at the neural level. Economic choice entails comparing options that vary on multiple dimensions. Hence, while choosing, individuals integrate different determinants into a subjective value; decisions are then made by comparing values. According to the good-based model, the values of different goods are computed independently of one another, which implies transitivity. Values are not learned as such, but rather computed at the time of choice. Most importantly, values are compared within the space of goods, independent of the sensori-motor contingencies of choice. Evidence from neurophysiology, imaging and lesion studies indicates that abstract representations of value exist in the orbitofrontal and ventromedial prefrontal cortices. The computation and comparison of values may thus take place within these regions.

Keywords: Neuroeconomics, Subjective value, Action value, Orbitofrontal cortex, Transitivity, Adaptation

1. INTRODUCTION

Economic choice can be defined as the behavior observed when individuals make choices based solely on subjective preferences. Since at least the seventeenth century, this behavior has been the central interest of economic theory (which justifies the term “economic choice”), and it has also been a frequent area of research in experimental psychology. In the last decade, however, economic choice has attracted substantial interest in neuroscience, for at least three reasons. First, economic choice is an intrinsically fascinating topic, intimately related to deep philosophical questions such as free will and moral behavior. Second, over many generations, economists and psychologists have accumulated a rich body of knowledge, identifying concepts and quantitative relationships that describe economic choice. In fact, economic choice is a rare case of a higher cognitive function for which such a formal and established behavioral description exists. This rich “psychophysics” can now be used to both guide and constrain research in neuroscience. Third, economic choice is directly relevant to a constellation of mental and neurological disorders, including frontotemporal dementia, obsessive-compulsive disorder and drug addiction. These reasons explain the blossoming of an area of research referred to as neuroeconomics (Glimcher et al 2008).

In a nutshell, research in neuroeconomics aspires to describe the neurobiological processes and cognitive mechanisms that underlie economic choices. Although the field is still in its infancy, significant progress has already been made. Examples of economic choice include the choice between different ice cream flavors in a gelateria, the choice between different houses for sale, and the choice between different financial investments in a retirement plan. Notably, the options available in different situations can vary on a multitude of dimensions. For example, different flavors of ice cream evoke different sensations and may be consumed immediately; different houses may vary in price, size, school district, and distance from work; different financial investments may carry different degrees of risk, with returns available in a distant, or not-so-distant, future. How does the brain generate choices in the face of this enormous variability? Economic and psychological theories of choice behavior have a cornerstone in the concept of value. While choosing, individuals assign values to the available options; a decision is then made by comparing these values. Hence, while options can vary on multiple dimensions, value provides a common unit of measure for comparing them. From this perspective, understanding the neural mechanisms of economic choice amounts to describing how values are computed and compared in the brain.

Much research in recent years has thus focused on the neural representation of economic value. As detailed in this review, a wealth of results obtained with a variety of techniques – single cell recordings in primates and rodents, functional imaging in humans, lesion studies in multiple species, etc. – indicates that neural representations of value exist in several brain areas and that lesions in some of these areas – most notably the orbitofrontal cortex (OFC) and ventromedial prefrontal cortex (vmPFC) – specifically impair choice behavior. In other words, the brain actually computes values when subjects make economic choices.

To appreciate the significance of this proposition, it is helpful to step back and take a historical and theoretical perspective. Neoclassical economic theory can be thought of as a rigorous mathematical construct founded on a limited set of axioms (Kreps 1990). In this framework, the concept of value is roughly as follows. Under a few reasonable assumptions, any large set of choices can be accounted for as if the choosing subject maximized an internal value function. Thus values are central to the economist’s description of choice behavior. Note, however, that the concept of value in economics is behavioral and analytical, not psychological. In other words, the fact that choices are effectively described in terms of values does not imply that subjects actually assign values while choosing. Thus by taking an “as if” stance, economic theory explicitly avoids stating what mental processes actually underlie choice behavior. The distinction between an “as if” theory and a psychological theory might seem subtle if not evanescent. However, this distinction is critical in economics and it helps to appreciate the contribution of recent research in neuroscience. The “as if” stance captures a fundamental limit: based on behavior alone, values cannot be measured independently of choice. Consequently, the assertion that choices maximize values is intrinsically circular. The observation that values are actually computed in the brain essentially breaks this circularity. Indeed, once the correspondence between a neural signal and a behavioral measure of value has been established, that neural signal provides an independent measure of value, in principle dissociable from choices. In other words, the assertion that choices maximize values becomes potentially falsifiable and thus truly scientific (Popper 1963).
For this reason, I view the discovery that values are indeed encoded at the neural level as a major conceptual advance and perhaps the most important result of neuroeconomics to date.

With this perspective, the purpose of the present article is threefold. First, I review the main experimental results on the neural mechanisms of value encoding and economic choice. Second, I place the current knowledge in a unifying framework, proposing a model of how economic choice might function at the neural level. Third, I indicate areas of current debate and suggest directions for future research. The paper is organized as follows. Section 2 introduces basic concepts and outlines a “good-based” model of economic choice. Section 3 describes the standard neuroeconomic method used to assess the neural encoding of subjective value. Section 4 summarizes a large body of work from animal neurophysiology, human imaging and lesion studies, which provides evidence for an abstract representation of value. Section 5 discusses the neural encoding of action values and their possible relevance to economic choice. Finally, section 6 highlights open issues that require further experimental work. Overall, I hope to provide a comprehensive, though necessarily not exhaustive, overview of this field.

2. ECONOMIC CHOICE: A GOOD-BASED MODEL

What cognitive and neural computations take place when individuals make economic choices? In broad strokes, my proposal is as follows. I embrace the view that economic choice is a distinct mental process (Padoa-Schioppa 2007) and that it entails assigning values to the available options. The central proposition of the model is that the brain maintains an abstract representation of “goods”, and that the choice process – the computation and comparison of values – takes place within this space of goods. Thus I refer to this proposal as a “good-based” model of economic choice. I define a “commodity” as a unitary amount of a specified good independently of the circumstances in which it is available (e.g., quantity, cost, delay, etc.). The value of each good is computed at the time of choice on the basis of multiple “determinants”, which include the specific commodity, its quantity, the current motivational state, the cost, the behavioral context of choice, etc. The collection of these determinants thus defines the “good”. While choosing, individuals compute the values of different options independently of one another. This computation does not depend on the sensori-motor contingencies of choice (the spatial configuration of the offers or the specific action that will implement the choice outcome). These contingencies may, however, affect values in the form of costs. In particular, the actions necessary to obtain different goods often bear different costs. The model proposed here assumes that action costs (i.e., physical effort) are computed, represented in a non-spatial way, and integrated with other determinants in the computation of subjective value. According to the good-based model, computation and comparison of values take place within prefrontal regions, including the orbitofrontal cortex (OFC), the ventromedial prefrontal cortex (vmPFC) and possibly other areas.
The choice outcome – the chosen good and/or the chosen value – then guides the selection of a suitable action (good-to-action transformation). The good-based model, depicted in Fig.1, is thus defined by the following propositions:

Economic choice is a distinct mental function, qualitatively different from other overt behaviors that can be construed as involving a choice (e.g., perceptual decisions, associative learning). Economic choice entails assigning values to the available options.

A “good” is defined by a commodity and a collection of determinants that characterize the conditions under which the commodity is offered. Determinants can be either “external” (e.g., cost, time delay, risk, ambiguity, etc.) or “internal” to the subject (e.g., motivational state, im/patience, risk attitude, ambiguity attitude, etc.).

The brain maintains an abstract representation of goods. More specifically, when a subject makes a choice, different sets of neurons represent the identities and values of different goods. The ensemble of these sets of neurons provides a “space of goods”. This representation is abstract in the sense that the encoding of values does not depend on the sensori-motor contingencies of choice. Choices take place within this representation – values are computed and compared in the space of goods.

Some determinants may be learned through experience (e.g., the cost of a particular good), while other determinants may not be learned (e.g., the motivational state, the behavioral context). The process of value assignment implies an integration of different determinants. Thus the value of each good is computed “online” at the time of choice.

While choosing, individuals normally compute the values of different goods independently of one another. Such “menu invariance” implies transitive preferences.

Values computed in different behavioral conditions can vary by orders of magnitude. The encoding of value adapts to the range of values available in any given condition and thus maintains high sensitivity.

With respect to brain structures, the computation and comparison of values take place within prefrontal regions, including OFC, vmPFC, and possibly other regions. The choice outcome then guides a good-to-action transformation that originates in prefrontal regions and culminates in “premotor” regions, including parietal, pre-central and subcortical regions.

In addition to providing the bases for economic choices, subjective values inform a variety of neural systems, including sensory and motor systems (through attention and attention-like mechanisms), learning (e.g., through mechanisms of reinforcement learning), emotion (including autonomic functions), etc.
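The propositions above can be made concrete with a deliberately simplified sketch. Everything specific in it is an assumption made for illustration: the hypothetical `Good` record, the multiplicative combination of determinants, the hyperbolic delay term, the subtractive effort cost and all numerical parameters are not part of the model as stated. What the sketch does try to reflect is the structure of the proposal: each offer value depends only on that good's own determinants (menu invariance), the comparison operates on goods rather than on actions, and an action is looked up only once the choice outcome is known.

```python
from __future__ import annotations

from dataclasses import dataclass
from itertools import permutations


@dataclass(frozen=True)
class Good:
    """Hypothetical 'good': a commodity plus the external determinants of its offer."""
    commodity: str
    quantity: float
    delay: float = 0.0   # time to delivery (arbitrary units)
    effort: float = 0.0  # non-spatial action cost (arbitrary units)


# Hypothetical internal determinants of one subject (illustrative numbers only).
PREFERENCE = {"grape": 4.0, "tea": 1.0}  # value per unit of each commodity
MOTIVATION = {"grape": 1.0, "tea": 1.0}  # e.g. lowered by selective satiation
DISCOUNT_RATE = 0.1                      # impatience (hyperbolic discounting)
EFFORT_WEIGHT = 0.5                      # sensitivity to action cost


def offer_value(good: Good) -> float:
    """Value computed at the time of choice from this good's own determinants only."""
    base = PREFERENCE[good.commodity] * MOTIVATION[good.commodity] * good.quantity
    return base / (1.0 + DISCOUNT_RATE * good.delay) - EFFORT_WEIGHT * good.effort


def choose(offers: list[Good]) -> tuple[Good, float]:
    """Compare values in the space of goods; return (chosen good, chosen value)."""
    values = [offer_value(g) for g in offers]  # each computed independently
    best = max(range(len(offers)), key=lambda i: values[i])
    return offers[best], values[best]


def good_to_action(chosen: Good, action_for: dict[Good, str]) -> str:
    """Good-to-action transformation: look up the action that obtains the chosen good."""
    return action_for[chosen]


def strictly_prefers(x: Good, y: Good) -> bool:
    """x is chosen over y regardless of the order in which the two are presented."""
    return choose([x, y])[0] == x and choose([y, x])[0] == x


def is_transitive(goods: list[Good]) -> bool:
    """True if no triple of goods yields a preference cycle x > y > z > x."""
    return not any(
        strictly_prefers(x, y) and strictly_prefers(y, z) and strictly_prefers(z, x)
        for x, y, z in permutations(goods, 3)
    )
```

With these illustrative parameters, one unit of grape juice (value 4.0) is chosen over three units of tea (value 3.0), whereas five units of tea delayed by two time units (value about 4.17) are chosen over the grape juice. Because each value is a scalar computed independently of the other offers, `is_transitive` cannot find a cycle; a model in which an offer's value depended on the rest of the menu would offer no such guarantee.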

Figure 1.

Good-based model. The value of each good is computed by integrating multiple determinants, of which some are external (e.g., commodity, quantity, etc.) and others are internal (motivation, im/patience, etc.). Offer values of different goods are computed independently of one another and then compared to make a decision. This comparison takes place within the space of goods. The choice outcome (chosen good, chosen value) then guides an action plan through a good-to-action transformation. Values and choice outcomes also inform other brain systems, including sensory systems (through perceptual attention), learning (e.g., through mechanisms of reinforcement learning) and emotion (including autonomic functions).

As illustrated in the following pages, this good-based model accounts for a large body of experimental results. It also makes several predictions that remain to be tested in future work. In this respect, the good-based model proposed here should be regarded as a working hypothesis. Notably, my proposal differs from models of economic choice previously discussed by other authors. Throughout the paper, I will highlight these differences and suggest possible approaches to assess the merits of different proposals.

3. MEASURING ECONOMIC VALUE AND ITS NEURAL REPRESENTATION

Consider a person choosing between two houses for sale at the same price – one house is smaller but closer to work, the other is larger but farther from work. Ideally, the person would prefer to live in a large house close to work, but that option is beyond her budget. Thus while comparing houses to make a choice, the person must weigh two dimensions against each other – the distance from work and the square footage of the house. Physically, these two dimensions are different and incommensurable. However, the value that the chooser assigns to the two options provides a common scale, a way to compare the two dimensions. Thus intrinsic to the concept of value is the notion of a trade-off between physically distinct and competing dimensions (i.e., different determinants). This example also highlights two fundamental attributes of value. First, value is subjective – for example, one person might be willing to live in a smaller house in order to avoid a long commute, while another person might accept a long commute in order to enjoy a larger house. Second, measuring the subjective value assigned by a particular individual to a given good necessarily requires asking the subject to choose between that good and other options.

In recent years, neuroscience scholars have embraced these concepts and used them to study the neural encoding of economic value. In the first study to do so (Padoa-Schioppa & Assad 2006), we examined trade-offs between commodity and quantity. In this experiment, monkeys chose between two juices offered in variable amounts. The two juices were labeled A and B, with A preferred. When offered one drop of juice A versus one drop of juice B (offer 1A:1B), the animals chose juice A. However, the animals were thirsty – they generally preferred larger amounts of juice to smaller amounts of juice. The amounts of the two juices offered against each other varied from trial to trial, which induced a commodity-quantity trade-off in the choice pattern. For example in one session (Fig.2ab), offer types included 0B:1A, 1B:2A, 1B:1A, 2B:1A, 3B:1A, 4B:1A, 6B:1A, 10B:1A and 3B:0A. The monkey generally chose 1A when 1B, 2B or 3B were available as the alternative, it was roughly indifferent between the two juices when offered 4B:1A, and it chose B when 6B or 10B were available. In other words, the monkey assigned to 1A a value roughly equal to the value it assigned to 4B. A sigmoid fit provided a more precise indifference point, 1A = 4.1B (Fig.2b). This equation established an exchange rate between juices A and B. On this basis, we computed a variety of value-related variables, which were then used to interpret the activity of neurons in the OFC. In particular, our analysis showed that neurons in this area encode three variables: offer value (the value of one of the two offered juices), chosen value (the value of the option chosen by the monkey in any given trial) and taste (a binary variable identifying the chosen juice) (Fig.2c–e).
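The sigmoid fit mentioned above can be sketched as a two-parameter logistic regression of the proportion of B choices on log(#B/#A), fitted by maximum likelihood with Newton-Raphson iterations. The log-ratio regressor, the fitting method and the trial counts in the usage example are assumptions for illustration; the exact procedure of the original study may differ. The indifference point is the ratio at which the fitted probability equals 0.5, i.e., where b0 + b1·log(#B/#A) = 0.

```python
import math


def fit_indifference_point(offers, n_trials, n_chose_b, max_iter=100):
    """Logistic fit of P(choose B) = 1 / (1 + exp(-(b0 + b1 * log(qB / qA)))).

    offers     -- list of (qA, qB) pairs, both quantities > 0
    n_trials   -- number of trials for each offer type
    n_chose_b  -- number of those trials in which juice B was chosen
    Returns (b0, b1, relative_value), where relative_value is the ratio
    qB / qA at which P(choose B) = 0.5, i.e. 1A = relative_value * B.
    """
    xs = [math.log(qb / qa) for qa, qb in offers]
    b0, b1 = 0.0, 0.0
    for _ in range(max_iter):
        # Gradient (g) and Fisher information (h) of the binomial log-likelihood
        g0 = g1 = h00 = h01 = h11 = 0.0
        for x, n, k in zip(xs, n_trials, n_chose_b):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * x)))
            w = n * p * (1.0 - p)
            g0 += k - n * p
            g1 += (k - n * p) * x
            h00 += w
            h01 += w * x
            h11 += w * x * x
        # Newton-Raphson step: solve the 2x2 system h * delta = g
        det = h00 * h11 - h01 * h01
        d0 = (h11 * g0 - h01 * g1) / det
        d1 = (h00 * g1 - h01 * g0) / det
        b0, b1 = b0 + d0, b1 + d1
        if abs(d0) + abs(d1) < 1e-10:
            break
    return b0, b1, math.exp(-b0 / b1)
```

For example, with hypothetical counts of 100 trials per offer type generated from a logistic curve whose indifference point is 1A = 4B, `fit_indifference_point([(2, 1), (1, 1), (1, 2), (1, 3), (1, 4), (1, 6), (1, 10)], [100] * 7, [0, 2, 11, 30, 50, 77, 94])` returns a relative value close to 4. Forced-choice offers such as 0B:1A are left out because their log-ratio is undefined.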

Figure 2.

Measuring subjective values: value encoding in the OFC. a. Economic choice task. In this experiment, the monkey chose between different juices offered in variable amounts. Different colors indicated different juice types and the number of squares indicated different amounts. In the trial depicted here, the animal was offered 4 drops of peppermint tea (juice B) versus 1 drop of grape juice (juice A). The monkey indicated its choice with an eye movement. b. Choice pattern. The x-axis represents different offer types ranked by the ratio #B:#A. The y-axis represents the percent of trials in which the animal chose juice B. The monkey was roughly indifferent between 1A and 4B. A sigmoid fit indicated, more precisely, that 1A = 4.1B. The relative value (4.1 here) is a subjective measure in multiple senses. First, it depends on the two juices. Second, for a given pair of juices, it varies across individuals. Third, for a given individual and pair of juices, it varies depending, for example, on the motivational state of the animal (thirst). Thus to examine the neural encoding of economic value, it is necessary to examine neural activity in relation to the subjective values measured concurrently. c. OFC neuron encoding the offer value. Black circles indicate the behavioral choice pattern (relative value in the upper left) and red symbols indicate the neuronal firing rate. Red diamonds and circles refer, respectively, to trials in which the animal chose juice A and juice B. There is a linear relationship between the activity of the cell and the quantity of juice B offered to the monkey. d. OFC neuron encoding the chosen value. There is a linear relationship between the activity of the cell and the value chosen by the monkey in each trial. For this session, 1A=2.4B. The activity of the cell is low when the monkey chooses 1A or 2B, higher when the monkey chooses 2A or 4B, and highest when the monkey chooses 3A or 6B.
Neurons encoding the chosen value are thus identified based on the relative value of the two juices. e. OFC neuron encoding the taste. The activity of the cell is binary, depending on the chosen juice but independent of its quantity. (2d–e, same conventions as in 2c.) Adapted from Padoa-Schioppa and Assad (2006) Nature (Nature Publishing Group) and from Padoa-Schioppa (2009) J Neurosci (Soc for Neurosci, with permission).
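Given the relative value of the two juices, the trial-by-trial variables in panels c–e can be computed by expressing both offers in a common unit. The sketch below assumes, as one convenient convention, that values are expressed in units of juice B; the function and key names are hypothetical.

```python
def trial_variables(q_a, q_b, chose_a, rho):
    """Value-related variables for one trial, expressed in units of juice B.

    q_a, q_b -- quantities of juice A and juice B offered
    chose_a  -- True if the animal chose juice A
    rho      -- relative value from the choice pattern (1A = rho * B)
    """
    offer_value_a = rho * q_a          # juice A converted into units of B
    offer_value_b = float(q_b)
    chosen_value = offer_value_a if chose_a else offer_value_b
    taste = "A" if chose_a else "B"    # binary: identity of the chosen juice
    return {
        "offer_value_A": offer_value_a,
        "offer_value_B": offer_value_b,
        "chosen_value": chosen_value,
        "taste": taste,
    }
```

For a session with 1A = 2.4B, choosing 1A, 2A or 3A yields chosen values of 2.4, 4.8 and 7.2, while choosing 2B, 4B or 6B yields 2, 4 and 6. A chosen-value neuron should therefore fire at similar rates for 1A and 2B, even though the two outcomes differ in both juice type and quantity.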

In our experiment (Fig.2), offers varied on two dimensions – juice type (commodity) and juice amount (quantity). However, the same method can be applied when offers vary on other dimensions, such as probability, cost, delay, etc. For example, Kable and Glimcher (2007) conducted an experiment on temporal discounting in human subjects. People and animals often prefer smaller rewards delivered earlier to larger rewards delivered later – an important phenomenon with broad societal implications. In the study of Kable and Glimcher, subjects chose in each trial between a small amount of money delivered immediately and a larger amount of money delivered at a later time. For a given delivery time T, the authors varied the amount of money and identified the indifference point – the amount of money delivered at time T such that the subject would be indifferent between the two options. Further, the authors repeated this procedure for different delivery times T. Indifference points – fitted with a hyperbolic function – provided a measure of the subjective value choosers assigned to time-discounted money. During the experiment, the authors recorded the blood-oxygen-level-dependent (BOLD) signal. In the analyses, they used the measure of subjective value obtained from the indifference point as a regressor for the neural activity. The results showed that the vmPFC encodes time-discounted values. (See also (Kim et al 2008; Kobayashi & Schultz 2008; Louie & Glimcher 2010).)
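The hyperbolic fit can be illustrated with a short sketch. It assumes the common one-parameter hyperbolic form SV = A/(1 + kD) and estimates k by least squares after linearizing the indifference condition; this linearization is a convenience chosen here, not necessarily the fitting procedure used by Kable and Glimcher, and the amounts and delays in the usage example are hypothetical.

```python
def fit_hyperbolic_k(immediate_amount, delays, indifference_amounts):
    """Estimate k in SV = A / (1 + k * D) from indifference points.

    At indifference, immediate_amount = X_T / (1 + k * T), so
    X_T / immediate_amount - 1 = k * T, a line through the origin.
    The least-squares slope of that line is sum(T * y) / sum(T ** 2).
    """
    ys = [x / immediate_amount - 1.0 for x in indifference_amounts]
    num = sum(t * y for t, y in zip(delays, ys))
    den = sum(t * t for t in delays)
    return num / den


def subjective_value(amount, delay, k):
    """Hyperbolically discounted value of `amount` delivered after `delay`."""
    return amount / (1.0 + k * delay)
```

For instance, with a hypothetical immediate amount of 20 and indifference amounts of 21.2, 26, 38 and 56 at delays of 6, 30, 90 and 180 days, the estimate is k = 0.01 per day, and `subjective_value(56, 180, 0.01)` returns (up to rounding) 20, the value of the immediate option. It is this model-derived subjective value, rather than the nominal amount, that serves as the regressor for neural activity.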

An interesting procedure to measure indifference points is to perform a “second price auction”. For example in a study by Plassmann et al (2007), hungry human subjects were asked to declare the highest price they would be willing to pay for a given food (i.e., their indifference point, also called “reservation price”). Normally, people would try to save money and declare a price lower than their true reservation price. However, second price auctions discourage them from doing so by randomly generating a second price after the subjects have declared their own price. If the second price is lower than the declared price, subjects get to buy the food and pay the second price; if the second price is higher than the declared price, subjects don’t get to buy the food at all. In these conditions, the optimal strategy for subjects is to declare their true reservation price. This procedure thus measures for each subject the indifference point between food and money. Using this measure, Plassmann et al confirmed that the BOLD signal in the OFC encodes the value subjects assigned to different foods. (See also (De Martino et al 2009).)
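Why declaring one's true reservation price is optimal in this procedure (often referred to as a Becker-DeGroot-Marschak auction) can be checked numerically. The sketch assumes that the second price is drawn uniformly between 0 and a hypothetical maximum M and that the subject's payoff is the reservation price v minus the price paid when a purchase occurs. Under these assumptions the expected payoff of a declared price b is (vb - b²/2)/M for b ≤ M, which peaks at b = v.

```python
def expected_payoff(bid, value, max_price, n_grid=10_000):
    """Expected surplus from declaring `bid` when the true reservation price is `value`.

    A price is drawn uniformly from [0, max_price]. If price <= bid, the subject
    buys at the drawn price and gains value - price; otherwise nothing happens.
    The expectation is approximated with a midpoint sum over the price grid.
    """
    step = max_price / n_grid
    total = 0.0
    for i in range(n_grid):
        price = (i + 0.5) * step
        if price <= bid:
            total += (value - price) * step
    return total / max_price


def best_bid(value, max_price, candidate_bids):
    """Return the candidate bid with the highest expected payoff."""
    return max(candidate_bids, key=lambda b: expected_payoff(b, value, max_price))
```

With a reservation price of 2 and prices drawn between 0 and 3, `best_bid(2.0, 3.0, [0.5 * i for i in range(7)])` selects 2.0: declaring less forgoes purchases that would have been worthwhile, and declaring more risks paying more than the food is worth.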

In summary, to measure the neural representation of subjective value, it is necessary to let the subject choose between alternative offers, infer values from indifference points, and use that measure to interpret neural signals. This experimental method – used widely in primate neurophysiology (Kim et al 2008; Kimmel et al 2010; Klein et al 2008; Kobayashi & Schultz 2008; Louie & Glimcher 2010; O'Neill & Schultz 2010; Sloan et al 2010; Watson & Platt 2008) and human imaging (Brooks et al 2010; Christopoulos et al 2009; De Martino et al 2009; FitzGerald et al 2009; Gregorios-Pippas et al 2009; Hsu et al 2009; Levy et al 2010; Peters & Buchel 2009; Pine et al 2009; Shenhav & Greene 2010) – is now standard in neuroeconomics.

4. AN ABSTRACT REPRESENTATION OF ECONOMIC VALUE

In this section, I review the evidence from neural recordings and lesion studies indicating that the representation of value in OFC and vmPFC is abstract and causally linked to economic choices. I then describe how this representation of value is affected by the behavioral context of choice, and I discuss the evidence suggesting that values are computed online.

Evidence from neural recordings

A neuronal representation of value can be said to be “abstract” (i.e., in the space of goods) if two conditions are met. First, the encoding should be independent of the sensori-motor contingencies of choice. In particular, the activity representing the value of any given good should not depend on the action executed to obtain that good. Second, the encoding should be domain general. In other words, the activity should represent the value of the good as affected by all the relevant determinants (commodity, quantity, risk, cost, etc.). Current evidence for such an abstract representation is most convincing for two brain areas – OFC and vmPFC. In this subsection and the next, I review the main experimental results from, respectively, neural recordings and lesion studies.

In our original study (Fig.2), we actually examined a large number of variables that OFC neurons might possibly encode, including offer value, chosen value, other value (the value of the unchosen good), total value, value difference (chosen value minus unchosen value), taste, etc. Several statistical procedures were used to identify a small set of variables that would best account for the neuronal population. The results can be summarized as follows. First, offer value, chosen value and taste accounted for the activity of neurons in the OFC significantly better than any other variable examined in the study. Any additional variable explained less than 5% of responses. Second, the encoding of value in OFC was independent of the sensori-motor contingencies of the task. Indeed, less than 5% of OFC neurons were significantly modulated by the spatial configuration of the offers on the monitor or by the direction of the eye movement. Third, each neuronal response encoded only one variable and the encoding was linear. In other words, a linear regression of the firing rate onto the encoded variable generally provided a very good fit, and adding terms to the regression (quadratic terms or additional variables) usually failed to significantly improve the fit. Fourth, the timing of the encoding appeared to match the mental processes monkeys presumably undertook during each trial. In particular, neurons encoding the offer value – the variable on which choices were presumably based – were most prominent immediately after the offers were presented to the animal (Padoa-Schioppa & Assad 2006).
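The logic of comparing candidate variables can be sketched as follows. This is a simplified stand-in for the statistical procedures of the original study, not a reproduction of them: it fits one linear regression per candidate variable and keeps the variable with the highest R²; the variable names and the synthetic firing rates in the usage example are hypothetical.

```python
def r_squared(xs, ys):
    """R^2 of an ordinary least-squares fit y = a + b * x (single regressor)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    if sxx == 0 or syy == 0:
        return 0.0  # a constant regressor or response explains nothing
    return sxy * sxy / (sxx * syy)


def best_variable(candidates, rates):
    """Return (name, R^2) of the candidate variable that best explains the rates.

    candidates -- dict mapping a variable name to its per-trial values
    rates      -- per-trial firing rates of one neuronal response
    """
    scores = {name: r_squared(values, rates) for name, values in candidates.items()}
    name = max(scores, key=scores.get)
    return name, scores[name]
```

For example, with eight hypothetical trials from a session with 1A = 2.4B and rates generated as 2 + 1.5 × chosen value plus a small alternating perturbation, `best_variable` ranks chosen value above offer value A, offer value B and taste, even though offer value B is itself fairly correlated with chosen value. Distinguishing such correlated candidates is exactly why the original analysis required careful statistical procedures.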

With respect to the first condition – independence from sensori-motor contingencies – the evidence for an abstract representation of values thus seems robust. Indeed, consistent results were obtained in other single cell studies in primates (Grattan & Glimcher 2010; Kennerley & Wallis 2009; Roesch & Olson 2005).

With respect to the second condition – domain generality – current evidence for an abstract representation of value is clearly supportive. Indeed, domain generality has been examined extensively using functional imaging in humans. For example, Peters and Büchel (2009) let subjects choose between different money offers that could vary on two dimensions – delivery time and probability. Using the method described above, they found that neural activity in the OFC and ventral striatum encoded subjective values as affected by either delay or risk. In another study, Levy et al (2010) let subjects choose between money offers that varied either in risk or in ambiguity. Using the same method, they found that the BOLD signal in vmPFC and ventral striatum encoded subjective values in both conditions. (More recent evidence suggests that the ventral striatum is not involved in choice per se (Cai et al 2011).) De Martino et al (2009) compared the encoding of subjective value when subjects gain or lose money – an important distinction because behavioral measures of value are typically reference-dependent (Kahneman & Tversky 1979). They found that OFC activity encoded the subjective value under either gains or losses. Taken together, these results consistently support a domain general representation of subjective value in the OFC and vmPFC. As a caveat, I note that because of its low spatial resolution, functional imaging cannot rule out that different determinants of value might be encoded by distinct, but anatomically nearby, neuronal populations.

Several determinants of choice have also been examined at the level of single neurons. For example, Roesch and Olson (2005) delivered to monkeys different quantities of juice with variable delays. They found that OFC neurons were modulated by both variables and that neurons that increased their firing rates for increasing juice quantities generally decreased their firing rates for increasing time delays. Although the study did not provide a measure of subjective value, the results do suggest an integrated representation of value. In related work, Morrison and Salzman (2009) delivered to monkeys positive or negative stimuli (juice drops or air puffs). Consistent with domain generality, neuronal responses in the OFC to the two kinds of stimuli had opposite signs. In another study, Kennerley et al (2009) found a sizable population of OFC neurons modulated by three variables – the amount of juice, the required effort, and the likelihood of receiving the juice at the end of the trial. Notably, the firing rate generally increased as a function of the magnitude and as a function of the probability and decreased as a function of the effort (or the other way around). In other words, the modulation across determinants was congruent. Although these experiments did not measure subjective value, the results clearly support the notion of a domain-general representation.
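The notion of congruent modulation can be stated precisely. The sketch assumes that each neuron's sensitivity to the three variables has already been summarized by a regression slope (a hypothetical input; the statistical criteria used by Kennerley et al are not reproduced here). A neuron is then congruent when magnitude and probability push its firing rate in one direction and effort pushes it in the other, which covers both the "increasing" and the "other way around" cases described above.

```python
def is_congruent(slope_magnitude: float, slope_probability: float,
                 slope_effort: float) -> bool:
    """Return True if the three slopes modulate firing in a value-consistent way.

    Larger magnitude and higher probability increase value, whereas more effort
    decreases it. A congruent neuron therefore has magnitude and probability
    slopes of one sign and an effort slope of the opposite sign. Neurons that
    encode value positively or negatively both qualify; zero slopes do not.
    """
    signed = [slope_magnitude, slope_probability, -slope_effort]
    return all(s > 0 for s in signed) or all(s < 0 for s in signed)


def fraction_congruent(slopes):
    """Fraction of neurons, each given as (magnitude, probability, effort) slopes,
    whose modulation is congruent."""
    if not slopes:
        return 0.0
    return sum(is_congruent(*s) for s in slopes) / len(slopes)
```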

In conclusion, a wealth of empirical evidence is consistent with the notion that OFC and vmPFC harbor an abstract representation of value, although the issue of domain generality needs confirmation at the level of single cells and for determinants not yet tested. Interestingly, insofar as a representation of value exists in rodents (Schoenbaum et al 2009; van Duuren et al 2007), it does not appear to meet the conditions for abstraction defined here. Indeed, several groups found that neurons in the rodent OFC are spatially selective (Feierstein et al 2006; Roesch et al 2006). Furthermore, experiments that manipulated two determinants of value found that different neuronal populations in the rat OFC represent reward magnitude and time delay – a striking difference from primates (Roesch & Olson 2005; Roesch et al 2006). Although the reasons for this discrepancy are not clear (Zald 2006), it has been noted that the architecture of the orbital cortex in rodents and primates is qualitatively different (Wise 2008). It is thus possible that an abstract representation of value emerged late in evolution, in parallel with the expansion of the frontal lobe. However, it cannot be excluded that domain-general value signals exist in other regions of the rodent brain.

Evidence from lesion studies

While linking OFC and vmPFC to the encoding of value, the evidence reviewed so far does not demonstrate a causal relationship between neural activity in these areas and economic choices. Such a relationship emerges from lesion studies. In this respect, one of the most successful experimental paradigms is that of “reinforcement devaluation”. In these experiments animals choose between two different foods. During training sessions, animals reveal their “normal” preferences. Before test sessions, however, animals are given free access to their preferred food. Following such selective satiation, control animals switch their preferences and choose their usually-less-preferred food. In contrast, in animals with OFC lesions, this satiation effect disappears. In other words, after OFC lesions, animals continue to choose the same food and thus seem incapable of computing values. This result has been replicated by several groups in both rodents (Gallagher et al 1999; Pickens et al 2003) and monkeys (Izquierdo et al 2004; Kazama & Bachevalier 2009; Machado & Bachevalier 2007a; b). Notably, OFC lesions specifically affect value-based decisions as distinguished, for example, from “strategic” (i.e., rule-based) decisions (Baxter et al 2009) or from perceptual judgments (Fellows & Farah 2007).
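The logic of the devaluation paradigm can be captured in a toy sketch. It assumes, for illustration only, that an intact animal computes each food's value online as a baseline preference scaled by its current motivational state, whereas a lesioned animal relies on preferences fixed during training. The food names, the numbers and this way of modeling the lesion are hypothetical; the sketch merely restates the interpretation given in the text and is not a model from the cited studies.

```python
BASELINE = {"peanut": 1.0, "raisin": 0.8}  # hypothetical preferences from training


def online_value(food, satiety):
    """Value computed at the time of choice: baseline scaled by current motivation.

    satiety -- dict mapping a food to a satiation level in [0, 1]
    """
    return BASELINE[food] * (1.0 - satiety.get(food, 0.0))


def choose_intact(options, satiety):
    """Choose by values that integrate the current motivational state."""
    return max(options, key=lambda food: online_value(food, satiety))


def choose_lesioned(options, satiety):
    """Toy lesion: `satiety` is accepted but ignored, so training-time
    preferences keep driving the choice."""
    return max(options, key=lambda food: BASELINE[food])
```

Before satiation both versions choose peanuts. After selective satiation on peanuts (for instance `{"peanut": 0.7}`), the intact version switches to raisins (0.8 versus 0.3), whereas the lesioned version keeps choosing peanuts, as observed after OFC lesions.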

In the scheme of Fig.1, selective satiation alters subjective values by manipulating the motivational state of the animal. However, OFC lesions also disrupt choice behavior when trade-offs involve other determinants of value. For example, with respect to risk, several groups reported that patients with OFC lesions present an atypical risk-seeking behavior (Damasio 1994; Rahman et al 1999). Along similar lines, Hsu et al (2005) found that OFC patients are much less averse to ambiguity than normal subjects. Interestingly, OFC lesions also affect choices when the trade-off involves a social determinant such as fairness, as observed in the Ultimatum Game (Koenigs & Tranel 2007). With respect to time delays, OFC patients are sometimes described as impulsive (Berlin et al 2004). However, animal studies on the effects of OFC lesions on intertemporal choices actually provide diverse results. Specifically, Winstanley et al (2004) found that rats with OFC lesions are more patient than control animals, while Mobini et al (2002) found the opposite effect. Notably, Winstanley et al trained animals before the lesion, while Mobini et al trained animals after the lesion. Moreover, in another study, Rudebeck et al (2006) found that intertemporal preferences following OFC lesions are rather malleable – lesioned animals that initially seemed more impulsive than controls became indistinguishable from controls after performing in a forced-delay version of the task. In the scheme of Fig.1, these results may be explained as follows. Normally, choices are based on values integrated in the OFC. Absent the OFC, animals choose in a non-value-based fashion, with one determinant “taking over”. Training affects what option animals “default to” when OFC is ablated.

One determinant of choice for which current evidence is arguably more controversial is action cost. Arguments against domain generality have been based in particular on two sets of experiments conducted by Rushworth and colleagues. In a first experiment (Rudebeck et al 2006; Walton et al 2002), rats could choose between two options, one of which was more effortful but more rewarding. The authors found that the propensity to choose the effortful option was reduced after lesions to anterior cingulate cortex (ACC) but not significantly altered after OFC lesions. In another study (Rudebeck et al 2008), the authors tested monkeys with ACC or OFC lesions in two variants of a matching task, where the correct response was identified either by a particular object (object-based) or by a particular action (action-based). Both sets of lesions reduced performance in both tasks. However, ACC lesions had a comparatively larger effect on the action-based than on the object-based variant, whereas the reverse was true for OFC lesions. On this basis, it was proposed that stimulus values (i.e., good values defined disregarding action costs) and action costs are computed separately, respectively in OFC and ACC (Rangel & Hare 2010; Rushworth et al 2009). While this proposal deserves further examination, I note that the results of Rushworth and colleagues do not actually rule out a domain-general representation of value in the OFC. Indeed, as illustrated above for intertemporal choices, ablating a valuation center does not necessarily lead to a consistent bias for or against one determinant of value. Thus the results of the first experiment (Rudebeck et al 2006) – which, in fact, have not been replicated in primates (Kennerley et al 2006) – do implicate the ACC in some aspect of effort-based choices, but are not conclusive about the OFC.
On the other hand, the second study (Rudebeck et al 2008) is less obviously relevant to the issue of value encoding, because matching tasks do not necessarily require an economic choice in the sense defined here. Indeed, in matching tasks there is always a correct answer, and subjects are required to infer it from previous trials, not to state a subjective preference (Padoa-Schioppa 2007). Even assuming that animals performing matching tasks engage the same cognitive and neural processes that underlie economic choice, it is difficult to establish whether impairments observed after selective brain lesions are due to deficits in learning or in choosing. Finally, in the study of Rudebeck et al (2008), the action-based variant of the task was much more difficult than the object-based variant (animals made many more errors), and OFC lesions disrupted performance in both variants. Hence, it is possible that OFC lesions selectively interfered with the choice component of the task (and thus affected both variants equally), while ACC lesions predominantly affected the action-based variant. In conclusion, current evidence on choices in the presence of action cost can certainly be reconciled with the hypothesis that OFC harbors an abstract and domain-general representation of subjective value.

To summarize, OFC and vmPFC lesions disrupt choices that involve a variety of different determinants. Although lesion studies typically lack fine spatial resolution, the results are broadly consistent with a domain-general representation of subjective value. Most importantly, the disruptive effect of OFC and vmPFC lesions on choice behavior establishes a causal link between economic choices and the neuronal representation of subjective value found by neural recordings in these areas.

Choosing in different contexts: menu invariance and range adaptation

The results reviewed in the previous sections justify the hypothesis that choices might be based on values computed in OFC and vmPFC. Notably, different neurons in the OFC encode different variables (Fig.2). In a computational sense, the valuation stage underlying the choice is captured by neurons encoding the offer value. Thus the current hypothesis is that choices might be based on the activity of these neurons. In this respect, a critical question is whether and how the encoding of value depends on the behavioral context of choice. There are at least two aspects to this issue.

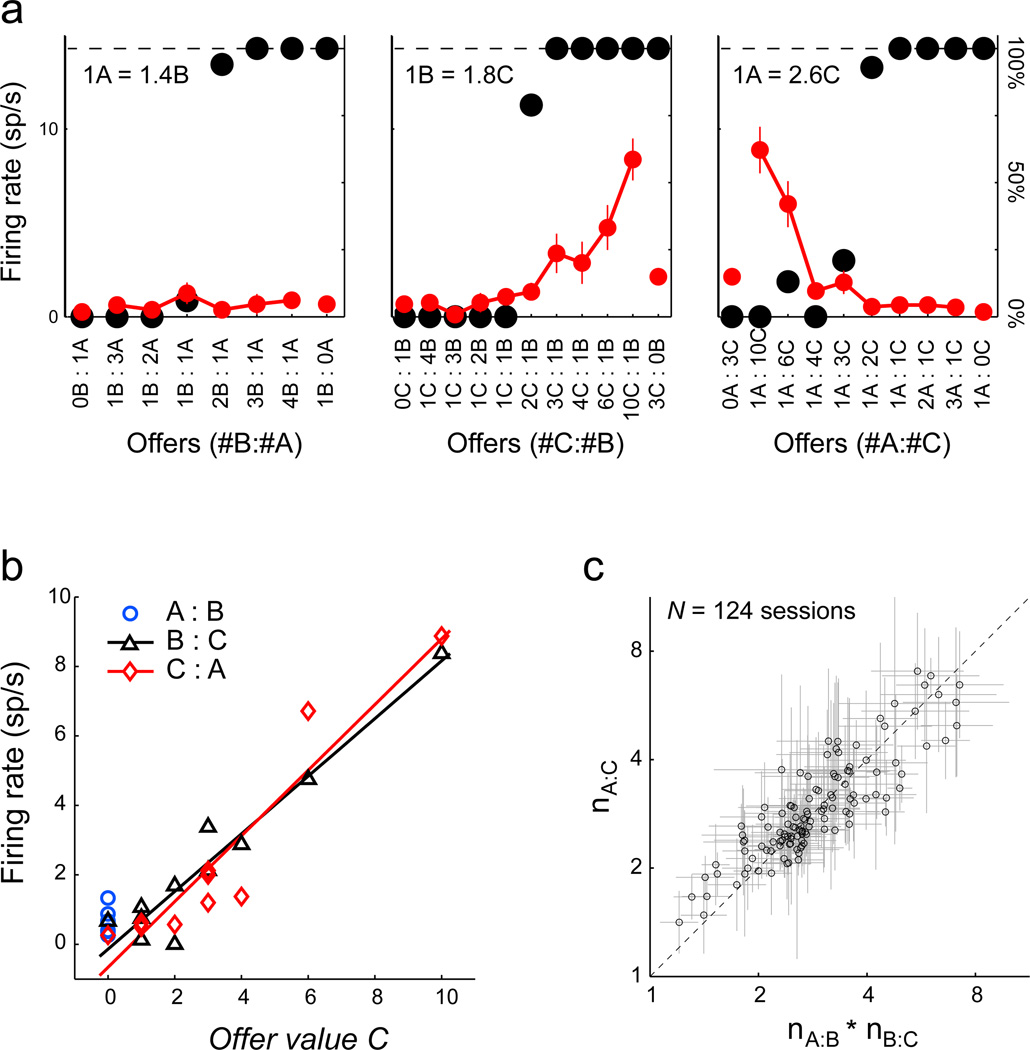

First, for any given offer, a variety of different goods might be available as an alternative. For example, in a gelateria, a person might choose between nocciola and pistacchio or, alternatively, between nocciola and chocolate. A critical question is whether the value a subject assigns to a given good depends on what other good is available as an alternative (i.e., on the menu). Notably, this question is closely related to another critical question – whether preferences are transitive. Given three goods A, B and C, transitivity holds true if A>B and B>C imply A>C (where ‘>’ stands for ‘is preferred to’). Preference transitivity is a hallmark of rational choice behavior and one of the most fundamental assumptions of economic theory (Kreps 1990). Transitivity and menu invariance are closely related because preferences may violate transitivity only if values depend on the menu (Grace 1993; Tversky & Simonson 1993). Although transitivity violations can sometimes be observed (Shafir 2002; Tversky 1969), in most circumstances human and animal choices indeed satisfy transitivity. In another study, we showed that the representation of value in the OFC is invariant to changes of menu (Padoa-Schioppa & Assad 2008). In this experiment, monkeys chose between 3 juices labeled A, B and C, in decreasing order of preference. Juices were offered pairwise and trials with the 3 juice pairs (A:B, B:C and C:A) were interleaved. Neuronal responses encoding the offer value of one particular juice typically did not depend on the juice offered as an alternative (Fig.3), and similar results were obtained for chosen value neurons and taste neurons. If choices are indeed based on values encoded in the OFC, menu invariance might thus be the neurobiological origin of preference transitivity.
Corroborating this hypothesis, Fellows and Farah (2007) found that patients with OFC lesions asked to express preference judgments for different foods violate transitivity significantly more often than both control subjects and patients with dorsal prefrontal lesions – an effect not observed with perceptual judgments (e.g., in the assessment of different colors).
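The claim that menu invariance implies transitivity can be checked mechanically. The Python sketch below is purely illustrative: the juice values and the menu-dependent value table are hypothetical numbers, and `prefers`, `prefers_menu` and `is_transitive` are names introduced here, not part of any cited analysis. When each good carries a single scalar value regardless of the alternative, every triple satisfies transitivity; once a good's value is allowed to depend on what it is offered against, a preference cycle becomes possible.

```python
from itertools import permutations

# Hypothetical menu-invariant values: one scalar per good, whatever the alternative.
VALUES = {"A": 3.0, "B": 2.0, "C": 1.0}

def prefers(x, y):
    """Menu-invariant rule: x is preferred to y if its (fixed) value is higher."""
    return VALUES[x] > VALUES[y]

# Hypothetical menu-dependent values: key (x, y) is the value of x when offered against y.
MENU_VALUES = {("A", "B"): 3, ("B", "A"): 1,
               ("B", "C"): 3, ("C", "B"): 1,
               ("C", "A"): 3, ("A", "C"): 1}

def prefers_menu(x, y):
    """Menu-dependent rule: values are recomputed for each pair of goods."""
    return MENU_VALUES[(x, y)] > MENU_VALUES[(y, x)]

def is_transitive(goods, relation):
    """True if x > y and y > z imply x > z for every ordered triple of distinct goods."""
    for x, y, z in permutations(goods, 3):
        if relation(x, y) and relation(y, z) and not relation(x, z):
            return False
    return True

print(is_transitive("ABC", prefers))       # True
print(is_transitive("ABC", prefers_menu))  # False: A > B and B > C, yet C > A
```

The converse does not hold in general: a menu-dependent rule can still happen to produce transitive choices, which is why the text states that violations are possible *only* when values depend on the menu.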

Figure 3.

Menu invariance and preference transitivity. a. One neuron encoding the offer value. In this experiment, monkeys chose between 3 juices (A, B and C) offered pairwise. The three panels refer, respectively, to trials A:B, B:C and C:A. In each panel, the x-axis represents different offer types, black circles indicate the behavioral choice pattern and red symbols indicate the neuronal firing rate. This neuron encodes the variable offer value C independently of whether juice C is offered against juice B or juice A. In trials A:B, the cell activity is low and not modulated. b. Linear encoding. Same neuron as in 3a, with the firing rate (y-axis) plotted against the encoded variable (x-axis) separately for different trial types (indicated by different symbols, see legend). c. Value transitivity. For each juice pair X:Y, the relative value nXY is measured from the indifference point. The three relative values satisfy transitivity if (in a statistical sense) nAB * nBC = nAC. In this scatter plot, each circle indicates one session (± s.d.) and the two axes indicate, respectively, nAB * nBC and nAC. Data lie along the identity line, indicating that subjective values measured in this experiment satisfy transitivity. Choices based on a representation of value that is menu invariant are necessarily transitive. Adapted from Padoa-Schioppa and Assad (2008), Nature Neurosci (Nature Publishing Group).
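The relative values in Fig.3c can be illustrated with a short calculation. The sketch below assumes hypothetical choice data (the fraction of trials in which juice Y was chosen at several offer ratios q_Y/q_X) and locates the indifference point by linear interpolation where the choice curve crosses 0.5; this is a simplification of fitting a sigmoid to the choice pattern, and `indifference_point` is a hypothetical helper. If 1A = n_AB B and 1B = n_BC C, transitivity predicts n_AC = n_AB * n_BC.

```python
def indifference_point(ratios, p_choose_y):
    """Offer ratio q_Y/q_X at which P(choose Y) crosses 0.5 (linear interpolation).

    ratios: increasing offer ratios q_Y/q_X; p_choose_y: fraction of trials in which
    juice Y was chosen at each ratio. At this ratio, 1 unit of X is worth n units of Y.
    """
    pairs = list(zip(ratios, p_choose_y))
    for (r0, p0), (r1, p1) in zip(pairs, pairs[1:]):
        if p0 <= 0.5 <= p1 and p1 > p0:
            return r0 + (0.5 - p0) * (r1 - r0) / (p1 - p0)
    raise ValueError("choice curve never crosses 0.5")

# Hypothetical choice patterns for one session (illustrative numbers only).
n_ab = indifference_point([1, 2, 3, 4], [0.0, 0.2, 0.8, 1.0])    # A:B trials
n_bc = indifference_point([1, 1.5, 2, 3], [0.1, 0.3, 0.7, 1.0])  # B:C trials
n_ac = indifference_point([3, 4, 5, 6], [0.0, 0.4, 0.6, 1.0])    # C:A trials

# Transitivity predicts n_AC = n_AB * n_BC; a real test pools many sessions.
print(f"n_AB*n_BC = {n_ab * n_bc:.3f}, n_AC = {n_ac:.3f}")
```

With these made-up numbers the prediction (about 4.38) and the measured n_AC (4.5) agree to within about 3%; as in Fig.3c, an actual test compares the two quantities across many sessions rather than in a single one.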

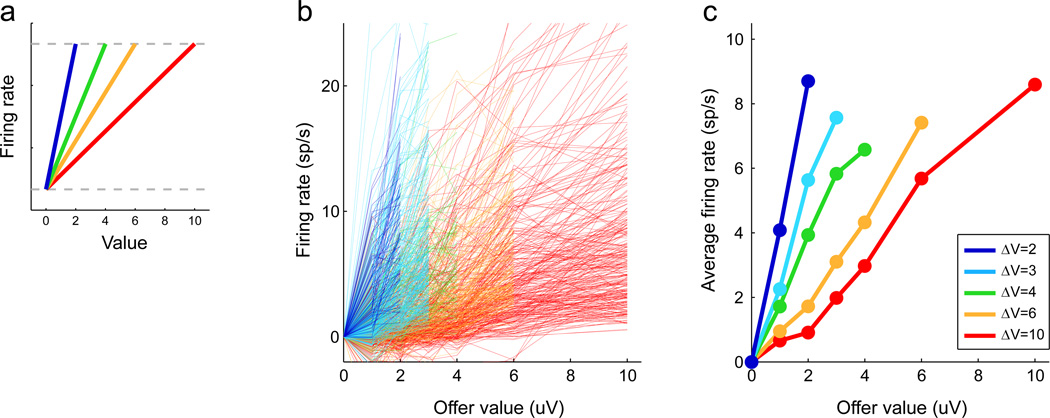

Second, values computed in different behavioral conditions can vary substantially. For example, the same individual might sometimes choose between goods worth a few dollars (e.g., when choosing between different ice cream flavors in a gelateria) and at other times between goods worth many thousands of dollars (e.g., when choosing between different houses for sale). At the same time, any representation of value is ultimately limited to a finite range of neuronal firing rates. Moreover, given a range of possible values, an optimal (i.e., maximally sensitive) representation of value would fully exploit the range of possible firing rates. These considerations suggest that the neuronal encoding of value might adapt to the range of values available in any given condition – a hypothesis recently confirmed (Padoa-Schioppa 2009). The basic result is illustrated in Fig.4, which depicts the activity of 937 offer value neurons from the OFC. Different neurons were recorded in different sessions, and the range of values offered to the monkey varied from session to session. Yet the distribution of activity ranges measured for the population did not depend on the range of values offered to the monkey. In other words, OFC neurons adapted their gain (i.e., the slope of the linear encoding) in such a way that a given range of firing rates described different ranges of values in different behavioral conditions. Corroborating results of Kobayashi et al (2010) indicate that this adaptation can take place within 15 trials. Interestingly, neuronal firing rates in OFC do not depend on whether the encoded juice is preferred or non-preferred in that particular session (Padoa-Schioppa 2009).
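The gain rescaling described above can be written as a linear encoding whose slope depends on the value range of the current session. The sketch below is a minimal illustration, assuming a firing-rate range of 2 to 20 spikes/s and values in arbitrary units; `adapted_rate` is a hypothetical helper. The idealized neuron spans exactly the same rate range in every session, whereas in the data this property holds for the distribution of activity ranges across the population.

```python
def adapted_rate(value, value_range, rate_range=(2.0, 20.0)):
    """Firing rate (spikes/s) of an idealized, range-adapting offer value neuron.

    Encoding is linear in value. The gain (slope) is set so that the fixed
    firing-rate range spans whatever range of values is offered in this session.
    """
    v_min, v_max = value_range
    r_min, r_max = rate_range
    gain = (r_max - r_min) / (v_max - v_min)  # spikes/s per unit of value
    return r_min + gain * (value - v_min)

# Two hypothetical sessions: small-value offers versus large-value offers.
for value_range in [(0.0, 2.0), (0.0, 10.0)]:
    top = adapted_rate(value_range[1], value_range)
    gain = adapted_rate(1.0, value_range) - adapted_rate(0.0, value_range)
    print(f"range {value_range}: max rate {top:.1f} spikes/s, gain {gain:.1f}")
```

The maximum rate is 20 spikes/s in both sessions, but a value of 2 drives the neuron to 20 spikes/s in the first session and only about 5.6 spikes/s in the second: the same range of firing rates describes different ranges of values.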

Figure 4.

Range adaptation in the valuation system. a. Model of neuronal adaptation. The cartoon depicts the activity of a value-encoding neuron adapting to the range of values available in different conditions. The x-axis represents value, the y-axis represents the firing rate and different colors refer to different value ranges. In different conditions, the same range of firing rates encodes different value ranges. b. Neuronal adaptation in the OFC. The figure illustrates the activity of 937 offer value responses. Each line represents the activity of one neuron (y-axis) plotted against the offer value (x-axis). Different responses were recorded with different value ranges (see color labels). While activity ranges vary widely across the population, the distribution of activity ranges does not depend on the value range. c. Population averages. Each line represents the average obtained from neuronal responses in 4b. Adaptation can be observed for any value, as average responses are separated throughout the value spectrum. Similar results were obtained for neurons encoding the chosen value. Adaptation was also observed for individual cells recorded with different value ranges. Adapted from Padoa-Schioppa (2009) J Neurosci (Soc for Neurosci, with permission).

It has often been discussed whether the brain represents values as “relative” or “absolute” (Seymour & McClure 2008). This question can be rephrased by asking which parameters of the behavioral context do or do not affect the encoding of value. The results illustrated here indicate that the encoding of value in the OFC is menu invariant and range adapting. Importantly, while menu invariance and range adaptation hold in normal circumstances, when preferences are stable and transitive, violations of these neural properties might be observed in the presence of choice fallacies (Camerer 2003; Frederick et al 2002; Kahneman & Tversky 2000; Tversky & Shafir 2004) – a promising topic for future research (Kalenscher et al 2010).

Online computation of economic values

While indicating that an abstract representation of good values is encoded in prefrontal areas, the results discussed so far do not address how this representation is formed. In this respect, two broad hypotheses can be entertained. One possibility is that values are learned through experience and retrieved from memory at the time of choice. Alternatively, values could be computed “online” at the time of choice. Following a long tradition in experimental psychology (Skinner 1953; Sutton & Barto 1998), referred to as behaviorism, economic choice is often discussed within the framework of, or as intertwined with, associative learning (Glimcher 2008; Montague et al 2006; Rangel et al 2008). In other words, it is often assumed that subjective values are learned and retrieved from memory. Several considerations suggest, however, that values are more likely not learned-and-retrieved, but rather computed online at the time of choice. Intuitively, this proposition follows from the fact that people and animals often choose effectively between novel goods and/or in novel situations. Consider, for example, a person choosing between two possible cocktails in a bar. The person might be familiar with both drinks. Yet her choice will likely depend on unlearned determinants such as her motivational state (e.g., does she “feel like” a dry or a sweet drink at this time), the behavioral context (e.g., what cocktail her friend ordered), etc. Thus describing her choice on the basis of learned-and-retrieved values seems difficult.
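The cocktail example can be turned into a toy model of online valuation. In the sketch below, stored knowledge about each drink (its attribute levels) stands in for what might be learned, while the current motivational weights are supplied only at the time of choice. All drink names, attribute levels and weights are hypothetical, and the weighted sum is merely a placeholder: how different determinants are actually integrated, possibly through a non-linear function, remains an open question, and nothing here is meant as the neural mechanism.

```python
# Stored (learned) knowledge: attribute levels of each drink (hypothetical numbers).
DRINKS = {
    "martini": {"sweetness": 0.1, "strength": 0.9},
    "mojito": {"sweetness": 0.8, "strength": 0.5},
}

def online_value(attributes, state):
    """Value computed at choice time from stored attributes and the current state.

    `state` holds current motivational weights (e.g. a craving for sweet drinks).
    These weights are not learned, so identical stored knowledge can yield
    different values on different occasions.
    """
    return sum(state.get(name, 0.0) * level for name, level in attributes.items())

def choose(menu, state):
    """Pick the drink with the highest value computed online."""
    return max(menu, key=lambda drink: online_value(DRINKS[drink], state))

print(choose(["martini", "mojito"], {"sweetness": 1.0, "strength": 0.2}))   # mojito
print(choose(["martini", "mojito"], {"sweetness": -0.5, "strength": 1.0}))  # martini
```

The stored attributes are identical in both calls; only the state passed in at the time of choice differs, and that alone reverses the choice.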

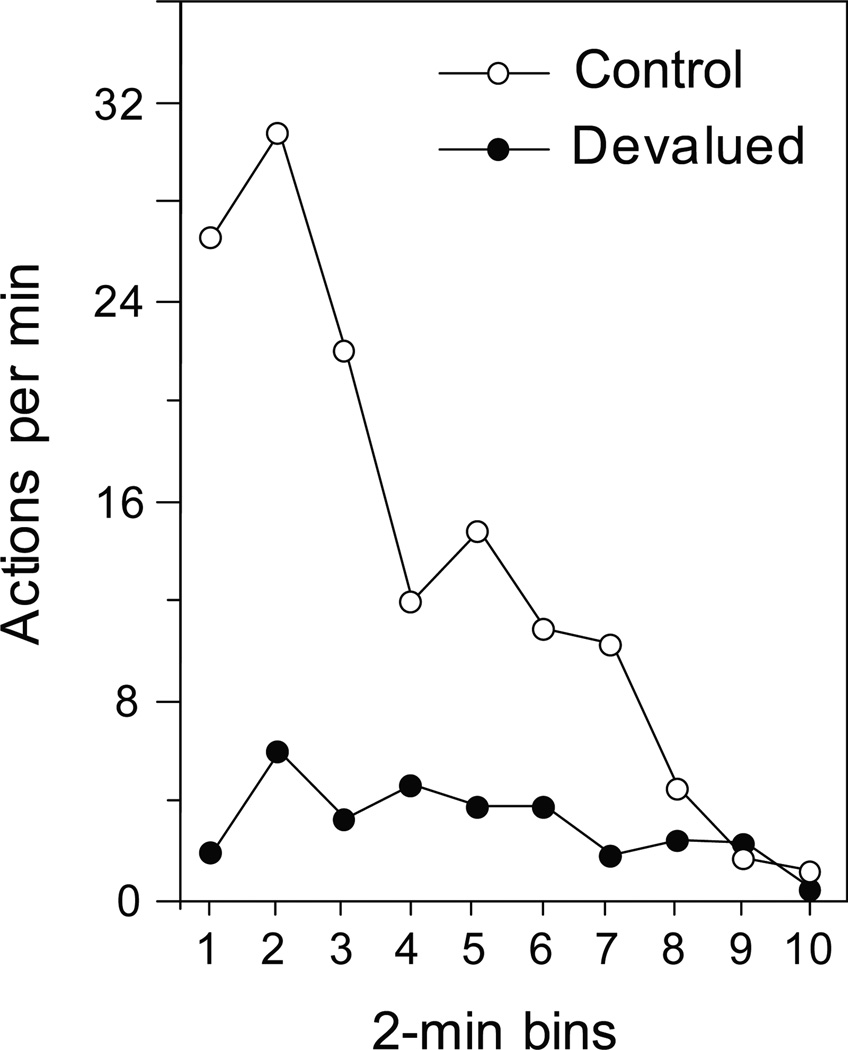

Experimental evidence for values being computed online comes from an elegant series of studies on reinforcement devaluation in rats conducted by Dickinson, Rescorla, Balleine and their colleagues (Adams & Dickinson 1981; Balleine & Ostlund 2007; Colwill & Rescorla 1986). In the simplest version of the experiment, animals were trained to perform a task (e.g., pressing a lever) to receive a given food. Subsequently, the animals were selectively satiated with that food and tested in the task. Critically, animals were tested “in extinction”. In other words, the food was not actually delivered upon successful execution of the task. Thus the performance of the animals gradually degraded over trials during the test phase. Most importantly, however, the performance of satiated animals was significantly lower than that of control animals throughout the test phase (Fig.5). In other words, satiated animals assigned to the food a lower value than did controls – an interpretation confirmed by a variety of control studies and in a free choice version of the experiment (Balleine & Dickinson 1998). In my view, this result is at odds with the hypothesis that values are learned during training, stored in memory and simply retrieved at the time of choice. Indeed, if this were the case, in the test phase rats would retrieve the value learned in the training phase, which is the same for experimental and control animals. In contrast, this result suggests that animals compute values online based on both current motivation and previously acquired knowledge1. Interestingly, overtraining, which presumably turned choice into a habit, made animals insensitive to devaluation (Adams 1982).
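The logic of this argument can be made explicit by contrasting two toy models of the extinction test. In the sketch below, a hypothetical `press_rates` function returns lever-press rates over test blocks under either a "cached" model, in which the value acquired during training is simply retrieved, or an "online" model, in which that stored value is scaled by the current motivational state. The satiety factor (0.4), the base rate and the per-block decay standing in for extinction are arbitrary assumptions chosen for illustration, not fitted to the data of Fig.5.

```python
def press_rates(model, satiated, n_blocks=5, base_rate=10.0, decay=0.7):
    """Hypothetical lever-press rates (presses/min) across extinction test blocks.

    'cached': the value learned in training is retrieved unchanged at test.
    'online': the stored value is scaled by the current motivational state.
    No food is delivered in extinction, so responding decays block by block.
    """
    learned_value = 1.0                     # value acquired during training (same for all rats)
    motivation = 0.4 if satiated else 1.0   # assumed effect of selective satiation
    if model == "cached":
        value = learned_value               # motivation at test is ignored
    elif model == "online":
        value = learned_value * motivation  # value recomputed from current state
    else:
        raise ValueError(f"unknown model: {model}")
    return [base_rate * value * decay ** k for k in range(n_blocks)]

for model in ("cached", "online"):
    print(model, "satiated:", [round(r, 1) for r in press_rates(model, True)])
    print(model, "control: ", [round(r, 1) for r in press_rates(model, False)])
```

Only the online model reproduces the qualitative pattern described above: responding declines in both groups, but it is lower after satiation from the very first test block, whereas the cached model predicts identical curves.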

Figure 5.

Effects of selective devaluation. In the training phase of this study, rats learned to perform a task (lever press or chain pull) to obtain a reward (food pellet or starch, in a counterbalanced design). Before testing, animals were selectively satiated with one of the two foods (devaluation). They were then tested in extinction. Thus their performance, measured in actions per minute (y-axis), dropped over time (x-axis) for either food. Critically, the performance for the devalued food (filled symbols) was consistently below that for the control food (empty symbols). Adapted from Balleine and Dickinson (1998), Neuropharmacology (Elsevier, with permission).

In summary, intuition and empirical evidence suggest that subjective values are computed online at the time of choice, not learned and retrieved from memory. At the same time, more work is necessary to understand how the neural systems of valuation and associative learning interact and inform each other. Most important for the present purposes, the neural mechanisms by which different determinants – including learned and unlearned determinants – are integrated in the computation of values remain unknown. While these mechanisms likely involve a variety of sensory, limbic and association areas, further research is necessary to shed light on this critical aspect of choice behavior.

5. ACTION VALUES AND THEIR POSSIBLE RELEVANCE TO ECONOMIC CHOICE

As reviewed in the previous section, a defining trait of the representation of value found in OFC and vmPFC is that values are encoded independently of the sensori-motor contingencies of choice. In contrast, in other brain areas, values modulate neuronal activity that is primarily sensory and/or motor. Such “non-abstract” representations have been found in numerous regions including dorsolateral prefrontal cortex (Kim et al 2008; Leon & Shadlen 1999), anterior cingulate (Matsumoto et al 2003; Seo & Lee 2007; Shidara & Richmond 2002), posterior cingulate (McCoy et al 2003), lateral intraparietal area (Louie & Glimcher 2010; Sugrue et al 2004), dorsal premotor area, supplementary motor area, frontal eye fields (Roesch & Olson 2003), supplementary eye fields (Amador et al 2000), superior colliculus (Ikeda & Hikosaka 2003; Thevarajah et al 2010), striatum (Kawagoe et al 1998; Kim et al 2009; Lau & Glimcher 2008; Samejima et al 2005) and centromedian nucleus of the thalamus (Minamimoto et al 2005). A comprehensive review of the relevant experimental work is beyond my current purpose. However, I will discuss the possible significance of these value representations for economic choice.

Non-abstract value modulations are often interpreted in the “space of actions”. In other words, the spatially selective component of the neural activity is interpreted as encoding a potential action and the value modulation is interpreted as a bias contributing to the process of action selection. Thus many experimental results have been or can be described in terms of “action values”. In broad terms, a neuron can be said to encode an action value if it is preferentially active when a particular action is planned and if it is modulated by the value associated with that action. Influential theoretical accounts posit that decisions are ultimately made on the basis of action values (Kable & Glimcher 2009; Rangel & Hare 2010). According to these “action-based” models, values are attached to different possible actions in the form of action values and the decision – the comparison between values – unfolds as a process of action selection. This view of economic choice clearly contrasts with the good-based proposal. Thus it is important to discuss whether current evidence for the neuronal encoding of action values can be reconciled with the good-based model proposed here. In this respect, a few considerations are in order.
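The contrast between the two classes of model can be stated in a few lines of code. The sketch below uses two hypothetical functions: the good-based version compares values in the space of goods and only afterwards maps the chosen good onto an action through the current spatial configuration, whereas the action-based version attaches each value to the action that obtains the good and then selects among actions. It assumes one good per location and makes no claim about how either step is implemented neurally.

```python
def good_based_choice(offer_values, locations):
    """Compare values in goods space, then map the chosen good onto an action.

    offer_values: {good: subjective value}; locations: {good: action} on this trial.
    The comparison step never reads `locations`.
    """
    chosen_good = max(offer_values, key=offer_values.get)
    return chosen_good, locations[chosen_good]


def action_based_choice(offer_values, locations):
    """Attach each value to the action that obtains the good, then select an action."""
    action_values = {locations[good]: value for good, value in offer_values.items()}
    return max(action_values, key=action_values.get)


values = {"A": 3.0, "B": 2.0}  # hypothetical offer values
for layout in ({"A": "left", "B": "right"}, {"A": "right", "B": "left"}):
    print(good_based_choice(values, layout), action_based_choice(values, layout))
```

On each trial the two functions produce the same overt response, so behavior alone cannot separate them; the difference lies in whether the comparison itself takes the sensori-motor mapping as input. This is why dissociating choice from action planning in time is needed to test the two views.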

First, in some cases, spatially selective signals modulated by value might be better interpreted as sensory rather than motor. In perceptual domains, value modulates activity by way of attention – a more valuable visual stimulus inevitably draws more attention. Thus such value signals might be best described in terms of spatial attention (Maunsell 2004). For example, neurons in the lateral intraparietal area (LIP) activate both in response to visual stimuli placed in their response field and in anticipation of an eye movement. Value modulations recorded in economic choice tasks are strong during presentation of the visual stimulus and significantly weaker before the saccade, when movement-related activity dominates (Louie & Glimcher 2010). This observation suggests that value modulates activity in this area by way of attention – a view bolstered by the fact that value modulations in LIP are normalized as predicted by psychophysical theories of attention (Bundesen 1990; Dorris & Glimcher 2004). Similar arguments may apply to other brain areas where neural activity interpreted in terms of action values is most likely not genuinely motor.

Second, action values possibly relevant to economic choice should be distinguished from action values defined in the context of reinforcement learning (RL) (Sutton & Barto 1998). Typically, models of RL describe an agent facing a problem with multiple possible actions, one of which is objectively correct. An “action value” is an estimate of future rewards for a given action, and the agent learns action values by trial and error. According to behaviorism, any behavior, including economic choice, results from stimulus-response associations. Thus the behaviorist equates action values defined in RL with action values possibly relevant to economic choice. As noted above, a general problem with the behaviorist account is that people and animals can choose effectively between novel goods. The RL variant of this account has the additional problem that choosing a particular good may require different actions at different times. For these reasons, action values possibly relevant to economic choice cannot be equated with action values defined in RL. Consequently, evidence for neuronal encoding of action values gathered using tasks that include a major learning component – instrumental conditioning (Samejima et al 2005), dynamic matching tasks (Lau & Glimcher 2008; Sugrue et al 2004) or n-armed bandit tasks – and obtained by inferring values from models of RL must be considered with caution. This issue is particularly relevant for brain regions, such as the dorsal striatum, that have been clearly linked to associative learning as distinguished from action selection (Kim et al 2009; Williams & Eskandar 2006).
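For comparison, the RL sense of "action value" can be sketched with a textbook n-armed bandit learner. The code below is a generic illustration, not the model fitted in any study cited here: each action value estimates the expected reward of one action and is updated by trial and error with a prediction-error (delta-rule) update, and actions are selected with an epsilon-greedy rule. The reward probabilities, learning rate `alpha`, exploration rate `epsilon` and trial count are hypothetical parameters. The learned values are tied to actions rather than goods and exist only after training, which is the feature the argument above turns on.

```python
import random

def learn_action_values(reward_probs, n_trials=2000, alpha=0.1, epsilon=0.1, seed=0):
    """Epsilon-greedy n-armed bandit with a delta-rule update of action values.

    q[a] estimates the expected reward of action a and is learned by trial and
    error: q[a] <- q[a] + alpha * (reward - q[a]).
    """
    rng = random.Random(seed)
    q = [0.0] * len(reward_probs)
    for _ in range(n_trials):
        if rng.random() < epsilon:
            action = rng.randrange(len(q))                  # explore: random action
        else:
            action = max(range(len(q)), key=q.__getitem__)  # exploit current estimates
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        q[action] += alpha * (reward - q[action])           # prediction-error update
    return q

q = learn_action_values([0.2, 0.8])
print([round(v, 2) for v in q])
```

Swapping which action delivers which reward would leave this learner with outdated values until it relearns them, which illustrates why RL action values are an awkward basis for choices in which the same good requires different actions at different times.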

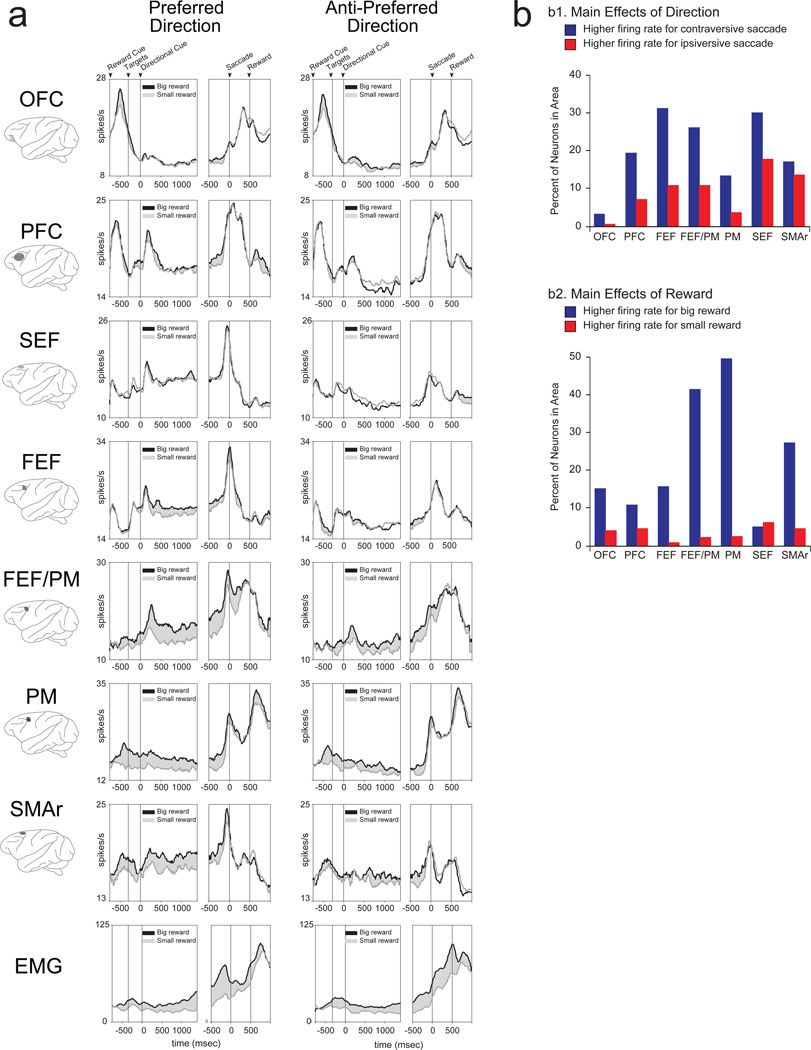

Third and most important, value signals can modulate physiological processes downstream of and unrelated to the decision. A compelling example is provided by Roesch and Olson (2003), who trained three monkeys in a variant of the memory saccade task. At the beginning of each trial, a cue indicated whether the amount of juice delivered for a correct response would be large or small. The authors found neuronal modulations consistent with action values in the frontal eye fields, supplementary eye fields, premotor cortex and supplementary motor area. Strikingly, however, modulations consistent with action values were also found in the electromyographic (EMG) activity of neck and jaw muscles (Fig.6). This suggests that value modulations recorded in cortical motor areas in this experiment – and possibly other experiments – might be downstream of and unrelated to any decision in the sense defined here.

Figure 6.

Action value signals downstream of the decision. a. Activity profiles from OFC, lateral prefrontal cortex (PFC), supplementary eye fields (SEF), frontal eye fields (FEF), premotor cortex (PM), supplementary motor area (SMA) and muscle electromyographic activity (EMG). For each brain region, black and grey traces refer, respectively, to trials with high and low value. Left and right panels refer to saccades towards, respectively, the preferred and anti-preferred directions. For each area, the overall difference between the activity observed in the left and right panels (highlighted in b1) can be interpreted as encoding the action. The difference between the black and grey traces (highlighted in b2) is a value modulation. b. Summary of action value signals. The top panel (b1) highlights the encoding of possible actions (contraversive and ipsiversive for blue and red bars, respectively). The bottom panel (b2) highlights value modulations (positive and negative encoding for blue and red bars, respectively). Action encoding is minimal in the OFC but significant in all motor areas. In contrast, value modulation is significant both in the OFC and in motor areas. Strikingly, there is a strong value modulation also in the EMG (bottom panels in 6a). Muscles certainly do not contribute to economic choice – a clear example of action value unrelated to the decision. Thus value modulations in the motor areas – which ultimately control the motor output – are most likely related to value modulations in the EMG, not to the decision process per se. Adapted from Roesch and Olson (2003, 2005), J Neurophysiol (Am Physiol Soc, with permission).

Taken together, these considerations suggest that evidence for the neural encoding of action values and their possible relevance to economic choices should be vetted against alternative hypotheses. With this premise, what evidence is necessary to hypothesize that an action value signal contributes to economic choice in the sense postulated by action-based models? It is reasonable to require three minimal conditions. (a) Neural activity must be genuinely motor. (b) Neural activity must be modulated by subjective value. (c) Neural activity must not be downstream of the decision. These conditions provide a more restrictive definition of “action value”. Critically, to my knowledge, evidence of neuronal activity satisfying these three conditions has never been reported. In fact, even relaxing condition (b), I am not aware of any result that satisfies both conditions (a) and (c). In particular, for activity encoding action values recorded in genuinely motor regions (which presumably satisfies condition (a)), it is generally difficult to rule out that responses were computationally downstream of the decision process.2

In summary, neural activity encoding action values can contribute to a decision if it encodes action, it encodes value, and it does not follow the decision. Of course, the current lack of evidence for such neural activity does not per se falsify action-based models of economic choice. At the same time, current evidence on the encoding of action values can certainly be reconciled with the good-based model and thus does not challenge the present proposal.

6. OPEN QUESTIONS AND DEVELOPMENTS

As illustrated in the previous sections, the good-based model explains a wealth of experimental results in the literature. At the same time, many aspects of this model remain to be tested. In this section I briefly discuss two issues that seem particularly urgent.

Perhaps the most distinctive trait of the good-based model is the proposal that values are compared in the space of goods, independently of the actions necessary to implement choices. In this view, action values do not contribute to economic choice per se. Thus the good-based model is in contrast to action-based models, according to which choices are ultimately made by comparing the value of different action plans (Glimcher et al 2005; Rangel & Hare 2010).

Ultimately, adjudicating between the two models requires dissociating economic choice and action planning in time. Consistent with the current proposal, recent work suggests that choices can be made independently of action planning (Cai & Padoa-Schioppa 2010; Wunderlich et al 2010). Many aspects of this issue, however, remain to be clarified. For example, in many situations, goods available for choice require courses of action associated with different costs. The hypothesis put forth here – that action costs are integrated with other determinants of value in a non-spatial representation – remains to be tested. Also, in most circumstances a choice ultimately leads to an action. Thus if choices indeed take place in the space of goods, a fundamental question is how choice outcomes are transformed into action plans. The good-to-action transformation, or series of transformations, is poorly understood and should be investigated in future work.

Another important issue is the relative role of OFC and vmPFC in economic choice and value-guided behavior. These two regions roughly correspond to two anatomically defined networks named, respectively, the orbital network (OFC) and the medial network (vmPFC) (Ongur & Price 2000). In an elegant series of studies, Price and colleagues showed that these two networks have distinct and largely segregated anatomical connections (Price & Drevets 2010). The orbital network receives inputs from nearly all sensory modalities and from limbic regions, consistent with a role in integrating different determinants into a value signal. In contrast, the medial network is strongly interconnected with the hypothalamus and brain stem, suggesting a role in the control of autonomic functions and visceromotor responses (Price 1999). Indeed, neural activity in this region is known to correlate with heart rate and skin conductance (Critchley 2005; Fredrikson et al 1998; Ziegler et al 2009). The relationship between decision making, emotion and autonomic functions, while often discussed, remains substantially unclear. One possibility is that autonomic responses play a direct role in decision making (Damasio 1994). Another possibility is that values and decisions, made independently, inform emotion and autonomic responses. A third possibility is that decisions emerge from the interplay of multiple decision systems (McClure et al 2004). The scheme of Fig.1 is somewhat intermediate. Indeed, I posit the existence of a unitary representation of value, which integrates sensory stimuli and motivational states. In turn, values inform emotional and autonomic responses. Importantly, more work is necessary to clarify the relation between motivation, emotion and autonomic responses.

7. CONCLUSIONS

In conclusion, I reviewed current knowledge on the neural mechanisms of economic choice and, more specifically, on how values are computed, represented and compared when individuals make a choice. I also presented a good-based model that provides a unifying framework and that accounts for current results. Finally, I discussed open issues that should be examined in the future.

Much work in the past few years was designed to test the hypothesis that, while making choices, individuals indeed assign subjective values to the available goods. This proposition has now been successfully tested with respect to a variety of determinants – commodity, quantity, risk, delay, effort, and others. While other determinants remain to be examined, current evidence affords the provisional conclusion that economic values are indeed represented at the neuronal level. This conclusion might appear deceptively obvious. In fact, a concept of value rooted in neural evidence is a paradigmatic step forward compared to how values have been conceptualized in the past century. Indeed, both behaviorism and neoclassical economics – arguably the dominant theories of choice in psychology and economics since the 1930s – explicitly state that values are purely descriptive entities, not mental states. For this reason, the demonstration that economic values are neurally and thus psychologically real entities may be regarded as a major success for the emerging field of neuroeconomics.

SUMMARY POINTS.

Different types of decision (e.g., perceptual decisions, economic choice, action selection, etc.) involve different mental operations and different brain mechanisms. Economic choice involves assigning values to different goods and comparing these values.

Measuring the neural representation of economic value requires letting subjects choose between different options, inferring subjective values from the indifference point, and using that measure to analyze neural activity.

A representation of economic value is abstract if neural activity does not depend on the sensori-motor contingencies of choice and if the representation is domain-general. Such an abstract representation exists in the OFC and vmPFC. Lesions to these areas specifically disrupt economic choice behavior.

The representation of value in the OFC is menu invariant. In other words, values assigned to different goods are independent of one another. Menu invariance implies preference transitivity.

Values computed in different behavioral conditions may vary substantially. The representation of value in the OFC is range adapting. In other words, a given range of neural activity represents different value ranges in different behavioral conditions.

While computing the value of a given good, subjects integrate a variety of determinants. Some determinants may be learned, while others (e.g., the current motivational state) are not. Thus values are computed online at the time of choice.
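A minimal sketch of computing a value on demand from several determinants, rather than retrieving it from a stored table. The integration rule (quantity weighted by probability, hyperbolic discounting of delay, a subtractive effort cost, and a hunger factor standing in for motivational state) and all parameter names and values are hypothetical; they illustrate the idea of a non-learned determinant entering the computation at the time of choice, not an established neural algorithm.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    quantity: float     # amount of the commodity
    probability: float  # probability of delivery (risk)
    delay: float        # time until delivery (s)
    effort: float       # hypothetical effort cost units


def subjective_value(offer, hunger, k=0.1, effort_cost=0.5):
    """Hypothetical integration rule evaluated at the time of choice.

    hunger (0..1) stands in for the current motivational state, which is not
    learned and can change from one moment to the next.
    """
    discounted = offer.quantity / (1.0 + k * offer.delay)  # hyperbolic delay discounting
    return hunger * offer.probability * discounted - effort_cost * offer.effort


offer = Offer(quantity=4.0, probability=0.5, delay=10.0, effort=1.0)
hungry = subjective_value(offer, hunger=1.0)  # positive: worth working for
sated = subjective_value(offer, hunger=0.2)   # negative: effort outweighs benefit
print(hungry, sated)
```

Because the value is recomputed from the current state on every call, no stored value has to be updated when motivation changes.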

A neural representation of action values may contribute to economic choice only if three conditions are met: neural activity must be genuinely motor; neural activity must be modulated by subjective value; neural activity must not be downstream of the decision.

In addition to guiding an action, values and choice outcomes inform a variety of cognitive and neural systems, including sensory systems (through perceptual attention), learning (e.g., through mechanisms of reinforcement learning) and emotion (including autonomic functions).

FUTURE ISSUES

Where in the brain are different determinants of value (e.g., risk, cost and delay) computed, and how are they represented?

The process of integrating multiple determinants into a value signal can be thought of as analogous to computing a non-linear function with many arguments. How is this computation implemented at the neuronal level? Can it be captured with a computational model?

What are the neuronal mechanisms through which different values are compared to make a decision? Are the underlying algorithms similar to those observed in other brain systems?

Assuming that choices indeed take place in goods space, what are the neuronal mechanisms through which a choice outcome is transformed into an action plan?

In the OFC and other areas, neurons may encode values in a positive or negative way (i.e., the encoding slope may be positive or negative). Do these two neuronal populations play different roles in choice behavior?
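One simple way to assign an encoding sign, assumed here for illustration, is the slope of a linear regression of a neuron's firing rate on offer value. The cells, values and rates below are hypothetical; a real analysis would also test whether the slope differs significantly from zero.

```python
def encoding_slope(values, rates):
    """Ordinary least-squares slope of firing rate regressed on offer value."""
    n = len(values)
    mean_v = sum(values) / n
    mean_r = sum(rates) / n
    cov = sum((v - mean_v) * (r - mean_r) for v, r in zip(values, rates))
    var = sum((v - mean_v) ** 2 for v in values)
    return cov / var


values = [0, 1, 2, 3, 4]                # hypothetical offer values
cell_pos = [2.0, 4.1, 5.9, 8.2, 9.8]    # hypothetical rates (spikes/s)
cell_neg = [10.0, 8.0, 6.1, 3.9, 2.0]
for name, rates in [("cell_pos", cell_pos), ("cell_neg", cell_neg)]:
    slope = encoding_slope(values, rates)
    print(name, "positive" if slope > 0 else "negative", round(slope, 2))
```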

Abstract representations of value appear to exist in the primate OFC and vmPFC, but the relative contributions of these two brain regions to choice behavior are not clear. In fact, the anatomical connectivity of the “orbital network” and “medial network” is markedly different. How do OFC and vmPFC contribute to economic choices?

No abstract representation of value has yet been found in the rodent OFC, a striking difference from primates. Possible reasons for this discrepancy include poor homology between “OFC” as defined in different species, the possibility that an abstract representation of value emerged late in evolution, and differences in experimental procedures. How can these species differences best be explained?

Choice traits such as temporal discounting, risk aversion and loss aversion ultimately affect subjective values. Thus their neuronal correlates can be (and have been) observed by measuring neural activity encoding subjective value. However, these measures generally do not explain the neurobiological origin of these choice traits. Can temporal discounting and other choice traits be explained as the result of specific neuronal properties?

ACKNOWLEDGEMENTS

This article is dedicated to my father Tommaso Padoa-Schioppa, in memory. I am grateful to John Assad and Xinying Cai for helpful discussions and comments on the manuscript. My research is supported by the NIMH (grant MH080852) and the Whitehall Foundation (grant 2010-12-13).

GLOSSARY

- Action plan

An action plan reflects the spatial nature of the action, including the kinematics and/or the dynamics of the movement.

- Action value

A neuron encodes an action value if it is preferentially active when a particular action is planned and if it is modulated by the value associated with that action.

- Action-based models

According to action-based models, economic decisions are made by comparing action values.

- Determinant

A determinant is a dimension on which goods may vary. During choice, different determinants are integrated into a subjective value.

- Good

A good is defined by a commodity and by a collection of determinants.

- Good-based models

According to good-based models, values are computed and compared in goods space. The choice outcome subsequently guides an action plan.

- Menu invariance

Menu invariance holds true if values are assigned to different goods independently of one another. Menu invariance implies preference transitivity.

- Preference transitivity

Preferences (indicated by ‘>’) satisfy transitivity if, for any three goods X, Y and Z, X>Y and Y>Z imply X>Z. Transitivity is the hallmark of rational decision making.

- Sensori-motor contingencies of choice

The spatial location of the offers and the action (the movement of the body in space) executed to obtain the chosen good.

ACRONYMS

- ACC

anterior cingulate cortex

- BOLD

blood-oxygen-level-dependent

- LIP

lateral intraparietal

- OFC

orbitofrontal cortex

- RL

reinforcement learning

- vmPFC

ventromedial prefrontal cortex

Footnotes

If values were learned and retrieved from memory, these results would have to be interpreted by assuming that, during the devaluation phase, the brain "automatically" updates stored values to reflect the new motivational state. However, this hypothesis seems implausible given that the motivational appeal of different goods is in perpetual evolution. For example, the value an individual assigns to any given food changes many times a day: during and after every meal, every time the individual exercises, or simply over time as blood sugar levels drop. Thus the hypothesis that values are learned and retrieved implies that the brain holds and constantly updates a large look-up table of values, a rather expensive design. The hypothesis put forth here, that values are only computed when needed, appears more parsimonious.

This observation remains valid beyond the domain of economic choice. Indeed, neural activity that could be interpreted in terms of action values generally violates condition (a) and/or condition (c). Consider, for example, condition (c). To satisfy it, experiments must be designed so that the decision and the subsequent motor response can be dissociated in time (Bennur and Gold, 2011; Cai and Padoa-Schioppa, 2010; Cisek and Kalaska, 2002; Gold and Shadlen, 2003; Horwitz et al, 2004; Wunderlich et al, 2010). Evidence that decisions cannot be made in the absence of action planning would support the action-based hypothesis. However, we are not aware of any such evidence. On the contrary, recent results by Bennur and Gold (2011) demonstrate that perceptual decisions can occur in the absence of any action planning. In this respect, it is interesting that a study by Cisek and Kalaska (2002), explicitly designed to satisfy condition (c), obtained results that strikingly violate condition (a). In their experiment, monkeys were first shown two potential targets for a reaching movement. Subsequently, the ambiguity was resolved in favor of one of the two targets. Insofar as this task requires a “decision”, neurons encoding potential movements prior to the final instruction would be consistent with the “decision” unfolding as a process of action selection. Remarkably, the authors did not find any evidence for such neurons. Indeed, cells in motor and premotor cortices (areas F1 and F2) did not activate before the final instruction. Conversely, neurons that activated prior to the final instruction were in prefrontal cortex (area F7) and thus most likely not motor (Picard and Strick, 2001).

ADDITIONAL READINGS

Niehans J, 1990. A history of economic theory: classic contributions, 1720–1980. Johns Hopkins University Press, 578 pp.

Ross D, 2005. Economic theory and cognitive science: microexplanation. MIT Press, 444 pp.

Kagel JH, Battalio RC, Green L, 1995. Economic choice theory: an experimental analysis of animal behavior. Cambridge University Press, 230 pp.

Heyman GM, 2009. Addiction: a disorder of choice. Harvard University Press, 200 pp.

Padoa-Schioppa C, 2008. The syllogism of neuro-economics. Economics and Philosophy 24: 449–57

Glimcher PW, 2011. Foundations of neuroeconomic analysis. Oxford University Press.

REFERENCES

- Adams CD. Variations in the sensitivity of instrumental responding to reinforcer devaluation. Q J Exp Psych. 1982;34B:77–98.

- Adams CD, Dickinson A. Instrumental responding following reinforcer devaluation. Q J Exp Psych. 1981;33B:109–121.

- Amador N, Schlag-Rey M, Schlag J. Reward-predicting and reward-detecting neuronal activity in the primate supplementary eye field. J Neurophysiol. 2000;84:2166–2170. doi: 10.1152/jn.2000.84.4.2166.

- Balleine BW, Dickinson A. Goal-directed instrumental action: contingency and incentive learning and their cortical substrates. Neuropharmacology. 1998;37:407–419. doi: 10.1016/s0028-3908(98)00033-1.

- Balleine BW, Ostlund SB. Still at the choice-point: action selection and initiation in instrumental conditioning. Ann N Y Acad Sci. 2007;1104:147–171. doi: 10.1196/annals.1390.006.

- Baxter MG, Gaffan D, Kyriazis DA, Mitchell AS. Ventrolateral prefrontal cortex is required for performance of a strategy implementation task but not reinforcer devaluation effects in rhesus monkeys. Eur J Neurosci. 2009;29:2049–2059. doi: 10.1111/j.1460-9568.2009.06740.x.

- Bennur S, Gold JI. Distinct representations of a perceptual decision and the associated oculomotor plan in the monkey lateral intraparietal area. J Neurosci. 2011;31:913–921. doi: 10.1523/JNEUROSCI.4417-10.2011.

- Berlin HA, Rolls ET, Kischka U. Impulsivity, time perception, emotion and reinforcement sensitivity in patients with orbitofrontal cortex lesions. Brain. 2004;127:1108–1126. doi: 10.1093/brain/awh135.

- Brooks AM, Pammi VS, Noussair C, Capra CM, Engelmann JB, Berns GS. From bad to worse: striatal coding of the relative value of painful decisions. Front Neurosci. 2010;4:176. doi: 10.3389/fnins.2010.00176.

- Bundesen C. A theory of visual attention. Psychol Rev. 1990;97:523–547. doi: 10.1037/0033-295x.97.4.523.

- Cai X, Kim S, Lee D. Heterogeneous coding of temporally discounted values in the dorsal and ventral striatum during intertemporal choice. Neuron. 2011;69:170–182. doi: 10.1016/j.neuron.2010.11.041.

- Cai X, Padoa-Schioppa C. Dissociating economic choice from action selection. Society for Neuroscience Meeting Planner. 2010.

- Camerer C. Behavioral game theory: experiments in strategic interaction. Princeton, NJ: Russell Sage Foundation - Princeton University Press; 2003.

- Christopoulos GI, Tobler PN, Bossaerts P, Dolan RJ, Schultz W. Neural correlates of value, risk, and risk aversion contributing to decision making under risk. J Neurosci. 2009;29:12574–12583. doi: 10.1523/JNEUROSCI.2614-09.2009.

- Cisek P, Kalaska JF. Simultaneous encoding of multiple potential reach directions in dorsal premotor cortex. J Neurophysiol. 2002;87:1149–1154. doi: 10.1152/jn.00443.2001.

- Colwill RM, Rescorla RA. Associative structures in instrumental conditioning. In: Bower GH, editor. The psychology of learning and motivation. Orlando, FL: Academic Press; 1986. pp. 55–104.

- Critchley HD. Neural mechanisms of autonomic, affective, and cognitive integration. J Comp Neurol. 2005;493:154–166. doi: 10.1002/cne.20749.

- Damasio AR. Descartes' error: emotion, reason, and the human brain. New York, NY: Putnam; 1994. xix, 312 pp.

- De Martino B, Kumaran D, Holt B, Dolan RJ. The neurobiology of reference-dependent value computation. J Neurosci. 2009;29:3833–3842. doi: 10.1523/JNEUROSCI.4832-08.2009.

- Dorris MC, Glimcher PW. Activity in posterior parietal cortex is correlated with the relative subjective desirability of action. Neuron. 2004;44:365–378. doi: 10.1016/j.neuron.2004.09.009.

- Feierstein CE, Quirk MC, Uchida N, Sosulski DL, Mainen ZF. Representation of spatial goals in rat orbitofrontal cortex. Neuron. 2006;51:495–507. doi: 10.1016/j.neuron.2006.06.032.

- Fellows LK, Farah MJ. The role of ventromedial prefrontal cortex in decision making: judgment under uncertainty or judgment per se? Cereb Cortex. 2007;17:2669–2674. doi: 10.1093/cercor/bhl176.

- FitzGerald TH, Seymour B, Dolan RJ. The role of human orbitofrontal cortex in value comparison for incommensurable objects. J Neurosci. 2009;29:8388–8395. doi: 10.1523/JNEUROSCI.0717-09.2009.

- Frederick S, Loewenstein G, O'Donoghue T. Time discounting and time preference: a critical review. J Econ Lit. 2002;40:351–401.

- Fredrikson M, Furmark T, Olsson MT, Fischer H, Andersson J, Langstrom B. Functional neuroanatomical correlates of electrodermal activity: a positron emission tomographic study. Psychophysiology. 1998;35:179–185.

- Gallagher M, McMahan RW, Schoenbaum G. Orbitofrontal cortex and representation of incentive value in associative learning. J Neurosci. 1999;19:6610–6614. doi: 10.1523/JNEUROSCI.19-15-06610.1999.

- Glimcher PW. Choice: towards a standard back-pocket model. In: Glimcher PW, Camerer CF, Fehr E, Poldrack RA, editors. Neuroeconomics: decision making and the brain. Amsterdam: Elsevier; 2008. pp. 501–519.

- Glimcher PW, Camerer CF, Fehr E, Poldrack RA. Neuroeconomics: decision making and the brain. Amsterdam: Elsevier; 2008. p. 538.

- Glimcher PW, Dorris MC, Bayer HM. Physiological utility theory and the neuroeconomics of choice. Games Econ Behav. 2005;52:213–256. doi: 10.1016/j.geb.2004.06.011.

- Gold JI, Shadlen MN. The influence of behavioral context on the representation of a perceptual decision in developing oculomotor commands. J Neurosci. 2003;23:632–651. doi: 10.1523/JNEUROSCI.23-02-00632.2003.