Abstract

In this paper, we propose a low-cost contact-free measurement system for both 3-D data acquisition and fast surface parameter registration from digitized points. Although several contact-free measurement techniques for object surface recognition and 3-D object recognition have been proposed during the last decade, they often still require complex and expensive equipment, so low-cost alternatives are in great demand. Here, two low-cost solutions to this problem are presented, both of which are practical applications of the novel passive optical scanning system described in this paper.

Keywords: passive scanning, health monitoring, robot navigation, laser

1. Introduction

Optical scanning is a widely used way to obtain information quickly, safely and cheaply. In this paper, a novel passive optical scanning method is presented.

1.1. Large civil structures health monitoring

Today’s civil infrastructure consists of various units and systems such as buildings, bridges, hydroelectric plant dams, offshore platforms, and transportation and communication systems. Building up this infrastructure has required significant expenditure and long working periods, and its satisfactory performance is vital for our economic activity [1,2].

Buildings and, in general, infrastructures have an enormous impact on the quality of the environment. However, if they degrade because of improper maintenance, they can become very costly. To prevent this, it is necessary to develop maintenance technology for inspection, monitoring, repair and rebuilding, and for producing financial planning recommendations.

Structural health monitoring (SHM) is one of the most important components in the maintenance technology for civil infrastructures and, until now, some methods of structural monitoring have been used successfully, while recently proposed methods offer the possibility of extending applications and improving efficiency.

Damage in a structure generally causes a local increase in flexibility, which depends on the extent of the damage. Current methods of damage detection include visual inspections, classical nondestructive techniques (NDT) and vibration/modal-based methods [1–3]. Most of the NDT techniques are based on regular ground inspections which are time consuming and expensive. Typical NDT methods for damage evaluation include: sonic, ultrasonic, acoustic-ultrasonic, acoustic emissions, pulse-echo, x-ray imaging, ground penetrating radar (GPR), and dye penetrant inspection to detect cracks [4].

Global Positioning System (GPS) technology, along with the use of pseudo-satellites, has been widely used for monitoring the deformation of civil structures. However, this technology has some drawbacks: receivers and pseudo-satellites are expensive, and it cannot be used when the signals are completely blocked by natural or man-made obstacles [5].

Recent advances in the development of new techniques involve the use of embedded smart sensor and actuator technology in order to reduce the need for visual inspection, to assess structural integrity and mitigate potential risk in large civil structures such as highways, bridges and buildings.

These sensors and actuators are typically made of a variety of materials including piezoelectric and magnetostrictive materials, shape memory alloys, electrorheological and magnetorheological fluids, fiber optic sensors, and so on. These materials can typically be embedded into the host matrix material of the structure in order to either excite or measure its state [6,7].

Most of today’s bridges and roads do not have built-in “intelligence”. Therefore, they cannot take advantage of the advanced technologies available for SHM, and many of them are in poor condition due to inadequate maintenance, excess loading (relative to their original design and expected usage), and adverse environmental conditions (salt, acid rain, etc.).

In this paper, the design and potential of the passive optical scanning (POS) aperture for deformation monitoring of civil structures are discussed. In short, POS is based on optical exploration of luminous reference points previously fixed to the civil structure. To determine the damage level of the structure, the coordinates of these points are periodically compared with the values stored in a historical database.

1.2. Vision system for mobile robot navigation

Position determination for a mobile robot along with the ability to measure surfaces and objects in 3-D is an important part of autonomous navigation and obstacle detection, quarry mapping, landfill surveying, and hazardous environment surveying.

Although a significant number of dead reckoning systems use landmark recognition, either by extracting relevant natural features (corners, objects, etc.) with a camera or by identifying beacons placed at specific locations with both a camera and an optical scanner, in many cases dead reckoning is insufficient because it leads to large inaccuracies over time.

Beacon- and landmark-based estimators require the emplacement of beacons and the presence of natural or man-made structures in the environment [8]. Moreover, because image processing takes a non-negligible amount of time and also demands positioning accuracy, this approach cannot support autonomous navigation in many cases. Major drawbacks of beacon-based navigation are that the beacons must be placed within range and that they must be appropriately configured in the robot workspace.

GPS-based navigation for autonomous land vehicles determines the location of a vehicle from satellite signals. Although commercial Differential GPS (DGPS) service improves the accuracy to several meters, this is still not sufficient for autonomous driving. Depending on the type of GPS system, positions can be obtained with errors from 0.02 m to 1 m. Nevertheless, this accuracy cannot be guaranteed all the time in most working environments, where partial satellite occlusion and multipath effects can prevent satisfactory GPS receiver operation [9–13]. Environmental sensors, such as 3-D scanning laser rangefinders and ultrasonic sensors, are used as well.

Given the current state of the art in this area [13–15], we believe that a 3-D laser scanner would be the best choice for building 3-D maps. However, 3-D scanners are expensive for most mobile robot applications, and many of them are not fast enough for real-time map building on fast-moving vehicles because of their relatively slow vertical scan.

A cost-effective alternative for 3-D mapping is to mount a 2-D laser scanner on the front-top of a mobile robot. During motion, the fanning beam of the scanner sweeps over the terrain in front of the robot, effectively creating a 3-D map.

Triangulation-based laser range finders and light-striping techniques have been well known for more than twenty years. In contrast to other active techniques such as structured light, coded light and time of flight, laser range scanners are commonly used for contactless measurement of surfaces and 3-D scenes in a wide range of applications.

Most commercial laser scanning systems use both a camera and a laser beam or laser plane. The surface recovery is based on triangulation (i.e., the intersection of the illuminating laser beam and the rays projected back to the camera [12]), and expensive high-precision actuators are often used for rotating or translating either the laser plane or the object.

In this paper, a scanning vision system has been designed that overcomes the above limitations and meets the existing demand for more advanced mobile vehicle navigation systems.

The system has been developed for visual inspection tasks in both indoor and outdoor environments. The proposed POS system, together with a Laser Positioning System (LPS), allows us to obtain 3-D information about possible obstacles in front of a mobile robot, to make intelligent decisions and to carry out automatic navigation tasks. This paper also focuses on some of the design and application issues of the POS aperture.

Finally, it is important to highlight that a novel passive scanning aperture (PSA) device is introduced in this paper in order to increase spatial resolution, as the central part of a proposed system with the technical tasks of SHM and robot vision.

2. Problem Statement

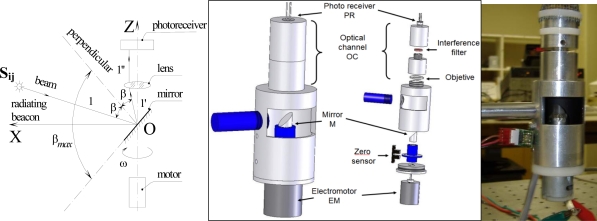

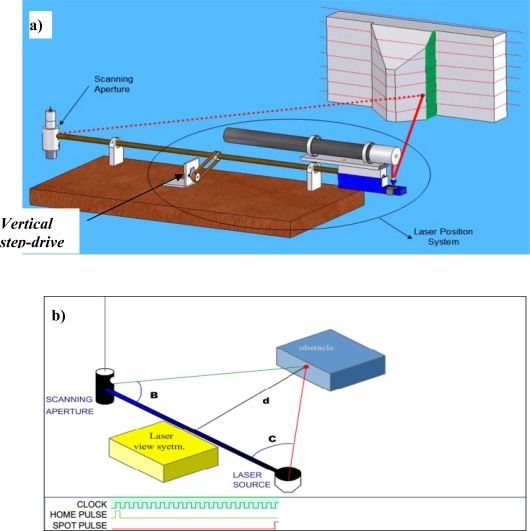

The foundation for solving both the navigation and the civil structure monitoring tasks is the POS (see Figure 1), which has been developed to detect the incidence angle of light coming from monitored structures [14–19].

Figure 1. POS system.

In general, this device is based on the principle of the automated theodolite and, owing to its optical design, it captures optical data at the same rate from active targets located at different heights in the pre-defined field-of-view (FOV). Also, owing to the fixed distance (focal distance F) between the mirror M and the objective lens, the POS is able to register a uniform signal amplitude in the photoreceiver circuit. This allows it to obtain the same coordinate accuracy for points located at different heights within the desired FOV.

The POS must be placed in a fixed location on the robot or in the SHM system, depending on the application, and it consists of a fixed assembly that supports and aligns the electro-optical elements of the system. The optical elements are the following: a double-convex lens, an interference filter and a 45° cylindrical mirror microrod.

The mirror is mounted on the shaft of a direct current (DC) electric motor (EM) (see Figure 1) that rotates the mirror about its own vertical axis. The POS has two sensors: an opto-switch that indicates the starting point of the mirror rotation (START signal) and a photodiode that detects light emission points (STOP signal) (see Figures 1 and 2) [14–18].

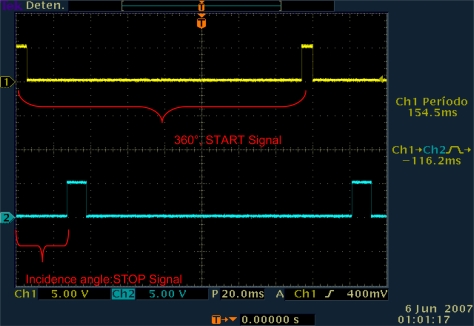

Figure 2. Electric signals generated by the POS system on spot and synchronous point detection.

The main task of the POS is to search in the horizontal plane for the light emission points Sij (see Figure 1) that are within its FOV. Each Sij represents the (i,j)-position of the active target during the scanning (i: length, j: height). These emission points can either be fixed beforehand on a study surface or be reflection points of light on a surface.

The principle of operation of the POS is as follows: the mirror rotates at a constant speed, and whenever it passes the initial reference point of its rotation, a START signal is generated (see Figure 2). This starts the counting of pulses of a constant high-frequency reference signal fo, which continues until the instant at which the scanning plane coincides with the emitting point Sij.

Figure 2 is a typical screenshot from the Tektronix TDS-3012B two-channel oscilloscope connected to the zero-sensor circuit and the photoreceiver circuit of the prototype under real-time operating conditions. Here, the scanning plane is the plane formed by two intersecting straight lines: the motor rotation axis and the projection of the gravitational force line onto the plane of the mirror. When the point Sij lies in this imaginary scanning plane, the STOP pulse is formed. Only at this short instant, when the check point Sij coincides with the scanning plane, is it physically possible for part of the light energy emitted by Sij to be transmitted. When this happens, the ray caught by the mirror is reflected at the same angle at which it arrived, towards the double-convex lens, and passes through the interference filter until it reaches the photodetector, generating a STOP signal.

If no emitted ray of light is detected during a complete rotation of the mirror, the fo pulse counter is reset.

In this process we can find the horizontal coincidence angle Bi between the START signal and the STOP signal,

$$B_i = \frac{2\pi N_{Bi}}{N_{2\pi 1}} \qquad (1)$$

where NBi is the number of pulses of fo between the START and the STOP signals, and N2π1 is the number of pulses of fo in a complete rotation of the mirror. These codes depend on the rotation speed of the motor ω and the frequency fo [19].
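As a minimal sketch of this counting scheme, the snippet below converts the two pulse counts into an angle, assuming the angle is simply the fraction of a full turn elapsed between START and STOP at constant mirror speed. The function name `coincidence_angle` and the sample counts are hypothetical and are not taken from the prototype.

```python
import math


def coincidence_angle(n_start_stop: int, n_full_turn: int) -> float:
    """Return the angle (radians) swept between the START and STOP signals.

    n_start_stop: reference pulses counted between START and STOP.
    n_full_turn:  reference pulses counted over one full mirror rotation.
    Assumes constant mirror speed, so the pulse count is proportional to angle.
    """
    if n_full_turn <= 0:
        raise ValueError("n_full_turn must be positive")
    if not 0 <= n_start_stop <= n_full_turn:
        raise ValueError("n_start_stop must lie within one rotation")
    return 2.0 * math.pi * n_start_stop / n_full_turn


# Hypothetical counts: STOP arrives a quarter of the way through the turn.
angle = coincidence_angle(2500, 10000)
print(f"{math.degrees(angle):.3f} deg")
```

Because only the ratio of the two counts enters the result, a slow drift of the motor speed affects the angle only to the extent that the speed changes within a single rotation.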

3. Practical Applications of the Proposed Method

3.1. Monitoring of civil structures

One of the applications of the POS is the monitoring of civil structures to determine their health [20]. These structures may differ considerably from one another, and the proposed system configuration can be slightly modified to obtain the desired data from the surface of each structure in an optimal way.

3.1.1. Bridge monitoring

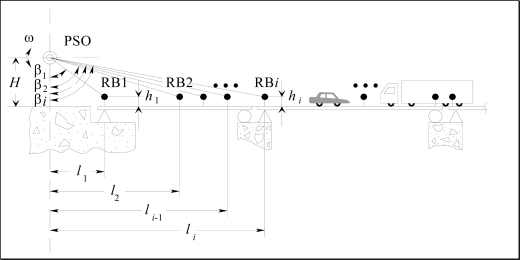

As an example, we consider the bridge shown in Figure 3. Here it is necessary to install Radiating Beacons (RB) on the surface of the structure at the same height hi. The distance li between each RB is measured during the installation. To measure the horizontal and vertical displacements of each RB, two POS are installed: one POS to measure horizontal angles (HPOS), with vertical axis rotation, and the other POS to measure elevation angles (VPOS), with horizontal axis rotation.

Figure 3. Horizontal POS placement for bridge monitoring.

Then, the following expression is valid for each RBi from i = 1 to n when there is no deformation:

| (2) |

When there is a deformation of magnitude Δhi at the point RBi, the angle βi measured by the POS changes by Δβi, and expression (2) becomes:

| (3) |

Therefore:

| (4) |

In this case, the system should not be affected by the vibrations of the structure. The rotation velocity of the POS is fixed according to the natural vibration frequency of the monitored structure and the POS-to-structure distance, so that abnormal distortions can be observed.

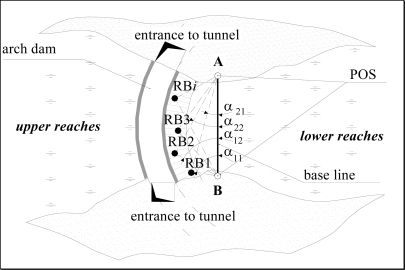

3.1.2. Arch dam monitoring

Figure 4 shows the placement of two vertical POSs for monitoring the arch dam of a hydroelectric power station. The POSs measure, in pairs, the horizontal angles α1i and α2i between the base line AB and RBi. The length D of the base line and the Cartesian coordinates of the POSs are determined beforehand by known geodetic methods.

Figure 4. Placement of two vertical POSs for arch dam monitoring.

The angles ∠RBi are calculated with the following equation:

$$\angle RB_i = 180^\circ - (\alpha_{1i} + \alpha_{2i}) \qquad (5)$$

Using the law of sines, we find the sides of the triangle with vertices A, B and RBi, and hence the Cartesian coordinates xi, yi of the points RBi. If the RBi coordinates change, we will register changes in the angles α1i and α2i, which indicate dam deformation. The POS system is installed in a similar manner for tunnel monitoring. In extended tunnels, several functionally connected POSs can be placed along the tunnel axis.
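The two-angle triangulation described above can be sketched as follows. This is only an illustration under an assumed coordinate frame that is not given in the paper: A is placed at the origin, B at (D, 0), and both angles are measured from the base line AB. The angle at the beacon follows from the angle sum of the triangle, and the function name `beacon_position` is hypothetical.

```python
import math


def beacon_position(alpha1_deg: float, alpha2_deg: float, baseline: float):
    """Locate a beacon from the horizontal angles measured at A and B.

    Assumed frame (not from the paper): A at the origin, B at (baseline, 0),
    alpha1 measured at A and alpha2 at B, both from the base line AB.
    Returns the (x, y) coordinates of the beacon.
    """
    a1 = math.radians(alpha1_deg)
    a2 = math.radians(alpha2_deg)
    apex = math.pi - (a1 + a2)  # angle at the beacon (triangle angle sum)
    if a1 <= 0 or a2 <= 0 or apex <= 0:
        raise ValueError("angles do not form a valid triangle")
    # Law of sines: |A-RB| / sin(alpha2) = baseline / sin(apex)
    side_from_a = baseline * math.sin(a2) / math.sin(apex)
    return side_from_a * math.cos(a1), side_from_a * math.sin(a1)


# Hypothetical reading: both POSs see the beacon at 45 degrees, D = 2 m.
x, y = beacon_position(45.0, 45.0, 2.0)
print(f"x = {x:.3f} m, y = {y:.3f} m")
```

Repeating the computation at each measurement epoch and comparing the resulting coordinates with stored reference values would flag a displacement of the beacon.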

3.2. Scanning vision system for robot navigation

The scanning vision system is another application of the POS [21–23]. In this case, it is only necessary to install one POS together with a scanning LPS. Figure 5 shows a simple diagram of a triangulation scanner. The laser beam, reflected from a mirror in the LPS, is projected onto the object. When laser spots are projected, the diffusely reflected light is collected by the POS.

Figure 5. POS and LPS placement for the automatic navigation task: a) general view of the system showing the interaction of its parts in operation; b) triangulation angles, distance and signals from the sensors and the reference f (“clock” pulses).

The laser positioning circuitry (see Figure 5) controls both the emission angle Cij and the elevation angle Σβi. The elevation angle is not shown in Figure 5; however, it is calculated in exactly the same way as the angle Cij, by directly counting the steps of the vertical step drive (see Figure 5a). The angle Bij is calculated from the POS measurements, and the triangulation distance a between the centers of the POS mirror and the LPS mirror (see Figure 5b) is also known. In other words, the distance a is equal to the base-bar length.

As shown in Figure 5, since all geometric parameters are known, the x, y, z coordinates of the point Sij on the object can be computed trigonometrically. If a single laser dot is projected, the system measures the coordinates of just one point of the object [19]. When a discrete laser stripe is projected, all points along the stripe are digitized. The basic triangulation scheme can be enhanced to improve the optical quality and depth of field by simply varying the motor step size and the number of steps.
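One possible way to carry out this trigonometric computation is sketched below. The coordinate frame is an assumption made for illustration and is not taken from the paper: the origin is at the POS mirror, y runs along the base bar towards the LPS, x points away from the bar at zero elevation, z points up, and the angles B and C are measured from the base bar inside the plane tilted by the elevation angle. The function name `spot_coordinates` is hypothetical.

```python
import math


def spot_coordinates(b_deg: float, c_deg: float, elevation_deg: float, base: float):
    """Triangulate a laser spot from the POS angle B, the LPS angle C,
    the elevation angle and the base length a.

    Assumed frame (hypothetical): origin at the POS mirror, y along the base
    bar towards the LPS, x away from the bar at zero elevation, z up.
    """
    b = math.radians(b_deg)
    c = math.radians(c_deg)
    elev = math.radians(elevation_deg)
    if b <= 0 or c <= 0 or b + c >= math.pi:
        raise ValueError("B and C do not form a valid triangle with the base")
    # Perpendicular distance from the base bar to the spot (law of sines).
    d = base * math.sin(b) * math.sin(c) / math.sin(b + c)
    y = d / math.tan(b)      # offset along the bar, measured from the POS
    x = d * math.cos(elev)   # horizontal range component
    z = d * math.sin(elev)   # height component
    return x, y, z


# Hypothetical reading: B = C = 45 degrees, no elevation, base bar of 2 m.
print(spot_coordinates(45.0, 45.0, 0.0, 2.0))
```

Sweeping C and the elevation angle step by step while reading B from the POS at each step would yield one 3-D point per laser spot.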

If we place the fast-operating POS on autonomous mobile objects, such as robots, vehicles, transportation carriages, military devices, and so on, we can use it as an artificial vision system for automatic navigation tasks.

4. Experimental Results of the Prototype

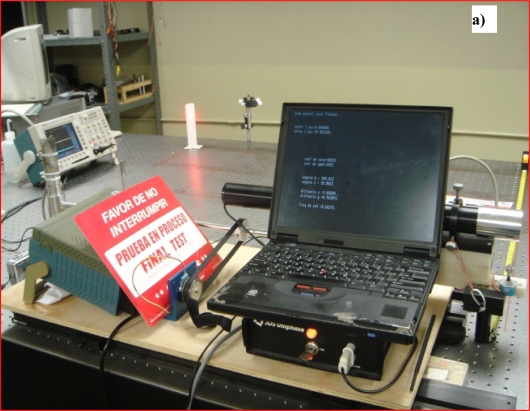

The system shown in Figure 5 was designed and constructed for laboratory tests [22–25]. The experiment to obtain the coordinates (x,y) of an illuminated point in the scene was carried out using a JDS Uniphase Model 1135P laser source (20 mW; He-Ne; see Figure 6).

Figure 6. Experiments using a laser spot: a) on an obstacle at a variable position; b) to determine the coordinates (x,y).

Parameters of the prototype:

- Sampling rate: 231 points per image in a fixed FOV of 1 × 2 m with a reference pulse train of 114.675 kHz.

- Rotation speed: for the JAMECO DC motor MD5-2554AS-AA, the speed of rotation is 13,860 rpm (231 rps) at 17,000 rpm, and the output torque is equal to 72.92 g·cm. In practice, however, the speed was reduced to 7–13 rps because of the response time of the selected photosensor.

- Dynamic range: 7–13 Hz with a 114.675 kHz reference frequency at maximum speed.

- Spectral range: λ = 625–740 nm for the sensor, with its peak value near the laser bandwidth, and 633 nm for the laser.

- Spatial resolution: for a distance of 2.5 m on the optical table, it was 0.001–0.01 of the x,y-value at the best points, in the center of the FOV, and 0.05–0.08 of the x,y-value at the worst points, at the edge of the FOV. Detailed experimental data are presented in [22] and [23].
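To relate these parameters to the pulse-counting principle, the short calculation below estimates how many reference pulses fit into one mirror rotation and the angle swept per pulse. It uses the 114.675 kHz reference frequency and the rotation rates quoted above. Treating one reference pulse as the smallest angular step is an assumption that ignores the photosensor response and the finite STOP pulse width, and the function names are hypothetical.

```python
F_REF_HZ = 114_675.0  # reference pulse train quoted for the prototype


def pulses_per_revolution(f_ref_hz: float, rev_per_s: float) -> float:
    """Reference pulses counted during one full mirror rotation."""
    if rev_per_s <= 0:
        raise ValueError("rev_per_s must be positive")
    return f_ref_hz / rev_per_s


def angular_step_deg(f_ref_hz: float, rev_per_s: float) -> float:
    """Angle swept by the mirror during one reference pulse, in degrees."""
    return 360.0 / pulses_per_revolution(f_ref_hz, rev_per_s)


# Rotation rates mentioned in the parameter list: 7 and 13 rps in practice,
# 231 rps as the nominal motor speed.
for rps in (7, 13, 231):
    n = pulses_per_revolution(F_REF_HZ, rps)
    step = angular_step_deg(F_REF_HZ, rps)
    print(f"{rps:>3} rps: {n:9.1f} pulses/rev, {step:.4f} deg per pulse")
```

Under this simplified view, a slower rotation gives more pulses per turn and therefore a finer angular step, at the cost of a lower scan rate.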

The experiments to test the accuracy of the incidence angle measurement were carried out using an active target in the form of a light bulb, in this case a 12 VDC 50 W incandescent lamp (see Figure 7). However, to increase the striking distance it is still possible to use an LED or a super-bright LED.

Figure 7. 90° angle measurement using a light bulb as the energy source.

Experimental data based on 200 samples of 45° and 90° measurement angles are shown in Table 1.

Table 1. Statistical data for 45° and 90° measurement angles.

| | 90° | 45° |
|---|---|---|
| Average | 90.278 | 45.316 |
| Standard error | 0.142 | 0.035 |
| Median | 90.201 | 45.284 |
| Standard deviation | 1.419 | 0.354 |
| Variance | 2.014 | 0.125 |
| Asymmetry coefficient | 0.302 | 0.080 |
| Range | 7.168 | 1.984 |
| Min. value | 86.495 | 44.296 |
| Max. value | 93.663 | 46.280 |
| Samples | 100 | 100 |
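As a quick consistency check on Table 1, the snippet below verifies that the tabulated values agree with one another: the row giving the error of the mean (0.142 and 0.035) matches the standard error s/√n for n = 100, the variance matches the squared standard deviation, and the range matches the difference between the maximum and minimum values. Interpreting that row as the standard error of the mean is an assumption, supported only by this numerical agreement, and the tolerance of 0.001 reflects the three-decimal rounding of the table.

```python
import math

N_SAMPLES = 100  # samples per angle, as listed in Table 1

# Values copied from Table 1.
TABLE = {
    "90 deg": {"std": 1.419, "std_err": 0.142, "var": 2.014,
               "min": 86.495, "max": 93.663, "range": 7.168},
    "45 deg": {"std": 0.354, "std_err": 0.035, "var": 0.125,
               "min": 44.296, "max": 46.280, "range": 1.984},
}


def is_consistent(row: dict, n: int, tol: float = 1e-3) -> bool:
    """Check the internal relations between the tabulated statistics."""
    std_err_ok = abs(row["std"] / math.sqrt(n) - row["std_err"]) <= tol
    variance_ok = abs(row["std"] ** 2 - row["var"]) <= tol
    range_ok = abs((row["max"] - row["min"]) - row["range"]) <= tol
    return std_err_ok and variance_ok and range_ok


for label, row in TABLE.items():
    print(label, "consistent:", is_consistent(row, N_SAMPLES))
```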

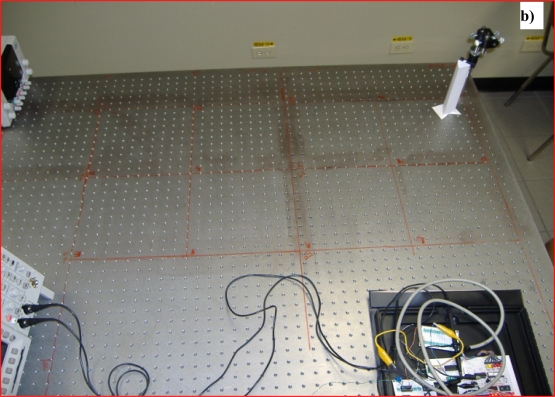

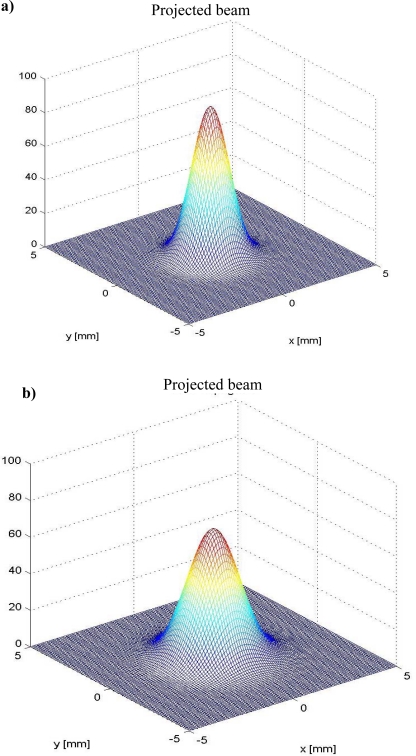

From Figure 8 it can be seen that the optical signal amplitude is sensitive to both rotation velocity and striking distance, and that this relation is strongly non-linear.

Figure 8. Signal energy attenuation and distribution in the projected spot: a) striking distance 2.5 m; b) striking distance 5.0 m.

For each one of the 2.5 m intervals within the expected striking distance 0–100 m, the computational model for a spatial intensity attenuation of the radiated light from 0% to 95% was simulated by using the Matlab software Poon. Since the experiments were limited in practice by the geometrical size of the optical table (see Figures 6 and 7), Figure 8 shows radial power distribution of laser beam for the revised cases of striking distance 2.5 m and 5.0 m, respectively.

As can be seen from Figure 8, the registered light emission, converted to an electrical signal by the photoreceiver (Silicon Detector 15.0MM2, Edmund Optics), can vary significantly even at small distances. For this reason, it was also expedient to carry out additional experimental research on these limitations for the declared practical applications. For the navigation task, at distances from 5 m to 10 m, the performance of the proposed configuration was satisfactory.

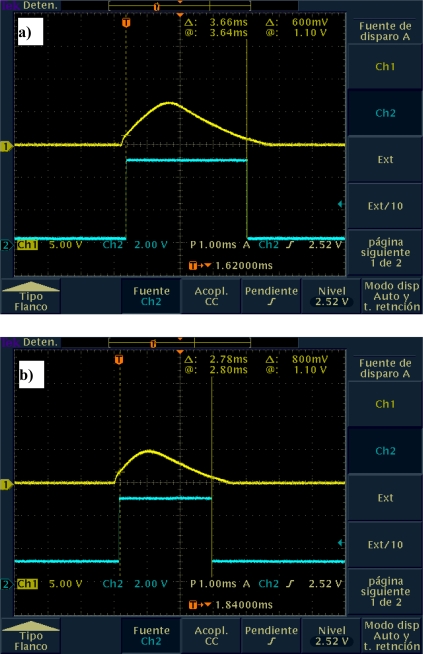

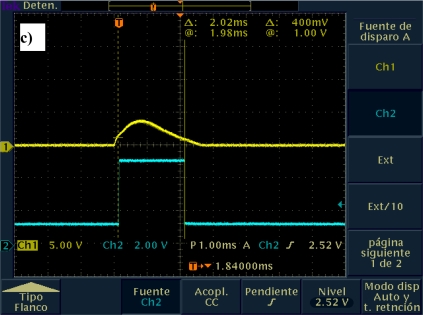

The output signal of the POS was analyzed in order to determine the dynamic limits of this system. Figure 9 shows changes in the amplitude of the output signal of the POS system with respect to changes in the speed of the DC motor.

Figure 9. POS output signal amplitude variation according to the rpm of the mirror motor.

As the speed of the DC motor increases, the signal amplitude decreases because of the capacitance of the photodiode [14–18]. According to Figure 9, when the scanning velocity varies from 2–3 rps up to 25 rps, both the pulse amplitude (from 400 mV up to 1.10 V) and the pulse width (from 2.02 ms up to 3.66 ms) vary. Such variations can easily be corrected by signal conditioning techniques. However, for the reasons given above, these speed parameters are already acceptable for normal operation in SHM tasks.

5. Conclusions

In this paper, a contact-free measurement system for object surface recognition, aimed at 3-D object recognition, has been presented. This system has the following advantages over other 3-D triangulation scanners:

- It requires neither expensive non-telescope tubes for scanning ray positioning nor a megapixel sensor matrix.

- It can be adjusted for use in a wide range of practical applications, because the step size of the emitter subsystem can be changed for each specific task.

The results of the application of the remote precise optical-scanning system presented in this paper have been satisfactory.

Acknowledgments

This work has been partially supported by the Autonomous University of Baja California, Mexico (projects No. 2352, in 2003–2004 and N2386, in 2005–2006), the Ministry of Science and Innovation (MICINN) of Spain under the research project TEC2007-63121, and the Universidad Politecnica de Madrid.

References and Notes

- 1. James G., Mayes R., Carne T., Reese G. Damage Detection and Health Monitoring of Operational Structures; American Society of Mechanical Engineers-Winter Annual Meeting; Chicago, IL, USA. November 6–11, 1994; Experimental Structural Dynamics Department, Sandia National Laboratories; p. 10. Technical report.

- 2. Doebling S.W., Farrar C.R., Prime M.B., Shevitz D.W. Damage Identification and Health Monitoring of Structural and Mechanical Systems from Changes in Their Vibration Characteristics: A Literature Review. Los Alamos National Laboratory, University of California; Los Alamos, NM, USA: 1996. p. 136. Report LA-12767-MS.

- 3. Mallet L., Lee B.B., Staszewski W.J., Scarpa F. Structural health monitoring using scanning laser vibrometry: II. Lamb waves for damage detection. Smart Mater. Struct. 2004;13:261–269.

- 4. Sohn H., Farrar S.R., Hemez F.N., Shunk D., Stinemates D.W., Nader B.R. Review of Structural Health Monitoring Literature from 1996–2001. Los Alamos National Laboratory, University of California; Los Alamos, NM, USA: 2003. Report LA-13976-MS.

- 5. Dai L., Wang J., Rizos C., January S. Pseudo-satellite applications in deformation monitoring. GPS Solutions. 2002;5:80–87.

- 6. Pines D.J., Lovell P.A. Conceptual framework of a remote wireless health monitoring system for large civil structures. Smart Mater. Struct. 1998;7:627–636.

- 7. Tennyson R.C., Mufti A.A., Rizkalla S., Tadros G., Benmokrane B. Structural health monitoring of innovative bridges in Canada with fiber optic sensors. Smart Mater. Struct. 2001;10:560–573.

- 8. Hancock J., Langer D., Hebert M., Sullivan R., Ingimarson D., Hoffman E., Mettenleiter M., Froehlich C. Active laser radar for high-performance measurements. Proceedings of the 1998 IEEE International Conference on Robotics & Automation; Leuven, Belgium. May 1998; pp. 1465–1470.

- 9. Thomas J.I., Oliensis J. Automatic position estimation of a mobile robot. Proceedings of Ninth Conference on Artificial Intelligence for Applications; Orlando, FL, USA. 1993; pp. 438–444.

- 10. Wang L., Emura T., Ushiwata T. Automatic guidance of a vehicle based on DGPS and a 3D map. IEEE Intelligent Transportation Systems Conference Proceedings; Dearborn, MI, USA. 2000; pp. 131–136.

- 11. Guivant J., Nebot E., Baiker S. High Accuracy navigation using laser range sensors in outdoor applications. Proceedings of the 2000 IEEE International Conference on Robotics & Automation; San Francisco, CA, USA. April 2000; pp. 3817–3822.

- 12. Winkelbach S., Molkenstruck S., Wahl F.M. Low-cost laser range scanner and fast surface registration approach. Lecture Notes Comput. Sci. 2006;4174:718–728.

- 13. Wehr A., Lohr U. Airborne laser scanning - an introduction and overview. ISPRS J. Photogram. Remote Sens. 1999;54:68–82.

- 14. Hernandez W. Photometer circuit based on positive and negative feedback compensations. Sensors Lett. 2007;5:612–614.

- 15. Hernandez W. Linear robust photometer circuit. Sensors Actuat. A. 2008;141:447–453.

- 16. Hernandez W. Performance analysis of a robust photometer circuit. IEEE Trans. Circuits Syst. Express Briefs. 2008;55:106–110.

- 17. Hernandez W. Robustness and noise voltage analysis in two photometer circuits. IEEE Sens. J. 2007;7:1668–1674.

- 18. Hernandez W. Input-output transfer function analysis of a photometer circuit based on an operational amplifier. Sensors. 2008;8:35–50. doi: 10.3390/s8010035.

- 19. Tyrsa V.Y., Sergiyenko O., Tyrsa V.V., Bravo M., Devia L., Rendon I. Mobile robot navigation by laser scanning means. 3rd International Conference on Cybernetics and Information Technologies, Systems and Applications CITSA – 2006; Orlando, FL, USA. July 20–23, 2006; pp. 340–345.

- 20. López M.R., Sergiyenko O., Tyrsa V., Perdomo W.H., Balbuena D.H., Cruz L.D., Burtseva L., Hipólito J.I.N. Optoelectronic method for structural health monitoring. Int. J. Struct. Health Monit. 2009;4 (in press).

- 21. Sergiyenko O., Burtseva I., Bravo M., Rendón I., Tyrsa V. Scanning vision system for mobile vehicle navigation. IEEE-LEOS Proceedings of Multiconference on Electronics and Photonics (MEP-2006); Guanajuato, Mexico. 2006; pp. 178–181.

- 22. Rivas M., Sergiyenko O., Aguirre A., Devia L., Tyrsa V., Rendón I. Spatial data acquisition by laser scanning for robot or SHM task. IEEE-IES Proceedings of International Symposium on Industrial Electronics (ISIE-2008); Cambridge, UK. 2008; pp. 1458–1463.

- 23. Sergiyenko O., Tyrsa V., Hernandez-Balbuena D., Rivas López M., Rendón López I., Cruz L.D. Precise optical scanning for practical multiapplications. Proceedings of IEEE-34th Annual Conference of IEEE Industrial Electronics (IECON’08); Orlando, FL, USA. November 10–13, 2008; pp. 1656–1661.

- 24. Lopez M.R., Sergiyenko O., Tyrsa V. Machine vision: approaches and limitations. In: Xiong Z., editor. Computer Vision. IN-TECH; Vienna, Austria: 2008. pp. 395–428.

- 25. Balbuena D.H., Sergiyenko O., Tyrsa V., Burtseva L., López M.R. Signal frequency measurement by rational approximations. Measurement. 2009;42:136–144.