Abstract

This paper presents a sensor system for robot localization based on the information obtained from a single camera attached in a fixed place external to the robot. Our approach firstly obtains the 3D geometrical model of the robot based on the projection of its natural appearance in the camera while the robot performs an initialization trajectory. This paper proposes a structure-from-motion solution that uses the odometry sensors inside the robot as a metric reference. Secondly, an online localization method based on a sequential Bayesian inference is proposed, which uses the geometrical model of the robot as a link between image measurements and pose estimation. The online approach is resistant to hard occlusions and the experimental setup proposed in this paper shows its effectiveness in real situations. The proposed approach has many applications in both the industrial and service robot fields.

Keywords: robotics, vision sensor, intelligent spaces

1. Introduction

This paper presents a sensor system composed of a single camera attached to a fixed position and orientation in a bounded environment (indoor workplace) which is observing a mobile robot. The aim of the sensor system is to obtain the orientation and position (i.e., pose) of a mobile robot using both visual information retrieved by the camera and relative odometry readings obtained from the internal sensors of the robot. The camera acquisition and image processing tasks are executed in a specialized hardware, which also controls the behavior and internal sensors of the mobile robot through a wireless channel. The proposed schema allows the robot to perform complex tasks without requiring dedicated processing hardware on it. This approach is sustained in the Intelligent Space [1] concept and it can be equally applied to multiple scenarios, specially both in the industrial field (e.g., automatic crane positioning, autonomous car parking) and in the service fields (e.g., wheelchair positioning in medical environments or autonomous driving of mobile platforms). The single camera solution presented in this paper allows to cover large areas with less cameras compared to multiple camera configurations where overlapped areas are mandatory. This feature reduces the cost and improves the reliability of the intelligent space philosophy.

In this paper, we suppose that the camera is correctly calibrated and thus the parameters governing the projection model of the camera are previously known. To connect the pose of the robot with information found in the image plane of the camera, we propose to obtain a 3D geometric model of the mobile robot. Such model is composed of several sparse points whose coordinates represent some well-localized points belonging to the physical structure of the robot. These points are determined by image measurements, called image features, which usually correspond to corner-like points due to texture changes or geometry changes such as 3D vertexes. Usually in industrial fields the image features are obtained by including some kind of artificial marker on the structure of the robot (infrared markers or color bands). These methods are very robust and can be used to recognize human activity and models with high degrees of freedom (AICON 3D online [2] or ViconPeak online systems [3]). However, in this paper we want to minimize the required “a priori” knowledge of the robot, so that it is not necessary to place artificial markers or beacons on it to detect its structure in the images.

Independent of the nature of the image features, the information obtained from a camera is naturally ambiguous and thus some sort of extra metric information are required in order to solve such ambiguity. In this work, we propose to use the odometry sensors inside the robot (i.e., wheel velocities) to act as the metric reference.

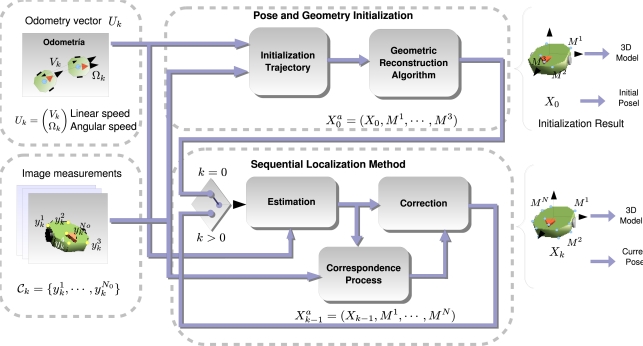

The general diagram of the algorithm proposed in this paper is shown in Figure 1. It shows a clear division of the processes involved in obtaining the pose of the robot: first we denote as “Initialization of Pose and Geometry” to those processes necessary to start up the system, such as the 3D model of the robot and the initial pose it occupies. The initialization consists of a batch processing algorithm where the robot is commanded to follow a certain trajectory so that the camera is able to track some points of the robot’s structure under different viewpoints jointly with the recording of the odometry information. All this information is combined to give the 3D model of the robot and the initial pose it occupies.

Figure 1.

General diagram of the proposed localization system using a vision sensor and odometry readings.

Given the initialization information, the second group of processes, named “Sequential Localization”, provides the pose of the robot in a sequential manner. It is composed of a pose estimator, given odometry readings and a pose correction block which combines the estimation of the pose with image measurements to accurately give a coherent pose with the measurements. This algorithm operates entirely on-line and thus the pose is available at each time sample.

Both group of processes are supplied with two main sources of information:

Image measurements: they consist of the projection in the camera’s image plane of certain points of the robot’s 3D structure. The measurement process is in charge of searching coherent correspondences through images with different perspective changes due to the movement of the robot.

Motion estimation of the robot: The odometry sensors built on-board the robot supply the localization system with an accurate motion estimation in short trajectories but that is prone to accumulative errors in large ones.

1.1. Previous Works

Despite the inherent potential of using external cameras to localize robots, there are relative few attempts to solve it compared to the approach that consider the camera on-board the robot [4, 5]. However, some examples of robot localization with cameras can be found in the literature, where the robot is equipped with artificial landmarks, either active [6, 7] or passive ones [8, 9]. In other works a model of the robot, either geometrical or of appearance [10, 11], is learnt previously to the tracking task. In [12, 13], the position of static and dynamic objects is obtained by multiple camera fusion inside an occupancy grid. An appearance model is used afterwards to ascertain which object is each robot. Despite the technique used for tracking, the common point of many of the proposals found in the topic comes from the fact that rich knowledge is obtained previously to the tracking, in a supervised task.

In this paper the localization of the robot is obtained in terms of its natural appearance. We propose a full metric and accurate system based on identifying natural features belonging to the geometric structure of the robot. On most natural objects we can find points whose image projection can be tracked in the image plane independently of the position the object occupies and based on local properties found in the image (i.e., lines, corners or color blobs). Those points are considered natural markers, as they serve as reference points in the image plane that can be easily relate with their three-dimensional counterparts. The set of methods focused on tracking natural markers have become a very successful and deeply studied topic in the literature [14, 15], as they represent the basic measurements of most of existing reconstruction methods.

Scene reconstruction from image measurements is a classic and mature discipline in the computer vision field. Among the wide amount of proposals it can be highlighted those grouped under the name “Bundle Adjustment” [16, 17]. Their aim is essentially to estimate, jointly and optimally, the 3D structure of a scene and the camera parameters from a set of images taken under some kind of motion. (i.e., it can be the camera that moves or equally some part of the scene w.r.t. the camera).

In general terms, Bundle Adjustment reconstruction methods are based in iterative optimization methods which try to minimize the image discrepancy between the measured positions of the 3D model and the expected ones using the last iteration solution. The discrepancy balances the contribution of the measurements into the final solution and plays an important role in this paper. Our main contribution is a redefinition of the discrepancy function using a Maximum Likelihood approach which takes into account the statistical distribution of the error. This distribution is especially affected by the odometry errors which are accumulative in long trajectories.

On the other hand, once a geometric model is obtained using a structure-from-motion approach, its pose with respect to a global coordinate origin can be easily retrieved by measuring the projection of the model in the image plane. This problem, commonly known as the Perspective n Point Problem (PnP), has received considerable attention in the literature, where some accurate solutions are found such as [18] or the recent global solution proposed in [19]. In this paper we instead follow a filtering approach, where not only image measurements but also last time pose information and odometry information are used to obtain the pose. This approach, which is based on the use of a Kalman Filter, is much more regular than solving the PnP problem at each time instant and will be described in this paper.

The paper is organized as follows. In Section 2. the notation and mathematical elements used in the paper are described. In Section 3. the description of the initialization algorithms is given. The online Kalman loop is explained in Section 4. and some results in a real environment are shown in Section 5. Conclusions are in Section 6.

2. Definitions and Notation

This section presents a description of the symbols and mathematical models used in the rest of the paper.

2.1. Robot and Image Measurements

The robot’s pose at time k is described by a vector Xk. We suppose that the robot’s motion lie on the plane z = 0 referred from the world coordinate origin OW (See Figure 2). The pose vector Xk is described by 3 components (xk, yk, αk), corresponding to two position coordinates xk, yk and the rotation angle αk in the z axis. The motion model Xk = g(Xk−1, Uk) defines the relationship between the current pose Xk with respect to the previous time one Xk−1 and the input Uk given by odometry sensors inside the robot. In our proposal the robot used is a differential wheeled platform and thus Uk = (Vlk, Ωk)T, where Vlk and Ωk describe the linear and angular speed of the robot’s center of rotation (OR in Figure 2) respectively. The motion model g is then very simple in function of the discretized linear speed Vlk and the angular speed Ωk.

Figure 2.

Spatial relationship between world’s coordinate origin OW, robot’s coordinate origin OR and camera’s coordinate origin OC.

The robot’s geometry is composed of a sparse set of N 3D points = {M1, ⋯, MN} referred from the local coordinate origin OR described by robot’s pose Xk. The points Mi are static in time due to robot’s rigidness, and thus, no temporal subindex is required for them. Function uses actual pose Xk to express Mi in the global coordinate origin OW that Xk is referred to (see Figure 2):

| (1) |

where:

| (2) |

The augmented vector , which is the state vector of the system, is defined as the concatenation in one column vector of both the pose Xk and the set of static structure points :

| (3) |

An augmented motion model ga is defined as the transition of the state vector:

| (4) |

It must be noticed that the motion model ga leaves the structure points contained in the state vector untouched, as we suppose that the object is rigid.

The whole set of measurements obtained in the image plane of the camera at time k is denoted by Yk. Each measurement inside Yk is defined by a two-dimensional vector , describing the projection of each point inside in the image plane, where i stands for the correspondence with the point Mi. It must be remarked that, in general, Yk does not contain the image projection of every point in the set M as some of them can be occluded depending on the situation.

The camera is modeled with a zero-skew “pin-hole” model (see [17] for more details), with the following matrix Kc containing the intrinsic parameters:

| (5) |

The extrinsic parameters of the camera (i.e., position and orientation of the camera w.r.t. OW) are described using the rigid transformation Rc, Tc (i.e., rotation matrix and translation vector). The matrix Rc is defined by three Euler angles αc, βc, γc. The vector Tc can be decomposed into its three spatial coordinates Tx, Ty, Tz. All calibration parameters can be grouped inside vector P:

| (6) |

Given a single measurement from the set Yk, its 2D coordinates can be expressed using the “pin-hole” model and the aforementioned calibration parameters:

| (7) |

where represents a projective scale factor that can be obtained so that the third element of the right part of Equation (7) is equal to one. It is important to remark that the projection model, although simple, depends in a non-linear fashion w.r.t. Mi due to the factor . For compactness the projection model of Equation (7) can be described as the function h:

| (8) |

In the same way, the whole vector Yk can be expressed with the following function:

| (9) |

2.2. Random Processes

This paper explicitly deals with uncertainties by describing every process as a random variable with a Gaussian distribution whose covariance matrix stands for its uncertainty. The random processes are expressed in bold typography (i.e., Xk is the random process describing the pose and Xk is a realization of it) and each of them are defined by a mean vector and a covariance matrix. Therefore is defined by its mean and covariance (or simplifying ). The following processes are considered in the paper:

Pose Xk = 𝒩(X̂k, ∑k) and 3D model M = 𝒩(M̂, ∑M) processes. Its joint distribution is encoded in .

Measurement process Yk = 𝒩(Ŷk, ∑Yk), whose uncertainty comes from errors in image detection.

Odometry input values Uk = 𝒩(Ûk, ∑Uk), which are polluted by random deviations.

3. Initialization Process

The initialization step consists of obtaining the initial pose the robot occupies and a 3D model of its structure using visual features (i.e., to obtain an initial guess of ). The importance of this step is crucial, as the robot’s geometric model serves as the necessary link between robot’s pose and image measurements. The initialization allows to use afterwards the online approach presented in Section 4.

A delayed initialization approach is proposed, based on collecting image measurements and the corresponding odometry position estimation along a sequence of time (i.e., k = 1, ⋯, K). After taking a sufficient amount of measurements of the object in motion, an iterative optimization method is used to obtain the best solution for according to a cost criterion. The odometry readings are used in this step as metric information, which allows to remove the natural ambiguity produced by measurements from a single camera. The main problem of using odometry as a metric reference for reconstruction comes from the accumulative error it presents, which is a very well-known problem in dead-reckoning tasks.

The initialization algorithm consists of a Maximum Likelihood (M.L.) estimation of the vector , which does not estimate the initialization value itself but its equivalent Gaussian distribution by using a “Bundle Adjustment” method (See Figure 3). The M.L. approach (see [16, 17] f or more details) allows to properly tackle the growing drift present in the odometry estimation by giving fair less importance or weight to those instants where the odometry is expected to be more uncertain.

Figure 3.

Maximum likelihood initialization by means of reducing the residual of the expected measurements (red diamonds) and the measured image trajectories (blue circles).

In the following sections, the initialization algorithm is explained in detail.

3.1. Odometry Estimation

A set of odometry readings, U1, ⋯, UK are collected over a set of K consecutive time samples k = 1, ⋯, K, which corresponds to the initialization trajectory performed by the robot. Using a motion model g given initial position X0, an estimation of the robot’s pose in the whole initialization sequence is obtained as follows:

| (10) |

where we recall that Xk and Uk denote Gaussian processes. Using expression (10), and by propagating the statistical processes through function g, the joint distribution p(X1, ⋯, XK|X0) can be obtained. The propagation of statistical processes through non-linear functions is in general a very complex task, as it requires to solve integral equations. However in this paper we will follow a first order approximation of the non-linear functions centered in the mean of the process (see [20] for more details). Using this technique the Gaussian processes Xk and Uk can be iteratively propagated through function g at the cost of being an approximation. This paper shows that despite being an approximation this approach end in a good estimation of the initialization vector.

By denoting as X to the joint vector containing all poses , the joint distribution of all poses, given the initial state X0, p(X|X0) is approximated as Gaussian p.d.f. with mean X̂ and covariance matrix ∑X.

3.2. Image Measurements

In this step we describe how to collect the position in the image plane of different points from the robot’s structure during the initialization trajectory. We base our method in the work [15] where the SIFT descriptor is introduced. The process used in the initialization is composed of two steps:

-

Feature-based background subtraction.

We describe the static background of the scene using a single image, from which a set of features, and its correspondent SIFT descriptors are obtained. The sets 𝒟b and b are our feature-based background model.

Given an input image, namely Ik, we find the sets 𝒟k and k. We consider that a feature , belongs to the background if we can find a feature , such that and . This method although simple shows to be very effective and robust in real environments (See Figure 4).

-

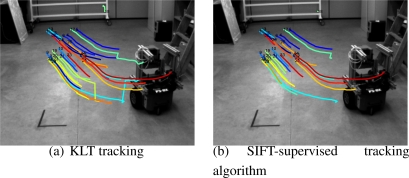

Supervised tracking algorithm.

Given the first image of the initialization trajectory, namely I1, we obtain the set of N features 1, once they are cleaned by the aforementioned background subtraction method. We propose a method to track them in the whole initialization trajectory.

By only using SIFT descriptors and its tracking in consecutive frames does not produce stable tracks, specially when dealing with highly irregular objects where many of the features are due to corners. We thus propose to use a classical feature tracking approach based on the Kanade–Lucas–Tomasi (KLT) algorithm [14]. To avoid degeneracy in the tracks, which is a very common problem in those kind of trackers, we use the SIFT descriptors to remove those segments of the tracks that clearly do not correspond to the feature tracked. This can be done easily by checking distance between the descriptors in the track. The threshold used must be chosen experimentally so that it does not eliminate useful parts of the tracks. In Figure 5a we can see the tracks obtained by the KLT tracker without degeneracy supervision. In Figure 5b the automatically removed segments are displayed.

Figure 4.

Feature-based background subtraction method.

Figure 5.

Comparison between the KLT tracker and the SIFT-supervised KLT version.

3.3. Likelihood Function

Using the feature-based algorithm proposed before a set of measurements in the whole trajectory, Y1, ⋯, YK, is obtained, where each vector Yk contains the projection of N points from robot’s structure at time sample k. The set of N points, M1, ⋯, MN, jointly with the initial pose X0, represent the initialization unknowns. As the robot’s motion does not depend of its structure, the following statistical independence is true:

| (11) |

If all trajectories are packed into vector YL, the following function put in relation YL with the distribution of expression (11):

| (12) |

where Vk represents the uncertainty in image detection. Using distribution showed in (11), and propagating statistics through function (12), the following likelihood distribution is obtained:

| (13) |

The likelihood function (13) is represented by a Gaussian distribution using a first order approximation of (12). It is thus defined by a mean ŶL and a covariance matrix ∑L:

| (14) |

3.4. Maximum Likelihood Approach

The likelihood function (14), parametrized by its covariance matrix ∑L and its mean ŶL, is dependent of the conditional parameters and unknown values .

The “Maximum Likelihood” estimation consists of finding the values for that maximize the likelihood function:

| (15) |

In its Gaussian approximation and by taking logarithms, it is converted into the following minimization problem:

| (16) |

where YL is the realization of the process (i.e., the set of measurements from the image) and ŶL are the expected measurements given a value of the parameters X0, M1, ⋯, MN.

The configuration of ∑L is ruled by the expected uncertainty in the measurements and the statistical relation between them. Usually all cross-correlated terms of ∑L are non-zero, which has an important effect in the sparsity of the Hessian used inside the optimization algorithm and consequently in the computational complexity of the problem (see [21] for more details).

The covariance matrix ∑L can be approximated assuming either independence between time samples (discarding cross-correlation terms between time indexes) or total independence between time and each measurement (discarding all cross-correlation terms except the 2×2 boxes belonging to a single measurement). In Table 1, the different cost functions are shown depending on the discarded terms of ∑L. The different approximations of ∑L have a direct impact in the accuracy and the reconstruction error, and the results will be shown in Section 5.

Table 1.

Different cost functions depending on the approximations of ∑L.

| Type of Approximation | cost function |

|---|---|

| Complete Correlated matrix ∑L (M.C.) | |

| 2N × 2N block approximation of ∑L (M.B.) | |

| 2 × 2 block approximation of ∑L (M.D.) | |

| Identity approximation of ∑L (M.I.) |

Intuitively, if ∑L results to be a identity matrix, the cost function is reduced to a simple image residual minimization extensively used in Bundle Adjustment techniques, where in principle all cost differences have equal importance:

| (17) |

The result of minimizing Equation (16) instead of the usual (17) show significant improvements in the reconstruction error. In Section 5. a comparison is shown between the initialization accuracy under the different assumptions of the matrix ∑L, from its diagonal version to the complete correlated form. The minimum of (16) is obtained using the Levenberg–Mardquardt [17] iterative optimization method.

We suppose that during the measurement step there is a low probability of encountering outliers in the features. This argument can be very optimistic in some real configurations where more objects appear in the scene and produce occlusions or false matchings. For those cases, all cost functions presented in this section can be modified to be robust against outliers by using M-Estimators. We refer the reader to [17] for more details.

3.5. Initialization before Optimization

The use of an iterative optimization method for obtaining the minimum of (16) requires a setup value from which to start iterating. An initial estimation of close to its correct value reduces the probability to fall in a local extrema of the cost function.

The method proposed in this paper to give an initial value for consists of a non-iterative and exact method, which gives directly an accurate solution for in absence of noise in odometry values. This method is based on the assumption that the robot moves in a plane and thus only the angle over its Z axis is needed. As explained below, this assumption allows to solve the problem with a rank deficient Linear Least Squares approximation, which is solved using the Singular Value Decomposition of the system’s matrix and imposing a constraint that ensures a valid rotation. Its development is briefly introduced next.

For a point Mi of robot’s model and at time k of the initialization trajectory, the image measurement results from the following projection:

| (18) |

where the matrix and vector represent the rotation and position of the robot in the floor plane given by the odometry supposing that . The rotation matrix R0 and the offset T0 correspond with the initial pose in form of rigid transformation:

| (19) |

where R0 is a non-linear function of the orientation angle. The expression (18) depends non-linearly of vector and so a new parametrization is proposed jointly with a transformation which removes the projective parameter .

The point Mi is replaced by the rotated , removing thus the product between unknowns. The orientation angle in the pose is substituted by parameters a = cos(α0) and b = sin(α0), with the constraint a2 + b2 = 1. Using the new parameterization the expression (18) is transformed in the following:

| (20) |

with :

| (21) |

where y are the two first coordinates of .

The new unknown vector, which correspond to the new parametrization of , is:

| (22) |

The expression (20) is decomposed in the following elements:

| (23) |

where , y are linear in terms of Φ but not in terms of .

| (24) |

The projective scale is removed from the transformation by using vector product properties:

| (25) |

where two lineally independent equations are obtained for Φ.

| (26) |

Using all measurements inside YL, a lineal system of equations is obtained in terms of Φ:

| (27) |

It is straightforward to show that system of (27) has a single-parameter family of solutions. If Φ0 is a possible solution, then Φ0 + ψΔ is a solution for any ψ ∈ R, with Δ:

| (28) |

In fact, if Δ is normalized, it matches up with the eigen-vector associated to the zero eigenvalue of matrix AT A.

Using the constraint that a2 + b2 = 1, and the singular value decomposition of matrix A, an exact solution of system (20) is obtained.

Once Φ is available, the solution is obtained by inverting the parametrization:

| (29) |

This method, although exact, is prone to error due to odometry noise and does not benefit from the Maximum Likelihood metric. However it is valid as a method to give an initial value for before using the iterative approach.

3.6. Degenerate Configurations

The kind of trajectory performed by the robot during initialization has direct influence in the solution of . There are three kinds of movements that yields degenerate solutions:

Straight motion: there is no information about the center of rotation of the robot and thus the pose has multiple solutions.

- Rotational motion around robot axis: the following one-parameter (i.e., n) family of solutions gives identical measurements in the image plane:

with .(30) (31) - Circular trajectory: under a purely circular trajectory the following one-parameter family of initialization vectors gives identical measurements in the image plane:

(32) (33)

In practical cases it has been proved to be effective enough to combine straight trajectories with circular motion to avoid degeneracies in the solution of .

3.7. Obtaining the Gaussian Equivalent of the Solution

Once the minimum of (16) is reached we suppose that the resulting value of is the mean of the distribution . This section describes how to also obtain the covariance matrix.

The covariance matrix of the optimized parameters is easily obtained by using a local approximation of the term YL − ŶL in the vicinity of the minimum using the following expression:

| (34) |

where J is the Jacobian matrix of Ŷ with respect to parameters . The Jacobian is available from the optimization method, in which is used to compute the iteration steps.

4. Online Robot Localization

In this section the solution to given the last pose information is derived. The fact that last frame information is available and the assumption of soft motion between frames allows to greatly simplify the problem.

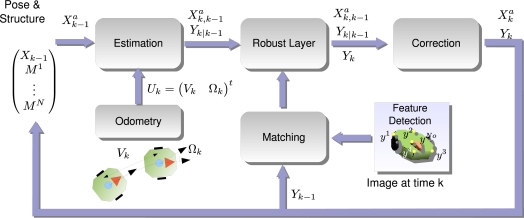

A special emphasis is given to the fact that any process handled by the system is considered a random entity, in fact a Gaussian distribution defined at each case by its mean vector and covariance matrix. The problem of obtaining pose and structure, encoded in given image observations Yk and the previous time estimation is viewed from the point of view of statistical inference, which means searching for the posterior probability distribution . That distribution gives the best estimation of given all the past knowledge available. In Figure 6, a brief overview of the online method is presented.

Figure 6.

Overview of the online algorithm.

The online approach is divided into three steps:

Estimation Step: using the previous pose distribution , defined by its mean and covariance matrix , and the motion model ga, a Gaussian distribution which infers the next state is given .

Robust Layer: the correspondence between image measurements and the 3D model of the robot easily fails, so a number of wrongly correspondences or outliers pollute the measurement vector Yk. Using a robust algorithm and the information contained in the state vector, the outliers are discarded before the next step.

Correction Step: using an outlier-free measurement vector, we are confident to use all the information available to obtain the target posterior distribution

In all three steps it is required to propagate statistic processes over non-linear functions (f and h). As was stated in the previous section we show how to face the problem using first order expansions as it offers more compactness and is more readable. As a consequence the “Estimation” and “Correction” steps are solved using the so called Extended Kalman Filter (EKF) equations, which have been already implemented in problems of similar complexity such as visual Simultaneous Localization and Mapping (SLAM) [5].

4.1. Estimation Step

The estimation step uses the motion models available to infer the next pose of the robot which implies an increment in uncertainty. Starting from the last pose distribution , the motion model ga and the noise included in odometry values, the following update is obtained:

| (35) |

where and are the mean and covariance matrix of distribution:

The matrices JX and JU are the first derivatives of the function ga with respect to and Uk respectively. Usually JX in odometry systems is the identity, therefore, at this step, the covariance matrix results to be bigger in terms of eigenvalues, which means uncertainty.

4.2. Correction Step

The correction step removes the added uncertainty in the estimation by using image measurements. It passes from the distribution to the target distribution , which includes the last measurement.

Using the estimation shown in (35), and knowing the correspondence between measurements with the camera and structure point of the state vector, the estimated measurement is given:

| (36) |

| (37) |

| (38) |

where Jh is the Jacobian matrix of the function ha with respect to and ∑V is block diagonal matrix with ∑v on each block. Function ha performs the projection in the image plane of the camera of all visible points that form up the measurement vector Yk. The correction step itself is a linear correction of and by means of the Kalman gain KG:

| (39) |

| (40) |

| (41) |

As it is stated in (41) the resulting is reduced compared to which means that after the correction step, the uncertainty is “smaller”.

4.3. Robust Layer

The robust layer has the objective of removing bad measurements from Yk to avoid inconsistent updates of in the correction step. In this paper we propose to include the Random Sample Consensus (RANSAC) algorithm [22] between the estimation and correction step of the filter. The general idea is to found among the measured data Yk a set which agrees in the pose Xk, using the 3D model obtained in .

The interest of applying RANSAC in a sequential update approach resides on several reasons: firstly it allows to efficiently discard outliers from Yk preventing algorithm’s degeneracy, which happens even if the motion model is accurate. Secondly, compared to online robust approaches, where a robust cost function is optimized, RANSAC allows not to break the Kalman filter approach, as it only cleans the measurement vector of outliers. Furthermore we have observed experimentally that the RANSAC algorithm can be very fast between iterations, as only a few outliers are inside the data. (We use the RANSAC implementation described in [17], which implements a dynamical computation of the outlier probability).

The RANSAC method proposed in the commented framework obtains the consensus pose Xk from the set of measurements Yk and the 3D model available in using the algorithm presented in [18]. For a robot moving in a plane, as it is the case with the mobile robot considered in this paper, it is enough to use a minimum of 2 correspondences between the model and the measurement which makes the RANSAC very fast for removing outliers.

4.4. Image Measurements

Contrary to the initialization case, in this step we have an accurate prediction of the tracked points in the image plane at each time instant, namely vector Yk|k−1. Using such prediction we can easily match the 3D points in the state vector with measurements taken in a measurement set k, using the SIFT detector applied to current image Ik. The matching is done in terms of distance in the image plane. Let a feature inside vector Yk|k−1 and a candidate obtained using SIFT method. We conclude that they are matched if , where ‖M states for the Mahalanobis distance using the covariance ∑yk|k−1 computed from the matrix ∑Yk|k−1. The Mahalanobis distance allows to take into account the uncertainty predicted for and also helps to select a threshold τmax with a probabilistic criterion.

5. Results

This section describes the experimental setup developed to support the theoretical algorithms proposed in this paper. The experiments are divided in those performed over synthetic data and those run in a real implementation of the Intelligent Space in the University of Alcala (ISPACE-UAH) [23]. In both kind of experiments the same camera parameters are used, derived from the real device used in its real placement. The single camera consists of a CCD based sensor with resolution of 640 × 480 pixels and a physical size of 1/2 (around 8 mm diagonal). The optical system is chosen with a focal length of 6.5 mm which gives around 45° of Field of View (FOV). The camera is connected to a processing and acquisition node through a IEEE1394 port, which support 15 fps in RGB image format acquisition. The intrinsic parameters are the following:

| (42) |

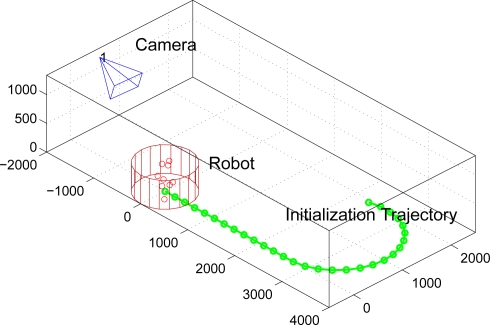

The camera is placed with respect a global coordinate origin, as it is displayed in Figure 7. Camera calibration is performed previously to the localization task, using checkerboards as calibration patterns and the “Matlab Calibration Toolbox” implemented by [24]. The distortion parameters of the camera are not considered in this case. As it can be shown in Figure 7, the camera is placed in oblique angle, which is specially useful for covering large areas with less FOV requirements (less distortion) compared to overhead configurations.

Figure 7.

Geometric distribution of the camera and robot’s trajectory.

The robotic platform is connected to the same processing node controlling the camera by means of a wireless network. The camera acquisition and the odometry values obtained from the robot are synchronized. The control loop of the robot is internal, and it is prepared to follow position landmarks based on odometry feedback at faster sampling frequency than the image localization system (15 fps). Therefore, for each image acquisition cycle, the odometry system obtain several readings that are used by the internal loop control. In this paper the localization information provided by the cameras is not included in the control loop of the robot.

5.1. Simulated Data

In this experiment the robot structure is simulated with a set of N = 10 points distributed randomly inside a cylinder with radius R = 0.5m and height h = 1m. The odometry system is supposed to have an uncertainty and in linear and angular speed respectively. The initialization trajectory is shown in Figure 8. The image measurements are considered polluted with a Gaussian process of , independently of each measurement and image coordinate.

Figure 8.

Initialization trajectory of the robot in the experiment based on synthetic data.

To compare the performance of the initialization method proposed in Section 3., the following error magnitudes are described:

| (43) |

where T0,gth, α0,gth and correspond to the ground truth values of the initialization vector . The following two experiments are proposed:

-

Initialization errors in function of the odometry error. In this experiment the value of and are multiplied by the following multiplicative factor:

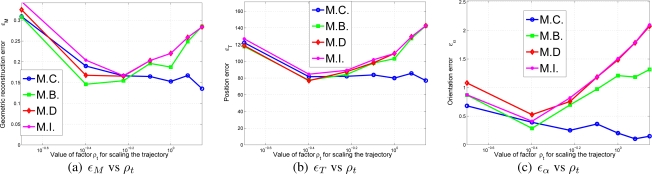

(44) The different errors of Equation 43 can be viewed in Figure 9a–c in terms of ρ. As it can be observed in the results, the M.C. (Complete correlated matrix ∑L) method outperforms the rest of approximations of ∑L, specially the full diagonal method M.I., which means that the statistical modelling proposed in this paper is effective.

-

Initialization errors in function of the trajectory length. The trajectory used in the experiment and displayed in Figure 8 is uniformly scaled by parameter ρt,

so that it can be guessed the relationship between trajectory length and initialization errors. In Figure 10 the initialization errors are displayed versus ρt.(45) In light of the results shown in Figure 10a–c, the M.C. method is capable of reducing the error no matter how large is the trajectory chosen. However, in the rest of the approximations of ∑L there is an optimal point where the initialization errors are minimum. This results make sense, as without statistical modelling large trajectories contain accumulative errors which usually affects the final solution of .

Figure 9.

Experiment showing the different initialization errors in function of the amount of error in odometry readings.

Figure 10.

Experiment showing the different initialization errors in function of the trajectory length performed by the robot.

Both experiments clearly manifest that the complete matrix ∑L, with all its cross-correlated terms (M.C.), outperforms the rest of proposals, especially when it is compared to the case where ∑L is the identity matrix (M.I.), which means no statistical modeling.

5.2. Real Data

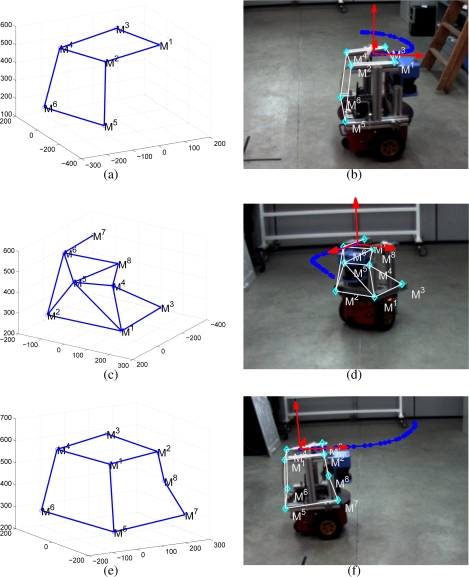

The initialization experiment proposed in this paper consists of a robot performing a short trajectory from which its 3D model and initial position is obtained using the results of Section 3.. We present a comparison of the initialization results using three different trajectories. Each one of the trajectories is displayed in Figure 11 as a colored interval of the whole trajectory performed by the robot in the experiment.

Figure 11.

Intervals of robot’s trajectory used for initialization.

The results of the initialization method on each of the 3 trajectories selected is shown in Figure 12. Depending on the trajectory used, the features viewed by the camera vary and thus the corresponding initialized 3D model. As it can be seen in Figure 12, the 3D model is accurate and its projection matches with points in the robot’s structure in all cases. It must be remarked that on each case, the position obtained as a result of the initialization (i.e., X0) corresponds to the first position of each interval.

Figure 12.

Single camera initialization results. On each row it is shown the resulting reconstruction and its projection in the image plane of the camera.

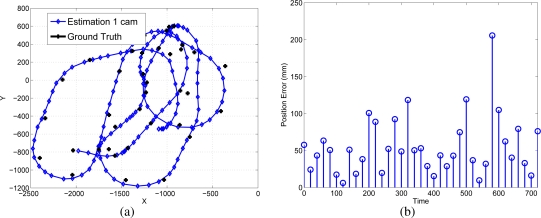

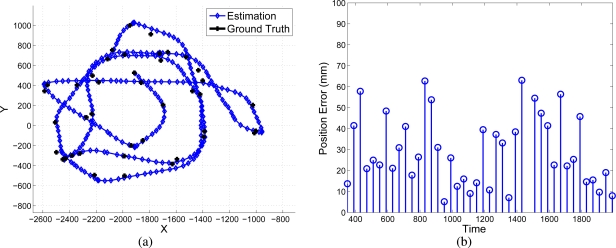

The sequential algorithm is tested using the whole trajectory shown in Figure 11. The initial pose and 3D model are the result of the initialization results shown in Figure 12e,f. The estimated position of the robot, compared to a “ground truth” measurement, is presented in Figure 13a,b.

Figure 13.

Online robot’s pose compared with the “ground-truth”.

The ground truth is obtained by means of a manually measured 3D model of the robot. The model is composed of 3D points, that are easily recognized by a human observer. By manually clicking points of the 3D model in the image plane, and by using the method proposed in [18], the reference pose of the robot is obtained in some of the frames of the experiment.

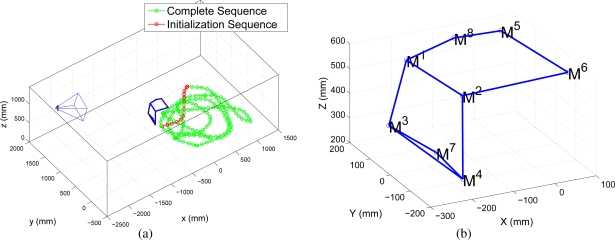

Another experiment is proposed to test the online algorithm with occlusions and a larger path followed by the robot. In Figure 14a it is shown the geometric placement of the camera and in Figure 14b it is shown the geometric model obtained during initialization.

Figure 14.

Scene geometry and 3D model obtained during initialization.

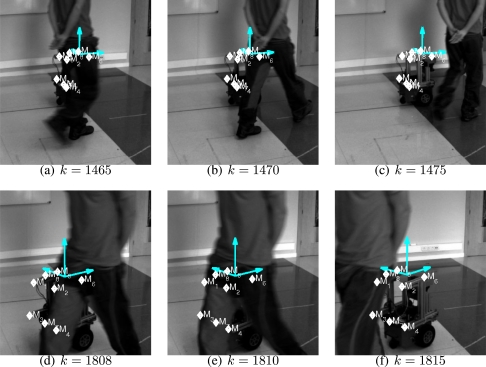

The resulting localization error is shown in Figure 15b. In Figure 16 it is shown several frames, indexed by the time sample number k, presenting hard occlusions between the camera and the robot without losing the tracking. The RANSAC method used in the Kalman loop avoid erroneous matches in the occluded parts of the object. Besides, in those frames with completely occluded features, only the estimation step of the Kalman filter is done, which is accurate enough for short periods of time.

Figure 15.

Localization results in an experiment with occlusions.

Figure 16.

Occlusions and its influence in the localization accuracy.

In both sequential experiments the 3D model is composed of 8 to 10 points and no extra geometrical information is introduced in the state vector. As a future line of study a simultaneous reconstruction and localization approach can be adopted to introduce online extra 3D points in the state vector as the robot is tracked. A very similar approach is done in the Simultaneous Localization and Mapping (SLAM) problem, and some of their solutions [5] are perfectly applicable to the problem assessed in this paper.

6. Conclusions

This paper has presented a complete localization system for mobile robots inside intelligent environments with a single external camera. The objectives of obtaining the pose of the robot based on its natural appearance has been tackled in the paper using a reconstruction approach followed by a sequential localization approach.

The main contributions of this paper are summarized in the following list:

The initialization step provides a non-supervised method for obtaining the initial pose and structure of the robot previously to its sequential localization. A Maximum Likelihood cost function is proposed, which obtains the pose and geometry given a trajectory performed by the robot. The proposal of this paper allows to compensate for the odometry drift in the solution. Also an exact initialization method has been proposed and the degenerate configurations have been identified theoretically.

The online approach of the algorithm obtains the robot’s pose by using a sequential Bayesian inference approach. A robust step, based on the RANSAC algorithm, is proposed to clean the measurement vector out of outliers.

The results show that the proposed method is suitable to be used in real environments. The accuracy and non-drifting nature of the pose estimation have been also evaluated in a real environment.

The future research must be oriented to scale the problem for large configurations of non-overlapped cameras and multiple robots, where extra problems arise, such as to automatically detect the transitions between cameras and online refinement of the geometric models. It is important from our point of view to, in the future, combine the information given by the system proposed in this paper with information sensed onboard the robots using cameras. This approach allows to jointly build large maps attached to information given by the cameras, so that robots can be localized and controlled to perform a complex task.

Acknowledgments

This work was supported by the Ministry of Science and Technology under RESELAI project (references TIN2006-14896-C02-01) and also by the Spanish Ministry of Science and Innovation (MICINN) under the VISNU project (REF-TIN2009-08984).

References

- 1.Lee J., Hashimoto H. Intelligent space concept and contents. Advanced Robotics. 2002;16:265–280. [Google Scholar]

- 2.Aicon 3D Available online: www.aicon.de (accessed January 2010).

- 3.ViconPeak Available Online: www.vicon.com (accessed January 2010).

- 4.Se S., Lowe D., Little J. Vision-based global localization and mapping for mobile robots. IEEE Trans. Robotics Automat. 2005;21:364–375. [Google Scholar]

- 5.Davison A.J., Murray D.W. Simultaneous Localization and Map-Building Using Active Vision. IEEE Trans. Patt. Anal. 2002;24:865–880. [Google Scholar]

- 6.Hada Y., Hemeldan E., Takase K., Gakuhari H. Trajectory tracking control of a nonholonomic mobile robot using iGPS and odometry. Proceedings of IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems; Tokyo, Japan. 2003. pp. 51–57. [Google Scholar]

- 7.Fernandez I., Mazo M., Lazaro J., Pizarro D., Santiso E., Martin P., Losada C. Guidance of a mobile robot using an array of static cameras located in the environment. Auton. Robots. 2007;23:305–324. [Google Scholar]

- 8.Morioka K., Mao X., Hashimoto H. Global color model based object matching in the multi-camera environment. Proceedings of IEEE /RSJ International Conference on Intelligent Robots and Systems; Beijing, China. 2006. pp. 2644–2649. [Google Scholar]

- 9.Chung J., Kim N., Kim J., Park C.M. POSTRACK: a low cost real-time motion tracking system for VR application. Proceedings of Seventh International Conference on Virtual Systems and Multimedia; Berkeley, CA, USA. 2001. pp. 383–392. [Google Scholar]

- 10.Sogo T., Ishiguro H., Ishida T. Acquisition of qualitative spatial representation by visual observation. Proceedings of International Joint Conference on Artificial Intelligence; Stockholm, Sweden. 1999. [Google Scholar]

- 11.Kruse E., Wahl F.M. Camera-based observation of obstacle motions to derive statistical data for mobile robot motion planning. Proceedings of IEEE International Conference on Robotics and Automation; Leuven, Belgium. 1998. pp. 662–667. [Google Scholar]

- 12.Hoover A., Olsen B.D. Sensor network perception for mobile robotics. Proceedings of IEEE International Conference on Robotics and Automation; Krakow, Poland. 2000. pp. 342–347. [Google Scholar]

- 13.Steinhaus P., Ehrenmann M., Dillmann R. MEPHISTO. A Modular and extensible path planning system using observation. Lect. Notes Comput. Sci. 1999;1542:361–375. [Google Scholar]

- 14.Shi J., Tomasi C. Good features to track. Proceedings of IEEE Conference on Computer Vision and Pattern Recognition; Seattle, WA, USA. 1994. pp. 593–600. [Google Scholar]

- 15.Lowe D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vision. 2004;60:91–110. [Google Scholar]

- 16.Triggs B., McLauchlan P., Hartley R., Fitzgibbon A. Bundle adjustment–a modern synthesis. Lect. Notes Comput. Sci. 1999;1883:298–372. [Google Scholar]

- 17.Hartley R., Zisserman A. Multiple View Geometry in Computer Vision. 2nd ed. Cambridge University Press; Cambridge, UK: 2003. [Google Scholar]

- 18.Moreno-Noguer F., Lepetit V., Fua P. Accurate non-iterative o (n) solution to the PnP problem. Proceedings of IEEE International Conference on Computer Vision; Rio de Janeiro, Brasil. 2007. pp. 1–8. [Google Scholar]

- 19.Schweighofer G., Pinz A. Globally optimal o (n) solution to the pnp problem for general camera models. Proceedings of BMVC; Leeds, UK. 2008. [Google Scholar]

- 20.Van der Merwe R., de Freitas N., Doucet A., Wan E. The Unscented Particle Filter. Engineering Department of Cambridge University; Cambridge, UK: 2000. Technical Report CUED/F-INFENG/TR380; [Google Scholar]

- 21.Pizarro D., Mazo M., Santiso E., Hashimoto H. Springer Tracts in Advanced Robotics; Heidelberg, Germany: 2008. Mobile robot geometry initialization from single camera; pp. 93–102. Field and Service Robotics; [Google Scholar]

- 22.Fischler M.A., Bolles R.C. Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography. Comm. Assoc. Comp. Match. 1981;24:381–395. [Google Scholar]

- 23.GEINTRA Group of Electronic Engineering Applied to Intelligent Spaces and Transport. Available online: http://www.geintra-uah.org/en (accessed December 2009).

- 24.Bouguet J.Y. Matlab Calibration Toolbox. Available online: http://www.vision.caltech.edu/bouguetj/calib-doc (accessed December 2008).