Abstract

When thinking about ethics, technology is often only mentioned as the source of our problems, not as a potential solution to our moral dilemmas. When thinking about technology, ethics is often only mentioned as a constraint on developments, not as a source and spring of innovation. In this paper, we argue that ethics can be the source of technological development rather than just a constraint, and that technological progress can create moral progress rather than just moral problems. We show this by an analysis of how technology can contribute to the solution of so-called moral overload or moral dilemmas. Such dilemmas typically create a moral residue that is the basis of a second-order principle that tells us to reshape the world so that we can meet all our moral obligations. We can do so, among other things, through guided technological innovation.

Keywords: Moral overload, Engineering, Technological progress

Introduction

Engineers are often confronted with moral dilemmas in their design work because they are presented with conflicting (value) requirements (cf. Van de Poel 2009). They are supposed to accommodate, for example, both safety and efficiency, security and privacy, accountability and confidentiality. The standard reaction to moral dilemmas is to try to weigh up the different moral considerations and establish which values are more important for the engineering task at hand, and to think about trade-offs or justifications for giving priority to one of the values at play. It seems only natural to look for moral solutions to moral problems, arrived at by moral means. Sometimes this is the only thing we can do. Sometimes, however, our moral dilemmas are amenable to a technical solution. We tend to forget that, since a moral dilemma is constituted by a situation in the world which does not allow us to realize all our moral obligations in that situation at the same time, solutions to a dilemma may also be found by changing the situation in such a way that we can satisfy all our value commitments. We argue here that some moral dilemmas may very well have engineering solutions and that certain types of moral dilemmas can be tackled by means of technical innovations. Our analysis draws attention to a special feature of the responsibility of engineers, namely the responsibility to prevent situations which are morally dilemmatic and which must inevitably lead to suboptimal solutions, or to compromises and trade-offs, from a moral point of view. We start our analysis from a familiar place: the analysis of moral dilemmas and the problem of moral overload.

We are repeatedly confronted by situations in which we cannot satisfy all the things that are morally required of us. Sometimes our moral principles and value commitments simply cannot all be satisfied at the same time, given the way the world is. The result is that we are morally ‘overloaded’. These situations have been extensively studied in moral philosophy, rational choice theory and welfare economics and are referred to as ‘hard choices’, ‘moral dilemmas’ or ‘conflicting preferences’ (e.g. Kuran 1998; Van Fraassen 1970; Levi 1986). The problem that has received most of the attention is the question of how we ought to decide in these dilemmatic cases between the various options and alternatives open to the agent. There is, however, another aspect that has received far less attention and that is sometimes referred to as “the moral residue”, i.e. the moral emotions and psychological tensions that are associated with the things that were not done, the road not travelled, the moral option forgone. A moral residue provides those who are exposed to it with an incentive to avoid moral overload in the future. It can therefore function as a motor for improvement, and in fact as a motor for technological innovation. If an instance of technological innovation successfully reduces moral overload, it constitutes, so we will argue, an instance of moral progress.

Moral Overload and Moral Dilemmas

Timur Kuran (1998) has referred to situations in which we have different value commitments by which we cannot live simultaneously as situations of “moral overload”. The basic idea of moral overload is that an agent is confronted with a choice situation in which different obligations apply but in which it is not possible to fulfil all these obligations simultaneously.

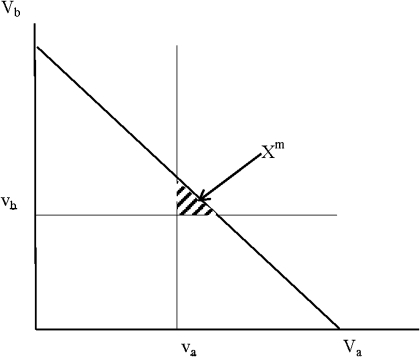

Kuran provides the following more detailed definition of moral overload. An agent A has to make a particular decision in a choice situation in which A can only choose one option. The options she can choose from together form the opportunity set X, which is defined by physical and practical constraints. The agent has a number of values Va … Vx; each of these values requires her to avoid a subset of the options. More specifically, the values instruct the agent to keep $v_n \geq \bar{v}_n$, where $v_n$ is the actual realisation of value $V_n$ by an option and $\bar{v}_n$ a threshold that $V_n$ should at least meet. The set of feasible options that meet all these value thresholds forms the moral opportunity set Xm (see Fig. 1).

Fig. 1.

The moral opportunity set Xm. Under certain conditions Xm may be empty, so creating moral overload. (The figure is based on Fig. 1 in Kuran 1998:235)

Now in some choice situations, the moral opportunity set is empty: the agent cannot live by her values. This situation is defined as moral overload. In a situation of moral overload the agent is not only confronted with a difficult choice problem, she is also forced to do something that violates her values; moral overload therefore results in a feeling of moral guilt or moral regret.
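Kuran’s definition can be made concrete with a small sketch. Everything in it is hypothetical and chosen only for illustration: the option names, the two values, their scores and their thresholds. Each value is represented as a score per option, and an option belongs to the moral opportunity set Xm only if it meets every value threshold; moral overload is then simply the case in which Xm is empty.

```python
from dataclasses import dataclass

@dataclass
class Value:
    name: str
    score: dict[str, float]   # realisation v_n of this value by each option
    threshold: float          # minimum level the value should at least meet

def moral_opportunity_set(options, values):
    """Return Xm: the feasible options that meet every value threshold."""
    return {x for x in options
            if all(v.score[x] >= v.threshold for v in values)}

def is_moral_overload(options, values):
    """Kuran's moral overload: the moral opportunity set is empty."""
    return not moral_opportunity_set(options, values)

# Hypothetical design choice with two conflicting values (cf. safety vs efficiency).
X = {"design_1", "design_2", "design_3"}
safety = Value("safety", {"design_1": 0.9, "design_2": 0.6, "design_3": 0.3}, 0.7)
efficiency = Value("efficiency", {"design_1": 0.2, "design_2": 0.5, "design_3": 0.9}, 0.7)

print(moral_opportunity_set(X, [safety, efficiency]))  # set()
print(is_moral_overload(X, [safety, efficiency]))      # True
```

Dropping either value from the list makes Xm non-empty again (with safety alone it is {"design_1"}), which shows that the overload arises from the combination of commitments rather than from any single one.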

The notion of moral overload is quite similar to what others have described as a moral dilemma. The most basic definition of a moral dilemma is the following (Williams 1973:180):

The agent ought to do a

The agent ought to do b

The agent cannot do a and b

One available course of action meets one obligation but not the other. Conversely, the agent can fulfil the second obligation, but not without violating the first. Conflicting moral obligations thus create moral dilemmas. The nature, structure and logic of moral dilemmas have been extensively studied and discussed by, among others, Bas van Fraassen (1970), Bernard Williams (1973) and Ruth Marcus (1980).

Williams’s definition of a moral dilemma is somewhat different from Kuran’s definition of moral overload, because it is framed in terms of ‘oughts’ instead of ‘values’ and because it is defined over two options, a and b, instead of over a larger set of options, but the basic deontic structure is obviously the same. For our current purpose, Levi’s (1986:5) definition of what he calls ‘moral struggle’ is particularly relevant:

The agent endorses one or more value commitments P1, P2, …, Pn.

Value commitment Pi stipulates that in contexts of deliberation of type Ti, the evaluation of feasible options should satisfy constraint Ci.

The specific decision problem being addressed is recognized to be of each of the types T1, T2, …, Tn so that all constraints C1, C2, …, Cn are supposed to apply.

The decision problem currently faced is one where the constraints C1, C2, …, Cn cannot all be jointly satisfied.

Levi sees his definition of moral struggle, which is almost identical to Kuran’s definition of moral overload, as a more general characterisation of moral dilemmas. It is more general not only because it applies to a set of options and a set of value commitments instead of just two options and two ‘oughts’, but also because the notion of value commitment is more general than the notion of ‘ought.’ According to Levi, value commitments may be represented as moral principles, but also as ‘expressions of life goals, personal desires, tastes or professional commitments’ (Levi 1986:5). This suggests that we can also have non-moral overload generated by conflicting non-moral value commitments in a choice situation. We may, for example, have a conflict between a prudential and a moral value, between two prudential values or between two preferences.

Strategies for Dealing With Moral Overload

There are various strategies for dealing with moral overload or moral dilemmas. In this section we will discuss several such strategies. We will see that although these strategies may be more or less adequate in the individual choice situation, in most cases they do not remove what has been called the ‘moral residue.’ Moral residue here refers to the fact that even if we have made a justified choice in the case of moral overload or a moral dilemma, there remains a duty unfulfilled, a value commitment not met.

One way to deal with a moral dilemma is to look for the option that is best all things considered. Although this can be done in different ways, it will usually imply a trade-off among the various relevant value commitments. The basic idea here is that the failure of an option x to meet value commitment Pi with respect to value Vi could be compensated by its better meeting one or more of the other values. Such a strategy reduces the multidimensional decision problem to a one-dimensional decision problem.

Value commitments can, however, not always be traded off. This is sometimes expressed in terms of value incommensurability, i.e. the fact that values cannot be measured on the same scale (Chang 1997). What makes trade-offs impossible is, however, probably not just formal incommensurability, i.e. the lack of a measurement method to compare (degrees of) values on one scale, but rather substantive incommensurability, i.e. the fact that some values resist trade-offs: less of one value cannot be compensated by having more of another (Tetlock 2003; Raz 1986; Baron and Spranca 1997). No money in the world can compensate for the loss of a dear friend. Another way of expressing this idea is that if trade-offs or compensations are tried, there is always some residue that cannot be compensated; it is in fact this residue that creates the feeling of moral guilt or regret that is typical of moral dilemmas and moral overload. This also implies that even if we are justified in believing that one value commitment is more important than another in a morally dilemmatic choice, this does not prevent the occurrence of a moral residue.
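The contrast between a compensatory trade-off and a residue that cannot be compensated can be illustrated with a minimal sketch. The option names, scores, weights and thresholds are hypothetical. A weighted sum is used as one common way of collapsing several value dimensions into one; the text does not commit to any particular aggregation rule. Representing the moral residue as the set of value thresholds that the chosen option still fails to meet is likewise our simplifying assumption, not a formalism taken from Kuran or Tetlock.

```python
# Hypothetical scores of three options on two values (higher is better).
SCORES = {
    "safety":     {"d1": 0.9, "d2": 0.6, "d3": 0.3},
    "efficiency": {"d1": 0.2, "d2": 0.5, "d3": 0.9},
}
WEIGHTS = {"safety": 0.6, "efficiency": 0.4}      # assumed trade-off rates
THRESHOLDS = {"safety": 0.7, "efficiency": 0.7}   # assumed value thresholds

def weighted_score(option, scores, weights):
    """Compensatory aggregation: one number instead of several value dimensions."""
    return sum(weights[v] * scores[v][option] for v in weights)

def best_all_things_considered(options, scores, weights):
    """Option with the highest weighted score."""
    return max(options, key=lambda x: weighted_score(x, scores, weights))

def residue(option, scores, thresholds):
    """Values whose threshold the chosen option still fails to meet."""
    return {v for v, t in thresholds.items() if scores[v][option] < t}

choice = best_all_things_considered(["d1", "d2", "d3"], SCORES, WEIGHTS)
print(choice)                                # d1
print(residue(choice, SCORES, THRESHOLDS))   # {'efficiency'}
```

The aggregation yields a well-defined “best” option, yet the threshold check shows that one value commitment remains unmet: a justified choice does not by itself remove the residue.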

Making value trade-offs is not the only strategy for dealing with moral dilemmas and moral overload. We will focus here on the various strategies, social mechanisms and social institutions that Kuran (1998) discusses. He distinguishes three categories of strategies. The first comprises strategies that allow the agent to lower the threshold for one or more of the value commitments in the particular choice situation while retaining the long-term value commitments. The second category of strategies allows the agent to avoid entering into a choice situation that is characterized by moral overload. The third category is formed by strategies that help to avoid moral overload by reformulating or revising long-term value commitments. All of these strategies can be employed by individuals, but all of them also have an institutional component, i.e. they are made possible or made easier through the existence of certain social institutions that help to alleviate individuals’ value conflicts.

Kuran discusses three strategies of the first category, which allow the agent to lower the threshold for one or more value commitments in the particular choice situation while retaining the long-term value commitments: compensation, casuistry and rationalization. Compensation is often not directly possible in the choice situation, because the relevant values in a situation of moral overload resist trade-offs and are therefore not amenable to direct compensation. However, agents can and often do (as empirical evidence suggests) compensate for a moral loss in one situation by doing more than is required in a subsequent situation. Compensation may then allow agents to live by their values over the course of their lives, even if they cannot live up to their value commitments in every choice situation.1

Kuran describes casuistry, the second strategy, as ‘the use of stratagems to circumvent a value without discarding it formally’ (Kuran 1998:251). The use of such tricks is obviously one of the things that gave casuistry a bad name in the past (cf. Jonsen and Toulmin 1988). It might indeed strike one as wicked to propose this as a strategy for dealing with moral overload. It might nevertheless have some value because it helps to preserve the value commitment for future choice situations without incurring a feeling of guilt in the current choice situation.

In rationalization, the agent tries to rationalize away the conflict between two values. Take the following example. In choosing a means of transport, one may have the prudential value of ‘comfort’ and the moral value of ‘taking care for the environment’. The values conflict because the most comfortable means of transport, the car, pollutes the environment more than, for example, the train. The agent may now rationalize away the conflict by arguing that the train is, after all, more comfortable than the car, for example because you do not have to drive yourself and have time to read. In this way, rationalization not only alleviates the felt tension between the two values, but also affects the choice made. In the example given, the agent in effect subordinated her prudential value to the moral value at play.

The second category of strategies aims at avoiding moral overload. Kuran suggests two strategies for doing so: escape and compartmentalisation. Escape is a strategy in which an agent tries to prevent moral overload by avoiding choices. Compartmentalisation refers to the splitting up of an individual’s life or of society into different contexts to which different values apply. Insofar as compartmentalisation is successful, it avoids the need to choose between two or more values in a specific choice context.

The third category comprises strategies in which the agent revises her value commitments in order to avoid moral overload. Kuran refers to this as ‘moral reconstruction’. Obviously, moral reconstruction only makes sense if an agent is repeatedly unable to live by her value commitments or if there are independent reasons to revise a value commitment, for example because it was mistaken in the first place or has become outdated due to historical developments. In the absence of such independent reasons, moral reconstruction to avoid moral dilemmas is often dubious. As Hansson writes:

More generally, dilemma-avoidance by changes in the code always takes the form of weakening the code and thus making it less demanding. There are other considerations that should have a much more important role than dilemma-avoidance in determining how demanding a moral code should be. In particular, the major function of a moral code is to ensure that other-regarding reasons are given sufficient weight in human choices. The effects of a dilemma per se are effects on the agent’s psychological state, and to let such considerations take precedence is tantamount to an abdication of morality (Hansson 1998:413).

Nevertheless, a milder form of moral reconstruction, not mentioned by Kuran, might sometimes be acceptable. In some cases, it is possible to subsume the conflicting values under a higher-order value. Kantians, for example, tend to believe that all value conflicts can eventually be solved by having recourse to the only value that is unconditionally good, the good will. One need not share this optimism to see that it sometimes makes perfect sense to try to redefine the conflicting values in terms of one higher-order value. A good example is the formulation of the value ‘sustainable development’ in response to the perceived conflict between the value of economic development and the abatement of poverty on the one hand, and environmental care and care for future generations on the other hand. In 1987, sustainable development was defined by the Brundtland Commission of the UN as ‘development that meets the needs of the present without compromising the ability of future generations to meet their own needs’ (WCED 1987).

Although higher-order values, like sustainability, may be useful in deciding how to act in a moral dilemma, they often do not simply dissolve the dilemma. Often the overarching value may refer to, or contain, a range of more specific component values and norms that are conflicting and incommensurable (cf. Chang 1997:16; Richardson 1997:131). This means that even if a justified choice may be made in a dilemmatic situation on the basis of an overarching value, a moral residue, in the sense of a moral value or norm not (fully) met, may still occur.

As we have seen, there are different ways in which people can react to moral overload. Not all responses are, however, equally morally acceptable. Casuistry and rationalisation, for example, may be psychologically functional, but they may well lead to morally unacceptable choices. Trying to avoid entering into a moral dilemma by escape or compartmentalisation may sometimes be morally desirable but is certainly not always morally praiseworthy. In some circumstances, it may also be interpreted as a way of neglecting one’s moral responsibilities. As we have also seen, moral reconstruction, while sometimes adequate, may in other circumstances be unacceptable. Moreover, even if there is a morally justified choice in a dilemmatic situation, this choice as such usually does not remove the moral residue.

The occurrence of a moral residue or moral guilt is thus typical of choice under moral overload. The moral residue or guilt is, however, not just an unfortunate by-product we have to live with; it will also motivate the agent to organize her life in such a way that moral overload is reduced in the future. Marcus (1980) has in fact suggested that we have a second-order duty to avoid moral dilemmas: “One ought to act in such a way that, if one ought to do X and one ought to do Y, then one can do both X and Y.” This principle is regulative; the second-order ‘ought’ does not imply ‘can.’ The principle applies to our individual lives, but also entails a collective responsibility (Marino 2001) to create the circumstances in which we as a society can live by our moral obligations and our moral values. One way in which we can do so is by developing new technologies.

Moral Residues as Motors for Technological Innovation

Ruth Marcus (1980) has put forward the following second-order regulatory principle:

(BF1) One ought to act in such a way that, if one ought to do x and one ought to do y, then one can do both x and y.

To understand this principle, it is useful first to revisit the question whether, and in what sense, ‘ought’ implies ‘can’. If OA (it is obligatory that A), then PA (it is permitted that A), and therefore MA (it is logically possible that A). In this sense, OA → MA is valid. But in many other senses, OA does not entail MA. For example, if M means “economically possible,” or “politically possible,” or “physically possible,” or “biologically possible” or “possible without losing your life,” or “astrologically possible,” or “possible using only your bare hands and no instrument whatsoever,” or “possible with your left thumb,” then OA → MA is invalid. In such cases, it makes sense to say that OA should imply MA:

(1) O(OA → MA).

According to standard deontic logic with a possibility operator, (1) is a theorem if and only if (2) is a theorem (indeed, both (1) and (2) are theorems of that system):

(2) O(OA & OB → M(A & B)).

Formula (2) expresses principle BF1, while (1) expresses the following principle, which seems weaker but in fact has the same force:

(BF2) One ought to act in such a way that if one ought to do x, one can do x.

We use “principle (BF1/BF2)” to refer to the principles (BF1) and (BF2), which can be derived from each other. In cases in which OA & ~MA, there is what Ruth Marcus calls a “moral residue”, because the obligation OA cannot be fulfilled. This may cause anxiety and distress. What can one do in such cases?

One approach in such situations is to try to avoid entering into a moral dilemma or situation of moral overload in the first place. Principle (BF1/BF2), for example, implies that one should not make two promises that cannot be fulfilled simultaneously. More generally, the second category of strategies discussed by Kuran, which includes the strategies of escape and compartmentalisation, is relevant here (see previous section).

However, principle (BF1/BF2) can also be fulfilled by a set of strategies that seems to be missing from Kuran’s overview: strategies that help to avoid moral overload by expanding the opportunity set, i.e. by changing the world in such a way that we can live by all our values. We may refer to this set of strategies as ‘innovation’. Innovation can be institutional or technical in nature. We are here primarily interested in innovation which has its origin in engineering, technology and applied science. The thesis we defend here is that technical innovation and engineering design are important, though often neglected, means for reducing or even avoiding moral overload on a collective level and for dealing with dilemmatic situations and their moral tensions on an individual level. We argue that technical innovation and engineering design sometimes offer genuine ways out of moral mazes and provide opportunities to obviate moral dilemmas and reduce the regret, guilt and moral residues that are inevitably linked to them.

The crucial point is that innovation can make the impossible possible, not in the sense of “logically possible,” of course, but in the sense of “feasible” or “physically realizable.” Given technologies S and T, where S is less advanced than T, it may be the case that ~M_S A & M_T A: A is not possible with technology S but A is possible with technology T. Here M_T A may be explicated as MA & N(MA → T), where N means “necessarily”: it is possible that A, but only in the presence of T. Seen from this perspective, (BF1/BF2) admonishes us to look for more advanced technology in cases in which we cannot fulfill our obligations on the basis of current technology. If N(MA → T) is true, then principle (BF1/BF2) implies O(OA → T) and O(OA → OA & M_T A). In other words, if OA then we should look for technology T such that OA & M_T A. It is in this sense that moral residues in combination with principle (BF1/BF2) can promote technological innovation.
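The first inference in the paragraph above, from O(OA → MA) and N(MA → T) to O(OA → T), can be spelled out step by step. The derivation below is a sketch that rests on two assumptions the text does not state explicitly: that N is a normal modal operator (closed under necessitation and axiom K), and the bridge principle Nφ → Oφ (“what is necessary is obligatory”), which is adopted in many combined alethic-deontic systems but is not asserted here to be part of every system.

```latex
\begin{align*}
&1.\ N(MA \to T) && \text{premise}\\
&2.\ N\big((OA \to MA) \to (OA \to T)\big) && \text{from 1: tautology } (p \to q) \to ((r \to p) \to (r \to q)),\ \text{Nec and K for } N\\
&3.\ O\big((OA \to MA) \to (OA \to T)\big) && \text{from 2, bridge principle } N\varphi \to O\varphi\\
&4.\ O(OA \to MA) \to O(OA \to T) && \text{from 3, axiom K for } O\\
&5.\ O(OA \to MA) && \text{formula (1)}\\
&6.\ O(OA \to T) && \text{from 4 and 5, modus ponens}
\end{align*}
```

Without the bridge principle in step 3, the conclusion would not follow, since N and O would then be unrelated operators.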

We provide the following examples.

(1) Suppose that your new neighbors have invited you for their house-warming party and you feel obliged to attend (OA). But you also have to look after your baby (OB). Suppose also that there is no baby-sitter available. If your actions were limited to those that were available in Ancient Greece you would have a problem because ~M_G(A&B), where G is Greek technology. However, we now have the baby phone. It enables you to take care of your baby during your visit to the neighbors. As a result, M(A&B) is now true and both O(A&B) and (BF1/BF2) can be fulfilled. It is in this way that technology may lead to empowerment. If technology such as the baby phone did not exist, somebody should invent it.

(2) Trade-off between security and privacy. As a society we value privacy (OB), but at the same time we value security and the availability of information about citizens (OA). This tension is exemplified in the debates about the ubiquity of CCTV cameras in public places. Either we install them everywhere and have the desired level of security in that area (A) but give up on our privacy (~B), or, out of respect for privacy, we do not install them everywhere (B) but settle for less security (~A). Respect for our privacy may pull us in the direction of reticence, whereas security pushes us in the direction of making more information about individual citizens available to the outside world. Smart CCTV systems allow us to have our cake and eat it, in the sense that their smart architecture allows us to enjoy the functionality and at the same time realize the constraints on the flow and availability of personal data that respect for privacy requires (M_T(A&B)). These applications are referred to as Privacy Enhancing Technologies (PETs).

(3) Trade-off between economic growth and sustainability. Environmental technology in Germany is among the most advanced in the world. One reason for this is that in Germany the Green Party, from the 1980s onwards, was very influential and articulated the obligation to reconcile economic growth with the protection of the environment. It is only because this tension between desirable production and economic growth (OA) and cherished environmental values (OB) was made explicit that an opportunity was created to find ways in which the two could be reconciled. Environmental technology is exactly the sort of smart technology that changes the world in such a way as to allow us to produce and grow without polluting the environment (M_T(A&B)).

(4) Trade-off between military effectiveness and proportionality. We are sometimes under an obligation to engage in military interventions which satisfy the universally accepted principles of ius cogens or ius ad bellum (OA), while at the same time we foresee that these military operations may cause the death of innocent non-combatants, which we are obliged to avoid (OB). In a particular mission we then find ourselves torn between the two horns of a dilemma; on a collective level we are morally overloaded, since we have two values which we cannot satisfy at the same time, in this case destroying the enemy’s weapons of mass destruction on the one hand and preventing innocent deaths on the other (~M(A&B)). Non-lethal weapons or precision/smart weapons ideally allow us to satisfy both obligations (M_T(A&B)) (cf. Cummings 2006). This example only serves to exhibit the logic of military thinking concerning advanced weapons technology. Whether the envisaged technology really delivers the goods needs to be established independently.

The list of examples of this type is extensible ad lib. For this reason, we propose the following hypothesis: moral residues in combination with principle (BF1/BF2) can—and often do—act as motors of technological progress.

Moral Progress and Technological Innovation

Meeting principle (BF1/BF2) can be described as moral progress because it allows us to better fulfil our moral obligations (Marino 2001). We have shown that technical innovation can be a means to fulfil principle (BF1/BF2). This implies that technological innovation can result in moral progress.

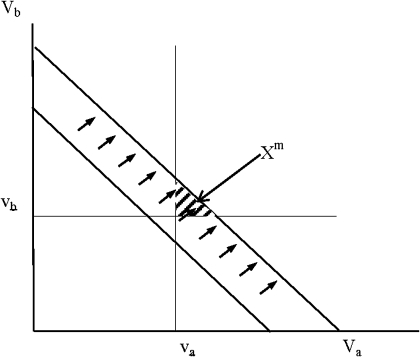

The reason why technical innovation can entail moral progress is that it enlarges the opportunity set. In the examples mentioned, technical innovation moved the boundary of the opportunity set in the upper right direction (see Fig. 2). As a result, the moral opportunity set, which was empty in the case of (moral) overload, became non-empty. Even if the moral opportunity set remains empty, moving the boundary of the opportunity set in the upper right direction reduces the degree of moral overload.
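The geometric picture of Fig. 2 can be sketched numerically. The frontier points, thresholds and shift sizes below are hypothetical. The text gives no metric for the “degree of moral overload”, so measuring it as the smallest total shortfall of any option below the value thresholds is our own simplifying assumption; under it, a value of zero means that the moral opportunity set is non-empty.

```python
# Hypothetical two-value frontier: each option is (v1, v2), the realisations
# of two values; a better score on one value means a worse score on the other.
FRONTIER = [(0.9, 0.1), (0.5, 0.5), (0.1, 0.9)]
THRESHOLDS = (0.6, 0.6)  # minimum levels both values should meet

def shortfall(option, thresholds):
    """Total amount by which an option falls short of the value thresholds."""
    return sum(max(0.0, t - v) for v, t in zip(option, thresholds))

def degree_of_overload(options, thresholds):
    """0.0 if some option meets every threshold (Xm non-empty), else the smallest shortfall."""
    return min(shortfall(x, thresholds) for x in options)

def innovate(options, shift):
    """Hypothetical innovation: move the whole boundary up and to the right."""
    return [tuple(v + shift for v in x) for x in options]

print(round(degree_of_overload(FRONTIER, THRESHOLDS), 3))                  # 0.2
print(round(degree_of_overload(innovate(FRONTIER, 0.05), THRESHOLDS), 3))  # 0.1
print(degree_of_overload(innovate(FRONTIER, 0.2), THRESHOLDS))             # 0.0
```

A small shift halves the overload without removing it; a larger shift makes the moral opportunity set non-empty, mirroring the two cases distinguished in the paragraph above.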

Fig. 2.

By extending the opportunity set, the moral opportunity set Xm may become non-empty

Of course, not all instances of technological innovation entail moral progress. While technical innovation may result in progress in some respects, it may at the same time represent a decline in other important value dimensions. Due to agricultural innovations, grain output in many western countries has significantly increased per area of land cultivated, but it has decreased per unit of energy consumed (Pacey 1983:14). Another reason why technical innovation does not necessarily result in moral progress is that it may result in a ‘technological fix,’ i.e. a technical solution to a problem that is social in nature (Weinberg 1966). Technological fixes are not always undesirable or inadequate, but there is a danger that what is addressed is not the real problem but the problem insofar as it is amenable to technical solutions (see also Sarewitz 1996, especially chapter 8). It can, for example, be argued that world hunger is not primarily a problem of production capacity, which can be enlarged by technical innovation, but rather a problem of the distribution of food, income and land, which is far less amenable to technical solutions.

Despite such reservations, we still think that it can be claimed that technical innovation results in moral progress in those cases in which it brings an improvement in all relevant value dimensions. There is, nevertheless, another possible objection to this view, namely that it assumes a static notion of the relevant value dimensions. It has been argued that technological innovation changes not only the opportunity set but also morality, and thus the value dimensions along which we judge moral progress (Swierstra et al. 2009).

Although it is true that technology can change morality (think of the change in sexual morals due to the availability of contraceptives), we think that technology-induced moral change at the level of fundamental values is the exception rather than the rule. In many cases, we can therefore simply assess moral progress by the standard of current values. Nevertheless, technical innovation may sometimes make new value dimensions relevant that were not considered in the design of a technology. We can think of two reasons why this might occur.

One reason is that technical innovation not only enlarges the range of options but that new options also bring new side-effects and risks. This may introduce new value dimensions that should be considered in the choice situation, and these new value dimensions may create new forms of moral overload. Nuclear energy may help to decrease the emission of greenhouse gases and at the same time provide a reliable source of energy, but it also creates long-term risks for future generations due to the need to store the radioactive waste for thousands of years. It thus introduces the value dimension of intergenerational justice and creates new moral overload. The design of new reactor types and novel fuel cycles is now being explored to deal with those problems (Taebi and Kadak 2010).

Second, technical innovation may introduce choice in situations in which there was previously no choice. An example is prenatal diagnostics. This technology creates the possibility to predict that an as yet unborn child will have a certain disease with a certain probability. This raises the question whether it is desirable to abort the foetus in certain circumstances. This choice situation is characterised by a conflict between the value of life (even if this life is not perfect) and the value of avoiding unnecessary suffering. Given that prenatal diagnostic technologies introduce such new moral dilemmas one can wonder whether the development of such technologies meets principle (BF1/BF2). The same applies to the technologies for human enhancement that are now foreseen in the field of nanotechnology and converging technologies.

Implications for the Responsibility of Engineers

We have seen that while technological innovation might be a means to fulfil principle (BF1/BF2), not all innovations meet principle (BF1/BF2). We think this has direct implications for the responsibility of engineers that develop new technology. We suggest that engineers, and other actors involved in technological development, have a moral responsibility to see to it that the technologies that they develop meet principle (BF1/BF2).

This higher-order moral obligation, to see to it that what ought to be done can be done, may be construed as an important aspect of an engineer’s task responsibility. This has been described as a meta-task responsibility (Van den Hoven 1998; Rooksby 2009), or an obligation to see to it (by designing an artifact) that oneself or others (users or clients) can do what ought to be done.

An interesting way to fulfil this responsibility is the approach of Value Sensitive Design. Value Sensitive Design focuses on incorporating moral values into the design of technical artifacts and systems by looking at design from an ethical perspective concerned with the way moral values such as freedom from bias, trust, autonomy, privacy, and justice are facilitated or constrained (Friedman et al. 2006; Flanagan et al. 2008; Van den Hoven 2005). Value Sensitive Design focuses primarily and specifically on addressing values of moral import. Other frameworks tend to focus more on functional and instrumental values, such as speed, efficiency, storage capacity or usability. Although building a user-friendly technology might have the side-effect of increasing a user’s trust or sense of autonomy, in Value Sensitive Design the incorporation of moral values into the design is a primary goal instead of a by-product. According to Friedman, Value Sensitive Design is primarily concerned with values that centre on human well-being, human dignity, justice, welfare, and human rights (Friedman et al. 2006). It requires that we broaden the goals and criteria for judging the quality of technological systems to include explicitly moral values. Value Sensitive Design is at the same time, as pointed out by Van den Hoven (2005), “a way of doing ethics that aims at making moral values part of technological design, research and development”. More specifically, it looks for ways of reconciling different and opposing values in engineering design or innovations, so that we may have our cake and eat it (Van den Hoven 2008). Value Sensitive Design may thus be an excellent way to meet principle (BF1/BF2) through technical innovation.

Conclusion

In discussions about technology, engineering and ethics, technology and engineering are usually treated as the source of ethical problems, and ethics is treated as a constraint on engineering and technological development. We have shown that a quite different relation also exists between these realms. Ethics can be the source of technological development rather than just a constraint, and technological progress can create moral progress rather than just moral problems. We have shown this by a detailed analysis of how technology can contribute to the solution of so-called moral overload or moral dilemmas. Such dilemmas typically create a moral residue that is the basis of a second-order principle that tells us to reshape the world so that we can meet all our moral obligations. We can do so, among other things, through guided technological innovation. We have suggested Value Sensitive Design as a possible approach to guide the engineering design process in the right direction.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Footnotes

Compensation may be made easier by social institutions in several ways. Kuran mentions redemption, or absolution from sins, in Christianity as one such institution. The modern welfare state also provides compensation mechanisms; for example, social workers compensate by taking care of the elderly and others who need assistance when family and relatives lack the time to assist them because of their other value commitments.

References

- Baron J, Spranca M. Protected values. Organizational Behavior and Human Decision Processes. 1997;70(1):1–16. doi: 10.1006/obhd.1997.2690.

- Chang R, editor. Incommensurability, incomparability, and practical reasoning. Cambridge, Mass: Harvard University Press; 1997.

- Cummings ML. Integrating ethics in design through the value-sensitive design approach. Science and Engineering Ethics. 2006;12:701–715. doi: 10.1007/s11948-006-0065-0.

- Flanagan M, Howe DC, Nissenbaum H. Embodying values in technology: Theory and practice. In: Van den Hoven J, Weckert J, editors. Information technology and moral philosophy. Cambridge: Cambridge University Press; 2008. pp. 322–353.

- Friedman B, Kahn PH Jr, Borning A. Value sensitive design and information systems. In: Zhang P, Galletta D, editors. Human-computer interaction in management information systems: Foundations. Advances in management information systems, Vol. 5. Armonk, NY: M.E. Sharpe; 2006. pp. 348–372.

- Hansson SO. Should we avoid moral dilemmas? The Journal of Value Inquiry. 1998;32:407–416. doi: 10.1023/A:1004329011239.

- Jonsen AR, Toulmin S. The abuse of casuistry: A history of moral reasoning. Berkeley: University of California Press; 1988.

- Kuran T. Moral overload and its alleviation. In: Ben-Ner A, Putterman L, editors. Economics, values, organization. Cambridge: Cambridge University Press; 1998. pp. 231–266.

- Levi I. Hard choices: Decision making under unresolved conflict. Cambridge: Cambridge University Press; 1986.

- Marcus RB. Moral dilemmas and consistency. Journal of Philosophy. 1980;77:121–136. doi: 10.2307/2025665.

- Marino P. Moral dilemmas, collective responsibility, and moral progress. Philosophical Studies. 2001;104:203–225. doi: 10.1023/A:1010357607895.

- Pacey A. The culture of technology. Oxford, England: Blackwell; 1983.

- Raz J. The morality of freedom. Oxford: Oxford University Press; 1986.

- Richardson HS. Practical reasoning about final ends. Cambridge: Cambridge University Press; 1997.

- Rooksby E. How to be a responsible slave: Managing the use of expert information systems. Ethics and Information Technology. 2009;11(1):81–90. doi: 10.1007/s10676-009-9183-0.

- Sarewitz DR. Frontiers of illusion: Science, technology, and the politics of progress. Philadelphia: Temple University Press; 1996.

- Swierstra T, Stemerding D, Boenink M. Exploring techno-moral change: The case of the obesity pill. In: Sollie P, Düwell M, editors. Evaluating new technologies. Dordrecht: Springer; 2009. pp. 119–138.

- Taebi B, Kadak AC. Intergenerational considerations affecting the future of nuclear power: Equity as a framework for assessing fuel cycles. Risk Analysis. 2010;30(9):1341–1362. doi: 10.1111/j.1539-6924.2010.01434.x.

- Tetlock PE. Thinking the unthinkable: Sacred values and taboo cognitions. Trends in Cognitive Sciences. 2003;7(7):320–324. doi: 10.1016/S1364-6613(03)00135-9.

- Van de Poel I. Values in engineering design. In: Meijers A, editor. Handbook of the philosophy of science. Volume 9: Philosophy of technology and engineering sciences. Oxford: Elsevier; 2009. pp. 973–1006.

- Van den Hoven J. Moral responsibility, public office and information technology. In: Snellen I, van de Donk W, editors. Public administration in an information age. Amsterdam: IOS Press; 1998.

- Van den Hoven J. Design for values and values for design. Information Age. 2005:4–7.

- Van den Hoven J. Moral methodology and information technology. In: Tavani H, Himma K, editors. Handbook of computer ethics. Hoboken, NJ: Wiley; 2008.

- Van Fraassen B. Values and the heart’s command. Journal of Philosophy. 1970;70(1):5–19. doi: 10.2307/2024762.

- WCED. Our common future: Report of the World Commission on Environment and Development. Oxford: Oxford University Press; 1987.

- Weinberg AM. Can technology replace social engineering? In: Teich AH, editor. Technology and the future. New York: St. Martin’s Press; 1966. pp. 55–64.

- Williams B. Problems of the self: Philosophical papers 1956–1972. Cambridge: Cambridge University Press; 1973.