Abstract

fMRI was used to examine lexical processing in native adult Chinese speakers. A 2 task (semantics and phonology) × 2 modality (visual and auditory) within-subject design was adopted. The semantic task involved a meaning association judgment and the phonological task involved a rhyming judgment to two sequentially presented words. The overall effect across tasks and modalities was used to identify seven ROIs, including the left fusiform gyrus (FG), the left superior temporal gyrus (STG), the left ventral inferior frontal gyrus (VIFG), the left middle temporal gyrus (MTG), the left dorsal inferior frontal gyrus (DIFG), the left inferior parietal lobule (IPL), and the left middle frontal gyrus (MFG). ROI analyses revealed two modality-specific areas, FG for visual and STG for auditory, and three task-specific areas, IPL and DIFG for phonology and VIFG for semantics. Greater DIFG activation was associated with conflicting tonal information between words for the auditory rhyming task, suggesting this region’s role in strategic phonological processing, and greater VIFG activation was correlated with lower association between words for both the auditory and the visual meaning task, suggesting this region’s role in retrieval and selection of semantic representations. The modality- and task-specific effects in Chinese revealed by this study are similar to those found in alphabetic languages. Unlike findings in English, we found that MFG was both modality- and task-specific in Chinese, suggesting that MFG may be responsible for the visuospatial analysis of Chinese characters and orthography-to-phonology integration at a syllabic level.

INTRODUCTION

An increasing number of studies have used functional brain imaging to investigate lexical processing in Chinese. Two meta-analyses of the comparison between Chinese and English lexical processing have been published recently that show both cross-language similarities and differences (Bolger, Perfetti, & Schneider, 2005; Tan, Laird, Li, & Fox, 2005). Tan et al. (2005) showed that both languages exhibit activation in the left fusiform gyrus (FG) and in the left inferior frontal gyrus (IFG). Bolger et al. (2005) also suggest similarities between English and Chinese lexical processing by showing that both languages commonly activated the left mid-FG and the left IFG as well as the mid/anterior portion of the left posterior superior temporal gyrus (STG) and the left occipito-temporal region. Tan et al. also reported some cross-language differences in that only Chinese showed activation in the left middle frontal gyrus (MFG) and it was significantly greater than English, and that only English showed activation in the left temporo-parietal region [including STG or middle temporal gyrus (MTG) and supramarginal gyrus] and it was significantly greater than in Chinese. Bolger et al. largely replicated this language difference found by Tan et al.

Despite the growing number of studies on Chinese lexical processing, it remains unclear whether brain areas involved in reading and language processing in this language are modality or task specific. Most studies have explored Chinese lexical processing in the visual modality (Booth et al., 2006; Dong et al., 2005; Kuo et al., 2004; Chee, Soon, & Lee, 2003; Siok, Jin, Fletcher, & Tan, 2003; Tan et al., 2000, 2003; Fu, Chen, Smith, Iversen, & Matthews, 2002; Luke, Liu, Wai, Wan, & Tan, 2002; Chee et al., 2000), whereas few have examined the auditory modality (Xiao et al., 2005). To our knowledge, no single study has directly explored modality effects in Chinese lexical processing.

Although some studies on Chinese lexical processing have included both phonological and semantic processing tasks (Dong et al., 2005; Tan, Liu, et al., 2001) or both orthographic and phonological processing tasks (Dong et al., 2005), few have directly compared brain activation on these tasks (Booth et al., 2006; Kuo et al., 2004; Peng, Xu, Ding, Li, & Liu, 2003). To our knowledge, only three studies (Booth et al., 2006; Dong et al., 2005; Peng et al., 2003) have directly compared activation between tasks that tap into semantic versus phonological processing. Although Dong et al. (2005) did not directly compare tasks, all three studies found that the semantic task showed greater activation than the phonological task in the left ventral inferior frontal gyrus (VIFG) [Brodmann’s area (BA) 47]. Booth et al. (2006) additionally found that the semantic task showed greater activation than the phonological task in the STG/MTG (BA 22, 21) and that the rhyming task showed greater activation in a posterior dorsal region of the left IFG/MFG (BA 9/44) and in the left inferior parietal lobule (IPL; BA 40). Booth et al. suggested that the small number of subjects (n = 7) in the Peng et al. (2003) study may have prevented them from finding reliable task differences or that the phonological tasks in the Peng et al. (vowel monitoring) and the Dong et al. (homophone judgment) studies were so simple that they were not sensitive to task differences. In summary, only Booth et al. have found reliable task differences in the left STG/MTG (BA 22, 21), in the posterior dorsal region of the left IFG/MFG (BA 9/44), and in the left IPL (BA 40), so further studies are needed to confirm the roles of these areas in semantic and phonological processing in Chinese. Furthermore, no single study has simultaneously manipulated both task and modality factors.
By combining modality and task in one study, we could determine the mutual influence of these two factors on brain areas involved in Chinese lexical processing.

Several studies in English have simultaneously manipulated modality and task factors to investigate whether areas involved in English lexical processing are modality or task specific. Booth et al. (2002) found task-specific and modality-independent activation for semantic processing in the left IFG (BA 46, 47) and in the left MTG (BA 21) as well as modality-specific activation in the left FG (BA 37) for written words and in the left STG (BA 22) for spoken words. In another study, Booth et al. additionally found that cross-modal tasks (visual rhyming and auditory spelling) compared with intramodal tasks (visual spelling and auditory rhyming) generated greater activation in the left inferior parietal cortex (BA 40, 39). This finding is consistent with other studies that explored cross-modal effects in lexical processing (Booth et al., 2002; Lee et al., 1999; Sugishita, Takayama, Shiono, Yoshikawa, & Takahashi, 1996; Flowers, Wood, & Naylor, 1991). The above studies generally suggest that the left FG (BA 19, 37) is modality specific for written word form processing; the left STG (BA 22, 42) is modality specific for spoken word form processing; the left inferior parietal cortex (BA 40, 39) is modality independent and involved in phonological processing; and the MTG (BA 21) is modality independent and involved in semantic processing. Other studies suggest that the left IFG is modality independent with the anterior ventral portion involved in semantic processing and the posterior dorsal portion involved in phonological processing (Devlin, Matthews, & Rushworth, 2003; Fiez, Balota, Raichle, & Petersen, 1999; Poldrack et al., 1999; Fiez, 1997). None of the above studies in English has shown brain regions affected by both modality and task.

The goal of this study was to explore whether brain regions involved in phonological and semantic processing in Chinese are modality or task specific. Toward this end, we used fMRI while Chinese adults performed tasks that required judgments to spoken or written words based upon whether words were semantically associated or rhymed. Based on previous literature, mainly in English, we expected to demonstrate modality-specific activation in FG for visual processing and STG for auditory processing. We also expected to demonstrate task-specific activation in VIFG and MTG for semantic processing and dorsal inferior frontal gyrus (DIFG) and IPL for phonological processing. The MFG has been consistently implicated in Chinese lexical processing, so we also wished to examine modality- and task-specific activation in this region. To further delineate the role of these regions in phonological and semantic processing, we examined whether association strength was related to activation in the semantic tasks and whether conflicting tone information was related to activation in the phonological tasks.

METHODS

Participants

Sixteen participants (nine men; mean age = 22.8 years, range = 19.2–24.9 years) took part in the experiment. All participants were right-hand dominant as measured by the Edinburgh Handedness Inventory (Oldfield, 1971). All participants reported being free from neurological or psychiatric disorders and having normal vision and hearing. In addition, no participant had diagnosed problems in attention, reading, or oral language. Before the experiment, participants were also interviewed to ensure that they spoke Mandarin Chinese with little dialectal accent.

Materials and Tasks

Meaning and Rhyming Judgment Tasks

In the word judgment tasks, two two-character words were presented sequentially, and the participants had to determine whether the second word matched the first word according to a predefined rule. In the meaning task, participants determined whether the second word was semantically related to the first word. In the rhyming task, participants determined whether the second word rhymed with the first word according to the final character of the words. If there was a match according to the criterion, the participant pressed a button with the index finger of the right hand; if there was no match, the participant pressed a different button with the index finger of the left hand.

A potential confound is that subjects needed to make meaning judgment based on both characters of the words whereas the rhyming judgment could be based on only the final character of the words. However, it is impossible to use single-character Chinese words as stimuli for auditory meaning task because there are many homophones for single-character Chinese words. For example, /shi4/ has several corresponding characters, with meanings including “room,” “market,” “event,” “design,” and “to show.” But for two-character Chinese words, homophones are rare. To make the four tasks comparable, two-character Chinese words rather than single-character Chinese words were chosen as stimuli for all four tasks.

Stimulus Characteristics

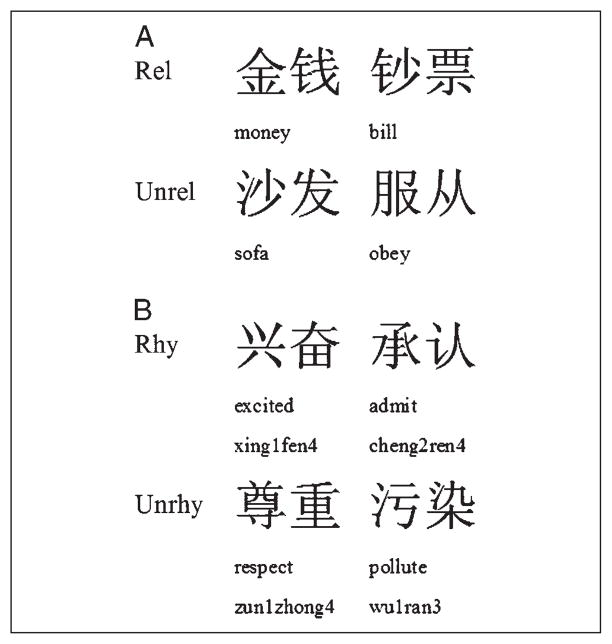

For the meaning judgment task, 25% of the trials contained pairs with a high association, 25% contained pairs with a low association, and 50% contained pairs of words that were unrelated (for examples of related and unrelated pairs, see Figure 1A). A 7-point scale was used to assess the association between the first and the second word. Forty participants in Beijing were asked to judge to what extent pairs of words were related. An average score across participants less than 4.2 was considered low association (M = 3.6), whereas an average score greater than 5.0 was considered high association (M = 5.4). For the rhyming judgment task, 50% of the trials rhymed and 50% did not rhyme (for examples, see Figure 1B). The second characters (the foot) of the two words in each pair did not share the same phonetic radical, so the participants could not base their rhyming judgment on the spelling of the word. For the auditory modality, all stimuli were recorded in a soundproof acoustic lab using Goldwave software and a high-quality stereo microphone. A native Chinese female speaker read each word in isolation so that there would be no contextual effects. Each word had a sample rate of 22,050 Hz and a sample size of 8 bits, and no word was longer than 800 msec. Individual files were created for each word, and all words were normalized to equal amplitude.

Figure 1.

Examples for the meaning task (A) and the rhyming task (B). Rel = related pairs; Unrel = unrelated pairs; Rhy = rhyming pairs; Unrhy = unrhyming pairs.
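The association cutoffs described for the meaning task can be written as a small rule. The following is only an illustrative sketch: the function name and the "unclassified" label are hypothetical, and the text does not say how related pairs with mean ratings between 4.2 and 5.0 were handled.

```python
# Cutoffs are taken from the text; names and the "unclassified" label are hypothetical.
LOW_CUTOFF = 4.2   # mean rating below this counts as low association
HIGH_CUTOFF = 5.0  # mean rating above this counts as high association

def association_level(mean_rating):
    """Classify a related word pair by its mean rating on the 7-point scale."""
    if mean_rating < LOW_CUTOFF:
        return "low"
    if mean_rating > HIGH_CUTOFF:
        return "high"
    # The source does not describe pairs that fall between the two cutoffs.
    return "unclassified"

# The reported category means fall on the expected sides of the cutoffs.
print(association_level(3.6), association_level(5.4))
```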

Several stimulus variables were controlled across tasks so that our effects of interest were not confounded by nuisance variables. First, all of the words contained two syllables. Second, the tasks consisted of words with similar written and spoken word frequency. Written word frequency was determined from a corpus (1.3 million words and 1.8 million characters) that covers almost all fields of human activity, such as politics, economy, philosophy, literature, biology, and medicine (Wang, Chang, & Li, 1985). Spoken word frequency was determined from a corpus of 1.7 million characters collected from 374 persons living in Beijing who differed in age, sex, education level, and occupation (Lu, 1993). Third, the tasks consisted of words with similar numbers of strokes. The stroke is the smallest component of a Chinese character, and the number of strokes (the number of steps required to write a character) is a measure of visuospatial complexity. Refer to Table 1 for information on word frequency and strokes for the Chinese stimuli. We confirmed that there were no significant main effects or interactions for written or spoken word frequency or for strokes by calculating ANOVAs with the following independent variables: 2 task (meaning and rhyming) × 2 modality (visual and auditory) × 2 word (first and second).

Table 1.

Means (SD) for Written and Spoken Word Frequency and Strokes for the Meaning and the Rhyming Tasks in the Visual and Auditory Modality (First and Second Word in Pair)

| | Written Frequency | | Spoken Frequency | | Strokes | |
|---|---|---|---|---|---|---|
| | Meaning | Rhyming | Meaning | Rhyming | Meaning | Rhyming |
| Visual | | | | | | |
| First | 33.5 (46.7) | 32.7 (38.6) | 14.5 (17.6) | 10.3 (12.7) | 15.4 (4.0) | 17.1 (4.3) |
| Second | 31.1 (27.0) | 33.08 (33.9) | 12.9 (18.9) | 13.0 (18.4) | 17.2 (5.3) | 17.4 (4.6) |
| Auditory | | | | | | |
| First | 31.2 (61.8) | 34.7 (45.0) | 11.0 (16.1) | 11.5 (21.2) | 18.1 (4.4) | 17.3 (4.5) |
| Second | 34.5 (70.9) | 33.4 (31.4) | 13.8 (25.7) | 10.9 (22.3) | 17.4 (4.5) | 15.9 (4.3) |

Control Tasks

For control blocks in the visual tasks, the stimuli were abstract, nonlinguistic symbols consisting of straight lines (e.g., / /). For control blocks in the auditory tasks, the stimuli were high-frequency (700 Hz), medium-frequency (500 Hz), and low-frequency (300 Hz) nonlinguistic pure tones. The tones were 600 msec in duration and contained a 100-msec linear fade in and a 100-msec linear fade out. Both tasks required participants to determine whether the second stimulus was the same as the first.

Timing

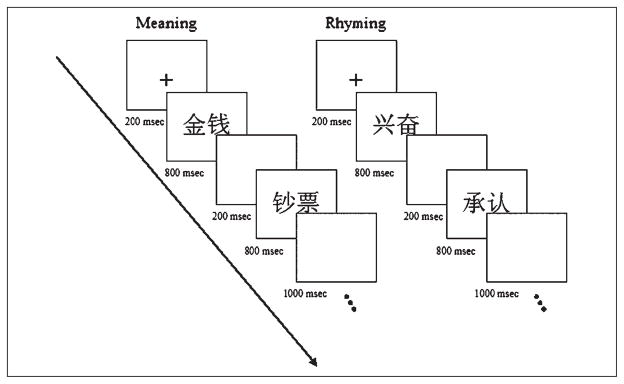

Each task lasted 4 min 24 sec, consisting of eight blocks of 33 sec. This included a 3-sec introduction screen to each block: “meaning judgment” for semantic blocks and “rhyming judgment” for phonological blocks. Four lexical blocks alternated with four control blocks. In each trial for the lexical blocks, a fixation cross was presented on the screen first for 200 msec, then two consecutive words were presented visually or auditorily with each word presented for 800 msec with a 200-msec blank interval between the two words and a 1000-msec blank interval after the second word (for timing of trials, see Figure 2). Thus, each trial lasted a total of 3000 msec, and there were 10 trials in each block. Participants were told that once they saw the second word on the screen or heard the second word, they could respond. Participants were encouraged to respond as quickly as possible without making errors. Control blocks for the visual and the auditory tasks were designed to equate the timing of the lexical and the control blocks. As with the lexical blocks, there was a 3-sec introduction screen to each block: “lines” for visual control blocks and “tones” for auditory control blocks.

Figure 2.

Experimental timing for the meaning and the rhyming tasks.
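The trial, block, and run durations given in the Timing section can be checked with simple arithmetic. The sketch below uses only the durations stated in the text; the variable names are illustrative.

```python
# All durations come from the Timing paragraph; variable names are illustrative.
FIXATION_MS = 200
WORD_MS = 800              # each of the two words
INTERWORD_BLANK_MS = 200   # blank between the first and second word
RESPONSE_BLANK_MS = 1000   # blank after the second word
TRIALS_PER_BLOCK = 10
INTRO_S = 3                # introduction screen at the start of each block
BLOCKS_PER_RUN = 8         # four lexical and four control blocks

trial_ms = FIXATION_MS + WORD_MS + INTERWORD_BLANK_MS + WORD_MS + RESPONSE_BLANK_MS
block_s = INTRO_S + TRIALS_PER_BLOCK * trial_ms / 1000
run_s = BLOCKS_PER_RUN * block_s
minutes, seconds = divmod(int(run_s), 60)

print(trial_ms, block_s, f"{minutes} min {seconds} sec")  # 3000 33.0 4 min 24 sec
```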

Experimental Procedure

There were four fMRI runs for each participant, one for each task-by-modality combination. The order of the runs was counterbalanced across participants. Participants were given a brief practice session before the experiment for them to be familiarized with the procedure.

Data Collection

All images were acquired using a 2T GE/Elscint Prestige whole-body MRI scanner. Each participant’s head was secured with foam rubber to minimize movement. A susceptibility-weighted single-shot EPI method with BOLD contrast was used. The following scan parameters were used: TR = 3000 msec, TE = 45 msec, flip angle = 90°, FOV = 373 × 210 mm, matrix size = 128 × 72, and slice thickness = 6 mm. Eighteen contiguous axial slices were acquired to cover the whole brain at 88 time points during the total imaging time of 4 min 24 sec. At the end of the functional imaging session, a high-resolution, T1-weighted 3-D image was acquired. The following scan parameters were used: TR = 25 msec, TE = 6 msec, flip angle = 28°, FOV = 220 × 220 mm, matrix size = 220 × 220, slice thickness = 2 mm, and number of slices = 89. Behavioral data (RT and error rates) were collected simultaneously.
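As a consistency check on the functional acquisition parameters, the run length, in-plane voxel size, and slice coverage can be derived from the reported values. This is only a sketch: the variable names are illustrative, and the coverage figure relies on the slices being contiguous, as stated.

```python
# Functional scan parameters as reported; variable names are illustrative.
TR_S = 3.0
N_VOLUMES = 88
FOV_MM = (373, 210)
MATRIX = (128, 72)
SLICE_THICKNESS_MM = 6
N_SLICES = 18

run_s = TR_S * N_VOLUMES  # should match the 4 min 24 sec (264 s) run length
in_plane_mm = tuple(fov / n for fov, n in zip(FOV_MM, MATRIX))
coverage_mm = SLICE_THICKNESS_MM * N_SLICES  # contiguous slices, no gap

print(run_s, [round(v, 2) for v in in_plane_mm], coverage_mm)
```

Under these values the in-plane voxels are nearly square (about 2.9 mm on each side).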

Imaging Data Analysis

The fMRI data analysis was performed using SPM2 (http://www.fil.ion.ucl.ac.uk/spm). Functional images were realigned to the last functional volume (the one closest to the T1 anatomical scan). All statistical analyses were conducted on these movement-corrected images (no individual participant had more than 2 mm of movement in the x, y, or z dimension). An average functional image was generated, coregistered with the structural images, normalized to the Montreal Neurological Institute stereotaxic template at a spatial resolution of 2 × 2 × 4 mm, and then smoothed with a Gaussian filter with an FWHM of 8 mm. The data of one participant were not used because of severe image artifacts.

The general linear model (GLM) was used to estimate condition effects for individual subjects. For each individual, we calculated contrasts [lexical–control] to analyze the two-word judgment tasks (meaning and rhyming) in the two modalities (visual and auditory). A cross-task and modality overall effect of interest was computed with a random-effect one-way ANOVA model. ROI analyses were used to focus on the left hemisphere regions thought to be involved in language and reading. An inclusive anatomical mask of an ROI (e.g., FG) was used on the overall effect across tasks and modalities and then peak activation was computed. The coordinates of the peak activation for the seven ROIs are as follows: FG (−40, −72, −20), STG (−62, −24, −4), VIFG (−40, 28, −12), MTG (−56, −2, −8), DIFG (−48, 2, 24), IPL (−36, −40, 28), and MFG (−38, 38, 16). A 6-mm radius sphere ROI was drawn centered on the peak activation voxel in SPM2 using the volume of interest toolbar. Only voxels whose activation surpassed the threshold p < .005 uncorrected were used to compute the average beta value for each ROI. Corresponding analyses in the same ROIs for the right hemisphere are also reported in the results. In addition to the modality and the task comparisons, we correlated behavioral accuracy of each task to activation in each ROI for each task, and we examined the correlation of association strength to whole-brain activation separately for each semantic task and the effect of conflicting versus nonconflicting tonal information on whole-brain activation separately for each rhyming task (see Results section).
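The ROI step described above (a 6-mm sphere around the peak, averaging beta values only over voxels that pass p < .005 uncorrected) can be sketched as follows. This is not the SPM2 procedure itself: the dictionary-based data layout, the function names, and the assumption that the voxel grid is centered on the peak are all hypothetical, and the beta and p values in the usage lines are made up for illustration.

```python
VOXEL_MM = (2, 2, 4)   # normalized voxel size from the text (mm assumed)
RADIUS_MM = 6          # sphere radius from the text
P_THRESHOLD = 0.005    # uncorrected voxel threshold from the text

def sphere_offsets(radius_mm=RADIUS_MM, voxel_mm=VOXEL_MM):
    """Offsets (in mm) of voxel centers lying within radius_mm of the peak."""
    reach = [radius_mm // size for size in voxel_mm]
    offsets = []
    for i in range(-reach[0], reach[0] + 1):
        for j in range(-reach[1], reach[1] + 1):
            for k in range(-reach[2], reach[2] + 1):
                dx, dy, dz = i * voxel_mm[0], j * voxel_mm[1], k * voxel_mm[2]
                if dx * dx + dy * dy + dz * dz <= radius_mm ** 2:
                    offsets.append((dx, dy, dz))
    return offsets

def roi_mean_beta(peak, betas, p_values, threshold=P_THRESHOLD):
    """Mean beta over sphere voxels whose p value is below the threshold.

    betas and p_values are dicts keyed by (x, y, z) coordinates in mm
    (a hypothetical layout chosen to keep the sketch self-contained).
    """
    selected = []
    for dx, dy, dz in sphere_offsets():
        coord = (peak[0] + dx, peak[1] + dy, peak[2] + dz)
        if coord in betas and p_values.get(coord, 1.0) < threshold:
            selected.append(betas[coord])
    return sum(selected) / len(selected) if selected else None

# Made-up values around the reported FG peak (-40, -72, -20).
fg_peak = (-40, -72, -20)
demo_betas = {(-40, -72, -20): 2.0, (-38, -72, -20): 4.0, (-40, -72, -16): 10.0}
demo_p = {(-40, -72, -20): 0.001, (-38, -72, -20): 0.004, (-40, -72, -16): 0.2}
print(roi_mean_beta(fg_peak, demo_betas, demo_p))
```

In the usage lines, the third voxel lies inside the sphere but fails the threshold, so it does not contribute to the mean.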

RESULTS

Behavioral Results

Table 2 presents behavioral data on the word judgment and control blocks. For the word judgment blocks, we calculated Task (meaning and rhyming) × Modality (visual and auditory) ANOVAs separately on RT and accuracy. These analyses revealed that the visual modality had significantly faster RT and higher accuracy than the auditory modality, F(1,15) = 120.56, p < .001 for RT and F(1,15) = 99.17, p < .001 for accuracy. There were no task main effects or Task × Modality interaction effects for the word judgment blocks.

Table 2.

Means (M) and Standard Errors (SE) for RT (msec) and Accuracy (%) in the Word Judgment (Meaning and Rhyming) and the Control Conditions in the Visual and Auditory Modality

| | RT (msec) | | Accuracy (%) | |
|---|---|---|---|---|
| | M | SE | M | SE |
| Visual | | | | |
| Meaning | 885 | 43.0 | 95.8 | 1.0 |
| Control | 707 | 58.0 | 97.7 | 0.8 |
| Rhyming | 876 | 39.3 | 94.0 | 1.7 |
| Control | 695 | 57.2 | 98.0 | 0.8 |
| Auditory | | | | |
| Meaning | 1270 | 24.9 | 80.2 | 1.6 |
| Control | 1007 | 46.9 | 95.0 | 1.4 |
| Rhyming | 1234 | 23.3 | 84.5 | 1.4 |
| Control | 955 | 42.3 | 94.1 | 1.7 |

We also calculated the same ANOVAs as above for the control blocks. These analyses revealed that the visual modality had significantly faster RT and higher accuracy than the auditory modality, F(1,15) = 62.07, p < .001 for RT and F(1,15) = 6.90, p = .019 for accuracy. There were no task main effects or Task × Modality interaction effects for the control blocks.

fMRI Results

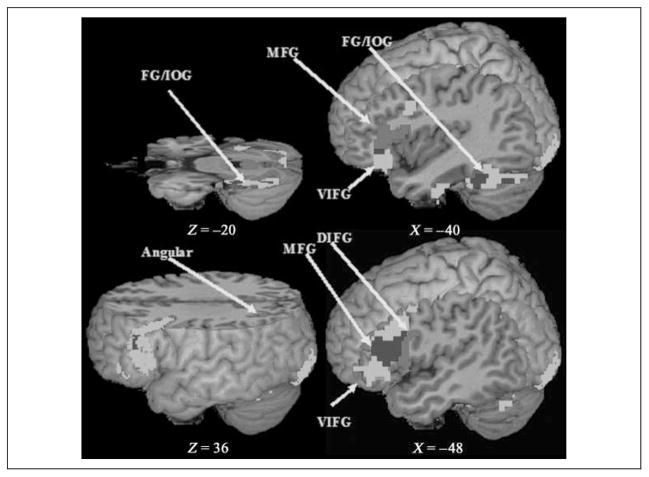

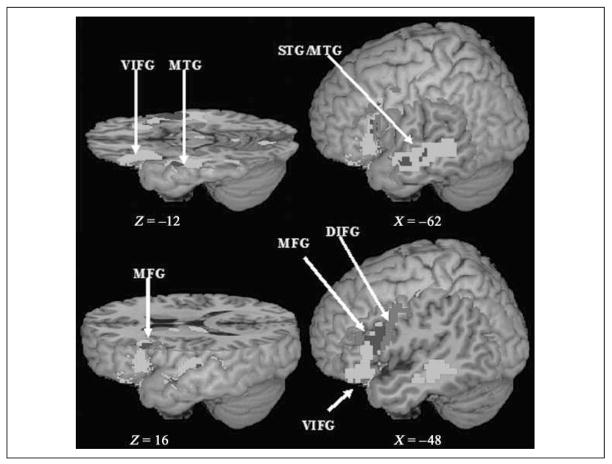

We calculated four contrasts [lexical–control]: one for each word judgment task (meaning and rhyming) in each of the two modalities (visual and auditory). Table 3 presents the peak voxels of this random effects comparison (p < .004, FDR corrected, was used to maximize distinct clusters). Participants activated a neural network that was mostly lateralized to the left, and we focus here on reporting areas of activation in our left hemisphere ROIs. As illustrated in Figure 3, there was activation for both visual tasks in FG (BA 37) and IFG/MFG (BA 45/46). The visual meaning task additionally activated VIFG (BA 47), and the visual rhyming task additionally activated DIFG (BA 44/9) and angular gyrus (BA 39). As illustrated in Figure 4, there was activation for both auditory tasks in STG (BA 22) and IFG/MFG (BA 45/46). The auditory meaning task additionally activated VIFG (BA 47) and MTG (BA 21), and the auditory rhyming task additionally activated DIFG (BA 44/9).

Table 3.

Activation for the Meaning and the Rhyming Tasks in the Visual and Auditory Modality

| Regions | H | BA | Visual Meaning | | | | | Visual Rhyming | | | | | Auditory Meaning | | | | | Auditory Rhyming | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | | | x | y | z | Z | Voxel | x | y | z | Z | Voxel | x | y | z | Z | Voxel | x | y | z | Z | Voxel |
| IFG/MFG | L | 47 | – | – | – | – | – | – | – | – | – | – | −30 | 22 | −8 | 5.23 | 774 | – | – | – | – | – |
| | L | 46 | −52 | 34 | 20 | 5.03 | 753 | −46 | 32 | 16 | 5.22 | 1827 | – | – | – | – | – | −46 | 32 | 20 | 4.8 | 27 |
| | L | 45 | −48 | 26 | 20 | 4.98 | a | – | – | – | – | – | – | – | – | – | – | −46 | 10 | 20 | 4.8 | 191 |
| | L | 13 | – | – | – | – | – | −44 | 22 | 8 | 5.08 | a | – | – | – | – | – | – | – | – | – | – |
| | L | 9 | −50 | 16 | 40 | 4.61 | a | – | – | – | – | – | – | – | – | – | – | – | – | – | – | – |
| Angular gyrus | L | 39 | – | – | – | – | – | −30 | −64 | 36 | 4.13 | 37 | – | – | – | – | – | – | – | – | – | – |
| FG | L | 37 | −36 | −48 | −24 | 4.94 | 474 | −38 | −44 | −28 | 4.31 | 78 | – | – | – | – | – | – | – | – | – | – |
| Cuneus/lingual gyrus | L | 18 | −28 | −100 | −8 | 4.91 | a | – | – | – | – | – | −2 | −84 | 0 | 4.81 | 491 | – | – | – | – | – |
| STG/MTG | L | 38 | – | – | – | – | – | – | – | – | – | – | −50 | −4 | −12 | 5.62 | 741 | −52 | −2 | 12 | 4.3 | 16 |
| | L | 22 | – | – | – | – | – | – | – | – | – | – | −60 | −24 | 0 | 5.61 | a | −62 | −24 | 4 | 4.5 | 15 |
| MeFG | L | 8 | −4 | 20 | 48 | 4.16 | 51 | – | – | – | – | – | – | – | – | – | – | −48 | 4 | 44 | 4.4 | 49 |
| Uncus | L | 20 | −36 | −12 | −36 | 4.84 | 48 | – | – | – | – | – | – | – | – | – | – | – | – | – | – | – |
| Declive | L | – | −38 | −58 | −24 | 4.62 | a | −36 | −62 | −28 | 4.09 | 48 | – | – | – | – | – | – | – | – | – | – |
| Extra nuclear | L | – | – | – | – | – | – | – | – | – | – | – | −10 | 0 | 8 | 4.52 | 144 | – | – | – | – | – |
| Thalamus | L | – | – | – | – | – | – | – | – | – | – | – | −8 | −18 | 12 | 4.45 | a | – | – | – | – | – |
| IFG | R | 47 | – | – | – | – | – | 36 | 20 | −8 | 4.7 | 235 | – | – | – | – | – | – | – | – | – | – |
| | R | 45 | – | – | – | – | – | 40 | 20 | 8 | 4.37 | a | – | – | – | – | – | – | – | – | – | – |
| | R | 13 | – | – | – | – | – | – | – | – | – | – | 32 | 24 | −4 | 5.34 | 193 | – | – | – | – | – |
| MFG | R | 10 | – | – | – | – | – | – | – | – | – | – | 50 | 34 | 28 | 4.06 | 26 | – | – | – | – | – |
| MeFG | R | 8 | – | – | – | – | – | – | – | – | – | – | 2 | 24 | 44 | 5.09 | 719 | 2 | 20 | 52 | 4.8 | 125 |
| | R | 6 | – | – | – | – | – | – | – | – | – | – | 6 | 32 | 40 | 4.61 | a | – | – | – | – | – |
| IOG | R | 18 | 28 | −92 | −16 | 5.57 | 329 | – | – | – | – | – | – | – | – | – | – | – | – | – | – | – |
| Cuneus | R | 18 | 26 | −96 | −8 | 5.33 | a | – | – | – | – | – | – | – | – | – | – | – | – | – | – | – |
| MOG | R | 18 | – | – | – | – | – | 28 | −96 | −4 | 4.54 | 75 | – | – | – | – | – | – | – | – | – | – |
| STG/MTG | R | 22 | – | – | – | – | – | – | – | – | – | – | 60 | −12 | −8 | 6.08 | 776 | – | – | – | – | – |
| | R | 38 | – | – | – | – | – | – | – | – | – | – | 50 | 12 | −16 | 5.10 | a | 42 | 14 | −28 | 5.3 | 31 |
| Declive | R | – | 36 | −64 | −24 | 4.51 | 33 | 8 | −50 | −32 | 5.01 | 237 | – | – | – | – | – | – | – | – | – | – |
| | R | – | – | – | – | – | – | 38 | −58 | −28 | 4.56 | 123 | – | – | – | – | – | – | – | – | – | – |
| Lateral ventricle | R | – | – | – | – | – | – | 18 | −30 | 20 | 4.69 | 427 | – | – | – | – | – | – | – | – | – | – |
| Extra nuclear | R | – | 34 | 24 | 0 | 4.43 | 22 | 18 | 6 | 20 | 4.38 | a | – | – | – | – | – | – | – | – | – | – |
| Declive | L+R | – | – | – | – | – | – | – | – | – | – | – | – | – | – | – | – | 0 | −78 | −20 | 5 | 49 |

H = hemisphere; L = left; R = right; BA = Brodmann’s areas; x, y, z: Montreal Neurological Institute coordinates; Z = z score; IFG = inferior frontal gyrus; MFG = middle frontal gyrus; MeFG = medial frontal gyrus; FG = fusiform gyrus; STG = superior temporal gyrus; MTG = middle temporal gyrus; MOG = middle occipital gyrus; IOG = inferior occipital gyrus. Clusters presented are more than 15 contiguous voxels surviving a threshold of 0.004 (FDR corrected).

a = Clusters with more than one local maximum.

Figure 3.

Activation map for the meaning task and the rhyming task in the visual modality. Green indicates activation for the meaning task; red indicates activation for the rhyming task; blue indicates overlapping activation for both tasks. FG = fusiform gyrus; IOG = inferior occipital gyrus; MFG = middle frontal gyrus; VIFG = ventral inferior frontal gyrus; Angular = angular gyrus; DIFG = dorsal inferior frontal gyrus.

Figure 4.

Activation map for the meaning task and the rhyming task in the auditory modality. Green indicates the activation for the meaning task; red indicates the activation for the rhyming task; blue indicates the overlapping activation for both tasks.

Because the visual modality had higher accuracy than the auditory modality, it is possible that differences in brain activation between modalities could reflect these performance differences. To rule out this possibility, we entered the difference between modalities in accuracy ([average accuracy of visual modality – average accuracy of auditory modality]/average accuracy of four tasks) as a covariate in a regression analysis. This accuracy difference was not correlated (p < .05, FDR corrected) with activation differences between modalities (lexical vs. control in visual modality – lexical vs. control in auditory modality). Therefore, accuracy was not included as a covariate in subsequent analyses.
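The normalized accuracy difference used as the covariate can be written out explicitly. The sketch below applies the bracketed formula to the group means in Table 2 purely for illustration; in the analysis the value would presumably be computed for each participant, and the function name is hypothetical.

```python
def accuracy_difference(visual_scores, auditory_scores):
    """(mean visual - mean auditory) / mean accuracy across all four tasks."""
    all_scores = list(visual_scores) + list(auditory_scores)
    mean_visual = sum(visual_scores) / len(visual_scores)
    mean_auditory = sum(auditory_scores) / len(auditory_scores)
    mean_all = sum(all_scores) / len(all_scores)
    return (mean_visual - mean_auditory) / mean_all

# Group-level accuracies (%) from Table 2: (meaning, rhyming) per modality.
# These are illustration values, not the per-participant covariate itself.
covariate = accuracy_difference((95.8, 94.0), (80.2, 84.5))
print(round(covariate, 3))
```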

The overall effect across tasks and modalities was used to identify seven ROIs in the left hemisphere, including FG, STG, VIFG, MTG, DIFG, IPL, and MFG. A within-subject two-way ANOVA was used to examine the task (meaning and rhyming) and modality (visual and auditory) effects for each ROI. Main effects and interactions for these ANOVAs are reported as well as follow-up paired t tests.
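Because each factor in the 2 × 2 within-subject design has only two levels, a main-effect F(1, n − 1) is equal to the square of a paired t statistic computed on each participant's difference score averaged over the other factor. The sketch below illustrates this for the modality effect; the beta values are made up, and the function name is hypothetical.

```python
import math
from statistics import mean, stdev

def modality_main_effect(vis_meaning, vis_rhyming, aud_meaning, aud_rhyming):
    """Return (F, df) for the modality main effect in a 2 x 2 within-subject design.

    Each argument is a list of per-participant beta values for one condition.
    F is computed as the squared paired t on the visual-minus-auditory
    difference, averaged over the two tasks for each participant.
    """
    diffs = [
        (vm + vr) / 2 - (am + ar) / 2
        for vm, vr, am, ar in zip(vis_meaning, vis_rhyming, aud_meaning, aud_rhyming)
    ]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t * t, (1, n - 1)

# Made-up beta values for four hypothetical participants.
f_value, df = modality_main_effect(
    [1, 2, 3, 4], [1, 2, 3, 4], [0, 0, 1, 3], [0, 0, 1, 3]
)
print(round(f_value, 3), df)
```

With 15 participants retained for the imaging analyses, this gives the F(1,14) and t(14) degrees of freedom reported for the ROI tests.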

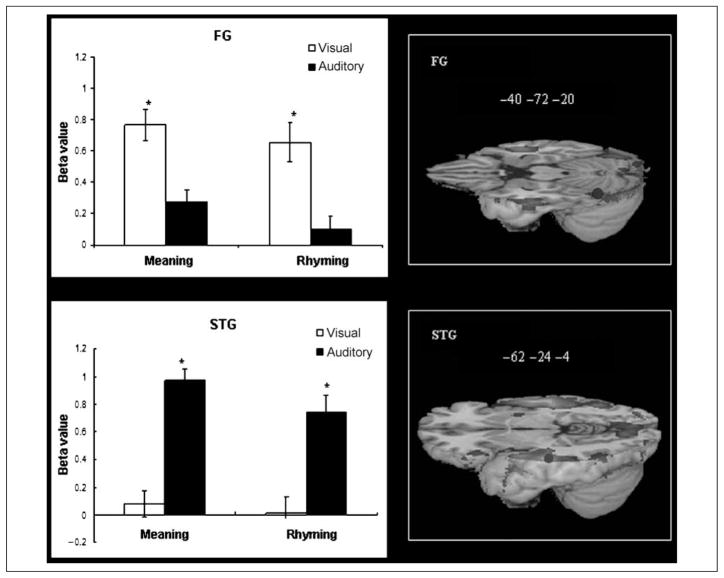

Figure 5 presents modality differences in brain activation. The visual modality produced greater activation in FG, F(1,14) = 26.293, p < .001, and the auditory modality produced greater activation in STG, F(1,14) = 55.098, p < .001. There were no significant task effects or interaction effects between task and modality for these two regions. Paired t tests confirmed that FG showed greater activation in the visual than auditory modality for both the meaning, t(14) = 5.268, p < .001, and the rhyming tasks, t(14) = 4.591, p < .001, and that STG showed greater activation in the auditory than visual modality for both the meaning, t(14) = 8.749, p < .001, and the rhyming tasks, t(14) = 5.107, p < .001. The MTG region results patterned similarly to STG, so the results are not presented here.

Figure 5.

Modality-specific effect in FG and STG. Blue dots mark the peak of ROIs in brain images. *p < .005 between modalities in bar graphs.

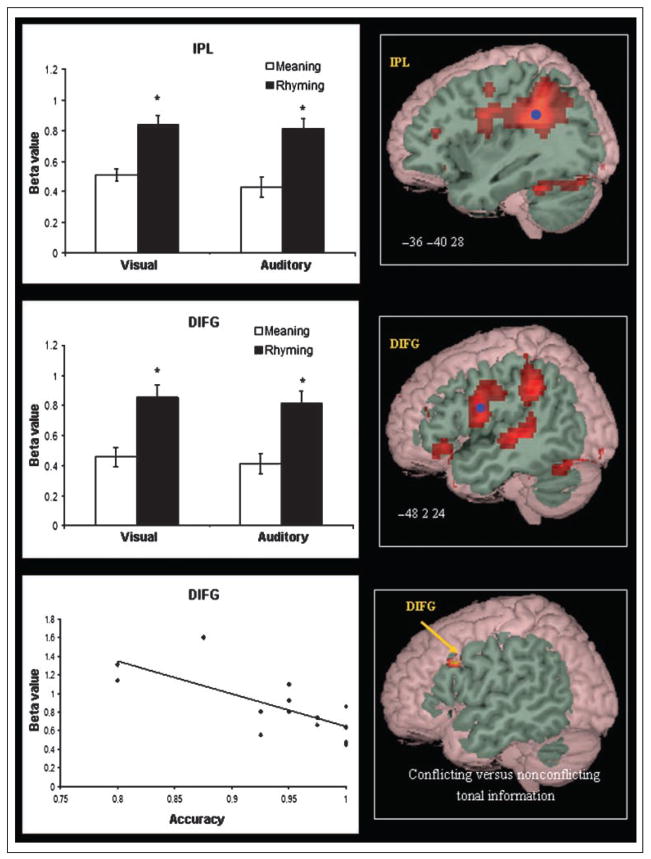

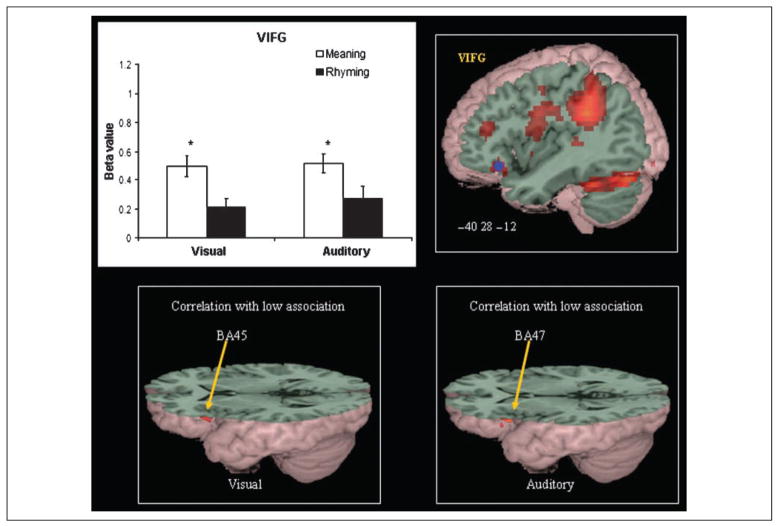

Figures 6 and 7 present task differences in brain activation. The rhyming task produced greater activation in DIFG, F(1,14) = 30.217, p < .001, and IPL, F(1,14) = 45.550, p < .001, whereas the meaning task produced greater activation in VIFG, F(1,14) = 35.148, p < .001. There were no significant modality effects or Modality × Task interaction effects for these regions. Paired t tests confirmed that DIFG showed greater activation in the rhyming than meaning task for both the visual, t(14) = 4.441, p = .001, and the auditory modalities, t(14) = 4.882, p < .001, and that IPL showed greater activation in the rhyming than meaning task for both the visual, t(14) = 6.533, p < .001, and the auditory modalities, t(14) = 5.675, p < .001. Paired t tests also confirmed that VIFG showed greater activation in the meaning than rhyming task for both the visual, t(14) = 5.974, p < .001, and the auditory modalities, t(14) = 3.468, p = .004.

Figure 6.

Task-specific effects for the rhyming tasks in IPL and DIFG. Blue dots mark the peak of ROIs in brain images. *p < .005 between tasks in bar graphs. Difference in DIFG between word pairs with conflicting tonal information versus those with nonconflicting tonal information is shown in bottom right. Correlation between beta value in DIFG and accuracy for the rhyming task is shown in bottom left.

Figure 7.

Task-specific effects for the meaning tasks in VIFG. Blue dots mark the peak of ROIs in brain images. *p < .005 between tasks in bar graphs. Correlation in VIFG between association strength and activation separately for the visual and auditory meaning tasks is shown below.

We computed correlations between beta values of the seven ROIs and accuracy separately for the four tasks. No significant correlation was found except that greater activation in DIFG was correlated with lower accuracy in the visual rhyming task, r(15) = −.732, p < .05, Bonferroni corrected (see Figure 6).

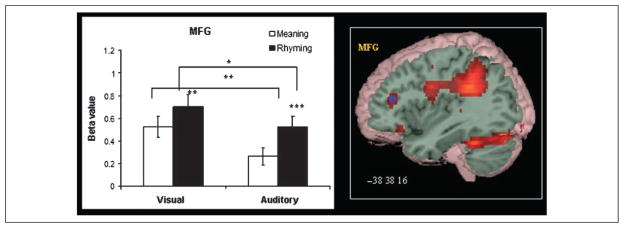

Figure 8 presents the modality and the task differences for MFG. There was greater activation for this region for the visual modality, F(1,14) = 11.471, p = .004, and for the rhyming task, F(1,14) = 15.136, p = .002. There was not a Modality × Task interaction for this region. Paired t tests confirmed greater activation in the rhyming than meaning task for both the visual, t(14) = 3.078, p = .008, and the auditory modalities, t(14) = 3.569, p = .003, and greater activation in the visual than auditory modality for both the meaning, t(14) = 3.231, p = .006, and the rhyming tasks, t(14) = 2.728, p = .016.

Figure 8.

Modality- and task-specific effects in MFG. Blue dot marks the peak of the ROI in brain images. *p < .05,**p < .01, ***p < .005 in bar graphs.
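The relation between the main effects and the paired t tests reported for the 2 × 2 within-subject design can be sketched as follows. For a factor with two levels, the main-effect F(1, n − 1) equals the square of the paired t statistic computed on each participant's marginal means. This is a generic illustration rather than the authors' code; the function names and the beta values in the usage example are invented for demonstration.

```python
import math
from statistics import mean, stdev

def paired_t(a, b):
    """Paired t statistic (df = n - 1) for the difference a minus b."""
    d = [x - y for x, y in zip(a, b)]
    return mean(d) / (stdev(d) / math.sqrt(len(d)))

def main_effects(cells):
    """Main-effect F values for a 2 x 2 within-subject design.

    cells holds one tuple per participant:
    (visual rhyming, visual meaning, auditory rhyming, auditory meaning).
    Returns (F_task, F_modality), each with df = (1, n - 1).
    """
    rhyme = [(vr + ar) / 2 for vr, vm, ar, am in cells]
    meaning = [(vm + am) / 2 for vr, vm, ar, am in cells]
    visual = [(vr + vm) / 2 for vr, vm, ar, am in cells]
    auditory = [(ar + am) / 2 for vr, vm, ar, am in cells]
    return paired_t(rhyme, meaning) ** 2, paired_t(visual, auditory) ** 2

# Hypothetical beta values for four participants (not data from this study).
example = [
    (1.0, 0.5, 0.8, 0.2),
    (1.2, 0.9, 0.7, 0.6),
    (0.9, 0.4, 0.6, 0.1),
    (1.4, 0.8, 1.0, 0.7),
]
f_task, f_modality = main_effects(example)
```

The follow-up paired t tests within each modality or task use the individual cell values rather than the marginal means, which is why they can differ in size from the main effect.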

Using the same method as for the left hemisphere, we identified seven corresponding ROIs in the right hemisphere. In the end, only six ROIs were included in the analysis because right MFG did not activate at p < .005 uncorrected. Similar to the left hemisphere, this analysis revealed modality effects in the right hemisphere for FG, t(14) = 4.144, p = .001 for the meaning task and t(14) = 4.060, p = .001 for the rhyming task, and STG, t(14) = 8.992, p < .001 for the meaning task and t(14) = 5.461, p < .001 for the rhyming task. The MTG region patterned similarly to STG. Also similar to the left hemisphere, this analysis revealed task effects for the right hemisphere in VIFG, t(14) = −0.198, p = .846 for the visual modality and t(14) = 4.508, p < .001 for the auditory modality, DIFG, t(14) = 3.005, p = .009 for the visual modality and t(14) = 2.961, p = .010 for the auditory modality, and IPL, t(14) = 3.726, p = .002 for the visual modality and t(14) = 4.438, p = .001 for the auditory modality.

We used a mixed GLM combining sustained and transient effects in an exploratory analysis of whether association strength was related to activation in the semantic tasks and whether conflicting tones were related to activation in the rhyming tasks. Sustained effects (i.e., lexical vs. control blocks) were coded into the GLM as a separate regressor with an assumed boxcar shape convolved with the canonical hemodynamic response function; transient effects of individual trials were also modeled using canonical hemodynamic response functions as regressors. For the semantic tasks, transient effects included instruction, unrelated trials, and related trials. Association strength was modeled as a covariate for related trials. For the rhyming tasks, transient effects included instruction, T+R+ trials (the second character of the first word shared the same tone with the second word in rhyming trials), T−R+ trials (the second character of the first word did not share the same tone with the second word in rhyming trials), and nonrhyming trials. There were slightly uneven trial numbers for each rhyming task (11 T+R+ trials and 9 T−R+ trials for the visual modality and 9 T+R+ trials and 11 T−R+ trials for the auditory modality). For the semantic tasks, weaker association strength was significantly correlated with greater activation in the left VIFG (x = −58, y = 26, z = 4; voxels = 20; Z = 4.05; BA 45) and left DIFG (x = −54, y = 20, z = 32; voxels = 25; Z = 3.46; BA 9) for the visual meaning task and in the left VIFG (x = −58, y = 20, z = 0; voxels = 12; Z = 3.62; BA 47) and DIFG (x = −52, y = 18, z = 28; voxels = 43; Z = 4.24; BA 9/45) for the auditory meaning task (see Figure 7). In addition, the conflicting tone condition (T−R+) compared with the nonconflicting tone condition (T+R+) showed greater activation in DIFG (x = −54, y = 16, z = 32; voxels = 17; Z = 3.68; BA 9) only in the auditory rhyming task (see Figure 6).
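The construction of sustained and transient regressors described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the authors' pipeline: the double-gamma function below is a commonly used approximation of a canonical hemodynamic response (peak shape 6, undershoot shape 16, ratio 1/6, all assumed here), and the repetition time, block timing, and trial onsets in the usage example are hypothetical.

```python
import math

def gamma_pdf(t, shape):
    """Gamma density with unit scale; zero for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)

def double_gamma_hrf(t, peak=6.0, undershoot=16.0, ratio=1.0 / 6.0):
    """Assumed double-gamma approximation of a canonical HRF."""
    return gamma_pdf(t, peak) - ratio * gamma_pdf(t, undershoot)

def convolve(signal, kernel):
    """Discrete convolution truncated to the length of the signal."""
    out = [0.0] * len(signal)
    for i, s in enumerate(signal):
        if s == 0:
            continue
        for j, k in enumerate(kernel):
            if i + j < len(out):
                out[i + j] += s * k
    return out

def make_regressors(n_scans, tr, block_onsets, block_dur, trial_onsets):
    """Return (sustained, transient) regressors sampled once per scan."""
    # HRF kernel sampled every TR over a 32 s window (assumed length).
    kernel = [double_gamma_hrf(i * tr) for i in range(int(32 / tr))]
    # Sustained effect: boxcar that is 1 during task blocks, 0 elsewhere.
    boxcar = [
        1.0 if any(on <= i * tr < on + block_dur for on in block_onsets) else 0.0
        for i in range(n_scans)
    ]
    # Transient effect: one unit impulse at the scan nearest each trial onset.
    sticks = [0.0] * n_scans
    for on in trial_onsets:
        idx = int(round(on / tr))
        if idx < n_scans:
            sticks[idx] += 1.0
    return convolve(boxcar, kernel), convolve(sticks, kernel)

# Hypothetical timing: 60 scans, TR = 2 s, one 30 s block, two trials.
sustained, transient = make_regressors(60, 2.0, [20.0], 30.0, [20.0, 26.0])
```

A covariate such as association strength could be added in the same framework by scaling each impulse for the related trials by its mean-centered covariate value before convolution, which yields a separate parametric regressor.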

DISCUSSION

The present study asked Chinese adults to make rhyming and meaning judgments to words that were presented in the visual and the auditory modality. This design allowed us to determine brain regions that were specific for a particular modality, regions that were specific for a particular task (i.e., phonological vs. semantic processing), and regions that were sensitive to both modality and task. We will discuss each of these effects in turn.

Modality-specific Regions: FG and STG

The present study showed that the left FG was activated to a greater degree in the visual modality than in the auditory modality for both meaning and rhyming tasks, whereas the left STG was activated to a greater degree in the auditory modality than in the visual modality for both meaning and rhyming tasks. This modality specificity suggests specialization for processing written word forms in visual association areas and spoken word forms in auditory association areas. This result is consistent with English studies that suggest a different neural focus in the left hemisphere for the orthographic and phonological lexicons in adults (Booth et al., 2002; Giraud & Price, 2001; Fujimaki et al., 1999; Binder et al., 1994; Nobre, Allison, & McCarthy, 1994; Howard et al., 1992; Petersen, Fox, Snyder, & Raichle, 1990). The right FG and the right STG showed a similar modality effect as these regions in the left hemisphere. Right FG activation has consistently been found in Chinese reading, and researchers have suggested its role in processing orthographically complex Chinese characters (Bolger et al., 2005; Tan et al., 2005). Right STG activation has also been found in Chinese speech processing (Xiao et al., 2005; Gandour et al., 2004; Wang, Sereno, Jongman, & Hirsch, 2003), and researchers have suggested its role in tonal pitch processing of the Chinese language (Zatorre & Gandour, 2008; Gandour et al., 2004).

Task-specific Areas: IPL

The present study showed that the left IPL was more activated in the rhyming task than in the meaning task independent of modality of presentation. The insensitivity to modality in IPL supports the long-held notion that this area is a heteromodal region (Mesulam, 1998). Studies of English generally agree that the left IPL is involved in phonological processing, but there is substantial disagreement about this region’s precise role. Some have argued it is involved in the conversion between orthography and phonology (Booth et al., 2002; Xu et al., 2001; Lurito, Kareken, Lowe, Chen, & Mathews, 2000; Pugh et al., 1996), and others have suggested that it is part of the phonological loop (Paulesu, Frith, & Frackowiak, 1993) or is involved in verbal working memory more generally (Zurowski et al., 2002; Smith, Jonides, & Koeppe, 1996). Studies of Chinese also generally support the role of the left IPL in phonological processing, but again there are various interpretations of the computations performed in this region. As with English, some have argued for its involvement in the conversion between orthography and phonology (Booth et al., 2006), and others have argued that it is involved in short-term maintenance of phonological codes (Tan et al., 2005). In addition, some have suggested that IPL is involved in the visuospatial analysis of complex Chinese characters when mapping from orthography to phonology (Dong et al., 2005).

Our results of greater activation in the left IPL for the rhyming compared with the meaning tasks, independent of modality of presentation, generally support the role of this region in phonological memory. The rhyming task requires the segmentation of the vowel from syllable level phonology because Chinese character phonology cannot be accessed through grapheme-to-phoneme correspondences but rather must be accessed through a syllable level mapping from orthography to phonology. This segmentation process may require the maintenance of phonological codes in memory to make a rhyming judgment. A recent meta-analysis suggested that the left inferior parietal cortex (i.e., supramarginal gyrus), close to the region showing greater activation for the rhyming tasks in our study, along with a frontal region makes up a phonological working memory loop (Vigneau et al., 2006). The greater activation for the rhyming compared with the meaning task in the left IPL does not seem to be due to greater demands on orthographic to phonological conversion processes for two reasons. First, the coordinates showing a task difference in our study are quite different from those found in studies examining the conflict between orthographic and phonological representations in rhyming tasks in the visual modality (Bitan, Burman, et al., 2007; Bitan, Cheon, et al., 2007). Second, we found effects in the left IPL for the visual as well as the auditory modality, although the latter does not require a mapping between orthography and phonology for correct performance. Moreover, studies of English that have examined rhyming in the auditory modality do not show activation in the left IPL (Cone, Burman, Bitan, Bolger, & Booth, in press). 
Finally, our results do not support the hypothesis that the left IPL is involved in visuospatial analysis of complex Chinese characters because the visual complexity of the visual rhyming task was comparable to that of the visual meaning task, yet the visual rhyming task produced greater activation in this region than the visual meaning task.

Task-specific Regions: DIFG and VIFG

Based on the English studies, researchers have proposed that the anterior ventral portion of the left IFG may be involved in semantic modulation, whereas the posterior dorsal portion of the left IFG may be involved in phonological modulation (Devlin et al., 2003; Poldrack et al., 1999; Fiez, 1997). Our results are consistent with the above English studies demonstrating greater activation for the meaning tasks in VIFG and greater activation for the rhyming tasks in DIFG. Our results are also consistent with our former study demonstrating these effects in Chinese in the visual modality (Booth et al., 2006). The present study extends these findings by showing that the functional dissociation between the dorsal and the ventral inferior frontal areas is modality independent.

The present study revealed that weaker association strength for the meaning tasks was significantly correlated with greater activation in the left VIFG (BA 45, 47) in both the visual and the auditory modalities. Chou, Booth, Bitan, et al. (2006) and Chou, Booth, Burman, et al. (2006) found a similar association strength effect in the left VIFG in English children in both the visual and the auditory modalities. The present study extends these findings by showing a similar association strength effect in both visual and auditory modality in Chinese. This is the first study to investigate how association strength in a semantic relatedness judgment task modulates brain activation in Chinese and suggests that the left VIFG is critically involved in semantic processing. Greater activation for weaker association pairs could result from increased demands on the retrieval or selection of appropriate semantic features (Chou, Booth, Bitan, et al., 2006; Chou, Booth, Burman, et al., 2006).

The present study also revealed that weaker association strength for the meaning tasks was correlated with greater activation in the left DIFG (BA 9) for both the visual and the auditory modalities. In general, DIFG (BA 44/45) has been implicated in phonological processing in English (Fiez et al., 1999; Poldrack et al., 1999; Fiez, 1997); however, the portion of IFG (BA 9) showing the association effect in the present study is different from the region thought to be involved in phonological processing. In fact, our results are consistent with Fletcher, Shallice, and Dolan (2000), who found that semantic judgments in adults to weaker association pairs produced greater activation in the left DIFG (BA 46/9) as compared with stronger association pairs (Fletcher et al., 2000). BA 9 is believed to be involved in the representation and working memory of visuospatial and verbal information and in the coordination of cognitive resources in a central executive system (Courtney, Petit, Maisog, Ungerleider, & Haxby, 1998; D’Esposito et al., 1995; Petrides, Alivisatos, Meyer, & Evans, 1993). Siok, Perfetti, Jin, and Tan (2004) suggested that BA 9 coordinates and integrates visual-orthographic and semantic (and phonological) processes in verbal and spatial working memory in Chinese lexical processing (Siok et al., 2004). Greater activation for weaker association pairs in the dorsal part of IFG (BA 9) in the present study could result from increased demands on the cognitive resources or the integration of appropriate semantic features.

The present study also showed that word pairs with conflicting information in the rhyming task in the auditory, but not the visual modality, showed greater activation in the left DIFG (BA 9). These word pairs required an affirmative rhyming judgment because they contained the same segmental information (i.e., phonemes) despite the fact that they were spoken in different Chinese suprasegmental tones. Cone et al. (in press) found greater activation in the left DIFG for English word pairs with conflicting orthographic and phonological information (e.g., pint–mint) as compared with pairs with nonconflicting information (e.g., fall–ball) in a rhyming task in the auditory modality. Cone et al. suggested that the left DIFG may be involved in the strategic phonological processing in the face of conflicting orthographic and phonological segmental representations. In the present study, the left DIFG may also be involved in the strategic phonological processing in the face of conflicting suprasegmental tone and segmental phoneme representations. This is the first study to investigate how conflicting tone-segment information modulates brain activation in Chinese and suggests that the left DIFG is critically involved in phonological processing.

Our finding of greater activation in DIFG for word pairs with conflicting tonal information is consistent with behavioral studies that have explored segmental and tonal priming effects in Chinese. Zhou and Zhuang (2000) found that character naming was facilitated when characters shared both segmental and tonal information with preceding picture names, whereas character naming was inhibited when characters shared segmental information with pictures but differed in tones (Zhou & Zhuang, 2000). Zhou and Zhuang also found inhibitory effects in lexical decision tasks when the prime and the target shared only segmental but not tonal information. Our study did not find greater activation in DIFG for word pairs with conflicting tonal information presented in the visual modality. This is inconsistent with an English study that found greater activation in the left DIFG for conflicting (e.g., pint–mint) compared with nonconflicting (e.g., fall–ball) conditions using a rhyming judgment task in the visual modality (Bitan, Cheon, et al., 2007). One possible explanation for the inconsistent results across languages is that activation of tonal information in the visual modality is relatively weak in Chinese as compared with the auditory modality. In support of this, Zhang and Yang (2005) and Chen, Chen, and Dell (2002) did not find a priming effect when the prime and the target were presented visually and shared only tonal information, although they did find an effect when the prime and the target shared tonal and segmental information (Zhang & Yang, 2005; Chen et al., 2002). The weaker effect of tones on visual word processing, as compared with auditory word processing, is also supported by Zhou (2000). That study found a tonal inhibitory effect only at a long SOA (357 msec) when both primes and targets were presented visually, whereas when primes were presented auditorily and targets were presented visually, the inhibitory effect existed even when targets were presented immediately at the offset of primes.

The present study also revealed that greater activation in the left DIFG was correlated with lower accuracy in the visual rhyming task. DIFG has been identified as a region involved in sublexical processing in English (Fiez et al., 1999). In English, the majority of words have consistent sublexical mappings from graphemes (letters) to phonemes (Coltheart, Curtis, Atkins, & Haller, 1993). In contrast, Chinese is a logographic writing system in which the characters do not have grapheme-to-phoneme correspondences. Chinese characters are read by mapping onto phonology at the syllable level. Although 85% of Chinese characters contain a phonetic component that can give information about the pronunciation, estimates of the validity of this information reveal that only 28% of phonetic components sound the same as their resultant whole characters (Tan et al., 2005). This means that reliance on the phonetic component for determining the pronunciation of the whole character will lead to incorrect pronunciations the majority of the time. The lower-accuracy participants in the present study may have relied inappropriately on DIFG to segment the phonetic component of the characters, which may have led to a greater number of errors on the visual rhyming task. Several behavioral studies have indicated that younger or less skilled children may inappropriately rely on the phonetic component for pronunciation. Yang and Peng (1995) showed that the regularity effect in Chinese character naming was smaller for sixth grade children compared with third grade children, suggesting that older children rely less on the phonetic components (Yang & Peng, 1995). Ho, Chan, Chung, Lee, and Tsang (2007) reported that most children (62%) with a reading disability in Chinese can be classified as surface dyslexics, demonstrated by high rates of phonetic and analogy errors (Ho et al., 2007).

Task- and Modality-specific Areas: MFG

Our results revealed that the left MFG was activated to a greater degree in the rhyming than meaning task and in the visual than auditory modality. Greater activation for the rhyming task may result from mappings between orthographic and phonological representations. For the visual modality, mapping from orthography to phonology is necessary for correct performance, whereas for the auditory modality mapping to written word forms may result from the interactive nature of orthographic and phonological representations even during spoken language processing (Chereau, Gaskell, & Dumay, 2007; Ziegler & Ferrand, 1998; Dijkstra, Roelofs, & Fieuws, 1995). Greater activation in MFG for the visual modality may also be due to the greater visuospatial analysis needed in the visual modality than in the auditory modality. Our interpretation is consistent with previous research that has suggested that the MFG is responsible for the visuospatial analysis of Chinese characters and the orthography-to-phonology mapping at the syllable level, which are demanded by the logographic and the monosyllabic nature of written Chinese (Tan et al., 2003, 2005; Siok et al., 2003, 2004; Tan, Feng, Fox, & Gao, 2001). Our study is the first to demonstrate significant differences between the visual and the auditory modality as well as between phonological and semantic processing in the left MFG.

Conclusion

In conclusion, this study demonstrated modality- and task-specific effects in Chinese in left hemisphere regions that are similar to those found in alphabetic languages. Modality specificity was shown in FG for visual word forms, suggesting its role in orthographic processing, and in STG for spoken word forms, suggesting its role in phonological processing. Task specificity was shown in VIFG for the meaning task. This area is probably involved in the retrieval and the selection of semantic representations, as greater activation in this area was correlated with weaker semantic association between words. Task specificity was also shown in IPL and DIFG for the rhyming task. Activation in DIFG likely reflects strategic phonological processing, as greater activation in this area was found for word pairs that had conflicting segmental (i.e., phonemes) and tonal information. Finally, we found that MFG was both modality and task specific, suggesting that this region is involved in the visuospatial analysis of Chinese characters and orthography-to-phonology integration at a syllabic level, demanded by the logographic and the monosyllabic nature of written Chinese. This is the first study to directly show both modality- and task-specific effects in Chinese and provides insight into the functional roles of different components of the language and reading network. Although two-character Chinese words constitute nearly 80% of Chinese words, whether the findings reported here for two-character words can be generalized to the reading of single-character words merits investigation in the future.

Acknowledgments

This research was supported by grants from the National Institute of Child Health and Human Development (HD042049) and the National Institute of Deafness and Other Communication Disorders (DC06149) to James R. Booth; by grants from the National Natural Science Foundation of China (No. 30670705) to Danling Peng; and by grants from the National Natural Science Foundation of China (No. 30600180) to Yan Song.

References

- Binder JR, Rao SM, Hammeke TA, Yetkin FZ, Jesmanowicz A, Bandettini PA, et al. Functional magnetic resonance imaging of human auditory cortex. Annals of Neurology. 1994;35:662–672. doi: 10.1002/ana.410350606.

- Bitan T, Burman DD, Chou TL, Lu D, Cone NE, Cao F, et al. The interaction between orthographic and phonological information in children: An fMRI study. Human Brain Mapping. 2007;28:880–891. doi: 10.1002/hbm.20313.

- Bitan T, Cheon J, Lu D, Burman DD, Gitelman DR, Mesulam MM, et al. Developmental changes in activation and effective connectivity in phonological processing. Neuroimage. 2007;38:564–575. doi: 10.1016/j.neuroimage.2007.07.048.

- Bolger DJ, Perfetti CA, Schneider W. Cross-cultural effect on the brain revisited: Universal structures plus writing system variation. Human Brain Mapping. 2005;25:92–104. doi: 10.1002/hbm.20124.

- Booth JR, Burman DD, Meyer JR, Gitelman DR, Parrish TB, Mesulam MM. Functional anatomy of intra- and cross-modal lexical tasks. Neuroimage. 2002;16:7–22. doi: 10.1006/nimg.2002.1081.

- Booth JR, Lu D, Burman DD, Chou TL, Jin Z, Peng DL, et al. Specialization of phonological and semantic processing in Chinese word reading. Brain Research. 2006;1071:197–207. doi: 10.1016/j.brainres.2005.11.097.

- Chee MW, Soon CS, Lee HL. Common and segregated neuronal networks for different languages revealed using functional magnetic resonance adaptation. Journal of Cognitive Neuroscience. 2003;15:85–97. doi: 10.1162/089892903321107846.

- Chee MW, Weekes B, Lee KM, Soon CS, Schreiber A, Hoon JJ, et al. Overlap and dissociation of semantic processing of Chinese characters, English words, and pictures: Evidence from fMRI. Neuroimage. 2000;12:392–403. doi: 10.1006/nimg.2000.0631.

- Chen JY, Chen TM, Dell GS. Word-form encoding in Mandarin Chinese as assessed by the implicit priming task. Journal of Memory and Language. 2002;46:751–781.

- Chereau C, Gaskell MG, Dumay N. Reading spoken words: Orthographic effects in auditory priming. Cognition. 2007;102:341–360. doi: 10.1016/j.cognition.2006.01.001.

- Chou TL, Booth JR, Bitan T, Burman DD, Bigio JD, Cone NE, et al. Developmental and skill effects on the neural correlates of semantic processing to visually presented words. Human Brain Mapping. 2006;27:915–924. doi: 10.1002/hbm.20231.

- Chou TL, Booth JR, Burman DD, Bitan T, Bigio JD, Lu D, et al. Developmental changes in the neural correlates of semantic processing. Neuroimage. 2006;29:1141–1149. doi: 10.1016/j.neuroimage.2005.09.064.

- Coltheart M, Curtis B, Atkins P, Haller M. Models of reading aloud: Dual-route and parallel-distributed-processing approaches. Psychological Review. 1993;100:589–608.

- Cone NE, Burman DD, Bitan T, Bolger DJ, Booth JR. Developmental changes in brain regions involved in phonological and orthographic processing during spoken language processing. Neuroimage. 41:623–635. doi: 10.1016/j.neuroimage.2008.02.055. (in press)

- Courtney SM, Petit L, Maisog JM, Ungerleider LG, Haxby JV. An area specialized for spatial working memory in human frontal cortex. Science. 1998;279:1347–1351. doi: 10.1126/science.279.5355.1347.

- D’Esposito M, Detre JA, Alsop DC, Shin RK, Atlas S, Grossman M. The neural basis of the central executive system of working memory. Nature. 1995;378:279–281. doi: 10.1038/378279a0.

- Devlin JT, Matthews PM, Rushworth MF. Semantic processing in the left inferior prefrontal cortex: A combined functional magnetic resonance imaging and transcranial magnetic stimulation study. Journal of Cognitive Neuroscience. 2003;15:71–84. doi: 10.1162/089892903321107837.

- Dijkstra T, Roelofs A, Fieuws S. Orthographic effects on phoneme monitoring. Canadian Journal of Experimental Psychology. 1995;49:264–271. doi: 10.1037/1196-1961.49.2.264.

- Dong Y, Nakamura K, Okada T, Hanakawa T, Fukuyama H, Mazziotta JC, et al. Neural mechanisms underlying the processing of Chinese words: An fMRI study. Neuroscience Research. 2005;52:139–145. doi: 10.1016/j.neures.2005.02.005.

- Fiez JA. Phonology, semantics, and the role of the left inferior prefrontal cortex. Human Brain Mapping. 1997;5:79–83.

- Fiez JA, Balota DA, Raichle ME, Petersen SE. Effects of lexicality, frequency, and spelling-to-sound consistency on the functional anatomy of reading. Neuron. 1999;24:205–218. doi: 10.1016/s0896-6273(00)80833-8.

- Fletcher PC, Shallice T, Dolan RJ. “Sculpting the response space”—An account of left prefrontal activation at encoding. Neuroimage. 2000;12:404–417. doi: 10.1006/nimg.2000.0633.

- Flowers DL, Wood FB, Naylor CE. Regional cerebral blood flow correlates of language processes in reading disability. Archives of Neurology. 1991;48:637–643. doi: 10.1001/archneur.1991.00530180095023.

- Fu S, Chen Y, Smith S, Iversen S, Matthews PM. Effects of word form on brain processing of written Chinese. Neuroimage. 2002;17:1538–1548. doi: 10.1006/nimg.2002.1155.

- Fujimaki N, Miyauchi S, Putz B, Sasaki Y, Takino R, Sakai K, et al. Functional magnetic resonance imaging of neural activity related to orthographic, phonological, and lexico-semantic judgments of visually presented characters and words. Human Brain Mapping. 1999;8:44–59. doi: 10.1002/(SICI)1097-0193(1999)8:1<44::AID-HBM4>3.0.CO;2-#.

- Gandour J, Tong Y, Wong D, Talavage T, Dzemidzic M, Xu Y, et al. Hemispheric roles in the perception of speech prosody. Neuroimage. 2004;23:344–357. doi: 10.1016/j.neuroimage.2004.06.004.

- Giraud AL, Price CJ. The constraints functional neuroimaging places on classical models of auditory word processing. Journal of Cognitive Neuroscience. 2001;13:754–765. doi: 10.1162/08989290152541421.

- Ho CS, Chan DW, Chung KK, Lee SH, Tsang SM. In search of subtypes of Chinese developmental dyslexia. Journal of Experimental Child Psychology. 2007;97:61–83. doi: 10.1016/j.jecp.2007.01.002.

- Howard D, Patterson K, Wise R, Brown WD, Friston K, Weiller C, et al. The cortical localization of the lexicons. Positron emission tomography evidence. Brain. 1992;115:1769–1782. doi: 10.1093/brain/115.6.1769.

- Kuo WJ, Yeh TC, Lee JR, Chen LF, Lee PL, Chen SS, et al. Orthographic and phonological processing of Chinese characters: An fMRI study. Neuroimage. 2004;21:1721–1731. doi: 10.1016/j.neuroimage.2003.12.007.

- Lee BC, Kuppusamy K, Grueneich R, El-Ghazzawy O, Gordon RE, Lin W, et al. Hemispheric language dominance in children demonstrated by functional magnetic resonance imaging. Journal of Child Neurology. 1999;14:78–82. doi: 10.1177/088307389901400203.

- Lu B, editor. Modern Beijing spoken Chinese corpus. Beijing: Language Education Institute, Beijing Language University; 1993.

- Luke KK, Liu HL, Wai YY, Wan YL, Tan LH. Functional anatomy of syntactic and semantic processing in language comprehension. Human Brain Mapping. 2002;16:133–145. doi: 10.1002/hbm.10029.

- Lurito JT, Kareken DA, Lowe MJ, Chen SH, Mathews VP. Comparison of rhyming and word generation with fMRI. Human Brain Mapping. 2000;10:99–106. doi: 10.1002/1097-0193(200007)10:3<99::AID-HBM10>3.0.CO;2-Q.

- Mesulam MM. From sensation to cognition. Brain. 1998;121:1013–1052. doi: 10.1093/brain/121.6.1013.

- Nobre AC, Allison T, McCarthy G. Word recognition in the human inferior temporal lobe. Nature. 1994;372:260–263. doi: 10.1038/372260a0.

- Oldfield RC. The assessment and analysis of handedness: The Edinburgh Inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4.

- Paulesu E, Frith CD, Frackowiak RS. The neural correlates of the verbal component of working memory. Nature. 1993;362:342–345. doi: 10.1038/362342a0.

- Peng DL, Xu SY, Ding GS, Li EZ, Liu Y. Brain mechanism for the phonological and semantic processing of Chinese character. Chinese Journal of Neuroscience. 2003;19:287–291.

- Petersen SE, Fox PT, Snyder AZ, Raichle ME. Activation of extrastriate and frontal cortical areas by visual words and word-like stimuli. Science. 1990;249:1041–1044. doi: 10.1126/science.2396097.

- Petrides M, Alivisatos B, Meyer E, Evans AC. Functional activation of the human frontal cortex during the performance of verbal working memory tasks. Proceedings of the National Academy of Sciences, USA. 1993;90:878–882. doi: 10.1073/pnas.90.3.878.

- Poldrack RA, Wagner AD, Prull MW, Desmond JE, Glover GH, Gabrieli JD. Functional specialization for semantic and phonological processing in the left inferior prefrontal cortex. Neuroimage. 1999;10:15–35. doi: 10.1006/nimg.1999.0441.

- Pugh KR, Shaywitz BA, Shaywitz SE, Constable RT, Skudlarski P, Fulbright RK, et al. Cerebral organization of component processes in reading. Brain. 1996;119:1221–1238. doi: 10.1093/brain/119.4.1221.

- Siok WT, Jin Z, Fletcher P, Tan LH. Distinct brain regions associated with syllable and phoneme. Human Brain Mapping. 2003;18:201–207. doi: 10.1002/hbm.10094.

- Siok WT, Perfetti CA, Jin Z, Tan LH. Biological abnormality of impaired reading is constrained by culture. Nature. 2004;431:71–76. doi: 10.1038/nature02865.

- Smith EE, Jonides J, Koeppe RA. Dissociating verbal and spatial working memory using PET. Cerebral Cortex. 1996;6:11–20. doi: 10.1093/cercor/6.1.11.

- Sugishita M, Takayama Y, Shiono T, Yoshikawa K, Takahashi Y. Functional magnetic resonance imaging (fMRI) during mental writing with phonograms. NeuroReport. 1996;7:1917–1921. doi: 10.1097/00001756-199608120-00009.

- Tan LH, Feng CM, Fox PT, Gao JH. An fMRI study with written Chinese. NeuroReport. 2001;12:83–88. doi: 10.1097/00001756-200101220-00024.

- Tan LH, Laird AR, Li K, Fox PT. Neuroanatomical correlates of phonological processing of Chinese characters and alphabetic words: A meta-analysis. Human Brain Mapping. 2005;25:83–91. doi: 10.1002/hbm.20134.

- Tan LH, Liu HL, Perfetti CA, Spinks JA, Fox PT, Gao JH. The neural system underlying Chinese logograph reading. Neuroimage. 2001;13:836–846. doi: 10.1006/nimg.2001.0749.

- Tan LH, Spinks JA, Feng CM, Siok WT, Perfetti CA, Xiong J, et al. Neural systems of second language reading are shaped by native language. Human Brain Mapping. 2003;18:158–166. doi: 10.1002/hbm.10089.

- Tan LH, Spinks JA, Gao JH, Liu HL, Perfetti CA, Xiong J, et al. Brain activation in the processing of Chinese characters and words: A functional MRI study. Human Brain Mapping. 2000;10:16–27. doi: 10.1002/(SICI)1097-0193(200005)10:1<16::AID-HBM30>3.0.CO;2-M.

- Vigneau M, Beaucousin V, Herve PY, Duffau H, Crivello F, Houde O, et al. Meta-analyzing left hemisphere language areas: Phonology, semantics, and sentence processing. Neuroimage. 2006;30:1414–1432. doi: 10.1016/j.neuroimage.2005.11.002.

- Wang H, Chang RB, Li YS, editors. Modern Chinese frequency dictionary. Beijing: Beijing Language University Press; 1985.

- Wang Y, Sereno JA, Jongman A, Hirsch J. fMRI evidence for cortical modification during learning of Mandarin lexical tone. Journal of Cognitive Neuroscience. 2003;15:1019–1027. doi: 10.1162/089892903770007407.

- Xiao Z, Zhang JX, Wang X, Wu R, Hu X, Weng X, et al. Differential activity in the left inferior frontal gyrus for pseudowords and real words: An event-related fMRI study on auditory lexical decision. Human Brain Mapping. 2005;25:212–221. doi: 10.1002/hbm.20105.

- Xu B, Grafman J, Gaillard WD, Ishii K, Vega-Bermudez F, Pietrini P, et al. Conjoint and extended neural networks for the computation of speech codes: The neural basis of selective impairment in reading words and pseudowords. Cerebral Cortex. 2001;11:267–277. doi: 10.1093/cercor/11.3.267.

- Yang H, Peng DL. The learning and naming of Chinese characters of elementary school children. The 7th International Conference on the Cognitive Processing of Chinese and Other Asian Languages; Hong Kong. 1995.

- Zatorre RJ, Gandour JT. Neural specializations for speech and pitch: Moving beyond the dichotomies. Philosophical Transactions of the Royal Society of London, Series B, Biological Sciences. 2008;363:1087–1104. doi: 10.1098/rstb.2007.2161.

- Zhang QF, Yang YF. The phonological planning unit in Chinese monosyllabic word production. Psychological Science. 2005;28:374–378.

- Zhou, Zhuang J. Lexical tone in the speech production of Chinese words. Paper presented at the ICSLP; 2000.

- Ziegler JC, Ferrand L. Orthography shapes the perception of speech: The consistency effect in auditory word recognition. Psychonomic Bulletin & Review. 1998;5:683–689.

- Zurowski B, Gostomzyk J, Gron G, Weller R, Schirrmeister H, Neumeier B, et al. Dissociating a common working memory network from different neural substrates of phonological and spatial stimulus processing. Neuroimage. 2002;15:45–57. doi: 10.1006/nimg.2001.0968.