Abstract

Reward has been shown to promote human performance in multiple task domains. However, an important debate has developed about the uniqueness of reward-related neural signatures associated with such facilitation, as similar neural patterns can be triggered by increased attentional focus independent of reward. Here, we used functional magnetic resonance imaging to directly investigate the neural commonalities and interactions between the anticipation of both reward and task difficulty, by independently manipulating these factors in a cued-attention paradigm. In preparation for the target stimulus, both factors increased activity within the midbrain, dorsal striatum, and fronto-parietal areas, while inducing deactivations in default-mode regions. Additionally, reward engaged the ventral striatum, posterior cingulate, and occipital cortex, while difficulty engaged medial and dorsolateral frontal regions. Importantly, a network comprising the midbrain, caudate nucleus, thalamus, and anterior midcingulate cortex exhibited an interaction between reward and difficulty, presumably reflecting additional resource recruitment for demanding tasks with profitable outcome. This notion was consistent with a negative correlation between cue-related midbrain activity and difficulty-induced performance detriments in reward-predictive trials. Together, the data demonstrate that expected value and attentional demands are integrated in cortico-striatal-thalamic circuits in coordination with the dopaminergic midbrain to flexibly modulate resource allocation for an effective pursuit of behavioral goals.

Keywords: attention, fMRI, midbrain, reward, task demands

Introduction

Reward is known to effectively facilitate behavior and promote learning (Wise 2004). Mesolimbic responses to reward, including in the dopaminergic midbrain and the ventral striatum, are considered to be crucial for the acquisition of stimulus–reward associations (Schultz 2004). Once a stimulus–reward association is established, mesolimbic neural responses to reward-predicting stimuli are thought to reflect the magnitude and probability of the anticipated reward (Schultz 1997; O'Doherty et al. 2003; Wittmann et al. 2005; Knutson and Cooper 2005; D'Ardenne et al. 2008; Schott et al. 2008).

While it has been theorized that motivation modulates attention and other cognitive-control processes (e.g., Small et al. 2005; Braver et al. 2007; Locke and Braver 2008; Croxson et al. 2009; Engelmann et al. 2009), the mechanisms by which reward-driven behavioral facilitation is accomplished are not fully understood. More recently, a debate developed about the uniqueness of the neural signatures of reward-related facilitation, which may help illuminate the mechanisms involved (e.g., Maunsell 2004; Bendiksby and Platt 2006; Pessoa and Engelmann 2010). In particular, it has been proposed that highly similar neural processes are engaged in response to the anticipation of attentionally demanding tasks, entirely independent of any reward prospect (Nieoullon 2002; Salamone et al. 2005; Arnsten et al. 2009). In line with this notion, recent observations in humans suggest that the dopaminergic midbrain, in conjunction with cortical control regions, is involved in flexible behavioral adjustments (Boehler, Bunzeck, et al. 2011) and resource recruitment (Boehler, Hopf, et al. 2011), even in the absence of reward. Furthermore, animal research shows that the dopaminergic midbrain is not only sensitive to reward-related stimuli but also to other salient events including alerting and aversive stimuli (reviewed in Bromberg-Martin et al. 2010). Together, these observations suggest that the role of the dopaminergic midbrain goes beyond the mere reflection of incentive value of a given situation, but rather may serve a more complex function in which stimulus valuation and situational processing requirements are integrated, presumably in coordination with other subcortical and cortical circuits. Such a broader role is further supported by findings indicating that the cognitive symptoms in numerous neurological and psychiatric disorders are related to pathological changes in the dopaminergic system including Parkinson’s disease and addiction (Nieoullon 2002; Everitt and Robbins 2005).

While the above findings provide evidence for the view that reward may act by utilizing processing routes of attentional and executive control systems, a systematic investigation of this proposed overlap, as well as the potential interaction between the 2 factors, is lacking. With the present functional magnetic resonance imaging (fMRI) study, we sought to pin down neural processes that are shared and distinct between reward-dependent and reward-independent resource recruitment. Specifically, we employed a spatial attention task in which a cue predicted the location and the difficulty level (hard vs. easy) of the upcoming target, as well as the potential to win money in that trial (reward vs. no-reward). By focusing on the cue phase, which was temporally separated from the actual task execution, we were able to distinguish neural responses reflecting anticipated reward and anticipated difficulty level. Crucially, the brief and temporally unpredictable presentation of the subsequent target required the participants to immediately prepare for the task upon cue presentation, thereby prompting attentional orienting, as well as the pre-target recruitment of cognitive resources. With this design, we could assess the neural underpinnings of the effects of both reward prospect and attentional demand on cognitive resource recruitment and their potential interaction.

Materials and Methods

Participants and Paradigm

Fourteen healthy right-handed participants performed a cued visual discrimination task inside the fMRI scanner (mean age ± standard deviation: 21.7 ± 3.2 years; 10 female). Three additional participants had to be excluded from the analysis due to poor performance in the discrimination task (1) or poor visual fixation (2). All participants gave written informed consent in accordance with the Duke Medical Center Institutional Review Board for human subjects and were paid a basic amount of $40 for 2 h plus a performance-dependent reward bonus that averaged about $15.

As per an instructional arrow cue at the beginning of each trial, participants were asked to shift their attention covertly to the left or right visual field and to respond quickly to a subsequent target at the cued location (Fig. 1A). Throughout all experimental runs, a small gray fixation square (0.5°) was visible in the center of a black screen, as well as 2 gray placeholder frames in the left and right lower visual field (6° below and 6° lateral of fixation). The arrow cue was presented for 800 ms and could either be blue or green, thereby signaling potential-reward and no-reward trials, respectively. Furthermore, a black or white square embedded in the arrow indicated whether the discrimination difficulty of the upcoming target would be hard or easy, respectively. Reward-predicting as well as difficulty-predicting colors were counterbalanced across participants.

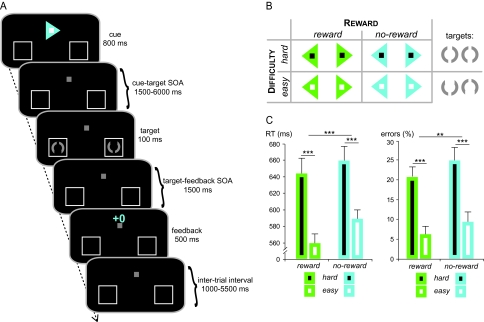

Figure 1.

Paradigm and task performance. (A) Each trial started with a colored arrow cue pointing to the target location. Additionally, the cue indicated the difficulty level of the upcoming target discrimination, as well as the potential to win money for accurate performance. After a variable SOA, the target display was briefly presented and participants decided via button press which gap in the target circle was bigger (top vs. bottom). Each trial ended with a visually presented performance feedback. (B) The potential to win money in the current trial (factor Reward) was indicated by the color of the arrow cue (e.g., green = reward vs. blue = no-reward), while the difficulty level (factor Difficulty) was indicated by the color of the embedded square (e.g., black = hard vs. white = easy). (C) In terms of the target discrimination performance, both RTs and error rates were affected by Reward and Difficulty, albeit, as expected, in opposite directions. Error bars depict the standard error of the mean across subjects; asterisks indicate significant main effects (***P < 0.001; **P < 0.01).

After a variable delay, a bilateral stimulus pair was presented in the placeholder boxes for 100 ms, and participants performed a discrimination task on the stimulus in the cued visual hemifield. The opposite-hemifield stimulus was included to avoid exogenous attentional capture at the time of the target appearance. The stimulus onset asynchrony (SOA) between cue and target was pseudorandomly varied between 1500 and 6000 ms (average SOA = 2500 ms) to promote an effective event-related blood oxygen level–dependent (BOLD)-response estimation (Hinrichs et al. 2000). The target stimuli were gray circles (radius 1°) with 2 gaps opposite from one another that had to be discriminated regarding their size. Participants indicated which gap was larger by pressing the right index finger for “top gap” and the right middle finger for “bottom gap.” For easy targets, the larger gap subtended a directional angle of 90° versus 40° for hard targets, which therefore affected how hard it was to distinguish it from the opposite smaller gap (20°). The design resulted in a total of 4 main cueing conditions, after collapsing across left and right (Fig. 1B; “no-reward easy,” “no-reward hard,” “reward easy,” and “reward hard”).

The target display was followed by a visual feedback stimulus (duration = 500 ms; SOA = 1500 ms) indicating whether the response was accurate and fast enough. A variable inter-trial interval of 1000–5500 ms was introduced to separate the feedback from the subsequent cue. Notably, in order to prevent a major asymmetry between conditions, the performance feedback was always informative in both reward and no-reward trials. In potential-reward trials, the feedback additionally indicated a gain or loss of 10 cents (“+10” vs. “−10”), whereas the feedback in no-reward trials was accompanied by zeros (“+0” vs. “−0”). The response time-out was adjusted dynamically during the experiment based on the individual response-time data to ensure a stable positive/negative outcome ratio of 75% to 25% for each participant. This procedure led to a mean gain of $2.50 per run for each participant. At the end of each run, the updated dollar amount was displayed, serving as additional performance feedback. Again, while participants did not receive monetary feedback in no-reward trials, the routine to provide comparable positive/negative feedback rates was analogous to reward trials, so that effects would not be driven merely by the presence or absence of cognitive performance feedback (Daniel and Pollmann 2010). Importantly, this dynamic adjustment of the response time-out only affected the visual feedback during the experiment, whereas the behavioral analyses (response time [RT] and error rates) were performed based on the actual responses within a window of 150–1200 ms after target onset.
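The text does not specify the rule used to adjust the response time-out. As a minimal sketch only, one simple way to hold the positive-feedback rate near 75% is to set the deadline to the corresponding empirical quantile of a participant's recent RTs. The function name, the nearest-rank quantile, and the default deadline are all assumptions made for illustration; error trials are ignored here for simplicity.

```python
import math

def update_deadline(recent_rts_ms, target_positive=0.75, default_ms=1000.0):
    """Return a response deadline (ms) such that roughly `target_positive`
    of the recent responses would have counted as fast enough.

    Hypothetical rule for illustration; not the procedure used in the study.
    """
    if not recent_rts_ms:
        # No data yet: fall back to an arbitrary starting deadline.
        return default_ms
    ordered = sorted(recent_rts_ms)
    # Nearest-rank empirical quantile.
    rank = max(1, math.ceil(target_positive * len(ordered)))
    return float(ordered[rank - 1])

# With four recent RTs, three of them (75%) fall at or below the deadline.
deadline = update_deadline([400, 500, 600, 700])
```

Because the deadline tracks each participant's own RT distribution, faster participants face a stricter criterion, which keeps the feedback ratio comparable across individuals and conditions.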

fMRI Data Acquisition

Prior to actual scanning, participants performed a 10-minute training session to familiarize themselves with the task. Inside the scanner, participants performed six 9-minute runs, yielding a total of 114 trials in each experimental condition. Each run included 3 brief performance breaks during the ongoing scanning. fMRI images were acquired using a 3-T GE EXCITE HD scanner with an 8-channel head-coil array. Each functional run consisted of 344 images acquired in an axial slice orientation (30 slices, 3-mm thickness) using an interleaved scanning order (inward-spiral sequence with SENSE acceleration factor of 2, repetition time [TR] = 1.5 s, echo time [TE] = 25 ms, field of view [FOV] = 192 mm, matrix size of 64 × 64 yielding an in-plane resolution of 3 × 3 mm). The first 5 volumes of each run were discarded to reach steady magnetization.

For each participant, a T1-weighted high-resolution whole-brain anatomical scan (3D FSPGR sequence, FOV = 256 mm, voxel size of 1 × 1 × 1 mm³) and a high-resolution T2-weighted anatomical scan (FOV = 256 mm, voxel size of 1 × 1 × 1 mm³) were acquired to enable spatial coregistration and normalization, as well as to localize subregions of the midbrain, respectively. Participants were instructed to keep accurate central fixation throughout the task and were monitored online using an MR-compatible eye-tracking system (Viewpoint, Arrington Research, Scottsdale, AZ).

fMRI Data Analysis

Images were preprocessed and analyzed using the Statistical Parametric Mapping software package SPM5 (Wellcome Trust Centre for Neuroimaging, University College, London, UK). Anatomical images were co-registered to the SPM template and spatially normalized using the gray- and white-matter segmentation routine implemented in SPM5. Functional images were corrected for acquisition delay, spatially realigned, co-registered to the original T1-weighted image, and spatially normalized using the parameters used to warp this anatomical image onto the template. After reslicing to a final voxel size of 2 × 2 × 2 mm, functional images were smoothed with an isotropic 6-mm full-width half-maximum Gaussian kernel. A high-pass temporal filter of 128 s was applied during model estimation (Ashburner and Friston 1999).

A 2-stage model was used for statistical analysis (Friston et al. 1995). For each experimental condition, BOLD responses were modeled by delta functions at the stimulus onsets for all event types, which were then all convolved with a canonical hemodynamic response function (HRF). The resulting parameters together with the corresponding temporal and dispersion derivatives as well as 6 realignment parameters for each run formed covariates of a general linear model (GLM, Friston et al. 1995). By decorrelating the cue and target phase, the present design enabled isolation of the activity related to the cue from the activity due to all other events (targets, feedback, and breaks), which were modeled as regressors of no interest. Individual participants’ contrast images were entered into a repeated-measures analysis of variance (rANOVA; false discovery rate [FDR]–corrected significance threshold P < 0.05, voxel-extent threshold k > 15) with factors “Reward” and “Difficulty” as implemented in SPM5 to investigate the main effects and interaction between the 2 factors. In order to define overlapping activations and deactivations associated with both reward and difficulty, the main effects were subsequently submitted to a conjunction analysis (FDR-corrected P < 0.05, voxel-extent threshold k > 15).
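The construction of a single event regressor can be sketched as follows. This is a simplified illustration rather than the SPM5 implementation: it samples a double-gamma HRF directly at the TR (SPM works at a finer time resolution), omits the temporal and dispersion derivatives and the realignment regressors, and uses hypothetical function names and onsets. The gamma shape parameters (6 and 16, with an undershoot ratio of 1/6) are the commonly used canonical values and are assumed here.

```python
import math

def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density; zero for non-positive t."""
    if t <= 0:
        return 0.0
    return (t ** (shape - 1) * math.exp(-t / scale)) / (
        math.gamma(shape) * scale ** shape
    )

def canonical_hrf(tr, duration=32.0):
    """Double-gamma HRF sampled every `tr` seconds, normalized to unit sum."""
    n = int(duration / tr) + 1
    hrf = [gamma_pdf(i * tr, 6.0) - gamma_pdf(i * tr, 16.0) / 6.0 for i in range(n)]
    total = sum(hrf)
    return [h / total for h in hrf]

def build_regressor(onsets_s, n_scans, tr):
    """Convolve delta (stick) functions at the event onsets with the HRF."""
    sticks = [0.0] * n_scans
    for onset in onsets_s:
        idx = int(round(onset / tr))  # nearest scan to the event onset
        if 0 <= idx < n_scans:
            sticks[idx] += 1.0
    hrf = canonical_hrf(tr)
    regressor = [0.0] * n_scans
    for i, s in enumerate(sticks):
        if s == 0.0:
            continue
        for j, h in enumerate(hrf):
            if i + j < n_scans:
                regressor[i + j] += s * h
    return regressor

# Hypothetical cue onsets (s) for one condition, TR = 1.5 s as in the study.
cue_regressor = build_regressor([0.0, 12.0], n_scans=20, tr=1.5)
```

One such regressor per event type (cues by condition, targets, feedback, breaks) would form the columns of the design matrix. Jittering the cue-target SOA is what keeps the cue and target columns sufficiently decorrelated to be estimated separately.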

To further illustrate the rANOVA results, we extracted the mean BOLD signal changes from selected regions of interest (ROIs) using the MarsBaR analysis toolbox (Brett et al. 2002). ROIs were defined as spheres centered at the local activity maxima derived from the conjunction between the 2 voxel-wise main effects, as well as from the selective main effects and their interaction (radius 4 mm; within midbrain 2 mm). It should be emphasized that these ROI-based values serve to illustrate the overlapping main effects of anticipated reward and anticipated difficulty rather than being statistically inferential (Kriegeskorte et al. 2009). Furthermore, the observed main effects in regions that concomitantly exhibited a voxel-wise interaction should be interpreted in the light of this higher order effect. It is important to note that the analysis of the imaging data was entirely focused on the cue phase in order to investigate attentional orienting and resource recruitment during the anticipation of a target independent of the actual execution of the discrimination task.
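To give a sense of how small these ROIs are, the sketch below enumerates the voxel centers that fall inside a sphere on the 2-mm grid used after reslicing. It assumes the peak coordinate lies exactly on a voxel center and counts a voxel when its center is within the radius. MarsBaR's own inclusion rule may differ, and the function name is hypothetical.

```python
def sphere_voxels(center_mm, radius_mm, voxel_size_mm=2.0):
    """Return voxel-center coordinates (mm) within `radius_mm` of `center_mm`,
    assuming an isotropic grid aligned with the center coordinate."""
    cx, cy, cz = center_mm
    steps = int(radius_mm // voxel_size_mm)
    voxels = []
    for dx in range(-steps, steps + 1):
        for dy in range(-steps, steps + 1):
            for dz in range(-steps, steps + 1):
                ox = dx * voxel_size_mm
                oy = dy * voxel_size_mm
                oz = dz * voxel_size_mm
                # Keep the voxel if its center lies inside the sphere.
                if ox * ox + oy * oy + oz * oz <= radius_mm * radius_mm:
                    voxels.append((cx + ox, cy + oy, cz + oz))
    return voxels

# Example peak from the interaction analysis (SN/VTA, MNI 4, -12, -12).
midbrain_roi = sphere_voxels((4, -12, -12), radius_mm=2.0)   # 7 voxels
cortical_roi = sphere_voxels((10, 20, 40), radius_mm=4.0)    # 33 voxels
```

Under these assumptions, a 2-mm midbrain sphere covers only the peak voxel and its six face neighbors, which is in keeping with the small size of the SN/VTA complex.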

Results

Behavioral Results

Most participants maintained very accurate fixation, and no differential effects of eye-position deviations were observed between conditions. However, 2 additional participants had to be excluded from further analyses due to extensive eye movements (see Materials and Methods).

Initially, RT and accuracy data were submitted to a 2 × 2 × 2 rANOVA with factors Reward, Difficulty, and Side (target in left vs. right visual field). Since there was no interaction between any of the main factors and Side (all P > 0.3), the data were collapsed across target side and tested via 2 × 2 rANOVAs. Target responses were faster and more accurate following reward as compared with no-reward cues (RT: F(1,13) = 24.58, P < 0.001; error rate: F(1,13) = 19.93, P = 0.001). Furthermore, on difficult as compared with easy targets, RTs were significantly slower (F(1,13) = 59.99, P < 0.001) and participants committed more errors (F(1,13) = 87.33, P < 0.001). The reward-related RT decrease was more pronounced for easy targets, which was reflected in an interaction between Reward and Difficulty (F(1,13) = 9.05, P = 0.01) and confirmed by a significant post hoc t-test comparing the RT difference between easy versus hard targets in reward and no-reward trials, respectively (t(13) = 3.01, P = 0.01). No interaction effect was observed regarding error rates (P > 0.5).
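In a 2 × 2 within-subject design, the interaction F statistic equals the square of the paired t statistic computed on the per-participant difference of differences, which is why the reported interaction F of 9.05 and post hoc t of 3.01 agree (3.01² ≈ 9.06). The sketch below illustrates the computation; the function names and RT values are hypothetical and do not come from the study.

```python
import math

def paired_t(x, y):
    """Paired-samples t statistic for x minus y (df = n - 1)."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    mean = sum(diffs) / n
    var = sum((d - mean) ** 2 for d in diffs) / (n - 1)
    return mean / math.sqrt(var / n)

def interaction_t(rew_easy, rew_hard, norew_easy, norew_hard):
    """t statistic for the Reward x Difficulty interaction on per-subject means.

    Compares the difficulty cost (hard minus easy) between reward and
    no-reward trials; squaring it gives the interaction F(1, n - 1).
    """
    cost_rew = [h - e for h, e in zip(rew_hard, rew_easy)]
    cost_norew = [h - e for h, e in zip(norew_hard, norew_easy)]
    return paired_t(cost_rew, cost_norew)

# Made-up mean RTs (ms) for three participants, for illustration only.
t_val = interaction_t(
    rew_easy=[400, 400, 400], rew_hard=[430, 450, 440],
    norew_easy=[420, 420, 420], norew_hard=[440, 450, 430],
)
f_val = t_val ** 2  # equivalent interaction F(1, 2) for this toy data set
```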

Cue-Related Modulations of Reward and Difficulty Anticipation

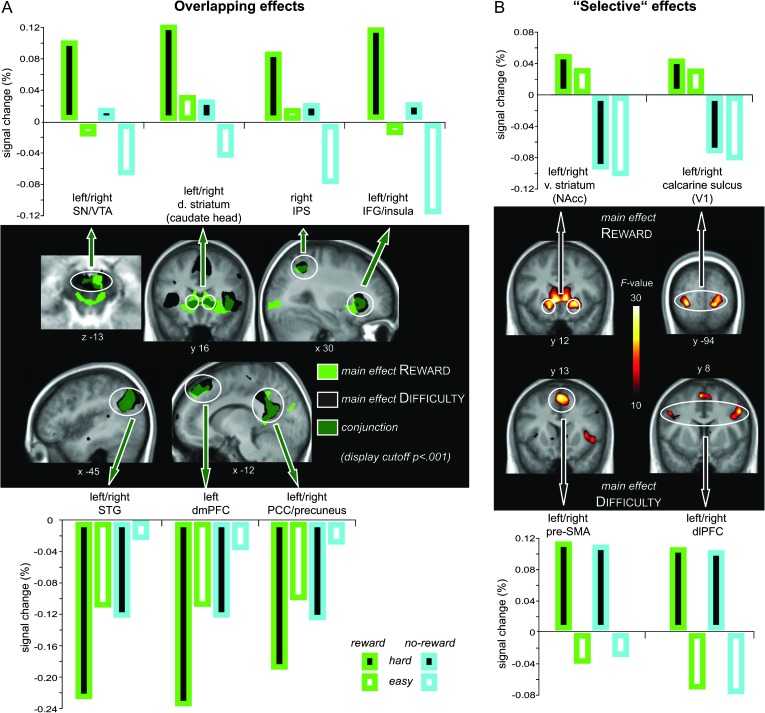

The whole-brain rANOVA focusing on the cue phase revealed main effects of both reward and difficulty anticipation in a widespread network of cortical and subcortical regions (Tables 1 and 2). The most prominent activations associated with reward as compared with no-reward cues included the dorsal striatum (encompassing both the caudate head and body), the ventral striatum (encompassing the nucleus accumbens [NAcc] and ventral putamen), the mesencephalic substantia nigra/ventral tegmental area (SN/VTA) complex, inferior frontal gyrus (IFG) and anterior insula, anterior midcingulate cortex (aMCC), intraparietal sulcus (IPS), posterior cingulate cortex (PCC), calcarine sulcus (V1), posterior thalamus, and superior colliculi (for a complete overview, see Table 1). In addition, significantly larger deactivations for reward as compared with no-reward cues were found in regions of the default-mode network, including the superior temporal gyrus (STG), the dorsomedial and ventromedial prefrontal cortex (dmPFC, vmPFC), the caudal PCC and precuneus region, and the hippocampus.

Table 1.

Activity clusters associated with the anticipation of Reward

| Region | L/R | k | MNI x | MNI y | MNI z | F value |
| --- | --- | --- | --- | --- | --- | --- |
| Main effect Reward | | | | | | |
| Dorsal striatum (caudate head) | L/R | 1471 | 8 | 16 | 2 | 53.5 |
| Dorsal striatum (caudate head) | | | −6 | 14 | 0 | 40.3 |
| Thalamus/pulvinar | | | 4 | −20 | 14 | 39.3 |
| Ventral striatum (nucleus accumbens, NAcc) | | | −8 | 16 | −4 | 38.9 |
| IFG (inferior frontal gyrus)/anterior insula | | | 44 | 20 | 0 | 36.6 |
| Ventral striatum (NAcc) | | | 20 | 10 | −6 | 35.7 |
| Dorsal striatum (caudate body) | | | 10 | 2 | 10 | 32.5 |
| Ventral striatum (NAcc) | | | −14 | 14 | −10 | 29.2 |
| Putamen | | | 26 | 16 | −8 | 26.0 |
| Dorsal striatum (caudate body) | | | −4 | −2 | 10 | 25.7 |
| Calcarine sulcus | R | 286 | 16 | −92 | −8 | 33.3 |
| Calcarine sulcus | | | 22 | −90 | −2 | 30.1 |
| Calcarine sulcus | L | 135 | −24 | −94 | −6 | 38.0 |
| Subthalamic nucleus | R | 128 | 8 | −16 | −6 | 30.4 |
| Superior colliculus | | | 4 | −24 | −4 | 28.2 |
| Periaqueductal gray | | | 8 | −26 | −14 | 22.0 |
| Ventral tegmental area/substantia nigra (VTA/SN) | | | 4 | −14 | −12 | 21.7 |
| aMCC (anterior midcingulate ctx) | R | 209 | 12 | 40 | 18 | 22.5 |
| aMCC | | | 6 | 24 | 24 | 19.2 |
| IFG/anterior insula | L | 66 | −24 | 26 | 0 | 26.4 |
| PCC (posterior cingulate ctx) | L/R | 105 | 0 | −26 | 32 | 24.9 |
| PCC | | | 2 | −20 | 36 | 24.0 |
| IPS (intraparietal sulcus) | R | 28 | 38 | −52 | 46 | 19.9 |
| Default-mode network | | | | | | |
| STG (superior temporal gyrus) | L | 448 | −44 | −66 | 28 | 44.1 |
| dmPFC (dorsomedial prefrontal ctx) | L | 333 | −16 | 40 | 44 | 36.6 |
| dmPFC | | | −16 | 34 | 46 | 32.7 |
| vmPFC (ventromedial prefrontal ctx) | L | 90 | −2 | 58 | 26 | 24.2 |
| Hippocampus | R | 25 | 24 | −14 | −18 | 28.6 |
| Hippocampus | | | 20 | −10 | −22 | 28.6 |
| Hippocampus | L | 25 | −22 | −12 | −20 | 27.3 |
| Hippocampus | | | −18 | −8 | −18 | 27.3 |
| Caudal PCC/precuneus | L | 72 | −10 | −56 | 26 | 23.5 |
| Caudal PCC/precuneus | | | −12 | −60 | 16 | 22.4 |

L: left hemisphere; R: right hemisphere; k: cluster size; MNI: Montreal Neurological Institute; ctx: cortex; F value: FDR-corrected significance threshold at P < 0.05. Rows without L/R and k entries are additional local maxima within the cluster listed above them.

Table 2.

Activity clusters associated with the anticipation of Difficulty

| Region | L/R | k | MNI x | MNI y | MNI z | F value |
| --- | --- | --- | --- | --- | --- | --- |
| Main effect Difficulty | | | | | | |
| IFG/anterior insula | R | 1180 | 38 | 20 | 2 | 89.9 |
| IFG/anterior insula | | | 34 | 24 | 8 | 65.2 |
| Dorsal striatum (caudate body) | | | 10 | 2 | 10 | 22.8 |
| Dorsal striatum (caudate head) | | | 10 | 16 | 2 | 22.5 |
| IFG/anterior insula | L | 679 | −32 | 26 | −2 | 69.1 |
| IFG/anterior insula | | | −26 | 28 | 0 | 61.8 |
| aMCC | L/R | 1264 | 10 | 20 | 48 | 64.5 |
| aMCC | | | 6 | 30 | 40 | 41.3 |
| Pre-SMA (pre-supplementary motor area) | | | 0 | 14 | 56 | 47.9 |
| Pre-SMA | | | 2 | 24 | 58 | 39.1 |
| dlPFC (dorsolateral prefrontal ctx) | R | 447 | 50 | 6 | 38 | 33.2 |
| dlPFC | | | 52 | 6 | 46 | 25.7 |
| Thalamus/pulvinar | R | 99 | 6 | −18 | 16 | 29.8 |
| IPS | R | 98 | 30 | −54 | 48 | 24.8 |
| IPS | L | 72 | −24 | −60 | 50 | 24.6 |
| dlPFC | L | 140 | −48 | 8 | 36 | 24.6 |
| dlPFC | | | −42 | 28 | 46 | 22.9 |
| Dorsal striatum (caudate head) | L | 42 | −12 | 14 | 0 | 20.7 |
| VTA/SN | L/R | 28 | −2 | −16 | −14 | 19.3 |
| VTA/SN | | | 2 | −20 | −16 | 18.9 |
| Superior colliculus | L | 18 | −2 | −32 | 0 | 18.7 |
| Default-mode network | | | | | | |
| STG | L | 1470 | −46 | −66 | 28 | 70.6 |
| STG | | | −38 | −76 | 38 | 57.0 |
| dmPFC | L | 1138 | −6 | 44 | 52 | 60.3 |
| dmPFC | | | −16 | 36 | 50 | 50.2 |
| dmPFC | | | −24 | 20 | 58 | 48.2 |
| Caudal PCC/precuneus | L/R | 1753 | −10 | −60 | 16 | 40.9 |
| Caudal PCC/precuneus | | | −14 | −56 | 22 | 30.6 |
| Caudal PCC/precuneus | | | 4 | −54 | 22 | 37.2 |
| STG | R | 609 | 34 | −76 | 44 | 39.8 |
| STG | | | 40 | −58 | 32 | 37.5 |
| Hippocampus | R | 66 | 24 | −16 | −16 | 30.5 |
| Hippocampus | | | 22 | −6 | −20 | 24.4 |
| vmPFC | R | 179 | 6 | 52 | −8 | 28.7 |
| vmPFC | | | 4 | 52 | −12 | 25.0 |
| Hippocampus | L | 76 | −24 | −22 | −14 | 26.4 |
| Hippocampus | | | −30 | −22 | −14 | 24.4 |

L: left hemisphere; R: right hemisphere; k: cluster size; MNI: Montreal Neurological Institute; ctx: cortex; F value: FDR-corrected significance threshold at P < 0.05. Rows without L/R and k entries are additional local maxima within the cluster listed above them.

Cue-related anticipation of hard as compared with easy targets induced activity increases in the dorsal striatum (caudate head and body), the SN/VTA complex, IFG and anterior insula, IPS, aMCC and the pre-supplementary motor area (pre-SMA), dorsolateral prefrontal cortex (dlPFC) extending into the inferior frontal junction, posterior thalamus, and the superior colliculi (for a complete overview, see Table 2). Similar to reward-predicting cues, cues predicting difficult versus easy targets led to significant deactivations in the default-mode network of attention.

Note that the main effects in regions that were accompanied by a significant interaction in the voxel-wise analysis should be interpreted in the light of the respective higher order interaction effects (see Interaction between Cue-Related Reward and Difficulty Anticipation).

Comparison of the Observed Cue-Related Main Effects

To illustrate the considerable regional overlap between the anticipation of reward and difficulty, the associated main effects were submitted to a conjunction analysis (see Table 3, Fig. 2). BOLD signal change values were extracted based on the local activity maxima in this conjunction and averaged across left and right cues and across hemispheres (provided those regions exhibited comparable bilateral activation patterns). The majority of the activated regions, including IFG, IPS, dorsal striatum (caudate head), and the SN/VTA complex, were independently activated by both reward and difficulty anticipation, resulting in a mostly additive effect on the BOLD signal (Fig. 2A, upper panel). Analogously, regions of the default-mode network, including STG, dmPFC, vmPFC, and PCC/precuneus, were similarly deactivated by reward and difficulty anticipation, again in an additive way (Fig. 2A, lower panel).
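The logic of the conjunction can be sketched as a minimum-statistic test: a voxel survives only if it passes the threshold in both main-effect maps, and it is assigned the smaller of the two F values. This reading is an assumption, since the text does not state which conjunction variant was used, but it is consistent with the tables: the caudate body peak at (10, 2, 10) has F = 32.5 for Reward (Table 1), F = 22.8 for Difficulty (Table 2), and F = 22.8 in the conjunction (Table 3). The function name, the dictionary representation, the threshold value, and the third example voxel are hypothetical.

```python
def conjunction_map(f_map_a, f_map_b, threshold_a, threshold_b):
    """Minimum-statistic conjunction of two voxel-wise F maps.

    Each map is a dict from (x, y, z) coordinates to an F value. A voxel is
    kept only if it exceeds the threshold in both maps.
    """
    result = {}
    for voxel, f_a in f_map_a.items():
        f_b = f_map_b.get(voxel)
        if f_b is not None and f_a >= threshold_a and f_b >= threshold_b:
            result[voxel] = min(f_a, f_b)
    return result

# F values at (10, 2, 10) are taken from Tables 1 and 2; the second voxel
# appears only in the Reward map, and the third is made up to show exclusion.
reward_map = {(10, 2, 10): 32.5, (-8, 16, -4): 38.9, (0, 0, 0): 5.0}
difficulty_map = {(10, 2, 10): 22.8, (0, 0, 0): 40.0}
overlap = conjunction_map(reward_map, difficulty_map, 15.0, 15.0)
```

Only the caudate body voxel survives in this toy example, with the lower (Difficulty) F value carried forward.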

Table 3.

Activity overlap between the anticipation of Reward and Difficulty

| Region | L/R | k | MNI x | MNI y | MNI z | F value |
| --- | --- | --- | --- | --- | --- | --- |
| Conjunction between [main effect Reward] ∩ [main effect Difficulty] | | | | | | |
| IFG/anterior insula | R | 210 | 40 | 20 | 0 | 36.6 |
| IFG/anterior insula | | | 28 | 22 | 4 | 17.5 |
| IFG/anterior insula | L | 84 | −24 | 26 | 0 | 26.4 |
| Dorsal striatum (caudate head) | R | 170 | 14 | 22 | −6 | 23.5 |
| Dorsal striatum (caudate body) | | | 10 | 2 | 10 | 22.8 |
| Dorsal striatum (caudate head) | | | 10 | 16 | 2 | 22.5 |
| Dorsal striatum (caudate head) | L | 36 | −12 | 14 | 0 | 20.7 |
| Thalamus/pulvinar | L/R | 72 | 6 | −18 | 16 | 29.8 |
| IPS | R | 16 | 34 | −52 | 46 | 17.5 |
| SN/VTA | L/R | 12 | −4 | −14 | −12 | 16.8 |
| Default-mode network | | | | | | |
| STG | L | 580 | −44 | −66 | 28 | 44.1 |
| STG | | | −56 | −74 | 26 | 15.6 |
| dmPFC | L | 340 | −14 | 40 | 46 | 32.9 |
| dmPFC | | | −14 | 30 | 48 | 31.3 |
| Hippocampus | R | 10 | 24 | −14 | −18 | 27.3 |
| Caudal PCC/precuneus | L/R | 229 | −10 | −56 | 26 | 23.5 |
| Caudal PCC/precuneus | | | −12 | −60 | 16 | 22.4 |
| STG | R | 18 | 42 | −68 | 32 | 19.7 |

L: left hemisphere; R: right hemisphere; k: cluster size; MNI: Montreal Neurological Institute; ctx: cortex; F value: FDR-corrected significance threshold at P < 0.05. Rows without L/R and k entries are additional local maxima within the cluster listed above them.

Figure 2.

Neural modulations of cue-related reward and difficulty anticipation. (A) Activations associated with the main effect of reward (green) are overlaid with activations associated with the main effect of difficulty (black) in a conjunction contrast with a display cutoff at a significance threshold of P < 0.001. Both anticipated reward and anticipated difficulty increased activity within overlapping cortical and subcortical attentional control regions (upper panel) and decreased activity within overlapping regions of the default-mode network (lower panel). Bar graphs represent BOLD signal values extracted based on the local maxima of the conjunction analysis (Table 3). (B) In addition, anticipated reward increased activity within bilateral ventral striatum, V1, and PCC (not shown), while anticipated difficulty increased activity within bilateral pre-SMA and dlPFC. Bar graphs represent BOLD signal values extracted based on the local maxima of the respective main effects (Tables 1 and 2).

Beyond these additive effects of reward and difficulty anticipation, several regions were predominantly modulated by only one dimension of the cue stimulus (Fig. 2B). Specifically, the anticipation of reward primarily engaged the ventral striatum (encompassing the NAcc), the calcarine sulcus in the occipital cortex (V1), and the PCC (not shown), while the anticipation of difficulty primarily engaged the pre-SMA and the dlPFC. To test for potential modulations by the respective opposite factor in these 5 regions, we extracted the BOLD signal change for all 4 conditions. Note that the signal change values (Fig. 2B) representing the originally contrasted conditions on which the ROI selection was based (for reward: “reward” vs. “no-reward”; for difficulty: “hard” vs. “easy”) are merely included for completeness rather than being inferential, as they naturally reflect the voxel-wise main effects (Kriegeskorte et al. 2009). The only regions of these 2 sets that were significantly modulated by the respective other factor, that is, reward-related regions modulated by difficulty, were the left ventral striatum (t(13) = 2.86, P = 0.014) and bilateral V1 (left: t(13) = 2.23, P = 0.044; right: t(13) = 2.86, P = 0.013), as indicated by significant paired t-tests between hard versus easy cues (all other P values > 0.2).

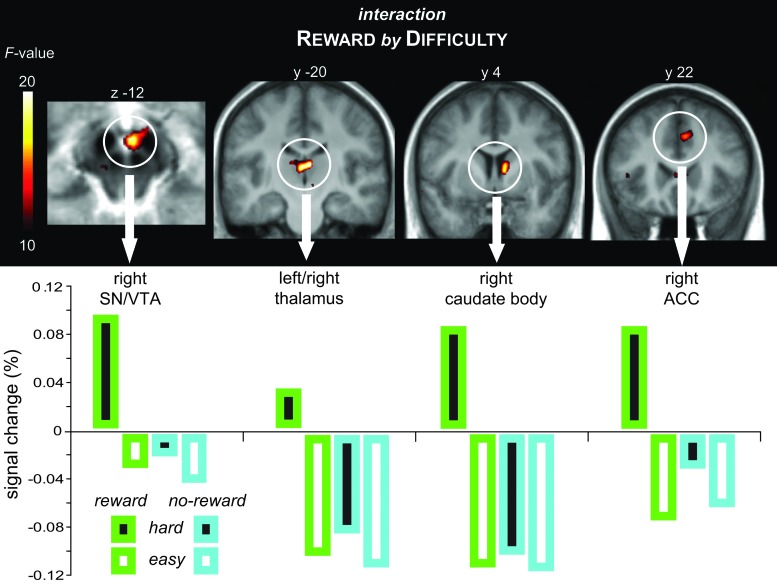

Interaction between Cue-Related Reward and Difficulty Anticipation

Importantly, in addition to the main effects in partly overlapping and partly selective regions, the rANOVA revealed a significant voxel-wise interaction for the cue-related activity between the 2 factors (Table 4; Fig. 3) in the SN/VTA complex, the posterior thalamus/pulvinar, the right caudate body, and the right aMCC. The pattern of BOLD signal change extracted from ROIs centered at the respective local activity maxima shows that the interaction in all these regions reflected a highly selective activity increase for cues that predicted both reward and high difficulty as compared with all other cue types. To relate the cue-related neural activity in the regions exhibiting such an interaction to the performance in the discrimination task, the BOLD signal change derived from these regions (i.e., VTA, thalamus, caudate body, and aMCC) was submitted to an across-subjects correlational analysis with their RT measures. We found that an increase in the neural signal within the VTA was associated with a reduced RT disadvantage in difficult trials (i.e., performance improvement), as indicated by a negative correlation between the difference in the cue-related BOLD signal (hard minus easy trials) and the respective difference in RT (r = −0.69, P = 0.006). Hence, greater VTA activity was associated with faster responses in difficult trials. Importantly, this effect was primarily driven by the reward trials, as indicated by a significant correlation when considering reward trials alone (hard minus easy: r = −0.66, P = 0.010), but no correlation when considering no-reward trials alone (hard minus easy: r = 0.13, P = 0.658). In addition, neural activity in the right caudate body was correlated with faster responses in reward as compared with no-reward trials (r = −0.61, P = 0.020).
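The across-subjects analysis amounts to a Pearson correlation between two per-participant difference scores, with significance assessed through the conversion t = r·√(n − 2)/√(1 − r²) on n − 2 degrees of freedom. The sketch below is illustrative only; the function names and the toy data are hypothetical. For the reported r = −0.69 with n = 14, the conversion gives t(12) ≈ −3.30, which lies between the standard two-tailed critical values for P = 0.01 (3.05) and P = 0.005 (3.43), in line with the reported P = 0.006.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def r_to_t(r, n):
    """Convert a correlation over n observations to a t statistic (df = n - 2)."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)

# Toy per-participant differences (hard minus easy); not data from the study.
bold_diff = [0.1, 0.3, 0.2, 0.5]
rt_diff_ms = [60.0, 40.0, 55.0, 20.0]
r_toy = pearson_r(bold_diff, rt_diff_ms)   # negative: more signal, smaller RT cost

t_reported = r_to_t(-0.69, 14)             # about -3.30 for the reported VTA effect
```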

Table 4.

Regions exhibiting an interaction between Reward and Difficulty

| Region | L/R | k | MNI x | MNI y | MNI z | F value |
| --- | --- | --- | --- | --- | --- | --- |
| Reward by Difficulty interaction | | | | | | |
| Thalamus/pulvinar | L/R | 191 | −4 | −22 | 8 | 21.6 |
| Thalamus/pulvinar | | | 4 | −20 | 12 | 20.9 |
| Medial thalamus | | | 14 | −26 | 12 | 20.9 |
| Medial thalamus | | | −6 | −14 | 16 | 14.4 |
| Dorsal striatum (caudate body) | R | 41 | 10 | 4 | 12 | 20.0 |
| SN/VTA | R | 18 | 4 | −12 | −12 | 18.9 |
| aMCC | R | 25 | 10 | 20 | 40 | 14.9 |

L: left hemisphere; R: right hemisphere; k: cluster size; MNI: Montreal Neurological Institute; ctx: cortex; F value: FDR-corrected significance threshold at P < 0.05. Rows without L/R and k entries are additional local maxima within the cluster listed above them.

Figure 3.

Interaction between cue-related reward and difficulty anticipation. The right SN/VTA complex, posterior thalamus, right caudate body, and the right aMCC exhibited a significant interaction between anticipated reward and difficulty in the voxel-wise rANOVA. The BOLD signal change values revealed that this effect was driven by a selective activity increase for cues predicting both reward and high difficulty.

Discussion

In the current fMRI study, we used a spatial cuing paradigm in which a trial-by-trial cue not only predicted the location of an upcoming target but also its discrimination difficulty (hard vs. easy) and the potential to win money in that trial (reward vs. no-reward). As expected, we found that the prospect of reward facilitated target discrimination performance. Moreover, our task-difficulty manipulation was very effective as well, in that participants were slower and less accurate in the hard discrimination condition.

On the neural level, during the cuing phase for the anticipation of reward and the anticipation of high task demands, we observed strikingly overlapping activations in a widespread network, including the IFG and anterior insula region, the IPS, the dorsal striatum, the aMCC, the thalamus, and the mesencephalic SN/VTA complex. These shared neural modulations support the view that information about potential reward and task demands triggers highly similar processes that have been linked to attentional control (Kastner et al. 1999; Gitelman et al. 1999; Hopfinger et al. 2000; Corbetta and Shulman 2002; Woldorff et al. 2004). In keeping with this notion, reward seems to act by utilizing and modulating attentional processes that are typically also employed in endogenous attentional control, as has been suggested (e.g., Pessoa and Engelmann 2010), rather than by triggering a cascade that is highly unique to reward. The observed deactivation pattern in default-mode regions further supports this notion as activity in this network typically decreases as attentional demands, and ultimately attentional control, increase (Gusnard et al. 2001; Raichle et al. 2001). In the present study, both reward-predicting and difficulty-predicting trials share a common neural pattern, that is, greater deactivation during reward versus no-reward and hard versus easy trials, thereby mirroring the activity pattern in attentional control regions. In turn, these deactivations are likely related to a reduced vulnerability to attentional lapses, which have been associated with increased default-mode activity (Weissman et al. 2006).

While earlier studies provided evidence for a functional overlap between cognitive-control processes related to reward and attentional control, using either reward (e.g., Engelmann et al. 2009) or task demands (e.g., Boehler, Hopf, et al. 2011), we now explicitly delineate the neural commonalities and interactions of these factors by incorporating these manipulations in a fully crossed factorial design within the same study. Moreover, the current results show for the first time that stimulus processing in a number of these regions appears to be modulated in an additive way, with highest activity levels for cues predicting both reward and high task demands, comparable intermediate activity levels for cues predicting only one of the 2, and lowest levels for cues predicting neither.

Despite this functional overlap in the attention and default-mode networks, some regions were predominantly sensitive to only one type of salient information provided by the cue. In particular, the ventral striatum, as well as the PCC and visual cortex (V1), showed clearly enhanced responses during the anticipation of reward as compared with anticipation of higher task difficulty. The cluster in the ventral part of the striatum likely comprises the NAcc (as well as ventral parts of the putamen), an area that has been established as being one of the most important target areas of the dopaminergic projections from the midbrain (Wise 2004; Haber and Knutson 2010). The reward-related response in this region is in line with the prototypical reward-anticipation response that is thought to signal the incentive value of a stimulus (Knutson et al. 2001; Schultz 2002; Wise 2004; Knutson and Gibbs 2007). Similarly, the rostral part of the PCC has been shown to be involved in the processing of reward-related stimuli (Knutson et al. 2001; Small et al. 2005; Platt and Huettel 2008). While being particularly modulated by the prospect of reward, the left ventral striatum and bilateral V1 were to some extent also sensitive to anticipated difficulty, as revealed by comparing the extracted BOLD signal change in these regions from hard and easy trials. These additional modulations are consistent with our recent observations of difficulty-related signals in these regions in the absence of reward (Boehler, Hopf, et al. 2011). Importantly, as compared with this latter finding, the present design allowed us to clearly associate the neural activity to cue processing alone, that is, independent of target processing. However, on the voxel-wise level, the anticipation of high task difficulty was not sufficient to trigger activity in these regions in the present experiment, suggesting a greater behavioral relevance of reward-predicting as compared with difficulty-predicting cues. These differential effects of anticipated reward and anticipated difficulty may in turn be related to the notion that reward associations impart greater motivational value and higher prioritization of an event fairly automatically (Serences 2008; Kiss et al. 2009).

Anticipation of greater task difficulty, in and of itself, led to increased activity in medial frontal and dorsolateral prefrontal regions. Specifically, cues predicting hard as compared with easy targets were associated with activity in the pre-SMA in the medial frontal wall, a region that has been implicated in cognitive-control and attention processes and is known to be modulated by task demands (e.g., Brass and von Cramon 2002; Cole and Schneider 2007). Similarly, the dlPFC, and in particular the inferior frontal junction, has been associated with a variety of cognitive-control functions, including the representation and maintenance of task sets (e.g., Banich et al. 2000; Brass and von Cramon 2002; Derrfuss et al. 2004; Cole and Schneider 2007; Menon and Uddin 2010). Together, the observed frontal activations during the anticipation of high versus low task difficulty are therefore likely to reflect increased top-down executive control during the preparation for a highly demanding task as compared with an easy one.

In addition, with regard to the important question of how information about reward and task demands is integrated on a neural level, we found significant voxel-wise interactions in the medial dopaminergic midbrain, along with other subcortical regions and the aMCC, reflecting selective activity enhancement in response to difficult cues that were concomitantly predictive of reward. It has been recently suggested that the dopaminergic midbrain is involved in the flexible recruitment of cognitive resources to meet varying task demands (Nieoullon 2002; Arnsten et al. 2009; Boehler, Bunzeck, et al. 2011; Boehler, Hopf, et al. 2011), a role that goes beyond the mere coding of incentive values. However, it remained unclear whether these 2 functions are mutually independent processes that would result in a mostly additive, independent set of effects, or whether they would interact if triggered concomitantly. Although the overlap in attentional control regions during reward-predicting and difficulty-predicting cues in the present study suggests that highly similar mechanisms are employed, the interaction pattern in the dopaminergic midbrain indicates that difficulty alone may not automatically prompt maximal recruitment of processing resources in the context of a reward paradigm. The results of the present study suggest instead that additional resources may be invested to accomplish a demanding task particularly when it seems worth the effort. This notion is further supported by the observation that greater VTA activity in response to the cue was correlated with a smaller difficulty-related RT disadvantage across subjects if the present trial was rewarded. Such a mechanism would thus go beyond merely signaling the incentive value of a given situation, thereby emphasizing the role of the dopaminergic system within a broader framework of “motivational control” and its role in the allocation of processing resources to control and adapt behavior (Jocham and Ullsperger 2009; van Schouwenburg et al. 2010; Bromberg-Martin et al. 2010).
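
The distinction between an additive and an interactive pattern in a 2 x 2 (reward x difficulty) design can be made concrete with a minimal sketch. The function name `factorial_contrasts` and all cell values below are hypothetical and chosen only for illustration; they are not estimates from the present data, and the sketch is not the analysis pipeline used in this study. Under a purely additive pattern the interaction contrast is zero, whereas a selective boost for cues predicting both reward and high difficulty yields a positive interaction.

```python
# Illustrative sketch only: the cell values are hypothetical response
# estimates (arbitrary units), NOT data from the study.

def factorial_contrasts(cells):
    """Return main effects and the interaction contrast for a 2 x 2 design.

    `cells` maps (reward_level, difficulty_level) to a mean response.
    """
    rh = cells[("reward", "hard")]
    re_ = cells[("reward", "easy")]
    nh = cells[("no_reward", "hard")]
    ne = cells[("no_reward", "easy")]
    return {
        # Main effect of reward: reward cells minus no-reward cells.
        "reward": (rh + re_) / 2 - (nh + ne) / 2,
        # Main effect of difficulty: hard cells minus easy cells.
        "difficulty": (rh + nh) / 2 - (re_ + ne) / 2,
        # Interaction: difficulty effect under reward minus the
        # difficulty effect without reward.
        "interaction": (rh - re_) - (nh - ne),
    }

# Hypothetical additive pattern: each factor adds one unit.
additive = {
    ("reward", "hard"): 3.0,
    ("reward", "easy"): 2.0,
    ("no_reward", "hard"): 2.0,
    ("no_reward", "easy"): 1.0,
}

# Hypothetical interactive pattern: extra boost only for reward + hard.
interactive = {
    ("reward", "hard"): 3.0,
    ("reward", "easy"): 1.5,
    ("no_reward", "hard"): 1.5,
    ("no_reward", "easy"): 1.0,
}

additive_result = factorial_contrasts(additive)
interactive_result = factorial_contrasts(interactive)
print(additive_result["interaction"], interactive_result["interaction"])
```

In this toy example both patterns show main effects of reward and difficulty; only the second shows a nonzero interaction, which is the kind of pattern described above for the midbrain.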

Moreover, it has been demonstrated that prototypical mesolimbic reward anticipation responses to an incentive cue are actually diminished if mental effort has to be invested over a longer time in a subsequent task to obtain the reward, a phenomenon that has been described as “effort discounting” (Botvinick et al. 2009; Croxson et al. 2009; Kool et al. 2010). Accordingly, if the observed interaction were primarily driven by the mere representation of incentive value, we would expect to have found the opposite pattern from what we observed in the present study, that is, an attenuation of the response for high as compared with low difficulty trials. In contrast, we found a selective activity boost in response to cues that predicted both reward and high difficulty, supporting the notion that the dopaminergic midbrain is involved in increased resource recruitment when this is particularly needed or desired (Nieoullon 2002; Salamone et al. 2005; Arnsten et al. 2009). With respect to the effort-discounting literature, it is important to note, however, that our paradigm required participants to immediately prepare for the upcoming target once the cue was presented, thereby emphasizing resource recruitment rather than mere valuation of the cue, which in turn likely gave rise to an additive rather than discounting effect of task load on reward anticipation (cf. Croxson et al. 2009).

The interaction pattern in the midbrain was paralleled by a similar interaction effect in the caudate body, the aMCC, and the posterior thalamus. Previous studies have indicated that the anterior cingulate cortex and the caudate nucleus are particularly sensitive to different kinds of behaviorally salient events, including those associated with reward (Downar et al. 2002; Zink et al. 2004; Davidson et al. 2004; Zink et al. 2006; Seeley et al. 2007). It has been furthermore suggested that the anterior cingulate cortex may represent a neural “hub” that helps prioritize stimulus processing and action selection by integrating multiple salient inputs (Downar et al. 2002; Rushworth et al. 2007; Pessoa and Engelmann 2010). More recently, a similar integrative and modulatory role has been suggested for the thalamus due to its role as a critical relay structure between cortical and subcortical regions (for a review, see Haber and Calzavara 2009). Despite being considered one of the major target regions of dopaminergic midbrain neurons, the ventral striatum did not entirely mirror the neural response within the VTA. This dissociation likely arises from the complex connectivity patterns of the ventral striatum with subcortical and cortical regions. More specifically, it is likely that in complex task settings such as in the present study, the ventral striatal response is a product of the dopaminergic input from the midbrain and interactions with prefrontal regions (Wise 2004). In this context, it should be noted that modulations of fMRI signals in the dopaminergic midbrain and its target regions cannot be automatically equated with dopaminergic neurotransmission, although a body of evidence has been accumulating for this relationship (e.g., Knutson and Gibbs 2007; Schott et al. 2008; Buckholtz et al. 2010). In addition, it has been recently argued (D'Ardenne et al. 2008) that whole-head group-level fMRI approaches can be problematic for the investigation of the midbrain due to its small size and proximity to blood vessels (but see Duzel et al. 2009). To ameliorate such problems, we optimized our data-acquisition approach, including implementing high-resolution functional matrices and optimized co-registration procedures that incorporate T2-weighted anatomical scans to provide an excellent anatomical contrast and thus the ability to accurately locate mesencephalic subregions.

Together, the present findings suggest that information about reward value and the expected difficulty to obtain the reward is integrated by means of interactions between the dopaminergic midbrain and cortico-striatal-thalamic circuits, contributing to the modulation of resource recruitment in order to facilitate performance when it appears particularly worthwhile. It should be noted, however, that such a mechanism is likely to be highly dependent on the task context. In particular, the context of reward might generally shift resource-recruitment strategies by incorporating information about both value and task demands to prioritize resource allocation in trials in which higher effort is most likely to pay off. In contrast, in the absence of reward, high levels of perceptual difficulty, working memory load, or stimulus conflict could be sufficient to drive a similar cascade in a self-propelling manner.

Funding

National Institutes of Health (R01-MH060415 and R01-NS051048 to M.G.W.); German Research Foundation Grant (BO 3345/1-1 to C.N.B.).

Acknowledgments

Conflict of Interest: None declared.

References

- Arnsten AFT, Vijayraghavan S, Wang M, Gamo NJ, Paspalas CD. Dopamine’s influence on prefrontal cortical cognition: actions and circuits in behaving primates. In: Iversen L, Iversen S, Dunnett S, Bjorklund A, editors. Dopamine Handbook. New York: Oxford University Press; 2009. pp. 230–248.

- Ashburner J, Friston KJ. Nonlinear spatial normalization using basis functions. Hum Brain Mapp. 1999;7(4):254–266. doi: 10.1002/(SICI)1097-0193(1999)7:4<254::AID-HBM4>3.0.CO;2-G.

- Banich MT, Milham MP, Atchley RA, Cohen NJ, Webb A, Wszalek T, Kramer AF, Liang Z, Barad V, Gullett D, et al. Prefrontal regions play a predominant role in imposing an attentional 'set': evidence from fMRI. Brain Res Cogn Brain Res. 2000;10(1–2):1–9. doi: 10.1016/s0926-6410(00)00015-x.

- Bendiksby MS, Platt ML. Neural correlates of reward and attention in macaque area LIP. Neuropsychologia. 2006;44(12):2411–2420. doi: 10.1016/j.neuropsychologia.2006.04.011.

- Boehler CN, Bunzeck N, Krebs RM, Noesselt T, Schoenfeld MA, Heinze HJ, Munte TF, Woldorff MG, Hopf JM. Substantia nigra activity level predicts trial-to-trial adjustments in cognitive control. J Cogn Neurosci. 2011;23(2):362–373. doi: 10.1162/jocn.2010.21473.

- Boehler CN, Hopf JM, Krebs RM, Stoppel CM, Schoenfeld MA, Heinze HJ, Noesselt T. Task-load-dependent activation of dopaminergic midbrain areas in the absence of reward. J Neurosci. 2011;31(13):4955–4961. doi: 10.1523/JNEUROSCI.4845-10.2011.

- Botvinick MM, Huffstetler S, McGuire JT. Effort discounting in human nucleus accumbens. Cogn Affect Behav Neurosci. 2009;9(1):16–27. doi: 10.3758/CABN.9.1.16.

- Brass M, von Cramon DY. The role of the frontal cortex in task preparation. Cereb Cortex. 2002;12(9):908–914. doi: 10.1093/cercor/12.9.908.

- Braver TS, Gray JR, Burgess GC. Explaining the many varieties of working memory variation: dual mechanisms of cognitive control. New York: Oxford University Press; 2007. pp. 77–106.

- Brett M, Anton J-L, Valabregue R, Poline J-P. Region of interest analysis using an SPM toolbox (abstract). Available on CD-Rom. Neuroimage. 2002;16(2).

- Bromberg-Martin ES, Matsumoto M, Hikosaka O. Dopamine in motivational control: rewarding, aversive, and alerting. Neuron. 2010;68(5):815–834. doi: 10.1016/j.neuron.2010.11.022.

- Buckholtz JW, Treadway MT, Cowan RL, Woodward ND, Li R, Ansari MS, Baldwin RM, Schwartzman AN, Shelby ES, Smith CE, et al. Dopaminergic network differences in human impulsivity. Science. 2010;329(5991):532. doi: 10.1126/science.1185778.

- Cole MW, Schneider W. The cognitive control network: integrated cortical regions with dissociable functions. Neuroimage. 2007;37(1):343–360. doi: 10.1016/j.neuroimage.2007.03.071.

- Corbetta M, Shulman GL. Control of goal-directed and stimulus-driven attention in the brain. Nat Rev Neurosci. 2002;3(3):201–215. doi: 10.1038/nrn755.

- Croxson PL, Walton ME, O'Reilly JX, Behrens TE, Rushworth MF. Effort-based cost-benefit valuation and the human brain. J Neurosci. 2009;29(14):4531–4541. doi: 10.1523/JNEUROSCI.4515-08.2009.

- Daniel R, Pollmann S. Comparing the neural basis of monetary reward and cognitive feedback during information-integration category learning. J Neurosci. 2010;30(1):47–55. doi: 10.1523/JNEUROSCI.2205-09.2010.

- D'Ardenne K, McClure SM, Nystrom LE, Cohen JD. BOLD responses reflecting dopaminergic signals in the human ventral tegmental area. Science. 2008;319(5867):1264–1267. doi: 10.1126/science.1150605.

- Davidson MC, Horvitz JC, Tottenham N, Fossella JA, Watts R, Ulug AM, Casey BJ. Differential cingulate and caudate activation following unexpected nonrewarding stimuli. Neuroimage. 2004;23(3):1039–1045. doi: 10.1016/j.neuroimage.2004.07.049.

- Derrfuss J, Brass M, von Cramon DY. Cognitive control in the posterior frontolateral cortex: evidence from common activations in task coordination, interference control, and working memory. Neuroimage. 2004;23(2):604–612. doi: 10.1016/j.neuroimage.2004.06.007.

- Downar J, Crawley AP, Mikulis DJ, Davis KD. A cortical network sensitive to stimulus salience in a neutral behavioral context across multiple sensory modalities. J Neurophysiol. 2002;87(1):615–620. doi: 10.1152/jn.00636.2001.

- Duzel E, Bunzeck N, Guitart-Masip M, Wittmann B, Schott BH, Tobler PN. Functional imaging of the human dopaminergic midbrain. Trends Neurosci. 2009;32(6):321–328. doi: 10.1016/j.tins.2009.02.005.

- Engelmann JB, Damaraju E, Padmala S, Pessoa L. Combined effects of attention and motivation on visual task performance: transient and sustained motivational effects. Front Hum Neurosci. 2009;3:4. doi: 10.3389/neuro.09.004.2009.

- Everitt BJ, Robbins TW. Neural systems of reinforcement for drug addiction: from actions to habits to compulsion. Nat Neurosci. 2005;8(11):1481–1489. doi: 10.1038/nn1579.

- Friston K, Holmes AP, Worsley KJ, Poline JB, Frith CD, Frackowiak RS. Statistical parametric maps in functional imaging: a general linear approach. Hum Brain Mapp. 1995;2(4):189–210.

- Gitelman DR, Nobre AC, Parrish TB, LaBar KS, Kim YH, Meyer JR, Mesulam M. A large-scale distributed network for covert spatial attention: further anatomical delineation based on stringent behavioural and cognitive controls. Brain. 1999;122(Pt 6):1093–1106. doi: 10.1093/brain/122.6.1093.

- Gusnard DA, Raichle ME. Searching for a baseline: functional imaging and the resting human brain. Nat Rev Neurosci. 2001;2(10):685–694. doi: 10.1038/35094500.

- Haber SN, Calzavara R. The cortico-basal ganglia integrative network: the role of the thalamus. Brain Res Bull. 2009;78(2–3):69–74. doi: 10.1016/j.brainresbull.2008.09.013.

- Haber SN, Knutson B. The reward circuit: linking primate anatomy and human imaging. Neuropsychopharmacology. 2010;35(1):4–26. doi: 10.1038/npp.2009.129.

- Hinrichs H, Scholz M, Tempelmann C, Woldorff MG, Dale AM, Heinze H-J. Deconvolution of event-related fMRI responses in fast-rate experimental designs: tracking amplitude variations. J Cogn Neurosci. 2000;12(Suppl 2):76–89. doi: 10.1162/089892900564082.

- Hopfinger JB, Buonocore MH, Mangun GR. The neural mechanisms of top-down attentional control. Nat Neurosci. 2000;3(3):284–291. doi: 10.1038/72999.

- Jocham G, Ullsperger M. Neuropharmacology of performance monitoring. Neurosci Biobehav Rev. 2009;33(1):48–60. doi: 10.1016/j.neubiorev.2008.08.011.

- Kastner S, Pinsk MA, De Weerd P, Desimone R, Ungerleider LG. Increased activity in human visual cortex during directed attention in the absence of visual stimulation. Neuron. 1999;22(4):751–761. doi: 10.1016/s0896-6273(00)80734-5.

- Kiss M, Driver J, Eimer M. Reward priority of visual target singletons modulates event-related potential signatures of attentional selection. Psychol Sci. 2009;20(2):245–251. doi: 10.1111/j.1467-9280.2009.02281.x.

- Knutson B, Cooper JC. Functional magnetic resonance imaging of reward prediction. Curr Opin Neurol. 2005;18(4):411–417. doi: 10.1097/01.wco.0000173463.24758.f6.

- Knutson B, Fong GW, Adams CM, Varner JL, Hommer D. Dissociation of reward anticipation and outcome with event-related fMRI. Neuroreport. 2001;12(17):3683–3687. doi: 10.1097/00001756-200112040-00016.

- Knutson B, Gibbs SE. Linking nucleus accumbens dopamine and blood oxygenation. Psychopharmacology (Berl). 2007;191(3):813–822. doi: 10.1007/s00213-006-0686-7.

- Kool W, McGuire JT, Rosen ZB, Botvinick MM. Decision making and the avoidance of cognitive demand. J Exp Psychol Gen. 2010;139(4):665–682. doi: 10.1037/a0020198.

- Kriegeskorte N, Simmons WK, Bellgowan PS, Baker CI. Circular analysis in systems neuroscience: the dangers of double dipping. Nat Neurosci. 2009;12(5):535–540. doi: 10.1038/nn.2303.

- Locke HS, Braver TS. Motivational influences on cognitive control: behavior, brain activation, and individual differences. Cogn Affect Behav Neurosci. 2008;8(1):99–112. doi: 10.3758/cabn.8.1.99.

- Maunsell JHR. Neuronal representations of cognitive state: reward or attention? Trends Cogn Sci. 2004;8(6):261–265. doi: 10.1016/j.tics.2004.04.003.

- Menon V, Uddin LQ. Saliency, switching, attention and control: a network model of insula function. Brain Struct Funct. 2010;214(5–6):655–667. doi: 10.1007/s00429-010-0262-0.

- Nieoullon A. Dopamine and the regulation of cognition and attention. Prog Neurobiol. 2002;67(1):53–83. doi: 10.1016/s0301-0082(02)00011-4.

- O'Doherty JP, Dayan P, Friston K, Critchley H, Dolan RJ. Temporal difference models and reward-related learning in the human brain. Neuron. 2003;38(2):329–337. doi: 10.1016/s0896-6273(03)00169-7.

- Pessoa L, Engelmann JB. Embedding reward signals into perception and cognition. Front Neurosci. 2010;4:17. doi: 10.3389/fnins.2010.00017.

- Platt ML, Huettel SA. Risky business: the neuroeconomics of decision making under uncertainty. Nat Neurosci. 2008;11(4):398–403. doi: 10.1038/nn2062.

- Raichle ME, MacLeod AM, Snyder AZ, Powers WJ, Gusnard DA, Shulman GL. A default mode of brain function. Proc Natl Acad Sci U S A. 2001;98(2):676–682. doi: 10.1073/pnas.98.2.676.

- Rushworth MF, Behrens TE, Rudebeck PH, Walton ME. Contrasting roles for cingulate and orbitofrontal cortex in decisions and social behaviour. Trends Cogn Sci. 2007;11(4):168–176. doi: 10.1016/j.tics.2007.01.004.

- Salamone JD, Correa M, Mingote SM, Weber SM. Beyond the reward hypothesis: alternative functions of nucleus accumbens dopamine. Curr Opin Pharmacol. 2005;5(1):34–41. doi: 10.1016/j.coph.2004.09.004.

- Schott BH, Minuzzi L, Krebs RM, Elmenhorst D, Lang M, Winz OH, Seidenbecher CI, Coenen HH, Heinze HJ, Zilles K, et al. Mesolimbic functional magnetic resonance imaging activations during reward anticipation correlate with reward-related ventral striatal dopamine release. J Neurosci. 2008;28(52):14311–14319. doi: 10.1523/JNEUROSCI.2058-08.2008.

- Schultz W. Dopamine neurons and their role in reward mechanisms. Curr Opin Neurobiol. 1997;7(2):191–197. doi: 10.1016/s0959-4388(97)80007-4.

- Schultz W. Getting formal with dopamine and reward. Neuron. 2002;36(2):241–263. doi: 10.1016/s0896-6273(02)00967-4.

- Schultz W. Neural coding of basic reward terms of animal learning theory, game theory, microeconomics and behavioural ecology. Curr Opin Neurobiol. 2004;14(2):139–147. doi: 10.1016/j.conb.2004.03.017.

- Seeley WW, Menon V, Schatzberg AF, Keller J, Glover GH, Kenna H, Reiss AL, Greicius MD. Dissociable intrinsic connectivity networks for salience processing and executive control. J Neurosci. 2007;27(9):2349–2356. doi: 10.1523/JNEUROSCI.5587-06.2007.

- Serences JT. Value-based modulations in human visual cortex. Neuron. 2008;60(6):1169–1181. doi: 10.1016/j.neuron.2008.10.051.

- Small DM, Gitelman D, Simmons K, Bloise SM, Parrish T, Mesulam MM. Monetary incentives enhance processing in brain regions mediating top-down control of attention. Cereb Cortex. 2005;15(12):1855–1865. doi: 10.1093/cercor/bhi063.

- van Schouwenburg M, Aarts E, Cools R. Dopaminergic modulation of cognitive control: distinct roles for the prefrontal cortex and the basal ganglia. Curr Pharm Des. 2010;16(18):2026–2032. doi: 10.2174/138161210791293097.

- Weissman DH, Roberts KC, Visscher KM, Woldorff MG. The neural basis of momentary lapses of attention. Nat Neurosci. 2006;9:971–978. doi: 10.1038/nn1727.

- Wise RA. Dopamine, learning and motivation. Nat Rev Neurosci. 2004;5(6):483–494. doi: 10.1038/nrn1406.

- Wittmann BC, Schott BH, Guderian S, Frey JU, Heinze HJ, Duzel E. Reward-related fMRI activation of dopaminergic midbrain is associated with enhanced hippocampus-dependent long-term memory formation. Neuron. 2005;45(3):459–467. doi: 10.1016/j.neuron.2005.01.010.

- Woldorff MG, Hazlett CJ, Fichtenholtz HM, Weissman DH, Dale AM, Song AW. Functional parcellation of attentional control regions of the brain. J Cogn Neurosci. 2004;16(1):149–165. doi: 10.1162/089892904322755638.

- Zink CF, Pagnoni G, Chappelow J, Martin-Skurski M, Berns GS. Human striatal activation reflects degree of stimulus saliency. Neuroimage. 2006;29(3):977–983. doi: 10.1016/j.neuroimage.2005.08.006.

- Zink CF, Pagnoni G, Martin-Skurski ME, Chappelow JC, Berns GS. Human striatal responses to monetary reward depend on saliency. Neuron. 2004;42(3):509–517. doi: 10.1016/s0896-6273(04)00183-7.