Abstract

The article describes a systematic assessment model and its potential application to a college's ongoing curricular assessment activities. Each component of the continuous quality improvement model is discussed, including (1) the definition of a competent practitioner, (2) development of the core curricular competencies and course objectives, (3) students’ baseline characteristics and educational attainment, (4) implementation of the curriculum, (5) data collection about the students’ actual performance on the curriculum, and (6) reassessment of the model and curricular outcomes. Over time, faculty members involved in curricular assessment should routinely reassess the rationale for selecting outcomes; continually explore reliable and valid methods of assessing whether students have reached their learning goals; get legitimate support for assessment activities from faculty members and administration; routinely review curricular content related to attitudinal, behavioral, and knowledge-learning outcomes; and determine what to do with the collected assessment data.

Keywords: assessment, curricular assessment, continuing quality improvement, competency

INTRODUCTION

As emphasized by Standard 15 of Standards 2007 of the Accreditation Council for Pharmacy Education (ACPE), assessment is a crucial component of a college or school of pharmacy's evaluation plan to ensure the attainment of critical student learning outcomes: “The assessment activities must employ a variety of valid and reliable measures systematically and sequentially throughout the professional degree program. The college or school must use the analysis of assessment measures to improve student learning and the achievement of the professional competencies.”1

This article describes a systematic assessment plan and its application to ongoing assessment activities. The model allows individual colleges and schools to incorporate reliable and valid measures aligned with their own pedagogy and preexisting quality measures. Other models exist2 and have some similarities but either have not been empirically tested or have been used for a single class vs. an entire curriculum.3 The proposed model has not yet been tested, although evidence for specific relationships within the model (usually bivariate) is presented to support its conceptualization.

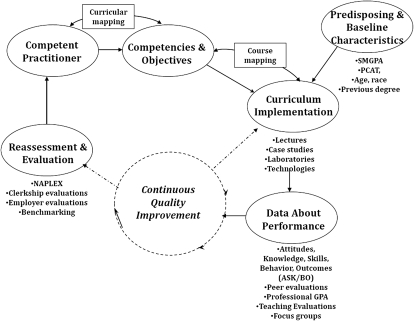

The curricular assessment model (Figure 1) incorporates principles of continuous quality improvement (CQI) in the health professions.4,5 The primary CQI tenets incorporated into the model include goal and objective development and linkage; reliable and valid measurements to assess the performance of a college on its goals and objectives; and routine reassessment of the endpoints and performance. The model and the specific patterns of the relationships illustrated among the components can be empirically tested and elucidated using multivariate and structural equation models. Data used in the model are not necessarily new or collected exclusively for quality assessment and improvement purposes, but rather are collected as part of a college's routine, ongoing activities. In this article, the 6 components of the CQI model are discussed, including the definition of a competent practitioner, development of the core curricular competencies and course objectives, students’ baseline characteristics, implementation of the curriculum, data collection about the students’ actual performance on the curriculum, and reassessment and evaluation of curricular outcomes.

Figure 1.

College of pharmacy assessment model.

APPLICATION OF THE CQI MODEL TO CURRICULUM ASSESSMENT PROGRAMS

The Competent Practitioner

The mission of a college is generally stated as some permutation of educating contemporary practitioners who are competent to practice. Professional competency is an ever-changing construct requiring ongoing assessment, evaluation, innovation, and adaptation because of the changing nature of pharmacy practice. Information regarding the knowledge, skills, and attitudes (eg, professionalism) of a competent practitioner can be routinely solicited from preceptors, alumni, and employers. For example, experts’ opinions regarding student and pharmacist competency can be solicited during preceptor training programs, from local and national advisory boards, and by surveying employers of the college's graduates, among others. Information regarding contemporary practice can then be translated into professional competencies and objectives.

Core Competencies and Objectives

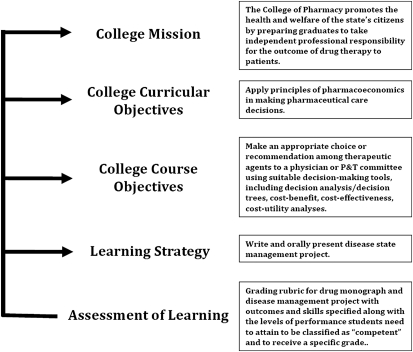

The second component of the assessment model is the core competencies and objectives at both curricular and course levels. A college's assessment plan should be directly aligned with its mission and goals and its strategies for attaining those goals (Figure 2). Core curricular competencies are central to successfully assessing the curriculum and ensuring that the desired learning and performance outcomes are met. Curricular competencies are dynamic and updating them is an ongoing process. Developing core curricular competencies requires consideration of multiple resources.1 In addition to the opinions of external and internal stakeholders, other useful resources include Center for the Advancement of Pharmaceutical Education guidelines,6 curricular competencies from other colleges and schools, and Appendix B of the ACPE Guidelines and Standards.1 The final version of the institution's specific competencies should receive formal support of the faculty and be communicated to outside stakeholders (eg, preceptors, alumni, and employers).

Figure 2.

Pathway for Curricular Development and Assessment

Figure 2 illustrates how the college-wide mission can be transformed into measurable learning and assessment strategies. For example, suppose a college's mission is to “promote health and welfare of the citizens…by preparing graduates to take independent professional responsibility for the outcome of drug therapy in patients.” One model that describes a variety of drug therapy treatment outcomes is the Economic, Clinical, and Humanistic Outcomes (ECHO) model.7 Using the ECHO model, an appropriate core curricular objective would be to “apply principles of pharmacoeconomics in making pharmaceutical care decisions.” A fitting course objective for the curriculum might be to “make an appropriate choice or recommendation…including decision analysis/decision trees, cost-benefit-cost effectiveness or cost-utility analysis.” The learning strategy used to evaluate whether the course objective is met might be “an assignment developing a medication monograph that includes cost/pharmacoeconomic considerations.” Finally, the assessment of learning might use a rubric to evaluate the medication monograph. This approach links assessment of learning directly to the mission of the college.
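The pathway from mission to assessment described above can be sketched as a small data structure. The following Python sketch is illustrative only: the class names, field names, and the `unassessed_objectives` helper are hypothetical, the quoted texts are paraphrased from the example above, and the second course objective is invented solely to show how a gap in the mapping might be detected.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class CourseObjective:
    text: str
    learning_strategy: Optional[str] = None  # eg, an assignment
    assessment: Optional[str] = None         # eg, a rubric


@dataclass
class CoreCompetency:
    text: str
    course_objectives: List[CourseObjective] = field(default_factory=list)


@dataclass
class Mission:
    text: str
    competencies: List[CoreCompetency] = field(default_factory=list)


def unassessed_objectives(mission: Mission) -> List[str]:
    """Return course objectives that lack a learning strategy or an assessment."""
    gaps = []
    for competency in mission.competencies:
        for objective in competency.course_objectives:
            if objective.learning_strategy is None or objective.assessment is None:
                gaps.append(objective.text)
    return gaps


# Paraphrased from the pharmacoeconomics example in the text.
monograph = CourseObjective(
    text="Make an appropriate choice or recommendation using pharmacoeconomic analysis",
    learning_strategy="Medication monograph with cost/pharmacoeconomic considerations",
    assessment="Rubric for the medication monograph",
)
# Hypothetical objective, added only to demonstrate gap detection.
unmapped = CourseObjective(text="Hypothetical objective with no assessment yet")

mission = Mission(
    text="Prepare graduates to take responsibility for drug therapy outcomes",
    competencies=[
        CoreCompetency(
            text="Apply principles of pharmacoeconomics in pharmaceutical care decisions",
            course_objectives=[monograph, unmapped],
        )
    ],
)

gaps = unassessed_objectives(mission)
print(gaps)
```

Keeping each course objective attached to its learning strategy and assessment makes it straightforward to list objectives for which the link back to the mission is incomplete.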

In a college's assessment program, steps that are globally designated as developing core competencies and objectives are: (1) defining the outcome, (2) identifying approaches to measure the outcome, (3) setting the acceptable standard of expected outcome (ie, the benchmark), and (4) determining the specifics of data collection.4,5 Curricular mapping is a strategy for aligning the traits and attributes of a competent practitioner with the college's core competencies and course/lecture objectives.2

Mapping has 3 applications in this assessment model. For the purpose of describing this model, the first 2 applications are curricular mapping and course mapping. The purpose and process are the same but the content and level of specificity in the measurement are different. The third application, mapping to measurement of the level of competency, is discussed later in the paper. Figure 1 illustrates that curricular mapping links the knowledge and skills required by a competent practitioner with the core curricular competencies that must be met to practice competently. Curricular mapping was conceived as a process for recording the content and skills actually taught in the classroom. It has served as both an instrument and a procedure for determining and monitoring the curriculum.8 However, in the nearly 3 decades since it was first described, descriptions of curricular mapping have expanded to include mapping intended outcomes and identifying acceptable outcome standards (eg, curricular competencies and course objectives). In contrast, course mapping links the core curricular competencies with the subsequent course- and lecture-level objectives, learning strategies, and the final endpoint, assessment of learning outcomes. In the model (Figure 1), course mapping links competencies and objectives with curriculum implementation.

Assessment of Student Baseline Characteristics

Understanding the impact of inherent differences among students’ baseline predisposing factors (eg, educational, social, and economic background) is a prerequisite to discussing the hypothesized causal path between core competencies and objectives and implementation of the curriculum. Differences in baseline predisposing factors (eg, learning styles) affect individual students’ responses to teaching styles and may indirectly affect outcomes. Whether the impact of baseline predisposing factors on learning outcomes is direct or indirect via learning strategies incorporated into the implementation of the curriculum has not yet been adequately studied, as evidenced by the assumption of a direct impact in the studies cited above. In short, students’ baseline knowledge, skills, and other abilities must be controlled for before outcomes can be attributed solely to the curriculum's implementation. For example, faculty members sometimes assume that students “know nothing” when they enter pharmacy school. This is a faulty assumption, as past experiences do impact progression throughout the curriculum.9 Students admitted into the college often have work experience as pharmacy technicians, have pharmacist family members, or have volunteered in pharmacies. The curriculum must take “starting point” heterogeneity into account and assess whether it modifies students’ success with the implemented curriculum.

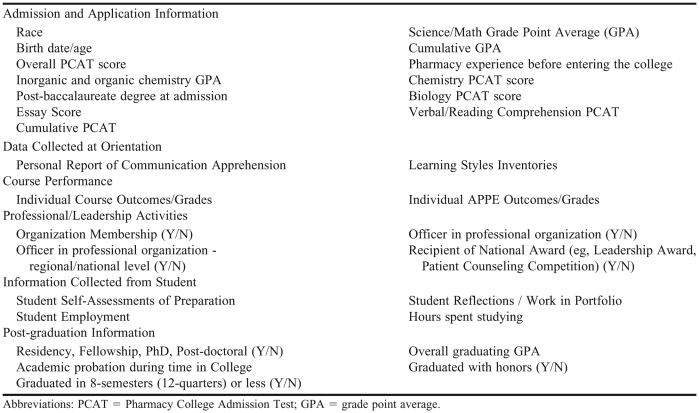

To predict learning outcomes, various sources of information are used to assess students’ baseline status at the time they enter college. Typical baseline characteristics (Figure 1) gathered for learning outcome assessment purposes include information presented in the students’ applications, including gender,10-14 race,10-14 age,10,15,16 science/math grade point average (GPA),10,15 Pharmacy College Admission Test (PCAT) scores (components and/or composite),10,15,17,18 prior academic degree,10,12,18-21 and grades from prerequisite courses.17,22,23 Other baseline information valuable to curriculum implementation (eg, teaching styles/strategies to optimize student learning) includes assessment of students’ computer skills, knowledge of pharmacy practice and professional pathways, and educational foundation aptitudes, such as communication apprehension24,25 and learning style.26,27

Implementation of the Curriculum

Successful implementation of the curriculum is facilitated by course mapping. As part of the mapping process, accreditation standards, individual course objectives, teaching methods, materials, and anticipated assessment methods (Figure 2) should be periodically reviewed and/or approved by the college's curriculum committee to ascertain currency and appropriateness. Instructors should be able to explain: how the course will be taught (eg, primarily lecture, seminar, laboratory, case studies); whether the teaching and assessment methods are appropriate for the course and allow adequate ascertainment of whether the learning objectives were met; and how the course objectives are aligned and/or contribute to meeting the college's core curricular competencies.

As part of its curriculum implementation, a college provides a variety of learning activities in the curriculum. Colleges use enhanced technology to transmit classroom lectures to students in other parts of the state, nation, and world; and college faculty members develop teaching strategies, such as skill-based laboratories, case studies, assignments to reinforce and assess students’ knowledge, role-playing scenarios, and formal peer and faculty assessments of knowledge and clinical and communication skills. Colleges must ensure a direct link between competencies and objectives and learning strategies implemented in the curriculum to achieve the intended professional outcomes. This is most effectively accomplished through course mapping.

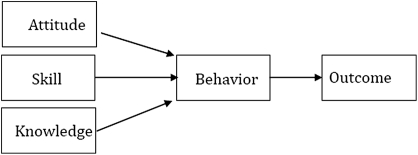

Another potential measure of the effectiveness of the teaching process and the link between competencies and objectives, implementation of the curriculum, and assessment of its outcomes is a melding of the ASK (attitude, skills, knowledge) and ABO (attitude, behavior, outcomes) models (Figure 3) to drive development and assessment of relevant objectives and learning outcomes. Using the ASK model as part of the assessment model recognizes that certain attitudes, skills, and knowledge are core competencies and objectives of the curriculum. Successful implementation of the curriculum assumes that inputs, such as classroom and practice experiences, will be used to achieve the desired outcome, ie, transforming a student into “a successful, competent practitioner with an exceptional professional attitude that motivates the pharmacist to perform at the highest skill levels using up-to-date clinical and non-clinical knowledge.” Theoretically, implementation of the curriculum passes on knowledge, skills, and attitudes that are melded to shape the appropriate academic and professional practice behaviors that result in the desired academic, professional, and clinical outcomes. However, current measurement models assume that all inputs into the curriculum contribute equally to the successful development of a pharmacist, despite the fact that one instructional technique may be better than another at imparting knowledge or attitude change. Careful examination and elucidation of the ASK/ABO portion of the model embedded in the “implementation of the curriculum” can and should be empirically tested. The Journal is replete with examples of single and combined teaching methods that affect knowledge, but assessment of the impact of different pedagogical methods on change in various other aspects of ASK/ABO is rare. For example, in Volume 75, Issue 6, of the Journal, 8 articles were published that met this description.28-35

Figure 3.

Development of Professional Practice Behaviors and Outcomes

Data Collection About Performance

Once the adequacy of the curriculum's implementation is ensured, valid assessment of attainment of a college's competencies can be made. Data collection consumes the most time, effort, and resources in a typical assessment program. Colleges can use multiple objective and subjective measures of effectiveness to assess the curriculum and its implementation and closely monitor students’ progress throughout their time in the college.

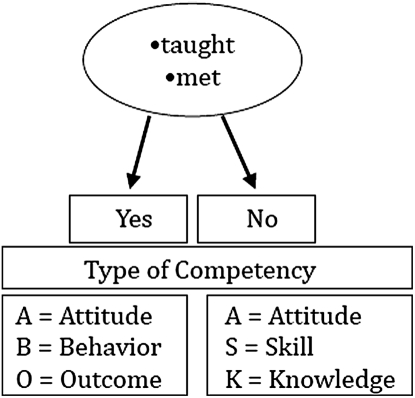

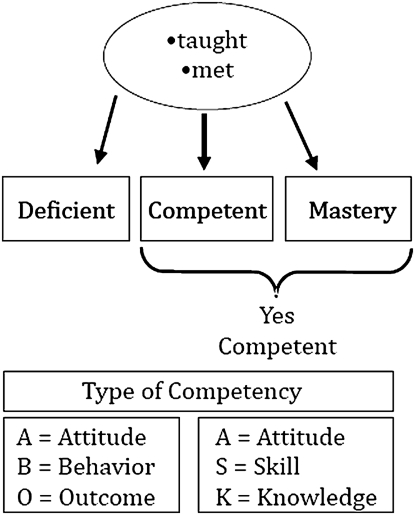

Figures 4 and 5 show 2 measurement models of competency assessment. The pedagogical distinction between the 2 models is essential to the quantitative and reproducible assessment of students’ learning and curriculum evaluation, but the individual curricular competencies and course objectives are the same in both models. Figure 4 shows a more traditional assessment model wherein the indicator is, “Did you teach the material in your class?” This binary outcome measure is different from a model that determines the competency level that should be attained at a particular point in the curriculum (eg, first, second, third, or fourth year). Newer mapping models include metrics for ASK and ABOs, such as introduction and reinforcement of and emphasis on content.2 However, the specific set of metrics in this article refers to the outcomes of the teaching process vs. the teaching process itself and provides different information for decision-making. Based on models such as Bloom's Taxonomy of Learning,36 for example, a learning objective that must be demonstrated is different from one that is just to be understood. In the model in Figure 5, assessment of “competence” and “mastery” of outcomes is much more complicated and difficult than simply assessing whether specific content has been taught in a course. Along with teaching of specific content or skills, the question “Do students meet the course's objectives (eg, knowledge, skill, attitudes) at the designated learning level?” must be answered. Teaching the content alone does not ensure that students are competent or have mastered the content.

Figure 4.

Presence or absence assessment model for curricular mapping for competency-based course outcomes.

Figure 5.

Multilevel assessment model for curricular mapping for competency-based course outcomes.

If course mapping is implemented as intended, once course objectives are developed and articulated for each class, then faculty members will formally develop specific learning strategies appropriate for each objective. The learning assessment strategy should be consistent with the level of learning that is expected (introductory, intermediary, advanced, or another appropriate level using Bloom's or another taxonomy). It also should allow for systematic building of course complexity analogous to moving up Bloom's Taxonomy; for example, from knowledge to application to synthesis. Each learning strategy should have its own objective measure(s) of performance at the appropriate level of learning, as would assignments, laboratory skill exercises, and introductory and advanced experiential programs.

After the objectives and competencies and their measurement are agreed upon, the body responsible for curricular assessment should develop a strategy that adequately reflects the complexities of the curriculum and the sophistication of learning that is required to become a competent pharmacy professional. This strategy includes assessing whether students are being taught the knowledge, attitudes, and skills needed to meet the competencies; determining the level at which the ASK competencies and objectives are being taught in the curriculum (introductory, advanced); establishing how competencies are being assessed (eg, are the assessment strategies appropriate for the level of learning); and confirming that the ASK competencies and objectives can be applied in appropriate situations. Assessment strategies should ensure that both teaching and assessments are aligned at the correct levels.37 For example, questions on examinations that require students to compare and contrast (eg, cost-utility with cost-benefit analysis), when the material is taught at the knowledge level (eg, list the 4 types of pharmacoeconomic analyses), are not good indicators of the curriculum's effectiveness.
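The alignment check described above can be illustrated with a minimal Python sketch. It assumes the 6 levels of Bloom's original taxonomy, ordered from lowest to highest, and treats a compare-and-contrast question as an "analysis"-level item; that classification, the function names, and the item identifiers are assumptions made for illustration rather than part of the model.

```python
from typing import Dict, List, Tuple

# Levels of Bloom's original taxonomy, ordered from lowest to highest.
BLOOM_LEVELS = [
    "knowledge", "comprehension", "application",
    "analysis", "synthesis", "evaluation",
]


def bloom_rank(level: str) -> int:
    """Return the position of a level in the taxonomy (0 = lowest)."""
    return BLOOM_LEVELS.index(level)


def items_above_taught_level(
    taught_levels: Dict[str, str],
    exam_items: List[Tuple[str, str, str]],
) -> List[str]:
    """Return ids of exam items assessed at a higher level than the topic was taught.

    exam_items holds (item id, topic, assessed level) tuples.
    """
    flagged = []
    for item_id, topic, assessed_level in exam_items:
        taught_level = taught_levels[topic]
        if bloom_rank(assessed_level) > bloom_rank(taught_level):
            flagged.append(item_id)
    return flagged


# Hypothetical course map: the topic is taught at the knowledge level.
taught_levels = {"types of pharmacoeconomic analyses": "knowledge"}
exam_items = [
    # "List the 4 types of pharmacoeconomic analyses"
    ("Q1", "types of pharmacoeconomic analyses", "knowledge"),
    # "Compare and contrast cost-utility with cost-benefit analysis"
    ("Q2", "types of pharmacoeconomic analyses", "analysis"),
]

flagged_items = items_above_taught_level(taught_levels, exam_items)
print(flagged_items)
```

Items flagged this way are candidates for review: either the question is rewritten to match the taught level, or the teaching is raised to the level the question requires.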

In addition to student-specific ASK and ABO, colleges and schools may have various sources of curricular assessment data (Appendix 1), including information from faculty members, preceptors, alumni, and employers. The strategies for gathering these data include closed- and open-ended questions on teaching evaluations, peer evaluation, focus groups with students near the end of each semester, and curriculum committee review.

Colleges’ curricular assessment programs should provide course coordinators and faculty members with feedback and guidance regarding portions of the course that can be improved and provide instructors with students’ opinions regarding whether course objectives were met. Most universities require student evaluations for faculty members in professional classes. Questions in university-wide question pools might include student assessments of the instructors’ description of course objectives and whether course learning activities met the objectives. In addition to the university-wide questions, individual colleges might query students with measures about learning competencies directly relevant to the program, such as questions germane to lifelong learning and problem solving. Course effectiveness-related questions might include items to assess whether the instructor “taught course material in a way that was relevant to the practice of pharmacy,” “taught how to identify possible solutions to problems related to the course material,” and “integrated course materials relevant to material from other courses.” Course and instructor evaluations might have open-ended questions to provide faculty members with extra subjective and specific course feedback. Open-ended comments should be confidential and available only to the instructor, unless the instructor chooses to share them.

Colleges also may use informal and formal peer teaching evaluations to ensure effective implementation of the curriculum. Informal formative peer evaluations are strongly encouraged for new faculty members, new courses, and, periodically, for established courses. Informal assessments and evaluations might be coordinated by individual instructors with colleagues on an ad hoc basis. In both instances, when a formal peer evaluation is undertaken, faculty members internal and external to the college review, assess, and provide a summative evaluation of the faculty member's teaching. In each of the previous examples, the aggregate results of the quantitative and qualitative assessments can be used to make changes in the curriculum's implementation to improve outcomes.

When using new and innovative assessments, evaluation of the reliability and validity of the measures is an important component in assessing student learning. Every effort should be made to incorporate appropriate psychometric principles to assess the quality of the collected information.38 In other cases, preexisting measures validated in other disciplines can be used to assess the impact of the curriculum on areas other than academics. For example, one college investigated student burnout in its new distance learning program39 and another assessed perceived stress of students in a 3-year doctor of pharmacy program.40

Students’ attainment of nonacademic curricular and course outcomes can be assessed in a variety of ways and levels in the classroom, during clinical training, and postgraduation. Professional training presents challenges in instilling traditional values of altruism, responsibility, accountability, and other professional attributes. It is important to determine whether educational programs are providing students with the correct professional attitudes and whether students are putting them into practice. If any 1 leg of the 3-legged stool (ie, attitudes, skills, knowledge) is faulty, corrective measures are needed. Although it will be difficult, examining performance outcomes in authentic practice situations is necessary to ascertain whether students are internalizing professional attitudes. Given the stated mission of the college and profession (eg, promote health and welfare of the state's citizens), professional attitudes should be reflected in practice behaviors and, ultimately, better quality of care after graduation.

A significant shift in the balance between academic classroom and practice-oriented coursework has occurred since the implementation of Standards 2007. For example, within the past 5 years, 300 hours of introductory pharmacy practice experiences (IPPEs) have been added to curricular requirements.1 Assessment of experiential programs, including preceptor assessments in IPPEs and advanced pharmacy practice experiences (APPEs), is one of the most important yet unreliable sources of information about our students’ performance and preparation for practice. Colleges use preceptors’ assessments of student performance during APPEs as the primary performance-based assessment of the curriculum. Over the past 2 years or more, the American Association of Colleges of Pharmacy (AACP) has formed working groups to develop standardized measures for IPPE and APPE performance competencies. In addition, multiple consortia of colleges have developed standardized instruments for APPE assessment, although most of the consortia are geographically based.41

Next, during and at the end of students’ tenure in the college, various indicators of professional and leadership development, as a proxy for professionalization, can be assessed at the local, state, and national levels, including research awards, leadership awards (eg, American Pharmacists Association, American Society of Health-System Pharmacists [ASHP], Rho Chi, Patient Counseling Competition) and elected and appointed positions (Academy of Student Pharmacists, ASHP, Rho Chi, Phi Lambda Sigma, Kappa Epsilon). At the other end of the spectrum, the effectiveness of a college curriculum also includes minimization of unprofessional behaviors, such as student conduct code violations, academic dishonesty, chemical impairment, Health Insurance Portability and Accountability Act (HIPAA) violations, and harassment.

Factors that compete with students’ academic work may contribute to more or less success in the curriculum. For example, the number of hours spent participating in community activities or studying may have an impact on student success.16 While these factors are not within the control of the college, they should be part of the data that are collected and statistically controlled to assess their direct and indirect impact on performance outcomes.

Colleges also have more subjective means of assessing curricular effectiveness upon completion of the curriculum and after graduation. For example, student self-assessment of learning can provide valuable data to identify curriculum weaknesses,38 although it should be used in conjunction with expert assessment (eg, through APPEs). Self-assessment allows for a rough estimate of students’ preparation to perform clinical skills as well as comparisons of consecutive classes to determine students’ perceptions of their ability to perform these skills at the same point in the curriculum. Likewise, over several years, trends in student perceptions of performance can be assessed across the 4 years of the curriculum. Similarly, colleges can examine whether improvement in students’ self-assessments occurs at expected times given the curricular content and whether the self-assessments reflect a relative lack of proficiency in areas of the curriculum that are not given much attention. For example, if a curriculum contains little or no financial management coursework, students’ perceptions in this area should be low relative to other areas, such as pharmacotherapy. Also, if a course is scheduled in the second year, students should be expected to improve between the end of the first and second years.

Colleges have a myriad of possibilities to routinely assess the quality of the curriculum, its implementation, and outcomes. Colleges should be continually evaluating alternative performance-based assessments at several different levels. As the academic enterprise evolves, innovative assessment strategies are needed to evaluate learning at the individual “classroom” level (ie, virtual classrooms; in-class, point-of-delivery assessment using “voting / polling” technology). More importantly, further evaluation of comprehensive endpoint assessments is warranted, including standardized practice experiences41 and progress42-44 and capstone/high-stakes testing.45,46

Reassessment and Evaluation

Most states require students to pass the North American Pharmacy Licensure Examination (NAPLEX) and the Multistate Pharmacy Jurisprudence Examination for licensure. High-stakes examinations of this type allow benchmarking with other colleges and schools on a standardized summative assessment measure, along with data that could potentially inform responsible academic bodies about the success of curricular changes (eg, changes in the aggregate and area scores of the NAPLEX). While one could argue that NAPLEX scores could be used to measure performance of the curriculum, they are also both a curriculum endpoint and professional “entry point.” Moreover, until NAPLEX data obtainable from the National Association of Boards of Pharmacy are more specific and can be aligned directly with curricular outcomes, they remain a gross measure of curricular success and, as such, are simply useful for benchmarking with other colleges and schools.

While most studies show that experiences in pharmacy school are the best predictors of post-licensure examination success47 (a direct effect), the fit and specification of the model need to be tested. In its most direct interpretation, the model would support the notion that participation in the curriculum is the only factor that impacts student performance. However, factors other than pharmacy training have an association with NAPLEX scores. For example, prepharmacy GPA and prepharmacy students’ scores on the PCAT48,49 are associated with NAPLEX scores, as well as GPA in pharmacy courses. As is the case with the effect of baseline predisposing characteristics (eg, inherent academic abilities) on curricular performance, individual predisposing baseline differences may result in score differences on the NAPLEX, although the exact mechanisms of these differences have not yet been elucidated.

Theoretically, curricular assessment plans should never be complete if colleges are committed to continuous curricular improvement. The most distinctive feature of the model (Figure 1) is the CQI feedback loop. The feedback loop represents the notion that assessing students’ learning is an ongoing, ever-evolving task. Two dashed arrows emanating from the feedback loop in Figure 1 illustrate the ongoing nature of the data-reassessment/evaluation relationship. For example, when curricular changes are made, performance data must be collected, evaluated, and assessed, and an improvement strategy developed and implemented. After the change is implemented, additional data are collected to ascertain whether the expected outcomes were achieved, then the cycle is repeated. As with the continuing evolution of the curriculum, the assessment plan needs to be routinely evaluated and reassessed to ensure that it aligns with the curriculum.
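One pass through the feedback loop described above can be sketched in Python as a comparison of observed performance against a benchmark, repeated after a curricular change. The outcome names, rubric scores, and benchmark values below are hypothetical and serve only to illustrate the cycle; they are not data from any college.

```python
from statistics import mean
from typing import Dict, List


def outcomes_below_benchmark(
    scores_by_outcome: Dict[str, List[float]],
    benchmarks: Dict[str, float],
) -> Dict[str, float]:
    """Return outcomes whose mean score falls below the benchmark, with the observed mean."""
    flagged = {}
    for outcome, scores in scores_by_outcome.items():
        observed = mean(scores)
        if observed < benchmarks[outcome]:
            flagged[outcome] = observed
    return flagged


# Hypothetical benchmarks on a 4-point rubric scale.
benchmarks = {"pharmacoeconomics monograph": 3.0, "patient counseling": 3.0}

# Cycle 1: data collected before any curricular change (illustrative values).
cycle_1 = {
    "pharmacoeconomics monograph": [2, 3, 2, 3],
    "patient counseling": [4, 4, 3, 4],
}
needs_improvement = outcomes_below_benchmark(cycle_1, benchmarks)
print(needs_improvement)

# Cycle 2: data collected after an improvement strategy is implemented.
cycle_2 = {
    "pharmacoeconomics monograph": [3, 4, 3, 4],
    "patient counseling": [4, 4, 3, 4],
}
still_flagged = outcomes_below_benchmark(cycle_2, benchmarks)
print(still_flagged)
```

In practice the benchmark itself would also be revisited on each cycle, since the model calls for reassessing the endpoints as well as performance against them.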

Faculty members involved in curricular assessment should routinely ask questions that reflect the following key assessment tenets over time. These questions should allow colleges’ curricula to keep up with professional practice advancements and change in response to areas identified as not meeting learning and performance standards.

Reassess the rationale for selecting outcomes. As the profession and curricular content evolve, so should the outcomes selected as indicators of success. While the specific outcomes may change and evolve, the rationale for selecting the outcomes should remain relatively stable. For example, curricular and course outcomes should reflect successful completion of the college's mission. There should be direct alignment between the learning level that is required and the type of outcome (ie, memorization vs. evaluation of information). While the rationale for selecting specific outcomes should not radically change with the newest fad, it should inform colleges’ assessment priorities and drive the day-to-day plan to efficiently, effectively, and regularly assess their educational outcomes. Prioritizing is essential. There are never sufficient resources to assess every outcome and doing so is not necessary. A priority should be to assess students’ attitudes, skills, and knowledge in authentic circumstances. The heart of the task is to measure well-selected outcomes covering the most important attributes that represent the curriculum's attitude, behavior, and cognitive outcome goals.

Continually assess reliable and valid methods of evaluating student attainment of learning goals. Instruments should measure students’ progress toward desired outcomes and competencies; general knowledge and comprehension; specific knowledge and comprehension; and critical thinking and problem-solving skills. These enhanced assessment strategies should incorporate complex tasks that integrate meaningful practice-based knowledge and skills and should be tested and subject to validation by others. More innovative, thoughtful, and selective assessment methods are needed.

Ensure legitimate faculty and administrative support of assessment and evaluation activities to enhance perceptions of their value. Faculty members routinely experiment with innovative teaching strategies, but results are infrequently evaluated or reported in the literature. Faculty members rarely make time to document teaching innovations and effectiveness because of the perception that academic scholarship has low value and rewards compared with other types of scholarship. Faculty and administration support of those charged with assessing the curriculum and its success is equally essential in attaining student learning outcomes and in facilitating changes when weaknesses are uncovered. College assessment programs require meaningful support at all levels, which in turn requires adequate resources for faculty release time, data collection, analysis, and committee meetings, as well as acknowledgement of the value of the activity for promotion and tenure purposes. In a university system where “academic freedom” is an important foundational tenet, there has to be administrative authority to ensure that curricular changes are informed by assessment outcomes. Strong leadership is necessary to balance academic freedom issues with the implementation of a quality educational strategy. Faculty, college, and university support of the persons responsible for implementing and evaluating the curriculum is essential.

Routinely review curricular content related to attitudinal, behavioral, and knowledge-learning outcomes. Identification of appropriate practice competencies is an important but difficult first component in assessing curricular outcomes. However, even more difficult are the second and third components: identification of the critical knowledge, skill, and attitude elements of the competencies and development of mechanisms to evaluate them. Honest and substantive mapping of the knowledge, attitudes, and skills embodied in the curriculum also must be conducted to ascertain whether courses are really offering learning strategies (eg, authentic practice simulations or experiential opportunities). Course evaluations should be more reflective and precise and relate directly to curriculum objectives. Locating informational and assessment gaps is a continual part of the plan in curricular and course assessments.

Determine what is to be done with the collected data for program improvement. Data are often collected and never used. Because collecting information without a direct and meaningful purpose is burdensome to both faculty members and students, colleges must avoid collecting redundant information by automating data collection, reporting, and assessment. Multiple means of collecting and prioritizing data must be integrated into normal operations, and various sources of preexisting data should be used to triangulate on methods of assessing successful learning, such as preceptor evaluations and outcomes in performance-based classes.

SUMMARY

Emphasis placed on accountability for educational outcomes is vital to a superior education and to providing citizens with the highest quality care. Although only some portions of the proposed CQI model have been tested empirically, elaboration of the model to identify the most important direct and indirect factors affecting the explanation of academic success would be valuable. The model provides guidance for testing quality-related factors that can be manipulated to investigate their impact on learning and skill performance. In order to adequately test this model, faculty members involved in curricular assessment should routinely reassess the rationale for selecting outcomes; continually explore reliable and valid methods of assessing whether students have achieved their learning goals; obtain legitimate faculty and administrative support for assessment activities; routinely review curricular content related to attitudinal, behavioral, and knowledge-learning outcomes; and determine what to do with the assessment data after they are collected.

Appendix 1.

Examples of Data for Curricular Assessment

REFERENCES

- 1. Accreditation Council for Pharmacy Education. Standards 2007. Accreditation Standards and Guidelines. http://www.acpe-accredit.org/standards/default.asp. Accessed October 6, 2011.

- 2. Kelley KA, McAuley JW, Wallace LJ, Frank SG. Curricular mapping: process and product. Am J Pharm Educ. 2008;72(5):Article 100. doi: 10.5688/aj7205100.

- 3. Draugalis JR, Slack MK. A continuous quality improvement model for developing innovative instructional strategies. Am J Pharm Educ. 1999;63(4):354–358.

- 4. Institute of Medicine. Health Professionals Education: A Bridge to Quality. Washington, DC: National Academy Press; 2003.

- 5. Batalden PB, Stoltz PK. A framework for the continual improvement of health care: building and applying professional and improvement knowledge to test changes in daily work. Jt Comm J Qual Improv. 1993;19(10):424–452. doi: 10.1016/s1070-3241(16)30025-6.

- 6. American Association of Colleges of Pharmacy. Center for the Advancement of Pharmaceutical Education. Educational Outcomes 2004. http://www.aacp.org/resources/education/Documents/CAPE2004.pdf. Accessed October 6, 2011.

- 7. Kozma CM, Reeder CE, Schultz RM. Economic, clinical and humanistic outcomes: a planning model for pharmacoeconomic research. Clin Ther. 1993;15(6):1121–1132.

- 8. O'Malley PJ. Learn the truth about curriculum. Exec Educ. 1982;4(8):14–26.

- 9. Barnett ME, T-L Tang T, Sasaki-Hill D, Kuperberg JR, Knapp K. Impact of previous pharmacy work experience on pharmacy school academic performance. Am J Pharm Educ. 2010;74(3):Article 42. doi: 10.5688/aj740342.

- 10. Bandalos DL, Sedlacek WE. Predicting success of pharmacy students using traditional and nontraditional measures by race. Am J Pharm Educ. 1989;53(2):145–148.

- 11. Ried LD, McKenzie M. A preliminary report on the academic performance of pharmacy students in a distance education program. Am J Pharm Educ. 2004;68(3):Article 65.

- 12. Chisholm MA, Cobb HH, Kotzan JA. Significant factors for predicting academic success of first-year pharmacy students. Am J Pharm Educ. 1995;59(4):374–376.

- 13. Kelly KA, Secnik K, Boye ME. An evaluation of the pharmacy college admissions test as a tool for pharmacy college admissions committees. Am J Pharm Educ. 2001;65(3):225–230.

- 14. Carroll CA, Garavalia LS. Gender and racial differences in select determinants of student success. Am J Pharm Educ. 2002;66(4):382–387.

- 15. Meagher DG, Pan T, Perez CD. Predicting performance in the first year of pharmacy school. Am J Pharm Educ. 2011;75(5):Article 81. doi: 10.5688/ajpe75581.

- 16. Charupatanapong N, McCormick WC, Rascati KL. Predicting academic performance of pharmacy students: demographic comparisons. Am J Pharm Educ. 1994;58(3):262–268.

- 17. Kotzan JA, Entrekin DN. Validity comparison of PCAT and SAT in the prediction of first-year GPA. Am J Pharm Educ. 1977;41(1):4–7.

- 18. Chisholm MA, Cobb HH, DiPiro JT, Lauthenschlager GJ. Development and validation of a model that predicts the academic ranking of first-year pharmacy students. Am J Pharm Educ. 1999;63(4):388–394.

- 19. Chisholm MA. Students performance throughout the professional curriculum and the influence of achieving a prior degree. Am J Pharm Educ. 2001;65(4):350–354.

- 20. Chisholm MA, Cobb HH, Kotzan JA, Lautenschlager G. Prior four-year degree and academic performance of first-year pharmacy students: a three year study. Am J Pharm Educ. 1997;61(3):278–281.

- 21. Torosian G, Marks RG, Hanna DW, Lepore RH. An analysis of admission criteria. Am J Pharm Educ. 1978;42(1):7–10.

- 22. Lioa WC, Adams JP. Methodology for the prediction of pharmacy student success. I: preliminary aspects. Am J Pharm Educ. 1977;41(2):124–127.

- 23. Hardigan PC, Lai LL, Arneson D, Robeson A. Significance of academic merit, test scores, interviews and the admissions process: a case study. Am J Pharm Educ. 2001;65(1):40–44.

- 24. Hastings JK, West DS. Comparison of outcomes between two laboratory techniques in a pharmacy communications course. Am J Pharm Educ. 2003;67(4):Article 101.

- 25. Berger BA, Baldwin JH, McCroskey JC, Richmond VP. Communication apprehension in pharmacy students: a national study. Am J Pharm Educ. 1983;47(2):95–101.

- 26. Shuck AA, Phillips CR. Assessing pharmacy students’ learning styles and personality types: a ten-year analysis. Am J Pharm Educ. 1999;63(1):27–33.

- 27. Austin Z. Development and validation of the pharmacists' inventory of learning styles (PILS). Am J Pharm Educ. 2004;68(2):Article 37.

- 28. Gilligan AM, Warholak TL, Murphy JE, Hines LE, Malone DC. Pharmacy students’ retention of knowledge of drug-drug interactions. Am J Pharm Educ. 2011;75(6):Article 110. doi: 10.5688/ajpe756110.

- 29. Ryan GJ, Chesnut R, Odegard PS, Dye JT, Jia H, Johnson JF. The impact of diabetes concentration programs on pharmacy graduates’ provision of diabetes care services. Am J Pharm Educ. 2011;75(6):Article 112. doi: 10.5688/ajpe756112.

- 30. Curtin LB, Finn LA, Czosnowski QA, Whitman CB, Cawley MJ. Computer-based simulation training to improve learning outcomes in mannequin-based simulation exercises. Am J Pharm Educ. 2011;75(6):Article 113. doi: 10.5688/ajpe756113.

- 31. Woelfel JA, Boyce E, Patel RA. Geriatric care as an introductory pharmacy practice experience. Am J Pharm Educ. 2011;75(6):Article 115. doi: 10.5688/ajpe756115.

- 32. Brown SD, Pond BB, Creekmore KA. A case-based toxicology elective course to enhance student learning in pharmacotherapy. Am J Pharm Educ. 2011;75(6):Article 118. doi: 10.5688/ajpe756118.

- 33. Yuksel N. Pharmacy course on women's and men's health. Am J Pharm Educ. 2011;75(6):Article 119. doi: 10.5688/ajpe756119.

- 34. Roche VF, Limpach AL. A collaborative and reflective academic advanced pharmacy practice experience. Am J Pharm Educ. 2011;75(6):Article 120. doi: 10.5688/ajpe756120.

- 35. Petrie JL. Integration of pharmacy students within a level II trauma center. Am J Pharm Educ. 2011;75(6):Article 121. doi: 10.5688/ajpe756121.

- 36. Bloom BS, Engelhart MD, Furst EJ, Hill WH, Krathwohl D. Taxonomy of Educational Objectives: The Cognitive Domain. New York: McKay; 1956.

- 37. Alsharif NZ, Galt KA. Evaluation of an instructional model to teach clinically relevant medicinal chemistry in a campus and a distance pathway. Am J Pharm Educ. 2008;72(2):Article 31. doi: 10.5688/aj720231.

- 38. Ried LD, Brazeau GA, Kimberlin C, Meldrum M, McKenzie M. Students' perceptions of their preparation to provide pharmaceutical care. Am J Pharm Educ. 2002;66(4):347–356.

- 39. Ried LD, Motycka C, Mobley C, Meldrum M. Comparing burnout of student pharmacists at the founding campus with student pharmacists at a distance. Am J Pharm Educ. 2006;70(5):Article 114. doi: 10.5688/aj7005114.

- 40. Frick LJ, Frick JL, Coffman RE, Dey S. Student stress in a three-year doctor of pharmacy program using a mastery learning education model. Am J Pharm Educ. 2011;75(4):Article 64. doi: 10.5688/ajpe75464.

- 41. Ried LD, Nemire R, Doty R, et al. How do you measure SUCCESS? an advanced pharmacy practice experience competency-based student performance assessment program. Am J Pharm Educ. 2007;71(6):Article 128. doi: 10.5688/aj7106128.

- 42. National Association of Boards of Pharmacy. Pharmacy Curriculum Outcomes Assessment. http://www.nabp.net/programs/assessment/pcoa/faqs/#1. Accessed October 6, 2011.

- 43. Alston GL, Love BL. Development of a reliable, valid annual skills mastery assessment examination. Am J Pharm Educ. 2010;74(5):Article 80. doi: 10.5688/aj740580.

- 44. Szilagyi JE. Curricular progress assessments: the MileMarker. Am J Pharm Educ. 2008;72(5):Article 101. doi: 10.5688/aj7205101.

- 45. Thomas SG, Hester EK, Duncan-Hewitt W, Vallaume WA. A high-stakes assessment approach to applied pharmacokinetics instruction. Am J Pharm Educ. 2008;72(6):Article 146. doi: 10.5688/aj7206146.

- 46. Draugalis JR, Jackson TR. Objective curricular evaluation: applying the Rasch model to a cumulative examination. Am J Pharm Educ. 2004;68(2):Article 35.

- 47. Besinque K, Wong WY, Louie SG, Rho JP. Predictors of success rate in the California State Board of Pharmacy Licensure examination. Am J Pharm Educ. 2000;64(1):50–53.

- 48. Kuncel NR, Crede M, Thomas LL, Klieger DM, Seiler SN, Woo SE. A meta-analysis of the validity of the pharmacy college admission test (PCAT) and grade predictors of pharmacy student performance. Am J Pharm Educ. 2005;69(3):Article 51.

- 49. Lowenthal W, Wergin JF. Relationship among student preadmission characteristics, NABPLEX scores, and academic performance during later years in pharmacy school. Am J Pharm Educ. 1979;43(1):7–11.