Abstract

Objective. To implement and assess an interactive, clinically applicable first-year physiology course using team-based learning.

Design. The course was designed on a team-based learning backbone using 6 modules, pre-class preparation, a readiness-assurance process, and in-class application. Integrative cases were used to review concepts prior to examinations. Various assessment methods were used to measure changes, including course evaluations, an attitudinal survey tool, and a knowledge examination.

Assessment. Course evaluations indicated a higher perception of active learning in the revised format compared with that of the previous year's course format. There also were notable differences in opportunities to promote communication skills, work as part of a team, and collaborate with diverse individuals. The assessment of content knowledge indicated that students who completed the revised format course outperformed the previous year's students in both foundational knowledge and application-type questions.

Conclusion. Using more team-based learning within a physiology course had a favorable impact on student retention of material and attitudes toward the course.

Keywords: team-based learning, self-paced learning, higher-order learning, curriculum, assessment, physiology

INTRODUCTION

As part of its accreditation standards, the Accreditation Council for Pharmacy Education (ACPE) promotes the development of critical thinking and problem-solving skills supported through application-type exercises.1 This standard is consistent with research suggesting that learning that takes place in an appropriate context for the learner is superior to learning that occurs out of context.2 The context can be an environment (eg, an experiential practice site) or classroom activities relevant to the material being presented and, most importantly, to its eventual application. While many, if not all, pharmacy programs require physiology as a prerequisite course to admission, physiology courses may be aimed at general knowledge and comprehension of physiologic principles. The application of these concepts may occur at some later time, such as in pharmacotherapy courses or experiential training.

Large courses tend to use the lecture approach because it is an efficient and effective method of teaching knowledge-type material. According to McKeachie, instructors do not need to lecture when concepts are available in printed form at an appropriate level for the student.3 Instead, lecture should be used when the content cannot be learned through self-paced methods, such as reading or use of Web-based materials. This approach is supported by evidence that self-directed learning is more effective than lecture in the acquisition of foundational knowledge.4,5

As physiology is a prerequisite for pharmacy curricula and students will have varying degrees of comfort with the content, presenting physiology in a more self-directed learning environment could allow learners to focus on areas of their greatest weakness. With the responsibility for obtaining and mastering the foundational knowledge shifted to students, class time can be used to apply concepts relevant to pharmacy practice. In this learning environment, the emphasis consequently shifts to higher orders of learning (eg, application, analysis, synthesis and evaluation). This environment also would be expected to be more efficient for learning, as emphasis clearly is on the context in which a particular concept is learned.2

The use of methods such as team-based learning (TBL) allows for self-directed activities and focuses class time on application. The backbone of TBL is self-directed learning, and pre-exposure provides learners with a foundation on which to build connections. As students are provided with an increasing amount of background material, learning occurs more quickly and more deeply.2 In a meta-analysis, Hattie and Timperley found that allowing students to recognize their previously acquired cognitive ability is a powerful teaching practice, leading to enhanced student achievement.6

The goal of this course redesign was to increase active student engagement and focus on the application of physiologic concepts to pharmacy practice. The course was restructured using a backbone of TBL with the use of case studies and pre-class questions to facilitate discussion.

DESIGN

Physiology is a 4-credit-hour course offered during the first semester of the first year of pharmacy school at the University of North Carolina. Class was scheduled for 3 days per week as two 50-minute and one 2-hour session. There were 153 students enrolled in the course, 142 on the main campus in Chapel Hill and 11 at a satellite campus in Elizabeth City, NC. All class sessions were synchronously video teleconferenced. In most cases, the instructors in this course were also course instructors for the respective pharmacotherapy section of the material in subsequent years of the curriculum. All instruction originated from the Chapel Hill campus, even though there was a facilitator on the satellite campus to help with administrative logistics (eg, preparing folders). In previous years, the course had been managed and delivered by the School of Medicine using primarily a lecture approach.

The course was divided into 6 modules: cardiovascular, renal, central nervous system (CNS), gastrointestinal, respiratory, and endocrine physiology. Each content area was allocated approximately 8 hours of contact time, with the exception of gastrointestinal physiology, which was allocated only 4 hours. Students were randomly assigned to groups of 6 that were balanced for previous degree, reputation of the prior institution they attended (based on average SAT scores), age, and gender.
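The assignment procedure itself is not described in detail. As a rough illustration only, one common way to build randomized but balanced teams is to shuffle the roster, group students by a stratum label, and then deal them to teams in round-robin order. The function name `assign_teams`, the `id` and `stratum` fields, and the three made-up strata below are assumptions for this sketch, not the authors' method.

```python
import random
from collections import defaultdict


def assign_teams(students, team_size=6, seed=None):
    """Randomly assign students to teams while spreading each stratum evenly.

    `students` is a list of dicts with hypothetical keys "id" and "stratum"
    (for example, a combined label for prior degree and gender).
    """
    rng = random.Random(seed)
    n_teams = -(-len(students) // team_size)  # ceiling division

    # Shuffle first so the order within each stratum is random.
    shuffled = students[:]
    rng.shuffle(shuffled)
    # A stable sort groups strata together but keeps the random order inside each.
    shuffled.sort(key=lambda s: s["stratum"])

    # Deal students to teams in round-robin order, like dealing cards.
    teams = defaultdict(list)
    for position, student in enumerate(shuffled):
        teams[position % n_teams].append(student["id"])
    return dict(teams)


# Hypothetical roster: 153 students in 3 made-up strata (not study data).
roster = [{"id": i, "stratum": "ABC"[i % 3]} for i in range(153)]
teams = assign_teams(roster, team_size=6, seed=1)
team_sizes = sorted(len(members) for members in teams.values())
```

Because 153 is not a multiple of 6, a scheme like this yields a mix of 5- and 6-member teams. Balancing on several characteristics at once would need a richer stratum label or a different algorithm.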

Each module consisted of 3 phases: pre-class preparation, readiness-assurance testing, and application of concepts to patient cases. Pre-class preparation was achieved using instructor-developed readings based on the course textbook, and additional resources, such as review articles and primary literature, were used when appropriate. The instructor-developed readings summarized major concepts and were less than 16 pages in length, with an average word count per reading assignment of 5,300 words, including learning objectives, graphs, tables, and figures. When appropriate, the readings referred to further details in a textbook.

The second phase of each module occurred during the first in-class day of the module. Students first completed an individual readiness assurance test (iRAT), which consisted of 10 to 15 multiple-choice questions based on the instructor-developed reading material. The assessment represented a broad overview of the material that included basic definitions, terminology, and concepts. Next, students were administered a quiz with their assigned team in what is referred to as the team readiness assurance test (gRAT), which used IF-AT forms (Epstein Educational Enterprises, Cincinnati, OH) to provide learners with immediate feedback. After the iRAT and gRAT were completed, the faculty facilitator addressed any questions regarding the quiz and introduced topics that would be discussed during the case days.

In the third phase, applying and reinforcing foundational concepts were the primary focus. These discussions occurred on days with a 2-hour block to allow for adequate development of case application concepts as well as responses to student questions. On days that were 50-minute blocks, students were given the opportunity to complete assignments or prepare for the next section of material. For most modules, students were given patient cases prior to class and were expected to answer a series of questions relating to each case. These questions were divided into 2 categories: foundational knowledge (ie, questions they should look up prior to class and answer independently) and application of knowledge (ie, questions that required students to reflect on the foundational content prior to class). Students were encouraged to write down their responses to these pre-class questions. During class, the faculty facilitator began by reviewing the case and its related questions with the entire group of students. As much as possible, faculty members facilitated discussion of case concepts, allowing students to share correct answers and fill in gaps. For the section on the CNS, the class was divided into thirds (ie, students attended class in groups of 50 rather than the full cohort) and a Socratic discussion method was used. Cases in this section were not provided ahead of time.

In addition to these 3 module phases, students worked in teams to complete integrative cases prior to each examination. The purposes of these cases were to review major concepts within that section of material, integrate the topics the examination would cover, and link the material to other courses (eg, biochemistry). These cases were reviewed the week prior to each examination.

Student learning was assessed using several mechanisms. The preparatory quizzes contributed approximately 25% of the course grade. The class selected the ratio of the iRAT to gRAT to their total quiz grade, given a range from 70%:30% to 30%:70%. After the midterm examination, the class was offered the opportunity to revote on this ratio.

There were 2 examinations that constituted 50% of the course grade, each covering 3 modules. Approximately half of each examination was composed of multiple-choice questions, with the remainder being a short-answer format. One week before the examination, students received 5 short-answer questions per module that might appear on the examination (15 questions total); 2 of these questions per module were included on the actual examination (6 total).

The integrative cases constituted the final 25% of the course grade. Students were provided 2 integrative cases, each having 10 questions, approximately 2 weeks prior to the examination. Students completed both cases but submitted only 1 for a grade.
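The grading scheme described above can be written out as simple arithmetic. The sketch below assumes all scores are percentages from 0 to 100 and that the class-selected iRAT share is passed in as a fraction between 0.30 and 0.70. The function names and example scores are hypothetical, and the ratio the class actually chose is not reported.

```python
def quiz_score(irat_pct, grat_pct, irat_weight):
    """Blend individual and team quiz scores using the class-selected ratio."""
    # The class could choose any iRAT:gRAT split from 70%:30% to 30%:70%.
    if not 0.30 <= irat_weight <= 0.70:
        raise ValueError("iRAT weight must lie between 0.30 and 0.70")
    return irat_weight * irat_pct + (1.0 - irat_weight) * grat_pct


def course_grade(irat_pct, grat_pct, exam_pct, case_pct, irat_weight):
    """Combine the 3 graded components: quizzes 25%, examinations 50%, cases 25%."""
    quizzes = quiz_score(irat_pct, grat_pct, irat_weight)
    return 0.25 * quizzes + 0.50 * exam_pct + 0.25 * case_pct


# Hypothetical student (made-up scores) under a 30%:70% iRAT:gRAT split.
example_grade = course_grade(
    irat_pct=70.0, grat_pct=90.0, exam_pct=80.0, case_pct=88.0, irat_weight=0.30
)
```

With a larger gRAT share, a student's quiz component depends more heavily on team performance, which is the trade-off the class was voting on.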

The course was assessed using several methods. A 24-question assessment was developed and completed by second-year pharmacy students at the beginning of the academic year, approximately 9 months after completing their physiology course. This assessment covered all 6 modules and included 2 knowledge-type questions per module (12 total) and 2 application-type questions per module (12 total). This assessment was used to compare the previous course format with the revised, application-based format.

During the semester using the TBL format, the students were asked to complete a preliminary survey tool pertaining to their comfort level with each topic area (on a scale of 0 to 100, with 0 being no comfort and 100 being substantial comfort) and their interest in the topic area (ranked 1 through 6, with 1 being least interested and 6 being most interested). This survey tool was administered again at the conclusion of the course along with an additional final course survey tool pertaining to various aspects of the course.

After each case day, students were asked to complete an abridged instructor evaluation (2 evaluations for modules with 8 contact hours, and 1 evaluation for modules with 4 contact hours). This evaluation consisted of 5 Likert-type questions sampled from the end-of-semester instructor survey tools and 2 open-ended questions: “What did the instructor do that helped you learn?” and “What could the instructor have done to help you learn?” As this was a new course for all the instructors, the open-ended questions were based on the small-group instructional-diagnosis method for instructor evaluation7 and were used to guide subsequent instruction during the course. The 5 Likert questions were also compared with the final end-of-semester evaluations to obtain preliminary information on the time-dependency of instructor evaluations, ie, to examine whether instructor evaluations differed when assessed immediately after instruction rather than months later at the end of the semester. End-of-course evaluations also were used to investigate relationships with the student self-reporting of interest in or comfort with the content area.

EVALUATION AND ASSESSMENT

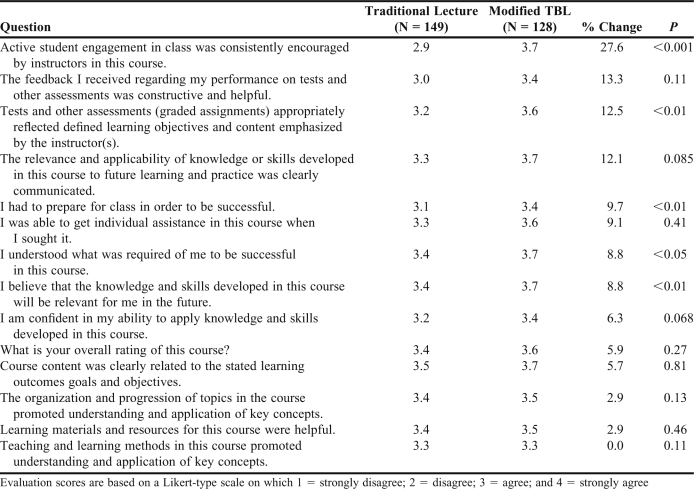

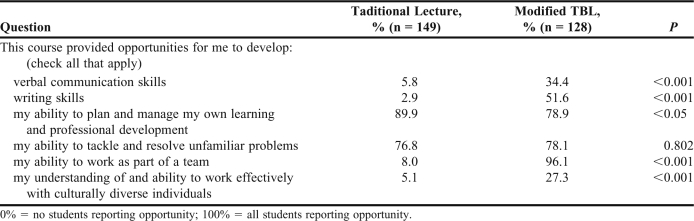

Overall, the course evaluations were positive, with the current course being in the top 10% of all core courses in the curriculum for that semester; in the previous year, the course was in the top 18%. Table 1 compares the end-of-semester course evaluations of the current year to those of the previous year. While all course evaluation questions were rated higher in the current year, the largest percent differences were related to the perception of being actively engaged in class. Using a 2-way ANOVA for course format and campus, significant differences were observed in areas related to active student engagement, assessments reflecting learning outcomes, class preparation related to success in the course, understanding the requirements for success, and relevance of knowledge and skills to the future. There were no differences between campuses in any of these areas. When examining potential differences relative to course format, questions related to “active learning being encouraged” (p < 0.001) and “preparing for class was necessary to be successful” (p < 0.04) were significantly more favorable in the satellite cohort during the current year compared with that during the previous year. The course evaluation survey tool also included a question related to the opportunity to develop various skills. Responses indicated that the current format provided more opportunities to promote communication skills (written and verbal), to work as part of a team, and to work effectively with diverse individuals. In the previous format, students felt the course offered more opportunities to manage their own learning (Table 2). There was no difference in attitudes regarding the opportunities to solve or tackle unfamiliar problems.

Table 1.

Comparison of Course Evaluations Between the Team-Based Learning (TBL) and Traditional Lecture Course Formats

Table 2.

Comparisons of Opportunities for Skill Development Between Team-Based Learning (TBL) and Traditional Lecture Course Formats

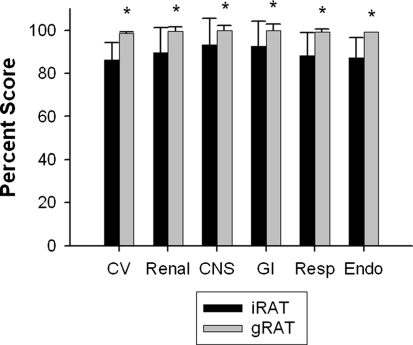

Figure 1 shows the individual and team quiz scores. Using t tests, there were significant differences between the individual and team grades for each quiz topic, with mean group scores exceeding individual scores (p < 0.001).

Figure 1.

Comparison of Individual Readiness Assurance Test (iRAT) and Team Readiness Assurance Test (gRAT) Scores. All gRATs were significantly higher than the respective iRAT (* p < 0.05 compared with respective iRAT). Data presented as mean and standard deviation (n=153). Abbreviations: CV = cardiovascular, renal = renal physiology, CNS = central nervous system, GI = gastrointestinal physiology, Resp = respiratory, Endo = endocrinology.

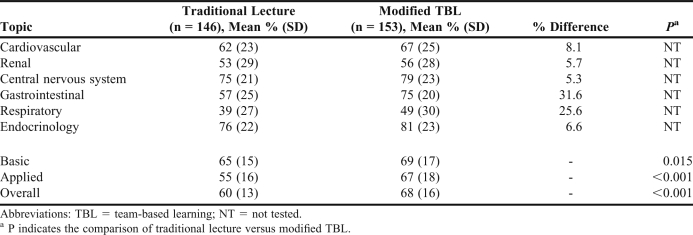

Table 3 compares the physiology assessment used between the students who had a primarily lecture-format course and those who had the new, restructured course. Scores increased with the new format. There were significant increases in scores for both the basic foundational and applied questions. There were no apparent differences in scores when examining the 2 formats on the distant campus or when comparing examination performance between the 2 campuses (data not shown).

Table 3.

Comparisons of Standardized Physiology Examination Scores Given to Students with the Previous Lecture Format and the Modified Team-Based Learning Format

Students completed a year-end attitudinal survey tool regarding various aspects of the course (n = 151). Approximately 40% of students preferred larger class settings compared with 34% of students who preferred smaller discussion groups (ie, the format used for the CNS section). Students had a strong preference for abridged pre-class assignments and instructor-developed notes over entire book chapters (85% of students agreed with the statement and 8.5% disagreed). Forty-six percent of students felt it was essential to receive some class time off to help prepare for class, whereas 26% of students did not feel it was essential. When asked how much preparation time off from class was optimal, 44% said 1 hour, 40% said 2 hours, and 16% said 3 hours. Half of the students (50%) used this free time to work on other classes, and 21% used it for either other classes or physiology; only 10% of students used the time for physiology only. Overall, students were comfortable learning on their own, with a mean score of 7.5 (± 1.8) out of 10. The students were asked how the various assessment procedures contributed to their learning. Most students (≥ 90%) agreed that the integrative cases, quizzing procedures, examination format, and receipt of short-answer questions prior to the examination facilitated their learning. Seventy-four percent of students disagreed with the statement that their learning suffered because of the course format. Seventy-one percent of students disagreed that the format resulted in less contact with their instructor. Seventy percent of students agreed that they learned more because of the course format; 59% considered the quality of instructor contact to be better than that in other courses; and 71% responded that they did not prefer a traditional lecture format.

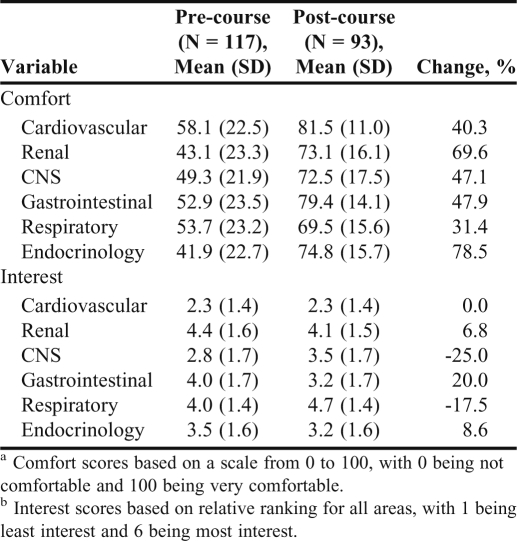

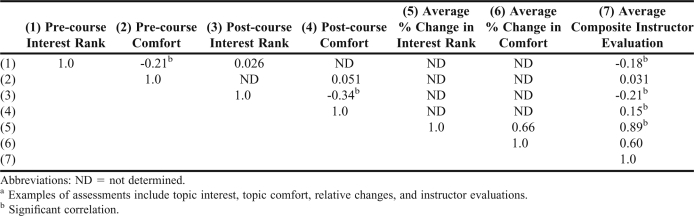

Prior to and immediately after the course, students were asked to rank the 6 topics in order of interest and to assess their comfort level with each topic on a sliding 100-point scale (Table 4). Spearman rank correlations were used to determine relationships between absolute values of student interest, comfort, percent change in both interest and comfort, and the average composite score for the end-of-semester course evaluations (Table 5). Significant correlations were found between the absolute values of pre- and post-course interest rank and comfort, the absolute values of pre-course and post-course interest rank and instructor evaluations, and the absolute scores for post-course comfort and instructor evaluations. When examining changes in comfort, all subject areas showed at least a 30% increase. There was a significant correlation between the average composite final instructor evaluation and the average percent change in interest, but not between the average composite final instructor evaluation and the average percent change in comfort. Interim instructor evaluations were compared with final instructor evaluations to obtain pilot information on the temporal relationship. The changes in instructor evaluations from the interim compared with the final evaluations were between -10.5% and 4.6% (mean -0.6%, median 0.2%).
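For readers unfamiliar with the statistic, a Spearman rank correlation is a Pearson correlation computed on ranks rather than raw values. The sketch below is a minimal standard-library implementation under that definition; the helper names and the two 6-element lists are invented for illustration and are not the study's data or analysis code.

```python
def average_ranks(values):
    """Return 1-based ranks, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2.0 + 1.0
        for k in range(start, end + 1):
            ranks[order[k]] = shared
        start = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sum((a - mean_x) ** 2 for a in x) ** 0.5
    sd_y = sum((b - mean_y) ** 2 for b in y) ** 0.5
    return cov / (sd_x * sd_y)


def spearman_rho(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Made-up values for 6 modules (illustration only, not study data).
interest_rank = [1, 2, 3, 4, 5, 6]
evaluation = [4.1, 4.3, 4.0, 4.5, 4.6, 4.4]
rho = spearman_rho(interest_rank, evaluation)
```

With one observation per module, each correlation of this kind rests on only 6 paired values.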

Table 4.

Change in Student Comfort and Ranking of Topic Interest Before and After the Modified Team-Based Learning (TBL) Course, mean (SD)

Table 5.

Spearman Rank Correlations Among Various Assessments

DISCUSSION

A large, lecture-based physiology course was transitioned into a more self-directed, active-learning course using a modified TBL format. This transition involved dividing the class into groups and assigning subtopics. Each module topic began with students acquiring foundational knowledge on their own, followed by a readiness assessment process similar to that seen with TBL. In this format, students completed pre-class readings, attended class to complete a series of quizzes that established whether they had acquired the foundational concepts, and then used class time to apply those physiologic concepts to cases relevant to pharmacy practice.

When comparing the revised format with the previous year's format, which used a standard lecture approach, student evaluations of the course for active learning were higher and there was an increase in the application of physiologic concepts. This focus on application was reflected in the assessment students completed approximately 9 months after finishing the course. Students who completed the new-format course performed better, especially on the application section, than did the previous cohort who completed the lecture-format course.

There were no notable differences in student performance at the satellite campus between the 2 course formats, but there were differences in the end-of-semester course evaluations in the areas of active learning and class preparation. The former observation may indicate increased student-instructor engagement at the satellite campus with this type of format compared with that in a traditional lecture format. Students at the satellite site tended to report that the technology was a barrier to instructor interaction and that they would have felt more engaged in a face-to-face course and if the instructor had encouraged students to ask questions.8

Generally, there are 3 criteria that allow the learner's brain to know that it has acquired knowledge: modality, frequency, and duration. Learning must be reinforced in a way that the learner relates to, reinforced with repetition, and validated for some length of time.2 In the new course format, students were exposed to different modes, including reading (eg, pre-class assignments), written assignments (eg, integrative cases), and verbal activities (eg, class discussion, team quiz). The frequency and duration components were built into the format by means of repetition of pre-class readings, 2 quizzes on the subject, in-class discussions, integrative cases, and pre-exposure to short-answer examination questions. Each of these aspects supported memory formation by aiding the recall component; that is, by bringing out the information that is stored in memory. Repeated assessment and recall have been shown to increase long-term retention of material.9-11

One important aspect of the format for this course may have been the use of cases and the study questions for those cases, as cases were used in class and as a review for each examination. In a review of the impact of study questions on later examination performance, questions generally aided learning, and higher-level questions rather than low-level factual questions increased the effectiveness of student processing of reading material.3 Pressley and colleagues found that when students physically write answers to questions, even before reading the material, they perform significantly better on later assessments than do students who only read study questions prior to completing the reading assignment or who do not receive study questions.12

This preliminary investigation showed that the change in student interest in a given topic was correlated with instructor evaluations; however, pre-course interest in a topic was also correlated with instructor evaluations. This current investigation did not find a correlation between instructor evaluations and change in comfort. Student interest reflects attention level in class, interest in learning the material, perception of the intellectual challenge of a course, and acquired competence.13 Student interest is important in part because it facilitates effective teaching and creates a more favorable learning environment.14 Pre-class interest in a topic is a confounding variable in instructor evaluations by students.15 Because this study showed that change in interest was correlated with instructor evaluations, instructors may have the ability to change student interest regardless of changes in comfort with the topic. Instructors who use responsive teaching (defined as the capacity to know students’ needs, respond quickly, and thus engage in systematic learning) positively influence student interest.13 Students who are described as competent within an area seem to base their interest more on the particular topic area rather than on their ability to learn the material. Thus, it is unclear whether high levels of interest in a given area will be adequate to propel these individuals into the next stage of development; ie, interest alone may not improve student performance in a given area.16

When learners are more interested, they perceive themselves as learning more,17 and within this course, there were changes in perceived comfort level in learning. Even though there was no correlation between change in interest and change in comfort post-course, there was a significant correlation between the absolute values of interest (defined as ranking of topic) and comfort level post-course. This suggests that perception of learning is related to interest.

A surprising finding was that changes in comfort did not significantly correlate with instructor evaluations. Abrantes and colleagues used path analysis to determine that learning performance predicts perceived learning (β = 0.23) and that perceived learning can predict better course evaluations.13 However, that study found that pedagogical affect, such as instructional methods (β = 0.31), and student interest (β = 0.50) were stronger predictors of perceived learning. While the correlation coefficient for comfort and instructor evaluation was high (r = 0.60), the small sample size (n = 6) results in high variability, which may be a limiting factor in finding a significant correlation.
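The effect of the small sample can be made concrete with the usual t approximation for testing a correlation coefficient, t = r·sqrt((n − 2)/(1 − r²)) with n − 2 degrees of freedom. The sketch below applies it to the reported r = 0.60 and n = 6. It is an illustration under that approximation, not the authors' analysis, and the function names are hypothetical; the only constant used is the standard two-sided 5% critical value of Student's t for 4 degrees of freedom.

```python
import math


def t_statistic(r, n):
    """t approximation for testing whether a correlation differs from zero."""
    return r * math.sqrt((n - 2) / (1.0 - r * r))


def min_significant_r(t_crit, n):
    """Smallest |r| that reaches a given critical t value with n observations."""
    df = n - 2
    return t_crit / math.sqrt(df + t_crit ** 2)


# Two-sided 5% critical value of Student's t with 4 degrees of freedom.
T_CRIT_DF4 = 2.776

t_value = t_statistic(0.60, 6)            # compare against T_CRIT_DF4
min_r = min_significant_r(T_CRIT_DF4, 6)  # threshold |r| for n = 6
```

Under this approximation, a coefficient of 0.60 falls well short of the threshold for 6 observations, which is roughly 0.81. Exact small-sample tables for Spearman's coefficient are stricter still, so the lack of significance is unsurprising despite the sizeable coefficient.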

A positive attitude toward teaching style leads to higher achievement and learning performance.18 Students tend to prefer instructional methods that are more experiential and interactive, encourage understanding rather than rote memorization, emphasize application, integrate theoretical and practical knowledge, and produce more transferable knowledge.2,17,19 These findings may be reflected in the change of course evaluations between the traditional lecture format and the revised format, as the revised version focused more on the application and integration of areas and encouraged interactivity.

There was some indication that the perception of organization may impact perceived learning or instructor evaluations. This was noted by the impact of the Socratic discussion within the CNS module compared with that of some other modules. Because other modules had varying degrees of PowerPoint (Microsoft, Redmond, WA) slides, the lack of slides or note sets may be perceived by students as unstructured, which may impact instructor evaluations and partially explain a large decrease in interest. Course or lesson organization relates directly to students’ ability to handle uncertainty.20 An unstructured lesson may make students feel uncomfortable and, consequently, have a negative impact on their instructor evaluations and their perceived learning,21 whereas a more structured and organized course may lead to a favorable instructor assessment and self-evaluation.20,21

There are several limitations to the comparison of the 2 course formats. The examination used to compare the 2 methods had limited sampling of the breadth of physiologic concepts and a limited number of questions in the knowledge and application levels. The design of the current examination was based on goals of the revised course and may not necessarily reflect goals of the previous course, even though all subject areas were present in the previous course. With respect to the affective assessment of interest and comfort, there were no such assessments in the traditional course or other comparable formatted courses aside from end-of-semester course evaluations. Additionally, interest was assessed using a rank rather than an absolute value. It is unclear whether having students assess interest on an absolute scale rather than a relative scale would impact correlations to instructor evaluations or comfort within a particular topic, but the use of ranks lowers the ability to detect a positive change in interest in one area independent of changes in interest in other areas.

There are some areas for improvement in the revised course. Based on student feedback, the amount of free time will be reduced in future iterations so that students have 1 hour off for every 2 hours of discussion (∼33% time off) instead of the current format of 3 hours off for every 4 hours of discussion (∼42% time off). Feedback from students also indicates they would like some free class periods to be used to answer questions regarding the next section of material prior to the quiz. Because the current format does not have a formal class period, the 1-hour free time the week prior to a quiz will be used for a general question-and-answer opportunity. Based on faculty discussions, adding hematology as a standalone component instead of combining it with cardiovascular physiology may be warranted to help facilitate discussions in other areas.
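The percentages quoted above follow if time off is treated as a share of total scheduled time, that is, free hours divided by free hours plus discussion hours. That interpretation is an assumption of this sketch, and `fraction_off` is a hypothetical helper name.

```python
def fraction_off(hours_off, hours_discussion):
    """Share of scheduled class time that is released as free time."""
    return hours_off / (hours_off + hours_discussion)


planned = fraction_off(1, 2)  # 1 hour off for every 2 hours of discussion
current = fraction_off(3, 4)  # 3 hours off for every 4 hours of discussion
```

Under this reading the planned schedule releases one third of class time, and the current schedule releases three sevenths, in line with the approximate figures given in the text.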

SUMMARY

A modified TBL format increased active learning within a foundational physiology course. The course modifications had some positive impact on course evaluations and learning, especially the ability to apply information to relevant examples. Some key components for success may be related to student-friendly pre-class material, various types of assessments that serve both a formative and summative purpose, organization of materials, and some class time replaced with free time to accomplish the more self-directed learning goals of the course. The TBL format could be adapted for a variety of classes, with the major barrier being the ability to divide the course into modules. While class size adds some logistical barriers, the use of TBL can be implemented in any class size and may be more beneficial in larger classes as it increases interaction among students and, to a lesser degree, between students and instructors.

ACKNOWLEDGEMENTS

The authors thank Jo Ellen Rodgers, Bob Dupuis, Ralph Raasch, and Dennis Williams for the discussions related to course execution and instruction within the course.

REFERENCES

- 1. Accreditation Council for Pharmacy Education. Accreditation standards and guidelines for the professional program in pharmacy leading to the doctor of pharmacy degree. Chicago, IL; 2011.

- 2. Jensen E. Brain-based learning: the new paradigm of teaching. 2nd ed. Thousand Oaks, CA: Corwin Press; 2008.

- 3. McKeachie WJ, Svinicki MD, Hofer BK. McKeachie's teaching tips: strategies, research, and theory for college and university teachers. 12th ed. Boston: Houghton Mifflin; 2006.

- 4. Bligh DA. What's the use of lectures? 1st ed. San Francisco: Jossey-Bass Publishers; 2000.

- 5. Murad MH, Coto-Yglesias F, Varkey P, Prokop LJ, Murad AL. The effectiveness of self-directed learning in health professions education: a systematic review. Med Educ. 2010;44(11):1057–1068. doi: 10.1111/j.1365-2923.2010.03750.x.

- 6. Hattie J, Timperley H. The power of feedback. Rev Educ Res. 2007;77(1):81–112.

- 7. Gillespie KH, Hilsen LR, Wadsworth EC. A guide to faculty development: practical advice, examples, and resources. Bolton, MA: Anker Pub. Co; 2002.

- 8. Doggett AM. The videoconferencing classroom: what do students think? J Industrial Teach Educ. 2007;44(4):29–41.

- 9. Karpicke JD, Roediger HL III. Repeated retrieval during learning is the key to long-term retention. J Mem Lang. 2007;57(2):151–162.

- 10. Larsen DP, Butler AC, Roediger HL III. Test-enhanced learning in medical education. Med Educ. 2008;42(10):959–966. doi: 10.1111/j.1365-2923.2008.03124.x.

- 11. Roediger HL III, Karpicke JD. Test-enhanced learning: taking memory tests improves long-term retention. Psychol Sci. 2006;17(3):249–255. doi: 10.1111/j.1467-9280.2006.01693.x.

- 12. Pressley M, et al. What happens when university students try to answer prequestions that accompany textbook material? Contemp Educ Psychol. 1990;15(1):27–35.

- 13. Abrantes J, Seabra C, Lages L. Pedagogical affect, student interest, and learning performance. J Bus Res. 2007;60(9):960–964.

- 14. Marsh HW, Cooper TL. Prior subject interest, students' evaluations, and instructional effectiveness. 1980.

- 15. Prave RS, Baril GL. Instructor ratings: controlling for bias from initial student interest. J Educ Bus. 1993;68(6):362–366.

- 16. Alexander PA, Kulikowich JM, Schulze SK. How subject-matter knowledge affects recall and interest. Am Educ Res J. 1994;31(2):313–337.

- 17. Tynjala P. Towards expert knowledge? A comparison between a constructivist and a traditional learning environment in the university. Int J Educ Res. 1999;31(5):357–442.

- 18. Dunn R, et al. Grouping students for instruction: effects of learning style on achievement and attitudes. J Soc Psychol. 1990;130(4):485–494. doi: 10.1080/00224545.1990.9924610.

- 19. Ambrose SA. How learning works: seven research-based principles for smart teaching. 1st ed. San Francisco, CA: Jossey-Bass.

- 20. Marks R. Determinants of student evaluations of global measures of instructor and course value. J Mark Educ. 2000;22(2):108–119.

- 21. Marsh HW, Bailey M. Multidimensional students' evaluations of teaching effectiveness: a profile analysis. 1991.