Abstract

Purpose

To investigate use of a new guideline-based, computerized clinical decision support (CDS) system for asthma in a pediatric pulmonology clinic of a large academic medical center.

Methods

We conducted a qualitative evaluation including review of electronic data, direct observation, and interviews with all nine pediatric pulmonologists in the clinic. Outcome measures included patterns of computer use in relation to patient care, and themes surrounding the relationship between asthma care and computer use.

Results

The pediatric pulmonologists entered enough data to trigger the decision support system in 397/445 (89.2%) of all asthma visits from January 2009 to May 2009. However, interviews and direct observations revealed use of the decision support system was limited to documentation activities after clinic sessions ended. Reasons for delayed use reflected barriers common to general medical care and barriers specific to subspecialty care. Subspecialist-specific barriers included the perceived high complexity of patients, the impact of subject matter expertise on the types of decision support needed, and unique workflow concerns such as the need to create letters to referring physicians.

Conclusions

Pediatric pulmonologists demonstrated low use of a computerized decision support system for asthma care because of a combination of general and subspecialist-specific factors. Subspecialist-specific factors should not be underestimated when designing guideline-based, computerized decision support systems for the subspecialty setting.

Keywords: Decision Support Systems, Clinical, Qualitative Evaluation, Asthma

INTRODUCTION

Computerized clinical decision support (CDS) systems enhance care by providing intelligently filtered, patient-specific information and advice to clinicians at the appropriate time.1,2 Current knowledge about the promoters of and barriers to the use of CDS rests largely on data collected in primary care and in hospital settings.3-6 Nearly 200 million ambulatory care visits are made to subspecialists’ offices each year in the United States, yet relatively little is known about the use of CDS by subspecialists.7,8 Well-designed systems for subspecialists will require accurate knowledge about subspecialist perspectives.

Asthma care is the focus of a growing number of CDS systems, but most of these systems have been implemented in primary care settings.9 Subspecialists such as allergists and pediatric pulmonologists care for roughly one third of the 6.7 million children diagnosed with asthma in the United States.10 Subspecialists tend to adhere to asthma care guidelines more closely than do primary care providers.11-13 However, accurate identification of patients with uncontrolled asthma remains a problem, even in subspecialty settings.14 Preliminary studies suggest subspecialists may view electronic health records differently from their primary care counterparts.15 Whether subspecialist-specific factors such as subject matter expertise impact subspecialists’ regard for computerized CDS is not known.

We developed a computerized CDS system for pediatric pulmonologists who provide asthma care in a subspecialty clinic. Our goal was to investigate use of the system while paying particular attention to subspecialist-specific factors. By taking a qualitative approach, we sought to gain information about the nature of pediatric pulmonologists’ use of the electronic health record, about key obstacles to their use of the CDS system during patient care, and about their thoughts on the usefulness of a guideline-based CDS system in a pediatric subspecialty setting.

METHODS

Site description

The pediatric pulmonology clinic at Yale University received the first CDS system developed by GLIDES (GuideLines Into DEcision Support), which brought together researchers from Yale University School of Medicine, Yale-New Haven Health System, and Nemours. The clinic has a total of nine clinicians: five attending pediatric pulmonologists, three fellows, and one nurse practitioner. Patients are referred to the clinic by primary care physicians in Connecticut, Rhode Island, and New York.

The clinic has used an electronic health record (Centricity EMR/formerly “Logician,” General Electric, Fairfield, CT) to document all ambulatory care visits since May 2005. Clinicians can document their visit notes by using a desktop computer located in each examination room or by using one of several desktop computers located in conference rooms adjacent to the examination rooms. Electronic documentation for asthma care visits occurs via templates that capture pertinent asthma history, physical examination findings, and management decisions. As part of the GLIDES project, new “smart forms” were added to existing templates within the EHR.16

CDS description

The “smart forms” were based on Expert Panel Report 3 (EPR-3), the most recent version of the National Heart, Lung, and Blood Institute’s guideline for the diagnosis and management of asthma, released in 2007.17 Although it pays particular attention to primary care providers, EPR-3’s Guideline Implementation Panel explicitly targets any “prescribing clinician” for asthma care.18

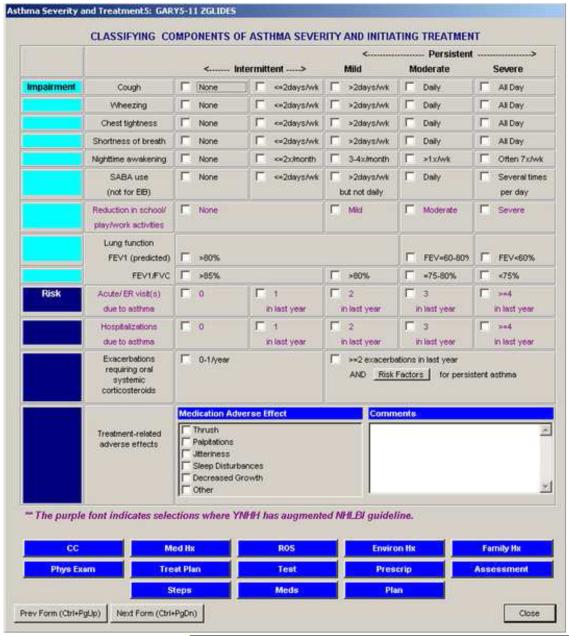

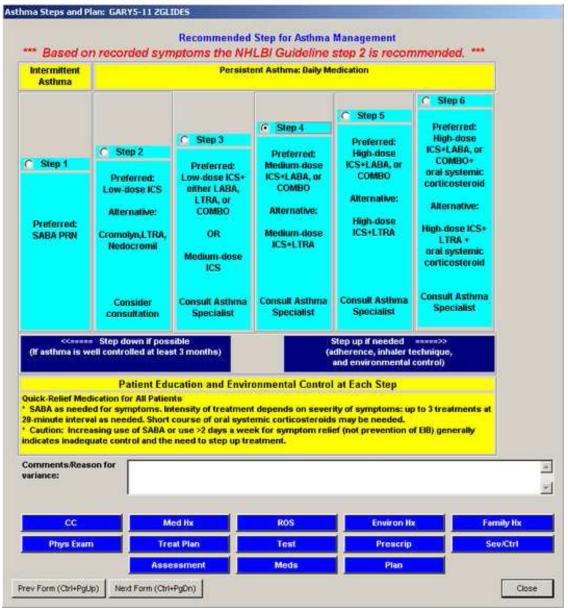

The “smart forms” have previously been described in detail.19 Briefly, the forms featured screens that visually resembled figures in EPR-3 (see Figures 1 and 2). Clinicians clicked radio buttons (similar to check boxes) to record risk factors for asthma and to document history of asthma symptoms. As clinicians entered data on impairment or risk, the CDS automatically calculated asthma severity (for new patients) or control (for return patients) in the background. For new patients, the CDS then also automatically suggested a level of therapy. These suggestions were automatically provided at the point in the smart form series at which the clinician was asked for his or her own judgment. If the clinician chose a different level of therapy than was recommended by the CDS system, red text appeared at the top of the screen that indicated a potential variance with EPR-3 based on the data available to the computer (see Figure 2). To document a reason for variance or to record any other findings, clinicians were encouraged to enter comments in a text box provided for this purpose.

Figure 1. Screenshot of a “smart form” for classifying asthma severity in a new patient.

Figure 2. Screenshot of a “smart form” alerting the user to a variance between chosen therapy and guideline-recommended therapy.
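The variance check described above can be illustrated with a minimal Python sketch. It is not the GLIDES implementation. The rule that overall severity equals the most severe entered component, the severity-to-step mapping, and all function names are assumptions made for illustration only, and none of the values should be read as clinical guidance.

```python
from typing import List, Optional

# Ordered severity categories (least to most severe). The labels follow common
# asthma guideline terminology; their use here is illustrative.
SEVERITY_ORDER = [
    "intermittent",
    "mild persistent",
    "moderate persistent",
    "severe persistent",
]

# HYPOTHETICAL mapping from severity to a suggested therapy step.
# Placeholder values for illustration only, not taken from EPR-3.
SUGGESTED_STEP = {
    "intermittent": 1,
    "mild persistent": 2,
    "moderate persistent": 3,
    "severe persistent": 4,
}


def overall_severity(component_ratings: List[str]) -> Optional[str]:
    """Return the most severe rating among the entered components.

    Returns None when no structured data were entered, i.e. the
    decision support is not triggered.
    """
    if not component_ratings:
        return None
    return max(component_ratings, key=SEVERITY_ORDER.index)


def check_variance(component_ratings: List[str], clinician_step: int) -> Optional[str]:
    """Return a warning string if the chosen step differs from the suggestion."""
    severity = overall_severity(component_ratings)
    if severity is None:
        return None  # nothing to compare against
    suggested = SUGGESTED_STEP[severity]
    if clinician_step != suggested:
        return (
            f"Potential variance: step {clinician_step} chosen, "
            f"step {suggested} suggested for {severity} asthma"
        )
    return None


# Example: two entered components, clinician chooses a lower step.
print(check_variance(["intermittent", "moderate persistent"], 2))
```

In this sketch a warning is returned only when structured data exist and the clinician's choice differs from the suggestion, mirroring the red-text alert shown in Figure 2.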

The “smart forms” were designed based upon recommendations found in EPR-3 and with input from the pediatric pulmonologists, two of whom served on the GLIDES design team. The pediatric pulmonologists supplied expert knowledge and provided insight into anticipated usage of the “smart forms.” The pediatric pulmonologists helped plan the implementation and launch of the “smart forms,” which became available for use in their subspecialty clinic in January 2009.

Data collection

We retrieved utilization data from the electronic health record about asthma care visits to the pediatric pulmonology clinic at Yale-New Haven Hospital from January 2009 to May 2009. These data included use of each data element in the “smart form” as well as demographic data about patient age, gender, race/ethnicity, and provider level of training. One investigator (DEE) directly observed each pediatric pulmonologist, including those who served on the design team, on two separate occasions, at approximately four months post-implementation (May 2009) and again at nine months post-implementation (September 2009). Observation periods lasted between thirty and forty-five minutes, during which DEE noted each “smart form” screen accessed by each clinician at the time of the patient visit.

EAL conducted individual, semi-structured interviews with all nine pediatric pulmonologists, including those who participated on the design team, between May 2009 and July 2009. Interviews lasted between eighteen and forty-eight minutes. Each interview was digitally recorded and subsequently transcribed. Topics for discussion included clinic workflow, computer use during clinical care, and clinical practice guidelines. To ensure accurate understanding of the clinician’s perspective, at several points the interviewer repeated elements of the conversation back to the clinician for clarification or confirmation.

The Human Investigation Committee at Yale University School of Medicine approved our study protocol and granted a Health Insurance Portability and Accountability Act (HIPAA) waiver for review of patient charts. We obtained signed informed consent from each clinician prior to periods of direct observation and prior to interviews.

Transcript analysis

We used a grounded theory approach to identify emerging themes directly from the clinicians’ own words.20 At least three authors reviewed each transcript and came to a consensus view of how each transcript should be coded. We followed an iterative process of clinician interview, transcript review, and adjustment of the coding framework. We identified new themes until saturation was achieved and all nine clinicians had been interviewed. The interviews yielded 213 typed double-spaced pages and one photograph for analysis. We used qualitative data analysis software (NVivo 8, QSR International, Melbourne, Australia) to manage codes and to identify illustrative quotes.

RESULTS

Study sample

Between January 2009 and May 2009, there were 445 visits to the pediatric pulmonary clinic for asthma. The median patient age was 7 years (interquartile range 3–12). A total of 209/445 (47.0%) were white, 105/445 (23.6%) were Hispanic, and 104/445 (23.4%) were African-American. Attending pediatric pulmonologists documented 186/445 (41.8%) of patient visits, the fellows documented 138/445 (31.0%), and the nurse practitioner documented 121/445 (27.2%).

Clinicians triggered the computerized CDS, defined as clicking at least one data entry field leading to an automated assessment, in 43/55 (78.2%) of new patient visits (assessment of asthma severity) and in 354/390 (90.8%) of return patient visits (assessment of asthma control). Overall, clinicians entered enough structured data to trigger decision support in 397/445 (89.2%) of all patient visits for asthma care.
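As a check on the arithmetic, the overall trigger rate can be recomputed from the new-visit and return-visit counts reported above. The short Python sketch below uses only those reported counts; the variable and function names are illustrative.

```python
# Counts reported in the text: (visits that triggered the CDS, total visits)
visit_counts = {
    "new": (43, 55),
    "return": (354, 390),
}


def percent(numerator: int, denominator: int) -> float:
    """Percentage rounded to one decimal place, as reported in the text."""
    return round(100 * numerator / denominator, 1)


# Pool new and return visits to obtain the overall figures.
triggered_total = sum(t for t, _ in visit_counts.values())
visits_total = sum(n for _, n in visit_counts.values())

for visit_type, (triggered, total) in visit_counts.items():
    print(f"{visit_type}: {triggered}/{total} ({percent(triggered, total)}%)")
print(f"all: {triggered_total}/{visits_total} ({percent(triggered_total, visits_total)}%)")
```

The pooled counts reproduce the 397/445 (89.2%) figure given for all asthma visits.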

Computer use during patient care

Based on direct observation, and confirmed by interviews, none of the pediatric pulmonologists used the computers in the exam rooms. In conference rooms, the pediatric pulmonologists accessed the “smart forms” to review patient medications, to generate asthma action plans, and to print prescriptions. Only one pediatric pulmonologist entered enough data during the course of a patient visit to enable a computerized assessment of asthma control while the patient was still in clinic. The pediatric pulmonologists generally used the “smart forms” as documentation tools and to write letters to referring physicians once clinic sessions ended.

Reflections on computer use

We interviewed each clinician to better understand their pattern of computer use. When asked to comment on the “smart forms,” on the utility of computers during patient care, and on guideline-based decision support in their clinic, the pediatric pulmonologists identified social, technical, and workflow-related factors affecting computer use in general, as well as themes specific to the decision support itself. Each set of factors included statements applicable to all physicians and statements unique to asthma subspecialists (see Tables I and II).

Table I.

General and subspecialist-specific themes explaining low use of a guideline-based, computerized CDS system for asthma care by pediatric pulmonologists.

| Factor | Themes oriented towards general medical care | Themes oriented towards subspecialty care |
|---|---|---|
| Clinical | General limitations of guidelines | Impact of subject matter expertise on guideline adherence |
| | Human advantage over computers in clinical decision-making | Highly complex patient scenarios |
| | | Inadequate support for subspecialist-only pathways |
| Social | Perceived negative impact of computer use on the patient-doctor relationship | Perceived negative impact of computer use on the patient-expert relationship |
| | | Meeting patient expectations for expert “face time” |
| Technical | Slow or non-functioning computers | Inadequate support for composition of acceptable letters to referring physicians |
| Workflow-related | Time constraints | Additional time-intensive patient education requirements |
| | Impact of paper-based artifacts | |

Table II.

Comments illustrating general and subspecialist-specific barriers to use of a computerized CDS system for asthma care.

| Factor | Generally applicable comments | Subspecialist-specific comments |
|---|---|---|
| Clinical | Guidelines are just that – guidelines…It’s usually not to dictate how you have to practice medicine. It’s usually a guide. (Attending) | [EPR-3] is based on expert opinion, and that’s very clearly stated. So I think that, keeping that in mind, we have expertise, too, so I think that our expert opinion counts as well. (Attending) |
| | I don’t like it. [The computer] doesn’t have to make decisions - I’m the one who should make the decisions. Because…it’s not like one plus one equals two. It’s different. We’re dealing with human beings…I think that I just got used to me thinking instead [of the computer]. (Fellow) | And so should I get an IgE and a RAST test or maybe send you to Allergy [clinic] to get skin prick testing done, and see if you qualify for immune therapy or [omalizumab] therapy? So those are the kinds of tools that specialists would need, which is not something that pediatricians would need. Because which pediatrician is gonna start thinking about [omalizumab] for an asthmatic in their office? They’re not gonna do that. It’s actually not even their job to do that. (Attending) |
| | | We can’t [use the “smart forms” as part of the visit]. It’s not possible in our setting…because our history-taking is complicated. It’s long. People come with charts and studies…It just isn’t like a well child visit. It can never be like a well child visit. Where, you know, you ask questions by rote, and sometimes the answers are by rote. (Attending) |
| Social | I don’t know how the computer can actually be part of the doctor-patient relationship in a natural and intuitive way. It actually cannot be. I mean I can tell you that the current system does not serve that purpose. (Attending) | I feel they come to the specialist because they want to hear from the specialist not from their own pediatrician. (Attending) |
| | | I need a five-minute visit to feel like a half an hour. But a half an hour visit while I’m documenting in front of them is going to make them feel like I haven’t paid attention to them at all. (Attending) |
| Technical | The kids play with the computers, and they sound like they’re taking off [noise of intermittent cooling fan]. You know, like some rocket explosion or something like that. Even when you’re not using them. So, it’s not uncommon for them to not be working. (Fellow) | There are times when the patient has left and I’ve thought about [the “smart forms”]. Actually as I’m typing the letter, because that’s when you formulate your thoughts…So that the person who has sent you the patient has some idea of what it is you were thinking and what you want to do. And suddenly you realize, you know, I just didn’t ask this. Or maybe I should’ve actually done this. And then it gets put into the letter as, “I will consider doing this, this, and this [at the next visit].” (Attending) |
| Workflow-related | [I take notes] on paper. The [Interval History forms] that we were using before the electronic system came about. We still have the paper forms there because the nurses record vital signs on those paper forms. And so…they help to guide me through the questioning process. I’m able to take notes just as you would normally. (Attending) | But in the end, it needs to be a tool that is easy to use. And you can quickly use it to help you make certain decisions in the limited amount of time you have. I mean, I have, yes, I have more time than the average 12 to 15 minutes that patients get with the pediatrician, but it is still not unlimited. (Attending) |

1) Decision support system factors

Discussions about clinical practice guidelines led to the often-repeated comment, “Guidelines are guidelines.” The pediatric pulmonologists regarded clinical practice guidelines as starting points, not endpoints, for clinical care. Much of the pediatric pulmonologists’ reasoning for delaying use of the “smart forms” until the completion of clinic sessions revolved around notions of patient complexity in the subspecialty setting. They believed that their patients’ clinical scenarios were more complex than the scenarios encountered by primary care providers and that guidelines focused on the “typical” patient were therefore less applicable to their patients.

As subspecialists in respiratory medicine, the pediatric pulmonologists also considered themselves experts who did not need decision-support when it came to asthma management. The pediatric pulmonologists pointed out that in addition to scientific evidence, expert opinion played a major role in the development of recommendations appearing in EPR-3. As long as expert opinion played a role, then the pediatric pulmonologists felt justified in using their own expertise. Neither EPR-3 nor its computerized version as “smart forms” was perceived as sufficiently valid to change what the pediatric pulmonologists already believed from their own experience.

Finally, the pediatric pulmonologists would have preferred decision support outside the scope of “smart forms” based on EPR-3 recommendations. They looked for pathways that were specifically oriented towards subspecialist decision-making, such as when to order sophisticated allergy testing or when to begin immune therapy. Thus, their own expert knowledge, combined with a lack of tools appropriate for expert care, led the pediatric pulmonologists to largely ignore the “smart forms’” assessments.

2) Social considerations

In addition to misgivings about the value of the “smart forms,” the pediatric pulmonologists raised a concern common to general medical care that computer use during the patient encounter would adversely affect the patient-clinician relationship. A good rapport with the patient required the clinician’s full attention, which they felt could not be maintained while viewing the computer screen or clicking for structured data entry. Some pediatric pulmonologists believed that use of a smaller device (e.g., a computerized tablet) might be acceptable, but use of the desktop computer under their current conditions posed too much of a social risk.

The pediatric pulmonologists’ view of a good patient-clinician relationship was further influenced by their role as consultant experts. Referring again to notions of patient complexity, the pediatric pulmonologists reported that primary care providers referred patients to the clinic for help managing difficult cases. Patients often represented diagnostic or therapeutic challenges. Consequently, the pediatric pulmonologists felt obligated to provide a level of care not yet experienced by the patients. They interpreted this as maximizing “face time” and postponing computer use until after the patient left. Furthermore, expert “face time” appeared to satisfy patient expectations about the differences between primary care and subspecialty care.

3) Technical factors

Technical factors also contributed to computer avoidance during the patient visit. Computers in the exam room were rarely turned on at the start of clinic, and when they were turned on, they were often slow and distracting. In conference rooms, the pediatric pulmonologists found working computers to write letters about patient visits back to referring physicians. While structured data entry within the “smart forms” accomplished much of this task, the automated output required a substantial amount of editing. Consequently, the pediatric pulmonologists delayed modification of the letter until the end of clinic and after patient care decisions had been made. As a result, any opportunity for the computer to influence decision-making came too late.

4) Workflow-related factors

The potential for computer use to disrupt clinic workflow, in the context of general medical care, represented a major area of concern. The pediatric pulmonologists worried that computer use would slow the pace of seeing patients. Consequently, they developed numerous workarounds that allowed the clinic to function smoothly with a minimum level of computer use. They retained paper-based forms and notes, learned how to skip data entry forms to go directly to medication ordering, kept paper shadow charts, and enabled nurses to generate and print the asthma action plans themselves.

One of the key paper-based processes was the completion of an Interval History form by patients in the waiting room. Patients used the Interval History form to communicate recent events, respiratory complaints, and any other concerns to the pediatric pulmonologists for the upcoming visit. Because the pediatric pulmonologists needed this information to guide the visit and to make management decisions, they referenced the paper interval history form instead of the computer. Furthermore, the interval history form was a paper-based medium on which the pediatric pulmonologists took notes. In contrast, the computerized “smart forms” were not as readily available for note-taking in the presence of the patient.

Discussions about workflow also revealed subspecialist-specific perspectives. The pediatric pulmonologists believed that they had more time than primary care providers to see patients, but they did not find that the extra time was effectively spent taking advantage of the computerized CDS. The “smart forms,” for example, did not help to solve a relatively simple but common reason for referral, which was improper inhaler technique. According to the pediatric pulmonologists, improper inhaler technique by patients was often overlooked by primary care providers seeking to explain persistent asthma symptoms. So the extra time was better spent with extra history-taking and extra patient education, not extra computer use.

COMMENT

We used a qualitative approach to investigate use of a guideline-based, computerized CDS system for asthma care by pediatric pulmonologists. The pediatric pulmonologists entered enough data to trigger the CDS system in 89% of asthma care visits but only did so as part of documentation activities after the completion of clinic sessions. Very low use during the patient visit was related to a variety of decision support, social, technical, and workflow-related factors. These factors indicated barriers to computer use during the course of general medical care and other barriers unique to computerized CDS in a subspecialty setting.

Knowledge about the use of computerized CDS in subspecialty settings is sparse. Lo et al found that subspecialists working in cardiology, dermatology, endocrinology, and pain management clinics could adopt an electronic health record without increasing overall patient visit time.21 Unertl et al found that providers in specialized clinics for diabetes, multiple sclerosis, and cystic fibrosis avoided computerized documentation during clinical encounters.22-24 Computerized CDS, however, must be used during clinical encounters if it is to have an optimal effect on the care process.2,25,26

The pediatric pulmonologists identified many of the same factors that are well understood to impede use of computerized CDS in other settings.27 Ease of use, adaptability to local workflow, and opinions about the underlying guidelines all impact adoption.6,28 Use of the electronic health record in general depends heavily on the availability of non-electronic artifacts exemplified by the interval history form used by the pediatric pulmonologists.29 The availability of an electronic means by which patients could provide their data might have improved utilization, as more data would have been available electronically in real time. Although the pediatric pulmonologists used the computerized CDS system for ambulatory care, providers using computerized systems for order entry report the same technical, social, and clinical issues for inpatient care.30

The pediatric pulmonologists also identified factors unique to the subspecialty setting. These included the necessity to compose well-written letters back to referring primary care providers, the influence of their own subject matter expertise on their opinions about practice guidelines, and the importance of meeting patient expectations for “face time” with subspecialists. While the computer supported various aspects of general medical care (e.g., printing prescriptions and creating asthma action plans), the computerized CDS did not adequately address what the pediatric pulmonologists believed to be the most important aspects of subspecialty care.

If subspecialty care encompasses unique characteristics, then unique opportunities may exist for computerized CDS to influence care in subspecialty settings. Incorporation of subspecialist-only pathways into CDS algorithms may be one way to accommodate subject matter expertise. For example, the pediatric pulmonologists expressed a desire for help with more sophisticated decision-making, such as deciding when to pursue advanced allergy testing or when to begin immune therapy. Facilitating the composition of consultant letters to referring physicians may also prove amenable to computerized CDS systems. If the pediatric pulmonologists had been able to complete their documentation during patient visits, including consultant letters facilitated by the computerized CDS, then they would have encountered the CDS as they were making patient care decisions and not afterwards.

Another notable finding of this study is that even though the pediatric pulmonologists were an integral part of the CDS design and implementation processes, significant barriers remained undiscovered until well after implementation. In particular, the informatics team did not appreciate the level of resistance by specialists to guideline-based decision support in general. The specialists were recruited prior to the design phase but after the decision had been made to implement a decision support system; at that stage, it was too late for specialists to influence the main direction of the project. Consequently, they focused more on ensuring the documentation capability of the system was optimal for their needs than on large-scale workflow overhaul. As other authors have noted, involvement of end users is critical but not sufficient for successful CDS implementation.31,32 To determine the overall “fit” of the CDS system into the subspecialty clinic, focus groups with clinicians, a period of direct observation, or more formal usability testing might have been helpful.33,34 Successful CDS implementation requires crossing multiple “chasms” related to the CDS system’s design, to the project’s management, and to the target environment’s organizational structure.35

Our evaluation has both strengths and limitations. An in-depth, qualitative approach allowed us to obtain highly detailed information that was not evident from examination of electronic data alone. Had we not also conducted direct observations and qualitative interviews, we would erroneously have concluded that the CDS implementation was successful. Our evaluation was limited to a single site and CDS system. We did not interview primary care providers or subspecialist providers in other settings to validate our general and subspecialist-specific themes. We performed interviews approximately four months after implementation of the computerized CDS system. It is possible that the pediatric pulmonologists would have exhibited greater use of the CDS or viewed the CDS differently after a longer period of time. However, a second round of direct observation nine months after implementation revealed no discernible changes in patterns of use.

In conclusion, we found that pediatric pulmonologists documented a high percentage of asthma care visits using a new guideline-based, computerized CDS system but that use of the system during patient care was low. Barriers to use were characteristic of problematic CDS implementations in the general medical setting but also illuminated factors that were specific to the subspecialty setting. Key subspecialist-specific factors were the impact of expert knowledge and the necessity of well-constructed letters to referring physicians. Designers of computerized CDS tools will need to address unique aspects of subspecialty environments if they hope to influence the level of guideline-based care in these settings.

Highlights.

We evaluate a computerized decision support system for asthma care in a subspecialty clinic.

Subspecialist-specific factors play a role in adoption of decision support systems.

Examples include unique workflow patterns and high levels of subject matter expertise.

Unique aspects of subspecialty environments should be considered when designing systems for these settings.

Summary points

What was already known

What this study adds to our knowledge

ACKNOWLEDGEMENTS

This work was supported by contract HHSA 290200810011 from the Agency for Healthcare Research and Quality and grant T15-LM07065 from the National Library of Medicine. Dr. Horwitz is supported by the CTSA Grant UL1 RR024139 and KL2 RR024138 from the National Center for Research Resources (NCRR), a component of the National Institutes of Health (NIH), and NIH roadmap for Medical Research. Article contents are solely the responsibility of the authors and do not necessarily represent the official view of the NCRR or NIH. Drs. Lomotan, Shiffman, and Horwitz had full access to all the data in the study and take responsibility for the integrity of the data and the accuracy of the data analysis.

The authors would like to thank Alia Bazzy-Asaad, MD and Tina Tolomeo, DNP, APRN, FNP-BC, AE-C for their contributions to the GLIDES project and for their help gathering data about the subspecialty clinic.

Footnotes

Author contributions: Obtained funding (RNS), plan and design of study (EAL, LJH, LIH), data collection (EAL, DEE), data analysis (EAL, LJH, GR-G, LIH), manuscript preparation (EAL), critical review and editing of manuscript (EAL, LJH, DEE, GR-G, RNS, LIH).

Conflict of interest: No author reports a conflict of interest with this study.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

REFERENCES

- 1.Osheroff JA, Teich JM, Middleton B, Steen EB, Wright A, Detmer DE. A roadmap for national action on clinical decision support. J Am Med Inform Assoc. 2007;14(2):141–145. doi: 10.1197/jamia.M2334. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2. Kawamoto K, Houlihan CA, Balas EA, Lobach DF. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ. 2005;330(7494):765–773. doi: 10.1136/bmj.38398.500764.8F.

- 3. Short D, Frischer M, Bashford J. Barriers to the adoption of computerised decision support systems in general practice consultations: a qualitative study of GPs’ perspectives. Int J Med Inf. 2004;73(4):357–362. doi: 10.1016/j.ijmedinf.2004.02.001.

- 4. Sittig DF, Krall MA, Dykstra RH, Russell A, Chin HL. A survey of factors affecting clinician acceptance of clinical decision support. BMC Med Inf Decis Mak. 2006;6:6–13. doi: 10.1186/1472-6947-6-6.

- 5. Toth-Pal E, Wardh I, Strender LE, Nilsson G. Implementing a clinical decision-support system in practice: a qualitative analysis of influencing attitudes and characteristics among general practitioners. Inform Health Soc Care. 2008;33(1):39–54. doi: 10.1080/17538150801956754.

- 6. Rousseau N, McColl E, Newton J, Grimshaw J, Eccles M. Practice based, longitudinal, qualitative interview study of computerised evidence based guidelines in primary care. BMJ. 2003;326(7384):314. doi: 10.1136/bmj.326.7384.314.

- 7. Goud R, de Keizer NF, ter Riet G, et al. Effect of guideline based computerised decision support on decision making of multidisciplinary teams: cluster randomised trial in cardiac rehabilitation. BMJ. 2009;338:b1440–1449. doi: 10.1136/bmj.b1440.

- 8. Mack EH, Wheeler DS, Embi PJ. Clinical decision support systems in the pediatric intensive care unit. Pediatr Crit Care Med. 2009;10(1):23–28. doi: 10.1097/PCC.0b013e3181936b23.

- 9. Sanders DL, Aronsky D. Biomedical informatics applications for asthma care: a systematic review. J Am Med Inform Assoc. 2006;13(4):418–427. doi: 10.1197/jamia.M2039.

- 10. Bloom B, Cohen RA. Summary health statistics for U.S. children: National Health Interview Survey, 2007. National Center for Health Statistics. Vital Health Stat. 2009;10(239).

- 11. Diette GB, Skinner EA, Nguyen TT, Markson L, Clark BD, Wu AW. Comparison of quality of care by specialist and generalist physicians as usual source of asthma care for children. Pediatrics. 2001;108(2):432–437. doi: 10.1542/peds.108.2.432.

- 12. Vollmer WM, O’Hollaren M, Ettinger KM, et al. Specialty differences in the management of asthma. A cross-sectional assessment of allergists’ patients and generalists’ patients in a large HMO. Arch Intern Med. 1997;157(11):1201–1208.

- 13. Legorreta AP, Christian-Herman J, O’Connor RD, Hasan MM, Evans R, Leung KM. Compliance with national asthma management guidelines and specialty care: a health maintenance organization experience. Arch Intern Med. 1998;158(5):457–464. doi: 10.1001/archinte.158.5.457.

- 14. Marcus P, Arnold RJ, Ekins S, et al. A retrospective randomized study of asthma control in the US: results of the CHARIOT study. Curr Med Res Opin. 2008;24(12):3443–3452. doi: 10.1185/03007990802557880.

- 15. Allareddy V, Allareddy V, Kaelber DC. Comparing perceptions and use of a commercial electronic medical record (EMR) between primary care and subspecialty physicians. AMIA Annu Symp Proc. 2006:841.

- 16. Schnipper JL, Linder JA, Palchuk MB, et al. “Smart Forms” in an Electronic Medical Record: documentation-based clinical decision support to improve disease management. J Am Med Inform Assoc. 2008;15(4):513–523. doi: 10.1197/jamia.M2501.

- 17. EPR-3. Expert Panel Report 3: Guidelines for the diagnosis and management of Asthma (EPR-3 2007) U.S. Department of Health and Human Services; National Institutes of Health; National Heart, Lung, and Blood Institute; National Asthma Education and Prevention Program; Bethesda, MD: 2007. NIH Publication No. 07-4051.

- 18. Guidelines implementation panel report for: EPR-3 - Guidelines for the diagnosis and management of asthma. U.S. Department of Health and Human Services; National Institutes of Health; National Heart, Lung, and Blood Institute; National Asthma Education and Prevention Program; Bethesda, MD: 2008. NIH Publication No. 09-7147.

- 19. Hoeksema LJ, Bazzy-Asaad A, Lomotan EA, et al. Accuracy of a computerized clinical decision-support system for asthma assessment and management. J Am Med Inform Assoc. 2011;18(3):243–250. doi: 10.1136/amiajnl-2010-000063.

- 20. Glaser BG, Strauss AL. The discovery of grounded theory: strategies for qualitative research. Aldine; Chicago: 1967.

- 21. Lo HG, Newmark LP, Yoon C, et al. Electronic health records in specialty care: a time-motion study. J Am Med Inform Assoc. 2007;14(5):609–615. doi: 10.1197/jamia.M2318.

- 22. Unertl KM, Weinger MB, Johnson KB. Applying direct observation to model workflow and assess adoption. AMIA Annu Symp Proc. 2006:794–798.

- 23. Unertl KM, Weinger MB, Johnson KB, Lorenzi NM. Modeling workflow and information flow in chronic disease care. J Am Med Inform Assoc. 2009;16(6):826–836. doi: 10.1197/jamia.M3000.

- 24. Unertl KM, Weinger M, Johnson K. Variation in use of informatics tools among providers in a diabetes clinic. AMIA Annu Symp Proc. 2007:756–760.

- 25. Grimshaw JM, Russell IT. Effect of clinical guidelines on medical practice: a systematic review of rigorous evaluations. Lancet. 1993;342(8883):1317–1322. doi: 10.1016/0140-6736(93)92244-n.

- 26. Anand V, Biondich PG, Liu G, Rosenman M, Downs SM. Child Health Improvement through Computer Automation: the CHICA system. Stud Health Technol Inform. 2004;107(Pt 1):187–191.

- 27. Moxey A, Robertson J, Newby D, Hains I, Williamson M, Pearson S. Computerized clinical decision support for prescribing: provision does not guarantee uptake. J Am Med Inform Assoc. 2010;17(1):25–33. doi: 10.1197/jamia.M3170.

- 28. Eccles M, McColl E, Steen N, et al. Effect of computerised evidence based guidelines on management of asthma and angina in adults in primary care: cluster randomised controlled trial. BMJ. 2002;325(7370):941. doi: 10.1136/bmj.325.7370.941.

- 29. Saleem JJ, Russ AL, Justice CF, et al. Exploring the persistence of paper with the electronic health record. Int J Med Inf. 2009;78(9):618–628. doi: 10.1016/j.ijmedinf.2009.04.001.

- 30. Ash JS, Gorman PN, Lavelle M, et al. A cross-site qualitative study of physician order entry. J Am Med Inform Assoc. 2003;10(2):188–200. doi: 10.1197/jamia.M770.

- 31. Bates DW, Kuperman GJ, Wang S, et al. Ten commandments for effective clinical decision support: making the practice of evidence-based medicine a reality. J Am Med Inform Assoc. 2003;10(6):523–530. doi: 10.1197/jamia.M1370.

- 32. Osheroff JA, Pifer EA, Teich JM, Sittig DF, Jenders RA. Improving outcomes with clinical decision support: an implementer’s guide. Health Information Management and Systems Society. 2005.

- 33. Holden RJ, Karsh BT. A theoretical model of health information technology behavior. Behav Inf Technol. 2009;28(1):21–38.

- 34. Karsh BT. Clinical improvement and redesign: how change in workflow can be supported by clinical decision support. Agency for Healthcare Research and Quality; Rockville, MD: Jun, 2009. AHRQ Publication No. 09-0054.

- 35. Lorenzi NM, Novak LL, Weiss JB, Gadd CS, Unertl KM. Crossing the implementation chasm: a proposal for bold action. J Am Med Inform Assoc. 2008;15(3):290–296. doi: 10.1197/jamia.M2583.