Abstract

Cooperation, where one individual incurs a cost to help another, is a fundamental building block of the natural world and of human society. It has been suggested that costly punishment can promote the evolution of cooperation, with the threat of punishment deterring free-riders. Recent experiments, however, have revealed the existence of ‘anti-social’ punishment, where non-cooperators punish cooperators. While various theoretical models find that punishment can promote the evolution of cooperation, these models a priori exclude the possibility of anti-social punishment. Here we extend the standard theory of optional public goods games to include the full set of punishment strategies. We find that punishment no longer increases cooperation, and that selection favors substantial levels of anti-social punishment for a wide range of parameters. Furthermore, we conduct behavioral experiments, which lead to results that are consistent with our model predictions. As opposed to an altruistic act that promotes cooperation, punishment is mostly a self-interested tool for protecting oneself against potential competitors.

Introduction

Explaining the evolution of cooperation is a topic of importance to both biologists and social scientists, and significant progress has been made in this area1–6. Various mechanisms such as reciprocal altruism, spatial selection, kin selection and multi-level selection have been proposed to explain the evolution of cooperation. In addition to these mechanisms, the role of costly punishment in promoting cooperation has received much attention. Many behavioral experiments have demonstrated that people are willing to incur costs to punish others7–14. Complementing these empirical findings, several evolutionary models have been developed to explore the potential effect of punishment on promoting cooperation15–20. Many researchers have concluded that the propensity to punish can encourage cooperation, although this position has not gone unquestioned12,21–24.

The positive role of punishment has been challenged by recent experimental work that shows the existence of a more sinister form of punishment: sometimes non-cooperators punish cooperators14,24–31. In western countries, this ‘anti-social punishment’ is generally rare, except when it comes in the form of retaliation for punishment received in repeated games14,24–26,28. A series of cross-cultural experiments, however, finds substantial levels of anti-social punishment which cannot be explained by explicit retaliation27,30,31 (see Supplementary notes for further analysis). It is this phenomenon of punishment targeted at cooperators, rather than explicit retaliation, which is the focus of our paper.

Anti-social punishment is puzzling, as it is inconsistent with both rational self-interest and the hypothesis that punishment facilitates cooperation. Social preference models of economic decision-making also predict that it should not occur32–35. Due to its seemingly illogical nature, anti-social punishment has been excluded a priori from most previous theoretical models for the evolution of cooperation, which only allow cooperators to punish defectors (exceptions include refs 22,23,36,37). Yet empirically, sometimes cooperators are punished, raising interesting evolutionary questions. What are the effects of anti-social punishment on the co-evolution of punishment and cooperation? And can the punishment of cooperators be explained in an evolutionary framework?

In this paper, we extend the standard theory of optional Public Goods Games17–19,38 to explore anti-social punishment of cooperators. We study a finite population of N individuals. In each round of the game, groups of size n are randomly drawn from the population to play a one-shot optional public goods game followed by punishment. Each player chooses whether to participate in the public goods game as a cooperator (C) or defector (D), or to abstain from the public goods game and operate as a loner (L). Each cooperator pays a cost c to contribute to the public good, which is multiplied by a factor r > 1, and split evenly among all participating players in the group. Loners pay no cost and receive no share of the public good, but instead receive a fixed payoff σ. This loner’s payoff is less than the (r-1)c payoff earned in a group of all cooperators, but greater than the 0 payoff earned in a group of all defectors. If only one group member chooses to participate, then all group members receive the loner’s payoff σ. Following the public goods game, each player has the opportunity to punish any or all of the n-1 other members of the group. A given player pays a cost γ for each other player he chooses to punish, and incurs a cost β for each punishment that he receives (γ < β).
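The payoff rules above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `group_payoffs` and the representation of punishment rules as sets are hypothetical, the default parameter values are taken from the Figure 1 caption, and the sketch assumes that the punishment stage is applied to players' chosen actions even when the public goods game itself does not take place (the text does not specify this case).

```python
def group_payoffs(actions, punish_rules, r=3.0, c=1.0, sigma=1.0, gamma=0.3, beta=1.0):
    """Payoffs for one group in a one-shot optional public goods game with punishment.

    actions      -- list of 'C', 'D' or 'L', one entry per group member
    punish_rules -- list of sets; punish_rules[i] holds the actions that player i punishes
    """
    n = len(actions)
    participants = [a for a in actions if a in ("C", "D")]

    if len(participants) <= 1:
        # With at most one participant the game does not take place
        # and every group member receives the loner's payoff.
        payoffs = [sigma] * n
    else:
        # Contributions are multiplied by r and split among participants only.
        share = r * c * participants.count("C") / len(participants)
        payoffs = []
        for a in actions:
            if a == "C":
                payoffs.append(share - c)
            elif a == "D":
                payoffs.append(share)
            else:
                payoffs.append(sigma)

    # Punishment stage (assumption: applied to chosen actions in all cases).
    for i in range(n):
        for j in range(n):
            if i != j and actions[j] in punish_rules[i]:
                payoffs[i] -= gamma  # cost paid by the punisher
                payoffs[j] -= beta   # cost incurred by the punished player
    return payoffs


# A group of five non-punishing cooperators: each earns (r - 1) * c.
all_cooperate = group_payoffs(["C"] * 5, [set()] * 5)
```

With the default values, a group of all cooperators earns (r-1)c = 2 each and a group of all defectors earns 0 each, so the loner's payoff σ = 1 lies between the two, as the model requires.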

Each of the N players has a strategy, which specifies her action in the public goods game (C, D or L). Each player also has a decision rule for the punishment round that specifies whether she punishes those members of her group who cooperated in the public goods game, those who defected, or those who opted out. For example, a C-NPN strategist cooperates in the public goods game, does not punish cooperators, punishes defectors, and does not punish loners; and an L-PNN strategist opts out of the public goods game, punishes cooperators, and does not punish defectors or loners. In total there are 24 strategies. We contrast this full strategy set with the limited strategy set that has been considered before17–19. The limited set has only four strategies: cooperators that never punish, defectors that never punish, loners that never punish, and cooperators that punish defectors.
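As a quick check on the strategy count, the full set can be enumerated directly. The naming convention follows the text (action, then the punishment decision towards cooperators, defectors and loners); the variable and function names are illustrative only.

```python
from itertools import product

ACTIONS = ("C", "D", "L")  # cooperate, defect, or abstain as a loner


def all_strategies():
    """Enumerate every strategy as 'X-Y1Y2Y3' (N = no action, P = punish)."""
    strategies = []
    for action in ACTIONS:
        for rule in product("NP", repeat=3):
            strategies.append(f"{action}-{''.join(rule)}")
    return strategies


FULL_SET = all_strategies()

# The limited set considered in earlier work, in the same notation.
LIMITED_SET = ["C-NNN", "D-NNN", "L-NNN", "C-NPN"]
```

Three public goods actions combined with 2 × 2 × 2 punishment rules give the 24 strategies of the full set, of which the limited set is a four-element subset.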

We study the transmission of strategies through an evolutionary process, which can be interpreted either as genetic evolution or as social learning. In both cases, strategies which earn higher payoffs are more likely to spread in the population, while lower payoff strategies tend to die out. Novel strategies are introduced by mutation in the case of genetic evolution, or innovation and experimentation in the case of social learning. We use a frequency dependent Moran process39 with an exponential payoff function40. We perform exact numerical calculations in the limit of low mutation41,42, which characterizes genetic evolution and the long-term evolution of societal norms, as well as agent based simulations for higher mutation rates which may be more appropriate for short-term learning and exploration dynamics19,43 (see Supplementary Methods for details).
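For readers unfamiliar with the approach, the following sketch computes the fixation probability of a single mutant in a frequency dependent Moran process with exponential fitness, using the standard birth-death formula. It is not the authors' code: the selection intensity `w` and the two payoff callables are assumptions introduced for illustration, and the exact specification used in the paper is given in the Supplementary Methods.

```python
import math


def fixation_probability(mutant_payoff, resident_payoff, N, w=1.0):
    """Probability that one mutant takes over a resident population of size N.

    mutant_payoff(i), resident_payoff(i) -- expected payoffs when i mutants are present
    w -- selection intensity (an assumed parameter); fitness is exp(w * payoff)
    """
    total = 1.0
    log_product = 0.0
    for k in range(1, N):
        # Ratio of resident to mutant fitness with k mutants:
        # exp(w * pi_resident) / exp(w * pi_mutant).
        log_product += w * (resident_payoff(k) - mutant_payoff(k))
        if log_product > 700.0:
            return 0.0  # fixation is effectively impossible; avoids overflow in exp
        total += math.exp(log_product)
    return 1.0 / total


# Neutral mutant in a population of 100: fixation probability 1/N.
neutral = fixation_probability(lambda i: 0.0, lambda i: 0.0, N=100)
neutral_rate = 100 * neutral  # fixation probability multiplied by population size
```

In the low mutation limit the population is almost always homogeneous, so the long-run dynamics are determined by these pairwise fixation probabilities between strategies.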

In summary, we find that although punishment dramatically increases cooperation when only cooperators can punish defectors, this positive effect of punishment disappears almost entirely when the full set of punishment strategies is allowed. Just as punishment protects cooperators from invasion by defectors, it also protects defectors from invasion by loners, and loners from invasion by cooperators. Thus punishment is not ‘altruistic’ or particularly linked to cooperation. Instead natural selection favors substantial amounts of punishment targeted at all three public goods game actions, including cooperation. Furthermore, we find that the parameter sets which lead to high levels of cooperation (and little anti-social punishment) are those with efficient public goods (large r) and very weak punishment (small β). Finally, we generate testable predictions using our evolutionary model, and present preliminary experimental evidence which is consistent with those predictions.

Results

Effect of allowing the full set of punishment strategies

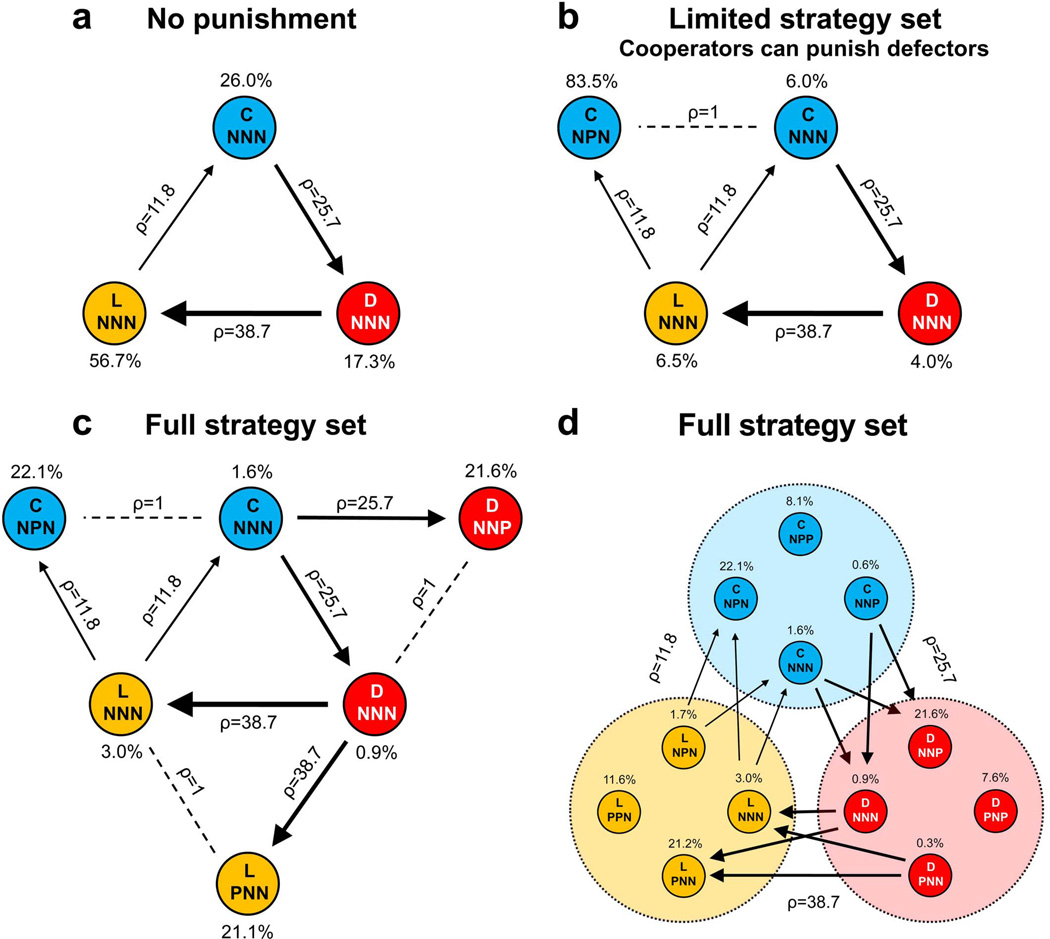

In the absence of punishment, defectors invade cooperators, loners invade defectors and cooperators invade loners44, as in a rock-paper-scissors cycle (Figure 1a). The system spends a similar amount of time in each of the three behavioral states, and cooperation is not the dominant outcome (although there is more cooperation than in the game without loners). If cooperators are allowed to punish defectors, however, the cooperator-defector-loner cycle is broken when the system reaches punishing cooperators (Figure 1b). For this limited strategy set, the population spends the vast majority of its time in a cooperative state17.
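The claim that a rock-paper-scissors cycle spends similar time in each state can be illustrated with a small Markov chain over homogeneous populations. The transition rates below are hypothetical placeholders rather than values from the model, and the helper name `stationary_distribution` is introduced only for this sketch; it assumes at least one positive rate.

```python
def stationary_distribution(rates, steps=10_000):
    """Long-run share of time in each state, given rates[i][j] for transitions i -> j."""
    m = len(rates)
    out = [sum(rates[i][j] for j in range(m) if j != i) for i in range(m)]
    # Scale so that every state keeps a self-loop; this makes the chain aperiodic.
    scale = 2.0 * max(out)
    P = [
        [rates[i][j] / scale if i != j else 1.0 - out[i] / scale for j in range(m)]
        for i in range(m)
    ]
    dist = [1.0 / m] * m
    for _ in range(steps):  # power iteration
        dist = [sum(dist[i] * P[i][j] for i in range(m)) for j in range(m)]
    return dist


# States ordered C, D, L. Defectors invade cooperators (C -> D), loners invade
# defectors (D -> L) and cooperators invade loners (L -> C), at equal made-up rates.
cycle = [
    [0.0, 10.0, 0.0],
    [0.0, 0.0, 10.0],
    [10.0, 0.0, 0.0],
]
time_shares = stationary_distribution(cycle)
```

With equal rates around the cycle each state receives one third of the time. Making one transition faster shortens the time spent in the state it leaves without giving any state a lasting advantage.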

Figure 1. Using the full punishment strategy set, anti-social punishment is common and punishment does not promote cooperation.

Time-averaged frequencies of each strategy and transition rates between homogeneous populations, (a) without punishment, (b) when cooperators can punish defectors, and (c,d) with the full set of punishment strategies. A strategy X-Y1Y2Y3 is defined by a public goods game action (X: C=cooperator, D=defector, L=loner), a punishment decision taken towards cooperators (Y1: N = no action, P = punish), defectors (Y2: N or P) and loners (Y3: N or P). Transition rates ρ are the probability that a new mutant goes to fixation multiplied by the population size. We indicate neutral drift (ρ = 1, dotted lines), slow transitions (ρ = 11.8, thin lines), intermediate transitions (ρ = 25.7, medium lines) and fast transitions (ρ = 38.7, thick lines). Transitions with rates less than 0.1 are not shown. Parameter values are N = 100, n = 5, r = 3, c = 1, γ = 0.3, β = 1 and σ = 1. In panels c and d, strategies which punish others taking the same public goods action are included in the analysis, but not pictured because they are strongly disfavored by selection and virtually non-existent in the steady state distribution. For clarity, transitions with ρ < 10 are not shown in panel d.

But what happens when all punishment strategies are available? Now the cooperator-defector-loner cycle can be broken as easily in the loner or defector states as in a cooperative state (Figure 1c,d). Without punishment, loners are invaded by cooperators; but loners that punish cooperators are protected from such an invasion. Similarly, defectors are invaded by loners; but defectors who punish loners are protected. Thus, when all punishment strategies are available, the dynamics effectively revert back to the original cooperator-defector-loner cycle. The salient difference is that now the most successful strategies use punishment against threatening invaders. We see that adding punishment does not provide much benefit to cooperators once the option to punish is available to all individuals, instead of being artificially restricted to cooperators punishing defectors. Furthermore, in this light non-cooperative strategies that pay to punish cooperators seem less surprising, and we see why natural selection can lead to the evolution of anti-social punishment.

Robustness to parameter variation

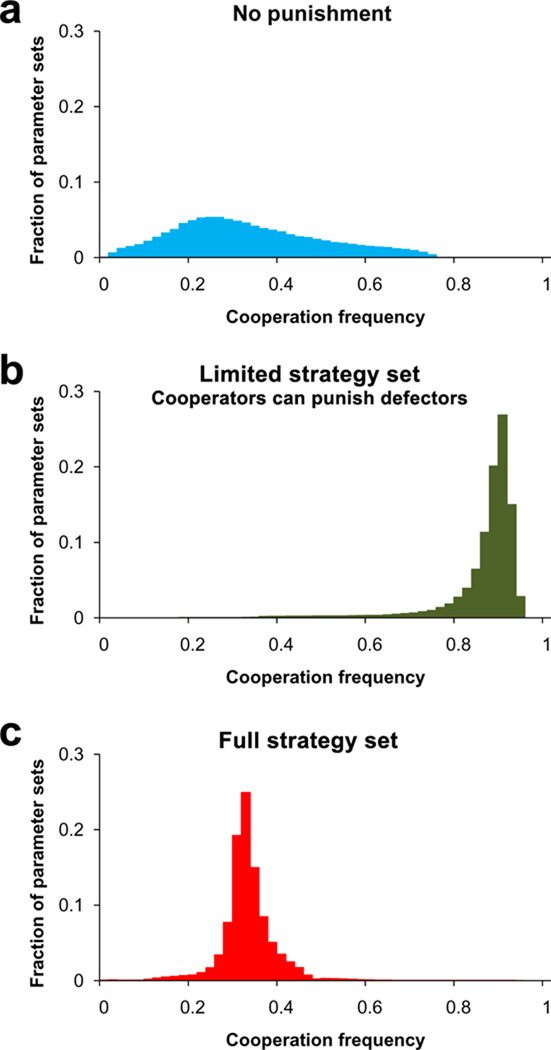

These results are not particular to the parameter values used in Figure 1. We have examined the steady state frequency of each strategy averaged over 100,000 randomly sampled parameter sets (see Supplementary notes). The outcome is remarkably similar to what is observed in Figure 1. The average level of cooperation is 34% in the absence of punishment, jumps to 87% with restricted punishment, and falls back to 34% with the full punishment strategy set. Although restricted punishment makes cooperation the dominant outcome for the vast majority of parameter sets, the full punishment strategy set does not (Figure 2). For more than 98% of the randomly chosen parameter sets, the frequency of cooperation is below 0.5.

Figure 2. Robustness of results across parameter sets.

Shown are the results of 100,000 numerical calculations using N = 100, n = 5, c = 1 and randomly sampling from uniform distributions on the intervals 1 < r < 5, 0 < σ < (r-1)c, 0 < γ < 5, and γ < β < 5γ. Results are shown for (a) the no-punishment strategy set, (b) the restricted punishment strategy set where only cooperators can punish defectors, and (c) the full punishment strategy set.
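The sampling scheme described in this caption can be sketched as follows. The function name, the dictionary layout and the random seed are illustrative choices, not taken from the paper.

```python
import random


def sample_parameters(rng, c=1.0):
    """Draw one parameter set from the uniform intervals given in the caption."""
    r = rng.uniform(1.0, 5.0)               # 1 < r < 5
    sigma = rng.uniform(0.0, (r - 1.0) * c)  # 0 < sigma < (r - 1) c
    gamma = rng.uniform(0.0, 5.0)           # 0 < gamma < 5
    beta = rng.uniform(gamma, 5.0 * gamma)  # gamma < beta < 5 gamma
    return {"N": 100, "n": 5, "c": c, "r": r, "sigma": sigma, "gamma": gamma, "beta": beta}


rng = random.Random(0)  # fixed seed so the sketch is reproducible
samples = [sample_parameters(rng) for _ in range(1000)]
```

Because β is drawn conditionally on γ, its expected value under this scheme is 3·E[γ] = 7.5, in line with the overall average of β = 7.45 reported in the Discussion.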

We also find that the evolutionary success of anti-social punishment in the full strategy set is robust to variation in the payoff values. The frequencies of all three forms of punishment averaged over the 100,000 parameter sets are quite similar (punish cooperators, 40%; punish defectors, 41%; punish loners, 37%), and the frequency of each punishment type varies relatively little across parameter sets.

Furthermore, these results are not unique to the low mutation limit. Agent based simulations for higher mutation rates show that (i) punishment does not increase the average frequency of cooperation in the full strategy set; and (ii) all three forms of punishment (anti-C, anti-D and anti-L) have similar average frequencies (see Supplementary notes for details).

Discussion

Here we have shown how evolution can lead to punishment targeted at cooperators. We find that in our framework, loners are largely responsible for this anti-social punishment, and that it protects them against invasion by cooperators. The concept of loners punishing cooperators may seem strange given that loners could be envisioned as trying to avoid interactions with others. However, we find strong selection pressure in favor of such behavior: loners who avoid others in the context of the public goods game, but subsequently seek out and punish cooperators, outcompete fully solitary loners.

Our findings raise serious questions about the commonly held view that punishment promotes the evolution of cooperation. There is no reason to assume a priori that only cooperators punish others. In fact, there is substantial empirical evidence to the contrary24–31. As we have shown, using the full strategy set dramatically changes the evolutionary outcome, and punishment no longer increases cooperation. These results highlight the importance of not restricting analysis to a subset of strategies, and emphasize the need to reexamine other models of the co-evolution of punishment and cooperation while including all possible punishment strategies23,36.

But if our framework suggests that the taste for punishment did not evolve to promote cooperation, then why do humans display the desire to punish? And why do many behavioral experiments find that punishment discourages free-riding in the lab7–12,14? As opposed to being particularly suited to protecting cooperators from free-riders, our model suggests that costly punishment is an effective tool for subduing potential invaders of any kind. This finding is reminiscent of early work on the ability of punishment to stabilize disadvantageous norms20, while adding the critical step of the emergence of punishing behavior. Our results are also suggestive of a type of in-group bias45–50, as our most successful strategists punish those who are different from themselves, while not punishing those who are the same.

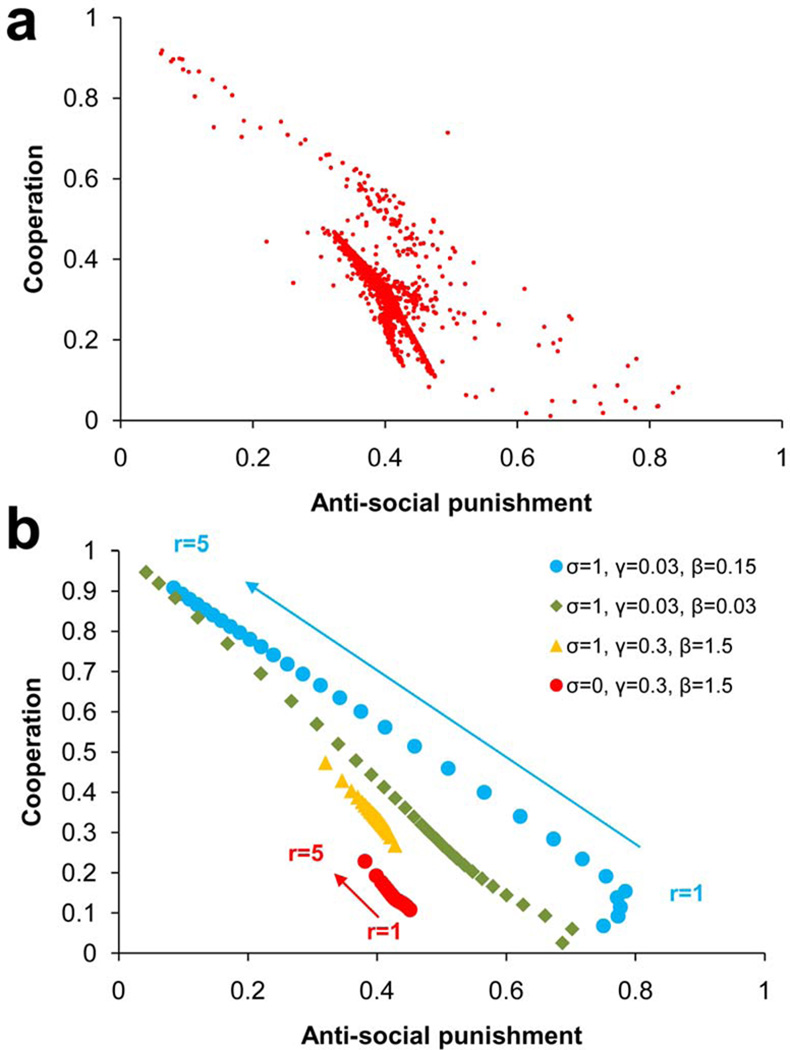

Therefore we would expect the level of anti-social punishment to vary depending on the makeup of the population. In populations with a high frequency of cooperators, such as those societies in which most previous behavioral experiments have been conducted, we anticipate punishment to be largely directed towards defectors. In populations where cooperators are less common, however, we expect higher levels of punishment targeted at cooperators. Consistent with this intuition, we find a clear inverse relationship between steady state cooperation frequency and anti-social punishment across randomly sampled parameter sets (Figure 3a).

Figure 3. Inverse relationship between cooperation and anti-social punishment across parameters.

(a) The steady state frequency of cooperation and anti-social punishment from 5,000 random parameter sets is shown. An inverse relationship is clearly visible: when anti-social punishment is rare, cooperation (and pro-social punishment) are common. (b) To explore this relationship, we vary r from (σ+c) to 5 for various values of σ, γ, and β, fixing N=100, n=5, and c=1. We see that increasing r always increases cooperation while decreasing anti-social punishment. We also see that when β is small, the range of cooperation and anti-social punishment values is large, whereas values are tightly constrained when β is larger. Achieving high levels of cooperation and low levels of anti-social punishment requires both large expected returns on public investment (large r) and symbolic punishments (small β).

Examining specific parameter sets we find that a larger cooperation multiplier, r, increases cooperation and decreases anti-social punishment (Figure 3b). The importance of r is further demonstrated by a sensitivity analysis calculating the marginal effect of each parameter (see Supplementary notes). In addition to having large r, we find that punishment must be largely ineffective in order to achieve a high level of cooperation. Among the parameter sets in Figure 3a with steady state cooperation over 65%, the average effect of punishment is β = 0.11 (compared to an overall average of β = 7.45). Systematic parameter variation gives further evidence of an interaction between r and β (see Supplementary notes). When punishment is weak, a wide range of outcomes is possible, including high levels of cooperation if r is large. When punishment is strong, however, all strategies can effectively protect against invasion. Thus neutral drift between punishing and non-punishing strategies dominates the dynamics, and the range of outcomes is tightly constrained. These relationships between cooperation, anti-social punishment and the payoff parameters r and β are consistent with cross-cultural sociological evidence (see Supplementary notes). Exploring the connection between model parameters, sociological variation and play in experimental games is an important direction for future research across societies.

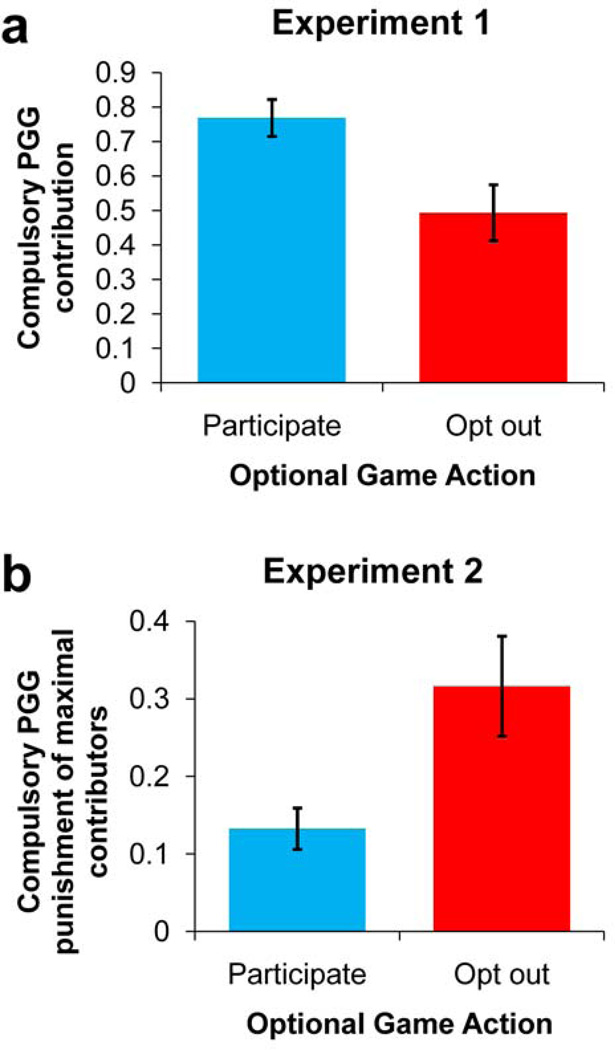

Taken together with data from cross-cultural experiments27,30,31, our evolutionary model generates testable predictions about behavior in the laboratory. Most previous experiments on public goods have explored compulsory games, which do not offer the choice to abstain in favor of a fixed loner’s payoff (an exception is ref 38 where many people do take the loner’s payoff when offered). In the compulsory framework, cross-cultural experiments find evidence of low contributors who punish high contributors. Our model, which is based on an optional public goods game, finds that most punishment of cooperators comes from loners rather than defectors. Therefore, our evolutionary model makes two testable predictions about behavior in the lab: (i) that many low contributors in compulsory games will opt for the loner’s payoff if given the chance; and (ii) that players who take the loner’s payoff in an optional game will engage in more anti-social punishment in a compulsory game.

To begin evaluating these predictions, we use the internet to recruit participants51,52 for two incentivized behavioral experiments46,47 (see Methods for experimental details, and Supplementary notes for statistical analysis). In the first experiment, subjects engage in both an optional and a compulsory one-shot public goods game. Consistent with our first theoretical prediction, we find that subjects who choose the loner’s payoff in the optional game contribute significantly less in the compulsory game (Figure 4a). Thus many subjects who appear to be defectors in the compulsory game prefer to be loners, and may be bringing intuitions evolved as loners to bear in the experiment. To test our second theoretical prediction, the second experiment has subjects participate in two one-shot public goods games, an optional game followed by a compulsory game with costly punishment. The results are again in agreement with the model’s prediction: subjects who opt out of the optional game engage in significantly more punishment of high contributors (Figure 4b). Thus these experiments provide preliminary empirical evidence in support of our theoretical framework, although intuitions evolved in optional games are not the only possible explanation for the data. Further experimental work exploring cooperation and punishment in optional games, as well as the relationship between play in optional and compulsory games, is an important direction for future research.

Figure 4. Two behavioral experiments are consistent with model predictions.

(a) In Experiment 1, subjects play an optional public goods game followed by a compulsory public goods game. The average fraction contributed in the compulsory game is significantly lower among subjects who opt out of the optional game (Rank-sum, N=73, p=0.006). Thus as predicted, loners contribute less in compulsory games. (b) In Experiment 2, subjects play an optional game followed by a compulsory game with costly punishment. Subjects indicate how much (0, 1 or 2) they would punish each possible contribution level in the compulsory game. Subjects who opt out of the optional game invest significantly more in punishing those that contribute the maximal amount (Rank-sum, N=196, p=0.003). Thus as predicted, loners engage in more anti-social punishment. All games are one-shot interactions among 4 players, with contributions to the public good multiplied by 2. Punishment technology is 1:3 (each cent spent on punishment reduces the target's payoff by 3 cents). Results are robust to various controls and alternate methods of analysis; see Supplementary notes for details.

We have also performed an evolutionary analysis of the compulsory game, where opting out is not possible (see Supplementary notes). Here we still find that anti-social punishment is favored by selection; that cooperation and anti-social punishment are inversely correlated; and that non-negligible amounts of pro- and anti-social punishment co-occur in many parameter sets. In the compulsory game, however, cooperation is never favored over defection using the full strategy set, and anti-social defectors are always the most common strategy. Thus, modeling the game exactly as it is performed in the experiments cannot explain the behaviors that are often observed in such experiments. The preferences displayed by subjects in these experiments must have evolved under circumstances that are somewhat different from those encountered in the experiments (see Supplementary notes). Adding the possibility to abstain leads to a model which can describe the range of experimental behaviors.

We have shown that although punishment does not increase cooperation or aggregate payoffs in our model, there is nonetheless an incentive to punish. Once punishment becomes available, it is essential for each strategy type to adopt it so as to protect against dominance by similarly armed others. As opposed to shifting the balance of strategies towards cooperation, punishment works to maintain the status quo. This maintenance, however, comes at a high price. Punishment is destructive for all parties and thus reduces the average payoff, without creating the benefit of increased cooperation. If all parties could agree to abandon punishment, everyone would benefit; but in a world without punishment, a strategy that switched to punishing potential invaders would dominate. Therefore choosing to punish is not altruistic in our framework, but rather self-interested.

Punishing leads to a tragedy of the commons where all individuals are forced to adopt punishment strategies. Abstaining from punishment becomes an act of cooperation, while using punishment is a form of second-order defection. The cooperative imperative is not the promotion of punishment, which is costly yet ineffective in our model, but instead the maintenance of cooperation through non-destructive means12,53,54.

Methods

Experimental overview

Together with previous experiments on compulsory games27, our model makes testable predictions about the behavior of experimental subjects: loners are predicted to be lower contributors in compulsory games, and to be most likely to punish high contributors. To evaluate these predictions, we conducted two incentivized behavioral experiments. Experiment 1 investigates the contribution behavior of loners in a compulsory game, while Experiment 2 considers the degree of anti-social punishment exhibited by loners in a compulsory game.

Recruitment using Amazon Mechanical Turk

Both experiments were conducted via the internet, using Amazon Mechanical Turk (AMT) to recruit subjects. AMT is an online labor market in which employers contract workers to perform short tasks (typically less than 5 minutes) in exchange for small payments (typically less than $1). The amount paid can be conditioned on the outcome of the task, allowing for performance-dependent payments and incentive-compatible designs. AMT therefore offers an unprecedented tool for quickly and inexpensively recruiting subjects for economic game experiments. Although potential concerns exist regarding conducting experiments over the internet, numerous recent papers have demonstrated the reliability of data gathered using AMT across a range of domains51,52,55–58. Most relevant for our experiments are two studies using economic games. The first shows quantitative agreement in contribution behavior in a repeated public goods game between experiments conducted in the physical lab and those conducted using AMT with approximately 10-fold lower stakes58. The second again shows quantitative agreement between the lab and AMT, this time in cooperation in a one-shot Prisoner’s Dilemma51. It has also been shown that AMT subjects display a level of test-retest reliability similar to what is seen in the traditional physical laboratory on measures of political beliefs, self-esteem, Social Dominance Orientation, and Big-Five personality traits56, as well as demographic variables such as belief in God, age, gender, education level and income52,57; that AMT subjects do not differ significantly from college undergraduates in terms of attentiveness or basic numeracy skills, and demonstrate similar effect sizes as undergraduates in tasks examining framing effects, the conjunction fallacy and outcome bias55; and that AMT subjects are significantly more representative of the American population than undergraduates56. Thus there is ample reason to believe in the validity of experiments conducted using subjects recruited from AMT.

Experiment 1 design

In October 2010, 124 subjects were recruited through AMT to participate in Experiment 1, and assigned to either a treatment or control condition. Subjects received a $0.20 show-up fee for participating, and earned on average an additional $0.63 based on decisions made in the experiment. First, subjects read a set of instructions for a one-shot public goods game, in which groups of 4 interact with a cooperation multiplier of r = 2, each choosing how much of a $0.40 endowment to contribute (in increments of 2 cents to avoid fractional cent amounts). Subjects then answered two comprehension questions to ensure they understood the payoff structure, and only those who answered correctly were allowed to participate.

The 73 subjects randomly assigned to the treatment group were then informed that the game was optional, and they could choose either to participate or to abstain in favor of a fixed payoff of $0.50. Those who chose to participate then indicated their level of contribution. Next, subjects were informed that they would play a second game with 3 new partners, which was a compulsory version of the first game. They were further informed that one of the two games (optional or compulsory) would be randomly selected to determine their payoff. This was done to keep the payoff range the same as in the control condition described below, where subjects played only one game. In order to prevent between-game learning, subjects were not informed about the outcome of the optional game before making their decision in the compulsory game. To test if behavior in the compulsory game was affected by the preceding optional game, the remaining 51 subjects were assigned to a control condition in which they played only a compulsory public goods game. Payoffs were determined exactly as described (no deception was used).

Experiment 2 design

In November 2010, 196 subjects were recruited through AMT to participate in Experiment 2. Subjects received a $0.40 show-up fee for participating, and earned on average an additional $0.92 based on decisions made in the experiment. As in Experiment 1, subjects began by reading a set of instructions for a one-shot public goods game with groups of 4, a cooperation multiplier of r = 2, and a $0.40 endowment, and then answered two comprehension questions in order to participate. Subjects were then informed that the game was optional, and decided whether to participate or opt out for a fixed $0.50 payment. Subjects who chose to participate were given 5 contribution levels to choose from: 0, 10, 20, 30 or 40 cents. Thus the first game of Experiment 2 is identical to that of Experiment 1's treatment condition, except for a more limited set of contribution options to facilitate punishment decisions as described below.

Subjects were then informed that they would play a second game with 3 new partners, which differed from the first game in two ways: it was compulsory, and it would be followed by a Stage 2 in which participants could interact directly with each other group member. In Stage 2, subjects had three direct actions to pick from: choosing option A had no effect on either player; choosing option B caused them to lose 4 cents while the other player lost 12 cents; and choosing option C caused them to lose 8 cents while the other player lost 24 cents. Thus we offered mild (B) and severe (C) punishment options with a 1:3 punishment technology. Subjects were allowed to condition their Stage 2 choice on the other player's contribution in the compulsory public goods game. To do so, we employ the strategy method: subjects indicate which action (A, B, C) they would take towards group members choosing each possible contribution level (0, 10, 20, 30, 40). It has been shown that using the strategy method to elicit punishment decisions has a quantitative (although not qualitative) effect on the level of punishment targeted at defectors, but has little effect on punishment targeted at cooperators59. As anti-social punishment is our main focus, we therefore feel confident in our use of the strategy method. In order to prevent between-game learning, subjects were not informed about the outcome of the optional game before making their decision in the compulsory game. Payoffs were determined exactly as described (no deception was used).

See Supplementary notes for further details of the experimental setup, sample instructions, and detailed analysis of the results.

Acknowledgements

We thank Anna Dreber, Simon Gächter, Christoph Hauert, Benedikt Herrmann, Karl Sigmund, and Arne Traulsen for helpful comments and suggestions, Martijn Egas, Benedikt Herrmann and Simon Gächter for sharing their experimental data, and Nicos Nikiforakis for the use of his publicly available data. Support from the John Templeton Foundation, the NSF/NIH joint program in mathematical biology (NIH grant R01GM078986), the Bill and Melinda Gates Foundation (Grand Challenges grant 37874), and J. Epstein is gratefully acknowledged.

Footnotes

Author contributions: DR and MN designed and executed the research and wrote the paper.

The authors have no competing interests to declare.

References

- 1. Nowak MA. Five rules for the evolution of cooperation. Science. 2006;314:1560–1563. doi: 10.1126/science.1133755.

- 2. Sigmund K. The calculus of selfishness. Princeton Univ Press; 2010.

- 3. Ostrom E. Governing the commons: The evolution of institutions for collective action. Cambridge Univ Pr; 1990.

- 4. Cressman R. The stability concept of evolutionary game theory: a dynamic approach. Springer-Verlag; 1992.

- 5. Helbing D, Yu W. The outbreak of cooperation among success-driven individuals under noisy conditions. Proceedings of the National Academy of Sciences. 2009;106:3680–3685. doi: 10.1073/pnas.0811503106.

- 6. Worden L, Levin SA. Evolutionary escape from the prisoner's dilemma. Journal of theoretical biology. 2007;245:411–422. doi: 10.1016/j.jtbi.2006.10.011.

- 7. Yamagishi T. The provision of a sanctioning system as a public good. Journal of Personality and Social Psychology. 1986;51:110–116.

- 8. Ostrom E, Walker J, Gardner R. Covenants With and Without a Sword: Self-Governance is Possible. The American Political Science Review. 1992;86:404–417.

- 9. Fehr E, Gächter S. Cooperation and punishment in public goods experiments. American Economic Review. 2000;90:980–994.

- 10. Gürerk O, Irlenbusch B, Rockenbach B. The competitive advantage of sanctioning institutions. Science. 2006;312:108–111. doi: 10.1126/science.1123633.

- 11. Rockenbach B, Milinski M. The efficient interaction of indirect reciprocity and costly punishment. Nature. 2006;444:718–723. doi: 10.1038/nature05229.

- 12. Rand DG, Dreber A, Ellingsen T, Fudenberg D, Nowak MA. Positive Interactions Promote Public Cooperation. Science. 2009;325:1272–1275. doi: 10.1126/science.1177418.

- 13. Ule A, Schram A, Riedl A, Cason TN. Indirect Punishment and Generosity Toward Strangers. Science. 2009;326:1701–1704. doi: 10.1126/science.1178883.

- 14. Janssen MA, Holahan R, Lee A, Ostrom E. Lab Experiments for the Study of Social-Ecological Systems. Science. 2010;328:613–617. doi: 10.1126/science.1183532.

- 15. Boyd R, Gintis H, Bowles S, Richerson PJ. The evolution of altruistic punishment. Proc Natl Acad Sci USA. 2003;100:3531–3535. doi: 10.1073/pnas.0630443100.

- 16. Nakamaru M, Iwasa Y. The coevolution of altruism and punishment: Role of the selfish punisher. Journal of theoretical biology. 2006;240:475–488. doi: 10.1016/j.jtbi.2005.10.011.

- 17. Hauert C, Traulsen A, Brandt H, Nowak MA, Sigmund K. Via Freedom to Coercion: The Emergence of Costly Punishment. Science. 2007;316:1905–1907. doi: 10.1126/science.1141588.

- 18. Fowler JH. Altruistic punishment and the origin of cooperation. Proc Natl Acad Sci U S A. 2005;102:7047–7049. doi: 10.1073/pnas.0500938102.

- 19. Traulsen A, Hauert C, De Silva H, Nowak MA, Sigmund K. Exploration dynamics in evolutionary games. Proceedings of the National Academy of Sciences. 2009;106:709–712. doi: 10.1073/pnas.0808450106.

- 20. Boyd R, Richerson P. Punishment Allows the Evolution of Cooperation (or Anything Else) in Sizable Groups. Ethology and Sociobiology. 1992;13:171–195.

- 21. Burnham T, Johnson D. The evolutionary and biological logic of human cooperation. Analyse & Kritik. 2005;27:113–135.

- 22. Janssen MA, Bushman C. Evolution of cooperation and altruistic punishment when retaliation is possible. Journal of theoretical biology. 2008;254:541–545. doi: 10.1016/j.jtbi.2008.06.017.

- 23. Rand DG, Armao JJ, IV, Nakamaru M, Ohtsuki H. Anti-social punishment can prevent the co-evolution of punishment and cooperation. Journal of theoretical biology. 2010;265:624–632. doi: 10.1016/j.jtbi.2010.06.010.

- 24. Dreber A, Rand DG, Fudenberg D, Nowak MA. Winners don't punish. Nature. 2008;452:348–351. doi: 10.1038/nature06723.

- 25. Cinyabuguma M, Page T, Putterman L. Can second-order punishment deter perverse punishment? Experimental Economics. 2006;9:265–279.

- 26. Denant-Boemont L, Masclet D, Noussair C. Punishment, counterpunishment and sanction enforcement in a social dilemma experiment. Economic Theory. 2007;33:145–167.

- 27. Herrmann B, Thöni C, Gächter S. Antisocial punishment across societies. Science. 2008;319:1362–1367. doi: 10.1126/science.1153808.

- 28. Nikiforakis N. Punishment and Counter-punishment in Public Goods Games: Can we still govern ourselves? Journal of Public Economics. 2008;92:91–112.

- 29. Wu J-J, et al. Costly punishment does not always increase cooperation. Proceedings of the National Academy of Sciences. 2009;106:17448–17451. doi: 10.1073/pnas.0905918106.

- 30. Gächter S, Herrmann B. Reciprocity, culture and human cooperation: previous insights and a new cross-cultural experiment. Philosophical Transactions of the Royal Society B: Biological Sciences. 2009;364:791–806. doi: 10.1098/rstb.2008.0275.

- 31. Gächter S, Herrmann B. The Limits of Self-Governance when Cooperators Get Punished: Experimental Evidence from Urban and Rural Russia. Eur. Econ. Rev. (In press).

- 32. Rabin M. Incorporating Fairness into Game Theory and Economics. The American Economic Review. 1993;83:1281–1302.

- 33. Fehr E, Schmidt K. A theory of fairness, competition and cooperation. Quarterly Journal of Economics. 1999;114:817–868.

- 34. Bolton GE, Ockenfels A. ERC: A Theory of Equity, Reciprocity, and Competition. The American Economic Review. 2000;90:166–193.

- 35. Dufwenberg M, Kirchsteiger G. A theory of sequential reciprocity. Games and Economic Behavior. 2004;47:268–298.

- 36. Rand DG, Ohtsuki H, Nowak MA. Direct reciprocity with costly punishment: Generous tit-for-tat prevails. J Theor Biol. 2009;256:45–57. doi: 10.1016/j.jtbi.2008.09.015.

- 37. Sethi R. Evolutionary stability and social norms. Journal of economic behavior & organization. 1996;29:113–140.

- 38. Semmann D, Krambeck H-J, Milinski M. Volunteering leads to rock-paper-scissors dynamics in a public goods game. Nature. 2003;425:390–393. doi: 10.1038/nature01986.

- 39. Nowak MA, Sasaki A, Taylor C, Fudenberg D. Emergence of cooperation and evolutionary stability in finite populations. Nature. 2004;428:646–650. doi: 10.1038/nature02414.

- 40. Traulsen A, Shoresh N, Nowak M. Analytical Results for Individual and Group Selection of Any Intensity. Bulletin of Mathematical Biology. 2008;70:1410–1424. doi: 10.1007/s11538-008-9305-6.

- 41. Imhof LA, Fudenberg D, Nowak MA. Evolutionary cycles of cooperation and defection. Proceedings of the National Academy of Sciences of the United States of America. 2005;102:10797–10800. doi: 10.1073/pnas.0502589102.

- 42. Fudenberg D, Imhof LA. Imitation processes with small mutations. Journal of Economic Theory. 2006;131:251–262.

- 43. Traulsen A, Semmann D, Sommerfeld RD, Krambeck H-J, Milinski M. Human strategy updating in evolutionary games. Proceedings of the National Academy of Sciences. 2010;107:2962–2966. doi: 10.1073/pnas.0912515107.

- 44. Hauert C, De Monte S, Hofbauer J, Sigmund K. Volunteering as Red Queen Mechanism for Cooperation in Public Goods Games. Science. 2002;296:1129–1132. doi: 10.1126/science.1070582.

- 45. Tajfel H, Billig RP, Flament C. Social categorization and intergroup behavior. European Journal of Social Psychology. 1971;1:149–178.

- 46. Rand DG, et al. Dynamic Remodeling of In-group Bias During the 2008 Presidential Election. Proc Natl Acad Sci U S A. 2009;106:6187–6191. doi: 10.1073/pnas.0811552106.

- 47. Brewer MB. In-group bias in the minimal intergroup situation: A cognitive-motivational analysis. Psychological bulletin. 1979;86:307.

- 48. Yamagishi T, Jin N, Kiyonari T. Bounded Generalized Reciprocity: Ingroup Boasting and Ingroup Favoritism. Advances in Group Processes. 1999;16:161–197.

- 49. Fowler JH, Kam CD. Beyond the Self: Social Identity, Altruism, and Political Participation. Journal of Politics. 2007;69:813–827.

- 50. Fershtman C, Gneezy U. Discrimination in a Segmented Society: An Experimental Approach. The Quarterly Journal of Economics. 2001;116:351–377.

- 51. Horton JJ, Rand DG, Zeckhauser RJ. The Online Laboratory: Conducting Experiments in a Real Labor Market. Experimental Economics. 2011.

- 52. Rand DG. The promise of Mechanical Turk: How online labor markets can help theorists run behavioral experiments. Journal of theoretical biology. 2011. doi: 10.1016/j.jtbi.2011.03.004.

- 53. Milinski M, Semmann D, Krambeck HJ. Reputation helps solve the 'tragedy of the commons'. Nature. 2002;415:424–426. doi: 10.1038/415424a.

- 54. Ellingsen T, Johannesson M. Anticipated verbal feedback induces altruistic behavior. Evolution and Human Behavior. 2008;29:100–105.

- 55. Paolacci G, Chandler J, Ipeirotis PG. Running Experiments on Amazon Mechanical Turk. Judgment and Decision Making. 2010;5:411–419.

- 56. Buhrmester MD, Kwang T, Gosling SD. Amazon's Mechanical Turk: A New Source of Inexpensive, Yet High-Quality, Data? Perspectives on Psychological Science. 2011;6:3–5. doi: 10.1177/1745691610393980.

- 57. Mason W, Suri S. Conducting Behavioral Research on Amazon's Mechanical Turk. 2010. doi: 10.3758/s13428-011-0124-6. Available at SSRN: http://ssrn.com/abstract=1691163.

- 58. Suri S, Watts DJ. Cooperation and Contagion in Web-Based, Networked Public Goods Experiments. PLoS ONE. 2011;6:e16836. doi: 10.1371/journal.pone.0016836.

- 59. Falk A, Fehr E, Fischbacher U. Driving Forces Behind Informal Sanctions. Econometrica. 2005;73:2017–2030.
