Abstract

Granger causality (GC) is one of the most popular measures for revealing causal influence between time series and has been widely applied in economics and neuroscience. In particular, its frequency-domain counterpart, spectral GC, as well as other Granger-like causality measures, have recently been applied to study causal interactions between brain areas in different frequency ranges during cognitive and perceptual tasks. In this paper, we show that: 1) GC in the time domain cannot correctly determine how strongly one time series influences the other when there is directional causality between two time series, and 2) spectral GC and other Granger-like causality measures have inherent shortcomings and/or limitations because they use the transfer function (or its inverse matrix) and only partial information of the linear regression model. We then propose two novel causality measures for the linear regression model, in the time and frequency domains, called new causality and new spectral causality, respectively, which are more reasonable and understandable than GC or Granger-like measures. In particular, using a simple example, we point out that, in the time domain, both new causality and GC adopt the concept of proportion, but they are defined on two different equations, one of which (for GC) is only part of the other (for new causality). Thus, the new causality is a natural extension of GC with a sound conceptual and theoretical basis, whereas GC is not the desired causal influence at all. Through several examples, we confirm that the new causality measures have distinct advantages over GC and Granger-like measures. Finally, we conduct event-related potential causality analysis for a subject with intracranial depth electrodes undergoing evaluation for epilepsy surgery, and show that, in the frequency domain, all measures reveal significant directional event-related causality, but the result from new spectral causality is consistent with the event-related time–frequency power spectrum activity. The spectral GC as well as other Granger-like measures are shown to generate misleading results. The proposed new causality measures may have wide potential applications in economics and neuroscience.

Keywords: Event-related potential, Granger or Granger-like causality, linear regression model, new causality, power spectrum

I. Introduction

Given a set of time series, how to define the causal influence among them has been a topic for over 2000 years and is yet to be completely resolved [1]–[3]. In the literature, one of the most popular definitions of causality is Granger causality (GC). Owing to its simplicity and ease of implementation, GC has been widely used. The basic idea of GC was originally conceived by Wiener [4] and later formalized by Granger in the form of a linear regression model [5]. It can be stated simply as follows. If the variance of the prediction error for the first time series at the present time is reduced by including past measurements of the second time series in the linear regression model, then the second time series is said to have a causal (driving) influence on the first time series. Reversing the roles of the two time series, one repeats the process to address the question of driving in the opposite direction. A GC value FX2→X1 is defined to describe the strength of the causality that the second time series X2 has on the first one X1 [5]–[10]. From the GC value, it is clear that: 1) FX2→X1 = 0 when there is no causal influence from the second time series to the first one and FX2→X1 > 0 when there is, and 2) the larger the value of FX2→X1, the higher the causal influence. In recent years, there has been significant interest in studying causal interactions between brain areas, which form highly complex neural networks. For instance, with GC analysis, Freiwald et al. [7] revealed the existence of both unidirectional and bidirectional influences between neural groups in the macaque inferotemporal cortex. Hesse et al. [9] analyzed electroencephalogram (EEG) data from the Stroop task and showed that conflict situations generate dense webs of interactions directed from posterior to anterior cortical sites, and that this web of directed interactions occurs mainly 400 ms after stimulus onset and lasts until the end of the task. Roebroeck et al. [11] explored directed causal influences between neuronal populations in functional magnetic resonance imaging (fMRI) data. Oya et al. [10] demonstrated causal interactions between auditory cortical fields in humans through intracranial evoked potentials to sound. Gow et al. [12] applied Granger analysis to MRI-constrained magnetoencephalogram and EEG data to explore the influence of lexical representation on the perception of ambiguous speech sounds. Gow et al. [13] showed a consistent pattern of direct posterior superior temporal gyrus influence over sites distributed over the entire ventral pathway for words, non-words, and phonetically ambiguous items.

Since frequency decompositions are often of particular interest for neurophysiological data, the original time-domain GC has been extended to the spectral domain. Along this line, several spectral Granger or Granger-like causality measures have been developed, such as spectral GC [6], [8], conditional spectral GC [6], [8], partial directed coherence (PDC) [14], relative power contribution (RPC) [15], directed transfer function (DTF) [16], and short-time direct directed transfer function (SdDTF) [17]. For two time series, spectral GC, PDC, RPC, and DTF are all equivalent in the sense that, when one of them is zero, the others are also zero. Applications of these measures to neural data have yielded many promising results. For example, Bernasconi and König [18] applied Geweke's spectral measures to describe causal interactions among different areas in the cat visual cortex. Liang et al. [19] used a time-varying spectral technique to differentiate feedforward, feedback, and lateral dynamical influences in the monkey ventral visual cortex during pattern discrimination. Brovelli et al. [20] applied spectral GC to identify causal influences from the primary somatosensory cortex to the motor cortex in the beta band (15–30 Hz) during lever pressing by awake monkeys. Ding et al. [6] used conditional spectral GC to reveal that the causal influence from area S1 to the inferior posterior parietal area 7a was mediated by the inferior posterior parietal area 7b during a visual pattern discrimination task in monkeys. Sato et al. [21] applied PDC to fMRI to discriminate physiological and nonphysiological components based on their frequency characteristics. Yamashita et al. [15] applied RPC to evaluate frequency-wise directed connectivity of BOLD signals. Kaminski and Liang [22] applied short-time DTF to show a predominant direction of influence from the hippocampus to the supramammillary nucleus in the theta band (3.7–5.6 Hz). Korzeniewska et al. [17] used SdDTF to reveal frequency-dependent interactions, particularly in high gamma (> 60 Hz) frequencies, between brain regions known to participate in the recorded language task.

In the time domain, the well-known GC value FX2→X1 = ln(σ²ε1/σ²η1) depends only on the noise terms of the linear regression models and not on the coefficients of the linear regression model of the two time series. As a result, this definition may miss important information and may not correctly reflect the real strength of causality when there is directional causality from one time series to the other. That is, a larger GC value does not necessarily mean higher causality, or vice versa, although in general this definition is very useful for determining whether there is directional causality between two time series: FX2→X1 = 0 means no causality and FX2→X1 > 0 means that causality exists. Therefore, the GC value may not correctly reflect the real causal influence between two channels. In other words, GC values for different pairs of channels, even from the same subject, may not be comparable. As such, the common practice of using the thickness of arrows in a diagram to represent the strength of causality for different pairs of channels, even in the same subject, may be misleading. In the literature, the other GC value, defined in [7], depends only on one column of the coefficient matrix of the linear regression model and not on the terms in the other columns or on the noise terms, so it suffers from similar pitfalls. Therefore, researchers must use caution when drawing any conclusion based on these two GC values. In the frequency domain, Granger [5] defined spectral GC based on the inverse of the transfer matrix and the noise terms of the linear regression model, and called it causality coherence. This definition is essentially a generalization of coherence, and researchers had already realized that coherence cannot reveal real causality between two time series. Various definitions of GC in the frequency domain have since been developed. Among the most popular definitions are spectral GC [6], [8], PDC [14], RPC [15], and DTF [16]. Spectral GC can be applied to two time series or two groups of time series, whereas PDC, RPC, and DTF can be applied to multidimensional time series. DTF and RPC cannot distinguish between direct and indirect pathways linking different structures and, as a result, do not provide multivariate relationships from a partial perspective [21]. PDC lacks a theoretical foundation [23]. In general, the above spectral Granger or Granger-like causality definitions are based on the transfer function matrix (or its inverse) of the linear regression model and thus may not reflect the real strength of causality, as shown in detail later in this paper. Note that the transfer function matrix and its inverse are different from the coefficient matrix of the linear regression model (frequency model), on which we rely in this paper to propose a new spectral causality.

In this paper, in the time domain, we define a new causality from any time series Y to any time series X in the linear regression model of multivariate time series, which describes the proportion that Y occupies among all contributions to X. In particular, we use a simple example to show that both the new causality and GC adopt the concept of proportion, but they are defined on two different equations, one of which (for GC) is only part of the other (for new causality); therefore, the new causality is a natural extension of GC, and GC is not the desired causal influence at all. As such, even when the GC value is zero, there can still exist real causality, which can be revealed by the new causality. Therefore, the popular traditional GC cannot reveal the real strength of causality at all, and researchers must be cautious in drawing any conclusion based on the GC value. In the frequency domain, we point out that any causality definition based on the transfer function matrix (or its inverse) of the linear regression model may, in general, fail to reveal the real strength of causality between two time series. Since almost all existing spectral causality definitions are based on the transfer function matrix (or its inverse) of the linear regression model, we take the widely used spectral GC, PDC, and RPC as examples and point out their inherent shortcomings and/or limitations. To overcome these difficulties, we define a new spectral causality that describes the proportion that one variable occupies among all contributions to another variable in the multivariate linear regression model (frequency domain). Several simulated examples clearly show that our new definitions (in the time and frequency domains) are advantageous over existing definitions. In particular, for real event-related potential (ERP) EEG data from a patient with epilepsy, new spectral causality gives promising and reasonable results, whereas spectral GC, PDC, and RPC all generate misleading results. Therefore, our new causality definitions may open a new window for studying causal relationships and may have wide applications in economics and neuroscience.

This paper is organized as follows. Causality analyses in time domain and frequency domain are discussed in Section II and Section III, respectively. Several examples are provided in Section IV. Concluding remarks are given in Section V.

II. Granger Causality in Time Domain

In this section, we first introduce the well-known GC and conditional GC, and then we define a new causality.

We begin with a bivariate time series. Given two time series X1(t) and X2(t), which are assumed to be jointly stationary, their autoregressive representations are described as

| (1) |

and their joint representations are described as

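$$
\begin{aligned}
X_{1,t} &= \sum_{j=1}^{\infty} a_{11,j}X_{1,t-j} + \sum_{j=1}^{\infty} a_{12,j}X_{2,t-j} + \eta_{1,t}\\
X_{2,t} &= \sum_{j=1}^{\infty} a_{21,j}X_{1,t-j} + \sum_{j=1}^{\infty} a_{22,j}X_{2,t-j} + \eta_{2,t}
\end{aligned}
\tag{2}
$$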

where t = 0, 1, . . . , N, the noise terms are uncorrelated over time, and εi and ηi have zero means and variances σ²εi and σ²ηi, respectively, i = 1, 2. The covariance between η1 and η2 is defined by ση1η2 = cov(η1, η2).

Now consider the first equations in (1) and (2). According to the original formulations in [4] and [5], if σ²η1 is less than σ²ε1 in some suitable statistical sense, X2 is said to have a causal influence on X1. In this case, the first equation in (2) estimates X1 more accurately than the first equation in (1). Otherwise, if σ²η1 = σ²ε1, X2 is said to have no causal influence on X1; in this case, the two equations coincide. This kind of causal influence, called GC [6], [8], is defined by

$$F_{X_2\to X_1} = \ln\frac{\sigma^{2}_{\varepsilon_1}}{\sigma^{2}_{\eta_1}} \tag{3}$$

Obviously, FX2→X1 = 0 when there is no causal influence from X2 to X1 and FX2→X1 > 0 when there is. Similarly, the causal influence from X1 to X2 is defined by

$$F_{X_1\to X_2} = \ln\frac{\sigma^{2}_{\varepsilon_2}}{\sigma^{2}_{\eta_2}} \tag{4}$$
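To make (3) and (4) concrete, the following Python sketch estimates GC by ordinary least squares: X1 is regressed on its own lags (the autoregressive representation) and on lags of both series (the joint representation), each truncated at a finite order p. It is a minimal sketch under stated assumptions: zero-mean data (no intercept), a small pure-Python solver, and an illustrative test model X1,t = 0.8X2,t–1 + η1,t, X2,t = 0.5X1,t–1 + η2,t with unit noise variances, chosen by us. For that model the X1-only residual is 0.8η2,t–1 + η1,t, so the population value of FX2→X1 is ln(1.64), and likewise FX1→X2 = ln(1.25).

```python
import math
import random


def solve(A, b):
    """Solve the linear system A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [bi] for row, bi in zip(A, b)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[piv] = M[piv], M[c]
        for r in range(c + 1, n):
            factor = M[r][c] / M[c][c]
            for j in range(c, n + 1):
                M[r][j] -= factor * M[c][j]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][j] * x[j] for j in range(r + 1, n))) / M[r][r]
    return x


def residual_variance(y, regressors, p):
    """Mean squared residual of the least-squares regression of y_t on lags 1..p
    of every series in `regressors` (zero-mean data, so no intercept)."""
    rows = [[s[t - j] for s in regressors for j in range(1, p + 1)]
            for t in range(p, len(y))]
    target = y[p:]
    k = len(rows[0])
    xtx = [[sum(r[a] * r[b] for r in rows) for b in range(k)] for a in range(k)]
    xty = [sum(r[a] * yt for r, yt in zip(rows, target)) for a in range(k)]
    beta = solve(xtx, xty)
    resid = [yt - sum(bi * ri for bi, ri in zip(beta, r)) for r, yt in zip(rows, target)]
    return sum(e * e for e in resid) / len(resid)


def granger_causality(x1, x2, p):
    """F_{X2->X1} = ln(var(eps1) / var(eta1)), cf. (3)."""
    var_eps1 = residual_variance(x1, [x1], p)       # X1 on its own past only
    var_eta1 = residual_variance(x1, [x1, x2], p)   # X1 on the past of X1 and X2
    return math.log(var_eps1 / var_eta1)


def simulate(a12, a21, n, seed=0, burn_in=100):
    """Illustrative model: X1_t = a12*X2_{t-1} + eta1_t, X2_t = a21*X1_{t-1} + eta2_t,
    with independent unit-variance Gaussian noises."""
    rng = random.Random(seed)
    x1, x2 = [0.0], [0.0]
    for _ in range(n + burn_in):
        x1_prev, x2_prev = x1[-1], x2[-1]
        x1.append(a12 * x2_prev + rng.gauss(0.0, 1.0))
        x2.append(a21 * x1_prev + rng.gauss(0.0, 1.0))
    return x1[burn_in + 1:], x2[burn_in + 1:]


x1, x2 = simulate(0.8, 0.5, 20000)
print(granger_causality(x1, x2, p=2), math.log(1.64))   # estimate vs. population value
print(granger_causality(x2, x1, p=2), math.log(1.25))   # F_{X1->X2} vs. ln(1 + 0.5**2)
```

Averaging such estimates over many realizations with a higher model order, as is done for Fig. 2 (200 realizations, order 8), follows the same pattern.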

To show whether the interaction between two time series is direct or is mediated by another recorded time series, conditional GC [6], [24] was defined by

$$F_{X_2\to X_1|X_3} = \ln\frac{\sigma^{2}_{\varepsilon_3}}{\sigma^{2}_{\eta_3}} \tag{5}$$

where σ²ε3 and σ²η3 are the variances of the two noise terms ε3 and η3 of the following two joint autoregressive representations:

| (6) |

and

| (7) |

According to this definition, FX2→X1|X3 = 0 means that no further improvement in the prediction of X1 can be expected by including past measurements of X2. On the other hand, when there is still a direct component from X2 to X1, the past measurements of X1, X2, and X3 together result in a better prediction of X1, leading to σ²η3 < σ²ε3 and FX2→X1|X3 > 0.
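Definition (5) can be sketched the same way, using only the X1 equations of (6) and (7). The example below is an assumption chosen for illustration: a chain X2 → X3 → X1 in which X2 reaches X1 only through X3. The unconditional GC from X2 to X1 is then positive (population value ln(2.0496/1.64), about 0.22), whereas the conditional GC given X3 should be close to zero. As before, zero-mean data, no intercept, and a pure-Python least-squares solver are assumed.

```python
import math
import random


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [bi] for row, bi in zip(A, b)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[piv] = M[piv], M[c]
        for r in range(c + 1, n):
            factor = M[r][c] / M[c][c]
            for j in range(c, n + 1):
                M[r][j] -= factor * M[c][j]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][j] * x[j] for j in range(r + 1, n))) / M[r][r]
    return x


def residual_variance(y, regressors, p):
    """Mean squared residual of y_t regressed on lags 1..p of each regressor series."""
    rows = [[s[t - j] for s in regressors for j in range(1, p + 1)]
            for t in range(p, len(y))]
    target = y[p:]
    k = len(rows[0])
    xtx = [[sum(r[a] * r[b] for r in rows) for b in range(k)] for a in range(k)]
    xty = [sum(r[a] * yt for r, yt in zip(rows, target)) for a in range(k)]
    beta = solve(xtx, xty)
    resid = [yt - sum(bi * ri for bi, ri in zip(beta, r)) for r, yt in zip(rows, target)]
    return sum(e * e for e in resid) / len(resid)


def gc(x1, x2, p):
    """Unconditional F_{X2->X1}, cf. (3)."""
    return math.log(residual_variance(x1, [x1], p) / residual_variance(x1, [x1, x2], p))


def conditional_gc(x1, x2, x3, p):
    """F_{X2->X1|X3} = ln(var(eps3) / var(eta3)), cf. (5)."""
    var_eps3 = residual_variance(x1, [x1, x3], p)       # X1 equation of (6): past of X1, X3
    var_eta3 = residual_variance(x1, [x1, x2, x3], p)   # X1 equation of (7): past of X1, X2, X3
    return math.log(var_eps3 / var_eta3)


def simulate_chain(n, seed=1, burn_in=100):
    """Illustrative chain: X2_t = e2_t, X3_t = 0.8*X2_{t-1} + e3_t, X1_t = 0.8*X3_{t-1} + e1_t."""
    rng = random.Random(seed)
    x1, x2, x3 = [0.0], [0.0], [0.0]
    for _ in range(n + burn_in):
        x2_prev, x3_prev = x2[-1], x3[-1]
        x2.append(rng.gauss(0.0, 1.0))
        x3.append(0.8 * x2_prev + rng.gauss(0.0, 1.0))
        x1.append(0.8 * x3_prev + rng.gauss(0.0, 1.0))
    return x1[burn_in + 1:], x2[burn_in + 1:], x3[burn_in + 1:]


x1, x2, x3 = simulate_chain(20000)
print(gc(x1, x2, p=2))                  # population value ln(2.0496 / 1.64), about 0.22
print(conditional_gc(x1, x2, x3, p=2))  # population value 0
```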

For GC, we point out some properties as follows.

Property 1:

- Consider the following model:

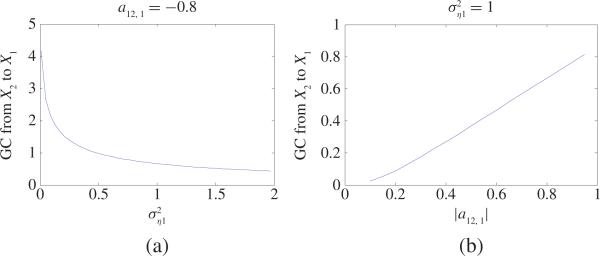

where η1, η2 are two independent white noise processes with zero mean and variances Fig. 1 shows GC values in two cases: a) a12, 1 = –0.8 and varies from 0.01 to 2; b) a12, 1 varies from –0.1 to –0.95 and . From Fig. 1(a) and (b), one can see that the GC value from X2 to X1 decreases as the variance increases (or increases as the amplitude |a12, 1| increases). That means that increasing the amplitude |a12, 1| or decreasing variance of the residual term η1 will increase the GC value from X2 to X1. Thus, we conclude that GC is actually a relative concept, a larger GC value from X2 to X1 means that the causal influence from the first term a12, 1X2,t– occupies a larger portion compared to the influence from the residual term η1, t, or vice versa.(8) - Consider the following model:

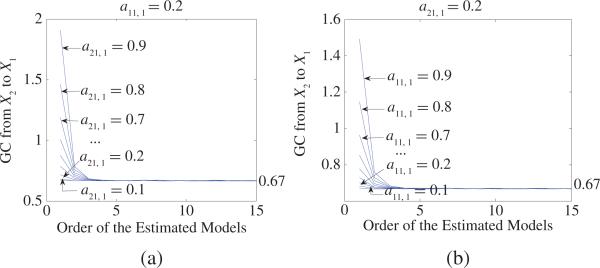

where 0 < a11 , 1, a21, 1 < 1 and for simplicity η1, η2 are assumed to be two independent white-noise processes with zero mean and variances . Fig. 2 shows GC from X2 to X1 for (9) under different parameters a11, 1 and a21, 1. When we calculate GC, it should be pointed out that for each specific (9) we generate a dataset of 200 realizations of 10 000 time points. For each realization, we estimate AR models [autoregressive representations (1) and joint representations (2)] with the order of 8 by using the least-squares method and calculate GC where the order 8 fits well for all examples throughout this paper [see Fig. 2(a) and (b) from which one can see GC keeps steady when the order of the estimated models is greater than 8]. Then we obtain the average value across all realizations and get GC from X2 to X1. From Fig. 2, one can clearly see that GC from X2 to X1 has nothing to do with parameters a11, 1 and a21, 1 [of course, choices of parameters a11, 1 and a21, 1 are such that (9) does not diverge]. This property is consistent with the spectral GC result introduced later.(9) For (2), if η2,t ≡ 0 or η1, t = η2, t, GC from X2 to X1 equals zero. This can be proved from (17.29), [6] and the spectral GC (32) introduced later.
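The first item of Property 1 can be checked in closed form under an assumed model in the spirit of (8). The model used here, X1,t = a12,1X2,t–1 + η1,t with X2,t = η2,t, is our illustrative assumption, since (8) itself is given in the figure. In this model X1 alone is white noise with variance a²12,1σ²η2 + σ²η1, while regressing on X2,t–1 leaves only η1,t, so (3) gives FX2→X1 = ln(1 + a²12,1σ²η2/σ²η1). The sketch sweeps the two cases of Fig. 1, assuming σ²η2 = 1 and, in case b), σ²η1 = 1.

```python
import math


def gc_assumed_model(a12, var_eta1, var_eta2=1.0):
    """F_{X2->X1} for the assumed model X1_t = a12*X2_{t-1} + eta1_t, X2_t = eta2_t."""
    return math.log(1.0 + a12 ** 2 * var_eta2 / var_eta1)


# Case a): a12 = -0.8 while var_eta1 grows from 0.01 to 2, so GC should decrease.
case_a = [gc_assumed_model(-0.8, v) for v in (0.01, 0.1, 0.5, 1.0, 2.0)]
# Case b): |a12| grows from 0.1 to 0.95 with var_eta1 fixed at 1, so GC should increase.
case_b = [gc_assumed_model(a, 1.0) for a in (-0.1, -0.4, -0.7, -0.95)]
print(case_a)
print(case_b)
```

Only the ratio a²12,1σ²η2/σ²η1 enters, which is the sense in which GC is a relative concept.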

Fig. 1.

(a) GC values from X2 to X1 in (8) as the variance σ²η1 changes from 0.01 to 2, where a12,1 = –0.8. (b) GC values from X2 to X1 in (8) as a12,1 changes from –0.1 to –0.95, with the noise variances held fixed. From (a), one can see that the GC value from X2 to X1 decreases as the variance σ²η1 increases. From (b), one can see that the GC value from X2 to X1 increases as the amplitude |a12,1| increases.

Fig. 2.

(a) GC from X2 to X1 as a function of the order of the estimated models for (9) when a11,1 = 0.2 and a21,1 changes from 0.1 to 0.9. (b) GC from X2 to X1 as a function of the order of the estimated models for (9) when a21,1 = 0.2 and a11,1 changes from 0.1 to 0.9. From (a) and (b), one can see that: 1) GC from X2 to X1 remains steady and converges to 0.67 when the order p > 8, and 2) GC from X2 to X1 does not depend on the parameters a11,1 and a21,1.

Except for the two specific cases in (iii) of Property 1, GC and conditional GC are in general useful for showing whether there is, theoretically, directional interaction between two neurons or among three neurons. When causal influence exists, a question arises: does the GC value or the conditional GC value reveal the real strength of causality? To answer this question, let us consider the following simple model:

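$$
\begin{aligned}
X_{1,t} &= a_{12,1}X_{2,t-1} + \eta_{1,t}\\
X_{2,t} &= a_{21,1}X_{1,t-1} + \eta_{2,t}
\end{aligned}
\tag{10}
$$

where η1 and η2 are two independent white-noise processes with zero mean and variances σ²η1 and σ²η2, and a12,1a21,1 ≠ 0. From (10), one can get

$$X_{1,t} = a_{12,1}a_{21,1}X_{1,t-2} + a_{12,1}\eta_{2,t-1} + \eta_{1,t} \tag{11}$$

Since a12,1η2,t–1 + η1,t in (11) is uncorrelated with the past of X1, it is the residual ε1,t of the autoregressive representation of X1, so σ²ε1 = a²12,1σ²η2 + σ²η1. By (3), the GC value is

$$F_{X_2\to X_1} = \ln\frac{a_{12,1}^{2}\sigma^{2}_{\eta_2} + \sigma^{2}_{\eta_1}}{\sigma^{2}_{\eta_1}} \tag{12}$$

or equivalently

| (13) |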

which depends only on the last two noise terms and not on the first term. Note that all three terms contribute to the current value X1,t, and a12,1a21,1X1,t–2 must have a causal influence on X1,t and must be considered to capture the real causality. In particular, when σ²η2 = 0, we have X1,t = a12,1a21,1X1,t–2 + η1,t and FX2→X1 = 0. Since a21,1X1,t–2 comes from X2,t–1, we know that X2 has a real nonzero causal influence on X1 because a12,1a21,1 ≠ 0. Thus, GC or conditional GC may not reveal the real causality at all. As such, these two definitions have inherent shortcomings and/or limitations in illustrating the real strength of causality. These comments are summarized in the following remark.

Remark 1:

- When there is causality from X2 to X1, FX2→X1 varies in (0, +∞), and GC may not correctly reveal the real strength of causality. Thus, it is hard to say how much influence there is based only on the value of FX2→X1. For example, for two different sets of time series {X1, X2} and {X̄1, X̄2}, their GC values may not be comparable. When FX2→X1 = 1 and FX̄2→X̄1 = 10, we cannot say that the influence from X2 to X1 in {X1, X2} is smaller than that from X̄2 to X̄1 in {X̄1, X̄2}. As such, a smaller value of FX2→X1 does not mean that X2 has less causal influence on X1. Thus, for actual physical data, a small value of FX2→X1 (e.g., FX2→X1 = 0.1) does not mean that there is no causality from X2 to X1 or that the causal influence from X2 to X1 can be ignored. Conversely, a large value of FX2→X1 (e.g., FX2→X1 = 1, which is still negligible compared with +∞) does not mean that there is strong causality from X2 to X1. Let us take a look at the following two models:

| (14) |

and

| (15) |

where η1, η2, and η3 are three independent white-noise processes with zero mean and variances σ²η1, σ²η2, and σ²η3. For (14), we obtain GC FX2→X1 = 4.86; for (15), we obtain FX2→X1 = 4.18. In (14), the noise term η1 has a small variance, so that even a small change in the variance of the residual ε1 of the estimated autoregressive representation for X1 may lead to a large GC value [see the GC definition in (3)], as in FX2→X1 = 4.86. A similar analysis holds for (15). Therefore, both GC values seem “intuitively reasonable” based on the GC definition. Now a question arises: does the “reasonable” GC value correctly reflect the real strength of causality? Unfortunately, the answer is no. Note that: 1) X2 is the same in (14) and (15); 2) X1 is driven by X2 in (15); 3) X1 is driven by its own past and X2 in (14); and 4) the influence from η1 or η3 is very small and can even be ignored because of their small variances. Thus, intuitively, the real causality from X2 to X1 in (14) should be weaker than that in (15). However, FX2→X1 = 4.86 for (14) is larger than FX2→X1 = 4.18 for (15). As such, the traditional GC may not be reliable, at least in these two cases; that is, the resulting GC value may not truly reflect the real strength of causality. Hence, in general, it is questionable whether the GC value reveals the causal influence between two neurons.

- The same problem as in (i) exists for conditional GC.

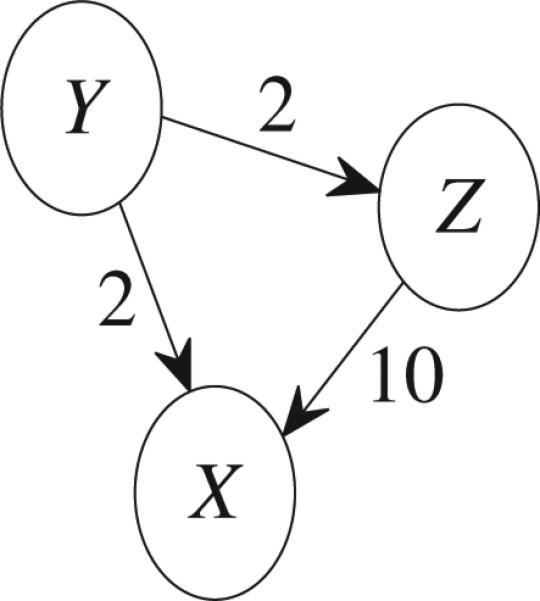

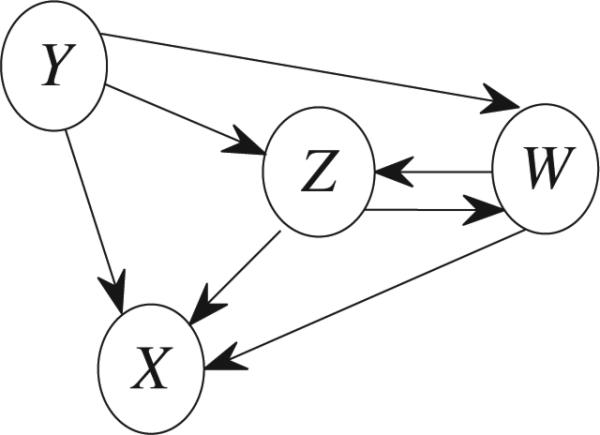

- Consider Fig. 3, where 2 and 10 are direct GC values. The total causal influence from Y to X is the sum of the direct causal influence from Y to X (i.e., 2) and the indirect causal influence mediated by Z. Obviously, the indirect causal influence mediated by Z cannot equal 2 × 10 = 20, since this influence must be less than the direct causal influence 2 from Y to Z. This implies that the indirect causality along the route Y → Z → X does not equal FY→Z × FZ→X.

Fig. 3.

Connectivities among three time series.

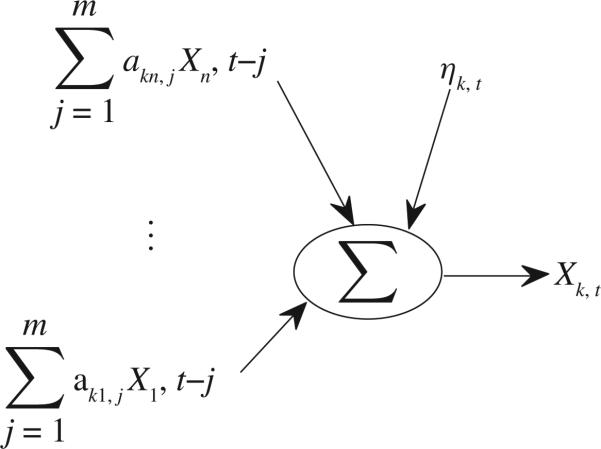

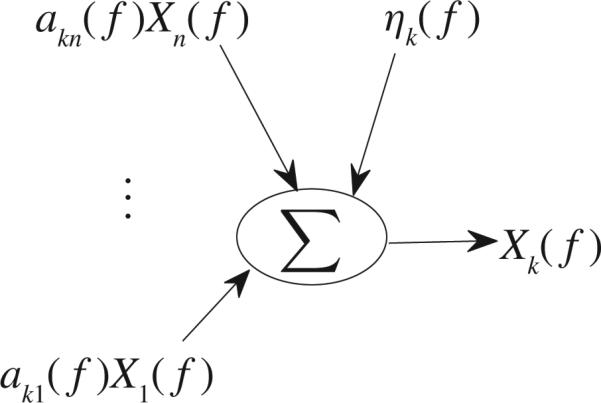

Because of the above-mentioned shortcomings and/or limitations of GC, we next give a new causality definition for multivariate time series. Let us consider the following general model:

$$X_{k,t} = \sum_{i=1}^{n}\sum_{j=1}^{m} a_{ki,j}X_{i,t-j} + \eta_{k,t},\qquad k = 1,\dots,n \tag{16}$$

where Xi (i = 1, . . . , n) are n time series, t = 0, 1, . . . , N, ηi has zero mean and variance σ²ηi, and σηiηk = cov(ηi, ηk), i, k = 1, . . . , n. Based on (16), Fig. 4 shows the contributions to Xk,t, which include Σj ak1,jX1,t–j, . . . , Σj akn,jXn,t–j (j = 1, . . . , m) and the noise term ηk,t, where the term Σj akk,jXk,t–j is the causal influence from Xk's own past values. Each contribution plays an important role in determining Xk,t. If Σj aki,jXi,t–j occupies a larger portion among all these contributions, then Xi has a stronger causal influence on Xk, and vice versa. Thus, a good definition of causality from Xi to Xk in the time domain should describe what proportion Xi occupies among all these contributions. This is a general guideline for proposing any causality measure: all contributions must be considered. For (11), let us define

| (17) |

which is the sum of two noise terms, each contributing to the quantity in (17). To describe what proportion η2 occupies in this sum, we define

| (18) |

which is the same as the GC in (13). Therefore, GC is in fact defined on the noise sum (17) and follows the above guideline. Motivated by this idea as well as by (i) of Property 1, we can naturally extend the noise sum (17) to the kth equation of (16) and define a new direct causality from Xi to Xk as follows:

| (19) |

Fig. 4.

Contributions to Xk,t .

When N is large enough

Then, (19) can be approximated as

| (20) |

Throughout this paper, we always assume that N is large enough that the new causality is given by (20).
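The precise expressions are those in (19) and (20). The Python sketch below encodes only one reading of the guideline above, and it is an assumption, not a transcription of (20): the contribution of Xh to Xk,t is taken to be Σj akh,jXh,t–j, each contribution is measured by its summed square over t, and Σt η²k,t is replaced by its large-N approximation (number of terms) × σ²ηk. The function names, the nested-list layout coef[k][h][j–1] = akh,j, and the test model X1,t = 0.8X2,t–1 + η1,t, X2,t = 0.5X1,t–1 + η2,t (unit noise variances) are our choices. For brevity the known coefficients are used; in practice they would come from one least-squares fit of (16), which yields every ordered pair at once. For this model the stationary variances give population values of about 0.488 (X2 → X1) and 0.328 (X1 → X2).

```python
import random


def new_causality_matrix(coef, X, noise_var):
    """Assumed large-N reading of (20).

    coef[k][h][j-1] is the coefficient a_{kh,j} of X_h at lag j in the equation for X_k,
    X[h] is the observed series of channel h, noise_var[k] is the variance of eta_k.
    Returns out with out[k][i] = share of X_i among all contributions to X_k (i != k).
    """
    n, m, T = len(X), len(coef[0][0]), len(X[0])
    out = [[0.0] * n for _ in range(n)]
    for k in range(n):
        power = [0.0] * n          # summed squared contribution of each channel to X_k
        for t in range(m, T):
            for h in range(n):
                c = sum(coef[k][h][j - 1] * X[h][t - j] for j in range(1, m + 1))
                power[h] += c * c
        denom = sum(power) + (T - m) * noise_var[k]   # noise term: (T - m) * sigma_k^2
        for i in range(n):
            if i != k:
                out[k][i] = power[i] / denom
    return out


def simulate(a12, a21, n, seed=0, burn_in=100):
    """X1_t = a12*X2_{t-1} + eta1_t, X2_t = a21*X1_{t-1} + eta2_t, unit noise variances."""
    rng = random.Random(seed)
    x1, x2 = [0.0], [0.0]
    for _ in range(n + burn_in):
        x1_prev, x2_prev = x1[-1], x2[-1]
        x1.append(a12 * x2_prev + rng.gauss(0.0, 1.0))
        x2.append(a21 * x1_prev + rng.gauss(0.0, 1.0))
    return x1[burn_in + 1:], x2[burn_in + 1:]


coef = [[[0.0], [0.8]],    # equation for X1: a11,1 = 0, a12,1 = 0.8
        [[0.5], [0.0]]]    # equation for X2: a21,1 = 0.5, a22,1 = 0
x1, x2 = simulate(0.8, 0.5, 20000)
nc = new_causality_matrix(coef, [x1, x2], [1.0, 1.0])
print(nc[0][1], nc[1][0])   # X2 -> X1 and X1 -> X2
```

Because every term in the denominator is non-negative, each value lies in [0, 1], and it is zero exactly when all coefficients from the source channel vanish (for a nondegenerate source series).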

When n = 2 in (16), Geweke stated in his early work [8] that GC FX2→X1 = 0 if and only if a12,j ≡ 0. According to this statement, if a12,j ≡ 0, there is no causality (or GC); if a12,j ≢ 0, there is GC. Similarly, for (20) we can make the following statement: the new causality from X2 to X1 equals zero if and only if a12,j ≡ 0. If it is greater than zero, then a12,j ≢ 0, which implies that there is causality from X2 to X1. If it equals zero, then a12,j ≡ 0 and there is no causality from X2 to X1. As such, the new causality defined in (20) extends this statement from bivariate to multivariate time series. The extension to multichannel data is very important because pairwise treatments of signals can lead to errors in the estimation of mutual influences between channels [25], [26].

New causality based on (11) can be written as

| (21) |

which describes what proportion X2 occupies among the two contributions to X1 [see (11)]. Note that, for (11), GC FX2→X1 is based on (17) and describes what proportion η2 occupies among the two contributions to the noise sum in (17) [see (18)]. Thus, GC actually reveals the causal influence from η2 on this noise sum, but it does not reveal the causal influence from X2 to X1 at all, because the noise sum a12,1η2,t–1 + η1,t is only partial information about X1. Any causality definition that uses only partial information about X1 may be questionable. As such, new causality is entirely different from GC. In general, we can make some key comments in the following remark.

Remark 2:

- It is easy to see that the new causality takes values in [0, 1]. Moreover, it is meaningful and understandable that a larger new causality value reveals a larger causal influence, even across different sets of bivariate time series. Thus, the thickness of arrows in Fig. 13(a) representing the strength of the connections is meaningful. As pointed out in (i) of Remark 1, a larger GC value does not necessarily reveal a larger causal influence across different sets of bivariate time series. Hence, using arrow thickness to represent connection strength based on GC values for different sets of bivariate time series may not make sense. Unfortunately, almost all researchers have done exactly this after calculating GC values for different sets (pairs) of bivariate time series.

- For (14) and (15), we can get and 0.994, respectively. For (15), means that influence from the noise term η3 to X1 is very small and X1 is almost completely driven by X2. Hence, the real causality from X2 to X1 for (15) is very close to that for the following model:

whose real causality from X2 to X1 obviously equals 1. The causality value correctly reveals the real causality from X2 to X1 for (15), which is close to 1. For (14), we can easily show that influence from the noise term η1 to X1 is very small compared to the causal influence from X2 to X1 and X1 is almost completely driven by X1 and X2. Hence, the real causality from X2 to X1 for (14) is very close to that for the following model:(22)

where X1's past value also makes a contribution to X1's current value. Comparing (22) to (23), one can clearly see that the real causality from X2 to X1 for (23) is surely weaker than that for (22). By noting small variances of the noise terms, this is why we mention the intuitive conclusion for (14) and (15) pointed in (i) of Remark 1. The causality value for (14) is smaller than causality value for (15). This result is consistent with the above analysis. However, the GC value FX2→X1 = 4.86 for (14) is larger than the GC value FX2→X1 = 4.18 for (15), which violates the above analysis. In fact, from for (14), one can see that X1's past value makes a rather major contribution to X1's current value. But this contribution is not considered in the GC as pointed in (ii) of Property 1 where the GC value from X2 to X1 has nothing to do with the parameters a11,1 and a21,1. Therefore, the causality definition in (20) is much more reasonable, stable, and reliable than GC.(23) - Consider the following two models:

and(24)

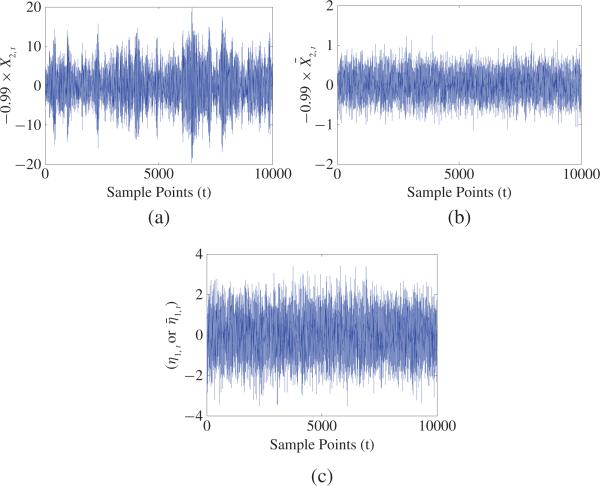

where η1 and η2 are two independent white-noise processes with zero mean and variances and the initial conditions X1, 0 = X̄1, 0 and X2, 0 = X̄2, 0. We can obtain FX2→X1 = 0.092 for both (24) and (25), for (24), and for (25). Fig. 5 shows trajectories –0.99X2, –0.99X̄2 and η1 for one realization of (24) and (25). From Fig. 5(a) and (c), one can clearly see that amplitudes of –0.99X2 are much larger than that of η1 and the contribution from –0.99X2, t–1 occupies much larger portion compared to that from η1, t, as a result, the causal influence from X2 to X1 occupies a major portion compared to the influence from η1 and the real strength of causality from X2 to X1 should have higher value. This fact is real. Our causality value for (24) is consistent with this fact. Similarly, from Fig. 5(b) and (c), one can clearly see that amplitude of –0.99X̄2 is much smaller than that of η1 and the contribution from –0.99X̄2, t–1 occupies much smaller portion compared to that from η1, t, as a result, the causal influence from X̄2 to X̄1 occupies a rather small portion compared to the influence from η1 and the real strength of causality from X̄2 to X̄1 should have a smaller value. This fact is also real. Our causality for (25) is consistent with this fact. However, GC always equals 0.092 for both of (24) and (25) and does not reflect such kind of changes at all, and violates the above two real facts.(25) For the two specific cases in (iii) of Property 1 and for any nonzero a12,j, we have FX2→X1 = 0 for (2). However, one can easily check if X2 ≢ 0, that is, there is real causality. Thus, GC FX2→X1 = 0 does not necessarily imply no real causality, but new causality must imply no real causality (no GC). So, new causality reveals real causality more correctly than GC.

- Once (16) is estimated from the n time series X1, . . . , Xn, the direct causalities in both directions between any two channels can be obtained from (20). This saves considerable computation time compared with the remodeling and recomputation required when conditional GC is used to compute the direct causality.

- Based on this definition, one may define the indirect causality from Xi to Xk via Xl as follows:

This cascade property does not hold for the previous GC definition, as discussed in (iii) of Remark 1.

- Given any route R from Xi to Xk, the indirect causality from Xi to Xk via this route R may be defined as

| (26) |

Letting Sik be the set of all such routes, we may define the total causality from Xi to Xk as

| (27) |

Let nXi→Xk be the causality calculated based on the estimated AR model of the pair (Xi, Xk). The exact relationship between nXi→Xk and the total causality in (27) remains unknown to us.

- For the three time series shown in Fig. 3, conditional GC can be used to obtain the direct causality from Y to X (i.e., FY→X|Z). As a result, the indirect causality from Y to X via Z equals FY→X − FY→X|Z, where FY→X is the total causality from Y to X. For multiple time series, given a route, one may not be able to obtain the indirect causality based on the previous GC definition. For example, in Fig. 6, the indirect causality via the route R′: Y → W → Z → X cannot be obtained based on the previous GC definition. However, using (26), we can obtain the indirect causality via the route R′.

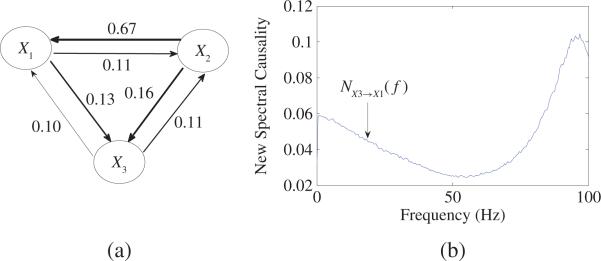

Fig. 13.

(a) Results of new causality based on (20) for (43) of three time series in time domain. Self-contributions are neglected. The resulting strength of the connections is schematically represented by the thickness of arrows. (b) New spectral causality from X3 to X1 is shown for (43) in frequency domain.

Fig. 5.

Plot of the trajectories of –0.99X2,t, –0.99X̄2,t, and η1,t for one realization of (24) and (25), where Xi,0 = X̄i,0, i = 1, 2. (a) Trajectory of –0.99X2,t for (24). (b) Trajectory of –0.99X̄2,t for (25). (c) Trajectory of η1,t.

Fig. 6.

Connectivities among four time series.

For a given model, we generally do not know exactly what the real causality is. However, as shown in (i)–(iv) of Remark 2, the new causality value reveals the real strength of causality more correctly than GC, whereas traditional GC may lead to misleading interpretations.
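As a closing check of the case σ²η2 = 0 discussed before Remark 1, the sketch below compares, for the two-variable model X1,t = a12,1X2,t–1 + η1,t, X2,t = a21,1X1,t–1 + η2,t, the closed-form GC ln((a²12,1σ²η2 + σ²η1)/σ²η1) with the large-N limit of the new causality. The latter again rests on our assumed reading of (20): the mean power of the contribution a12,1X2,t–1 relative to itself plus σ²η1. The stationary variance of X2 used below, (a²21,1σ²η1 + σ²η2)/(1 – a²12,1a²21,1), follows from the two model equations and requires |a12,1a21,1| < 1; the parameter values are illustrative.

```python
import math


def gc_x2_to_x1(a12, a21, var1, var2):
    """Closed-form GC for the two-variable model; a21 does not enter at all."""
    return math.log((a12 ** 2 * var2 + var1) / var1)


def new_causality_x2_to_x1(a12, a21, var1, var2):
    """Large-N new causality from X2 to X1 under the assumed reading of (20)."""
    var_x2 = (a21 ** 2 * var1 + var2) / (1.0 - a12 ** 2 * a21 ** 2)  # stationary var of X2
    share = a12 ** 2 * var_x2          # mean power of the contribution a12 * X2_{t-1}
    return share / (share + var1)      # proportion among {a12 * X2_{t-1}, eta1_t}


for var2 in (1.0, 0.1, 0.01, 0.0):
    print(var2, gc_x2_to_x1(0.9, 0.8, 1.0, var2), new_causality_x2_to_x1(0.9, 0.8, 1.0, var2))
```

With var2 = 0, GC is exactly zero, whereas the new causality equals a²12,1a²21,1 = 0.5184 for these illustrative parameters; GC also returns the same value for any a21,1, while the new causality does not.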

III. GC in Frequency Domain

In the preceding section, we discussed causality in the time domain. In this section, we turn to its counterpart in the frequency domain. We first introduce a new spectral causality. Then we review three widely used Granger-like causality measures and point out their shortcomings and/or limitations.

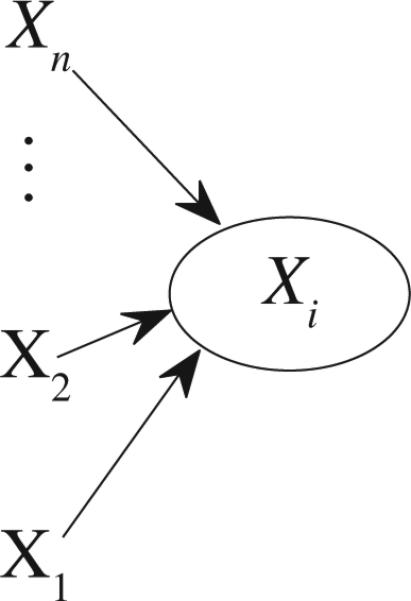

Consider the general model (16). Taking the Fourier transform of both sides of (16) leads to

| (28) |

where

| (29) |
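The step from (16) to (28) can be sketched numerically. We assume that (29) has the usual form akj(f) = Σl akj,l e^(−i2πfl/fs); with fs = 1 this matches the e^(−i2πf) factors used in Example 3. The AR coefficients are stored as an array `coefs[l−1, k, j] = akj,l`, and the sketch forms Ā(f) = I − [akj(f)] and H(f) = Ā⁻¹(f), the objects used by the measures discussed in this section. The function names and the example coefficients are ours, not the paper's.

```python
import numpy as np


def coef_spectrum(coefs, f, fs=1.0):
    """Return the n x n matrix [a_kj(f)], assuming a_kj(f) = sum_l a_kj,l exp(-i 2 pi f l / fs).

    coefs has shape (m, n, n) with coefs[l - 1, k, j] = a_kj,l (lag l, target k, source j).
    """
    coefs = np.asarray(coefs, dtype=float)
    lags = np.arange(1, coefs.shape[0] + 1)
    phases = np.exp(-2j * np.pi * f * lags / fs)      # one complex factor per lag
    return np.tensordot(phases, coefs, axes=(0, 0))   # sum over lags


def transfer_function(coefs, f, fs=1.0):
    """H(f) = Abar(f)^{-1}, where Abar(f) = I - [a_kj(f)], so that X(f) = H(f) eta(f)."""
    a_f = coef_spectrum(coefs, f, fs)
    abar = np.eye(a_f.shape[0]) - a_f
    return np.linalg.inv(abar)


# Hypothetical bivariate AR(2) model, used only for illustration.
coefs = np.array([[[0.5, 0.2], [0.0, 0.3]],
                  [[0.1, 0.0], [0.0, -0.2]]])
print(coef_spectrum(coefs, 0.1))
print(transfer_function(coefs, 0.1))
```

At f = 0 every phase factor equals 1, so [akj(0)] is simply the sum of the lag matrices; this is a convenient sanity check on the indexing convention.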

From (28), one can see that the contributions to Xk(f) include not only ak1(f)X1(f), . . . , ak,k–1(f)Xk–1(f), ak,k+1(f)Xk+1(f), . . . , akn(f)Xn(f) and the noise term ηk(f), but also akk(f)Xk(f). Fig. 7 depicts the contributions to Xk(f), namely ak1(f)X1(f), . . . , akn(f)Xn(f) and the noise term ηk(f), where akk(f)Xk(f) is the causal influence of Xk's own past. This motivates us to define a new direct causality from Xi to Xk in the frequency domain as follows:

| (30) |

i, k = 1, . . . , n, i ≠ k, where SXlXl(f) is the spectrum of Xl, l = 1, . . . , n. By analogy with the time-domain new causality, the causality defined in (30) is called the new spectral causality.
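A minimal sketch of the new spectral causality follows. Because (30) is not reproduced in this text, the formula in the code is our assumption: the share of |aki(f)|²SXiXi(f) in the sum of all terms |akj(f)|²SXjXj(f) plus the noise variance of ηk, with auto-spectra taken from S(f) = H(f)ΣH(f)* for mutually uncorrelated noise, both expressed in the same (unnormalized) units. This form is consistent with Fig. 7 and with Remark 3 (it vanishes exactly when aki(f) = 0, and it can equal 1 only when the noise and every other akj(f) vanish), but it should be checked against (30) before use. The example coefficients are hypothetical.

```python
import numpy as np


def new_spectral_causality(coefs, noise_var, f, fs=1.0):
    """Return N with N[k, i] = assumed new spectral causality from X_i to X_k at frequency f.

    Assumed form (our reading of (30), not a verified transcription):
        N[k, i] = |a_ki(f)|^2 S_ii(f) / (sum_j |a_kj(f)|^2 S_jj(f) + var(eta_k)),
    with mutually uncorrelated noise and S(f) = H(f) diag(noise_var) H(f)^*.
    Diagonal entries N[k, k] are self-contributions from X_k's own past.
    """
    coefs = np.asarray(coefs, dtype=float)
    noise_var = np.asarray(noise_var, dtype=float)
    n = coefs.shape[1]
    lags = np.arange(1, coefs.shape[0] + 1)
    a_f = np.tensordot(np.exp(-2j * np.pi * f * lags / fs), coefs, axes=(0, 0))
    H = np.linalg.inv(np.eye(n) - a_f)
    S = np.real(np.diag(H @ np.diag(noise_var) @ H.conj().T))  # auto-spectra S_jj(f)
    terms = np.abs(a_f) ** 2 * S[np.newaxis, :]                 # |a_kj(f)|^2 S_jj(f)
    denom = terms.sum(axis=1) + noise_var                       # add noise power of eta_k
    return terms / denom[:, np.newaxis]


# Hypothetical model: X2 drives X1 at lag 1, while X1 does not drive X2.
coefs = np.array([[[0.5, 0.4], [0.0, 0.3]]])
N = new_spectral_causality(coefs, [1.0, 1.0], f=0.2)
print("X2 -> X1:", N[0, 1], " X1 -> X2:", N[1, 0])
```

Because the noise term sits in every denominator, each row of N sums to less than 1; the remainder is the share attributed to ηk.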

Fig. 7.

Contributions to Xk(f).

Remark 3:

- It is easy to see that the new spectral causality from Xi to Xk vanishes identically if and only if aki(f) ≡ 0, which means that all coefficients aki,1, . . . , aki,m are zero. Likewise, it equals 1 identically if and only if there is no noise term ηk and akj(f) ≡ 0, j = 1, . . . , n, j ≠ i, which means that all coefficients akj,1, . . . , akj,m are zero for j = 1, . . . , n, j ≠ i; i.e., the kth equality in (28) can be written as

$$X_k(f) = a_{ki}(f)\,X_i(f),$$

from which one can see that Xk is completely driven by Xi's past values.
- Given any route R from Xi to Xk, the indirect causality from Xi to Xk via this route R may be defined as

Let Sik be the set of all such routes; we may then define the total causality from Xi to Xk as

| (31) |

Let NXi→Xk(f) be the causality value calculated from the estimated AR model of the pair (Xi, Xk) in the frequency domain. The exact relationship between NXi→Xk(f) and the total causality in (31) is so far unknown to us.

In the literature, several other measures have been used to define causality of neural connectivity in the frequency domain. Below, we introduce these measures and point out their shortcomings and/or limitations.

1) Spectral GC ([6], [8], [24], [25], [27]): Given the bivariate model (2), the Granger causal influence from X2 to X1 is defined by

| (32) |

where the transfer function is H(f) = A⁻¹(f), whose components are

| (33) |

This definition has shortcomings and/or limitations as shown in the following remark.

Remark 4:

(i) The same problems as in (i) and (iii) of Remark 1 exist.

(ii) For the two specific cases in (iii) of Property 1 and for any coefficients a11,j, a12,j, a21,j, a22,j, one can check from (32) that IX2→X1(f) ≡ 0. However, for (2), whenever a12(f)X2(f) ≠ 0 there is real causality. Thus, a zero spectral GC IX2→X1(f) does not necessarily imply the absence of real causality at frequency f, whereas a zero new spectral causality does imply that there is no real causality (and, of course, no spectral GC). As such, the new spectral causality reveals real causality more correctly than spectral GC.

(iii) From (17.22), (17.23), (32), and (33) above, we can derive

| (34) |

where . Its detailed derivation is omitted here for space reasons. One can see that IX2→X1(f) does not depend on ā11(f) or ā21(f); that is, according to spectral GC, the causality from X2 to X1 in (2) would not depend on a11,j and a21,j. This is not true [similar to the analysis in (ii) and (iii) of Remark 2]. Thus, in general, spectral GC in (32) does not disclose the real causal influence at all.

(iv) GC was extended to conditional GC in the frequency domain [6], [8]. Hence, the same problem as in (ii) above exists for conditional spectral GC.

2) PDC: Let

| (35) |

where aij(f) is as in (29), and δij = 1 if i = j and 0 otherwise. Then, for (16), PDC is defined to show the frequency-domain causality from Xj to Xi at frequency f as

| (36) |

The PDC πi←j(f) represents the relative coupling strength of the interaction of a given source, signal Xj, with regard to signal Xi, as compared with all of Xj's influences on the other signals. Thus, PDC ranks the relative strength of causal interaction with respect to a given signal source and satisfies the following properties:

$$0 \le |\pi_{i\leftarrow j}(f)|^2 \le 1, \qquad \sum_{i=1}^{n} |\pi_{i\leftarrow j}(f)|^2 = 1, \quad j = 1, \ldots, n.$$

Here, πi←i(f) represents how much of Xi's own past contributes to its own evolution that is not explained by other signals (see [29]). Fig. 8 depicts the contributions of signal Xj to all signals X1, . . . , Xn and may help the reader better understand the definition of PDC. Comparing Fig. 8 with Fig. 7, one can see that the two figures have different structures. Notably, πi←j(f) = 0 (i ≠ j) can be interpreted as the absence of functional connectivity from signal Xj to signal Xi at frequency f. Hence, PDC can be applied to multivariate time series to determine whether there exists causal influence from signal Xj to signal Xi. However, some researchers [30] have already noted that PDC is hampered by a number of problems. In the following remark, we point out its specific shortcomings and/or limitations.

Fig. 8.

Xj's contributions to all signals Xi, i = 1, 2, . . . , n.

Remark 5:

(i) Note that πi←i(f) represents how much of Xi's own past contributes to its own evolution (see [29]). Then, πi←i(f) = 0 would mean that Xi's own past does not contribute to its evolution. However, πi←i(f) = 0 implies āii(f) = 0, that is, 1 – aii(f) = 0 or aii(f) = 1 (≠ 0), which means that Xi's own past does contribute to its evolution. A contradiction! On the other hand, πi←i(f) = 1 implies āki(f) = 0 (i.e., aki(f) = 0), k = 1, 2, . . . , n, k ≠ i, and āii(f) ≠ 0 (i.e., aii(f) ≠ 1). Given a frequency f, further assume that Xj(f) = Xi(f) = 1 and aij(f) = 1, aii(f) = 0.01 for some j ≠ i. Then aij(f)Xj(f) = 1 and aii(f)Xi(f) = 0.01. Thus, from the ith equality of (28), the contribution of Xi's own past to its evolution (aii(f)Xi(f) = 0.01) is much smaller than Xj's past contribution to Xi's evolution (aij(f)Xj(f) = 1) and can even be ignored. Hence, the value 1 (= πi←i(f)) is not meaningful, and PDC πi←i(f) is not reasonable.

(ii) A high PDC πi←j(f), j ≠ i, near 1, is taken to indicate strong connectivity between two neural structures (see [21]). This is not true in general. For example, assume π1←j(f) = 1 (j ≠ 1, 2), which implies ākj(f) = 0, k = 2, . . . , n, and ā1j(f) ≠ 0 (or a1j(f) ≠ 0). Given a frequency f, further assume that Xj(f) = X2(f) = 1 and a12(f) = 1, a1j(f) = 0.01. Then a1j(f)Xj(f) = 0.01 and a12(f)X2(f) = 1. Thus, from the first equality of (28), Xj's past contribution to X1's evolution (a1j(f)Xj(f) = 0.01) is much smaller than X2's past contribution (a12(f)X2(f) = 1) and can even be ignored. This shows that the connectivity from Xj to X1 is very weak. However, π1←j(f) = 1 indicates strong connectivity between Xj and X1. A contradiction!

(iii) A small PDC πi←k(f) does not mean that the causal influence from Xk to Xi is small. For example, assume aik(f) = 0, i = 2, . . . , n, and ā1k(f) = 0.1. Then, since ākk(f) = 1, (36) gives π1←k(f) = 0.1/√(0.1² + 1²) ≈ 0.1, which is a small number. However, from the first equality of (28), if Xk(f) is so large that ā1k(f)Xk(f) is much larger than every ā1j(f)Xj(f), j ≠ k, then Xk has a major causal influence on X1 compared with the other signals.

(iv) πi←j(f) > πi←k(f) does not guarantee that the causality from Xj to Xi is larger than that from Xk to Xi, where j ≠ i, k ≠ i. Indeed, (ii) and (iii) above support this statement.

(v) Consider (2) and further assume a21,j = 0, j = 1, . . . , m. One can clearly see that π1←2(f) does not depend on ā11(f); that is, according to PDC, the causal influence from X2 to X1 in (2) would not depend on a11,j, j = 1, . . . , m. This is not true [similar to the analysis in (ii) and (iii) of Remark 2].

(vi) The same problems as in (i)–(v) above exist for a variant of PDC called generalized PDC (GPDC) [31], [32].
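The numerical observations in (i) and (iii) of Remark 5 can be checked with a minimal PDC sketch at a single frequency. We assume (36) is the standard Baccalá–Sameshima normalization πi←j(f) = āij(f)/√(Σk |ākj(f)|²), with āij(f) as in (35), and work with its magnitude. A column with ā1k(f) = 0.1, ākk(f) = 1, and all other entries zero gives π1←k(f) = 0.1/√1.01 ≈ 0.0995, however large Xk(f) is, and āii(f) = 0 forces πi←i(f) = 0. The matrices are hypothetical single-frequency values.

```python
import numpy as np


def pdc(abar):
    """|pi_{i<-j}(f)| for all i, j from Abar(f) = [abar_ij(f)] at one frequency.

    Each column j is divided by its Euclidean norm, so sum_i |pi_{i<-j}|^2 = 1.
    """
    abar = np.asarray(abar, dtype=complex)
    col_norms = np.sqrt(np.sum(np.abs(abar) ** 2, axis=0))  # one norm per source j
    return np.abs(abar) / col_norms[np.newaxis, :]


# Hypothetical 3 x 3 Abar(f): column 3 has abar_13 = 0.1, abar_23 = 0, abar_33 = 1.
abar_f = np.array([[0.6, 0.2, 0.1],
                   [0.3, 1.0, 0.0],
                   [0.0, 0.5, 1.0]])
P = pdc(abar_f)
print(np.round(P, 4))
print("pi_{1<-3} =", P[0, 2])  # about 0.0995
```

Note that Xk(f) never enters the computation: PDC depends only on Ā(f), which is the point made in (iii).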

3) RPC [15]: From (28), it follows that the transfer function is H(f) = [Hij(f)] = A⁻¹(f), where A = [āij(f)]n×n and āij(f) is as in (35). When all noise terms are mutually uncorrelated, a simple RPC is defined as

| (37) |

where the power spectrum

| (38) |

Equation (38) indicates that the power spectrum of Xi,t at frequency f can be decomposed into n terms (j = 1, . . . , n), the jth of which is the product of |Hij(f)|² and the variance of ηj,t; each term can be interpreted as the power contribution of the jth innovation ηj,t transferred to Xi,t via the transfer function Hij(f). Thus, the jth term can be regarded as the power contribution of the innovation ηj,t to the power spectrum of Xi,t, and RPC Ri←j(f) defined in (37) is the ratio of this power contribution to the power spectrum SXiXi(f). Hence, RPC gives a quantitative measure of the strength of every connection at each frequency and always ranges from 0 to 1. This ratio is used to define Xj's past contribution to Xi's evolution. Fig. 9 depicts the contributions to signal Xi's evolution from the past values of all signals Xk (k = 1, . . . , n) and may help the reader better understand the definition of RPC. Comparing Fig. 9 with Fig. 8, one can see that the two figures have different structures. Although RPC can be applied to multivariate time series, it has shortcomings and/or limitations, as pointed out in the following remark.

Fig. 9.

Past contributions to Xi's evolution from all signals Xj, j = 1, 2, . . . , n.

Remark 6:

(i) RPC Ri←j(f) defined in (37) can only be regarded as the ratio of the power contribution of the innovation ηj,t to the power spectrum SXiXi(f) of Xi,t. In the literature, researchers who use RPC to study neural connectivity view this ratio as Xj's past contribution to Xi's evolution. Unfortunately, the ratio cannot be used to define Xj's past contribution to Xi's evolution, and it does not disclose the real causal influence from Xj to Xi. For multivariate time series, Ri←j(f) = 0 does not mean that there is no causal influence from Xj to Xi, and Ri←j(f) = 1 does not mean that there is strong causality from Xj to Xi. For example, at a given frequency f, let the transfer function H(f) = [Hij(f)] = A⁻¹(f) satisfy H1j(f) = 0 and ā1j(f) ≠ 0 (or a1j(f) ≠ 0), j ≠ 1. H1j(f) = 0 implies R1←j(f) = 0. However, from the first equality of (28) and a1j(f) ≠ 0, one can see that there exists causality from Xj to X1. Conversely, let H(f) satisfy H1j(f) ≠ 0, H1k(f) = 0, k = 1, . . . , n, k ≠ j, and ā1j(f) = 0 (or a1j(f) = 0), j ≠ 1. Then R1←j(f) = 1. However, from the first equality of (28) and a1j(f) = 0, there is no direct causality from Xj to X1 at all. Hence, the RPC value Ri←j(f) is not meaningful as far as causality from Xj to Xi is concerned.
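A minimal sketch of RPC, together with a single-frequency instance of the first counterexample in (i). We assume the standard form of (37) and (38) for uncorrelated innovations: Ri←j(f) = σj²|Hij(f)|²/SXiXi(f) with SXiXi(f) = Σj σj²|Hij(f)|², where σj² denotes the variance of ηj,t (our notation), so each row of R sums to 1. For three series, H13(f) is proportional to the cofactor ā12(f)ā23(f) − ā13(f)ā22(f), so H13(f) vanishes whenever ā12(f)ā23(f) = ā13(f)ā22(f), even if ā13(f) ≠ 0. The real-valued Ā below is hypothetical and chosen to satisfy this condition; unit innovation variances are assumed.

```python
import numpy as np


def rpc(H, noise_var):
    """R[i, j] = var_j |H_ij(f)|^2 / S_ii(f) for mutually uncorrelated innovations."""
    contrib = np.abs(np.asarray(H, dtype=complex)) ** 2 * np.asarray(noise_var, dtype=float)
    return contrib / contrib.sum(axis=1, keepdims=True)   # row sums = S_XiXi(f), as in (38)


# Hypothetical Abar(f) at one frequency: abar_12 * abar_23 = abar_13 * abar_22 = -0.2.
abar_f = np.array([[1.0, -0.4, -0.2],
                   [0.0,  1.0,  0.5],
                   [0.3,  0.0,  1.0]])
H = np.linalg.inv(abar_f)
R = rpc(H, np.ones(3))                # unit innovation variances (assumption)

a13 = 0.0 - abar_f[0, 2]              # a_13(f) = delta_13 - abar_13(f)
print("H13 =", H[0, 2])               # zero up to rounding error
print("R_{1<-3} =", R[0, 2], "although a_13(f) =", a13)
```

RPC therefore reports no influence from X3 to X1 at this frequency, while the first equality of (28) contains the nonzero term a13(f)X3(f); Example 3 shows the same situation for a full model.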

(ii) In general, RPC Ri←j(f) in (37) is unreasonable; the same analysis as in (v) of Remark 5 leads to this statement.

(iii) When noise terms are mutually correlated, extended RPC (ERPC) was proposed in [15] and [33]. The same problems as in (i) and (ii) above exist for ERPC.

4) DTF or normalized DTF [16]: DTF is based directly on the transfer function H(f). Normalized DTF is defined as in (37) with all innovation variances set to 1, j = 1, . . . , n. Hence, the same problems as in (i) and (ii) of Remark 6 exist for normalized DTF.
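Because normalized DTF is (37) with unit innovation variances, the two measures can be compared directly: with unit variances they coincide, and with unequal variances they differ. A sketch, using the standard Kamiński–Blinowska normalization γij²(f) = |Hij(f)|²/Σm |Him(f)|² and a hypothetical 2 × 2 Ā(f) at one frequency.

```python
import numpy as np


def normalized_dtf(H):
    """gamma^2[i, j] = |H_ij(f)|^2 / sum_m |H_im(f)|^2 (each row sums to 1)."""
    P = np.abs(np.asarray(H, dtype=complex)) ** 2
    return P / P.sum(axis=1, keepdims=True)


def rpc(H, noise_var):
    """RPC for uncorrelated innovations: var_j |H_ij|^2 normalized over j."""
    P = np.abs(np.asarray(H, dtype=complex)) ** 2 * np.asarray(noise_var, dtype=float)
    return P / P.sum(axis=1, keepdims=True)


H = np.linalg.inv(np.array([[1.0, -0.5], [-0.2, 1.0]]))
print(normalized_dtf(H))        # equals rpc(H, [1, 1])
print(rpc(H, [1.0, 4.0]))       # unequal variances change RPC but not normalized DTF
```

Here H = (1/0.9)[[1, 0.5], [0.2, 1]], so the first row of the normalized DTF is [0.8, 0.2].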

In conclusion, for four widely used Granger or Granger-like causality measures (spectral GC, PDC, RPC, and DTF), we have pointed out their inherent shortcomings and/or limitations. One reason for these shortcomings and/or limitations is the use of the transfer function H(f) or its inverse matrix. In fact, Fig. 7 gives an intuitive description of the contributions to Xk(f), which are the sum of all causal influence terms ak1(f)X1(f), . . . , akn(f)Xn(f) and the instantaneous causal influence noise term ηk(f), where akk(f)Xk(f) is the causal influence from Xk's past values. The new spectral causality defined in (30) describes how much of all causal influences on Xk(f) comes from Xi(f). Thus, the new spectral causality defined in (30) is mathematically reasonable and understandable. Any other causality measure that does not directly depend on all of these causal influence terms and on the instantaneous noise term may not truly reveal causal influence among different channels. Note that the new definition is entirely different from all existing Granger or Granger-like causality measures, which are directly based on the transfer function (or its inverse matrix) of the AR model. The difference lies mainly in two aspects: 1) the element Hij(f) of the transfer function H(f) is totally different from aij(f) in (28), and as a result any causality measure based on H(f) may not reveal real causality relations among different channels; and 2) as pointed out in (iii) of Remark 4, (v) of Remark 5, and (ii) of Remark 6, Granger or Granger-like causality measures use only partial information, and some information is missing [e.g., IX2→X1(f) in (34) does not depend on ā11(f), or equivalently a11(f)], unlike the new spectral causality defined in (30), in which all powers SXiXi (i = 1, . . . , n), i.e., all available information, are used.
As such, spectral GC, PDC, RPC, DTF, and many other extended or variant forms, such as GPDC [31], extended RPC (ERPC) [33], normalized DTF [16], direct directed transfer function (dDTF) [34], SDTF [35], and short-time direct directed transfer function (SdDTF) [17], to name a few, suffer from the loss of some important information and inevitably cannot completely reveal the true causal influence among channels.

IV. Examples

In this section, we first compare our causality measures with existing causality measures in Examples 1–4 and, in particular, show the shortcomings and/or limitations of the existing measures. In Examples 1–4, all noise terms are assumed to be mutually independent and normally distributed with variance 1, except in Example 4, where the variance is 0.3. In the last example, we conduct event-related causality analysis for a patient who suffered from seizures in the left temporal lobe and was asked to recognize previously viewed pictures. In this example, we calculate new spectral causality, spectral GC, PDC, and RPC for two intracranial EEG channels, one in the left temporal lobe and the other in the right temporal lobe. The results show that all measures clearly reveal causal information flow from the right side to the left side, but the new spectral causality result reflects the event-related activity very well, whereas the spectral GC, PDC, and RPC results are not interpretable and are misleading.

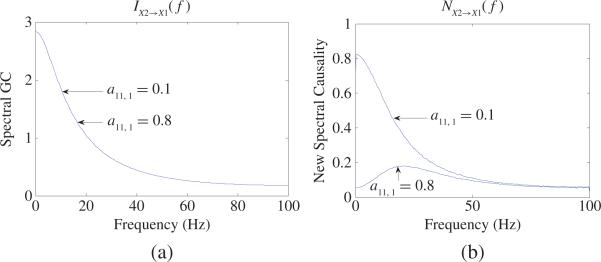

Example 1: Comparison between spectral GC and new spectral causality. Consider the following model:

| (39) |

We consider two cases: a11,1 = 0.1 and a11,1 = 0.8. Clearly, X2 should have a different causal influence on X1 in these two cases. Fig. 10(b) shows the new spectral causality results in these two cases, from which one can see that X2 has a totally different causal influence on X1 in the two cases. However, based on (34), X2 has the same GC on X1 in both cases [see Fig. 10(a)], because (34) does not depend on the coefficient a11,1. Hence, we confirm that, in general, spectral GC may not reflect the real causal influence between two neurons and may lead to wrong interpretations.

Fig. 10.

(a) Spectral GC results: IX2→X1(f) based on (34) for (39) of two time series in two cases: a11,1 = 0.1 and a11,1 = 0.8. (b) New spectral causality results: NX2→X1(f) for (39).

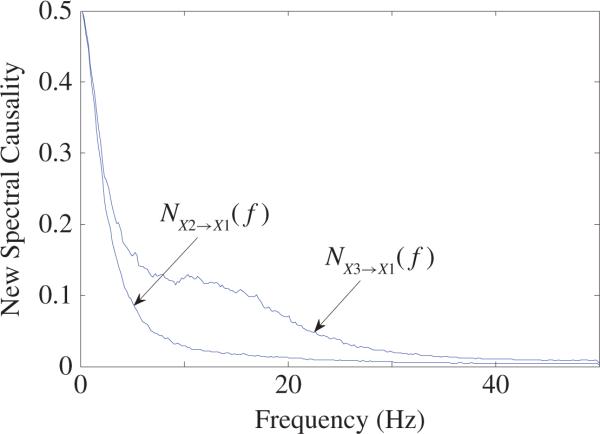

Example 2: Comparison between new spectral causality and PDC. Consider the following model with three time series:

| (40) |

For (40), it is easy to check from the PDC definition (36) that π1←2(f) = π1←3(f) for any frequency f > 0. However, this equality does not reflect the real causality. The reason is that X2(f) ≠ X3(f) for most frequencies f > 0 and, as a result, ā12(f)X2(f) ≠ ā13(f)X3(f), since ā12(f) = ā13(f) for any frequency f > 0. Thus, in the frequency domain, X2 and X3 should have different causal influences on X1. The new spectral causality plotted in Fig. 11 shows that the causality from X2 to X1 is indeed totally different from that from X3 to X1 for most frequencies f > 0. So, we confirm that, in general, the PDC value may not reflect real causality between two neurons and may be misleading.

Fig. 11.

New spectral causality results from X2 to X1 and from X3 to X1 for (40).

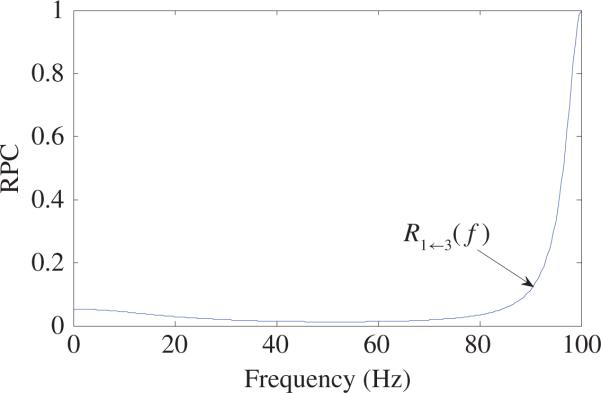

Example 3: Comparison between new spectral causality and RPC. First consider the following model with three time series:

| (41) |

From (41), one obtains the transfer function H(f) = A⁻¹(f) with

$$H_{11}(f) = \frac{(1 - 0.2e^{-i2\pi f})(1 + 0.5e^{-i2\pi f} - 0.5e^{-i4\pi f})}{\det(A)},$$

$$H_{12}(f) = \frac{0.5e^{-i2\pi f}(1 + 0.5e^{-i2\pi f} - 0.5e^{-i4\pi f})}{\det(A)},$$

$$H_{13}(f) = \frac{0.5e^{-i2\pi f} \times 0.4e^{-i2\pi f}}{\det(A)}.$$

We compute

| (42) |

The curve of RPC R1←3(f) based on (42) is shown in Fig. 12, from which one would conclude that there always exists direct causal influence from X3 to X1 for f > 0. In particular, at f = 100 Hz, R1←3(100) = 1, which would indicate a very strong direct causal influence from X3 to X1. However, from the first equality of (41), one can see that there is no real direct causal influence from X3 to X1 at all.
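The RPC curve in Fig. 12 can be reproduced from the transfer-function entries quoted above. Assuming unit innovation variances (as stated for Examples 1–3), det(A) cancels in the ratio, so R1←3(f) = |H13|²/(|H11|² + |H12|² + |H13|²) can be computed from the numerators alone. In the normalized frequency of these entries (cycles per sample), the factor 1 + 0.5e^(−i2πf) − 0.5e^(−i4πf) vanishes only at f = 0.5, where R1←3 = 1; the text's f = 100 Hz therefore presumably corresponds to the Nyquist frequency of a 200 Hz sampling rate, which is our inference rather than a stated parameter.

```python
import numpy as np


def rpc_1_from_3(f):
    """R_{1<-3}(f) for model (41), built from the numerators of H11, H12, H13.

    f is in cycles per sample (0.5 is the Nyquist frequency); unit noise
    variances are assumed, so the common factor 1/det(A) cancels.
    """
    z = np.exp(-2j * np.pi * np.asarray(f, dtype=float))   # z = e^{-i 2 pi f}
    q = 1 + 0.5 * z - 0.5 * z ** 2                          # shared factor of H11 and H12
    p11 = np.abs((1 - 0.2 * z) * q) ** 2
    p12 = np.abs(0.5 * z * q) ** 2
    p13 = np.abs(0.5 * z * 0.4 * z) ** 2                    # constant 0.04 on |z| = 1
    return p13 / (p11 + p12 + p13)


freqs = np.linspace(0.0, 0.5, 6)
print(np.round(rpc_1_from_3(freqs), 4))   # reaches 1 at f = 0.5
```

Since |H13|² never vanishes on the unit circle, R1←3(f) is strictly positive at every frequency, which is why the curve suggests a direct influence that the first equality of (41) does not contain.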

Fig. 12.

Curve of RPC R1←3(f) calculated based on (42).

We then consider the following model with three time series:

| (43) |

From (43), one obtains the transfer function H(f) = A⁻¹(f) with H13(f) = 0, ∀f > 0. As a result, RPC R1←3(f) ≡ 0, ∀f > 0, which would indicate no direct causal influence from X3 to X1. However, from (43) one can see that X3 indeed has causal influence on X1. Moreover, every pair of time series is bidirectionally connected, as shown in Fig. 13(a). The new spectral causality from X3 to X1 is plotted in Fig. 13(b), from which one can see that there always exists causal influence from X3 to X1 at any frequency f > 0.

Hence, through the above two models, we confirm that, in general, the RPC value may not reflect the real causal influence between two neurons and, as a result, may yield misleading results.

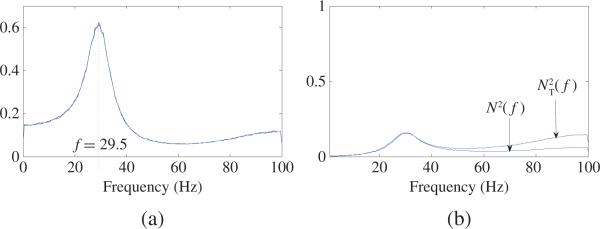

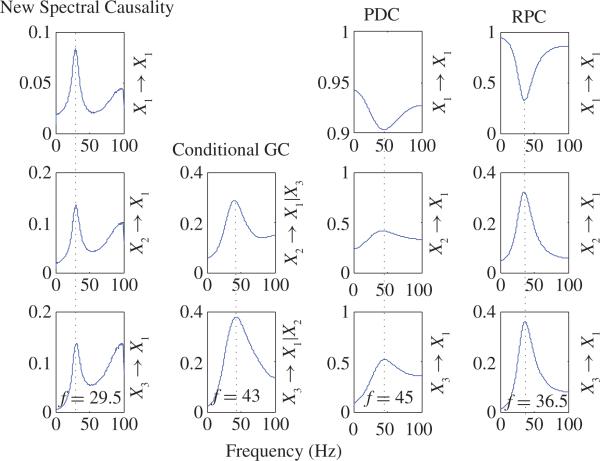

Example 4: Comparison among new spectral causality, conditional spectral GC, PDC, and RPC together. Consider the following model with three time series:

| (44) |

where the noise terms are normally distributed with variance 0.3 and are mutually independent. For each realization (200 realizations of 10 000 time points) of (44), we estimated the AR model [as mentioned in (ii) of Property 1] and calculated the power, new spectral causality, conditional spectral GC, PDC, and RPC values in the frequency domain. The averages across all realizations are reported in Figs. 14 and 15. The power spectra of X1, X2, and X3 are plotted in Fig. 14(a), from which one can see that X1, X2, and X3 have almost the same power spectra across all frequencies, with an obvious peak at f = 29.5 Hz. New spectral causality, conditional spectral GC, PDC, and RPC are shown in Fig. 15. The first, third, and fourth columns in Fig. 15 show direct new spectral causality, PDC, and RPC, respectively, from X1 to X1, from X2 to X1, and from X3 to X1. Notably, the new spectral causalities from X1 to X1, from X2 to X1, and from X3 to X1 are similar and peak at the same frequency of 29.5 Hz, which is consistent with the peak frequency of the power spectra in Fig. 14(a). These new spectral causality results are therefore interpretable and reasonable, given the almost identical power spectra of X1, X2, and X3 in Fig. 14(a). In contrast, conditional GC (second column of Fig. 15), PDC, and RPC (from X2 to X1 and from X3 to X1) peak at different frequencies, all of which differ from 29.5 Hz. These results are not interpretable given the common peak frequency (29.5 Hz) of the power spectra in Fig. 14(a). More specifically, PDC and RPC from X1 to X1 attain their minima at the peak frequencies of PDC and RPC from X2 to X1 or from X3 to X1. Clearly, these results are incorrect and misleading. N(f) and NT(f) are presented in Fig. 14(b), where N(f), the summation of causalities along all routes from X3 to X1, is computed based on (44), and NT(f) = NX3→X1(f) is computed from the estimated AR model of the pair (X1, X3).
One can see that NT(f) (i.e., the total causality from X3 to X1) is very close to N(f) below 40 Hz, but above 40 Hz N(f) > NT(f). For a general model, the exact relationship between N(f) and NT(f) is unknown.
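The estimation step in this example (fit an AR model to each simulated realization, then compute spectra and causality measures from the fitted coefficients) can be sketched as follows. Model (44) is not reproduced in this text, so the trivariate VAR(1) coefficients below are hypothetical; only the noise variance 0.3 and the record length of 10 000 points follow the text. The fit uses ordinary least squares, one common choice that the text does not specify. Averaging over 200 realizations would wrap the simulate-and-fit step in a loop.

```python
import numpy as np


def simulate_var(coefs, noise_var, n_samples, rng, burn_in=500):
    """Simulate X_t = sum_l A_l X_{t-l} + eta_t with independent Gaussian noise."""
    coefs = np.asarray(coefs, dtype=float)
    m, n, _ = coefs.shape
    total = n_samples + burn_in
    x = np.zeros((total, n))
    noise = rng.normal(scale=np.sqrt(noise_var), size=(total, n))
    for t in range(m, total):
        x[t] = sum(coefs[l] @ x[t - l - 1] for l in range(m)) + noise[t]
    return x[burn_in:]                      # drop the start-up transient


def fit_var_ols(x, m):
    """Least-squares VAR(m) fit; returns coefs (m, n, n) and residual variances."""
    T, n = x.shape
    # Row for time t holds [x_{t-1}, ..., x_{t-m}].
    Z = np.hstack([x[m - l - 1:T - l - 1] for l in range(m)])
    Y = x[m:]
    B, *_ = np.linalg.lstsq(Z, Y, rcond=None)            # Y ~ Z @ B
    coefs = B.T.reshape(n, m, n).transpose(1, 0, 2)      # -> coefs[l, k, j]
    resid = Y - Z @ B
    return coefs, resid.var(axis=0)


true_coefs = np.array([[[0.5, 0.2, 0.0],      # hypothetical stand-in for (44)
                        [0.0, 0.4, 0.3],
                        [0.2, 0.0, 0.3]]])
rng = np.random.default_rng(0)
x = simulate_var(true_coefs, 0.3, 10_000, rng)
est, resid_var = fit_var_ols(x, 1)
print(np.round(est[0], 2), np.round(resid_var, 3))
```

The fitted coefficients and residual variances can then be passed to any of the frequency-domain measures of Section III.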

Fig. 14.

(a) Power spectra of X1, X2, X3. It can be seen that X1, X2, X3 have almost the same power across all frequencies, with an obvious peak at f = 29.5 Hz. (b) N(f) based on (44) and NT(f) = NX3→X1(f) based on the estimated AR model of the pair (X1, X3). One can see that NT(f) (i.e., the total causality from X3 to X1) is very close to N(f) (i.e., the summation of causalities along all routes from X3 to X1) below 40 Hz, but above 40 Hz N(f) > NT(f). For a general model, the exact relationship between N(f) and NT(f) is not known.

Fig. 15.

Comparison among new spectral causality, conditional spectral GC, PDC, and RPC. The first, third, and fourth columns show direct new spectral causality, PDC, and RPC, respectively, from X1 to X1, from X2 to X1, and from X3 to X1. The second column shows conditional GC. Notably: 1) the new spectral causalities from X1 to X1, from X2 to X1, and from X3 to X1 are similar and peak at the same frequency of 29.5 Hz, which is consistent with the peak frequency of the power spectra [see Fig. 14(a)]; and 2) conditional GC, PDC, and RPC (from X2 to X1 and from X3 to X1) peak at different frequencies, all of which differ from 29.5 Hz.

Hence, by this example, we confirm that in general spectral GC, PDC, and RPC may not reflect real causality.

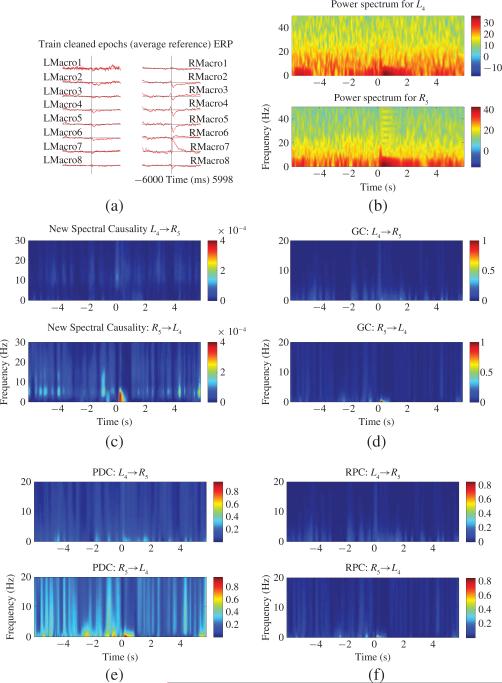

Example 5: In this example, we conduct ERP analysis for a patient who suffered from seizures in the left temporal lobe and was asked to recognize previously viewed pictures. There were two testing sessions. During the first session, stimuli were presented and evaluated for their emotional impact (valence, arousal) and autonomic effects. Stimuli were chosen that are known from functional imaging studies to activate the amygdala. The second session was a recall task in which the subject was asked to recognize the pictures viewed in session 1. The subject was comfortably seated in front of a computer monitor that displayed an image from the International Affective Picture System (IAPS) every 22 s according to the above schema. Forty images were selected that span the range of emotional valence and arousal according to previously published standards. Each image was held on the screen for 6 s, and the subject was then asked to rate it on an emotional valence scale (0 = not emotionally intense to 3 = extremely emotionally intense). Reaction time to the rating was measured. After the rating, the screen went blank for 15 s. Twenty-four hours later, a second session was held in which pictures were presented in random order. All 40 previously viewed pictures, as well as another 80 pictures from the IAPS with similar overall valence and arousal ratings, were presented. Each image was presented for 3 s, and the patient was then asked whether it had been seen before and with what degree of certainty (e.g., from "not seen before, very certain" to "not seen before, very unsure"). Reaction time to the response was measured. A blank screen followed the response for 2 s. We recorded intracranial EEG and behavioral data (response times and choices) from 16 electrodes consisting of right and left temporal depth electrodes (RTD and LTD; the locations of these electrodes can be seen in [36, Fig. 2]), each composed of eight platinum/iridium alloy contacts spaced at 10 mm intervals, on an XLTek EEG 128 system, which digitizes each channel at 625 Hz with a 0.01–100 Hz band-pass filter. All 16 channels were referenced to the scalp suture electrode. Individual stimulus-response trials were visually inspected, and those with artifacts were excluded from subsequent analysis. After visual inspection, the remaining analyzed data comprised 7500 sample points and 78 epochs.

As many researchers have done, we use the average-referenced iEEG. The ERP images in Fig. 16(a) clearly show cortical activity changes after stimulus onset in most channels. To study the task-related causality relationship between different channels, we use a moving-window technique with a window size of 150 samples (240 ms) and an overlap of 75 samples (120 ms). After a complete analysis of the MVAR model order in each window using the Akaike information criterion [37]–[39], we found that an order of 8 fits well; thus, a common model order of 8 was applied to all windows in our data set.
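The windowing and order-selection steps can be sketched as follows. The window length (150 samples, i.e., 240 ms at 625 Hz) and step (75 samples) follow the text; a 7500-sample record yields 99 such windows. The AIC shown is the common form ln det Σ̂ + 2mn²/T for a least-squares VAR(m) fit; whether [37]–[39] use exactly this variant is an assumption, and the helper names are ours.

```python
import numpy as np


def window_starts(n_samples, win=150, step=75):
    """Start indices of moving windows of `win` samples advanced by `step` samples."""
    return list(range(0, n_samples - win + 1, step))


def var_aic(x, m):
    """AIC(m) = ln det(Sigma_hat) + 2 m n^2 / T_eff for a least-squares VAR(m) fit."""
    T, n = x.shape
    Z = np.hstack([x[m - l - 1:T - l - 1] for l in range(m)])  # lagged regressors
    Y = x[m:]
    B, *_ = np.linalg.lstsq(Z, Y, rcond=None)
    resid = Y - Z @ B
    sigma_hat = resid.T @ resid / len(Y)                       # residual covariance
    _, logdet = np.linalg.slogdet(sigma_hat)
    return logdet + 2.0 * m * n * n / len(Y)


def select_order(x, max_order=12):
    """Order in 1..max_order with the smallest AIC."""
    return min(range(1, max_order + 1), key=lambda m: var_aic(x, m))


fs = 625.0
starts = window_starts(7500)
print(len(starts), "windows;", 1000 * 150 / fs, "ms each")   # 99 windows; 240.0 ms each
```

In practice `select_order` would be applied to each window `x[s:s + 150]` (channels as columns), and a common order such as the text's choice of 8 adopted if the per-window selections agree well enough.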

Fig. 16.

(a) ERPs for eight left Macrowire channels (LMacro1–LMacro8) and eight right Macrowire channels (RMacro1–RMacro8) where average reference is applied. (b) Power spectra for LMacro4 (or L4) and RMacro5 (or R5). (c) New spectral causality results between L4 and R5. (d) GC results between L4 and R5. (e) PDC results between L4 and R5. (f) RPC results between L4 and R5.

We took R5 and L4 as an example and calculated their causality relationship based on new spectral causality, spectral GC, PDC, and RPC. The power spectra of R5 and L4 averaged over all trials are shown in Fig. 16(b), from which one clearly sees that lower frequency band (<8 Hz) activities are enhanced after stimulus onset with a delay of about 250 ms. This result is consistent with previous findings that theta (2–10 Hz) oscillations are prominent during a variety of cognitive processes in both animal and human studies [40]–[44] and may play a fundamental role in memory encoding, consolidation, and maintenance [45]–[47]. The time–frequency causality [or event-related causality (see [17])] between R5 and L4 based on new spectral causality, spectral GC, PDC, and RPC is shown in Fig. 16(c)–(f), respectively, from which one finds the following. 1) The causal influence from R5 to L4 is significantly enhanced after stimulus onset (with a delay of about 250 ms) for all four measures (we use the statistical testing framework in [17] to test the significance level for each measure). However, only new spectral causality discloses the enhanced frequency band (<8 Hz), which is consistent with the delayed enhancement of lower frequency band (<8 Hz) activity in R5 and L4 shown in Fig. 16(b); thus, this result should reflect a real interaction. The enhanced frequency bands revealed by the other measures (spectral GC, PDC, RPC) are always below 2 Hz, so the enhanced lower frequency band (2–8 Hz) activity shown in Fig. 16(b) is not reflected, and these results lead to misinterpretation. 2) The causal influence from L4 to R5 is much smaller than that from R5 to L4 for each measure. These results indicate that the interaction between R5 and L4 is not symmetric and that R5 has a strong directional influence on L4.
A possible reason for this phenomenon is that the patient had left temporal lobe epilepsy, and some brain functions were therefore lost, which prevented information in the left temporal lobe from being transmitted normally to the right temporal lobe.

Hence, through this real neurophysiological data analysis, we further verify that new spectral causality may truly reveal the causal interaction between two areas, whereas other measures, such as spectral GC, PDC, and RPC, cannot and thus may lead to wrong interpretations.

V. Conclusion

In human cognitive neuroscience, researchers are no longer limited to studying the putative functions of particular brain regions but are moving toward understanding how different brain regions (such as visual cortex, parietal or frontal regions, amygdala, or hippocampus) causally influence each other. These causal influences, with different strengths and directionalities, may reflect the changing functional demands on the network to support cognitive and perceptual tasks. Direct evidence for the existence of causality among brain regions can be obtained from the activity changes of brain regions using brain stimulation approaches. Brain stimulation affects not only the targeted local region but also the activity in remote interconnected regions. These remote effects depend on cognitive factors (e.g., task condition) and reveal dynamic changes in the interplay between brain areas. GC, one of the most popular causality measures, was initially widely used in economics and has recently received growing attention in neuroscience for revealing causal interactions in neurophysiological data. In the frequency domain in particular, several variants of GC have been developed to study causal influences in neurophysiological data across different frequency bands during cognitive and perceptual tasks.

In this paper, on the one hand, we defined in the time domain a new causality from any time series Y to any time series X in the linear regression model of multivariate time series, which describes the proportion that Y occupies among all contributions to X (each contribution must be considered when defining any good causality tool; otherwise the definition inevitably cannot reveal the real strength of causality between two time series). In particular, we used one simple example to clearly show that both new causality and GC adopt the concept of proportion, but they are defined on two different equations, where one equation (for GC) is only part of the other (for new causality). Therefore, new causality is a natural extension of GC and has a sound conceptual/theoretical basis, and GC is not the desired causal influence at all. As such, traditional GC has many pitfalls; e.g., a larger GC value does not necessarily mean higher real causality, or vice versa, and even when the GC value is zero, there may still be real causality, which can be revealed by new causality. Therefore, the popular traditional GC cannot reveal the real strength of causality at all, and researchers must apply caution in drawing any conclusion based on GC values. On the other hand, in the frequency domain we pointed out that any causality definition based on the transfer function matrix (or its inverse matrix) of the linear regression model may, in general, fail to reveal the real strength of causality between two time series. Since almost all existing spectral causality definitions are based on the transfer function matrix (or its inverse) of the linear regression model, we took the widely used spectral GC, PDC, and RPC as examples and pointed out their inherent shortcomings and/or limitations.
To overcome these difficulties, we defined a new spectral causality, which describes the proportion that one variable occupies among all contributions to another variable in the multivariate linear regression model (frequency domain). Through several simulated examples, we clearly showed that our new definitions (in the time and frequency domains) have advantages over existing definitions. Moreover, for real ERP EEG data from a patient with epilepsy undergoing monitoring with intracranial electrodes, the new spectral causality yielded promising and reasonable results, whereas spectral GC, PDC, and RPC all generated misleading results. Therefore, our new causality definitions appear to shed new light on causality interaction analysis and may have wide applications in economics and neuroscience. Of course, before our methods can be widely used in economics and neuroscience, their application to more time series data should be further investigated: 1) to evaluate their usefulness and advantages over other existing popular methods; 2) to perform statistical significance testing; and 3) to study the bias issue in the time domain [48] and the frequency domain, i.e., to assess, using appropriate surrogate data, whether the resulting causality is biased.

Acknowledgments

This work was supported in part by the National Natural Science Foundation of China under Grant 61070127, and the International Cooperation Project of Zhejiang Province, China, under Grant 2009C14013, Grant NIH R01 MH072034, Grant NIH R01 NS054314, and Grant NIH R01 NS063039.

Biography

Sanqing Hu (M'05–SM'06) received the B.S. degree from the Department of Mathematics, Hunan Normal University, Hunan, China, in 1992, the M.S. degree from the Department of Automatic Control, Northeastern University, Shenyang, China, in 1996, and the Ph.D. degree from the Department of Automation and Computer-Aided Engineering, Chinese University of Hong Kong, Kowloon, Hong Kong, and the Department of Electrical and Computer Engineering, University of Illinois, Chicago, in 2001 and 2006, respectively.

He was a Research Fellow in the Department of Neurology, Mayo Clinic, Rochester, MN, from 2006 to 2009. From 2009 to 2010, he was a Research Assistant Professor at the School of Biomedical Engineering, Science & Health Systems, Drexel University, Philadelphia, PA. He is now a Chair Professor at the College of Computer Science, Hangzhou Dianzi University, Hangzhou, China. He is a co-author of more than 60 papers published in international journals and conference proceedings. His current research interests include biomedical signal processing, cognitive and computational neuroscience, neural networks, and dynamical systems.

Prof. Hu is an Associate Editor of four journals: the IEEE Transactions on Biomedical Circuits and Systems; the IEEE Transactions on Systems, Man, and Cybernetics, Part B; the IEEE Transactions on Neural Networks; and Neurocomputing. He was a Guest Editor of Neurocomputing's special issue on neural networks in 2007 and of Cognitive Neurodynamics' special issue on cognitive computational algorithms in 2011. He is the Co-Chair of the Organizing Committee for the International Conference on Adaptive Science and Technology in 2011, and the Program Chair for both the International Conference on Information Science and Technology and the International Symposium on Neural Networks in 2011. He was also the Program Chair for the International Workshop on Advanced Computational Intelligence (IWACI) in 2010, and the Special Sessions Chair of the International Conference on Networking, Sensing and Control in 2008, the International Symposium on Neural Networks in 2009, and IWACI in 2010. He also serves on the program committees of several international conferences.

Guojun Dai (M’90) received the B.E. and M.E. degrees from Zhejiang University, Hangzhou, China, in 1988 and 1991, respectively, and the Ph.D. degree from the College of Electrical Engineering, Zhejiang University, in 1998.

He is currently a Professor and the Vice-Dean of the College of Computer Science, Hangzhou Dianzi University, Hangzhou. He is the author or co-author of more than 20 research papers and books, and holds more than 10 patents. His current research interests include biomedical signal processing, computer vision, embedded systems design, and wireless sensor networks.

Gregory A. Worrell (M’03) received the Ph.D. degree in physics from Case Western Reserve University, Cleveland, OH, and the M.D. degree from the University of Texas, Galveston.

He completed neurology and epilepsy training at Mayo Clinic, Rochester, MN, where he is now a Professor of Neurology. His research is integrated with his clinical practice, which focuses on patients with medically resistant epilepsy. His current research interests include the use of large-scale systems electrophysiology, brain stimulation, and data mining to identify and track electrophysiological biomarkers of the epileptic brain and of seizure generation.

Prof. Worrell is a member of the American Neurological Association, the American Academy of Neurology, and the American Epilepsy Society.

Qionghai Dai (SM’05) received the B.S. degree in mathematics from Shanxi Normal University, Xian, China, in 1987, and the M.E. and Ph.D. degrees in computer science and automation from Northeastern University, Liaoning, China, in 1994 and 1996, respectively.

He has been with the faculty of Tsinghua University, Beijing, China, since 1997, and is currently a Professor and the Director of the Broadband Networks and Digital Media Laboratory. His current research interests include signal processing, video communication and computer vision, and computational neuroscience.

Hualou Liang (M’00–SM’01) received the Ph.D. degree in physics from the Chinese Academy of Sciences, Beijing, China. He studied signal processing at Dalian University of Technology, Dalian, China.

He has been a Post-Doctoral Researcher at Tel-Aviv University, Tel-Aviv, Israel, the Max-Planck-Institute for Biological Cybernetics, Tuebingen, Germany, and the Center for Complex Systems and Brain Sciences, Florida Atlantic University, Boca Raton. He is currently a Professor at the School of Biomedical Engineering, Drexel University, Philadelphia, PA. His current research interests include biomedical signal processing and cognitive and computational neuroscience.

Contributor Information

Sanqing Hu, the College of Computer Science, Hangzhou Dianzi University, Hangzhou 310018, China (sqhu@hdu.edu.cn).

Guojun Dai, the College of Computer Science, Hangzhou Dianzi University, Hangzhou 310018, China (daigj@hdu.edu.cn).

Gregory A. Worrell, the Department of Neurology, Division of Epilepsy and Electroencephalography, Mayo Clinic, Rochester, MN 55905 USA (Worrell.Gregory@mayo.edu).

Qionghai Dai, the Department of Automation, Tsinghua University, Beijing 100084, China (daiqh@tsinghua.edu.cn).

Hualou Liang, the School of Biomedical Engineering, Science & Health Systems, Drexel University, Philadelphia, PA 19104 USA (Hualou.Liang@drexel.edu).

References

- 1. Seth A. Granger causality. Scholarpedia. 2007;2(7):1667.

- 2. Sun R. A neural network model of causality. IEEE Trans. Neural Netw. 1994 Jul;5(4):604–611. doi: 10.1109/72.298230.

- 3. Zou C, Denby KJ, Feng J. Granger causality versus dynamic Bayesian network inference: A comparative study. BMC Bioinf. 2009 Apr;10:122. doi: 10.1186/1471-2105-10-122.

- 4. Wiener N. The theory of prediction. In: Beckenbach EF, editor. Modern Mathematics for Engineers. McGraw-Hill; New York: 1956. ch. 8.

- 5. Granger CWJ. Investigating causal relations by econometric models and cross-spectral methods. Econometrica. 1969 Jul;37(3):424–438.

- 6. Ding M, Chen Y, Bressler SL. Granger causality: Basic theory and applications to neuroscience. In: Schelter B, Winterhalder M, Timmer J, editors. Handbook of Time Series Analysis. Wiley-VCH; Weinheim, Germany: 2006. pp. 437–460.

- 7. Freiwald WA, Valdes P, Bosch J, Biscay R, Jimenez JC, Rodriguez LM, Rodriguez V, Kreiter AK, Singer W. Testing non-linearity and directedness of interactions between neural groups in the macaque inferotemporal cortex. J. Neurosci. Methods. 1999 Dec;94(1):105–119. doi: 10.1016/s0165-0270(99)00129-6.

- 8. Geweke J. Measurement of linear dependence and feedback between multiple time series. J. Amer. Stat. Assoc. 1982 Jun;77(378):304–313.

- 9. Hesse W, Möller E, Arnold M, Schack B. The use of time-variant EEG Granger causality for inspecting directed interdependencies of neural assemblies. J. Neurosci. Methods. 2003 Mar;124(1):27–44. doi: 10.1016/s0165-0270(02)00366-7.

- 10. Oya H, Poon PWF, Brugge JF, Reale RA, Kawasaki H, Volkov IO. Functional connections between auditory cortical fields in humans revealed by Granger causality analysis of intra-cranial evoked potentials to sounds: Comparison of two methods. Biosystems. 2007 May–Jun;89(1–3):198–207. doi: 10.1016/j.biosystems.2006.05.018.

- 11. Roebroeck A, Formisano E, Goebel R. Mapping directed influence over the brain using Granger causality and fMRI. Neuroimage. 2005 Mar;25(1):230–242. doi: 10.1016/j.neuroimage.2004.11.017.

- 12. Gow DW, Segawa JA, Ahlfors S, Lin FH. Lexical influences on speech perception: A Granger causality analysis of MEG and EEG source estimates. Neuroimage. 2008 Nov;43(3):614–623. doi: 10.1016/j.neuroimage.2008.07.027.

- 13. Gow DW, Keller CJ, Eskandar E, Meng N, Cash SS. Parallel versus serial processing dependencies in the perisylvian speech network: A Granger analysis of intracranial EEG data. Brain Lang. 2009 Jul;110(1):43–48. doi: 10.1016/j.bandl.2009.02.004.

- 14. Baccalá LA, Sameshima K. Partial directed coherence: A new concept in neural structure determination. Biol. Cybern. 2001 Jun;84(6):463–474. doi: 10.1007/PL00007990.

- 15. Yamashita O, Sadato N, Okada T, Ozaki T. Evaluating frequency-wise directed connectivity of BOLD signals applying relative power contribution with the linear multivariate time-series models. Neuroimage. 2005 Apr;25(2):478–490. doi: 10.1016/j.neuroimage.2004.11.042.

- 16. Kaminski M, Ding M, Truccolo-Filho W, Bressler SL. Evaluating causal relations in neural systems: Granger causality, directed transfer function and statistical assessment of significance. Biol. Cybern. 2001 Aug;85(2):145–157. doi: 10.1007/s004220000235.

- 17. Korzeniewska A, Crainiceanu CM, Kus R, Franaszczuk PJ, Crone NE. Dynamics of event-related causality in brain electrical activity. Human Brain Mapp. 2008 Oct;29(10):1170–1192. doi: 10.1002/hbm.20458.

- 18. Bernasconi C, König P. On the directionality of cortical interactions studied by structural analysis of electrophysiological recordings. Biol. Cybern. 1999 Sep;81(3):199–210. doi: 10.1007/s004220050556.

- 19. Liang H, Ding M, Nakamura R, Bressler SL. Causal influences in primate cerebral cortex during visual pattern discrimination. Neuroreport. 2000 Sep;11(13):2875–2880. doi: 10.1097/00001756-200009110-00009.

- 20. Brovelli A, Ding M, Ledberg A, Chen Y, Nakamura R, Bressler SL. Beta oscillations in a large-scale sensorimotor cortical network: Directional influences revealed by Granger causality. Proc. Nat. Acad. Sci. United States Amer. 2004 Jun;101(26):9849–9854. doi: 10.1073/pnas.0308538101.

- 21. Sato JR, Takahashi DY, Arcuri SM, Sameshima K, Morettin PA, Baccalá LA. Frequency domain connectivity identification: An application of partial directed coherence in fMRI. Human Brain Mapp. 2009 Feb;30(2):452–461. doi: 10.1002/hbm.20513.

- 22. Kaminski M, Liang H. Causal influence: Advances in neurosignal analysis. Crit. Rev. Biomed. Eng. 2005;33(4):347–430. doi: 10.1615/critrevbiomedeng.v33.i4.20.

- 23. Guo S, Wu J, Ding M, Feng J. Uncovering interactions in the frequency domain. PLoS Comput. Biol. 2008;4(5):e1000087-1–e1000087-5. doi: 10.1371/journal.pcbi.1000087.

- 24. Geweke JF. Measures of conditional linear dependence and feedback between time series. J. Amer. Stat. Assoc. 1984 Dec;79(388):907–915.

- 25. Blinowska KJ, Kus R, Kaminski M. Granger causality and information flow in multivariate processes. Phys. Rev. E. 2004 Nov;70(5):050902(R)-1–050902(R)-4. doi: 10.1103/PhysRevE.70.050902.

- 26. Kus R, Kaminski M, Blinowska KJ. Determination of EEG activity propagation: Pair-wise versus multichannel estimate. IEEE Trans. Biomed. Eng. 2004 Sep;51(9):1501–1510. doi: 10.1109/TBME.2004.827929.

- 27. Cui J, Xu L, Bressler SL, Ding M, Liang H. BSMART: A MATLAB/C toolbox for analysis of multichannel neural time series. Neural Netw., Spec. Issue Neuroinf. 2008 Oct;21(8):1094–1104. doi: 10.1016/j.neunet.2008.05.007.

- 28. Schelter B, Winterhalder M, Eichler M, Peifer M, Hellwig B, Guschlbauer B, Lucking CH, Dahlhaus R, Timmer J. Testing for directed influences among neural signals using partial directed coherence. J. Neurosci. Methods. 2005 Apr;152:210–219. doi: 10.1016/j.jneumeth.2005.09.001.

- 29. Allefeld C, Graben PB, Kurths J. Advanced Methods of Electrophysiological Signal Analysis and Symbol Grounding. Nova; Commack, NY: Feb. 2008. pp. 276–296.

- 30. Schelter B, Timmer J, Eichler M. Assessing the strength of directed influences among neural signals using renormalized partial directed coherence. J. Neurosci. Methods. 2009 Apr;179(1):121–130. doi: 10.1016/j.jneumeth.2009.01.006.

- 31. Baccalá LA, de Medicina F. Proc. 15th Int. Conf. Digital Signal Process. Cardiff, U.K.: Jul. 2007. Generalized partial directed coherence; pp. 163–166.

- 32. Baccalá LA, Takahashi DY, Sameshima K. Handbook of Time Series Analysis. Wiley-VCH; Berlin, Germany: 2006. Computer intensive testing for the influence between time series; pp. 365–388.

- 33.Tanokura Y, Kitagawa G. Power contribution analysis for multivariate time series with correlated noise sources. Adv. Appl. Stat. 2004;4(1):65–95. [Google Scholar]

- 34.Korzeniewska A, Mańczak M, Kamiński M, Blinowska KJ, Kasicki S. Determination of information flow direction among brain structures by a modified directed transfer function (dDTF) method. J. Neurosci. Methods. 2003 May;125(1–2):195–207. doi: 10.1016/s0165-0270(03)00052-9. [DOI] [PubMed] [Google Scholar]

- 35.Ginter JJ, Blinowska KJ, Kaminski M, Durka PJ, Pfurtscheller G, Neuper C. Propagation of EEG activity in beta and gamma band during movement imagery in human. Methods Inf. Med. 2005;44(1):106–113. [PubMed] [Google Scholar]