Abstract

Online labor markets such as Amazon Mechanical Turk (MTurk) offer an unprecedented opportunity to run economic game experiments quickly and inexpensively. Using MTurk, we recruited 756 subjects and examined their behavior in four canonical economic games, with two payoff conditions each: a stakes condition, in which subjects' earnings were based on the outcome of the game (maximum earnings of $1); and a no-stakes condition, in which subjects' earnings were unaffected by the outcome of the game. Our results demonstrate that economic game experiments run on MTurk are comparable to those run in laboratory settings, even when using very low stakes.

Introduction

Online labor markets such as Amazon Mechanical Turk (MTurk) are internet marketplaces in which people can complete short tasks (typically 5 minutes or less) in exchange for small amounts of money (typically $1 or less). MTurk is becoming increasingly popular as a platform for conducting experiments across the social sciences [1]–[7]. In particular, MTurk offers an unprecedented opportunity to run incentivized economic game experiments quickly and inexpensively. Recent work has replicated classical findings such as framing and priming on MTurk [8]–[10], found a high level of test-retest reliability on MTurk [10]–[12], and shown quantitative agreement in behavior between MTurk and the physical laboratory [6], [8]. Yet concerns remain regarding the low stakes typically used in MTurk experiments.

In this study, we directly examine the effect of such stakes by comparing un-incentivized play with play involving typical MTurk-sized stakes (up to $1) in four canonical economic games: the dictator game, ultimatum game, trust game and public goods game. Our results are consistent with previous research conducted in the physical laboratory using stakes an order of magnitude higher.

Prior work on the dictator game found that subjects became significantly less generous when going from no stakes to low stakes [13], but that going from low stakes to high stakes did not affect donations [13], [14]. Consistent with these results, we find that the average donation on MTurk decreases from 44% with no stakes to 33% with $1 stakes.

Prior work on the ultimatum game has found that adding stakes does not affect the average proposal but may increase the variance in proposals [13], [15], while the results for responder behavior are more mixed, with one study finding no effect [13] and another finding a significant decrease in rejection rates [15]. It has also been found that increasing from low to high stakes has little effect on either proposals or rejection rates, unless the stakes are extremely large [13]–[17]. Our results when comparing no stakes with $1 stakes on MTurk are broadly consistent with these previous findings. In particular, we see no difference in Player 1 proposals, or in the minimal amount accepted by Player 2s when excluding ‘inconsistent’ players (people who accepted some offer while also rejecting one or more higher offers). However, we do find that adding stakes decreases the fraction of such inconsistent Player 2s, and decreases rejection rates of some Player 1 offers when including inconsistent Player 2s.

There has been less study of the role of stakes in other social dilemma games. To our knowledge, comparisons between no stakes and low stakes have not been performed in the public goods game or the trust game. Considering the increase of stake size, Kocher, Martinsson and Visser [18] found no significant difference in subjects' contributions in the public goods game when going from low to high stakes, and Johansson-Stenman, Mahmud and Martinsson [19] found that in the trust game, the amount sent by investors decreased when using very high stakes but the fraction returned by trustees was not affected by the changes in stakes. We find no difference in cooperation in the public goods game or trust or trustworthiness in the trust game when comparing no stakes with $1 stakes on MTurk.

Materials and Methods

This research was approved by the committee on the use of human subjects in research of Harvard University, application number F17468-103. Informed consent was obtained from all subjects.

We recruited 756 subjects using MTurk and randomly assigned each subject to play one of four canonical games (the dictator game, ultimatum game, trust game and public goods game), either with or without stakes. In all eight conditions, subjects received a $0.40 show-up fee. In the four stakes conditions, subjects had the opportunity to earn up to an additional $1.00 based on their score in the game (at an exchange rate of 1 point = 1 cent). In the four no-stakes conditions, subjects were informed of the outcome of the game, but the score in the game did not affect subjects' earnings. In all conditions, subjects had to complete a series of comprehension questions about the rules of the game and their compensation, and only subjects who answered all questions correctly were allowed to participate. We now explain the implementation details of each of the four games.

In the Dictator Game (DG), Player 1 (the dictator) chose an amount to transfer to Player 2, resulting in Player 1 receiving a score equal to the amount kept and Player 2 receiving a score equal to the amount transferred.

In the Ultimatum Game (UG), Player 1 (the proposer) chose an amount to offer to Player 2 (the responder). Player 2 could then accept, resulting in Player 2 receiving a score equal to the amount offered and Player 1 receiving a score equal to the remainder; or reject, resulting in both players receiving a score of 0. We used the strategy method to elicit Player 2 decisions (i.e., Player 2 indicated whether she would accept or reject each possible Player 1 offer). For each Player 2 we then calculated her Minimum Acceptable Offer (MAO) as the smallest offer she was willing to accept. As in the physical lab, some subjects were ‘inconsistent’ in that they were willing to accept some of the lower offers, but rejected higher offers (that is, they did not have a threshold for acceptance) [20]. When calculating MAOs, we did not include such inconsistent players. We also examined how the addition of stakes changed the fraction of inconsistent players, as well as the rejection rates for each possible Player 1 offer when including all Player 2s (consistent and inconsistent).
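The consistency check and MAO calculation described above can be sketched as follows. This is a minimal illustration rather than the analysis code used in the study: the function names are hypothetical, and the offer grid in the usage example (0 to 100 in steps of 10) is an assumption made only for demonstration.

```python
from typing import Dict, List, Optional


def is_consistent(responses: Dict[int, bool]) -> bool:
    """Return True if the responder has an acceptance threshold.

    `responses` maps each possible offer to True (accept) or False (reject).
    A responder is inconsistent if she rejects an offer that is higher than
    some offer she accepts.
    """
    accepted_seen = False
    for offer in sorted(responses):
        if responses[offer]:
            accepted_seen = True
        elif accepted_seen:
            return False
    return True


def minimum_acceptable_offer(responses: Dict[int, bool]) -> Optional[int]:
    """Smallest accepted offer, or None if the responder is inconsistent
    (excluded from the MAO analysis) or rejects every offer."""
    if not is_consistent(responses):
        return None
    accepted = [offer for offer, accept in responses.items() if accept]
    return min(accepted) if accepted else None


def rejection_rates(all_responses: List[Dict[int, bool]]) -> Dict[int, float]:
    """Fraction of responders (consistent or not) rejecting each offer."""
    offers = sorted(all_responses[0])
    n = len(all_responses)
    return {o: sum(not r[o] for r in all_responses) / n for o in offers}


# Hypothetical offer grid, assumed for illustration only.
grid = range(0, 101, 10)
threshold = {o: o >= 30 for o in grid}      # accepts everything from 30 up
hump = {o: 20 <= o <= 60 for o in grid}     # accepts 20-60 but rejects 70+
```

Here `threshold` is a consistent responder with an MAO of 30, while `hump` is inconsistent and so contributes to the rejection rates but not to the MAO comparison.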

In the Trust Game (TG), Player 1 (the investor) chose an amount to transfer to Player 2 (the trustee). The transferred amount was multiplied by 3 and given to the trustee, who then chose a fraction of it to return to Player 1. As a result, Player 1 received a score equal to the amount not transferred plus the amount returned, and Player 2 received a score equal to the tripled transfer minus the amount returned. We used the strategy method to elicit Player 2 decisions (i.e., Player 2 indicated the fraction she would return for each possible Player 1 transfer).
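As a rough sketch of the trust game mechanics just described, the function below triples the transfer and splits it according to the fraction returned. Only the multiplier of 3 comes from the text. The function name, the investor's endowment used in the example, and the assumption that the trustee starts with no endowment of her own are all hypothetical.

```python
from typing import Tuple


def trust_game_scores(
    endowment: float,
    transfer: float,
    fraction_returned: float,
    multiplier: float = 3,
) -> Tuple[float, float]:
    """Return (investor_score, trustee_score).

    Assumes, for illustration, that the trustee has no endowment of her own.
    """
    if not 0 <= transfer <= endowment:
        raise ValueError("transfer must lie between 0 and the endowment")
    if not 0 <= fraction_returned <= 1:
        raise ValueError("fraction_returned must lie between 0 and 1")
    received = multiplier * transfer          # amount handed to the trustee
    returned = fraction_returned * received   # amount sent back to the investor
    investor_score = endowment - transfer + returned
    trustee_score = received - returned
    return investor_score, trustee_score


# Hypothetical endowment of 100 points: sending half and getting half of the
# tripled amount back leaves the investor better off than keeping everything.
example = trust_game_scores(endowment=100, transfer=50, fraction_returned=0.5)
```

Under the strategy method, one would evaluate such a function once per possible Player 1 transfer, using the fraction Player 2 reported for that transfer.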

In the Public Goods Game (PGG), four players each received an initial endowment of 40 units and simultaneously chose an amount $c_i$ ($0 \le c_i \le 40$) to contribute to a public pool. The total amount in the pool was multiplied by 2 and then divided equally among all group members. As a result, player $i$ received the score $40 - c_i + 0.5 \sum_{j=1}^{4} c_j$.
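The public goods payoff rule can be illustrated with a short sketch. The endowment of 40, the multiplier of 2 and the group size of four are taken from the description above; the function name is hypothetical.

```python
from typing import List, Sequence


def pgg_scores(
    contributions: Sequence[float],
    endowment: float = 40,
    multiplier: float = 2,
) -> List[float]:
    """Score of each player: what she kept plus an equal share of the
    multiplied pool."""
    for c in contributions:
        if not 0 <= c <= endowment:
            raise ValueError("each contribution must lie between 0 and the endowment")
    share = multiplier * sum(contributions) / len(contributions)
    return [endowment - c + share for c in contributions]


full_cooperation = pgg_scores([40, 40, 40, 40])   # everyone contributes all
one_free_rider = pgg_scores([0, 40, 40, 40])      # player 0 contributes nothing
no_cooperation = pgg_scores([0, 0, 0, 0])         # nobody contributes
```

With four players and a multiplier of 2, each contributed unit returns 0.5 units to the contributor. Full cooperation yields 80 points each, universal free-riding yields 40 each, and a lone free-rider among full contributors earns 100 points, which corresponds to the $1.00 maximum at 1 point = 1 cent.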

In the DG, UG and TG experiments, each subject played both roles, first making a decision as Player 1, and then making a decision as Player 2. Subjects were not informed that they would subsequently play as Player 2 when making their Player 1 decisions. Unless otherwise noted, all statistical tests use the Wilcoxon Rank-sum test.
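For readers unfamiliar with the rank-sum test, the following is a minimal sketch of a two-sided version using the normal approximation. It is not the code used for the analyses reported here: the function names are hypothetical, tied observations receive average ranks, and no tie or continuity correction is applied to the variance.

```python
import math
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks, with tied values sharing the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # average of positions i..j, converted to 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def rank_sum_test(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Return (z, two-sided p) comparing sample a with sample b."""
    n1, n2 = len(a), len(b)
    ranks = average_ranks(list(a) + list(b))
    w = sum(ranks[:n1])                              # rank sum of the first sample
    mean_w = n1 * (n1 + n2 + 1) / 2                  # expected rank sum under H0
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)   # its standard deviation
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2))             # two-sided normal tail area
    return z, p


# Made-up data, for illustration only.
z_same, p_same = rank_sum_test([1, 2, 3, 4, 5], [1, 2, 3, 4, 5])
z_shift, p_shift = rank_sum_test(list(range(1, 11)), list(range(11, 21)))
```

In practice a statistics package would normally be used, since choices on a coarse grid produce many ties and small samples may call for an exact test.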

Results and Discussion

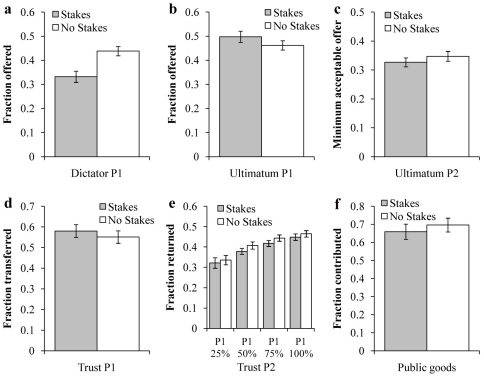

As can be seen in Figure 1, introducing stakes altered the distribution of offers in the DG, significantly reducing the average offer (no-stakes = 43.8%, stakes = 33.2%). In the UG, we found a marginally significant positive effect of stakes on Player 1 proposals (no-stakes = 46.1%, stakes = 49.7%). Given the small effect size and borderline significant p-value, we conclude that stakes have little effect on Player 1 offers in the UG. We also find no significant effect on Player 2 MAOs in the UG (excluding inconsistent players). However, we do find a significantly higher proportion of inconsistent Player 2s in the no-stakes condition compared to the stakes condition. As a result, we also find a significant effect of stakes on Player 2 rejection rates for some Player 1 offers in the UG when including inconsistent players (for the 30% offer, and for all offers above 60%). There was no significant effect of stakes on transfers in the TG, back-transfers in the TG (for all possible Player 1 transfers), or contributions in the PGG.

We also test whether the variance in behavior differs between the stakes and no-stakes conditions using Levene's F-test. Consistent with our results above, we find that the variance in DG donations is significantly smaller in the stakes condition compared to the no-stakes condition, but that adding stakes did not have an effect on the variance of offers and MAOs in the UG, transfers and back-transfers in the TG (for back-transfers on all Player 1 transfers, except for the transfer of 25%, where the variance of Player 2 back-transfers in the stakes condition was marginally higher), and contributions in the PGG.
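To make the variance comparison concrete, here is a minimal sketch of the Levene statistic, assuming the classical mean-centered form. The function name is hypothetical and the data in the example are made up. The sketch returns only the test statistic; the p-value would come from an F distribution with (k − 1, N − k) degrees of freedom, which is left to a statistics package.

```python
from typing import Sequence


def levene_w(*groups: Sequence[float]) -> float:
    """Levene's W statistic using absolute deviations from group means."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)

    # Absolute deviation of every observation from its own group mean.
    deviations = []
    for g in groups:
        mean_g = sum(g) / len(g)
        deviations.append([abs(y - mean_g) for y in g])

    group_means = [sum(z) / len(z) for z in deviations]
    grand_mean = sum(sum(z) for z in deviations) / n_total

    # One-way ANOVA on the absolute deviations.
    between = sum(
        len(z) * (m - grand_mean) ** 2 for z, m in zip(deviations, group_means)
    )
    within = sum(
        (v - m) ** 2 for z, m in zip(deviations, group_means) for v in z
    )
    if within == 0:
        raise ValueError("no within-group variation in the absolute deviations")
    return (n_total - k) / (k - 1) * between / within


# Made-up samples: the second has twice the spread of the first.
w_unequal = levene_w([1, 2, 3, 4, 5], [2, 4, 6, 8, 10])
# Made-up samples with identical spread but different location.
w_equal = levene_w([1, 2, 3], [11, 12, 13])
```

A larger W indicates that the groups differ more in spread; a shift in location alone, as in the second example, leaves W at zero.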

Figure 1. The effect of stakes on average behavior across games.

Furthermore, we find that the average behavior on MTurk is in line with behavior observed previously in the physical laboratory with higher stakes. The average donation of 33.2% in our $1 stakes DG is close to the average donation of 28.4% aggregated over more than 616 DG treatments as reviewed in a recent meta-analysis [21].

Since there was little difference in behavior between the stakes and no-stakes conditions in the UG, TG and PGG, we compare the aggregated averages from both conditions in these games to prior work. Considering the UG, it has been shown that using the strategy method significantly affects behavior [22], and to our knowledge no meta-analyses exist which focus on strategy-method UGs. Therefore, we examine behavior in various previous UG experiments that used the strategy method [23]–[29], and compare the range of outcomes to what we observe in our data. The average Player 1 offer of 48.1% in our experiment is within the range of behavior observed in those studies (35.4%–48.4%), as is our average Player 2 MAO of 33.7% (compared to the range of previous MAOs of 19.2%–36.0%).

Turning now to the TG, we find that the average percentage sent by Player 1 in our experiment (56.6%) is quite close to the average value of 50.9% reported in a recent trust game meta-analysis aggregating over approximately 80 experiments [30]. The fraction returned by Player 2 of 40.1% in our experiment is also close to the average returned fraction of 36.5% from the same meta-analysis.

For the public goods game, it is important to compare our results to those obtained in previous experiments using the same Marginal Per-Capita Return value (MPCR = 0.5 in our study). In the absence of a meta-analysis that breaks contributions down by MPCR, we compare the average contribution level in our experiment to the range of average contributions observed in various previous studies using the same MPCR [31]–[36]. The average fraction of the endowment contributed to the public good in our study of 67.7% is within the range observed in these studies (40%–70.4%).

To conclude, we have assessed the effect of $1 stakes compared to no stakes in economic games run in the online labor market Amazon Mechanical Turk. The results are generally consistent with what is observed in the physical laboratory, both in terms of the effect of adding stakes, and the average behavior in the stakes conditions. These experiments help alleviate concerns about the validity of economic game experiments conducted on MTurk and demonstrate the applicability of this framework for conducting large scale scientific studies.

Acknowledgments

We gratefully acknowledge helpful input and comments from Yochai Benkler and Anna Dreber.

Footnotes

Competing Interests: The authors have declared that no competing interests exist.

Funding: This work was funded by a research grant from Harvard University's Berkman Center for Internet and Society (http://cyber.law.harvard.edu) and DR is supported by a grant from the John Templeton Foundation (http://www.templeton.org). YG is supported by FP7 Marie Curie Reintegration Grant no. 268362. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Dreber A, Gerdes C, Gransmark P. 2010. Beauty queens and battling knights: Risk taking and attractiveness in chess.

- 2. Horton J. Employer expectations, peer effects and productivity: Evidence from a series of field experiments. SSRN eLibrary. 2010.

- 3. Lawson C, Lenz G, Baker A, Myers M. Looking like a winner: Candidate appearance and electoral success in new democracies. World Politics. 2010;62:561–593.

- 4. Rand D, Nowak M. The evolution of antisocial punishment in optional public goods games. Nature Communications. 2011;2:434. doi: 10.1038/ncomms1442.

- 5. Rand D, Arbesman S, Christakis N. Dynamic social networks promote cooperation in experiments with humans. Proceedings of the National Academy of Sciences. 2011;108:19193–19198. doi: 10.1073/pnas.1108243108.

- 6. Suri S, Watts D. Cooperation and contagion in web-based, networked public goods experiments. PLoS One. 2011;6:e16836. doi: 10.1371/journal.pone.0016836.

- 7. Chandler D, Kapelner A. Breaking monotony with meaning: Motivation in crowdsourcing markets. 2010. University of Chicago mimeo.

- 8. Horton J, Rand D, Zeckhauser R. The online laboratory: Conducting experiments in a real labor market. 2010. Technical report, National Bureau of Economic Research.

- 9. Paolacci G, Chandler J, Ipeirotis P. Running experiments on Amazon Mechanical Turk. Judgment and Decision Making. 2010;5:411–419.

- 10. Buhrmester M, Kwang T, Gosling S. Amazon's Mechanical Turk: A new source of inexpensive, yet high-quality, data? Perspectives on Psychological Science. 2011;6:3. doi: 10.1177/1745691610393980.

- 11. Mason W, Suri S. Conducting behavioral research on Amazon's Mechanical Turk. Behavior Research Methods. 2010:1–23. doi: 10.3758/s13428-011-0124-6.

- 12. Rand D. The promise of Mechanical Turk: How online labor markets can help theorists run behavioral experiments. Journal of Theoretical Biology. 2011. doi: 10.1016/j.jtbi.2011.03.004.

- 13. Forsythe R, Horowitz J, Savin N, Sefton M. Fairness in simple bargaining experiments. Games and Economic Behavior. 1994;6:347–369.

- 14. Carpenter J, Verhoogen E, Burks S. The effect of stakes in distribution experiments. Economics Letters. 2005;86:393–398.

- 15. Cameron L. Raising the stakes in the ultimatum game: Experimental evidence from Indonesia. Economic Inquiry. 1999;37:47–59.

- 16. Hoffman E, McCabe K, Smith V. On expectations and the monetary stakes in ultimatum games. International Journal of Game Theory. 1996;25:289–301.

- 17. Andersen S, Ertac S, Gneezy U, Hoffman M, List J. Stakes matter in ultimatum games. American Economic Review. 2011;101:3427–39.

- 18. Kocher M, Martinsson P, Visser M. Does stake size matter for cooperation and punishment? Economics Letters. 2008;99:508–511.

- 19. Johansson-Stenman O, Mahmud M, Martinsson P. Does stake size matter in trust games? Economics Letters. 2005;88:365–369.

- 20. Bahry D, Wilson R. Confusion or fairness in the field? Rejections in the ultimatum game under the strategy method. Journal of Economic Behavior & Organization. 2006;60:37–54.

- 21. Engel C. Dictator games: A meta study. Experimental Economics. 2010:1–28.

- 22. Oosterbeek H, Sloof R, Van De Kuilen G. Cultural differences in ultimatum game experiments: Evidence from a meta-analysis. Experimental Economics. 2004;7:171–188.

- 23. Güth W, Schmittberger R, Schwarze B. An experimental analysis of ultimatum bargaining. Journal of Economic Behavior & Organization. 1982;3:367–388.

- 24. Kahneman D, Knetsch J, Thaler R. Fairness and the assumptions of economics. Journal of Business. 1986:285–300.

- 25. Larrick R, Blount S. The claiming effect: Why players are more generous in social dilemmas than in ultimatum games. Journal of Personality and Social Psychology. 1997;72:810.

- 26. Solnick S. Gender differences in the ultimatum game. Economic Inquiry. 2001;39:189–200.

- 27. Straub P, Murnighan J. An experimental investigation of ultimatum games: Information, fairness, expectations, and lowest acceptable offers. Journal of Economic Behavior & Organization. 1995;27:345–364.

- 28. Wallace B, Cesarini D, Lichtenstein P, Johannesson M. Heritability of ultimatum game responder behavior. Proceedings of the National Academy of Sciences. 2007;104:15631. doi: 10.1073/pnas.0706642104.

- 29. Zak P, Stanton A, Ahmadi S. Oxytocin increases generosity in humans. PLoS One. 2007;2:e1128. doi: 10.1371/journal.pone.0001128.

- 30. Johnson N, Mislin A. Cultures of kindness: A meta-analysis of trust game experiments. 2008. Available at Social Science Research Network: http://ssrn.com/abstract=1315325.

- 31. Andreoni J. Why free ride?: Strategies and learning in public goods experiments. Journal of Public Economics. 1988;37:291–304.

- 32. Andreoni J. Warm-glow versus cold-prickle: the effects of positive and negative framing on cooperation in experiments. The Quarterly Journal of Economics. 1995;110:1.

- 33. Andreoni J. Cooperation in public-goods experiments: kindness or confusion? The American Economic Review. 1995:891–904.

- 34. Brandts J, Schram A, Institute T. Cooperative gains or noise in public goods experiments. Tinbergen Institute. 1996.

- 35. Keser C, van Winden F. 1997. Partners contribute more to public goods than strangers.

- 36. Weimann J. Individual behaviour in a free riding experiment. Journal of Public Economics. 1994;54:185–200.