Abstract

Motivated by the analysis of gene expression data measured over different tissues or over time, we consider matrix-valued random variables and the matrix normal distribution, where the precision matrices have a graphical interpretation for genes and tissues, respectively. We present an l1 penalized likelihood method and an efficient coordinate descent-based computational algorithm for model selection and estimation in such matrix normal graphical models (MNGMs). We provide theoretical results on the asymptotic distributions, the rates of convergence of the estimates and the sparsistency, allowing both the numbers of genes and tissues to diverge as the sample size goes to infinity. Simulation results demonstrate that the MNGMs can lead to better estimates of the precision matrices and better identification of the graph structures than the standard Gaussian graphical models. We illustrate the methods with an analysis of mouse gene expression data measured over ten different tissues.

Keywords: Gaussian graphical model, Gene networks, High dimensional data, l1 penalized likelihood, Matrix normal distribution, Sparsistency

1. Introduction

Gaussian graphical models (GGMs) provide natural tools for modeling the conditional independence relationships among a set of random variables [1, 2]. Many methods for estimating the standard GGMs have been developed in recent years, especially in high-dimensional settings. Meinshausen and Bühlmann [3] took a neighborhood selection approach to this problem by fitting an l1 penalized regression or Lasso [4] to each variable using the other variables as predictors. They showed that this neighborhood selection procedure consistently estimates the set of non-zero elements of the precision matrix. Other authors have proposed algorithms for the exact maximization of the l1-penalized log-likelihood. Yuan and Lin [5], Banerjee et al. [6] and Dahl et al. [7] adapted interior point optimization methods to solve this problem. Based on the work of Banerjee et al. [6] and a block-wise coordinate descent algorithm, Friedman et al. [8] developed the graphical Lasso (glasso) for sparse inverse covariance estimation, which is computationally very efficient even when the dimension is greater than the sample size. Yuan [9] developed a linear programming procedure for high dimensional inverse covariance matrix estimation and obtained oracle inequalities for the estimation error in terms of several matrix norms. Some theoretical properties of methods of this type have also been developed by Yuan and Lin [5], Ravikumar et al. [10], Rothman et al. [11] and Lam and Fan [12]. Cai et al. [13] developed a constrained l1 minimization approach to sparse precision matrix estimation, extending the idea of the Dantzig selector [14] developed for sparse high dimensional regressions.

The standard likelihood framework for building Gaussian graphical models assumes that samples are independent and identically distributed from a multivariate Gaussian distribution. This assumption is often too restrictive in applications. For example, in genomics, gene expression data of p genes collected over q different tissues from the same subject are often correlated. For a given sample, let Y be the p × q matrix of the expression data, where the jth column corresponds to the expression data of p genes measured in the jth tissue, and the ith row corresponds to gene expressions of the ith gene over q different tissues. Instead of assuming that the columns or rows are independent, we assume that the matrix variate random variable Y follows a matrix normal distribution [15, 1, 16], where both row and column precision matrices can be specified. The matrix-variate normal distribution has been studied in the analysis of multivariate linear models under the assumption of independence and homoscedasticity for the structure of the among-row and among-column covariance matrices of the observation matrix [17, 18]. Such a model has also been applied to spatio-temporal data [19, 20]. In genomics, Teng and Huang [21] proposed to use the Kronecker product matrix to model gene-experiment interactions, which leads to a gene expression matrix following a matrix normal distribution. The gene expression matrix measured over multiple tissues is transposable, meaning that potentially both the rows and the columns are correlated. Such a matrix-valued normal distribution was also used in Allen and Tibshirani [22] and Efron [23] for modeling gene expression data in order to account for gene expression dependency across different experiments. Dutilleul [24] developed the maximum likelihood estimation (MLE) algorithm for the matrix normal distribution. Mitchell et al. [25] developed a likelihood ratio test for separability of the covariances. Muralidharan [26] used a matrix normal framework for detecting column dependence when rows are correlated and estimating the strength of the row correlation.

The precision matrices of the matrix normal distribution provide the conditional independence structures of the row and column variables [1], where the non-zero off-diagonal elements of the precision matrices correspond to conditional dependencies among the elements in a row or column of the matrix normal distribution. The matrix normal models with specified non-zero elements of the precision matrices define the matrix normal graphical models (MNGMs). This is analogous to the relationship between the Gaussian graphical model and the precision matrix of a multivariate normal distribution. Despite the flexibility of the matrix normal distribution and the MNGMs in modeling transposable data, methods for model selection and estimation of such models have not been fully developed, especially in high dimensional settings. Wang and West [27] developed a Bayesian approach for the MNGMs using a Markov chain Monte Carlo sampling scheme that employs an efficient method for simulating hyper-inverse Wishart variates for both decomposable and nondecomposable graphs. Allen and Tibshirani [22, 28] proposed penalized likelihood approaches for such matrix normal models, where both l1-norm and l2-norm penalty functions are used on the precision matrices.

The focus of this paper is to develop a model selection and estimation method for the MNGMs based on an l1 penalized likelihood approach under the assumption that both the row and column precision matrices are sparse. Our penalized estimation method is the same as that proposed in [22, 29, 28] when the l1 penalty is used. Allen and Tibshirani [22, 28] only considered the setting in which a single matrix-variate normal observation is available and used the estimated covariance matrices for imputing the missing data and for de-correlating the noise in the underlying data. We focus on evaluating how well such an l1 penalized estimation method recovers the underlying graphical structures that correspond to the row and column precision matrices when we have n i.i.d. samples from a matrix normal distribution. In addition, we provide asymptotic justification of the estimates and show that the estimates enjoy asymptotic and oracle properties similar to those of the penalized estimates for the standard GGMs [30, 12, 5], even when the dimensions p = pn and q = qn diverge as the number of observations n → ∞. Furthermore, if consistent estimates of the precision matrices are available and are used in the adaptive l1 penalty functions, the resulting estimates have the property of sparsistency.

The rest of the paper is organized as follows. We introduce the MNGMs as motivated by analysis of gene expression data across multiple tissues in Section 2. In Section 3 we present an l1 penalized likelihood estimate of such an MNGM and an iterative coordinate descent procedure for the optimization. We present the asymptotic properties of the estimates in Section 4, both in the classic setting where the dimensions are fixed and in the setting where the dimensions are allowed to diverge as the sample size goes to infinity. In Section 5 we present simulation results and comparisons with the standard Gaussian graphical model. We present an application of the MNGM in Section 6 to an analysis of mouse gene expression data measured over 10 different tissues. Finally, in Section 7 we give a brief discussion. The proofs of all the theorems are given in the Appendix.

2. Matrix Normal Graphical Model for Multi-tissue Gene Expression Data

We consider the gene expression data measured over different tissues. Let Y be the random p × q matrix of the gene expression levels of p genes over q tissues. Let vec(A) be the vectorization of a matrix A obtained by stacking the columns of the matrix A on top of one another. Instead of assuming that the expression levels are independent over different tissues, following [21], we can model this gene expression matrix as

$$Y = G + T + I_{GT} + \varepsilon, \qquad (1)$$

where G and T are expected (constant) effects from the genes and tissues respectively, IGT are the interaction effects that are assumed to be random with vec(IGT) following a multivariate normal distribution with zero means and a covariance matrix V ⊗ U, where ⊗ denotes the Kronecker product, the covariance matrices U and V respectively represent the gene and tissue dependencies, and ε represents small random normal noises with zero means arising from all nuisance sources. With negligible nuisance effects, vec(Y) follows a multivariate normal distribution with means vec(M) = vec(G + T) and a covariance matrix V ⊗ U [21].

Treating the data Y as a matrix-valued random variable, we say Y follows a matrix normal distribution, if Y has a density function

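$$p(Y \mid M, U, V) = k(U, V)\exp\left\{-\frac{1}{2}\,\mathrm{tr}\left[V^{-1}(Y - M)^{T}U^{-1}(Y - M)\right]\right\}, \qquad (2)$$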

where $k(U, V) = (2\pi)^{-pq/2}|U|^{-q/2}|V|^{-p/2}$ is the normalizing constant, M is the mean matrix, U is the row covariance matrix and V is the column covariance matrix. This definition is equivalent to the definition via the Kronecker product [31, Sections 8.8 and 9.2]. Specifically,

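$$Y \sim MN_{p,q}(M; U, V) \iff \mathrm{vec}(Y) \sim N_{pq}\left(\mathrm{vec}(M),\; V \otimes U\right). \qquad (3)$$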

We denote the corresponding precision matrices as $A = U^{-1}$ and $B = V^{-1}$ for U and V, respectively. This model assumes a particular decomposable covariance matrix for vec(Y) that is called separable in the geostatistics context [32]. The parameters U and V are defined only up to a positive multiplicative constant, since (cU, V/c) gives the same distribution for any c > 0. We can set b11, the (1, 1) element of B, to any positive constant to make the parameters identifiable.
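As a quick numerical check of the equivalence between the trace form of the density and the Kronecker-product form, the following NumPy sketch evaluates both quadratic forms and both log-determinants on arbitrary test matrices. The helper `random_spd`, the dimensions and the random inputs are illustrative assumptions and are not part of the original analysis.

```python
import numpy as np

def random_spd(d, rng):
    """Return a random symmetric positive definite d x d matrix (illustrative helper)."""
    G = rng.standard_normal((d, d))
    return G @ G.T + d * np.eye(d)

rng = np.random.default_rng(0)
p, q = 4, 3
U, V = random_spd(p, rng), random_spd(q, rng)   # row and column covariances
M = rng.standard_normal((p, q))                 # mean matrix
Y = rng.standard_normal((p, q))                 # an arbitrary evaluation point

A, B = np.linalg.inv(U), np.linalg.inv(V)       # precision matrices
R = Y - M

# Quadratic form of the matrix normal density: tr{B R^T A R}
quad_trace = np.trace(B @ R.T @ A @ R)

# Same quantity for vec(Y) ~ N(vec(M), V kron U), with column-stacking vec
r = R.reshape(-1, order="F")
quad_kron = r @ np.linalg.inv(np.kron(V, U)) @ r

# Log-determinant identity behind the normalizing constant k(U, V):
# log|V kron U| = q log|U| + p log|V|
logdet_kron = np.linalg.slogdet(np.kron(V, U))[1]
logdet_sep = q * np.linalg.slogdet(U)[1] + p * np.linalg.slogdet(V)[1]
```

Both pairs of quantities agree up to floating-point error, which is the separability property that the rest of the section relies on.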

The following proposition shows that there is a graphical model interpretation for the two precision matrices A and B in the matrix normal model (2).

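Proposition 2.1. Assume that Y ~ MNp,q(M; U, V). If we partition the columns of Y as Y = (y1, ···, yq), then it holds for γ, μ ∈ Γ = {1, ···, q} with γ ≠ μ that

$$y_{\gamma} \perp\!\!\!\perp y_{\mu} \mid y_{\Gamma\setminus\{\gamma,\mu\}} \iff b_{\gamma\mu} = 0, \qquad (4)$$

where $B = \{b_{\alpha\beta}\}_{\alpha,\beta\in\Gamma} = V^{-1}$ is the column precision matrix of the distribution; similarly, if we partition the rows of Y as Y = (y1, ···, yp)T, then it holds for δ, η ∈ Ξ = {1, ···, p} with δ ≠ η that

$$y_{\delta} \perp\!\!\!\perp y_{\eta} \mid y_{\Xi\setminus\{\delta,\eta\}} \iff a_{\delta\eta} = 0, \qquad (5)$$

where $A = \{a_{\delta\eta}\}_{\delta,\eta\in\Xi} = U^{-1}$ is the row precision matrix of the distribution.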

This proposition is based on a proposition in Lauritzen [1]. A detailed proof can be found in the Appendix. Without loss of generality, we assume M = 0 in this paper since it can be easily estimated.

3. l1-Penalized Maximum Likelihood Estimation of the Precision Matrices

We propose to estimate the precision matrices A = U−1, B = V−1 in model (2) by maximizing a penalized likelihood function. Since for any c > 0, p(Y | A, B) = p(Y | cA, B/c), A and B are not uniquely identified. We set b11 = 1 for the purpose of parameter identification. We propose to estimate A and B by minimizing the following penalized negative log-likelihood function

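$$\Phi(A, B) = -\frac{1}{p}\log|A| - \frac{1}{q}\log|B| + \frac{1}{npq}\sum_{k=1}^{n}\mathrm{tr}\left(A Y_k B Y_k^{T}\right) + \sum_{i\neq j} p_{\lambda_{ij}}(a_{ij}) + \sum_{i\neq j} p_{\rho_{ij}}(b_{ij}), \qquad (6)$$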

where $p_{\lambda_{ij}}(\cdot)$ is the penalty function for the element aij of A with tuning parameter λij, while $p_{\rho_{ij}}(\cdot)$ is the corresponding penalty function for bij with tuning parameter ρij. We consider both the l1 penalty, with $p_{\lambda_{ij}}(a_{ij}) = \lambda|a_{ij}|$ and $p_{\rho_{ij}}(b_{ij}) = \rho|b_{ij}|$, and the adaptive l1 penalty, with $p_{\lambda_{ij}}(a_{ij}) = \lambda|\tilde{a}_{ij}|^{-\gamma_1}|a_{ij}|$ and $p_{\rho_{ij}}(b_{ij}) = \rho|\tilde{b}_{ij}|^{-\gamma_2}|b_{ij}|$, where Ã = {ãij} and B̃ = {b̃ij} are consistent estimates of A and B, and γ1 > 0 and γ2 > 0 are two constants.

It is easy to check that the objective function (6) is a bi-convex function in A and B. We propose the following iterative procedure to minimize this function:

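1. Initialization: $\hat{B}^{(0)} = I_q$.

2. In the ith step, given the current estimate of B, $\hat{B}^{(i)}$, we update A by

   $$\hat{A}^{(i+1)} = \arg\min_{A}\left\{\frac{1}{p}\mathrm{tr}(A\tilde{U}) - \frac{1}{p}\log|A| + \sum_{s\neq t} p_{\lambda_{st}}(a_{st})\right\}, \quad\text{where } \tilde{U} = \frac{1}{nq}\sum_{k=1}^{n} Y_k \hat{B}^{(i)} Y_k^{T}. \qquad (7)$$

3. In the (i+1)th step, given the current estimate of A, $\hat{A}^{(i+1)}$, we update B by

   $$\hat{B}^{(i+1)} = \arg\min_{B}\left\{\frac{1}{q}\mathrm{tr}(B\tilde{V}) - \frac{1}{q}\log|B| + \sum_{s\neq t} p_{\rho_{st}}(b_{st})\right\}, \quad\text{where } \tilde{V} = \frac{1}{np}\sum_{k=1}^{n} Y_k^{T} \hat{A}^{(i+1)} Y_k. \qquad (8)$$

4. Iterate steps 2 and 3 until convergence.

5. Scale (Â, B̂) = (Â/c, cB̂) such that b̂11 = 1.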

Optimizations (7) and (8) can be solved using the block coordinate descent algorithm in the same way as that developed for estimating the precision matrix in standard Gaussian graphical models [8]. We use the program glasso [8] in this paper for these optimizations when the l1 or the adaptive l1 penalty functions are used. The glasso algorithm guarantees that the estimates  and B̂ are positive definite.
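The alternating structure of this procedure can be sketched in NumPy for the unpenalized special case (λ = ρ = 0), in which each update reduces to a matrix inverse and the iteration becomes a flip-flop maximum likelihood scheme in the spirit of the MLE algorithm cited in the Introduction [24]. This is a simplified illustration, not the penalized estimator itself: in the penalized case each inverse would be replaced by a graphical lasso solve applied to the same weighted scatter matrix. The function name `flip_flop_mle`, the stopping rule and the simulated inputs are assumptions made for this example.

```python
import numpy as np

def flip_flop_mle(Y, n_iter=100, tol=1e-10):
    """Alternating estimation of row/column precision matrices (unpenalized case).

    Y has shape (n, p, q); observations are assumed to have mean zero.
    Requires n*q >= p and n*p >= q so that both scatter matrices are invertible.
    """
    n, p, q = Y.shape
    A, B = np.eye(p), np.eye(q)          # initialization: B^(0) = I_q
    for _ in range(n_iter):
        # Update A given B: scatter matrix (1/(nq)) sum_k Y_k B Y_k^T
        S_row = np.einsum("kij,jl,kml->im", Y, B, Y) / (n * q)
        A_new = np.linalg.inv(S_row)     # a glasso solve would replace this inverse
        # Update B given A: scatter matrix (1/(np)) sum_k Y_k^T A Y_k
        S_col = np.einsum("kji,jl,klm->im", Y, A_new, Y) / (n * p)
        B_new = np.linalg.inv(S_col)
        change = max(np.abs(A_new - A).max(), np.abs(B_new - B).max())
        A, B = A_new, B_new
        if change < tol:
            break
    c = B[0, 0]                          # rescale so that b_11 = 1
    return A * c, B / c

# Illustrative usage on simulated data (all values below are assumptions)
rng = np.random.default_rng(1)
U0 = np.array([[1.0, 0.5, 0.0], [0.5, 1.0, 0.3], [0.0, 0.3, 1.0]])
V0 = np.array([[1.0, 0.4], [0.4, 1.0]])
Z = rng.standard_normal((2000, 3, 2))
Y = np.linalg.cholesky(U0) @ Z @ np.linalg.cholesky(V0).T   # Y_k ~ MN(0; U0, V0)
A_hat, B_hat = flip_flop_mle(Y)
```

Because A and B are identified only up to the scaling (cA, B/c), a natural way to compare the output with the truth is through the Kronecker product of the two estimates, which is invariant to c.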

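Note that in Step 5 of the algorithm, we rescale the A and B matrices to ensure that b̂11 = 1. However, when the l1 or the adaptive l1 penalty functions are used, the solution to (6) is always unique in the sense that for a given λ and ρ, there is a unique scaling factor c*,

$$c^{*} = \sqrt{\frac{\rho\,\|B_0\|_1}{\lambda\,\|A_0\|_1}},$$

in the equivalence class $\{(cA_0, B_0/c): c > 0\}$ that minimizes Φ(A, B), where $\|\cdot\|_1$ is the matrix l1 norm. This can be seen by

$$\lambda\|cA_0\|_1 + \rho\|B_0/c\|_1 = c\lambda\|A_0\|_1 + \frac{\rho}{c}\|B_0\|_1 \geq 2\sqrt{\lambda\rho\,\|A_0\|_1\|B_0\|_1},$$

based on the arithmetic-geometric mean inequality. Equality holds and hence the minimum is attained when $c\lambda\|A_0\|_1 = \rho\|B_0\|_1/c$. Hence A = c* A0, B = B0/c* are uniquely determined.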
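The scaling argument can be illustrated with a few lines of Python. The sketch assumes that the l1 penalty of the rescaled pair (cA0, B0/c) equals cλ‖A0‖1 + ρ‖B0‖1/c, so that the arithmetic-geometric mean inequality gives the minimizing scale in closed form. The function names and the numerical values are hypothetical and chosen only for illustration.

```python
import math

def optimal_scale(lam, rho, norm_a0, norm_b0):
    """Scale c* minimizing c*lam*||A0||_1 + rho*||B0||_1/c over c > 0."""
    return math.sqrt(rho * norm_b0 / (lam * norm_a0))

def penalty(c, lam, rho, norm_a0, norm_b0):
    """l1 penalty of the rescaled pair (c*A0, B0/c)."""
    return c * lam * norm_a0 + rho * norm_b0 / c

# Illustrative values (assumptions, not taken from the paper)
lam, rho, norm_a0, norm_b0 = 0.1, 0.2, 8.0, 2.0
c_star = optimal_scale(lam, rho, norm_a0, norm_b0)

# Lower bound from the arithmetic-geometric mean inequality
lower_bound = 2.0 * math.sqrt(lam * rho * norm_a0 * norm_b0)

# The penalty at c* attains the bound; any other scale gives a larger value
at_optimum = penalty(c_star, lam, rho, norm_a0, norm_b0)
nearby = [penalty(c_star * f, lam, rho, norm_a0, norm_b0) for f in (0.5, 0.9, 1.1, 2.0)]
```

Since the likelihood part of the objective is unchanged along the class (cA0, B0/c), only the penalty term determines the scale.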

Finally, the tuning parameters λ and ρ in the l1 penalty functions are chosen using the cross-validated likelihood function.

4. Asymptotic Theorems

Throughout this paper, for a given p × q matrix A = (aij), we denote $\|A\| = \lambda_{\max}^{1/2}(A^{T}A)$ as the operator or spectral norm of A, $\|A\|_{\infty} = \max_{i,j}|a_{ij}|$ as the element-wise l∞ norm of A, and $|||A|||_{\infty} = \max_{i}\sum_{j}|a_{ij}|$ as the matrix l∞ norm of A. Furthermore, we use $\|A\|_F = (\sum_{i,j} a_{ij}^{2})^{1/2}$ as the Frobenius norm of A. Denote by λmin(A) and λmax(A) the smallest and largest eigenvalues of the matrix A.

4.1. Asymptotic theorems when p and q are fixed

We first consider the asymptotic distributions of the penalized maximum likelihood estimates in the setting when p and q are fixed as n → ∞. The following theorem provides the asymptotic distribution of the estimate (Â, B̂).

Theorem 1. For n independent identically distributed observations Y1, ··· Yn from a matrix normal distribution MN (0; A−1, B−1), the optimizer (Â, B̂) of the penalized negative log-likelihood function (6) with the l1 penalty functions has the following property:

If $n^{1/2}\lambda \to \lambda_0 \geq 0$ and $n^{1/2}\rho \to \rho_0 \geq 0$ as n → ∞, then

in distribution, where

in which W is a random variable such that W ~ N (0, σ2), where

This result parallels that of Yuan and Lin [5] for the l1 penalized likelihood estimate of the precision matrix in the standard Gaussian graphical model.

Suppose that we have cn-consistent estimators of A and B, denoted by Ã = (ãij)1≤i,j≤p and B̃ = (b̃ij)1≤i,j≤q, that is, cn(Ã – A) = Op(1) and cn(B̃ – B) = Op(1). We consider the penalized likelihood estimates using the adaptive l1 penalty functions

$$p_{\lambda_{ij}}(a_{ij}) = \lambda|\tilde{a}_{ij}|^{-\gamma_1}|a_{ij}|, \qquad p_{\rho_{ij}}(b_{ij}) = \rho|\tilde{b}_{ij}|^{-\gamma_2}|b_{ij}|$$

in the objective function (6), where γ1 and γ2 are two constants. The following theorem shows that the resulting estimates of the precision matrices have the oracle property that parallels that of Fan et al. [30] for the standard Gaussian graphical model.

Theorem 2. For n independent identically distributed observations Y1, ···, Yn from a matrix normal distribution MN(0; A−1, B−1), the optimizer (Â, B̂) of the objective function (6) with adaptive l1 penalty functions has the oracle property in the sense of Fan and Li [33]. That is, when n1/2λ = Op(1), n1/2ρ = Op(1), and as n → for some γ1 > 0 and γ2 > 0, then

(1). asymptotically, the estimates Â and B̂ have the same sparsity patterns as the true precision matrices A and B.

(2). the non-zero entries of  and B̂ are cn-consistent and asymptotically normal.

4.2. Asymptotic theorems when p = pn and q = qn diverge

The next two theorems provide the convergence rates and sparsistency properties of the estimates allowing p = pn, q = qn to diverge as n → ∞. We use $A_0 = (a^{0}_{ij})$ and $B_0 = (b^{0}_{kl})$ to denote the true precision matrices and $S_A = \{(i, j): a^{0}_{ij} \neq 0\}$ and $S_B = \{(k, l): b^{0}_{kl} \neq 0\}$ to denote the supports of the true matrices, respectively. Let sn1 = card(SA) – pn and sn2 = card(SB) – qn be the numbers of nonzero off-diagonal entries of A0 and B0, respectively. We assume the following regularity conditions:

(A) There exist constants ε1 and ε2 such that

(B) There exist constants ε3 and ε4 such that

(C) The tuning parameter λn satisfies

(D) The tuning parameter ρn satisfies

Conditions (A) and (B) bound uniformly the eigenvalues of A0 and B0, which facilitates the proof of consistency. These conditions are also assumed for the penalized likelihood estimation for the standard Gaussian graphical models [34, 12]. The upper bounds on λn and ρn in condition (C) and (D) are related to the control of bias due to the l1 penalty terms in the objective function [33, 35, 12].

Denote . It is easy to check that Σ0 = (vec(U))(vec(V))T = ESn. We use the double indices (i, j) and (k, l) to refer to a row or a column in Sn or Σ0. The following Lemma provides the tail probability bound of (Sn – Σ0).

Lemma 4.1. Suppose the matrix observations Yk's are i.i.d. from a matrix normal distribution, Yk ~ MN(0; U, V), and , . Then we have the tail bound:

| (9) |

for some constants C1, C2 and δ that depend on ε1, ε3 only.

In this lemma, if we choose for some M such that |t| ≤ δ, then

The next theorem provides the rates of convergence of the penalized likelihood estimates  and B̂ in terms of the Frobenius norms.

Theorem 3 (Rate of convergence). Under the regularity conditions (A)-(D), if and $q_n(p_n + s_{n1})(\log p_n + \log q_n)^{k}/n = O(1)$ for some k > 1, and $p_n(q_n + s_{n2})(\log p_n + \log q_n)^{l}/n = O(1)$ for some l > 1, then when the l1 penalty functions are used, there exists a local minimizer (Â, B̂) of (6) such that and .

Theorem 3 states explicitly how the number of nonzero elements and the dimensionality of both precision matrices affect the rates of convergence of the estimates. Since there are (qn + sn2)(pn + sn1) nonzero elements in the Kronecker product of A0 and B0 and each of them can be estimated at best with rate n−1/2, the total squared errors are at least of rate qn(pn + sn1)/n for estimating A and pn(qn + sn2)/n for estimating B. The price that we pay for high dimensionality is a logarithmic factor (log pn + log qn). The estimates Â and B̂ converge to their true values in Frobenius norm as long as qn(pn + sn1)/n and pn(qn + sn2)/n are at a rate $O((\log p_n + \log q_n)^{-l})$ for some l > 1, which decays to zero slowly. This means that in practice pnqn can be comparable to n without violating the results. Compared to the rates of convergence of the l1 penalized likelihood estimates of the precision matrix in the standard GGM [12], the convergence rates for Â and B̂ are increased by factors of qn and pn, respectively. If qn (or pn) is fixed as n → ∞, then the rate for Â (or B̂) is exactly the same as that given in [12] for the standard Gaussian graphical models.

When an adaptive l1 penalty function is used, we have the following sparsistency of the penalized estimates. Here sparsistency refers to the property that all parameters in A0 and B0 that are zero are actually estimated as zero with probability tending to one. We use Sc to denote the complement of a set S.

Theorem 4 (Sparsistency). Under the conditions given in Theorem 3, suppose the penalty functions in (6) are adaptive l1 penalties, $p_{\lambda_{ij}}(a_{ij}) = |a_{ij}|/|\tilde{a}_{ij}|^{\gamma_1}$ and $p_{\rho_{kl}}(b_{kl}) = |b_{kl}|/|\tilde{b}_{kl}|^{\gamma_2}$ for some γ1 > 0, γ2 > 0, where Ã = (ãij) and B̃ = (b̃kl) are any en- and fn-consistent estimators, i.e., en ||Ã – A0||∞ = OP(1), fn ||B̃ – B0||∞ = OP(1). For any local minimizer (Â, B̂) of (6) satisfying

and ||Â – A0||2 = OP(cn), ||B̂ – B0||2 = OP(dn) for sequences cn → 0 and dn → 0, if

| (10) |

and

| (11) |

then with probability tending to 1, âij = 0 for all $(i, j) \in S_A^{c}$ and b̂kl = 0 for all $(k, l) \in S_B^{c}$.

The sparsistency result requires a lower bound on the rates of the regularization parameters λn and ρn. On the other hand, the regularity conditions (C) and (D) impose an upper bound on λn and ρn in order to control the estimation biases. These requirements on the tuning parameters are similar to those for the GGMs. However, in the case of the matrix normal estimation, the conditions for λn depend not only on the dimension pn of A, the rate of the consistent estimator Ã and the rate of error for Â in l2 norm, but also on the dimension qn and sparsity sn2 of the matrix B0. Similarly, the conditions for ρn depend not only on the rate of B̃ and the rate of error for B̂ in l2 norm, but also on the dimension and sparsity of A0. In addition, condition (10) in the theorem, combined with the regularity condition (C), implies that

and

These are the requirements for both the rate of the consistent estimator à in its element-wise l∞ norm and rate of the operator norm of Â. Similarly, condition (11) and regularity Condition (D) imply that

and

5. Monte Carlo Simulations

5.1. Comparison candidates and measurements

In this section we present results from Monte Carlo simulations to examine the performance of the penalized likelihood method and to compare it to several naive methods for estimating the two precision matrices. The first method uses only data from one row or column in order to ensure that the observations are independent. Specifically, to estimate the row precision matrix A, we choose the ith column from every observation matrix Yk (k = 1, ···, n), denoted by yk·i. Since yk·i ~ N(M·i, viiU), we can estimate the precision matrix A up to a multiplier by fitting a standard GGM. Without loss of generality, we choose the first column yk·1 in our simulations. We call this procedure the Gaussian graphical model using the column data (GGM-C). Similarly, we can estimate the precision matrix B by choosing the first row yk1· from every Yk (k = 1, ···, n). We call this procedure the Gaussian graphical model using the row data (GGM-R). The second approach simply ignores the dependency of the data across the columns or rows: it estimates A by treating the q columns as independent observations and estimates B by treating the p rows as independent observations, using the Gaussian graphical model. We call this procedure the Gaussian graphical model assuming independence of row variables or column variables (GGM-I). For all three procedures (GGM-C, GGM-R and GGM-I), we use the glasso algorithm to estimate these two matrices. When p, q < n, we also consider the adaptive version of the glasso, where the maximum likelihood estimates are used as the initial consistent estimates of the precision matrices.
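The three naive procedures differ only in which slices of the data are passed to a standard GGM fit. A minimal NumPy sketch of that data arrangement is given below, assuming the n observation matrices are stored in an array of shape (n, p, q). The function names are hypothetical, and the subsequent glasso fit is not shown.

```python
import numpy as np

def ggm_c_samples(Y, col=0):
    """GGM-C: one column per observation matrix, an (n, p) sample for estimating A."""
    return Y[:, :, col]

def ggm_r_samples(Y, row=0):
    """GGM-R: one row per observation matrix, an (n, q) sample for estimating B."""
    return Y[:, row, :]

def ggm_i_samples(Y):
    """GGM-I: pool all columns (rows) as if they were independent observations."""
    n, p, q = Y.shape
    cols = Y.transpose(0, 2, 1).reshape(n * q, p)   # n*q pseudo-samples of length p
    rows = Y.reshape(n * p, q)                      # n*p pseudo-samples of length q
    return cols, rows

# Small deterministic example: n = 2 matrices of size p = 3 by q = 4
Y = np.arange(24, dtype=float).reshape(2, 3, 4)
first_cols = ggm_c_samples(Y)
first_rows = ggm_r_samples(Y)
pooled_cols, pooled_rows = ggm_i_samples(Y)
```

GGM-C and GGM-R keep only n observations each, whereas GGM-I uses nq or np pseudo-observations that are not actually independent.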

We compare the performance of different estimators of the precision matrices A and B by calculating different matrix norms of the estimation errors. Let ΔA = A –  and ΔB = B – B̂ be the estimation errors of the estimators  and B̂, respectively. We compare ||ΔA||∞, |||ΔA|||∞, ||ΔA|| and ||ΔA||F for Â, and ||ΔB||∞, |||ΔB|||∞, ||ΔB|| and ||ΔB||F for B̂.
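The four error measures can be computed directly with NumPy. The sketch below assumes that the matrix l∞ norm is the maximum absolute row sum, which is the usual convention; the function name `error_norms` and the example matrices are illustrative only.

```python
import numpy as np

def error_norms(true_mat, est_mat):
    """Return the four norms of the estimation error Delta = true - estimate."""
    delta = np.asarray(true_mat, dtype=float) - np.asarray(est_mat, dtype=float)
    return {
        "spectral": np.linalg.norm(delta, 2),            # largest singular value
        "matrix_linf": np.linalg.norm(delta, np.inf),    # maximum absolute row sum
        "elementwise_linf": np.abs(delta).max(),         # largest absolute entry
        "frobenius": np.linalg.norm(delta, "fro"),       # root sum of squares
    }

# Illustrative usage with a hypothetical true matrix and a zero estimate
norms = error_norms([[1.0, -2.0], [0.0, 3.0]], np.zeros((2, 2)))
```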

In order to evaluate how well different procedures recover the graphical structures defined by the precision matrices, we define a non-zero entry in a sparse precision matrix as “positive” and define the specificity (SPE), sensitivity (SEN) and Matthews correlation coefficient (MCC) scores as follows:

$$\mathrm{SPE} = \frac{TN}{TN + FP}, \qquad \mathrm{SEN} = \frac{TP}{TP + FN}, \qquad \mathrm{MCC} = \frac{TP \times TN - FP \times FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}},$$

where TP, TN, FP and FN are the numbers of true positives, true negatives, false positives and false negatives.
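These scores follow the standard definitions and can be computed from the four counts as in the short Python sketch below. The function name and the example counts are hypothetical. The MCC is returned as NaN when its denominator is zero, for instance when no edge is selected at all.

```python
import math

def graph_recovery_scores(tp, tn, fp, fn):
    """Return (specificity, sensitivity, MCC) from confusion-matrix counts."""
    spe = tn / (tn + fp)
    sen = tp / (tp + fn)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # MCC is undefined when any marginal count is zero
    mcc = (tp * tn - fp * fn) / denom if denom > 0 else float("nan")
    return spe, sen, mcc

# Illustrative counts (assumptions, not taken from the simulations)
spe, sen, mcc = graph_recovery_scores(tp=8, tn=80, fp=10, fn=2)

# Degenerate case: an empty estimated graph selects no edges
spe0, sen0, mcc0 = graph_recovery_scores(tp=0, tn=90, fp=0, fn=10)
```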

5.2. Models and data generation

We generate sparse precision matrices A and B using a similar scheme as in [36] and [30]. To be specific, our generating procedure can be described as:

where i ≠ j and δij is a Bernoulli random variable with a success probability of p+. Then the off-diagonal elements of each row aij (j = 1, ···, p and j ≠ i) are divided by 1.5Σ|ai·|1 (off-diagonal elements only). A is then symmetrized and U = A−1 is obtained. Note that the diagonal elements in U generated in this way are heterozygous. We further modify A by WA where W is a diagonal matrix. Since A generated as above is diagonally dominant, W = diag(w1, ···, wp) is generated as follows: first we choose the upper bound wmax for the wi's; here we use wmax = 1.2. Then for each j, we generate a uniformly distributed random variable r in the interval (Σi,i≠j|aij|/|ajj|, 1) and let wj = rwmax. Thus we can guarantee the diagonal dominance of the matrix WA and hence its positive definiteness. We further define U = (WA)−1. Matrices B and V are generated in a similar way.

After we generate the parameters (A, B) and (U, V), we generate the matrix normal data by first generating pq-dimensional normal vectors zk from N(0, V ⊗ U) and then rearranging them into matrices Yk such that vec(Yk) = zk for k = 1, ···, n.
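The rearrangement step can be sketched in NumPy as follows, assuming a zero mean, the column-stacking vec operator and the covariance V ⊗ U for vec(Y). The function name `sample_matrix_normal` and the small covariance matrices are assumptions made for this illustration.

```python
import numpy as np

def sample_matrix_normal(n, U, V, rng):
    """Draw n matrices Y_k with vec(Y_k) ~ N(0, V kron U); returns (Y, z)."""
    p, q = U.shape[0], V.shape[0]
    cov = np.kron(V, U)                                   # pq x pq covariance of vec(Y)
    z = rng.multivariate_normal(np.zeros(p * q), cov, size=n)
    # Undo the column-stacking vec: z[k, j*p + i] is the (i, j) entry of Y_k
    Y = z.reshape(n, q, p).transpose(0, 2, 1)
    return Y, z

# Illustrative usage with small hypothetical covariance matrices
rng = np.random.default_rng(0)
U = np.array([[1.0, 0.5, 0.0], [0.5, 1.0, 0.3], [0.0, 0.3, 1.0]])
V = np.array([[1.0, 0.4], [0.4, 1.0]])
Y, z = sample_matrix_normal(5, U, V, rng)
```

For large p and q, forming V ⊗ U explicitly becomes expensive. An equivalent draw is Y = L_U Z L_V^T, where Z has independent standard normal entries and L_U, L_V are Cholesky factors of U and V.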

In the following, let pA+ (or pB+) be the probability that an off-diagonal element of matrix A (or B) is non-zero, which measures the degree of the sparsity of the matrix. We consider five different models of different dimensions and different degrees of sparsity:

We use a 5-fold cross validation to tune the regularization parameters for Models 1-4 and 3-fold cross validation for Model 5 due to its small sample size. The simulations are repeated 50 times.

5.3. Simulation results

We present in Tables 1 and 2 the results of the three different procedures in terms of estimating the precision matrices and recovering the corresponding graphical structures when the l1 penalty functions are used. For all four models considered, we observe that the MNGM results in smaller estimation errors and better performance in identifying the graphical structures defined by the precision matrices than the naive applications of the Gaussian graphical models. This is true both for the settings when p, q < n (Models 1 and 2) and when p, q > n (Models 3 and 4). We observe that when only one row or one column is chosen from each observation and the standard GGM is used (GGM-R or GGM-C), the estimation errors are much higher than those of the MNGM or of the GGM that treats the rows or columns as independent. Similarly, both sensitivities and specificities are also lower if only data from one row or one column are used. This can be explained by the relatively small sample sizes. On the other hand, if the dependency of the columns or rows is ignored and the data of the columns or rows are treated as independent, direct application of the Gaussian graphical model (GGM-I) results in smaller specificities and more false positives. As a benchmark comparison, for Models 1 and 2, we also present in Table 1 the errors of the MLEs of A and B. It is clear that the MNGM gives better estimates than the MLEs. MLEs for Models 3 and 4 do not exist.

Table 1.

Comparison of the performance for simulated data sets of different dimensions when l1 penalty functions are used.

| Precision Matrix | Measure | MNGM | GGM-I | GGM-C/GGM-R | MLE |
|---|---|---|---|---|---|
| Model 1, n = 100, p = 30, q = 30 | | | | | |
| A | \|\|ΔA\|\| | 0.17(0.026) | 0.27(0.015) | 0.62(0.057) | 0.25(0.037) |
| | \|\|\|ΔA\|\|\|∞ | 0.35(0.042) | 0.53(0.064) | 1.23(0.133) | 0.64(0.059) |
| | \|\|ΔA\|\|∞ | 0.08(0.019) | 0.15(0.013) | 0.34(0.066) | 0.09(0.021) |
| | \|\|ΔA\|\|F | 0.43(0.051) | 0.73(0.025) | 1.73(0.130) | 0.60(0.044) |
| | SPE_A | 0.68(0.025) | 0.32(0.156) | 0.82(0.147) | |
| | SEN_A | 1.00(0.000) | 1.00(0.000) | 0.36(0.298) | |
| | MCC_A | 0.54(0.022) | 0.28(0.108) | 0.23(0.068) | |
| B | \|\|ΔB\|\| | 0.15(0.026) | 0.25(0.013) | 0.61(0.052) | 0.22(0.027) |
| | \|\|\|ΔB\|\|\|∞ | 0.32(0.044) | 0.48(0.040) | 1.28(0.100) | 0.60(0.051) |
| | \|\|ΔB\|\|∞ | 0.08(0.018) | 0.14(0.013) | 0.31(0.056) | 0.07(0.016) |
| | \|\|ΔB\|\|F | 0.38(0.049) | 0.68(0.026) | 1.64(0.099) | 0.57(0.033) |
| | SPE_B | 0.68(0.031) | 0.40(0.060) | 0.70(0.067) | |
| | SEN_B | 0.99(0.009) | 1.00(0.006) | 0.63(0.142) | |
| | MCC_B | 0.47(0.027) | 0.29(0.037) | 0.25(0.055) | |
| Model 2, n = 100, p = 80, q = 80 | | | | | |
| A | \|\|ΔA\|\| | 0.17(0.022) | 0.31(0.020) | 0.75(0.089) | 0.25(0.021) |
| | \|\|\|ΔA\|\|\|∞ | 0.37(0.030) | 0.58(0.030) | 1.26(0.089) | 0.93(0.045) |
| | \|\|ΔA\|\|∞ | 0.07(0.015) | 0.19(0.015) | 0.47(0.079) | 0.06(0.014) |
| | \|\|ΔA\|\|F | 0.67(0.082) | 1.56(0.122) | 3.30(0.515) | 0.96(0.038) |
| | SPE_A | 0.89(0.080) | 0.69(0.009) | 1.00(0.000) | |
| | SEN_A | 1.00(0.000) | 1.00(0.000) | 0.00(0.000) | |
| | MCC_A | 0.68(0.100) | 0.43(0.008) | - | |
| B | \|\|ΔB\|\| | 0.14(0.013) | 0.13(0.012) | 0.72(0.147) | 0.22(0.020) |
| | \|\|\|ΔB\|\|\|∞ | 0.45(0.047) | 0.42(0.049) | 1.45(0.117) | 0.88(0.040) |
| | \|\|ΔB\|\|∞ | 0.06(0.008) | 0.06(0.010) | 0.53(0.180) | 0.05(0.011) |
| | \|\|ΔB\|\|F | 0.56(0.023) | 0.54(0.025) | 2.97(0.499) | 0.91(0.023) |
| | SPE_B | 0.86(0.102) | 0.69(0.010) | 1.00(0.000) | |
| | SEN_B | 1.00(0.000) | 1.00(0.000) | 0.00(0.000) | |
| | MCC_B | 0.64(0.124) | 0.42(0.008) | 0.06(0.000) | |

MNGM: the matrix normal graphical model with l1 penalties; GGM-I: Gaussian graphical model treating rows or columns as independent; GGM-C/GGM-R: Gaussian graphical model that uses only data from the first column or the first row; MLE: maximum likelihood estimates. For each measurement, mean and standard deviation are calculated over 50 replications.

Table 2.

Comparison of the performance for simulated data sets of different dimensions when l1 penalty functions are used.

| Precision Matrix | Measure | MNGM | GGM-I | GGM-C/GGM-R |
|---|---|---|---|---|
| Model 3, n = 100, p = 150, q = 150 | | | | |
| A | \|\|ΔA\|\| | 0.12(0.014) | 0.31(0.013) | 0.78(0.094) |
| | \|\|\|ΔA\|\|\|∞ | 0.32(0.028) | 0.59(0.027) | 1.45(0.092) |
| | \|\|ΔA\|\|∞ | 0.05(0.011) | 0.20(0.010) | 0.49(0.074) |
| | \|\|ΔA\|\|F | 0.61(0.069) | 2.26(0.120) | 4.72(0.802) |
| | SPE_A | 0.84(0.005) | 0.80(0.004) | 1.00(0.000) |
| | SEN_A | 1.00(0.000) | 1.00(0.000) | 0.00(0.000) |
| | MCC_A | 0.45(0.006) | 0.40(0.004) | 0.05(0.022) |
| B | \|\|ΔB\|\| | 0.10(0.009) | 0.10(0.009) | 0.77(0.186) |
| | \|\|\|ΔB\|\|\|∞ | 0.29(0.022) | 0.32(0.025) | 1.38(0.208) |
| | \|\|ΔB\|\|∞ | 0.04(0.007) | 0.04(0.007) | 0.58(0.236) |
| | \|\|ΔB\|\|F | 0.53(0.025) | 0.56(0.024) | 4.25(0.856) |
| | SPE_B | 0.83(0.005) | 0.80(0.003) | 1.00(0.000) |
| | SEN_B | 1.00(0.000) | 1.00(0.000) | 0.01(0.000) |
| | MCC_B | 0.43(0.007) | 0.40(0.004) | 0.07(0.021) |
| Model 4, n = 100, p = 500, q = 500 | | | | |
| A | \|\|ΔA\|\| | 0.10(0.008) | 0.22(0.008) | 3.69(0.521) |
| | \|\|\|ΔA\|\|\|∞ | 0.27(0.018) | 0.45(0.019) | 4.23(0.502) |
| | \|\|ΔA\|\|∞ | 0.04(0.007) | 0.14(0.006) | 3.63(0.581) |
| | \|\|ΔA\|\|F | 0.95(0.078) | 2.94(0.131) | 43.68(6.153) |
| | SPE_A | 0.99(0.001) | 0.95(0.001) | 1.00(0.002) |
| | SEN_A | 1.00(0.00) | 1.00(0.00) | 0.01(0.038) |
| | MCC_A | 0.76(0.008) | 0.52(0.003) | 0.13(0.030) |
| B | \|\|ΔB\|\| | 0.08(0.006) | 0.08(0.006) | 1.17(0.026) |
| | \|\|\|ΔB\|\|\|∞ | 0.26(0.019) | 0.26(0.019) | 6.88(0.809) |
| | \|\|ΔB\|\|∞ | 0.03(0.003) | 0.03(0.004) | 0.34(0.088) |
| | \|\|ΔB\|\|F | 0.79(0.028) | 0.76(0.031) | 13.07(0.773) |
| | SPE_B | 0.98(0.001) | 0.97(0.001) | 0.64(0.055) |
| | SEN_B | 1.00(0.000) | 1.00(0.000) | 0.65(0.095) |
| | MCC_B | 0.75(0.007) | 0.62(0.003) | 0.06(0.015) |

MNGM: the matrix normal graphical model with l1 penalties; GGM-I: Gaussian graphical model treating rows or columns as independent; GGM-C/GGM-R: Gaussian graphical model that uses only data from the first column or the first row. For each measurement, mean and standard deviation are calculated over 50 replications.

When p, q < n, as in Models 1 and 2, we have also implemented the penalized likelihood estimation with adaptive l1 penalty functions and compared it in simulations with the standard l1 penalty functions, where the maximum likelihood estimates of A and B are obtained and used as weights in the adaptive l1 penalty functions. We present the results in Table 3. Compared with the results in Table 1, we observe that using the adaptive penalties in the MNGM and the GGM-I can lead to better estimates of the precision matrices and better recovery of the graphical structures defined by these precision matrices. However, if we only select one row or column and estimate the precision matrices using the GGM (GGM-R/GGM-C), the estimates based on the adaptive l1 penalty functions are in general not as good as those based on the l1 penalty functions. This is because, when only one row or one column is used, the sample size is small and the MLEs of the precision matrices may not provide sensible estimates of the weights in the adaptive penalty functions, which can lead to poor performance of the resulting estimates.

Table 3.

Comparison of the performance for simulated data sets of different dimensions when adaptive penalty functions are used.

| Precision Matrix | Measure | MNGM | GGM-I | GGM-C/GGM-R |
|---|---|---|---|---|
| Model 1, n = 100, p = 30, q = 30 | | | | |
| A | $\lVert \Delta A \rVert$ | 0.15(0.024) | 0.26(0.014) | 0.64(0.033) |
| | $\lvert\!\lvert\!\lvert \Delta A \rvert\!\rvert\!\rvert_\infty$ | 0.30(0.037) | 0.51(0.037) | 1.10(0.079) |
| | $\lVert \Delta A \rVert_\infty$ | 0.08(0.021) | 0.14(0.010) | 0.35(0.061) |
| | $\lVert \Delta A \rVert_F$ | 0.37(0.046) | 0.69(0.025) | 1.74(0.059) |
| | $\mathrm{SPE}_A$ | 0.81(0.018) | 0.41(0.028) | 0.95(0.016) |
| | $\mathrm{SEN}_A$ | 1.00(0.000) | 1.00(0.000) | 0.24(0.069) |
| | $\mathrm{MCC}_A$ | 0.67(0.022) | 0.34(0.018) | 0.27(0.059) |
| B | $\lVert \Delta B \rVert$ | 0.14(0.024) | 0.24(0.012) | 0.66(0.040) |
| | $\lvert\!\lvert\!\lvert \Delta B \rvert\!\rvert\!\rvert_\infty$ | 0.28(0.047) | 0.48(0.037) | 1.14(0.091) |
| | $\lVert \Delta B \rVert_\infty$ | 0.08(0.019) | 0.13(0.012) | 0.35(0.043) |
| | $\lVert \Delta B \rVert_F$ | 0.35(0.052) | 0.65(0.025) | 1.70(0.078) |
| | $\mathrm{SPE}_B$ | 0.81(0.023) | 0.42(0.023) | 0.94(0.012) |
| | $\mathrm{SEN}_B$ | 0.99(0.011) | 1.00(0.006) | 0.32(0.055) |
| | $\mathrm{MCC}_B$ | 0.60(0.029) | 0.30(0.015) | 0.32(0.056) |
| Model 2, n = 100, p = 80, q = 80 | | | | |
| A | $\lVert \Delta A \rVert$ | 0.12(0.019) | 0.28(0.020) | 2.36(0.756) |
| | $\lvert\!\lvert\!\lvert \Delta A \rvert\!\rvert\!\rvert_\infty$ | 0.28(0.034) | 0.49(0.034) | 2.94(0.792) |
| | $\lVert \Delta A \rVert_\infty$ | 0.06(0.013) | 0.18(0.015) | 2.28(0.773) |
| | $\lVert \Delta A \rVert_F$ | 0.48(0.064) | 1.44(0.123) | 12.19(4.586) |
| | $\mathrm{SPE}_A$ | 0.92(0.005) | 0.85(0.005) | 1.00(0.000) |
| | $\mathrm{SEN}_A$ | 1.00(0.000) | 1.00(0.000) | 0.00(0.000) |
| | $\mathrm{MCC}_A$ | 0.73(0.012) | 0.59(0.008) | - |
| B | $\lVert \Delta B \rVert$ | 0.12(0.011) | 0.12(0.012) | 4.78(1.206) |
| | $\lvert\!\lvert\!\lvert \Delta B \rvert\!\rvert\!\rvert_\infty$ | 0.33(0.045) | 0.34(0.049) | 12.91(3.251) |
| | $\lVert \Delta B \rVert_\infty$ | 0.06(0.009) | 0.06(0.011) | 0.74(0.320) |
| | $\lVert \Delta B \rVert_F$ | 0.44(0.027) | 0.47(0.030) | 10.48(2.145) |
| | $\mathrm{SPE}_B$ | 0.92(0.005) | 0.85(0.008) | 0.12(0.010) |
| | $\mathrm{SEN}_B$ | 1.00(0.000) | 1.00(0.000) | 0.90(0.019) |
| | $\mathrm{MCC}_B$ | 0.73(0.013) | 0.59(0.012) | 0.02(0.018) |

MNGM: the matrix normal graphical model with adaptive l1 penalties; GGM-I: Gaussian graphical model treating rows or columns as independent; GGM-R/GGM-C: Gaussian graphical model that uses only data from the first column or the first row. For each measurement, mean and standard deviation are calculated over 50 replications.

As expected, since the precision matrices A and B are generated similarly and have the same dimensions, the MNGM estimates of these two precision matrices perform comparably for all four models considered. Some differences in performance between the estimates of A and B in Model 4 are observed when the GGM-I or GGM-R/GGM-C is used. This is largely due to the large variability in selecting the tuning parameters when the dependence in the data is ignored, as in GGM-I, or when only part of the data is used, as in GGM-R/GGM-C.

Finally, Model 5 with n = 20, p = q = 600 mimics the scenario in which n << min(p, q). The performance of the MNGM, shown in Table 4, is still quite comparable to that in the previous four models. However, estimates from the GGM-I or GGM-R/GGM-C are significantly worse, resulting in much lower sensitivities and larger estimation errors.

Table 4.

Comparison of the performance for simulated data sets when n << min(p, q) and l1 penalty functions are used (Model 5).

| Precision Matrix | Measure | MNGM | GGM-I | GGM-C/GGM-R |
|---|---|---|---|---|
| Model 5, n = 20, p = 600, q = 600 | | | | |
| A | $\lVert \Delta A \rVert$ | 0.14(0.013) | 0.85(0.012) | 6.85(1.532) |
| | $\lvert\!\lvert\!\lvert \Delta A \rvert\!\rvert\!\rvert_\infty$ | 0.67(0.041) | 1.52(0.015) | 10.9(3.799) |
| | $\lVert \Delta A \rVert_\infty$ | 0.05(0.009) | 0.43(0.01) | 6.78(1.563) |
| | $\lVert \Delta A \rVert_F$ | 1.58(0.091) | 10.17(0.188) | 56.92(14.796) |
| | $\mathrm{SPE}_A$ | 0.84(0.005) | 1(0) | 0.98(0.025) |
| | $\mathrm{SEN}_A$ | 1(0) | 0.03(0.001) | 0.04(0.042) |
| | $\mathrm{MCC}_A$ | 0.22(0.004) | 0.18(0.004) | 0.02(0.003) |
| B | $\lVert \Delta B \rVert$ | 0.15(0.01) | 0.54(0.015) | 1.12(0.069) |
| | $\lvert\!\lvert\!\lvert \Delta B \rvert\!\rvert\!\rvert_\infty$ | 0.75(0.037) | 1.32(0.024) | 4.06(0.344) |
| | $\lVert \Delta B \rVert_\infty$ | 0.05(0.009) | 0.23(0.005) | 0.74(0.191) |
| | $\lVert \Delta B \rVert_F$ | 1.68(0.06) | 6.84(0.023) | 11.96(0.807) |
| | $\mathrm{SPE}_B$ | 0.81(0.006) | 1(0) | 0.93(0.001) |
| | $\mathrm{SEN}_B$ | 1(0) | 0.03(0.001) | 0.11(0.008) |
| | $\mathrm{MCC}_B$ | 0.21(0.004) | 0.16(0.004) | 0.01(0.003) |

MNGM: the matrix normal graphical model with l1 penalties; GGM-I: Gaussian graphical model treating rows or columns as independent; GGM-R/GGM-C: Gaussian graphical model that uses only data from the first column or the first row. For each measurement, mean and standard deviation are calculated over 50 replications.

6. Real Data Analysis

We applied the MNGM to mouse gene expression data measured over different tissues from the Atlas of Gene Expression in Mouse Aging (AGEMAP) database [37]. In that study, the authors profiled the effects of aging on gene expression in different mouse tissues dissected from C57BL/6 mice. Mice were of ages 1, 6, 16, and 24 months, with ten mice per age cohort and five mice of each sex. Sixteen tissues (cerebellum, cerebrum, striatum, hippocampus, spinal cord, adrenal glands, heart, lung, liver, kidney, muscle, spleen, thymus, bone marrow, eye, and gonads) were dissected from each mouse. For each tissue, mRNA was isolated and hybridized to two filter membranes containing a total of 16,896 cDNA clones corresponding to 8,932 genes. We leave out the data from six tissues (cerebellum, bone marrow, heart, gonads, striatum and liver) because some mice did not have data on these tissues. Because of the small sample size, n = 40, we consider a set of 40 genes that belong to the mouse vascular endothelial growth factor (VEGF) signaling pathway and have measured expression levels over all 10 remaining tissues.

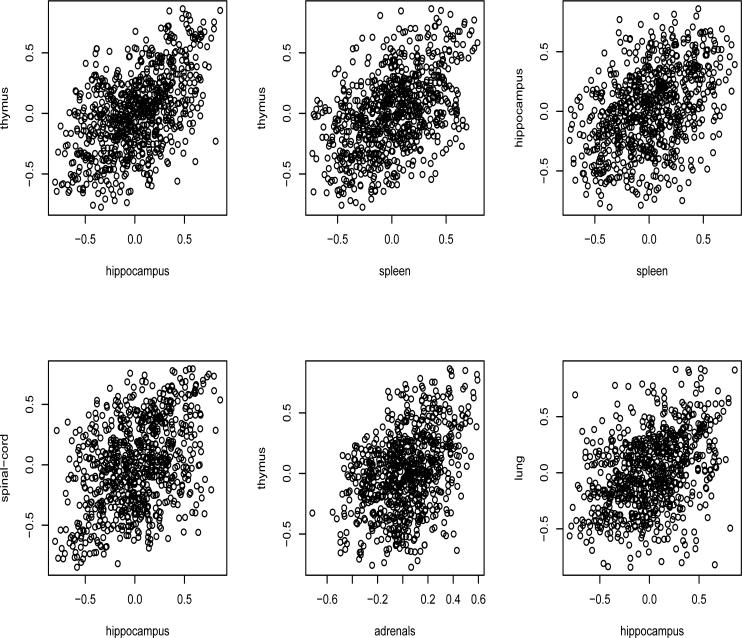

Figure 1 shows the scatter plots of the pair-wise correlations of expression levels of these 40 genes in different tissues. Many gene pairs have similar correlations across different tissues, and the gene expression levels are clearly not independent across tissues. The plots suggest that assuming a Kronecker covariance structure for the gene-by-tissue matrix normal data would be helpful in studying the covariance structure of the genes across different tissues.

Figure 1.

Mouse gene expression data: scatter plots of pair-wise correlations of 40 genes across different tissues, showing that the expression levels in different tissues are dependent.

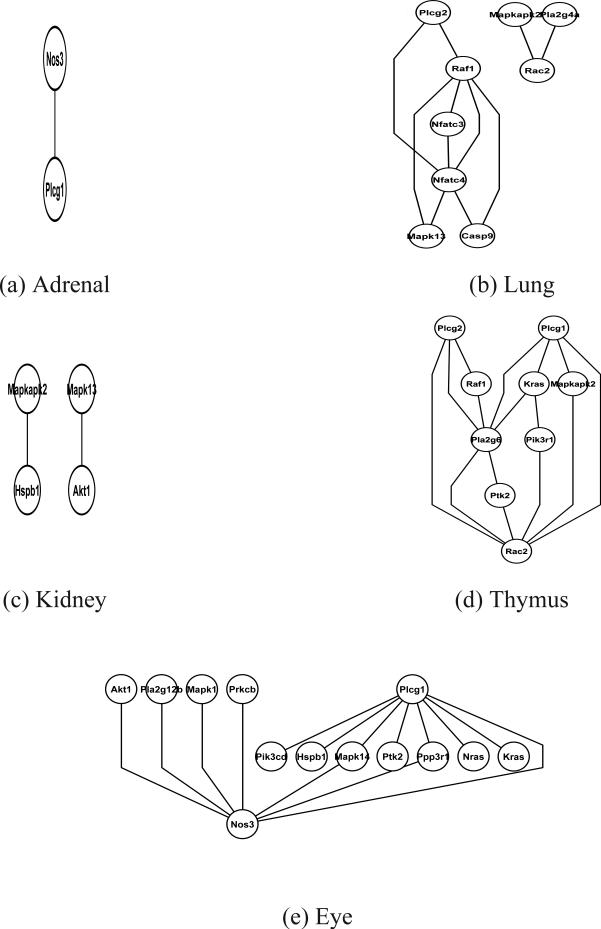

Our goal is to study the dependency structure of these 40 genes of the VEGF pathway using the expression data across all 10 tissues. When the standard GGM is applied to the data of each tissue separately, gene networks are identified in five of the 10 tissues: adrenal, kidney, lung, thymus and eye. No gene links are identified for the other five tissues. The corresponding gene network graphs are shown in Figure 2 for each of the five tissues. The networks identified from the tissue-specific data include only a few VEGF genes, indicating a lack of power to recover biologically meaningful links from single-tissue data. The differences among the networks identified from different tissues might also arise because genes of the VEGF pathway are not perturbed enough in some tissues to allow inference on the conditional independence structure among the genes. On the other hand, if all the data are pooled together and the dependency of gene expression across tissues is ignored, the GGM results in a very dense network with 373 links, which is difficult to interpret biologically given that biological networks are expected to be sparse.

Figure 2.

Analysis of mouse gene expression data: networks identified by the GGM for each of the five tissues, including adrenal, lung, kidney, thymus and eye. The genes that belong to the mouse VEGF pathway are labeled on each of these network graphs. No networks are identified for the other five tissues, including hippocampus, cerebral-cortex, spinal-cord, spleen and skeletal-muscle.

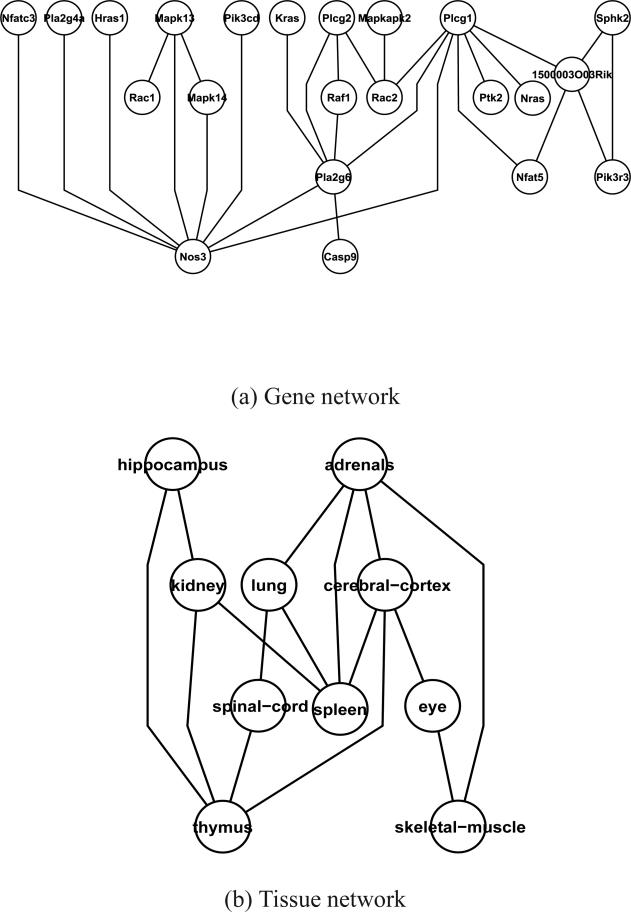

Figure 3 shows the gene and tissue networks estimated by the proposed MNGM, including a gene network of 27 links among 22 VEGF genes and a tissue network with 15 edges among the 10 tissues. Compared with the networks estimated from single-tissue data (see the plots of Figure 2), more links are identified among these genes, and many links identified by the MNGM appear in one of the graphs identified from the tissue-specific data. The difference between the overall network identified by the MNGM and the tissue-specific networks may also arise because the dependence structures of the VEGF genes differ across tissues. Many links identified by the MNGM may reflect the underlying VEGF signaling pathway [38]. For example, the binding of VEGF to VEGFR-2 leads to dimerization of the receptor, followed by intracellular activation of PLCgamma (Plcg). Several forms of the PLCgamma gene, such as Plcg1 and Plcg2, and their downstream genes Nfat5 and Pla2g6 are part of the network. Several genes on the PKC-Raf kinase-MEK-mitogen-activated protein kinase (MAPK) pathway, such as Mapk13, Mapk14 and Mapkapk2, are interconnected.

Figure 3.

Analysis of mouse multi-tissue gene expression data using the MNGM: (a) Gene network, where the genes that belong to the mouse VEGF pathway are labeled on the network graph; (b) Tissue network based on gene expression data.

The tissue network shown in Figure 3 (b) should be interpreted as the conditional dependency structure among the tissues with respect to the expression patterns observed among the genes on the VEGF pathway. It is interesting to observe links among lung, spleen and kidney in the vascular tissue group and links between eye and cerebral-cortex and between thymus and hippocampus in the neural tissue group. It is also interesting that the adrenal tissue in the steroid responsive group is linked to both the vascular and the neural tissue groups. Similar clustering of tissue groups based on their gene expression data is also observed in [37].

7. Discussion

Motivated by the analysis of gene expression data measured over different tissues on the same set of samples, we have proposed to apply the matrix normal distribution to model the data jointly and have developed a penalized likelihood method to estimate the row and column precision matrices, assuming that both matrices are sparse. Our simulation results demonstrate that such models can yield better estimates of the precision matrices and better identification of the corresponding graphical structures than a naive application of Gaussian graphical models. Our analysis of the mouse gene expression data demonstrated that, by effectively combining the expression data from multiple tissues from the same subjects, the matrix normal graphical model can lead to conditional independence graphs with meaningful biological interpretations. We also demonstrated that ignoring the dependency of gene expression across different tissues can lead to more false positive links and to dense graphs, which are difficult to interpret biologically.

The matrix normal distribution provides a natural way of modeling the dependency of data measured over different conditions. If the underlying precision matrices are sparse, the proposed penalized likelihood estimation can identify the non-zero elements of these precision matrices. We observe that the proposed l1 regularized estimation can lead to better estimates of these sparse precision matrices than the MLEs. Such estimated precision matrices can in turn be applied to the problem of co-expression analysis [21], differential expression analysis [22] and the problem of estimating missing gene expression data. Other applications of the proposed methods include face recognition [39].
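The separable structure underlying the model can be checked numerically. The following minimal sketch (an illustration, not the code used in this paper) simulates matrices with row covariance U and column covariance V and compares the sample covariance of the column-stacked vec(Y) with the Kronecker product V ⊗ U. The function name `sample_matrix_normal`, the dimensions and the particular values of U and V are hypothetical choices.

```python
import numpy as np

def sample_matrix_normal(n, U, V, rng):
    """Draw n mean-zero matrices Y = L_U Z L_V^T with row covariance U and column covariance V."""
    p, q = U.shape[0], V.shape[0]
    L_U = np.linalg.cholesky(U)
    L_V = np.linalg.cholesky(V)
    Z = rng.standard_normal((n, p, q))   # independent standard normal entries
    return L_U @ Z @ L_V.T               # broadcasts over the n samples

rng = np.random.default_rng(0)
U = np.array([[1.0, 0.5], [0.5, 1.0]])
V = np.array([[2.0, -0.6, 0.0], [-0.6, 1.0, 0.3], [0.0, 0.3, 1.5]])
Y = sample_matrix_normal(200_000, U, V, rng)

# Column-stacking vec: transpose each p x q matrix, then flatten it row by row.
vec_Y = Y.transpose(0, 2, 1).reshape(Y.shape[0], -1)
sample_cov = vec_Y.T @ vec_Y / Y.shape[0]
max_gap = np.abs(sample_cov - np.kron(V, U)).max()

# The precision matrix of vec(Y) is B kron A, with A and B the inverses of U and V.
A, B = np.linalg.inv(U), np.linalg.inv(V)
precision_gap = np.abs(np.linalg.inv(np.kron(V, U)) - np.kron(B, A)).max()
```

With p rows and q columns, the separable model is described by the two matrices A and B rather than by an unrestricted pq × pq precision matrix, which is what makes joint estimation feasible when n is small.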

The methods proposed in this paper and the related theorems can also be extended to the array normal distribution by extending the matrix-variate normal to the tensor array setting using the Tucker product [40]. Such array normal distributions were recently studied by Hoff [41]. Allen [29] proposed an l1 penalized estimation for such an array normal distribution by regularizing separable tensor precision matrices. Similar techniques can be applied to derive the estimation error bounds and to prove the sparsistency when the adaptive l1 penalties are used. As multi-dimensional data with possible correlations among the variables of each dimension become more prevalent, further development of estimation methods and relevant theory is important.

Acknowledgement

This research was supported by NIH grants ES009911 and CA127334. We thank the reviewers for many helpful comments and for pointing out several omitted references.

Appendix

Proof of Proposition 1

Before we state the proof of Proposition 1, we need the following lemma [1]:

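Lemma Appendix .1. Using the same notation as in the main text, partition the columns of Y as Y = (Y1, Y2), where Y1 is a p × r and Y2 a p × s random matrix, with r + s = q. Then the conditional distribution of Y1 given Y2 = y2 is $MN\left(M_1 + (y_2 - M_2)V_{22}^{-1}V_{21};\ U,\ V_{11\cdot 2}\right)$, where M = (M1, M2) and $V_{11\cdot 2} = V_{11} - V_{12}V_{22}^{-1}V_{21}$, with V partitioned conformably into the blocks $V_{11}$, $V_{12}$, $V_{21}$ and $V_{22}$.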

Proof of Lemma Appendix .1. See Proposition C.8 in [1, Appendix C].
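As a numerical sanity check, the following sketch compares the standard conditional law of a matrix normal, with mean $M_1 + (y_2 - M_2)V_{22}^{-1}V_{21}$, row covariance U and column covariance $V_{11} - V_{12}V_{22}^{-1}V_{21}$, against the usual multivariate normal conditioning formulas applied to vec(Y) ~ N(vec M, V ⊗ U). The dimensions, the seed and the helper `random_spd` are hypothetical; the check illustrates the identity and does not replace the cited proof.

```python
import numpy as np

rng = np.random.default_rng(1)
p, r, s = 2, 2, 3
q = r + s

def random_spd(d, rng):
    """Random symmetric positive definite d x d matrix."""
    G = rng.standard_normal((d, d))
    return G @ G.T + d * np.eye(d)

U, V = random_spd(p, rng), random_spd(q, rng)
M = rng.standard_normal((p, q))
y2 = rng.standard_normal((p, s))

V11, V12 = V[:r, :r], V[:r, r:]
V21, V22 = V[r:, :r], V[r:, r:]
M1, M2 = M[:, :r], M[:, r:]

# Matrix form of the conditional law of Y1 given Y2 = y2.
cond_mean = M1 + (y2 - M2) @ np.linalg.solve(V22, V21)
cond_col_cov = V11 - V12 @ np.linalg.solve(V22, V21)

def vec(X):
    """Column-stacking vec operator."""
    return X.T.reshape(-1)

# Same quantities from multivariate normal conditioning on vec(Y).
Sigma = np.kron(V, U)
k = p * r                                  # vec(Y1) occupies the first p*r entries
S11, S12, S22 = Sigma[:k, :k], Sigma[:k, k:], Sigma[k:, k:]
mvn_mean = vec(M1) + S12 @ np.linalg.solve(S22, vec(y2 - M2))
mvn_cov = S11 - S12 @ np.linalg.solve(S22, S12.T)

mean_gap = np.abs(mvn_mean - vec(cond_mean)).max()
cov_gap = np.abs(mvn_cov - np.kron(cond_col_cov, U)).max()
```

Both gaps should be zero up to floating-point rounding, reflecting that conditioning on a subset of columns leaves the row covariance U unchanged.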

Proof of Proposition 2.1. From Lemma Appendix .1, we know Y{γ,μ} given YΓ{γ,μ} is distributed as matrix normal . From Proposition C.5 of [1, Appendix C], we have

So

From Proposition C.6 of [1, Appendix C] we know if and only if bγμ = 0. Similar argument can be applied to the rows.
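The link between a zero entry of the precision matrix and conditional independence can be illustrated numerically. In the sketch below, the conditional covariance of two columns given the remaining ones is the inverse of the corresponding 2 × 2 block of B, so its off-diagonal entry vanishes exactly when that entry of B is zero, even though the marginal covariance is non-zero. The 3 × 3 matrix B and the function names are hypothetical, and the row covariance U is left out because it does not affect the zero pattern.

```python
import numpy as np

def conditional_cov_of_pair(B, g, m):
    """Covariance of columns g and m given all others: inverse of the 2 x 2 block of B."""
    idx = [g, m]
    return np.linalg.inv(B[np.ix_(idx, idx)])

def partial_correlation(B, g, m):
    """Partial correlation of columns g and m given all others."""
    return -B[g, m] / np.sqrt(B[g, g] * B[m, m])

# Column precision matrix with b_02 = 0: columns 0 and 2 interact only through column 1.
B = np.array([[2.0, 0.8, 0.0],
              [0.8, 2.0, 0.5],
              [0.0, 0.5, 2.0]])
V = np.linalg.inv(B)

cond = conditional_cov_of_pair(B, 0, 2)

# The same conditional covariance obtained as a Schur complement of V.
pair, rest = [0, 2], [1]
schur = V[np.ix_(pair, pair)] - V[np.ix_(pair, rest)] @ np.linalg.solve(
    V[np.ix_(rest, rest)], V[np.ix_(rest, pair)]
)

marginal_cov_02 = V[0, 2]       # non-zero: the two columns are marginally dependent
conditional_cov_02 = cond[0, 1] # zero: they are conditionally independent given column 1
```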

Proof of Theorem 1

Proof. Let M = MT be p × p, N = NT be q × q symmetric random matrices. Denote

Using the same argument as in [5], we have

Let , then

Denote Zk = vecYk, then , . Next we compute E(Tk) and var(Tk). First, , so E(Tk) = n−1/2[qtr(MU) + ptr(NV) + n−1/2tr(NV)tr(MU)]. Next, and , where . If Z ~ N(0, Σ), then

| (.1) |

Using (.1), we obtain

and

Let , by the central limit theorem, Wn = n(T̄ – ETk) → N(0, σ2), where σ2 = 2[qtr(MUMU) + ptr(NVNV) + 2tr(MU)tr(NV)]. Finally,

so nfn(M, N) → f(M, N).

Proof of Theorem 2

Proof. We prove this theorem by verifying the regularity conditions (A), (B) and (C) of [33] (see also [42]). We use (A)ij = aij to denote the (i, j)th element of the matrix A. The log-likelihood function is

So

On the other hand, is Wishart-distributed, so

and E(∂l/∂aij) = 0. Similarly, one can verify E(∂l/∂bij) = 0, so the first part of condition (A) is verified. For the second part, we need to check

From the property of the Wishart distribution,

On the other hand, dA−1 = −A−1dAA−1, so

So E(∂l/∂aij∂l/∂akl) = E(−∂2l/(∂aij∂akl)) holds. We can similarly verify that E(∂l/∂bij∂l/∂bkl) = E(−∂2l/(∂bij∂bkl)). Denote the orthogonal bases ei = (0, ···, 1, ··· 0), which is a vector of all zero except the ith element. We have

Using the same notation as in the proof of Theorem 1, let Zk = vecYk, then . We then have

and , and . Denote , , so . Using (.1), we have

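So $E(\partial l/\partial b_{kl}\,\partial l/\partial a_{ij}) = \frac{n}{2}u_{ij}v_{kl}$, and $E\{-\partial^2 l/(\partial b_{kl}\,\partial a_{ij})\} = E(\partial l/\partial b_{kl}\,\partial l/\partial a_{ij})$. Condition (A) is verified.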

Next, we verify condition (B). We have

One can verify that , where is the (i, j)th entry of Yk's. So . Denote I(A, B) as the Fisher information matrix, then

| (.2) |

To see I(A, B) is non-negative definite, one only needs to check is so. This is equivalent to check that for any vector t ≠ 0 in ,

Denote q × q matrix D such that vecD = t, then

| (.3) |

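Since V is non-negative definite, $V^{1/2}$ is well defined. Denote $A = V^{1/2}DV^{1/2}$; then $A^T = V^{1/2}D^TV^{1/2}$ and (.3) $= \mathrm{tr}(A^TA) - \frac{1}{q}[\mathrm{tr}(A)]^2$. In general, one has the inequality $[\mathrm{tr}(A^TB)]^2 \le \mathrm{tr}(A^TA)\,\mathrm{tr}(B^TB)$, so taking $B = I_q$ gives $[\mathrm{tr}(A)]^2 \le q\,\mathrm{tr}(A^TA)$ and (.3) is non-negative; thus condition (B) is proved.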
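The non-negativity argument can be checked numerically. Writing $A = V^{1/2}DV^{1/2}$, one has $\mathrm{tr}(A^TA) = \mathrm{tr}(D^TVDV)$ and $\mathrm{tr}(A) = \mathrm{tr}(DV)$, so the quantity $\mathrm{tr}(A^TA) - [\mathrm{tr}(A)]^2/q$ can be evaluated without forming the matrix square root. The sketch below is an illustration under these identities; the dimension, the seed and the function name are hypothetical.

```python
import numpy as np

def quadratic_form(D, V):
    """tr(A^T A) - [tr(A)]^2 / q for A = V^{1/2} D V^{1/2}, written via traces of D and V."""
    q = V.shape[0]
    return np.trace(D.T @ V @ D @ V) - np.trace(D @ V) ** 2 / q

rng = np.random.default_rng(2)
q = 5
G = rng.standard_normal((q, q))
V = G @ G.T + np.eye(q)                    # symmetric positive definite

# Cauchy-Schwarz with B = I_q implies the quantity is non-negative for every D.
values = [quadratic_form(rng.standard_normal((q, q)), V) for _ in range(1000)]
smallest = min(values)

# Equality case: A proportional to the identity, obtained with D equal to the inverse of V.
at_equality = quadratic_form(np.linalg.inv(V), V)
```

The equality case shows that the form is non-negative definite but not strictly positive definite in every direction, which matches the wording of the condition being verified.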

Since the third derivative of the log-likelihood function doesn't involve any random variable, condition (C) is easy to satisfy. Theorem 2 thus holds.

Proof of Lemma 4.1

Proof. We use the notation Y(k) to refer to Yk for convenience. We then have

Since vec(Y(s)) is normally distributed, Lemma A.3 of [34] leads to the fact that there exist some constants δ, C1 and C2 depending on ε1 and ε3 only such that

Hence we have

which proves the lemma.

Proof of Theorem 3

Proof. Let W1 be a symmetric matrix of dimension pn and W2 be a symmetric matrix of dimension qn. Let DW1, DW2 be their diagonal matrices, and RW1 = W1 – DW1, RW2 = W2 – DW2 be their off-diagonal matrices, respectively. Set ΔW1 = αnRW1 + βnDW1 and ΔW2 = δnRW2 + βnDW2. We show that, for , and , for a set defined as

for sufficiently large constants C1, C2, C3 and C4. Denote , , then

| (.4) |

So ϕ(A1, B1) − ϕ(A0, B0) = I1 + I2 + I3 + I4 + I5, where

Denote ΔA = ΔW1, ΔB = ΔW2 and recall the definitions of . Using Taylor's expansion with integral residues, we have

| (.5) |

where Av = A0 + vΔA, Bv = B0 + vΔB. One can easily check that

and similarly, (vecA0)TΣ0(vecΔB) = pntr(V0ΔB). Then I1 can be further simplified as I1 = K1 + K2 + K3 + K4 + K5 + K6, where

Note that

| (.6) |

| (.7) |

Since

hence

then

so

| (.8) |

and similarly

| (.9) |

Generally, for a square p × p matrix M and a square q × q matrix N, we have and then

Let , , we have

Combining with (.8) and (.9) we know that |K5| is dominated by K1 + K2 with a large probability.

Next we bound |K3| and |K4|. We have

where if we use double index to indicate a row or column in Sn, Σ0 or a position in vecΔA, vecB0, vecA0 and vecΔB, we have

and

From Lemma 4.1 we know that

Then

| (.10) |

This together with (.6) shows that L1 is dominated by K1 by choosing sufficiently large C1 and C2. Symmetrically,

By choosing sufficiently large C3 and C4, this together with (.7) shows L3 can be dominated by K2. Also

| (.11) |

from the condition on λn in the theorem, and using a technique similar to that in [12], it can be shown that L2 is dominated by I2. Similarly, L4 is dominated by I4. Thus |K3| + |K4| is dominated by K1 + K2 + I2 + I4. It is easy to show that |K6| is of smaller order than K3 and K4, and hence is also dominated by K1 + K2 + I2 + I4. We next show that

| (.12) |

where the middle term in (.12) is from regularity condition (C), thus I3 is dominated by K1 if we choose sufficiently large constants C1 and C2. Similarly, we get and is dominated by K2 when C3 and C4 are large. Hence the proof.

Proof of Theorem 4

Proof. For (Â, B̂), a minimizer of (6), where  = (aij), B̂ = (bkl), the derivative of ϕ(A, B) with respect to aij for evaluated at  is

where Û = (uij) = –1 and and B0 are the true parameters. If we can show that the sign of ∂ϕ(Â, B̂)/∂aij depends on sgn(aij) only with probability tending to 1, the optimum is then at 0, so that aij = 0 for with probability tending to 1. Let

| (.13) |

we then have

Using the same argument as in [12],

and then .

Since Y(s) ~ MN(0; U0, V0), we have Y(s)T ~ MN(0; V0, U0) and . Let , where is a qn × 1 vector, for i = 1, ···, pn. We have

| (.14) |

| (.15) |

and

| (.16) |

| (.17) |

I1 can be simplified as . We have the following proposition:

Proposition Appendix .1. Under the notations above, we have

Proof of Proposition Appendix .1. To simplify notation, we write q for qn in this proof. Denote and . From (.17) we have

| (.18) |

Note that (.18) does not depend on the sample index s, and the sum in I1 is equivalent to nqn normal observations. By Lemma A.3 of [34], we have

Hence Proposition Appendix .1 is proved.

From Proposition Appendix .1, we know . Next we bound |I3|. From (.14) we know that , and since

we have

Then we have |I3| ≤ L1 + L2, where

Since and , then

On the other hand, by (.16) and Lemma A.3 of [34],

Denoting vector of 1's, then

Therefore,

Then . Since the theorem requires the condition qn(pn + sn1)(log pn + log qn)k/n = O(1) for some k > 1, we know that . So

Concluding from above, we have

On the other hand, for for some constant c From the condition in the Theorem, we have

So the sign of ∂ϕ(Â, B̂)/∂aij is dominated by sgn(aij), which proves the sparsistency of Â. A similar proof applies to B̂.

References

- 1. Lauritzen SL. Graphical Models. Clarendon Press; Oxford: 1996.
- 2. Whittaker J. Graphical Models in Applied Multivariate Analysis. Wiley; 1990.
- 3. Meinshausen N, Bühlmann P. High-dimensional graphs and variable selection with the lasso. Annals of Statistics. 2006;34:1436–1462.
- 4. Tibshirani R. Regression shrinkage and selection via the lasso. J. R. Statist. Soc. B. 1996;58:267–288.
- 5. Yuan M, Lin Y. Model selection and estimation in the Gaussian graphical model. Biometrika. 2007;94:19–35.
- 6. Banerjee O, Ghaoui LE, d'Aspremont A. Model selection through sparse maximum likelihood estimation for multivariate Gaussian or binary data. J. Machine Learning Research. 2008;9:485–516.
- 7. Dahl J, Vandenberghe L, Roychowdhury V. Covariance selection for non-chordal graphs via chordal embedding. Optimization Methods and Software. 2008;23:501–520.
- 8. Friedman J, Hastie T, Tibshirani R. Sparse inverse covariance estimation with the graphical lasso. Biostatistics. 2008;9:432–441. doi: 10.1093/biostatistics/kxm045.
- 9. Yuan M. Sparse inverse covariance matrix estimation via linear programming. Journal of Machine Learning Research. 2010;11:2261–2286.
- 10. Ravikumar P, Wainwright M, Raskutti G, Yu B. High-dimensional covariance estimation by minimizing l1-penalized log-determinant divergence. Electronic Journal of Statistics. 2011;5:935–980.
- 11. Rothman A, Levina E, Zhu J. Generalized thresholding of large covariance matrices. Journal of the American Statistical Association. 2009;104:177–186.
- 12. Lam C, Fan J. Sparsistency and rates of convergence in large covariance matrices estimation. The Annals of Statistics. 2009;37:4254–4278. doi: 10.1214/09-AOS720.
- 13. Cai T, Liu W, Luo X. A constrained l1 minimization approach to sparse precision matrix estimation. Journal of the American Statistical Association. 2011;106:594–607.
- 14. Candes E, Tao T. The Dantzig selector: Statistical estimation when p is much larger than n. Annals of Statistics. 2007;35:2313–2351.
- 15. Dawid A. Some matrix-variate distribution theory: Notational considerations and a Bayesian application. Biometrika. 1981;68:265–274.
- 16. Gupta A, Nagar D. Matrix variate distributions, Volume 104 of Monographs and Surveys in Pure and Applied Mathematics. Chapman & Hall, CRC Press; Boca Raton, FL: 1999.
- 17. Finn JD. A general model for multivariate analysis. Holt, Rinehart and Winston; New York: 1974.
- 18. Timm NH. Multivariate analysis of variance of repeated measurements. Handbook of Statistics. 1980;1:41–87.
- 19. Mardia KV, Goodall C. Spatial-temporal analysis of multivariate environmental monitoring data. Multivariate Environmental Statistics. 1993;6:347–385.
- 20. Huizenga HM, De Munck JC, Waldorp LJ, Grasman R. Spatiotemporal EEG/MEG source analysis based on a parametric noise covariance model. IEEE Trans. Biomed. Eng. 2002;49:533–539. doi: 10.1109/TBME.2002.1001967.
- 21. Teng S, Huang H. A statistical framework to infer functional gene associations from multiple biologically interrelated microarray experiments. Journal of the American Statistical Association. 2009;104:465–473.
- 22. Allen G, Tibshirani R. Transposable regularized covariance models with an application to missing data imputation. Annals of Applied Statistics. 2010;4(2):764–790. doi: 10.1214/09-AOAS314.
- 23. Efron B. Are a set of microarrays independent of each other? The Annals of Applied Statistics. 2009;3(3):922–942. doi: 10.1214/09-AOAS236.
- 24. Dutilleul P. The MLE algorithm for the matrix normal distribution. Journal of Statistical Computation and Simulation. 1999;64:105–123.
- 25. Mitchell M, Genton M, Gumpertz M. A likelihood ratio test for separability of covariances. Journal of Multivariate Analysis. 2006;97(5):1025–1043.
- 26. Muralidharan O. Detecting column dependence when rows are correlated and estimating the strength of the row correlation. Electronic Journal of Statistics. 2010;4:1527–1546.
- 27. Wang H, West M. Bayesian analysis of matrix normal graphical models. Biometrika. 2009;96:821–834. doi: 10.1093/biomet/asp049.
- 28. Allen G, Tibshirani R. Inference with transposable data: Modeling the effects of row and column correlations. Journal of the Royal Statistical Society, Series B (Theory & Methods). 2011. doi: 10.1111/j.1467-9868.2011.01027.x. In press.
- 29. Allen G. Comment on article by Hoff. Bayesian Analysis. 2011;6(2):197–202.
- 30. Fan J, Feng Y, Wu Y. Network exploration via the adaptive lasso and SCAD penalties. The Annals of Applied Statistics. 2009;3:521–541. doi: 10.1214/08-AOAS215SUPP.
- 31. Graybill FA. Matrices with applications in statistics. Second Edition. Wadsworth; Belmont: 1983.
- 32. Cressie N. Statistics for Spatial Data. Wiley; New York: 1993.
- 33. Fan J, Li R. Variable selection via nonconcave penalized likelihood and its oracle properties. Journal of the American Statistical Association. 2001;96:1348–1360.
- 34. Bickel P, Levina E. Regularized estimation of large covariance matrices. Annals of Statistics. 2008;36(1):199–227.
- 35. Zou H. The adaptive lasso and its oracle properties. Journal of the American Statistical Association. 2006;101:1418–1429.
- 36. Li H, Gui J. Gradient directed regularization for sparse Gaussian concentration graphs, with applications to inference of genetic networks. Biostatistics. 2006;7:302–317. doi: 10.1093/biostatistics/kxj008.
- 37. Zahn JM, Poosala S, Owen A, Ingram DK, Lustig A, et al. AGEMAP: A gene expression database for aging in mice. PLoS Genetics. 2007;3(11):2326–2337. doi: 10.1371/journal.pgen.0030201.
- 38. Holmes K, Roberts O, Thomas A, Cross M. Vascular endothelial growth factor receptor-2: Structure, function, intracellular signalling and therapeutic inhibition. Cellular Signalling. 2007;19:2003–2012. doi: 10.1016/j.cellsig.2007.05.013.
- 39. Zhang Y, Schneider J. Learning multiple tasks with a sparse matrix-normal penalty. In: Lafferty J, Williams CKI, Shawe-Taylor J, Zemel R, Culotta A, editors. Advances in Neural Information Processing Systems. Vol. 23. 2010. pp. 2550–2558.
- 40. Tucker LR. The extension of factor analysis to three-dimensional matrices. In: Gulliksen H, Frederiksen N, editors. Contributions to Mathematical Psychology. Holt, Rinehart and Winston; New York: 1964.
- 41. Hoff P. Separable covariance arrays via the Tucker product, with applications to multivariate relational data. Bayesian Analysis. 2011;6(2):179–196.
- 42. Lehmann E. Theory of Point Estimation. Wadsworth and Brooks/Cole; Pacific Grove, CA: 1983.