Abstract

Learning theory suggests that animals attend to pertinent environmental cues when reward contingencies unexpectedly change so that learning can occur. We have previously shown that activity in basolateral nucleus of amygdala (ABL) responds to unexpected changes in reward value, consistent with unsigned prediction error signals theorized by Pearce and Hall. However, changes in activity were present only at the time of unexpected reward delivery, not during the time when the animal needed to attend to conditioned stimuli that would come to predict the reward. This suggested that a different brain area must be signaling the need for attention necessary for learning. One likely candidate to fulfill this role is the anterior cingulate cortex (ACC). To test this hypothesis, we recorded from single neurons in ACC as rats performed the same behavioral task that we have used to dissociate signed from unsigned prediction errors in dopamine and ABL neurons. In this task, rats chose between two fluid wells that produced varying magnitudes of and delays to reward. Consistent with previous work, we found that ACC detected errors of commission and reward prediction errors. We also found that activity during cue sampling encoded reward size, but not expected delay to reward. Finally, activity in ACC was elevated during trials in which attention was increased following unexpected upshifts and downshifts in value. We conclude that ACC not only signals errors in reward prediction, as previously reported, but also signals the need for enhanced neural resources during learning on trials subsequent to those errors.

Introduction

The Pearce and Hall theory of attention suggests that learning occurs via elevated processing of environmental cues when the outcomes that follow them are uncertain (Pearce and Hall, 1980; Pearce et al., 1982). One aspect of this model is that attention is increased when outcomes are either better or worse than predicted. This increased attention allows the animal to focus on trial events so that subsequent behavior is adaptive.

In a previous report, we suggested that activity in basolateral nucleus of the amygdala (ABL) represented such an unsigned prediction error (Roesch et al., 2010a). In that study, ABL neurons increased firing when an unexpected reward was either delivered or omitted, consistent with the idea of unsigned error signals; however, changes in firing in ABL were evident only at the time of the reward delivery and omission (Belova et al., 2007; Roesch et al., 2010a; Tye et al., 2010). Although such a signal might be providing unsigned prediction errors, it is unlikely, based on the timing of the activity, that it is the neural signal that marshals neural resources to enhance processing of subsequent trial events.

Here we ask whether neurons in anterior cingulate cortex (ACC) might fire more strongly during trials after prediction errors. ACC has strong reciprocal connections with ABL and dopamine (DA) neurons (Sripanidkulchai et al., 1984; Cassell and Wright, 1986; Dziewiatkowski et al., 1998; Holroyd and Coles, 2002); has been shown to be involved in encoding errors of commission, reward prediction errors, and positive and negative trial feedback; and is thought to be critical for functions related to attention, decision making, and reinforcement learning (Carter et al., 1998; Scheffers and Coles, 2000; Paus, 2001; Holroyd and Coles, 2002; Shidara and Richmond, 2002; Ito et al., 2003; Walton et al., 2004; Amiez et al., 2005; Kennerley et al., 2006; Magno et al., 2006; Matsumoto et al., 2007; Quilodran et al., 2008; Rudebeck et al., 2008; Rushworth and Behrens, 2008; Kennerley and Wallis, 2009; Totah et al., 2009; Hillman and Bilkey, 2010; Wallis and Kennerley, 2010; Hayden et al., 2011). Still, no study has asked the critical question of whether the activity of single neurons in ACC increases after trials in which rewards are unexpectedly delivered or omitted, consistent with the changes in behavioral measures of attention observed during learning on these trials.

To address this issue, we recorded from single neurons in ACC in the same task in which we characterized firing in ABL and the ventral tegmental area (VTA) (Roesch et al., 2007, 2010a). We found that activity of single neurons in ACC increased on behavioral trials after reward contingencies changed unexpectedly for both upshifts and downshifts. In addition to this finding, we show that activity in ACC reflects both errors of commission and reward prediction errors after their occurrence. We also demonstrate that activity during the sampling of cues that predict reward is significantly stronger when a large reward was expected relative to a small reward, but not when that same cue predicted short delays relative to long delays.

Materials and Methods

Subjects.

Male Long–Evans rats (n = 4; weight, 175–200 g) were obtained from Charles River Laboratories. Rats were tested at the University of Maryland, College Park, in accordance with University of Maryland and NIH guidelines.

Surgical procedures and histology.

Surgical procedures followed guidelines for aseptic technique. Electrodes were manufactured and implanted as in prior recording experiments (Bryden et al., 2011). Rats had a drivable bundle of 10 25-μm-diameter FeNiCr wires (Stablohm 675, California Fine Wire) chronically implanted in the left hemisphere dorsal to ACC (n = 4; −0.2 mm anterior to bregma, 0.4–0.5 mm laterally, and 1.0 mm ventral to the brain surface). Immediately before implantation, the wires were freshly cut with surgical scissors to extend ∼1 mm beyond the cannula and electroplated with platinum (H2PtCl6, Aldrich) to an impedance of ∼300 kΩ. Cephalexin (15 mg/kg, p.o.) was administered twice daily for 2 weeks postoperatively to prevent infection. The rats were then perfused, and their brains were removed and processed for histology using standard techniques.

Behavioral task.

Recording was conducted in aluminum chambers ∼18 inches on each side with sloping walls narrowing to an area of 12 × 12 inches at the bottom. A central odor port was located above two adjacent fluid wells on a panel in the right wall of each chamber. Two house lights were located above the panel. The odor port was connected to an air flow dilution olfactometer to allow the rapid delivery of olfactory cues. Task control was implemented via computer. Port entry, well entry, and licking were monitored by disruption of photobeams.

The basic design of the task is illustrated in Figure 1a. Trials were signaled by illumination of the house lights inside the box. When these lights were on, nose poke into the odor port resulted in delivery of an odor cue to a small hemicylinder located behind this opening. One of three different odors was delivered to the port on each trial, in a pseudorandom order. At odor offset, the rat had 3 s to make a response at one of the two fluid wells located on the left or the right below the port. One odor (2-octanol, 97%) instructed the rat to go to the left to get reward, a second odor (pentyl acetate, 99%) instructed the rat to go to the right to get reward, and a third odor [(R)-(-)-carvone, 97%] indicated that the rat could obtain reward at either well. Odors were presented in a pseudorandom sequence such that the free-choice odor was presented in 7 of 20 trials and the left/right odors were presented in equal numbers (±1 over 250 trials). In addition, the same odor could be presented on no more than three consecutive trials.
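The odor-ordering constraints above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the task-control code used in the study: the block-of-20 construction, the alternation of which forced-choice odor receives the extra trial, the rejection-sampling strategy, and the names `max_run` and `odor_sequence` are all hypothetical.

```python
import random


def max_run(seq):
    """Length of the longest run of identical consecutive items."""
    longest = run = 0
    prev = None
    for item in seq:
        run = run + 1 if item == prev else 1
        prev = item
        longest = max(longest, run)
    return longest


def odor_sequence(n_blocks, seed=None):
    """Build n_blocks * 20 trials with 7 free-choice trials per 20.

    Assumption: the 13 forced-choice trials per block are split 7/6, and the
    side that gets the extra trial alternates so left/right stay balanced.
    """
    rng = random.Random(seed)
    trials = []
    for b in range(n_blocks):
        extra, other = ("left", "right") if b % 2 == 0 else ("right", "left")
        block = ["free"] * 7 + [extra] * 7 + [other] * 6
        while True:
            rng.shuffle(block)
            # Include the tail of the previous block so that runs spanning
            # a block boundary are also limited to three trials.
            if max_run(trials[-3:] + block) <= 3:
                break
        trials.extend(block)
    return trials


sequence = odor_sequence(12, seed=1)
```

Reshuffling a block until it satisfies the run-length limit is simple but not the only option; a constructive scheme would avoid the retry loop at the cost of more code.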

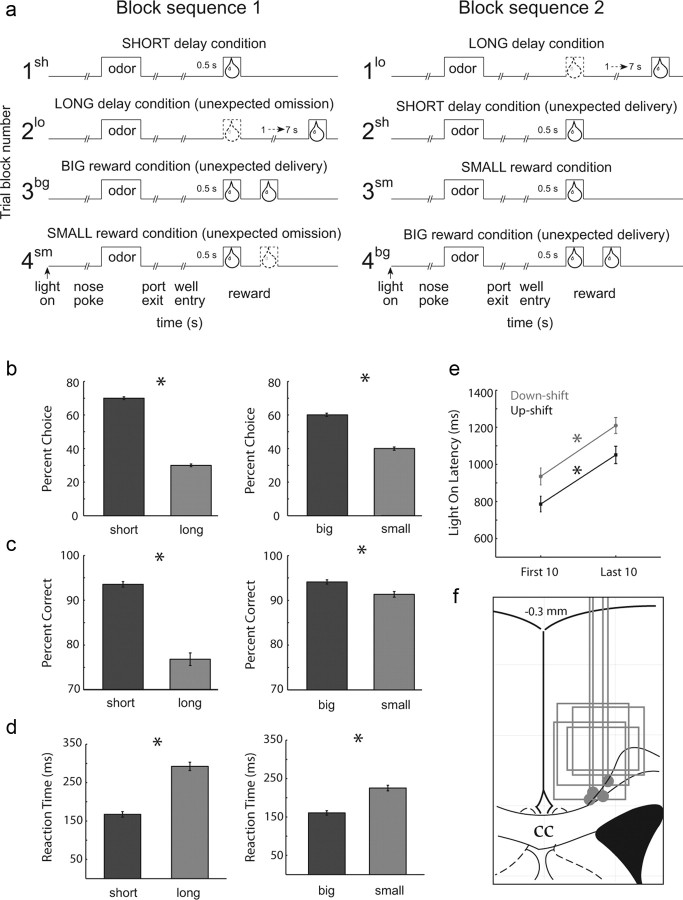

Figure 1.

Task, behavior, and recording sites. a, The sequence of events in each trial block. At the beginning of each recording session, one well was arbitrarily designated as short (a short 500 ms delay before reward) and the other was designated as long (a relatively long 1–7 s delay before reward) (Block 1). After the first block of trials (∼60 trials), the wells unexpectedly reversed reward contingencies (Block 2). With the transition to block 3, the delays to reward were held constant across wells (500 ms), but the size of the reward was manipulated. The well designated as long during the previous block now offered two fluid boli, whereas the opposite well offered one bolus. The reward stipulations again reversed in block 4. b, The impact of delay length (left) and reward size (right) manipulations on choice behavior during free-choice trials. c, Behavioral reflections of value comparing percentage correct on forced-choice trials for short versus long delay (left) and big versus small reward (right). d, Reaction times (odor offset to nose unpoke from odor port) on forced-choice trials (expressed in milliseconds) comparing short- versus long-delay trials and big- versus small-reward trials. e, Light-on latency measurements (house light on to nose poke in odor port) comparing the first 10 trials versus the last 10 trials per block for upshifts (2sh, 3bg, and 4bg) and downshifts (2lo and 4sm). Sh, Short; bg, big; lo, long; sm, small. f, Location of recording sites. Gray dots mark the final electrode position and gray boxes mark the locations of recording sites. Asterisks indicate planned comparisons revealing statistically significant differences (t test, p < 0.05). Error bars indicate SEMs. N = 4 rats.

During the first day of training, rats were first taught to simply nose poke into the odor port and then go to the well for reward. On the second day, the free-choice odor was introduced, and rats were free to respond to either well for reward. On each subsequent day, the number of forced-choice odors increased by two for each block of 20 trials. During this time, we introduced blocks in which we independently manipulated the size of the reward delivered at a given side and the length of the delay preceding reward delivery. Once the rats were able to maintain accurate responding (> 60%) on forced-choice trials through these manipulations and were able to switch their response bias in each of the four trial blocks on free-choice trials, surgery was performed and recording sessions began.

During recording, one well was randomly designated as short (500 ms) and the other long (1–7 s) at the start of the session (Fig. 1a, Block 1). In the second block of trials, these contingencies were switched (Fig. 1a, Block 2). The length of the delay under long conditions abided by the following algorithm: the side designated as long started off as 1 s and increased by 1 s every time that side was chosen (up to a maximum of 7 s). If the rat chose the side designated as long on <8 of the previous 10 free-choice trials, the delay was reduced by 1 s for each trial to a minimum of 3 s. The reward delay for long forced-choice trials was yoked to the delay in free-choice trials during these blocks. In later blocks, we held the delay preceding reward delivery constant (500 ms) while manipulating the size of the expected reward (Fig. 1a, Blocks 3 and 4). The reward was a 0.05 ml bolus of 10% sucrose solution. For big reward, an additional bolus was delivered 500 ms after the first bolus. At least 60 trials per block were collected for each neuron. Activity was analyzed only from sessions during which all four trial blocks were collected.
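The delay rule for the long well can be written as a small state machine. The sketch below is a hedged reading of the description above rather than the original task code. It assumes that only free-choice trials update the delay, that the reduction is applied on trials in which the long well was not chosen, that a full window of 10 free-choice trials is required before any reduction, and that a delay already below 3 s is left unchanged. The class and method names are hypothetical.

```python
from collections import deque


class LongDelayTitrator:
    """Tracks the delay (in seconds) assigned to the long-delay well."""

    START_S = 1   # initial delay on the long side
    MAX_S = 7     # ceiling reached by repeated long choices
    FLOOR_S = 3   # lowest value the reduction rule can reach

    def __init__(self):
        self.delay_s = self.START_S
        # True = long well chosen; only the last 10 free-choice trials count.
        self.history = deque(maxlen=10)

    def next_delay(self, chose_long):
        """Register one free-choice trial and return the updated delay."""
        self.history.append(chose_long)
        if chose_long:
            self.delay_s = min(self.delay_s + 1, self.MAX_S)
        elif (len(self.history) == 10
              and sum(self.history) < 8
              and self.delay_s > self.FLOOR_S):
            self.delay_s -= 1
        return self.delay_s


titrator = LongDelayTitrator()
rising = [titrator.next_delay(True) for _ in range(10)]
falling = [titrator.next_delay(False) for _ in range(7)]
```

Under this reading, forced-choice long trials would simply read `titrator.delay_s` without changing it, which is one way to implement the yoking described above.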

Single-unit recording.

Procedures were the same as described previously (Bryden et al., 2011). Wires were screened for activity daily; if no activity was detected, the rat was removed, and the electrode assembly was advanced 40 or 80 μm. Otherwise, active wires were selected to be recorded, a session was conducted, and the electrode was advanced at the end of the session. Neural activity was recorded using two identical Plexon Multichannel Acquisition Processor systems interfaced with odor discrimination training chambers. Signals from the electrode wires were amplified 20× by an op-amp headstage (HST/8o50-G20-GR, Plexon Inc), located on the electrode array. Immediately outside the training chamber, the signals were passed through a differential preamplifier (PBX2/16sp-r-G50/16fp-G50, Plexon Inc) where the single-unit signals were amplified 50× and filtered at 150–9000 Hz. The single-unit signals were then sent to the Multichannel Acquisition Processor box where they were further filtered at 250–8000 Hz, digitized at 40 kHz, and amplified at 1–32×. Waveforms (>2.5:1 signal-to-noise ratio) were extracted from active channels and recorded to disk by an associated workstation with event timestamps from the behavior computer. Waveforms were not inverted before data analysis.

Data analysis.

Units were sorted with Offline Sorter software (Plexon Inc) using a template-matching algorithm. Sorted files were then processed in Neuroexplorer to extract unit timestamps and relevant event markers. These data were subsequently analyzed in Matlab (Mathworks). Task-related neural firing was examined over an analysis epoch that started with the onset of the odor and ended when the rat made its behavioral response. We determined whether activity was significantly increased during early learning versus late learning by examining the first and last 10 trials in the last three blocks. Activity was examined on trials that followed trials in which the reward was of either high value (large reward or short delay) or low value (small reward or long delay). Trials after high- and low-value rewards are referred to as “upshifts” and “downshifts,” respectively. Wilcoxon tests were used to measure significant shifts from zero in distribution plots (p < 0.05). A t test or ANOVA was used to measure within-cell differences in firing rate (p < 0.05).

Results

Neurons were recorded in the behavioral task illustrated in Figure 1a. On each trial, rats responded to one of two adjacent wells after sampling an odor at a central port (Fig. 1a). Rats were trained to respond to three different odor cues: one odor that signaled reward in the right well (forced-choice), a second odor that signaled reward in the left well (forced-choice), and a third odor that signaled reward at either well (free-choice). Across blocks of trials in each recording session, we manipulated either the length of the delay preceding reward delivery (Fig. 1a, Blocks 1 and 2) or the size of the reward (Fig. 1a, Blocks 3 and 4). Thus, at the start of different blocks of trials, we manipulated the timing or size of the reward in a particular reward well, thereby increasing (Fig. 1a, Blocks 2sh, 3bg, and 4bg) or decreasing (Fig. 1a, Blocks 2lo and 4sm) its value unexpectedly. As reported in several previous studies, rats changed their behavior across these training blocks, choosing the higher value reward more often on free-choice trials (Fig. 1b) and were more accurate (Fig. 1c) and faster (Fig. 1d) on forced-choice trials for high-value (short and big) than on low-value (long and small) trials (t test; t(110) values > 8; p values < 0.0001).

Rats also exhibited behavioral changes in attention due to unexpected changes in reward value. Rats approached the odor port more quickly on trials after block changes. This is illustrated in Figure 1e by plotting the average light-on latency (house light on to odor port entry) for the first and last 10 trials in each block during which reward contingencies changed unexpectedly. Rats oriented to the odor port faster during the first 10 trials after both upshifts and downshifts in value (t test; t(110) values > 8.0; p values < 0.05).

Because the approach to the odor port precedes any knowledge of the upcoming reward, this latency cannot reflect the value of the reward on the upcoming trial. It also cannot reflect a general reduction in motivation over the course of the session, because latencies were also significantly faster on early trials than on the 10 trials that immediately preceded them (t(110) values > 9.1; p values < 0.05). Faster odor-port approach latencies are thought to reflect error-driven increases in the processing of trial events (e.g., cues, responses, and rewards) as rats accelerate the reception of those events when expectancy errors need to be resolved (Calu et al., 2010; Roesch et al., 2010a,b). Such behavior might be considered an investigatory reflex similar to what has been shown to recover from habituation when learned contingencies are shifted, a recovery that mirrors theoretical changes in Pearce and Hall attention (Kaye and Pearce, 1984; Pearce et al., 1988; Swan and Pearce, 1988; Calu et al., 2010; Roesch et al., 2010a,b). Alternatively, this behavior might reflect re-engagement of instrumental task performance when responding can no longer be based on previous reward contingencies or stimulus–response habits that might have developed over the course of the trial block. Regardless of what function this behavior might reflect, it was clear from this behavioral measure that rats were aware of violations in reward contingencies.

Activity in ACC was correlated with changes in attention after upshifts and downshifts in value

We recorded 111 ACC neurons in four rats across 54 sessions during performance of the behavioral task. Recording sites are illustrated in Figure 1f and were verified by histology. Thirty-eight of the 111 ACC neurons significantly increased neural firing during performance of the task (odor onset to fluid well entry) relative to baseline (1.5 s before nose poke) across all trial types (t test, p < 0.05).

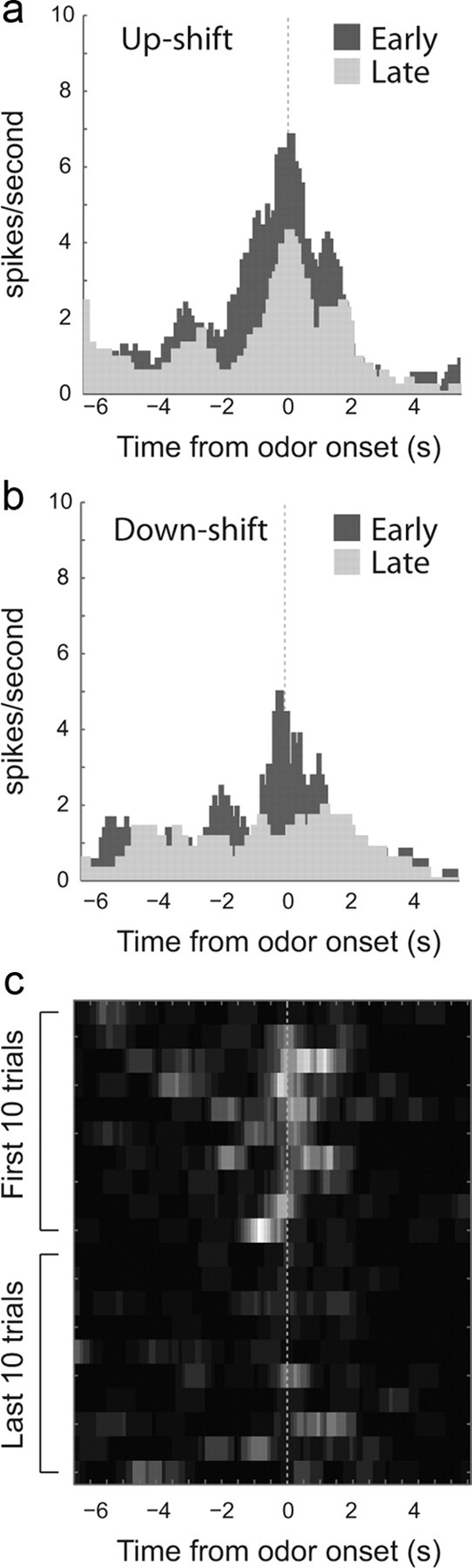

Many ACC neurons increased firing after unexpected upshifts and downshifts in value. This is illustrated in Figure 2 for a single neuron recorded from ACC. Activity was higher during the first 10 trials after rewards were unexpectedly delivered (Fig. 1a, Blocks 2sh, 3bg, and 4bg) or omitted (Fig. 1a, Blocks 2lo and 4sm) compared with the last 10 trials in these blocks after contingencies had been learned (Fig. 2, dark gray vs light gray). The heat plot underneath shows the development of these signals over the course of the first and last 10 trials. Importantly, changes in firing did not occur on the first trial after the shift, but took several trials to develop. On average, firing peaked five to six trials after the switch during these early trials (n = 38 cells; mean = 5.7; SD = 1.2).

Figure 2.

Single-cell example. a, b, Histogram represents firing of one neuron during the first 10 (dark gray) and last 10 (light gray) trials after upshifts and downshifts in value aligned on odor onset. c, Heat plot shows the average firing, of the same neuron, across shifts during the first and last 10 trials after reward contingencies change.

These effects are further illustrated across the population of task-related neurons. Figure 3a plots the average firing over time for all 38 ACC neurons during the first 10 (early) and last 10 (late) trials after upshifts (Fig. 1a, Blocks 2sh, 3bg, and 4bg) and downshifts (Fig. 1a, Blocks 2lo and 4sm) in value. Average activity for the early trials was stronger during the entire trial and was most prominent before and during presentation of odor cues (Fig. 3a).

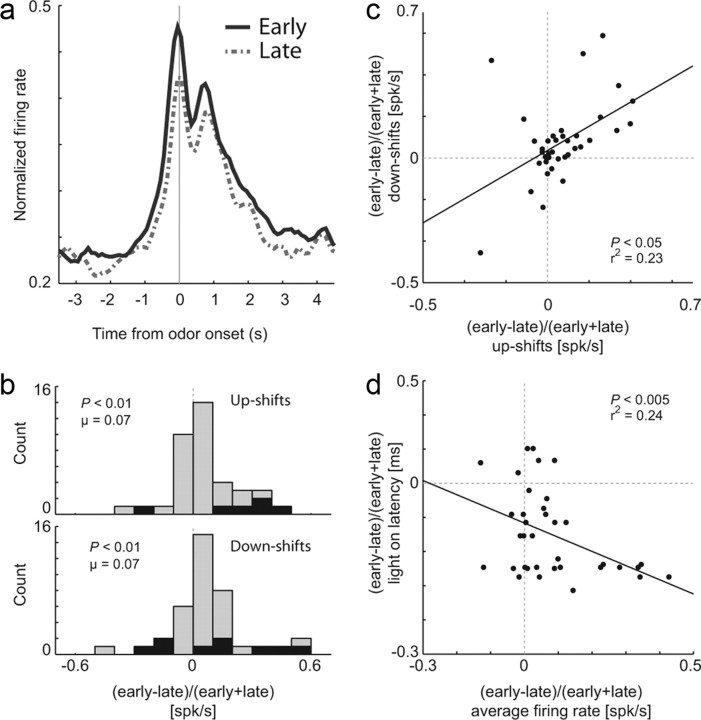

Figure 3.

ACC neurons fired more strongly after upshifts and downshifts, and this activity was correlated with attention. a, Average normalized neural activity for all 38 task-related neurons (odor onset to fluid well entry) comparing the first 10 trials in all blocks (early, solid black) to the last 10 trials in all blocks (late, dashed gray). b, Distribution reflecting the difference in task-related firing rate between early and late in a trial block [(early − late)/(early + late)] following either upshifts (top) or downshifts (bottom). Black bars represent the number of neurons that showed a significant difference between these responses (t test, p < 0.05). c, Correlation of firing rate changes between early and late trials after downshifts (y-axis) and upshifts (x-axis). d, Correlation between light-on latency (house light on until nose poke; y-axis) and firing rate (x-axis) either early or late within a block [(early − late)/(early + late)]. N = 4 rats.

To quantify this effect, for each neuron we computed the average firing from odor onset to fluid well entry separately for the first and last 10 trials after upshifts and downshifts in value. Figure 3b plots the distributions of difference scores between firing in early and late trials after upshifts and downshifts for each neuron. Both distributions were significantly shifted in the positive direction indicating that activity was stronger on trials after shifts in value compared with after learning (μ values > 0.7; p values < 0.01, Wilcoxon test). Finally, there was a positive correlation between the two distributions, indicating that neurons that tended to fire more strongly after upshifts tended to fire more strongly after downshifts in value (Fig. 3c) (p < 0.05; r2 = 0.23). Thus, activity was significantly stronger after both upshifts and downshifts in value, and these effects tended to occur in the same neurons.
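The difference score used throughout these analyses, (early − late)/(early + late), is simple to compute per neuron. The sketch below is illustrative only: the firing rates are made-up values rather than data from this study, the function names are hypothetical, and scoring a neuron that is silent in both epochs as zero is an assumption. A signed-rank test on the resulting indices would then assess whether the distribution is shifted from zero.

```python
def contrast_index(early_rate, late_rate):
    """Return (early - late) / (early + late) for one neuron.

    Assumption: a neuron that is silent in both epochs is scored as 0.0
    (no change) rather than raising a division error.
    """
    total = early_rate + late_rate
    if total == 0:
        return 0.0
    return (early_rate - late_rate) / total


def population_indices(rates):
    """rates: list of (early_rate, late_rate) pairs, one pair per neuron."""
    return [contrast_index(early, late) for early, late in rates]


# Hypothetical mean firing rates (spikes/s) for three neurons.
example_rates = [(6.0, 4.0), (3.0, 3.0), (2.0, 6.0)]
indices = population_indices(example_rates)
n_positive = sum(1 for value in indices if value > 0)
```

Because the index is normalized by the summed rate, it is bounded between −1 and 1, so neurons with very different baseline firing rates contribute on a common scale.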

Next, we asked whether activity in ACC observed after shifts in value was correlated to our behavioral measure of attention. As described above, rats increased attention after block changes as measured by how quickly they oriented to the odor port upon illumination of the house lights. Rats were faster to respond to the house light when reward contingencies changed at the start of trial blocks compared with later in trial blocks (Fig. 1e). The difference between light-on latencies during the first and last 10 trials is plotted against the changes in firing that occur on these trials (Fig. 3d). Changes in firing were negatively correlated with light-on latencies, indicating that when activity was higher, behavioral measures of attention were more intense (p < 0.005; r2 = 0.24). Note that this correlation was not a result of a gradual decline in activity or latencies that might have occurred over the course of each recording session. The correlation was still present and significant when the last 10 trials for each comparison were taken from the previous block of trials (p < 0.005; r2 = 0.20).

Increased firing after reward prediction errors was also present before odor onset (Fig. 3a). As above, this was quantified by examining distributions of differences between early and late trials at the population level and by asking whether the difference between early and late trials was significant at the single-cell level (t test, p < 0.05). Activity was analyzed during an epoch that encompassed house light onset to odor onset. At the population level, both distributions were shifted in the positive direction indicating higher firing during early trials (upshifts: μ = 0.1039; p < 0.0001; downshifts: μ = 0.612; p = 0.1190, Wilcoxon test). Upshift and downshift distributions were not significantly different from each other (Wilcoxon test, p = 0.1372). The counts of neurons exhibiting significantly higher firing on early versus late trials were in the significant majority for upshifts (16% vs 0%) but did not reach significance for downshifts (11% vs 5%); however, the two effects were correlated at the population level, indicating that neurons tended to fire more strongly after both upshifts and downshifts in value. Finally, as for activity after odor onset, activity preceding it was negatively correlated with light-on latencies (p < 0.05; r2 = 0.32).

ACC signals errors of commission and reward prediction

Several studies have reported that activity in ACC signals errors in task performance (Ito et al., 2003; Totah et al., 2009). To determine whether we also see activity related to detection of errors, we asked how many neurons increased firing after the first indication that an error had occurred (1 s following well entry) relative to baseline (1.5 s before nose poke; t test, p < 0.05). Errors were defined as breaking the photobeam in the well opposite to that signaled by the forced-choice odor. Incorrect responses immediately turned off the house light, indicating that an error had occurred. Sixteen neurons (14%) fired more strongly after this event relative to baseline. The average activity of these neurons is illustrated in Figure 4a, which plots firing during correct and incorrect forced-choice trials. Activity is aligned on well entry and is higher after an errant response had occurred. To quantify this effect, we computed an index contrasting activity 1 s after well entry for error and correct trials [(error − correct)/(error + correct)]. This distribution (Fig. 4b) was significantly shifted in the positive direction, indicating higher firing after incorrect responses on forced-choice trials (μ = 0.09; p < 0.05, Wilcoxon test). Thus, results from the present study are consistent with what has been described previously in humans, rats, and primates, in that activity in ACC signals errors of commission during performance of our task.

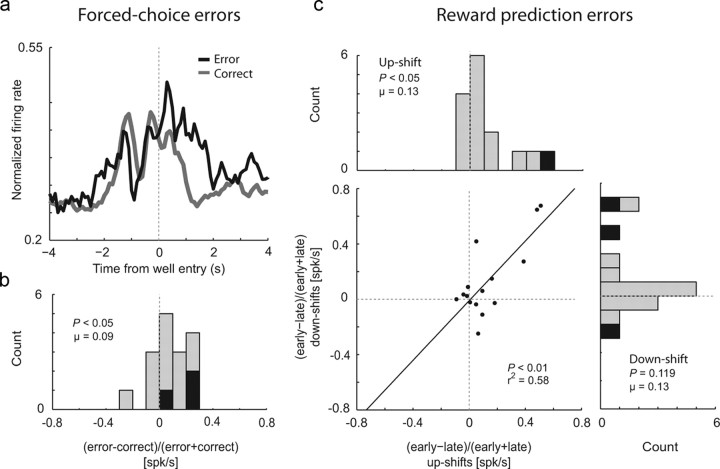

Figure 4.

Activity in ACC was modulated by forced-choice errors and reward-prediction errors. a, Average activity of error-related (1 s following well entry) neurons (n = 16) during forced-choice errors (black) and correct forced-choice trials (gray). b, Distribution reflecting the difference in activity between forced-choice errors and forced-choice correct trials [(error − correct) /(error + correct)]. Black bars represent the number of neurons that showed a significant difference between these responses (t test, p < 0.05). c, Distributions compare neural activity in early trials and late trials within blocks (early − late)/(early + late) for trials after upshifts (top) and downshifts (right) for all reward-response cells (n = 15). Black bars represent the number of neurons that showed a significant difference between these responses (t test, p < 0.05). Scatter plot (center) marks the correlation between the two distributions. N = 4 rats.

More recent reports have shown that ACC also signals errors in reward prediction similar to what we have described previously in ABL (Roesch et al., 2010a; Hayden et al., 2011). The above analysis examined the propensity for ACC to detect errors on trials after violations of expectancies. Here we examined activity during the actual delivery and omission of reward to determine whether ACC also signals reward prediction errors at the time of the violation. The following analysis is the same as previously performed in ABL (Roesch et al., 2010a). Neurons were first characterized as reward-responsive by comparing activity 1 s after reward delivery to baseline (t test, p < 0.05). Of the 111 neurons, 15 (14%) showed significant increases in activity at the time of reward delivery. Next, for each of these neurons, we compared activity (1 s after reward delivery or omission) during the first and last 10 trials after unexpected shifts in value. The distribution of indices representing the contrast between early and late trials [(early − late)/(early + late)] for both upshifts and downshifts is illustrated in Figure 4c. As in ABL, both distributions were shifted in the positive direction (upshift: μ = 0.13; p < 0.05; downshift: μ = 0.13; p = 0.119, Wilcoxon test) and were positively correlated with each other (p < 0.01; r2 = 0.58). Thus, as described previously for rat ABL and primate ACC, single cells in rat ACC reflected violations in reward prediction, regardless of valence.

ACC activity reflects expected reward size but not expected delay to reward

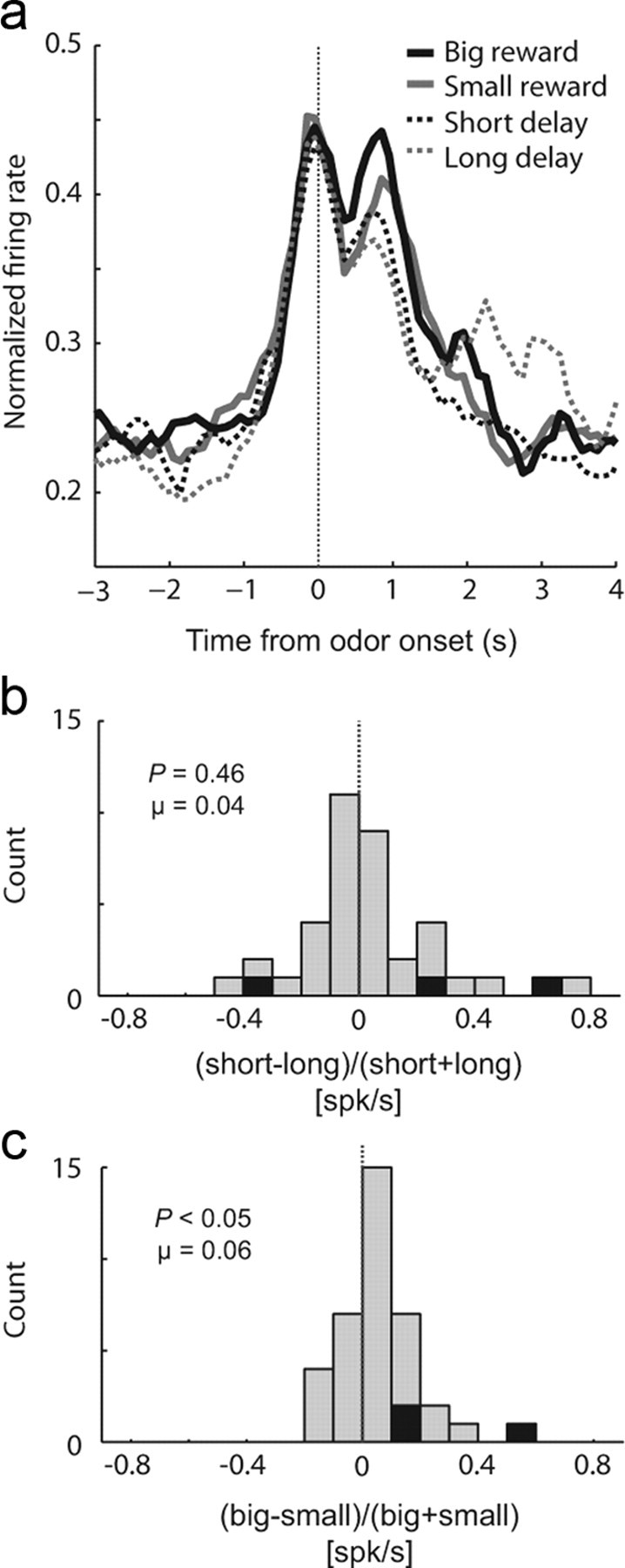

Finally, we asked whether activity in ACC might encode predictive value during odor sampling as reported during presentation of reward-predicting cues in previous studies (Oliveira et al., 2007; Sallet et al., 2007; Kennerley and Wallis, 2009; Kennerley et al., 2009; Hillman and Bilkey, 2010). Multiple reports suggest that ACC encodes several factors related to the value of expected reward, but none have tested the impact of time-discounted reward on firing in ACC. We found that activity in ACC represented expected reward size, but not delay. This is illustrated in Figure 5a, which plots the average firing rate over all task-related neurons (n = 38) aligned on odor onset. The first 10 trials of each block were excluded from this analysis so that activity could be examined after learning. Activity appeared higher during odor presentation when the odor cue predicted the large reward relative to the small reward, but did not appear to be stronger on short-delay compared with long-delay trials. To quantify this across the population, we computed the average firing rate for each neuron, starting 100 ms after odor onset and ending at well entry for each of the four trial types: big, short, small, and long. We then contrasted activity during short-delay trials against long-delay trials [(short − long)/(short + long)] and contrasted activity during large-reward trials against small-reward trials [(big − small)/(big + small)]. These distributions are plotted in Figure 5, b and c. The distribution examining the difference between differently sized rewards was significantly shifted in the positive direction, indicating higher firing for large versus small reward trials (Fig. 5c) (μ = 0.06; p < 0.05, Wilcoxon test). The distribution examining differences between differently delayed rewards was not significantly shifted (Fig. 5b) (μ = 0.04; p = 0.46, Wilcoxon test). Thus, activity from cue onset until the completion of the behavioral response reflected the size of the reward expected at the end of the trial but was not significantly modulated by the delay that the rats anticipated before receiving reward, even though both short-delay and large-reward trials were preferred over long-delay and small-reward trials, respectively (Fig. 1b–d).

Figure 5.

ACC encodes expected reward size, but not delay (n = 38). a, Curves representing population firing (aligned on odor onset) for expected big reward (thick black), small reward (thick gray), short delay (thin black), and long delay (dashed gray). The first 10 trials of each block were excluded from this analysis so that activity could be examined after learning. Note that reward delivery occurs ∼1.5 s after odor onset for short-, big-, and small-reward trials. Reward on long-delay trials occurs ∼1.5–8.5 s after odor onset, thus comparisons between long-delay trials and the other three trial types should not be made later during the trial. b, c, Distributions reflecting the difference in neural activity (100 ms after odor onset to well entry) between short and long [(short − long)/(short + long)] and big and small [(big − small)/(big + small)], respectively. Black bars represent the number of neurons that showed a significant difference between these responses (t test, p < 0.05). N = 4 rats.

Discussion

When outcomes of behavior are uncertain, attention for learning signals, theorized by Pearce and Hall (1980), are thought to focus neural processing on events that predict those outcomes so learning can occur. According to this idea, the attention that an environmental event receives is equal to the average of the unsigned prediction error generated across the past few trials, such that attention on the current trial reflects attention on the prior trial plus the absolute value of the summed error in outcome prediction. Here we show that activity in ACC reflects such unsigned changes in attention. Activity was elevated on behavioral trials after both upshifts and downshifts, was correlated with behavioral measures of attention, and took several trials to develop. We also observed changes in firing consistent with previous reports, suggesting that ACC signals errors of commission, reward prediction errors, and reward predictions.
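The attention rule summarized above is commonly written as α(n) = γ|λ − ΣV| + (1 − γ)α(n − 1), where α is the attention paid to a cue, λ is the outcome received, ΣV is the summed prediction, and γ weights the most recent unsigned error against past attention. The following Python sketch is only a minimal illustration under stated assumptions: the value of γ is arbitrary, predictions are held fixed across trials rather than being updated by learning, and the function names are hypothetical.

```python
def update_attention(alpha_prev, outcome, prediction, gamma=0.3):
    """One trial of the attention update.

    alpha_n = gamma * |outcome - prediction| + (1 - gamma) * alpha_prev
    gamma = 0.3 is an arbitrary illustrative value, not a fitted parameter.
    """
    return gamma * abs(outcome - prediction) + (1.0 - gamma) * alpha_prev


def attention_trace(alpha0, outcomes, predictions, gamma=0.3):
    """Apply the update across trials and return attention after each one."""
    alpha = alpha0
    trace = []
    for outcome, prediction in zip(outcomes, predictions):
        alpha = update_attention(alpha, outcome, prediction, gamma)
        trace.append(alpha)
    return trace


# Simplification: the prediction stays at 1.0 for three trials after a shift.
upshift = attention_trace(0.0, [2.0, 2.0, 2.0], [1.0, 1.0, 1.0])
downshift = attention_trace(0.0, [0.0, 0.0, 0.0], [1.0, 1.0, 1.0])
```

Two properties of this rule parallel the pattern reported here. Because the error enters as an absolute value, the upshift and downshift traces are identical, and because each trial adds only a fraction γ of the error, attention builds over several trials rather than jumping on the first trial after a shift.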

Error detection, prediction errors, and feedback

Much of the research related to elucidating the function of ACC has focused on error detection (Ito et al., 2003; Amiez et al., 2005; Quilodran et al., 2008; Rushworth and Behrens, 2008; Totah et al., 2009). Most of these studies report increases in ACC activity immediately after errors, defined as incorrect responding. More recent work has suggested that ACC does not simply detect errors, but is also important for signaling positive feedback (Kennerley et al., 2006; Oliveira et al., 2007; Rothé et al., 2011). Still others suggest that ACC's role in trial feedback might reflect some sort of prediction error encoding similar to what has been described for midbrain dopamine neurons and ABL (Roesch et al., 2010b).

We have previously used the task described in this article to dissociate signed and unsigned prediction error signaling in VTA dopamine neurons and ABL, respectively (Roesch et al., 2007, 2010a,b). Prediction error signals in ABL differed from those in VTA in two ways: activity was not inhibited when delivered rewards were worse than expected, and the signals did not appear upon the first violation but took several trials to develop. Similar findings have been described in primate ACC (Hayden et al., 2011).

We extend these findings by showing that, in our task, rats actually learned from reward prediction error signals and adjusted subsequent behavior accordingly. Here, during learning trials, activity in ACC was high and was correlated with the level of attention dedicated to events during those trials as rats learned new contingencies. Our results suggest that activity in ACC does not just signal reward prediction errors, but also signals the need for increased attention on subsequent trials so that learning can occur.

Attention and conflict monitoring

Another focus of ACC research, different from, but not entirely unrelated to, error-related function, is the examination of ACC's role in “monitoring conflict” between two competing responses. Such competition requires increased attention so that participants can override an automatic response to perform the task-imposed appropriate one. Activity in ACC has been shown to be positively correlated with the degree of conflict in a number of different tasks (Pardo et al., 1990; Badgaiyan and Posner, 1998; Carter et al., 1998; Botvinick et al., 2001; Braver et al., 2001; Paus, 2001; van Veen et al., 2001; De Martino et al., 2006; Magno et al., 2006).

In light of these results, it could be argued that increases in ACC activity observed after shifts in value in our task might reflect response conflict. That is, rats were trying to override their habitual response of responding in the direction that previously produced the better reward to perform the block-appropriate one, which is to respond in the opposite direction. One problem with this interpretation is that if ACC's job is to monitor conflict, it should do so for all conflicting situations. For example, we would expect increases in activity on forced-choice trials toward low-value reward. Under these conditions, conflict is created between making the habitual response of going toward the well with the better reward and following the task-appropriate rule, which is to move in the opposite direction (i.e., toward the low-value fluid well). Low-value forced-choice trials did not induce ACC neurons to fire more strongly, suggesting that ACC is not monitoring conflict during performance of this task, consistent with several other single-unit recording studies (Ito et al., 2003; Nakamura et al., 2005; Brown and Braver, 2008; Quilodran et al., 2008; Hayden et al., 2011).

Recent theoretical work suggests that error detection, reward prediction errors, and conflict monitoring might reflect the same underlying process (Alexander and Brown, 2011). This work considers these signals to be part of a generalized surprise/attention system. In each of these cases, activity in ACC might reflect unexpected nonoccurrence of an expected outcome. That is, activity related to errors might reflect comparison of actual versus expected outcomes, and activity related to conflict might represent differences between possible responses and their outcomes or the unexpected resulting conflict that arises when predictions do not match actual outcomes. Our work is consistent with this theory, demonstrating that ACC is active after errors of commission and reward prediction errors, and during the initiation of trials after reward contingencies change unexpectedly.

Finally, several studies have suggested that ACC activity is particularly high during general arousal or attention (MacDonald et al., 2000; Paus, 2001; Brown and Braver, 2005; Totah et al., 2009). Our findings are more in line with these reports; however, the signals described in our study cannot be adequately explained by generic changes in attention because not all attention-grabbing events impacted ACC activity in our task. Activity was not elevated for cues that predicted a more immediate reward even though they induced faster reaction times and more accurate task performance.

Reward prediction signals

In addition to encoding prediction errors, ACC also appears to encode reward predictions. It has been reported that activity during presentation of conditioned stimuli is modulated by the reward they predict (Shidara and Richmond, 2002; Amiez et al., 2006; Kennerley et al., 2009; Hayden and Platt, 2010; Hillman and Bilkey, 2010; Rushworth et al., 2011). In fact, ACC appears to be able to encode several factors that impact the value of expected outcomes (Kennerley and Wallis, 2009).

Consistent with this work, we show that activity in rat ACC was modulated by expected reward size. Interestingly, though, activity in ACC was not influenced by manipulations of delay even though rats showed a clear subjective preference for cues that predicted the more immediate reward. At first glance, this seemed very surprising to us because most brain areas in the circuit that includes ACC have been shown to encode reward delays (Roesch et al., 2006, 2007, 2009, 2010a,b; Stalnaker et al., 2010). Further, primate work has clearly suggested that ACC incorporates many different aspects of value into its firing. However, this result does fit well with several papers that have suggested that ACC might not account for delays in the same way that it accounts for other variables, such as effort, when computing the value of predicted rewards (Rushworth et al., 2004, 2007; Walton et al., 2004, 2006, 2007; Rudebeck et al., 2008). Specifically, it has been shown that rats with lesions of ACC are impaired on effort- but not delay-discounting tasks (Rudebeck et al., 2006), suggesting a critical role for ACC in signaling how much effort, but not time, is required to achieve reward.

In conclusion, proposed functions of ACC have included conflict monitoring, error detection, reward prediction error signaling, reinforcement learning, action-outcome encoding, and effort discounting. Recently published theoretical work has tried to tie together these seemingly disparate ACC signals as one generalized Pearce-Hall-like surprise signal (Alexander and Brown, 2011). This theory is built on the premise that ACC is involved in representing expectations about actions and in detecting surprising outcomes. This model predicts that activity in ACC represents learned predictions about the possible outcomes of actions and that activity should be maximal when those expected outcomes fail to occur. The purpose of this signal is to provide a training signal so that action-outcome predictions are updated when expectations are violated.

Here we show that activity patterns observed in rat ACC during learning are consistent with these predictions. Activity was high during trials after violations of reward expectations, regardless of valence. Activity in ACC also detected errors of commission and reward prediction errors, and signaled when the expected reward was to be large. These data suggest that ACC is important for marshaling neural resources so that animals can learn when reward contingencies change. This signal is similar to that described previously in ABL, which reports unsigned prediction errors that develop over several trials, but differed in that activity was increased before and during trial events. Detection of prediction errors and the subsequent changes in attention critical for learning might be dependent on the ABL–ACC circuit.

Footnotes

This work was supported by National Institute on Drug Abuse Grant R01DA031695 (M.R.R.).

References

- Alexander WH, Brown JW. Medial prefrontal cortex as an action-outcome predictor. Nat Neurosci. 2011;14:1338–1344. doi: 10.1038/nn.2921.

- Amiez C, Joseph JP, Procyk E. Anterior cingulate error-related activity is modulated by predicted reward. Eur J Neurosci. 2005;21:3447–3452. doi: 10.1111/j.1460-9568.2005.04170.x.

- Amiez C, Joseph JP, Procyk E. Reward encoding in the monkey anterior cingulate cortex. Cereb Cortex. 2006;16:1040–1055. doi: 10.1093/cercor/bhj046.

- Badgaiyan RD, Posner MI. Mapping the cingulate cortex in response selection and monitoring. Neuroimage. 1998;7:255–260. doi: 10.1006/nimg.1998.0326.

- Belova MA, Paton JJ, Morrison SE, Salzman CD. Expectation modulates neural responses to pleasant and aversive stimuli in primate amygdala. Neuron. 2007;55:970–984. doi: 10.1016/j.neuron.2007.08.004.

- Botvinick MM, Braver TS, Barch DM, Carter CS, Cohen JD. Conflict monitoring and cognitive control. Psychol Rev. 2001;108:624–652. doi: 10.1037/0033-295x.108.3.624.

- Braver TS, Barch DM, Gray JR, Molfese DL, Snyder A. Anterior cingulate cortex and response conflict: effects of frequency, inhibition and errors. Cereb Cortex. 2001;11:825–836. doi: 10.1093/cercor/11.9.825.

- Brown JW, Braver TS. Learned predictions of error likelihood in the anterior cingulate cortex. Science. 2005;307:1118–1121. doi: 10.1126/science.1105783.

- Brown JW, Braver TS. A computational model of risk, conflict, and individual difference effects in the anterior cingulate cortex. Brain Res. 2008;1202:99–108. doi: 10.1016/j.brainres.2007.06.080.

- Bryden DW, Johnson EE, Diao X, Roesch MR. Impact of expected value on neural activity in rat substantia nigra pars reticulata. Eur J Neurosci. 2011;33:2308–2317. doi: 10.1111/j.1460-9568.2011.07705.x.

- Calu DJ, Roesch MR, Haney RZ, Holland PC, Schoenbaum G. Neural correlates of variations in event processing during learning in central nucleus of amygdala. Neuron. 2010;68:991–1001. doi: 10.1016/j.neuron.2010.11.019.

- Carter CS, Braver TS, Barch DM, Botvinick MM, Noll D, Cohen JD. Anterior cingulate cortex, error detection, and the online monitoring of performance. Science. 1998;280:747–749. doi: 10.1126/science.280.5364.747.

- Cassell MD, Wright DJ. Topography of projections from the medial prefrontal cortex to the amygdala in the rat. Brain Res Bull. 1986;17:321–333. doi: 10.1016/0361-9230(86)90237-6.

- De Martino B, Kumaran D, Seymour B, Dolan RJ. Frames, biases, and rational decision-making in the human brain. Science. 2006;313:684–687. doi: 10.1126/science.1128356.

- Dziewiatkowski J, Spodnik JH, Biranowska J, Kowianski P, Majak K, Morys J. The projection of the amygdaloid nuclei to various areas of the limbic cortex in the rat. Folia Morphol (Warsz) 1998;57:301–308.

- Hayden BY, Platt ML. Neurons in anterior cingulate cortex multiplex information about reward and action. J Neurosci. 2010;30:3339–3346. doi: 10.1523/JNEUROSCI.4874-09.2010.

- Hayden BY, Heilbronner SR, Pearson JM, Platt ML. Surprise signals in anterior cingulate cortex: neuronal encoding of unsigned reward prediction errors driving adjustment in behavior. J Neurosci. 2011;31:4178–4187. doi: 10.1523/JNEUROSCI.4652-10.2011.

- Hillman KL, Bilkey DK. Neurons in the rat anterior cingulate cortex dynamically encode cost-benefit in a spatial decision-making task. J Neurosci. 2010;30:7705–7713. doi: 10.1523/JNEUROSCI.1273-10.2010.

- Holroyd CB, Coles MG. The neural basis of human error processing: reinforcement learning, dopamine, and the error-related negativity. Psychol Rev. 2002;109:679–709. doi: 10.1037/0033-295X.109.4.679.

- Ito S, Stuphorn V, Brown JW, Schall JD. Performance monitoring by the anterior cingulate cortex during saccade countermanding. Science. 2003;302:120–122. doi: 10.1126/science.1087847.

- Kaye H, Pearce JM. The strength of the orienting response during Pavlovian conditioning. J Exp Psychol Anim Behav Process. 1984;10:90–109.

- Kennerley SW, Wallis JD. Evaluating choices by single neurons in the frontal lobe: outcome value encoded across multiple decision variables. Eur J Neurosci. 2009;29:2061–2073. doi: 10.1111/j.1460-9568.2009.06743.x.

- Kennerley SW, Walton ME, Behrens TE, Buckley MJ, Rushworth MF. Optimal decision making and the anterior cingulate cortex. Nat Neurosci. 2006;9:940–947. doi: 10.1038/nn1724.

- Kennerley SW, Dahmubed AF, Lara AH, Wallis JD. Neurons in the frontal lobe encode the value of multiple decision variables. J Cogn Neurosci. 2009;21:1162–1178. doi: 10.1162/jocn.2009.21100.

- MacDonald AW, 3rd, Cohen JD, Stenger VA, Carter CS. Dissociating the role of the dorsolateral prefrontal and anterior cingulate cortex in cognitive control. Science. 2000;288:1835–1838. doi: 10.1126/science.288.5472.1835.

- Magno E, Foxe JJ, Molholm S, Robertson IH, Garavan H. The anterior cingulate and error avoidance. J Neurosci. 2006;26:4769–4773. doi: 10.1523/JNEUROSCI.0369-06.2006.

- Matsumoto M, Matsumoto K, Abe H, Tanaka K. Medial prefrontal cell activity signaling prediction errors of action values. Nat Neurosci. 2007;10:647–656. doi: 10.1038/nn1890.

- Nakamura K, Roesch MR, Olson CR. Neuronal activity in macaque SEF and ACC during performance of tasks involving conflict. J Neurophysiol. 2005;93:884–908. doi: 10.1152/jn.00305.2004.

- Oliveira FT, McDonald JJ, Goodman D. Performance monitoring in the anterior cingulate is not all error related: expectancy deviation and the representation of action-outcome associations. J Cogn Neurosci. 2007;19:1994–2004. doi: 10.1162/jocn.2007.19.12.1994.

- Pardo JV, Pardo PJ, Janer KW, Raichle ME. The anterior cingulate cortex mediates processing selection in the Stroop attentional conflict paradigm. Proc Natl Acad Sci U S A. 1990;87:256–259. doi: 10.1073/pnas.87.1.256.

- Paus T. Primate anterior cingulate cortex: where motor control, drive and cognition interface. Nat Rev Neurosci. 2001;2:417–424. doi: 10.1038/35077500.

- Pearce JM, Hall G. A model for Pavlovian learning: variations in the effectiveness of conditioned but not of unconditioned stimuli. Psychol Rev. 1980;87:532–552.

- Pearce JM, Kaye H, Hall G. Predictive accuracy and stimulus associability: development of a model for Pavlovian learning. In: Commons ML, Herrnstein RJ, Wagner AR, editors. Quantitative analyses of behavior. Cambridge, MA: Ballinger; 1982. pp. 241–255.

- Pearce JM, Wilson P, Kaye H. The influence of predictive accuracy on serial conditioning in the rat. Q J Exp Psychol B. 1988;40:181–198.

- Quilodran R, Rothé M, Procyk E. Behavioral shifts and action valuation in the anterior cingulate cortex. Neuron. 2008;57:314–325. doi: 10.1016/j.neuron.2007.11.031.

- Roesch MR, Taylor AR, Schoenbaum G. Encoding of time-discounted rewards in orbitofrontal cortex is independent of value representation. Neuron. 2006;51:509–520. doi: 10.1016/j.neuron.2006.06.027.

- Roesch MR, Calu DJ, Schoenbaum G. Dopamine neurons encode the better option in rats deciding between differently delayed or sized rewards. Nat Neurosci. 2007;10:1615–1624. doi: 10.1038/nn2013.

- Roesch MR, Singh T, Brown PL, Mullins SE, Schoenbaum G. Ventral striatal neurons encode the value of the chosen action in rats deciding between differently delayed or sized rewards. J Neurosci. 2009;29:13365–13376. doi: 10.1523/JNEUROSCI.2572-09.2009.

- Roesch MR, Calu DJ, Esber GR, Schoenbaum G. Neural correlates of variations in event processing during learning in basolateral amygdala. J Neurosci. 2010a;30:2464–2471. doi: 10.1523/JNEUROSCI.5781-09.2010.

- Roesch MR, Calu DJ, Esber GR, Schoenbaum G. All that glitters ... dissociating attention and outcome expectancy from prediction errors signals. J Neurophysiol. 2010b;104:587–595. doi: 10.1152/jn.00173.2010.

- Rothé M, Quilodran R, Sallet J, Procyk E. Coordination of high gamma activity in anterior cingulate and lateral prefrontal cortical areas during adaptation. J Neurosci. 2011;31:11110–11117. doi: 10.1523/JNEUROSCI.1016-11.2011.

- Rudebeck PH, Walton ME, Smyth AN, Bannerman DM, Rushworth MF. Separate neural pathways process different decision costs. Nat Neurosci. 2006;9:1161–1168. doi: 10.1038/nn1756.

- Rudebeck PH, Bannerman DM, Rushworth MF. The contribution of distinct subregions of the ventromedial frontal cortex to emotion, social behavior, and decision making. Cogn Affect Behav Neurosci. 2008;8:485–497. doi: 10.3758/CABN.8.4.485.

- Rushworth MF, Behrens TE. Choice, uncertainty and value in prefrontal and cingulate cortex. Nat Neurosci. 2008;11:389–397. doi: 10.1038/nn2066.

- Rushworth MF, Walton ME, Kennerley SW, Bannerman DM. Action sets and decisions in the medial frontal cortex. Trends Cogn Sci. 2004;8:410–417. doi: 10.1016/j.tics.2004.07.009.

- Rushworth MF, Behrens TE, Rudebeck PH, Walton ME. Contrasting roles for cingulate and orbitofrontal cortex in decisions and social behaviour. Trends Cogn Sci. 2007;11:168–176. doi: 10.1016/j.tics.2007.01.004.

- Rushworth MF, Noonan MP, Boorman ED, Walton ME, Behrens TE. Frontal cortex and reward-guided learning and decision-making. Neuron. 2011;70:1054–1069. doi: 10.1016/j.neuron.2011.05.014.

- Sallet J, Quilodran R, Rothé M, Vezoli J, Joseph JP, Procyk E. Expectations, gains, and losses in the anterior cingulate cortex. Cogn Affect Behav Neurosci. 2007;7:327–336. doi: 10.3758/cabn.7.4.327.

- Scheffers MK, Coles MG. Performance monitoring in a confusing world: error-related brain activity, judgments of response accuracy, and types of errors. J Exp Psychol Hum Percept Perform. 2000;26:141–151. doi: 10.1037//0096-1523.26.1.141.

- Shidara M, Richmond BJ. Anterior cingulate: single neuronal signals related to degree of reward expectancy. Science. 2002;296:1709–1711. doi: 10.1126/science.1069504.

- Sripanidkulchai K, Sripanidkulchai B, Wyss JM. The cortical projection of the basolateral amygdaloid nucleus in the rat: a retrograde fluorescent dye study. J Comp Neurol. 1984;229:419–431. doi: 10.1002/cne.902290310.

- Stalnaker TA, Calhoon GG, Ogawa M, Roesch MR, Schoenbaum G. Neural correlates of stimulus-response and response-outcome associations in dorsolateral versus dorsomedial striatum. Front Integr Neurosci. 2010;4:12. doi: 10.3389/fnint.2010.00012.

- Swan JA, Pearce JM. The orienting response as an index of stimulus associability in rats. J Exp Psychol Anim Behav Process. 1988;14:292–301.

- Totah NK, Kim YB, Homayoun H, Moghaddam B. Anterior cingulate neurons represent errors and preparatory attention within the same behavioral sequence. J Neurosci. 2009;29:6418–6426. doi: 10.1523/JNEUROSCI.1142-09.2009.

- Tye KM, Cone JJ, Schairer WW, Janak PH. Amygdala neural encoding of the absence of reward during extinction. J Neurosci. 2010;30:116–125. doi: 10.1523/JNEUROSCI.4240-09.2010.

- van Veen V, Cohen JD, Botvinick MM, Stenger VA, Carter CS. Anterior cingulate cortex, conflict monitoring, and levels of processing. Neuroimage. 2001;14:1302–1308. doi: 10.1006/nimg.2001.0923.

- Wallis JD, Kennerley SW. Heterogeneous reward signals in prefrontal cortex. Curr Opin Neurobiol. 2010;20:191–198. doi: 10.1016/j.conb.2010.02.009.

- Walton ME, Devlin JT, Rushworth MF. Interactions between decision making and performance monitoring within prefrontal cortex. Nat Neurosci. 2004;7:1259–1265. doi: 10.1038/nn1339.

- Walton ME, Kennerley SW, Bannerman DM, Phillips PE, Rushworth MF. Weighing up the benefits of work: behavioral and neural analyses of effort-related decision making. Neural Netw. 2006;19:1302–1314. doi: 10.1016/j.neunet.2006.03.005.

- Walton ME, Rudebeck PH, Bannerman DM, Rushworth MF. Calculating the cost of acting in frontal cortex. Ann N Y Acad Sci. 2007;1104:340–356. doi: 10.1196/annals.1390.009.