Abstract

The ability to localize visual objects is a fundamental component of human behavior and requires the integration of position information from object components. The retinal eccentricity of a stimulus and the locus of spatial attention can affect object localization, but it is unclear whether these factors alter the global localization of the object, the localization of object components, or both. We used psychophysical methods in humans to quantify behavioral responses in a centroid estimation task. Subjects located the centroid of briefly presented random dot patterns (RDPs). A peripheral cue was used to bias attention towards one side of the display. We found that although subjects were able to localize centroid positions reliably, they typically had a bias towards the fovea and a shift towards the locus of attention. We compared quantitative models that explain these effects either as biased global localization of the RDPs or as anisotropic integration of weighted dot component positions. A model that allowed retinal eccentricity and spatial attention to alter the weights assigned to individual dot positions best explained subjects’ performance. These results show that global position perception depends on both the retinal eccentricity of stimulus components and their positions relative to the current locus of attention.

Introduction

In the natural course of a day, humans localize complex visual objects by integrating position information from object components, such as edges, contours, and other structural elements. Often, this localization is accurate, for instance when subjects make eye movements that are reliably close to the centroid (i.e., center of mass) of an extended stimulus (He & Kowler, 1991; Kowler & Blaser, 1995). However, accurate localization of visual targets is not a ubiquitous finding. Rather, localization of visual objects can be biased by many aspects of the relevant stimulus, including its spatial configuration (Denisova, Singh, & Kowler, 2006; Landy, 1993; Landy & Kojima, 2001; McGowan, Kowler, Sharma, & Chubb, 1998; Morgan, Hole, & Glennerster, 1990; Rose & Halpern, 1992; Vishwanath & Kowler, 2003), its motion trajectory (Krekelberg, 2001; Krekelberg & Lappe, 2001), and retinal eccentricity (Müsseler, van der Heijden, Mahmud, Deubel, & Ertsey, 1999).

When targets are presented briefly in the periphery, localization is biased in the direction of the fovea. This bias is evident for both single point stimuli (Mateeff & Gourevich, 1983; O’Regan, 1984; van der Heijden, van der Geest, de Leeuw, Krikke, & Müsseler, 1999) and spatially extended stimuli (Stork, Müsseler, & van der Heijden, 2010). Notably, this bias increases in magnitude for more spatially extended stimuli (Müsseler, van der Heijden, Mahmud, Deubel, & Ertsey, 1999; Ploner, Ostendorf, & Dick, 2004; but see Kowler & Blaser, 1995), and the gradient of this effect across increasing eccentricities is steeper for spatially extended stimuli than for single point stimuli (Müsseler et al., 1999). This suggests that localization of an object may result from an imperfect eccentricity-dependent integration of its component parts. However, McGowan and colleagues (1998) examined differential weighting of the components in a random dot pattern (RDP) and failed to find any significant differences in the utilization of dot components based on retinal eccentricity or relative to target center. In contrast, Drew and colleagues (2010) showed differential weighting of target components based on the distance relative to the target center. In that study, however, the effects of retinal eccentricity were not investigated. A key aim of our study was to understand and quantify the role of retinal eccentricity in this potentially imperfect spatial integration process.

In addition to the aforementioned stimulus variables, spatial localization is also affected by internal variables such as visual spatial attention. Directed attention can improve the accuracy (Bocianski, Müsseler, & Erlhagen, 2010; Fortenbaugh & Robertson, 2011) and reliability (Prinzmetal, Amiri, Allen, & Edwards, 1998) of localization but can also introduce systematic errors (Kosovicheva, Fortenbaugh, & Robertson, 2010; Suzuki & Cavanagh, 1997; Tsal & Bareket, 1999). Since these studies measured performance only for single targets, how spatial attention alters the integration of information when judging the location of a spatially extended object remains unknown. Previous studies have focused on subjects’ abilities to select specific target components using feature-based attention. These studies have shown that attention can be used to select features of a display and make reliable localization judgments on a subset of components (Cohen, Schnitzer, Gersch, Singh, & Kowler, 2007; Drew, Chubb, & Sperling, 2010). To our knowledge, however, there are no behavioral studies that have determined whether spatial attention influences object localization before or after integration of target components. Therefore, the second aim of our study was to understand how spatial attention modulates the integration of position information specifically in target localization and determine whether the effects of spatial attention influence the components of a target or the localization of the target as a whole.

To better understand position perception, integration, and the roles of retinal eccentricity and spatial attention in these processes, we developed quantitative, descriptive models of visual target localization. We then used these models to analyze behavioral data from an experiment in which human observers localized the centroid of RDPs. While an RDP is not a natural stimulus, it has a complexity between that of single dots and true extended objects, and is well suited to study spatial integration in a quantitative manner. Our results showed that subjects indicated the centroid of the RDPs reliably, but also had systematic, eccentricity-dependent biases in this localization process. Moreover, exogenous attention introduced its own bias and shifted the centroid toward the locus of attention. Our modeling results showed that both the effect of eccentricity and the effect of attention are explained most parsimoniously by assuming that the location of an extended object is determined as the weighted sum of its components. Each subject assigned weights to the components that varied with eccentricity, with either higher or lower weights near the fovea. In addition, an exogenous cue led to a local increase in weights combined with an overall gradient toward the hemifield with the cue. This is consistent with the idea that attention acts on a representation of component positions (i.e., the dots), and not only on the outcome of the spatial integration process (i.e., a centroid estimate). Taken together, these data suggest that spatial integration and its attentional modulation may take place in early visual representations.

Methods

This study consisted of two main experiments. All experimental conditions assessed centroid estimation, but each focused on a specific factor that could influence the final centroid determination: retinal eccentricity (Experiment 1 – Bilateral-Cue), lateralized spatial attention (Experiment 1 – Unilateral-Cue), and motor-response bias (Experiment 2). All experimental procedures were approved by the local Institutional Review Board and followed the National Institutes of Health’s guidelines for the ethical treatment of human subjects. All subjects reported normal or corrected-to-normal vision and provided written informed consent.

Participants

Nine subjects participated in Experiment 1. We excluded two subjects because they could not complete the minimum number of trials (see Experimental Procedure) or could not perform the task. The remaining seven subjects ranged in age from 18 to 34 years. Two subjects were male and two subjects reported being left-handed. Subject 1 was an author (JMW); all remaining subjects were naïve to the purpose of the experiment.

Four subjects participated in Experiment 2. Subjects ranged in age from 21 to 34 years. One subject was female. All subjects were right-handed and two out of the four subjects also completed Experiment 1. All four subjects were naïve to the purpose of the experiment.

Apparatus

Stimuli appeared on a Sony FD Trinitron (GDM-C520) CRT monitor at a refresh rate of 120 Hz using custom software, Neurostim (http://neurostim.sourceforge.net), and were viewed from a distance of 57 cm. The display measured 40° (width) by 30° (height) and had a resolution of 1024 × 768 pixels. A head-mounted Eyelink II eye tracker system (SR Research, Mississauga, Canada) recorded eye movements by tracking the pupils of both eyes at a sample rate of 500 Hz. Individually molded bite bars were used to reduce head movement.

Visual Stimuli

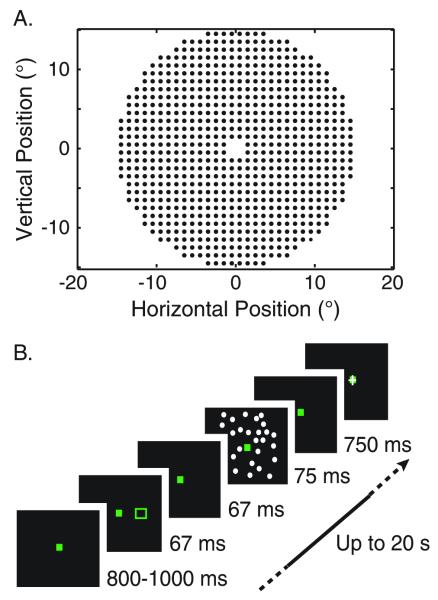

The main stimulus was a random dot pattern (RDP) consisting of 25 small white (50 cd/m²) squares (0.16° × 0.16°) on a black (0.4 cd/m²) background. On each trial, 25 unique dot positions were selected randomly from a grid of 712 possible dot positions within a radius of 15° from the fixation point. Each potential dot location in the grid was 1° away from its nearest horizontal and vertical neighbor. In addition, no dots appeared within a 2° × 2° square region surrounding the fixation point (Figure 1A). The actual centroids of the RDPs across all trials approximated a normal distribution with a horizontal and vertical mean of 0° and a standard deviation of 1.5°.
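The stimulus construction described above can be sketched in a few lines. This is an illustration only, not the Neurostim implementation: it assumes that the 1° grid is offset by half a degree from fixation (positions at ..., −0.5°, 0.5°, ...), an assumption that happens to reproduce the stated count of 712 candidate positions. The function names are hypothetical.

```python
import random

def build_grid(radius=15.0, exclusion_half_width=1.0):
    """Candidate dot positions (deg) on a 1-deg grid around fixation.

    Assumption: grid points sit at half-degree offsets from fixation.
    """
    coords = [k + 0.5 for k in range(-15, 15)]  # -14.5, ..., 14.5
    grid = []
    for x in coords:
        for y in coords:
            inside_display = x * x + y * y <= radius * radius
            # 2 x 2 deg square around fixation in which no dots appear
            inside_exclusion = (abs(x) < exclusion_half_width
                                and abs(y) < exclusion_half_width)
            if inside_display and not inside_exclusion:
                grid.append((x, y))
    return grid

def sample_rdp(grid, n_dots=25, rng=None):
    """Pick n_dots unique positions at random from the grid."""
    rng = rng or random.Random()
    return rng.sample(grid, n_dots)

def centroid(dots):
    """Actual centroid: mean of the horizontal and vertical coordinates."""
    n = len(dots)
    return (sum(x for x, _ in dots) / n, sum(y for _, y in dots) / n)

grid = build_grid()
dots = sample_rdp(grid, rng=random.Random(0))
print(len(grid), centroid(dots))
```

Under the half-degree assumption, `len(grid)` is 712; other grid alignments give a different count.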

Figure 1.

Experimental paradigm. (A) Array of all possible dot positions. 25 positions were selected at random on each trial. (B) Example trial from Experiment 1 (Right-Cue condition). Subjects fixated centrally for the duration of the trial. A non-informative cue appeared at an eccentricity of 7.5° just before the onset of the RDP. Shortly after the offset of the RDP, a cursor appeared at the point of fixation and subjects moved the cursor to the perceived centroid location. In separate trials, the cues appeared on the left, right, or on both sides of the visual display.

A green square outline (1° × 1°; line width: 0.12°) appeared at an eccentricity of 7.5° along the horizontal meridian. This non-informative cue appeared to both the left and right of fixation (Bilateral-Cue) or only on one side of the visual display (Unilateral-Cue) to cue attention exogenously. The central fixation stimulus was a small green square (0.12° × 0.12°), which remained visible for the duration of the trial at the center of the display.

Eye Tracking

Subjects were required to maintain fixation within a 3° × 3° square at the center of the display for the duration of each trial, including the response epochs. Trials in which subjects failed to fixate appropriately were terminated immediately and repeated randomly at a later time within the block.

Trial Presentation

Each block consisted of 200 trials. Blocks of Bilateral-Cue trials were interleaved with blocks of Unilateral-Cue trials within a session. Blocks of Unilateral-Cue trials contained both Left-Cue and Right-Cue trials presented randomly within the block. Subjects completed blocks of trials from Experiment 2 in separate sessions. Typically, subjects completed three blocks of experimental trials per hour. All subjects received between one and two hours (three to six blocks) of training on the task prior to completing experimental trials. Data collected during the training blocks were not analyzed.

Experimental Procedure

Experiment 1

The experimental task was to estimate the centroid of an RDP (Figure 1B). In the Unilateral-Cue conditions, we examined the influence of exogenous attention on performance and cued subjects to one side of the visual display, either to the left (Left-Cue condition) or right (Right-Cue condition) of fixation. The goal of the Bilateral-Cue condition in Experiment 1 was to assess effects of retinal eccentricity on centroid estimation. Therefore, we balanced the allocation of exogenous attention across both sides of the visual display by presenting non-informative cues simultaneously to the left and right of fixation. We presented bilateral cues instead of no cues to keep the visual display and the temporal structure of the task as similar as possible between the Unilateral- and Bilateral-Cue conditions.

Each trial began when the subject fixated the central fixation point. After a variable delay, the attentional cue(s) appeared for 67 ms (8 frames) just prior (134 ms) to the appearance of the RDP. This cue-target interstimulus interval was chosen to maximize effects of exogenous attention on behavioral performance (Cheal & Lyon, 1991; Müller & Rabbitt, 1989). The RDP remained visible for 75 ms (9 frames). A cursor (white cross-hair; 50 cd/m², 0.51°) appeared at fixation 750 ms after target offset. Subjects were instructed to locate the centroid, i.e., the average position, of all dots presented on a trial by moving a cursor to the centroid using a computer mouse in their right hand (regardless of handedness) and then clicking the left button.
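As a quick check on the frame counts quoted above, the durations follow directly from the 120 Hz refresh rate given in the Apparatus section. The helper name below is hypothetical.

```python
REFRESH_HZ = 120  # monitor refresh rate stated in the Apparatus section

def frames_to_ms(n_frames, refresh_hz=REFRESH_HZ):
    """Duration in milliseconds of n_frames video frames."""
    return 1000.0 * n_frames / refresh_hz

cue_ms = frames_to_ms(8)  # cue duration, reported as 67 ms
rdp_ms = frames_to_ms(9)  # RDP duration, reported as 75 ms
print(round(cue_ms, 1), round(rdp_ms, 1))
```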

All subjects completed a minimum of 600 experimental trials for the Bilateral-Cue condition and 800 experimental trials for each of the Left- and Right-Cue conditions.

Experiment 2

Experiment 2 re-examined centroid estimates using a different mode of behavioral response (i.e., two-alternative forced choice). The procedure was identical to the Unilateral-Cue trials in Experiment 1 except that instead of a cursor appearing 750 ms after target offset, a green probe line extending the full height of the display (0.04° width) appeared briefly (250 ms) to the left or right of the actual centroid of the RDP (offsets: −4°, −2.5°, −1.5°, −0.75°, −0.25°, 0.25°, 0.75°, 1.5°, 2.5°, 4°). Subjects indicated whether the perceived centroid was to the left or right of the probe line (Question A) by pressing the left or right arrow keys on each trial, respectively. To control for the possibility that the cue condition biased the subject’s key choice rather than their perception per se, subjects also completed blocks in which they were instructed to make the reverse comparison; that is, whether the probe was to the left or right of the perceived centroid (Question B). Subjects first completed all blocks answering one question and then completed all blocks answering the other question. The order was counterbalanced across subjects. All subjects completed a minimum of 600 trials per instruction condition.

Data Analysis

Experiment 1

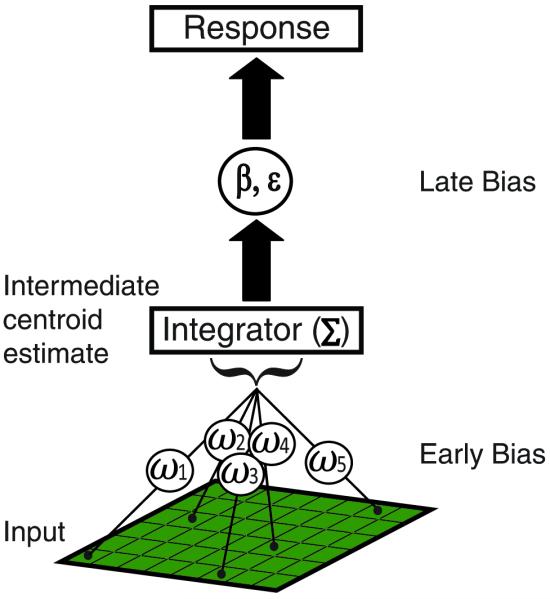

We conceptualize the estimation of centroids by human observers as a three-stage, weighted integration process in which the individual dot representations are first combined and then transformed into a behavioral response (Figure 2). The actual centroid of an RDP is the mean of the horizontal and vertical dot coordinates. Human centroid estimates are inevitably imperfect and include some degree of variable error (noise) as well as constant error (bias).

Figure 2.

Model of centroid estimation. This is conceptualized in three stages; input, integration and output, with up to two sources of perceptual bias (early & late). The integrator combines the veridical dot representations, which may differentially contribute to the final response. We model these different levels of contribution with specific weights, ωi, for each dot position (early bias). These weighted dot representations are summed in an integrator resulting in an intermediate centroid estimate. The intermediate centroid estimate can be subjected to multiplicative (β) or additive (ε) bias (late bias) resulting in the final centroid estimate, i.e., response.

These sources of error could arise either by altering the dot representations themselves (early bias) or by altering the output of the integration process (late bias). To gain insight into the computations that underlie the subjects’ centroid estimates, we developed quantitative models that describe the subject’s response as a function of the actual centroid position (Equation 1) or in terms of a weighted integration of individual dot positions (Equations 2-5). In the following sections, we show only the equations for the horizontal (X) coordinates, but analogous equations were used for the vertical (Y) coordinates.

Model Descriptions

Bilateral-Cue Condition

Late Bias Model

The Late Bias model assumes that subjects integrate the dot representations veridically, but the output of the integrator is perturbed by a linear, eccentricity-dependent bias and/or constant bias. Accordingly, the model computes the perceived centroid as a simple function of the actual centroid, cx. Here, we consider a simple linear bias according to

$$\hat{c}_x = \beta_x c_x + \varepsilon_x \tag{1}$$

where βx is a slope parameter that quantifies the magnitude of the eccentricity-dependent horizontal bias and εx is an error term along the horizontal dimension. The value of β in the fitted model for a given subject indicates whether the observer had an overall linear foveofugal (β > 1) or foveopetal (β < 1) bias in their centroid estimates relative to the point of fixation. If β = 1, then there was no overall linear bias due to the retinal eccentricity of the centroid position. The parameter ε represents a constant bias in the centroid estimates across all trials regardless of the position of the actual centroid. We determined a separate β and ε for the vertical coordinates.
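To make the interpretation of β and ε concrete, the following sketch fits the linear relation described for Equation 1 (response = β × actual centroid + ε) to synthetic data with closed-form ordinary least squares. It is an illustration under stated assumptions: it handles one coordinate at a time (the text fits X and Y concurrently), and the simulated values (β = 0.8, ε = 0.2, noise SD 1.5°) are hypothetical.

```python
import random

def fit_late_bias(actual, response):
    """Ordinary least-squares fit of response = beta * actual + eps."""
    n = len(actual)
    mean_c = sum(actual) / n
    mean_r = sum(response) / n
    sxx = sum((c - mean_c) ** 2 for c in actual)
    sxy = sum((c - mean_c) * (r - mean_r) for c, r in zip(actual, response))
    beta = sxy / sxx
    eps = mean_r - beta * mean_c
    return beta, eps

# Synthetic observer (hypothetical values): compressed toward fixation.
rng = random.Random(1)
actual = [rng.gauss(0.0, 1.5) for _ in range(600)]
response = [0.8 * c + 0.2 + rng.gauss(0.0, 1.5) for c in actual]

beta, eps = fit_late_bias(actual, response)
# beta < 1: foveopetal bias; beta > 1: foveofugal bias; beta == 1: none
label = "foveopetal" if beta < 1 else "foveofugal" if beta > 1 else "none"
print(beta, eps, label)
```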

Although we report here only a linear late bias model, we did consider the possibility of other late bias models in which the perceived centroid is computed as a non-linear function of the actual centroid position (e.g. a sigmoid). Qualitative assessments of the relationship between perceived and actual centroids suggested that approximately linear effects predominated and that the addition of non-linear components to the late bias model was not necessary.

Early Bias Model – Weighted Average (Weighted Average)

The use of weighted average models in prior studies of localization (Landy, 1993; Landy & Kojima, 2001; McGowan, Kowler, Sharma, & Chubb, 1998) prompted us to also examine this descriptive model in our centroid estimation task. Unlike the Late Bias model, the Weighted Average model does not assume equal integration of all dot components. Rather, this model can capture an early bias in which individual dot representations contribute differently to the overall centroid estimate on the basis of their positions in the visual field. The Weighted Average model implements a normalized weighted sum of all dot positions on a given trial and also allows for a constant late bias, ε. Specifically, the Weighted Average model for displays containing 25 dots (as used in this study) is

$$\hat{c}_x = \frac{\sum_{i=1}^{25} \omega_i x_i}{\sum_{i=1}^{25} \omega_i} + \varepsilon_x \tag{2}$$

where ωi = ω(xi, yi) is a weighting function that assigns a weight to the ith dot on the basis of its horizontal and vertical position in the visual field (see below). A dot position with a higher weight contributes more to the centroid estimate, ĉx, than a dot position with a lower weight. Preliminary, non-parametric analyses, in which we used a spatially gridded model and allocated weights to specific grid locations (up to 120), showed that the effects of eccentricity were well described by a unimodal, Gaussian-shaped weighting function anchored at the point of fixation. Therefore, we chose the following form:

$$\omega(x_i, y_i) = a \exp\left[-\left(\frac{x_i^2}{2\sigma_x^2} + \frac{y_i^2}{2\sigma_y^2}\right)\right] + b \tag{3}$$

The free parameters in this function determine the width (σx and σy) of the Gaussian function and a constant offset across all spatial positions, b. The amplitude of the Gaussian, a, was either +1 or −1 to model an upright or inverted Gaussian, respectively. By definition, all weights should be positive in a weighted average calculation; therefore, we constrained the weighting function to prevent negative weights (see below).
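The Weighted Average computation can be illustrated with a short sketch. It assumes that the weighting function of Equation 3 is a two-dimensional Gaussian centred on fixation of the form a·exp(−(x²/2σx² + y²/2σy²)) + b; the exact normalization of the exponent is an assumption, and the parameter values are hypothetical.

```python
import math

def gaussian_weight(x, y, a, b, sigma_x, sigma_y):
    """Assumed form of the weighting function: Gaussian at fixation plus offset."""
    exponent = x ** 2 / (2 * sigma_x ** 2) + y ** 2 / (2 * sigma_y ** 2)
    return a * math.exp(-exponent) + b

def weighted_average_x(dots, a, b, sigma_x, sigma_y, eps_x=0.0):
    """Normalized weighted sum of horizontal dot positions plus a constant bias."""
    weights = [gaussian_weight(x, y, a, b, sigma_x, sigma_y) for x, y in dots]
    if min(weights) <= 0:
        raise ValueError("a weighted average requires positive weights")
    total = sum(w * x for w, (x, _) in zip(weights, dots))
    return total / sum(weights) + eps_x

# Two dots: one near fixation, one in the periphery (unweighted mean x = 5.5).
dots = [(0.5, 0.5), (10.5, 0.5)]
upright = weighted_average_x(dots, a=1.0, b=0.5, sigma_x=3.0, sigma_y=3.0)
inverted = weighted_average_x(dots, a=-1.0, b=1.5, sigma_x=3.0, sigma_y=3.0)
print(upright, inverted)
```

With an upright Gaussian (a = +1) the dot near fixation dominates and the estimate falls below the unweighted mean of 5.5°. With an inverted Gaussian (a = −1) the peripheral dot dominates and the estimate exceeds it.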

Early Bias Model – Weighted Sum (Weighted Sum)

The Weighted Sum model is similar to our Weighted Average model in that it allows for an unequal integration of the dot representations, but differs in that it does not include normalization of the weights (Equation 4). While this is a relatively minor mathematical change, the Weighted Sum model can capture a wider range of response strategies (see Discussion).

$$\hat{c}_x = \sum_{i=1}^{25} \omega_i x_i + \varepsilon_x \tag{4}$$

We use the same two-dimensional Gaussian function for ω (Equation 3), now allowing the amplitude (a) to range freely. We again constrained the weighting function to only allow positive weights. While this is not imperative in a weighted sum calculation as it is in a weighted average calculation, in the context of our model, a negative weight would alter the sign of the dot component position. This would cause a dot to shift the perceived centroid towards the opposite hemifield. Preliminary (non-parametric) analyses showed that only one subject (S7) had a small subset (< 10%) of negative weights. Therefore, to maximize the similarity between Weighted Average and Weighted Sum models, parameter constraints remained consistent in both cases.
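The practical consequence of dropping the normalization can be seen in a small numerical example. The sketch assumes that the Weighted Sum is the unnormalized sum of ωi·xi plus ε, as described above, and uses five hypothetical dot positions instead of 25 for brevity. Scaling all weights by a common factor leaves a weighted average unchanged but scales a weighted sum, so only the latter can express an overall compression or expansion of the estimate.

```python
def weighted_sum_x(xs, weights, eps_x=0.0):
    """Unnormalized weighted sum of horizontal positions plus constant bias."""
    return sum(w * x for w, x in zip(weights, xs)) + eps_x

def weighted_average_x(xs, weights, eps_x=0.0):
    """Same sum, but normalized by the total weight."""
    return weighted_sum_x(xs, weights) / sum(weights) + eps_x

xs = [-6.5, -2.5, 1.5, 3.5, 9.5]   # hypothetical dot positions, mean = 1.1
n = len(xs)
uniform = [1.0 / n] * n            # equal weights summing to 1
compressed = [0.8 / n] * n         # same shape, uniformly scaled down

print(weighted_sum_x(xs, uniform))         # equals the plain mean
print(weighted_sum_x(xs, compressed))      # mean scaled by 0.8
print(weighted_average_x(xs, compressed))  # normalization removes the scaling
```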

Unilateral-Cue Condition

Late Bias Model

This model is exactly the same as the Late Bias model for the Bilateral-Cue condition (Equation 1) and we model the Left- and Right-Cue conditions separately, resulting in a unique Late Bias model for each condition. This model captures whether attention yields a constant bias in the centroid estimates across trials, ε, or modulates an eccentricity-dependent bias, β. We hypothesized that εx would differ between the Left-Cue and Right-Cue conditions and, specifically, would be greater in the Right-Cue condition.

Early Bias Model – Weighted Sum (Weighted Sum)

The Weighted Sum model is the only early bias model we considered for the Unilateral-Cue conditions because it performed consistently better than the Weighted Average model in the Bilateral-Cue condition (see Results). Here, we used an identical Weighted Sum model (Equation 4) but modified the weighting function (Equation 3) to account for lateralized attentional effects. Specifically, we hypothesized that attentional differences across the visual field may have altered the peak position or the width of the Gaussian weighting function from Equation 3. Alternatively, or in addition, attention may have imparted a more global change in which the contributions of dot positions in the attended visual field are enhanced while those on the opposite visual field are attenuated. To account for such effects, we allowed a shift of the peak (or trough) along the horizontal and vertical dimensions (μx and μy) and extended the weighting function with linear gradients in both the horizontal and vertical dimensions (mx and my).

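$$\omega(x_i, y_i) = a \exp\left[-\left(\frac{(x_i - \mu_x)^2}{2\sigma_x^2} + \frac{(y_i - \mu_y)^2}{2\sigma_y^2}\right)\right] + m_x x_i + m_y y_i + b \tag{5}$$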

In addition, we investigated other types of weighting functions including one that used multiple Gaussians to allow for bimodal peaks in weights, but did not find enough evidence to support the use of these alternate models.
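The extended weighting function for the Unilateral-Cue conditions can be sketched as follows. The sketch assumes that Equation 5 adds a peak shift (μx, μy) and linear gradients (mx, my) to the Gaussian-plus-offset form used for the Bilateral-Cue condition; the functional form and all parameter values shown are assumptions for illustration.

```python
import math

def attention_weight(x, y, a, b, sigma_x, sigma_y,
                     mu_x=0.0, mu_y=0.0, m_x=0.0, m_y=0.0):
    """Assumed form: shifted Gaussian + linear gradients + constant offset."""
    exponent = ((x - mu_x) ** 2 / (2 * sigma_x ** 2)
                + (y - mu_y) ** 2 / (2 * sigma_y ** 2))
    return a * math.exp(-exponent) + m_x * x + m_y * y + b

# Hypothetical parameters on the scale of 1/25 per dot.
params = dict(a=0.02, b=0.04, sigma_x=3.0, sigma_y=3.0)

# Without shift or gradient, mirror-image positions get equal weight.
left = attention_weight(-7.5, 0.0, **params)
right = attention_weight(7.5, 0.0, **params)

# A positive horizontal gradient favors the right hemifield.
left_cued = attention_weight(-7.5, 0.0, m_x=0.001, **params)
right_cued = attention_weight(7.5, 0.0, m_x=0.001, **params)
print(left, right, left_cued, right_cued)
```

With μ and m set to zero the function reduces to the fixation-centred form, so the Bilateral-Cue weighting function is a special case.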

Model Fitting

Model parameters were estimated separately for each subject and condition (Bilateral-Cue, Left-Cue and Right-Cue). Least squares fitting methods were used to minimize the model error concurrently across X and Y coordinates. Pearson’s correlation analysis confirmed that each of the fitted models had a significant correlation between the model predictions and subject responses (t(>500) > 14, p < 10⁻⁶).

To determine the parameter values in the Late Bias model, we used the lsqcurvefit routine from the Optimization Toolbox in Matlab 7.9 (The MathWorks, Natick, MA). The non-negativity constraint on the weights (see above) required us to use constrained nonlinear optimization to fit the Weighted Average and Weighted Sum models. To do this, we used the fmincon routine from the Optimization Toolbox in Matlab with the following constraint: (a + b) > 0. We also constrained the lower and upper bounds for each parameter and set them as follows: μx and μy to −15 and 15 to keep the center of the Gaussian function within the stimulus display area; a, b, and ε to −100 and 100; and σx and σy to 0 and 7.5 so that the Gaussian function would reach an asymptotic level within the stimulus presentation area. Preliminary non-parametric analyses supported the use of 7.5° as the maximum value. We then used repeated curve fits, starting from 1000 random initial parameter choices within these bounds, to find the optimal set of parameters. We used this optimal set of parameter estimates for subsequent analysis.
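The multi-start initialization can be sketched independently of any particular optimizer. The example below draws random starting points within the bounds listed above and rejects those that violate (a + b) > 0. It does not reproduce fmincon: each start would still need to be passed to a constrained optimizer, and the fit with the lowest error kept. Uniform sampling is an assumption, a single `eps` entry stands in for the constant bias terms, and bounds for the gradient parameters are omitted because the text does not state them.

```python
import random

# Lower and upper bounds as listed in the text.
BOUNDS = {
    "mu_x": (-15.0, 15.0), "mu_y": (-15.0, 15.0),
    "a": (-100.0, 100.0), "b": (-100.0, 100.0), "eps": (-100.0, 100.0),
    "sigma_x": (0.0, 7.5), "sigma_y": (0.0, 7.5),
}

def is_feasible(params):
    """Check the box bounds and the constraint (a + b) > 0."""
    in_bounds = all(lo <= params[k] <= hi for k, (lo, hi) in BOUNDS.items())
    return in_bounds and (params["a"] + params["b"]) > 0

def random_start(rng):
    """Draw one starting point uniformly within bounds (rejection sampling)."""
    while True:
        params = {k: rng.uniform(lo, hi) for k, (lo, hi) in BOUNDS.items()}
        if is_feasible(params):
            return params

rng = random.Random(0)
starts = [random_start(rng) for _ in range(1000)]
print(len(starts), starts[0])
```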

We determined 95% bootstrap confidence intervals (95% CI Method) for each of the model parameters using the bootci function in Matlab. For each of 1000 bootstrapped sets, we re-sampled the data with replacement, and re-ran the fmincon procedure with the optimal parameters as initial values.
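The resampling logic behind a bootstrap confidence interval can be illustrated with a minimal sketch. It uses the simple percentile method and a closed-form slope estimate, which is a stand-in for refitting the full model with fmincon; Matlab's bootci may use a different interval type, so this should not be read as a reproduction of the analysis. The data are synthetic and the function names are hypothetical.

```python
import random

def slope(pairs):
    """Least-squares slope of response on actual centroid."""
    n = len(pairs)
    mean_c = sum(c for c, _ in pairs) / n
    mean_r = sum(r for _, r in pairs) / n
    sxx = sum((c - mean_c) ** 2 for c, _ in pairs)
    sxy = sum((c - mean_c) * (r - mean_r) for c, r in pairs)
    return sxy / sxx

def bootstrap_ci(pairs, statistic, n_boot=1000, alpha=0.05, rng=None):
    """Percentile bootstrap CI: resample trials with replacement."""
    rng = rng or random.Random()
    n = len(pairs)
    stats = sorted(
        statistic([pairs[rng.randrange(n)] for _ in range(n)])
        for _ in range(n_boot)
    )
    lower = stats[int((alpha / 2) * n_boot)]
    upper = stats[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper

# Synthetic trials (hypothetical observer with slope 0.8).
rng = random.Random(2)
pairs = [(c, 0.8 * c + rng.gauss(0.0, 1.5))
         for c in (rng.gauss(0.0, 1.5) for _ in range(300))]

lo, hi = bootstrap_ci(pairs, slope, rng=random.Random(3))
print(slope(pairs), lo, hi)
```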

Comparing Models (AIC Method)

We used the Akaike Information Criterion (AIC) as a measure of relative model performance. This criterion allows the comparison of non-nested models that use different numbers of free parameters and penalizes a model for additional free parameters. Specifically, we used the Least Squares AIC:

$$\mathrm{AIC} = n \ln\left(\frac{\sum_{i=1}^{n} \hat{e}_i^2}{n}\right) + 2K \tag{6}$$

where n is the number of trials, êi is the residual for the ith trial, and K is the number of free parameters. This calculation assumes that the errors are normally distributed and have constant variance.

Note that for models with an equal number of parameters, the AIC value is essentially determined by the average squared residual error per trial (i.e., Model Prediction Squared Error, MPSE). We use this measure in the main text to provide an intuitive measure of performance. Statistically valid model selection, however, requires comparison of the full AIC values. Models with the lowest AIC value provide the most parsimonious account of the data. We followed the guidelines of Burnham and Anderson (2002) and considered a model to be notably better if its AIC value was less than another model’s AIC by four or more units. Models with an AIC difference less than four were considered to be statistically indistinguishable.

Experiment 2

For each offset of the probe relative to the true centroid position, we calculated the proportion of responses in which subjects judged the probe to be to the left of the centroid. The data from separate cueing conditions (Left-Cue, Right-Cue) were then fitted with separate cumulative Gaussian functions. The probe offset that corresponded to the inflection point of this psychometric function (point of subjective equality, PSE) was used as an index of the perceived centroid. Psychometric functions were fitted using the psignifit toolbox version 2.5.6 (Wichmann & Hill, 2001a, 2001b) in Matlab 7.9. We determined confidence intervals for the PSE using a bootstrapping method and used these confidence intervals to determine whether subject responses differed significantly between cueing conditions (95% CI Method).
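The relation between the psychometric function and the PSE can be illustrated with a minimal sketch. It models the proportion of "probe left of centroid" responses as one minus a cumulative Gaussian of probe offset and recovers the PSE with a coarse least-squares grid search. The grid search is a simple stand-in for the psignifit fitting procedure, not a reproduction of it, and the noiseless data and parameter values are hypothetical.

```python
import math

# Probe offsets (deg) relative to the actual centroid, as listed in the text.
OFFSETS = [-4, -2.5, -1.5, -0.75, -0.25, 0.25, 0.75, 1.5, 2.5, 4]

def p_probe_left(offset, pse, sigma):
    """Probability of judging the probe left of the centroid.

    Decreases with offset; equals 0.5 at the PSE.
    """
    z = (offset - pse) / (sigma * math.sqrt(2.0))
    return 1.0 - 0.5 * (1.0 + math.erf(z))

def fit_pse(offsets, proportions):
    """Least-squares grid search over PSE and sigma (0.05 deg steps)."""
    best = None
    for i in range(-80, 81):       # PSE from -4 to 4
        pse = i * 0.05
        for j in range(2, 101):    # sigma from 0.1 to 5
            sigma = j * 0.05
            err = sum((p_probe_left(x, pse, sigma) - p) ** 2
                      for x, p in zip(offsets, proportions))
            if best is None or err < best[0]:
                best = (err, pse, sigma)
    return best[1], best[2]

# Noiseless synthetic observer whose perceived centroid is shifted by 0.5 deg.
proportions = [p_probe_left(x, pse=0.5, sigma=1.5) for x in OFFSETS]
pse_hat, sigma_hat = fit_pse(OFFSETS, proportions)
print(pse_hat, sigma_hat)
```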

Results

In all experiments, subjects estimated the centroid of a briefly presented random dot pattern, RDP (Figure 1). We first report these behavioral responses across all experiments, showing that (a) subjects estimated centroids reliably; (b) exogenous attentional cues biased centroid estimates towards the attentional focus; and (c) the bias due to attention cannot be explained by a motor-response bias. We then describe a number of quantitative models to account for performance with and without lateralized attention. Our modeling results demonstrate that both the retinal eccentricity of the dots and the locus of spatial attention modulate spatial integration, in part, by differentially altering the contribution of specific dot components within the RDP.

Behavioral Results

Centroid Estimation (Experiment 1: Bilateral-Cue)

We first confirmed that subjects were capable of identifying the approximate centroid of the RDPs. The constant error (i.e., bias), defined as the mean of the difference between the subjects’ centroid estimates and the actual centroids, was 0.18° horizontally (STE = 0.09°) and −0.27° vertically (STE = 0.12°) across subjects (Figure 3A). Variable error, defined as the standard deviation of subject response error, averaged across subjects was 1.56° horizontally (STE = 0.18°) and 1.46° vertically (STE = 0.17°). We next calculated the correlation between the behavioral responses and the actual centroid on a trial-by-trial basis for each subject using Pearson’s correlation coefficient. All coefficients were significant and ranged from 0.43 to 0.83 (t(>500) > 14, p < 0.0001). This demonstrates that subjects used the positions of the dots on a trial-by-trial basis to guide their behavioral responses and did not just click at the center of the screen.
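The three summary measures used here follow directly from their definitions. The sketch below computes constant error (mean response error), variable error (standard deviation of response error), and the Pearson correlation for one coordinate. It assumes the sample standard deviation (n − 1 denominator), which the text does not specify, and the example values are hypothetical.

```python
import math

def constant_error(responses, actual):
    """Mean of (response - actual centroid) across trials."""
    errors = [r - a for r, a in zip(responses, actual)]
    return sum(errors) / len(errors)

def variable_error(responses, actual):
    """Standard deviation of the response error (n - 1 denominator assumed)."""
    errors = [r - a for r, a in zip(responses, actual)]
    mean_err = sum(errors) / len(errors)
    return math.sqrt(sum((e - mean_err) ** 2 for e in errors) / (len(errors) - 1))

def pearson_r(u, v):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(u)
    mean_u, mean_v = sum(u) / n, sum(v) / n
    suv = sum((a - mean_u) * (b - mean_v) for a, b in zip(u, v))
    suu = sum((a - mean_u) ** 2 for a in u)
    svv = sum((b - mean_v) ** 2 for b in v)
    return suv / math.sqrt(suu * svv)

# Hypothetical horizontal data (deg) for four trials.
actual = [0.0, 1.0, 2.0, 3.0]
responses = [0.5, 1.0, 2.5, 3.0]
print(constant_error(responses, actual),
      variable_error(responses, actual),
      pearson_r(responses, actual))
```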

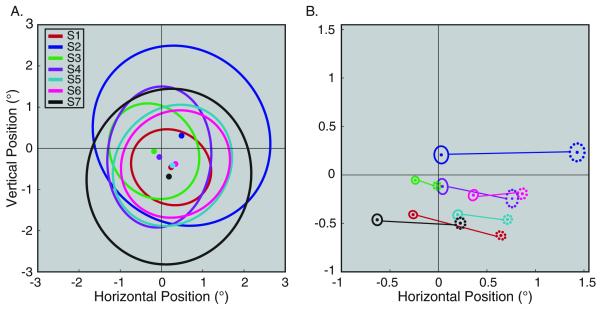

Figure 3.

Behavioral responses per subject. (A) Experiment 1. Bilateral-Cue condition. Constant error (center of ellipse, dots) relative to the actual centroid (0, 0) and variable error (ellipse = 1SD) for each subject. Negative values indicate a response to the left (X-axis) or down (Y-axis) relative to the actual centroid. (B) Experiment 1. Unilateral-Cue conditions: Constant (center of ellipse, dots) and variable (ellipse = 1STE) error for Left-Cue (solid ellipse) and Right-Cue (dotted ellipse) conditions. Each pair of ellipses denotes 1 subject. For all subjects, the centroid estimate in the Left-Cue condition was significantly to the left (t(>1500) < −4.5, p < 0.001) of the centroid estimate in the Right-Cue condition (arrows). Only S1 showed a significant shift in the vertical direction (t(1797) = 3.45, p < 0.001).

This correlation between subject response and the actual centroid does not eliminate the possibility that subjects may have used a subset of the dots in each trial to determine the centroid location. Previous studies have observed that some subjects place particular emphasis on the boundaries of objects (Findlay, Brogan, & Wenban-Smith, 1993), and localize a dot pattern at the centroid of the implied target shape rather than at the centroid of all the dot positions (Melcher & Kowler, 1999). Therefore, we investigated whether subjects determined the centroid of the implied shape, defined as the polygon formed by the dots along the convex hull of the RDPs, rather than the centroid of all the dot components. Because the centroid of the implied shape and the true centroid of all the dots are inevitably correlated, we used partial correlation analysis to disentangle these influences on performance. The partial correlation between subject responses and the actual centroid using all of the dots (group median: 0.60), given the centroid of the implied shape, was significantly higher (rank sum statistic = 110; p < 0.0001) than the partial correlation between subject responses and the centroid of the implied shape (group median: 0.14), given the centroid of all the dots. Using the same methods, we also investigated whether subjects used the average position of the dots on the convex hull, and found similar results. Therefore, there was no indication that subjects mainly used the outermost dots of the RDP when determining the centroid estimate. We will explore and quantify other behavioral strategies in more detail in the Model Selection & Analysis section.
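The partial correlation logic can be illustrated with the standard first-order formula, r(xy·z) = (r_xy − r_xz·r_yz) / sqrt((1 − r_xz²)(1 − r_yz²)). The sketch uses synthetic data in which responses depend only on the all-dot centroid while the implied-shape centroid is merely correlated with it; the variable names, noise levels, and data are hypothetical and serve only to show how the two partial correlations separate.

```python
import math
import random

def pearson_r(u, v):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(u)
    mean_u, mean_v = sum(u) / n, sum(v) / n
    suv = sum((a - mean_u) * (b - mean_v) for a, b in zip(u, v))
    suu = sum((a - mean_u) ** 2 for a in u)
    svv = sum((b - mean_v) ** 2 for b in v)
    return suv / math.sqrt(suu * svv)

def partial_corr(x, y, z):
    """Correlation between x and y after removing the linear effect of z."""
    r_xy, r_xz, r_yz = pearson_r(x, y), pearson_r(x, z), pearson_r(y, z)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))

# Synthetic observer who uses the centroid of all dots.
rng = random.Random(4)
all_dots = [rng.gauss(0.0, 1.5) for _ in range(500)]
hull = [c + rng.gauss(0.0, 1.0) for c in all_dots]      # correlated proxy
response = [c + rng.gauss(0.0, 1.5) for c in all_dots]  # driven by all dots

r_all_given_hull = partial_corr(response, all_dots, hull)
r_hull_given_all = partial_corr(response, hull, all_dots)
print(r_all_given_hull, r_hull_given_all)
```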

Lateralized Spatial Attention (Experiment 1: Unilateral-Cue)

The main goal of the Unilateral-Cue condition was to determine how exogenous spatial attention influenced subjects’ centroid estimates. In this condition subjects localized a centroid after being cued to either the left (Left-Cue condition) or the right (Right-Cue condition) side of the visual display.

We again found that subjects responded reliably even when cued unilaterally. The Pearson correlation between the centroid estimates and the actual centroid ranged from 0.57 to 0.84 (t(>700) > 21, p < 0.0001). Importantly, attention yielded a significant horizontal bias in the direction of the attended locus for all subjects (t(>1500) < −4.5, p < 0.001; see Figure 3B). The constant error, averaged across subjects, was −0.07° (horizontal; STE = 0.13°) and −0.21° (vertical; STE = 0.09°) in the Left-Cue condition and 0.66° (horizontal; STE = 0.18°) and −0.27° (vertical; STE = 0.11°) in the Right-Cue condition. Only one subject (S1) had a significant difference, 0.22°, in the vertical direction (t(1797) = 3.45, p < 0.001). These differences are not due to subjects’ eye position as their mean horizontal eye position during presentation of the RDP did not differ significantly between the Left-Cue condition, −0.11° (STE = 0.08°), and the Right-Cue condition, −0.12° (STE = 0.08°), for any of the subjects.

In addition, attention did not alter subject response variability, which was consistent across all conditions, i.e. Left-Cue, Right-Cue and Bilateral-Cue (one-way repeated measures ANOVA: F = 1.848, p = 0.20). The variable error in the Left-Cue and Right-Cue conditions ranged from 1.28° to 2.74°.

Motor-response Bias (Experiment 2)

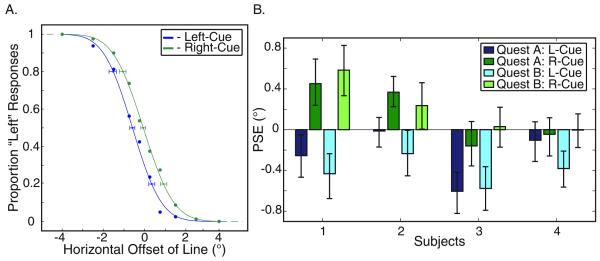

Given that subjects used the computer mouse to indicate the location of the centroid, it is possible that the findings of Experiment 1 were due to an effect of attention on the motor response rather than an effect on visual perception. Specifically, subjects might have simply clicked closer to the attentional cue without a true bias in the location of the perceived centroid. In Experiment 2, we assessed this possibility by repeating the Unilateral-Cue condition using a non-spatial, two alternative forced choice response paradigm rather than a spatially directed motor response. Specifically, subjects were asked to report whether their perceived centroid was to the left or right of a reference line that appeared briefly after the offset of the RDP. Even in this paradigm, though, there is the possibility for motor-response bias. To allow us to determine whether the cue biased the subjects’ selection of button presses or their perception, we also reversed the task instructions (in separate sessions); that is, subjects were asked to report whether the line was to the left or right of the perceived centroid.

Figure 4A plots for one subject the percentage of trials in which the line was reported to be to the left of the centroid as a function of the physical offset of the reference line. For this subject, as for the majority of subjects (Figure 4B), the point of subjective equality (PSE) shifted in the direction of spatial attention and remained consistent in direction regardless of the specific task instructions. Thus, Experiment 2 confirms that the perceived centroid of RDPs shifts toward the locus of attention, regardless of the specific modality of motor response.
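As a rough illustration of how a PSE can be read from such data, the sketch below fits a cumulative Gaussian by a coarse maximum-likelihood grid search. The choice of a cumulative Gaussian, the grid ranges, and the response counts are assumptions made for this example and are not taken from the Methods; `p_line_left` and `fit_pse` are hypothetical helper names.

```python
import math

def p_line_left(offset, pse, sigma):
    """Probability of reporting the line as left of the centroid.

    Falls from 1 to 0 as the line moves rightward and equals 0.5 at the PSE.
    """
    z = (offset - pse) / (sigma * math.sqrt(2.0))
    return 1.0 - 0.5 * (1.0 + math.erf(z))

def fit_pse(offsets, n_left, n_total, pse_grid, sigma_grid):
    """Grid-search maximum-likelihood estimate of (PSE, sigma)."""
    best_pse, best_sigma, best_ll = None, None, -math.inf
    for pse in pse_grid:
        for sigma in sigma_grid:
            ll = 0.0
            for x, k, n in zip(offsets, n_left, n_total):
                # Clip probabilities so the log-likelihood stays finite.
                p = min(max(p_line_left(x, pse, sigma), 1e-9), 1.0 - 1e-9)
                ll += k * math.log(p) + (n - k) * math.log(1.0 - p)
            if ll > best_ll:
                best_pse, best_sigma, best_ll = pse, sigma, ll
    return best_pse, best_sigma

# Made-up counts for a simulated observer whose perceived centroid lies
# 0.5 deg to the left of the actual centroid (as might follow a left cue).
offsets = [-3.0, -2.0, -1.0, 0.0, 1.0, 2.0, 3.0]
n_total = [40] * len(offsets)
n_left = [round(40 * p_line_left(x, -0.5, 1.2)) for x in offsets]

pse_grid = [i * 0.05 for i in range(-40, 41)]      # -2 to 2 deg
sigma_grid = [0.3 + i * 0.05 for i in range(60)]   # 0.3 to 3.25 deg
pse_hat, sigma_hat = fit_pse(offsets, n_left, n_total, pse_grid, sigma_grid)
print(pse_hat, sigma_hat)
```

A negative fitted PSE in this convention corresponds to a perceived centroid to the left of the actual centroid.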

Figure 4.

Centroid estimates in a 2AFC variant of the localization task. (A) Psychometric functions for one subject. Each data point is the proportion of trials in which the subject chose the line as being left of the centroid for Left-Cue (blue) and Right-Cue (green) trials. Horizontal axis indicates the physical offset between the reference line and the actual centroid. Error bars depict 95% confidence intervals for 25%, 50% (point of subjective equality, PSE) and 75% thresholds. In this plot, data from trials in which subjects were asked to report whether the centroid was to the left of the line (Question A) and whether the line was to the left of the centroid (Question B) are combined. This subject has a perceived centroid (PSE) significantly to the left in the Left-Cue condition relative to the Right-Cue condition. (B) Constant error (PSE) relative to the actual centroid for each subject. Each bar shows the PSE for a specific combination of cue condition (Left-Cue: blue bars; Right-Cue: green bars) and Question Type (A: darker bars; B: lighter bars). Negative values indicate perceived centroids to the left of the actual centroid. Error bars depict 95% confidence intervals. For both question types, the predicted perceived centroid in the Left-Cue condition was significantly to the left relative to the Right-Cue condition (t(1797) = 3.45, p < 0.001) for all but one comparison (Subject 4, Question A).

Model Selection & Analysis

In the Behavioral Results section, we showed that subjects localized centroids accurately though imperfectly, and spatial attention introduced further biases in this process. The goal of this section is to describe and understand these results in the context of a three-stage, weighted integration model (Figure 2). In this scheme, inaccuracies in centroid estimates could arise from improper weighting of the individual dot representations (early bias), from a bias in the output of the integrator (late bias), or both. To assess these possibilities, we analyzed the data using three quantitative models that each implemented a different operation for the computation of centroids. In each case, the models were used to predict subjects’ centroid estimates on a trial-by-trial basis using knowledge of the RDP dot positions.

Retinal Eccentricity (Experiment 1: Bilateral-Cue)

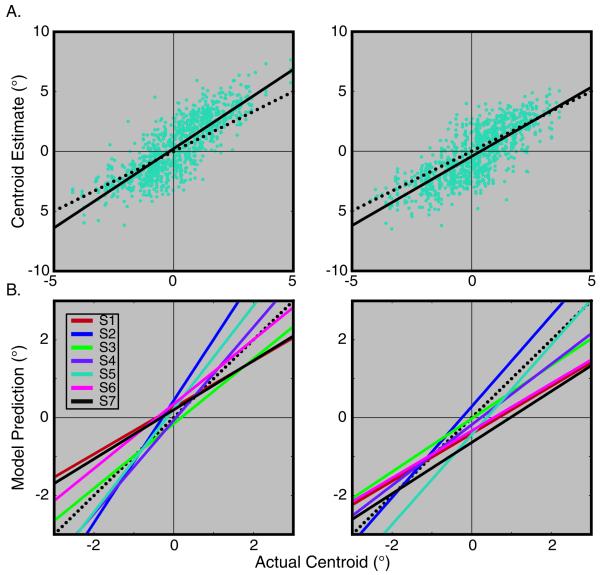

We first assessed the performance of a Late Bias model in which observers are assumed to compute a veridical centroid at an early stage of processing but then subject the output of this operation to a late bias that is a linear function of its retinal eccentricity (Equation 1). The slope parameter of this function, β, characterizes the eccentricity-related bias across the horizontal or vertical dimensions of the visual field. A β significantly less than 1 indicates an overall foveopetal bias in subjects’ centroid estimates; that is, subjects tended to report the centroid to be closer to the fovea than its true position. A value of β significantly greater than 1 indicates that the observer had an overall linear foveofugal bias. Four out of the seven subjects had a significant foveopetal bias in the perceived centroid in both the horizontal and vertical dimensions (i.e., β < 1 [95% CI Method]; mean = 0.68°, STE = 0.03°; Figure 5). In contrast, two out of the remaining three subjects had a significant foveofugal bias in the perceived centroid in both the horizontal and vertical dimensions; their responses therefore exaggerated the true eccentricity of the centroid (i.e., β > 1 [95% CI Method]; mean = 1.30°, STE = 0.1°). The remaining subject had a significant foveofugal bias (β = 1.20°) in the horizontal direction and a foveopetal bias (β = 0.78°) in the vertical direction.
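The interpretation of β can be made concrete with a small least-squares sketch for one spatial dimension. It assumes the linear form response = β · centroid + offset; whether Equation 1 includes an offset term is not restated in this section, so the offset and the function name `fit_late_bias` are assumptions of this example, and the numbers are invented.

```python
def fit_late_bias(centroids, responses):
    """Ordinary least squares for response = beta * centroid + offset."""
    n = len(centroids)
    mean_c = sum(centroids) / n
    mean_r = sum(responses) / n
    sxx = sum((c - mean_c) ** 2 for c in centroids)
    sxy = sum((c - mean_c) * (r - mean_r) for c, r in zip(centroids, responses))
    beta = sxy / sxx
    offset = mean_r - beta * mean_c
    return beta, offset

# Invented, noise-free example: responses compressed toward fixation (0 deg).
centroids = [-4.0, -2.0, 0.0, 2.0, 4.0]          # actual centroid, deg
responses = [0.7 * c + 0.1 for c in centroids]   # simulated centroid estimates, deg

beta, offset = fit_late_bias(centroids, responses)
bias = "foveopetal" if beta < 1 else "foveofugal"
print(round(beta, 3), round(offset, 3), bias)
```

In practice the classification would rest on whether the confidence interval of β excludes 1, not on the point estimate alone.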

Figure 5.

Late Bias model. (A) Centroid estimates of a single subject (S5) plotted against the actual centroid position for the horizontal (Left panel) and vertical (Right panel) dimensions. Fitted Late Bias linear regression model (solid line). This subject shows a foveofugal bias (i.e., β > 1). (B) Fitted Late Bias model for all subjects along the horizontal (Left panel) and vertical (Right panel) dimensions. Each solid line depicts the estimated behavioral response as a function of the actual centroid position for one subject using the fitted parameters from the Late Bias model. Four subjects show a significant foveopetal bias in both dimensions (red, black, green, pink), two subjects show a significant foveofugal bias in both dimensions (dark and light blue), and one subject shows a foveofugal bias along the horizontal dimension and a foveopetal bias along the vertical dimension (purple) [95% CI Method]. Unity (dotted) line shows where actual centroid equals the centroid estimate.

To probe for early biases, we evaluated models in which each dot in the RDP was assigned a weight based on its position in the visual field (Equations 2 and 4). In a preliminary analysis, we implemented a nonparametric model using a separate weight parameter for different regions across the visual field, in which no assumptions were made about the shape of the underlying distribution of weights across the visual field. These analyses showed some subjects with higher weights in more foveal locations that gradually decreased toward the periphery and others with the reverse pattern. This suggested that a two-dimensional Gaussian would provide an appropriate description of the distribution of weights across the visual field using only a small number of parameters. This Gaussian weighting function was used in two separate models: the Weighted Average (Equation 2) and Weighted Sum (Equation 4) models. The Weighted Average model differs from the Weighted Sum model in that it normalizes the weights assigned to each dot by the sum of the weights for all dots on a given trial (see Methods).
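The difference between the two early bias models can be sketched as follows. The weight function below (a baseline plus a two-dimensional Gaussian bump, with parameters `amp`, `base`, `mu`, and `sigma`) is an assumed parameterization for illustration and may differ from the form given in the Methods; the dot layout and all numerical values are invented.

```python
import math

def gaussian_weight(x, y, amp, base, mu=(0.0, 0.0), sigma=(6.0, 6.0)):
    """Hypothetical weight at (x, y): baseline plus a 2-D Gaussian bump."""
    gx = (x - mu[0]) ** 2 / (2.0 * sigma[0] ** 2)
    gy = (y - mu[1]) ** 2 / (2.0 * sigma[1] ** 2)
    return base + amp * math.exp(-(gx + gy))

def weighted_sum(dots, weights, eps=(0.0, 0.0)):
    """Unnormalized estimate: sum of w_i * p_i plus an additive late term."""
    x = sum(w * px for w, (px, _) in zip(weights, dots)) + eps[0]
    y = sum(w * py for w, (_, py) in zip(weights, dots)) + eps[1]
    return x, y

def weighted_average(dots, weights):
    """Normalized estimate: sum of w_i * p_i divided by the sum of w_i."""
    total = sum(weights)
    x = sum(w * px for w, (px, _) in zip(weights, dots)) / total
    y = sum(w * py for w, (_, py) in zip(weights, dots)) / total
    return x, y

# 25 dots on a 5 x 5 grid whose true centroid is (2, 0) deg from fixation.
dots = [(float(i), float(j)) for i in range(5) for j in range(-2, 3)]

uniform_sum = weighted_sum(dots, [0.04] * 25)      # recovers the true centroid
uniform_avg = weighted_average(dots, [1.0] * 25)   # recovers the true centroid
low_sum = weighted_sum(dots, [0.03] * 25)          # scaled toward fixation

# Upright Gaussian centred on fixation: foveal dots count more.
weights = [gaussian_weight(x, y, amp=0.02, base=0.03) for x, y in dots]
est_sum = weighted_sum(dots, weights)
est_avg = weighted_average(dots, weights)
print(uniform_sum, low_sum, est_sum, est_avg)
```

With uniform weights, only the Weighted Sum estimate changes when the weights are scaled; the normalization in the Weighted Average model removes any dependence on overall weight magnitude.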

Of these three models, the Weighted Sum model clearly performed best. For a more intuitive comparative measure of model performance, we calculated the squared residual error per trial for each model. We refer to this measure as the model prediction squared error (MPSE; see Methods-AIC Method). The median MPSE, across subjects, for the Weighted Sum model was 3.48 deg² (1.03 deg² < MPSE < 7.69 deg²), whereas the median MPSE was 3.94 deg² for the Late Bias model (1.09 deg² < MPSE < 8.57 deg²) and 4.16 deg² for the Weighted Average model (1.24 deg² < MPSE < 9.28 deg²). These comparisons of relative model performance, however, do not take into account the fact that each of the models (Late Bias, Weighted Average, and Weighted Sum) has a different number of free parameters. We used the Akaike Information Criterion (AIC) to overcome this limitation. Lower AIC values indicate a more parsimonious model, and one model is considered to outperform another model significantly if its AIC value is lower than that of the comparison model by four or more units (Burnham & Anderson, 2002).
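For readers unfamiliar with this comparison, a minimal sketch follows. It assumes the common least-squares form of the criterion, AIC = n ln(RSS/n) + 2k, which may differ in detail from the formulation in the Methods; the trial count, parameter counts, and MPSE values are invented for illustration and are not the fitted values reported here.

```python
import math

def aic_least_squares(rss, n_trials, n_params):
    """AIC for a least-squares fit with Gaussian errors: n*ln(RSS/n) + 2k."""
    return n_trials * math.log(rss / n_trials) + 2 * n_params

def outperforms(aic_model, aic_reference, margin=4.0):
    """True if the model's AIC is lower than the reference by at least `margin`."""
    return aic_reference - aic_model >= margin

# Invented example: 1000 trials, per-trial squared errors of 3.5 and 4.0 deg^2.
n_trials = 1000
aic_flexible = aic_least_squares(rss=3.5 * n_trials, n_trials=n_trials, n_params=8)
aic_simple = aic_least_squares(rss=4.0 * n_trials, n_trials=n_trials, n_params=3)

delta = aic_flexible - aic_simple   # negative values favour the flexible model
print(round(delta, 2), outperforms(aic_flexible, aic_simple))
```

The 2k term is what penalizes the additional free parameters: with identical residual error, each extra parameter raises the AIC by two units.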

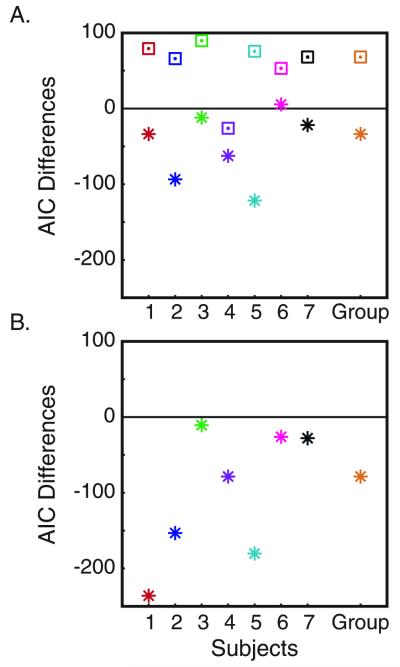

Figure 6A reports the AIC values for the early bias models (symbols) relative to that of the Late Bias model (horizontal line at zero). Thus, negative values indicate early bias models that outperform the Late Bias model. Using this criterion, the Late Bias model outperformed the Weighted Average model for six out of seven subjects. The Weighted Sum model, however, outperformed the Late Bias model for six out of seven subjects (AIC differences; Weighted Average median = 66.12, STE = 14.66; Weighted Sum median = −33.62, STE = 17.43). Hence, this statistical analysis shows strong support for the Weighted Sum model.

Figure 6.

A comparison of relative model performance using the Akaike Information Criterion (AIC). The AIC values for each model (Weighted Average [squares] and Weighted Sum [asterisks]) are plotted as difference scores relative to that observed for the Late Bias model (horizontal black line) for individual subjects (columns, color and number consistent with prior figures) and group median. Negative values indicate AICs lower than the Late Bias model. Lowest AIC value indicates best model. (A) Experiment 1. Bilateral-Cue. Weighted Sum model has the lowest AIC in all but one case (S6) by four or more units. (B) Experiment 1. Unilateral-Cue. The AIC value for the Weighted Sum model was lower than that of the Late Bias model by four or more units in all but one case (S6).

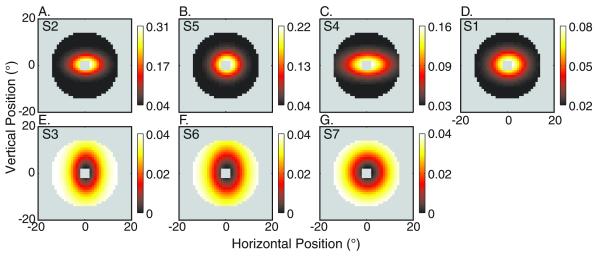

Given that the Weighted Sum model best described the data, we next examined the subject-specific weight distributions from this model to assess the contribution of each dot component to subjects’ centroid estimations. Four out of the seven subjects exhibited higher weights closer to the fovea (Figure 7, A-D), while the remaining three subjects displayed an opposite pattern, albeit with a smaller effect size (Figure 7, E-G). These weight patterns suggest that dot positions closer to the fovea influenced the centroid estimation more or less than would be expected from an equal integration of all dot positions. We confirmed that although some effects were small, the majority of subjects (6 out of 7) displayed significant differential weighting: the amplitudes of the Gaussian weight functions differed significantly from zero (95% CI Method).

Figure 7.

Eccentricity Weight Maps (Experiment 1. Bilateral-Cue condition). Weight maps were determined with the Weighted Sum model (Equation 3). The Weighted Sum model describes centroid estimates as a weighted sum of the dot positions. The color maps indicate the weight at a particular spatial location, with white indicating the largest weight and black the smallest. Gray regions depict areas without any dot positions. (A-D) Weight maps showing higher foveal weights, ordered from greatest to smallest effect size. (E-G) Weight maps showing lower foveal weights for the remaining three subjects. Each panel has its own color map to allow the visualization of all subject weight patterns, even when idiosyncratic effect sizes were small. All subjects except one (S3), however, had a significant eccentricity-dependent weight gradient (see main text for details).

It is important to note that the pattern of weights across the visual field (i.e., an upright or inverted Gaussian function) does not map directly onto an overall foveopetal or foveofugal bias in performance. For example, Subject 5 has an overall foveofugal bias (Figure 5A), but higher weights at the fovea (Figure 7B). This may seem counterintuitive, but in the Weighted Sum model, the overall magnitude of the weights indicates whether there is a general bias towards or away from the fovea. To understand this, consider a subject who calculates the true centroid of the dots; this subject’s weights should be 0.04, since there are 25 dots presented on a trial. However, if the subject weighted the majority of dot positions below 0.04, there will be an overall foveopetal bias (Figure 7, D-G), whereas if the majority of weights are above 0.04, there will be an overall foveofugal bias (Figure 7, A & B). The relative magnitude of the foveal and peripheral weights only modulates this bias depending on the number of dots presented in each of those regions. For instance, a subject with the majority of weights above 0.04 and an upright Gaussian will overestimate the centroid of an RDP with many dots near the fovea less than an RDP with many dots in the periphery. The ability to capture such effects is a qualitative difference between the Weighted Sum model and the less flexible Weighted Average model. We expand on this idea further in the Discussion.
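One way to make this argument explicit is to factor the Weighted Sum estimate into an overall gain and a normalized estimate. The notation below is ours and is introduced only for illustration: dot positions \mathbf{p}_i are expressed relative to fixation, the additive late term ε is omitted, and the total weight is assumed to be positive.

```latex
\hat{\mathbf{c}}_{\mathrm{sum}}
  = \sum_{i=1}^{N} w_i \,\mathbf{p}_i
  = \underbrace{\Bigl(\sum_{i=1}^{N} w_i\Bigr)}_{G}
    \;\underbrace{\frac{\sum_{i=1}^{N} w_i \,\mathbf{p}_i}{\sum_{i=1}^{N} w_i}}_{\hat{\mathbf{c}}_{\mathrm{avg}}},
\qquad
w_i = \tfrac{1}{N} = 0.04 \ (N = 25)
\;\Rightarrow\;
G = 1,\quad
\hat{\mathbf{c}}_{\mathrm{sum}} = \frac{1}{N}\sum_{i=1}^{N} \mathbf{p}_i .
```

In this form, the total weight G scales the estimate towards fixation (G < 1) or away from it (G > 1), whereas the relative weights determine only where the normalized estimate falls with respect to the true centroid. The Weighted Average model corresponds to fixing G = 1 on every trial.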

The significant parameters of the Gaussian weighting function and the superior performance of the Weighted Sum model relative to the Late Bias model suggest that there is an influence of eccentricity on the encoding of individual dot positions and hence, an early bias. However, this does not imply there is not also a late bias. The error term, ε, in Equation 4 of the Weighted Sum model represents an additive late bias. For the majority of subjects (6 out of 7) this term differed significantly from zero in both the horizontal (mean = 0.17°; STE = 0.08°) and vertical directions (mean = −0.25°; STE = 0.12°). This suggests the application of a rightward and downward bias after the integration of dot components.

Lateralized Spatial Attention (Experiment 1: Unilateral-Cue)

We next examined how spatial attention altered perceived centroids, again in the context of our three-stage spatial integration framework (Figure 2). Just as inaccuracies in baseline performance due to retinal eccentricity could have arisen from early or late computational biases, so too could our observed attentional effects. Because the Weighted Average model fared poorly in the previous section, we did not consider it here. We did, however, compare model performance of the Late Bias model and the Weighted Sum model. In the Late Bias model, attention induces a linear bias on the actual centroid (Equation 1). In the Weighted Sum model, attention could shift the Gaussian weighting functions (to model localized attraction by the exogenous cues), or induce a linear gradient within the weighting function (to model a more global attraction towards the attended side) (Equation 5). Across subjects, the median model prediction squared error per trial for the Weighted Sum model was 3.77 deg² (1.91 deg² < MPSE < 10.03 deg²), whereas the median MPSE was 3.89 deg² for the Late Bias model (1.95 deg² < MPSE < 11.08 deg²). To determine whether the Weighted Sum model is truly a better model given the additional free parameters, we compared the AIC values for the Weighted Sum model to the Late Bias model for the Unilateral-Cue conditions (Figure 6B). In all subjects, the Weighted Sum model significantly outperformed the Late Bias model (AIC difference; median = −78.58, STE = 33.41). Therefore, we conclude that the Weighted Sum model gave the most parsimonious account of the influence of attention on spatial integration.

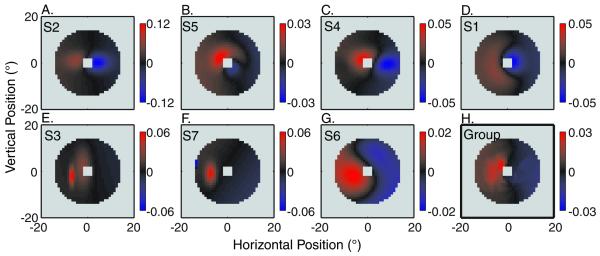

The specific pattern of weights in the Weighted Sum model during the lateralized attentional conditions provides insight into the mechanisms of spatial attention. To visualize the attention-induced changes in weights, we determined the differences between weights in the Left-Cue and Right-Cue conditions across the stimulus presentation area (Figure 8). While each subject’s weight map showed an idiosyncratic pattern, there were clear commonalities that were also reflected in the population weight map (Panel H): a peak in weight differences in the left visual field and a trough in weight differences in the right visual field. This qualitative understanding of the weight maps was confirmed by a statistical analysis of the free parameters in the model. The slope of the horizontal gradient (mx) in the Right-Cue condition was higher than that of the Left-Cue condition for all subjects (individually significant in four out of seven subjects, 95% CI Method). This shows a coarse effect of attention; weights in the attended visual field were generally greater than weights in the unattended field. At the same time, the peak of the weighting function (μx) was shifted rightward in the Right-Cue compared to the Left-Cue condition in five out of seven subjects (individually significant in two subjects, 95% CI Method). This shows a more focused shift of attention towards the exogenous cue. Lastly, and unexpectedly, the late constant horizontal bias, εx, was larger in the Left-Cue condition than in the Right-Cue condition in all subjects. This difference was individually significant in four out of seven subjects (95% CI Method). While this late bias is opposite to our expectation, additional analyses in which we omitted this term from the model generated qualitatively similar weight maps and had little effect on overall model performance.
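To illustrate how a shift of the Gaussian peak (μx) and a horizontal gradient (mx) combine into a Left-Cue minus Right-Cue difference map, consider the sketch below. The functional form (baseline plus Gaussian plus a term linear in x) and every parameter value are assumptions made for this example; they are not the fitted values and need not match the exact form of Equation 5.

```python
import math

def attention_weight(x, y, mu_x, m_x, amp=0.02, base=0.04, sigma=4.0):
    """Hypothetical weight: Gaussian centred at (mu_x, 0) plus a horizontal gradient."""
    bump = amp * math.exp(-((x - mu_x) ** 2 + y ** 2) / (2.0 * sigma ** 2))
    return base + bump + m_x * x

def difference_map(xs, ys, left, right):
    """Left-Cue minus Right-Cue weights; rows follow ys, columns follow xs."""
    return [[attention_weight(x, y, **left) - attention_weight(x, y, **right)
             for x in xs] for y in ys]

# Invented parameters: the peak and the gradient both favour the cued side.
left_cue = {"mu_x": -1.0, "m_x": -0.001}
right_cue = {"mu_x": 1.0, "m_x": 0.001}

xs = [float(v) for v in range(-6, 7, 2)]   # horizontal positions, deg
ys = [float(v) for v in range(-4, 5, 2)]   # vertical positions, deg
diff = difference_map(xs, ys, left_cue, right_cue)

middle_row = diff[len(ys) // 2]            # y = 0
print([round(d, 4) for d in middle_row])
```

Under these assumptions the difference is positive in the left visual field and negative in the right, which is the qualitative peak-and-trough pattern described above.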

Figure 8.

Attentional Weight Maps (Experiment 1. Unilateral-Cue conditions). Attentional weights were determined as the difference maps between the Left-Cue and Right-Cue conditions in the Weighted Sum model (Equation 5). Red indicates a location where the Left-Cue weight was higher and blue indicates a location where the Right-Cue weight was higher. Gray regions depict areas without any dot positions. (A-G) Subject-specific weight difference maps ordered the same as in Figure 7. In all cases, weights were enhanced in one or both of the visual fields that contained the exogenous cue. The local structure of these differences varied considerably across subjects. (H) Group weight difference map, obtained using the median of each weight difference at a specific spatial position across subjects.

Discussion

Our experiments and models investigated the role of retinal eccentricity and the locus of spatial attention in spatial integration. Although subjects were reasonably accurate when determining the centroid of a random dot pattern (RDP), our Weighted Sum model revealed systematic differences in the utilization of specific dot positions. First, subjects put significantly different weight on foveal regions compared to more peripheral regions of the visual field. Second, exogenous cues yielded spatially specific increases in weights surrounding the focus of attention, as well as global increases in weights in the attended visual field. We conclude that the localization of extended objects consisting of multiple components is modulated by eccentricity and exogenous attention, and that the influence of attention includes a modulation of the eccentricity-dependent influence on the components.

We first discuss why the Weighted Sum model outperformed the Weighted Average model, then discuss our work in the light of earlier research on the localization of visual objects, and end with a speculative proposal for a neural implementation of the Weighted Sum model.

Weighted sum versus weighted average

If subjects calculated the true centroid, then both the Weighted Sum model using weights of 0.04 and the Weighted Average model using weights of 1 would have predicted their performance accurately. Our data, however, clearly showed that there are systematic errors in the localization of centroids due in part to differential weighting of specific dot positions. These errors were most parsimoniously captured by the Weighted Sum model, and not by the Weighted Average model. The difference between these models is the normalization across all weights in the Weighted Average model. Consider the subjects with lower weights surrounding the foveal regions; the normalization of the Weighted Average model would inevitably lead to a foveofugal bias. Contrary to this intuition, some of our subjects with this inverted Gaussian weighting pattern nevertheless showed a foveopetal bias. Hence, at an abstract level of description, one can speculate that the subjects did not perform an appropriate normalization in their centroid calculation.

Retinal eccentricity

All subjects showed differential weighting of dot positions depending on retinal eccentricity. Higher foveal weights might have been expected given earlier reports of foveal biases in localization tasks (Mateeff & Gourevich, 1983; O’Regan, 1984; van der Heijden, van der Geest, de Leeuw, Krikke, & Musseler, 1999), and lower foveal detection thresholds (Johnson, Keltner, & Balestrery, 1978). We hypothesized initially that the three subjects with higher peripheral weights may have placed particular emphasis on the dots along the boundary of the stimulus (Findlay, Brogan, & Wenban-Smith, 1993). However, we eliminated the possibility that subjects actually calculated the centroid of the implied shape of the RDP using partial correlation analysis. In addition, there was no evidence to indicate that subjects with an inverted Gaussian pattern showed a higher partial correlation between the subject responses and the centroid of the implied shape given the actual centroid of the dots. Alternatively, enhanced sensitivity to transient stimuli for more peripheral targets may have contributed to this pattern. Given that the RDP was displayed very briefly, peripheral positions may have exerted greater influence on behavioral responses than foveal dots. An interesting prediction of this hypothesis is that individual variations in sensitivity should correlate with idiosyncratic localization weight patterns.

A previous study reported similar weighting patterns (Drew, Chubb, & Sperling, 2010) relative to the true centroid of an RDP. Since eye position was not restricted in that study, however, it is not possible to assess how the retinal eccentricity of each dot position influenced the final weighting, and how that may have played into the weighting relative to the true centroid. Conversely, because our true centroid positions were relatively close to the fovea, the eccentricity bias we observed could also reflect these “object-centered” effects of Drew et al. However, when we examined weighting relative to the true centroid of each RDP, we did not find similar weight patterns across subjects. To further disentangle centroid-centered and fovea-centered weighting a future study would need to both control eye position and vary centroid position systematically and over a wider range than in our study.

Related to this, even though we interpret our finding as an effect of eccentricity, we acknowledge that the subjects could have developed a bias to respond closer to the fovea because that was the average location of the centroid over all trials, or because they had to maintain fixation at the center of the display throughout the entire trial. Such effects, however, would depend only on the location of the centroid, not the positions of the individual dots; hence, our finding that an early bias model outperformed the Late Bias model speaks against this interpretation.

In contrast to our results, McGowan and colleagues (1998) found no evidence of differential weighting due to dot position. We believe this can be attributed to the small size of their RDPs; even in our experiments the weighting was relatively constant on a small scale. McGowan et al. also found a strong nonlinear effect of dot proximity, such that isolated dots had a stronger influence on the centroid than clustered dots. Although we found some support for such effects in our data, they were weak and not consistent across subjects. This may also be the consequence of our larger RDPs and concomitant larger spacing between the dots.

Lateralized spatial attention

Consistent with earlier reports of attentional mislocalization of single dots (Tsal & Bareket, 1999), we showed that centroid estimates were shifted in the direction of (exogenously cued) attention. Our modeling results showed that this was most parsimoniously captured as a change in the weighting function in the Weighted Sum model such that locations near the cue received larger weights than locations remote from the cue. This enhancement was not restricted to the location of the cue, as we also found a more general increase in weights across the attended side of the visual field.

To our knowledge ours is the first study to show that spatial attention differentially alters the usage of the components of a target in a localization task. One way to interpret these findings is that attentional modulation acts, at least in part, on early visual representations. Consistent with this, the weight patterns that our behavioral data reveal are similar to those found using functional imaging (Datta & DeYoe, 2009), which leads us to speculate about possible neural mechanisms.

Neural mechanisms

While the Weighted Sum model used in our experiments is a descriptive model, we speculate that it may be implemented neurally as distortions in the population activity of early visual neurons. In this view, the input layer in Figure 2 would correspond to an early retinotopic area where receptive fields correspond to specific locations on the retina; these neurons are labeled lines for position. If a downstream area performs the centroid computation by computing the inner product of each neuron’s label and its firing rate, then any distortion or inhomogeneity in the population firing rate would lead to a misperception. In terms of neural mechanisms, eccentricity-dependent weighting (i.e. distortions or inhomogeneities in the neural response) may result from differences in receptive field size, cortical magnification, latency differences, or other differences in local circuitry (Roberts, Delicato, Herrero, Gieselmann, & Thiele, 2007).

To account for a late bias, the region that computes the centroid or a region further downstream may further bias the centroid computation to yield the final perceived centroid. Given that our data show that subjects perform an imperfect normalization across all dots when performing the centroid task, one way to interpret these data is that they reflect changes in the neural activity normalization process (Heeger, 1992) with eccentricity or changes in attention (Reynolds & Heeger, 2009).

Alternatively, the bias due to attention could be the result of well-known attentional modulation of neuronal activity of early visual areas (for review, see Kastner & Ungerleider, 2000; Reynolds & Chelazzi, 2004), attentional modulation of receptive field location (Womelsdorf, Anton-Erxleben, Pieper, & Treue, 2006; Womelsdorf, Anton-Erxleben, & Treue, 2008), or the eccentricity-dependent attentional modulation of spatial integration (Roberts, Delicato, Herrero, Gieselmann, & Thiele, 2007). Our behavioral data are too coarse to distinguish among the relative contributions of these processes; future studies using functional imaging or electrophysiological recordings are required to determine how visual cortex integrates spatial information and generates a percept of position.

Acknowledgements

We thank Jacob Duijnhouwer and Till Hartmann for comments on the manuscript. This work was supported by the National Eye Institute (EY017605), The Pew Charitable Trusts, and the National Health and Medical Research Council of Australia.

References

- Bocianski D, Müsseler J, Erlhagen W. Effects of attention on a relative mislocalization with successively presented stimuli. Vision Research. 2010;50:1793–1802. doi: 10.1016/j.visres.2010.05.036. PubMed. [DOI] [PubMed] [Google Scholar]

- Burnham KP, Anderson DR. Model Selection and Multi-Model Inference: A Practical Information-Theoretic Approach. 2nd ed. Springer-Verlag; New York: 2002. [Google Scholar]

- Cheal M, Lyon DR. Central and peripheral precuing of forced-choice discrimination. Quarterly Journal of Experimental Psychology. A, Human Experimental Psychology. 1991;43:859–880. doi: 10.1080/14640749108400960. PubMed. [DOI] [PubMed] [Google Scholar]

- Cohen EH, Schnitzer BS, Gersch TM, Singh M, Kowler E. The relationship between spatial pooling and attention in saccadic and perceptual tasks. Vision Research. 2007;47:1907–1923. doi: 10.1016/j.visres.2007.03.018. [PubMed] [Article] [DOI] [PMC free article] [PubMed] [Google Scholar]

- Datta R, DeYoe EA. I know where you are secretly attending! The topography of human visual attention revealed with fMRI. Vision Research. 2009;49:1037–1044. doi: 10.1016/j.visres.2009.01.014. [PubMed] [Article] [DOI] [PMC free article] [PubMed] [Google Scholar]

- Denisova K, Singh M, Kowler E. The role of part structure in the perceptual localization of a shape. Perception. 2006;35:1073–1087. doi: 10.1068/p5518. PubMed. [DOI] [PubMed] [Google Scholar]

- Drew SA, Chubb CF, Sperling G. Precise attention filters for Weber contrast derived from centroid estimations. Journal of Vision. 2010;10(10):1–16. doi: 10.1167/10.10.20. 20. http://www.journalofvision.org/content/10/10/20, doi: 10.1167/10.10.20. [PubMed] [Article] [DOI] [PubMed] [Google Scholar]

- Findlay JM, Brogan D, Wenban-Smith MG. The spatial signal for saccadic eye movements emphasizes visual boundaries. Perception & Psychophysics. 1993;53:633–641. doi: 10.3758/bf03211739. PubMed. [DOI] [PubMed] [Google Scholar]

- Fortenbaugh FC, Robertson LC. When here becomes there: Attentional distribution modulates foveal bias in peripheral localization. Attention, Perception, & Psychophysics. 2011;73:809–828. doi: 10.3758/s13414-010-0075-5. [PubMed] [Article] [DOI] [PMC free article] [PubMed] [Google Scholar]

- He P, Kowler E. Saccadic localization of eccentric forms. Journal of the Optical Society of America A. 1991;8:440–449. doi: 10.1364/josaa.8.000440. PubMed. [DOI] [PubMed] [Google Scholar]

- Heeger DJ. Normalization of cell responses in cat striate cortex. Visual Neuroscience. 1992;9:181–197. doi: 10.1017/s0952523800009640. PubMed. [DOI] [PubMed] [Google Scholar]

- Johnson CA, Keltner JL, Balestrery F. Effects of target size and eccentricity on visual detection and resolution. Vision Research. 1978;18:1217–1222. doi: 10.1016/0042-6989(78)90106-2. PubMed. [DOI] [PubMed] [Google Scholar]

- Kastner S, Ungerleider LG. Mechanisms of visual attention in the human cortex. Annual Review of Neuroscience. 2000;23:315–341. doi: 10.1146/annurev.neuro.23.1.315. PubMed. [DOI] [PubMed] [Google Scholar]

- Kosovicheva AA, Fortenbaugh FC, Robertson LC. Where does attention go when it moves? Spatial properties and locus of the attentional repulsion effect. Journal of Vision. 2010;10(12):1–13. doi: 10.1167/10.12.33. 33. http://www.journalofvision.org/content/10/12/33, doi: 10.1167/10.12.33. [PubMed] [Article] [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kowler E, Blaser E. The accuracy and precision of saccades to small and large targets. Vision Research. 1995;35:1741–1754. doi: 10.1016/0042-6989(94)00255-k. PubMed. [DOI] [PubMed] [Google Scholar]

- Krekelberg B. The persistence of position. Vision Research. 2001;41:529–539. doi: 10.1016/s0042-6989(00)00281-9. PubMed. [DOI] [PubMed] [Google Scholar]

- Krekelberg B, Lappe M. Neuronal latencies and the position of moving objects. Trends in Neurosciences. 2001;24:335–339. doi: 10.1016/s0166-2236(00)01795-1. PubMed. [DOI] [PubMed] [Google Scholar]

- Landy MS. Combining multiple cues in texture edge localization. Proceedings of the SPIE. 1993;1913:506–517.

- Landy MS, Kojima H. Ideal cue combination for localizing texture-defined edges. Journal of the Optical Society of America A. 2001;18:2307–2320. doi: 10.1364/josaa.18.002307.

- Mateeff S, Gourevich A. Peripheral vision and perceived visual direction. Biological Cybernetics. 1983;49:111–118. doi: 10.1007/BF00320391.

- McGowan JW, Kowler E, Sharma A, Chubb C. Saccadic localization of random dot targets. Vision Research. 1998;38:895–909. doi: 10.1016/s0042-6989(97)00232-0.

- Melcher D, Kowler E. Shapes, surfaces and saccades. Vision Research. 1999;39:2929–2946. doi: 10.1016/s0042-6989(99)00029-2.

- Morgan MJ, Hole GJ, Glennerster A. Biases and sensitivities in geometrical illusions. Vision Research. 1990;30:1793–1810. doi: 10.1016/0042-6989(90)90160-m.

- Muller HJ, Rabbitt PM. Reflexive and voluntary orienting of visual attention: Time course of activation and resistance to interruption. Journal of Experimental Psychology: Human Perception and Performance. 1989;15:315–330. doi: 10.1037//0096-1523.15.2.315.

- Müsseler J, van der Heijden AHC, Mahmud SH, Deubel H, Ertsey S. Relative mislocalization of briefly presented stimuli in the retinal periphery. Perception & Psychophysics. 1999;61:1646–1661. doi: 10.3758/bf03213124.

- O’Regan JK. Retinal versus extraretinal influences in flash localization during saccadic eye movements in the presence of a visible background. Perception & Psychophysics. 1984;36:1–14. doi: 10.3758/bf03206348.

- Ploner CJ, Ostendorf F, Dick S. Target size modulates saccadic eye movements in humans. Behavioral Neuroscience. 2004;118:237–242. doi: 10.1037/0735-7044.118.1.237.

- Prinzmetal W, Amiri H, Allen K, Edwards T. Phenomenology of attention: 1. Color, location, orientation, and spatial frequency. Journal of Experimental Psychology: Human Perception and Performance. 1998;24:261–282.

- Reynolds JH, Chelazzi L. Attentional modulation of visual processing. Annual Review of Neuroscience. 2004;27:611–647. doi: 10.1146/annurev.neuro.26.041002.131039.

- Reynolds JH, Heeger DJ. The normalization model of attention. Neuron. 2009;61:168–185. doi: 10.1016/j.neuron.2009.01.002.

- Roberts M, Delicato LS, Herrero J, Gieselmann MA, Thiele A. Attention alters spatial integration in macaque V1 in an eccentricity-dependent manner. Nature Neuroscience. 2007;10:1483–1491. doi: 10.1038/nn1967.

- Rose D, Halpern DL. Stimulus mislocalization depends on spatial frequency. Perception. 1992;21:289–296. doi: 10.1068/p210289.

- Stork S, Müsseler J, van der Heijden AH. Perceptual judgment and saccadic behavior in a spatial distortion with briefly presented stimuli. Advances in Cognitive Psychology. 2010;6:1–14. doi: 10.2478/v10053-008-0072-6.

- Suzuki S, Cavanagh P. Focused attention distorts visual space: An attentional repulsion effect. Journal of Experimental Psychology: Human Perception and Performance. 1997;23:443–463. doi: 10.1037//0096-1523.23.2.443.

- Tsal Y, Bareket T. Effects of attention on localization of stimuli in the visual field. Psychonomic Bulletin & Review. 1999;6:292–296. doi: 10.3758/bf03212332.

- van der Heijden AH, van der Geest JN, de Leeuw F, Krikke K, Müsseler J. Sources of position-perception error for small isolated targets. Psychological Research. 1999;62:20–35. doi: 10.1007/s004260050037.

- Vishwanath D, Kowler E. Localization of shapes: Eye movements and perception compared. Vision Research. 2003;43:1637–1653. doi: 10.1016/s0042-6989(03)00168-8.

- Wichmann FA, Hill NJ. The psychometric function: I. Fitting, sampling, and goodness of fit. Perception & Psychophysics. 2001a;63:1293–1313. doi: 10.3758/bf03194544.

- Wichmann FA, Hill NJ. The psychometric function: II. Bootstrap-based confidence intervals and sampling. Perception & Psychophysics. 2001b;63:1314–1329. doi: 10.3758/bf03194545.

- Womelsdorf T, Anton-Erxleben K, Pieper F, Treue S. Dynamic shifts of visual receptive fields in cortical area MT by spatial attention. Nature Neuroscience. 2006;9:1156–1160. doi: 10.1038/nn1748.

- Womelsdorf T, Anton-Erxleben K, Treue S. Receptive field shift and shrinkage in macaque middle temporal area through attentional gain modulation. Journal of Neuroscience. 2008;28:8934–8944. doi: 10.1523/JNEUROSCI.4030-07.2008.