Abstract

Objectives

Current robotic training approaches lack criteria for automatically assessing and tracking (over time) technical skills separately from clinical proficiency. We describe the development and validation of a novel automated and objective framework for assessment of training.

Methods

We are able to record all system variables (stereo instrument video, hand and instrument motion, buttons and pedal events) from the da Vinci surgical systems using a portable archival system integrated with the robotic surgical system. Data can be collected unsupervised, and the archival system does not change system operation in any way. Our open-ended multi-center protocol is collecting surgical skill benchmarking data from 24 trainees to surgical proficiency, subject only to their continued availability. Two independent experts performed structured (OSATS) assessments on longitudinal data from 8 novice and 4 expert surgeons to generate ground truth for training and to validate our computerized statistical analysis methods in identifying ranges of operational and clinical skill measures.

Results

Objective differences in operational and technical skill between known experts and other subjects were quantified. Longitudinal learning curves and statistical analysis for trainee performance measures are reported. Graphical representations of skills developed for feedback to the trainees are also included.

Conclusions

We describe an open-ended longitudinal study and automated motion recognition system capable of objectively differentiating between clinical and technical operational skills in robotic surgery. Our results demonstrate a convergence of trainee skill parameters towards those derived from expert robotic surgeons over the course of our training protocol.

Keywords: robotic surgery, robotic surgery training, assessment, learning curves

Introduction

Minimally-invasive cardiothoracic operations have been facilitated with new surgical robotic technologies. Although over 1700 surgical robotic systems were in clinical use worldwide [1] by mid 2010, the application of robotics to cardiothoracic surgery has not caught up with other surgical disciplines due largely to steep learning curves in developing operational proficiency with surgical robotic platforms [2,3] coupled with comparatively lower tolerances for technical error and delay. Specifically, the technical challenges presented in performing precise and complex reconstructive techniques with limited access and the longer cardiopulmonary bypass and aortic cross clamp times associated with robot-assisted cardiac operations [2,3,4] have hampered widespread acceptance of robotics in the cardiothoracic surgical community. A smaller user base, slow refinement of the technology, and consequently slow accumulation of evidence of clinical benefit have also slowed the adoption of the new technology. Improved adoption and use of robotic surgery technology will require improvements in both technology and training methods.

The traditional Halstedian principles of surgical training using a “see one, do one, teach one” apprenticeship model are not wholly applicable to surgical robotic training. To develop clinical proficiency, effective training and practice strategies to familiarize surgeons with new robotic technologies are required [2,3]. However, currently practiced robotic training approaches lack uniform criteria for automatically assessing and tracking technical and operational skills. Establishing standard, objective, and automated skill measures leading to effective training curricula and certification programs for robotic surgery will require: (1) a significant cohort of robotic surgeons-in-training of similar skill that can be tracked longitudinally (e.g., one year) during the acquisition of skills, (2) a set of standardized surgical tasks, (3) the ability to acquire and analyze large volumes of motion data, and (4) consistent “ground truth” assessment of the collected data by expert robotic surgeons.

Published research in robotic surgery training has been limited to quantification of skill measures from ab initio training [5,6] of relatively short duration. Previous efforts to objectively quantify measures of skill on a limited number of trainees [7, 8] have also been predicated upon comparing trainees of varying skill levels (e.g., postgraduate year of training) with “expert” surgeons. These studies use the experimental tasks for both training and assessment. Robotic surgical systems require complex man-machine interactions, and robotic training assessment protocols cannot yet differentiate between the objective assessment of clinical task skills and machine operational and technical skills.

Consequently, we opted to take a new approach by developing a novel automated motion recognition system capable of objectively differentiating between operational and technical robotic surgical skills and longitudinally tracking trainees during skill development. We establish multiple learning curves for each training step, provide comparative analysis of skill development, and develop methods for feedback to effectively address skill deficiencies. We also use our tasks as benchmark evaluations, not as training tasks. This is also the first longitudinal multi-center study involving robotic surgical training and comprises the largest trainee cohort to date.

Methods

The measurement of objective performance metrics in surgical training (i.e., efficiency of hand movement) has previously required instrumented prototype devices that are not widely available, interfere with surgical technique, and employ technologies that are not commonly available or easily integrated into conventional surgical instrumentation [9]. As a novel “transparent” alternative, we have developed a new infrastructure to collect motion and video data from robotic surgical training that does not require any special instrumentation and holds the promise of creating a training environment that does not require on-site supervision by an expert surgeon. While the methods described can be extended to use during human surgeries, the work here primarily describes their application in a controlled, structured environment during ab initio training.

Data Collection

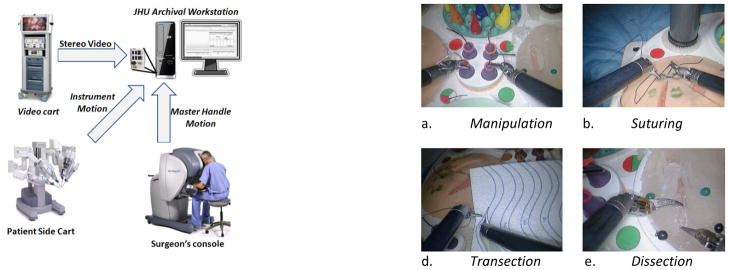

Our motion data collection platform is based upon the da Vinci surgical robotic system (Intuitive Surgical, Sunnyvale, California). Its Application Programming Interface (API [10]) provides a robust motion data set containing 334 position and motion parameters. The API automatically streams motion vectors including joint angles, Cartesian position and velocity, gripper angle, and joint velocity and torque data for the master console manipulators, stereoscopic camera, and instruments over an Ethernet connection to an encrypted archival workstation. The API also streams several system events, including instrumentation changes, manipulator “clutching”, and visual field adjustments. The API can provide faster motion data acquisition rates (up to 100 Hz) than those obtained with video recordings (typically up to 30 Hz). In addition, high-quality time-synchronized video can be acquired from the stereoscopic video system. Using the data collection framework (Figure 1, left) 334 system variables were sampled at 50 Hz and stereoscopic video streams collected at 30 Hz. This data was anonymized at source, assigned a unique subject identifier, and archived in a database according to an approved IRB protocol. For analysis, the archived data was further segmented into task or system operation sequences. This process generated 20–25 GB of data per hour. No special training was required to operate the archival workstation, which can be left connected in place without impacting surgical or other training use.
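As a rough sanity check on these figures, the following back-of-the-envelope sketch estimates how much of the archive is kinematic data. The 8-byte size per value is an assumption (double-precision encoding); the text does not state how the API encodes each variable. Under that assumption the 334 variables at 50 Hz amount to roughly 0.5 GB per hour, which suggests that most of the 20–25 GB per hour comes from the stereoscopic video.

```python
# Back-of-the-envelope estimate of the kinematic data volume.
NUM_VARIABLES = 334      # system variables per sample (from the text)
SAMPLE_RATE_HZ = 50      # sampling rate used in this study (from the text)
BYTES_PER_VALUE = 8      # ASSUMPTION: 8-byte doubles; not stated in the source


def kinematic_gb_per_hour(num_vars=NUM_VARIABLES,
                          rate_hz=SAMPLE_RATE_HZ,
                          bytes_per_value=BYTES_PER_VALUE):
    """Return the uncompressed kinematic data volume in GB per hour."""
    bytes_per_second = num_vars * rate_hz * bytes_per_value
    return bytes_per_second * 3600 / 1e9


print(f"{kinematic_gb_per_hour():.2f} GB/hour of kinematic data")
```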

Figure 1.

Archival system configuration with the da Vinci system (left) and inanimate training pods (right) for the first robotic surgery training module.

Experimental Tasks

Training data was collected during all stages of training. Our training protocol was divided into different training modules:

Module I: System Orientation Skills

This training module is intended to familiarize the trainee with basic system and surgical skills, including master console clutching, camera control, manipulation scale change, retraction, suturing, tissue handling, bimanual manipulation, transection, and dissection. Since robotic trainees already practice these basic skills in current training regimes, we felt that they were appropriate for benchmarking. On a monthly basis, we collected data from periodic benchmarking executions of four minimally invasive surgical skills taken from the Intuitive Surgical robotic surgery training practicum [11]. These tasks (Figure 1) are:

Manipulation: This task tests the subject’s system operation skills. It requires transfer of four rings from the center pegs of the task pod to the corresponding outer peg, followed by replacement of the rings to the inner pegs in sequence. Elementary task performance measures include task completion times and task errors (e.g., dropped ring/peg, moving instruments outside of field of view).

Suturing: This task involves the repair of a linear defect with three 10 cm lengths of 3–0 Vicryl suture. Elementary task performance measures include task completion times and task errors (e.g., dropped needles, broken sutures, inaccurate approximation).

Transection: This task involves cutting an “S” or circle pattern on a transection pod using curved scissors while stabilizing the pod with the third arm. Elementary task performance measures include task completion times and task errors (e.g., cutting outside of the pattern).

Dissection: The dissection task requires dissection of a superficial layer of the pod to gain exposure to a buried vessel, followed by circumferential dissection to fully mobilize the vessel. Task completion times and errors (e.g., damage to the vessel, incomplete mobilization, and excessive dissection) are measured.

These orientation laboratories typically produced an hour of training data. Upon successful acquisition of these basic skills, trainees graduated to Module II.

Module II: Minimally-Invasive Surgical Skills

This module is intended to familiarize the trainee with basic minimally invasive surgical (MIS) skills, including port placement, instrument exchange, complex manipulation, and resolution of instrument collisions.

In this manuscript, we highlight analysis of the first training module. Graduation between modules is based on the trainees reaching expert skill levels or upon completion of six months. For this study, we aim to continue to track our trainees to proficiency.

Recruitment and Status

Data from 12 subjects (8 trainees and 4 expert robotic surgeons) from three academic surgical training programs (Johns Hopkins, Boston Children’s, and Stanford) was analyzed in this work. Additional training centers and subjects (target cohort of 24 subjects) are being added as approval is received from IRBs and their training robots are activated for data collection by Intuitive Surgical. Our subjects were stratified according to four skill levels: novice, beginner, intermediate, and expert. Novice trainees were defined as having no prior experience with the da Vinci robotic system. Beginner trainees possessed only limited dry-lab experience and no clinical experience with the da Vinci system. Intermediate trainees possessed limited clinical experience with the robotic system. Expert users were faculty surgeons with clinical robotic surgical practices. Performance data from each subject was collected at monthly intervals throughout their training period. Expert surgeons provided two executions of the training tasks to establish skill metrics. Between benchmarking sessions, subjects (residents, fellows, or surgeons with 4–23 years of clinical experience) performed varying amounts of additional training or assisted in the operating room as appropriate. Data from subjects was archived periodically in data cartridges shipped to Johns Hopkins for further analysis. In this article, we analyze 4 expert users and 8 other users of non-expert skill levels.

Structured Assessment

To validate our framework’s construct, we applied Objective Structured Assessment of Technical Skills (OSATS) [12, 13] evaluations for each task execution. The OSATS global rating scale consists of six skill-related variables in operative procedures that were graded on a five-point Likert-like scale (i.e., 1 to 5). The middle and extreme points are explicitly defined. The six measured categories are: (1) Respect for Tissue (R), (2) Time & Motion (TM), (3) Instrument Handling (H), (4) Knowledge of Instruments (K), (5) Flow of Procedure (F), and (6) Knowledge of Procedure (KP). The “Use of Assistants” category is not generally applicable in the first training module, and was therefore not evaluated. A cumulative score totaling the individual scores for each of the six categories was obtained (minimum score = 0, maximum score = 30). OSATS evaluation constructs have been validated in terms of inter-rater variability and correlation with technical maturity [13, 14] and have been applied in evaluating technical facility with robot-assisted surgery [15].

Automated Measures

There are at least two different types of automated measures that can be computed from the longitudinal data we have acquired. The first are aggregated motion statistics, task measures, and associated longitudinal assessments (i.e., learning curves). The second include measures computed using statistical analysis for comparing technical skills of trainees to those of expert surgeons.

Motion Statistics and Task Measures

Table 1 shows the computed elementary measures for the defined surgical task executions. Each of these measures is used to derive an associated learning curve over the longitudinally collected data.

Table 1.

Aggregate measures computed from longitudinal data. Experts performed each task twice to reduce variability. Sample task times (seconds, top), master handle motion distances (meters, middle), and number of camera foot pedal events (counts, bottom) are detailed for the tasks defined in the first training module.

| Task times (sec) | Task | Session 1 | Session 2 | Session 3 | Session 4 | Session 5 |
|---|---|---|---|---|---|---|
| Expert | Suturing | 348 | 322 | | | |
| | Manipulation | 238 | 238 | | | |
| | Transection | 133 | 149 | | | |
| | Dissection | 188 | 260 | | | |
| Trainee | Suturing | 454 | 58 | 255 | 289 | 279 |
| | Manipulation | 867 | 577 | 311 | 282 | 442 |
| | Transection | 107 | 196 | 76 | 103 | 126 |
| | Dissection | 363 | 291 | 191 | 492 | 200 |

| Motion (m) | Task | Session 1 | Session 2 | Session 3 | Session 4 | Session 5 |
|---|---|---|---|---|---|---|
| Expert | Suturing | 13.0 | 10.3 | | | |
| | Manipulation | 14.9 | 14.2 | | | |
| | Transection | 1.8 | 1.2 | | | |
| | Dissection | 3.2 | 6.6 | | | |
| Trainee | Suturing | 12.9 | 15.0 | 6.1 | 6.1 | 6.8 |
| | Manipulation | 27.8 | 17.8 | 15.1 | 16.5 | 21.1 |
| | Transection | 1.7 | 1.6 | 0.5 | 1.1 | 1.1 |
| | Dissection | 8.1 | 5.0 | 4.0 | 9.3 | 3.4 |

| Events (count) | Task | Session 1 | Session 2 | Session 3 | Session 4 | Session 5 |
|---|---|---|---|---|---|---|
| Expert | Suturing | 8 | 2 | | | |
| | Manipulation | 43 | 40 | | | |
| | Transection | 3 | 2 | | | |
| | Dissection | 0 | 2 | | | |
| Trainee | Suturing | 0 | 0 | 2 | 6 | 4 |
| | Manipulation | 98 | 61 | 61 | 50 | 89 |
| | Transection | 1 | 1 | 1 | 5 | 3 |
| | Dissection | 5 | 7 | 4 | 7 | 5 |
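The three kinds of aggregate measures in Table 1 can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names, the representation of master handle positions as `(x, y, z)` tuples in meters, and the representation of the camera pedal as a sampled boolean signal are all assumptions, since the format of the API stream is not described here.

```python
import math


def task_time(timestamps):
    """Elapsed time (seconds) between the first and last sample of a task."""
    return timestamps[-1] - timestamps[0]


def path_length(positions):
    """Total distance travelled along consecutive (x, y, z) samples."""
    return sum(math.dist(a, b) for a, b in zip(positions, positions[1:]))


def count_events(pedal_states):
    """Count rising edges (released -> pressed) in a sampled pedal signal.

    A pedal that is already pressed in the first sample is not counted.
    """
    return sum(1 for prev, cur in zip(pedal_states, pedal_states[1:])
               if cur and not prev)


# Hypothetical samples at 50 Hz (0.02 s apart); values are illustrative only.
timestamps = [0.00, 0.02, 0.04, 0.06]
positions = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (3.0, 4.0, 12.0), (3.0, 4.0, 12.0)]
pedal = [False, True, True, False]

print(task_time(timestamps), path_length(positions), count_events(pedal))
```

Applying these functions to each benchmarking session of a subject yields one point per session on the corresponding learning curve.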

Statistical Classification of Technical Skill

Our group and collaborators [16, 17, 18, 19] have previously used the da Vinci API motion data to develop statistical methodologies for the automatic segmentation and analysis of basic surgical motions for the quantitative assessment of surgical skills. Lin et al [16] used linear discriminant analysis (LDA) to reduce the motion parameters to three or four dimensions, and Bayesian classification to detect and segment basic surgical motions, termed “gestures”. Reiley et al [19] used a Hidden Markov Model (HMM) approach for modeling gestures. These studies report that experienced surgeons perform surgical tasks significantly faster, more consistently, more efficiently, and with lower error rates [19,20]. We summarize assessments of robotic system operational skills by using support vector machines (SVMs) to classify dimensionally reduced data, revealing different levels of competence. An SVM transforms the input data into a higher dimensional space using a kernel function. An optimization step then estimates the hyperplane that separates the data into two classes with maximum margin. For our binary expert vs. non-expert classification, the SVM classifier is directly applicable. A trained classifier allows held-out or unseen data to be assigned a class label for computation of traditional sensitivity and specificity measures.
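The classify-then-evaluate workflow described above can be illustrated with a small self-contained sketch. It is a deliberate simplification and not the method used in this study: it trains a linear SVM (no kernel) by batch sub-gradient descent on the regularized hinge loss, and the two features per sample (imagined here as standardized task time and path length) and all data values are hypothetical.

```python
def dot(w, x):
    return sum(wj * xj for wj, xj in zip(w, x))


def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=200):
    """Minimize lam/2*||w||^2 + mean hinge loss; labels must be +1 or -1."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w = [lam * wj for wj in w]
        grad_b = 0.0
        for xi, yi in zip(X, y):
            if yi * (dot(w, xi) + b) < 1:  # sample violates the margin
                for j in range(d):
                    grad_w[j] -= yi * xi[j] / n
                grad_b -= yi / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w, b, x):
    return 1 if dot(w, x) + b >= 0 else -1


def sensitivity_specificity(y_true, y_pred, positive=1):
    """Assumes both classes are present in y_true."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical standardized features: experts (+1) are faster with shorter paths.
X_train = [(-1.0, -0.8), (-0.8, -1.0), (-0.9, -0.9),
           (1.0, 0.8), (0.8, 1.0), (0.9, 0.9)]
y_train = [1, 1, 1, -1, -1, -1]
X_test = [(-0.7, -0.9), (-1.1, -0.6), (0.9, 0.7), (0.6, 1.1)]
y_test = [1, 1, -1, -1]

w, b = train_linear_svm(X_train, y_train)
y_pred = [predict(w, b, x) for x in X_test]
print(sensitivity_specificity(y_test, y_pred))
```

Here sensitivity is the fraction of held-out expert executions labeled as expert, and specificity is the fraction of non-expert executions labeled as non-expert.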

Results

Structured Assessment

Table 1 shows a clear separation between trainees based on their system operational skills and clinical background, providing a validated “ground truth” for assessing our automated methods.

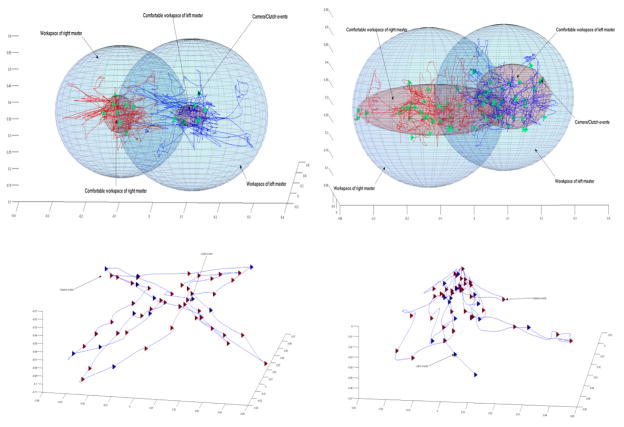

Workspace Management

Maintaining a compact operative workspace is an important robotic system operational skill. Expert robotic surgeons maintain an optimum field of view for a given operative task, keeping the instruments within the field of view at all times (Figure 2, bottom left) while trainees tend to zoom out to a broad field of view that is not adjusted during the task performance (Figure 2, bottom right).

Figure 2.

Master and camera workspaces used by experts (left, top, and bottom panels), and novices (right, top and bottom panels) during performance of the manipulation task. The red ellipsoids represent volumes of hand positions at the end of master workspace adjustments (clutching). The blue ellipsoids represent master hand volumes. The triangles represent master (green) and camera (red, blue) clutch adjustments.

Figure 2 (top) graphically illustrates the differences in workspace usage between trainees and expert robotic surgeons performing the manipulation task. The trajectories represent master handle motion. The enclosing volumes represent total volumes used and the positions of the master handles at the end of master clutch adjustments. The workspace usage evolves to become closer to the expert workspace usage as trainees learn to adjust their workspaces more efficiently. Expert task executions also include regularly spaced camera clutch events to maintain the instruments in the field of view.

We use master handle motion for computations, as opposed to the instrument tip motion reported in the literature. Master motion better measures operational skills by capturing all of the motion required to adjust the master workspace; this is not captured by instrument tip motion. We measure both the master distance and the volume in which the master handles are moved. Analysis of instrument motion statistics and counts of other foot pedal actuations, instrument exchanges, and other system events will be reported separately.
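One way to turn a set of master handle positions into a single workspace volume is sketched below. How the ellipsoids in Figure 2 were fitted is not stated, so this sketch assumes a covariance ellipsoid whose semi-axes are `k` standard deviations along the principal directions, giving a volume of (4/3)·π·k³·sqrt(det Σ), where Σ is the 3×3 sample covariance of the positions. The function names and the choice of `k` are illustrative.

```python
import math


def covariance_3d(points):
    """Sample covariance matrix (3x3, nested lists) of (x, y, z) points."""
    n = len(points)
    mean = [sum(p[i] for p in points) / n for i in range(3)]
    return [[sum((p[i] - mean[i]) * (p[j] - mean[j]) for p in points) / (n - 1)
             for j in range(3)] for i in range(3)]


def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def ellipsoid_volume(points, k=2.0):
    """Volume of the k-standard-deviation covariance ellipsoid of the points."""
    det = det3(covariance_3d(points))
    return (4.0 / 3.0) * math.pi * k ** 3 * math.sqrt(max(det, 0.0))


# Illustrative positions only: a compact workspace and one twice as wide.
compact = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
broad = [(2 * x, 2 * y, 2 * z) for x, y, z in compact]
print(ellipsoid_volume(compact), ellipsoid_volume(broad))
```

Doubling the spread of the hand positions in every direction multiplies this volume by eight, so the measure is sensitive to the broad, unadjusted workspaces described for trainees.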

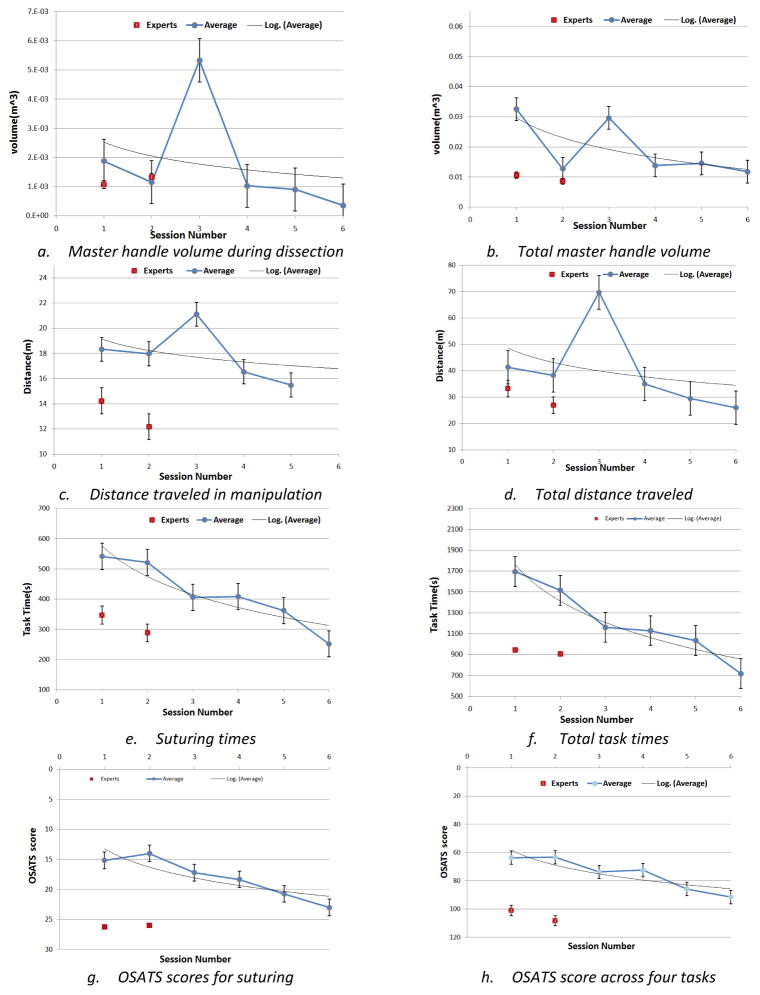

Learning curves

Figure 3 shows learning curves derived from task motion, the times required to complete the defined surgical tasks, and the corresponding learning curves based on expert OSATS structured assessments. ANOVA (F = 71.88 > 2.23, F = 51.02 > 2.37, and F = 71.4 > 2.57 at α = 0.05 at 1, 3, and 5 months) results were significant at the 5% level, indicating that the expected values for task completion times, OSATS scoring, master motion distances, and master volumes differed significantly. Trainee performance improved with time as indicated by lower task completion times, smaller volumes, and shorter motion paths. These correlated with improved OSATS scores. By comparison, expert measures displayed very small variability between the two executions.

Figure 3.

Learning curves based on time, master handle distance, master handle volumes, and OSATS structured assessment measurements for (1) individual tasks and (2) all four tasks. Note that the OSATS score scale has been inverted and that expert task metrics (red) appear in the lower corner of the charts.

The computed measures (e.g., task completion times, total time, master motion distances, and master volumes) at 1, 3, and 5 month intervals correlated with OSATS scores for the corresponding sessions (p < 0.05). For the suturing task, at month 1, the mean OSATS score (M = 77.58, V = 527.35, N = 12) and suturing time (M = 466.29, V = 39392.63, N = 12) were significantly greater than zero (t(11) = −6.27, two-tail p = 6.07E-5), providing evidence that task completion times correlate with our ground truth assessment. Table 2 details the p-values for alpha = 0.05.

Table 2.

(Top panel) Pair-wise t-tests comparing OSATS scores versus suturing time (suturing), total time (time), manipulation distance (manip), total task distance (distance), master handle volume in dissection (dissec), and total master handle volume (volume) at 1, 3, and 5 month training time intervals (two-tail p-values, alpha = 0.05). (Bottom panel) P-values for one-factor analysis of variance (ANOVA) for all variables at 1, 3, and 5 month training time intervals.

| Pairwise t-test (month) | N | OSATS/suturing | OSATS/time | OSATS/manipulation | OSATS/distance | OSATS/dissection | OSATS/volume |
|---|---|---|---|---|---|---|---|
| 1 | 12 | 6E-5 | 2.8E-5 | 9.9E-4 (N=8) | 0.0014 | 1.5E-7 | 1.5E-7 |
| 3 | 6 | 0.0016 | 1.4E-4 | 0.0067 | 0.9303 | 8.4E-5 | 8.5E-5 |
| 5 | 3 | 0.0227 | 2.3E-4 | 0.0052 | 0.0043 | 8.4E-4 | 8.4E-4 |

| ANOVA (month) | N | P-value | F | F-critical |
|---|---|---|---|---|
| 1 | 12 | 8.4E-24 | 47.7 | 2.22 |
| 3 | 6 | 7.8E-20 | 90.5 | 2.37 |
| 5 | 3 | 2.5E-15 | 472.1 | 2.85 |
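For readers who want to see what the two kinds of test in Table 2 compute, the sketch below gives the textbook paired t statistic and one-factor ANOVA F statistic using only the Python standard library. It is an illustration, not the analysis code used in this study, and the numbers in the example are made up. Converting the statistics to p-values requires the t and F distributions, which a statistics library would supply; that step is omitted here.

```python
import math
from statistics import mean, stdev


def paired_t(a, b):
    """Paired t statistic and degrees of freedom for matched samples a and b."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    return mean(diffs) / (stdev(diffs) / math.sqrt(n)), n - 1


def one_way_anova_f(groups):
    """F statistic with (between, within) degrees of freedom for a list of groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Made-up values for illustration only (not data from this study).
t_stat, t_df = paired_t([2, 4, 6], [1, 2, 3])
f_stat, df_b, df_w = one_way_anova_f([[1, 2, 3], [2, 3, 4], [5, 6, 7]])
print(t_stat, t_df, f_stat, df_b, df_w)
```

A result is declared significant at α = 0.05 when the F statistic exceeds the critical value for its degrees of freedom, which is the comparison reported as F versus F-critical in the bottom panel.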

Skill Assessment

For a portion of the dataset (i.e., 2 experts, 4 non-experts), we used principal component analysis (PCA) to reduce the dimensionality of the Cartesian instrument velocity signals. We then trained a binary support vector machine (SVM) classifier on a portion of the data and used the trained classifier to perform expert versus non-expert binary classification. This methodology correctly stratified our subjects according to their respective skill levels with 83.33% accuracy for the suturing task and 76.25% accuracy for the manipulation task. Detailed automated analysis on this and expanded datasets will be reported separately.

Discussion

Clinical skill measures should assess instrument-environment interactions. In our recent review (Reiley et al [21]), we describe related work on use of instrument motion in surgical skill assessment. While instrument motion is measured accurately using the sensors built into the robot, the interaction and effects of tools with the environment (i.e., the patient or model) and additional tools such as needles and sutures are not captured in the kinematic motion data. Presently, instrument motion has been primarily used as an indicator of “clinical” skill. In our approach, we focus on “operational” skills for robotic surgery systems. Robotic surgery entails a complex man-machine interface. It is the complexity of this interface that creates steep learning curves, even for laparoscopically trained surgeons.

We describe a longitudinal study of robotic surgery trainees, including preliminary assessment of structured expert assessment (OSATS) and automatically computed statistics and skill measures. Operational skill effects can be completely captured using the telemetry obtained from the robotic system. With appropriate tasks and measures, separate learning curves can be derived. In particular, we noted very strong agreement between structured assessment of task performance using OSATS and computed master workspace measures (i.e., distance, volume, time). Additional computed measures, not described here, include camera motion effects, instrument motion measures, learning curves based on system events, learning curves based on abnormal events, and reactions to abnormal events.

We performed longitudinal analysis to develop our learning curves. This is an essential exercise towards the development of both training curricula and metrics that are discriminative of operational skill. As noted above, the measures of skill based on master manipulation show large differences between experts and non-experts and convergence of non-experts towards the experts as training progresses. Ab initio training, where operational skills and system orientation are most important, is only the first step in robotic surgery training. Additional training modules will add port constraints, instrument collisions, and more complex tasks. The immediate application of these methods is in ab initio proficiency-based (as opposed to time-limited) training regimens for robotic surgery trainees, and the first applications of the developed methods in a training program (Johns Hopkins Division of Head and Neck Surgery) are currently underway.

The analysis employed only a portion of our data and reports only some of the measures computed. Additional studies involving larger datasets and alternative methods are underway. In ongoing work, we measure response times to errors and their development curves as additional skill measures. Finally, the automated skill classification accuracies (83.33% for suturing and 76.25% for manipulation) highlight the quality of our data. In ongoing work, we use alternative supervised and unsupervised multi-class classification for both operational and surgical task skills. The impact of varying amounts and types of training between benchmarking sessions is also currently being investigated.

Acknowledgments

Grant Support: This work was supported in part by NIH grant # 1R21EB009143-01A1, National Science Foundation grants #0941362, and #0931805, and Johns Hopkins University internal funds. A collaborative agreement with Intuitive Surgical, Inc with no financial support provided access to the API data. The sponsors played no role in study development, data collection, interpretation of data, or analysis.

The authors thank Dr. Myriam Curet and Dr. Catherine Mohr for their insightful comments and help with this work.

Footnotes

Author Contributions: Author Kumar led the study design, was involved in data acquisition, developed the analysis methods, and performed the evaluation. Authors Jog, and Vagvolgyi were involved in the data collection. Authors Nguyen, Chen, and Yuh were involved in clinical interpretation of the data and analysis. Authors Hager and Yuh collaborated with Kumar in the study design, and participated in the interpretation of the data and analysis.

Disclosures: This work was supported by the NSF and NIH grants above. The study sponsors played no role in collection, analysis or interpretation of data. Dr. Kumar was employed by Intuitive Surgical prior to his moving to Johns Hopkins five years ago. Intuitive Surgical is a publicly traded company. The other authors (Kumar, Jog, Tantillo, Vagvolgyi, Chen, Nguyen, Hager) may own or have owned a small number of Intuitive securities (<$10,000).

Contributor Information

Rajesh Kumar, Department of Computer Science, Johns Hopkins University, 3400 North Charles St., Baltimore MD.

Amod Jog, Department of Computer Science, Johns Hopkins University, 3400 North Charles St., Baltimore MD.

Balazs Vagvolgyi, Department of Computer Science, Johns Hopkins University, 3400 North Charles St., Baltimore MD.

Hiep Nguyen, Department of Urology, Children’s Hospital, Boston, MA.

Gregory Hager, Department of Computer Science, Johns Hopkins University, 3400 North Charles St., Baltimore MD.

Chi Chiung Grace Chen, Department of Obstetrics and Gynecology, Johns Hopkins Hospital, Baltimore, MD.

David Yuh, Section of Cardiac Surgery, Yale University School of Medicine, New Haven, CT.

References

- 1. Intuitive Surgical Inc. 2010 http://www.intuitivesurgical.com/products/faq/index.aspx.

- 2. Novick RJ, Fox SA, Kiaii BB, Stitt LW, Rayman R, Kodera K, et al. Analysis of the learning curve in telerobotic, beating heart coronary artery bypass grafting: a 90 patient experience. The Annals of thoracic surgery. 2003;76(3):749–753. doi: 10.1016/s0003-4975(03)00680-5.

- 3. Rodriguez E, Chitwood WR., Jr Outcomes in robotic cardiac surgery. Journal of Robotic Surgery. 2007;1:19–23. doi: 10.1007/s11701-006-0004-8.

- 4. Chitwood WR., Jr Current status of endoscopic and robotic mitral valve surgery. The Annals of thoracic surgery. 2005;79(6):S2248–S2253. doi: 10.1016/j.athoracsur.2005.02.079.

- 5. Ro CY, Toumpoulis IK, Jr, RCA, Imielinska C, Jebara T, Shin SH, et al. A novel drill set for the enhancement and assessment of robotic surgical performance. Studies in Health Technology and Informatics. Proc Medicine Meeting Virtual Reality(MMVR) 2005;111:418–421.

- 6. Judkins TN, Oleynikov D, Stergiou N. Objective evaluation of expert and novice performance during robotic surgical training tasks. Surgical Endoscopy. 2009;23(3):590–597. doi: 10.1007/s00464-008-9933-9.

- 7. Sarle R, Tewari A, Shrivastava A, Peabody J, Menon M. Surgical robotics and laparoscopic training drills. Journal of Endourology. 2004;18(1):63–67. doi: 10.1089/089277904322836703.

- 8. Narazaki K, Oleynikov D, Stergiou N. Objective assessment of proficiency with bimanual inanimate tasks in robotic laparoscopy. Journal of Laparoendoscopic & Advanced Surgical Techniques. 2007;17(1):47–52. doi: 10.1089/lap.2006.05101.

- 9. Datta V, Mackay S, Mandalia M, Darzi A. The use of electromagnetic motion tracking analysis to objectively measure open surgical skill in the laboratory-based model. Journal of the American College of Surgeons. 2001;193(5):479–485. doi: 10.1016/s1072-7515(01)01041-9.

- 10. DiMaio S, Hasser C. The da Vinci Research Interface, Systems and Architectures for Computer Assisted Interventions workshop (MICCAI 2008); 2008. http://www.midasjournal.org/browse/publication/622.

- 11. Mohr C, Curet M. The Intuitive Surgical System Skill Practicum. Intuitive Surgical, Inc; 2008.

- 12. Martin JA, Regehr G, Reznick R, MacRae H, Murnaghan J, Hutchison C, et al. Objective structured assessment of technical skill (OSATS) for surgical residents. British Journal of Surgery. 1997;84(2):273–278. doi: 10.1046/j.1365-2168.1997.02502.x.

- 13. Faulkner H, Regehr G, Martin J, Reznick R. Validation of an objective structured assessment of technical skill for surgical residents. Academic Medicine. 1996;71(12):1363. doi: 10.1097/00001888-199612000-00023.

- 14. Darzi A, Smith S, Taffinder N. Assessing operative skill. British Medical Journal. 1999;318(7188):887. doi: 10.1136/bmj.318.7188.887.

- 15. Hernandez JD, Bann SD, Munz Y, Moorthy K, Datta V, Martin S, et al. Qualitative and quantitative analysis of the learning curve of a simulated surgical task on the da Vinci system. Surgical Endoscopy. 2004;18:372–378. doi: 10.1007/s00464-003-9047-3.

- 16. Lin HC, Shafran I, Yuh D, Hager GD. Towards automatic skill evaluation: Detection and segmentation of robot-assisted surgical motions. Computer Aided Surgery. 2006;11(5):220–230. doi: 10.3109/10929080600989189.

- 17. Verner L, Oleynikov D, Hotmann S, Zhukov L. Measurements of level of surgical expertise using flight path analysis from Da Vinci robotic surgical system. Studies in Health Technology and Informatics. 2003;94:373–378.

- 18. Gallagher AG, Ritter EM, Satava RM. Fundamental principles of validation, and reliability: rigorous science for the assessment of surgical education and training. Surgical Endoscopy. 2003;17:1525–1529. doi: 10.1007/s00464-003-0035-4.

- 19. Reiley CE, Hager GD. Task versus Subtask Surgical Skill Evaluation of Robotic Minimally Invasive Surgery. Proc Medical Image Computing and Computer-Assisted Intervention (MICCAI) 2009:435–442. doi: 10.1007/978-3-642-04268-3_54.

- 20. Gallagher AG, Richie K, McClure N, McGuigan J. Objective psychomotor skills assessment of experienced, junior, and novice laparoscopists with virtual reality. World Journal of Surgery. 2001;25(11):1478–1483. doi: 10.1007/s00268-001-0133-1.

- 21. Reiley CE, Lin HC, Yuh DD, Hager GD. A Review of Methods for Objective Surgical Skill Evaluation. Surgical Endoscopy. 2011;25(2):356–66. doi: 10.1007/s00464-010-1190-z.