Abstract

Growing interest in promoting cross-disciplinary collaboration among health scientists has prompted several federal agencies, including the NIH, to establish large, multicenter initiatives intended to foster collaborative research and training. In order to assess whether these initiatives are effective in promoting scientific collaboration that ultimately results in public health improvements, it is necessary to develop new strategies for evaluating research processes and products as well as the longer-term societal outcomes associated with these programs. Ideally, evaluative measures should be administered over the entire course of large initiatives, including their near-term and later phases. The present study focuses on the development of new tools for assessing the readiness for collaboration among health scientists at the outset (during Year One) of their participation in the National Cancer Institute’s Transdisciplinary Research on Energetics and Cancer (TREC) initiative. Indexes of collaborative readiness, along with additional measures of near-term collaborative processes, were administered as part of the TREC Year-One evaluation survey. Additionally, early progress toward scientific collaboration and integration was assessed, using a protocol for evaluating written research products. Results from the Year-One survey and the ratings of written products provide evidence of cross-disciplinary collaboration among participants during the first year of the initiative, and also reveal opportunities for enhancing collaborative processes and outcomes during subsequent phases of the project. The implications of these findings for future evaluations of team science initiatives are discussed.

Introduction

To facilitate scientific efforts to solve complex public health problems such as cancer incidence, morbidity, and obesity-associated mortality, multidisciplinary teams of investigators drawn from a variety of different fields are being formed.1,2 The major goals of these teams are to develop new methods, theories, and conceptual models that integrate several disciplinary perspectives. Cross-disciplinary scientific collaboration is intended to move areas of research forward in ways that individual investigators working from a single disciplinary perspective could not accomplish on their own or in a timely manner.3,4

Conducting team science that bridges multiple disciplines can be expensive and labor intensive.5 Therefore, it is important to identify and understand those conditions that facilitate or hinder effective cross-disciplinary collaboration.6 Whereas the enhancement of public health is perhaps the most crucial intended outcome of cross-disciplinary health research, identifying the gains in health status attributable to a particular research program can be quite difficult, especially during the early phases of a team science initiative. Research takes time to develop, conduct, disseminate, and implement. The stage of research and the state of the infrastructure for translating research into tangible health benefits influence the length of time it takes for these improvements to become evident at the community and societal levels. In the interim, near-term markers of successful collaboration and integration are necessary for evaluating scientific progress during a research initiative.7 This paper presents new methods for assessing the antecedents of effective cross-disciplinary collaboration and near-term markers of collaborative processes and outcomes as evaluated during the early phase of a large-scale research and training initiative in the field of energetics and cancer.

Transdisciplinary Research on Energetics and Cancer Initiative

During the fall of 2005, the National Cancer Institute (NCI) established the Transdisciplinary Research on Energetics and Cancer (TREC) initiative comprising four research centers and one coordination center.8 The TREC centers are intended to foster collaboration among transdisciplinary teams of scientists with the goal of accelerating progress toward reducing cancer incidence, morbidity, and mortality associated with energy imbalance, obesity, and low levels of physical activity. They also aim to conduct research to elucidate the mechanisms linking energetics and cancer and to provide training opportunities for new and established scientists who can carry out integrative research on energetics and energy balance (www.compass.fhcrc.org/trec). This $54-million initiative was created through a combination of funding mechanisms that enable four research centers to have the support of a centralized coordination center. NCI is partnering with the centers to support developmental projects both within and between centers as well as an initiative-wide evaluation process.9

Previous evaluation studies have assessed collaborative processes and outcomes during the midterm or later stages of an initiative,7,10 but to the authors’ knowledge, no study to date has assessed antecedent factors present at the outset of an initiative that may influence the effectiveness of team collaboration over the duration of the program. The TREC Year-One evaluation study, summarized below, contributes to the science of team science by providing newly developed metrics for assessing collaboration-enhancing or -impairing factors present during the first year of a large-scale, cross-disciplinary research and training initiative, and for evaluating the empirical links between these antecedent conditions and near-term markers of scientific collaboration and integration.

Collaborative Readiness and Capacity

A number of circumstances can influence a team’s prospects for effective cross-disciplinary collaboration during the early stages of an initiative. These factors may enhance or hinder collaborative processes during the proposal-development phase, during preparations for project launch once funding has been received, and during the initial months once the project has commenced. They may also affect the longer-term success of the collaboration, its scientific outcomes, and, ultimately, the public health impacts of an initiative. A variety of circumstances that facilitate or constrain effective teamwork during the initial stages of a project have been identified as collaborative-readiness factors in earlier evaluations of cross-disciplinary scientific projects and research centers.6,7,11 In this discussion, at least three categories of collaborative-readiness factors are considered: (1) contextual–environmental conditions (e.g., institutional resources and supports or barriers to cross-departmental collaboration; the environmental proximity or electronic connectivity of investigators, or both); (2) intrapersonal characteristics (e.g., research orientation, leadership qualities); and (3) interpersonal factors (e.g., group size, the span of disciplines represented, investigators’ histories of collaboration on earlier projects).

Contextual–environmental influences on collaboration (e.g., environmental proximity among investigators, bureaucratic administrative infrastructures at universities or research labs) are more hard-wired into the physical and social environment, whereas intrapersonal and interpersonal collaborative-readiness factors are, perhaps, more malleable human factors whose qualities change over time as a result of collaborative processes. Contextual factors such as geographic constraints on collaboration and institutional resources may also change over time, but these changes are perhaps more gradual and difficult to accomplish due to the rigidity of environmental and bureaucratic structures. Presumably, contextual–environmental conditions as well as intrapersonal and interpersonal factors interact with each other to influence the overall collaborative readiness of a scientific team, or the extent to which team members are likely to achieve the collaborative goals specified at the outset of the project.

Olson and Olson,11 in their studies of collaboration among team members who are geographically dispersed, have emphasized the importance of technology readiness, or the extent to which participants have the requisite technical infrastructure and expertise to establish and sustain electronic communications and information exchange with each other. In the context of the present study, collaborative readiness is conceptualized more broadly to encompass motivational factors; leadership resources; investigators’ histories of prior collaboration and informal social relations with each other; spatial proximity; electronic connectivity; and other institutional supports for centers and teams (see also Stokols et al.5–7). Considering the diversity of collaborative-readiness factors, it is important not only to identify the range of potential influences on teamwork but also to understand which factors exert the greatest impact on team members’ collaborative processes and outcomes.

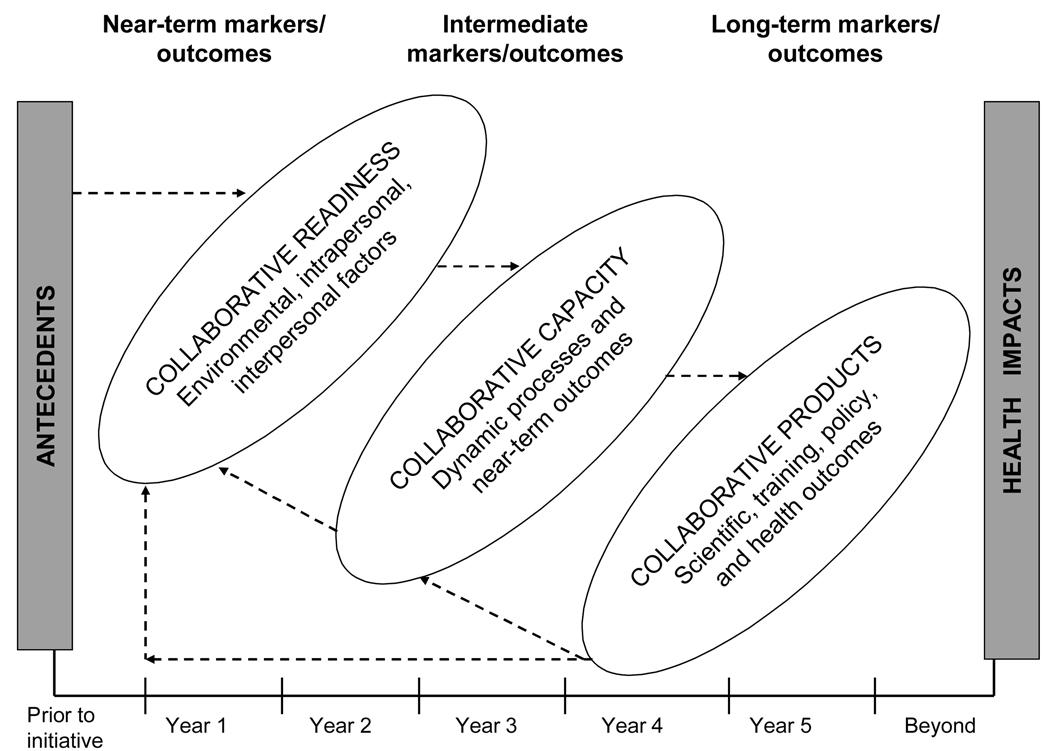

As a project moves into its mid- and later phases of development, the notion of readiness for collaboration becomes less salient or relevant. During the later stages of the project, the contextual–environmental factors, intrapersonal factors, and interpersonal factors that facilitate or constrain a team’s effectiveness are better construed as determinants of collaborative capacity among investigators rather than as readiness factors that influence participants’ prospects for effective collaboration primarily at the outset of an initiative. A conceptual model of the temporal relationships among collaboration-readiness factors, collaborative capacity, and collaborative outcomes is shown in Figure 1.

Figure 1.

Conceptual model for evaluating collaborative initiatives

Process and Product Measures of Scientific Collaboration

At least two methodologic approaches have been used for assessing the levels of cross-disciplinary collaboration and integration achieved by the members of research teams and centers. One strategy is to assess the ongoing processes of collaboration and scientific synergy as they occur within particular research and training settings such as investigators’ offices, conference rooms, and laboratories. A second approach is to evaluate the cross-disciplinary qualities (e.g., the quality and scope of integration among multiple disciplinary perspectives) reflected in tangible collaborative products such as manuscripts, grant proposals, published journal articles, and books.12 These research deliverables can serve as markers of collaborative progress during both the initial and later phases of a cross-disciplinary initiative. Although product assessments do not capture the dynamics of cross-disciplinary collaboration as it occurs over time, the development of objective criteria for evaluating the integrative scope and quality of written products has the advantage of establishing standardized criteria that can be applied reliably and validly across a wide range of research and training projects. In the current evaluation of the NCI TREC initiative, both process and product measures were used to gauge early progress toward cross-disciplinary collaboration among TREC investigators.

Two related studies are reported below. In the first, Year-One survey measures were developed and administered to assess collaborative-readiness factors and near-term (i.e., Year-One) evidence of cross-disciplinary collaboration within the TREC centers. In the second, an independent reviewer-rating protocol was designed to evaluate the integrative qualities of early-term research products—specifically, pilot-project grant proposals submitted by investigators during the first year of the TREC initiative. These two components of the TREC evaluation study extend earlier research in the field of team science by providing new methods for (1) assessing collaboration readiness among the members of cross-disciplinary research teams and centers and (2) gauging progress toward scientific collaboration and integration during the initial phase of a 5-year NCI scientific centers initiative, evidenced through survey measures of collaborative processes and inter-rater evaluations of the cross-disciplinary qualities of team members’ research products.

Methods of the TREC Year-One Survey

This study involved the development and implementation of a Year-One survey for measuring collaborative-readiness factors and early evidence of scientific collaboration during the first year of the TREC initiative. Development of the online Year-One survey was a collaborative effort between representatives of NCI’s evaluation team and the TREC coordination center, which gathered input from TREC center directors through the TREC evaluation working group over the course of the survey’s development and administration.

The TREC evaluation working group comprises members from all the TREC centers, the TREC coordination center, and the NCI evaluation team, which represents all partners within the TREC-initiative cooperative agreement.

The NCI evaluation team comprises NCI-affiliated staff who participate in evaluation activities for the TREC initiative. They work with the TREC evaluation working group on relevant activities, provide content leadership that complements expertise at the TREC centers, and consider issues at the programmatic level, keeping broader evaluation interests at hand.

The TREC coordination center, supported by an NCI U01 grant, serves as a central resource for the TREC research centers. It handles activities and functions such as central communication and evaluation activities, training, and the conduct of original research, making it more than just an administrative unit. The coordination center provided intensive support in facilitating the evaluation of the four centers, and therefore members of this center were not included as research subjects in the current evaluation.

A TREC center, or TREC research center, is an institution-based research unit supported by an NCI U54 grant. Each research center is located at a specific university or cancer center and coordinates a variety of research projects, core resources for the individual center, training activities, and developmental grants.

Participants

Investigators, including center directors, co-investigators, and research staff from the four research centers who were active in the TREC initiative at the start of data collection, were eligible for the study. As mentioned previously, because of the central role played by the coordination center in conducting the evaluation, the coordination center’s investigators and staff were not included in the Year-One survey. The final sample size for the evaluation was 56 of a possible total 76 participants, resulting in a response rate of 74%.

Approval was received from the IRBs of the three agencies/institutions primarily involved in the development of the survey: the Fred Hutchinson Cancer Research Center (the coordination center); Westat Inc., the third-party contractor; and the NCI. Each respondent was presented with the online consent form before he or she received the online survey.

Survey Measures

Several new survey instruments were created for the TREC Year-One evaluation survey. Additionally, some of the measures were adapted from earlier studies of cross-disciplinary research centers and teams.5,6,10 These measures, administered during the first 6 months of the TREC initiative, can be found online in their entirety (cancercontrol.cancer.gov/trec/TREC-Survey-2006-01-31.pdf). The major scales developed for the TREC survey are described below.

These scales are grouped into two major categories. The first category includes collaborative-readiness measures of respondents’ research orientations, as well as antecedent measures of collaborative readiness including their assessments of the institutional resources available to support TREC-related activities at the outset of the initiative, their reports of prior collaboration with TREC colleagues on pre-TREC projects, and the number of years in which they had participated in interdisciplinary or transdisciplinary research centers and projects prior to the TREC initiative. The second category includes near-term (first 6 months) measures of collaborative processes, namely, respondents’ overall impressions of their research center and their assessments of interpersonal collaboration and productivity, the cross-disciplinary activities in which they had engaged, and their expectations that their TREC-related projects would be successful in achieving their previously specified Year-One deliverables.

Measures of Collaborative-Readiness Factors

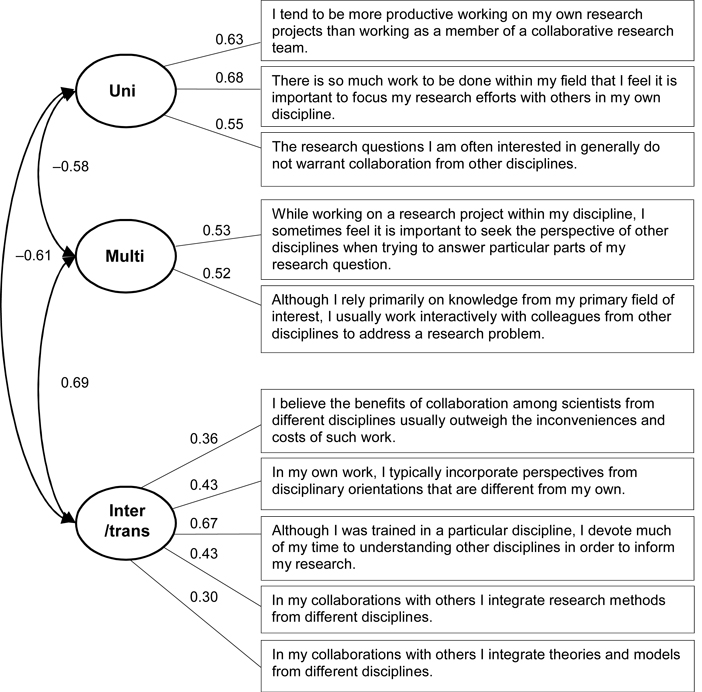

The research-orientation scale (Cronbach’s α=0.74) assessed the unidisciplinary or cross-disciplinary proclivity of the investigators’ values and attitudes toward research, using a 5-point scale ranging from strongly disagree to strongly agree. Previous measures of researchers’ orientations asked them to describe their transdisciplinary values and behaviors; in contrast, the research-orientation scale developed for this study was designed to assess the cross-disciplinary continuum as defined by Rosenfield13 by using items that measure each of four major research orientations: unidisciplinary, multidisciplinary, interdisciplinary, and transdisciplinary.

A unidisciplinary research orientation is characterized by the use of theories and methods drawn from a single field, whereas cross-disciplinary (i.e., multidisciplinary, interdisciplinary, transdisciplinary) research orientations entail the combined use of concepts and methods drawn from two or more distinct disciplines. Multidisciplinary collaborations involve researchers who share their own disciplinary insights and perspectives with colleagues who are trained and work in fields different from their own. Interdisciplinary collaborations involve a higher level of integration among the different disciplinary perspectives of team members than is evident in multidisciplinary collaborations. Transdisciplinary collaborations, like interdisciplinary ones, strive toward the integration of two or more disciplinary perspectives, but are uniquely characterized by the creation of novel conceptualizations and methodologic approaches that transcend or move beyond the individual disciplines represented among team members. The final items included in this scale are presented in Figure 2, along with a path diagram showing the grouping of the items and their factor loadings from a confirmatory-factor analysis (described below).

Figure 2.

Path diagram for the research-orientation scale, including factor loadings and factor correlations

The history-of-collaboration scale assessed the number of investigators at the participant’s TREC center with whom the participant had collaborated on prior projects (number of collaborators); it also assessed the participant’s satisfaction with the previous collaboration with each of those individuals, using a 5-point Likert scale ranging from not at all satisfied to completely satisfied (collaborative satisfaction rating). Also assessed were the number of years during which the respondent had participated in interdisciplinary or transdisciplinary research centers (number of years in inter/trans centers) and in interdisciplinary or transdisciplinary research projects (number of years in inter/trans projects) prior to the TREC initiative.

The institutional-resources scale (α=0.87) assessed investigators’ impressions of the availability and quality of resources (e.g., physical environment, computer support, personnel) at their centers for conducting TREC-related research. Each type of institutional resource was rated by respondents on 5-point Likert scales ranging from very poor to excellent.

Near-Term Markers of Collaborative Processes

The semantic-differential/impressions scale (α=0.98) assessed investigators’ impressions of their center as a whole, as well as how they felt as members of their TREC center. The items in this scale included divergent terms listed at each end of a 7-point continuum on which respondents rated their impressions (e.g., conflicted–harmonious; not supportive–supportive; scientifically fragmented–scientifically integrated).

The interpersonal-collaboration scale (α=0.92) assessed investigators’ perceptions of the interpersonal collaborative processes occurring at their TREC center. Examples of these interpersonal processes included conflict resolution, communication, trust, and social cohesion, rated on 5-point Likert scales ranging from very poor to excellent and from strongly disagree to strongly agree.

The collaborative-productivity scale (α=0.95) assessed investigators’ perceptions of collaborative productivity within their own TREC center, including the productivity of scientific meetings and the center’s overall productivity, on a 5-point Likert scale ranging from very poor to excellent. They were also asked to respond to the statement “In general, collaboration has improved your research productivity” on a 5-point scale ranging from strongly disagree to strongly agree.

The cross-disciplinary collaboration–activities scale (α=0.81) assessed the frequency with which each investigator engaged in collaborative activities outside his or her primary field, such as reading journals or attending conferences outside the primary field and establishing links with colleagues in different disciplines that led to collaborative work, on a 7-point scale ranging from never to weekly.

The TREC-related collaborative–activities scale (α=0.74) assessed the frequency with which each investigator engaged in TREC-specific activities, such as collaborating with fellow members of her or his own or another TREC center on new developmental projects or on activities other than developmental projects, on a 7-point scale ranging from never to weekly.

Finally, the completing-deliverables scale assessed investigators’ expectations that their research, core, and developmental projects would adhere to the agreed-upon schedule for completing Year-One deliverables, on a 5-point Likert scale ranging from highly unlikely to highly likely. All projects being conducted at the participant’s center were listed, and each project was rated separately.
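The internal-consistency values reported for the scales above are Cronbach’s α coefficients. The following is a minimal sketch of how such a coefficient can be computed, assuming complete item-level responses for every respondent. The TREC item data are not reproduced here, so the ratings are invented purely for illustration, and the function name `cronbach_alpha` is hypothetical rather than part of the study’s analysis code.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores.

    Assumes no missing values; uses sample (n - 1) variances throughout.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Variance of each item across respondents.
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    # Variance of each respondent's summed scale score.
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Invented 5-point ratings from four hypothetical respondents on three items.
example = [
    [4, 5, 4],
    [2, 3, 2],
    [5, 5, 4],
    [3, 3, 3],
]
print(round(cronbach_alpha(example), 2))
```

The coefficient rises toward 1 as the items covary more strongly relative to their individual variances, which is why scales with many closely related items (such as the 7-point impressions items) can reach very high values.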

Survey Procedures

The TREC Year-One survey was administered online by a third-party research contractor (Westat Inc.). Participants completed the Year-One questionnaire by clicking a link, sent directly to them by e-mail, to their individualized, password-protected survey. The survey required an average of 35 minutes to complete and was launched 6 months after the start date of the initial award. Participants were given 8 weeks to complete the survey. Reminder e-mails were sent to those who had not completed the questionnaire at 1-, 2-, and 3-week intervals.

Analyses and Results of the TREC Year-One Survey

Analyses of the Research-Orientation Scale

The research-orientation scale is a theoretically based measure designed to assess the cross-disciplinary continuum of researchers’ orientations as outlined by Rosenfield.13 Factor analyses were conducted to determine whether the relationships among the disciplinary types were, in fact, on a continuum, or best represented as separate factors. Exploratory analyses assessed the factor structure of the research-orientation scale by (1) identifying distinct factors and estimating the correlations between them; (2) computing factor loadings; and (3) eliminating items with poor loadings and high complexity (e.g., items that loaded highly on more than one factor). The final items included in each factor were selected on the basis of factor loadings, item clarity, minimum item redundancy, and the conceptual representativeness of each factor.

Although the use of four factors would be most consistent with the underlying theoretical model, the maximum-likelihood method and principal-axis factoring resulted in an ultra-Heywood case, indicating either that there were too many common factors or that there were not enough data to provide stable estimates of four distinct factors. Given the small sample size (n=56), there was likely insufficient power to extract the four theoretically hypothesized factors, even if they do exist. Convergence was obtained when extracting three factors using direct–oblique rotation employing a maximum-likelihood method. The Kaiser–Meyer–Olkin statistic (>0.6) indicates that the data are suitable for factor analysis. The nonsignificance (p=0.103) of the goodness-of-fit test is consistent with an adequate fit of the three-factor model.
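An ultra-Heywood case arises when an item’s estimated communality exceeds 1, which implies a negative unique variance. The sketch below illustrates that check under a simplifying assumption of uncorrelated factors, so that each communality is the sum of the item’s squared loadings. The loadings are hypothetical and are not the study’s estimates; the function name `classify_communalities` is likewise introduced only for illustration.

```python
def classify_communalities(loadings, tol=1e-9):
    """Label each item by its communality, assuming uncorrelated factors.

    loadings: one row per item, one column per factor.
    """
    labels = []
    for row in loadings:
        communality = sum(value ** 2 for value in row)
        uniqueness = 1.0 - communality
        if uniqueness < -tol:
            labels.append("ultra-Heywood")  # negative unique variance
        elif abs(uniqueness) <= tol:
            labels.append("Heywood")        # unique variance of zero
        else:
            labels.append("ok")
    return labels


# Hypothetical loadings for three items on two factors.
hypothetical_loadings = [
    [0.7, 0.2],  # communality 0.53
    [0.6, 0.8],  # communality 1.00
    [0.9, 0.5],  # communality 1.06
]
print(classify_communalities(hypothetical_loadings))
```

With few respondents per estimated parameter, such inadmissible estimates become more likely, which is consistent with the decision to extract fewer factors.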

Following this, a confirmatory-factor analysis was conducted, based on the theoretical underpinnings of the research-orientation scale and the results of the exploratory-factor analysis. Four items were excluded from the model due to low loadings, double loadings on meaningful factors, or conceptual inconsistency. Three alternative models were examined and compared, based on theoretical conceptualizations of the model. The goodness-of-fit indexes for the confirmatory-factor analysis were all within the range of 0–1. The final model included three factors with acceptable goodness-of-fit (CFI=0.95, SRMR=0.073, and RMSEA=0.00; CI=0.0, 0.099). A path diagram of the final model, including factor loadings and items, is shown in Figure 2.
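An RMSEA point estimate of 0.00 occurs whenever the model chi-square does not exceed its degrees of freedom. The sketch below uses one common form of the RMSEA formula to show this; some software divides by n rather than n − 1. The chi-square and degrees-of-freedom values are hypothetical, because the article reports only the resulting fit indexes.

```python
from math import sqrt


def rmsea(chi_square, df, n):
    """RMSEA point estimate from a model chi-square, its df, and sample size n."""
    if df <= 0 or n <= 1:
        raise ValueError("df must be positive and n must exceed 1")
    # The numerator is truncated at zero, so chi_square <= df gives RMSEA = 0.
    return sqrt(max(chi_square - df, 0.0) / (df * (n - 1)))


# Hypothetical chi-square values for a model with 24 df and n = 56.
print(rmsea(20.0, 24, 56))  # chi-square below df
print(rmsea(48.0, 24, 56))  # chi-square at twice df
```

Because the estimate is truncated at zero, a value of 0.00 in a small sample is best read together with its confidence interval, as reported above.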

Bivariate Correlations

The Pearson correlation coefficients among key study variables are listed in Table 1. Means and ranges for the variables are also included there. Key associations among research-orientation scale factors are described below.

Table 1.

Bivariate correlations, means, and ranges of key Year-One survey-study variables

| Variable | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1. # of collaborators | — | −0.22 | 0.25 | 0.46** | −0.01 | −0.21 | 0.38** | 0.42** | −0.08 | −0.19 | −0.17 | 0.09 | 0.36* | 0.36* |
| 2. Collaboration satisfaction | | — | 0.20 | −0.03 | 0.09 | 0.34* | −0.04 | 0.04 | 0.31* | 0.43** | 0.40** | −0.02 | 0.04 | 0.02 |
| 3. Cross-disciplinary collaboration activities | | | — | 0.20 | 0.20 | 0.04 | 0.23 | −0.13 | 0.26 | 0.19 | 0.23 | −0.35* | 0.52** | 0.45** |
| 4. TREC collaboration activities | | | | — | 0.12 | 0.08 | 0.01 | −0.01 | 0.28 | 0.10 | 0.09 | 0.00 | 0.32* | 0.34* |
| 5. Completing deliverables | | | | | — | 0.09 | −0.32* | −0.35* | 0.39** | 0.28* | 0.35* | −0.18 | −0.02 | −0.01 |
| 6. Institutional resources | | | | | | — | 0.08 | 0.00 | 0.30* | 0.41** | 0.48** | −0.05 | 0.30* | 0.08 |
| 7. # yrs inter/trans centers | | | | | | | — | 0.51** | −0.18 | −0.17 | −0.14 | 0.09 | 0.10 | 0.14 |
| 8. # yrs inter/trans projects | | | | | | | | — | −0.39** | −0.30* | −0.32* | −0.01 | −0.08 | 0.11 |
| 9. Impressions | | | | | | | | | — | 0.84** | 0.84** | −0.06 | 0.17 | −0.01 |
| 10. Interpersonal collaboration | | | | | | | | | | — | 0.88** | −0.09 | 0.06 | −0.07 |
| 11. Collaboration productivity | | | | | | | | | | | — | −0.13 | 0.25 | 0.04 |
| 12. Unidisciplinary | | | | | | | | | | | | — | −0.32* | −0.28* |
| 13. Multidisciplinary | | | | | | | | | | | | | — | 0.50** |
| 14. Interdisciplinary/transdisciplinary | | | | | | | | | | | | | | — |
| M | 7.72 | 4.55 | 4.97 | 3.56 | 4.30 | 4.19 | 4.96 | 10.31 | 5.63 | 4.32 | 4.26 | 3.82 | 4.54 | 4.26 |
| (Range) | (1–21) | (3.5–5) | (3–6.7) | (1–6) | (1–5) | (2.4–5) | (0–20) | (0–5) | (1.9–7) | (1.5–5) | (1–5) | (1.7–5) | (3–5) | (3–5) |

Note: n ranges from 45 to 56; dashes indicate a correlation of 1.0.

*p<0.05; **p<0.01

#, number; inter, interdisciplinary; trans, transdisciplinary; TREC, Transdisciplinary Research on Energetics and Cancer; yrs, years
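The table note indicates that the number of respondents varies across cells (45 to 56), which is what one would expect if each coefficient were computed from the respondents who answered both measures. The sketch below shows a Pearson correlation with that kind of pairwise deletion, together with the usual t statistic for testing a correlation against zero. Pairwise deletion is an assumption here, since the article does not state how missing data were handled, and the data values are invented.

```python
from math import sqrt


def pearson_pairwise(x, y):
    """Pearson r over cases where both values are present; returns (r, n)."""
    pairs = [(a, b) for a, b in zip(x, y) if a is not None and b is not None]
    n = len(pairs)
    if n < 3:
        raise ValueError("need at least three complete pairs")
    mean_x = sum(a for a, _ in pairs) / n
    mean_y = sum(b for _, b in pairs) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in pairs)
    sxx = sum((a - mean_x) ** 2 for a, _ in pairs)
    syy = sum((b - mean_y) ** 2 for _, b in pairs)
    return sxy / sqrt(sxx * syy), n


def t_statistic(r, n):
    """t statistic with n - 2 df for testing whether r differs from zero."""
    return r * sqrt((n - 2) / (1 - r ** 2))


# Invented scores; None marks a skipped item.
scale_a = [1, 2, 3, None, 5]
scale_b = [2, 1, 4, 3, None]
r, n = pearson_pairwise(scale_a, scale_b)
print(round(r, 3), n)
```

Because the effective n differs by cell, coefficients of similar size in the table need not reach the same significance level.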

Research-orientation scale

Those participants who scored higher on the unidisciplinary factor engaged in fewer cross-disciplinary collaborative activities. Additionally, those who scored higher on the unidisciplinary factor scored lower on both the multidisciplinary and interdisciplinary/transdisciplinary factors. Those who scored higher on the multidisciplinary factor tended to engage in more cross-disciplinary and TREC-related collaborative activities, had more collaborators, reported better collaborative productivity at their center, and perceived more institutional resources. Those who scored higher on the interdisciplinary/transdisciplinary factor engaged in more cross-disciplinary and TREC-related collaborative activities, and were also found to score higher on the multidisciplinary factor.

History of interdisciplinary/transdisciplinary centers and projects

The fewer the number of years of involvement in interdisciplinary/transdisciplinary centers and projects, the fewer the number of collaborators the participants reported having, and the more likely they were to believe that Year-One deliverables would be completed on time. Additionally, the fewer the number of years of involvement in interdisciplinary/transdisciplinary projects, the more positively the respondents rated their interpersonal collaborations, their collaborative productivity, their impressions of their centers, and their participation as a center member.

Institutional resources

The better the researcher judged his or her center’s institutional resources to be, the more positive were her or his impressions of the center and the more satisfied he or she was with previous collaborators. Additionally, the better a respondent’s perceptions of institutional resources, the more positively he or she rated collaborative productivity and interpersonal collaboration within the respective center.

Regression Analyses of Year-One Survey Data

The correlational and factor analyses summarized above provided a basis for exploring potential associations between nine predictor variables and three outcome variables. The predictors included institutional resources, years in interdisciplinary/transdisciplinary centers, number of collaborators, collaboration productivity, interpersonal collaboration, collaboration satisfaction rating, unidisciplinary factor, multidisciplinary factor, and interdisciplinary/transdisciplinary factor. The following outcomes were included: cross-disciplinary collaboration activities, semantic-differential/impressions scale, and the completing-deliverables scale. Given the exploratory nature of the analysis and to help ensure that the models were not over-parameterized, stepwise regression was used to identify significant predictors. To identify potentially significant independent variables in this exploratory analysis, a criterion of p<0.10 was used.

Tables 2–4 summarize the significant findings from the regression analyses. As shown in Table 2, the higher the ratings on the multidisciplinary and interdisciplinary/transdisciplinary factors, the more cross-disciplinary activities the participant engaged in. As shown in Table 3, participants’ impressions of their TREC center, and their feelings as a member of that center, were more positive when they rated the center’s collaborative productivity and interpersonal collaborative processes more highly and when they had spent fewer years in interdisciplinary/transdisciplinary centers prior to the TREC initiative. Table 4 indicates that participants were more likely to expect the Year-One deliverables to be completed on time when they rated the collaboration productivity of their center more favorably and when they had spent fewer years as members of interdisciplinary/transdisciplinary research centers prior to the TREC initiative.

Table 2.

Significant predictors (p<0.10) from stepwise regression analysis for outcome: cross-disciplinary collaboration activities^a

| Variable | Parameter estimate | Pr>\|t\| |
|---|---|---|
| Multidisciplinary factor | 0.58 | 0.010 |
| Interdisciplinary/transdisciplinary factor | 0.44 | 0.090 |

Note: R-square=0.310; n=45; df=2, 42.

^a Higher scores indicate more cross-disciplinary collaborative activities.

Table 3.

Significant predictors (p<0.10) from stepwise regression analysis for outcome: investigators’ impressions of their TREC center and as a TREC member (semantic-differential/impressions scale)ᵃ

| Variable | Parameter estimate | Pr>\|t\| |
|---|---|---|
| Collaboration productivity scale | 0.63 | 0.008 |
| Interpersonal collaboration scale | 0.64 | 0.018 |
| Number of yrs of inter/trans centers | −0.17 | 0.099 |

Note: R-square=0.753; n=45; df=3, 41.

ᵃ Higher scores indicate more positive impressions of center. inter, interdisciplinary; trans, transdisciplinary; TREC, Transdisciplinary Research on Energetics and Cancer; yrs, years.

Table 4.

Significant predictors (p<0.10) from stepwise regression analysis for outcome: investigators’ completing-deliverables scaleᵃ

| Variable | Parameter estimate | Pr>\|t\| |
|---|---|---|
| Number of yrs of inter/trans centers | −0.030 | 0.049 |
| Collaboration-productivity scale | 0.298 | 0.087 |

Note: R-square=0.195; n=45; df=2, 42.

ᵃ Higher scores correspond to greater optimism about completing deliverables. inter, interdisciplinary; trans, transdisciplinary; yrs, years.
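The degrees of freedom in the notes to Tables 2–4 follow from the sample size and the number of retained predictors: with n observations and k predictors plus an intercept, the model has k and n − k − 1 degrees of freedom. As a rough check, the sketch below recomputes these values and the overall F statistic implied by each reported R-square. The F values are derived here for illustration and are not reported in the source; the function name `overall_f` is hypothetical.

```python
def overall_f(r_squared, n, k):
    """Return (model df, residual df, overall F) for an OLS model with an intercept."""
    df_model = k
    df_resid = n - k - 1
    f = (r_squared / df_model) / ((1.0 - r_squared) / df_resid)
    return df_model, df_resid, f


# (R-square, n, number of retained predictors) as given in the table notes.
models = {
    "Table 2": (0.310, 45, 2),
    "Table 3": (0.753, 45, 3),
    "Table 4": (0.195, 45, 2),
}

for label, (r2, n, k) in models.items():
    df1, df2, f = overall_f(r2, n, k)
    print(f"{label}: df=({df1}, {df2}), F={f:.2f}")
```

Because the predictors were chosen by stepwise selection, F statistics computed this way overstate the evidence and are best read descriptively.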

Methods of the Written-Products Protocol

Rating the Cross-Disciplinary Qualities of Developmental Proposals

To assess the near-term outcomes of cross-disciplinary collaboration and productivity, a written-products protocol (cancercontrol.cancer.gov/trec/TREC-Protocol-2006-09-27.pdf) was developed for evaluating the integrative qualities and scope of TREC developmental-project proposals. Each TREC center was allotted $250,000 of developmental funds (for which investigators apply through an internal application process, receiving final approval by the TREC steering committee). These funds are intended, in part, to support TREC members’ efforts to facilitate collaborative research above and beyond what was originally proposed in each team’s individual application for establishing a TREC center at its institution. Developmental-research projects are intended to provide an avenue for integrating the conceptual and methodologic perspectives of TREC investigators trained in different fields. The timing of this analysis, using only developmental-project proposals submitted during the first 6 months of the initiative, meant that no cross-center proposals were included; the first call for cross-center proposals came later in the initiative.

Protocol Criteria

Members of the NCI evaluation team created evaluation criteria for assessing the degree of cross-disciplinary integration and the conceptual breadth or scope of the proposed developmental projects. These criteria were adapted from the written-products protocol developed by Mitrany and Stokols12 to assess the cross-disciplinary scope of doctoral dissertations conducted in an interdisciplinary graduate program. The dimensions of cross-disciplinarity assessed included: disciplines represented in the content of the proposal; levels of analysis reflected in the proposed research (i.e., molecular and cellular; individual, group, and interpersonal; organizational and institutional; community and regional; societal; national; and global); the type of cross-disciplinary integration reflected in each proposal (i.e., unidisciplinarity, multidisciplinarity, interdisciplinarity, or transdisciplinarity); the scope of transdisciplinary integration reflected in each proposal (i.e., the breadth or extent to which there is integration of analytic levels, analytic methods, and discipline-specific concepts, rated on a 10-point Likert scale ranging from none to substantial); an overall assessment of the general scope of each proposal (i.e., its breadth, or the extent to which various disciplines are represented and investigators from different disciplines, analytic levels, and analytic methods are included in the proposal, rated on a 10-point Likert scale ranging from none to substantial).

Procedures for Reviewing TREC Developmental-Project Proposals

Each developmental proposal was assessed independently by two reviewers using the TREC written-products protocol. A total of 21 proposals submitted during Year One of the TREC initiative were assessed. The reviewers were trained by members of the evaluation team to ensure consistent interpretations and applications of the written-products rating scales. Consensus conference calls were later held with a moderator and members of the NCI evaluation team. Members of the evaluation team included individuals with a wide range of cross-disciplinary clinical and research experience, as well as previous experience conducting evaluations of other large transdisciplinary initiatives. Discrepant scores on the various rating scales for each proposal were discussed among the group until consensus was reached.

Analyses and Results of the Written-Products Protocol

Inter-Rater Reliabilities

Inter-rater reliabilities based on Pearson’s correlations ranged from 0.24 to 0.69 across the different rating scales. The highest reliabilities were identified for the ratings of experimental types (0.69); the number of analytic levels (0.59); disciplines (0.59); the general scope reflected in the proposals (0.52); and the methods of analysis (0.41). The lowest inter-rater reliability (0.24) was found when the reviewers attempted to identify the specific type of cross-disciplinary integration reflected in the various proposals.
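As an illustration of how a Pearson-based inter-rater reliability can be computed for one rating scale, the following minimal sketch correlates two reviewers' scores across the same set of proposals. The ratings shown are hypothetical values chosen for the example, not the study's data, and `pearson_r` is an illustrative helper name.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical ratings of five proposals by two reviewers on one scale.
rater_1 = [1, 2, 3, 4, 5]
rater_2 = [2, 1, 4, 3, 5]

reliability = pearson_r(rater_1, rater_2)
print(round(reliability, 2))  # 0.8
```

Pearson's r captures how consistently two raters order the proposals, not whether they assign identical scores, so a high value can coexist with a systematic offset between raters.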

Descriptive Statistics

Disciplines represented within the developmental proposals

The average number of disciplines represented in the proposals was 3.7 (range 2.0–6.0); 43% of the proposals included three disciplines, whereas two, four, five, and six disciplines were each represented in 14% of the proposals. More than 35 different disciplines were represented across the 21 proposals.
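The reported mean and percentages can be checked for internal consistency. The per-category counts below are not given in the source; they are an inference from the reported percentages applied to 21 proposals (43% is about 9 proposals, 14% is about 3), so the sketch should be read as a plausibility check under that assumption.

```python
# Assumed counts of proposals by number of disciplines (inferred, not source data).
counts = {2: 3, 3: 9, 4: 3, 5: 3, 6: 3}

total = sum(counts.values())
mean = sum(k * v for k, v in counts.items()) / total
shares = {k: round(100 * v / total) for k, v in counts.items()}

print(total)           # 21
print(round(mean, 1))  # 3.7
print(shares)          # {2: 14, 3: 43, 4: 14, 5: 14, 6: 14}
```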

Levels of analysis included in the developmental proposals

Four levels of analysis were identified across the proposals: molecular and cellular; individual; group and interpersonal; and community and regional.

Types of cross-disciplinary integration reflected in the proposals

Fourteen of the developmental proposals were identified by the raters as being interdisciplinary; six were classified as unidisciplinary; one was rated as being multidisciplinary; and none was judged to be transdisciplinary.

Cross-center collaboration

No proposals were found to include researchers or resources from more than one TREC center.

Correlations among dimensions of cross-disciplinarity

Significant correlations among the dimensions of cross-disciplinarity, assessed for each of the 21 developmental proposals, are reported in Table 5. Generally, the higher the number of disciplines reflected in a proposal, the broader its integrative scope (r=0.90) and the larger its number of analytic levels (r=0.70), as rated by independent reviewers of the proposal. Also, the higher the type of cross-disciplinarity (per Rosenfield’s continuum13) reflected in a proposal, the broader its overall scope was judged to be (r=0.68).

Table 5.

Bivariate correlations among key written-products study variables

| Variable | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| 1. Cross-disciplinary integration type | – | 0.68** | 0.40* | 0.21 | 0.20 | 0.37 |
| 2. General scope | | – | 0.90** | 0.74** | 0.38 | 0.67** |
| 3. Total proposal disciplines | | | – | 0.70** | 0.31 | 0.65** |
| 4. Total proposal analysis levels | | | | – | 0.49* | 0.62** |
| 5. Total proposal experiment types | | | | | – | 0.69** |
| 6. Total methods of analysis | | | | | | – |

Note: N=21; dashes indicate a correlation of 1.0. *p<0.05; **p<0.01.

Discussion

This study contributes to the science of team science by (1) developing and testing new evaluation research tools (i.e., the TREC Year-One survey and the written-products protocol); and (2) opening new avenues of investigation for evaluating the empirical links between collaboration readiness and near-term collaborative processes and products in the context of large-scale, cross-disciplinary research and training initiatives. The overall response rate for the TREC Year-One survey was 74%, but the overall sample size for this initial phase of the TREC evaluation study was relatively small (i.e., n=56 survey participants; n=21 developmental proposals). Given the small sample size, the analyses should be considered exploratory and the results preliminary.

The measures developed for the Year-One survey demonstrated good internal reliability (α range=0.74–0.98). The most novel measure developed in this study was the research-orientation scale, designed to assess the four facets of disciplinary collaboration ranging from unidisciplinarity to transdisciplinarity. Analyses clearly demonstrated that there are distinct factors within this scale, although—likely owing to the small sample size—it is not clear whether this scale represents four distinct factors as conceptualized by Rosenfield13 or if three factors (unidisciplinary, multidisciplinary, and interdisciplinary/transdisciplinary) better represent the cross-disciplinary continuum. Interestingly, there is an ongoing debate in the science of team science literature about the differentiation between interdisciplinary and transdisciplinary collaboration.14,15 Overall the current study found that those who scored high on the unidisciplinary factor scored lower on the multidisciplinary and interdisciplinary/transdisciplinary factors. Additionally, the cross-disciplinary aspects of the scale, the multidisciplinary and the transdisciplinary factors, were most strongly related.

The empirical associations observed in this study between the research-orientation–scale factors and other survey scales provide additional support for the conceptual factors, and shed light on scientists’ attitudes toward cross-disciplinary collaboration. For instance, those who scored higher on the unidisciplinary factor reported fewer cross-disciplinary collaborative activities, whereas those who scored higher on the multidisciplinary and interdisciplinary/transdisciplinary factors reported more cross-disciplinary and TREC-related collaborative activities. These relationships were corroborated through additional regression analyses. The reported finding that an investigator’s cross-disciplinary research orientation is related to greater engagement in cross-disciplinary activities (on a self-reported index of collaborative behaviors) offers preliminary cross-validation of the conceptual assumptions underlying the development of the research-orientation scale. Additional support for these relationships involves the number of collaborators associated with the three research-orientation–scale factors. Those who scored higher on the multidisciplinary and interdisciplinary/transdisciplinary factors reported more collaborators prior to TREC, whereas the unidisciplinary factor was not associated with the number of collaborators prior to TREC. The inverse relationship between scores on the unidisciplinary factor and scores on the multidisciplinary and interdisciplinary/transdisciplinary factors suggests that these orientations may be mutually exclusive. Further examinations of these factors should aim to confirm this hypothesis. A logical next step would be to investigate whether individuals who begin a transdisciplinary initiative like TREC with a unidisciplinary orientation change over time as they engage in transdisciplinary collaborations.

It was also found that those who scored higher on the multidisciplinary factor felt that their center had more institutional resources. This finding suggests either that investigators with more resources might be better equipped to engage in collaborative endeavors with researchers in disparate disciplines, or that working with investigators from other disciplines might increase available resources. Future research is needed to further understand this relationship.

The number of years a researcher had been involved in pre-TREC interdisciplinary/transdisciplinary centers and projects revealed interesting associations among collaborative attitudes that may reflect certain challenges inherent in interdisciplinary/transdisciplinary collaboration. For instance, the fewer years a researcher had been involved in interdisciplinary/transdisciplinary projects prior to the TREC initiative, the more positive were his or her attitudes toward the respective TREC center’s collaborative productivity and interpersonal collaboration; his or her impressions of the center; and his or her feelings as a member of that center. Conversely, those respondents who reported a greater number of years involved in interdisciplinary/transdisciplinary centers and projects rated these attitudinal factors less positively. A possible interpretation of this finding is that it reflects respondents’ realistic understanding of the substantial time and energy required to develop interpersonal, physical, and funding infrastructures for scientific collaboration. Alternatively, the more-experienced investigators in cross-disciplinary initiatives may be more likely to perceive the TREC project as laborious and time-consuming compared to other program projects (e.g., P01, P50, or multisite trials) that may be funded at their centers. Despite these findings, it is important to note that the majority of responses by the participants were in the upper range of the scale; that is, overall the investigators rated their experiences quite positively (see means and ranges in Table 1).

Investigators’ perceptions of greater institutional resources at their TREC centers were related to a more positive outlook for a variety of collaborative processes and outcomes (e.g., as reflected in their more-positive ratings of their center, their confidence in achieving transdisciplinary research and training goals, the collaborative productivity of their center, and the interpersonal qualities of their collaborations). Perhaps institutional resources provide a stable foundation for researchers that enables them to more effectively address the challenges of cross-disciplinary science and training. Moreover, not having to compete for scarce resources may facilitate greater trust and cohesion among center members as well as more favorable assessments of the lead principal investigators. Importantly, feelings of trust are an essential prerequisite for effective collaboration in cross-disciplinary teams.6,16–18

Finally, the collaborative-productivity and interpersonal-collaboration scales included in the Year-One survey were associated with investigators’ more-positive overall impressions of their center and more favorable feelings as members of the center. These associations suggest that the more favorably an investigator perceives the productivity and interpersonal relationships in a center, the more positive will be her or his overall assessment of the center. It remains to be determined in future studies whether more-positive assessments and interpersonal relationships among members of a cross-disciplinary center result in higher levels of research productivity and more-significant, longer-term impacts on science and society.

Turning to the ratings of the TREC investigators’ developmental proposals, the written-products protocol revealed evidence of successful collaboration and disciplinary integration during the first year of this large-scale, cross-disciplinary initiative. Within the 21 proposals submitted during the first 6 months of the initiative, more than 35 disciplines and four levels of analysis were represented. Thus, during the start-up phase of the TREC initiative, investigators not only had been able to launch their initially proposed research programs but also had made considerable progress in developing new collaborative studies, many of which were judged by independent reviewers as being broadly interdisciplinary in scope. The lack of proposals of a transdisciplinary nature is most likely due to the constraints of doing this work so soon after the initiative was funded. It is anticipated that analyses of subsequent developmental proposals in future years of the initiative will find them more transdisciplinary in their scope and orientation. Due to its timing, the near-term analysis of developmental-project proposals was limited to within-center projects; efforts by NCI, the TREC coordination center, and the TREC steering committee have been ongoing to support collaboration among the members of multiple TREC centers. An initial review of the developmental-project proposals submitted after the completion of these analyses indicated that cross-center collaborations were already taking place.

Limitations and Future Directions

As noted earlier, the results of this study are necessarily exploratory and preliminary due to the small size of the study sample. Future investigations should incorporate both larger sample sizes and other cross-disciplinary groups of researchers to validate this study’s results, especially those analyses using the research-orientation scale and the regression models. Additionally, measures of collaborative readiness and the written-products protocol should also be administered across multiple initiatives in order to more firmly establish the psychometric properties of the scale and to assess its applicability across multiple research teams and settings. In fact, the research-orientation–scale protocol developed in this study is currently being administered to investigators participating in another large-scale, NCI cross-disciplinary initiative. Along these lines, an important direction for future research is to enlarge the research-orientation–scale item pool to ensure that the conceptual underpinnings of the scale are well-represented, increasing the number of items per factor and maximizing the factor loadings. For instance, the inclusion of additional interdisciplinary items might increase the likelihood of identifying interdisciplinarity and transdisciplinarity as separable factors in a larger sample.

The response rate to the Year-One survey was lower than expected. Although evaluation was explicitly indicated in the cooperative agreement for the initiative and included as a role for the coordination center, many investigators felt that they were not aware of the evaluation component as intended before committing to participate in the grant submission, and thus possibly did not have buy-in regarding the importance of participating in the evaluation; they also reported feeling that the communication regarding the specific evaluation efforts conducted in the first year was not sufficient, and that an adequate participatory process was not used to fully engage all investigators. Confidentiality agreements limit the capacity at this time to more clearly differentiate who did not respond to the survey. Some hypotheses include suppositions that the nonresponders were “loner” investigator types, were individuals with a small percentage of time to devote to the TREC initiative, or were individuals overburdened by starting up projects. Therefore, it is unclear whether the nonresponders were not ready to engage in transdisciplinary research collaboration or simply were not ready to engage in evaluation efforts perceived as peripheral to their scientific mission.

Another methodologic limitation imposed by the small sample size was the difficulty of conducting analyses linking the Year-One survey data with the developmental-proposal ratings. Twenty-six individuals listed as investigators in the 21 developmental proposals had also completed the Year-One survey. These researcher/proposal pairs were used to explore the relationships between participants’ self-reports of collaborative readiness and the independent reviewers’ external ratings of developmental-project proposals in terms of their cross-disciplinary integration and overall scope. Significant associations between the survey responses and the proposal ratings were negligible, possibly due to the small number of investigators for whom both survey and proposal data were available.ᵃ

The written-products protocol assesses behavioral evidence of cross-disciplinary integration that can be gathered over the course of an initiative to gauge changes in the quantity and qualities of collaborative products. The consensual rating procedure used in this study suggests that reviewers’ assessments of the developmental proposals were ultimately reliable. However, the inter-rater reliabilities of the reviewers prior to the consensus process were somewhat low, thereby potentially limiting the generalizability of this protocol to other research teams and settings. In some cases, the reviewers were challenged by the breadth of the scientific content of the proposals, which increased the need for the consensus process. It is recommended that additional refinements be made to this tool in order to enhance the clarity of the protocol criteria and the levels of inter-rater reliability on each evaluative dimension. More-detailed descriptions of the criteria and the inclusion of concrete examples (e.g., narrow vs broad integrative scope) are likely to facilitate greater accuracy and consistency of reviewers’ ratings of research products in future studies.

An additional limitation of this study is the retrospective measurement of antecedents and the collection of baseline data several months into the award cycle. Unfortunately, the timing of the award and the necessity of involving the coordination center and other TREC members in planning the evaluation study precluded starting the evaluation from Day 1. It was not possible to know what centers or groups of investigators were going to be funded before they received the award. Also, in order to establish buy-in of the investigators for the evaluation, time was needed for the participatory development of the baseline measures. If baseline measurement at the immediate onset of the award is desired, then a participatory process cannot occur and it is likely that a mandate for evaluation by the funding agency can have alternative impacts and limitations that will need to be taken into account.

The coordination center is a unique and important feature of this initiative, but because of its role in facilitating the evaluation and given its priorities on administration over scientific research, the coordination center itself was not evaluated. This decision was based primarily on resource and potential-bias issues. In future studies, the broader evaluation of the structural organization of the initiative as well as the collaborative factors relevant to the coordination center should be examined. This would be accomplished best through an evaluation process conducted fully by a team external to the initiative.

In conclusion, this study was conducted during the start-up phase of a 5-year, transdisciplinary-center initiative. Subsequent studies will be needed to determine the empirical links between collaborative-readiness factors at the outset of an initiative and subsequent collaborative processes and outcomes. Further investigations are needed to identify the highest-leverage determinants of collaboration readiness and capacity, that is, those that are linked most closely to important scientific and health advances as they emerge over the course of a team science initiative. A broader understanding of the relationships among collaborative-readiness factors, collaborative capacity, and longer-term collaborative impacts on health science, clinical practice, and population well-being will enable funding agencies to more effectively identify and support the teams of researchers with the greatest potential to succeed in complex cross-disciplinary research.

Acknowledgments

This article is based on a paper presented at the National Cancer Institute Conference on The Science of Team Science: Assessing the Value of Transdisciplinary Research on October 30–31, 2006, in Bethesda MD. This research was supported by an Intergovernmental Personnel Act (IPA) contract to Daniel Stokols from the Division of Cancer Control and Population Sciences of the NCI; by Cancer Research and Training Award fellowships to Kara L. Hall and Brandie K. Taylor; and by the NCI TREC initiative. Additionally this research was supported by the following TREC-center grants funded by the NCI: U01 CA-116850-01; U54 CA-116847-01; U54 CA-116867-01; U54 CA-116848-01; and U54 CA-116849-01.

Footnotes

ᵃ Also, the fact that some of the developmental-project proposals already had been outlined as part of the original parent proposal submitted to NCI, while others were created after the TREC centers were launched, precluded analyses of the temporal links between collaboration readiness during the start-up phase of a center and the integrative qualities of collaborative projects that were presumed to have been initiated once the TREC initiative was underway.

No financial disclosures were reported by the authors of this paper.

References

- 1. Kessel FS, Rosenfield PL, Anderson NB, editors. Expanding the boundaries of health and social science: case studies in interdisciplinary innovation. New York: Oxford University Press; 2003.

- 2. Committee on Facilitating Interdisciplinary Research, National Academy of Sciences, National Academy of Engineering, Institute of Medicine. Facilitating interdisciplinary research. Washington DC: The National Academies Press; 2005. www.nap.edu/catalog.php?record_id=11153#toc.

- 3. Hiatt RA, Breen N. The social determinants of cancer: a challenge for transdisciplinary science. Am J Prev Med. 2008;35(2):XXX-XXX. doi: 10.1016/j.amepre.2008.05.006.

- 4. Klein JT. Evaluation of interdisciplinary and transdisciplinary research: a literature review. Am J Prev Med. 2008;35(2):XXX-XXX. doi: 10.1016/j.amepre.2008.05.010.

- 5. Stokols D, Fuqua J, Gress J, et al. Evaluating transdisciplinary science. Nicotine Tob Res. 2003;5(1S):S21–S39. doi: 10.1080/14622200310001625555.

- 6. Stokols D, Misra S, Moser RP, Hall KL, Taylor BK. The ecology of team science: understanding contextual influences on transdisciplinary collaboration. Am J Prev Med. 2008;35(2S):XXX-XXX. doi: 10.1016/j.amepre.2008.05.003.

- 7. Stokols D, Harvey R, Gress J, Fuqua J, Phillips K. In vivo studies of transdisciplinary scientific collaboration: lessons learned and implications for active living research. Am J Prev Med. 2005;28(2S2):202–213. doi: 10.1016/j.amepre.2004.10.016.

- 8. National Cancer Institute. Transdisciplinary research on energetics and cancer. 2006. https://www.compass.fhcrc.org/trec/

- 9. Hall KL, Stokols D, Moser RP, Thornquist M, Taylor BK, Nebeling L. The collaboration readiness of transdisciplinary research teams and centers: early findings from the NCI TREC baseline evaluation study. Proceedings of the NCI–NIH Conference on The Science of Team Science: Assessing the Value of Transdisciplinary Research; 2006 Oct 30–31; Bethesda MD.

- 10. Mâsse LC, Moser RP, Stokols D, et al. Measuring collaboration and transdisciplinary integration in team science. Am J Prev Med. 2008;35(2S):XXX-XXX. doi: 10.1016/j.amepre.2008.05.020.

- 11. Olson GM, Olson JS. Distance matters. Human-Computer Interaction. 2000;15(2/3):139–178.

- 12. Mitrany M, Stokols D. Gauging the transdisciplinary qualities and outcomes of doctoral training programs. J Plan Educ Res. 2005;24(4):437–449.

- 13. Rosenfield PL. The potential of transdisciplinary research for sustaining and extending linkages between the health and social sciences. Soc Sci Med. 1992;35:1343–1357. doi: 10.1016/0277-9536(92)90038-r.

- 14. Mabry PL, Olster DH, Morgan GD, Abrams D. Interdisciplinary and system science to improve population health: a view from the NIH office of behavioral and social sciences research. Am J Prev Med. 2008;35(2S):XXX-XXX. doi: 10.1016/j.amepre.2008.05.018.

- 15. Stokols D, Hall KL, Taylor BK, Moser RP. The science of team science: overview of the field and introduction to the supplement. Am J Prev Med. 2008;35(2):XXX-XXX. doi: 10.1016/j.amepre.2008.05.002.

- 16. Jarvenpaa SL, Leidner DE. Communication and trust in global virtual teams. Organization Science. 1999;10(6):791–815.

- 17. Mayer RC, Davis JH, Schoorman FD. An integrative model of organizational trust. Acad Manage Rev. 1995;20(3):709–734.

- 18. Sonnenwald DH. Managing cognitive and affective trust in the conceptual R&D organization. In: Houtari M, Iivonen M, editors. Trust in knowledge management and systems in organizations. Hershey PA: Idea Publishing; 2003.