Abstract

INTRODUCTION

This study aims to establish the face, content and construct validity of the SEP Robot (SimSurgery, Oslo, Norway) in order to determine its value as a training tool.

SUBJECTS AND METHODS

The tasks used in the validation of this simulator were arrow manipulation and performing a surgeon's knot. Thirty participants (18 novices, 12 experts) completed the procedures.

RESULTS

The simulator was able to differentiate between experts and novices in several respects. The novice group required more time to complete the tasks than the expert group, especially suturing. During the surgeon's knot exercise, experts significantly outperformed novices in maximum tightening stretch, instruments dropped, maximum winding stretch and tool collisions in addition to total task time. A trend was found towards the use of less force by the more experienced participants.

CONCLUSIONS

The SEP robotic simulator has demonstrated face, content and construct validity as a virtual reality simulator for robotic surgery. With the steady increase in the adoption of robotic surgery worldwide, this simulator may prove to be a valuable adjunct to clinical mentorship.

Keywords: SEP Robot, Validation, Training tool

Introduction

Surgery is a profession that relies on a surgeon's experience in previous cases and hands-on experience with the appropriate tools. With the number of training hours for surgical trainees decreasing, simulation offers an opportunity to fill some of the gaps.1,2 Surgical simulators also allow the trainee to develop their psychomotor skills and practice to the required skill level without the risk of danger to patients.3,4 Simulation is an established component of laparoscopic training and may prove to be a valuable tool in robotic surgery. Several forms of simulation exist such as mechanical trainers, animal models and virtual reality (VR).5 VR simulators may prove to be more useful as they provide statistical feedback of performance to the surgeon.

To ensure that VR simulators provide a realistic comparison to real-life surgery, they must undergo scientific validation.6,7 This study aims to establish the face, content and construct validity of the SEP Robot (SimSurgery, Oslo, Norway) in order to determine its value as a training tool.

Subjects and Methods

System

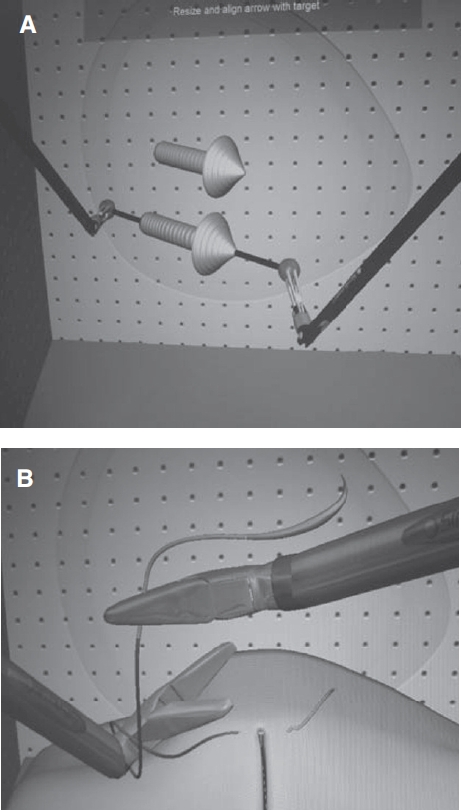

The SEP Robot is a VR robotic simulator (Fig. 1) with a console connected to two instruments with 7 degrees of freedom (DOF), as in clinical robotic surgery. The master console contains a motion tracking device, which detects the position of the controllers in space and recreates these movements on a computer screen. Like the da Vinci robot (Intuitive Surgical, Sunnyvale, CA, USA), the simulator does not provide haptic feedback. However, unlike the da Vinci system, the images are not three dimensional (3D). The simulator allows the trainee to perform tasks such as arrow manipulation and suturing. The simulator records time taken, instrument tip trajectory and error scores for each task.

Figure 1.

The SEP Robot, virtual reality simulator.

Subjects and protocol

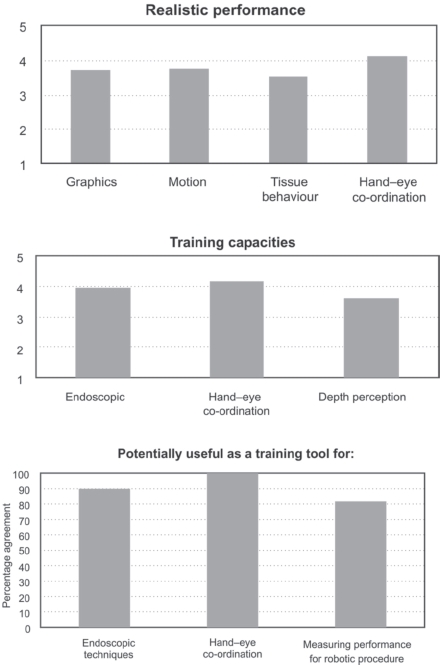

The tasks used in the validation of this simulator were arrow manipulation and performing a surgeon's knot (Fig. 2). In the arrow manipulation task, the volunteer must grab the balls on either end of the arrow and reposition it as the computer demonstrates, without overstretching the arrow. Exercise time and incidences of arrow drop (arrow overstretch leading to the resetting of the original arrow position) were evaluated. This exercise assesses hand-eye co-ordination and the ability to move objects using robotic tools. The second exercise consisted of performing two knots consecutively while alternating the arm handling the needle. Time, equipment drop (thread drop and grab point drop) and a percentage of the maximum stretch of the tightening and winding steps were evaluated for this exercise.

Figure 2.

(A) Task 1: place an arrow; (B) Task 2: surgeon’s knot.

Thirty participants (18 novices and 12 experts) received a demonstration of each task and then attempted it themselves. All participants then filled out a questionnaire to assess face and content validity (Appendix 1, online only). The questionnaire consisted of 19 questions predominantly scored on yes/no answers and a 5-point Likert scale: 5 (excellent/very useful) to 1 (very bad/useless). Construct validity was based on the performance data (metrics) recorded by the simulator.

Statistical analysis

All data were entered into a Microsoft Excel 2003 (Microsoft Corporation, Redmond, WA, USA) spreadsheet. Construct validity was analysed by comparing the mean performance variables for the two groups (novices and experts), using a two-tailed independent samples t-test. Statistics were calculated using the Statistical Package for Social Sciences, v15.0 (SPSS, Chicago, IL, USA).
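The group comparison described above can be illustrated with a short sketch. It is not the SPSS procedure used in the study: it assumes Welch's unequal-variance form of the test (the text does not say whether equal variances were assumed) and works from summary statistics (mean, SEM, group size) instead of raw data. The function names `welch_t_from_summary`, `t_pdf` and `two_tailed_p` are hypothetical, and the P-value is obtained by numerical integration, so results may differ slightly from those of a statistics package.

```python
import math


def welch_t_from_summary(mean_a, sem_a, n_a, mean_b, sem_b, n_b):
    """Return (t, df) for Welch's t-test from group means, SEMs and sizes.

    Assumption: unequal variances (Welch); the study may have used the
    pooled-variance form instead.
    """
    var_a = sem_a ** 2  # SEM squared equals sample variance / n
    var_b = sem_b ** 2
    t = (mean_a - mean_b) / math.sqrt(var_a + var_b)
    # Welch-Satterthwaite approximation for the degrees of freedom
    df = (var_a + var_b) ** 2 / (var_a ** 2 / (n_a - 1) + var_b ** 2 / (n_b - 1))
    return t, df


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def two_tailed_p(t, df, steps=2000):
    """Two-tailed P-value via Simpson's rule on the density over [0, |t|]."""
    upper = abs(t)
    if upper == 0.0:
        return 1.0
    h = upper / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3  # probability mass between 0 and |t|
    return max(0.0, 1.0 - 2.0 * area)


# Illustration with the surgeon's knot task times reported in Table 1
# (novices: mean 198.33, SEM 22.17, n = 18; experts: mean 78.83, SEM 9.51, n = 12).
t_stat, dof = welch_t_from_summary(198.33, 22.17, 18, 78.83, 9.51, 12)
p_value = two_tailed_p(t_stat, dof)
print(f"t = {t_stat:.2f}, df = {dof:.1f}, P = {p_value:.5f}")
```

Working from the mean and SEM makes it possible to sanity-check a reported comparison without the raw data; with the raw measurements available, a standard statistics package would be the more direct route.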

Results

Participants

Thirty participants (18 novices, 12 experts) completed the procedures. The novice group consisted of 3rd and 4th year students in the School of Medicine at King's College London. The experienced group consisted of six senior residents and six consultants in urology. The median age of the 12 experienced surgeons was 41 years (range, 32-54 years), whereas the median age of the 18 novices was 22 years (range, 21-23 years). There was no difference between novices and experts regarding sex and handedness. The novices had no experience in robotic surgery, while experienced subjects had performed an average of 148 robotic cases (range, 30-500). No subject had previous experience with the SEP Robot simulator.

Face and content validity

All 30 participants filled out the questionnaire (Appendix 1, online only). Mean scores for usefulness of tasks, features and movement realism ranged from 3.6 (appreciation of training with the instrument) to 3.8 (depth perception). There were no significant differences between the mean values of the scores given by the experts and surgical trainees (mean Likert scores 3.5 and 3.7; Fig. 3).

Figure 3.

Face and content validity.

Participants rated the graphics of the trainer as 3.7 on the Likert scale. Of participants, 90% rated the trainer realistic and easy to use, 87% considered it generally useful for training and 90% agreed that the simulator was useful for hand-eye co-ordination and suturing.

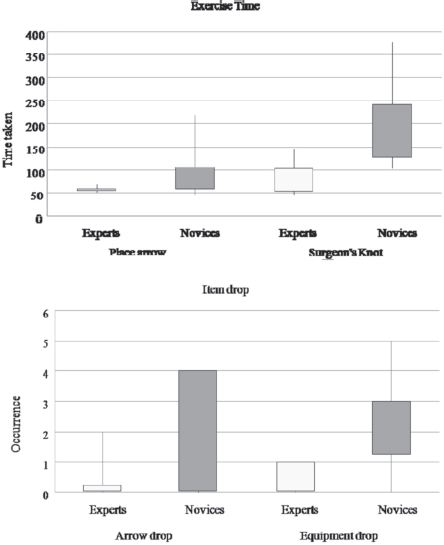

Construct validity

The simulator was able to differentiate between experts and novices in several respects (Table 1). All the parameters that showed a significant difference between the two groups are shown in Figure 4. The novice group required more time to complete the tasks than the expert group, especially suturing (mean time 78.8 s for experts versus 198.3 s for novices; P = 0.001).

Table 1.

Performance data

| Task | Performance evaluation | Statistic | Novices (n = 18) | Experts (n = 12) | P-value |
|---|---|---|---|---|---|
| Place an arrow | Time to accomplish task (s) | Mean | 86.94 | 57.42 | 0.03 |
| | | SEM | 10.69 | 1.45 | |
| | Dropped arrows | Mean | 1.94 | 0.33 | 0.005 |
| | | SEM | 0.416 | 0.188 | |
| | Lost arrows | Mean | 0.222 | 0 | 0.3 |
| | | SEM | 0.17 | - | |
| | Tool collision sum | Mean | 0 | 0 | NS |
| | | SEM | - | - | |
| | Close entry sum | Mean | 3.28 | 0.42 | 0.02 |
| | | SEM | 0.946 | 0.26 | |
| Surgeon's knot | Time to accomplish task (s) | Mean | 198.33 | 78.83 | 0.000 |
| | | SEM | 22.17 | 9.51 | |
| | Max tightening stretch | Mean | 164.81 | 72.62 | 0.002 |
| | | SEM | 21.064 | 6.56 | |
| | Equipment dropped sum | Mean | 2.33 | 0.33 | 0.000 |
| | | SEM | 0.31 | 0.14 | |
| | Max winding stretch | Mean | 229.97 | 85.28 | 0.027 |
| | | SEM | 50.44 | 3.57 | |
| | Tool collision sum | Mean | 13.56 | 4.42 | 0.001 |
| | | SEM | 1.83 | 0.9 | |

Figure 4.

Comparison of novice and expert performance in the two tasks.

During the surgeon's knot exercise, experts significantly outperformed novices in maximum tightening stretch, instruments dropped, maximum winding stretch and tool collisions, in addition to total task time. A trend was found towards the use of less force by the more experienced participants.

Discussion

Technological advances such as robotics and VR simulators will change our surgical practice and training in the future. With ever increasing pressures on surgical performance, the profession is constantly looking for training systems that are novel, reproducible and validated. With surgical trainees operating less1 than ever before due to shortened training programmes, reduced working hours2 and the advancement of medical and minimally invasive therapies, the application of VR technology is evident.

For more than a decade, advancing computer technologies have incorporated VR into surgical training.5 This has become especially important in training for minimally invasive procedures, which are often complex and leave little room for error. With the advent of robotic surgery, a valid VR robotic surgery simulator may reduce the learning curve and improve patient safety.8,9

VR simulators afford a unique opportunity for trainees to practice reality-based surgical skills without any risk to patients. VR laparoscopic surgical simulators have shown great promise in several areas of surgical training, and repeated practice with some VR simulators has been shown to improve performance in the operating room in prospective studies.10-14 The principles of evaluating surgical simulators are well established. Common benchmarks on which simulators are judged include reliability, face, content, construct, concurrent and predictive validities.6 Face validity is an assessment of virtual realism by any user. Content validity is defined as ‘an estimate of the validity of a testing instrument based on detailed examination of the contents of the test items’. The evaluation is carried out by reviewing each item to determine whether it is appropriate for the test and by assessing the overall cohesiveness of the items, such as whether the test contains the steps and skills that are used in a procedure. Establishing content validity is also largely subjective and relies on the judgments of experts about the relevance of the materials used.

Construct validity is regarded as one of the most important aspects of simulator evaluation, because it determines whether the device can differentiate between expert and novice. For a simulator to have construct validity, experts must outperform novices during standardised simulated tasks. Construct validity is defined as ‘a set of procedures for evaluating a testing instrument based on the degree to which the test items identify the quality, ability, or trait it was designed to measure’.

While there are some validated training devices and protocols for standard laparoscopy, the area of robot-assisted surgical training has few well established or validated tools.15-17 To date, a few papers have shown validity of VR simulators for robotic surgery.17-23 The first such article appeared in 2008. In a randomised, blinded, pilot study, the validity of a da Vinci robotic VR simulator platform was tested during a paediatric robotic surgery course at the annual American Urological Association meeting in 2007. Participants performed robotic skills tasks on the da Vinci robot and on an offline dV-Trainer. Most felt that VR simulation was useful for teaching robotic skills and the offline trainer was able to discriminate between experts and novices.23

Our study has shown face, content and construct validity of the SEP Robotic simulator for two specific tasks. Data revealed that the simulator can discriminate novices from experts. For each task, mean procedure time defined skill levels, with experts taking significantly less time than novices. Moreover, the experts made fewer errors than novices in the two tasks and, in addition, showed a decreased tendency to use unnecessary movements.

There is, however, room for improvement. The tasks assessed here are generic rather than specific to complex procedures such as robotic-assisted radical prostatectomy or robotic hysterectomy. As VR robotic simulators improve, it is expected that they will become more procedure specific. The da Vinci Si system has the ability to be linked with the MIMIC VR robotic simulator21 across a computerised interface and is a precursor to more detailed procedural tasks. This new system was unveiled at the World Robotics Symposium in Florida in 2010. Likewise, the RoSS simulator (Roswell Park, NY, USA) provides a new platform for practising robotic surgery22 without causing harm to patients.

Our results in 30 participants need to be validated in larger participant groups. While these early results are encouraging, it remains to be seen whether this simulator can achieve predictive validity in a randomised trial.

The findings of this study contribute to the underlying knowledge of how simulators can support surgical training and suggest optimal prospective measures for incorporating the SEP robot into surgical training curricula. It is now an important part of robotic training within the Simulation and Technology Enhanced Learning Initiative (STeLi) of the London Deanery.

Conclusions

The SEP robotic simulator has demonstrated face, content and construct validity as a VR simulator for robotic surgery. With the steady increase in the adoption of robotic surgery worldwide, this simulator may prove to be a valuable adjunct to clinical mentorship.

Acknowledgments

Prokar Dasgupta acknowledges financial support from the Department of Health via the National Institute for Health Research (NIHR) comprehensive Biomedical Research Centre award to Guy's and St Thomas’ NHS Foundation Trust in partnership with King's College London and King's College Hospital NHS Foundation Trust. He also acknowledges the support of the MRC Centre for Transplantation.

The simulation project at Guy's is supported by programme grants from The London Deanery, School of Surgery and Olympus. None of the authors have any financial interests in the SEP Robotic simulator.

References

- 1. Chikwe J, de Souza AC, Pepper JR. No time to train the surgeons. BMJ. 2004;328:418–9. doi: 10.1136/bmj.328.7437.418.

- 2. Mundy AR. The future of urology. BJU Int. 2003;92:337–9. doi: 10.1046/j.1464-410x.2003.04381.x.

- 3. Balasundaram I, Aggarwal R, Darzi A. Short-phase training on a virtual reality simulator improves technical performance in tele-robotic surgery. Int J Med Robot. 2008;4:139–45. doi: 10.1002/rcs.181.

- 4. Lerner MA, Ayalew M, Peine WJ, Sundaram CP. Does training on a virtual reality robotic simulator improve performance on the da Vinci surgical system? J Endourol. 2010;24:467–72. doi: 10.1089/end.2009.0190.

- 5. McCloy R, Stone R. Virtual reality in surgery. BMJ. 2001;323:912–5. doi: 10.1136/bmj.323.7318.912.

- 6. McDougall EM. Validation of surgical simulators. J Endourol. 2007;21:244–7. doi: 10.1089/end.2007.9985.

- 7. Aucar JA, Groch NR, Troxel SA, Eubanks SW. A review of surgical simulation with attention to validation methodology. Surg Laparosc Endosc Percutan Tech. 2005;15:82–9. doi: 10.1097/01.sle.0000160289.01159.0e.

- 8. Grover S, Tan GY, Srivastava A, Leung RA, Tewari AK. Residency training program paradigms for teaching robotic surgical skills to urology residents. Curr Urol Rep. 2010;11:87–92. doi: 10.1007/s11934-010-0093-9.

- 9. Sun LW, Van Meer F, Schmid J, Bailly Y, Thakre AA, Yeung CK. Advanced da Vinci Surgical System simulator for surgeon training and operation planning. Int J Med Robot. 2007;3:245–51. doi: 10.1002/rcs.139.

- 10. Gallagher AG, McClure N, McGuigan J, Crothers I, Browning J. Virtual reality training in laparoscopic surgery: a preliminary assessment of minimally invasive surgical trainer virtual reality (MIST VR). Endoscopy. 1999;31:310–3. doi: 10.1055/s-1999-15.

- 11. Grantcharov TP, Kristiansen VB, Bendix J, Bardram L, Rosenberg J, Funch-Jensen P. Randomized clinical trial of virtual reality simulation for laparoscopic skills training. Br J Surg. 2004;91:146–50. doi: 10.1002/bjs.4407.

- 12. Hyltander A, Liljegren E, Rhodin PH, Lonroth H. The transfer of basic skills learned in a laparoscopic simulator to the operating room. Surg Endosc. 2002;16:1324–8. doi: 10.1007/s00464-001-9184-5.

- 13. Munz Y, Kumar BD, Moorthy K, Bann S, Darzi A. Laparoscopic virtual reality and box trainer: is one superior to the other? Surg Endosc. 2004;18:485–94. doi: 10.1007/s00464-003-9043-7.

- 14. Seymour NE, Gallagher AG, Roman SA, O'Brien MK, Bansal VK, et al. Virtual reality training improves operating room performance: results of a randomized, double-blinded study. Ann Surg. 2002;236:458–63. doi: 10.1097/00000658-200210000-00008.

- 15. Halvorsen FH, Elle OJ, Dalinin VV, Mørk BE, Sørhus V, et al. Virtual reality simulator training equals mechanical robotic training in improving robot-assisted basic suturing skills. Surg Endosc. 2006;20:1565–9. doi: 10.1007/s00464-004-9270-6.

- 16. Narazaki K, Oleynikov D, Stergiou N. Robotic surgery training and performance: identifying objective variables for quantifying the extent of proficiency. Surg Endosc. 2006;20:96–103. doi: 10.1007/s00464-005-3011-3.

- 17. Narazaki K, Oleynikov D, Stergiou N. Objective assessment of proficiency with bimanual inanimate tasks in robotic laparoscopy. J Laparoendosc Adv Surg Tech. 2007;17:47–52. doi: 10.1089/lap.2006.05101.

- 18. Albani JM, Lee DI. Virtual reality-assisted robotic surgery simulation. J Endourol. 2007;21:285–7. doi: 10.1089/end.2007.9978.

- 19. Katsavelis D, Siu KC, Brown-Clerk B, Lee IH, Lee YK, et al. Validated robotic laparoscopic surgical training in a virtual-reality environment. Surg Endosc. 2009;23:66–73. doi: 10.1007/s00464-008-9894-z.

- 20. Kenney PA, Wszolek MF, Gould JJ, Libertino JA, Moinzadeh A. Face, content, and construct validity of dV-trainer, a novel virtual reality simulator for robotic surgery. Urology. 2009;73:1288–92. doi: 10.1016/j.urology.2008.12.044.

- 21. Sethi AS, Peine WJ, Mohammadi Y, Sundaram CP. Validation of a novel virtual reality robotic simulator. J Endourol. 2009;23:503–8. doi: 10.1089/end.2008.0250.

- 22. Seixas-Mikelus SA, Kesavadas T, Srimathveeravalli G, Chandrasekhar R, Wilding GE, Guru KA. Face validation of a novel robotic surgical simulator. Urology. 2010;76:357–60. doi: 10.1016/j.urology.2009.11.069.

- 23. Lendvay TS, Casale P, Sweet R, Peters C. VR robotic surgery: randomized blinded study of the dV-Trainer robotic simulator. Stud Health Technol Inform. 2008;132:242–4.