Abstract

Faces are one of the most significant social stimuli and the processes underlying face perception are at the intersection of cognition, affect, and motivation. Vision scientists have had tremendous success in mapping the regions for perceptual analysis of faces in posterior cortex. Based on evidence from (a) single unit recording studies in monkeys and humans; (b) human functional localizer studies; and (c) meta-analyses of neuroimaging studies, I argue that faces automatically evoke responses not only in these regions but also in the amygdala. I also argue that (a) a key property of faces represented in the amygdala is their typicality; and (b) one of the functions of the amygdala is to bias attention to atypical faces, which are associated with higher uncertainty. This framework is consistent with a number of other amygdala findings not involving faces, suggesting a general account for the role of the amygdala in perception.

Keywords: Amygdala, Face perception, Face evaluation, Social cognition

Introduction

In one of the first attempts to formulate a model of the social brain, Brothers (1990) considered a few regions primarily focusing on the amygdala, orbitofrontal cortex, and the superior temporal sulcus. Since then, the number of regions implicated in social cognition has rapidly proliferated (Adolphs 2009; Lieberman 2010; Todorov et al. 2011a). The two major reasons for this proliferation are methodological advances in functional neuroimaging research and the introduction of multiple experimental paradigms tapping diverse aspects of social cognition. These aspects range from the study of perception of emotional expressions to the study of representation of others’ mental states and actions. At the same time, various proposals have been made about the core region/s underlying social cognition. Depending on one’s interests, the seat of social cognition is either in the medial prefrontal cortex (Amodio and Frith 2006), the temporoparietal junction (Saxe and Wexler 2005), or in the inferior frontal gyrus and the inferior parietal lobule (Gallese et al. 2004). Although these proposals have great merits, they have been derived from narrowing down social cognition to specific tasks such as understanding beliefs and understanding goal-directed actions. Ultimately, multiple functional brain networks underlie the complexity of social cognition.

Arguably, a good starting point for building a comprehensive model of social cognition is the ability to represent others as distinct individuals. Understanding actions, beliefs, and intentions presupposes the ability to perceive and represent other people as agents. For most people, face perception and memory are critical for representing others, and people are extremely adept at that task. Decades of computer science research have yet to produce a computer model that approximates human performance in face recognition (Bowyer et al. 2006; Sinha et al. 2006). Moreover, faces are not only used to represent and track individuals over time, but also provide a wealth of social information ranging from the individual’s membership in social categories (e.g., age, sex, race) to his or her mental and emotional states (e.g., bored, anxious, etc.). Not surprisingly, after extremely brief exposures or highly degraded visual input, people can identify faces (Grill-Spector and Kanwisher 2005; Yip and Sinha 2002), their race and gender (Cloutier et al. 2005; Martin and Macrae 2007), recognize their emotional expressions (Esteves and Öhman 1993), and make a variety of social judgments such as aggressiveness (Bar et al. 2006), trustworthiness (Todorov et al. 2009), and sexual orientation (Rule and Ambady 2008). Perception of faces is inherently imbued with affect (Todorov et al. 2008).

Yet, until recently face perception has been generally considered a cognitive area of research with forays into other areas only when emotional expressions or affective associations with faces are the focus of research. Standard cognitive models (Bruce and Young 1986) and their corresponding neural equivalents (Haxby et al. 2000) are not framed in social terms, and regions that are dedicated to face processing are rarely framed as “social regions.” To a large extent, this probably reflects disciplinary divisions and interests. The neural underpinnings of face perception have been primarily studied by vision scientists for whom faces are a well-defined category of complex stimuli that can be contrasted to other categories of complex stimuli such as houses.

Face selectivity in the brain

Vision scientists have had tremendous success in mapping the regions responsible for face perception. Until the middle of the twentieth century, it was not even established that the inferior temporal (IT) cortex is involved in vision and, in particular, object recognition (Gross 1994). Face selective neurons were discovered in the IT cortex of the macaque brain in the 1970s (Bruce et al. 1981; Desimone 1991; Perrett et al. 1982). Consistent with these findings, Positron Emission Tomography studies of humans in the early 1990s reported face responsive regions in fusiform and inferior temporal regions (Haxby et al. 1993; Sergent et al. 1992). Electrophysiological studies recording from the same regions in epileptic patients found negative potentials (N200) evoked by faces (Allison et al. 1994). Subsequent functional Magnetic Resonance Imaging (fMRI) studies using a variety of categories established a face selective region in the fusiform gyrus (Kanwisher et al. 1997; McCarthy et al. 1997). This region, labeled the fusiform face area (FFA; Kanwisher et al. 1997), can be reliably identified in individual subjects and its location is robust with respect to task demands (Berman et al. 2010). Two other regions can be consistently identified across most subjects: a region in the posterior Superior Temporal Sulcus (pSTS) and a region in the occipital gyrus, labeled the occipital face area (OFA). These regions are usually referred to as comprising the core system for perceptual analysis of faces (Haxby et al. 2000; Said et al. 2011).

Two of the most exciting recent developments in the field are the combination of fMRI and single cell recordings in macaques (Tsao et al. 2006) and the use of Transcranial Magnetic Stimulation (TMS) in humans (Pitcher et al. 2007). Tsao and her colleagues used fMRI to identify face selective patches in the macaque brain and then recorded from these patches. They identified a stunning number of face selective neurons in these patches. In contrast to previous studies, which have rarely reported more than 20% of face selective neurons from the sample of recorded neurons, Tsao and her colleagues reported more than 90% of face selective neurons in some of the patches. Pitcher and his colleagues used TMS to transiently disrupt the activity of the right OFA (it is not possible to target the FFA) and found that this affected performance on face perception tasks.

Undoubtedly, we have accumulated rich evidence for the importance of the “core” regions in face perception. However, given the affective and social significance of faces, the question is whether the core regions are sufficient to describe face perception. Of course, researchers have acknowledged the participation of other regions, including both subcortical and prefrontal, but these regions are usually considered as part of the “extended” as opposed to “core” system of face processing (Haxby et al. 2000).

In the rest of the paper, I argue that faces automatically evoke responses not only in the core regions but also in regions in the medial temporal lobe (MTL). In particular, I focus on the amygdala and argue that it is an integral part of the functional network dedicated to face processing. In the next section of the paper, I review evidence consistent with a general role of the amygdala in face processing. This evidence comes from (a) single cell recording studies in both monkeys and humans; (b) human functional localizer studies; and (c) meta-analyses of neuroimaging studies involving faces. In the last section of the paper, I propose a hypothesis that the key property of faces represented in the amygdala is their typicality. I also attempt to place this hypothesis into an overall framework that accommodates not only face findings but also findings from other stimuli and other modalities.

Face selective responses in the primate amygdala

The importance of the amygdala for perception, learning, memory, and behavior is well established (Aggleton 2000). In almost all cases, the role of the amygdala is related to the affective significance of stimuli. In this context, it is not surprising that the first functional neuroimaging studies that targeted the amygdala and face perception used faces expressing emotions (Breiter et al. 1996; Morris et al. 1996). However, it is unlikely that the role of the amygdala in face processing is limited to processing of emotional expressions.

At about the time of the discovery of face selective neurons in IT cortex, it was also discovered that there are visually responsive neurons in the macaque’s amygdala and that some of these neurons respond to faces (Sanghera et al. 1979). A number of subsequent neurophysiology studies reported face responsive neurons in the amygdala (Perrett et al. 1982; Leonard et al. 1985; Rolls 1984; Wilson and Rolls 1993; for a review see Rolls 2000). Recent studies have confirmed these findings. Nakamura et al. (1992) showed that the amygdala responds to visual stimuli that are not relevant to the immediate task, and that a high proportion of the visual neurons are category selective with some of the neurons preferring monkey’s faces and a smaller proportion human faces. Other studies have found selective responses for emotional expressions and identity (Gothard et al. 2007) and supramodal neurons responding to both visual (faces) and auditory (sounds) social cues (Kuraoka and Nakamura 2007).

Importantly, the monkey neurophysiology findings have been confirmed in human studies (Fried et al. 1997; Kreiman et al. 2000). Fried and his colleagues recorded from neurons in the MTL of patients undergoing treatment for epilepsy. They found face selective neurons in the amygdala, hippocampus, and entorhinal cortex. Subsequent studies have shown that the responses of some of these neurons are modulated by face familiarity (Quiroga et al. 2005; Viskontas et al. 2009). These findings are consistent with findings from patients with amygdala lesions who show impairments at face recognition (Aggleton and Shaw 1996), although the most studied patient with bilateral amygdala damage, SM (Adolphs and Tranel 2000), seems to be primarily impaired at recognition of fearful expressions.

The logic of neurophysiology studies on category selectivity is to present stimuli representing different categories (e.g., faces, everyday objects, novel objects, etc.) and look for neurons that show preference for one or more categories. The same logic underlies neuroimaging studies that use functional localizers. In such studies, human subjects are presented with faces and a number of other categories such as houses, hands, chairs, flowers, etc. Such studies identified the FFA (Kanwisher et al. 1997; McCarthy et al. 1997; Tong et al. 2000), the OFA (Gauthier et al. 2000; Puce et al. 1996), and face selective regions in the pSTS (Allison et al. 2000; Puce et al. 1996). Despite some controversy about the value of functional localizers (Friston et al. 2006; Saxe et al. 2006), they are an excellent tool for identifying category selective regions and then probing the response properties of these regions. A recent meta-analysis also shows that, at least in the case of localizing the FFA, the results are robust with respect to task demands and control categories (Berman et al. 2010).

If there are face selective neurons in the amygdala, as suggested by neurophysiology studies, why is it that fMRI studies that use functional localizers do not detect face selective voxels in the amygdala? There are at least two primary sets of reasons. First, the amygdala is a very small structure that is difficult to image not only because of its size but also because of its location (LaBar et al. 2001; Zald 2003). Moreover, in almost all neurophysiology studies, the number of face selective neurons is small, rarely exceeding 10% of the recorded neurons. This suggests that there would be only a few face selective voxels in the amygdala. Second, given the expected small size of face selective clusters, it would be difficult to find these clusters unless one is looking for them. In fact, there is a large variation across individual subjects in functional localizer studies. The typical approach in such studies is to threshold the statistical map of the contrast of faces and the control category (or categories) at a specified probability value (e.g., p = .005) and then to record the locations of face selective regions for each subject. However, the number of observed peaks can vary from a few or none in some subjects to a few dozen in other subjects. Researchers would typically record peaks from the fusiform gyri, occasionally from the occipital gyri and pSTS, and rarely from other regions. Some of this individual variation in observed peaks is due to measurement error, which can be reduced by averaging across subjects. However, functional localizers were specifically introduced to map category selective regions for individual brains and, hence, to avoid the need to conduct group analyses (Kanwisher et al. 1997). The rationale for using localizers is that brains are individually different and, hence, group alignment can distort the data.

Not surprisingly, researchers who use face localizers rarely report group analyses, although these analyses can be informative and more reliable than individual level analyses (Poldrack et al. 2009). In a recent meta-analysis of studies that used functional localizers to localize the FFA, Berman and colleagues (2010) selected 49 out of 339 papers. These were studies on healthy adults that reported both the coordinates of the localized FFA and the localization task. Out of these papers, only nine reported the group analysis from the face localizer (Chen et al. 2007; Downing et al. 2006; Eger et al. 2004, 2005; Henson and Mouchlianitis 2007; Kesler-West et al. 2001; Maurer et al. 2007; Pourtois et al. 2005; Zhang et al. 2008). Four of the nine studies reported amygdala activation (see Table 1). Another study did not report a group analysis but reported that face selective voxels in the amygdala were identified by anatomical location and contrast between intact and scrambled faces (Ganel et al. 2005). Occasionally, researchers would report that they observed amygdala activation in face localizer contrasts but would not investigate this further or report the coordinates (Berman et al. 2010, p. 69; Jiang et al. 2009, p. 1085). It should be noted that the opposite is also true: emotion researchers interested in the amygdala would compare faces with another category of stimuli but not report group analyses or activations in posterior areas (Goossens et al. 2009; Hariri et al. 2002). In other cases, researchers would perform a group analysis but not individual level analyses (Fitzgerald et al. 2006; Wright and Liu 2006).

Table 1.

Coordinates of face selective voxels in the amygdala from fMRI studies that compared activation to faces with activation to other categories

| Study | Control category | Sample size (N) | R. amygdala x | R. amygdala y | R. amygdala z | L. amygdala x | L. amygdala y | L. amygdala z |
|---|---|---|---|---|---|---|---|---|
| Fitzgerald et al. (2006) | Portable radios | 20 | | | | −24 | −3 | −20 |
| Ganel et al. (2005) | Scrambled faces | 11 | 18 | −7 | −9 | | | |
| Hariri et al. (2002) | Emotional scenes | 12 | 16 | −5 | −13 | | | |
| Kesler-West et al. (2001) | Scrambled faces | 21 | 17 | −7 | −8 | −17 | −9 | −8 |
| Maurer et al. (2007) | Houses and common household objects | 12 | 20 | −9 | −20 | | | |
| Pourtois et al. (2005) | Houses | 14 | | | | −21 | −15 | −9 |
| Goossens et al. (2009) | Houses | 20 | 22 | −3 | −12 | −18 | −3 | −16 |
| Said et al. (2010) | Chairs | 37 | 17 | −5 | −10 | −17 | −2 | −10 |
| Wright and Liu (2006) | Pixilated patterns | 12 | 22 | −7 | −10 | −9 | −3 | −10 |
| Zhang et al. (2008) | Chinese characters, common objects, and scrambled images | 16 | 18 | −1 | −18 | | | |

The coordinates are reported in Talairach space. Four of the studies (Kesler-West et al. 2001; Maurer et al. 2007; Pourtois et al. 2005; Zhang et al. 2008) are from the sample of studies in Berman et al. (2010). Two of the studies (Fitzgerald et al. 2006; Wright and Liu 2006) compared emotional and neutral faces with a control category. To extract face selective voxels in the amygdala, they performed a conjunction analysis of the individual face contrasts with the control category

Comparing the studies that found amygdala activation in response to faces and those that did not shows that the former had greater statistical power to detect such activations. First, studies that found amygdala activation tended to have larger samples (mean n = 14.8 vs. 12.4). Second, these studies used a less stringent statistical criterion in the group analysis (the most stringent threshold was p < .001 uncorrected, which was the minimum criterion in the other studies). To take two extreme examples, Kesler-West et al. (2001) and Chen et al. (2007) used the same contrast (faces vs. scrambled faces) but only Kesler-West et al. reported amygdala activation in the group analysis. However, whereas Kesler-West et al.’s study had 21 subjects and used uncorrected p < .001, Chen et al.’s study had 5 subjects and used Bonferroni corrected p < .001 across all voxels. In principle, it is better to be statistically conservative, but conservative procedures would penalize small regions, particularly when the sample size of the study is small. As shown in Table 1, many human studies report amygdala activation in functional localizer tasks. This is consistent with high resolution fMRI studies of monkeys that also find face selective voxels in the amygdala (Hoffman et al. 2007; Logothetis et al. 1999).
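To give a sense of how much more demanding a Bonferroni correction across all voxels is than an uncorrected threshold, the following sketch computes the per-voxel criterion implied by each. The voxel count is a hypothetical round number chosen for illustration; it is not taken from any of the cited studies, and the function name is likewise an assumption.

```python
def bonferroni_threshold(alpha: float, n_tests: int) -> float:
    """Per-test p threshold that keeps the family-wise error rate at alpha."""
    if n_tests < 1:
        raise ValueError("n_tests must be at least 1")
    return alpha / n_tests


# Hypothetical number of voxels in a whole-brain analysis (assumption,
# not reported in the source).
N_VOXELS = 50_000

uncorrected = 0.001                                 # p < .001, uncorrected
corrected = bonferroni_threshold(0.001, N_VOXELS)   # p < .001, Bonferroni

print(f"uncorrected per-voxel threshold: {uncorrected:g}")
print(f"Bonferroni per-voxel threshold:  {corrected:g}")
```

Under this assumption, each voxel has to pass a criterion several orders of magnitude stricter than the uncorrected one, which is hard for a small structure to meet when the sample is small.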

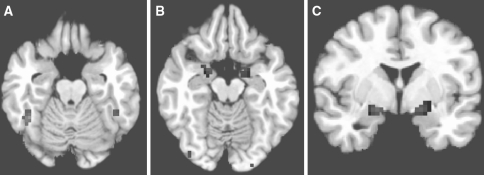

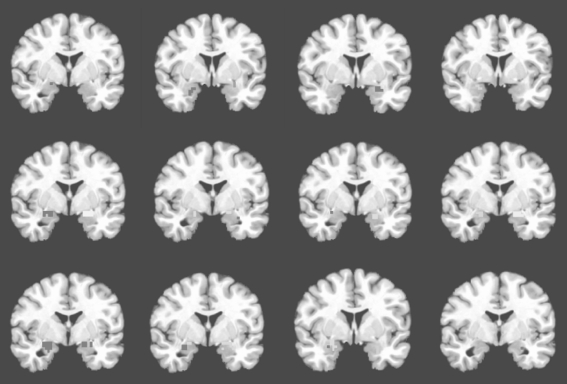

In one of our recent studies (Said et al. 2010), we used a face localizer and, following standard practices, recorded the peaks in fusiform gyri, occipital gyri, and pSTS. These data are revisited here (see Footnotes). In the localizer task, subjects were presented with blocks of faces and chairs and asked to press a button when an image was repeated (one-back task). As shown in Fig. 1, in addition to the clusters in the fusiform gyri (Fig. 1a), the group analysis showed large clusters in bilateral amygdala that were more active for faces than chairs (Fig. 1b, c). An analysis of individual subjects’ data showed that 30 out of 37 subjects had face responsive voxels in the amygdala. For this analysis, the map of the faces-greater-than-chairs contrast was liberally thresholded at p < .05 for each individual and then intersected with an anatomical mask of the amygdala. As with the FFA, there was individual variation across subjects with respect to the size and location of the clusters of face selective voxels (Fig. 2).

Fig. 1.

Brain regions responding more strongly to faces than to chairs: bilateral fusiform gyri (a) and bilateral amygdala (b, c). The regions were identified in a group analysis (n = 37), p < .001 (uncorrected)

Fig. 2.

Clusters of voxels in the amygdala of individual subjects responding more strongly to faces than to chairs. The statistical maps for individual subjects were thresholded at p < .05 and intersected with an anatomical mask of the amygdala. Different colors indicate different clusters within the amygdala. The clusters are shown on a standardized brain image
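The individual-subject procedure described above (threshold the contrast map, then intersect it with an anatomical mask) can be sketched in a few lines. This is a minimal illustration on invented toy data, not the analysis pipeline used in the study: the voxel coordinates, p-values, and the function name `face_selective_voxels` are all assumptions, and a real analysis would operate on full image volumes with dedicated neuroimaging software.

```python
from typing import Dict, Set, Tuple

Voxel = Tuple[int, int, int]


def face_selective_voxels(
    p_map: Dict[Voxel, float],
    mask: Set[Voxel],
    p_threshold: float = 0.05,
) -> Set[Voxel]:
    """Voxels that pass the threshold AND lie inside the anatomical mask."""
    suprathreshold = {voxel for voxel, p in p_map.items() if p < p_threshold}
    return suprathreshold & mask


# Toy p-values for a faces > chairs contrast (invented for illustration).
p_map = {
    (0, 0, 0): 0.01,   # inside mask, passes threshold
    (0, 0, 1): 0.20,   # inside mask, fails threshold
    (0, 1, 0): 0.04,   # inside mask, passes threshold
    (5, 5, 5): 0.001,  # passes threshold but lies outside the mask
}
amygdala_mask = {(0, 0, 0), (0, 0, 1), (0, 1, 0)}

selected = face_selective_voxels(p_map, amygdala_mask)
print(sorted(selected))  # [(0, 0, 0), (0, 1, 0)]
```

A subject would count as having face responsive voxels in the amygdala whenever the returned set is non-empty.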

These findings suggest that standard functional localizers can be used to identify face selective voxels in the amygdala. However, the conclusions may be limited given that we used a single control category. At the same time, using a single control category (e.g., scrambled faces, houses, etc.) to localize the posterior face selective network is a common practice and it seems that the type of category does not seriously affect the localization (Berman et al. 2010; Downing et al. 2006). Nevertheless, we need more targeted studies that use multiple categories to test for face selectivity in the amygdala.

In addition to data from single unit recordings and functional localizer studies, data from meta-analyses of functional neuroimaging studies also support a general role of the amygdala in face processing. Two large meta-analyses of PET and fMRI studies on emotional processing showed that faces are one of the classes of stimuli that most consistently elicit responses in the amygdala (Costafreda et al. 2008; Sergerie et al. 2008). The only stimulus class that was more potent in eliciting amygdala responses was gustatory and olfactory stimuli (Costafreda et al. 2008).

Two other meta-analyses (Bzdok et al. in press; Mende-Siedlecki, Said, and Todorov, under review) analyzed fMRI studies on face evaluation. These studies typically presented emotionally neutral faces that varied either on attractiveness or perceived trustworthiness. Using an Activation Likelihood Estimation approach, Bzdok and colleagues analyzed 16 studies. Using a Multi-level Kernel Density Analysis (MKDA) approach, which treats contrast maps rather than individual activation peaks as the unit of analysis (Wager et al. 2008), Mende-Siedlecki and colleagues analyzed 30 studies. In both meta-analyses, one of the most consistently activated regions across studies was the amygdala (see Table 2).

Table 2.

Coordinates of voxels in the amygdala identified in (a) meta-analyses of fMRI studies on face evaluation; (b) face localization studies (see Table 1); and (c) meta-analyses of studies on emotion processing irrespective of faces

| Meta-analyses | R. amygdala x | R. amygdala y | R. amygdala z | L. amygdala x | L. amygdala y | L. amygdala z |
|---|---|---|---|---|---|---|
| Face evaluation studies | | | | | | |
| Bzdok et al. in press (n = 16) | 26 | −1 | −18 | | | |
| | 18 | −8 | −11 | −18 | −7 | −15 |
| Mende-Siedlecki et al. under review (n = 30) | 20 | −3 | −12 | −18 | −3 | −12 |
| Average coordinates for face selective voxels weighted by sample size (Table 1) | 18.5 | −5.2 | −11.9 | −18.0 | −5.1 | −12.1 |
| Emotional processing studies | | | | | | |
| Costafreda et al. 2008 (n = 94) | 22 | −6 | −12 | −22 | −6 | −12 |
| Sergerie et al. 2008 (n = 148) | 22 | −5 | −12 | −21 | −6 | −14 |

The coordinates are reported in Talairach space
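The sample-size-weighted averages in Table 2 can be reproduced from the Table 1 entries. The sketch below is one way to do so, assuming that peaks with positive x belong to the right amygdala and peaks with negative x to the left, and that each hemisphere is averaged only over the studies reporting a peak there. The data structure and function name are illustrative choices.

```python
# (study, N, right-amygdala peak or None, left-amygdala peak or None),
# transcribed from Table 1; coordinates are (x, y, z) in Talairach space.
TABLE_1 = [
    ("Fitzgerald et al. (2006)", 20, None, (-24, -3, -20)),
    ("Ganel et al. (2005)", 11, (18, -7, -9), None),
    ("Hariri et al. (2002)", 12, (16, -5, -13), None),
    ("Kesler-West et al. (2001)", 21, (17, -7, -8), (-17, -9, -8)),
    ("Maurer et al. (2007)", 12, (20, -9, -20), None),
    ("Pourtois et al. (2005)", 14, None, (-21, -15, -9)),
    ("Goossens et al. (2009)", 20, (22, -3, -12), (-18, -3, -16)),
    ("Said et al. (2010)", 37, (17, -5, -10), (-17, -2, -10)),
    ("Wright and Liu (2006)", 12, (22, -7, -10), (-9, -3, -10)),
    ("Zhang et al. (2008)", 16, (18, -1, -18), None),
]


def weighted_peak(rows, column):
    """Sample-size-weighted mean (x, y, z) over studies reporting a peak."""
    reported = [(row[1], row[column]) for row in rows if row[column] is not None]
    total_n = sum(n for n, _ in reported)
    return tuple(
        round(sum(n * xyz[axis] for n, xyz in reported) / total_n, 1)
        for axis in range(3)
    )


right = weighted_peak(TABLE_1, 2)
left = weighted_peak(TABLE_1, 3)
print(right)  # (18.5, -5.2, -11.9)
print(left)   # (-18.0, -5.1, -12.1)
```

Both results agree with the averaged row in Table 2 to one decimal place.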

To sum up, both single unit recording data and neuroimaging data suggest that the primate amygdala contains neurons that respond to faces.

The role of the amygdala in face processing

The question about the computational role of the amygdala in face processing is much harder than the question about establishing face selectivity in the amygdala. Although initial fMRI studies focused on the role of the amygdala in processing of fearful expressions (Morris et al. 1996; Whalen et al. 1998), subsequent studies supported a much broader role in face processing. First, many studies have observed amygdala responses not only to fearful but also to other emotional expressions, including positive expressions (e.g., Pessoa et al. 2006; Sergerie et al. 2008; Winston et al. 2003; Yang et al. 2002). Second, as described above, meta-analyses of fMRI studies on face evaluation that typically use emotionally neutral faces show that the amygdala is one of the most consistently activated regions in these studies (Bzdok et al. in press; Mende-Siedlecki et al. under review). Moreover, many studies have observed non-linear amygdala responses with stronger responses to both negative and positive faces than to faces at the middle of the continuum (Said et al. 2009, 2010; Todorov et al. 2011b; Winston et al. 2007). Third, amygdala responses have been observed to bizarre faces (faces with inverted features; Rotshtein et al. 2001) and to novel faces (Kosaka et al. 2003; Schwartz et al. 2003).

To start answering the question about the computational role of the amygdala in face processing, one needs to have a working model of how faces are represented. According to the idea of face space (Valentine 1991), faces are represented as points in a multi-dimensional face space (MDFS). Face space is a high dimensional space in which every face can be approximated as a point defined by its coordinates on the face dimensions. These dimensions define abstract, global properties of the faces. Valentine (1991) used this idea to account for a number of face recognition findings, including effects of distinctiveness (recognition advantage for distinctive faces) and race (recognition advantage for own race faces). Subsequently, face space models have been successfully used to account for a number of other face perception findings (Rhodes and Jeffery 2006; Tsao and Freiwald 2006) and to model social perception of faces (Oosterhof and Todorov 2008; Todorov and Oosterhof 2011; Walker and Vetter 2009). Finally, both single unit recording and fMRI studies have shown increased responses in face selective regions as a function of the distance from the average face (Leopold et al. 2006; Loffler et al. 2005).

Recently, using an MDFS model, we studied whether the amygdala and the FFA respond to social properties of faces or more general properties related to the distance of the faces from the average face in the model (Said et al. 2010). In terms of perception, the distance from the average face could be described as indicating the typicality of the face, where more distant faces are less typical. We used a parametric face model (Oosterhof and Todorov 2008) to generate faces that varied on valence and faces that differed on valence to a much smaller extent. Importantly, both types of faces were matched on their distance from the average face. Behavioral studies also confirmed that the faces were matched on their perceived typicality.
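The notion of typicality as distance from the average face can be illustrated with a toy face space. The sketch below assumes a two-dimensional space and Euclidean distance; the source specifies neither the dimensionality used here nor the metric, and the face vectors are invented for illustration rather than taken from the parametric face model.

```python
import math
from typing import List, Sequence


def average_face(faces: Sequence[Sequence[float]]) -> List[float]:
    """Mean of each face dimension across all faces."""
    n_faces = len(faces)
    n_dims = len(faces[0])
    return [sum(face[d] for face in faces) / n_faces for d in range(n_dims)]


def distance_from_average(face: Sequence[float], mean: Sequence[float]) -> float:
    """Euclidean distance from the average face; larger means less typical."""
    return math.sqrt(sum((f - m) ** 2 for f, m in zip(face, mean)))


# Invented faces in a toy two-dimensional face space.
faces = [
    [1.0, 0.0], [-1.0, 0.0], [0.0, 1.0], [0.0, -1.0],  # close to the average
    [3.0, 4.0], [-3.0, -4.0],                          # far from the average
]

mean = average_face(faces)
distances = [distance_from_average(face, mean) for face in faces]
print(mean)       # [0.0, 0.0]
print(distances)  # [1.0, 1.0, 1.0, 1.0, 5.0, 5.0]
```

In this toy space the last two faces are the least typical, even though they lie in opposite directions from the average. This mirrors the idea that faces can be matched on distance while differing on other properties such as valence.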

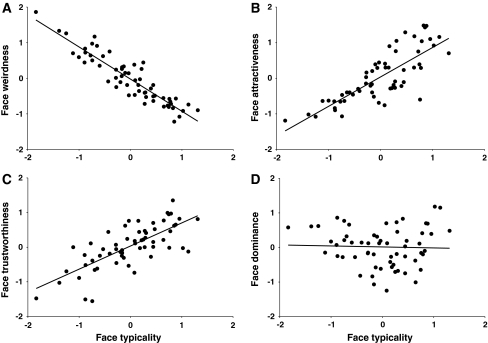

Contrary to our initial expectation, we found that both the FFA and the amygdala responded to the distance from the average face rather than to changes in valence. With hindsight, coding faces according to their typicality is more parsimonious than coding faces according to their social value, because the former requires only statistical learning that extracts the average and variance of the faces encountered in one’s life. Further, in real life, social attributions from facial appearance and face typicality are highly correlated (Fig. 3). Finally, the typicality explanation resolved a previous puzzle in the literature on face evaluation. Whereas some studies have observed linear responses to face valence with stronger responses to negative faces (Engell et al. 2007; Todorov and Engell 2008; Winston et al. 2002), others have observed non-linear responses with stronger responses to both positive and negative faces than to faces at the middle of the continuum (Todorov et al. 2011b). It turned out that in studies that observed linear responses, face typicality was linearly related to face valence (with more negative faces perceived as less typical). In studies that observed non-linear responses, face typicality was non-linearly related to face valence (with more negative and more positive faces perceived as less typical). Both patterns of responses could be explained by the hypothesis that the amygdala responds more strongly to less typical faces.

Fig. 3.

Scatter plots of judgments of face typicality (“How likely would you be to see a person who looks like this walking down the street?”) and judgments of face weirdness (a), attractiveness (b), trustworthiness (c), and dominance (d). Each point represents a face. Judgments are in standardized units. Typicality judgments were correlated with 13 out of 14 social judgments (Said et al. 2010). The only exception was judgments of dominance (panel d)

What is the functional value of coding face typicality? Atypical faces, by definition, are less likely to be encountered and as such are less predictable. That is, they are associated with higher uncertainty and may require deployment of additional attentional resources to resolve uncertainty. The amygdala, which receives input from IT cortex and projects back not only to IT but also to striate and extrastriate cortex (Amaral et al. 2003), is in the perfect position to modulate attention to infrequent, unexpected stimuli that have motivational significance. In other words, salient, unexpected stimuli can trigger amygdala responses, which in turn can bias attention to these stimuli (Vuilleumier 2005). There is a large body of animal work showing that the amygdala is critical for regulation of attention (Davis and Whalen 2001; Gallagher 2000; Holland and Gallagher 1999). Recent work also shows that unpredictable sound sequences evoke sustained activity in the amygdala in both mice and humans (Herry et al. 2007).

The typicality findings suggest that in the context of face perception, one of the functions of the amygdala is to regulate attention. This proposal is consistent with several other proposals about the role of the amygdala in maintaining vigilance (Whalen 2007) and detection of salient or motivationally relevant stimuli (Adolphs 2010; Sander et al. 2003). This hypothesis could account for stronger responses to bizarre faces (Rotshtein et al. 2001), novel faces (Kosaka et al. 2003; Schwartz et al. 2003), and emotional expressions (Whalen et al. 2009). It is important to note that both expressions and differences in identity could be represented within the same MDFS model (Calder and Young 2005). Finally, this hypothesis is also consistent with findings about the importance of individual differences in amygdala functioning (Aleman et al. 2008; Bishop 2008; Hariri 2009). According to the MDFS model, typicality of faces and emotional expressions can vary across individuals and such differences can result in different amygdala responses to the same face stimuli. This is an important research question to pursue in future studies.

In this framework, face information processed in face selective regions (e.g., the FFA) is further processed in the amygdala, where faces that are atypical or unexpected augment the amygdala’s responses, which in turn augment responses in face selective regions via feedback projections. Such general principles can also account for a variety of other non-face findings. These include stronger responses to both highly positive and negative visual stimuli (Sabatinelli et al. 2005), high intensity positive and negative odors (Anderson et al. 2003) and tastes (Small et al. 2003); loud sounds (Bach et al. 2008); and unpredictable sound sequences (Herry et al. 2007).

Conclusions

Although this article started with the proliferation of neural systems involved in social cognition, I focused on one specific region, the amygdala, and one category of stimuli, faces. A justification for this choice is that both the amygdala and perception of faces are at the intersection of cognition, affect, and motivation. I argued that faces robustly activate the amygdala and that one of its functions is to regulate attention to salient, atypical faces.

Undoubtedly, this proposal is an oversimplification. The amygdala consists of several nuclei with different structures, connectivity, and functions (Aggleton 2000; Amaral et al. 2003) that may play different roles in face processing. In fact, it is likely that the population of neurons that are face selective is different from the population of neurons that participate in the regulation of attention. Face selective neurons are usually located in the basolateral amygdala, whereas neurons involved in attention are located in the central nucleus. Unfortunately, current fMRI techniques do not have a sufficient spatial resolution to study subdivisions in the amygdala. It should be noted that although the activation peaks from our meta-analysis of face evaluation studies (Mende-Siedlecki et al. under review) and the face selective peaks were different (Table 2), they were in close proximity (about 3 mm distance).
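The proximity of the two sets of peaks can be checked directly from the Table 2 coordinates. The following sketch computes the Euclidean distance between the face evaluation peaks (Mende-Siedlecki et al.) and the weighted face selective peaks, treating Talairach coordinates as millimeters; the variable names are illustrative.

```python
import math

# Peaks from Table 2 (Talairach space, coordinates in mm).
face_evaluation = {"right": (20, -3, -12), "left": (-18, -3, -12)}
face_selective = {"right": (18.5, -5.2, -11.9), "left": (-18.0, -5.1, -12.1)}

# Euclidean distance between the two peaks in each hemisphere.
peak_distance = {
    side: math.dist(face_evaluation[side], face_selective[side])
    for side in ("right", "left")
}

for side, d in peak_distance.items():
    print(f"{side}: {d:.1f} mm")
# right: 2.7 mm
# left: 2.1 mm
```

Both distances fall within the roughly 3 mm separation noted in the text.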

At a larger scale, the network involved in face processing includes a number of regions in addition to regions in IT cortex and the amygdala. In fact, studies have shown face selectivity in lateral orbitofrontal cortex (Ó Scalaidhe et al. 1997; Rolls et al. 2006; Tsao et al. 2008). In our meta-analysis of face evaluation studies, in addition to the amygdala, we observed consistent activations across studies in ventromedial prefrontal cortex, pregenual anterior cingulate cortex, and left caudate/NAcc. Understanding face perception will require understanding the cognitive functions of all these regions and how they interact in the context of perceiving (and evaluating) faces.

Acknowledgments

I thank Andy Engell, Charlie Gross, and Winrich Freiwald for comments on previous versions of this manuscript. This work was supported by National Science Foundation grant 0823749 and the Russell Sage Foundation.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Footnotes

For this analysis, 13 new subjects were added to the 24 subjects from Said et al. (2010). These 13 subjects participated in a different experimental task but in the same face localizer task at the end of the scanning session.

References

- Adolphs R. The social brain: Neural basis of social knowledge. Annual Review of Psychology. 2009;60:693–716. doi: 10.1146/annurev.psych.60.110707.163514.

- Adolphs R. What does the amygdala contribute to social cognition? Annals of the New York Academy of Sciences. 2010;1191:42–61. doi: 10.1111/j.1749-6632.2010.05445.x.

- Adolphs R, Tranel D. Emotion, recognition, and the human amygdala. In: Aggleton JP, editor. The amygdala: A functional analysis. New York: Oxford University Press; 2000. pp. 587–630.

- Aggleton JP, editor. The amygdala: A functional analysis. New York: Oxford University Press; 2000.

- Aggleton JP, Shaw C. Amnesia and recognition memory: A re-analysis of psychometric data. Neuropsychologia. 1996;34:51–62. doi: 10.1016/0028-3932(95)00150-6.

- Aleman A, Swart M, van Rijn S. Brain imaging, genetics and emotion. Biological Psychology. 2008;79:58–69. doi: 10.1016/j.biopsycho.2008.01.009.

- Allison T, McCarthy G, Nobre A, Puce A, Belger A. Human extrastriate visual cortex and the perception of faces, words, numbers, and colors. Cerebral Cortex. 1994;4:544–554. doi: 10.1093/cercor/4.5.544.

- Allison T, Puce A, McCarthy G. Social perception from visual cues: Role of the STS region. Trends in Cognitive Sciences. 2000;4:267–278. doi: 10.1016/s1364-6613(00)01501-1.

- Amaral DG, Behniea H, Kelly JL. Topographic organization of projections from the amygdala to the visual cortex in the macaque monkey. Neuroscience. 2003;118:1099–1120. doi: 10.1016/s0306-4522(02)01001-1.

- Amodio DM, Frith CD. Meeting of minds: The medial frontal cortex and social cognition. Nature Reviews Neuroscience. 2006;7:268–277. doi: 10.1038/nrn1884.

- Anderson AK, Christoff K, Stappen I, Panitz D, Ghahremani DG, Glover G, Gabrieli JDE, Sobel N. Dissociated neural representations of intensity and valence in human olfaction. Nature Neuroscience. 2003;6:196–202. doi: 10.1038/nn1001.

- Bach DR, Schächinger H, Neuhoff JG, Esposito F, Di Salle F, et al. Rising sound intensity: An intrinsic warning cue activating the amygdala. Cerebral Cortex. 2008;18:145–150. doi: 10.1093/cercor/bhm040.

- Bar M, Neta M, Linz H. Very first impressions. Emotion. 2006;6:269–278. doi: 10.1037/1528-3542.6.2.269.

- Berman MG, Park J, Gonzalez R, Polk TA, Gehrke A, Knaffla S, Jonides J. Evaluating functional localizers: The case of the FFA. NeuroImage. 2010;50:56–71. doi: 10.1016/j.neuroimage.2009.12.024.

- Bishop SJ. Neural mechanisms underlying selective attention to threat. Annals of the New York Academy of Sciences. 2008;1129:141–152. doi: 10.1196/annals.1417.016.

- Bowyer KW, Chang K, Flynn P. A survey of approaches and challenges in 3D and multi-modal 3D + 2D face recognition. Computer Vision and Image Understanding. 2006;101:1–15.

- Breiter HC, Etcoff NL, Whalen PJ, Kennedy WA, Rauch SL, Buckner RL, et al. Response and habituation of the human amygdala during visual processing of facial expression. Neuron. 1996;17:875–887. doi: 10.1016/s0896-6273(00)80219-6.

- Brothers L. The social brain: A project for integrating primate behavior and neurophysiology in a new domain. Concepts in Neuroscience. 1990;1:27–51.

- Bruce C, Desimone R, Gross CG. Visual properties of neurons in a polysensory area in superior temporal sulcus of the macaque. Journal of Neurophysiology. 1981;46:369–384. doi: 10.1152/jn.1981.46.2.369.

- Bruce V, Young A. Understanding face recognition. British Journal of Psychology. 1986;77:305–327. doi: 10.1111/j.2044-8295.1986.tb02199.x.

- Bzdok D, Langner R, Caspers S, Kurth F, Habel U, Zilles K, et al. ALE meta-analysis on facial judgments of trustworthiness and attractiveness. Brain Structure and Function. 2011;215:209–223.

- Calder AJ, Young AW. Understanding the recognition of facial identity and facial expression. Nature Reviews Neuroscience. 2005;6:641–651. doi: 10.1038/nrn1724.

- Chen AC, Kao KLC, Tyler CW. Face configuration processing in the human brain: the role of symmetry. Cerebral Cortex. 2007;17:1423–1432. doi: 10.1093/cercor/bhl054.

- Cloutier J, Mason MF, Macrae CN. The perceptual determinants of person construal: Reopening the social-cognitive toolbox. Journal of Personality and Social Psychology. 2005;88:885–894. doi: 10.1037/0022-3514.88.6.885.

- Costafreda SG, Brammer MJ, David AS, Fu CHY. Predictors of amygdala activation during the processing of emotional stimuli: A meta-analysis of 385 PET and fMRI studies. Brain Research Reviews. 2008;58:57–70. doi: 10.1016/j.brainresrev.2007.10.012.

- Davis M, Whalen PJ. The amygdala: Vigilance and emotion. Molecular Psychiatry. 2001;6:13–34. doi: 10.1038/sj.mp.4000812.

- Desimone R. Face-selective cells in the temporal cortex of monkeys. Journal of Cognitive Neuroscience. 1991;3:1–8. doi: 10.1162/jocn.1991.3.1.1.

- Downing PE, Chan AWY, Peelen MV, Dodds CM, Kanwisher N. Domain specificity in visual cortex. Cerebral Cortex. 2006;16(10):1453–1461. doi: 10.1093/cercor/bhj086.

- Eger E, Schweinberger SR, Dolan RJ, Henson RN. Familiarity enhances invariance of face representations in human ventral visual cortex: fMRI evidence. NeuroImage. 2005;26(4):1128–1139. doi: 10.1016/j.neuroimage.2005.03.010.

- Eger E, Schyns PG, Kleinschmidt A. Scale invariant adaptation in fusiform face-responsive regions. NeuroImage. 2004;22(1):232–242. doi: 10.1016/j.neuroimage.2003.12.028.

- Engell AD, Haxby JV, Todorov A. Implicit trustworthiness decisions: Automatic coding of face properties in human amygdala. Journal of Cognitive Neuroscience. 2007;19:1508–1519. doi: 10.1162/jocn.2007.19.9.1508.

- Esteves F, Öhman A. Masking the face: Recognition of emotional facial expressions as a function of the parameters of backward masking. Scandinavian Journal of Psychology. 1993;34:1–18. doi: 10.1111/j.1467-9450.1993.tb01096.x.

- Fitzgerald DA, Angstadt M, Jelsone LM, Nathan PJ, Phan KL. Beyond threat: Amygdala reactivity across multiple expressions of facial affect. NeuroImage. 2006;30:1441–1448. doi: 10.1016/j.neuroimage.2005.11.003.

- Fried I, MacDonald KA, Wilson C. Single neuron activity in human hippocampus and amygdala during recognition of faces and objects. Neuron. 1997;18:753–765. doi: 10.1016/s0896-6273(00)80315-3.

- Friston KJ, Rotshtein P, Geng JJ, Sterzer P, Henson RN. A critique of functional localisers. NeuroImage. 2006;30:1077–1087. doi: 10.1016/j.neuroimage.2005.08.012.

- Gallagher M. The amygdala and associative learning. In: Aggleton JP, editor. The amygdala: A functional analysis. New York: Oxford University Press; 2000. pp. 311–330.

- Gallese V, Keysers C, Rizzolatti G. A unifying view of the basis of social cognition. Trends in Cognitive Sciences. 2004;8:396–403. doi: 10.1016/j.tics.2004.07.002.

- Ganel T, Valyear KF, Goshen-Gottstein Y, Goodale MA. The involvement of the fusiform face area in processing facial expression. Neuropsychologia. 2005;43(11):1645–1654. doi: 10.1016/j.neuropsychologia.2005.01.012.

- Gauthier I, Tarr MJ, Moylan J, Skudlarski P, Gore JC, Anderson AW. The fusiform face area is part of a network that processes faces at the individual level. Journal of Cognitive Neuroscience. 2000;12(3):495–504. doi: 10.1162/089892900562165.

- Goossens L, Kukolja J, Onur OA, Fink GR, Maier W, et al. Selective processing of social stimuli in the superficial amygdala. Human Brain Mapping. 2009;30:3332–3338. doi: 10.1002/hbm.20755.

- Gothard KM, Battaglia FP, Erickson CA, Spitler KM, Amaral DG. Neural responses to facial expression and face identity in the monkey amygdala. Journal of Neurophysiology. 2007;97:1671–1683. doi: 10.1152/jn.00714.2006.

- Grill-Spector K, Kanwisher N. Visual recognition: As soon as you know it is there, you know what it is. Psychological Science. 2005;16:152–160. doi: 10.1111/j.0956-7976.2005.00796.x.

- Gross CG. How inferior temporal cortex became a visual area. Cerebral Cortex. 1994;4:455–469. doi: 10.1093/cercor/4.5.455.

- Hariri AR. The neurobiology of individual differences in complex behavioral traits. Annual Review of Neuroscience. 2009;32:225–247. doi: 10.1146/annurev.neuro.051508.135335.

- Hariri AR, Tessitore A, Mattay VS, Fera F, Weinberger DR. The amygdala response to emotional stimuli: A comparison of faces and scenes. NeuroImage. 2002;17:317–323. doi: 10.1006/nimg.2002.1179.

- Haxby JV, Grady CL, Horwitz B, Salerno J, Ungerleider LG, Mishkin M, Schapiro MB. Dissociation of object and spatial visual processing pathways in human extra-striate cortex. In: Gulyas B, Ottoson D, Roland PE, editors. Functional organisation of the human visual cortex. Oxford: Pergamon; 1993. pp. 329–340.

- Haxby JV, Hoffman EA, Gobbini MI. The distributed human neural system for face perception. Trends in Cognitive Sciences. 2000;4:223–233. doi: 10.1016/s1364-6613(00)01482-0.

- Henson RN, Mouchlianitis E. Effect of spatial attention on stimulus-specific haemodynamic repetition effects. NeuroImage. 2007;35(3):1317–1329. doi: 10.1016/j.neuroimage.2007.01.019.

- Herry C, Bach DR, Esposito F, Di Salle F, Perrig WJ, et al. Processing of temporal unpredictability in human and animal amygdala. The Journal of Neuroscience. 2007;27:5958–5966. doi: 10.1523/JNEUROSCI.5218-06.2007.

- Hoffman KL, Gothard KM, Schmid MC, Logothetis NK. Facial-expression and gaze-selective responses in the monkey amygdala. Current Biology. 2007;17:766–772. doi: 10.1016/j.cub.2007.03.040.

- Holland PC, Gallagher M. Amygdala circuitry in attentional and representational processes. Trends in Cognitive Sciences. 1999;3:65–73. doi: 10.1016/s1364-6613(98)01271-6.

- Jiang F, Dricot L, Blanz V, Goebel R, Rossion B. Neural correlates of shape and surface reflectance information in individual faces. Neuroscience. 2009;163:1078–1091. doi: 10.1016/j.neuroscience.2009.07.062.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: A module in human extrastriate cortex specialized for face perception. Journal of Neuroscience. 1997;17:4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Kesler-West ML, Andersen AH, Smith CD, Avison MJ, Davis CE, Kryscio RJ, et al. Neural substrates of facial emotion processing using fMRI. Cognitive Brain Research. 2001;11(2):213–226. doi: 10.1016/s0926-6410(00)00073-2.

- Kosaka H, Omori M, Iidaka T, Murata T, Shimoyama T, Okada T, Sadato N, Yonekura Y, Wada Y. Neural substrates participating in acquisition of facial familiarity: An fMRI study. NeuroImage. 2003;20:1734–1742. doi: 10.1016/s1053-8119(03)00447-6.

- Kreiman G, Koch C, Fried I. Category-specific visual responses of single neurons in the human medial temporal lobe. Nature Neuroscience. 2000;3:946–953. doi: 10.1038/78868.

- Kuraoka K, Nakamura K. Responses of single neurons in monkey amygdala to facial and vocal emotions. Journal of Neurophysiology. 2007;97:1379–1387. doi: 10.1152/jn.00464.2006.

- LaBar KS, Gitelman DR, Mesulam M-M, Parrish TB. Impact of signal-to-noise on functional MRI of the human amygdala. NeuroReport. 2001;12:3461–3464. doi: 10.1097/00001756-200111160-00017.

- Leonard CM, Rolls ET, Wilson FAW, Baylis GC. Neurons in the amygdala of the monkey with responses selective for faces. Behavioural Brain Research. 1985;15:159–176. doi: 10.1016/0166-4328(85)90062-2.

- Leopold DA, Bondar IV, Giese MA. Norm-based face encoding by single neurons in the monkey inferotemporal cortex. Nature. 2006;442(7102):572–575. doi: 10.1038/nature04951.

- Lieberman MD. Social cognitive neuroscience. In: Fiske ST, Gilbert DT, Lindzey G, editors. Handbook of social psychology. 5th ed. New York, NY: McGraw-Hill; 2010. pp. 143–193.

- Loffler G, Yourganov G, Wilkinson F, Wilson HR. fMRI evidence for the neural representation of faces. Nature Neuroscience. 2005;8(10):1386–1390. doi: 10.1038/nn1538.

- Logothetis NK, Guggenberger H, Peled S, Pauls J. Functional imaging of the monkey brain. Nature Neuroscience. 1999;2:555–562. doi: 10.1038/9210.

- Martin D, Macrae CN. A boy primed Sue: Feature based processing and person construal. European Journal of Social Psychology. 2007;37:793–805.

- Maurer D, O’Craven KM, Le Grand R, Mondloch CJ, Springer MV, Lewis TL, et al. Neural correlates of processing facial identity based on features versus their spacing. Neuropsychologia. 2007;45(7):1438–1451. doi: 10.1016/j.neuropsychologia.2006.11.016.

- McCarthy G, Puce A, Gore JC, Allison T. Face-specific processing in the human fusiform gyrus. Journal of Cognitive Neuroscience. 1997;9:605–610. doi: 10.1162/jocn.1997.9.5.605.

- Mende-Siedlecki P, Said CP, Todorov A. The social evaluation of faces: A meta-analysis of functional neuroimaging studies. Under review.

- Morris JS, Frith CD, Perrett DI, Rowland D, Young AW, Calder AJ, Dolan RJ. A differential neural response in the human amygdala to fearful and happy expressions. Nature. 1996;383:812–815. doi: 10.1038/383812a0.

- Nakamura K, Mikami A, Kubota K. Activity of single neurons in the monkey amygdala during performance of a visual discrimination task. Journal of Neurophysiology. 1992;67:1447–1463. doi: 10.1152/jn.1992.67.6.1447.

- Ó Scalaidhe SP, Wilson FA, Goldman-Rakic PS. Areal segregation of face-processing neurons in prefrontal cortex. Science. 1997;278:1135–1138. doi: 10.1126/science.278.5340.1135.

- Oosterhof NN, Todorov A. The functional basis of face evaluation. Proceedings of the National Academy of Sciences of the USA. 2008;105:11087–11092. doi: 10.1073/pnas.0805664105.

- Perrett DI, Rolls ET, Caan W. Visual neurons responsive to faces in the monkey temporal cortex. Experimental Brain Research. 1982;47:329–342. doi: 10.1007/BF00239352.

- Pessoa L, Japee S, Sturman D, Ungerleider LG. Target visibility and visual awareness modulate amygdala responses to fearful faces. Cerebral Cortex. 2006;16:366–375. doi: 10.1093/cercor/bhi115.

- Pitcher D, Walsh V, Yovel G, Duchaine B. TMS evidence for the involvement of the right occipital face area in early face processing. Current Biology. 2007;17:1568–1573. doi: 10.1016/j.cub.2007.07.063.

- Poldrack RA, Halchenko Y, Hanson SJ. Decoding the large-scale structure of brain function by classifying mental states across individuals. Psychological Science. 2009;20:1364–1372. doi: 10.1111/j.1467-9280.2009.02460.x.

- Pourtois G, Schwartz S, Seghier ML, Lazeyras F, Vuilleumier P. Portraits or people? Distinct representations of face identity in the human visual cortex. Journal of Cognitive Neuroscience. 2005;17(7):1043–1057. doi: 10.1162/0898929054475181.

- Puce A, Allison T, Asgari M, Gore JC, McCarthy G. Differential sensitivity of human visual cortex to faces, letterstrings, and textures: A functional magnetic resonance imaging study. Journal of Neuroscience. 1996;16(16):5205–5215. doi: 10.1523/JNEUROSCI.16-16-05205.1996.

- Quiroga QR, Reddy L, Kreiman G, Koch C, Fried I. Invariant visual representation by single neurons in the human brain. Nature. 2005;435:1102–1107. doi: 10.1038/nature03687.

- Rhodes G, Jeffery L. Adaptive norm-based coding of facial identity. Vision Research. 2006;46:2977–2987. doi: 10.1016/j.visres.2006.03.002.

- Rolls ET. Neurons in the cortex of the temporal lobe and in the amygdala of the monkey with responses selective for faces. Human Neurobiology. 1984;3:209–222.

- Rolls ET. Neurophysiology and function of the primate amygdala, and neural basis of emotion. In: Aggleton JP, editor. The amygdala: A functional analysis. Oxford: Oxford University Press; 2000. pp. 447–478.

- Rolls ET, Critchley HD, Browning AS, Inoue K. Face-selective and auditory neurons in the primate orbitofrontal cortex. Experimental Brain Research. 2006;170:74–87. doi: 10.1007/s00221-005-0191-y.

- Rotshtein P, Malach R, Hadar U, Graif M, Hendler T. Feeling or features: Different sensitivity to emotion in high-order visual cortex and amygdala. Neuron. 2001;32:747–757. doi: 10.1016/s0896-6273(01)00513-x.

- Rule NO, Ambady N. Brief exposures: Male sexual orientation is accurately perceived at 50 ms. Journal of Experimental Social Psychology. 2008;44:1100–1105.

- Sabatinelli D, Bradley MM, Fitzsimmons JR, Lang PJ. Parallel amygdala and inferotemporal activation reflect emotional intensity and fear relevance. NeuroImage. 2005;24:1265–1270. doi: 10.1016/j.neuroimage.2004.12.015.

- Said CP, Baron S, Todorov A. Nonlinear amygdala response to face trustworthiness: Contributions of high and low spatial frequency information. Journal of Cognitive Neuroscience. 2009;21:519–528. doi: 10.1162/jocn.2009.21041.

- Said CP, Dotsch R, Todorov A. The amygdala and FFA track both social and non-social face dimensions. Neuropsychologia. 2010;48:3596–3605. doi: 10.1016/j.neuropsychologia.2010.08.009.

- Said CP, Haxby JV, Todorov A. Brain systems for the assessment of the affective value of faces. Philosophical Transactions of the Royal Society B. 2011;366:1660–1670.

- Sander D, Grafman J, Zalla T. The human amygdala: an evolved system for relevance detection. Reviews in the Neurosciences. 2003;14(4):303–316. doi: 10.1515/revneuro.2003.14.4.303.

- Sanghera MF, Rolls ET, Roper-Hall A. Visual responses of neurons in the dorsolateral amygdala of the alert monkey. Experimental Neurology. 1979;63:610–626. doi: 10.1016/0014-4886(79)90175-4.

- Saxe R, Brett M, Kanwisher N. Divide and conquer: A defense of functional localizers. NeuroImage. 2006;30:1088–1096. doi: 10.1016/j.neuroimage.2005.12.062.

- Saxe R, Wexler A. Making sense of another mind: The role of the right temporo-parietal junction. Neuropsychologia. 2005;43:1391–1399. doi: 10.1016/j.neuropsychologia.2005.02.013.

- Schwartz CE, Wright CI, Shin LM, Kagan J, Whalen PJ, McMullin KG, Rauch SL. Differential amygdalar response to novel versus newly familiar neutral faces: A functional MRI probe developed for studying inhibited temperament. Biological Psychiatry. 2003;53:854–862. doi: 10.1016/s0006-3223(02)01906-6.

- Sergent J, Ohta S, MacDonald B. Functional neuroanatomy of face and object processing: a positron emission tomography study. Brain. 1992;115:15–36. doi: 10.1093/brain/115.1.15.

- Sergerie K, Chochol C, Armony JL. The role of the amygdala in emotional processing: A quantitative meta-analysis of functional neuroimaging studies. Neuroscience and Biobehavioral Reviews. 2008;32:811–830. doi: 10.1016/j.neubiorev.2007.12.002.

- Sinha P, Balas B, Ostrovsky Y, Russell R. Face recognition by humans: Nineteen results all computer vision researchers should know about. Proceedings of the IEEE. 2006;94:1948–1962.

- Small DM, Gregory MD, Mak YE, Gitelman D, Mesulam MM, Parrish T. Dissociation of neural representation of intensity and affective valuation in human gustation. Neuron. 2003;39:701–711. doi: 10.1016/s0896-6273(03)00467-7.

- Todorov A, Engell A. The role of the amygdala in implicit evaluation of emotionally neutral faces. Social, Cognitive, and Affective Neuroscience. 2008;3:303–312. doi: 10.1093/scan/nsn033.

- Todorov A, Fiske ST, Prentice D, editors. Social Neuroscience: Toward understanding the underpinnings of the social mind. Oxford: Oxford University Press; 2011.

- Todorov A, Oosterhof NN. Modeling social perception of faces. IEEE Signal Processing Magazine. 2011;28:117–122.

- Todorov A, Pakrashi M, Oosterhof NN. Evaluating faces on trustworthiness after minimal time exposure. Social Cognition. 2009;27:813–833.

- Todorov A, Said CP, Engell AD, Oosterhof NN. Understanding evaluation of faces on social dimensions. Trends in Cognitive Sciences. 2008;12:455–460. doi: 10.1016/j.tics.2008.10.001.

- Todorov A, Said CP, Oosterhof NN, Engell AD. Task-invariant brain responses to the social value of faces. Journal of Cognitive Neuroscience. 2011b. Advance online publication: January 21, 2011. doi: 10.1162/jocn.2011.21616.

- Tong F, Nakayama K, Moscovitch M, Weinrib O, Kanwisher N. Response properties of the human fusiform face area. Cognitive Neuropsychology. 2000;17:257–279. doi: 10.1080/026432900380607.

- Tsao DY, Freiwald WA. What’s so special about the average face? Trends in Cognitive Sciences. 2006;10(9):391–393. doi: 10.1016/j.tics.2006.07.009.

- Tsao DY, Freiwald WA, Tootell RBH, Livingstone MS. A cortical region consisting entirely of face-selective cells. Science. 2006;311:670–674. doi: 10.1126/science.1119983.

- Tsao DY, Schweers N, Moeller S, Freiwald WA. Patches of face-selective cortex in the macaque frontal lobe. Nature Neuroscience. 2008;11:877–879. doi: 10.1038/nn.2158.

- Valentine T. A unified account of the effects of distinctiveness, inversion, and race in face recognition. Quarterly Journal of Experimental Psychology. 1991;43(2):161–204. doi: 10.1080/14640749108400966.

- Viskontas IV, Quiroga RQ, Fried I. Human medial temporal lobe neurons respond preferentially to personally relevant images. Proceedings of the National Academy of Sciences of the USA. 2009;106:21329–21334. doi: 10.1073/pnas.0902319106.

- Vuilleumier P. How brains beware: neural mechanisms of emotional attention. Trends in Cognitive Sciences. 2005;9:585–594. doi: 10.1016/j.tics.2005.10.011.

- Wager TD, Barrett LF, Bliss-Moreau E, Lindquist K, Duncan S, Kober H, et al. The neuroimaging of emotion. In: Lewis M, Haviland-Jones JM, Barrett LF, et al., editors. Handbook of emotion. 3rd ed. New York: Guilford; 2008. pp. 249–271.

- Walker M, Vetter T. Portraits made to measure: Manipulating social judgments about individuals with a statistical face model. Journal of Vision. 2009;9(11):12, 1–13.

- Whalen PJ. The uncertainty of it all. Trends in Cognitive Sciences. 2007;11(12):499–500. doi: 10.1016/j.tics.2007.08.016.

- Whalen PJ, Davis FC, Oler JA, Kim H, Kim MJ, Neta M. Human amygdala responses to facial expressions of emotion. In: Whalen PJ, Phelps EA, editors. The human amygdala. New York: Guilford Press; 2009. pp. 265–288.

- Whalen PJ, Rauch SL, Etcoff NL, McInerney SC, Lee MB, Jenike MA. Masked presentations of emotional facial expression modulate amygdala activity without explicit knowledge. Journal of Neuroscience. 1998;18:411–418. doi: 10.1523/JNEUROSCI.18-01-00411.1998.

- Wilson FAW, Rolls ET. The effects of stimulus novelty and familiarity on neuronal activity in the amygdala of monkeys performing recognition memory tasks. Experimental Brain Research. 1993;93:367–382. doi: 10.1007/BF00229353.

- Winston J, O’Doherty J, Dolan RJ. Common and distinct neural responses during direct and incidental processing of multiple facial emotions. NeuroImage. 2003;20:84–97. doi: 10.1016/s1053-8119(03)00303-3.

- Winston J, O’Doherty J, Kilner JM, Perrett DI, Dolan RJ. Brain systems for assessing facial attractiveness. Neuropsychologia. 2007;45:195–206. doi: 10.1016/j.neuropsychologia.2006.05.009.

- Winston J, Strange B, O’Doherty J, Dolan R. Automatic and intentional brain responses during evaluation of trustworthiness of face. Nature Neuroscience. 2002;5:277–283. doi: 10.1038/nn816.

- Wright P, Liu Y. Neutral faces activate the amygdala during identity matching. NeuroImage. 2006;29:628–636. doi: 10.1016/j.neuroimage.2005.07.047.

- Yang TT, Menon V, Eliez S, Blasey C, White CD, et al. Amygdalar activation associated with positive and negative facial expressions. NeuroReport. 2002;13:1737–1741. doi: 10.1097/00001756-200210070-00009.

- Yip AW, Sinha P. Contribution of color to face recognition. Perception. 2002;31(8):995–1003. doi: 10.1068/p3376.

- Zald DH. The human amygdala and the emotional evaluation of sensory stimuli. Brain Research Reviews. 2003;41:88–123. doi: 10.1016/s0165-0173(02)00248-5.

- Zhang H, Liu J, Huber DE, Rieth CA, Tian J, Lee K. Detecting faces in pure noise images: A functional MRI study on top-down perception. NeuroReport. 2008;19(2):229–233. doi: 10.1097/WNR.0b013e3282f49083.