Abstract

Evidence that self-face recognition is dissociable from general face recognition has important implications both for models of social cognition and for our understanding of face recognition. In two studies, we examine how adaptation affects the perception of personally familiar faces, and we use a visual adaptation paradigm to investigate whether the neural mechanisms underlying the recognition of one’s own and other faces are shared or separate. In Study 1 we show that the representation of personally familiar faces is rapidly updated by visual experience with unfamiliar faces, so that the perception of one’s own face and a friend’s face is altered by a brief period of adaptation to distorted unfamiliar faces. In Study 2, participants adapted to images of their own and a friend’s face distorted in opposite directions; the contingent aftereffects we observe are indicative of separate neural populations, but we suggest that these reflect coding of facial identity rather than of the categories “self” and “other.”

Keywords: self-face, familiar face, adaptation, personal familiarity

Introduction

Adaptation is a general feature of perceptual processing which describes an adjustment of neural sensitivity to sensory input. During adaptation, exposure to a stimulus causes a change in the distribution of neural responses to that stimulus with consequent changes in perception. The measurement of the perceptual changes or aftereffects produced by adaptation provides insight into the neural mechanisms which underlie different aspects of perception. Aftereffects have been extensively used to investigate the neural coding of basic visual properties such as color, motion, size, and orientation (Barlow, 1990) and of more complex visual properties such as face shape and identity (see Webster and MacLeod, 2011 for a review). Central to functional accounts of adaptation is the idea that neural sensitivity is adjusted to the average input, so that differences or deviations from this mean are signaled (Barlow, 1990; Webster et al., 2005).

In a seminal study of aftereffects in high-level vision, Webster and MacLin (1999) demonstrated that adapting to faces which were distorted in some way (compressed, expanded) led to subsequently viewed normal faces being perceived as distorted in the opposite direction (expanded, compressed). A number of subsequent studies have demonstrated robust adaptation aftereffects for faces, with manipulations of face shape using different forms of distortion (Rhodes et al., 2003; Carbon and Leder, 2005; Carbon et al., 2007; Jeffery et al., 2007; Carbon and Ditye, 2011; Laurence and Hole, 2011) or through the creation of anti-faces which manipulate aspects of facial shape that are crucial to identification (Leopold et al., 2001; Anderson and Wilson, 2005; Fang et al., 2007). These studies suggest that faces are coded with respect to a prototypical or “average face” and show that sensitivity changes with adaptation, so that perceptual judgments are made with respect to a shifted norm.

That these effects are present at a high level of representation, rather than solely at an image-based level, is reflected in the fact that the face distortion aftereffect transfers across faces of different sizes (Leopold et al., 2001; Zhao and Chubb, 2001; Anderson and Wilson, 2005), across different viewpoints (Jiang et al., 2006, 2007), across different facial expressions (Fox et al., 2008), and across different aspect ratios (Hole, 2011). Further evidence comes from studies demonstrating that naming famous faces (Hills et al., 2008) and imagining recently learned (Ryu et al., 2008) or famous faces (Hills et al., 2010) is sufficient to produce identity aftereffects in the subsequent visual perception of faces (see also Ghuman et al., 2010; Lai et al., 2012 for evidence of body-to-face and hand-to-face adaptation, respectively).

The study of contingent aftereffects offers a particularly useful tool for studying the neural coding of complex stimuli. If stimuli are coded separately, contingent aftereffects will occur, whereby adaptation to stimuli from different categories leads to aftereffects that are contingent on the category of the test stimulus. For example, adapting to green horizontal and red vertical lines leads to color aftereffects that are contingent on the orientation of the test stimulus (horizontal lines subsequently appear reddish and vertical lines greenish) because neurons are differentially tuned to the processing of horizontal and vertical lines (the McCollough effect; McCollough, 1965). In face perception, such aftereffects are usually short-lived (e.g., Leopold et al., 2001; Rhodes et al., 2007; though see Webster et al., 2004; Carbon and Ditye, 2011). Contingent aftereffects provide evidence that distinct neural populations are involved in coding different categories of stimulus. By contrast, a cancellation of aftereffects across stimuli would suggest that they were coded by the same population of neurons (Rhodes et al., 2004). Interestingly, contingent aftereffects in face processing can tell us about the neural coding of social categories.

Little et al. (2005) report sex-contingent aftereffects for unfamiliar faces. That is, when participants adapted to a female face distorted in one direction and a male face distorted in the opposite direction, contingent aftereffects occurred such that subsequently viewed female and male faces were perceived as distorted in opposite directions. The authors interpret this finding as suggesting separate neural populations for the coding of female and male faces. Others report aftereffects contingent on the sex (Jaquet and Rhodes, 2008), race (Jaquet et al., 2007; Little et al., 2008), and age (Little et al., 2008) of faces, suggesting that these attributes are coded by specific neural networks. These effects likely reflect separate coding along the lines of social category information: Bestelmeyer et al. (2008) report sex-contingent aftereffects for male and female faces (which differ both in sex category and in structure), but not for female and hyper-female faces (which differ only in structure), and Jaquet et al. (2007) report race-contingent adaptation, with larger opposite aftereffects for morphed faces which lie on different sides of a race category boundary than for faces which lie on the same side but differ physically from each other. These findings suggest that neurons representing faces may be tuned to high-level social category information. Adaptation to categories of faces may help us to identify them (Rhodes et al., 2010) and to enhance discrimination of faces from those categories (Yang et al., 2011), which may be useful for distinguishing the self-face (or kin-face; DeBruine, 2005; DeBruine et al., 2008; Platek et al., 2009) from other categories of face.

Familiarity affects how a face is recognized (e.g., Bruce and Young, 1986), and unfamiliar face recognition may be weaker and less stable than familiar face processing (Bruce et al., 1999; Hancock et al., 2000; Rossion et al., 2001; Liu et al., 2003). As such, testing for adaptation effects using familiar faces should increase our understanding of coding mechanisms specifically involved in the representation of familiar faces. Indeed, increasing familiarity with a recently learned face increases the magnitude of the face identity aftereffect (Jiang et al., 2007). While the majority of studies of face aftereffects have utilized unfamiliar face stimuli, some studies have begun to test the effects of familiarity. Several recent studies have demonstrated distortion aftereffects for famous faces (Carbon and Leder, 2005; Carbon et al., 2007; Carbon and Ditye, 2011), and Hole (2011) demonstrates identity-specific adaptation effects for famous faces, which are robust against changes in viewpoint, inversion, and stretching. These are the first studies to demonstrate rapid visual adaptation for familiar faces. That is, although people remember famous faces with extremely high accuracy (Ge et al., 2003), these representations can still be rapidly updated by new visual experience.

Growing evidence suggests that our representation of personally familiar faces is different from our representation of recently learned faces and of familiar famous faces that are not personally known to us. Tong and Nakayama (1999) introduced the idea of robust representation to explain differences in visual search performance for one’s own face versus more recently learned faces. Despite hundreds of trials of exposure to a new target face, participants could find their own face faster and more efficiently. Tong and Nakayama (1999) suggest that robust representations are laid down over long periods of time and require less attention to process. Indeed, Carbon (2008) has shown that recognition of personally familiar others is robust to both minor and major changes in the appearance of the face, whereas recognition of famous and celebrity faces decreases dramatically with changes to the familiar, “iconic” appearance of these faces. This is because we have experience in viewing personally familiar faces over a variety of conditions (e.g., lighting, angle), and thus our representations of those faces should be more robust to change (see also Herzmann et al., 2004 for evidence from EEG). These findings suggest that studies of familiar face processing may benefit particularly from the use of personally familiar faces.

To date, few studies have investigated the effects of personal familiarity on adaptation effects. Although Webster and MacLin (1999) focus largely on unfamiliar face processing, they show that adaptation to distortion of one’s own face is possible, and Rooney et al. (2007) report that people’s perception of their own faces and of their friends’ faces is rapidly changed by adaptation to distorted stranger faces. More recently, Laurence and Hole (2011) demonstrate that figural aftereffects are smaller when participants adapted to and were tested with their own face, compared with famous faces and unfamiliar faces. While Laurence and Hole demonstrate differences in self-/other face adaptation, their research did not compare adaptation effects for self-faces with effects for other personally familiar faces; in the investigation of self-/other face adaptation, level of personal familiarity with the “other” face may be an important consideration.

The conditions under which adaptation effects will transfer across faces are much debated. While several studies report that face adaptation aftereffects transfer across different adapting and test stimuli for unfamiliar faces (Webster and MacLin, 1999; Benton et al., 2007; Fang et al., 2007) and for famous faces (Carbon and Ditye, 2011), others report only identity-specific effects (unfamiliar faces: Leopold et al., 2001; Anderson and Wilson, 2005; famous faces: Carbon et al., 2007). Of interest is whether adaptation effects will transfer across images of different personally familiar faces (Study 2 of the current paper), and whether personally familiar face representations will be updated by adaptation to unfamiliar faces (Study 1 of the current paper), considering that personally familiar faces may have stronger representations than unfamiliar (e.g., Tong and Nakayama, 1999) and famous (e.g., Carbon, 2008) faces.

There is much debate as to the neural specialization of self-face processing, with interest focusing on how self and other are distinguished. Gillihan and Farah (2005) argue that one way that self-face representation might be considered “special” is if it engages neural systems that are physically or functionally distinct from those involved in representing others. Both neuroimaging and neuropsychological studies point to separate anatomical substrates for self-face processing, but the way in which these different regions contribute to recognition is not well understood. Evidence that self-face processing is special comes in part from studies of hemispheric specialization. Studies of split-brain patients, in whom the corpus callosum has been severed so that communication between the two cerebral hemispheres is disrupted, have produced evidence of the dissociation of self-face and other face processing (Sperry et al., 1979; Turk et al., 2002; Uddin et al., 2005b), as have several behavioral studies investigating the laterality of self-face specific processing (Keenan et al., 1999, 2000; Brady et al., 2004, 2005; Keyes and Brady, 2010), but these studies disagree as to the neural substrates underlying the dissociation. Brain-imaging studies also support the idea that self is somehow “special,” and point to the involvement of large-scale, distributed neural networks in self-face recognition (Sugiura et al., 2000; Kircher et al., 2001; Platek et al., 2006; for EEG evidence see Keyes et al., 2010). In the current study we use visual adaptation to explore whether the neural mechanisms involved in representing one’s own and other faces are shared or separate (Study 2).

The present paper

The current paper has two aims. First, we test whether exposure to highly distorted unfamiliar faces changes the perception of attractiveness and normality of participants’ own faces and their friends’ faces by comparing ratings before and after adaptation (Study 1). It is not known whether aftereffects will transfer from unfamiliar faces, with which we have very limited visual experience, to personally familiar faces (self, friend), for which we have developed robust representations. If there is a common coding mechanism for all faces, we predict that aftereffects will transfer from unfamiliar to personally familiar faces. However, if distorted representations of unfamiliar faces are not substantial enough to update established representations of personally familiar faces, then we predict minimal transfer of adaptation effects from the unfamiliar adapting stimuli to the personally familiar test stimuli.

Our second aim is to test for the presence of distinct neural populations for the coding of self- and other faces using a contingent aftereffects paradigm. In Study 2, participants adapt to images of their own and a friend’s face which have been distorted in opposite directions (either compressed or expanded) and we measure aftereffects in the perception of both the faces used as adapting stimuli (Self, Friend 1) and of a second friend’s face (Friend 2). If separate categories exist for self and other at the neural level, we expect dissociated coding for self- and other personally familiar faces, as evidenced by self/other-contingent adaptation effects. Specifically, adapting to Self in one direction and Friend 1 in the opposite direction should lead to subsequently viewed images of Self being distorted toward the adapting Self stimulus and images of Friend 1 being distorted toward the adapting Friend 1 stimulus. Importantly, if “self” and “other” are coded as distinct social categories, test images of Friend 2 should be perceived as being distorted toward the Friend 1 adapting stimulus, as it belongs to the “other” category. Alternatively, if self and other do not represent dissociated neural populations, but rather are represented by a shared mechanism, we expect a cancellation of aftereffects.

Study 1

Methods

Participants

Twenty-four students (11 males, M = 21.8 years, SD = 1.83 years) from University College Dublin volunteered to participate. The sample comprised 12 pairs of friends matched for gender and race, where each member of a pair was very familiar with the other’s face. The study was approved by the UCD Research Ethics Committee, and informed consent was obtained from all participants.

Stimuli

Each participant was photographed in identical conditions under overhead, symmetrical lighting while holding a neutral expression. Eleven images were created from each digitized photograph as follows: an oval region encompassing the inner facial features was selected in Adobe Photoshop® and distorted using the software’s “spherize” function set to 11 different levels (−50, −40, −30, −20, −10, 0, +10, +20, +30, +40, +50). The resulting set included the original undistorted photograph and two sets of five images in which the facial features were either compressed or expanded to different degrees (Figure 1). This process was repeated for each of the 24 participants’ photographs. A set of test stimuli was created for each participant, comprising 11 “self” images and 11 “friend” images. Sets of test stimuli were paired such that the “self” and “friend” stimuli for one participant would serve as the “friend” and “self” images, respectively, for another participant. For each participant, the “self” image was mirror-reversed, as participants prefer and are more familiar with a mirror image of their own face over a true image (Mita et al., 1977; Brédart, 2003). A further 10 unfamiliar faces, unknown to any of the participants, were photographed in identical conditions to the participants. These 10 images were distorted at the two most extreme levels (−50 and +50) to create two sets of 10 “adapting” faces for the “compressed” and “expanded” conditions, respectively. For all images, an oval vignette (measuring 277 × 400 to 304 × 400 pixels) was used to select the face, including the inner hairline but excluding the outer hairline. The vignettes were presented on a fixed-size gray background, and the images were saved as 8-bit grayscale.

Figure 1.

An original, undistorted face is shown in the center with increased expansion and compression toward the right and left sides, respectively.
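The stimulus bookkeeping described above (11 spherize settings per face, roles swapped within each friend pair, Self images mirror-reversed, and two 10-face adapting sets at −50 and +50) can be sketched as follows. This is a minimal illustration only: the identifiers (P01, U01, and so on) and the tuple layout are hypothetical and do not describe the image files actually used.

```python
# Photoshop "spherize" settings for the Study 1 test continuum: -50 to +50 in steps of 10.
DISTORTION_LEVELS = list(range(-50, 51, 10))

def build_test_set(self_id, friend_id):
    """Return 22 test images as (role, person, level, mirrored) tuples; IDs are hypothetical."""
    images = []
    for level in DISTORTION_LEVELS:
        images.append(("self", self_id, level, True))       # own face shown mirror-reversed
        images.append(("friend", friend_id, level, False))  # friend shown as photographed
    return images

def build_pair_sets(member_a, member_b):
    """Each member's Self photograph serves as the other member's Friend photograph."""
    return {member_a: build_test_set(member_a, member_b),
            member_b: build_test_set(member_b, member_a)}

# Ten unfamiliar faces, distorted only at the two extreme settings.
UNFAMILIAR = [f"U{i:02d}" for i in range(1, 11)]
ADAPTING_SETS = {
    "compressed": [(face, -50) for face in UNFAMILIAR],
    "expanded": [(face, +50) for face in UNFAMILIAR],
}

pair_sets = build_pair_sets("P01", "P02")
```

Each of the 12 friend pairs would be passed through `build_pair_sets` once, giving the 24 participant-specific test sets.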

Procedure

The experiment was run using Presentation® on a Dell Precision 360 personal computer. The display was run at 75 Hz and a resolution of 1024 × 768 pixels. The images subtended a visual angle of ∼ 8° in width and 18° in height at a viewing distance of approximately 50 cm.
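The reported visual angles follow from the standard relation θ = 2·arctan(s / 2d) between an on-screen extent s and the viewing distance d. The physical size of the images on the monitor is not stated in the text, so the sketch below only works backwards from the reported ~8° × 18° at ~50 cm to the extent these angles imply; the function names are illustrative.

```python
import math

def visual_angle_deg(size_cm, distance_cm):
    """Visual angle (degrees) subtended by an extent of size_cm viewed from distance_cm."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))

def size_for_angle_cm(angle_deg, distance_cm):
    """On-screen extent (cm) required to subtend angle_deg at distance_cm."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)

# Extent implied by the reported angles at a 50-cm viewing distance.
width_cm = size_for_angle_cm(8, 50)    # roughly 7.0 cm
height_cm = size_for_angle_cm(18, 50)  # roughly 15.8 cm
```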

Testing comprised participants rating a face for either attractiveness or normality on a scale of 1–9 (1 = unattractive/unusual, 9 = attractive/normal) both before and after a period of adaptation. Prior to testing, each participant ran a practice session, whereby they rated an unfamiliar face at 11 levels of distortion; these practice images were not used again. In the first block of testing, 110 images were presented in a randomized order [22 images (11 self and 11 friend) × 5 repetitions each]. Images were displayed for 1.5 s and then replaced with a rating scale, shown on a gray background. Participants rated the face on a scale of 1–9 by pressing the numbers across the top of a keyboard. This initial rating phase was followed by the adaptation phase, where participants were asked to pay close attention to a sequence of faces, which were either expanded (+50; viewed by participants in the “expanded” condition) or compressed (−50; viewed by participants in the “compressed” condition) distortions of unfamiliar faces. The adaptation phase lasted for 5 min with each image – chosen at random with replacement from the set of 10 – displayed for 4 s with a gray background ISI of 200 ms.
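The pre-adaptation rating block and the adaptation stream described above can be sketched as two small generators. This is an illustration under stated assumptions, not the Presentation® script that was actually used: trials are (identity, level) tuples, and the stream is assumed to contain only whole 4.2-s cycles (4 s face plus 200 ms ISI), which gives 71 faces in 5 min.

```python
import random

# Test continuum from Study 1 (Photoshop "spherize" settings).
LEVELS = list(range(-50, 51, 10))

def make_rating_block(rng, repetitions=5):
    """Return the 22 test images x `repetitions` as (identity, level) tuples in random order."""
    trials = [(identity, level) for identity in ("self", "friend") for level in LEVELS]
    trials = trials * repetitions  # 22 x 5 = 110 trials
    rng.shuffle(trials)
    return trials

def make_adaptation_stream(rng, adapting_faces, duration_ms=300_000, display_ms=4_000, isi_ms=200):
    """Draw adapting faces at random with replacement to fill the adaptation phase."""
    # Assumed stopping rule: only whole face + ISI cycles that fit in the phase are shown.
    n_faces = duration_ms // (display_ms + isi_ms)  # 300000 // 4200 = 71
    return [rng.choice(adapting_faces) for _ in range(n_faces)]

rng = random.Random(2024)
block = make_rating_block(rng)
stream = make_adaptation_stream(rng, [f"U{i:02d}" for i in range(1, 11)])
```

Sampling with replacement means some unfamiliar faces will appear more often than others within a given participant's stream.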

After adaptation, the participants rated the 110 test faces [22 images (11 self and 11 friend) × 5 repetitions] a second time, under the same conditions as the first block of testing. To maintain the effects of adaptation, an adapting face was presented for 8 s (followed by a gray screen for 500 ms) before each test face. To distinguish adapting from test faces, the word “RATE” was printed above each test face.
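The post-adaptation block with top-up adaptation can be sketched as a timed event schedule. The text does not say how each top-up face was chosen, so drawing it at random from the adapting set is an assumption, and the rating period itself is not modeled. Under these assumptions the timed portion alone comes to 110 × 10 s, i.e., just over 18 min.

```python
import random

def make_post_block(rng, test_trials, adapting_faces):
    """Precede every test face with an 8-s top-up adaptor and a 500-ms gray screen."""
    schedule = []
    for test in test_trials:
        schedule.append(("adapt", rng.choice(adapting_faces), 8_000))  # durations in ms
        schedule.append(("gray", None, 500))
        schedule.append(("test", test, 1_500))  # shown with "RATE" above; rating period not modeled
    return schedule

rng = random.Random(7)
# The 22 test images x 5 repetitions (110 trials), here left unshuffled for brevity.
tests = [(ident, lvl) for ident in ("self", "friend") for lvl in range(-50, 51, 10)] * 5
post = make_post_block(rng, tests, ["U01", "U02", "U03"])
timed_ms = sum(duration for _, _, duration in post)  # 110 x 10 s of timed events
```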

Design and analyses

Twelve participants rated the faces for normality and 12 for attractiveness. Six of each group adapted to compressed faces and six adapted to expanded faces. The data were analyzed using mixed-model ANOVA with a between-subjects factor of “type of adaptation” (compressed/expanded) and within-subjects factors of “time of rating” (pre- and post-adaptation) and “test stimulus” (self/friend). The dependent variable was the distortion level of the face rated most normal (or most attractive), calculated pre- and post-adaptation as explained below.

Results

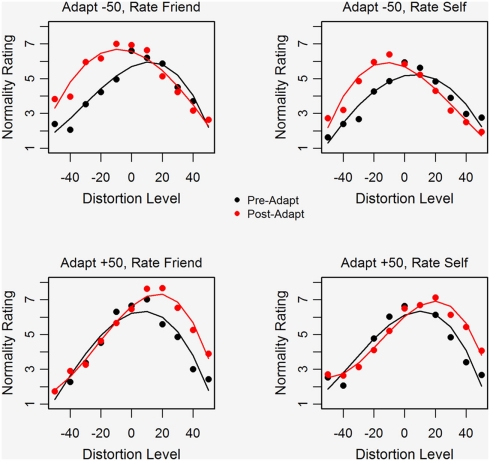

Figure 2 plots average normality ratings against distortion level for ratings made prior to and after adaptation. Separate plots are shown for ratings of Self and Friend (right and left panels) and for conditions in which participants adapted to extremely compressed or expanded faces (top and bottom panels). The solid curves (third-order polynomials fitted to the data generated by the six participants in each condition) are shown for both ratings made prior to (black) and after adaptation (red). Note that prior to adaptation participants rated faces that were slightly expanded as most normal, i.e., the maximum point of the black curve falls slightly to the right of the original, undistorted face. This preference for a slightly expanded face is also evident in the attractiveness data (not shown) and in the data of Rhodes et al. (2003) and may occur because the expansion of facial features leads to bigger, more widely spaced eyes which look more attractive. Following adaptation the distortion level rated as most normal shifts in the direction of the adapting stimulus, so that the maximum of the solid red line shifts further rightward in the case of adapting to expanded faces and leftward in the case of adapting to compressed faces.

Figure 2.

Average normality ratings plotted as a function of face distortion level using black symbols for pre-adaptation ratings and red symbols for post-adaptation ratings. The right and left panels show ratings for Self and Friend respectively, for conditions in which participants adapted to compressed faces (top panel) or to expanded faces (bottom panel).

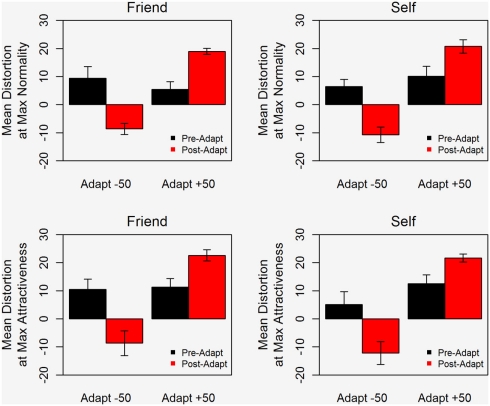

Adaptation effects are clearly evident in Figure 3, which plots the mean distortion level corresponding to the maximum rating for normality and for attractiveness. After adaptation, the distortion level of the face rated most normal and most attractive shifts in the direction of the adapting stimulus. Notably, the data for Self and Friend exhibit very similar patterns. The same trends were seen in the attractiveness and normality data, reinforcing the idea that ratings of normality and attractiveness are both based on perceived “averageness” (Rhodes et al., 2003).

Figure 3.

Mean distortion level corresponding to the maximum rating of normality (top) and attractiveness (bottom) for images of Self (right) and Friend (left). Error bars show ±1 standard error of the mean.

Statistical analyses confirm these trends. Third-order polynomials were fitted to each participant’s ratings of normality or attractiveness using R (R Development Core Team, 2010) and the maximum of the curve was estimated to calculate the distortion level corresponding to the maximum rating both pre- and post-adaptation in all conditions. This served as the dependent variable.
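The dependent measure can be sketched in Python (the analysis itself was run in R). This minimal version fits a third-order polynomial to one participant's ratings and locates the curve's maximum by a dense grid search restricted to the tested range of −50 to +50; solving for the roots of the derivative would be an equivalent alternative. Treating the input as the mean rating per distortion level, and the synthetic ratings below, are assumptions for illustration and are not the study's data.

```python
import numpy as np

def peak_of_cubic(levels, ratings, lo=-50.0, hi=50.0, n_grid=10_001):
    """Distortion level at which a fitted third-order polynomial peaks within [lo, hi]."""
    coeffs = np.polyfit(levels, ratings, deg=3)  # coefficients, highest power first
    grid = np.linspace(lo, hi, n_grid)           # 0.01-unit resolution over the tested range
    return float(grid[np.argmax(np.polyval(coeffs, grid))])

levels = np.arange(-50, 51, 10)
# Synthetic, illustrative mean ratings on the 1-9 scale: peak near +10 before
# adaptation and near -17.6 after it.
pre = 8 - ((levels - 10) / 30) ** 2
post = 8 - ((levels + 17.6) / 30) ** 2
shift = peak_of_cubic(levels, post) - peak_of_cubic(levels, pre)  # about -27.6
```

Restricting the search to the tested range avoids extrapolating the cubic beyond the stimuli participants actually saw, where a third-order fit can rise without bound.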

For the normality data, ANOVA showed a significant interaction between “type of adaptation” (compressed or expanded) and “time of rating” (pre- or post-adaptation), F(1,10) = 133.03, p < 0.001. Planned comparisons showed that after adapting to compressed faces, participants chose a maximum normality rating at a distortion level that was significantly shifted toward the “compressed” end of the continuum, t(11) = −8.44, p < 0.001 [mean difference, −17.62; 95% CI (−22.22, −13.02)]. Similarly, after adapting to expanded faces, the distortion level at maximum normality was significantly shifted toward the “expanded” end of the continuum, t(11) = 7.22, p < 0.001 [mean difference, 12.12; 95% CI (8.42, 15.81)]. There was no main effect of “test stimulus” (Self or Friend), F(1,10) = 0.025, p = 0.88, and “test stimulus” did not interact with any other variables.

For the attractiveness data, there was also a significant interaction between “type of adaptation” and “time of rating,” F(1,10) = 135.66, p < 0.001. Planned comparisons showed the shift in the distortion level at maximum attractiveness was significant for both compression, t(11) = −8.12, p < 0.001 [mean difference, −18.22; 95% CI (−23.17, −13.29)] and for expansion, t(11) = 6.25, p < 0.001 [mean difference, 10.28; 95% CI (6.67, 13.90)]. Again, there was no main effect of “test stimulus,” F(1,10) = 0.35, p = 0.56, and “test stimulus” did not interact with any other variables.
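Because a t-based 95% confidence interval is centred on the mean difference with half-width t_crit × SE, each reported interval implies both a mean difference and a t statistic, which gives a quick internal consistency check on the planned comparisons above. For the normality comparison after adapting to expanded faces, for example, the interval (8.42, 15.81) lies entirely above zero, so the implied mean difference is positive (roughly +12.1). The sketch assumes df = 11, as reported, and hard-codes the corresponding two-sided critical value because the Python standard library has no t quantile function.

```python
T_CRIT_11 = 2.200985  # two-sided 95% critical value of Student's t with 11 df

# Reported planned comparisons: (t statistic, 95% CI of the pre/post difference).
REPORTED = {
    "normality, compressed": (-8.44, (-22.22, -13.02)),
    "normality, expanded": (7.22, (8.42, 15.81)),
    "attractiveness, compressed": (-8.12, (-23.17, -13.29)),
    "attractiveness, expanded": (6.25, (6.67, 13.90)),
}

def implied_from_ci(ci, t_crit=T_CRIT_11):
    """Mean difference and t statistic implied by a symmetric t-based confidence interval."""
    lo, hi = ci
    mean_diff = (lo + hi) / 2    # the interval is centred on the mean difference
    se = (hi - lo) / 2 / t_crit  # half-width = t_crit * SE
    return mean_diff, mean_diff / se

implied = {label: implied_from_ci(ci) for label, (_, ci) in REPORTED.items()}
for label, (t_reported, _) in REPORTED.items():
    mean_diff, t_implied = implied[label]
    print(f"{label}: mean diff {mean_diff:+.2f}, implied t {t_implied:.2f} (reported {t_reported})")
```

All four implied t values agree with the reported ones to within rounding, which supports the reported intervals and degrees of freedom.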

Discussion

Study 1 shows that the representation of highly familiar faces, including our own face, is rapidly updated by visual experience. This is consistent with recent reports of shifts in perceived identity following exposure to distorted celebrity faces (Carbon and Leder, 2005; Carbon et al., 2007). Here we show that comparable aftereffects – shifts in perceived attractiveness and normality – are rapidly obtained for personally familiar faces and that these effects can be achieved by exposure to unfamiliar faces. The fact that adaptation generalizes from unfamiliar to highly familiar faces, and that the aftereffects are of comparable magnitude for self-faces and friend faces, indicates a shared representation for all classes of face.

Our second study further explores whether aspects of the perceptual coding of self- and other faces are separate but, in contrast to the “simple aftereffects” induced in Study 1, tests for the presence of “opposite” or “contingent” aftereffects. A number of recent studies have shown that it is possible to induce aftereffects that are contingent upon characteristics of the adapting faces, such as their sex (Little et al., 2005; Jaquet and Rhodes, 2008), race (Jaquet et al., 2007; Little et al., 2008), and age (Little et al., 2008). This methodology allows us to explore the extent to which separate neural populations are involved in coding different categories of face.

Study 2

In Study 2 participants adapted simultaneously to their own face and to another highly familiar face (“Friend 1”) distorted in opposite directions. If self and other faces are coded by common mechanisms we expect a cancellation of aftereffects, whereas contingent aftereffects would suggest separate coding of self and other faces. To address the possibility that any contingent aftereffects observed may reflect identity-specific coding, rather than separate neural representation of “self” and “other,” a third type of test face was introduced: Friend 2. If “self” and “other” faces are represented as discrete social categories and are represented by separate neural populations, then aftereffects for Friend 2 should follow the pattern of contingent aftereffects observed for Friend 1. If, however, identity-specific coding is in play, then contingent aftereffects observed for Self and Friend 1 faces should “cancel” for Friend 2 faces.

Methods

The general methods are the same as in Study 1.

Participants

Thirty students (12 males, M = 21.8 years, SD = 2.82 years) participated in Study 2. The sample comprised 10 groups of three friends matched for gender and race, where each member of a group was very familiar with the others’ faces.

Stimuli

Four photographs were taken of each participant, one while smiling, one while biting the bottom lip, and two, taken on separate occasions, with a neutral expression. These served as different examples of the participant’s face and comprised each participant’s adapting and test Self images. For each participant, four further images of a close friend of the same sex were taken (one smiling, one biting lip, and two neutral), and these comprised the Friend 1 adapting and test images. Finally, for each participant, three images of a different close friend of the same sex were taken (one smiling, two neutral), and these comprised the Friend 2 test images. Different images – smiling, lip biting, neutral – were used to ensure that any adaptation effects would not be solely based on low-level properties of the stimulus.

The biting lip image and one of the neutral expression images were used as adapting stimuli (Self, Friend 1), and the smiling image and the two neutral expression images were used as the test stimuli (Self, Friend 1, Friend 2). The adapting and test stimuli were created in Photoshop® by selecting a circular region encompassing only the eyes and nose and distorting it using the “Spherize” function. Because the different face examples included different expressions, the mouth region was excluded from the distortion to produce a more uniform set of distorted images. For the adapting stimuli, the distortion was set to either −50 or +50 for a highly compressed or expanded face. In total, there were 4 adapting stimuli: 2 (Self, Friend 1) × 2 (biting lip, neutral). There were 45 test images: 3 (Self, Friend 1, Friend 2) × 3 (1 smiling and 2 neutral) × 5 distortion levels (−26, −12, 0, +12, +26). Self images were always mirror-reversed, while Friend images were shown in the original photographed orientation.

Procedure

The procedure was similar to that used in Study 1. Testing comprised participants rating a face for distortedness on a scale of 1–7 (1 = least distorted, 7 = most distorted) both before and after a period of adaptation. Prior to testing, each participant ran a practice session in which they rated an unfamiliar face at five levels of distortion. In the first block of testing, 135 images were presented in a randomized order [3 face identities (Self, Friend 1, Friend 2) × 3 examples (1 smiling, 2 neutral) × 5 levels of distortion × 3 repetitions each]. Images were displayed for 1.5 s and then replaced with a rating scale, shown on a gray background. Participants rated the face on a scale of 1–7 by pressing the numbers across the top of a keyboard.
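The Study 2 stimulus and trial counts (4 adapting stimuli, 45 test images, 135 trials per block) follow from fully crossing the factors, which can be sketched with `itertools.product`. The example labels ("neutral A", "neutral B") are hypothetical, and which of the two neutral photographs also served as an adaptor is an assumption: the text says only that one neutral image was used for adaptation and both were used for testing.

```python
import random
from itertools import product

IDENTITIES = ("Self", "Friend 1", "Friend 2")
EXAMPLES = ("smiling", "neutral A", "neutral B")  # hypothetical labels
LEVELS = (-26, -12, 0, 12, 26)

TEST_IMAGES = list(product(IDENTITIES, EXAMPLES, LEVELS))                   # 3 x 3 x 5 = 45
ADAPTING = list(product(("Self", "Friend 1"), ("biting lip", "neutral A")))  # 2 x 2 = 4
ADAPT_LEVEL = {"Self": -50, "Friend 1": +50}  # Self compressed, Friend 1 expanded

def make_block(rng, repetitions=3):
    """One rating block: every test image `repetitions` times, in random order."""
    trials = TEST_IMAGES * repetitions  # 45 x 3 = 135 trials
    rng.shuffle(trials)
    return trials

block = make_block(random.Random(3))
```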

During the adaptation phase, participants attended to a sequence of adapting images which lasted for a total of 3 min. The sequence included equal numbers of their own face (from two examples compressed to −50) and their friend’s face (Friend 1, from two examples expanded to +50) which were presented in random order. Each adapting image was displayed for 4 s with a gray background ISI of 200 ms.

In the post-adaptation testing phase, participants again rated the 135 test images for perceived distortedness. In order to maintain the effects of adaptation, an adapting face was presented for 6 s (followed by a gray screen for 500 ms) before each test face. This “top-up” adaptation contained equal numbers of highly compressed Self and highly expanded Friend 1 images which were presented in random order. Test faces were distinguished by the word “RATE” printed above each test face.
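A balanced, randomly ordered adaptor sequence of the kind described above can be sketched as follows. Two points are assumptions rather than details from the text: the 3-min phase is taken to contain only whole 4.2-s cycles (42 adaptors, 21 per identity), and because 135 top-ups cannot be split exactly in half, the odd adaptor is assigned a random identity. The sketch tracks only identity and distortion level, not which of the two photographed examples was shown.

```python
import random

# Study 2 adaptors: Self compressed to -50, Friend 1 expanded to +50.
ADAPTORS = (("Self", -50), ("Friend 1", +50))

def balanced_sequence(rng, n):
    """Return n adaptors in random order, split as evenly as possible between identities."""
    seq = [ADAPTORS[0]] * (n // 2) + [ADAPTORS[1]] * (n // 2)
    if n % 2:
        seq.append(rng.choice(ADAPTORS))  # assumption: an odd leftover gets a random identity
    rng.shuffle(seq)
    return seq

def adaptation_phase(rng, duration_ms=180_000, display_ms=4_000, isi_ms=200):
    """Initial 3-min phase: whole 4.2-s cycles only (an assumed stopping rule)."""
    return balanced_sequence(rng, duration_ms // (display_ms + isi_ms))  # 42 adaptors

rng = random.Random(11)
initial = adaptation_phase(rng)
top_ups = balanced_sequence(rng, 135)  # one 6-s top-up before each of the 135 test trials
```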

Design and analysis

The data were analyzed using a within-subjects ANOVA with distortedness rating as the dependent variable and factors of “time of rating” (pre- and post-adaptation), “level of distortion” (−26, −12, 0, +12, +26), and “test stimulus” (Self, Friend 1, Friend 2).

Results

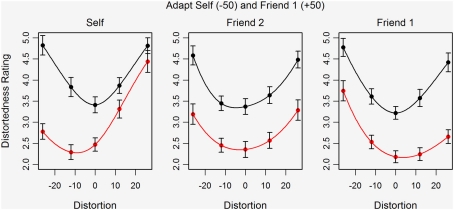

Figure 4 shows the mean distortedness ratings for the five test images before and after adaptation for Self, Friend 1, and Friend 2. The pattern of results is of primary interest here and suggests contingent aftereffects. Simultaneous adaptation to self and friend images distorted in opposite directions does not lead to a cancellation of aftereffects but rather to a shift in perceived distortedness that is biased in different directions for Self and Friend 1 images. For Self stimuli, the shift in perceived distortedness is greater for the compressed than for the expanded test images of Self (left plot). For Friend 1, however, the shift in perceived distortedness is greater for the expanded than for the compressed test images (right plot). Interestingly, the effects of adaptation on the perceived distortedness of the Friend 2 test images (center plot) are more evenly distributed across the distortion levels, as shown by the parallel downward shift of the ratings curve. Polynomial curves are fitted to the data to help illustrate these effects.

Figure 4.

Mean distortedness ratings for five versions of the test images both before (black) and after adaptation (red) to highly compressed Self and highly expanded Friend 1 faces in Study 2. Error bars show ±1 standard error of the mean. Separate plots are shown for Self (left), Friend 1 (right), and Friend 2 (center).

These observations are confirmed by statistical analyses. A three-way within-subjects ANOVA revealed a significant three-way interaction between “time of rating,” “test stimulus,” and “level of distortion,” F(8,232) = 13.54, p < 0.001. To explore this interaction, we conducted separate two-way ANOVAs on the Self, Friend 1, and Friend 2 data, controlling family-wise error with a Bonferroni adjustment (0.05/3 = 0.017). The ANOVA for the Self images showed a significant interaction between time of rating and distortion level, F(4,116) = 20.26, p < 0.001. Planned comparisons of the pre- and post-adaptation mean ratings showed significant differences for levels 0, −12, and −26 only, with the estimated mean difference increasing as the images became more compressed [95% CI at “0” (0.58, 1.31); 95% CI at “−12” (1.06, 2.03); 95% CI at “−26” (1.59, 2.50)].
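The Bonferroni adjustment divides the family-wise alpha by the number of tests in the family. It gives 0.05/3 for the three two-way ANOVAs and 0.05/5 for the five planned comparisons per test stimulus. A minimal sketch follows. The function names are illustrative, and the p-values in the flagging example are hypothetical.

```python
def bonferroni_alpha(alpha, m):
    """Per-test threshold that keeps family-wise error at or below alpha."""
    if m < 1:
        raise ValueError("m must be at least 1")
    return alpha / m

def bonferroni_flags(p_values, alpha=0.05):
    """Mark each p-value in one family as significant at the adjusted threshold."""
    threshold = bonferroni_alpha(alpha, len(p_values))
    return [p < threshold for p in p_values]

print(round(bonferroni_alpha(0.05, 3), 3))  # 0.017, threshold for the three ANOVAs
print(bonferroni_alpha(0.05, 5))            # threshold for five planned comparisons
print(bonferroni_flags([0.0005, 0.02, 0.12]))  # hypothetical p-values: [True, False, False]
```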

Similarly, the ANOVA for the Friend 1 images showed a significant time of rating by distortion level interaction, F(4,116) = 5.91, p < 0.001. Here, planned comparisons of the pre- and post-adaptation mean ratings showed significant differences at all levels of distortion, with the estimated differences increasing as the images became more expanded [95% CI at “−26” (0.62, 1.44); 95% CI at “−12” (0.70, 1.47); 95% CI at “0” (0.72, 1.31); 95% CI at “+12” (0.97, 1.70); 95% CI at “+26” (1.38, 1.82)].
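One way to make the "uniform shift plus directional bias" pattern concrete is to split the per-level adaptation effects into their mean (a uniform shift) and their least-squares slope on distortion level (a bias toward one end of the continuum). This is a hypothetical toy decomposition, not an analysis reported in the paper. It assumes each 95% CI above is symmetric, so its midpoint serves as the point estimate of the pre- minus post-adaptation difference.

```python
def shift_components(levels, diffs):
    """Split per-level rating differences into a uniform shift and a linear bias.

    Returns (mean_shift, slope): the mean difference across levels and the
    ordinary least-squares slope of the differences on distortion level.
    """
    n = len(levels)
    mean_shift = sum(diffs) / n
    mean_x = sum(levels) / n
    sxx = sum((x - mean_x) ** 2 for x in levels)
    sxy = sum((x - mean_x) * (d - mean_shift) for x, d in zip(levels, diffs))
    return mean_shift, sxy / sxx

# 95% CIs for the Friend 1 pre- minus post-adaptation differences (from the text).
friend1_ci = {-26: (0.62, 1.44), -12: (0.70, 1.47), 0: (0.72, 1.31),
              12: (0.97, 1.70), 26: (1.38, 1.82)}
levels = sorted(friend1_ci)
# Assumption: each interval is symmetric, so its midpoint is the point estimate.
diffs = [sum(friend1_ci[x]) / 2 for x in levels]
mean_shift, slope = shift_components(levels, diffs)
```

For Friend 1 this gives a mean shift of about 1.21 rating units, the overall downward shift, and a positive slope. The positive slope means larger effects for the expanded test images, the direction of the Friend 1 adaptor. Because the levels are symmetric about 0, the mean shift also equals the fitted effect at the undistorted level.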

In contrast, the ANOVA for the Friend 2 images did not show a significant interaction between time of rating and distortion level, F(4,116) = 1.88, p = 0.12, suggesting that any perceptual change following adaptation is evenly distributed across distortion levels. Here, the main effects of time of rating, F(1,29) = 63.56, p < 0.001, and distortion level, F(4,116) = 23.65, p < 0.001, were significant. Participants rated faces as less distorted following adaptation (pre = 3.91, SE = 0.10; post = 2.77, SE = 0.10), and rated faces overall as more distorted at higher levels of distortion (“−26” = 3.89, SE = 0.19; “−12” = 2.95, SE = 0.13; “0” = 2.86, SE = 0.15; “+12” = 3.10, SE = 0.15; “+26” = 3.89, SE = 0.17). All planned comparisons reported are significant after Bonferroni correction to 0.05/5 = 0.01.

Discussion

In line with other studies that have shown aftereffects contingent on characteristics of the adapting faces, these results show aftereffects that are contingent upon the identity of the adapting stimulus. Specifically, adaptation leads to a shift in participants’ perception of distortion that is biased in the direction of the adapting stimuli: here the shift is greatest for compressed relative to expanded Self faces and for expanded relative to compressed Friend 1 faces. However, the perceptual change is evenly distributed across the spectrum of distortion for Friend 2 faces, suggesting that coding is at the level of individual facial identity and not in terms of “self” and “other.”

These results also suggest shared or common coding of all faces. In the case of Friend 2, simultaneous adaptation to two other familiar faces distorted in opposite directions leads to a significant main effect of adaptation, i.e., faces at all levels of distortion are judged to be less distorted. This suggests that, on average across all participants tested, Friend 2 faces share structural properties with both Friend 1 and Self faces. Similarly, for Self and Friend 1, simultaneous adaptation to highly distorted versions of these images (in opposite directions) leads to an overall downward shift of the rating curves, albeit with a significant bias in the direction of the adapting stimulus. This contrasts markedly with Study 1, where participants adapted to faces that were either compressed or expanded and the pre- and post-adaptation curves typically cross each other (see Figure 2). This suggests that, on average, Self faces are structurally similar to Friend 1 faces, so that we see a mixture of simple and contingent aftereffects, similar to what has recently been observed in studies of sex-contingent aftereffects (Jaquet and Rhodes, 2008). That these aftereffects are due to adaptation to the distorted faces, rather than simply to viewing faces, is supported by Webster and MacLin (1999), who showed that viewing undistorted faces does not lead to aftereffects.

General Discussion

In two studies we show that the visual representation of personally familiar faces, including one’s own face, is subject to rapid adaptation. Aftereffects, characterized by shifts in the perception of attractiveness and normality (Study 1) and the perception of distortedness (Study 2), were demonstrated after exposure to distorted unfamiliar faces (Study 1), and after exposure to distorted self and friend faces (Study 2).

The fact that perceptions of one’s own and a close friend’s face are rapidly changed by exposure to distorted unfamiliar faces in Study 1 demonstrates that there exists a common representation for all classes of faces. Although adaptation effects have been shown previously for recently learned faces (Leopold et al., 2001) and for celebrity faces (Carbon and Leder, 2005; Carbon et al., 2007), this is among the first studies to date to demonstrate that personally familiar faces are subject to the same rapid effects of adaptation, and that adaptation effects can transfer from unfamiliar faces to more robustly represented personally familiar faces. Indeed, while Laurence and Hole (2011) demonstrated figural aftereffects for personally familiar faces (the self-face), their research focused on within-identity adaptation. In the current paper, we demonstrate cross-identity adaptation from unfamiliar to personally familiar, robustly represented faces. A more “robust” representation for personally familiar faces may involve a more detailed representation of facial configuration (e.g., Balas et al., 2007), and the observation here of aftereffects following exposure to faces with distorted configuration suggests that this configural representation can be tapped into and rapidly updated (see Allen et al., 2009, for evidence of a similarly robust configural representation for self-faces and other personally familiar faces).

Although our representations of and memory for highly familiar faces are more stable than those for recently encountered faces (e.g., Bruce et al., 1999; Hancock et al., 2000), a representation that is updated to incorporate both short- and long-term changes to facial shape and expression is useful for the recognition of familiar and more recently learned faces (Carbon and Leder, 2005; Carbon et al., 2007; Carbon and Ditye, 2011). This proposal is consistent with functional accounts of adaptation. Just as in “low-level” light adaptation, where average luminance is discounted so that variations about the average are signaled, “high-level” face adaptation may involve discounting some perceptual characteristics of a face (e.g., those associated with race) so as to better signal changes in identity or expression (Webster et al., 2005). Insofar as we have a particularly efficient representation for personally familiar faces, we conjecture that people may be particularly sensitive to subtle changes in expression in the faces of close friends and loved ones.
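The "discount the average, signal the deviation" idea can be caricatured in one dimension. In this sketch a face is a single distortion value and the internal norm is a running average of recent input. Only the difference between a face and the norm is signaled. The update rate, the number of exposures, and the function names are all hypothetical. This illustrates the functional account (Barlow, 1990; Webster et al., 2005), not a model fitted to the present data.

```python
def adapt_norm(norm, exposures, rate=0.1):
    """Move the internal norm toward each adapting stimulus (running average)."""
    for stimulus in exposures:
        norm += rate * (stimulus - norm)
    return norm

def perceived_distortion(stimulus, norm):
    """Only the deviation of a face from the current norm is signaled."""
    return stimulus - norm

norm = adapt_norm(0.0, [-26.0] * 20)  # repeated exposure to compressed faces
after_undistorted = perceived_distortion(0.0, norm)
after_compressed = perceived_distortion(-26.0, norm)
```

After adaptation, the undistorted face registers as expanded and the compressed face as much closer to normal. This is the qualitative signature of a figural aftereffect.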

It is important to note that a large proportion of facial aftereffects can be attributed to low-level or retinotopic image-based properties (e.g., Xu et al., 2008; Afraz and Cavanagh, 2009; see Hills et al., 2010 for an estimation of the size of this contribution). In the two studies presented here, we avoided an over-reliance on image-based cues in two ways. First, the identities of the adapting (unfamiliar) and test (self, friend) faces were different (Study 1), and aftereffects were observed to transfer across identities. Second, where the identities of the adapting and test faces were the same (Study 2), we elicited aftereffects using adapting faces that showed different facial poses from the test faces. Along with Carbon and Ditye (2011), we interpret the transfer of aftereffects across identities and across different images of the same person as evidence of perceptual adjustment at the representational level, rather than as merely image-based artifacts. Further study is warranted to test the robustness of these aftereffects to image manipulation (size, viewpoint) and retinotopic displacement. Given Afraz and Cavanagh’s (2009) finding that such alterations reduce but do not remove face identity aftereffects, we expect future investigations to confirm our interpretation that the aftereffects reported here arise at the representational level.

Study 2 demonstrates aftereffects that are contingent on facial identity. Concurrent adaptation to compressed Self faces and expanded Friend 1 faces leads to aftereffects that are more pronounced for compressed than for expanded Self faces, but more pronounced for expanded than for compressed Friend 1 faces. The data in fact show a mixture of simple and contingent aftereffects, with an overall downward shift in the distortedness rating curves after adaptation. This is what we would expect if Self and Friend 1 faces are structurally similar, and it parallels Jaquet and Rhodes (2008), who showed dissociable but not distinct coding of male and female faces. The aftereffects do transfer to Friend 2 faces, but here faces at all levels of distortion tested were judged as “less distorted” after adaptation. This is expected because, across the sample of participants tested, which comprised ten groups of three friends, Friend 2 faces will on average be structurally similar to both Self and Friend 1 faces. We conclude that adaptation is operating at the level of facial identity and not at the level of a categorical distinction between self and other.

We conclude that shared neural processes underlie the visual recognition of self- and other-faces. Our results do reveal separate or dissociable coding of individual faces, but not a more general dissociation between self and other. Existing evidence for a separation between self- and other-face recognition remains of great interest to the study of social cognition, and we conclude that these differences must operate at a level beyond the representation of face shape and identity studied here. Indeed, while the self-face may be represented as “special” in the brain, this does not appear to be due to separate neural representations for the categories of self- and other-face. Rather, any special status of the self-face representation may depend on a qualitatively different way of processing and representing the self-face relative to other faces (e.g., Keyes and Brady, 2010). The literature to date reveals a promisingly consistent emphasis on differences in the lateralization of self- and other-face recognition (e.g., Turk et al., 2002; Uddin et al., 2005a; Keyes et al., 2010).

In summary, we conclude that the representation of personally familiar faces can be rapidly updated by visual experience, and that while dissociable coding for individual faces seems likely, there is no evidence for separate neural processes underlying self- and other-face recognition.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank all the students who participated in these studies. The images in Figure 1 are published with permission.

References

- Afraz A., Cavanagh P. (2009). The gender-specific face aftereffect is based in retinotopic not spatiotopic coordinates across several natural image transformations. J. Vis. 9, 1–17 10.1167/9.2.1

- Allen H., Brady N., Tredoux C. (2009). Perception of “best likeness” to highly familiar faces of self and friend. Perception 38, 1821–1830 10.1068/p6424

- Anderson N. D., Wilson H. R. (2005). The nature of synthetic face adaptation. Vision Res. 45, 1815–1828 10.1016/j.visres.2005.01.012

- Balas B., Cox D., Conwell E. (2007). The effect of real-world personal familiarity on the speed of face information processing. PLoS ONE 2, e1223. 10.1371/journal.pone.0001223

- Barlow H. B. (1990). “A theory about the functional role of visual after-effects,” in Vision: Coding and Efficiency, ed. Blakemore C. (Cambridge, UK: Cambridge University Press), 363–375

- Benton C. P., Etchells P. J., Porter G., Clark A. P., Penton-Voak I. S., Nikolov S. G. (2007). Turning the other cheek: the viewpoint dependence of facial expression after-effects. Proc. R. Soc. Lond. B Biol. Sci. 274, 2131–2137 10.1098/rspb.2007.0473

- Bestelmeyer P. E. G., Jones B. C., DeBruine L. M., Little A. C., Perrett D. I., Schneider A., Welling L. L., Conway C. A. (2008). Sex-contingent face aftereffects depend on perceptual category rather than structural encoding. Cognition 107, 353–365 10.1016/j.cognition.2007.07.018

- Brady N., Campbell M., Flaherty M. (2004). My left brain and me: a dissociation in the perception of self and others. Neuropsychologia 42, 1156–1161 10.1016/j.neuropsychologia.2004.02.007

- Brady N., Campbell M., Flaherty M. (2005). Perceptual asymmetries are preserved in memory for highly familiar faces of self and friend. Brain Cogn. 58, 334–342 10.1016/j.bandc.2005.01.001

- Brédart S. (2003). Recognising the usual orientation of one’s own face: the role of asymmetrically located details. Perception 32, 805–811 10.1068/p3354

- Bruce V., Henderson Z., Greenwood K., Hancock P. J. B., Burton A. M., Miller P. (1999). Verification of face identities from images captured on video. J. Exp. Psychol. Appl. 5, 339–360 10.1037/1076-898X.5.4.339

- Bruce V., Young A. (1986). Understanding face recognition. Br. J. Psychol. 77, 305–327 10.1111/j.2044-8295.1986.tb02199.x

- Carbon C.-C. (2008). Famous faces as icons. The illusion of being an expert in the recognition of famous faces. Perception 37, 801–806 10.1068/p5789

- Carbon C.-C., Ditye T. (2011). Sustained effects of adaptation on the perception of human faces. J. Exp. Psychol. Hum. Percept. Perform. 37, 615–625 10.1037/a0019949

- Carbon C.-C., Leder H. (2005). Face adaptation: changing stable representations of familiar faces within minutes? Adv. Exp. Psychol. 1, 1–7

- Carbon C.-C., Strobach T., Langton S. R. H., Harsányi G., Leder H., Kovács G. (2007). Adaptation effects of highly familiar faces: immediate and long lasting. Mem. Cogn. 35, 1966–1976 10.3758/BF03192929

- DeBruine L. M. (2005). Trustworthy but not lust-worthy: context-specific effects of facial resemblance. Proc. R. Soc. Lond. B Biol. Sci. 272, 919–922 10.1098/rspb.2004.3003

- DeBruine L. M., Jones B. C., Little A. C., Perrett D. I. (2008). Social perception of facial resemblance in humans. Arch. Sex. Behav. 37, 64–77 10.1007/s10508-007-9266-0

- Fang F., Ijichi K., He S. (2007). Transfer of the face viewpoint aftereffect from adaptation to different and inverted faces. J. Vis. 7, 1–9 10.1167/7.13.6

- Fox C. J., Oruç I., Barton J. J. S. (2008). It doesn’t matter how you feel. The facial identity aftereffect is invariant to changes in facial expression. J. Vis. 8, 1–13 10.1167/8.16.1

- Ge L., Luo J., Nishimura M., Lee K. (2003). The lasting impression of chairman Mao: hyperfidelity of familiar-face memory. Perception 32, 601–614 10.1068/p5022

- Ghuman A. S., McDaniel J. R., Martin A. (2010). Face adaptation without a face. Curr. Biol. 20, 32–36 10.1016/j.cub.2009.10.077

- Gillihan S. J., Farah M. J. (2005). Is self special? A critical review of evidence from experimental psychology and cognitive neuroscience. Psychol. Bull. 131, 76–97 10.1037/0033-2909.131.1.76

- Hancock P. J., Bruce V., Burton A. M. (2000). Recognition of unfamiliar faces. Trends Cogn. Sci. 4, 330–337 10.1016/S1364-6613(00)01519-9

- Herzmann G., Schweinberger S. R., Sommer W., Jentzsch I. (2004). What’s special about personally familiar faces? A multimodal approach. Psychophysiology 41, 688–701 10.1111/j.1469-8986.2004.00196.x

- Hills P. J., Elward R. L., Lewis M. B. (2008). Identity adaptation is mediated and moderated by visualisation ability. Perception 37, 1241–1257 10.1068/p5834

- Hills P. J., Elward R. L., Lewis M. B. (2010). Cross-modal face identity aftereffects and their relation to priming. J. Exp. Psychol. Hum. Percept. Perform. 36, 876–891

- Hole G. (2011). Identity-specific face adaptation effects: evidence for abstractive face representations. Cognition 119, 216–228 10.1016/j.cognition.2011.01.011

- Jaquet E., Rhodes G. (2008). Face aftereffects indicate dissociable, but not distinct, coding of male and female faces. J. Exp. Psychol. Hum. Percept. Perform. 34, 101–112 10.1037/0096-1523.34.1.101

- Jaquet E., Rhodes G., Hayward W. G. (2007). Opposite aftereffects for Chinese and Caucasian faces are selective for social category information and not just physical face differences. Q. J. Exp. Psychol. 60, 1457–1467 10.1080/17470210701467870

- Jeffery L., Rhodes G., Busey T. (2007). Broadly-tuned, view-specific coding of face shape: opposing figural aftereffects can be induced in different views. Vision Res. 47, 3070–3077 10.1016/j.visres.2007.08.018

- Jiang F., Blanz V., O’Toole A. J. (2006). Probing the visual representation of faces with adaptation. Psychol. Sci. 17, 493–500 10.1111/j.1467-9280.2006.01734.x

- Jiang F., Blanz V., O’Toole A. J. (2007). The role of familiarity in three-dimensional view-transferability of face identity adaptation. Vision Res. 47, 525–531 10.1016/j.visres.2006.10.012

- Keenan J. P., Freund S., Hamilton R. H., Ganis G., Pascual-Leone A. (2000). Hand response differences in a self-face identification task. Neuropsychologia 38, 1047–1053 10.1016/S0028-3932(99)00145-1

- Keenan J. P., McCutcheon B., Freund S., Gallup G. G., Jr., Sanders G., Pascual-Leone A. (1999). Left hand advantage in a self-face recognition task. Neuropsychologia 37, 1421–1425 10.1016/S0028-3932(99)00025-1

- Keyes H., Brady N. (2010). Self-face recognition is characterised by faster, more accurate performance, which persists when faces are inverted. Q. J. Exp. Psychol. 63, 830–837

- Keyes H., Brady N., Reilly R. B., Foxe J. J. (2010). My face or yours? Event-related potential correlates of self-face processing. Brain Cogn. 72, 244–254 10.1016/j.bandc.2009.09.006

- Kircher T. T. J., Senior C., Phillips M. L., Rabe-Hesketh S., Benson P. J., Bullmore E. T., Brammer M., Simmons A., Bartels M., David A. S. (2001). Recognizing one’s own face. Cognition 78, B1–B15 10.1016/S0010-0277(00)00104-9

- Lai M., Oruç I., Barton J. J. S. (2012). Facial age after-effects show partial identity invariance and transfer from hands to faces. Cortex 48, 477–486 10.1016/j.cortex.2010.11.014

- Laurence S., Hole G. (2011). The effect of familiarity on face perception. Perception 40, 450–463 10.1068/p6774

- Leopold D. A., O’Toole A. J., Vetter T., Blanz V. (2001). Prototype-referenced shape encoding revealed by high-level after-effects. Nat. Neurosci. 4, 89–94 10.1038/82947

- Little A. C., DeBruine L. M., Jones B. C. (2005). Sex-contingent face after-effects suggest distinct neural populations code male and female faces. Proc. R. Soc. Lond. B Biol. Sci. 272, 2283–2287 10.1098/rspb.2005.3220

- Little A. C., DeBruine L. M., Jones B. C., Waitt C. (2008). Category contingent aftereffects for faces of different races, ages and species. Cognition 106, 1537–1547 10.1016/j.cognition.2007.06.008

- Liu C. H., Seetzen H., Burton A. M., Chaudhuri A. (2003). Face recognition is robust with incongruent image resolution: relationship to security video images. J. Exp. Psychol. Appl. 9, 33–41 10.1037/1076-898X.9.1.33

- McCollough C. (1965). Adaptation of edge-detectors in the human visual system. Science 149, 1115–1116 10.1126/science.149.3688.1115

- Mita T. H., Dermer M., Knight J. (1977). Reversed facial images and the mere-exposure hypothesis. J. Pers. Soc. Psychol. 35, 597–601 10.1037/0022-3514.35.8.597

- Platek S. M., Krill A. L., Wilson B. (2009). Implicit trustworthiness ratings of self-resembling faces activate brain centers involved in reward. Neuropsychologia 47, 289–293 10.1016/j.neuropsychologia.2008.12.027

- Platek S. M., Loughead J. W., Gur R. C., Busch S., Ruparel K., Phend N., Panyavin I. S., Langleben D. D. (2006). Neural substrates for functionally discriminating self-face from personally familiar faces. Hum. Brain Mapp. 27, 91–98 10.1002/hbm.20168

- R Development Core Team (2010). R: A Language and Environment for Statistical Computing. Vienna: R Foundation for Statistical Computing; ISBN 3-900051-07-0, URL: http://www.R-project.org

- Rhodes G., Jeffery L., Clifford C. W. G., Leopold D. A. (2007). The timecourse of higher-level face aftereffects. Vision Res. 47, 2291–2296 10.1016/j.visres.2006.12.010

- Rhodes G., Jeffery L., Watson T. L., Clifford C. W. G., Nakayama K. (2003). Fitting the mind to the world: face adaptation and attractiveness aftereffects. Psychol. Sci. 14, 558–566 10.1046/j.0956-7976.2003.psci_1465.x

- Rhodes G., Jeffery L., Watson T. L., Jaquet E., Winkler C., Clifford C. W. G. (2004). Orientation-contingent face after-effects and implications for face-coding mechanisms. Curr. Biol. 14, 2119–2123 10.1016/j.cub.2004.11.053

- Rhodes G., Watson T. L., Jeffery L., Clifford C. W. G. (2010). Perceptual adaptation helps us identify information. Vision Res. 50, 963–968 10.1016/j.visres.2010.03.003

- Rooney B. D., Brady N. P., Benson C. (2007). Are the neural mechanisms that underlie self-face and other-face recognition shared or separate? An adaptation study. Perception 36 (ECVP Abstract Supplement).

- Rossion B., Schiltz C., Robaye L., Pirenne D., Crommelinck M. (2001). How does the brain discriminate familiar and unfamiliar faces? A PET study of face categorical perception. J. Cogn. Neurosci. 13, 1019–1034 10.1162/089892901753165917

- Ryu J.-J., Borrmann K., Chaudhuri A. (2008). Imagine Jane and identity John: face identity aftereffects induced by imagined faces. PLoS ONE 3, e2195. 10.1371/journal.pone.0002195

- Sperry R. W., Zaidel E., Zaidel D. (1979). Self recognition and social awareness in the deconnected minor hemisphere. Neuropsychologia 17, 153–166 10.1016/0028-3932(79)90006-X

- Sugiura M., Kawashima R., Nakamura K., Okada K., Kato T., Nakamura A., Hatano K., Itoh K., Kojima S., Fukuda H. (2000). Passive and active recognition of one’s own face. Neuroimage 11, 36–48 10.1016/S1053-8119(00)90970-4

- Tong F., Nakayama K. (1999). Robust representations for face: evidence from visual search. J. Exp. Psychol. Hum. Percept. Perform. 25, 1016–1035 10.1037/0096-1523.25.4.1016

- Turk D. J., Heatherton T. F., Kelley W. M., Funnell M. G., Gazzaniga M. S., Macrae C. N. (2002). Mike or me? Self-recognition in a split-brain patient. Nat. Neurosci. 5, 841–842 10.1038/nn907

- Uddin L. Q., Kaplan J. T., Molnar-Szakacs I., Zaidel E., Iacoboni M. (2005a). Self-face recognition activates a frontoparietal “mirror” network in the right hemisphere: an event-related fMRI study. Neuroimage 25, 926–935 10.1016/j.neuroimage.2004.12.018

- Uddin L. Q., Rayman J., Zaidel E. (2005b). Split-brain reveals separate but equal self-recognition in the two cerebral hemispheres. Conscious. Cogn. 14, 633–640 10.1016/j.concog.2005.01.008

- Webster M. A., Kaping D., Mizokami Y., Duhamel P. (2004). Adaptation to natural facial categories. Nature 428, 557–561 10.1038/nature02468

- Webster M. A., MacLeod D. I. A. (2011). Visual adaptation and face perception. Philos. Trans. R. Soc. Lond. B Biol. Sci. 366, 1702–1725 10.1098/rstb.2010.0360

- Webster M. A., MacLin O. H. (1999). Figural aftereffects in the perception of faces. Psychon. Bull. Rev. 6, 647–653 10.3758/BF03212974

- Webster M. A., Werner J. S., Field D. J. (2005). “Adaptation and the phenomenology of perception,” in Fitting the Mind to the World: Adaptation and Aftereffects in High Level Vision, eds Clifford C. W. G., Rhodes G. (Oxford: Oxford University Press), 241–277

- Xu H., Dayan P., Lipkin R. M., Qian N. (2008). Adaptation across the cortical hierarchy: low-level curve adaptation affects high-level facial expression judgements. J. Neurosci. 28, 3374–3383 10.1523/JNEUROSCI.4126-07.2008

- Yang H., Shen J., Chen J., Fang F. (2011). Face adaptation improves gender discrimination. Vision Res. 51, 105–110 10.1016/j.visres.2011.08.022

- Zhao L., Chubb C. (2001). The size-tuning of the face-distortion after-effect. Vision Res. 41, 2979–2994 10.1016/S0042-6989(01)00202-4