Abstract

Objective

There is little information about which intimate partner violence (IPV) policies and services assist in the identification of IPV in the emergency department (ED). The objective of this study was to examine the association between a variety of resources and documented IPV diagnoses.

Methods

Using billing data from 21 Oregon EDs from 2001 to 2005, we identified patients assigned a discharge diagnosis of IPV. We then surveyed ED directors and nurse managers about the IPV-related policies and services offered by participating hospitals. We combined billing data, survey results, and hospital-level variables. Multivariable logistic regression assessed the likelihood of receiving a diagnosis of IPV depending on the policies and services available.

Results

In 754,597 adult female ED visits, IPV was diagnosed 1,929 times. Mandatory IPV screening and victim advocates were the most commonly available IPV resources. The diagnosis of IPV was independently associated with the use of a standardized intervention checklist (OR 1.71, 95% CI 1.04–2.82). Public displays regarding IPV were negatively associated with IPV diagnosis (OR 0.56, 95% CI 0.35–0.88).

Conclusions

IPV remains a rarely documented diagnosis. Most common hospital-level resources were not associated with IPV diagnosis; however, a standardized intervention checklist may play a role in clinicians’ likelihood of diagnosing IPV.

Keywords: domestic violence, spouse abuse, battering, hospital services, emergency medicine

Introduction

Intimate partner violence (IPV) has been described as a health care problem of epidemic proportions, occurring in an estimated 1.9 million U.S. women each year and in 25% of U.S. women over their lifetime[1–4]. The consequences are wide-ranging and profound. IPV adversely affects eight of ten of the leading health indicators identified by the Department of Health and Human Services and is responsible for an estimated $4.1 billion in direct medical and mental health care costs[5].

The Joint Commission, which accredits and certifies U.S. healthcare organizations, has defined basic standards for policies and procedures to increase the identification of IPV within emergency departments (EDs) [6]. Specific means of meeting the standards vary widely. A comprehensive hospital IPV program may include a physical environment that encourages awareness and reporting of abuse, ongoing training programs for clinical staff, a written hospital policy for the assessment and management of IPV, and advocacy services to assist victims with legal counsel, counselling, and safe shelter[7]. However, most hospitals do not maintain a full menu of services; fewer than half of EDs have algorithms for the management of abused women[8]. With little evidence about which practices translate into increased identification and treatment of abuse[9], hospitals with limited resources have little on which to base decisions about selective use of policies and services.

ED visits represent an important opportunity to identify IPV. The ED sees a disproportionately high prevalence of IPV [2, 10–13] and is a frequent point of contact for victims of abuse in the period before IPV escalates to police intervention or homicide[14, 15]. The objective of this study was to examine the availability of a variety of hospital-based IPV policies and services among Oregon hospitals and to assess their association with the ED diagnosis of IPV. Given the paucity of existing information regarding the effectiveness of specific IPV-related resources, this study was designed to explore potential associations between IPV diagnosis and hospital services and policies rather than to test a priori hypotheses.

Methods

Study Design

This was an observational study with two main components: first, a secondary analysis of previously collected administrative billing data from 21 Oregon EDs; and second, a standardized telephone survey of ED directors and nurse managers at the same hospitals. Survey data were merged with billing data, hospital-level information, and zip-code-based demographic information. The study was approved by our institutional review board.

Study Setting and Population

The data were electronic claims records from a purposive sample of hospitals representing a range of practice settings[16]. A number of factors were considered in selecting participating hospitals, including patient volume, urban versus rural location, and the region of Oregon where hospitals were located. The final dataset contained information on 2,228,169 visits to 21 Oregon EDs between August 1, 2001 and February 28, 2005, a total of 42 months, representing about 52% of all visits to Oregon’s 57 EDs.

We targeted ED directors and nurse managers for participation in the telephone survey, reasoning that they would be most familiar with current ED protocols and services. Our final response rate was 100% (21 out of 21 EDs in the billing dataset).

We limited this study to women because IPV-related ICD-9 codes in male patients, and ED IPV policies addressing male victims, were too rare for meaningful analysis. Because administrative data cannot reliably distinguish IPV from elder abuse, we limited the analysis to patients younger than 65 years.
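The inclusion rule described above can be expressed as a simple record filter. This is a minimal sketch, assuming a hypothetical visit record with `sex` and `age` fields and a lower bound of 18 years (the adult age bands in Table 1 start at 18); the study's actual field names and coding are not reported.

```python
from dataclasses import dataclass


@dataclass
class Visit:
    # Hypothetical billing-record fields; the real dataset's schema is not reported.
    sex: str  # "F" or "M"
    age: int  # age in years at the ED visit


def is_eligible(visit: Visit) -> bool:
    """Adult women younger than 65 (ages 18-64 inclusive, an assumed lower bound)."""
    return visit.sex == "F" and 18 <= visit.age < 65


visits = [Visit("F", 30), Visit("M", 30), Visit("F", 70), Visit("F", 17)]
eligible = [v for v in visits if is_eligible(v)]
print(len(eligible))  # 1
```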

Measurements

The billing dataset included standard administrative fields such as patient demographics, hospital disposition, and discharge diagnoses. In addition, unemployment rates for all Oregon zip codes for 2001–2004 were obtained from Nielsen Claritas Services. Data for 2005 were unavailable and were therefore imputed by linear extrapolation. Hospital-level variables, including bed size and urban/rural designation, were obtained from the Office for Oregon Health Policy and Research.
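The text does not specify how the linear extrapolation was carried out. One plausible approach, sketched here as an assumption, fits an ordinary least-squares line to each zip code's 2001–2004 rates and evaluates it at 2005; the function name and the rates are hypothetical.

```python
def extrapolate_linear(years: list[int], values: list[float], target_year: int) -> float:
    """Fit an ordinary least-squares line to (year, value) pairs and evaluate it at target_year."""
    n = len(years)
    if n < 2 or len(values) != n:
        raise ValueError("need at least two (year, value) pairs")
    mean_x = sum(years) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, values))
    slope = sxy / sxx
    # Centering on mean_x avoids a large, numerically awkward intercept.
    return mean_y + slope * (target_year - mean_x)


# Hypothetical unemployment rates (%) for one zip code, 2001-2004.
rate_2005 = extrapolate_linear([2001, 2002, 2003, 2004], [5.0, 5.5, 6.0, 6.5], 2005)
print(round(rate_2005, 2))  # 7.0
```

Fitting all four years, rather than projecting from the last two, makes the extrapolated value less sensitive to a single noisy year; either choice is defensible.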

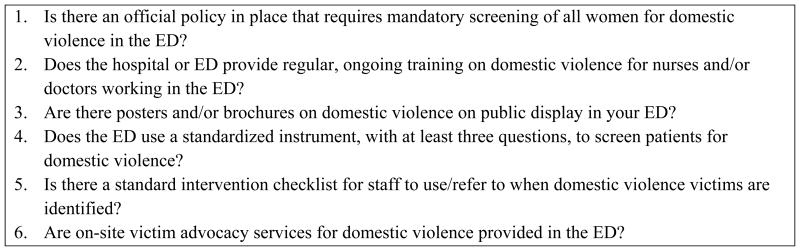

The survey tool was adapted from the “Delphi Instrument for Hospital-Based Domestic Violence Programs” developed by the Agency for Healthcare Research and Quality using a panel of IPV experts; in field testing, it was found to have high inter-rater reliability[17]. We limited our survey to 6 questions addressing resources most relevant to the ED setting (Figure 1). Prior to implementation, the survey was pilot tested among ED staff unaffiliated with the study.

Figure 1.

Survey of ED Administrators*

* The term “domestic violence” was used in the survey on the assumption that it would be the term most familiar to clinicians.

The telephone survey was administered to ED directors and nurse managers over a one-month period from September to October, 2008. One person was interviewed at each site, based on availability. To characterize the resources available during the period represented by the ED billing data (2001–2005), we asked the respondent if a resource was present and if so, whether it had been implemented before, during or after the study period. If the policies or services were known to have been implemented after 2005, they were considered absent.
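The rule for dating each resource can be written as a small coding function. In this minimal sketch, resources reported as implemented after 2005 are coded as absent, as stated above; treating "before", "during", and unknown timing as present is an assumption, and the names are hypothetical.

```python
from enum import Enum
from typing import Optional


class Implemented(Enum):
    BEFORE = "before"  # in place before the 2001-2005 study period
    DURING = "during"  # introduced during the study period
    AFTER = "after"    # introduced after 2005


def resource_present(reported_present: bool, timing: Optional[Implemented]) -> bool:
    """Code a surveyed resource as available (True) or absent (False) for 2001-2005.

    Assumption: 'before', 'during', and unknown timing (None) all count as present;
    only resources known to start after 2005 are recoded as absent.
    """
    if not reported_present:
        return False
    return timing is not Implemented.AFTER


print(resource_present(True, Implemented.DURING))  # True
print(resource_present(True, Implemented.AFTER))   # False
```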

Our outcome was the ED diagnosis of IPV as identified and coded by clinicians. Various ICD-9 code groupings have been used to capture IPV diagnoses[18–20]. We defined abuse by ICD-9 codes of 995.80–995.83 (adult maltreatment; physical, emotional/psychological, and sexual abuse), 995.85 (multiple forms of abuse) and/or the external cause of injury code E967.3 (by spouse or partner, ex-spouse or ex-partner).
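A minimal sketch of the outcome definition as a code-set lookup, assuming diagnosis codes arrive as strings that may or may not include the decimal point (billing systems often store ICD-9 codes without it). The function names and input format are hypothetical; 995.84 is not in the list above and is therefore excluded.

```python
from typing import Iterable

# Outcome code set from the text, stored without decimal points:
# 995.80-995.83, 995.85, and the external-cause code E967.3.
IPV_CODES = frozenset({"99580", "99581", "99582", "99583", "99585", "E9673"})


def normalize_icd9(code: str) -> str:
    """Trim, uppercase, and drop the decimal point so '995.81' and '99581' match."""
    return code.strip().upper().replace(".", "")


def has_ipv_diagnosis(codes: Iterable[str]) -> bool:
    """True if any diagnosis or E-code recorded for the visit is in the IPV code set."""
    return any(normalize_icd9(c) in IPV_CODES for c in codes)


print(has_ipv_diagnosis(["959.01", "E967.3"]))  # True
print(has_ipv_diagnosis(["995.84"]))            # False (not in the code list)
```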

Data Analysis

To minimize bias associated with complete case analysis and to allow inclusion of all eligible observations in the sample, we assigned values to missing data points in the dataset using multiple imputation [21, 22]. Multiple imputation, which estimates missing values by examining existing patterns of other covariates, has been used in many areas of clinical research [23, 24].
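Multiple imputation produces several completed datasets; the regression is fitted in each, and the results are combined with Rubin's rules [21]. The sketch below shows only that combining step for a single coefficient. The paper does not report the number of imputations or the imputation model, so the function name and inputs are hypothetical.

```python
import math
from statistics import mean, variance


def pool_rubin(estimates: list[float], variances: list[float]) -> tuple[float, float]:
    """Combine one coefficient across m imputed datasets using Rubin's rules.

    Returns the pooled estimate and its total standard error.
    """
    m = len(estimates)
    if m < 2 or len(variances) != m:
        raise ValueError("need at least two imputations with one variance each")
    q_bar = mean(estimates)        # pooled point estimate
    within = mean(variances)       # average within-imputation variance
    between = variance(estimates)  # between-imputation variance (divisor m - 1)
    total = within + (1 + 1 / m) * between
    return q_bar, math.sqrt(total)


# Hypothetical log-odds estimates and squared standard errors from five imputations.
est, se = pool_rubin([0.50, 0.54, 0.52, 0.56, 0.48], [0.04] * 5)
print(round(est, 3), round(se, 3))  # 0.52 0.203
```

The between-imputation term inflates the standard error to reflect uncertainty about the missing values, which a single imputed dataset would ignore.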

We used descriptive statistics to characterize the sample and multivariable logistic regression to test the association between hospital resources and diagnosis of IPV. We selected variables with previously described associations with IPV or that were logical potential predictors of IPV, including age, injury, and insurance status (as a measure of socioeconomic status). Hospital-level characteristics that might influence the availability of resources, including bed size and rural/urban setting, were also incorporated into the model. To account for the non-independence of observations within hospitals, standard errors were adjusted for clustering at the hospital level. We assessed multicollinearity with eigenvalues and goodness of fit with the Hosmer-Lemeshow statistic.
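The Hosmer-Lemeshow statistic compares observed and expected event counts within groups of patients ranked by predicted probability. The sketch below, assuming the conventional grouping into roughly equal-sized groups and predicted probabilities from an already-fitted model, computes the statistic itself. Referring it to a chi-square distribution with G − 2 degrees of freedom, handling of tied probabilities, and the hospital-level clustering of standard errors are not shown, and Stata's implementation may form groups differently.

```python
from typing import Sequence


def hosmer_lemeshow(probs: Sequence[float], outcomes: Sequence[int], groups: int = 10) -> float:
    """Sum over groups of (O - E)^2 / (n * pbar * (1 - pbar)).

    Assumes every group's mean predicted probability lies strictly between 0 and 1.
    """
    n = len(probs)
    if n != len(outcomes) or n < groups:
        raise ValueError("need matching inputs and at least one observation per group")
    # Rank observations by predicted probability.
    order = sorted(range(n), key=lambda i: probs[i])
    stat = 0.0
    for g in range(groups):
        # Split the ranked list into groups of (nearly) equal size.
        idx = order[g * n // groups:(g + 1) * n // groups]
        n_g = len(idx)
        observed = sum(outcomes[i] for i in idx)
        expected = sum(probs[i] for i in idx)
        p_bar = expected / n_g
        stat += (observed - expected) ** 2 / (n_g * p_bar * (1 - p_bar))
    return stat


hl = hosmer_lemeshow([0.2, 0.2, 0.8, 0.8], [0, 1, 1, 1], groups=2)
print(round(hl, 3))  # 1.625
```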

Statistical significance was defined as a probability of a type I error of less than 5% (2-tailed). Results are expressed as odds ratios (ORs) with 95% confidence intervals (CIs). Analyses were conducted with Stata, version 10.1 (StataCorp LP, College Station, TX).
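An odds ratio is the exponentiated logistic coefficient, and a Wald 95% CI is exp(β ± 1.96·SE). The sketch below goes in both directions and applies the reverse step to the reported checklist estimate (OR 1.71, 95% CI 1.04–2.82). Recovering β and SE from a published interval assumes a symmetric Wald interval on the log-odds scale, which the paper does not state, so this is a rough consistency check rather than a reproduction of the clustered, multiply imputed model.

```python
import math

Z_975 = 1.959963984540054  # 97.5th percentile of the standard normal distribution


def odds_ratio_ci(beta: float, se: float) -> tuple[float, float, float]:
    """Wald odds ratio and 95% CI from a log-odds coefficient and its standard error."""
    return math.exp(beta), math.exp(beta - Z_975 * se), math.exp(beta + Z_975 * se)


def coef_from_ci(lower: float, upper: float) -> tuple[float, float]:
    """Recover the log-OR and SE from a reported 95% CI (assumes a symmetric Wald interval)."""
    log_l, log_u = math.log(lower), math.log(upper)
    return (log_l + log_u) / 2, (log_u - log_l) / (2 * Z_975)


def two_sided_p(beta: float, se: float) -> float:
    """Two-tailed p-value for the Wald z statistic beta / se."""
    return math.erfc(abs(beta / se) / math.sqrt(2))


beta, se = coef_from_ci(1.04, 2.82)
or_, lo, hi = odds_ratio_ci(beta, se)
print(f"OR {or_:.2f} (95% CI {lo:.2f}-{hi:.2f}), p = {two_sided_p(beta, se):.2f}")
# OR 1.71 (95% CI 1.04-2.82), p = 0.03
```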

Results

There were 754,597 adult female ED visits to the 21 hospitals in our database over the 42-month study period. IPV was a recorded diagnosis 1,929 times (0.26% of visits). Descriptive results are displayed in Table 1. Results of the ED survey are shown in Table 2. Most hospitals (81%) had a policy mandating screening in the ED and some level of on-site victim advocacy (76%), whether part-time or full-time. Regular clinician education about IPV (48%) and use of public display materials related to IPV (48%) were also common among the hospitals in our study.
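The proportions quoted above follow directly from the counts. A small worked check, using only numbers reported in this section and in Tables 1 and 2:

```python
ipv_visits = 1_929
total_visits = 754_597
hospitals = 21

# Crude proportion of adult female visits with an IPV code.
print(f"IPV coded in {100 * ipv_visits / total_visits:.2f}% of visits "
      f"({1000 * ipv_visits / total_visits:.1f} per 1,000)")  # 0.26% (2.6 per 1,000)

# Visits without an IPV code (the IPV- column of Table 1).
print(f"Visits without an IPV code: {total_visits - ipv_visits:,}")  # 752,668

# Hospital-level percentages from Table 2 (counts out of 21 EDs).
for resource, n in [("Mandatory screening policy", 17), ("Any on-site victim advocacy", 16)]:
    print(f"{resource}: {100 * n / hospitals:.0f}%")  # 81%, then 76%
```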

Table 1.

Characteristics of ED patients with and without IPV diagnoses

| Patient characteristic | IPV+ N | IPV+ % | IPV− N | IPV− % | P value |
|---|---|---|---|---|---|
| Total | 1,929 | 100 | 752,668 | 100 | |
| Age | | | | | <0.001 |
| 18–33 | 985 | 51 | 314,813 | 42 | |
| 34–49 | 830 | 43 | 290,073 | 39 | |
| 50–64 | 114 | 6 | 147,782 | 20 | |
| Race/Ethnicity | | | | | <0.001 |
| Black | 177 | 9 | 39,526 | 5 | |
| Hispanic | 85 | 5 | 39,456 | 5 | |
| White | 1,573 | 82 | 644,773 | 86 | |
| Other | 94 | 4 | 28,913 | 4 | |
| Insurance | | | | | |
| Commercial | 400 | 21 | 279,992 | 37 | <0.001 |
| Medicare/Other | 156 | 8 | 118,922 | 16 | |
| Medicaid | 836 | 43 | 222,790 | 30 | |
| Uninsured | 537 | 28 | 130,964 | 17 | |
| Percent unemployment | | | | | <0.001 |
| <5.96% | 857 | 44 | 374,686 | 50 | |
| ≥5.96% | 1,072 | 56 | 377,982 | 50 | |
| Day of presentation | | | | | <0.001 |
| Weekday | 1,289 | 67 | 525,187 | 70 | |
| Weekend | 640 | 33 | 227,481 | 30 | |
| Shift of presentation | | | | | <0.01 |
| Day | 522 | 30 | 278,778 | 37 | |
| Evening | 741 | 43 | 352,711 | 47 | |
| Night | 468 | 27 | 121,179 | 16 | |
| ED disposition | | | | | <0.001 |
| Admitted | 79 | 4 | 77,264 | 10 | |
| Discharged | 1,850 | 96 | 675,404 | 90 | |
| Selected discharge diagnoses | | | | | |
| Injury | 1,683 | 87 | 171,477 | 23 | <0.001 |
| Mental health | 147 | 8 | 67,152 | 9 | 0.045 |
| Alcohol | 109 | 6 | 15,584 | 2 | <0.001 |
| Drugs | 22 | 1 | 11,684 | 2 | 0.14 |
| Pregnancy | 44 | 2 | 30,616 | 4 | <0.001 |

Table 2.

Results of ED Administrator Survey of IPV Resources (N=21)

| Resource | N (%) with resource |
|---|---|
| Mandatory screening policy | 17 (81) |
| Regular clinician training | 10 (48) |
| Public displays | 10 (48) |
| Standard intervention checklist | 3 (14) |
| Standardized screening instrument | 6 (29) |
| On-site victim advocacy services | |
| Part-time | 10 (48) |
| Full-time | 6 (29) |
| Any | 16 (76) |

The results of the multivariable analysis are shown in Table 3. Women aged 50–64 years had lower odds of receiving an IPV diagnosis than those aged 18–33, and Black race and “other” race/ethnicity were independently associated with greater odds of receiving an IPV diagnosis; Hispanic ethnicity was not. Compared with patients with commercial insurance, Medicaid enrollees and the uninsured were more likely to receive a diagnosis of IPV. Of the hospital policies and services assessed, only one was independently associated with increased odds of receiving an IPV diagnosis: a standardized intervention checklist for the management of IPV (OR 1.71, 95% CI 1.04–2.82). The use of public displays regarding IPV was associated with decreased odds of receiving an IPV diagnosis (OR 0.56, 95% CI 0.35–0.88). We did not find an association between IPV diagnosis and the presence of an IPV policy (OR 1.48, 95% CI 0.70–3.14), clinician training (OR 1.12, 95% CI 0.70–1.80), ED advocacy (OR 1.00, 95% CI 0.37–2.69), or standardized screening questions (OR 0.82, 95% CI 0.42–1.62).

Table 3.

Adjusted odds of IPV diagnosis using multivariable logistic regression*

| Patient & hospital factors | OR (95% CI) |
|---|---|
| Age | |
| 18–33 | Reference |
| 34–49 | 0.95 (0.84–1.07) |
| 50–64 | 0.34 (0.29–0.40) |
| Race/Ethnicity | |
| Black | 1.63 (1.20–2.21) |
| Hispanic | 1.01 (0.72–1.42) |
| White | Reference |
| Other | 1.43 (1.03–1.99) |
| Insurance status | |
| Commercial | Reference |
| Medicare/Other | 0.22 (0.31–0.57) |
| Medicaid | 2.48 (2.16–2.85) |
| Uninsured | 2.41 (2.16–2.70) |
| Practice setting | |
| Rural | 0.57 (0.39–2.44) |
| Urban | Reference |
| Mandatory screening policy | 1.48 (0.70–3.14) |
| Regular clinician training | 1.12 (0.70–1.80) |
| Public displays | 0.56 (0.35–0.88) |
| Standard intervention checklist | 1.71 (1.04–2.82) |
| Screening instrument | 0.82 (0.42–1.62) |
| On-site victim advocacy services | |
| Part-time | 1.00 (0.38–2.66) |
| Full-time | 1.01 (0.34–3.01) |
| Any | 1.00 (0.37–2.69) |

* Other model variables not shown: presenting shift, presenting day, disposition, % unemployed, and other selected diagnoses (injury, alcohol and drug use, mental health, pregnancy).

Discussion

In this study of Oregon EDs, we found an extremely low rate of identification of IPV and no association between identification rates and many recommended hospital IPV policies and services. We did find a positive association between use of a standardized intervention checklist and ED diagnosis of IPV. It may not be intuitive that an intervention checklist would influence clinicians’ ability to diagnose IPV; after all, one must first identify IPV in order to use a checklist. However, fear of “opening Pandora’s Box” has long been recognized as an important barrier to providers assessing for IPV[25]. Clinicians have been shown to respond with uncertainty to disclosures of abuse and to have difficulty following through with formal diagnoses or referrals to services[26]. Our findings may reflect that clinicians are empowered to identify IPV when they know they can respond with definitive action.

Standardized resources such as an intervention checklist [27, 28] are attractive for several reasons. They can be administered reproducibly by any practitioner, providing consistency of care in a chaotic, high-acuity setting. They are low-technology and inexpensive and can therefore be implemented in EDs of any size. Like the Pronovost checklist [29], which reminds physicians of the minimum requirements for sterile central line placement, an intervention checklist has the potential to be a powerful tool reminding ED clinicians to take basic, critical steps for victims of violence: assess immediate safety, ask about children at home at risk for abuse, offer IPV counselling services or safe shelter, and remind the patient to call 911 should they feel unsafe. These actions may seem like common sense, but clinicians rarely perform them[15].

Education of clinicians was not associated with IPV diagnosis in our study, consistent with prior research demonstrating only short-term gains in IPV identification or referrals after educational interventions, even with rigorous training[30, 31]. On-site victim advocacy services also were not associated with IPV diagnosis in the ED. It may be that advocates take ownership of cases of abuse and minimize the physician’s role, decreasing physician documentation of abuse. Alternatively, the lack of association may be related to the fact that, in many EDs, the advocacy role is assumed by staff with many other responsibilities who may not be consistently available to address IPV.

We did not find associations between a formal screening policy or the use of standardized screening questions and IPV diagnosis. Despite the lack of evidence supporting effectiveness of IPV screening[32, 33], there is compelling logic for screening[34, 35] and routine assessment for victimization is supported by most major medical societies[36]. However, requiring screening questions does not guarantee that clinicians consistently or effectively implement them, nor that they respond appropriately to disclosures of abuse[26]. Our findings may reflect that mandating screening without providing effective and accessible means of intervening will have a limited impact on victims.

The use of posters or brochures has been described as part of an integrated approach to improving clinical identification of IPV[31, 37]. We found a negative association between the use of public displays and the diagnosis of IPV, similar to the findings of a previous study[38] in which fewer women stated they would disclose abuse after IPV posters and hotline cards were put on display. It may be that patients who obtain IPV resources from such materials feel they no longer need to discuss violence with a clinician, or that public displays inadvertently project an unwelcoming message to victims. Another possibility is that public displays, which require minimal expense or personnel involvement, represent a passive approach to addressing violence or serve as a marker of a lack of other resources.

Limitations

Our primary outcome, the presence of a discharge diagnosis of IPV, is only as accurate as the documentation and coding practices of clinicians. The comparison group almost certainly includes victims who were not identified or whose abuse was not documented because of diagnostic uncertainty. Others may have been definitively diagnosed and treated for abuse but not adequately documented. Nevertheless, IPV is known to be under-diagnosed in the ED, and our findings are consistent with prior studies showing extremely low rates of detection[20].

Having a policy or service in place does not mean it was used with all patients. Larkin et al found that a “mandatory” screening policy was used in only 30% of patients [39]. If providers sometimes failed to implement “standard” policies or services, any true association would likely be attenuated, which may have contributed to the lack of association we found between many of the resources and IPV detection.
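A small worked example illustrates why incomplete implementation would attenuate an association. The numbers are hypothetical: a baseline diagnosis risk of 0.26% (the crude rate in this sample), a resource that doubles the odds of diagnosis when it is actually used, and use in 30% of patients, borrowed from the Larkin et al figure. The sketch ignores confounding and clustering and is meant only to show the direction and rough size of the dilution.

```python
def odds(p: float) -> float:
    return p / (1 - p)


def prob_from_odds(o: float) -> float:
    return o / (1 + o)


def observed_or(baseline_risk: float, true_or: float, coverage: float) -> float:
    """Hospital-level odds ratio when a resource with patient-level effect `true_or`
    is actually applied to only a fraction `coverage` of patients (hypothetical model)."""
    risk_if_applied = prob_from_odds(odds(baseline_risk) * true_or)
    # Hospitals "with" the resource are a mix of covered and uncovered patients.
    mixed_risk = coverage * risk_if_applied + (1 - coverage) * baseline_risk
    return odds(mixed_risk) / odds(baseline_risk)


print(round(observed_or(baseline_risk=0.0026, true_or=2.0, coverage=0.30), 2))  # 1.3
```

Under these assumptions a true doubling of the odds would appear as an odds ratio of roughly 1.3, a much weaker association that a modest sample of 21 hospitals could easily fail to detect.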

Our survey instrument was not a validated assessment tool. Although we reference the Delphi instrument, the full 78-item survey was impractical for use and most of the content was not directly relevant to the ED setting, which was the focus of this study.

Our findings may also be limited by recall bias. ED directors or nurse managers may have been more likely to report the presence of resources when uncertain. Further, since we were attempting to correlate services with data obtained between 2001 and 2005, we asked survey participants to think back several years, creating more potential for error. However, our questions did not demand knowledge of specifics; we asked about broad date ranges rather than specific dates.

Because our study was limited to the state of Oregon, the findings may not be fully generalizable. However, the hospitals sampled represented a range of practice settings, with varying bed sizes, trauma capabilities, and urban and rural locations. Furthermore, the characteristics of the identified IPV victims in our sample are consistent with prior studies of IPV victims in the ED, and estimates of IPV incidence and prevalence in the state of Oregon are comparable to national statistics[40]. General characteristics of the patients diagnosed with IPV, including disparities in race and clinical profile, were also similar to those noted in previous ED studies[10, 41–43].

Conclusions

IPV is still extremely under-recognized in ED settings. A standardized intervention checklist is one resource that may aid in the identification of victims of IPV. Targeting effective hospital-level IPV resources for use in the ED setting may help to increase detection of IPV, a first step in addressing the healthcare needs of abused women. Further studies are needed to prospectively test the effectiveness of individual and combination resources for addressing IPV among ED patients.

Acknowledgments

Grant Support: This work was supported in part by the National Heart, Lung, and Blood Institute, supplement to U01/HL-04-001 “Training Core – Resuscitation Research” and the National Institutes of Health (NIH) Health Disparities Loan Repayment Program.

References

1. Greenfeld L, Rand M, Craven D, Klaus P, Perkins C, Ringel C, et al. Violence by intimates: analysis of data on crimes by current or former spouses, boyfriends, and girlfriends. Bureau of Justice Statistics; 1998.

2. Coker A, Smith P, Bethea L, King M, McKeown R. Physical health consequences of physical and psychological intimate partner violence. Arch Fam Med. 2000;9:451–457. doi: 10.1001/archfami.9.5.451.

3. Tjaden P, Thoennes N. Full report on the prevalence, incidence, and consequences of violence against women. Washington, D.C.; 2000.

4. Costs of Intimate Partner Violence Against Women in the United States. Atlanta (GA): National Center for Injury Prevention and Control, Centers for Disease Control and Prevention; 2003.

5. DHHS. National Center for Injury Prevention and Control: Costs of Intimate Partner Violence Against Women in the United States. Atlanta (GA): Centers for Disease Control and Prevention; 2003.

6. Flitcraft A. Physicians and domestic violence: challenges for prevention. Health Aff. 1993;12:154–161. doi: 10.1377/hlthaff.12.4.154.

7. Consensus Guidelines: On Identifying and Responding to Domestic Violence Victimization in Health Care Settings. San Francisco, CA: Family Violence Prevention Fund; 2004.

8. Conti CT Sr. Emergency departments and abuse: policy issues, practice barriers, and recommendations. J Assoc Acad Minor Phys. 1998;9:35–39.

9. Datner EM, O’Malley M, Schears RM, Shofer FS, Baren J, Hollander JE. Universal screening for interpersonal violence: inability to prove universal screening improves provision of services. Eur J Emerg Med. 2004;11:35–38. doi: 10.1097/00063110-200402000-00007.

10. Dearwater SR, Coben JH, Campbell JC, Nah G, Glass N, McLoughlin E, et al. Prevalence of intimate partner abuse in women treated at community hospital emergency departments. JAMA. 1998;280:433–438. doi: 10.1001/jama.280.5.433.

11. McCloskey L, Lichter E, Ganz M, Williams C, Gerber M, Sege R, et al. Intimate partner violence and patient screening across medical specialties. Academic Emergency Medicine. 2005;12:712–722. doi: 10.1197/j.aem.2005.03.529.

12. Weinsheimer RL, Schermer CR, Malcoe LH, Balduf LM, Bloomfield LA. Severe intimate partner violence and alcohol use among female trauma patients. J Trauma. 2005;58:22–29. doi: 10.1097/01.ta.0000151180.77168.a6.

13. National Center for Injury Prevention and Control. Costs of Intimate Partner Violence Against Women in the United States. Atlanta (GA): Centers for Disease Control and Prevention; 2003.

14. Kothari CL, Rhodes KV. Missed opportunities: emergency department visits by police-identified victims of intimate partner violence. Ann Emerg Med. 2006;47:190–199. doi: 10.1016/j.annemergmed.2005.10.016.

15. Wadman M, Muelleman R. Domestic violence homicides: ED use before victimization. Am J Emerg Med. 1999;17:689–691. doi: 10.1016/s0735-6757(99)90161-4.

16. Lowe RA, McConnell KJ, Vogt ME, Smith JA. Impact of Medicaid cutbacks on emergency department use: the Oregon experience. Ann Emerg Med. 2008;52:626–634. doi: 10.1016/j.annemergmed.2008.01.335.

17. Evaluating Domestic Violence Programs. AHRQ. Agency for Healthcare Research and Quality; Aug 1, http://www.ahrq.gov/research/domesticviol/

18. Schafer SD, Drach LL, Hedberg K, Kohn MA. Using diagnostic codes to screen for intimate partner violence in Oregon emergency departments and hospitals. Public Health Rep. 2008;123:628–635. doi: 10.1177/003335490812300513.

19. Biroscak BJ, Smith PK, Roznowski H, Tucker J, Carlson G, et al. Intimate partner violence against women: findings from one state’s ED surveillance system. Journal of Emergency Nursing. 2006;32:12–16. doi: 10.1016/j.jen.2005.11.002.

20. Btoush R, Campbell JC, Gebbie KM. Visits coded as intimate partner violence in emergency departments: characteristics of the individuals and the system as reported in a national survey of emergency departments. J Emerg Nurs. 2008;34:419–427. doi: 10.1016/j.jen.2007.10.015.

21. Rubin DB, Schenker N. Multiple imputation in health-care databases: an overview and some applications. Stat Med. 1991;10:585–598. doi: 10.1002/sim.4780100410.

22. Newgard CD, Haukoos JS. Advanced statistics: missing data in clinical research--part 2: multiple imputation. Acad Emerg Med. 2007;14:669–678. doi: 10.1197/j.aem.2006.11.038.

23. Moore L, Lavoie A, LeSage N, Liberman M, Sampalis JS, Bergeron E, et al. Multiple imputation of the Glasgow Coma Score. J Trauma. 2005;59:698–704.

24. Shaffer ML, Chinchilli VM. Including multiple imputation in a sensitivity analysis for clinical trials with treatment failures. Contemp Clin Trials. 2007;28:130–137. doi: 10.1016/j.cct.2006.06.006.

25. Sugg NK, Inui T. Primary care physicians’ response to domestic violence. Opening Pandora’s box. JAMA. 1992;267:3157–3160.

26. Rhodes KV, Frankel RM, Levinthal N, Prenoveau E, Bailey J, Levinson W. “You’re not a victim of domestic violence, are you?” Provider patient communication about domestic violence. Ann Intern Med. 2007;147:620–627. doi: 10.7326/0003-4819-147-9-200711060-00006.

27. Gerber M, Leiter K, Hermann R, Bor D. How and why community hospital clinicians document a positive screen for intimate partner violence: a cross-sectional study. BMC Fam Pract. 2005;6:48. doi: 10.1186/1471-2296-6-48.

28. Guidelines for Clinical Assessment and Intervention. Appendix 1. Family Violence Prevention Fund. 2011 April 15; http://endabuse.org/userfiles/file/HealthCare/ClinicalAssessment.pdf.

29. Pronovost P, Needham D, Berenholtz S, Sinopoli D, Chu H, Cosgrove S, et al. An intervention to decrease catheter-related bloodstream infections in the ICU. N Engl J Med. 2006;355:2725–2732. doi: 10.1056/NEJMoa061115.

30. Campbell JC, Coben JH, McLoughlin E, Dearwater S, Nah G, Glass N, et al. An evaluation of a system-change training model to improve emergency department response to battered women. Acad Emerg Med. 2001;8:131–138. doi: 10.1111/j.1553-2712.2001.tb01277.x.

31. Dienemann J, Trautman D, Shahan JB, Pinnella K, Krishnan P, Whyne D, et al. Developing a domestic violence program in an inner-city academic health center emergency department: the first 3 years. J Emerg Nurs. 1999;25:110–115. doi: 10.1016/s0099-1767(99)70155-8.

32. USPSTF. Screening for family and intimate partner violence: recommendation statement. Ann Intern Med. 2004;140:382–386. doi: 10.7326/0003-4819-140-5-200403020-00014.

33. MacMillan HL, Wathen CN, Jamieson E, Boyle MH, Shannon HS, Ford-Gilboe M, et al. Screening for intimate partner violence in health care settings: a randomized trial. JAMA. 2009;302:493–501. doi: 10.1001/jama.2009.1089.

34. Nicolaidis C. Screening for family and intimate partner violence. Ann Intern Med. 2004;141:81–82; discussion 82. doi: 10.7326/0003-4819-141-1-200407060-00033.

35. Lachs MS. Screening for family violence: what’s an evidence-based doctor to do? Ann Intern Med. 2004;140:399–400. doi: 10.7326/0003-4819-140-5-200403020-00017.

36. Policy Statement: Domestic Family Violence. American College of Emergency Physicians; Feb 3, http://www.acep.org/practres.aspx?id=29184.

37. Thompson RS, Rivara FP, Thompson DC, Barlow WE, Sugg NK, Maiuro RD, et al. Identification and management of domestic violence: a randomized trial. Am J Prev Med. 2000;19:253–263. doi: 10.1016/s0749-3797(00)00231-2.

38. Bair-Merritt MH, Mollen CJ, Yau PL, Fein JA. Impact of domestic violence posters on female caregivers’ opinions about domestic violence screening and disclosure in a pediatric emergency department. Pediatr Emerg Care. 2006;22:689–693. doi: 10.1097/01.pec.0000238742.96606.20.

39. Larkin GL, Hyman KB, Mathias SR, D’Amico F, MacLeod BA. Universal screening for intimate partner violence in the emergency department: importance of patient and provider factors. Ann Emerg Med. 1999;33:669–675. doi: 10.1016/s0196-0644(99)70196-4.

40. Alexander J, Drach L, Kohn M, Mederios L, Millet L, Sullivan A. Intimate Partner Violence in Oregon, findings from the Oregon Women’s Health and Safety Survey. Portland, OR: Oregon Department of Human Services; 2004.

41. Ernst AA, Weiss SJ, Nick TG, Casalletto J, Garza A. Domestic violence in a university emergency department. South Med J. 2000;93:176–181.

42. Kramer A, Lorenzon D, Mueller G. Prevalence of intimate partner violence and health implications for women using emergency departments and primary care clinics. Womens Health Issues. 2004;14:19–29. doi: 10.1016/j.whi.2003.12.002.

43. Lipsky S, Caetano R, Field CA, Bazargan S. The role of alcohol use and depression in intimate partner violence among black and Hispanic patients in an urban emergency department. Am J Drug Alcohol Abuse. 2005;31:225–242.