A significant number of patients continue to experience unrelieved, moderate-to-severe postoperative pain, which can have cardiovascular, pulmonary, gastrointestinal and immunological consequences. Poor pain assessment skills and many common misconceptions about pain among health care professionals (HCPs) are among the factors contributing to inadequate postoperative pain assessment and relief. Although standardized patients (SP) have been successfully used as a simulation method to improve HCPs’ assessment skills, not all centres have SP programs. Accordingly, this pilot study examined the efficacy of an alternative simulation method – deteriorating patient simulation – for improving HCPs’ pain assessment skills.

Keywords: Pain assessment, Randomized controlled trial, Simulation

Abstract

BACKGROUND/OBJECTIVES:

Pain-related misbeliefs among health care professionals (HCPs) are common and contribute to ineffective postoperative pain assessment. While standardized patients (SPs) have been effectively used to improve HCPs’ assessment skills, not all centres have SP programs. The present equivalence randomized controlled pilot trial examined the efficacy of an alternative simulation method – deteriorating patient-based simulation (DPS) – versus SPs for improving HCPs’ pain knowledge and assessment skills.

METHODS:

Seventy-two HCPs were randomly assigned to a 3 h SP or DPS simulation intervention. Measures were recorded at baseline, immediately postintervention and two months postintervention. The primary outcome was HCPs’ pain assessment performance as measured by the postoperative Pain Assessment Skills Tool (PAST). Secondary outcomes included HCPs’ knowledge of pain-related misbeliefs, and their perceived satisfaction with, and quality of, the simulation. These outcomes were measured by the Pain Beliefs Scale (PBS), the Satisfaction with Simulated Learning Scale (SSLS) and the Simulation Design Scale (SDS), respectively. Student’s t tests were used to test for overall group differences in postintervention PAST, SSLS and SDS scores. One-way analysis of covariance tested for overall group differences in PBS scores.

RESULTS:

DPS and SP groups did not differ on post-test PAST, SSLS or SDS scores. Knowledge of pain-related misbeliefs was also similar between groups.

CONCLUSIONS:

These pilot data suggest that DPS is an effective simulation alternative for HCPs’ education on postoperative pain assessment, with improvements in performance and knowledge comparable with SP-based simulation. An equivalence trial to examine the effectiveness of deteriorating patient-based simulation versus standardized patients is warranted.

Abstract

BACKGROUND/OBJECTIVES:

Misbeliefs about pain are common among health care professionals (HCPs) and contribute to ineffective assessment of postoperative pain. Standardized patients (SPs) have been used effectively to improve HCPs’ assessment skills, but not all centres have SP programs. The present pilot equivalence randomized controlled trial evaluated the efficacy of an alternative simulation method, deteriorating patient simulation (DPS), compared with SPs for improving HCPs’ pain knowledge and assessment skills.

METHODS:

Seventy-two HCPs were randomly assigned to a 3 h intervention with SPs or DPS. The investigators recorded measures at baseline, immediately after the intervention and two months after the intervention. The primary outcome was HCPs’ pain assessment performance, measured with the postoperative Pain Assessment Skills Tool (PAST). Secondary outcomes included HCPs’ knowledge of pain-related misbeliefs, and their perceived satisfaction with, and quality of, the simulation. These outcomes were measured with the Pain Beliefs Scale (PBS), the Satisfaction with Simulated Learning Scale (SSLS) and the Simulation Design Scale (SDS), respectively. Student’s t tests were used to test for overall group differences in postintervention PAST, SSLS and SDS scores. One-way analysis of covariance was used to assess overall group differences in PBS scores.

RESULTS:

The DPS and SP groups did not differ in post-test PAST, SSLS or SDS scores. Both groups had similar knowledge of pain-related misbeliefs.

CONCLUSIONS:

These pilot data suggest that DPS is an effective simulation option for training HCPs in postoperative pain assessment, because it improves their performance and knowledge to a degree comparable with SP-based simulation. An equivalence trial examining the effectiveness of DPS versus SPs is warranted.

Unrelieved postsurgical pain is a ubiquitous health care crisis. Despite pain management standards, position statements and recommendations from nongovernmental organizations – such as the International Association for the Study of Pain (1), the Joint Commission on Accreditation of Healthcare Organizations (2) and the Canadian Pain Society (3) – cumulative evidence documents inadequate and/or problematic pain management practices and attitudes as the norm across health care settings, and significant numbers of postoperative patients who experience unrelieved moderate-to-severe pain (4–18). Unrelieved postoperative pain has many cardiovascular, pulmonary, gastrointestinal and immunological consequences including tachycardia, hypertension, increased peripheral vascular resistance, arrhythmias, atelectasis and decreased immune response (18–25). Due to plasticity in the nervous system, unrelieved postoperative pain can also lead to persistent pain that continues long after the usual time for healing (ie, three to six months) (26–28). Reported incidences of persistent postoperative pain range from 5% to 50% (28). Aside from these major negative physiological consequences, unrelieved postoperative pain is also associated with delayed ambulation and discharge, and long-term functional impairment (29).

Health care professional (HCP) factors contributing to the problem of inadequate pain relief after surgery include poor pain assessment skills and common misbeliefs about pain, which are barriers to effective pain assessment (6,10,30–35). These misbeliefs are defined as incorrect beliefs that are held despite current research evidence to the contrary. For example, HCPs routinely believe that: pain is directly proportional to the degree of trauma and/or surgery-related tissue injury; patients must demonstrate pain before receiving medication; one pain management strategy is all that is needed; patients can clearly articulate their pain and ask for help; observable signs are more reliable indicators of pain than patients’ self-reports; and patients should be encouraged to endure as much pain as possible before using an opioid (6,10,30–35). Other pain-related misbeliefs, common to HCPs and patients alike, include: patients should expect to endure pain after surgery, and the use of opioids for pain will inevitably lead to addiction (10).

Successful pain curricula, such as the University of Toronto (Toronto, Ontario) Centre for the Study of Pain Interfaculty Pain Curriculum (30,36), have effectively used standardized patients (SPs) and other simulation models to help students rehearse and integrate the complex affective and cognitive skills required to take a pain-related history, and to address gaps in pain knowledge and pain-related misbeliefs. While the use of simulation methods for prelicensure pain education is a burgeoning field of study, little has been done to develop simulation-based methods for practising HCPs. Moreover, although SPs are realistic and ideal for HCP continuing education in patient assessment and interviewing, they may be unavailable to health care institutions with resource constraints. We could identify no alternative realistic simulation method for improving HCPs’ postoperative pain assessment skills that would potentially be less resource intensive. Therefore, the purpose of the present study was to examine the efficacy of an alternative simulation method – deteriorating patient-based simulation (DPS) – versus SPs for improving HCPs’ pain knowledge and assessment skills. Specific outcomes included HCPs’ observed pain assessment skills and knowledge of pain-related misbeliefs (primary outcomes), and satisfaction with and perceived quality of the simulation experience (secondary outcomes).

METHODS

Study design

The present study was a pilot equivalence trial. According to the Consolidated Standards of Reporting Trials (CONSORT) statement, equivalence randomized controlled trials seek to determine whether new interventions are ‘no worse’ than a reference intervention (37). The intention of this design is to demonstrate whether new intervention alternatives have at least as much efficacy as an accepted standard or widely used intervention, referred to as the active control (37). In the current study, the active control was SP-based simulation; the new comparison intervention was DPS (38). On completion of demographic and baseline measures, participants were randomly allocated to either an SP or DPS simulation intervention. Postintervention outcomes were evaluated immediately and two months following intervention. A short-term follow-up period was chosen for the present pilot study, which forms the basis of a future larger-scale trial with long-term follow-up. Ethics approval was granted by a university in central Canada and five university-affiliated teaching hospitals.

Study population and procedure

The present study was conducted in central Canada over a 14-month period. The target population was HCPs involved in the direct care of postoperative patients. Members of acute pain management teams were not eligible to participate. Instead, acute pain management team clinicians acted as ‘recruitment champions’ who facilitated the recruitment strategy, which included: presentations at in-services and clinical rounds; notifications in hospital bulletins and newsletters; and e-mail and hardcopy notices to HCPs working in surgical hospital units. All interested HCPs were initially assessed for eligibility by the trial coordinator (TC) via telephone. Willing participants were then interviewed by the TC to confirm eligibility and obtain informed consent. Demographic and baseline measures were completed on site and participants were randomly assigned to either the SP group or the DPS group. Random assignment was centrally controlled using www.randomize.org, a tamper-proof random assignment service. An external research assistant kept a secure list of random allocations (generated by www.randomize.org) that was matched to participant study numbers. As each participant enrolled, the TC called the external research assistant to receive his/her random allocation. Once randomly assigned, participants were scheduled to participate in the next available SP or DPS simulation intervention.
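The text does not describe how www.randomize.org generates its list, so the following is only a local sketch of the concealment logic described above, assuming simple (unrestricted) 1:1 randomization, which would be consistent with the unequal group sizes (34 vs 38) but is not stated in the source. The names `make_allocation_list` and `AllocationHolder` are hypothetical; the holder stands in for the external research assistant who releases one allocation per enrolled study number.

```python
import random


def make_allocation_list(n_participants, seed):
    """Hypothetical sketch: simple (unrestricted) 1:1 randomization.

    Each study number is independently assigned to "SP" or "DPS" with
    probability 0.5, so the two groups need not end up the same size.
    """
    rng = random.Random(seed)  # seeded so the list can be regenerated and audited
    return {study_id: rng.choice(["SP", "DPS"])
            for study_id in range(1, n_participants + 1)}


class AllocationHolder:
    """Plays the role of the external research assistant: keeps the list
    private and releases an allocation only when a participant enrolls."""

    def __init__(self, allocations):
        self._allocations = dict(allocations)
        self._released = {}

    def release(self, study_id):
        if study_id in self._released:
            # Asking again returns the same arm; an allocation cannot be changed.
            return self._released[study_id]
        arm = self._allocations[study_id]
        self._released[study_id] = arm
        return arm
```

For example, the coordinator's call for participant 1 corresponds to `AllocationHolder(make_allocation_list(72, seed=2024)).release(1)`. Keeping the list in a separate object mirrors the design choice in the trial: the person enrolling participants never sees upcoming allocations.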

Outcome data collection occurred in two phases: immediately postintervention and two months postintervention. Participants completed immediate postintervention questionnaires (onsite) on an individual basis. Two months postintervention, participants completed two individual and consecutive objective structured clinical examinations (OSCE) at their respective hospital sites. Assiduous follow-up procedures were used to maximize participation in these follow-up OSCEs.

Follow-up OSCE procedure

Follow-up OSCEs were designed to evaluate participants’ postoperative pain assessment skills. OSCE sessions took place at participants’ respective hospital sites and were 45 min in length, including: 10 min briefing with the TC; two consecutive 10 min OSCEs; and 15 min of debriefing and feedback. On arrival, each participant was oriented to the specifics of the overall procedure and informed that he/she would be evaluated while conducting postoperative pain assessments on two consecutive simulated patients, portrayed by SPs. Before each assessment, participants were given a one-paragraph summary (‘spec’) of the patient case. The TC kept time, allowing for a maximum of 10 min for each assessment. Participants’ performance was scored via a two-way mirror by two independent expert OSCE assessors, using an evaluation tool developed for this trial (see Measures). At one hospital site, where two-way mirror observation rooms were unavailable, participants were observed on television monitors by OSCE assessors located in a separate room via a live feed from a camcorder.

In addition to the assessment provided by OSCE assessors, the SPs also scored the participants’ performance. The OSCE session concluded with a structured debriefing, wherein participants received verbal feedback from the SPs. The purpose of this debriefing was to discuss key learning points from the OSCEs and related implications for assessing pain in participants’ future clinical practice.

Interventions

The aim of the simulation interventions (SP and DPS) was to improve participants’ pain assessment skills and knowledge of common pain-related misbeliefs that interfere with optimal pain assessment and management. These interventions were delivered in small groups (five to eight participants) by an expert facilitator at the study site. Intervention structure was standardized at 2 h in length and consisted of three components:

Participants were briefed for 30 min on common pain-related misbeliefs and key components of a comprehensive pain assessment. The use of empathy and affective involvement to address patient and family concerns effectively was emphasized (8,39).

Participants worked as a team to conduct a postoperative pain assessment on a patient case, via the SP or DPS method. The simulation lasted 45 min, allowing for engagement of each participant (one at a time) and facilitator-led ‘time-outs’ for group discussion and problem solving.

After the simulation, the facilitator conducted a 45 min structured debriefing, focused on pain-related misbeliefs that arose and key learning points from the team pain assessment. A facilitator guide specified the protocol in detail to ensure consistent intervention delivery across all sessions; only the specific simulation method varied between study groups, ie, SP or DPS.

Simulation methods: SP and DPS

Active control – SPs: Four SPs, from an established SP program, were selected according to demographic requirements of the patient case, with attention to their skill in providing feedback on pain assessment. Standardized patient training consisted of three meetings of 2 h duration each. Two SPs were trained to portray the patient and two were trained to portray the patient’s sister, who was also part of the case (see Patient cases). The training sessions concluded with a complete ‘dry run’ of the case at the study site.

All SP intervention sessions were conducted in a simulation laboratory at the study site. A simulated hospital room was used with prerecorded background hospital noise, real telemetry monitoring and authentic postsurgical equipment to ensure that the simulation was as realistic as possible. At the beginning of each SP session, the intervention facilitator provided participants with a brief introduction to the case and the rules for the simulation. Each participant was given approximately 5 min to conduct a part of the assessment. During this time, other participants silently observed. Only the learner or facilitator could call a ‘time-out’ to problem solve the next steps, if needed. On entering the patient’s room, the facilitator and participants encountered two SPs, portraying the patient and his sister, respectively. The SPs remained in character throughout the simulation.

Comparison intervention – DPS: Wiseman and Snell’s (38) DPS method was developed based on the premise that it is difficult to reproduce the need to ‘think on one’s feet’, often required in complex clinical environments and situations. According to Wiseman and Snell, DPS is an inexpensive, portable and rapidly created simulation that reproduces – in real time – the roles, decisions and emotions involved in HCP-patient interactions; the reasoning skills required to manage a ‘deteriorating patient’ are also made explicit (38). Pilot data show that DPS improves learners’ perceptions of readiness, knowledge and prioritization skills by 30% to 45% (1.5 to 2 points, 5-point Likert scale) (38).

In DPS, no SP or actor is present. The simulation method consists of a trained facilitator who verbally introduces a simulated patient scenario to a small group of learners in a classroom setting. The scenario is predetermined to have a number of plausible outcomes, depending on the response of the learner engaging in the simulation (38). The ideal patient assessment/care must also be predetermined. If a learner’s approach to the scenario is less than ideal, the facilitator changes the patient condition/situation by a decrement specific to the learner’s weaknesses (38). If, however, the learner reacts appropriately, the patient’s condition/situation does not deteriorate (38).
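The facilitator's decision rule described above can be sketched as a small state machine. This is an illustrative model only, not Wiseman and Snell's published protocol: the class `DPSScenario`, its fields and the one-step-per-miss decrement are hypothetical simplifications of "a decrement specific to the learner's weaknesses".

```python
from dataclasses import dataclass, field


@dataclass
class DPSScenario:
    """Hypothetical sketch of the facilitator's rule in deteriorating patient simulation."""
    ideal_actions: list    # predetermined ideal action for each step of the scenario
    deteriorations: dict   # step index -> how the situation worsens if that step is missed
    level: int = 0         # 0 = baseline; each increment = one further deterioration
    log: list = field(default_factory=list)

    def respond(self, step, learner_action):
        if learner_action == self.ideal_actions[step]:
            # Appropriate response: the patient's condition/situation does not deteriorate.
            self.log.append(f"step {step}: stable")
        else:
            # Less-than-ideal response: worsen the scenario by one decrement,
            # targeted at the gap this step was designed to probe.
            self.level += 1
            self.log.append(f"step {step}: {self.deteriorations[step]}")
        return self.level
```

In the pain case, an ideal action might be "ask about pain on movement as well as at rest", with the matching deterioration being that the patient stops mobilizing because the pain at rest seemed acceptable. Real facilitation is far richer than exact string matching; the sketch only shows that deterioration is conditional on the learner's response.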

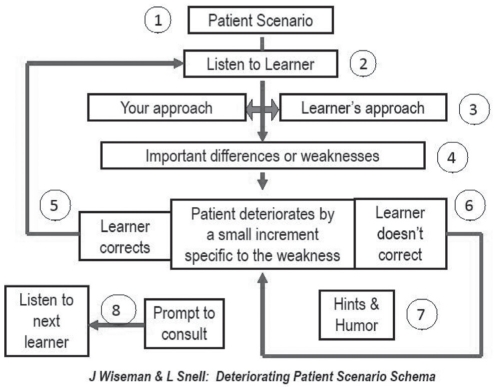

In the context of the present trial, the concept of ‘deterioration’ was adapted to reflect typical communication breakdowns that can occur in relation to common pain-related misbeliefs (for clinicians and patients) and ineffective postoperative pain assessment and management. In keeping with Wiseman and Snell’s DPS principles (38), this ‘deterioration’ was executed incrementally and to degrees appropriate to participants’ abilities and learning needs. Figure 1 depicts the DPS method, as performed by the intervention facilitator at the study site.

Figure 1).

The deteriorating patient simulation method. Reproduced with permission from reference 38

Patient cases

Intervention case:

The simulated patient for the intervention was a 52-year-old man suffering from moderate-to-severe postoperative pain following triple coronary artery bypass graft surgery. He had pre-existing pain from diabetic neuropathy and neuropathic pain from the site of internal thoracic artery harvesting. The case was set on postoperative day 4, while he was being visited in hospital by his sister. He reported experiencing 8/10 (numerical rating scale) pain on movement and 2/10 pain at rest. Based on a real patient case, details of the simulation included: inadequate pain relief in the early postoperative phase; common pain misbeliefs that blocked effective pain assessment and management; patient and family concerns; and problematic communication about pain between the patient and HCPs involved in his postoperative care. The case content was identical for SP and DPS intervention groups.

OSCE cases:

Simulated patient cases for the OSCEs were designed to encourage the participants’ critical thinking and application of the pain assessment skills they learned during the intervention. Two cases were developed, involving visceral and musculoskeletal postsurgical pain, respectively. The first case was a 40-year-old woman who had undergone a hysterectomy. The scenario was set two weeks after surgery, and she was suffering moderate-to-severe abdominal pain that interfered with sleep and recovery. The second case was a 58-year-old man who had undergone a total knee replacement. Several weeks after the operation, he reported severe knee pain that was interfering with rehabilitation. Similar to the intervention case, both OSCE cases were based on real patient situations, featuring common pain misbeliefs, communication issues around pain and inadequate pain relief.

MEASURES

Primary outcomes – pain assessment skills and pain-related misbeliefs:

Pain assessment skills – OSCEs:

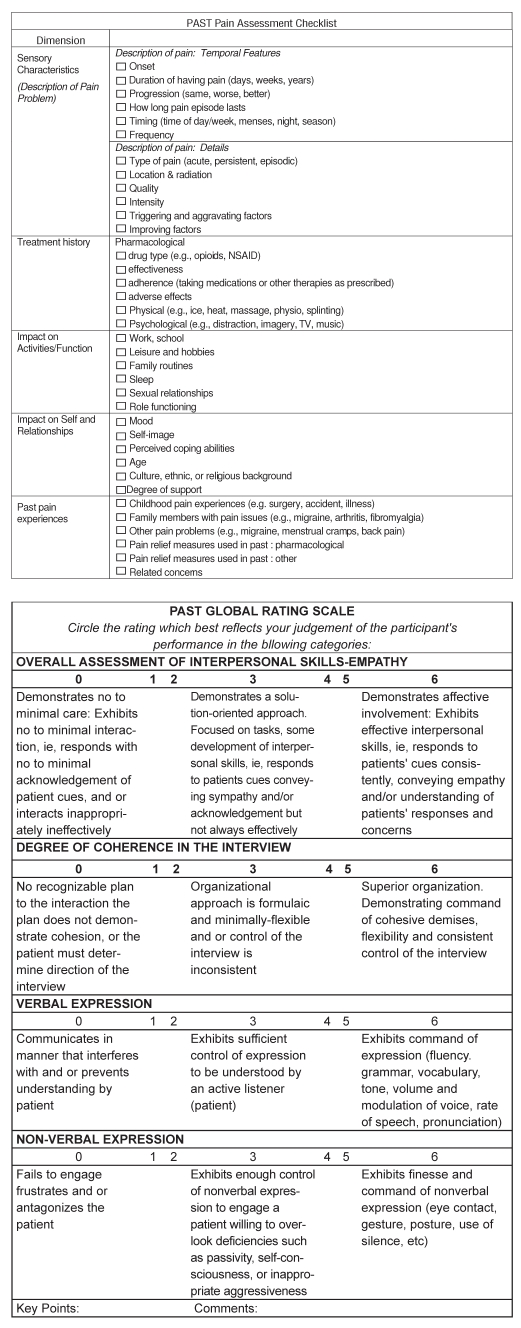

The reliability and validity of OSCE-based performance assessment have been widely debated (40). As Hodges et al (40,41) have demonstrated, the traditional use of binary OSCE checklists to capture complex cognitive appraisal and communication skills is suboptimal. While global rating indicators can augment such checklists and improve reliability and validity, using them on their own also remains controversial, with mixed results. Ideally, a combination of checklist and global rating components should be used. We found no such combined evaluation method for examining HCPs’ postoperative pain assessment skills. Therefore, a Pain Assessment Skills Tool (PAST) was developed for the present trial (Appendix A). The PAST was adapted from a pain assessment template reported by Watt-Watson et al (10) and an OSCE template developed by Cleo Boyd in 1996. With permission, a combination of relevant items from both tools was used.

A series of three focused team meetings was held to determine the relevant content domains and objective criteria for each component of the tool, assemble the relevant items and delineate a scoring method. The PAST has two components: a pain assessment checklist and a global rating scale. The checklist comprises a series of items spanning the following content domains: pain sensory characteristics; treatment history; impact of pain on functional status, perception of self, and relationships; and past pain experiences. The global rating scale uses four Likert scales to evaluate interpersonal skills and empathy, degree of coherence of the pain assessment, and verbal and nonverbal expression. Two continuous summary scores are derived, ranging from 0 to 36 for the pain assessment checklist and from 0 to 24 for the global rating scale.

The face and content validity of the PAST was evaluated via expert opinion. Sixteen pain experts used the PAST to evaluate a prerecorded pain assessment online. Feedback was requested on overall usability of the tool and relevance of items. Items deemed by the majority to be irrelevant were deleted. The remaining items were refined for clarity and accurate representation of the content domains. The inter-rater reliability of the PAST was pilot tested in the present trial (see Data analyses and Results).

Knowledge of pain-related misbeliefs:

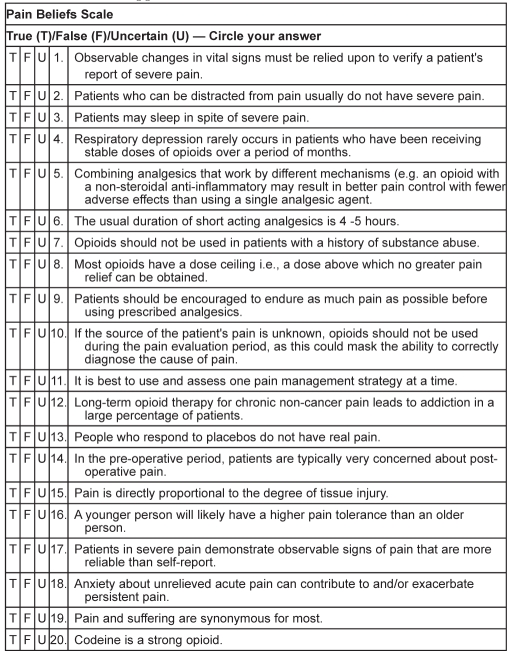

Knowledge of pain-related misbeliefs was measured using the Pain Beliefs Scale (PBS). Developed by McGillion et al (42), the PBS is an adaptation of Ferrell and McCaffery’s (43) Knowledge and Attitudes Regarding Pain Survey. This tool was designed to assess clinicians’ attitudes and knowledge of various aspects of pain mechanisms, assessment and management. The PBS (Appendix B) includes 20 items that reflect common pain-related misbeliefs. Responders may indicate ‘true’, ‘false’ or ‘uncertain’ for each item. A score of 1 is assigned to each correct response; all incorrect or ‘uncertain’ responses receive a score of 0. The overall summary score range is 0 to 20.
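The scoring rule just described (1 point per correct response, 0 for an incorrect or ‘uncertain’ response, total 0 to 20) can be expressed directly. This is a minimal sketch: the function name `score_pbs` is hypothetical, and the answer key used in any example is made up, because the actual PBS key is in Appendix B and not reproduced here.

```python
VALID = {"true", "false", "uncertain"}


def score_pbs(responses, answer_key):
    """Score a true/false/uncertain scale: 1 per correct response, 0 otherwise.

    `answer_key` holds "true" or "false" for each item (hypothetical key).
    """
    if len(responses) != len(answer_key):
        raise ValueError("responses and answer key must have the same length")
    score = 0
    for given, correct in zip(responses, answer_key):
        given = given.strip().lower()
        if given not in VALID:
            raise ValueError(f"unexpected response: {given!r}")
        # 'uncertain' never matches a true/false key, so it scores 0,
        # exactly like an incorrect answer.
        score += int(given == correct)
    return score
```

Treating ‘uncertain’ as incorrect means the score counts only confidently correct knowledge, which matches the rule stated in the text.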

Kuder-Richardson Formula-20 (44) statistics for scales composed of dichotomous variables (43) were used to evaluate the internal consistency reliability of the PBS on a sample of 150 prelicensure health sciences students enrolled in a pain education randomized controlled trial (42). Reliability estimates ranged from 0.67 (pretest) to 0.70 (post-test), suggesting moderately high internal consistency of the tool (42).
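For readers unfamiliar with KR-20, the statistic for k dichotomous items is KR-20 = k/(k − 1) × (1 − Σ p_i q_i / σ²_X), where p_i is the proportion of respondents answering item i correctly, q_i = 1 − p_i, and σ²_X is the variance of total scores. The sketch below is a plain-Python illustration on a toy matrix, not the analysis code of reference 42; it assumes the population (divide-by-n) variance for σ²_X, a convention that some software packages replace with n − 1.

```python
def kr20(item_matrix):
    """KR-20 for a respondents x items matrix of 0/1 scores."""
    n = len(item_matrix)
    k = len(item_matrix[0])
    totals = [sum(row) for row in item_matrix]
    mean_total = sum(totals) / n
    var_total = sum((t - mean_total) ** 2 for t in totals) / n  # population variance
    if var_total == 0:
        raise ValueError("total scores have zero variance; KR-20 is undefined")
    sum_pq = 0.0
    for j in range(k):
        p = sum(row[j] for row in item_matrix) / n  # proportion correct on item j
        sum_pq += p * (1 - p)
    return (k / (k - 1)) * (1 - sum_pq / var_total)


# Toy data: 4 respondents x 3 items (rows are respondents).
toy = [[1, 1, 1],
       [1, 1, 0],
       [1, 0, 0],
       [0, 0, 0]]
print(kr20(toy))  # 0.75 for this perfectly ordered (Guttman-like) pattern
```

Here Σ p_i q_i = 0.625 and σ²_X = 1.25, so KR-20 = 1.5 × (1 − 0.5) = 0.75.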

Secondary outcomes – HCP satisfaction and quality of the simulation experience:

Participants’ perceived satisfaction and quality of the simulation experience were evaluated by the Satisfaction with Simulated Learning Scale (SSLS) and the Simulation Design Scale (SDS), respectively (45). The SSLS is a 13-item tool designed to measure levels of learner satisfaction (SSLS-satisfaction) with simulation-related activities and self-confidence (SSLS-confidence) in learning (45). Content validity of the SSLS has been established by nine clinical simulation experts; internal consistency reliability on a sample of 395 nursing students was α=0.94 (45).

The SDS (20 items) uses a 5-point scale to assess the quality of simulations with respect to clarity of objectives, learner support features, problem-solving opportunities, feedback mechanisms and fidelity (45). The SDS is subdivided into two parts, one assessing specific simulation features (SDS-total) and the other examining learners’ perceived importance of those features (SDS-importance). The SDS also has established content validity as well as reliability (α=0.92 to 0.96) (45).
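The α values reported for the SSLS and SDS are Cronbach's alpha coefficients, α = k/(k − 1) × (1 − Σ s_i² / s_X²), where s_i² are item variances and s_X² is the variance of respondents' total scores. The following is a minimal stdlib sketch on toy data (not the data of reference 45); it uses sample variances throughout, and because the same divisor appears in the numerator and denominator the choice of divisor does not change α. For 0/1 items, α reduces to KR-20.

```python
from statistics import variance


def cronbach_alpha(item_matrix):
    """Cronbach's alpha for a respondents x items matrix (e.g. Likert scores)."""
    k = len(item_matrix[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*item_matrix))                # columns = items
    item_vars = [variance(col) for col in items]   # sample variance (n - 1)
    total_var = variance([sum(row) for row in item_matrix])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Two items answered identically by every respondent: perfect consistency.
print(cronbach_alpha([[1, 1], [2, 2], [3, 3]]))  # 1.0
```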

Data analyses and statistical power

Evaluation of the PAST:

As discussed, each participant’s pain assessment skills were evaluated in real time by two independent assessors and one SP at each OSCE station. Intraclass correlation coefficients (ICCs) (46) were used to estimate the inter-rater reliability of the PAST pain assessment checklist and global rating scale; ICCs >0.7 were considered satisfactory (46), indicating strong agreement between raters.
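The text does not state which ICC form was used. The sketch below assumes a two-way, consistency, average-measures ICC (Shrout and Fleiss ICC(3,k)), computed from a subjects × raters ANOVA. Under that assumption ICC(3,k) = 1 − 1/F, and several rows of Table 2 fit this relation (for example, F = 4.73 gives about 0.79 for the station 1 checklist), while the F degrees of freedom in Table 2, (n − 1, (n − 1)(k − 1)), also match this model. The function name `icc_consistency` is hypothetical.

```python
def icc_consistency(ratings):
    """Two-way consistency ICCs for an n-subjects x k-raters matrix.

    Returns ICC(3,1) (single rater), ICC(3,k) (average of k raters),
    and the F ratio with its degrees of freedom.
    """
    n, k = len(ratings), len(ratings[0])
    grand = sum(map(sum, ratings)) / (n * k)
    row_means = [sum(r) / k for r in ratings]                       # per subject
    col_means = [sum(r[j] for r in ratings) / n for j in range(k)]  # per rater
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for r in ratings for x in r)
    ss_error = ss_total - ss_rows - ss_cols   # residual after subject and rater effects
    ms_rows = ss_rows / (n - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return {
        "icc_single": (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error),
        "icc_average": (ms_rows - ms_error) / ms_rows,
        "F": ms_rows / ms_error,
        "df": (n - 1, (n - 1) * (k - 1)),
    }


# Toy example: 3 participants scored by 2 assessors.
print(icc_consistency([[1, 2], [3, 3], [5, 6]]))
```

With 39 participants and two assessors this model gives df (38, 38); adding the SP as a third rater gives df (38, 76), as reported in Table 2.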

Intervention effects:

It was not within the scope of the present pilot trial to collect a sufficiently sized sample for ‘true’ equivalence testing. Analyses were based on intention-to-treat principles. A one-way analysis of covariance (ANCOVA) of post-test scores was used to test for overall differences in PBS scores between SP and DPS groups, with pretest PBS scores as the covariate (47). Student’s t tests were used to test for group differences in post-test PAST, SSLS and SDS scores (47). Bonferroni-adjusted post hoc and multiple comparisons between groups were planned if overall group differences were found at the P≤0.05 significance level (47). All data were cleaned and assessed for departures from normality; assumptions of all parametric analyses were met.
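The two tests named above can be sketched with the Python standard library. This is an illustration, not the authors' analysis code (their software is not named): `student_t` is the pooled-variance two-sample t statistic, and `ancova_group_f` compares a model with separate group intercepts and a common pretest slope against a model with a single intercept, giving an F test on (1, N − 3) degrees of freedom. Both names are hypothetical. With 39 OSCE completers the t test has 37 df, and with 49 participants the ANCOVA has df (1, 46), matching Tables 3 and 4. P values would come from the t and F distributions (for example via SciPy), which this sketch omits.

```python
from math import sqrt
from statistics import mean, variance


def student_t(a, b):
    """Pooled-variance (Student's) two-sample t statistic and its df."""
    n1, n2 = len(a), len(b)
    sp2 = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / (n1 + n2 - 2)
    t = (mean(a) - mean(b)) / sqrt(sp2 * (1 / n1 + 1 / n2))
    return t, n1 + n2 - 2


def _sums(xs, ys):
    """Centred sums of squares and cross-products."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxx, syy, sxy


def ancova_group_f(pre_a, post_a, pre_b, post_b):
    """One-way ANCOVA F for a two-group difference in post scores, adjusting for pre scores."""
    # Full model: separate intercept per group, common slope on the pretest.
    within = [_sums(pre_a, post_a), _sums(pre_b, post_b)]
    sxx_w = sum(s[0] for s in within)
    syy_w = sum(s[1] for s in within)
    sxy_w = sum(s[2] for s in within)
    sse_full = syy_w - sxy_w ** 2 / sxx_w
    # Reduced model: one intercept for everyone, same pretest slope.
    sxx_t, syy_t, sxy_t = _sums(list(pre_a) + list(pre_b), list(post_a) + list(post_b))
    sse_reduced = syy_t - sxy_t ** 2 / sxx_t
    df_error = len(post_a) + len(post_b) - 3  # two intercepts + one slope
    f = (sse_reduced - sse_full) / (sse_full / df_error)
    return f, (1, df_error)
```

The ANCOVA form tests whether the groups differ once each participant's baseline score is accounted for, which is why the pretest PBS score enters as a covariate rather than as a second outcome.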

Statistical power:

It was not possible to conduct ‘true’ equivalence testing for the present pilot study as a meaningful margin of difference in change scores (on the primary outcome) between treatment arms was not yet known. A priori, the study assumed the following: a refusal/loss-to-follow-up rate of 10%; a mean (± SD) post-test PAST score of 17.0±3.0 in the active control SP group; equal group sizes of approximately 25 HCPs per group; and an overall type I error rate of 0.05. With a target sample size of 50, it was estimated that this would have approximately 80% power to detect as small a difference as 2 points in the primary outcome between groups.
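The text does not report how the power estimate was obtained. As a point of comparison only, the sketch below applies the standard normal-approximation formulas for a two-sided, two-sample comparison with equal group sizes and a common SD. With the stated inputs (difference of 2 points, SD of 3, α=0.05), it gives roughly 65% power at 25 per group and about 36 per group for 80% power, so the reported estimate presumably rests on additional assumptions that the text does not state. The function names are hypothetical.

```python
from math import ceil, sqrt
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution


def power_two_sample(delta, sd, n_per_group, alpha=0.05):
    """Approximate power of a two-sided two-sample z test (equal n, common SD)."""
    z_crit = Z.inv_cdf(1 - alpha / 2)
    se = sd * sqrt(2 / n_per_group)   # SE of the difference in group means
    shift = delta / se
    # Probability of rejecting in either tail when the true difference is delta.
    return Z.cdf(shift - z_crit) + Z.cdf(-shift - z_crit)


def n_per_group(delta, sd, power=0.80, alpha=0.05):
    """Per-group n for the same test (ignores the negligible opposite-tail term)."""
    z_a = Z.inv_cdf(1 - alpha / 2)
    z_b = Z.inv_cdf(power)
    return ceil(2 * ((z_a + z_b) * sd / delta) ** 2)


print(round(power_two_sample(2.0, 3.0, 25), 2))  # about 0.65
print(n_per_group(2.0, 3.0))                      # 36
```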

RESULTS

Derivation of the sample and attrition

In total, 73 potential participants were assessed for inclusion over a 12-month period. Of these potential participants, 72 were included and one was excluded because she did not work directly with postoperative patients. The acceptance rate for enrollment among those eligible was 100%. Of the 72 consenting participants, 34 were randomly assigned to the DPS group and 38 were randomly assigned to the SP group. Twenty-three participants (DPS group, n=15; SP group, n=8) did not complete an intervention session or immediate postintervention measures. Of these, seven participants withdrew without explanation and could not be contacted and 15 withdrew because of unexpected scheduling conflicts in their respective clinical settings. An additional 10 participants were unable to attend an OSCE session, also because of scheduling problems. In total, data for analyses were available from 49 participants who completed preintervention and immediate postintervention measures, and 39 who completed the follow-up OSCE sessions. The overall attrition rate, from baseline to follow-up, was 46%.
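The participant flow above reduces to simple arithmetic: 72 − 23 = 49 completers of the immediate postintervention measures, 49 − 10 = 39 OSCE completers, and (72 − 39)/72 ≈ 45.8% overall attrition. A tiny sketch (the function name `consort_tally` is hypothetical) makes the calculation explicit:

```python
def consort_tally(randomized, no_intervention, missed_osce):
    """Counts at each stage and overall attrition from randomization to follow-up."""
    post = randomized - no_intervention   # completed intervention + immediate measures
    followup = post - missed_osce         # also completed the two-month OSCE
    attrition = (randomized - followup) / randomized
    return post, followup, attrition


post, followup, attrition = consort_tally(72, 23, 10)
print(post, followup, f"{attrition:.1%}")  # 49 39 45.8%
```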

Participant characteristics

Baseline characteristics of the study groups are presented in Table 1. The mean age of the sample was 38±11 years, with 12±11 years of clinical experience, on average. The sample comprised mainly female registered nurses. The highest degree held by most participants was an undergraduate degree, with a small number holding a graduate degree. Level of educational preparation was not reported by some.

TABLE 1.

Baseline characteristics according to group

| Characteristic | DPS (n=34) | SP (n=38) |
|---|---|---|
| Age, years, mean ± SD | 40±11 | 37±11 |
| Years of clinical experience, mean ± SD | 13±11 | 11±10 |
| Female | 31 (88) | 35 (95) |
| Registered nurse | 34 (97) | 34 (97) |
| Advanced practice nurse | 1 (3) | 1 (3) |
| Physician | 0 (0) | 1 (3) |
| Diploma | 9 (26) | 12 (32) |
| Undergraduate degree | 21 (62) | 21 (55) |
| Graduate degree | 4 (12) | 5 (13) |

Data presented as n (%) unless otherwise indicated. DPS Deteriorating patient-based simulation; SP Standardized patients

Evaluation of the PAST

Table 2 presents the results of the PAST inter-rater reliability assessment. Overall, the ICCs were within the acceptable range (ie, 0.72 to 0.89) across both OSCE stations, indicating good to excellent inter-rater reliability of the tool. The ICCs for the PAST global rating template did not vary significantly according to OSCE station, whereas the ICC for the pain assessment checklist was highest for OSCE station 2. The additional SP assessments only marginally improved the ICCs of the global assessment template, indicating a high degree of reliability of the independent assessors’ ratings.

TABLE 2.

PAST intraclass correlations across OSCE stations

| POPA-OSCE scale | ICC | F (df) | P |
|---|---|---|---|
| OSCE station 1 | | | |
| PAC | 0.79 | 4.73 (38, 38) | <0.0001 |
| GRT assessors only | 0.75 | 3.91 (38, 38) | <0.0001 |
| GRT with SP | 0.78 | 4.60 (38, 76) | <0.0001 |
| OSCE station 2 | | | |
| PAC | 0.89 | 8.91 (38, 38) | <0.0001 |
| GRT assessors only | 0.70 | 3.01 (38, 38) | 0.001 |
| GRT with SP | 0.72 | 3.36 (38, 76) | <0.0001 |

GRT Global Rating Scale; ICC Intraclass correlation coefficient; PAC Pain assessment checklist; PAST Pain Assessment Skills Tool; POPA Postoperative Pain Assessment; OSCE Objective Structured Clinical Examination; SP Standardized patients

Intervention effects

Primary outcomes – observed pain assessment skills and knowledge of pain-related misbeliefs:

Mean scores according to group and results of Student’s t test and ANCOVA testing for significant differences between groups in PAST scales and PBS scores are presented in Tables 3 and 4, respectively. Mean scores indicate that both groups performed well during the OSCEs and demonstrated improved understanding of pain-related misbeliefs postintervention. There were no significant differences between groups in PAST pain assessment checklist scores or global assessment ratings. Similarly, no significant differences in postintervention PBS scores between SP and DPS groups were found.

TABLE 3.

Comparison* of PAST scores between DPS and SP groups

| Outcome | DPS | SP | t (df) | P† |
|---|---|---|---|---|
| PAC total | 14.66±2.54 | 14.35±2.39 | 0.38 (37) | 0.705 |
| Overall skill assessment – empathy | 3.83±0.64 | 4.03±0.78 | 0.79 (37) | 0.434 |
| Degree of coherence in the interview | 3.94±0.59 | 3.91±0.68 | 0.13 (37) | 0.901 |
| Verbal expression | 4.25±0.80 | 4.38±0.85 | 0.47 (37) | 0.642 |
| Nonverbal expression | 4.23±0.73 | 4.28±0.70 | 0.830 (37) | 0.830 |

Data presented as mean ± SD unless otherwise indicated. *Using Student’s t test; †Statistically significant at P≤0.05. DPS Deteriorating patient simulation; PAC Pain assessment checklist; PAST Pain Assessment Skills Tool; SP Standardized patients

TABLE 4.

Comparison of PBS scores between DPS and SP groups

| Outcome | DPS | SP | F (df) | P† |
|---|---|---|---|---|
| Preintervention PBS | 13.80±2.62 | 14.01±2.68 | – | – |
| Postintervention PBS | 16.53±1.95 | 16.37±1.85 | 0.15 (1, 46) | 0.701 |

Data presented as mean ± SD unless otherwise indicated. Comparisons used analysis of covariance; †Statistically significant at P≤0.05. DPS Deteriorating patient simulation; PBS Pain Beliefs Scale; SP Standardized patients

Secondary outcomes – satisfaction and perceived quality of simulation experience:

Group mean scores and the results of Student’s t tests for between-group differences in satisfaction with learning (SSLS-satisfaction), self-confidence in learning (SSLS-confidence), perceived quality of the simulation (SDS-total) and its perceived importance for learning (SDS-importance) are presented in Table 5. Both groups rated their simulations highly with respect to learner satisfaction and design quality. No significant between-group differences were found on any SSLS or SDS score.

TABLE 5.

Comparison* of SSLS and SDS scores between DPS and SP groups

| Outcome | DPS | SP | t (df) | P† |
|---|---|---|---|---|
| SSLS-satisfaction | 22.37±2.77 | 22.97±2.09 | 0.86 (47) | 0.395 |
| SSLS-confidence | 34.26±3.69 | 34.60±3.11 | 0.34 (47) | 0.733 |
| SDS-total | 87.58±18.60 | 91.83±6.49 | 0.96 (21) | 0.349 |
| SDS-importance | 91.76±8.79 | 91.45±7.44 | 0.13 (44) | 0.897 |

Data presented as mean ± SD unless otherwise indicated.

*Using Student’s t test; †Statistically significant at P≤0.05. DPS Deteriorating patient simulation; SDS Simulation Design Scale; SP Standardized patients; SSLS Satisfaction with Simulated Learning Scale
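The df for SDS-total (21) is far lower than for the SSLS scales (47), and its SDs differ roughly threefold (18.60 vs 6.49). That pattern is consistent with an unequal-variance (Welch) correction and/or fewer respondents, but the section does not say which t test variant was used, so treat this as an assumption. The hypothetical sketch below shows how the Welch–Satterthwaite df shrinks when one group’s variance dominates; the group sizes of 24 and 25 are invented for illustration.

```python
import math


def welch_t(m1, s1, n1, m2, s2, n2):
    """Welch's unequal-variance t statistic and Welch-Satterthwaite df."""
    v1, v2 = s1**2 / n1, s2**2 / n2  # squared standard error of each group mean
    t = (m1 - m2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1**2 / (n1 - 1) + v2**2 / (n2 - 1))
    return t, df


# SDS-total means and SDs from Table 5; group sizes of 24 and 25 are HYPOTHETICAL.
t, df = welch_t(87.58, 18.60, 24, 91.83, 6.49, 25)
print(f"Welch t = {t:.2f} on {df:.1f} df (vs {24 + 25 - 2} df for the pooled test)")
```

With the hypothetical 24/25 split, the Welch df falls to roughly 28 even with no missing data; the exact reported value would also depend on the actual numbers of SDS respondents, which are not given in this section.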

DISCUSSION

No significant differences were found between our simulation interventions on any outcome, suggesting that DPS is an effective simulation alternative for HCPs’ education on postoperative pain assessment, with improvements in knowledge and performance comparable with those of SP-based simulation. Participants’ satisfaction and quality ratings were high in both groups, suggesting that both simulation methods provided valuable learning experiences. Because all between-group comparisons yielded P>0.300, a lack of statistical power is unlikely to have been a major factor in the absence of between-group differences (47). The present pilot study will be followed by an adequately powered equivalence trial, allowing more definitive conclusions to be drawn about the statistical equivalence of our SP and DPS methods.
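Sizing an "adequately powered equivalence trial" depends on a chosen equivalence margin and an expected outcome SD. The sketch below uses the common normal-approximation formula for two one-sided tests (TOST), assuming the true difference between methods is zero. The margin, SD, alpha and power are illustrative assumptions, not values proposed by the authors, and the helper name is hypothetical.

```python
import math
from statistics import NormalDist


def equivalence_n_per_group(sd, margin, alpha=0.05, power=0.80):
    """Approximate participants per group for a two-group equivalence trial (TOST).

    Normal approximation assuming a true mean difference of zero:
        n = 2 * sd^2 * (z_{1-alpha} + z_{1-beta/2})^2 / margin^2
    """
    z = NormalDist().inv_cdf
    beta = 1 - power
    n = 2 * sd**2 * (z(1 - alpha) + z(1 - beta / 2)) ** 2 / margin**2
    return math.ceil(n)  # round up to whole participants


# HYPOTHETICAL inputs: an SD of about 2.5 (similar to the PAC total SDs in
# Table 3) and an equivalence margin of 1.5 checklist points.
print(equivalence_n_per_group(sd=2.5, margin=1.5))  # prints 48
```

Because the required sample grows with the square of SD/margin, halving the margin quadruples the sample size, so the choice of a clinically meaningful margin is the key design decision for the follow-up trial.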

A 100% acceptance rate among those screened for eligibility suggests that the opportunity to learn more about postoperative pain assessment via simulation appealed to clinicians, especially nurses, who constituted 99% of the sample. Although all who consented to participate intended to complete their assigned simulation interventions and follow-up OSCEs, scheduling problems in the clinical setting resulted in a higher-than-anticipated attrition rate. Our sample consisted of senior clinicians with an average of 12 years of clinical experience. In future work, it may be important to target clinicians at entry to practice so that participation can be incorporated into entry-to-practice orientation; such an arrangement would allow more flexibility in scheduling.

Our primary outcome, pain assessment skills, was measured via the PAST. We found the ICC for the PAST pain assessment checklist to be higher at follow-up OSCE station 2 than at station 1. This may indicate either that the participants (as a group) performed their pain assessments more uniformly the second time or that our assessors had become more familiar with the checklist by station 2. The ICC for the global assessment template was stable across both stations and improved only marginally with the inclusion of our SPs’ assessments, indicating strong inter-rater reliability among assessors. Overall, the PAST appears to be a reliable measure of pain assessment skills. Subsequent evaluation of the tool in an equivalence trial with repeated measures (ie, three or more assessments) will allow further examination of ICC stability.
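The section does not state which ICC form was used. As an assumption, the sketch below computes the Shrout and Fleiss ICC(2,1) (two-way random effects, absolute agreement, single rater), a common choice when the same assessors score every participant. It illustrates why systematic differences between assessors (the column effect) lower the ICC, which is the kind of rater drift that growing familiarity with a checklist could reduce. The function name and the ratings are hypothetical.

```python
def icc_2_1(ratings):
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    ratings: one row per participant, one column per assessor.
    """
    n, k = len(ratings), len(ratings[0])
    grand = sum(map(sum, ratings)) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)  # between participants
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)  # between assessors
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols  # residual disagreement
    msr = ss_rows / (n - 1)
    msc = ss_cols / (k - 1)
    mse = ss_error / ((n - 1) * (k - 1))
    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)


# HYPOTHETICAL checklist totals: four participants, each scored by two assessors.
scores = [[12, 13], [15, 15], [9, 10], [17, 16]]
print(round(icc_2_1(scores), 3))
```

If one assessor scored every participant exactly one point higher than the other, rankings would agree perfectly, yet ICC(2,1) would fall below 1 because absolute agreement penalizes that constant offset; a consistency form such as ICC(3,1) would not.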

While the measures we used in the pilot trial provide a preliminary, summative evaluation of the effectiveness of DPS versus SP-based simulation, they cannot answer key questions about the formative aspects of learning that occurred in either group. For example, our adaptation of Wiseman and Snell’s DPS method (38) required a priori distillation of the key learning points we wished to illustrate regarding the potential consequences of conducting a postoperative pain assessment without empathy or skilled attention to patient and family concerns. As part of our larger-scale equivalence trial, we plan to embed design research (48,49) elements to examine the processes of participants’ situated learning in context during both types of simulation.

Design research (48,49) is concerned with uncovering the processes inherent in innovative educational methods. In addition to definitively addressing the question of equivalence of effectiveness, we also want to know whether, by virtue of its design, DPS engages learners in cognitive uptake and rehearsal of postoperative pain assessment skills differently than SP-based simulation does. Several design research methods have been proposed, such as videotaping learners in action to examine critical design elements. In our future work, we will use such methods to examine differences and similarities in the learning that occurs during SP and DPS simulations.

The methodological strengths of the present pilot study were the robust methods used to minimize bias and random error, including centrally controlled randomization, valid and reliable measures, controls on outcome data collection and intention-to-treat analyses. Intervention integrity was also maximized by using a standardized intervention protocol. Performance bias cannot be ruled out because it is not possible to blind participants or interveners in an education-based intervention study. Social desirability bias is also possible because some outcomes were measured by self-report; however, randomization should have distributed participants prone to socially desirable responding equally between groups. Our follow-up period was limited to two months postintervention; therefore, the long-term sustainability of the observed improvements in knowledge of pain-related misbeliefs and in pain assessment skill is not known. In addition, for the purposes of the present pilot study, our simulation interventions were delivered by a single facilitator. Our subsequent equivalence trial should employ multiple facilitators to enhance external validity.

CONCLUSION

Common pain-related misbeliefs contribute to the problem of unrelieved postoperative pain. Deteriorating patient-based simulation may be an effective, low-tech simulation alternative for HCPs’ education on postoperative pain assessment, with improvements in knowledge and performance comparable with SP-based simulation. Our pilot study results suggest that an adequately powered equivalence trial to examine the effectiveness of DPS versus SPs is warranted.

Acknowledgments

The authors are grateful to the participants of this trial who generously gave of their time and effort. Many clinicians and administrators supported logistics and recruitment; special thanks to Mary Agnes Beduz, Irene Wu Lau, Maria Maione, Patti Kastanias, Jiao Jiang, Maria Tassone, Kasia Niemcyzk and Susan Robinson for their expertise and advice. This trial was funded by the Network of Excellence in Simulation for Clinical Teaching and Learning and the Standardized Patient Program, University of Toronto, and the Nursing Education, Research and Development Fund, Lawrence S Bloomberg Faculty of Nursing, University of Toronto.

Appendix A. The Pain Assessment Skills Tool (PAST), including the Pain Assessment Checklist and Global Rating Scale

Appendix B. The Pain Beliefs Scale

REFERENCES

1. Charlton JE, editor. Core Curriculum for Professional Education in Pain. Seattle: IASP Press; 2005.

2. Joint Commission on Accreditation of Healthcare Organizations. Comprehensive Accreditation Manual for Hospitals: The Official Handbook (CAMH). Oakbrook Terrace: JCAHO; 2010. 2009.

3. Watt-Watson JH, Clark JA, Finley GA, Watson CP. Canadian Pain Society position statement on pain relief. Pain Res Manage. 1999;4:75–8.

4. Marks RM, Sachar EJ. Under-treatment of medical inpatients with narcotic analgesics. Ann Intern Med. 1973;78:173. doi: 10.7326/0003-4819-78-2-173.

5. Watt-Watson J, Stevens B, Streiner D, Garfinkel P, Gallop R. Relationship between pain knowledge and pain management outcomes for postoperative cardiac patients. J Adv Nurs. 2001;36:535–45. doi: 10.1046/j.1365-2648.2001.02006.x.

6. Furstenberg C, Ahles TA, Whedon MB, et al. Knowledge and attitudes of health-care providers toward cancer pain management: A comparison of physicians, nurses, and pharmacists in the State of New Hampshire. J Pain Symptom Manage. 1998;16:335–49. doi: 10.1016/s0885-3924(98)00023-2.

7. Strong J, Tooth L, Unruh A. Knowledge of pain among newly graduated occupational therapists: Relevance for curriculum development. Can J Occup Ther. 1999;66:221–8. doi: 10.1177/000841749906600505.

8. Watt-Watson J, Garfinkel P, Gallop R, Stevens B, Streiner D. The impact of nurses’ empathic responses on patients’ pain management in acute care. Nurs Res. 2000;49:191–200. doi: 10.1097/00006199-200007000-00002.

9. Watt-Watson J, Chung F, Chan VW, McGillion M. Pain management following discharge after ambulatory same-day surgery. J Nurs Manag. 2004;12:153–61. doi: 10.1111/j.1365-2834.2004.00470.x.

10. Watt-Watson J. Misbeliefs about pain. In: Watt-Watson J, Donovan M, editors. Pain management: Nursing perspective. St Louis: Mosby; 1992. pp. 36–58.

11. Watt-Watson J. Nurses’ knowledge of pain issues: A survey. J Pain Symptom Manage. 1987;2:207–11. doi: 10.1016/s0885-3924(87)80058-1.

12. Seers K, Watt-Watson J, Bucknall T. Challenges of pain management for the 21st century. J Adv Nurs. 2006;55:4–6. doi: 10.1111/j.1365-2648.2006.03892_3.x.

13. Watson CP, Watt-Watson JH. Inadequate teaching about pain. CMAJ. 1989;141:189–92.

14. Watt-Watson J, Stevens B, Costello J, Katz J, Reid G. Impact of preoperative education on pain management outcomes after coronary artery bypass graft surgery: A pilot. Can J Nurs Res. 2000;31:41–56.

15. Peter E, Watt-Watson J. Unrelieved pain: An ethical and epistemological analysis of distrust in patients. Can J Nurs Res. 2002;34:65–80.

16. Rathmell JP, Wu CL, Sinatra RS, et al. Acute post-surgical pain management: A critical appraisal of current practice. Reg Anesth Pain Med. 2006;31:1–42. doi: 10.1016/j.rapm.2006.05.002.

17. Tripp DA, VanDenKerkhof EG, McAlister M. Prevalence and determinants of pain and pain-related disability in urban and rural settings in southeastern Ontario. Pain Res Manage. 2006;11:225–33. doi: 10.1155/2006/720895.

18. Watt-Watson J, Stevens B. Managing pain after coronary artery bypass surgery. J Cardiovasc Nurs. 1998;12:39–51. doi: 10.1097/00005082-199804000-00006.

19. Benedetti C, Chapman C, Moricca G, editors. Recent Advances in the Management of Pain. New York: Raven Press; 1984.

20. Dahl JL, Gordon D, Ward S, Skemp M, Wochos S, Schurr M. Institutionalizing pain management: The post-operative pain management quality improvement project. J Pain. 2003;4:361–71. doi: 10.1016/s1526-5900(03)00640-0.

21. Kehlet H. The stress response to surgery: release mechanisms and the modifying effect of pain relief. Acta Chir Scand Suppl. 1989;550:22–8.

22. Kehlet H. The endocrine-metabolic response to postoperative pain. Acta Anaesthesiol Scand Suppl. 1982;74:173–5. doi: 10.1111/j.1399-6576.1982.tb01872.x.

23. Kollef MH. Trapped lung syndrome after cardiac surgery: A potentially preventable complication of pleural injury. Heart Lung. 1990;19:671–5.

24. O’Gara P. The hemodynamic consequences of pain and its management. J Intensive Care Med. 1988;3:3–5.

25. Watt-Watson J, Graydon J. Impact of surgery on head and neck cancer patients and their caregivers. Nurs Clin North Am. 1995;30:659–71.

26. Woolf CJ, Salter MW. Neuronal plasticity: Increasing the gain in pain. Science. 2000;288:1765–9. doi: 10.1126/science.288.5472.1765.

27. Katz J. Perioperative predictors of long-term pain following surgery. In: Jensen T, Turner J, Wiesenfeld-Hallin Z, editors. Proceedings of the 8th World Congress on Pain. Seattle: IASP Press; 1997. pp. 231–42.

28. Kehlet H, Macrae WA, Stubhaug A. Persistent postoperative pain: Pathogenic mechanisms and preventive strategies. In: Mogil J, editor. Pain 2010: An updated review: Refresher course syllabus. Seattle: IASP Press; 2010. pp. 181–92.

29. Morrison RS, Magaziner J, McLaughlin MA, et al. The impact of post-operative pain on outcomes following hip fracture. Pain. 2003;103:303–11. doi: 10.1016/S0304-3959(02)00458-X.

30. Watt-Watson J, Hunter J, Pennefather P, et al. An integrated undergraduate pain curriculum, based on IASP curricula, for six health science faculties. Pain. 2004;110:140–8. doi: 10.1016/j.pain.2004.03.019.

31. Lebovitz A, Florence I, Bathina R, Hunko V, Fox M, Bramble C. Pain knowledge and attitudes of health care providers: Practice characteristic differences. Clin J Pain. 1997;13:237–43. doi: 10.1097/00002508-199709000-00009.

32. McGillion MH, Watt-Watson JH, Kim J, Graham A. Learning by heart: A focused groups study to determine the psychoeducational needs of chronic stable angina patients. Can J Cardiovasc Nurs. 2004;14:12–22.

33. Simpson K, Kautzman L, Dodd S. The effects of a pain management education program on the knowledge level and attitudes of clinical staff. Pain Manage Nurs. 2002;3:87–93. doi: 10.1053/jpmn.2002.126071.

34. Strong J, Tooth L, Unruh A. Knowledge of pain among newly graduated occupational therapists: relevance for curriculum development. Can J Occup Ther. 1999;66:221–8. doi: 10.1177/000841749906600505.

35. Scudds R, Solomon P. Pain and its management. A new pain curriculum for occupational therapists and physical therapists. Physiother Can. 1995;47:77–87.

36. Hunter J, Watt-Watson J, McGillion M, et al. An interfaculty pain curriculum: Lessons learned from six years experience. Pain. 2008;140:74–86. doi: 10.1016/j.pain.2008.07.010.

37. Piaggio G, Elbourne DR, Altman DG, Pocock SJ, Evans SJW; CONSORT Group. Reporting of non-inferiority and equivalence randomized trials: An extension of the CONSORT statement. JAMA. 2006;295:1152–60. doi: 10.1001/jama.295.10.1152.

38. Wiseman J, Snell L. The deteriorating patient: a realistic but ‘low-tech’ simulation of emergency decision-making. Clin Teach. 2008;5:93–7.

39. Gallop R, Lancee WJ, Garfinkel PE. The empathic process and its mediators. A heuristic model. J Nerv Ment Dis. 1990;178:649–54. doi: 10.1097/00005053-199010000-00006.

40. Hodges B, McNaughton N, Regehr G, Tiberius R, Hanson M. The challenge of creating new OSCE measures to capture the characteristics of expertise. Med Educ. 2002;36:742–8. doi: 10.1046/j.1365-2923.2002.01203.x.

41. Hodges B, Regehr G, McNaughton N, Tiberius R, Hanson M. OSCE checklists do not capture increasing levels of expertise. Acad Med. 1999;74:1129–34. doi: 10.1097/00001888-199910000-00017.

42. McGillion M, Watt-Watson J, Stremler R, et al. Efficacy, quality and student satisfaction across three simulation learning conditions for pre-licensure nursing students’ education about pain: A randomized controlled trial. Pain Res Manag. 2009;14:156. (Abst)

43. Ferrell B, McCaffery M. Knowledge and attitudes survey regarding pain, revised. 2005. <http://prc.coh.org> (Accessed on November 29, 2011).

44. Kuder GF, Richardson MW. The theory of estimation of test reliability. Psychometrika. 1937;2:151–60.

45. Jeffries P, Rizzolo MA. Summary report: Designing and implementing models for innovative use of simulation to teach nursing care of ill adults and children: A national, multi-site, multi-method study. 2006. <www.nln.org/research/LaerdalReport.pdf> (Accessed on January 30, 2008).

46. Streiner DL, Norman GR. Health Measurement Scales: A Practical Guide to Their Development and Use. 3rd edn. Oxford: Oxford University Press; 2003.

47. Cook TD, DeMets DL. Introduction to Statistical Methods for Clinical Trials. London: Chapman & Hall; 2008.

48. Bereiter C. Design research for sustained innovation. Cognitive Studies, Bulletin of the Japanese Cognitive Science Society. 2002;9:321–7.

49. Collins A, Joseph D, Bielaczyc K. Design research: Theoretical and methodological issues. J Learn Sci. 2004;13:15–42.