Abstract

Statistical image reconstruction using penalized weighted least-squares (PWLS) criteria can improve image quality in X-ray CT. However, the huge dynamic range of the statistical weights leads to a highly shift-variant inverse problem, making it difficult to precondition and accelerate existing iterative algorithms that attack the statistical model directly. We propose to alleviate the problem by using a variable-splitting scheme that separates the shift-variant and (“nearly”) invariant components of the statistical data model and also decouples the regularization term. This leads to an equivalent constrained problem that we tackle using the classical method-of-multipliers framework with alternating minimization. The specific form of our splitting yields an alternating direction method of multipliers (ADMM) algorithm with an inner step involving a “nearly” shift-invariant linear system that is suitable for FFT-based preconditioning using cone-type filters. The proposed method can efficiently handle a variety of convex regularization criteria, including smooth edge-preserving regularizers and nonsmooth sparsity-promoting ones based on the ℓ1-norm and total variation. Numerical experiments with synthetic and real in vivo human data illustrate that cone-filter preconditioners accelerate the proposed ADMM, which then converges faster than conventional (nonlinear conjugate gradient, ordered subsets) and state-of-the-art (MFISTA, split-Bregman) algorithms that are applicable to CT.

Index Terms: Statistical Image Reconstruction, Regularization, Iterative Algorithm, Method of Multipliers, Alternating Minimization

I. Introduction

Statistical image reconstruction methods in X-ray CT minimize a cost function consisting of a data-fidelity term that accommodates the measurement statistics and the geometry of the data-acquisition process, and a regularization term that reduces noise. For example, PWLS cost functions for X-ray CT use a (statistically) weighted quadratic data-fidelity term [1], [2] and can provide improved image-quality compared to filtered back-projection (FBP) [1], [2]. However, computation-intensive iterative methods are needed to minimize such cost functions. This paper describes a new minimization algorithm that uses variable splitting to provide accelerated convergence.

Several types of iterative algorithms have been proposed for statistical image reconstruction in X-ray CT, including iterative coordinate descent (ICD) methods [1], block-based coordinate descent [3], ordered subsets (OS) algorithms based on separable quadratic surrogates (SQS) [4], [5] and (preconditioned) nonlinear conjugate gradient (NCG) methods [6]. For fast computation on multiprocessor computers, (P)NCG-type methods appear to be particularly amenable to efficient parallelization because they update all voxels simultaneously using all measurements.

Developing suitable preconditioners for (P)NCG is challenging for X-ray CT because the enormous dynamic range of the transmission data causes the Hessian of the statistical data-fidelity term to be highly shift-variant [6]. Clinthorne et al. [7] showed that for unweighted least-squares reconstruction, one can precondition the problem effectively using FFTs with a kind of cone filter. This cone filter amplifies high spatial frequencies, helping to accelerate convergence. But that cone filter is ineffective for (P)NCG in the PWLS case [6]. Delaney et al. [8] considered a very special type of shift-invariant weighting and also demonstrated accelerated convergence, but for low-dose X-ray CT the appropriate statistical weighting does not satisfy the assumptions in [8]. Shift-variant pre-conditioners based on multiple FFTs were proposed in [6] for 2D transmission tomography, but never became popular due to their complexity and were never investigated for 3D problems. Another way to introduce a cone filter is the iterative FBP approach [9], [10]. Initially these algorithms “converge” rapidly compared to (P)NCG methods, but typically they do not have any theoretical convergence properties and “too many” iterations lead to undesirably noisy images. Furthermore, it is unclear how to include regularization while ensuring convergence.

The challenges described above apply regardless of the form of the regularizer. Additional difficulties arise when one uses nonsmooth regularizers such as total variation (TV) [11] and sparsity-promoting ones based on the ℓ1-norm [12]. These regularizers are not differentiable everywhere precluding optimization by conventional gradient-descent methods (e.g., NCG). Differentiable approximations (e.g., using “corner-rounding” [12, Sec. VI.A], [13, App. A]) can be employed, but even with such modifications the Hessian of the regularizer can have very high curvature leading to slow convergence of conventional gradient-descent methods [14]. While some state-of-the-art algorithms such as (M)FISTA [15], [16] and split-Bregman-type schemes (that split only the regularization term) [17], [18] are able to handle nonsmooth regularizers exactly (i.e., without corner rounding), when applied to X-ray CT, they must minimize a cost function that involves the original statistical data-fidelity term and are in turn hindered by the shift-variance of its Hessian (see Sections II-B, IV).

In this work, we propose to use a variable-splitting technique that not only decouples the regularization term in the spirit of [17], but also dissociates the statistical and geometrical components in the data-fidelity term. This forms the key feature of our approach that enables us to “isolate” the shift-variant element in the statistical data-fidelity term thereby alleviating the problem. Our splitting procedure uses auxiliary constraint variables to transform the original PWLS problem into an equivalent constrained optimization task that we solve using the classical method-of-multipliers [19], [20] and alternating direction optimization [21]–[23] frameworks. This leads to an alternating direction method of multipliers (ADMM) algorithm for solving the original PWLS problem that, apart from requiring simple operations (such as inverting a diagonal matrix, solving 1D denoising problems), involves the solving of a “nearly” shift-invariant linear system, which is amenable to FFT-based preconditioning using cone-type filters [7]. Experimental results with synthetic and real in vivo human data indicate that the proposed ADMM converges faster than conventional (NCG and ordered subsets) and state-of-the-art (MFISTA and split-Bregman) methods, illustrating the efficacy of our splitting scheme and the potential of cone-filter preconditioners for accelerating the proposed ADMM. The proposed ADMM can also be used with a variety of convex regularization criteria (see Section VI-A) including smooth edge-preserving regularizers and nonsmooth ones such as TV and ℓ1-regularization.

The paper is organized as follows. In Section II, we mathematically formulate X-ray CT reconstruction as a PWLS problem and briefly discuss drawbacks of some existing algorithms for X-ray CT. Section III discusses the proposed splitting strategy and the development of the ADMM algorithm in detail. In Section IV, we schematically compare our ADMM algorithm with the split-Bregman technique applied to CT. Section V is dedicated to numerical experiments and results, while Section VI discusses possible extensions of this work to 3D CT and other statistical models. Finally, we draw our conclusions in Section VII.

II. Statistical X-ray CT Reconstruction

A. Problem Formulation

For CT, an accurate statistical model for the data is quite complicated [24], [25] and is often replaced by a Gaussian approximation [1], [2] with a suitable diagonal weighting term W whose components {wi} are inversely proportional to the measurement variances [1], [2]. We consider a penalized weighted least-squares (PWLS) formulation of statistical CT reconstruction [1]:

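| $\mathrm{P0}: \quad \hat{x} = \arg\min_{x} \big\{ J(x) \triangleq J_{\mathrm{data}}(y, Ax) + \Psi(Rx) \big\}$ | (1) |

| $J_{\mathrm{data}}(y, Ax) \triangleq \tfrac{1}{2} \lVert y - Ax \rVert_W^2$ | (2) |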

where y is the M × 1 data vector (log of transmission data), A is the M × N system matrix, Ax represents the forward projection operation (e.g., line integrals), W = diag{wi} is an M × M diagonal matrix consisting of statistical weights,1 and ‖t‖²W ≜ t⊤Wt for a vector t ∈ ℝM. We use a general family of regularizers of the form [12]

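| $\Psi(Rx) \triangleq \lambda \sum_{r=1}^{L} \kappa_r \, \Phi_r\Big( \sum_{p=1}^{P} \lvert [R_p x]_r \rvert^{m} \Big)$ | (3) |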

where λ > 0 is the regularization parameter, κr > 0 ∀r are user-provided weights that govern the spatial resolution in the reconstructed output [26], Φr are potential functions, the R × N matrix R ≜ [R1⊤ ··· RP⊤]⊤ constitutes regularization operators Rp (e.g., finite differences, frames, etc) of size L × N, where R = PL. We concentrate on values of m and instances of Φ that result in a convex regularizer Ψ in (3).

The above general regularizer is in the “analysis” form [27], i.e., Ψ is specified as a function of the reconstructed image x. The method proposed in this paper can also be easily extended to handle “synthesis” forms [27], e.g., by writing x = Sθ and considering J(θ) = Jdata(y, ASθ) + Φ(θ) in P0, for some potential function Φ and synthesis operator S. We focus on the analysis form (3) as it includes popular nonsmooth criteria such as TV (for Φr(x) = √x and m = 2), analysis ℓ1-wavelets (for Φr(x) = x, m = 1) and a variety of smooth convex edge-preserving regularizers (e.g., Huber [28], [29], Fair [6], [30], etc.).
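As a concrete reading of the PWLS criterion, the sketch below evaluates a weighted quadratic data term plus the analysis ℓ1 instance of the regularizer on a toy problem. The dense matrices, the periodic finite-difference operator and the function name `pwls_cost` are illustrative assumptions, not part of the original work; in CT the products with A are computed by a projector rather than a stored matrix.

```python
import numpy as np

def pwls_cost(x, A, w, y, R, lam, kappa):
    """Evaluate 0.5*(y - A x)^T W (y - A x) + lam * sum_r kappa_r |[R x]_r|.

    w holds the diagonal of W; kappa holds the weights kappa_r.
    """
    resid = y - A @ x
    data_term = 0.5 * np.sum(w * resid**2)          # weighted quadratic data fidelity
    reg_term = lam * np.sum(kappa * np.abs(R @ x))  # analysis l1 penalty (m = 1, Phi_r(t) = t)
    return data_term + reg_term

# Toy problem (all sizes and values are hypothetical).
rng = np.random.default_rng(0)
M, N = 12, 6
A = rng.random((M, N))
w = rng.uniform(0.1, 10.0, M)                   # statistical weights, diag of W
x_true = rng.random(N)
y = A @ x_true                                  # noiseless data
R = np.eye(N) - np.roll(np.eye(N), 1, axis=1)   # periodic first differences
kappa = np.ones(N)

J_data_only = pwls_cost(x_true, A, w, y, R, 0.0, kappa)
J_with_reg = pwls_cost(x_true, A, w, y, R, 2.0, kappa)
```

With noiseless data the data term vanishes at `x_true`, so the second value is purely the regularization penalty.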

B. Previous Approaches

Conventional gradient-descent methods, e.g., NCG, for P0 depend on the Hessian of Jdata: Hdata = A⊤WA, which is highly shift-variant in CT particularly due to the large dynamic range of W. As a result, it becomes difficult to precondition and accelerate such methods [6]. Fessler et al. [6] directly attacked P0 using NCG and proposed a shift-variant preconditioner to tackle Hdata. But their preconditioner is data-dependent and requires at least one pair of FFT-iFFT operations per NCG-iteration.

Iterative shrinkage-thresholding (IST) [31] and its variants ((M)FISTA [15], [16], and (M)TWIST [32]) that are applicable to P0 depend on the Lipschitz constant Ldata of Jdata(y, Ax):

| $L_{\mathrm{data}} \triangleq \sigma_{\max}\{A^\top W A\}$ | (4) |

where σmax represents the maximum eigenvalue. The convergence speed of these algorithms is primarily determined by (4): A large value of Ldata results in small gradient steps [15, Sec. 1.1] leading to slow convergence. Since W has a large dynamic range and due to the (approximately) 1/r-type decay of the elements of A⊤A, Ldata can be large for CT decreasing convergence speed of IST-type algorithms. Optimization transfer-based methods (e.g., [33, Sec. IV-B.1]) face a similar issue in that the surrogate functions end up having high curvature [5] due to W, which again leads to small update-steps and slow convergence.
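The dependence of IST-type step sizes on the largest eigenvalue of A⊤WA can be illustrated with a power iteration, which needs only products with A and A⊤. This is a minimal sketch on a small dense stand-in for A; the function name and the toy weights are assumptions made for illustration.

```python
import numpy as np

def lipschitz_power_iteration(A, w, n_iter=500, seed=0):
    """Estimate sigma_max{A^T W A}, W = diag(w), by power iteration."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(A.shape[1])
    x /= np.linalg.norm(x)
    sigma = 0.0
    for _ in range(n_iter):
        hx = A.T @ (w * (A @ x))    # one forward and one back projection
        sigma = np.linalg.norm(hx)  # tends to the largest eigenvalue
        x = hx / sigma
    return sigma

# Toy system with weights spanning four orders of magnitude (hypothetical values).
rng = np.random.default_rng(1)
A = rng.random((40, 10))
w = 10.0 ** rng.uniform(-3.0, 1.0, 40)

L_est = lipschitz_power_iteration(A, w)
step = 1.0 / L_est   # gradient step size used by IST-type methods
```

A larger estimate directly shrinks `step`, which is the mechanism behind the slow convergence discussed above.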

In summary, the weighting term W, although crucial for improving reconstruction quality, poses a challenge for optimization. Compared to A⊤WA, the term A⊤A is “more” shift-invariant and is appropriate for preconditioning using cone filters. This property has been used to accelerate un-weighted least-squares reconstruction for tomographic image reconstruction [7]. Therefore, our idea to mitigate the shift-variance of Hdata is to untangle W from Hdata thereby making the resulting problem “more” shift-invariant and suitable to circulant preconditioning. To do so, we adopt a variable-splitting strategy.

Variable splitting (VS) refers to the process of introducing auxiliary constraint variables to separate coupled components in the cost function [12], [17], [18], [34]–[42]. This procedure transforms the original minimization problem into an equivalent constrained optimization problem that can be effectively solved using classical constrained optimization schemes [19], [20]. The VS approach is appealing as it renders the resulting constrained problem tractable to alternating minimization schemes that decouple it in terms of the auxiliary variables and simplify optimization [12], [17], [18], [34], [36], [37], [39]–[42].

The VS approach has become popular recently for solving reconstruction problems in image processing [17], [34]–[37], MRI [12], [39], [40] and CT [18], [41], [42]. Many authors have focussed on splitting the regularization term [17], [18], [34], [37], [39]–[41] as it is hard to tackle in inverse problems (especially nonsmooth ones such as TV and ℓ1-regularization). Splitting the regularization term enables one to handle it exactly (i.e., without the need for “corner-rounding” [12, Sec. VI.A], [13, App. A] for nonsmooth criteria) via simple denoising problems [12], [17], [37], [42]. However, in PWLS problems for CT, the data term adds to the complexity (as it leads to a shift-variant Hessian Hdata) and therefore demands attention. So in this work, besides splitting the regularization term, we also split the data term.

III. Proposed Method

A. Equivalent Constrained Optimization Problem

We introduce auxiliary constraint variables u ∈ ℝM and v ∈ ℝR and write P0 as the following equivalent constrained problem:

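| $\min_{x,\,u,\,v} \; \tfrac{1}{2} \lVert y - u \rVert_W^2 + \Psi(v) \quad \text{subject to} \quad u = Ax, \; v = Rx$ | (5) |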

where u separates the effect of W on Ax and v splits the regularization term as in [17]. Afonso et al. [36] and Figueiredo et al. [38] have utilized data-term-splitting in the context of image restoration [36], [38] and reconstruction from partial Fourier observations [36]. However, our emphasis here is on CT reconstruction where u plays an important role: It leads to a sub-problem that is “nearly” shift-invariant and suitable to preconditioning using cone filters [42] as explained in Section III-C.

In general, the proposed splitting strategy (5) can be applied to any PWLS problem of the form P0 so as to exploit shift-invariant features in the data-model, e.g., deconvolution of blurred images corrupted with non-stationary noise.

Before proceeding, we rewrite (5) concisely as

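| $\mathrm{P1}: \quad \min_{x,\,z} \; f(z) \quad \text{subject to} \quad z = Cx$ | (6) |

where

| $z \triangleq \begin{bmatrix} u \\ v \end{bmatrix}, \quad C \triangleq \begin{bmatrix} A \\ R \end{bmatrix}, \quad f(z) \triangleq \tfrac{1}{2} \lVert y - u \rVert_W^2 + \Psi(v)$ | (7) |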

Since P1 is equivalent to P0, solving P1 for x yields the desired reconstruction in (1).

B. Method of Multipliers

To solve P1, we use the classical framework of the method of multipliers [19], [20] and construct an augmented Lagrangian (AL) function [12], [19], [20], [42]

| $\mathcal{L}(x, z, \gamma, \mu) \triangleq f(z) + \gamma^\top (z - Cx) + \tfrac{\mu}{2} \lVert z - Cx \rVert_\Lambda^2$ | (8) |

that combines a multiplier term γ⊤(z − Cx) with Lagrange multiplier γ ∈ ℝM+R and a quadratic penalty term (μ/2)‖z − Cx‖²Λ, where μ > 0 is the AL penalty parameter and Λ ≻ 0 is a symmetric weighting matrix. The multiplier term can be absorbed into the penalty term in (8) (by completing the square) for ease of manipulation, leading to

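| $\mathcal{L}(x, z, \mu, \eta) = f(z) + \tfrac{\mu}{2} \lVert z - Cx - \eta \rVert_\Lambda^2 + C_\gamma$ | (9) |

where η ≜ −(1/μ)Λ⁻¹γ and Cγ ≜ −(μ/2)‖η‖²Λ is a constant independent of x and z. Unlike standard approaches [36], [38] that set Λ = IM+R, we propose to use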

| $\Lambda \triangleq \begin{bmatrix} I_M & 0 \\ 0 & \nu I_R \end{bmatrix}$ | (10) |

where ν > 0. This is crucial in CT because the elements of A and R can differ by several orders of magnitude and it is imperative to balance them to avoid numerical instabilities in the resulting algorithm and to achieve faster convergence [42].
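The completing-the-square step that takes (8) to (9) can be written out explicitly. The derivation below assumes the sign convention $\eta \triangleq -\tfrac{1}{\mu}\Lambda^{-1}\gamma$ and uses only the symmetry of $\Lambda$; with the opposite sign convention the same steps go through with $+\eta$ inside the norm.

```latex
\begin{align*}
\gamma^\top (z - Cx) + \frac{\mu}{2}\,\| z - Cx \|_\Lambda^2
  &= \frac{\mu}{2}\Big[ (z - Cx)^\top \Lambda (z - Cx)
       - 2\,(z - Cx)^\top \Lambda\, \eta \Big],
     \qquad \eta \triangleq -\frac{1}{\mu}\Lambda^{-1}\gamma, \\
  &= \frac{\mu}{2}\,\| z - Cx - \eta \|_\Lambda^2
     \;-\; \frac{\mu}{2}\,\| \eta \|_\Lambda^2 .
\end{align*}
```

The last term does not depend on x or z, so it plays no role in the minimizations and can be treated as a constant.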

The classical AL scheme for solving P1 alternates between a joint-minimization step and an update step [12, Sec. III]:

| (11) |

| (12) |

respectively. Unlike pure penalty methods, remarkably, the AL formalism does not require increasing μ → ∞ to ensure convergence of (11)–(12) to a solution of P1 [19].

C. Alternating Direction Minimization

It is numerically appealing to replace the more difficult joint-minimization step (11) by alternating direction optimization that decouples (11) as [21]–[23]

| (13) |

| (14) |

Thus, at the jth iteration, instead of (11)–(12), we perform (ignoring constant terms)

| (15) |

| (16) |

| (17) |

where an update x is said to approximate the exact minimizer x★ with tolerance ε when ||x − x★||2 ≤ ε, i.e., we allow for inexact updates in (15)–(16) in the spirit of [43]. Although (15)–(16) is an approximation to (11), the following theorem adapted from [43, Theorem 8] to P1 guarantees convergence of (15)–(17) to a solution of (5) (and P0).

Theorem 1

Consider P1 in (6) where f is closed, proper, convex2 and C has full column-rank. Let η(0) ∈ ℝM+R, μ > 0,

| (18) |

If P1 has a solution (x★, z★), then the sequence of updates {(x(j), z(j))}j generated by (15)–(17) converges to (x★, z★). If P1 has no solution, then at least one of the sequences {(x(j), z(j))}j or {η(j)}j diverges.

The result of Eckstein et al. [43, Theorem 8] uses an AL function with Λ = I, so we apply [43, Theorem 8] to (9) with Λ in (10) through a simple change of variables.3 For CT, it can be readily ensured that C has full column-rank for a variety of regularization operators R. In the sequel, we explain how to perform the minimizations in (15)–(16).

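Firstly, we see that due to the structure of f(z) and C, (16) further dissociates into the following:

| $u^{(j+1)} = \arg\min_{u} \; \tfrac{1}{2} \lVert y - u \rVert_W^2 + \tfrac{\mu}{2} \lVert u - Ax^{(j+1)} - \eta_u^{(j)} \rVert_2^2$ | (19) |

| $v^{(j+1)} = \arg\min_{v} \; \Psi(v) + \tfrac{\mu\nu}{2} \lVert v - Rx^{(j+1)} - \eta_v^{(j)} \rVert_2^2$ | (20) |

These sub-problems are independent of each other and can therefore be solved simultaneously, where η ≜ [ηu⊤ ηv⊤]⊤ is partitioned conformably with z. Sub-problem (19) is quadratic and has a closed-form solution:

| $u^{(j+1)} = D_\mu^{-1} \big[ W y + \mu \big( A x^{(j+1)} + \eta_u^{(j)} \big) \big]$ | (21) |

where Dμ ≜ (W + μIM). Since W is diagonal, Dμ can be inverted exactly, so that (19) is solved exactly ∀ j.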
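Because W and Dμ are diagonal, the u-update reduces to an elementwise operation. The sketch below assumes the sub-problem has the form min over u of ½‖y − u‖²W + (μ/2)‖u − Ax − ηu‖², with ηu denoting the block of η associated with u; the function name and the random test vectors are hypothetical.

```python
import numpy as np

def update_u(w, y, Ax, eta_u, mu):
    """Minimize 0.5*sum(w*(y-u)^2) + 0.5*mu*sum((u - Ax - eta_u)^2) over u.

    Setting the gradient to zero gives (w + mu) * u = w*y + mu*(Ax + eta_u),
    i.e. an elementwise division by the diagonal of D_mu = W + mu*I.
    """
    return (w * y + mu * (Ax + eta_u)) / (w + mu)

# Toy check with random vectors (values are hypothetical).
rng = np.random.default_rng(0)
M = 20
w = rng.uniform(0.01, 10.0, M)
y = rng.standard_normal(M)
Ax = rng.standard_normal(M)
eta_u = rng.standard_normal(M)
mu = float(np.median(w))

u_new = update_u(w, y, Ax, eta_u, mu)
# Gradient of the sub-problem objective at u_new; it should vanish.
grad = -w * (y - u_new) + mu * (u_new - Ax - eta_u)
```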

Minimization w.r.t. v (20) corresponds to a denoising problem that can be solved efficiently and/or exactly for a variety of instances of (3) including TV: This has been elucidated by many authors [12], [17], [36]–[40], [45], e.g., the techniques developed in [12, Sec. IV.A-2 – IV.A-6] can be directly applied to (20). For brevity, we concentrate on two particular instances of (3) for which (20) can be solved exactly ∀ j.

- Analysis ℓ1-regularization [m = 1, Φr(x) = x ∀ r in (3)]:

  | $\Psi(Rx) = \lambda \sum_{r} \kappa_r \, \lvert [Rx]_r \rvert$ | (22) |

  with the shift-invariant Haar wavelet transform (excluding the approximation level) for R, which is a sparsity-promoting criterion [12], [27], [36], [38].

- Smooth edge-preserving regularization [P = 1, m = 1, Φr = ΦFP ∀ r in (3)]:

  | $\Psi(Rx) = \lambda \sum_{r} \kappa_r \, \Phi_{\mathrm{FP}}\big( \lvert [Rx]_r \rvert \big)$ | (23) |

  using the Fair potential ΦFP(x) = x/δ − log(1 + x/δ) with δ > 0 [30] (also the smoothed Laplace function in [45, Eq. 4.11]) and finite-differences for R. This regularizer ensures a unique solution to P0 as ΦFP is strictly convex. It has also been successfully applied to PWLS problems in tomography [6].

For these regularizers, (20) separates into R 1D minimization problems in terms of the components of v:

| (24) |

where is the rth component of . For (22), the solution of (24) is given by the shrinkage rule4 [46]

| (25) |

For (23), (24) leads to a quadratic equation in vr [45, Eq. 4.13] that yields

| (26) |

where .
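Both 1D updates can be sketched for a scalar problem of the assumed form min over v of β·Φ(|v|) + ½(v − ρ)², where β stands for the combined weight (of the form λκr/(μν)) and ρ for the corresponding component of the shifted data; these symbols and the function names are illustrative and may differ from the notation of (24)–(26). For the Fair potential, the stationarity condition for t = |v| reduces to t² + (δ − |ρ| + β/δ)t − δ|ρ| = 0, whose nonnegative root is used below.

```python
import numpy as np

def shrink_l1(rho, beta):
    """Soft-thresholding: minimizer of beta*|v| + 0.5*(v - rho)^2."""
    return np.sign(rho) * np.maximum(np.abs(rho) - beta, 0.0)

def shrink_fair(rho, beta, delta):
    """Minimizer of beta*Phi_FP(|v|) + 0.5*(v - rho)^2,
    with Phi_FP(t) = t/delta - log(1 + t/delta).

    Nonnegative root of t^2 + b*t - delta*|rho| = 0, b = delta - |rho| + beta/delta,
    with the sign of rho restored afterwards.
    """
    a = np.abs(rho)
    b = delta - a + beta / delta
    t = 0.5 * (-b + np.sqrt(b * b + 4.0 * delta * a))
    return np.sign(rho) * t

rho = np.array([-3.0, -0.2, 0.0, 0.7, 5.0])
beta, delta = 0.8, 0.5

v_l1 = shrink_l1(rho, beta)
v_fair = shrink_fair(rho, beta, delta)
# Derivative of the Fair objective at v_fair; it should vanish.
fair_grad = beta * v_fair / (delta * (delta + np.abs(v_fair))) + v_fair - rho
```

Unlike soft-thresholding, the Fair update never sets a nonzero input exactly to zero, which reflects the smoothness of the potential.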

Having addressed (16), we now consider (15) which can be easily solved analytically:

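| $x^{(j+1)\star} = G_\nu^{-1} \big[ A^\top \big( u^{(j)} - \eta_u^{(j)} \big) + \nu R^\top \big( v^{(j)} - \eta_v^{(j)} \big) \big]$ | (27) |

where x(j+1)★ represents the exact solution to (15), ηu(j) and ηv(j) denote the blocks of η(j) that correspond to u and v, respectively, and

| $G_\nu \triangleq A^\top A + \nu R^\top R$ | (28) |

is non-singular because Λ ≻ 0 and R is chosen so that C has full column-rank. Although (27) is an exact analytical solution, the enormous size of Gν for CT makes it impossible to store and “invert” Gν exactly. So we propose to use the conjugate gradient (CG) method for (27) and obtain an approximate update x(j+1). Since Gν is non-singular, we have that

| $\lVert x^{(j+1)} - x^{(j+1)\star} \rVert_2 \le \dfrac{\lVert r^{(j+1)} \rVert_2}{\sigma_{\min}\{G_\nu\}}$ | (29) |

where r(j+1) ≜ Gν(x(j+1) − x(j+1)★) is the corresponding residue and σmin{Gν} > 0 is the minimum eigenvalue of Gν that depends only on A and R and can be precomputed, e.g., using stochastic techniques [47] or the Power method.5 Therefore, using (29), one can monitor ‖r(j+1)‖2 in the CG-loop and design a suitable stopping rule to satisfy (18).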
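The error bound used for the stopping rule follows from x − x★ = G⁻¹r for a symmetric positive-definite G, and can be checked numerically. The sketch below runs a few plain CG iterations on a small dense system of the form A⊤A + νR⊤R; the toy sizes, the value of ν and the helper name `cg` are assumptions, and in CT the products with G would be applied via projections rather than a stored matrix.

```python
import numpy as np

def cg(G, b, x0, n_iter):
    """A few conjugate-gradient iterations for G x = b (G symmetric positive definite).

    Returns the iterate and the recursively updated residual b - G x.
    """
    x = x0.copy()
    r = b - G @ x
    p = r.copy()
    rs = r @ r
    for _ in range(n_iter):
        if rs == 0.0:
            break
        Gp = G @ p
        alpha = rs / (p @ Gp)
        x += alpha * p
        r -= alpha * Gp
        rs_new = r @ r
        p = r + (rs_new / rs) * p
        rs = rs_new
    return x, r

# Toy system (hypothetical sizes and nu).
rng = np.random.default_rng(0)
N = 8
A = rng.standard_normal((30, N))
R = np.eye(N) - np.roll(np.eye(N), 1, axis=1)   # periodic first differences
nu = 5.0
G = A.T @ A + nu * (R.T @ R)
b = rng.standard_normal(N)

x_cg, r_cg = cg(G, b, np.zeros(N), n_iter=3)
sigma_min = np.linalg.eigvalsh(G).min()
error_bound = np.linalg.norm(b - G @ x_cg) / sigma_min
true_error = np.linalg.norm(x_cg - np.linalg.solve(G, b))
```

Here `true_error` is available only because the toy system is small enough to solve directly; in practice only `error_bound` can be monitored.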

D. Preconditioning Using Cone Filter

We see that Gν contains A⊤A, which is “nearly” shift-invariant, so for shift-invariant6 R⊤R, Gν is amenable to preconditioning using suitable cone filters [6], [7]. We constructed a circulant matrix G̃ν from the central column of Gν:

| $\tilde{G}_\nu \triangleq \mathrm{circ}\{ G_\nu e_c \}$ | (30) |

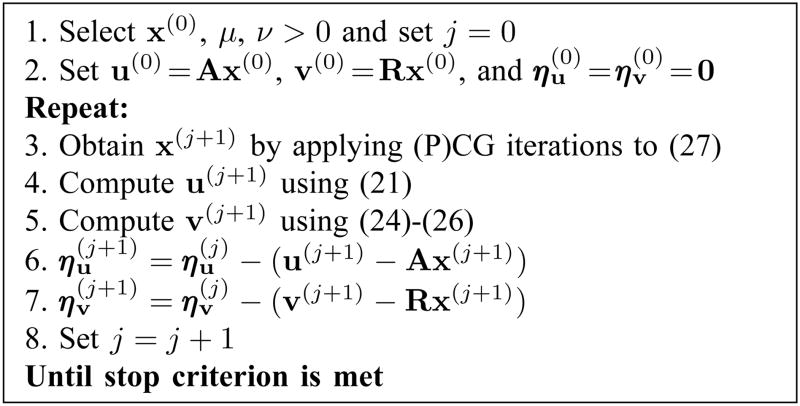

and used its inverse, G̃ν⁻¹, as the preconditioner, where ec is a standard basis vector of ℝN corresponding to the center pixel of the image and circ{α} represents the construction of a circulant matrix from a vector α. The proposed preconditioner corresponds to a cone-type filter that amplifies high spatial frequencies and accelerates convergence of both the CG-loop for (27) and the overall ADMM scheme as demonstrated in Section V. Implementing G̃ν⁻¹ requires only one FFT-iFFT pair per CG iteration and its construction7 uses a product with R⊤R and only one forward-backward projection that can be performed offline as G̃ν is independent of W. In our experiments, we applied at most two preconditioned CG (PCG) iterations with warm starting [12] and found that the residual norm in the CG-loop decreased sufficiently rapidly. Based on (15)–(29), we present our algorithm in Fig. 1 for solving P1 (and thus P0). In principle, Steps 4 and 5 of ADMM may be executed in parallel as they are independent of each other, but in our implementation, we chose to execute all the steps sequentially for simplicity.
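The construction of a circulant preconditioner from a central column can be sketched in 1D, where a circulant matrix is diagonalized by the DFT and its inverse is applied with one FFT-iFFT pair. The example is an assumption-laden toy: a periodic blur stands in for A⊤A and periodic differences for R, so that the system matrix is exactly circulant and the preconditioner reproduces its inverse; for a real CT system matrix the match is only approximate, and the central column would be obtained by applying the operators to ec rather than by forming the matrix.

```python
import numpy as np

def circulant(first_col):
    """Dense circulant matrix whose k-th column is first_col shifted by k."""
    n = first_col.size
    return np.stack([np.roll(first_col, k) for k in range(n)], axis=1)

def make_circulant_preconditioner(G):
    """Return r -> circ{G e_c}^{-1} r, applied with one FFT and one inverse FFT."""
    n = G.shape[0]
    c = n // 2
    central_col = G[:, c]                  # G e_c (formed explicitly only in this toy)
    first_col = np.roll(central_col, -c)   # shift so the peak sits at index 0
    eig = np.fft.fft(first_col).real       # eigenvalues of the circulant matrix

    def apply(r):
        return np.real(np.fft.ifft(np.fft.fft(r) / eig))

    return apply

# Toy shift-invariant stand-ins (hypothetical): periodic blur B and differences R.
n = 16
blur_col = np.zeros(n)
blur_col[[0, 1, -1]] = [0.5, 0.25, 0.25]
diff_col = np.zeros(n)
diff_col[[0, 1]] = [1.0, -1.0]
B = circulant(blur_col)
R = circulant(diff_col)
nu = 0.1
G = B.T @ B + nu * (R.T @ R)   # B^T B and R^T R have disjoint null-spaces, so G is invertible

apply_precond = make_circulant_preconditioner(G)
x = np.arange(n, dtype=float)
x_back = apply_precond(G @ x)
```

In a PCG loop, `apply_precond` would be called once per iteration on the current residual.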

Fig. 1.

ADMM for statistical X-ray CT reconstruction.
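The overall iteration (15)–(17) can be sketched for a small dense problem with the analysis ℓ1 regularizer. This is an illustrative sketch under stated assumptions, not the authors' implementation: the x-update is solved exactly with a dense solver instead of PCG, Λ = diag(I, νI), the multiplier-like variable η enters as ‖z − Cx − η‖²Λ and is updated as η ← η − (z − Cx), and the function name is hypothetical.

```python
import numpy as np

def soft(t, beta):
    """Soft-thresholding, the exact solution of the 1D l1 denoising problem."""
    return np.sign(t) * np.maximum(np.abs(t) - beta, 0.0)

def admm_pwls_l1(A, w, y, R, lam, kappa, mu, nu, n_iter=200):
    """Toy ADMM for min_x 0.5*||y - A x||_W^2 + lam * sum_r kappa_r |[R x]_r|."""
    M, N = A.shape
    x = np.zeros(N)
    u, v = A @ x, R @ x
    eta_u, eta_v = np.zeros(M), np.zeros(R.shape[0])
    G = A.T @ A + nu * (R.T @ R)            # system matrix of the x-update
    for _ in range(n_iter):
        # x-update: exact solve here; (P)CG would be used at CT scale
        rhs = A.T @ (u - eta_u) + nu * (R.T @ (v - eta_v))
        x = np.linalg.solve(G, rhs)
        Ax, Rx = A @ x, R @ x
        # u-update: elementwise, since W + mu*I is diagonal
        u = (w * y + mu * (Ax + eta_u)) / (w + mu)
        # v-update: shrinkage with threshold lam*kappa/(mu*nu)
        v = soft(Rx + eta_v, lam * kappa / (mu * nu))
        # eta-update
        eta_u = eta_u - (u - Ax)
        eta_v = eta_v - (v - Rx)
    return x

# Sanity case with a known answer: A = R = W = I gives x = soft(y, lam).
y_demo = np.array([3.0, -0.2, 0.5, -4.0, 1.5])
I5 = np.eye(5)
x_hat = admm_pwls_l1(I5, np.ones(5), y_demo, I5, lam=1.0,
                     kappa=np.ones(5), mu=1.0, nu=1.0)
```

The u- and v-updates do not depend on each other, which is why they could be run in parallel.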

E. Selection of μ and ν

The parameters μ and ν do not affect the solution of P1, but only regulate the convergence speed of the proposed ADMM [12], [35, Sec. 4.4]. In general, choosing appropriate values for AL penalty parameters (such as μ and ν) is a nontrivial and application-dependent task. Several empirical rules have been put forth by many authors for setting AL penalty parameters (to obtain good convergence speeds for AL-based iterative reconstruction schemes) in many applications, see e.g., [37], [38] for image restoration, [17] for denoising and compressed-sensing MRI, and [12], [39] for parallel MRI reconstruction.

In this paper, Step 3 is the only inexact step of the proposed ADMM. So the computational speed of ADMM is primarily determined by how efficiently (27) is solved, which in turn is governed by ν. We use an empirical rule for selecting ν that is based on [17]: Since ν balances A⊤A and R⊤R in Gν that have disjoint non-trivial null-spaces, the condition number κ(Gν) of Gν exhibits a minimum for some νmin > 0: νmin = arg minν κ(Gν). It was suggested in [17] to use this property to choose AL penalty parameters to ensure quick convergence of the CG-algorithm for solving a linear system such as (27). For A implemented using the distance-driven (DD) projector [49] and R in (22)–(23), νmin ≈ 10⁵, which yielded a very small (λ/μν) in (20) and subsequently resulted in slow convergence of ADMM in our experiments. On the other extreme, setting ν = 1 (corresponding to the standard case of Λ = IM+R) yields a poorly conditioned Gν that was not favorable either.

Based on our experience with 2D CT experiments, we found the empirical rule8 to yield good overall convergence speeds for ADMM, where G̃ν is the circulant matrix in (30). We also observed that ADMM was slightly more robust to the choice of μ than ν. We selected μ = median{wi} to avoid outliers in W; this yielded a well-conditioned Dμ (with κ{Dμ} ∈ [10, 40]) that improved the numerical stability of ADMM.
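The effect of choosing μ as the median weight on the conditioning of Dμ = W + μI can be illustrated as follows. The weights are synthetic (an assumed exponential spread over several orders of magnitude), so the printed condition numbers are not the ones reported for the CT data; the point is only that κ{Dμ} = (max wi + μ)/(min wi + μ) is bounded by 1 + max wi/μ regardless of how small the smallest weight is.

```python
import numpy as np

def cond_D(w, mu):
    """Condition number of the diagonal matrix D_mu = diag(w) + mu*I."""
    d = w + mu
    return d.max() / d.min()

# Synthetic weights spanning several orders of magnitude (hypothetical).
rng = np.random.default_rng(0)
w = np.exp(-rng.uniform(0.0, 8.0, 1000))

mu = float(np.median(w))          # choice of mu discussed in the text
kappa_W = w.max() / w.min()       # conditioning of W alone
kappa_D = cond_D(w, mu)           # conditioning after adding mu*I
```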

IV. Comparison with the Split-Bregman Approach

The split-Bregman (SB) method [17] uses constraint variables to split the regularization term alone. For (1), this corresponds to using only v = Rx, which leads to the following equivalent constrained problem

| (31) |

| (32) |

This type of splitting has been investigated for CT reconstruction in [18], [41]. Applying the Bregman iterations [17, Eq. 3.7–3.8] with alternating minimization [17, Sec. 3.1] to (31) yields the following SB scheme:9

| (33) |

| (34) |

| (35) |

The minimization in (34) is the same as that in (20), so the techniques described for (20) apply to (34) as well. The main difference between the proposed method (15)–(17) and the SB scheme (33)–(35) is in the way x is updated. The minimization in (33) leads to

| (36) |

where x(j+1)★ represents the exact solution to (36) and

| $B_\mu \triangleq A^\top W A + \mu R^\top R$ | (37) |

The matrix Bμ contains the shift-variant component W that makes standard preconditioners (including cone filters) less effective for CG-based solving of (36). Nevertheless, we used PCG for (36) with a circulant preconditioner (obtained by setting μ ≡ ν in (30)) in our implementation of the SB scheme and found that it improved upon the standard CG method for (36). We selected10 μ = Ldata/(100 σmax{R⊤R}) for SB11 (see (4) for definitions of Ldata and σmax). This choice is motivated by the discussion pertaining to ν in Section III-E.

In principle, it is possible to construct a shift-variant preconditioner for Bμ in the spirit of [6], but such a preconditioner would invariably be data-dependent and may be computationally involved. Our approach (15)–(17) provides a simple and effective alternative using an extra constraint variable u in (5): Compared to the SB scheme (33)-(35), our method requires only an extra trivial operation of inverting a diagonal matrix Dμ in (21).

V. Experimental Results

We present numerical results for 2D CT reconstruction from simulated NCAT phantom data and in vivo human head data. The proposed ADMM is also applicable, in principle, to 3D CT reconstruction (see Section VI-A). We implemented the following algorithms in Matlab and conducted the experiments on a quad-core PC with 3.07 GHz Intel Xeon processors and 12 GB RAM.

NCG-n: unpreconditioned nonlinear conjugate gradient algorithm with n line-search iterations that monotonically decrease the cost function J [6],

MFISTA-n: Monotone Fast Iterative Shrinkage-Thresholding Algorithm [16] with n iterations for solving auxiliary denoising sub-problems similar to [16, Eq. 3.13],

OS-n: Ordered subsets algorithm [5] with n blocks,

SB-(P)CG-n: Split-Bregman scheme from Section IV with n (P)CG iterations for solving (36),

ADMM-(P)CG-n: Proposed ADMM with n (P)CG iterations for solving (27).

MFISTA is a state-of-the-art method developed by Beck et al. [16] for image restoration that is readily applicable to P0 with the Lipschitz constant Ldata in (4). Beck et al. [15] also proposed a back-tracking strategy that does not require explicit computation of Ldata, but we chose to estimate and use11 Ldata both for ease of implementation and because it is the smallest possible value [15, Ex. 2.2] that yields the fastest convergence for MFISTA. We applied the Chambolle-type method [50] for the inner-step (i.e., computing the proximal map [16, Eq. 3.13]) of MFISTA as that does not require smoothing of (“corners” of) ℓ1-regularizers such as (22).

Since our task is to solve P0, we fixed the cost function J (that led to a visually appealing reconstruction) and focussed on the convergence speed of the algorithms. We quantified the convergence rate using the normalized ℓ2-distance between x(j) and x★:

| (38) |

where x★ is a solution to P0 obtained numerically by running one of the above algorithms as described next. Since the algorithms have different computation load per (outer) iteration, we evaluated ξ(j) as a function of algorithm run-time12 tj, i.e., the time elapsed from start until iteration j. We also plot ξ(j) as a function of the iteration index j for completeness. We used the DD-projector [49] (with 8 threads) for implementing matrix-vector products such as Ax, A⊤u and initialized all the algorithms with the image reconstructed using FBP (with the ramp filter) in all experiments.
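A normalized ℓ2-distance of this kind can be computed as below. The decibel scaling (20·log10 of the ratio) is an assumption made for this sketch, as is the function name; the quantity that matters is the ratio ‖x(j) − x★‖2 / ‖x★‖2.

```python
import numpy as np

def xi_db(x_j, x_star):
    """Normalized l2-distance between an iterate and the reference solution,
    expressed in dB (the dB scaling is an assumption of this sketch)."""
    ratio = np.linalg.norm(x_j - x_star) / np.linalg.norm(x_star)
    return 20.0 * np.log10(ratio)

x_star = np.array([3.0, 4.0])
xi_example = xi_db(1.1 * x_star, x_star)   # iterate that is 10% off in norm
```

More negative values indicate iterates closer to x★, so curves that drop faster in run-time correspond to faster convergence.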

Products with A and A⊤ (corresponding to forward- and back-projections, respectively) are computation intensive in CT reconstruction problems and dominate the overall computation load of a reconstruction algorithm.13 NCG and MFISTA both require only one product with A and A⊤, respectively, per iteration. The OS method breaks products with A and A⊤ in terms of block-rows of A and block-columns of A⊤, respectively, and cycles through each block once per every iteration, so effectively, OS also requires only one product with A and A⊤, respectively, per iteration. However, for each block, the OS method demands the evaluation of the gradient of the regularization term that increases computation time per iteration as indicated in Table I. For the SB scheme, we employ (P)CG for “inverting” Bμ (that depends on A⊤WA) in (36), so SB-(P)CG-n requires n products with A and A⊤, respectively, per iteration of (33)-(35). In the case of ADMM, we apply (P)CG at Step 3 (see Fig.1) for “inverting” Gν in (27), but that step also requires a product with A⊤ in the RHS of (27), so overall ADMM-(P)CG-n uses n products with A and n + 1 products with A⊤ per iteration of Steps 3–7 in Fig.1. Table I summarizes this discussion and also shows the mean computation time per iteration (averaged over 10 iterations) of the above algorithms. Although the proposed ADMM(-PCG) requires more forward- and back-projections per iteration (and accordingly exhibits higher computation time per iteration) compared to other algorithms (with the exception of the OS method) in Table I, we demonstrate in the sequel that it converges faster in terms of algorithm runtime.

TABLE I.

Computation Time and Number of Projections required per Iteration of Algorithms Compared in Section V

| Algorithm | Time/Iteration in seconds (Section V-A) | Time/Iteration in seconds (Section V-B) | Forward projections (A) per iteration | Back-projections (A⊤) per iteration |
|---|---|---|---|---|
| NCG-5 | 1.56 | 4.85 | 1 | 1 |
| NCG-10 | - | 8.83 | 1 | 1 |
| MFISTA-5 | 2.49 | 8.87 | 1 | 1 |
| MFISTA-25 | 5.23 | - | 1 | 1 |
| OS-4 | - | 10.19 | 1 (effective) | 1 (effective) |
| OS-41 | - | 61.84 | 1 (effective) | 1 (effective) |
| SB-CG-1 | 2.29 | 6.22 | 1 | 1 |
| SB-CG-2 | 3.29 | 8.93 | 2 | 2 |
| SB-PCG-1 | 2.29 | 6.25 | 1 | 1 |
| SB-PCG-2 | 3.30 | 9.07 | 2 | 2 |
| ADMM-CG-1 | 3.29 | 8.91 | 1 | 2 |
| ADMM-CG-2 | 4.31 | 11.61 | 2 | 3 |
| ADMM-PCG-1 | 3.32 | 8.94 | 1 | 2 |
| ADMM-PCG-2 | 4.34 | 11.70 | 2 | 3 |

A. Simulation with the NCAT Phantom

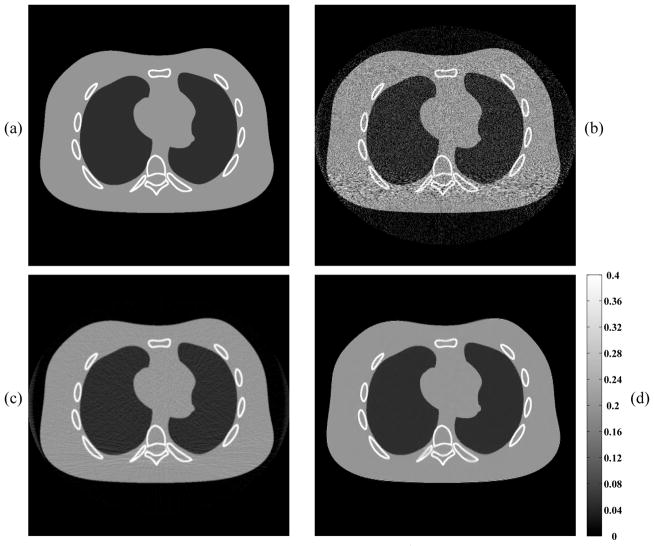

We used a 1024 × 1024 2D slice of the NCAT phantom [51] and numerically generated an 888 × 984-view noisy sinogram with GE LightSpeed fan-beam geometry [52] corresponding to a monoenergetic source with 2.5 × 10⁴ incident photons per ray and no background events. We used the ℓ1-regularization in (22) with κr = ω(r mod N), where ω is based on [26]. We reconstructed 512 × 512 images over a FOV of 65 cm; we obtained x★ by running 5000 iterations of MFISTA-25 as it does not require “corner-rounding” and is therefore guaranteed to converge to a solution of P0. NCG cannot directly handle nonsmooth criteria such as (22) without smoothing it [13, App. A], so we used a smoothing value of 10⁻⁶ cm⁻¹. The FBP reconstructions in Figs. 2b, 2c corresponding to the ramp and Hanning filters, respectively, are either noisy or blurred and streaked with artifacts. The ℓ1-regularized reconstruction x★ in Fig. 2d preserves image features and has lower RMSE than both FBP outputs.

Fig. 2.

Simulation with the NCAT phantom: (a) Noisefree NCAT phantom (in cm−1), (b) FBP reconstruction with ramp filter, also the initial guess x(0) for all iterative algorithms, (c) FBP reconstruction with Hanning filter, and (d) ℓ1-regularized reconstruction, also the solution x★ to P0. Images in (a)–(d) have been normalized to the same color scale [as that of (a)] indicated beside (d). The ℓ1-regularized reconstruction (d) is less noisy and has almost no streaky-artifacts compared to both FBP results.

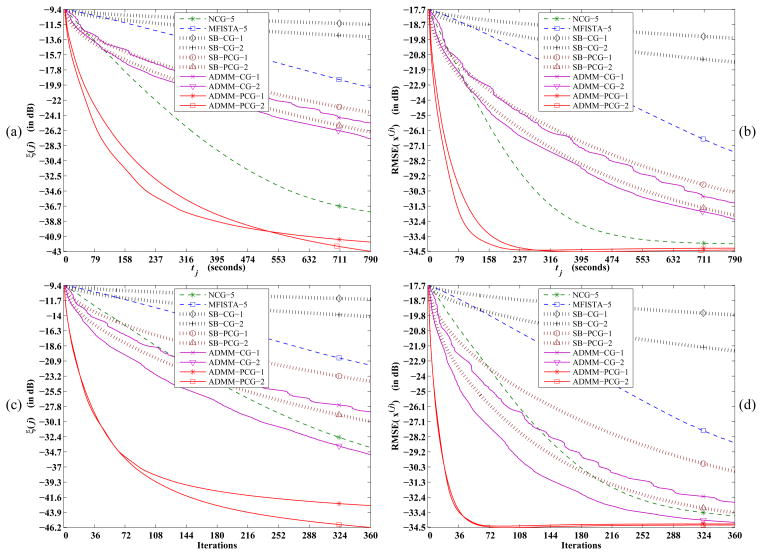

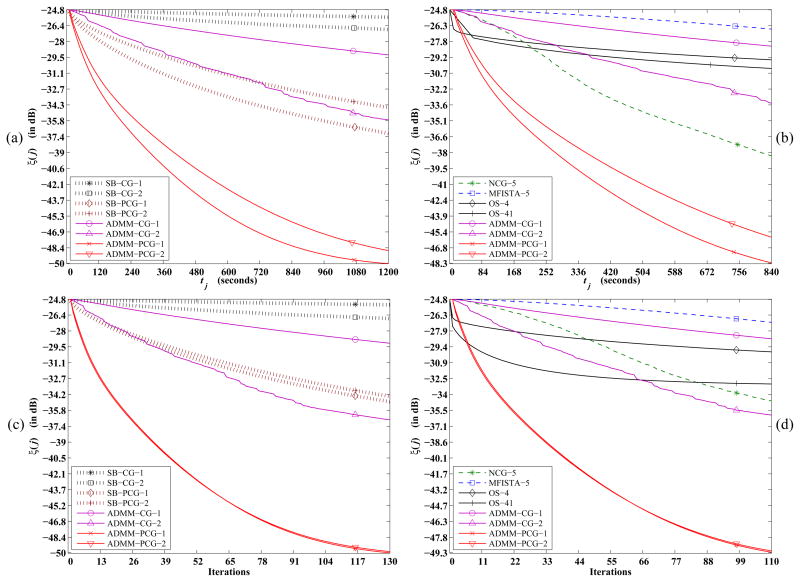

We plot ξ(j) for various algorithms as a function of time in Fig. 3a. The SB-CG scheme appears to converge the slowest, while SB-PCG is faster indicating that the circulant preconditioner provides a moderate acceleration of CG for (36). MFISTA is slower than most of the algorithms for the reason explained in Section II. The CG-version of the proposed method, ADMM-CG, is slightly faster than MFISTA and SB-(P)CG but slower than NCG. The preconditioned version ADMM-PCG is the fastest among all algorithms illustrating that the cone-filter preconditioner is very effective in accelerating convergence of CG applied to (27) and ADMM-PCG. This is also corroborated by Fig. 3c where for a given number of iterations, ADMM-PCG produces a reconstruction that is closest to x★ in terms of ξ(j). Figs. 3b, 3d further substantiate the reconstruction speed-up of ADMM-PCG over other methods, where (both in terms of algorithm run-time and number of iterations) it rapidly leads to a RMSE-value close to RMSE(x★).

Fig. 3.

Simulation with the NCAT phantom: (a), (b) Plot of ξ(j) and RMSE(x(j)), respectively, as a function of time tj and (c), (d) Plot of ξ(j) and RMSE(x(j)), respectively, with respect to iterations, for various algorithms considered in this work. The unpreconditioned version of the proposed method, ADMM-CG, converges slightly faster than MFISTA and the split-Bregman scheme SB-(P)CG but is slower than NCG as seen in (a) and (b). But the preconditioned version, ADMM-PCG, converges rapidly both in terms of ξ(j) and RMSE indicating that the cone-filter-preconditioner ( in Section III-E) greatly accelerates convergence of the proposed ADMM.

B. Experiments with an in vivo Human Head Data-set

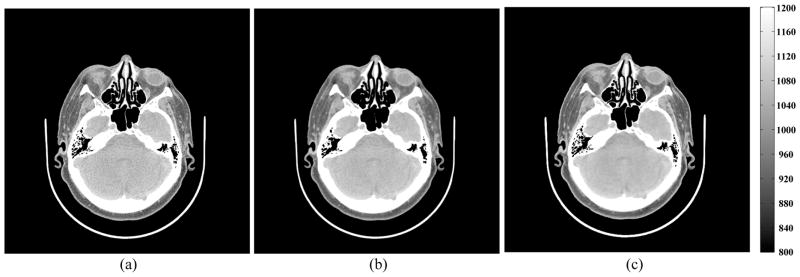

In this experiment, we used an in vivo human head data-set acquired with a GE scanner using 120 kVp source potential and 585 mA tube current with 0.6 s rotation. We reconstructed a 1024 × 1024 2D slice with 50 cm FOV and 0.625 mm thickness from an 888 × 984-view sinogram. For Ψ in (1), we used the strictly convex regularizer (23) (with δ = 10 HU) that guarantees a unique solution x★ to P0. As NCG generally had faster convergence than MFISTA in our experiments, we obtained x★ by running 5000 iterations of NCG-10. Fig. 4 shows the reconstruction results for this experiment. The regularized solution x★ in Fig. 4c has reduced noise and better preserves the anatomical features compared to the FBP reconstructions in Figs. 4a, 4b obtained using the ramp and Hanning filters, respectively.

Fig. 4.

Experiment with the in vivo human head data-set: (a) FBP reconstruction with the ramp filter, also the initial guess x(0) for all iterative algorithms, (b) FBP reconstruction with Hanning filter, and (c) PWLS reconstruction with the strictly convex regularizer (23), also the unique solution x★ to P0. Images in (a)–(c) are displayed in Hounsfield units indicated beside (c). The regularized reconstruction (c) is less noisy and better preserves anatomical features compared to both FBP results.

Figs. 5a–d plot ξ(j) as a function of tj and iteration index j for all algorithms considered in this work. Here, we additionally compare the standard OS algorithm (that is not guaranteed to converge) in Figs. 5b, 5d, where we used the implementation from [53] available currently for regularization criteria such as (23). The OS algorithm is faster than all algorithms (including ADMM-PCG) for the first few iterations but, as expected, it does not converge to the minimizer. In practice, it may be advantageous to run a few iterations of OS and use its output to initialize a more sophisticated iterative algorithm. Figs. 5a, 5b indicate that the convergence trends for MFISTA, NCG, SB-(P)CG and ADMM-CG are generally similar to those in Figs. 3a, 3b. ADMM-PCG again provides notable reconstruction speed-up compared to all algorithms. This substantiates the potential of the cone-filter preconditioner (30) for the proposed ADMM and also demonstrates the benefit of our splitting scheme (5).

Fig. 5.

Experiment with the in vivo human head data-set: (a), (b) Plot of ξ(j) as a function of time tj and (c), (d) Plot of ξ(j) with respect to iterations, for various algorithms considered in this work. MFISTA and SB-CG appear to be the slowest. The proposed ADMM-(P)CG is generally faster than the split-Bregman scheme SB-(P)CG as seen in (a). Although ADMM-CG converges slower than NCG as seen in (b), the preconditioned version ADMM-PCG is the fastest among the considered algorithms, illustrating the benefit of the cone-filter-based preconditioner (G̃ν in Section III-E) for the proposed ADMM.

VI. Discussion

A. Memory Requirements

Splitting-based algorithms simplify optimization at the expense of manipulating and storing auxiliary constraint variables (and corresponding Lagrange multipliers in the AL formalism) and therefore have additional memory requirements compared to conventional algorithms such as NCG. Although this does not pose much concern for 2D reconstruction problems, it can represent a significant memory overhead for 3D problems. Specifically, the SB (Section IV) and the proposed ADMM¹⁴ (in Fig. 1) schemes use the constraint v = Rx that requires the storage of 2P vectors (v and ηv) of size L × 1. For instance, typically, the size of an image-volume in 3D CT is N = 512 × 512 × 512 (≈ 1 GB of memory when stored in double-precision format in Matlab). Then, for finite-differences with P = 13 (there are 13 nearest-neighbors on one side of any voxel), this corresponds to storing at least 26 image-volumes (≈ 26 GB of memory), which might set a practical limitation on these methods from an implementation perspective.
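The storage figures quoted above follow from simple arithmetic, which the short sketch below reproduces. The function names (`volume_bytes`, `aux_storage_gib`) are hypothetical helpers introduced only for illustration; the sketch assumes 8 bytes per double-precision value and counts only v and ηv (2P image-volumes), ignoring every other array an implementation would hold.

```python
import math

GIB = 2**30  # bytes in one binary gigabyte (assumed unit for "GB" here)

def volume_bytes(shape, bytes_per_value=8):
    """Bytes needed to store one image-volume of the given shape."""
    return math.prod(shape) * bytes_per_value

def aux_storage_gib(shape, P, bytes_per_value=8):
    """Storage (in GiB) for v and eta_v, i.e. 2P image-volumes."""
    return 2 * P * volume_bytes(shape, bytes_per_value) / GIB

shape = (512, 512, 512)
one_volume_gib = volume_bytes(shape) / GIB   # a single double-precision volume
aux_p13_gib = aux_storage_gib(shape, P=13)   # 13 finite-difference directions
print(one_volume_gib, aux_p13_gib)
```

Passing a smaller `P` to `aux_storage_gib` shows directly how the choice of R controls the overhead.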

A quick remedy is to consider the TV regularizer with finite-differences only along the three orthogonal directions (P = 3 corresponds to 6 image-volumes), which considerably reduces the memory load. Alternatively, one could also consider using an orthonormal transform (such as orthonormal wavelets¹⁵) for R, so P = 1 and L = N. The SB and ADMM¹⁴ schemes would then require storing only 2 image-volumes (corresponding to v and ηv). Moreover, an orthonormal R satisfies R⊤R = IN, which facilitates ADMM: Gν in (28) becomes Gν = (A⊤A + νIN), which is still “nearly” shift-invariant and can be effectively preconditioned using circulant preconditioners. With orthonormal wavelets, one also has the option of excluding the approximation coefficients from the regularization (as they are not sparse) by using scale dependent regularization parameters [50] and setting those parameters corresponding to the approximation level to zero.
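As a small numerical illustration of the orthonormal case, the sketch below builds a 1D orthonormal Haar matrix and checks that R⊤R = IN, so that A⊤A + νR⊤R collapses to A⊤A + νIN. The Haar construction, the random stand-in for A, and the name `haar_matrix` are assumptions made for this toy example only; they are not the wavelets or the system matrix used in our experiments.

```python
import numpy as np

def haar_matrix(n):
    """Orthonormal Haar transform matrix of size n x n (n must be a power of 2)."""
    if n == 1:
        return np.array([[1.0]])
    h = haar_matrix(n // 2)
    top = np.kron(h, [1.0, 1.0])                  # coarser-scale (averaging) rows
    bottom = np.kron(np.eye(n // 2), [1.0, -1.0]) # finest-scale (difference) rows
    return np.vstack([top, bottom]) / np.sqrt(2.0)

N, nu = 16, 0.5
R = haar_matrix(N)
rng = np.random.default_rng(0)
A = rng.standard_normal((40, N))   # toy stand-in for the CT system matrix

G_general = A.T @ A + nu * (R.T @ R)   # general form of G_nu
G_ortho = A.T @ A + nu * np.eye(N)     # simplified form for orthonormal R
print(np.allclose(R.T @ R, np.eye(N)), np.allclose(G_general, G_ortho))
```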

B. Inclusion of Nonnegativity Constraint

In CT, a nonnegativity constraint is often imposed [1, Eq. 18], [11, Sec. 2.2] to model the positivity of the attenuation coefficient that is being reconstructed. Although we have not considered such a constraint in P0, it can be easily accommodated [38] as follows. We start with

| (39) |

where g is an indicator function

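| $g(x) = \begin{cases} 0, & x_i \geq 0 \ \ \forall\, i \\ +\infty, & \text{otherwise} \end{cases}$ | (40) |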

that imposes the nonnegativity constraint, x ≥ 0, taken component-wise in (40). We then consider the following equivalent constrained version [38] that has an additional constraint compared to (5):

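| $\min_{u,\, v,\, w,\, x} \; f(u, v) + g(w) \quad \text{s.t.} \quad u = Ax, \;\; v = Rx, \;\; w = x$ | (41) |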

Writing and using Λ = diag{IM, ν1IR, ν2IN} in (9), we can design an ADMM-type algorithm similar to (15)–(17) for solving (41). It can be shown that the updates for u and v in this algorithm will be similar to (19) and (20), respectively, while the update of x would involve the “inversion” of Gν1ν2 = (A⊤A + ν1R⊤R + ν2IN) and that of w would require a simple projection onto the positive orthant [38, Eq. 32]. Since Gν1ν2 is also “nearly” shift-invariant, a cone-filter-type preconditioner similar to (30) can be used for effective preconditioning of Gν1ν2. Moreover, the above C has full column-rank, so this algorithm also satisfies Theorem 1 and is guaranteed to converge to a solution of (41) and (39).
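The w-update mentioned above reduces to a component-wise clipping operation. The following is a minimal sketch, assuming a scaled-multiplier convention in which w minimizes g(w) + (c/2)‖w − x − ηw‖² for some c > 0; the names `project_nonnegative`, `update_w` and `eta_w`, as well as the sign convention for the multiplier, are illustrative assumptions and may differ from the exact update that would be derived from (41).

```python
import numpy as np

def project_nonnegative(z):
    """Euclidean projection of z onto the set {w : w_i >= 0 for all i}."""
    return np.maximum(z, 0.0)

def update_w(x, eta_w):
    """Assumed w-update: minimizer of g(w) + (c/2)||w - x - eta_w||^2 over w.

    Because g is the indicator function of the nonnegative orthant, the
    minimizer is the projection of (x + eta_w) and does not depend on c > 0.
    """
    return project_nonnegative(x + eta_w)

x = np.array([0.2, -0.1, 0.0, 1.5])
eta_w = np.zeros_like(x)
print(update_w(x, eta_w))
```

Since the projection acts independently on each component, its cost is negligible compared to the x-update.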

C. Poisson-Likelihood Model for X-ray CT Reconstruction

The proposed strategy of splitting the data-term [i.e., the use of u in (5) and (41)] is also applicable for X-ray CT reconstruction using the Poisson-likelihood (PL) statistical model [5, Eq. 1] that may be more suitable for low-dose acquisitions. It can be shown that splitting the PL data-term yields separable 1D problems in {ui} that can be solved simultaneously similar to [38, Eq. 30]. However, the PL model for X-ray CT may preclude exact updates like (21) for {ui}. Moreover, the general PL model [5, Eq. 1] includes background events and can be (“mildly”) nonconvex, so Theorem 1 cannot be directly applied to an ADMM-type algorithm developed for this problem. We plan to explore cost functions involving the PL model [5, Eq. 1] for transmission tomography reconstruction as part of future extensions to this work.

VII. Summary and Conclusions

Statistical X-ray CT reconstruction using penalized weighted least-squares (PWLS) criteria involves a diagonal weighting matrix W that poses a hindrance to several optimization methods due to its huge dynamic range and highly shift-variant nature. In this work, we employed a variable-splitting technique that, in addition to separating the regularization term like [17], also dissociates the statistical (W) and the system (A) components in the data term to decouple and mitigate the effect of W. We applied the method of multipliers [19] with alternating minimization [21]–[23] for the resulting equivalent constrained problem and developed an alternating direction method of multipliers (ADMM) algorithm that chiefly involves three simple operations at each iteration: (i) inverting a diagonal matrix that depends on W, (ii) minimizing a set of 1D auxiliary denoising-cost-functions that can be performed efficiently and/or exactly for a variety of regularizers, and (iii) solving a “nearly” shift-invariant linear system (involving A⊤A) using FFT-based preconditioning with cone-type filters [7].
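To make the three operations concrete, the following toy sketch runs an ADMM of this general type on a small dense problem with an ℓ1 penalty on Rx. It is a sketch under stated assumptions rather than the algorithm of Fig. 1: the scaled-multiplier form, the parameter names `mu` and `nu`, the update order, and the function `admm_pwls_l1` are all illustrative, and step (iii) uses a direct dense solve in place of the cone-filter-preconditioned CG used in this work.

```python
import numpy as np

def soft_threshold(z, t):
    """Component-wise minimizer of t*|v| + 0.5*(v - z)^2."""
    return np.sign(z) * np.maximum(np.abs(z) - t, 0.0)

def admm_pwls_l1(A, w, y, R, lam, mu=1.0, nu=1.0, n_iter=500):
    """Toy ADMM for min_x 0.5*||y - A x||_W^2 + lam*||R x||_1, W = diag(w).

    Splitting u = A x, v = R x with scaled multipliers eta_u, eta_v
    (an assumed convention chosen for this illustration).
    """
    M, N = A.shape
    x = np.zeros(N)
    u, v = np.zeros(M), np.zeros(R.shape[0])
    eta_u, eta_v = np.zeros(M), np.zeros(R.shape[0])
    G = A.T @ A + nu * (R.T @ R)          # W-independent system matrix
    for _ in range(n_iter):
        # (iii) linear system in x; a dense solve stands in for PCG here
        rhs = A.T @ (u - eta_u) + nu * (R.T @ (v - eta_v))
        x = np.linalg.solve(G, rhs)
        Ax, Rx = A @ x, R @ x
        # (i) u-update: inversion of the diagonal matrix (W + mu*I)
        u = (w * y + mu * (Ax + eta_u)) / (w + mu)
        # (ii) v-update: separable 1D denoising problems (soft-thresholding)
        v = soft_threshold(Rx + eta_v, lam / (mu * nu))
        # multiplier updates
        eta_u -= u - Ax
        eta_v -= v - Rx
    return x

rng = np.random.default_rng(0)
A = rng.standard_normal((40, 8))
w = rng.uniform(0.5, 2.0, size=40)        # toy statistical weights
y = A @ rng.standard_normal(8) + 0.1 * rng.standard_normal(40)
R = np.diff(np.eye(8), axis=0)            # 1D first-order finite differences
x_hat = admm_pwls_l1(A, w, y, R, lam=1.0)
```

Note that W enters only through the element-wise division in step (i), so the matrix in step (iii) does not depend on the statistical weights.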

The proposed ADMM algorithm is guaranteed to converge to a solution of the original PWLS problem under a mild condition on the accuracy of operation (iii) above. We demonstrated using simulations and experiments with real in vivo human data that cone-filter-type preconditioners are very effective for solving the linear system in (iii) and that the preconditioned version of the proposed ADMM converges faster than conventional (NCG and ordered subsets) and state-of-the-art (MFISTA and split-Bregman) algorithms for CT. The proposed ADMM can handle a variety of regularization criteria for 2D CT reconstruction and is also applicable to 3D CT reconstruction, perhaps by using certain memory-conserving regularizers.

Acknowledgments

This work was supported by the National Institutes of Health under Grant R01-HL-098686.

The authors would like to thank GE Healthcare, particularly J. Seamans, J. B. Thibault, and B. DeMan for providing CT sinograms and code for the DD-projector, and M. McGaffin, University of Michigan, for proofreading the manuscript.

Footnotes

For simplicity we used wi = exp(−yi) in our experiments.

A convex function h is closed if and only if it is lower semi-continuous (LSC) [44, pp. 51–52] and is proper if h(x) < +∞ for at least one x and h(x) > −∞ ∀ x [44, p. 24]. It can be shown that the convex functions Jdata, Ψ (for a variety of regularizers such as TV and ℓ1-regularization), and their sum, f (5), are LSC and proper [38].

Writing , and , it is easy to see that (15)–(17) solve the constrained problem s.t. z0 = Mx that is equivalent to P0 using the AL function with an unweighted penalty term.

An analytical update formula similar to (25) is available for the TV regularizer that is based on a vector shrinkage-rule, see e.g., [12, Sec. IV.A-6].

Since the Power method (PM) iteratively estimates the maximum eigenvalue (in absolute magnitude) of a matrix [48, p. 488], an estimate σ̂max{Gν} of σmax{Gν} is first computed by applying PM on Gν. Next, applying PM on K ≜ Gν − σ̂max{Gν}IN yields |σ̂min{Gν} − σ̂max{Gν}| (as σmin{Gν} − σ̂max{Gν} is the largest eigenvalue of K in absolute magnitude) from which σ̂min{Gν} can be easily obtained.
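The two-stage Power-method estimate described in this footnote can be sketched as follows on a small symmetric positive-definite test matrix. The function `power_method`, the iteration count, and the test matrix are assumptions made for illustration; for CT, each matrix-vector product would instead involve a forward and a back projection.

```python
import numpy as np

def power_method(matvec, n, n_iter=2000, seed=0):
    """Estimate the eigenvalue of largest absolute magnitude of a symmetric
    operator, given only its matrix-vector product."""
    rng = np.random.default_rng(seed)
    z = rng.standard_normal(n)
    z /= np.linalg.norm(z)
    for _ in range(n_iter):
        q = matvec(z)
        z = q / np.linalg.norm(q)
    return z @ matvec(z)   # Rayleigh quotient (signed eigenvalue estimate)

def extreme_eigenvalues(G):
    """Estimate (sigma_min, sigma_max) of a symmetric positive-definite G."""
    n = G.shape[0]
    s_max = power_method(lambda z: G @ z, n)
    # K = G - s_max*I has eigenvalues in [s_min - s_max, 0]; the one of
    # largest magnitude is s_min - s_max.
    gap = abs(power_method(lambda z: G @ z - s_max * z, n))
    return s_max - gap, s_max

# Test matrix with known eigenvalues 1, 2, ..., 10
rng = np.random.default_rng(1)
Q, _ = np.linalg.qr(rng.standard_normal((10, 10)))
G = Q @ np.diag(np.linspace(1.0, 10.0, 10)) @ Q.T
s_min, s_max = extreme_eigenvalues(G)
print(s_min, s_max, s_max / s_min)   # last value estimates the condition number
```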

We only store the frequency response corresponding to to save memory.

It would be ideal to consider κ(Gν) instead of κ(G̃ν) for selecting νemp, but estimating κ(Gν) (e.g., using the Power method) for a given ν for CT is computationally expensive (ignoring the fact that it is independent of W and could be computed offline). But as Gν is approximately shift-invariant, κ(G̃ν) ≈ κ(Gν), which leads to νemp.

Theorem 1 may not be applicable to the SB scheme (33)–(35) as the constraint matrix, which is simply R in this case, usually does not have full column-rank. Convergence of SB-type schemes is studied in [17].

Similar to νmin, one could consider for SB, but estimating κ(Bμ) is impractical mainly due to its dependence on W. We chose to use the above rational-form for μ, which yields a rough estimate of μmin.

We estimated Ldata using the Power method applied to A⊤WA. Since Ldata is W-dependent, its use is less appealing for practical applications in CT.

We excluded the computation time spent on estimating Ldata for MFISTA in the plots. Even with this “unfair advantage” the ADMM method was much faster than MFISTA.

NCG, MFISTA, OS and SB require the evaluation of A⊤Wy (e.g., see RHS of (36) for the SB scheme), but this quantity needs to be computed only once, so we ignore this computation need for these schemes.

For ADMM, we have to additionally store two M × 1 vectors, u and the associated multiplier ηu. This additional memory requirement is moderate for 2D CT and can be high for 3D CT depending on the size of the data.

Quality-wise, shift-invariant wavelets are preferable to orthonormal ones [54], but due to their over-complete nature, they require significantly more memory (similar to finite differences) than orthonormal wavelets.

Contributor Information

Sathish Ramani, Email: sramani@umich.edu, Department of Electrical Engineering and Computer Science, University of Michigan, 1301 Beal Ave., Ann Arbor, MI 48109-2122, U.S.A.

Jeffrey A. Fessler, Email: fessler@umich.edu, Department of Electrical Engineering and Computer Science, University of Michigan, 1301 Beal Ave., Ann Arbor, MI 48109-2122, U.S.A.

References

- 1. Thibault JB, Sauer K, Bouman C, Hsieh J. A three-dimensional statistical approach to improved image quality for multi-slice helical CT. Med Phys. 2007 Nov;34(11):4526–44. doi: 10.1118/1.2789499.

- 2. Sauer K, Bouman C. A local update strategy for iterative reconstruction from projections. IEEE Trans Sig Proc. 1993 Feb;41(2):534–48.

- 3. Benson TM, Man BKBD, Fu L, Thibault J-B. Block-based iterative coordinate descent; Proc IEEE Nuc Sci Symp Med Im Conf; 2010. pp. 2856–9.

- 4. Kamphuis C, Beekman FJ. Accelerated iterative transmission CT reconstruction using an ordered subsets convex algorithm. IEEE Trans Med Imag. 1998 Dec;17(6):1001–5. doi: 10.1109/42.746730.

- 5. Erdogan H, Fessler JA. Ordered subsets algorithms for transmission tomography. Phys Med Biol. 1999 Nov;44(11):2835–51. doi: 10.1088/0031-9155/44/11/311.

- 6. Fessler JA, Booth SD. Conjugate-gradient preconditioning methods for shift-variant PET image reconstruction. IEEE Trans Im Proc. 1999 May;8(5):688–99. doi: 10.1109/83.760336.

- 7. Clinthorne NH, Pan TS, Chiao PC, Rogers WL, Stamos JA. Preconditioning methods for improved convergence rates in iterative reconstructions. IEEE Trans Med Imag. 1993 Mar;12(1):78–83. doi: 10.1109/42.222670.

- 8. Delaney AH, Bresler Y. A fast and accurate Fourier algorithm for iterative parallel-beam tomography. IEEE Trans Im Proc. 1996 May;5(5):740–53. doi: 10.1109/83.495957.

- 9. Wallis JW, Miller TR. Rapidly converging iterative reconstruction algorithms in single-photon emission computed tomography. J Nuc Med. 1993 Oct;34(10):1793–800.

- 10. Nuyts J, De Man B, Dupont P, Defrise M, Suetens P, Mortelmans L. Iterative reconstruction for helical CT: A simulation study. Phys Med Biol. 1998 Apr;43(4):729–37. doi: 10.1088/0031-9155/43/4/003.

- 11. Sidky EY, Pan X. Image reconstruction in circular cone-beam computed tomography by constrained, total-variation minimization. Phys Med Biol. 2008 Sep;53(17):4777–808. doi: 10.1088/0031-9155/53/17/021.

- 12. Ramani S, Fessler JA. Parallel MR image reconstruction using augmented Lagrangian methods. IEEE Trans Med Imag. 2011 Mar;30(3):694–706. doi: 10.1109/TMI.2010.2093536.

- 13. Lustig M, Donoho D, Pauly JM. Sparse MRI: The application of compressed sensing for rapid MR imaging. Mag Res Med. 2007 Dec;58(6):1182–95. doi: 10.1002/mrm.21391.

- 14. Ramani S, Fessler JA. An accelerated iterative reweighted least squares algorithm for compressed sensing MRI. Proc. IEEE Intl. Symp. Biomed. Imag.; 2010. pp. 257–60.

- 15. Beck A, Teboulle M. A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J Imaging Sci. 2009;2(1):183–202.

- 16. Beck A, Teboulle M. Fast gradient-based algorithms for constrained total variation image denoising and deblurring problems. IEEE Trans Im Proc. 2009 Nov;18(11):2419–34. doi: 10.1109/TIP.2009.2028250.

- 17. Goldstein T, Osher S. The split Bregman method for L1-regularized problems. SIAM J Imaging Sci. 2009;2(2):323–43.

- 18. Vandeghinste B, Goossens B, Beenhouwer JD, Pizurica A, Philips W, Vandenberghe S, Staelens S. Split-Bregman-based sparse-view CT reconstruction. Proc. Intl. Mtg. on Fully 3D Image Recon. in Rad. and Nuc. Med; 2011. pp. 431–4.

- 19. Bertsekas DP. Multiplier methods: A survey. Automatica. 1976 Mar;12(2):133–45.

- 20. Nocedal J, Wright SJ. Numerical optimization. Springer; New York: 1999.

- 21. Gabay D, Mercier B. A dual algorithm for the solution of nonlinear variational problems via finite element approximations. Computers and Mathematics with Applications. 1976;2:17–40.

- 22. Glowinski R, Marroco A. Sur l’approximation par element finis d’ordre un, et la resolution, par penalisation-dualité, d’une classe de problemes de Dirichlet nonlineares. Revue Française d’Automatique, Informatique et Recherche Opérationelle 9. 1975;R-2:41–76.

- 23. Fortin M, Glowinski R. On decomposition-coordination methods using an augmented Lagrangian. In: Fortin M, Glowinski R, editors. Augmented Lagrangian Methods: Applications to the Solution of Boundary-Value Problems. Studies in Mathematics and Its Applications. Vol. 15. Elsevier; North-Holland, Amsterdam: 1983. pp. 97–146.

- 24. Elbakri IA, Fessler JA. Efficient and accurate likelihood for iterative image reconstruction in X-ray computed tomography. Proc. SPIE 5032, Medical Imaging 2003: Image Proc.; 2003. pp. 1839–50.

- 25. Whiting BR. Signal statistics in x-ray computed tomography. Proc. SPIE 4682, Medical Imaging 2002: Med. Phys.; 2002. pp. 53–60.

- 26. Fessler JA, Rogers WL. Spatial resolution properties of penalized-likelihood image reconstruction methods: Space-invariant tomographs. IEEE Trans Im Proc. 1996 Sep;5(9):1346–58. doi: 10.1109/83.535846.

- 27. Elad M, Milanfar P, Rubinstein R. Analysis versus synthesis in signal priors. Inverse Prob. 2007;23:947–68.

- 28. Huber PJ. Robust statistics. Wiley; New York: 1981.

- 29. Nikolova M, Ng MK. Analysis of half-quadratic minimization methods for signal and image recovery. SIAM J Sci Comp. 2005;27(3):937–66.

- 30. Fair RC. On the robust estimation of econometric models. Ann Econ Social Measurement. 1974 Oct;2:667–77.

- 31. Daubechies I, Defrise M, Mol CD. An iterative thresholding algorithm for linear inverse problems with a sparsity constraint. Comm Pure Appl Math. 2004 Nov;57(11):1413–57.

- 32. Bioucas-Dias JM, Figueiredo MAT. A new TwIST: two-step iterative shrinkage/thresholding algorithms for image restoration. IEEE Trans Im Proc. 2007 Dec;16(12):2992–3004. doi: 10.1109/tip.2007.909319.

- 33. Ramani S, Thévenaz P, Unser M. Regularized interpolation for noisy images. IEEE Trans Med Imag. 2010 Feb;29(2):543–58. doi: 10.1109/TMI.2009.2038576.

- 34. Wang Y, Yang J, Yin W, Zhang Y. A new alternating minimization algorithm for total variation image reconstruction. SIAM J Imaging Sci. 2008;1(3):248–72.

- 35. Ng MK, Weiss P, Yuan X. Solving constrained total-variation image restoration and reconstruction problems via alternating direction methods. SIAM J Sci Comp. 2010;32(5):2710–36.

- 36. Afonso MV, Bioucas-Dias JM, Figueiredo MAT. An augmented Lagrangian approach to the constrained optimization formulation of imaging inverse problems. IEEE Trans Im Proc. 2011;20(3):681–95. doi: 10.1109/TIP.2010.2076294.

- 37. Afonso MV, Bioucas-Dias JM, Figueiredo MAT. Fast image recovery using variable splitting and constrained optimization. IEEE Trans Im Proc. 2010 Sep;19(9):2345–56. doi: 10.1109/TIP.2010.2047910.

- 38. Figueiredo MAT, Bioucas-Dias JM. Restoration of Poissonian images using alternating direction optimization. IEEE Trans Im Proc. 2010;19(12):3133–45. doi: 10.1109/TIP.2010.2053941.

- 39. Ye X, Chen Y, Huang F. Computational acceleration for MR image reconstruction in partially parallel imaging. IEEE Trans Med Imag. 2011;30(5):1055–63. doi: 10.1109/TMI.2010.2073717.

- 40. Ye X, Chen Y, Lin W, Huang F. Fast MR image reconstruction for partially parallel imaging with arbitrary k-space trajectories. IEEE Trans Med Imag. 2011;30(3):575–85. doi: 10.1109/TMI.2010.2088133.

- 41. Xu Q, Mou X, Wang G, Sieren J, Hoffman EA, Yu H. Statistical interior tomography. IEEE Trans Med Imag. 2011 May;30(5):1116–28. doi: 10.1109/TMI.2011.2106161.

- 42. Ramani S, Fessler JA. Convergent iterative CT reconstruction with sparsity-based regularization. Proc. Intl. Mtg. on Fully 3D Image Recon. in Rad. and Nuc. Med; 2011. pp. 302–5.

- 43. Eckstein J, Bertsekas DP. On the Douglas-Rachford splitting method and the proximal point algorithm for maximal monotone operators. Math Prog. 1992 Apr;55(1–3):293–318.

- 44. Rockafellar RT. Convex analysis. Princeton University Press; Princeton: 1970.

- 45. Chaux C, Combettes PL, Pesquet J-C, Wajs VR. A variational formulation for frame-based inverse problems. Inverse Prob. 2007 Aug;23(4):1495–518.

- 46. Chambolle A, DeVore RA, Lee NY, Lucier BJ. Nonlinear wavelet image processing: Variational problems, compression, and noise removal through wavelet shrinkage. IEEE Trans Im Proc. 1998 Mar;7(3):319–35. doi: 10.1109/83.661182.

- 47. Dixon JD. Estimating extremal eigenvalues and condition numbers of matrices. SIAM J Num Anal. 1983;20(4):812–814.

- 48. Anton H, Rorres C. Elementary linear algebra - applications version. John Wiley & Sons; 2010.

- 49. De Man B, Basu S. Distance-driven projection and backprojection. Proc. IEEE Nuc. Sci. Symp. Med. Im. Conf.; 2002. pp. 1477–80.

- 50. Selesnick IW, Figueiredo MAT. Signal restoration with overcomplete wavelet transforms: comparison of analysis and synthesis priors. Proc. SPIE 7446, Wavelets XIII; 2009. p. 74460D.

- 51. Segars WP, Tsui BMW. Study of the efficacy of respiratory gating in myocardial SPECT using the new 4-D NCAT phantom. IEEE Trans Nuc Sci. 2002 Jun;49(3):675–9.

- 52. Wang J, Li T, Lu H, Liang Z. Penalized weighted least-squares approach to sinogram noise reduction and image reconstruction for low-dose X-ray computed tomography. IEEE Trans Med Imag. 2006 Oct;25(10):1272–83. doi: 10.1109/42.896783.

- 53. Fessler JA. Image Reconstruction Toolbox. University of Michigan; 2011. http://www.eecs.umich.edu/~fessler/code/index.html.

- 54. Figueiredo MAT, Nowak RD. Wavelet-based image estimation: an empirical Bayes approach using Jeffrey’s noninformative prior. IEEE Trans Im Proc. 2001 Sep;10(9):1322–31. doi: 10.1109/83.941856.