Abstract

Objectives. To develop and pilot test a multiple mini-interview (MMI) to select students for admission to a pharmacy degree program.

Methods. A nominal group process was used to identify 8 important nonacademic attributes of pharmacists, with relative importance determined by means of a paired-comparison survey of pharmacy stakeholders (ie, university-affiliated individuals with a vested interest in the quality of students admitted to the pharmacy program, such as faculty members, students, admissions staff members, and practitioners). A 10-station MMI based on the weighted-attribute blueprint was pilot tested with 30 incoming pharmacy students. MMI score reliability (intraclass correlation coefficient [ICC]) and correlation with other admissions tool scores were determined.

Results. Station scores provided by student interviewers were slightly higher than those of faculty member or practitioner interviewers. While most interviewers judged a 6-minute interview as “just right” and an 8-minute interview “a bit long,” candidates had the opposite opinion. Station scenarios had face validity for candidates and interviewers. The ICC for the MMI was 0.77 and correlations with prepharmacy average (PPA) and Pharmacy College Admission Test (PCAT) composite were negligible.

Conclusions. MMI feasibility was confirmed, based on the finding that interview scores were reliable and that this admissions tool measures different attributes than do the PCAT and PPA.

Keywords: pharmacy admissions, multiple mini interviews, pilot test

INTRODUCTION

The bachelor of science in pharmacy degree program at the Leslie Dan Faculty of Pharmacy (LDFP), University of Toronto, is a 4-year program (after a minimum of 1 year of university at the time of the study) with an annual enrollment of 240 students. In 2007, the faculty's admissions committee formed a task force of internal and external stakeholders (ie, university-affiliated individuals with a vested interest in the quality of students admitted to the pharmacy program, such as faculty members, students, admissions staff, and practitioners) to examine whether the addition of interviews to existing admissions tools (ie, university prepharmacy grade point average [PPA] and the University of Toronto Pharmacy Admissions Test [UTPAT]) would add sufficient value to the admissions process to justify the resource implications. This action was prompted by the committee's growing recognition that inadequate assessment of applicants’ nonacademic attributes might be contributing to issues related to student communication skills and professional behavior, as reported by instructors and preceptors. Other factors included awareness that interviews are required by the Accreditation Council for Pharmacy Education (ACPE)1 in the United States and the release in 2007 of draft standards from the Canadian Council for Accreditation of Pharmacy Programs (CCAPP), which stated that an admissions interview would be required. Although the 2007 draft was not approved, the current standards (2006) encourage development and testing of instruments such as interviews to measure nonacademic criteria (Standard 14).2

After an environmental scan of other pharmacy programs in Canada, other health professional programs at the University of Toronto, and a literature review of admission interview studies in all health professions, the task force recommended that: (1) interviews be incorporated into the current admissions process; (2) interviews be conducted with applicants who met or exceeded minimum standards on existing admissions tools; and (3) if adopted, use of interviews be evaluated to determine whether intended benefits could be achieved at a reasonable cost.3 The conditions the task force set for their recommendations were that the interviews must be structured, interviewers must undergo rigorous training, and multiple independent assessments of a candidate's interview performance must be obtained.

The task force also generated a list of 15 proposed nonacademic attributes to be assessed in an interview. These were identified from the Accreditation Standards and Guidelines of the CCAPP (2006)2 and the ACPE (2006),1 the Association of Faculties of Pharmacy of Canada Educational Outcomes for Entry-level Doctor of Pharmacy Graduates (2007),4 the University of Toronto Standards of Professional Practice Behaviour for all Health Professional Students (2008),5 and members’ personal experience mentoring pharmacy students.

The admissions committee recommended that interviews be adopted and, based on predictive validity evidence, selected as the preferred format the multiple mini-interview (MMI), which was pioneered by the Michael DeGroote Medical School at McMaster University in Canada.6-14 The MMI is modeled after the objective structured clinical examination (OSCE) used for health professional licensing examinations in Canada and other countries. Its underlying premise is that testing an array of domains of competency across MMIs, each with a different interviewer, avoids bias attributable to context specificity.8 The MMI typically consists of a circuit of 7 to 12 stations at each of which a 5- to 8-minute “interview” (encounter) takes place. Each station topic is designed to measure 1 or more nonacademic attributes that are generally not reflected in PPA or admission tests, such as oral communication skills, ethical decision-making, and problem-solving. Multiple stations offer the flexibility to measure many different attributes.

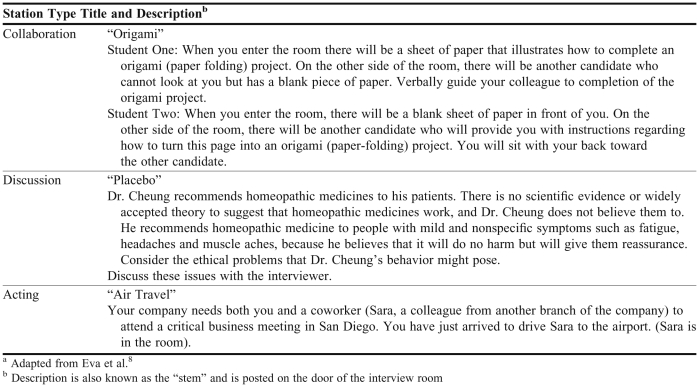

The process is as follows: each candidate is randomly assigned to a starting station, waits outside that station's room, and is given 2 minutes to read the station scenario before entering the room to participate in the mini-interview. At the end of the allocated interview time, the candidate moves to the next station in the sequence and repeats the process. MMI stations may have various formats, including scenario-based discussions between the candidate and the interviewer, simulated situations requiring the candidate to interact with an actor (while the interviewer observes and rates the candidate), and collaborative stations in which 2 candidates perform a task together or debate 2 perspectives on a situation (observed by 2 raters, each rating his or her assigned candidate). Examples are provided in Appendix 1.

The MMI has been adopted by many health programs, including medicine,11,12,14,15 physical and occupational therapy,16 medical radiation sciences,17 and physician assistant.18 The only reported usage found in pharmacy was a pilot study at 1 US pharmacy school using a 5-station MMI with 13 candidates.19 Since the current pilot test was completed, 2 other faculties of pharmacy in Canada are also using the MMI for interviews.

This paper reports on the development and pilot testing of a 10-station MMI in a Canadian pharmacy school. Research ethics board approval from the University of Toronto was granted. The study objectives were: 1) to determine specific nonacademic attributes to be assessed in an MMI designed for admission to the Leslie Dan Faculty of Pharmacy; 2) to assess the feasibility (resources and procedures) and acceptability of the MMI to candidates (interviewees) and interviewers; 3) to determine optimal station duration; 4) to assess the discriminant validity of the MMI; and 5) to assess the reliability of the overall MMI score.

METHODS

In April 2009, the Leslie Dan Faculty of Pharmacy licensed McMaster's Multiple Mini-Interview System and bank of scenarios (ProFit HR, Hamilton, Ontario, Canada) through Advanced Psychometrics For Transitions, Inc,20 with the intention of pilot testing a customized version of the MMI in September 2009 and incorporating the resulting version in the spring 2010 admissions cycle. An Implementation Planning Team (IPT) was formed to plan, implement, and evaluate the interview process.

Development of Customized MMI

Constructing a content-valid circuit of multiple stations requires a blueprint containing a concise and relevant list of nonacademic attributes required by students in the program (and upon graduation)8 along with relative importance or weight assigned to each attribute.21 A modified nominal group technique (NGT) was used to select the nonacademic attributes for the current blueprint.22 The NGT is a consensus-development method that uses a structured-meeting approach to encourage creativity, balance participation among members, and incorporate voting techniques to aggregate group judgment on a specific topic. The technique involves 4 primary steps22: (1) silent generation of ideas in writing; (2) round-robin feedback from group members to record each idea in a concise phrase on a flip chart; (3) discussion of each recorded idea for clarification and evaluation; and (4) individual voting on priority ideas, with the group decision being mathematically derived by means of rank-ordering or rating.

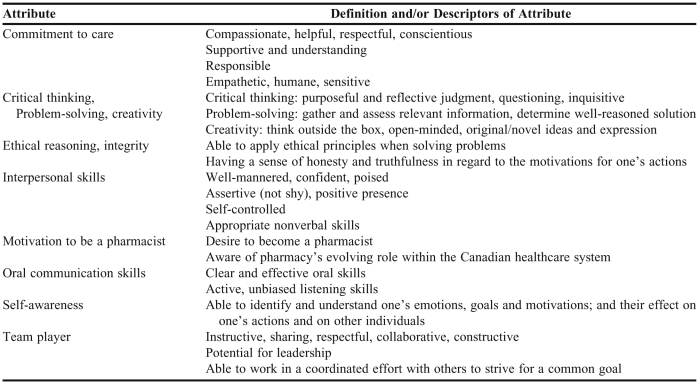

Participants in this case were 17 pharmacy program stakeholders divided into 2 groups, each facilitated by one of the study coauthors. We modified step 1 by providing participants with a list of 15 attributes identified by the task force, along with draft definitions and, if relevant, the authoritative source (eg, accreditation guidelines). Participants were able to choose from this list and/or identify attributes not on the list. Steps 2 and 3 took place within each group. In step 4, participants were asked to indicate what they believed to be the 8 most important nonacademic attributes that should be assessed during admissions interviews with candidates for the undergraduate pharmacy program. The decision to set the target number of attributes for the blueprint at 8 was based on the number used in the ProFit HR matrix and the approach used at the Michael DeGroote Medical School, McMaster University.20,21,23 Participants were further instructed to independently rank their top 8 attributes with respect to importance. These anonymous votes were then recorded beside each attribute on the flip charts, and the lists and votes from the 2 groups were presented to the merged groups for discussion. Participants then independently selected their 8 most important attributes and assigned each an importance rating on a scale of 0 to 10. Attribute ratings were then summed across participants. A second round of voting was subsequently conducted by e-mail to seek consensus on consolidation of conceptually similar attributes. The resulting 8 highest-ranked attributes are shown in Table 1.

Table 1.

Desirable Attributes in a Candidate for Entry Into the Undergraduate Pharmacy Program at the Leslie Dan Faculty of Pharmacy

The paired-comparison technique21 was used to determine relative importance of each attribute in our blueprint. This technique involves using 1 or more judges to compare pairs of objects and then to select the member of the pair that has greater importance, thus ensuring “forced” ranking.23 In the current study, this was accomplished by developing a questionnaire soliciting choices between paired attributes. Because there was a total of 8 attributes, the questionnaire consisted of (8 × 7)/2 = 28 questions, listed in random order. The questionnaire was distributed, along with a cover letter and the attribute definitions in Table 1, to 663 stakeholders via Survey Monkey (Palo Alto, CA). The sample size was based on estimating a proportion of 50%, with a 5% margin of error and 95% confidence interval (CI) and an estimated response rate of 50%. All faculty members (n = 104) and all hospital pharmacy directors/managers (n = 68) who participated in the Faculty's experiential program were included, along with a random sample (n = 279) of 960 enrolled students and a random sample (n = 212) of the 1,205 preceptors in the Faculty's experiential database. A reminder regarding survey tool completion was sent 7 days after the initial distribution. After 10 days, data were downloaded from Survey Monkey into Microsoft Excel (Redmond, WA).
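The construction of the 28-item questionnaire can be sketched in a few lines of Python. This is only an illustration: the attribute labels are placeholders (the actual attributes appear in Table 1), and the function name `build_questionnaire` and the fixed random seed are assumptions made for the example, not details of the study's survey tool.

```python
from itertools import combinations
import random

# Placeholder labels; the study's 8 attributes are those listed in its Table 1.
attributes = [f"attribute_{i}" for i in range(1, 9)]


def build_questionnaire(items, seed=0):
    """Return every unordered pair of items, shuffled into random order."""
    pairs = list(combinations(items, 2))  # n * (n - 1) / 2 pairs
    rng = random.Random(seed)             # seeded only to make the sketch repeatable
    rng.shuffle(pairs)
    return pairs


questions = build_questionnaire(attributes)
print(len(questions))  # (8 * 7) / 2 = 28 forced-choice questions
```

Each attribute appears in exactly 7 of the 28 questions, so every respondent compares it once against each of the other attributes.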

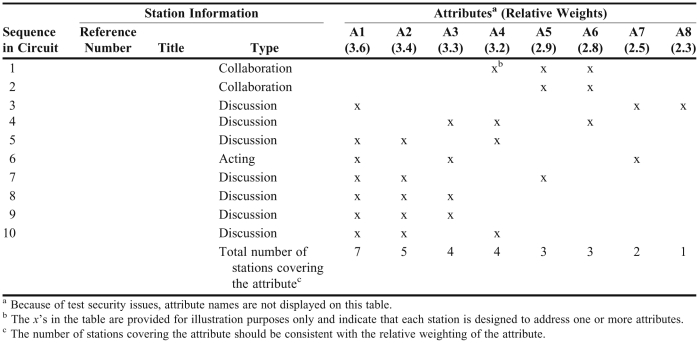

The probability of each attribute being selected was calculated and converted to a standardized (z) score. A constant of 3 was then added to each score to make the values positive, revealing the attribute's relative importance on an interval scale.24 Table 2 shows a matrix of the 8 weighted attributes (displayed in columns) and MMI stations (displayed in rows) that was set up as a blueprint to guide station selection for MMI circuits. The reported number of MMI stations (7-12) and station interview duration (5-8 minutes, excluding transition time between stations) have varied across institutions.8,11,12,15,17,25 For efficient use of time and resources, the shortest time per station and fewest number of stations that would not significantly compromise the reliability or validity of scores are considered optimal.7,8,15 For applicant pools that may be narrowly dispersed after applying preadmission requirements, such as those in the current study, the number of stations should be high enough to enable reliable measurement and differentiation of applicant performance. Considering the above factors, a 10-station MMI was developed and used.
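The weighting step can be illustrated with a short Python sketch. It assumes that "converted to a standardized (z) score" means applying the inverse of the standard normal cumulative distribution to each selection probability, which is one common reading of paired-comparison scaling; the text does not spell out the exact computation. The function name `attribute_weights` and the counts below are hypothetical and are not the study's data.

```python
from statistics import NormalDist


def attribute_weights(wins, comparisons_per_attribute, shift=3.0):
    """Map each attribute's selection probability to z + shift.

    wins: dict of attribute -> number of times it was chosen.
    comparisons_per_attribute: number of pairings in which it appeared.
    Probabilities of exactly 0 or 1 have no finite z score and raise an error.
    """
    standard_normal = NormalDist()
    weights = {}
    for name, won in wins.items():
        p = won / comparisons_per_attribute  # probability of being selected
        z = standard_normal.inv_cdf(p)       # assumed inverse-normal transform
        weights[name] = z + shift            # constant of 3 makes values positive
    return weights


# Hypothetical counts for three attributes, each appearing in 100 comparisons.
example = attribute_weights({"A": 50, "B": 25, "C": 75}, 100)
print(example)
```

Under this reading, an attribute chosen in half of its comparisons receives a weight of 3, and attributes chosen more or less often fall symmetrically above or below that value.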

Table 2.

Sample Blueprint for Selection of Stations for Each Unique Set of Concurrent Circuits in the Multiple Mini-Interview

A study of Deakin medical school applicants compared station lengths of 5 minutes vs 8 minutes, observing minimal effect on candidate ranking or score reliability.15 Interviewer feedback supported the 5-minute time; candidates were not asked about their perception of the length.15 We decided to compare station lengths of 8 minutes vs 6 minutes. This was accomplished by allocating 8 minutes for interviews at the first 5 stations that each candidate experienced and 6 minutes for the remaining 5 stations for all candidates. Thus, all candidates experienced five 8-minute stations followed by five 6-minute stations. Since the 10 candidates for each circuit had different starting stations, they each had a unique set of 8-minute and 6-minute stations.
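The rotation described above can be sketched as follows. The sketch assumes stations are numbered 1 to 10 and that each candidate advances to the next-numbered station, wrapping from 10 back to 1; the names `candidate_schedule` and `long_sets` are illustrative and do not come from the study materials.

```python
N_STATIONS = 10
LONG_MIN, SHORT_MIN = 8, 6  # interview minutes for the first 5 and last 5 stations


def candidate_schedule(start_station):
    """Return (station, minutes) pairs in the order one candidate visits them."""
    schedule = []
    for position in range(N_STATIONS):
        # Advance one station per rotation, wrapping around after station 10.
        station = (start_station - 1 + position) % N_STATIONS + 1
        minutes = LONG_MIN if position < 5 else SHORT_MIN
        schedule.append((station, minutes))
    return schedule


# The set of stations each candidate experiences at the 8-minute length.
long_sets = {
    start: frozenset(s for s, m in candidate_schedule(start) if m == LONG_MIN)
    for start in range(1, N_STATIONS + 1)
}
print(len(set(long_sets.values())))  # 10 distinct sets, one per starting station
```

Because the 10 starting stations differ, no 2 candidates in a circuit share the same set of 8-minute stations, and every station is seen at both lengths by 5 candidates each.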

The process for planning and conducting the pilot MMI was guided by the ProFitHR Admissions Users Guide.20 Using the blueprint, a 10-station MMI was designed to assess the 8 nonacademic attributes. Stations were taken from the ProFitHR bank and edited as required for pharmacy school applicants. One of the stations involved a standardized actor. Three concurrent circuits for 10 candidates each were arranged: 1 on each of 2 floors of the Faculty building, using seminar and private office space configured to minimize candidate travel between stations, and 1 in an ambulatory clinic at an adjacent hospital, using patient examination rooms. Candidate performance at each station was rated on a 10-point global scale, on which 1 = unsuitable and 10 = outstanding. For each station, interviewers were asked to consider communication skills, empathy, and the strength of the arguments displayed. Station scores were summed to obtain a score for each candidate based on a maximum possible score of 100. Interviewers did not have access to any information about candidates and were not informed of interview scores at any other stations.

Pilot Study of Customized MMI

Candidates were volunteers recruited from the incoming 2009 pharmacy class. Invitations were sent by e-mail a few days after acceptance of their admission offer. The initial plan was to recruit 60 candidates to enable a test of 6 concurrent circuits; however, when only 30 volunteered, the number of circuits was reduced to 3. Thirty interviewers were selected from 323 who volunteered in response to an e-mail invitation to faculty members, practitioners (eg, preceptors, alumni), and current students. All participants (interviewers and candidates) signed informed consent agreements.

Interviewers received 2 hours of structured training the week prior to the MMI, including guidance on using the full range of the 10-point assessment scale. On the MMI day, interviewers registered and received written materials about their assigned station and then participated in a 45-minute briefing with other interviewers assigned to that station. Members of each group discussed the content of their station, potential candidate responses, and behavioral scale descriptors.

Candidates and interviewers provided anonymous feedback using structured questionnaires (adapted with permission from Eva and colleagues8), which they completed immediately following the MMI session and in-group debriefings held immediately afterward. Staff, actors, and all helpers also provided feedback on a questionnaire completed at the end of the MMI session. A formal debriefing meeting of the IPT occurred 3 days after the pilot test. Members of the IPT prospectively recorded resources used for implementing the MMI on a form designed for this purpose and self-reported their time on log forms.

Descriptive statistics were calculated for questionnaire items and MMI scores, and qualitative data from questionnaires were content analyzed. Pearson r correlation coefficients were calculated between the MMI, PPA, and PCAT composite scores26 to determine whether the MMI was assessing different attributes than those measured by the PPA and PCAT (discriminant validity). (Note: in 2009 the PCAT replaced use of the UTPAT as an admissions tool at LDFP.) To assess the reliability of the overall MMI score, a G coefficient (ICC) was calculated using a 2-way random effects ANOVA model.24
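For readers unfamiliar with the calculation, the following Python sketch computes an average-measures ICC from a 2-way ANOVA decomposition of a candidates-by-stations score matrix. The text does not state which ICC form was used, so the absolute-agreement, average-measures form shown here is an assumption, as are the function name `icc_average_measures` and the small illustrative matrix (6 candidates rated at 4 stations), which is not the study's data.

```python
def icc_average_measures(scores):
    """Average-measures ICC (2-way random effects, absolute agreement).

    scores: list of rows, one per candidate, each holding k station scores.
    """
    n = len(scores)     # number of candidates (rows)
    k = len(scores[0])  # number of stations (columns)
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    # Sums of squares for candidates, stations, and residual error.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    # Reliability of the mean (or, equivalently, the sum) across k stations.
    return (ms_rows - ms_error) / (ms_rows + (ms_cols - ms_error) / n)


# Illustrative ratings only.
ratings = [
    [9, 2, 5, 8],
    [6, 1, 3, 2],
    [8, 4, 6, 8],
    [7, 1, 2, 6],
    [10, 5, 6, 9],
    [6, 2, 4, 7],
]
icc = icc_average_measures(ratings)
print(round(icc, 2))
```

In the pilot, the same calculation would be applied to a 30-by-10 matrix of station scores. Because different interviewers staffed the same station in different circuits, station and interviewer effects cannot be separated in a sketch this simple.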

RESULTS

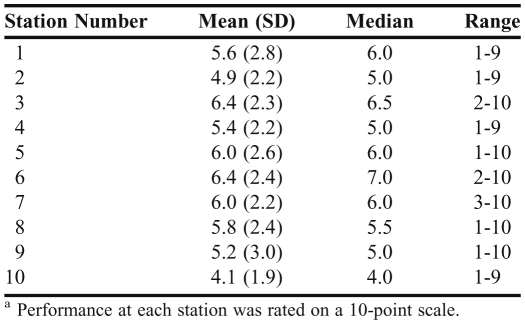

The overall response rate of the paired comparison survey was 24%; 161 of 663 questionnaires were completed. Standardized (z) scores for each of the 8 attributes ranged from -0.7 to 0.6; when transformed to positive values, scores varied from 2.3 to 3.6 (Table 2). Of the 30 first-year students who volunteered to be candidates, 63% were female (vs 55% in the full class) and 63% had 1 to 2 prior years in university (vs 45% in the full class). The mean station score ± standard deviation (SD) was 5.6 ± 2.4, with station means ranging from 4.1 to 6.4 (Table 3). There was good dispersion of scores within a station: minimum scores of 1 to 3 and maximum scores of 9 or 10. Mean station scores assigned by the 8 student interviewers were slightly higher (6.0 ± 2.5) than those by the 7 faculty members (5.5 ± 2.2) or 15 practitioners (5.4 ± 2.5). The mean overall score ± SD per interviewee was 55.8 ± 14.0 with a range of 26 to 79. The ICC for the overall 10-station score was 0.77 (95% CI, 0.63-0.88); for the five 8-minute stations, 0.54 (95% CI, 0.23-0.76); and for the five 6-minute stations, 0.66 (95% CI, 0.42-0.82). Nine candidates scored <50; of these, 3 scored <30. Mean scores ± SD by circuit were 54.2 ± 16.4, 54.4 ± 14.0, and 58.8 ± 12.3. The mean score on the PPA was 85.0% ± 5.8%, with a range of 73.5% to 96.0%. The mean PCAT composite percentile score was 88.6% ± 7.4%, with a range of 71% to 99%. The Pearson r coefficient for MMI and PPA was -0.025, and for MMI and PCAT composite score was 0.042. The coefficient for PPA and PCAT composite was 0.370 (p = 0.048).

Table 3.

Multiple Mini-Interview (MMI) Station Scores for Pharmacy Students (n = 30)a

Sixty-nine percent of interviewers judged a 6-minute interview as “just right” and 8 minutes as “a bit long.” Forty-seven percent of candidates judged a 6-minute interview as “just right” while 50% felt it was “a bit short,” whereas 50% found 8 minutes to be “just right” and 43% felt it was “a bit long.” Questionnaire responses revealed that station scenarios had face validity for both candidates and interviewers: 28/30 candidates (93%) and 29/30 interviewers (97%) agreed or strongly agreed that the stations used were relevant to pharmacy school. Written comments indicated that both types of participants were enthusiastic about the MMI process and experience and included specific suggestions for improving the process.

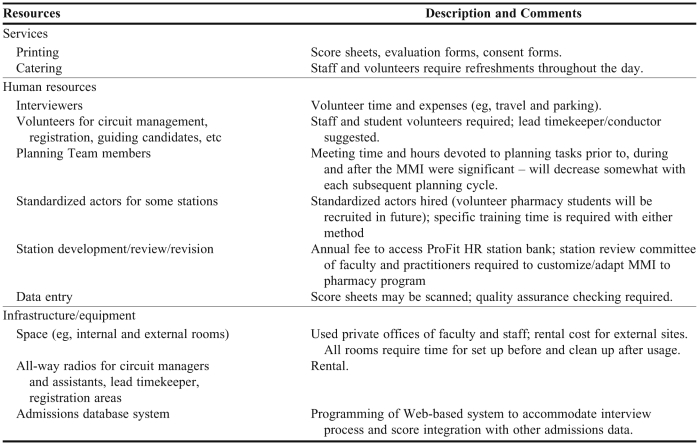

Sixty-six percent of interviewers strongly agreed and 34% agreed with the statement, “I am willing to volunteer to be an interviewer again in the spring of 2010.” Feedback from the debriefing session suggested that student interviewers should be in their third or fourth year. The stated rationale was that omitting first- and second-year students would reduce the chances for conflict of interest (ie, students knowing candidates) and increase the “experience/maturity” of interviewers, as perceived by candidates. The implementation team did not encounter any significant logistical problems on the interview day. While a cost analysis was not performed, the resources required were itemized (Table 4).

Table 4.

Resources Required for Conducting the Multiple Mini-Interview (MMI)

DISCUSSION

To our knowledge, this is the first paper describing the use of an MMI in a pharmacy school admissions process. Using systematic methods that involved several hundred of our institutional stakeholders, we customized an MMI developed for medical school applicants by identifying nonacademic attributes desired in incoming pharmacy students and by deriving weights for each attribute, based on perceived importance to our stakeholders.

Having access to an established station bank was a key factor in our ability to implement the MMI in a timely manner. However, because the bank was developed for medical school applicants, it had to be searched for stations that were, with little or no modification, relevant to pharmacy applicants. Feedback from pilot study candidates and interviewers revealed that selected stations were relevant to pharmacy applicants, thus establishing face validity. Some specific suggestions for improving the stations were also provided. After the pilot study, a station bank review committee was established to categorize each station in terms of attributes in our MMI blueprint and to eliminate or adapt stations for relevance and/or clarity. This work is ongoing.

Overall positive feedback from our participants supported the feasibility and acceptability of the MMI and was consistent with participant feedback published by McMaster and Calgary medical schools.8,14 The high number of practitioner volunteers for interviewer roles suggested a strong interest by these stakeholders in this component of the admissions process. The agreement of all interviewers to participate the following year supported the viability of recruiting several hundred volunteers for a full-scale MMI. With 5 concurrent circuits, 50 candidates are accommodated; circuits can be repeated up to 4 times in 1 day. Each interviewer is scheduled for 2 sequential circuits; thus, 50 are needed in the morning and 50 in the afternoon of each day. One logistical suggestion relevant in a full-scale MMI is to include a “rest station” on each circuit. This would allow scheduling of 11 candidates per circuit to ensure that each circuit would still have at least 10 candidates, in the event a few candidates do not arrive. This would prevent any gaps in the station cycle, which would pose a difficulty for functioning of the debate or collaboration stations.

Variation in the mean scores by circuit, particularly for the third circuit compared with the other 2, suggests that to ensure fairness to candidates, raw scores should be standardized within each circuit prior to pooling with scores from other circuits. Our full-scale MMI administrations (2010, 2011) have included this step.
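Within-circuit standardization can be sketched as below. The sketch assumes a conventional z-score transformation using the sample standard deviation; the study does not specify the exact procedure, and the function name and raw scores shown are hypothetical.

```python
from statistics import mean, stdev


def standardize_within_circuit(scores_by_circuit):
    """Convert raw MMI totals to z scores separately for each circuit."""
    standardized = {}
    for circuit, scores in scores_by_circuit.items():
        circuit_mean = mean(scores)
        circuit_sd = stdev(scores)  # sample SD; an assumption of this sketch
        standardized[circuit] = [(x - circuit_mean) / circuit_sd for x in scores]
    return standardized


# Hypothetical raw totals (out of 100) for 2 circuits with different means.
raw = {
    "circuit_1": [40, 50, 55, 60, 70],
    "circuit_2": [48, 58, 63, 68, 78],
}
z = standardize_within_circuit(raw)
print(z["circuit_1"] == z["circuit_2"])
```

In this example, the second circuit's scores are uniformly 8 points higher, yet candidates in the same relative position receive identical standardized scores, so pooling no longer favors candidates who happened to be assigned to the higher-scoring circuit.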

The reliability of the overall MMI score (ICC = 0.77) in this sample of 30 candidates was above the suggested minimum of 0.75.24 Given this adequate reliability, our finding of negligible correlations between the MMI and other admissions tool scores supports the discriminant validity of the MMI; ie, the MMI measured attributes that were not captured in PPA and PCAT composite scores.

The G coefficient for our 6-minute stations (0.66) was greater than that for our 8-minute stations (0.54). Both coefficients are lower than the overall value because each candidate experienced only 5 stations of each duration. The higher reliability of the shorter stations is counterintuitive and does not concur with the finding of Dodson and colleagues that there is no difference in the reliability of 8-minute vs 5-minute MMI stations.15 This discrepancy is perhaps explained by the current study's small sample size of 30 (compared with 175 in the study by Dodson and colleagues). Our observation that reliability was no worse with 6-minute stations, combined with the mixed feedback from interviewers and candidates about station length, led to a decision to assign 7 minutes per station for future MMI sessions.
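The effect of halving the number of stations on reliability can be illustrated with the Spearman-Brown formula. This formula is not part of the study's analysis; it is introduced here only as an illustration and assumes that stations are roughly interchangeable. The function name `spearman_brown` is hypothetical.

```python
def spearman_brown(reliability, length_factor):
    """Predicted reliability when test length is multiplied by length_factor."""
    return (length_factor * reliability) / (1 + (length_factor - 1) * reliability)


# Starting from the reported 10-station ICC of 0.77, project a 5-station circuit.
projected = spearman_brown(0.77, 5 / 10)
print(round(projected, 3))
```

Under these assumptions, a 5-station subset of a circuit with an overall coefficient of 0.77 would be expected to have a coefficient of roughly 0.63, which lies between the values observed for the 8-minute (0.54) and 6-minute (0.66) stations.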

Attribute weights were derived from responses to a questionnaire with a low return rate (24%). That being said, all 3 stakeholder groups were represented: pharmacists = 98/280 (35.0%), students = 51/279 (18.3%), and faculty members = 12/104 (11.5%). Factors contributing to the low response rate included administration of the survey tool during the summer months and only a short time being permitted for completion.

Another limitation was that pilot study candidates were volunteers from the first-year class. By definition, these individuals had already met admissions standards and, thus, represented a narrower and higher range of academic talent than would an actual cohort of interview applicants. To the extent that intelligence affects interview performance, this factor may have skewed interview results. Another possible limitation was that the number of candidates was lower than originally planned, although it was considered sufficient to test the feasibility of the MMI process.

CONCLUSIONS

Pilot study results confirmed the feasibility of the adapted MMI and its acceptability. Optimal station length was determined to be 7 minutes. The reliability of the overall MMI score was reasonably strong. Low correlations found with other admission criteria support the premise that the MMI would add value to the admissions process through measurement of attributes not captured by the study institution's other admissions tools, the PCAT and PPA. Based on favorable pilot study results, the MMI was approved for use in our 2010 admissions cycle, during which nearly 600 candidates were interviewed over 4 days in the spring of 2010. Predictive validity analysis of data from the 2010 cohort is under way.

ACKNOWLEDGMENTS

The Leslie Dan Faculty of Pharmacy is a licensee of the ProFitHR-ADMISSIONS interview system (http://www.profithr.com/). The guidance and expertise of the developers of the MMI at Michael G. DeGroote School of Medicine at McMaster University is sincerely appreciated. We acknowledge advice and guidance from Lisa Slack (The Michener Institute, University of Toronto) and Carlton Aylett (Krista Slack + Aylett Inc.) regarding implementation of the MMI. We also extend appreciation to Alina Varghese for her assistance in nominal group meeting design/development. All members of the MMI Implementation Team at the Leslie Dan Faculty of Pharmacy were invaluable throughout the process. We further extend sincere thanks to the many faculty members, pharmacists throughout Ontario, and pharmacy students and staff at LDFP for participation in the paired comparison survey tool and in the pilot MMI day, as interviewers, candidates, and other volunteer roles. Finally, we extend appreciation to Dr. Alex Kiss, of the Institute of Clinical and Evaluative Studies at Sunnybrook Health Sciences Centre, for statistical test verification. This study was presented at the Association of Faculties of Pharmacy of Canada Annual Meeting, June 2010, in Vancouver, Canada.

Appendix 1. Examples of Multiple Mini-Interview (MMI) Stationsa

REFERENCES

- 1. Accreditation Standards and Guidelines for the Professional Program in Pharmacy leading to the Doctor of Pharmacy Degree, Adopted January 15, 2006; effective July 1, 2007, Accreditation Council for Pharmacy Education. http://www.acpe-accredit.org/pdf/ACPE_Revised_PharmD_Standards_Adopted_Jan152006.pdf. Accessed November 16, 2011.

- 2. Accreditation Standards, The Canadian Council for Accreditation of Pharmacy Programs (CCAPP). http://www.ccapp-accredit.ca/obtaining_accreditation/degree/standards/. Accessed November 16, 2011.

- 3. Report of the Task Force on the Use of Interviews in the Professional Program Admissions Process at the Leslie Dan Faculty of Pharmacy. Final Report: June 16, 2008. (Unpublished)

- 4. Educational Outcomes for Entry-level Doctor of Pharmacy Graduates (2007), Association of Faculties of Pharmacy of Canada. http://www.afpc.info/downloads/1/Entry_level_PharmD_outcomes_AFPCAGM2007.pdf. Accessed November 16, 2011.

- 5. Standards of Professional Practice Behaviour for all Health Professional Students June 2008, University of Toronto. http://www.governingcouncil.utoronto.ca/policies/ProBehaviourHealthProStu.htm. Accessed November 16, 2011.

- 6. Albanese MA, Snow MH, Skochelak SE, Huggett KN, Farrell PM. Assessing personal qualities in medical school admissions. Acad Med. 2003;78(3):313–321. doi: 10.1097/00001888-200303000-00016.

- 7. Eva KW, Reiter HI, Rosenfeld J, Norman GR. The relationship between interviewers’ characteristics and ratings assigned during a multiple mini-interview. Acad Med. 2004;79(6):602–609. doi: 10.1097/00001888-200406000-00021.

- 8. Eva KW, Rosenfeld J, Reiter HI, Norman GR. An admissions OSCE: the multiple mini-interview. Med Educ. 2004;38(3):314–326. doi: 10.1046/j.1365-2923.2004.01776.x.

- 9. Eva KW, Reiter HI, Rosenfeld J, Norman GR. The ability of the multiple mini interview to predict preclerkship performance in medical school. Acad Med. 2004;79(10 Suppl):S40–S42. doi: 10.1097/00001888-200410001-00012.

- 10. Reiter HI, Eva KW, Rosenfeld J, Norman GR. Multiple mini-interview predicts for clinical clerkship performance, national licensure examination performance. Med Educ. 2007;41(4):378–384. doi: 10.1111/j.1365-2929.2007.02709.x.

- 11. Harris S, Owen C. Discerning quality: using the multiple mini-interview in student selection for the Australian National University Medical School. Med Educ. 2007;41(3):234–241. doi: 10.1111/j.1365-2929.2007.02682.x.

- 12. Roberts C, Walton M, Rothnie I, Crossley J, Lyon P, Kumar K, Tiller D. Factors affecting the utility of the multiple mini-interview in selecting candidates for graduate-entry medical school. Med Educ. 2008;42(4):396–404. doi: 10.1111/j.1365-2923.2008.03018.x.

- 13. Rosenfeld JM, Reiter HI, Trinh K, Eva KW. A cost-efficiency comparison between the multiple mini-interview and traditional admissions interviews. Adv Health Sci Educ Theory Pract. 2008;13(1):43–58. doi: 10.1007/s10459-006-9029-z.

- 14. Brownell K, Lockyer J, Collin T, Lemay JF. Introduction of the multiple mini interview into the admissions process at the University of Calgary: acceptability and feasibility. Med Teach. 2007;29(4):394–396. doi: 10.1080/01421590701311713.

- 15. Dodson M, Crotty B, Prideaux D, Carne R, Ward A, de Leeuw E. The multiple mini-interview: how long is long enough? Med Educ. 2009;43(2):168–174. doi: 10.1111/j.1365-2923.2008.03260.x.

- 16. Reiter HI, Salvatori P, Rosenfeld J, Trinh K, Eva K. The effect of defined violations of test security on admissions outcomes using multiple mini-interviews. Med Educ. 2006;40(1):36–42. doi: 10.1111/j.1365-2929.2005.02348.x.

- 17. General Admission Information, The Michener Institute for Applied Health Sciences, Toronto. http://www.michener.ca/admissions/gen_admission_info.php?main=1&sub=8&sub2=0. Accessed November 16, 2011.

- 18. Physician Assistant Professional Degree Program, The Consortium of PA Education, Ontario. Available at: http://www.facmed.utoronto.ca/programs/healthscience/PAEducation.htm. Accessed Dec 5, 2011.

- 19. Stowe CD, Flowers SK, Warmack T, O'Brien CE, Gardner SF. 2008-07-19 Admission Objective Structure Clinical Examination (OSCE): Multiple Mini-Interview (MMI) (Abstract of Paper presented at the annual meeting of the American Association of Colleges of Pharmacy. 2009-03-04.) http://citation.allacademic.com/meta/p_mla_apa_research_citation/2/5/8/1/3/p258135_index.html. Accessed November 16, 2011.

- 20. ProFIT HR (Professional Fit Assessment Tool for Human Resources Experts), Advanced Psychometrics for Transitions Inc. (developed by McMaster University). http://www.profithr.com/. Accessed July 2, 2011.

- 21. Reiter HI, Eva KW. Reflecting the relative values of community, faculty and students in the admissions tools of medical school. Teach Learn Med. 2005;17(1):4–8. doi: 10.1207/s15328015tlm1701_2.

- 22. Delbecq AL, Van de Ven AH, Gustafson DH. Group Techniques for Program Planning: A Guide to Nominal Group and Delphi Processes. Glenview, Ill: Scott, Foresman and Company; 1975. p. 8.

- 23. Marrin ML, McIntosh KA, Keane D, Schmuck ML. Use of the paired-comparison technique to determine the most valued qualities of the McMaster Medical Programme Admissions process. Adv Health Sci Educ Theory Pract. 2004;9(2):129–135. doi: 10.1023/B:AHSE.0000027439.18289.00.

- 24. Streiner DL, Norman GR. Health Measurement Scales: A Practical Guide to Their Development and Use. 2nd ed. Oxford, UK: Oxford University Press; 1995.

- 25. Lemay JF, Lockyer JM, Collin YT, Brownell AKW. Assessment of non-cognitive traits through the admissions multiple mini-interview. Med Educ. 2007;41(6):573–579. doi: 10.1111/j.1365-2923.2007.02767.x.

- 26. Pharmacy College Admission Test. Available at: http://www.pearsonassessments.com/haiweb/Cultures/en-US/site/Community/PostSecondary/Products/pcat/pcathome.htm. Accessed November 16, 2011.